1. Introduction

All graphs in this paper are finite, undirected and simple. The starting point of our investigation is the following celebrated conjecture of Alon, Krivelevich and Sudakov:

Conjecture 1.1 (Alon–Krivelevich–Sudakov [Reference Alon, Krivelevich and Sudakov3, Conjecture 3.1]). For every graph

![]() $F$

, there is a constant

$F$

, there is a constant

![]() $c_F \gt 0$

such that if

$c_F \gt 0$

such that if

![]() $G$

is an

$G$

is an

![]() $F$

-free graph of maximum degree

$F$

-free graph of maximum degree

![]() $\Delta \geqslant 2$

, then

$\Delta \geqslant 2$

, then

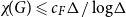

![]() $\chi\!(G) \leqslant c_F\Delta/\log\!\Delta$

.

$\chi\!(G) \leqslant c_F\Delta/\log\!\Delta$

.

Here we say that $G$ is $F$-free if $G$ has no subgraph (not necessarily induced) isomorphic to $F$. As long as $F$ contains a cycle, the bound in Conjecture 1.1 is best possible up to the value of $c_F$, since there exist $\Delta$-regular graphs $G$ of arbitrarily high girth with $\chi(G) \geqslant (1/2)\Delta/\log\Delta$ [7]. On the other hand, the best known general upper bound is $\chi(G) \leqslant c_F \Delta \log\log\Delta/\log\Delta$, due to Johansson [16] (see also [21]), which exceeds the conjectured value by a $\log\log\Delta$ factor.

Nevertheless, there are some graphs

![]() $F$

for which Conjecture 1.1 has been verified. Among the earliest results along these lines is the theorem of Kim [Reference Kim18] that if

$F$

for which Conjecture 1.1 has been verified. Among the earliest results along these lines is the theorem of Kim [Reference Kim18] that if

![]() $G$

has girth at least

$G$

has girth at least

![]() $5$

(that is,

$5$

(that is,

![]() $G$

is

$G$

is

![]() $\{K_3, C_4\}$

-free), then

$\{K_3, C_4\}$

-free), then

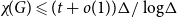

![]() $\chi\!(G) \leqslant (1 + o(1))\Delta/\log\!\Delta$

. (Here and in what follows

$\chi\!(G) \leqslant (1 + o(1))\Delta/\log\!\Delta$

. (Here and in what follows

![]() $o(1)$

indicates a function of

$o(1)$

indicates a function of

![]() $\Delta$

that approaches

$\Delta$

that approaches

![]() $0$

as

$0$

as

![]() $\Delta \to \infty$

.) Johansson [Reference Johansson15] proved Conjecture 1.1 for

$\Delta \to \infty$

.) Johansson [Reference Johansson15] proved Conjecture 1.1 for

![]() $F = K_3$

; that is, Johansson showed that if

$F = K_3$

; that is, Johansson showed that if

![]() $G$

is triangle-free, then

$G$

is triangle-free, then

![]() $\chi\!(G) \leqslant c\Delta/\log\!\Delta$

for some constant

$\chi\!(G) \leqslant c\Delta/\log\!\Delta$

for some constant

![]() $c \gt 0$

. Johansson’s proof gave the value

$c \gt 0$

. Johansson’s proof gave the value

![]() $c = 9$

[Reference Molloy and Reed22, p. 125], which was later improved to

$c = 9$

[Reference Molloy and Reed22, p. 125], which was later improved to

![]() $4 + o(1)$

by Pettie and Su [Reference Pettie and Su23] and, finally, to

$4 + o(1)$

by Pettie and Su [Reference Pettie and Su23] and, finally, to

![]() $1+o(1)$

by Molloy [Reference Molloy21], matching Kim’s bound for graphs of girth at least

$1+o(1)$

by Molloy [Reference Molloy21], matching Kim’s bound for graphs of girth at least

![]() $5$

.

$5$

.

In the same paper where they stated Conjecture 1.1, Alon, Krivelevich and Sudakov verified it for the complete tripartite graph $F = K_{1,t,t}$ [3, Corollary 2.4]. (Note that the case $t = 1$ yields Johansson's theorem.) Their results give the bound $c_F = O(t)$ for such $F$, which was recently improved to $t + o(1)$ by Davies, Kang, Pirot and Sereni [11, §5.6]. Numerous other results related to Conjecture 1.1 can be found in [11].

Here we are interested in the case when the forbidden graph $F$ is bipartite. It follows from the result of Davies, Kang, Pirot and Sereni mentioned above that if $F = K_{t,t}$, then Conjecture 1.1 holds with $c_F = t + o(1)$ (since $K_{t,t}$ is a subgraph of $K_{1,t,t}$, every $K_{t,t}$-free graph is $K_{1,t,t}$-free). Prior to this work, this was the best known bound for all $t \geqslant 3$ (the graph $F = K_{2,2}$ satisfies Conjecture 1.1 with $c_F = 1 + o(1)$ by [11, Theorem 4]). We improve this bound to $1 + o(1)$ for all $t$ (so only the lower order term actually depends on the graph $F$):

Theorem 1.2. For every bipartite graph $F$ and every $\varepsilon > 0$, there is $\Delta_0 \in \mathbb{N}$ such that every $F$-free graph $G$ of maximum degree $\Delta \geqslant \Delta_0$ satisfies $\chi(G) \leqslant (1+\varepsilon)\Delta/\log\Delta$.

As witnessed by random $\Delta$-regular graphs, the upper bound on $\chi(G)$ in Theorem 1.2 is asymptotically optimal up to a factor of $2$ [7]. Furthermore, this bound coincides with the so-called shattering threshold for colourings of random graphs of average degree $\Delta$ [25, 1], as well as the density threshold for factor of i.i.d. independent sets in $\Delta$-regular trees [24], which suggests that reducing the number of colours further would be a challenging problem, even for graphs $G$ of large girth. Indeed, it is not even known whether such graphs admit independent sets of size greater than $(1+o(1))|V(G)| \log\Delta/\Delta$.

In view of the results in [3] and [11], it is natural to ask whether a version of Theorem 1.2 also holds for $F = K_{1,t,t}$. We answer this question affirmatively in [5], where we use some of the techniques developed here to prove that every $K_{1,t,t}$-free graph $G$ satisfies $\chi(G) \leqslant (4+o(1))\Delta/\log\Delta$. In other words, we eliminate the dependence on $t$ in the constant factor, although we are unable to reduce it all the way to $1 + o(1)$.

Returning to the case of bipartite $F$, we establish an extension of Theorem 1.2 in the context of DP-colouring (also known as correspondence colouring), which was introduced a few years ago by Dvořák and Postle [12]. DP-colouring is a generalisation of list colouring. Just as in ordinary list colouring, we assume that every vertex $u \in V(G)$ of a graph $G$ is given a list $L(u)$ of colours to choose from. In contrast to list colouring, though, the identifications between the colours in the lists are allowed to vary from edge to edge. That is, each edge $uv \in E(G)$ is assigned a matching $M_{uv}$ (not necessarily perfect and possibly empty) from $L(u)$ to $L(v)$. A proper DP-colouring is then a mapping $\varphi$ that assigns a colour $\varphi(u) \in L(u)$ to each vertex $u \in V(G)$ so that whenever $uv \in E(G)$, we have $\varphi(u)\varphi(v) \notin M_{uv}$. Note that list colouring is indeed a special case of DP-colouring, which occurs when the colours “correspond to themselves”, i.e., for each $c \in L(u)$ and $c' \in L(v)$, we have $cc' \in M_{uv}$ if and only if $c = c'$.
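To make the list colouring special case concrete, here is a minimal Python sketch that builds the matchings $M_{uv}$ encoding a list colouring instance. The helper names `list_cover` and `is_proper` and the tagging of colours as pairs `(u, c)` (which keeps lists of distinct vertices disjoint) are illustrative choices, not notation from the paper.

```python
def list_cover(edges, lists):
    """Encode an instance of list colouring as DP-colouring matchings.

    edges: list of vertex pairs (u, v) of G.
    lists: dict mapping each vertex u to its list L(u) of colour labels.
    Colours are tagged as (u, c) so that lists of distinct vertices are
    disjoint. Returns (L, M), where M[(u, v)] is the matching M_uv.
    """
    L = {u: {(u, c) for c in cs} for u, cs in lists.items()}
    M = {}
    for u, v in edges:
        shared = set(lists[u]) & set(lists[v])
        # (u, c) is matched to (v, c) exactly when c is in both lists, so
        # M[(u, v)] is a matching, empty if the lists share no label.
        M[(u, v)] = {((u, c), (v, c)) for c in shared}
    return L, M


def is_proper(phi, edges, M):
    """phi maps every vertex u to a tagged colour in L(u)."""
    return all((phi[u], phi[v]) not in M[(u, v)] for u, v in edges)


edges = [("a", "b"), ("b", "c"), ("a", "c")]
lists = {"a": [1, 2], "b": [1, 2], "c": [2, 3]}
L, M = list_cover(edges, lists)
good = {"a": ("a", 1), "b": ("b", 2), "c": ("c", 3)}
bad = {"a": ("a", 2), "b": ("b", 1), "c": ("c", 2)}
print(is_proper(good, edges, M), is_proper(bad, edges, M))  # True False
```

In `bad`, the vertices $a$ and $c$ both receive the label $2$, and the pair is matched in $M_{ac}$, exactly as in ordinary list colouring.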

Formally, we describe DP-colouring using an auxiliary graph $H$ called a DP-cover of $G$. Here we treat the lists of colours assigned to distinct vertices as pairwise disjoint (this is a convenient assumption that does not restrict the generality of the model). The definition below is a modified version of the one given in [6]:

Definition 1.3. A DP-cover (or a correspondence cover) of a graph $G$ is a pair $\mathcal{H} = (L, H)$, where $H$ is a graph and $L\colon V(G) \to 2^{V(H)}$ is a function such that:

- The set $\{L(v) \,:\, v \in V(G)\}$ forms a partition of $V(H)$.
- For each $v \in V(G)$, $L(v)$ is an independent set in $H$.
- For $u$, $v \in V(G)$, the induced subgraph $H[L(u) \cup L(v)]$ is a matching; this matching is empty whenever $uv \notin E(G)$.

We refer to the vertices of $H$ as colours. For $c \in V(H)$, we let $L^{-1}(c)$ denote the underlying vertex of $c$ in $G$, i.e., the unique vertex $v \in V(G)$ such that $c \in L(v)$. If two colours $c$, $c' \in V(H)$ are adjacent in $H$, we say that they correspond to each other and write $c \sim c'$. An $\mathcal{H}$-colouring is a mapping $\varphi\colon V(G) \to V(H)$ such that $\varphi(u) \in L(u)$ for all $u \in V(G)$. Similarly, a partial $\mathcal{H}$-colouring is a partial map $\varphi\colon V(G) \dashrightarrow V(H)$ such that $\varphi(u) \in L(u)$ whenever $\varphi(u)$ is defined. A (partial) $\mathcal{H}$-colouring $\varphi$ is proper if the image of $\varphi$ is an independent set in $H$, i.e., if $\varphi(u) \not\sim \varphi(v)$ for all $u$, $v \in V(G)$ such that $\varphi(u)$ and $\varphi(v)$ are both defined. A DP-cover $\mathcal{H}$ is $k$-fold for some $k \in \mathbb{N}$ if $|L(u)| \geqslant k$ for all $u \in V(G)$. The DP-chromatic number of $G$, denoted by $\chi_{DP}(G)$, is the smallest $k$ such that $G$ admits a proper $\mathcal{H}$-colouring with respect to every $k$-fold DP-cover $\mathcal{H}$.
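The definitions can be checked mechanically on small examples. The Python sketch below (all function names are hypothetical, chosen for illustration) tests the three conditions of Definition 1.3 and brute-forces proper $\mathcal{H}$-colourings. On a triangle with $2$-element lists, the "straight" cover, which encodes ordinary list colouring, admits no proper colouring, whereas a "twisted" 2-fold cover does, illustrating that the choice of matchings matters.

```python
from itertools import product


def is_dp_cover(G_edges, L, H_edges):
    """Check the conditions of Definition 1.3 (illustrative sketch).

    G_edges and H_edges are sets of 2-element frozensets; L maps each vertex
    of G to its set of colours. V(H) is taken to be the union of the lists.
    """
    owner = {c: v for v, cs in L.items() for c in cs}  # c -> L^{-1}(c)
    if len(owner) != sum(len(cs) for cs in L.values()):
        return False  # the lists are not pairwise disjoint
    seen = set()  # pairs (colour, vertex w) such that the colour has a neighbour in L(w)
    for e in H_edges:
        if not all(c in owner for c in e):
            return False  # an edge of H uses a colour outside every list
        c, d = tuple(e)
        u, v = owner[c], owner[d]
        if u == v or frozenset((u, v)) not in G_edges:
            return False  # L(u) not independent, or an edge over a non-edge of G
        for x, w in ((c, v), (d, u)):
            if (x, w) in seen:
                return False  # x has two neighbours in L(w): not a matching
            seen.add((x, w))
    return True


def is_proper_h_colouring(phi, L, H_edges):
    """phi maps some vertices to colours; its image must be independent in H."""
    if any(c not in L[v] for v, c in phi.items()):
        return False
    image = set(phi.values())
    return not any(e <= image for e in H_edges)


def proper_colourings(L, H_edges):
    """Brute-force all proper (total) H-colourings; only for tiny examples."""
    vertices = sorted(L)
    for choice in product(*(sorted(L[v]) for v in vertices)):
        phi = dict(zip(vertices, choice))
        if is_proper_h_colouring(phi, L, H_edges):
            yield phi


def edge_set(*pairs):
    return {frozenset(p) for p in pairs}


G_edges = edge_set(("a", "b"), ("b", "c"), ("a", "c"))  # a triangle
L = {"a": {"a1", "a2"}, "b": {"b1", "b2"}, "c": {"c1", "c2"}}
straight = edge_set(("a1", "b1"), ("a2", "b2"), ("b1", "c1"), ("b2", "c2"),
                    ("c1", "a1"), ("c2", "a2"))
twisted = edge_set(("a1", "b1"), ("a2", "b2"), ("b1", "c1"), ("b2", "c2"),
                   ("c1", "a2"), ("c2", "a1"))
print(len(list(proper_colourings(L, straight))))  # 0
print(len(list(proper_colourings(L, twisted))))   # 2
```

Brute force is exponential in $|V(G)|$; it is meant only to make the definitions tangible.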

An interesting feature of DP-colouring is that it allows one to put structural constraints not on the base graph, but on the cover graph instead. For instance, Cambie and Kang [10] made the following conjecture:

Conjecture 1.4 (Cambie–Kang [10, Conjecture 4]). For every $\varepsilon > 0$, there is $d_0 \in \mathbb{N}$ such that the following holds. Let $G$ be a triangle-free graph and let $\mathcal{H} = (L,H)$ be a DP-cover of $G$. If $H$ has maximum degree $d \geqslant d_0$ and $|L(u)| \geqslant (1+\varepsilon)d/\log d$ for all $u \in V(G)$, then $G$ admits a proper $\mathcal{H}$-colouring.

The conclusion of Conjecture 1.4 is known to hold if $d$ is taken to be the maximum degree of $G$ rather than of $H$ [6]. Notice that $\Delta(G) \geqslant \Delta(H)$: a colour $c \in L(v)$ has at most one neighbour in $L(u)$ for each neighbour $u$ of $v$ and none elsewhere, so $\deg_H(c) \leqslant \deg_G(v)$. Hence a bound on $\Delta(G)$ is a stronger assumption than a bound on $\Delta(H)$. Cambie and Kang [10, Corollary 3] verified Conjecture 1.4 when $G$ is not just triangle-free but bipartite. Amini and Reed [4] and, independently, Alon and Assadi [2, Proposition 3.2] proved a version of Conjecture 1.4 for list colouring, but with $1 + o(1)$ replaced by a larger constant ($8$ in [2]). To the best of our knowledge, it is an open problem to reduce the constant factor to $1 + o(1)$ even in the list colouring framework.

Notice that in Cambie and Kang's conjecture, the base graph $G$ is assumed to be triangle-free (which, of course, implies that $H$ is triangle-free as well). In principle, it is possible that $H$ is triangle-free while $G$ is not, and it seems that the conclusion of Conjecture 1.4 could hold even then. We suspect that Conjecture 1.1 should also hold in the following stronger form:

Conjecture 1.5. For every graph $F$, there is a constant $c_F > 0$ such that the following holds. Let $G$ be a graph and let $\mathcal{H} = (L,H)$ be a DP-cover of $G$. If $H$ is $F$-free and has maximum degree $d \geqslant 2$ and if $|L(u)| \geqslant c_F d/\log d$ for all $u \in V(G)$, then $G$ admits a proper $\mathcal{H}$-colouring.

After this discussion, we are now ready to state our main result:

Theorem 1.6. There is a constant $\alpha > 0$ such that for every $\varepsilon > 0$, there is $d_0 \in \mathbb{N}$ such that the following holds. Suppose that $d$, $s$, $t \in \mathbb{N}$ satisfy

If $G$ is a graph and $\mathcal{H} = (L,H)$ is a DP-cover of $G$ such that:

- (i) $H$ is $K_{s,t}$-free,
- (ii) $\Delta(H) \leqslant d$, and
- (iii) $|L(u)| \geqslant (1+\varepsilon)d/\log d$ for all $u \in V(G)$,

then $G$ has a proper $\mathcal{H}$-colouring.

If $F$ is an arbitrary bipartite graph with parts of size $s$ and $t$, then $F$ is a subgraph of $K_{s,t}$, so an $F$-free graph is also $K_{s,t}$-free. Thus, Theorem 1.6 yields the following result for large enough $d_0$ as a function of $s$, $t$ and $\varepsilon$:

Corollary 1.7. For every bipartite graph $F$ and $\varepsilon > 0$, there is $d_0 \in \mathbb{N}$ such that the following holds. Let $d \geqslant d_0$. Suppose that $G$ is a graph and $\mathcal{H} = (L,H)$ is a DP-cover of $G$ such that:

- (i) $H$ is $F$-free,
- (ii) $\Delta(H) \leqslant d$, and
- (iii) $|L(u)| \geqslant (1+\varepsilon)d/\log d$ for all $u \in V(G)$.

Then $G$ has a proper $\mathcal{H}$-colouring.

Setting $d = \Delta(G)$ in Corollary 1.7 gives an extension of Theorem 1.2 to DP-colouring:

Corollary 1.8. For every bipartite graph $F$ and $\varepsilon > 0$, there is $\Delta_0 \in \mathbb{N}$ such that every $F$-free graph $G$ with maximum degree $\Delta \geqslant \Delta_0$ satisfies $\chi_{DP}(G) \leqslant (1 + \varepsilon)\Delta/\log\Delta$.

We close this introduction with a few words about the proof of Theorem 1.6. To find a proper $\mathcal{H}$-colouring of $G$, we employ a variant of the so-called “Rödl Nibble” method, in which we randomly colour a small portion of $V(G)$ and then iteratively repeat the same procedure with the vertices that remain uncoloured. (See [17] for a recent survey on this method.) Throughout the iterations, both the maximum degree of the cover graph and the minimum list size decrease, but we show that the former decreases at a faster rate than the latter. Thus, we eventually arrive at a situation where $\Delta(H) \ll |L(v)|$ for all $v \in V(G)$, and then it is easy to complete the colouring. The specific procedure in our proof is essentially the same as the one used by Kim [18] (see also [22, Chapter 12]) to bound the chromatic number of graphs of girth at least $5$, suitably modified for the DP-colouring framework. We describe it in detail in §3. The main novelty in our analysis is in the proof of Lemma 4.5, which allows us to control the maximum degree of the cover graph after each iteration. This is the only part of the proof that relies on the assumption that $H$ is $K_{s,t}$-free. The proof of Lemma 4.5 involves several technical ingredients, which we explain in §5. In §6, we put the iterative process together and verify that the colouring can be completed.

2. Preliminaries

In this section, we outline the main probabilistic tools that will be used in our arguments. We start with the symmetric version of the Lovász Local Lemma.

Theorem 2.1 (Lovász Local Lemma; [22, §4]). Let $A_1$, $A_2$, …, $A_n$ be events in a probability space. Suppose there exists $p \in [0, 1)$ such that for all $1 \leqslant i \leqslant n$ we have $\mathbb{P}[A_i] \leqslant p$. Further suppose that each $A_i$ is mutually independent of all but at most $d_{LLL}$ other events $A_j$, $j\neq i$, for some $d_{LLL} \in \mathbb{N}$. If $4pd_{LLL} \leqslant 1$, then with positive probability none of the events $A_1$, …, $A_n$ occur.
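As a toy illustration of how the hypothesis $4pd_{LLL} \leqslant 1$ is checked (a standard textbook application of the Local Lemma, not an argument from this paper), consider a 5-CNF formula on $n = 12$ variables in which clause $i$ uses variables $i, \dots, i+4$ modulo $n$ with arbitrary signs. Under a uniformly random assignment, the event that clause $i$ is violated has probability $p = 2^{-5}$ and is mutually independent of all clauses on disjoint variables, so $d_{LLL} = 8$ and $4pd_{LLL} = 1$. The Python sketch below (hypothetical helper names) verifies this and confirms by brute force that a satisfying assignment exists, as Theorem 2.1 guarantees.

```python
import random
from itertools import product


def cyclic_5cnf(n_vars, seed):
    """Clause i uses variables i, ..., i+4 (mod n_vars) with random signs.

    A literal (x, s) is satisfied when assignment[x] == s.
    """
    rng = random.Random(seed)
    return [[((i + j) % n_vars, rng.random() < 0.5) for j in range(5)]
            for i in range(n_vars)]


def dependency_degree(clauses):
    """Max number of other clauses sharing a variable with a given clause."""
    var_sets = [{x for x, _ in cl} for cl in clauses]
    return max(sum(1 for j, other in enumerate(var_sets) if j != i and vs & other)
               for i, vs in enumerate(var_sets))


def lll_condition(k, d):
    """Symmetric hypothesis 4 p d <= 1 with p = 2^-k (a k-clause is violated)."""
    return 4 * (2.0 ** -k) * d <= 1


def find_satisfying(clauses, n_vars):
    """Exhaustive search; returns a satisfying assignment or None."""
    for assignment in product((False, True), repeat=n_vars):
        if all(any(assignment[x] == s for x, s in cl) for cl in clauses):
            return assignment
    return None


n = 12
clauses = cyclic_5cnf(n, seed=0)
d = dependency_degree(clauses)
print(d, lll_condition(5, d))                   # 8 True
print(find_satisfying(clauses, n) is not None)  # True
```

The Local Lemma itself is non-constructive; exhaustive search is used here only because the instance is tiny.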

Aside from the Local Lemma, we will require several concentration of measure bounds. The first of these is the Chernoff Bound for binomial random variables. We state the two-tailed version below:

Theorem 2.2 (Chernoff; [22, §5]). Let $X$ be a binomial random variable on $n$ trials with each trial having probability $p$ of success. Then for any $0 \leqslant \xi \leqslant \mathbb{E}[X]$, we have
\begin{align*} \mathbb{P}\big[|X - \mathbb{E}[X]| \geqslant \xi\big] \leqslant 2\exp\left(-\frac{\xi^2}{3\mathbb{E}[X]}\right). \end{align*}
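A quick numerical sanity check of the two-tailed Chernoff bound, assuming it takes the standard form $\mathbb{P}[|X - \mathbb{E}[X]| \geqslant \xi] \leqslant 2\exp(-\xi^2/(3\mathbb{E}[X]))$ for $0 \leqslant \xi \leqslant \mathbb{E}[X]$: the Python sketch computes the exact two-sided tail of $X \sim \mathrm{Bin}(200, 1/4)$ and compares it with the bound. The parameter values are arbitrary illustrations.

```python
from math import comb, exp


def binomial_two_sided_tail(n, p, xi):
    """Exact P[|X - np| >= xi] for X ~ Bin(n, p)."""
    mean = n * p
    return sum(comb(n, k) * p**k * (1 - p)**(n - k)
               for k in range(n + 1) if abs(k - mean) >= xi)


def chernoff_bound(n, p, xi):
    """Two-sided bound 2 exp(-xi^2 / (3 E[X])), valid for 0 <= xi <= E[X]."""
    mean = n * p
    assert 0 <= xi <= mean, "the bound is only claimed for 0 <= xi <= E[X]"
    return 2 * exp(-xi**2 / (3 * mean))


n, p = 200, 0.25  # E[X] = 50
for xi in (5, 10, 15, 25, 50):
    exact = binomial_two_sided_tail(n, p, xi)
    bound = chernoff_bound(n, p, xi)
    print(f"xi={xi:3d}  exact={exact:.3e}  bound={bound:.3e}")
    assert exact <= bound
```

For small $\xi$ the bound exceeds $1$ and is vacuous; it becomes informative once $\xi$ is of order $\sqrt{\mathbb{E}[X]}$ or larger.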

We will also take advantage of two versions of Talagrand’s inequality. The first version is the standard one:

Theorem 2.3 (Talagrand's Inequality; [22, §10.1]). Let $X$ be a non-negative random variable, not identically 0, which is a function of $n$ independent trials $T_1$, …, $T_n$. Suppose that $X$ satisfies the following for some $\gamma$, $r > 0$:

- (T1) Changing the outcome of any one trial $T_i$ can change $X$ by at most $\gamma$.
- (T2) For any $s > 0$, if $X \geqslant s$, then there is a set of at most $rs$ trials that certify that $X$ is at least $s$.

Then for any $0 \leqslant \xi \leqslant \mathbb{E}[X]$, we have
\begin{align*} \mathbb{P}\left[|X - \mathbb{E}[X]| > \xi + 60\gamma\sqrt{r\,\mathbb{E}[X]}\right] \leqslant 4\exp\left(-\frac{\xi^2}{8\gamma^2 r\,\mathbb{E}[X]}\right). \end{align*}

The second version of Talagrand's inequality we will use was developed by Bruhn and Joos [9]. We refer to it as Exceptional Talagrand's Inequality. In this version, we are allowed to discard a small “exceptional” set of outcomes before constructing certificates.

Theorem 2.4 (Exceptional Talagrand's Inequality [9, Theorem 12]). Let $X$ be a non-negative random variable, not identically 0, which is a function of $n$ independent trials $T_1$, …, $T_n$, and let $\Omega$ be the set of outcomes for these trials. Let $\Omega^* \subseteq \Omega$ be a measurable subset, which we shall refer to as the exceptional set. Suppose that $X$ satisfies the following for some $\gamma > 1$, $s > 0$:

- (ET1) For all $q > 0$ and every outcome $\omega \notin \Omega^*$, there is a set $I$ of at most $s$ trials such that $X(\omega') > X(\omega) - q$ whenever $\omega' \notin \Omega^*$ differs from $\omega$ on fewer than $q/\gamma$ of the trials in $I$.
- (ET2) $\mathbb{P}[\Omega^*] \leqslant M^{-2}$, where $M = \max\{\sup X, 1\}$.

Then for every $\xi > 50\gamma\sqrt{s}$, we have:
\begin{align*} \mathbb{P}\big[|X - \mathbb{E}[X]| \geqslant \xi\big] \leqslant 4\exp\left(-\frac{\xi^2}{16\gamma^2 s}\right) + 4\,\mathbb{P}[\Omega^*]. \end{align*}

Finally, we shall use the Kővári–Sós–Turán theorem for $K_{s,t}$-free graphs:

Theorem 2.5 (Kővári–Sós–Turán [Reference Kővári, Sós and Turán19]; see also [Reference Hyltén-Cavallius13]). Let $G$ be a bipartite graph with a bipartition $V(G) = X \sqcup Y$, where $|X| = m$, $|Y| = n$, and $m \geqslant n$. Suppose that $G$ does not contain a complete bipartite subgraph with $s$ vertices in $X$ and $t$ vertices in $Y$. Then $|E(G)| \leqslant s^{1/t} m^{1-1/t} n + tm$.
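As a small sanity check of Theorem 2.5, the Python sketch below verifies the bound on one example of our own choosing: the point–line incidence graph of the Fano plane, with $m = n = 7$ and $21$ edges. This graph is $K_{2,2}$-free because two distinct points lie on exactly one common line; the sketch confirms this by brute force and then compares the edge count with $s^{1/t} m^{1-1/t} n + tm$ for $s = t = 2$. The helper names are illustrative only.

```python
from itertools import combinations

# Lines of the Fano plane on the points 0..6 (one standard labelling).
FANO_LINES = [(0, 1, 2), (0, 3, 4), (0, 5, 6), (1, 3, 5),
              (1, 4, 6), (2, 3, 6), (2, 4, 5)]

def incidence_edges(lines):
    """Edges (point, line_index) of the bipartite point-line incidence graph."""
    return {(p, j) for j, line in enumerate(lines) for p in line}

def contains_k_st(edges, X, Y, s, t):
    """Brute force: is some s-subset of X completely joined to some t-subset of Y?"""
    for S in combinations(X, s):
        for T in combinations(Y, t):
            if all((x, y) in edges for x in S for y in T):
                return True
    return False

def kst_bound(m, n, s, t):
    """Right-hand side of Theorem 2.5: s^(1/t) * m^(1 - 1/t) * n + t * m."""
    return s ** (1 / t) * m ** (1 - 1 / t) * n + t * m

edges = incidence_edges(FANO_LINES)
X, Y = range(7), range(7)  # points and lines, so m = n = 7 and m >= n
print(len(edges), contains_k_st(edges, X, Y, 2, 2), round(kst_bound(7, 7, 2, 2), 2))
```

Here the bound evaluates to about $40.19$ against $21$ edges, so it is far from tight on such a small instance.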

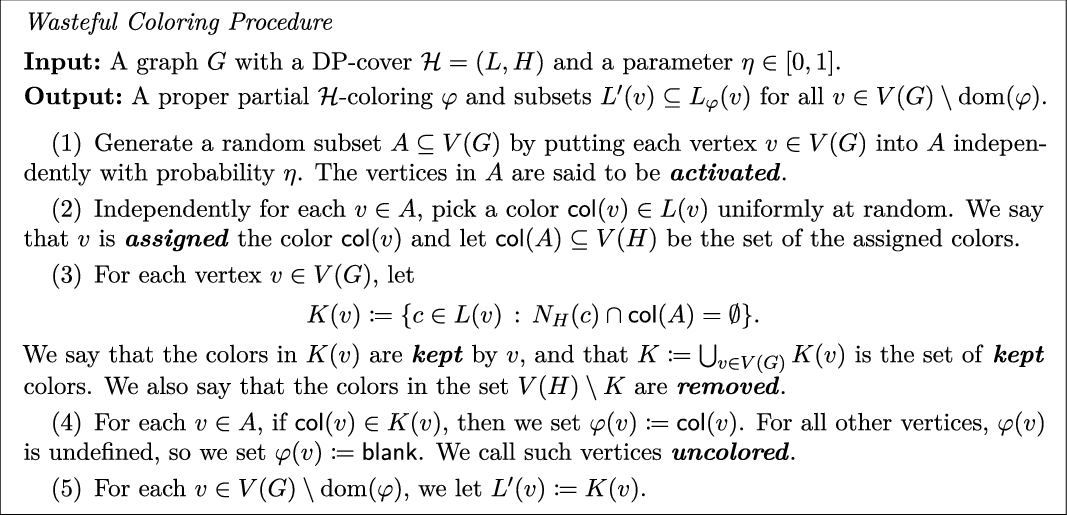

3. The wasteful colouring procedure

To prove Theorem 1.6, we will start by showing that we can produce a partial $\mathcal{H}$-colouring of our graph with desirable properties. Before we do so, we introduce some notation used in the next lemma. When $\varphi$ is a partial $\mathcal{H}$-colouring of $G$, we define $L_\varphi (v) \;:\!=\; \{c \in L(v) \,:\, N_H(c) \cap \mathrm{im}(\varphi ) = \emptyset \}$. Given parameters $d$, $\ell$, $\eta$, $\beta \gt 0$, we define the following functions:

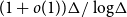

\begin{align*} \textsf{keep}(d, \ell, \eta ) &\;:\!=\; \left (1 - \frac{\eta }{\ell }\right )^{d}, \\ \textsf{uncolor}(d, \ell, \eta ) &\;:\!=\; 1 - \eta \, \textsf{keep}(d, \ell, \eta ),\\ \ell '(d, \ell, \eta, \beta ) &\;:\!=\; \textsf{keep}(d, \ell, \eta )\, \ell - \ell ^{1 - \beta }, \\ d'(d, \ell, \eta, \beta ) &\;:\!=\; \textsf{keep}(d, \ell, \eta )\, \textsf{uncolor}(d, \ell, \eta )\, d + d^{1 - \beta }. \end{align*}

\begin{align*} \textsf{keep}(d, \ell, \eta ) &\;:\!=\; \left (1 - \frac{\eta }{\ell }\right )^{d}, \\ \textsf{uncolor}(d, \ell, \eta ) &\;:\!=\; 1 - \eta \, \textsf{keep}(d, \ell, \eta ),\\ \ell '(d, \ell, \eta, \beta ) &\;:\!=\; \textsf{keep}(d, \ell, \eta )\, \ell - \ell ^{1 - \beta }, \\ d'(d, \ell, \eta, \beta ) &\;:\!=\; \textsf{keep}(d, \ell, \eta )\, \textsf{uncolor}(d, \ell, \eta )\, d + d^{1 - \beta }. \end{align*}
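For numerical experiments, the four functions can be transcribed directly into Python. This is a minimal sketch: the function names mirror the notation above, `math.log1p` is used so that $(1-\eta/\ell)^d$ stays accurate when $\eta/\ell$ is tiny and $d$ is huge, and the two parameter sets are hypothetical values satisfying conditions (2) and (5) of Lemma 3.1 below with $t = 1$, i.e. $\beta = 1/25$.

```python
import math

def keep(d, ell, eta):
    """keep(d, ell, eta) = (1 - eta/ell)^d, computed via log1p for accuracy."""
    return math.exp(d * math.log1p(-eta / ell))

def uncolor(d, ell, eta):
    """uncolor(d, ell, eta) = 1 - eta * keep(d, ell, eta)."""
    return 1.0 - eta * keep(d, ell, eta)

def ell_prime(d, ell, eta, beta):
    """ell'(d, ell, eta, beta) = keep * ell - ell^(1 - beta)."""
    return keep(d, ell, eta) * ell - ell ** (1.0 - beta)

def d_prime(d, ell, eta, beta):
    """d'(d, ell, eta, beta) = keep * uncolor * d + d^(1 - beta)."""
    return keep(d, ell, eta) * uncolor(d, ell, eta) * d + d ** (1.0 - beta)

def ratio_after(d, ell, eta, beta):
    """The ratio d'/ell' obtained from one application of the bounds."""
    return d_prime(d, ell, eta, beta) / ell_prime(d, ell, eta, beta)

beta = 1 / 25  # beta = 1/(25t) with the hypothetical choice t = 1
big = (10**100, 2 * 10**99, 0.5 / math.log(10**100))
small = (10**12, 2 * 10**11, 0.02)
for d, ell, eta in (big, small):
    print(d / ell, ratio_after(d, ell, eta, beta))
```

With the first (very large) parameter set, $d'/\ell'$ comes out slightly below $d/\ell = 5$. With $d = 10^{12}$, $\ell = 2\cdot 10^{11}$ and $\eta = 0.02$, the same code gives a ratio of about $11$, because the error terms $\ell^{1-\beta}$ and $d^{1-\beta}$ are then far from small; this is in line with the requirement $d \geqslant \tilde{d}$ in Lemma 3.1.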

The meaning of this notation will become clear when we describe the randomised colouring procedure we use to prove the following lemma.

Lemma 3.1.

There are

![]() $\tilde{d} \in{\mathbb{N}}$

,

$\tilde{d} \in{\mathbb{N}}$

,

![]() $\tilde{\alpha } \gt 0$

with the following property. Let

$\tilde{\alpha } \gt 0$

with the following property. Let

![]() $\eta \gt 0$

,

$\eta \gt 0$

,

![]() $d$

,

$d$

,

![]() $\ell$

,

$\ell$

,

![]() $s$

,

$s$

,

![]() $t \in{\mathbb{N}}$

satisfy:

$t \in{\mathbb{N}}$

satisfy:

-

(1)

$d \geqslant \tilde{d}$

,

$d \geqslant \tilde{d}$

, -

(2)

$\eta d \lt \ell \lt 8d$

,

$\eta d \lt \ell \lt 8d$

, -

(3)

$s \leqslant d^{1/4}$

,

$s \leqslant d^{1/4}$

, -

(4)

$t \leqslant \dfrac{\tilde{\alpha }\log d}{\log \log d}$

,

$t \leqslant \dfrac{\tilde{\alpha }\log d}{\log \log d}$

, -

(5)

$\dfrac{1}{\log ^5d} \lt \eta \lt \dfrac{1}{\log d}.$

$\dfrac{1}{\log ^5d} \lt \eta \lt \dfrac{1}{\log d}.$

Then whenever

![]() $G$

is a graph and

$G$

is a graph and

![]() $\mathcal{H} = (L, H)$

is a DP-cover of

$\mathcal{H} = (L, H)$

is a DP-cover of

![]() $G$

such that

$G$

such that

-

(6)

$H$

is

$H$

is

$K_{s,t}$

-free,

$K_{s,t}$

-free,

-

(7)

$\Delta (H) \leqslant d$

,

$\Delta (H) \leqslant d$

, -

(8)

$|L(v)| \geqslant \ell$

for all

$|L(v)| \geqslant \ell$

for all

$v \in V(G)$

,

$v \in V(G)$

,

there exist a partial proper

![]() $\mathcal{H}$

-colouring

$\mathcal{H}$

-colouring

![]() $\varphi$

and an assignment of subsets

$\varphi$

and an assignment of subsets

![]() $L'(v) \subseteq L_\varphi (v)$

to each

$L'(v) \subseteq L_\varphi (v)$

to each

![]() $v \in V(G) \setminus \mathrm{dom}(\varphi )$

such that, setting

$v \in V(G) \setminus \mathrm{dom}(\varphi )$

such that, setting

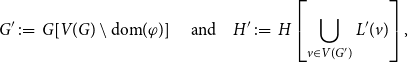

\begin{equation*} G' \;:\!=\; G\!\left [V(G)\setminus \mathrm {dom}(\varphi )\right ] \quad \text {and} \quad H' \;:\!=\; H\left [\bigcup _{v \in V(G')} L'(v)\right ], \end{equation*}

\begin{equation*} G' \;:\!=\; G\!\left [V(G)\setminus \mathrm {dom}(\varphi )\right ] \quad \text {and} \quad H' \;:\!=\; H\left [\bigcup _{v \in V(G')} L'(v)\right ], \end{equation*}

we get that for all

![]() $v \in V(G')$

,

$v \in V(G')$

,

![]() $c \in L'(v)$

and

$c \in L'(v)$

and

![]() $\beta = 1/(25t)$

:

$\beta = 1/(25t)$

:

To prove Lemma 3.1, we will carry out a variant of the “Wasteful Coloring Procedure”, as described in [Reference Molloy and Reed22, Chapter 12]. As mentioned in the introduction, essentially the same procedure was used by Kim [Reference Kim18] to bound the chromatic number of graphs of girth at least $5$. We describe this procedure in terms of DP-colouring below:

In §§4 and 5, we will show that, with positive probability, the output of the Wasteful Coloring Procedure satisfies the conclusion of Lemma 3.1. With this procedure in mind, we can now give an intuitive interpretation of the functions defined at the beginning of this section. Suppose $G$ and $\mathcal{H} = (L,H)$ satisfy $|L(v)| = \ell$ for all $v \in V(G)$ and $\Delta (H) = d$. If we run the Wasteful Coloring Procedure with these $G$ and $\mathcal{H}$, then $\textsf{keep}(d,\ell, \eta )$ is the probability that a colour $c \in L(v)$ is kept by $v$ (i.e., $c \in K(v)$), while $\textsf{uncolor}(d, \ell, \eta )$ is approximately the probability that a vertex $v \in V(G)$ is uncoloured (i.e., $\varphi (v) = \textsf{blank}$). The details of these calculations are given in §4. Note that, assuming the terms $\ell ^{1-\beta }$ and $d^{1-\beta }$ in the definitions of $\ell '(d, \ell, \eta, \beta )$ and $d'(d, \ell, \eta, \beta )$ are small, we can write

\begin{equation*} \frac{d'(d, \ell, \eta, \beta )}{\ell '(d, \ell, \eta, \beta )} \approx \frac{\textsf{keep}(d, \ell, \eta )\, \textsf{uncolor}(d, \ell, \eta )\, d}{\textsf{keep}(d, \ell, \eta )\, \ell } = \textsf{uncolor}(d, \ell, \eta )\, \frac{d}{\ell }. \end{equation*}

In other words, an application of Lemma 3.1 reduces the ratio $d/\ell$ roughly by a factor of $\textsf{uncolor}(d, \ell, \eta )$. In §6 we will show that Lemma 3.1 can be applied iteratively to eventually make the ratio $d/\ell$ less than, say, $1/8$, after which the colouring can be completed using the following proposition:

Proposition 3.2.

Let $G$ be a graph with a DP-cover $\mathcal{H} = (L,H)$ such that $|L(v)| \geqslant 8d$ for every $v \in V(G)$, where $d$ is the maximum degree of $H$. Then there exists a proper $\mathcal{H}$-colouring of $G$.

This proposition is standard and proved using the Lovász Local Lemma. Its proof in the DP-colouring framework can be found, e.g., in [Reference Bernshteyn6, Appendix].
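The remark that each application of Lemma 3.1 shrinks $d/\ell$ roughly by a factor of $\textsf{uncolor}$ can be illustrated by iterating only the main terms of $\ell'$ and $d'$. The sketch below makes two simplifying assumptions that are ours, not the paper's: the error terms $\ell^{1-\beta}$ and $d^{1-\beta}$ are dropped, and $\eta$ is kept fixed (the value $\eta = 0.1$ keeps the number of rounds small but does not satisfy condition (5) of Lemma 3.1). The actual parameter choices are made in §6.

```python
import math

def idealised_rounds(d, ell, eta, target=1 / 8, max_rounds=100_000):
    """Iterate (d, ell) -> (keep*uncolor*d, keep*ell), ignoring error terms.

    Returns the number of rounds until d/ell < target and the final (d, ell).
    """
    rounds = 0
    while d / ell >= target:
        if rounds >= max_rounds:
            raise RuntimeError("ratio did not drop below the target")
        k = math.exp(d * math.log1p(-eta / ell))  # keep(d, ell, eta)
        u = 1.0 - eta * k                          # uncolor(d, ell, eta)
        d, ell = k * u * d, k * ell                # main terms of d' and ell'
        rounds += 1
    return rounds, d, ell

rounds, d_final, ell_final = idealised_rounds(1e9, 2e8, 0.1)
print(rounds, d_final / ell_final)
```

Since $\textsf{uncolor} \geqslant 1 - \eta$, each idealised round shrinks the ratio by a factor of at least $0.9$ here, so reaching $1/8$ from $d/\ell = 5$ takes a few dozen rounds in this toy setting.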

For the reader already familiar with some of the applications of the “Rödl Nibble” method to graph colouring problems, let us comment on one technical feature of our Wasteful Coloring Procedure. For the analysis of constructions of this sort, it is often beneficial to assume that every colour has the same probability of being kept. It is clear, however, that in our procedure the probability that a colour $c \in V(H)$ is kept depends on the degree of $c$ in $H$: the larger the degree, the higher the chance that $c$ gets removed. The usual way of addressing this issue is to introduce additional randomness in the form of “equalizing coin flips” that artificially increase the probability of removing colours of low degree. (See, for example, the procedure in [Reference Molloy and Reed22, Chapter 12].) However, we can avoid the added technicality of dealing with equalizing coin flips by leveraging the generality of the DP-colouring framework. Namely, by replacing $H$ with a supergraph, we may always arrange $H$ to be $d$-regular (see Proposition 4.1). This allows us to assume that every colour has the same probability of being kept, even without extra coin flips. This way of simplifying the analysis of probabilistic colouring constructions was introduced by Bonamy, Perrett and Postle in [Reference Bonamy, Perrett and Postle8] and nicely exemplifies the benefits of working with DP-colourings compared to the classical list-colouring setting.

4. Proof of Lemma 3.1

In this section, we present the proof of Lemma 3.1, apart from one technical lemma that will be established in §5. We start with the following proposition, which allows us to assume that the given DP-cover of $G$ is $d$-regular.

Proposition 4.1.

Let $G$ be a graph and $(L,H)$ be a DP-cover of $G$ such that $\Delta (H) \leqslant d$ and $H$ is $K_{s,t}$-free for some $d$, $s$, $t \in{\mathbb{N}}$. Then there exist a graph $G^*$ and a DP-cover $(L^*, H^*)$ of $G^\ast$ such that the following statements hold:

- $G$ is a subgraph of $G^*$,
- $H$ is a subgraph of $H^*$,
- for all $v \in V(G)$, $L^\ast (v) = L(v)$,
- $H^*$ is $K_{s,t}$-free,
- $H^*$ is $d$-regular.

Proof. Set $N = \sum _{c \in V(H)}\!(d-\deg _H\!(c))$ and let $\Gamma$ be an $N$-regular graph with girth at least $5$. (Such $\Gamma$ exists by [Reference Imrich14, Reference Margulis20].) Without loss of generality, we may assume that $V(\Gamma ) = \{1,\ldots,k\}$, where $k \;:\!=\; |V(\Gamma )|$. Take $k$ vertex-disjoint copies of $G$, say $G_1$, …, $G_k$, and let $(L_i, H_i)$ be a DP-cover of $G_i$ isomorphic to $(L,H)$. Define $X_i \;:\!=\; \{c\in V(H_i)\,:\, \deg _{H_i}\!(c) \lt d\}$ for every $1\leqslant i \leqslant k$. The graphs $G^\ast$ and $H^\ast$ are obtained from the disjoint unions of $G_1$, …, $G_k$ and $H_1$, …, $H_k$ respectively by performing the following sequence of operations once for each edge $ij \in E(\Gamma )$, one edge at a time:

- (1) Pick arbitrary vertices $c \in X_i$ and $c' \in X_j$.
- (2) Add the edge $cc'$ to $E(H^*)$ and the edge $L_i^{-1}\!(c)L_j^{-1}\!(c')$ to $E(G^*)$.
- (3) If $\deg _{H^*}\!(c) = d$, remove $c$ from $X_i$.
- (4) If $\deg _{H^*}\!(c') = d$, remove $c'$ from $X_j$.

Since $\Gamma$ is $N$-regular, each index $i$ is involved in exactly $N$ of these operations, and each of them decreases the sum $\sum _{c \in V(H_i)}\! (d - \deg _{H^\ast }\!(c))$ by exactly one. As this sum is initially $N$, it decreases to $0$; in particular, $X_i$ is never empty when a vertex has to be picked from it, and the resulting graph $H^\ast$ is $d$-regular. Furthermore, since $\Gamma$ has girth at least $5$ and $H$ is $K_{s,t}$-free, $H^*$ is also $K_{s,t}$-free. Hence, if we define $L^*\colon V(G^*) \to 2^{V(H^*)}$ so that $L^*(v) = L_i(v)$ for all $v \in V(G_i)$, then $(L^*,H^*)$ is a DP-cover of $G^*$ satisfying all the requirements.
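The construction in this proof is explicit enough to run. The Python sketch below carries out operations (1)–(4) on a toy instance of our own choosing: $G$ is a single edge $xy$ with lists $L(x) = \{a_1, a_2\}$ and $L(y) = \{b_1, b_2\}$, $H$ consists of the single edge $a_1b_1$, and $d = 1$, so that $N = 2$; for $\Gamma$ we take the $5$-cycle, which is $2$-regular with girth $5$. Encoding the vertices and colours of the $i$-th copy as pairs `(i, name)` is an implementation choice. The sketch checks only $d$-regularity and that every colour edge joins lists of adjacent vertices of $G^*$, not $K_{s,t}$-freeness.

```python
from collections import Counter

def regularize(G_edges, L, H_edges, d, gamma_edges, k):
    """Operations (1)-(4) from the proof of Proposition 4.1, on k copies.

    gamma_edges must be the edges of an N-regular graph on {0, ..., k-1}.
    Returns (edges of G*, L*, edges of H*); copy-i objects are pairs (i, name).
    """
    owner = {c: v for v, cs in L.items() for c in cs}   # the map L^{-1}
    G_star = {((i, a), (i, b)) for i in range(k) for a, b in G_edges}
    H_star = {((i, a), (i, b)) for i in range(k) for a, b in H_edges}
    L_star = {(i, v): [(i, c) for c in cs] for i in range(k) for v, cs in L.items()}
    deg = Counter(c for edge in H_star for c in edge)
    # X_i: colours of copy i whose degree is still below d.
    X = {i: [(i, c) for c in owner if deg[(i, c)] < d] for i in range(k)}
    for i, j in gamma_edges:
        c, c2 = X[i][0], X[j][0]                         # (1) arbitrary choice
        H_star.add((c, c2))                              # (2) new edge of H*
        G_star.add(((i, owner[c[1]]), (j, owner[c2[1]])))  # ... and of G*
        for col, side in ((c, i), (c2, j)):              # (3) and (4)
            deg[col] += 1
            if deg[col] == d:
                X[side].remove(col)
    return G_star, L_star, H_star

# Hypothetical toy instance: a2 and b2 have degree 0 < d = 1, so N = 2.
G_edges = [("x", "y")]
L = {"x": ["a1", "a2"], "y": ["b1", "b2"]}
H_edges = [("a1", "b1")]
cycle5 = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0)]
G_star, L_star, H_star = regularize(G_edges, L, H_edges, 1, cycle5, 5)

deg = Counter(c for edge in H_star for c in edge)
print(sorted({deg[c] for cs in L_star.values() for c in cs}))
```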

Suppose $d$, $\ell$, $s$, $t$, $\eta$ and a graph $G$ with a DP-cover $\mathcal{H} = (L, H)$ satisfy the conditions of Lemma 3.1. By removing some vertices from $H$ if necessary, we may assume that $|L(v)| = \ell$ for all $v \in V(G)$. Furthermore, by Proposition 4.1, we may assume that $H$ is $d$-regular. Since we may delete all the edges of $G$ whose corresponding matchings in $H$ are empty, we may also assume that $\Delta (G) \leqslant \ell d$ (each remaining neighbour of $v$ accounts for at least one of the at most $\ell d$ edges of $H$ incident to $L(v)$). Suppose we have carried out the Wasteful Coloring Procedure with these $G$ and $\mathcal{H}$. As in the statement of Lemma 3.1, we let

\begin{equation*} G' \;:\!=\; G[V(G)\setminus \mathrm {dom}(\varphi )] \quad \text {and} \quad H' \;:\!=\; H\left [\bigcup _{v \in V(G')} L'(v)\right ]. \end{equation*}

For each $v \in V(G)$ and $c \in V(H)$, we define the random variables

Note that if $v \in V(G')$, then $\ell '(v) = |L'(v)|$; similarly, if $c \in V(H')$, then $d'(c)= \deg _{H'}\!(c)$. As in Lemma 3.1, we let $\beta \;:\!=\; 1/(25t)$. For ease of notation, we will write $\textsf{keep}$ to mean $\textsf{keep}(d,\ell,\eta )$, $\textsf{uncolor}$ to mean $\textsf{uncolor}(d, \ell, \eta )$, etc. We will show, for $d$ large enough, that:

Lemma 4.2.

For all $v \in V(G)$, $\mathbb{E}[\ell '(v)] = {\textsf{keep}}\, \ell$.

Lemma 4.3.

For all $v \in V(G)$, $\mathbb{P}\Big [\big |\ell '(v) - \mathbb{E}[\ell '(v)]\big | \gt \ell ^{1-\beta }\Big ] \leqslant d^{-100}$.

Lemma 4.4.

For all $c \in V(H)$, $\mathbb{E}[d'(c)] \leqslant {\textsf{keep}}\, {\textsf{uncolor}}\, d + d/\ell$.

Lemma 4.5.

For all $c \in V(H)$, $\mathbb{P}\big [d'(c) \gt \mathbb{E}[d'(c)] - d/\ell + d^{1-\beta }\big ] \leqslant d^{-100}.$

Together, these lemmas will allow us to complete the proof of Lemma 3.1, as follows.

Proof of Lemma 3.1. Take $\tilde d$ so large that Lemmas 4.2–4.5 hold. Define the following random events for every vertex $v \in V(G)$ and every colour $c\in V(H)$:

\begin{align*} A_v &\;:\; \ell '(v) \leqslant \textsf{keep}\, \ell - \ell ^{1-\beta }, \\ B_{c} &\;:\; d'(c) \geqslant \textsf{keep}\, \textsf{uncolor}\, d + d^{1-\beta }. \end{align*}

We will use the Lovász Local Lemma, Theorem 2.1. By Lemma 4.2 and Lemma 4.3, we have:

\begin{align*} \mathbb{P}[A_v] &= \mathbb{P}\big [\ell '(v) \leqslant \textsf{keep} \, \ell - \ell ^{1-\beta }\big ]\\ &= \mathbb{P}\big [\ell '(v) \leqslant \mathbb{E}[\ell '(v)] - \ell ^{1-\beta }\big ] \\ & \leqslant d^{-100}. \end{align*}


By Lemma 4.4 and Lemma 4.5, we have:

\begin{align*} \mathbb{P}[B_{c}] &= \mathbb{P}\big [d'(c) \geqslant \textsf{keep} \, \textsf{uncolor} \, d + d^{1-\beta }\big ]\\ & \leqslant \mathbb{P}\big [d'(c) \geqslant \mathbb{E}[d'(c)] - (d/\ell )+ d^{1-\beta }\big ] \\ & \leqslant d^{-100}. \end{align*}


Let $p \;:\!=\; d^{-100}$. Note that each of the events $A_v$ and $B_{c}$ with $c \in L(v)$ is mutually independent of all events of the form $A_u$ and $B_{c'}$ where $c' \in L(u)$ and $u \in V(G)$ is at distance at least $5$ from $v$. Since we are assuming that $\Delta (G) \leqslant \ell d$, there are at most $1 + (\ell d)^4$ vertices in $G$ at distance at most $4$ from $v$. For each such vertex $u$, there are $\ell + 1$ events corresponding to $u$ and the colours in $L(u)$, so we can let $d_{LLL} \;:\!=\; (\ell +1)(1 + (\ell d)^4) = O(d^9)$, where we use $\ell \lt 8d$. Assuming $d$ is large enough, we have

so, by Theorem 2.1, with positive probability none of the events $A_v$, $B_{c}$ occur, as desired.
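The dependency count above is easy to check numerically. The sketch below assumes that Theorem 2.1 has the common symmetric form $e\,p\,(d_{LLL}+1) \leqslant 1$ (its exact statement is not repeated in this section) and evaluates this condition with logarithms, since $d^{100}$ overflows floating point already for moderate $d$. It takes the worst case $\ell = 8d - 1$ allowed by condition (2) of Lemma 3.1; the function names are ours.

```python
import math

def d_lll(d, ell):
    """(ell + 1) * (1 + (ell*d)^4): events within distance 4, counted as above."""
    return (ell + 1) * (1 + (ell * d) ** 4)

def symmetric_lll_holds(d, ell):
    """Check e * p * (d_LLL + 1) <= 1 with p = d^(-100), via logarithms.

    This assumes the symmetric form of the Local Lemma; math.log accepts the
    exact (possibly huge) Python integer d_LLL + 1.
    """
    lhs = 1.0 + math.log(d_lll(d, ell) + 1)  # log(e) + log(d_LLL + 1)
    return lhs <= 100 * math.log(d)          # log(1/p) = log(d^100)

for d in (2, 10, 10**6):
    print(d, symmetric_lll_holds(d, 8 * d - 1))
```

Because $\log d_{LLL} = 9\log d + O(1)$ while $\log(1/p) = 100\log d$, under this assumed form the check already passes for small $d$.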

The proofs of Lemmas 4.2–4.4 are fairly straightforward and similar to the corresponding parts of the argument in the girth-$5$ case (see [Reference Molloy and Reed22, Chapter 12]). We present them here.

Proof of Lemma 4.2. Consider any $c \in L(v)$. We have $c \in K(v)$ exactly when $N_H(c) \cap \textsf{col}(A) = \emptyset$, i.e., when no neighbour of $c$ is assigned to its underlying vertex. Since $H$ is $d$-regular and $c$ has at most one neighbour in each list $L(u)$, the $d$ neighbours of $c$ lie in distinct lists; hence the events that they are assigned to their underlying vertices are independent, and each has probability $\eta /\ell$. Therefore the probability that $c \in K(v)$ is $\left (1 -\eta/\ell \right )^{d} = \textsf{keep}$. By linearity of expectation, it follows that $\mathbb{E}[\ell '(v)] = \textsf{keep}\, \ell$.
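Lemma 4.2 can be checked empirically. The sketch below simulates only the part of the procedure that determines $K(v)$, as we read it from the calculations in this section: each vertex joins $A$ independently with probability $\eta$, each $u \in A$ picks $\textsf{col}(u)$ uniformly from $L(u)$, and a colour is kept if none of its neighbours in $H$ lies in $\textsf{col}(A)$. As a test case we use a hypothetical cover of our own choosing, namely the ordinary list-colouring cover of a $4$-regular circulant graph, where $L(v) = \{v\} \times \{0, \dots, \ell-1\}$ and $(u, i) \sim (v, i)$ for $uv \in E(G)$, so that $H$ is $d$-regular with $d = 4$.

```python
import random

def circulant(n, offsets):
    """Neighbour lists of the circulant graph on Z_n with the given offsets."""
    return {v: [(v + s) % n for s in offsets] + [(v - s) % n for s in offsets]
            for v in range(n)}

def one_round(nbrs, ell, eta, rng):
    """Activation step on the cover L(v) = {v} x range(ell), (u, i) ~ (v, i).

    Returns K: vertex -> set of kept colour indices.
    """
    # col is defined exactly on the activated set A.
    col = {v: rng.randrange(ell) for v in nbrs if rng.random() < eta}
    # (v, i) is removed iff some neighbour u of v is in A with col(u) = i.
    return {v: set(range(ell)) - {col[u] for u in nbrs[v] if u in col}
            for v in nbrs}

def average_kept_fraction(n, offsets, ell, eta, trials, seed=0):
    """Empirical average of l'(v)/ell over all vertices and trials."""
    rng = random.Random(seed)
    nbrs = circulant(n, offsets)
    total = 0
    for _ in range(trials):
        K = one_round(nbrs, ell, eta, rng)
        total += sum(len(K[v]) for v in nbrs)
    return total / (trials * n * ell)

d, ell, eta = 4, 6, 0.5  # offsets (1, 2) give a 4-regular graph
estimate = average_kept_fraction(200, (1, 2), ell, eta, trials=200)
print(estimate, (1 - eta / ell) ** d)  # empirical vs. keep(d, ell, eta)
```

The empirical fraction should be close to $\textsf{keep} = (11/12)^4 \approx 0.706$ for these toy parameters.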

Proof of Lemma 4.3. It is easier to consider the random variable $r(v) \;:\!=\; \ell - \ell '(v)$, the number of colours removed from $L(v)$. We will use Theorem 2.3, Talagrand’s Inequality. Order the colours in $L(u)$ for each $u \in N_G(v)$ arbitrarily. Let $T_u$ be the random variable that is equal to $0$ if $u \not \in A$ and to $i$ if $u \in A$ and $\textsf{col}(u)$ is the $i$-th colour in $L(u)$. Then $T_u$, $u \in N_G(v)$, is a list of independent trials whose outcomes determine $r(v)$. Changing the outcome of any one of these trials can affect $r(v)$ by at most $1$, since $\textsf{col}(u)$ has at most one neighbour in $L(v)$. Furthermore, if $r(v) \geqslant s$ for some $s$, then this fact can be certified by the outcomes of $s$ of these trials. Namely, for each removed colour $c \in L(v) \setminus K(v)$, we take the trial $T_u$ corresponding to any vertex $u \in N_G(v)$ such that $u \in A$ and $\textsf{col}(u)$ is adjacent to $c$ in $H$. Thus, we can now apply Theorem 2.3 with $\gamma =1$, $r=1$ to get:

\begin{align*} \mathbb{P}\Big [\big |\ell '(v) - \mathbb{E}[\ell '(v)]\big | \gt \ell ^{1-\beta }\Big ] &=\mathbb{P}\Big [\big |r(v) - \mathbb{E}[r(v)]\big | \gt \ell ^{1-\beta }\Big ] \\ &= \mathbb{P}\Big [\big |r(v) - \mathbb{E}[r(v)]\big | \gt \frac{\ell ^{1-\beta }}{2} + \frac{\ell ^{1-\beta }}{2}\Big ] \\ &\leqslant \mathbb{P}\Big [\big |r(v) - \mathbb{E}[r(v)]\big | \gt \frac{\ell ^{1-\beta }}{2} + 60\sqrt{\mathbb{E}[r(v)]}\Big ] \\ &\leqslant 4\exp \left (-\frac{\ell ^{2(1-\beta )}}{32\, (1-\textsf{keep})\, \ell }\right ) \\ &\leqslant 4\exp{\left (-\frac{\ell ^{1-2\beta }}{32}\right )} \\ &\leqslant 4\exp{\left (-\frac{(d/\log ^5d)^{1-2\beta }}{32}\right )} \\ &\leqslant d^{-100}, \end{align*}


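where the first and last inequalities hold for $d$ large enough, the third uses $1 - \textsf{keep} \leqslant 1$, and the fourth uses $\ell \gt \eta d \gt d/\log ^5 d$, which follows from conditions (2) and (5) of Lemma 3.1.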
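To get a feeling for how large $d$ must be in the last step of this chain, the sketch below compares the logarithm of $4\exp\!\big(-(d/\log^5 d)^{1-2\beta}/32\big)$ with that of $d^{-100}$ for $\beta = 1/(25t)$. It assumes that $\log$ denotes the natural logarithm, and it checks only the last inequality; the first one (which needs $\ell^{1-\beta}/2 \geqslant 60\sqrt{\mathbb{E}[r(v)]}$) is not examined here.

```python
import math

def last_step_holds(d, t):
    """Is 4*exp(-(d / log^5 d)^(1 - 2*beta) / 32) <= d^(-100), beta = 1/(25t)?"""
    beta = 1 / (25 * t)
    log_d = math.log(d)
    # log of (d / log^5 d)^(1 - 2*beta), kept in log form to avoid overflow.
    log_x = (1 - 2 * beta) * (log_d - 5 * math.log(log_d))
    lhs = math.log(4) - math.exp(log_x) / 32
    return lhs <= -100 * log_d

for k in (10, 12, 13, 20):
    print(k, last_step_holds(10.0 ** k, t=1))
```

For $t = 1$ the inequality fails at $d = 10^{12}$ and holds at $d = 10^{13}$; for these values of $d$, larger $t$ only helps, because $1 - 2\beta$ increases with $t$.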

Proof of Lemma 4.4. Let $v \in V(G)$ be the vertex with $c \in L(v)$, and consider any $u \in N_G(v)$ and $c'\in L(u) \cap N_H(c)$. We need to bound the probability that $\varphi (u) = \textsf{blank}$ and $c' \in K(u)$. If $u \in A$ and $\textsf{col}(u) = c'$, then $c' \in K(u)$ means that $\textsf{col}(u)$ is kept, so $\varphi (u) \neq \textsf{blank}$; hence only the following two cases can occur.

Case 1: $u \notin A$ and $c' \in K(u)$. Since whether $c' \in K(u)$ is determined by the choices made at the neighbours of $u$, these two events are independent, so this case occurs with probability $(1-\eta ) \textsf{keep}$.

Case 2: $u \in A$, $\textsf{col}(u)=c'' \neq c'$, $\varphi (u) = \textsf{blank}$ and $c' \in K(u)$. In this case, there must be some $w \in N_G(u)$ such that $\textsf{col}(w) \sim c''$. Since $c' \in K(u)$, we must have $\textsf{col}(w) \not \sim c'$. For each $w \in N_G(u)$,

Therefore, we can write

\begin{align*} &\mathbb{P}\big [\varphi (u) = \textsf{blank}\, |\, \textsf{col}(u) = c'',\, c' \in K(u)\big ]\\ &=\, 1 - \left (1 - \frac{\eta }{\ell - \eta }\right )^d\\ &=\, 1 - \textsf{keep} \, \left (1 - \frac{\eta ^2}{(\ell - \eta )^2}\right )^d\\ &\leqslant \, 1 - \textsf{keep} + \textsf{keep} \frac{d\eta ^2}{(\ell -\eta )^2}\\ &\leqslant \, 1 - \textsf{keep} + \frac{1}{\ell }, \end{align*}


where the first inequality uses Bernoulli’s inequality $(1-x)^d \geqslant 1 - dx$ (valid for $0 \leqslant x \leqslant 1$), and the last inequality follows since $\textsf{keep} \leqslant 1$, $\eta d \lt \ell$, $\eta \lt 1/\log d$, and $d$ is large enough.

Putting the two cases together, we have:

\begin{align*} &\mathbb{P}\big [\varphi (u) = \textsf{blank}, \, c' \in K(u)\big ] \,\\&\leqslant \, (1-\eta )\, \textsf{keep} + \eta \, \left (1 - \frac{1}{\ell }\right )\, \textsf{keep} \, \left (1 - \textsf{keep} + \frac{1}{\ell }\right ) \\ &\leqslant \, \textsf{keep}\, \textsf{uncolor} + \frac{1}{\ell }. \end{align*}


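Finally, since $H$ is $d$-regular, $c$ has exactly $d$ neighbours $c'$, each lying in $L(u)$ for some $u \in N_G(v)$, so by linearity of expectation we conclude that

\begin{equation*} \mathbb{E}[d'(c)] \leqslant d\left (\textsf{keep}\, \textsf{uncolor} + \frac{1}{\ell }\right ) = \textsf{keep}\, \textsf{uncolor}\, d + \frac{d}{\ell }, \end{equation*}

proving the lemma.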
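The algebra in the two cases can be checked numerically. For a small grid of parameters satisfying conditions (2) and (5) of Lemma 3.1 (the grid is our own choice), the sketch below verifies the factorisation $1 - \eta/(\ell-\eta) = (1-\eta/\ell)\big(1 - \eta^2/(\ell-\eta)^2\big)$ raised to the power $d$, the Case 2 bound $1 - (1 - \eta/(\ell-\eta))^d \leqslant 1 - \textsf{keep} + 1/\ell$, and the combined bound $\textsf{keep}\,\textsf{uncolor} + 1/\ell$. Passing these finitely many checks is an illustration, not a proof.

```python
import math

def check_lemma_4_4(d, ell, eta):
    """Numerically check the Case 2 algebra and the combined bound of Lemma 4.4."""
    keep = math.exp(d * math.log1p(-eta / ell))
    uncolor = 1 - eta * keep
    # Case 2: (1 - eta/(ell - eta))^d and its factorisation through keep.
    survive = math.exp(d * math.log1p(-eta / (ell - eta)))
    factor = math.exp(d * math.log1p(-(eta / (ell - eta)) ** 2))
    identity_ok = math.isclose(survive, keep * factor, rel_tol=1e-9)
    case2_ok = 1 - survive <= 1 - keep + 1 / ell
    # Combine Case 1 and Case 2 as in the displayed computation.
    total = (1 - eta) * keep + eta * (1 - 1 / ell) * keep * (1 - keep + 1 / ell)
    total_ok = total <= keep * uncolor + 1 / ell
    return identity_ok and case2_ok and total_ok

# Hypothetical grid: 1/log^5 d < eta < 1/log d and eta*d < ell < 8d.
results = []
for d in (10**3, 10**4, 10**6):
    log_d = math.log(d)
    for eta in (0.9 / log_d, 0.5 / log_d, 2 / log_d**5):
        for ell in (int(eta * d) + 1, 2 * d, 8 * d - 1):
            results.append(check_lemma_4_4(d, ell, eta))
print(all(results), len(results))
```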

The proof of Lemma 4.5 is quite technical and will be given in §5. It is the only part of our argument that relies on the fact that $H$ is $K_{s,t}$-free. To explain why proving Lemma 4.5 is difficult, consider an arbitrary colour $c \in V(H)$. The value $d'(c)$ depends on which of the neighbours of $c$ in $H$ are kept. This, in turn, is determined by what happens to the neighbours of the neighbours of $c$. Since we are only assuming that $H$ is $K_{s,t}$-free, the neighbourhoods of the neighbours of $c$ can overlap with each other. Roughly speaking, we will need to carefully analyse the structure of these overlaps to make sure that Talagrand’s inequality can be applied.

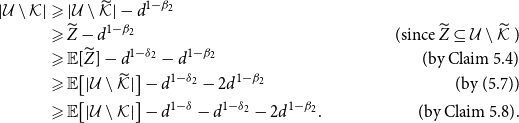

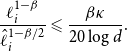

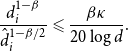

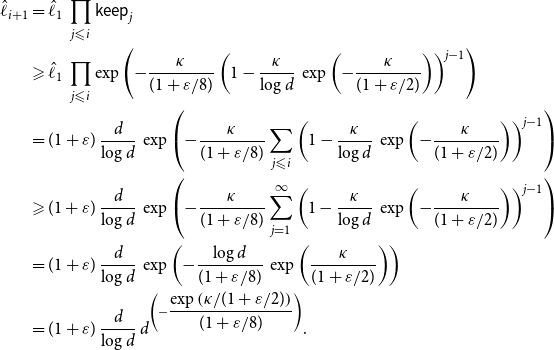

5. Proof of Lemma 4.5

Throughout this section, we shall use the following parameters, where $t$ is given in the statement of Lemma 3.1:

Fix a vertex $v \in V(G)$ and a colour $c \in L(v)$. We need to show that, with high probability, the random variable $d'(c)$ does not significantly exceed its expectation. To this end, we make the following definitions:

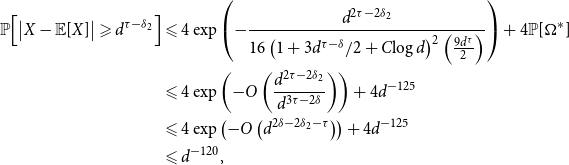

Then $d'(c) = |\mathcal{U} \cap \mathcal{K}|$. We will show that $|\mathcal{U}|$ is highly concentrated and that, with high probability, $|\mathcal{U} \setminus \mathcal{K}|$ is not much lower than its expected value. The identity $|\mathcal{U} \cap \mathcal{K}| = |\mathcal{U}| - |\mathcal{U}\setminus \mathcal{K}|$ will then give us the desired upper bound on $d'(c)$.
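The combination step can be written out as follows. Suppose that, on some event, $|\mathcal{U}| \leqslant \mathbb{E}\big [|\mathcal{U}|\big ] + a$ and $|\mathcal{U}\setminus \mathcal{K}| \geqslant \mathbb{E}\big [|\mathcal{U}\setminus \mathcal{K}|\big ] - b$, where $a, b \geqslant 0$ are placeholder deviations (the specific values used in the argument are fixed by the lemmas that follow). Since $\mathbb{E}\big [|\mathcal{U}|\big ] - \mathbb{E}\big [|\mathcal{U}\setminus \mathcal{K}|\big ] = \mathbb{E}\big [|\mathcal{U}\cap \mathcal{K}|\big ] = \mathbb{E}\big [d'(c)\big ]$ by linearity of expectation, on that event we have

\begin{align*} d'(c) = |\mathcal{U} \cap \mathcal{K}| &= |\mathcal{U}| - |\mathcal{U}\setminus \mathcal{K}| \\ &\leqslant \Big (\mathbb{E}\big [|\mathcal{U}|\big ] + a\Big ) - \Big (\mathbb{E}\big [|\mathcal{U}\setminus \mathcal{K}|\big ] - b\Big ) \\ &= \mathbb{E}\big [d'(c)\big ] + a + b, \end{align*}

and, by the union bound, the probability that this event fails is at most the sum of the two individual failure probabilities.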

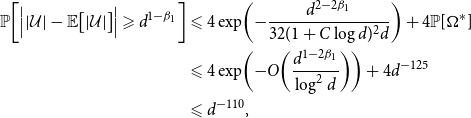

Lemma 5.1. $\mathbb{P}\bigg [\Big ||\mathcal{U}|- \mathbb{E}\big [|\mathcal{U}|\big ]\Big | \geqslant d^{1-\beta _1}\bigg ] \leqslant d^{-110}$.

Proof. We use Theorem 2.4, Exceptional Talagrand’s Inequality. Let $V_c \;:\!=\; L^{-1}(N_H(c))$. In other words, $V_c$ is the set of neighbours of $v$ whose lists include a colour corresponding to

![]() $c$