Automated black-box boundary value detection

- Published

- Accepted

- Received

- Academic Editor

- Antonia Lopes

- Subject Areas

- Algorithms and Analysis of Algorithms, Software Engineering

- Keywords

- Software testing, Boundary value detection, Boundary value analysis, Boundary value exploration, Program derivative

- Copyright

- © 2023 Dobslaw et al.

- Licence

- This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ Computer Science) and either DOI or URL of the article must be cited.

- Cite this article

- 2023. Automated black-box boundary value detection. PeerJ Computer Science 9:e1625 https://doi.org/10.7717/peerj-cs.1625

Abstract

Software systems typically have an input domain that can be subdivided into sub-domains, each of which generates similar or related outputs. Testing it on the boundaries between these sub-domains is critical to ensure high-quality software. Therefore, boundary value analysis and testing have been a fundamental part of the software testing toolbox for a long time and are typically taught early to software engineering students. Despite its many argued benefits, boundary value analysis for a given software specification or application is typically described in abstract terms. This allows for variation in how testers apply it and in the benefits they see. Additionally, its adoption has been limited since it requires a specification or model to be analysed. We propose an automated black-box boundary value detection method to support software testers in performing systematic boundary value analysis. This dynamic method can be utilized even without a specification or model. The proposed method is based on a metric referred to as the program derivative, which quantifies the level of boundariness of test inputs. By combining this metric with search algorithms, we can identify and rank pairs of inputs as good boundary candidates, i.e., inputs that are in close proximity to each other but with outputs that are far apart. We have implemented the AutoBVA approach and evaluated it on a curated dataset of example programs. Furthermore, we have applied the approach broadly to a sample of 613 functions from the base library of the Julia programming language. The approach could identify boundary candidates that highlight diverse boundary behaviours in over 70% of investigated systems under test. The results demonstrate that even a simple variant of the program derivative, combined with broad sampling and search over the input space, can identify interesting boundary candidates for a significant portion of the functions under investigation. In conclusion, we also discuss the future extension of the approach to encompass more complex systems under test cases and datatypes.

Introduction

Ensuring software quality is critical, and while much progress has been made to improve formal verification approaches, testing is still the de facto method and a crucial part of modern software development. A central problem in software testing is how to meaningfully and efficiently cover an input space that is typically very large. A fundamental, simplifying assumption is that even for such large input spaces, there are subsets of inputs, called partitions or sub-domains, that the software will handle in the same or similar way (Goodenough & Gerhart, 1975; Richardson & Clarke, 1985; Grindal, Offutt & Andler, 2005). Thus, if we can identify such partitions, we only need to select a few inputs from each partition to test and ensure they are correctly handled.

While many different approaches to partition testing have been proposed (Goodenough & Gerhart, 1975; Richardson & Clarke, 1985; Ostrand & Balcer, 1988; Hamlet & Taylor, 1990; Grochtmann & Grimm, 1993), one that comes naturally to many testers is boundary value analysis (BVA) and testing (Myers, 1979; White & Cohen, 1980; Clarke, Hassell & Richardson, 1982). It is based on the intuition that developers are more likely to get things wrong around the boundaries between input partitions, i.e., where there should be a change in how the input is processed and in the output produced (Clarke, Hassell & Richardson, 1982). By analysing a specification, testers can identify partitions and boundaries between them. They should then select test inputs on either side of these boundaries and thus, ideally, verify both correct behaviours in the partitions and that the boundary between them is in the expected, correct place (Clarke, Hassell & Richardson, 1982; British Computer Society, 2001). But note that identifying the boundaries, and thus partitions, is the critical step; a tester can then decide whether to focus only on them or sample some non-boundary inputs “inside” of each partition.

Empirical studies on the effectiveness of partition and boundary value testing do not provide a clear picture. While early work claimed that random testing was as or more effective (Hamlet & Taylor, 1990), they were later countered by studies showing clear benefits to BVA (Reid, 1997; Yin, Lebne-Dengel & Malaiya, 1997). A more recent overview of the debate also provided theoretical results on the effectiveness and discussed the scalability of random testing in relation to partition testing methods (Arcuri, Iqbal & Briand, 2011). Regardless of the relative benefits of the respective techniques, we argue that improving partition testing and boundary value analysis has both practical and scientific value; judging their value will ultimately depend on how applicable and automatic they can be made.

A problem with partition testing, in general, and BVA, in particular, is that there is no clear and objective method for identifying partitions or the boundaries between them. Already Myers (1979) pointed out the difficulty of presenting a “cookbook” method and that testers must be creative and adapt to the software being tested. Later work describes BVA and partition testing as relying on either a partition model (British Computer Society, 2001), categories/classifications of inputs or environment conditions (Ostrand & Balcer, 1988; Grochtmann & Grimm, 1993), constraints (Richardson & Clarke, 1985; Ostrand & Balcer, 1988), or checkpoints (Yin, Lebne-Dengel & Malaiya, 1997) that are all to be derived from the specification. But they do not guide this derivation step in detail. Several authors have also pointed out that existing methods do not give enough support to testers (Grindal, Offutt & Andler, 2005) and that BVA is, at its core, a manual and creative process that cannot be automated (Grochtmann & Grimm, 1993). More recent work can be seen as overcoming the problem by proposing equivalence partitioning and boundary-guided testing from formal models (Hübner, Huang & Peleska, 2019). However, this assumes that such models are(readily) available or can be derived and maintained without major costs.

One alternative is to view and provide tooling for using BVA as a white-box testing technique. Pandita et al. (2010) use instrumentation of control flow expressions and dynamic, symbolic execution to generate test cases that increase boundary value coverage (Kosmatov et al., 2004). However, it is unclear how such boundaries, internal to the code, relate to the boundaries that traditional, black-box BVA would find. Furthermore, it requires instrumentation and advanced tooling, which might be costly and unavailable. It should be noted that white-box testing is limited to situations where source code is available; black-box testing approaches do not have this limitation.

Here we address the core problem of how to automate black-box boundary value analysis. We build on our recent work that proposed a family of metrics to quantify the boundariness of software inputs (Feldt & Dobslaw, 2019) and combine it with search and optimization algorithms to detect promising boundary candidates automatically. These can then be (interactively) presented to testers and developers to help them explore meaningful boundary values and create corresponding test cases (Dobslaw, de Oliveira Neto & Feldt, 2020). Our search-based, black-box, and automated boundary value detection method does not require manual analysis of a specification nor the derivation of any intermediate models. It can even be used when no specifications nor models are available. Since it is based on generic metrics of boundariness, it can principally be applied even for software with non-numeric, structured, and complex inputs and/or outputs. However, for brevity and since the overall approach is novel, we focus on implementing our AutoBVA method on testing software with arbitrarily many numeric arguments but with any type of output. In future work, we will utilize the existing hooks and empirically evaluate AutoBVA for ever more complex software to better understand the critical parts and to understand to what extent manual intervention is required for higher-level software interfaces. In this article, our main contributions are:

-

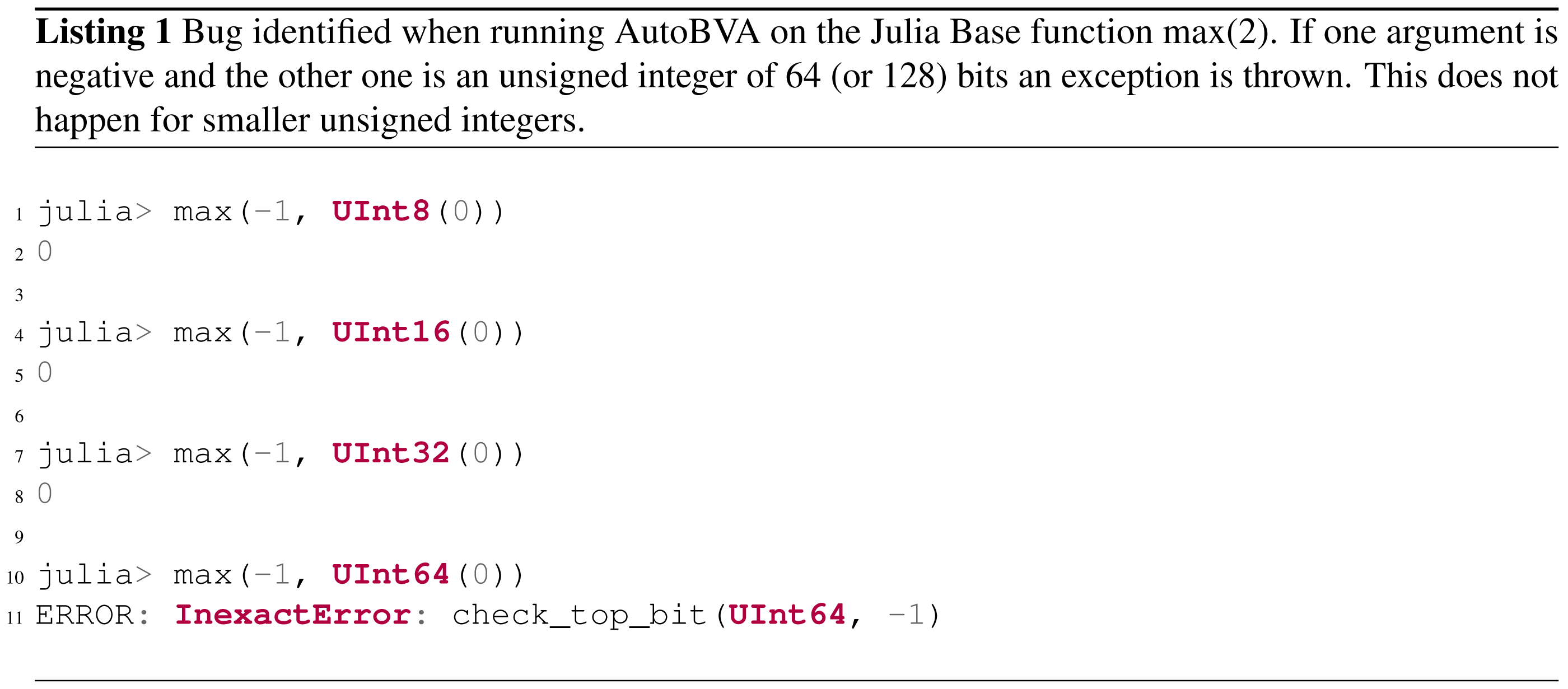

A generic method for and implementation of automated boundary value analysis (AutoBVA) that uses a simple and fast variant of the program derivative (Feldt & Dobslaw, 2019) for quickly searching for boundary behaviour. One of the boundaries found by AutoBVA revealed inconsistencies between the implementation and documentation in the Julia language. (https://github.com/JuliaLang/julia/pull/48973)

-

The comparison of two random sampling strategies within the tool: uniform and bituniform, as well as a more sophisticated heuristic local-search algorithm, and

-

An empirical evaluation of AutoBVA on four curated and 654 broadly sampled software under test (SUT) to understand its capabilities in detecting boundary candidates. The code and artefacts from our experiment are available in a replication package. (https://doi.org/10.5281/zenodo.7677012)

Our results show that the proposed method can be effective even when using a simple and fast program derivative. We also see that the choice of sampling strategy affects the efficiency of boundary candidate detection-uniform random sampling without the use of compatible type sampling does, in most cases, perform poorly (see Appendix A). For some investigated programs, the two heuristic local search strategies complement each other in the boundary-finding capabilities.

The rest of this article is organized as follows. After providing a more detailed background and overview of related work in the ‘Related Work’ section, we present AutoBVA in ‘Automated Boundary Value Analysis’. The empirical evaluation is detailed in ‘Empirical Evaluation’ followed by the results in ‘Results and Analysis’. The results are discussed in ‘Discussion’, and the article concludes in the ‘Conclusions’ section. Appendix A and B contain details of two screening studies that supported AutoBVA meta-parameter choices for its detection and summarisation phases, respectively. An earlier version of this article has previously been made available as a pre-print (https://arxiv.org/abs/2207.09065); consequently several parts of this article overlap heavily with that pre-print.

Related Work

In the following, we provide a brief background to boundary value analysis and the related partition testing concepts of domain testing and equivalence partitioning.

White & Cohen (1980) proposed a domain testing strategy that focuses on identifying boundaries between different (sub-)domains of the input space and ensuring that boundary conditions are satisfied. As summarised by Clarke, Hassell & Richardson (1982): “Domain testing exploits the often observed fact that points near the boundary of a domain are most sensitive to domain errors. The method proposes the selection of test data on and slightly off the domain boundary of each path to be tested.”. This is clearly connected to the testing method typically called boundary value analysis (BVA), first described more informally by Myers (1979) but later also included in software testing standards (Reid, 1997; Reid, 2000; British Computer Society, 2001). Jeng & Weyuker (1994) even describe domain testing as a sophisticated version of boundary value testing.

While White & Cohen (1980) explicitly said their goal was to “replace the intuitive principles behind current testing procedures by a methodology based on a formal treatment of the program testing problem” this has not led to automated tools, and a BVA is typically performed manually by human testers. Worth noting is also that while boundary value analysis is typically described as a black-box method (Myers, 1979; Reid, 1997; British Computer Society, 2001), requiring a specification, the White and Cohen papers are less clear on this, and their domain testing strategy could also be applied based on the control flow conditions of an actual implementation.

The original domain testing paper (White & Cohen, 1980) made several simplifying assumptions, such as the boundary being linear, defined by “simple predicates”, and that test inputs are continuous rather than discrete. While none of these limitations should be seen as fundamental, they do leave a practitioner in a difficult position since it is not explicit what the method entails when some or all of these assumptions are not fulfilled. Even though later formulations of BVA as a black-box method (British Computer Society, 2001) avoid these assumptions, they, more fundamentally, do not give concrete guidance to testers on how to identify boundaries or the partitions they define.

As one example, the BCS standard (British Computer Society, 2001) states that “(BVA) ...uses a model of the component that partitions the input and output values of the component into ordered sets with identifiable boundaries.” and that “a partition’s boundaries are normally defined by the values of the boundaries between partitions, however where partitions are disjoint the minimum, and maximum values in the range which makes up the partition are used” but do not give guidance on where to find or how to create such a partition model1 if none is already at hand. This problem was clear already from Myers’s original description of BVA (Myers, 1979), which stated, “It is difficult to present a cookbook for boundary value analysis since it requires a degree of creativity and a certain amount of specialisation to the problem at hand”.

Later efforts to formalise BVA ideas have not addressed this. For example, Richardson & Clarke (1985) partition analysis method makes a clear difference between partitions derived from the specification versus from the implementation and proposes to compare them but relies on the availability of a formal specification and does not detail how partitions can be derived from it. Jeng & Weyuker (1994) proposed a simplified and generalized domain testing strategy with the explicit goal of automation but only informally discussed how automation based on white-box analysis of path conditions could be done.

A very different approach is Pandita et al. (2010), which presents a white-box automated testing method to increase the Boundary Value Coverage (BVC) metric (originally presented by Kosmatov et al. (2004)). The core idea is to instrument the SUT with additional control flow expressions to detect values on either side of existing control flow expressions. An existing test generation technique to achieve branch coverage (Pandita et al. (2010) uses the dynamic symbolic execution test generator Pex) can then be used to find inputs on either side of a boundary. The experimental results were encouraging in that BVC could be increased by 23% on average and also lead to increases (11% on average) in the fault-detection capability of the generated test inputs.

There have been several studies that empirically evaluate BVA. While an early empirical study by Hamlet & Taylor (1990) found that random testing was more effective, its results were challenged in later work (Reid, 1997; Yin, Lebne-Dengel & Malaiya, 1997). Reid (1997) investigated three testing techniques on a real-world software project and found that BVA was more effective at finding faults than equivalence partitioning and random testing. Yin, Lebne-Dengel & Malaiya (1997) compared a method based on checkpoints, manually encoding qualitatively different aspects of the input space, combined with antirandom testing2 to different forms of random testing and found the former to be more effective. The checkpoint encoding can be seen as a manually derived model of essential properties of the input space and, thus, indirectly defines potentially overlapping partitions.

Even recent work on automating partition and boundary value testing has either been based on manual analysis or required a specification/model to be available. Hübner, Huang & Peleska (2019) recently proposed a novel equivalence class partitioning method based on formal models expressed in SysML. An SMT solver is used to sample test inputs inside or on the border of identified equivalence classes. They compared multiple variants of their proposed technique with conventional random testing. The one that sampled 50% of test inputs within and 50% on the boundaries between the equivalence partitions performed best as measured by mutation score.

Related work on input space modelling has also been done to improve combinatorial testing. Borazjany et al. (2013) proposed to divide the problem into two phases, where the first models the input structure while the latter models the input parameters. They propose that ideas from partition testing can be used for the latter stage. However, for analysing the input structure, they propose a manual process that can support only two types of input structures: flat (e.g., for command line parameters that have no apparent relation) or graph-structured (e.g., for XML files for which the tree structure can be exploited).

We have previously proposed a family of metrics to quantify the boundariness of pairs of software inputs (Feldt & Dobslaw, 2019). This generalises the classical definition of functional derivatives in mathematics, which we call program derivatives. Instead of using a standard subtraction (“-”) operator to measure the distance between inputs and outputs, we leverage general, information theoretical results on quantifying distance and diversity. We have previously used such measures to increase and evaluate test diversity’s benefits (Feldt et al., 2008; Feldt et al., 2016). In a recent study, we used the program derivatives to explore input spaces and visualize boundaries for testers and developers (Dobslaw, de Oliveira Neto & Feldt, 2020). Here, we automate this approach by coupling it to search and optimization algorithms.

In summary, early results on partition and boundary value analysis/testing require a specification and do not provide detailed advice or any automated method to find boundary values. One automated method has been proposed, but it requires a system model of the SUT. One other method can automatically increase a coverage metric for boundary values but is white-box and requires both instrumentation of the SUT as well as advanced test generation based on symbolic execution. In contrast to existing research, we propose an automated, black-box method to identify boundary candidates that is simple to implement for integer arguments and conceptually extendable to arbitrary data, even with complex structures.

Automated Boundary Value Analysis

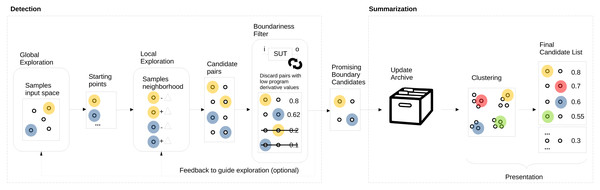

We propose to automate boundary value analysis by a detection method that outputs a set of input/output pairs that are then summarised and presented to testers. Figure 1 shows an overview of our proposed approach. The two main parts are detection (on the left), which produces a list of promising boundary candidates that are then summarised and presented to the tester (on the right). The boundary value detection method is based on two key elements: (1) search to explore (both globally and locally) the input domain coupled with (2) the program derivative to quantify the boundariness of input pairs. While exploration acts as a new boundary candidate input pair generator, the program derivative acts as a filter and selects only promising candidate pairs for further processing. An archive is updated with the new pairs for summarisation, and only the unique and good boundary candidates are kept. The final list of promising candidates in the archive is then summarised and presented to the tester, who can select the most interesting, meaningful, or surprising ones to turn them into test cases or start a dialogue regarding the intent with the product owner. For the summarisation step, we propose using clustering to avoid showing multiple candidates that are very similar to each other.

Figure 1: AutoBVA framework for automated boundary value analysis.

In this section, we describe the three main parts of our approach: selection in ‘Selection: Program Derivative’, search/exploration in ‘Exploration: Generation of Candidate Pairs’, and summarisation in ‘Summarisation: validity-value similarity clustering’. Since selection is the most critical step, we first formally define the program derivative and exemplify its application by the simple bytecount SUT in ‘Example: program derivative for bytecount’. It follows an explanation of the AutoBD search/exploration with its global search component (‘Global Sampling’) and two alternative local search strategies ‘Local Neighbour Sampling’ (LNS) and ‘Boundary Crossing Search’ (BCS). Finally, the Summarisation section introduces our approach to categorizing boundary candidates into coarse-grained validity groups by taking advantage of output types to then cluster them individually.

Selection: program derivative

We argue that the critical problem in boundary value analysis is judging what is a boundary and what is not. If we could quantify how close to a boundary a given input is, we could then use a plethora of methods to find many such inputs and keep only the ones closest to a boundary. Together such a set could indicate where the boundaries of the software are or at least indicate areas closer to the actual boundaries.

In a previous work (Feldt & Dobslaw, 2019), we proposed the program derivative for quantifying boundariness. The idea is based on a generalisation of the classic definition of the derivative of a function in (mathematical) analysis. In analysis, the definition is typically expressed in terms of one point, x, and a delta value, h, which together define a second point after summation. The derivative is then the limit as the delta value approaches zero:

The derivative measures the sensitivity to a change in the function given a change in the input value. A large (absolute value of a) derivative indicates that the function changes a lot, even for a minimal input change. If the function f here instead was the SUT, and the slight change in inputs would cross a boundary, it is reasonable that the output would also change more than if the change did not cross a boundary. We could then use this program derivative to screen for input pairs that are good candidates to cross the boundaries of a program.

The key to generalizing from mathematical functions to programs is to realize that programs typically have many more than one input, and their types can differ greatly from numbers. Also, there can be many outputs, and their types might vary from the types of inputs. Instead of simply using subtraction (“-”) both in the numerator and denominator, we need two distance functions, one for the outputs (do) and one for the inputs (di). Also, rather than finding the closest input to calculate the derivative of a single input, for our purposes here, we only need to quantify the boundariness of any two individual inputs. We thus define the program difference quotient (PDQ) for program P and inputs3 a and b as Feldt & Dobslaw (2019):

Since the PDQ is parameterized on the input and output distance functions, this defines not a single but a whole family of different measures. A tester can choose distance functions to capture meaningful output differences and/or inputs. In the original program derivative paper, Feldt & Dobslaw (2019), we argued for compression-based distance functions as a good and general choice. However, a downside with these is that they can be relatively costly to calculate, which could be a hindrance when used in a search-based loop, potentially requiring many distance calculations. Also, compression-based distances using mainstream string compressors such as zlib or bzip2 might not work well for short strings, as commonly seen in testing.

In this work, we thus use one of the least costly output distance functions one can think of: strlendist as the difference in length of outputs when they are printed as strings. This distance function works regardless of the type of output involved. A downside is that it is coarse-grained and will not detect smaller differences in outputs of the same length. Still, if a simple and fast distance function can suffice to detect boundaries, this can be a good baseline for further investigation. Also, our framework is flexible and can use multiple distance functions for different purposes in its different components. For example, one could use strlendist during search and exploration while using more fine-grained NCD-based output distance when updating the archive or during summarisation (see Fig. 1).

For the input distance function, this will typically vary depending on the SUT. We can use simple vector functions such as Euclidean distance if inputs can be represented as numbers or a vector of numbers. For more complex input types, one can use string-based distance functions like Normalised Compression Distance (NCD) (Feldt et al., 2008; Feldt et al., 2016; Feldt & Dobslaw, 2019) or even simpler ones like Jaccard or the related Overlap Coefficient distance (Jaccard, 1912).

Example: program derivative for bytecount

We illustrate our framework with the simple bytecount function that is one of the most copied snippets of code on Stack Overflow but also known to contain a bug (Baltes & Diehl, 2019; Lundblad, 2019).4 bytecount is a function that translates an input number of bytes into a human-readable string with the appropriate unit, e.g., “MB” for megabytes etc. For example, for an input of 2,099, it returns the output “2.1 kB”, and for the input 9950001, it returns “10.0 MB”.

Table 1 shows a set of manually selected examples of boundary candidate pairs, using a single input distance function (subtraction) but two different output distances: strlendist and Jacc(1), the Jaccard distance based on 1-grams (Jaccard, 1912). The Jaccard distance can approximate compression distances but is also fast to calculate and applicable for short strings. Correspondingly, in our example table, there are two different PDQ values, and we have sorted them in descending order based on the PDQ2, i.e., that uses the Jacc(1) output distance function.

| Row | Input 1 | Input 2 | Output 1 | Output 2 | do1 (strlendist) | do2 (Jacc(1)) | di | PDQ1 | PDQ2 |

|---|---|---|---|---|---|---|---|---|---|

| 1 | 9 | 10 | 9B | 10B | 1 | 0.75 | 1 | 1 | 0.75 |

| 2 | 999949999 | 999950000 | 999.9 MB | 1.0 GB | 2 | 0.63 | 1 | 2 | 0.63 |

| 3 | 99949 | 99950 | 99.9 kB | 100.0 kB | 1 | 0.43 | 1 | 1 | 0.43 |

| 4 | 99949 | 99951 | 99.9 kB | 100.0 kB | 1 | 0.43 | 2 | 0.50 | 0.21 |

| 5 | 99951 | 99952 | 100.0 kB | 100.0 kB | 0 | 0.0 | 1 | 0.0 | 0.0 |

| 6 | 99948 | 99949 | 99.9 kB | 99.9 kB | 0 | 0.0 | 1 | 0.0 | 0.0 |

Starting from the bottom of the table, on row 6, the bytecount output is the same for the input pair (99948, 99949). This leads to PDQ values of 0.0 regardless of the output distance used. The PDQ values are zero also for the example on row 5; even though the output has changed compared to row 6, they are still the same within the pair. We are thus in a different partition since the outputs differ from the ones on row 6, but we are not crossing any clear boundary, and the PDQ values are still zero.

The example in row 4 shows a potential boundary crossing. Even though the input distance is now greater, at 2, the outputs differ, so both PDQ values are non-zero. However, this example pair can be improved further by subtracting 1 from 99951 to get the pair (99949, 99950) shown in row 3. Since the denominator in the PDQ calculation is smaller, the PDQ value is higher, and we consider it a better boundary candidate. In fact, it is the input pair with the highest PDQ value of those with these two outputs; thus, it can be considered the optimal input pair to show the “99.9 kB” to “100.0 kB” boundary.

Finally, the examples on rows 2 and 1 show input pairs for which the two PDQ measures used differ in ranking. While we can agree that both of these input pairs detect boundaries, different testers might have different preferences on which one is the more preferred. Given that PDQ1, using the bytecount output distance is so simple and quick to compute, we will use that in the experiments of this study. Future work can explore trade-offs in the choice of distance functions as well as how to combine them. Note that regardless of how the input pairs have been found, they can be sorted and selected using different output distance functions when presented.

Exploration: generation of candidate pairs

While the program derivative can help evaluate whether an input pair is a good candidate to detect a boundary, there are many possible such pairs to consider. Thus, we need ways to explore the input space and propose good candidate pairs, i.e., that have high program derivative values. A natural way to approach this is as a search-based software engineering problem (Harman & Jones, 2001; Afzal, Torkar & Feldt, 2009; Feldt, 1998), with the program derivative as the goal/fitness function and a search algorithm that tries to optimize for higher program derivative values.

However, detecting the boundaries is insufficient to find and return one candidate pair. Most software will have multiple and different boundaries in their input domain. Furthermore, boundaries are typically stretched out over (consecutive) sets of inputs. The search and exploration procedure we chose should thus output a set of input pairs that are, ideally, spread out over the input domain (to find multiple boundaries) as well as over each boundary (to help testers identify where it is).

An additional concern when using search-based approaches is the shape of the fitness landscape, i.e., how the fitness value changes over the space being searched (Smith, Husbands & O’Shea, 2002; Yu & Miller, 2006). Many search and optimisation approaches assume, or at least benefit from, a smooth landscape, i.e., small steps in the space lead to only a tiny change in the fitness value. Whether we can assume this to be the case for our problem is unclear. The program derivative might be very high right at the boundary while showing minimal variation when inside the partitions on either side of the boundary. Worst case, this could be a kind of needle-in-a-haystack fitness landscape (Yu & Miller, 2006) where there is little to no indication within a sub-domain to guide a search towards its edges, where the boundary is.

Given these differences compared to how search and optimisation have typically been applied in software testing, we formulate our approach in a generic sense. We can then instantiate it using different search procedures and empirically study which are more or less effective. However, given that we are searching for a pair of two inputs with a significant derivative in the program, we must tailor even the most basic random search baseline to this problem.

We, therefore, formulate AutoBVA detection as a generic framework with customizable components of significance. Two essential abstractions are (1) the global sampling/generation strategy to decide starting points for (2) the local exploration/mutation strategy to modify those starting points in a structured way to detect good, candidate boundary pairs. Algorithm 1 outlines this generic, 2-step automated boundary detection. Given a Software under test SUT, it returns a set of boundary candidate pairs (BC). It has three additional parameters: a way to quantify the boundariness of pairs of inputs (Q), a (global) sampling strategy (SS) to propose starting points for local exploration and a (local) boundary search (BS) strategy. These exploration strategies capture two different types of sampling/search procedures. The global one explores the input space of the SUT as a whole by sampling different input points (line 3). The local strategy will then search from the starting point (line 4) by manipulating it by applying mutations. Each of the potential new candidates (in PBC) is then evaluated and their boundariness is compared to a threshold (line 5) and added to the final set (BC) returned. The bytecount function simply captures the fact that we might not use a fixed threshold but rather can allow more complex updating schemes where the threshold is based on the candidates that have already been found. For example, the threshold could be taken as some percentile (say, 90%) of the boundariness values of the candidate set saved so far. Alternatively, even more, elaborate boundariness testing and candidate set update procedures can be used, such as rather than simply adding to the current set whenever a sufficiently good boundary value is found, we could save a top list of the highest boundariness values found so far. The update would then be generalized so that it can also delete previously added candidate pairs that are no longer promising.

_______________________

Algorithm 1 Automated Boundary Detection - AutoBD-2step____________________

Require: Software under test SUT, Boundariness quantifier Q, Sampling strat-

egy SS, Boundary search BS

Ensure: Boundary candidates BC

1: BC = ∅

2: while stop criterion not reached do

3: input = SS.sample(SUT,BC) # globally sample a starting point

4: PBC = BS.search(SUT,Q,input) # locally explore and detect potential

candidate(s)

5: BC = BC ∪{c|c ∈ PBC ∧ Q.evaluate(c) > threshold(BC)}

6: end while

7: return BC______________________________________________________________________ The sampling strategy defines where we start looking, i.e., what input we start our search from. The local search strategy defines how we manipulate that input to identify boundary candidates. For complex data types, such as XML trees or images, more sophisticated generators are required. For numbers, the shelf sampling from basic distributions, such as uniform at random, suffices. We use uniform at-random sampling as a sampling baseline.

To illustrate, consider a SUT that takes a single integer number as input. The sampler returns a random number, say 10. Let us assume that the local search has access to increment and decrement mutations. In the most naive form, the local search simply applies each mutation operator once per argument to obtain a neighbourhood of distance one. The candidate pairs (9, 10) and (10, 11) would be probed in the local search. If the SUT in question were actually the above-mentioned bytecount PBC, it would then return all candidates above a threshold - here, the candidate pair (9, 10) since it has the highest PDQ value. Since this is among the simplest mechanisms for deriving boundary candidates, it informed our formulation of the Local Neighbourhood Search below, which we use as a baseline.

The benefit of creating candidate input pairs in two steps may become particularly obvious when the inputs are of a complex and structured datatype, such as an XML tree. If search was done with a single, global exploration step, it would be relatively unlikely that the two inputs of a candidate pair would have a small distance or that there even is a useful way of generating inputs that lead from one point to the other. By doing this in two steps, we could first sample XML tree instances and then explore their neighbourhoods in the input space by small mutations. This mechanism, however, is not limited to exploring only the very near neighbourhood of a point but may be used to explore the space by a repeated application of mutation operators. This idea informed our second local search strategy. Future work may still look into using global sampling only. Still, the combination of arbitrary inputs to detect boundaries seems non-trivial and might require tailored crossover mechanisms for input types.

For this study, we first benchmark the impact of the global sampling (uniform, bituniform) in a screening study to understand its effect on the results. The study is presented in Appendix A. We found that uniform sampling (often referred to as random sampling) performed substantially worse-in fact, it did not find any boundary candidates in any attempt when another feature was deactivated. Consequently, we simplified the experimental design of the main study to bituniform sampling. Next, we explain the two boundary search algorithms mentioned above: Local Neighbour Sampling and Boundary Crossing Search.

Global sampling

As mentioned above, the initial implementation of AutoBVA for validation of overall applicability is limited to numbers. By our findings on poor performance in the screening (see Appendix A) for uniform (random) sampling but promising performance for bituniform sampling, we deem uniform sampling insufficient as a sampling baseline (Algorithm 1, line 3). We hypothesise that this is because it favours larger numbers not covering the entire input spectrum-and arguably less of the often more interesting regions, including the switch between positive and negative numbers around zero. Bituniform sampling selects random numbers with a bit-shift that inserts leading zeros. The number of leading zeros is decided uniformly at random in the range of 0 (no change to the orignal number) and the bit-size of the datatype (e.g., 64 for 64 bit integers).

For a broad exploratory sampling, we introduce a complementing technique that we call compatible type sampling (CTS), i.e., argument-wise sampling based on compatible types per argument. An example of a compatible type is any integer type with a specific bit size. For instance, in the Julia programming language, we use in our experiments, booleans (Bool), 8-bit (Int8), and 32-bit integers (Int32) types are compatible because they all are sub-types of integer.

More details and justification for the global sampling strategy we use here, bituniform sampling combined with CTS, can be found in Appendix A. We do note that in general, more advanced test input generation strategies (Feldt & Poulding, 2013) can be used, and adapting them to the SUT and its arguments will likely be important when further generalizing our framework. We revisit the topic of handling more complex software in ‘Discussion’.

Local neighbour sampling

Because we are searching for pairs, among the simplest imaginable strategies for local search in Algorithm 1 (line 4), local neighbourhood sampling (LNS), is presented as Algorithm 2. The basic idea with LNS is to structurally sample neighbouring inputs, i.e., inputs close to a given starting point i, to form potential candidate pairs, including i. The algorithm processes mutations over all individual arguments (line 3), considering all provided mutation operators mos (line 4). For integer inputs, the mutation operators are basic subtraction and addition (of 1). Outputs are produced for the starting point (line 2) and each neighbour (line 6) to form the candidate pairs (line 7). Without filtering by, e.g., a program derivative, they are all blindly added to the set of potential boundary candidates (line 8), returned by the algorithm (line 11). LNS is a trivial baseline implementation to understand better what is possible using AutoBD without a sophisticated search. LNS will invariably return pairs that contain starting point i as one side of the boundary candidate. We include this method as a baseline to understand whether a more sophisticated and time-consuming method is justified.

________________________________________________________________________________________

Algorithm 2 search – Local Neighbour Sampling (LNS)____________________________

Require: Software under test SUT, mutation operators mos, Starting Point i

Ensure: potential boundary candidates PBC

1: PBC = ∅

2: o = SUT.execute(i)

3: for a ∈ arguments(SUT) do

4: for mo ∈ mos[a] do

5: n = mo.apply(i,a)

6: on = SUT.execute(n)

7: c = 〈i,o,n,on〉

8: PBC = PBC ∪{c}

9: end for

10: end for

11: return PBC____________________________________________________________________ Boundary crossing search

Boundary crossing search (BCS) is a more sophisticated heuristic local search strategy (see Algorithm 3) that uses a boundariness quantifier Q, in our experiments the program derivative. BCS seeks a locally derived potential boundary candidate pair that stands out compared to starting point i. For a random direction (argument a in line 1 and mutation operator mo in line 2) a neighbouring input inext gets mutated (line 3) and outputs for both inputs are produced (line 4) to define the initial candidate (line 5) for which the boundariness gets calculated (line 6).

________________________________________________________________________________________

Algorithm 3 search – Boundary Crossing Search (BCS)____________________________

Require: Software under test SUT, Boundariness quantifier Q, mutation op-

erators mos, Starting Point i

Ensure: potential boundary candidates PBC

1: a = rand(arguments(SUT)) # select random argument

2: mo = rand(mos[a]) # select random mutation operator for argument

3: inext = mo.apply(i,a) # mutate input a first time in single dimension

4: o = SUT.execute(i),onext = SUT.execute(inext) # produce outputs

5: cinit = 〈i,o,inext,onext〉 # instantiate initial candidate

6: Δinit = Q.evaluate(cinit) # calculate candidate distance

7: c = 〈i1,o1,i2,o2〉, with i1 obtained by a finite number of chained mutations

mo of a over i, and

8: i2 = mo.apply(i1,a), and

9: o1 = SUT.execute(i1), and

10: o2 = SUT.execute(i2), and

11: Δc = Q.evaluate(c), and

12: Δc > Δinit or cinit == c

13: return {c}____________________________________ Lines 7–12 describe the constraints and conditions for a resulting boundary candidate c to stand out locally. This search can be implemented in a variety of ways. We implemented a binary search that first identifies the existence of a boundary crossing by taking ever greater steps and calculating the difference Δc to find an input for the state in which the boundariness is greater than Δinit, thereby guaranteeing the necessary condition in line 12.5 Once that is achieved, the algorithm squeezes that boundary to obtain c, which is the nearest point to cinit that ensures a greater local difference in neighbouring inputs, and by that guarantees the neighbouring constraint in line 8.

We illustrate the search by finding a date (three integer inputs) boundary candidate with starting point thirteenth of February 2022, or (13, 2, 2022), and using the program derivative with bytecount as output metric. Let us further assume that argument 1 or day in combination with mutation operator subtraction are randomly selected. We probe and get Date(i) = “2022-13-02”. BCS will probe the initial neighbour mo(i) = inext = (12, 2, 2022), which results in “2022-12-02” and no conceivable difference, with Δinit = 0. The algorithm will apply the mutation operator in a binary fashion, meaning first twice mo(mo(inext)), then four times, and so on until it perceives a larger difference.6 BCS thus first tries days 10 and then 6 with no perceivable difference still7 Next, at -2 it perceives a difference due to an error message that signals the date out of bounds. From here on, it backtracks to squeeze the boundary to identify the two neighbouring points for which the transition happens, as guided by the program derivative. There are multiple strategies to do this, and a simple one is to apply binary cuts between the two points to eventually find the candidate pair 〈(0, 2, 2022), (1, 2, 2022)〉 for which the input distance is atomic, and the program derivative locally maximal (in the starting point, argument, and mutation operator). In the general case, these detected potential boundaries could both separate two valid outputs, as well as a combination of valid and error outputs (such as in our case), or even two error cases. As explained in the following section, we use this grouping information in the boundary candidate summarisation.

Summarisation: validity-value similarity clustering

The boundary candidate set resulting from AutoBD-2step (Algorithm 1) can be extensive. However, human information processing is limited, and more fundamentally many of the boundary pairs found can be similar to each other and represent the same or a very similar boundary. Take, for instance, the Date example with a great number of different boundary pairs crossing the same behavioural boundary between valid dates and the invalid 0 for the day - 〈(0, m, y), (1, m, y)〉. The summary method(s) we choose should thus not only limit the number of candidates presented, but those candidates also need to be different and represent different groups of behaviours and boundaries over the input space.

Furthermore, the goals for the summary step will likely differ depending on what the boundary candidates are to be used for; comparing a specification to actual boundaries in an implementation is a different use case than adding boundary test cases to increase coverage. Thus we cannot provide one general solution for summarisation, and future work will have to inspect different methods to cluster, select, and prioritize but also visualize the boundary candidates.

In the following, we propose one particular but very general summarisation method. We hope it can show several building blocks needed when creating summarisation methods for specific use cases and act as a general fallback method that can provide value regardless of the use case. This is also the method we use in the experimental evaluation of the article. We consider a general, black-box situation with no specification available. Thus, we only want to use information about the boundary candidates themselves. The general idea is to identify meaningful clusters of similar candidates, then sample only one or a few representative candidates per cluster and present them to the tester.

For instance, Table 2 contains a subset of (nine) candidate pairs found by our method for the bytecount example introduced above. Different features can be considered when grouping and prioritizing. We see that candidates differ at least in the output type, i.e., whether the outputs are valid return values or exceptions, as well as in the actual values of inputs and/or outputs themselves. For example, both outputs for the candidate on row 2 are strings and are considered normal, valid return values. On the other hand, for row 9, both outputs are (Julia) exceptions indicating that a string of length 6 (“kMGTPE”) has been accessed at (illegal) positions 9 and 10, respectively.

| Row | Input 1 | Input 2 | Out 1 | Out 2 | Validity |

|---|---|---|---|---|---|

| 1 | false | true | falseB | trueB | VV |

| 2 | 9 | 9B | 10 | 10B | VV |

| 3 | −10 | −9 | −10B | −9B | VV |

| 4 | 999949 | 999950 | 999.9 kB | 1.0 MB | VV |

| 5 | 99949999999999999 | 99950000000000000 | 99.9 PB | 100.0 PB | VV |

| 6 | 9950000000000001999 | 9950000000000002000 | 9.9 EB | 10.0 EB | VV |

| 7 | −1000000000000000000000000000000 | −999999999999999999999999999999 | −1000000000000000000000000000000B | −999999999999999999999999999999B | VV |

| 8 | 999999999999994822656 | 999999999999994822657 | 1000.0 EB | BoundsError(“kMGTPE”, 7) | VE |

| 9 | 999999999999990520104160854016 | 999999999999990520104160854017 | BoundsError(“kMGTPE”, 9) | BoundsError(“kMGTPE”, 10) | EE |

We argue that the highest level boundary in boundary value analysis is between valid and invalid output values. Any time there is an exception thrown when the SUT is executed, we consider the output to be invalid; if not, the output is valid.8 Since we consider pairs of inputs, two outputs per candidate can be grouped into three, what we call, validity groups:

-

VV: two valid outputs in the boundary pair.

-

VE: one valid output and one invalid (error) output.

-

EE: two invalid (error) outputs.

We use validity as the top-level variation dimension and produce individual summaries for these three groups. Table 2 indicates the validity group in the rightmost column (VV for lines 1–7, VE in line 8, and EE in line 9). We may consider other characteristics within each validity group to categorize candidates further. For example, we could use the type of the two outputs to create additional (sub-)groups. This is logical since it is not clear that comparing the similarity of values of different types is always meaningful. However, for many SUTs and their validity groups, the types might not provide further differentiation as they often are the same.

In the final step, we propose to cluster based on the similarity of features within the identified sub-groups. A type-specific similarity function can be used, or a general, string-based distance function can be used after converting the values to strings. We can create a distance matrix per sub-group after calculating the pair-wise similarities (distances). This can then be used with any clustering method while in the experiments in this article, we used k-means clustering (Likas, Vlassis & Verbeek, 2003). We select one representative (or short) boundary pair from each cluster to present to the tester. The distance matrix can also be used with dimensionality reduction methods to visualize the validity-type sub-group. We thus call our summarisation method validity-value similarity clustering.

The duality of having two sites of a boundary exposes more structure than thinking in test cases as single points with the expected outcomes only. Therefore, the validity groups are not meant to be a sufficient differentiator but a tool to exploit general structure for better separation for most, if not all SUTs.

Empirical Evaluation

This section starts by explaining the overall aim of the experimental study-broken up into research questions RQ1–RQ3-with its two investigations. Details about the experimental design and setup can be found in ‘Selection: Program Derivative’, with subsections for each investigation. ‘Setup of summarisation step’ then describes the applied clustering approach with the utilized metrics that define the feature space for the summarisation in both investigations.

We run our framework using the two local search strategies, LNS and BCS, introduced above (independent variable) in two investigations and study the sets of boundary candidates returned in detail. Investigation 1 offers a deep analysis of four curated SUTs of different types, whereas Investigation 2 tests the applicability of all compatible functions in Julia’s core library called Base (control variables). All SUTs are program functions. We evaluate the degree to which the framework can find many diverse and high-quality candidate boundary pairs. Specifically, we address the following research questions:

-

RQ1: Can AutoBVA identify large quantities of boundary candidates?

-

RQ2: Can AutoBVA robustly identify boundary candidates that cover a diverse range of behaviours?

-

RQ3: To what extent can AutoBVA reveal relevant behaviour or potential faults?

Through RQ1, we try to understand to what extent AutoBVA can pick up potential boundary candidates (PBC) by comparing two local search strategies. We analyse the (1) overall quantities and (2) quantities of uniquely identified PBCs using a basic boundary quantifier. The uniqueness here is measured in relation to the set of all PBCs for a SUT over all repetitions of our experiments, irrespective of the local search applied.

Through RQ2, we try to understand how well AutoBVA covers the space of possible boundaries between equivalence partitions in the input space of varying behaviour. For a given arbitrary SUT, we argue that there is no one-size-fits-all approach to extracting/generating “correct” equivalence partitions; many partitions and, thus, boundaries exist and which ones a tester considers might depend on the particular specification, the details with which it has been written, the interests and experience of the tester, etc. Therefore, we use our summarisation method (validity-value similarity clustering as described in ‘Summarisation: validity-value similarity clustering’) to group similar PBCs within each validity group (VV, VE, and EE) and apply clustering per each group. We answer RQ2 by analysing how the PBCs found by each exploration method cover those different clusters. Comparing the coverage of these clusters allows us to interpret the behaviour triggered by the set of PBCs. For instance, the two boundary candidates identified for the Date constructor SUT, bc1 = (28-02-2021, 29-02-2021) and bc2 = (28-02-2022, 29-02-2022) are different PBCs, but they do cover the same valid-error boundary.9 It is, therefore, not clear that finding those two specific PBCs, or many similar boundary candidates showing a similar boundary, helps identify diverse boundary behaviour.

The quantitative approaches used for RQ1 and RQ2 cannot probe whether the candidates found are high quality, i.e., if the boundaries they indicate are unexpected or essential to test. For RQ3, we thus perform a qualitative analysis of the identified PBCs by manually investigating each cluster, systematically sampling candidates with varied output lengths, and analysing them. Consequently, we examine whether and how often AutoBVA can identify relevant, rare, and/or fault-revealing SUT behaviour.

A sub-question for all three RQs is how the different local search/exploration strategies compare against one another in detecting unique boundary candidates. In short, the dependent variables in our experiment are the number of (unique) candidates found (RQ1), the number of (unique) clusters covered (RQ2), and the characteristics of interesting candidates (RQ3) found by each exploration approach. Next, we detail how we set up each stage of AutoBVA in our experiments.

Setup of selection and exploration step

Before the main experiment, we performed two screening studies to configure (1) the (global) sampling strategy and (2) the clustering of boundary candidates (see Appendices A and B). Table 3 summarises the setup of both investigations. The sampling method is fixed as the best-performing configuration from the screening study and uses both bituniform sampling and CTS for all experiments. The boundariness quantifier is based on the program derivative with bytecount for the outputs, i.e., the length difference between the stringified versions of outputs. Since all input parameters of the SUTs in this study are integer types, numerical distance is used (implicitly) as input distance. In the search, we use an increment (add to integer) and a decrement (subtract from integer) as mutation operators. We repeat each experiment execution 20 times to account for variations caused by the pseudorandom number generator used during the search. A constant and permissive threshold of 0 for adding boundary candidates to BC is applied in this study, i.e., all pairs with any difference in output length are added. Setup specific for each investigation is taken up below.

| Parameter | Investigation 1 | Investigation 2 |

|---|---|---|

| Sampling method (SS) | bituniform + CTS activated | same as Investigation 1 |

| Exploration strategy (ES) | LNS, BCS | same as Investigation 1 |

| Boundariness quantifier (PD) | strlendist | same as Investigation 1 |

| Threshold | 0 | same as Investigation 1 |

| Mutation operators (m) | increment/decrement (++/–) | same as Investigation 1 |

| SUTs | bytecount, BMI-Value, BMI-Class, Date | 580 functions (Julia Base) |

| Stop criterion | {30,600} seconds | 30 seconds |

Investigation 1

For each SUT, we conducted two series of runs, one short for 30 s each and one longer for 600 s (10 min), to understand the convergence properties of AutoBVA. We selected those two time-limits to loosely assess a tester’s more direct (30 s) or offline (10 min) usage.

We investigate four SUTs: a function to print byte counts, Body Mass Index (BMI) calculation as value and category, and the constructor for the type Date. The SUTs have similarities (i.e., unit-level that have integers as input), but each has peculiar properties and different sizes of input vectors. For instance, when creating dates, the choice of months affects the day’s range validity (and vice-versa), whereas the result of a BMI classification depends on the combination of both input values (height and weight). Below, we explain the input, output, and reasoning for choosing each SUT. The code for each SUT is available in our reproduction package (https://doi.org/10.5281/zenodo.7677012).

bytecount (i1: Int): Receives an integer representing a byte value (i1). The function returns a human-readable string (valid) or an exception, signalling whether the input is out of bound (invalid). The largest valid inputs are those represented as Eta-bytes. We chose this SUT because it is the most copied code in StackOverflow. Moreover, the code faults the boundary values when switching between scales of bytes (e.g., from 1,000 kB to 1.0 MB).

bmi_value (h: Int, w: Int): Receives integer values for a person’s height (h, in cm) and weight (w, in kg). The function returns a floating point value resulting from w/(h/100)2 (valid) or an exception message when specifying negative height or weight (invalid). The SUT was chosen because the output is a ratio between both input values, i.e., different height and weight combinations yield the same BMI values.

bmi_classification (h: Int, w: Int):: Receives integer values for a person’s height (h, in cm) and weight (w, in kg). Based on the result of bytecount, the outcome is a category string serving as a health indicator with six categories, spanning from underweight all the way to severely obese in the valid range, and causing exceptions if called with negative height or weight values. This design was chosen because the boundaries between classes depend on the combination of the input values, leading to various valid and invalid combinations.

date (year: Int, month: Int, day: Int): Receives an integer value representing a year, month, and day. The function returns the string for the specified date in the proleptic Gregorian calendar (valid).10 Otherwise, it returns specific exception messages for incorrect combinations of year, month, and day values (invalid). The Date SUT was chosen because it has many boundary cases conditional to the combination of outputs (e.g., the maximum valid day value varies depending on the month or the year during a leap year).

Our choice of SUTs offers a gradual increase in the input complexity, where the tester needs to understand (1) individual input values, (2) how they will be combined according to the specification, and (3) how changing them impacts the behaviour of the SUT. For instance, when choosing the input for the year in the date constructor, a tester can choose arbitrary integer values (case ‘1’) or think of year values that interact with February 29 to check leap year dates (case ‘2’). Another example would be choosing a test input for BMI, in which the tester needs to manually calculate specific height and weight combinations to verify all possible BMI classifications (case ‘3’). A tester must check the boundaries for the types used (e.g., maximum or minimum integer values) in parallel to all those cases. Note that systematically thinking of inputs to reveal boundaries is a multi-faceted problem that depends on the SUT specification (e.g., what behaviours should be triggered), the values acceptable for the input type and the output created independently of being a valid outcome or an exception.

Investigation 2

To investigate AutoBVA’s generalizability and mitigate selection bias in a realistic scenario, we test it on a non-curated set of actual SUTs (the base module in Julia). Unlike Investigation 1, we limit the execution time per SUT to 30 s as we mostly want to understand whether interesting candidates can be gathered.

The base module in Julia 1.8.0 contains a wide range of basic services that all Julia programs can access by default. We run AutoBVA in 580 functions out of 2,375 (24.4%). In Julia, each function (name) yields several methods that, in turn, have unique signatures (name + input parameters). For instance, the bytecount function comes with four methods, of which each is considered a unique SUT in our study to obtain a more fine-grained detection assessment. Ultimately, the 580 functions resulted in 613 SUTs investigated in our experiment.

The resulting sample of 613 methods is divided between 259 explicitly exported methods and 358 non-exported ones. Non-exported methods can be used in Julia with the globally unique full namespace qualifier. We could not run AutoBVA on all functions because (1) our current framework implementation only supports functions with inputs of type integer, and (2) we sample only functions with at most three input parameters. To support future research studies aiming to also automatically sample from Julia Base functions, we share the following obstacles that we faced:

-

Circa 20 exported functions were pre-filtered as they were deemed too low-level (operators such as and, or, and multiplier that might have direct representations in the command set of the CPU).

-

A set of 36 functions crashed the AutoBVA due to memory allocation issues for large integer inputs. Another special case that crashed AutoBVA was when it used, as a SUT, the exit function that exits Julia.

Numbers for LNS and BCS are reported separately to highlight their respective impact for very short runtimes, which may benefit the more straightforward strategy. RQ1 is addressed by reporting the proportion of functions for which boundary candidates could be detected. Average and standard deviation measures are offered for the quantitatively successful ones, i.e., those for which candidates could be detected. RQ2 is firstly addressed quantitatively by the number of successfully summarised SUTs. A SUT is successfully summarised if a mean Silhouette score of above 0.6 is reported for all its clusterings in the validity groups-a value that is commonly recognized as an acceptable/good clustering. Secondly, (unique) cluster coverage is reported in an aggregate per exploration strategy. RQ3 is addressed by observations about success factors in successful SUTs and potential reasons for issues that did not lead to successful detection. This will be exemplified by several non-successful ones and their commonalities and a selection of the two successful ones with the best Silhouette scores that are analyzed similarly to the four SUTs in Investigation 1 through cluster representative tables.

Setup of summarisation step

To summarise a large set of boundary candidates, we have to decide and extract a set of complementing features able to group similar candidates and set apart those that are dissimilar. Ideally, we want a generic procedure which can give good results for many different types of SUTs. We want to select features so boundary pairs with similar outputs are grouped. The focus of this study has been on detection, while the following reasoning regarding simplicity and feature selection guided the applied summarisation.

We implement the AutoBVA summarisation by validity-value similarity clustering using k-means clustering Hartigan & Wong (1979). We choose k-means clustering because it is one of the simplest, well-studied, and understood clustering algorithms and widely available Xu & Tian (2015). Clustering was done per validity group to avoid mixing pairs that have very different output types, namely: VV (String, String), VE (String, Error), and EE (Error, Error). We span a feature space over the boundary candidates to capture a diverse range of properties and allow for a diversified clustering from which to sample representatives per cluster. We extract features from the output differences between boundary candidates since input distances within the boundary candidates are already factored into the selection of the candidates. Moreover, outputs can easily be compared in their “stringified” version using a generic information theoretical measure Q, typically a string distance function.

Our goal is that the features that span the space shall be generic and capture different aspects of the boundary candidates. We, therefore, introduce two feature types: (1) WD captures the differences within a boundary candidate as the distance between the first and the second output; (2) U is a two-attribute feature that captures the uniqueness of a candidate based on the distance between the first (U1) and second (U2) output to the corresponding outputs of all other candidates in the set.11 Considering Q as the distance measure chosen for the outputs, we define U and WD for a boundary candidate j ∈ BC, j = (outj1, outj2) as:

To understand which combination of distance measures (Q) yields better clustering of boundary candidates, we conducted a screening study using three different string distances to measure the distance between the outputs, namely, strlendist, Levensthein (Lev) and Overlap Coefficient of length two.12 These common metrics cover different aspects of string (dis)similarity, each with its own trade-off. For instance, strlendist is efficient but ignores the characters of both strings, whereas Overlap compares combinations of characters but disregards specific sequences in which those characters show up (e.g., missing complete names or identifiers); lastly, Lev is the least efficient but more sensitive to differences between the strings. Nonetheless, all three measures only consider lexicographic similarity and are not sensitive to semantics, such as synonyms or antonyms.

For simplicity, the screening study was done only on the bytecount SUT. Details of the screening study and examples of features extracted from boundary candidates are presented in Appendix B. Our screening study reveals that strlendist (WD) and Overlap (WD, U) is the best combination of features and distance measures that yield clusters of good fit with high discriminatory power. Choosing those types of models yields more clusters that can be differentiated with high accuracy, hence allowing for a more consistent comparison of cluster coverage between exploration strategies. Moreover, clearer clusters are also useful in practice, allowing AutoBVA to suggest testers with more diverse individual boundary candidates.

Formally, we create a feature Matrix M over boundary candidates of a SUT with each row i representing each attribute from the features over each boundary candidate j, as defined below.

For this experiment, each M has four rows, one per attribute in the chosen features: strlendist (WD), Overlap (WD), Overlap (U1) and Overlap (U2). The number of columns (j) varies depending on the number of candidates found per exploration approach and SUT. Since k-means clustering is a heuristic algorithm, we run the clustering 100 times on each SUTs feature matrix M to retain the model of clustering of best fit according to the Silhouette score. To evaluate the coverage of the boundary candidates (RQ2), we choose the clustering discriminating best, i.e., the one resulting in the most clusters based on the top five percentile Silhouette scores.

We improve overall clustering quality by selecting only a diverse subset of boundary candidates for the clustering. For that, we create an initial diversity matrix as of above using 1,000 randomly selected candidates.13 We then substitute the least diverse 100 candidates (based on the sum of all normalized diversity readings) with 100 of the remaining candidates. Until no more candidates exist, we repeat this step to receive M for clustering. In the second step, we assign all candidates not part of M to the cluster with the closest cluster centre. A positive side-effect of this procedure is that it is much more memory efficient (limiting matrix sizes roughly to 1,000 candidates × 4 features).

Results and Analysis

We here present the results and analyze them in correspondence to RQ1–RQ3 (‘RQ1-Boundary candidate quantities, RQ2-Robust coverage of diverse behaviours, RQ3-Identifying relevant boundaries’). The answers combine Investigations 1 and 2, and each section concludes with a list of key findings. RQ3, with its focus on relevant behaviour and potential faults, demands a thorough analysis, which is why it is subdivided into a subsection per SUT for Investigation 1 (‘Bytecount, BMI classification’), a subsection for Investigation 2 on Julia Base (‘RQ3 and Investigation 2’), and an RQ3 summary subsection (Summary for RQ3).

RQ1-Boundary candidate quantities

Table 4 summarises the number of common and unique boundary candidates found by the two search strategies, LNS and BCS of Investigation 1. For each SUT and search strategy, it shows results for the 30-second and the 600-second runs individually. For each time control, the mean and standard deviation, over the 20 repetitions, of the number of potential boundary candidates as well as the number of unique candidates, is listed. For example, we can see that BCS in a 600-second run for the Date SUT finds, on average, 897.4 +/- 82.6 PBCs out of a total of 45456 unique ones (found over all runs and search strategies). And overall, 7,276 of the total 45,456 PBCs were uniquely only found by BCS, i.e., in none of the LNS runs, any of these 7,276 were found.

| 30 seconds | 600 seconds | ||||||

|---|---|---|---|---|---|---|---|

| SUT | Strategy | Total | # PBC found | # Unique | Total | # PBC found | # Unique |

| bytecount | LNS | 57 | 10.05 ± 0.8 | 0 | 59 | 12.8 ± 0.8 | 0 |

| BCS | 57 | 56.7 ± 0.5 | 44 | 57.85 ± 0.4 | 43 | ||

| BMI class | LNS | 25,207 | 1, 747.85 ± 86.6 | 23,238 | 358,956 | 24, 421.7 ± 354.9 | 332,120 |

| BCS | 25,207 | 149.85 ± 13.3 | 1,276 | 1, 944.8 ± 74.5 | 18,358 | ||

| BMI | LNS | 90,027 | 6, 147.45 ± 95.1 | 86,157 | 1,280,955 | 87, 319.0 ± 1747.8 | 1,226,314 |

| BCS | 90,027 | 272.7 ± 15.8 | 2,481 | 3873.05 ± 124.4 | 36,186 | ||

| Julia Date | LNS | 2,444 | 246.1 ± 13.7 | 2,232 | 45,456 | 4, 351.6 ± 86.3 | 37,216 |

| BCS | 2,444 | 21.6 ± 5.9 | 191 | 897.4 ± 82.6 | 7,276 | ||

| Exported | Strategy | # SUTs | # SUTs success (%) | # PBCs per run (30 s.) |

|---|---|---|---|---|

| yes | LNS | 259 | 197 (76%) | 1, 042 ± 76 |

| BCS | 198 (76%) | 554 ± 40 | ||

| no | LNS | 354 | 243 (69%) | 578 ± 67 |

| BCS | 247 (70%) | 391 ± 37 |

We see that overall, AutoBVA produces a large number of boundary candidates with either exploration strategy. Except for bytecount, there is also a large increase in the number of candidates found as the execution time increases. While the number of candidates found per second does taper off also for BMI-class, BMI and Julia Date (from 166, 552, and 81 per second for the short runs to 117, 380, and 76 for the long runs, respectively), longer execution clearly has the potential to identify more candidates. Since the 20 times longer execution time for bytecount only finds one additional candidate (58 total versus 57 for 30 s), it might be useful to terminate search and exploration when the rate of new candidates found goes below some threshold.

For the bytecount SUT, only BCS finds a unique set of candidates, meaning that BCS also identified all boundaries identified by LNS. This means that only 14 (58–44) of all candidates found were found by LNS, even after 20 runs of 600 s, and BCS also found all of those. For the other SUTs, LNS clearly finds more candidates and more unique candidates, between 5 and 15 times more, depending on the SUT.

The boundary detection statistics for Investigation 2 are summarised in Table 5. Boundary candidates could be identified with both exploration strategies in roughly 76% of the exported SUTs and 70% of the non-exported SUTs. Even though BCS could cover more SUTs in numbers, the difference is not statistically significant. LNS detects significantly more candidates.

| # of Clusters | # of Pairs | Silhouette Score | |||||||

|---|---|---|---|---|---|---|---|---|---|

| SUT | VV | VE | EE | VV | VE | EE | VV | VE | EE |

| bytecount | 6 | 1 | 1 | 57 | 1 | 1 | 0.95 | 1.0 | 1.0 |

| BMI | 3 | 3 | – | 579,030 | 759,380 | 0 | 0.82 | 0.0 | – |

| BMI-class | 13 | 2 | – | 7,288 | 367,125 | 0 | 1.0 | 1.0 | – |

| Julia Date | 7 | 2 | 3 | 368 | 77,207 | 253,875 | 0.73 | 0.89 | 0.89 |

Thus, overall, LNS produces a higher quantity of boundaries. This is expected since it is a random search strategy with minimal local exploration. The effect can likely be explained by two reasons that may also interplay. First, the low algorithmic overhead of the LNS search method enables it to make more calls to the underlying SUT, given a fixed time budget. Second, a proportion of the BCS searches can fail and return no boundary candidates since the input landscape does not regularly lead to changes in output partition through single-dimensional mutations, i.e., to one input. However, the quantity of boundary candidates might not directly translate to finding more diverse and “better” boundary candidates; next, we thus consider RQs 2 and 3 addressing this issue.

RQ2-Robust coverage of diverse behaviours

Using our validity-value similarity clustering summarisation method, we obtained between six and 15 clusters in Investigation 1 across the different SUTs and validity groups (VV, VE and EE) with high clustering quality scores (see Table 6). We see that most clusters were differentiated in the VV group. No EE candidates were identified for BMI and BMI-class because of the lack of detected boundary candidates. We also find that, except for the clustering for BMI in group VE, all attempted clusterings had a high discriminate score.

We summarise the coverage of clusters per SUT, exploration strategy, and execution time in Table 7. For example, we can see that for the Julia Date SUT, after we merged all candidates found by any of the methods in any of the runs and clustered them, we found 11 clusters. In a 30-second run, LNS covered 4.9 +/- 0.3 of them and covered one cluster that was not covered by BCS (in a 30-second run), while in a 600-second run, BCS covered 7.5 +/- 0.8 clusters and covered six that were not covered by LNS (in a 600-second run).

LNS shows consistent but modest cluster coverage growth with increasing running time. In other words, on average, boundary candidates were found using LNS to cover one or two more clusters when increasing the execution time from 30 s to 10 min. In contrast, BCS shows more cluster coverage improvement over time, where five additional clusters were covered when searching for 10 min, both for BMI classification and Julia Date. It shows no such growth for bytecount or BMI. Still, it has also “saturated” for these SUTs already after 30 s, i.e., it has covered the total number of clusters found after 30 s and thus has little to no potential for further improvements.

| Total | 30 seconds | 600 seconds | ||||

|---|---|---|---|---|---|---|

| SUT | Strategy | Clusters | # Found | # Unique | # Found | # Unique |

| bytecount | LNS | 8 | 5.25 ± 0.6 | 0 | 6.0 ± 0.0 | 0 |

| BCS | 8.0 ± 0.0 | 2 | 8.0 ± 0.0 | 2 | ||

| BMI-class | LNS | 15 | 14.95 ± 0.2 | 3 | 15.0 ± 0.0 | 0 |

| BCS | 9.35 ± 0.9 | 0 | 14.25 ± 0.6 | 0 | ||

| BMI | LNS | 6 | 6.0 ± 0.0 | 0 | 6.0 ± 0.0 | 0 |

| BCS | 6.0 ± 0.0 | 0 | 6.0 ± 0.0 | 0 | ||

| Julia Date | LNS | 11 | 4.9 ± 0.3 | 1 | 5.0 ± 0.0 | 0 |

| BCS | 2.7 ± 1.1 | 1 | 7.5 ± 0.8 | 6 | ||

In many cases, BCS and LNS cover the same clusters, but some exceptions exist. For instance, only BCS found boundaries between the valid-error and error-error partitions for bytecount-clusters 7 and 8 (see ‘Bytecount’). In contrast, considering the 30-second search, only LNS identified candidates in BMI classification that cover the transitions between (underweight, normal) and (normal, overweight)-clusters 8 and 13 (see Section ‘BMI classification’). However, with increased execution time, BCS was the only strategy to find unique clusters (final column of Table 7). Particularly, if we look at Julia Date (10 min), BCS covers six unique clusters-including two clusters with “valid” outputs but unexpectedly long month strings. In RQ3, we further explain and compare these clusters for each SUT and argue their importance.

The comparisons above highlight the trade-off between time and effectiveness of the exploration strategies. Overall, LNS can be more effective in covering clusters in a short execution (BMI-class and Julia Data), but this is not always the case (e.g., bytecount). And with more execution time, BCS generally catches up to LNS (BMI-class) and often surpasses it (bytecount and Julia Date) both on average and in the number of uncovered unique clusters. Clearly, attributes of the SUTs will affect cost-effectiveness, e.g., the number of arguments in the input, (complexity of) specification, or the theoretical number of clusters that could be obtained to capture boundary behaviour.

From Table 7, we also note that the standard deviations are typically low, so the method is overall robust to random variations during the search. Still, we do note that the best method for Julia Date (BCS) only finds 7–8 of the total 11 clusters. This is not so for the other three SUTs, where it tends to find all of the clusters in a 600-second run.