Chimpanzee facial gestures and the implications for the evolution of language

- Academic Editor: Jennifer Vonk

- Subject Areas: Animal Behavior, Anthropology, Evolutionary Studies, Zoology

- Keywords: Chimpanzees, Pan troglodytes, Gestures, Facial expressions, Communication, Signaling properties

- Copyright: © 2021 Florkiewicz and Campbell

- Licence: This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ) and either DOI or URL of the article must be cited.

- Cite this article: 2021. Chimpanzee facial gestures and the implications for the evolution of language. PeerJ 9:e12237 https://doi.org/10.7717/peerj.12237

Abstract

Great ape manual gestures are described as communicative, flexible, intentional, and goal-oriented. These gestures are thought to be an evolutionary precursor to human language. Conversely, facial expressions are thought to be inflexible, automatic, and derived from emotion. However, great apes can make a wide range of movements with their faces, and they may possess the control needed to gesture with their faces as well as their hands. We examined whether chimpanzee facial expressions possess the four important gesture properties and how they compare to manual gestures. To do this, we quantified variables that have been previously described through largely qualitative means. Chimpanzee facial expressions met all four gesture criteria and performed remarkably similarly to manual gestures. Facial gestures have implications for the evolution of language. If other mammals also show facial gestures, then the gestural origins of language may be much older than the human/great ape lineage.

Introduction

Great apes are known for their elaborate use of gestures (Byrne et al., 2017). Gestures are commonly defined as flexibly and intentionally produced bodily movements used during bouts of communication to achieve a goal (Byrne et al., 2017; Moore, 2016; Byrne & Cochet, 2017). Over 80 different gesture types have been identified across the great apes, which vary in their meaning and usage (Byrne et al., 2017). Most gesture types identified in behavioral ethograms describe movement of body or limbs, and we refer to them as ‘manual gestures.’ For example: over 90% of gesture types listed in the St. Andrews Catalogue of great ape gestures involve the hands, arms, legs, feet, and torso (Byrne et al., 2017), with particular focus on the arms and hands (N = 43 or 51% of gesture types). One distinguishing feature of great apes is a tendency towards upright posture, which is associated with increased suspensory behavior (such as brachiation, Andrews, 2020). Increased reliance on suspensory behavior resulted in anatomical changes associated with greater flexibility and mobility of the hands, wrists, and arms (Andrews, 2020), and these anatomical changes may explain why great apes frequently gesture with these body parts. The transition to bipedal locomotion in the hominid lineage resulted in greater-still freedom of the hands and arms (Corballis, 1999). In humans (Homo sapiens), manual gestures play an important role in the production, comprehension, and learning of language (Goldin-Meadow & Alibali, 2014). However, humans also gesture with the face, with movements of the lips, chins, and eyebrows all documented as deictic gestures (Enfield, 2002).

The ability to gesture with the face could be beneficial for species reliant on quadrupedal locomotion. Monkeys, for example, rely almost exclusively on quadrupedalism, whether terrestrial or arboreal, which is associated with decreased flexibility of the hands (Tamagawa et al., 2020). Therefore, faces may be a better place to look for gestures in quadrupeds than the arms and hands, since the face is not associated with locomotor constraints. To begin the search for facial gestures in nonhumans, we chose to study chimpanzees (Pan troglodytes) for two reasons: first, their well-described manual gestures, and second, their phylogenetic position between humans and monkeys. Firstly, the well-documented manual gestures of chimpanzees (Byrne et al., 2017; Hopkins & Leavens, 1998; Leavens & Hopkins, 1998; Liebal, Call & Tomasello, 2004; Leavens, Russell & Hopkins, 2005; Hobaiter, 2011; Roberts, Vick & Buchanan-Smith, 2012; Roberts, Vick & Buchanan-Smith, 2013; McCarthy, Jensvold & Fouts, 2013; Hobaiter & Byrne, 2014; Graham et al., 2018; Heesen et al., 2019; Roberts & Roberts, 2019) provide a benchmark against which to compare the characteristics of facial expressions (Fig. 1). While there is evidence for the use of manual gestures outside of the great apes (Laidre, 2011; Gupta & Sinha, 2019; Molesti, Meguerditchian & Bourjade, 2020), it is limited in comparison to the extensive research available on great ape species. Thus, we would not have the same degree of performance metrics to apply to facial expressions. For these reasons, we feel that the best approach to begin the search for facial gestures is in a species with a documented repertoire of manual gestures.

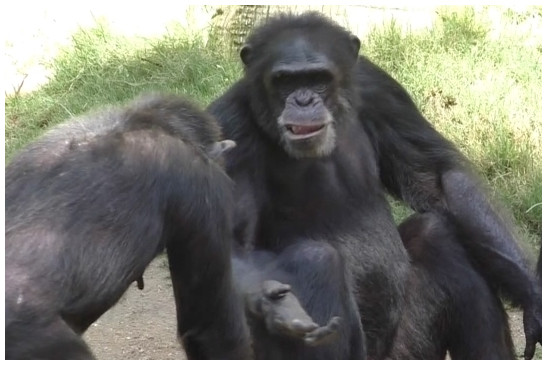

Figure 1: Photograph.

Great ape manual gestures are often defined as flexibly and intentionally produced bodily movements during bouts of communication to achieve a goal. It is possible that chimpanzee facial expressions are also capable of being used as gestures. In this photo, an adult male (right) beckons to an adult female (left) using a lower lip relaxer face and a reach gesture. This suggests that both signals are being used to achieve a goal. This goal is eventually met when the female approaches the male for affiliative contact. By comparing facial expressions with the described gesture repertoires of chimpanzees, we sought evidence of whether facial expressions like this might also be gestural. Photo credit: Brittany Florkiewicz.

Secondly, the great apes (Pan troglodytes, Pan paniscus, Gorilla spp., Pongo spp.) have unique anatomy that corresponds to their phylogenetic position between humans and the other mammals. The great apes exhibit variation in their locomotor behavior, including quadrupedal knuckle-walking (which is more upright than monkeys who walk on their palms), brachiation, and occasional bipedalism (Kivell & Schmitt, 2009). Great apes therefore occupy a transitional stage with a tendency towards more upright posture than monkeys, but less than humans. The result may be greater freedom by great apes to produce a wide variety of manual gestures with their hands (Hobaiter & Byrne, 2014; Graham et al., 2018; Genty et al., 2009), but the continued presence of terrestrial quadrupedalism and brachiation could still favor selection for gesturing with the face. Great apes make frequent use of facial expressions for communication (Van Hooff, 1967; Parr & Waller, 2006). This has also been observed in platyrrhine monkeys, such as capuchins (Sapajus apella, Weigel, 1979; Visalberghi, Valenzano & Preuschoft, 2006; De Marco & Visalberghi, 2007), but it is currently unclear as to whether the term ‘gesture’ can also be applied to monkey and great ape facial expressions.

Similar to great apes, research on gestural communication in monkeys focuses predominantly on the hands, arms, legs, and torso (Laidre, 2011; Gupta & Sinha, 2019; Molesti, Meguerditchian & Bourjade, 2020). For example: out of the 67 movements labeled as gestures for olive baboons (Papio anubis), only nine (or 13.43%) are associated with muscle movement of the face region (such as the eyebrows, eyes, nose, and mouth; Molesti, Meguerditchian & Bourjade, 2020). While the literature on great ape gestural communication is more extensive, there is disagreement regarding whether facial expressions can be used as gestures. In some great ape gestural ethograms, such as those used to study orangutan communication, facial expression types such as air bite, bite, duck lips, formal bite, and play face are classified as gestures (Liebal & Pika, 2006; Cartmill & Byrne, 2010). But it appears that other facial expression types, such as grin, open mouth, relaxed open mouth, pout face, and protruded lips are classified separately as expressions, not gestures (Liebal & Pika, 2006). In addition, some facial expression types that were initially classified as gestures (such as the play face, Cartmill & Byrne, 2010) were not incorporated into recently constructed gestural ethograms (Byrne et al., 2017).

Research on whether facial expressions are capable of being used as gestures would provide important insight into the evolution of human language. While human language consists of many unique properties (such as being able to refer to the past, present, and future, Fitch, 2010), it also has shared features with non-human primate communication, such as flexibility and syntax (Fitch, 2010; Hewes et al., 1973; Plooj, 1978; Arnold & Zuberbühler, 2006). This has led researchers to suggest that the evolutionary precursor to human language can be found in non-human primate communication (Fitch, 2010; Arnold & Zuberbühler, 2006; Armstrong & Wilcox, 2007; Dediu & Levison, 2013; Seyfarth & Cheney, 2018). Some researchers propose that gestural communication of great apes is the most likely candidate, given the similarities between great ape gestures and human language (Fitch, 2010; Corballis, 2002). According to Corballis (2002), the evolution of human language was a multi-step process: great ape manual gestures led to more symbolic forms of manual communication in the hominid lineage, which then led to the development of spoken language. This multi-step process has often been referred to as the “hand to mouth” theory of language evolution (Corballis, 2002).

Those who promote a gestural origin of language sometimes state that other forms of communication, such as vocalizations and facial expressions, cannot be potential precursors due to their strong association with emotion, making them inflexible and spontaneous (rather than flexible and intentional, Hewes et al., 1973; Corballis, 2002; Pollick & De Waal, 2007). The ability to communicate flexibly and intentionally with vocalizations and facial expressions is thought to have appeared later in hominid evolution, closer to the emergence of Homo sapiens (Corballis, 2002). If facial expressions are capable of being used as gestures, it suggests that both facial gestures and manual gestures were important communication systems in the evolution of human language. This idea has often been referred to as the “multimodal theory” of language evolution, which states that all modes of communication (facial, vocal, and gestural) were important pre-requisites for the establishment of language (Fröhlich et al., 2019) and co-evolved closely together (McNeill, 2012).

If facial expressions are used as gestures, this would also suggest that the evolutionary precursor to human language could be much older than previously assumed. Those who promote a gestural origin of language argue that the “evolutionary starting point” of human language can be traced to great ape gestural communication (Tomasello & Call, 2019). Great ape facial gestures could be consistent with this view, as they could have arisen along with manual gestures within great apes. But the presence of facial gestures would also raise the possibility that this “starting point” might be traced back further, potentially even to the last common ancestor of mammals. Some facial expression types are found across a wide variety of mammals and are both morphologically and functionally similar across species (Darwin, 1872). One example is grins, which are produced by canids (Canis lupus, Canis domesticus), cats (Felis catus), opossums (Didelphis virginiana), tree shrews (family Soricidae), lemurs (Lemur spp., Hapalemur spp., Propithecus spp.), platyrrhine monkeys (Callithrix geoffroyi, Sapajus spp., Lagothrix spp., Tamarinus illigeri), haplorrhine monkeys (Macaca mulatta, Mandrillus sphinx), and chimpanzees (Pan troglodytes) in response to startling and potentially dangerous stimuli (Darwin, 1872; Andrew, 1963). If grins and other facial expression types shared among mammals are capable of being produced as gestures in primates, it is possible that gestural communication can also be found in other mammals.

| Gesture properties | Measured variables | Variable type | Statistical test | Operational definition | Relevant citations |
|---|---|---|---|---|---|
| Communicative | Mechanical Ineffectiveness | Binary | Binomial Test; Composite gesture score; GLMM | The movement(s) are motorically ineffective; they are not used to complete gross motor behaviors, such as walking, climbing, chewing, etc. | Cartmill (2008); De Marco & Visalberghi (2007); Fröhlich & Hobaiter (2018) |
| Communicative | Recipient ID | Binary | Binomial Test; Composite gesture score; GLMM | The signaler directs their behavior towards a conspecific, which suggests that the behavior is ‘socially directed’. | Tomasello & Call (2019); Fröhlich & Hobaiter (2018); Pika & Fröhlich (2019) |
| Intentional | Response Waiting [Overall] | Binary | Binomial Test; Composite gesture score; GLMM | One or both forms of response waiting (response waiting while persisting and/or response waiting at the end of the signal) are observed. See below for definitions/criteria. This suggests that the signaler is deliberately communicating with the recipient. | Byrne et al. (2017); Graham et al. (2018); Roberts & Roberts (2019); Hobaiter & Byrne (2011) |
| Intentional | Response Waiting [at End of Signal] | Binary | Binomial Test; Composite gesture score; GLMM | At the end of a signal, the signaler fixates their gaze at the recipient, waiting for a behavioral response. This suggests that the signaler is deliberately communicating with the recipient. | Byrne et al. (2017); Graham et al. (2018); Roberts & Roberts (2019); Hobaiter & Byrne (2011) |
| Intentional | Response Waiting [while Persisting] | Binary | Binomial Test; Composite gesture score; GLMM | As the signaler is persisting with a signal, they fixate their gaze at the recipient, waiting for a behavioral response. This suggests that the signaler is deliberately communicating with the recipient. | New measure introduced in this study, since it is also plausible for a signaler to wait for a response as they persist with the signal. |
| Intentional | Receiver Attention | Binary | Binomial Test; Composite gesture score; GLMM | The signaler produces the signal while the recipient(s) are looking at and have their body positioned towards the signaler. This suggests that the signaling behavior is sensitive to audience effects and that the signaler is deliberately attempting to communicate with the recipient. | Byrne et al. (2017); Graham et al. (2018); Tomasello & Call (2019) |
| Flexible | Elaboration | Binary | Binomial Test; Composite gesture score; GLMM | After the signal is produced, the signaler modifies the physical form of that signal or switches to a new signal type. Elaboration is often used as a way to ‘repair’ potential communicative failure that may have occurred during the initial production of the signal. | Byrne et al. (2017); Leavens, Russell & Hopkins (2005); Roberts, Vick & Buchanan-Smith (2012) |
| Flexible | Persistence | Binary | Binomial Test; Composite gesture score; GLMM | The signaler repeats and/or holds the signal that they are producing. Persistence is often used as a way to ‘repair’ potential communicative failure that may have occurred during the initial production of the signal. | Byrne et al. (2017); Leavens, Russell & Hopkins (2005); Roberts, Vick & Buchanan-Smith (2012) |
| Flexible | Generalized Behavioral Context | Categorical | Context tie index score; Mann–Whitney U | Each signal observed was assigned to 1 of 10 possible behavioral contexts which best described the social interaction, which included: affiliation, agonism, arousal (general), feeding, grooming, locomotion, playing, resting, sex, or unsure/unknown. | Pollick & De Waal (2007) |
| Goal Associated | Immediate Interaction Outcome | Binary | Binomial Test; Composite gesture score; GLMM | After the signal is produced, there is an immediate behavioral change in the recipient(s). This behavioral response may/may not satisfy the presumed goal of the signaler. | Byrne et al. (2017); Hobaiter & Byrne (2014); Cartmill & Byrne (2010) |
| Goal Associated | Final Interaction Outcome | Binary | Binomial Test; Composite gesture score; GLMM | After the signaler ceases all communication, there is a clear behavioral response from the recipient(s). This behavioral response may/may not satisfy the presumed goal of the signaler. | Byrne et al. (2017); Hobaiter & Byrne (2014); Cartmill & Byrne (2010) |
| Goal Associated | Presumed Goal | Binary | Binomial Test; Composite gesture score; GLMM | The signaler has a clear and intended behavioral response that they wish to elicit from the recipient(s) which can be hypothesized by the researcher. This behavioral response may be produced immediately following the behavior of the signaler (immediate interaction outcome) or after the signaler ceases all communication (final interaction outcome). If the signaler ceases their communication, it is assumed that this hypothesized goal was achieved (or cannot be achieved). | Byrne et al. (2017); Hobaiter & Byrne (2014); Cartmill & Byrne (2010); Halina, Liebal & Tomasello (2018) |

The history of both facial and gestural signaling research inspired our main goal, which was to examine whether chimpanzees use facial expressions as gestures. We hypothesized that if facial expressions can be gestural, then facial expressions should perform similarly to manual gestures on measures of gesture properties and variables (Table 1). To test this hypothesis, we compared facial expressions to manual gestures using: (1) the average number of gesture variables exhibited for each signaling observation and signal type (which we refer to as composite gesture scores, or CGS); (2) context tie indices (CTI scores), which were initially designed by Pollick and de Waal to test contextual flexibility (Pollick & De Waal, 2007); and (3) the prevalence of each gesture property and corresponding variable using binomial tests and generalized linear mixed models.

We experienced three major obstacles in devising our study. First, much of the gesture research is qualitative (Leavens & Hopkins, 1998; Hobaiter & Byrne, 2014; Genty et al., 2009; Liebal & Pika, 2006), making comparisons difficult and prone to bias. We therefore devised a novel metric (CGS) to quantify the presence and absence of important gesture properties (and their corresponding variables) for all observed facial expressions and manual gestures. There are four important, widely accepted properties of gestures in the literature: gestures are communicative, intentional, flexible, and goal oriented (Byrne et al., 2017; Moore, 2016; Byrne & Cochet, 2017). Each property was operationalized with two to four measures (Table 1). For example, the gesture property of ‘communicative’ was operationalized by two measures, mechanical ineffectiveness and direction at a specific receiver (Table 1). For each recorded manual gesture and facial expression we scored presence/absence for each measure. We then took the percentage of all manual gestures and facial expressions that showed each measure and compared them to evaluate performance. Hence, to evaluate being communicative we generated a composite of two measures, thus our term composite gesture score (CGS).

The second obstacle we faced was that there may be variation in the prevalence of gesture properties (and corresponding variables) due to contextual factors. For example, Leavens, Russell & Hopkins (2005) found that chimpanzee manual gestures vary in the extent to which they exhibit persistence and elaboration. This variation appears to be attributed to factors such as food quality and availability. Chimpanzees were much more likely to exhibit persistence and elaboration in their gesturing behavior when presented with lower quality food items (such as chow) as opposed to higher quality and highly desired food items (such as bananas) (Leavens, Russell & Hopkins, 2005). Because some gesture properties vary in prevalence, it is possible that a given signal type may not exhibit all four gesture properties (and their corresponding variables) simultaneously in each instance of use. To account for this possibility, we compared the variation in gesture properties exhibited by manual gestures to that of facial expressions.

Finally, previous studies have focused on only one or two important gesture properties (such as flexibility or goal-association only, Leavens, Russell & Hopkins, 2005; Hobaiter & Byrne, 2014) and their corresponding variables. Variables used to measure each gesture property also vary extensively between studies and sometimes overlap with other important gesture properties. For example: studies examining intentionality in gestural communication vary in the number of variables examined, which ranges from one to seven (Graham et al., 2019). Some of these variables (such as receiver attention state, persistence, and satisfaction with a goal) are also variables used to measure other important gesture properties (such as flexibility and goal association, Byrne et al., 2017; Leavens, Russell & Hopkins, 2005; Roberts, Vick & Buchanan-Smith, 2012; Hobaiter & Byrne, 2014; Cartmill & Byrne, 2010). Therefore, we coded and compared all previously described gesture properties (N = 4) and their corresponding variables (N = 12) using our novel scoring metric (composite gesture scores), rather than focusing on a select few.

The results of our study will provide insight into the communicative properties associated with facial expressions and their implications for the evolution of human language.

Material and Methods

Subjects and data collection

We studied 18 chimpanzees (Pan troglodytes) at the Los Angeles Zoo and Botanical Gardens: 13 adults (>7 years old) and five infants (≤7 years old). Out of these 18 chimpanzees, 15 were born and reared at the Los Angeles Zoo. Additional information on the names, birth dates, birth places, genetic relatedness, and husbandry of the chimpanzees can be found in the online supplement (Table S1 and Figs. S1–S2).

We selected chimpanzees for the current study because they frequently produce a wide variety of facial signal (Parr et al., 2007) and gesture types (Hobaiter, 2011; Hobaiter & Byrne, 2014). We collected data during the summer months (June to August) from 2017 to 2019, Monday to Friday, between 8:00 and 14:00, which we identified as peak activity hours in a 2016 pilot study. We recorded the chimpanzees with a Panasonic Full HD Video Camera Camcorder HC-V770 with an external shotgun microphone (Sennheiser MKE400) to improve audio quality. We used two methods when recording the chimpanzees: the opportunistic sampling method and the focal sampling method (Altmann, 1974; Florkiewicz & Campbell, 2021).

Data coding

We defined signals as actions performed by a signaler that attempted to alter the behavior of others (Maynard-Smith & Harper, 1995) and recorded the signaler ID. In this study, we defined a facial signal as facial muscle movement used for the purpose of communication. Facial muscle movements used for biological maintenance (such as blinking, feeding, and yawning) and object manipulation (such as chewing or scraping on objects) were not considered in this study. We placed facial expressions into behavioral categories (i.e., facial expression types) based on similarities in key muscle movements (using chimpFACS, Parr et al., 2007). We considered a total of nine facial expression types in this study, six of which were derived from Parr et al. (2007). We added three additional facial expression types (lipsmacking face, lower lip relaxer face, and raspberry face) to this ethogram from our 2016 pilot study. We did not consider neutral faces in the current study since it is difficult to evaluate whether they are capable of being used in a communicative manner. We excluded whimper faces due to a small number of observations (N = 1). Our final ethogram of facial expressions and manual gestures can be found in Table 2.

| Facial Expression Ethogram | |
|---|---|
| Type | Description |
| Ambiguous Face | Communicative movement of the face is observed but does not physically resemble the facial expression types described below. |
| Bared Teeth Face | Corners of the lips are drawn backwards, exposing both rows of teeth. |
| Lipsmacking Face | Mouth is opened and closed rapidly, producing a low sound. |
| Lower Lip Relaxer Face | Bottom lip is relaxed away from the gums and bottom row of teeth. |
| Pant-Hoot Face | Lips are funneled together, with vocalization being produced. |
| Play Face | Mouth is opened, lips possibly drawn backwards, and bottom row of teeth possibly exposed. Sometimes accompanied by a vocalization. |
| Pout Face | Lips are funneled close together and pushed outwards while the chin is furrowed upward. |
| Raspberry Face | Lips are pressed tightly together (sometimes with the tongue) to create a loud sound. |
| Scream Face | Similar to bared teeth face, but jaw is stretched, and a loud vocalization is produced. |
| Manual Gesture Ethogram | |
| Type | Description |
| Ambiguous Touch | Light contact of the fingertips, fingers, palm, and/or hand onto the recipient’s body. |
| Arm Raise | One or both arms are raised vertically above the shoulder. |
| Bite | A part of the recipient’s body is held between (or against) the lips or teeth of the signaler. |
| Clap | Hands are brought together to create a loud, audible sound. |
| Dangle | Signaler hangs from the hands above a recipient while shaking their feet. |
| Directed Push | The palm is placed on the recipient’s body, with force being exerted to try and move the recipient towards a specific location. |
| Embrace | Both arms are wrapped around a recipient, with physical contact being made and maintained. |
| Hand Fling | Signaler makes rapid movements of the hands or arms towards the recipient. |
| Jump | Both feet leave the ground simultaneously with the signaler’s body being displaced afterwards. |
| Kick | One or both feet are brought into short contact with the recipient’s body. |
| Look | Signaler moves closely towards the recipient and holds eye contact. |
| Object Move | An object is displaced by a signaler, with contact being maintained throughout the movement. |
| Pirouette | Signaler twirls around the body’s vertical axis. |
| Present Body Part | A body part is deliberately moved and exposed to a recipient. |
| Present Climb on Me | The back is deliberately moved and exposed to a recipient. The signaler maintains a quadrupedal stance throughout the movement. |
| Present Sexual | Genitals are deliberately moved and exposed to a recipient. |
| Punch Other | Signaler closes their fist and makes contact with the recipient’s body. |
| Push | The palm is placed on the recipient’s body, with force being exerted to try and move the recipient towards an unspecific location. |
| Reach | One or both arms are extended towards the recipient, with the palm being oriented upward. |
| Roll Over | Signaler rolls onto their back, which exposes their stomach to a recipient. |
| Slap Other | Either the palm of the hand or an object is brought into contact with the recipient’s body. |
| Slap Other with Object | An object is brought into hard contact with the recipient’s body. |
| Stomp | The sole of one or both feet is lifted vertically and brought into quick contact with the ground. |
| Swing/Rock | Large back and forth movement of the body (and occasionally arms) while being seated, standing quadrupedally, or standing bipedally. |
| Tandem Walk | Signaler positions their arm over the body of the recipient, with both the signaler and recipient walking forward while remaining side by side. |
| Tap/Slap/Knock Object | Quick movement(s) of the hand and/or arm are directed towards an object. |
| Throw Object | Object is thrown into the air and is displaced from the initial starting point. |

We focused primarily on gestures that have already been described in the literature (Byrne et al., 2017; Hobaiter, 2011). In these studies, gestures are defined as bodily movements produced during bouts of communication (Hobaiter & Byrne, 2011). Hereafter, we will refer to these signals as ‘manual gestures.’ Using these definitions and ethograms, we placed manual gestures into behavioral categories (i.e., gesture types) based on similarity in movement. Initially, we included 35 manual gesture types in this study, as derived from Hobaiter (2011) and Byrne et al. (2017). The number of gesture types decreased to 27 after we assessed inter-observer reliability. Other manual gesture types mentioned in Hobaiter (2011) and Byrne et al. (2017) were not incorporated into the current study since they were seldom observed (each type had fewer than ten observations). Removing manual gestures that are seldom observed helps to promote a more conservative interpretation of our results.

We evaluated all facial expressions and manual gestures using the four main properties that are common throughout the gesture literature. Gestures are typically defined as bodily movements which are communicative, intentional, flexible, and associated with a goal (Byrne et al., 2017; Moore, 2016; Byrne & Cochet, 2017). We measured 12 variables to quantify these four gesture properties. Of these, we coded 11 as binary variables, which included: mechanical ineffectiveness, receiver attention, recipient ID, response waiting at the end of the signal, response waiting while persisting with the signal, total response wait time (or response waiting overall), persistence, elaboration, immediate interaction outcomes, final interaction outcomes, and presumed goals (see Table 1 for details). For our 12th variable, we coded the generalized behavioral context that each signal occurred in using Pollick and de Waal’s behavioral categories (Pollick & De Waal, 2007).

We coded video footage in ELAN 5.6-AVFX (https://archive.mpi.nl/tla/elan) using a custom annotation template, which contained all of the variables mentioned above. A copy of this template can be found in the online supplement (Template S1).

Inter-observer reliability

We assessed inter-observer reliability on 10% of the video clips from 2018, which contained both facial expressions and manual gestures. This video footage contained 149 facial expressions (or 13.67% of all facial expressions) and 270 manual gestures (or 11.46% of all manual gestures). We calculated percentage of agreement for all variables mentioned above. In previous chimpanzee gesture studies, percentages of agreement at or above 70% were classified as good agreement (Hobaiter, 2011). We calculated Cohen’s Kappa for manual gesture types, which is a common practice in chimpanzee gesture studies (Hobaiter, 2011; Cartmill, 2008). A Cohen’s Kappa of 0.61 or higher is typically considered substantial agreement (McHugh, 2012). The average percentage of agreement across all variables was 79.61%. Facial expression types and manual gesture variables had a good level of agreement (with percentages being above 70%). Initially, manual gesture types had a lower agreement (48.52%), because morphologically similar manual gesture types (such as touch other, hand on, and grab) were difficult to distinguish when coded separately. Therefore, we condensed the 35 manual gesture types into 27 categories (Table 2) based on morphological similarities, and agreement increased to 65.56%. After condensing gesture types, Cohen’s Kappa was close to substantial agreement (0.604). Additional details can be found in the online supplement (Table S2).
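The two reliability measures described above can be illustrated with a minimal Python sketch. This is not the authors' code (the study reports using R), and the coder labels below are hypothetical values invented for the example. The function names `percent_agreement` and `cohens_kappa` are likewise illustrative; the kappa calculation follows the standard formula, (observed agreement − chance agreement) / (1 − chance agreement).

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Percentage of items on which the two coders assigned the same label."""
    matches = sum(1 for a, b in zip(coder_a, coder_b) if a == b)
    return 100.0 * matches / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa: agreement corrected for agreement expected by chance."""
    n = len(coder_a)
    p_observed = sum(1 for a, b in zip(coder_a, coder_b) if a == b) / n
    counts_a = Counter(coder_a)
    counts_b = Counter(coder_b)
    # Chance agreement: product of each coder's marginal proportion per label.
    p_expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    # Undefined (division by zero) if both coders used one identical label throughout.
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical gesture-type labels from two coders (not data from the study).
coder_a = ["reach", "reach", "push", "push", "clap",
           "clap", "reach", "push", "clap", "reach"]
coder_b = ["reach", "push", "push", "push", "clap",
           "reach", "reach", "push", "clap", "reach"]

agreement = percent_agreement(coder_a, coder_b)
kappa = cohens_kappa(coder_a, coder_b)
print(f"agreement = {agreement:.1f}%, kappa = {kappa:.3f}")
```

In this toy example the coders agree on 8 of 10 items, yet kappa is lower than the raw agreement because some matches are expected by chance alone. This is why a percentage of agreement and a kappa value for the same data need not fall on the same side of their respective thresholds.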

Data analysis

We exported data from ELAN into R 3.6.2 (R Core Team, 2020). For each signal observed, we created a composite gesture score (CGS) by adding the number of gesture variables observed. To create these scores, we used the 11 gesture variables which were coded as being present or absent. The final gesture variable (generalized behavioral contexts) was not included here, since this variable was categorical. The total number of gesture variables coded as present was used as each individual signal’s score. For example: a signal with a CGS of 8 exhibited eight out of the 11 gesture variables considered in this study (see Table 1 for a list of possible gesture variables). We then averaged composite gesture scores across facial expression and manual gesture types. We compared the average CGS for each modality using a Mann–Whitney U test (base functions in R 3.6.2). Next, we used binomial tests to determine whether the proportion of observed binary gesture variables for each modality was the result of chance. Chance occurrence would mean that the observers were as likely to score the variable as absent as they were present, statistically speaking. Thus, a binomial score within the realm of chance would indicate that the gesture variable was not reliably associated with the signal, as on any given observation the variable might be present or might not. By requiring a rate significantly above chance, we ensured that we only interpreted gesture variables as representative features of a signal if the variable was consistently scored as present. Chance level was set to 50%, and we evaluated scores at the 95% confidence level.

It is possible for two signal types to have the same average CGS but differ in the types of variables observed. Therefore, we used binomial Generalized Linear Mixed Models (GLMMs) to examine the association between binary gesture variables and each mode of communication. We used GLMMs for two main reasons. First, GLMMs help to account for the pooling fallacy (Waller et al., 2013). The communicative signals of up to 18 individuals are incorporated in this study over the span of three years. This inevitably leads to having more signaling samples than individuals. GLMMs are beneficial for addressing the asymmetrical relationship between number of signals and individuals (Waller et al., 2013). Second, GLMMs help to account for idiosyncratic differences in signal use (Waller et al., 2013). For example, some individuals may use a signal as a gesture while others do not. GLMMs can be used to account for individual differences through the use of random effects (Waller et al., 2013).

We implemented binomial GLMMs with a logit link function using the “lme4” package in R 3.6.2 (R Core Team, 2020; Bates et al., 2015). We compared each model to a null counterpart model in which only signaler ID (not signal type) was included as a predictor (as outlined in R Core Team, 2020). This comparison determines whether signal modality has a significant influence on each gesture variable. We made these comparisons with a likelihood-ratio test using the ANOVA function in base R (Waller et al., 2013). GLMMs were implemented for the following ten variables: receiver attention, recipient ID, response waiting at the end of the signal, response waiting while persisting with the signal, total response wait time (or response waiting overall), persistence, elaboration, immediate interaction outcomes, final interaction outcomes, and presumed goals. Mechanical ineffectiveness was not considered in GLMMs since almost all facial expressions and manual gestures exhibited this variable. In each model, the gesture variable was set as the outcome variable, with signal modality and signaler ID set as explanatory variables. Signaler ID was set as a random effect.
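
The likelihood-ratio comparison between a null and a full model can be sketched as follows. This Python snippet is an illustration only: it does not fit the mixed models (which were fitted with lme4 in R), the function names are hypothetical, and it assumes the two models differ by a single parameter so that the test statistic is referred to a chi-square distribution with one degree of freedom.

```python
from math import erfc, sqrt


def likelihood_ratio_statistic(loglik_null, loglik_full):
    """Twice the gain in log-likelihood from adding the extra predictor."""
    return 2.0 * (loglik_full - loglik_null)


def chi2_sf_1df(statistic):
    """Upper-tail probability of a chi-square variable with 1 degree of freedom."""
    # For 1 df, P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    return erfc(sqrt(statistic / 2.0))


# Hypothetical log-likelihoods for a null and a full model.
statistic = likelihood_ratio_statistic(-2000.0, -1995.0)  # 10.0
print(statistic, chi2_sf_1df(statistic))

# Test statistics reported in the Results for two non-significant comparisons.
print(round(chi2_sf_1df(2.557), 3))
print(round(chi2_sf_1df(0.0675), 3))
```

A small statistic means that adding signal modality barely improves the fit, so the corresponding p-value is large.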

We present odds ratios (OR) comparing the strength of association between facial expressions and gestures with the results of each GLMM. Finally, we created context tie index (CTI) scores for each facial expression and manual gesture type for the generalized behavioral contexts. This metric was initially established by Pollick & De Waal (2007) to examine the strength of association between behavioral contexts and signal types (i.e., flexibility in the meaning of signals). For each signal type (Table 2), we calculated the proportion of observed behavioral contexts. For example, the ‘directed push’ gesture was observed in three distinct behavioral contexts: locomotion (N = 9 or 75.00%), play (N = 2 or 16.67%), and sex (N = 1 or 8.33%). We then selected the largest proportion (and corresponding behavioral context) for that signal type’s CTI score (Pollick & De Waal, 2007). For the ‘directed push’ gesture, the CTI score would be 0.75, since locomotion was the most frequently observed behavioral context. Signal types with larger CTI scores (i.e., close to 100% or 1.00) have a strong association with a single, specific behavioral context, and signal types with low CTI scores (i.e., close to 0% or 0.00) are associated with multiple contexts. Therefore, Pollick & De Waal (2007) equated high CTI scores with less flexible usage and low CTI scores with more flexible usage. CTI scores for each signal type can be found in Table 3. We compared CTI scores for facial expressions and manual gestures using a Mann–Whitney U test (using base functions in R 3.6.2).
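
The CTI calculation for a single signal type can be sketched as below, using the ‘directed push’ counts given above. The code is a Python illustration rather than the R script used in the study, and the function name is hypothetical.

```python
from collections import Counter


def context_tie_index(contexts):
    """Return the most frequent behavioral context and its share of all observations."""
    counts = Counter(contexts)
    top_context, top_count = counts.most_common(1)[0]
    return top_context, top_count / len(contexts)


# 'Directed push' observations: locomotion (9), play (2), sex (1).
directed_push = ["locomotion"] * 9 + ["play"] * 2 + ["sex"]

context, cti = context_tie_index(directed_push)
print(context, cti)  # locomotion 0.75
```

A signal observed in only one context would score 1.00, while a signal spread evenly over many contexts would score close to the reciprocal of the number of contexts.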

| Modality | Signal type | CTI score |
|---|---|---|
| Facial Expression | Bared Teeth Face | 0.25 |
| Facial Expression | Pout Face | 0.27 |
| Facial Expression | Lower Lip Relaxer Face | 0.31 |
| Manual Gesture | Swing/Rock | 0.32 |
| Manual Gesture | Amb. Touch | 0.34 |
| Facial Expression | Amb. Face | 0.36 |
| Manual Gesture | Jump | 0.36 |
| Manual Gesture | Arm Raise | 0.38 |
| Manual Gesture | Reach | 0.40 |
| Manual Gesture | Bite | 0.48 |
| Facial Expression | Pant Hoot Face | 0.49 |
| Manual Gesture | Kick | 0.50 |
| Manual Gesture | Punch Other | 0.50 |
| Manual Gesture | Clap | 0.52 |
| Manual Gesture | Hand Fling | 0.52 |
| Facial Expression | Scream Face | 0.52 |
| Manual Gesture | Tandem Walk | 0.55 |
| Manual Gesture | Push | 0.55 |
| Manual Gesture | Tap/Slap/Knock Object | 0.58 |
| Manual Gesture | Present Body Part | 0.61 |
| Manual Gesture | Slap Other | 0.65 |
| Manual Gesture | Stomp | 0.69 |
| Facial Expression | Raspberry Face | 0.72 |
| Manual Gesture | Roll Over | 0.74 |
| Manual Gesture | Directed Push | 0.75 |
| Manual Gesture | Embrace | 0.77 |
| Manual Gesture | Present Sexual | 0.79 |
| Manual Gesture | Throw Object | 0.84 |
| Manual Gesture | Slap Other with Object | 0.88 |
| Manual Gesture | Object Move | 0.89 |
| Manual Gesture | Present Climb on Me | 0.91 |
| Manual Gesture | Dangle | 0.93 |
| Facial Expression | Play Face | 0.93 |
| Manual Gesture | Look | 0.95 |
| Facial Expression | Lipsmacking Face | 0.99 |
| Manual Gesture | Pirouette | 1.00 |

Datasets (Data S1) and code (R Script S1) used to conduct these analyses can be found in the online supplement.

Results

We observed 3,446 signals across 156.5 h of video footage, of which 1,090 were facial expressions and 2,356 were manual gestures. Both facial expressions and manual gestures varied in the extent to which they exhibited the four gesture properties (and the corresponding 11 gesture variables, Table 4). The median composite gesture score (CGS) for manual gestures (median = 8.48, range = 7.31−9.63) was not significantly different from that of facial expressions (median = 8.13, range = 6.08−9.27, Mann–Whitney U = 90, p = 0.257, Table 3).

| Gesture variables | Proportion of facial expressions | Binomial test significant for facial expressions? (p < 0.05) | Proportion of manual gestures | Binomial test significant for manual gestures? (p < 0.05) |
|---|---|---|---|---|
| Mechanical Ineffectiveness | 100.00% | p < 0.001*** | 98.94% | p < 0.001*** |
| Receiver Attention | 49.08% | p = 0.565 | 58.96% | p < 0.001*** |
| Recipient ID | 75.87% | p < 0.001*** | 95.97% | p < 0.001*** |
| Response Waiting (End) | 18.35% | p < 0.001*** | 25.21% | p < 0.001*** |
| Response Waiting (Persisting) | 62.20% | p < 0.001*** | 59.97% | p < 0.001*** |
| Response Waiting (Overall) | 64.40% | p < 0.001*** | 71.82% | p < 0.001*** |
| Persistence | 89.45% | p < 0.001*** | 70.76% | p < 0.001*** |
| Elaboration | 93.94% | p < 0.001*** | 87.14% | p < 0.001*** |
| Immediate Interaction Outcome | 71.38% | p < 0.001*** | 80.73% | p < 0.001*** |
| Final Interaction Outcome | 88.17% | p < 0.001*** | 89.43% | p < 0.001*** |
| Presumed Goal | 70.83% | p < 0.001*** | 91.30% | p < 0.001*** |

Gestures are defined as being communicative, intentional, flexible, and goal associated (Byrne et al., 2017; Moore, 2016; Byrne & Cochet, 2017). Our primary goal was to evaluate whether facial expressions also possess these traits, and therefore should be considered gestures. To do this, we present the results for each criterion separately, showing how facial expressions performed and how they compared to manual gestures. Proportions and binomial test results can be found in Table 4. GLMM results can be found in Tables 5 and 6. For detailed information on how gesture properties (and their corresponding variables) differed across signal types, see the online supplement (Data S1).

| Gesture variables | Null model (AIC) | Regular model (AIC) | P-Value |
|---|---|---|---|
| Receiver Attention | 4691.2 | 4670.8 | p < 0.001*** |
| Recipient ID | 2075.0 | 1867.6 | p < 0.001*** |
| Response Waiting (at End of Signal) | 3688.2 | 3677.1 | p < 0.001*** |
| Response Waiting (while Persisting) | 4465.5 | 4454.0 | p < 0.001*** |
| Response Waiting (Overall) | 4002.4 | 4001.8 | p = 0.1098 |
| Persistence | 3727.6 | 3579.2 | p < 0.001*** |
| Elaboration | 2324.6 | 2277.0 | p < 0.001*** |
| Immediate Interaction Outcome | 3610.0 | 3581.7 | p < 0.001*** |
| Final Interaction Outcome | 2329.8 | 2331.8 | p = 0.7949 |
| Presumed Goal | 2612.4 | 2492.8 | p < 0.001*** |

| Model | Predictor variable | Estimate | Std. Error | z value | p value | OR (FE/GE) |
|---|---|---|---|---|---|---|
| Receiver Attention | (Intercept) | −0.03989 | 0.08666 | −0.46 | p = 0.645 | 0.6936887 |
| | Signal Type GE | 0.36573 | 0.07716 | 4.74 | p < 0.001*** | |
| Recipient ID | (Intercept) | 1.3308 | 0.2045 | 6.508 | p < 0.001*** | 0.1599745 |
| | Signal Type GE | 1.8327 | 0.1336 | 13.717 | p < 0.001*** | |
| Response Waiting (at End of Signal) | (Intercept) | −1.51649 | 0.11351 | −13.360 | p < 0.001*** | 0.7109288 |
| | Signal Type GE | 0.34118 | 0.09549 | 3.573 | p < 0.001*** | |
| Response Waiting (while Persisting) | (Intercept) | 0.54779 | 0.13885 | 3.945 | p < 0.001*** | 1.350992 |
| | Signal Type GE | −0.30084 | 0.08249 | −3.647 | p < 0.001*** | |
| Response Waiting (Overall) | (Intercept) | 0.67214 | 0.16025 | 4.194 | p < 0.001*** | 0.8712955 |
| | Signal Type GE | 0.13777 | 0.08584 | 1.605 | p = 0.108 | |
| Persistence | (Intercept) | 2.1205 | 0.1131 | 18.75 | p < 0.001*** | 3.500197 |
| | Signal Type GE | −1.2528 | 0.1110 | −11.29 | p < 0.001*** | |
| Elaboration | (Intercept) | 2.7702 | 0.1614 | 17.167 | p < 0.001*** | 2.624528 |
| | Signal Type GE | −0.9649 | 0.1467 | −6.576 | p < 0.001*** | |
| Immediate Interaction Outcome | (Intercept) | 0.9864 | 0.10922 | 9.031 | p < 0.001*** | 0.6081248 |
| | Signal Type GE | 0.49738 | 0.08954 | 5.555 | p < 0.001*** | |
| Final Interaction Outcome | (Intercept) | 2.1545 | 0.16075 | 13.403 | p < 0.001*** | 0.9689051 |
| | Signal Type GE | 0.03159 | 0.12076 | 0.262 | p = 0.794 | |
| Presumed Goal | (Intercept) | 1.0665 | 0.2272 | 4.693 | p < 0.001*** | 0.3084879 |
| | Signal Type GE | 1.1761 | 0.1070 | 10.988 | p < 0.001*** | |
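
The odds ratios in the table above appear to follow from the ‘Signal Type GE’ estimates: because each estimate is the log odds for manual gestures (GE) relative to facial expressions (FE), the FE/GE odds ratio would be the exponential of the negated estimate. This relationship is our reading of the table rather than a stated formula, and the Python sketch below (with a hypothetical function name) simply checks it against two rows.

```python
from math import exp


def odds_ratio_fe_vs_ge(estimate_ge):
    """Convert a logit-scale GE-vs-FE coefficient into an FE/GE odds ratio."""
    return exp(-estimate_ge)


# Receiver attention: positive estimate, so facial expressions have lower odds (OR < 1).
print(round(odds_ratio_fe_vs_ge(0.36573), 3))

# Persistence: negative estimate, so facial expressions have higher odds (OR > 1).
print(round(odds_ratio_fe_vs_ge(-1.2528), 3))
```

Read this way, an odds ratio below 1 indicates that facial expressions were less likely than manual gestures to exhibit the variable, and an odds ratio above 1 indicates the opposite.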

Communicative

We measured communicativeness by whether the movement (facial or other) was mechanically ineffective and directed toward one, clear recipient (Table 1). All facial expressions were mechanically ineffective (100.00%, Binomial Test, p < 0.05), and most were produced towards a recipient (75.87%, Binomial Test, p < 0.05). We also observed this pattern with manual gestures; 98.94% were mechanically ineffective (Binomial Test, p < 0.05) and 95.97% were produced towards one, clear recipient (Binomial Test, p < 0.05). Modality was a significant predictor of recipient ID (Likelihood Ratio Test, χ²(1) = 209.43, p < 0.001), with facial expressions significantly less likely to exhibit this property than manual gestures (GLMM, p < 0.001, OR = 0.160).

Intentional

We measured intentionality by whether the movement was sensitive to receiver attention, exhibited response waiting at the end of the movement, exhibited response waiting while persisting in the movement, or exhibited response waiting overall (i.e., the presence of any or both forms of response waiting; Table 1). Approximately half of all observed facial expressions were sensitive to receiver attention (49.08%), and this was not significantly different from chance (Binomial Test, p = 0.565). Facial expressions exhibited response waiting while persisting (62.20%, Binomial Test, p < 0.05) and response waiting overall (64.40%, Binomial Test, p < 0.05) significantly above chance. Only a small proportion of facial expressions exhibited response waiting at the end of the signal, and this was significantly below chance level (18.35%, Binomial Test, p < 0.05).

Manual gestures exhibited a broadly similar pattern. More than half of all manual gestures were sensitive to receiver attention (58.96%), which was significantly above chance level (Binomial Test, p < 0.05). Manual gestures exhibited response waiting while persisting (59.97%, Binomial Test, p < 0.05) and response waiting overall (71.82%, Binomial Test, p < 0.05) significantly above chance. A small proportion of manual gestures exhibited response waiting at the end, and this was significantly below chance level (25.21%, Binomial Test, p < 0.05).

Modality was a significant predictor of receiver attention (Likelihood Ratio Test, χ²(1) = 22.395, p < 0.001), response waiting at the end (Likelihood Ratio Test, χ²(1) = 13.041, p < 0.001), and response waiting while persisting (Likelihood Ratio Test, χ²(1) = 13.486, p < 0.001). But modality did not predict response waiting overall (Likelihood Ratio Test, χ²(1) = 2.557, p = 0.110). Facial expressions were significantly less likely to exhibit sensitivity to receiver attention (GLMM, p < 0.001, OR = 0.694) and response waiting at the end of the signal (GLMM, p < 0.001, OR = 0.711) when compared to manual gestures; however, facial expressions were significantly more likely to exhibit response waiting while persisting (GLMM, p < 0.001, OR = 1.351).

Flexible

We measured flexibility by whether the movement was sensitive to persistence and elaboration (Table 1). Facial expressions exhibited persistence (89.45%, Binomial Test, p < 0.05) and elaboration (93.94%, Binomial Test, p < 0.05) significantly above chance, and manual gestures showed the same pattern (persistence = 70.76%, Binomial Test, p < 0.05; elaboration = 87.14%, Binomial Test, p < 0.05). Modality was a significant predictor of persistence (Likelihood Ratio Test, χ²(1) = 150.38, p < 0.001) and elaboration (Likelihood Ratio Test, χ²(1) = 50.225, p < 0.001), with facial expressions being significantly more likely to exhibit both properties when compared to manual gestures (GLMM, p < 0.001: persistence OR = 3.500, elaboration OR = 2.625).

Facial expressions (median = 0.49, range = 0.25−0.99) exhibited lower context tie indices (CTI scores) on average than manual gestures (median = 0.61, range = 0.32−1.00, see Table 3), which would suggest greater contextual flexibility for facial expressions. However, this difference was not statistically significant (Mann–Whitney U = 86, p = 0.201). To compare our results to those published by Pollick & De Waal (2007), we compared a subset of facial expressions (N = 4) and manual gestures (N = 3) observed in both studies (highlighted in gray in Table 3). CTI scores were very similar across select facial expressions (median = 0.40, range = 0.25−0.52) and manual gestures (median = 0.38, range = 0.34−0.40, Mann–Whitney U = 6, p = 1.000), suggesting similarities in contextual flexibility. In contrast, Pollick & De Waal (2007) report manual gestures as having significantly greater contextual flexibility when compared to facial expressions.

Goal associated

We measured goal association by whether the movement was sensitive to immediate interaction outcomes, final interaction outcomes, and having a clear presumed goal (Table 1). Facial expressions were associated with an immediate interaction outcome (71.38%, Binomial Test, p < 0.05), a final interaction outcome (88.17%, Binomial Test, p < 0.05), and a clear presumed goal (70.83%, Binomial Test, p < 0.05). We also observed this pattern with manual gestures: immediate interaction outcome (80.73%, Binomial Test, p < 0.05), final interaction outcome (89.43%, Binomial Test, p < 0.05), and a clear presumed goal (91.30%, Binomial Test, p < 0.05). Modality was a significant predictor of immediate interaction outcomes (Likelihood Ratio Test, χ²(1) = 30.259, p < 0.001) and presumed goals (Likelihood Ratio Test, χ²(1) = 121.600, p < 0.001) but not final interaction outcomes (Likelihood Ratio Test, χ²(1) = 0.0675, p = 0.7949). When compared to manual gestures, facial expressions were significantly less likely to be associated with an immediate interaction outcome (GLMM, p < 0.001, OR = 0.608) and significantly less likely to be associated with a presumed goal (GLMM, p < 0.001, OR = 0.308).

Discussion

Our goal was to examine whether chimpanzee facial expressions meet the criteria for gestures (Table 1) and should be considered as such. In contrast to previous studies, we quantified all previously described gesture properties and corresponding variables, as opposed to a select few or relying on qualitative evidence. Out of the 11 binary gesture variables examined, facial expressions exhibited nine significantly above chance level, and manual gestures exhibited ten (Table 4). The nine gesture variables shown by facial expressions correspond to all four of the main properties of gestural communication, which include: communicativeness, intentionality, flexibility, and goal association.

There was some disagreement between our results for intentionality and those of other studies. Response waiting while persisting and overall were both significantly associated with both manual gestures and facial expressions. However, response waiting at the end of the signal occurred significantly below chance level for both facial expressions and manual gestures. In the gesture literature, response waiting at the end of the signal is often used as a measure of intentionality (Byrne et al., 2017; Graham et al., 2018; Roberts & Roberts, 2019; Hobaiter & Byrne, 2011), but previous studies have not quantified this variable to the extent that we did. Thus, it appears that response waiting at the end of a signal is rare and may not be as helpful for the identification of gestures when compared to other forms of response waiting. However, it is important to note that there is extensive disagreement regarding the definition of intentionality (Tomasello & Call, 2019) and the validity of variables associated with intentionality (Graham et al., 2019). To address these concerns, we recommend examining and comparing multiple gesture properties and corresponding variables simultaneously, as we attempted, since variables associated with other gesture properties (such as flexibility and goal association) can be used to provide additional support for intentionality (Tomasello & Call, 2019).

We also examined how the gesture properties observed in facial expressions compared to manual gestures using a new scoring metric (composite gesture scores, or CGS) based on the number of gesture properties (and corresponding variables) exhibited for each signal. We found no significant differences in average CGS across facial expressions and manual gestures. Thus, facial expressions are just as likely to take on as many gesture variables as manual gestures. When directly comparing facial expressions to manual gestures, we found that facial expressions are more strongly predicted by three variables, whereas manual gestures are more strongly predicted by five variables (Table 6). Out of these eight variables, six occur significantly above chance level in both facial expressions and manual gestures. There were no significant differences between facial expressions and manual gestures for the remaining three variables. Overall, this pattern shows that facial expressions performed similarly to manual gestures.

When measuring flexibility using our categorical variable, we also observed no significant differences in context tie indices (CTI scores) across facial expressions and manual gestures (Table 3). This finding suggests that facial expressions are just as likely to demonstrate contextual flexibility as manual gestures. Interestingly, facial expressions exhibited more contextual flexibility on average when compared to manual gestures. Earlier studies have demonstrated the opposite pattern. For example: Pollick & De Waal (2007) found that gestures produced by chimpanzees and bonobos were significantly more likely to exhibit contextual flexibility when compared to facial expressions. When making a direct comparison on a subset of gesture types that were included in both studies, we found no significant differences in contextual flexibility between select facial expression and manual gesture types. It is not clear how to reconcile our findings with those of Pollick and de Waal (Pollick & De Waal, 2007), but differences in sample sizes, signal types observed, signaling ethograms, or study populations could all have contributed to different results.

Since facial expressions met all four of the key criteria for gestures and performed similarly to manual gestures across these variables, we conclude that chimpanzee facial expressions can be gestural. Our results suggest that a revision to how facial expressions are understood is in order. The term ‘facial expression’ stems from the idea that the facial muscle movement is attributed to expressions of emotion (Bell, 1806; Descartes,; Fridlund, 1994; Elliott & Jacobs, 2013), which are both discrete and universal (Ekman, 1970; Ekman, 1999). As a result, facial expressions are often perceived as being spontaneous and inflexible (Ekman, 1993). Because gestures are defined as intentionally and flexibly produced communicative movements (Byrne et al., 2017), they are often described as being a separate mode of communication when compared to facial expressions (Liebal et al., 2014). Our results suggest that facial expressions can be used as facial gestures.

The reverse may also be true: manual gestures can be spontaneous and inflexible. For example: in the current study, manual gestures such as pirouette, look, and dangle had higher CTI scores, which are associated with reduced flexibility in meaning. In contrast, facial expressions such as the bared teeth face, pout face, and lower lip relaxer face had lower CTI scores, suggesting greater flexibility in meaning when compared to these manual gestures. The idea that gestures can be spontaneous and inflexible is also supported by the literature on body language. Researchers of body language argue that both facial expressions and gestures with the hands, arms, and body can be the result of emotion and still exhibit gesture variables (such as being sensitive to audience effects; Gelder, 2009; Corneau et al., in press). In some cases, the gesture types described are referred to as bodily expressions and are “recognized as reliably as facial expressions” (Gelder, 2009, p. 3475). Thus, the sharp distinction between gestures and expressions, regardless of body part, may need to be revised in favor of a more holistic, unified concept.

The existence of facial gesturing in chimpanzees has important implications for the evolution of human language. As previously mentioned, those who support a gestural origin of language (Armstrong, 2008) sometimes argue that other forms of communication, such as facial expressions and vocalizations, cannot be evolutionary precursors due to their strong association with emotion (Hewes et al., 1973; Corballis, 2002; Pollick & De Waal, 2007). As a result, it is believed that the evolutionary starting point of human language can be traced to great ape gestural communication (Tomasello & Call, 2019). The results of our study suggest that the evolutionary starting point of human language may be older than the great ape lineage.

Chimpanzee facial expression types and their corresponding muscle movements can be found in other primate (Van Hooff, 1967) and non-primate species (Darwin, 1872; Andrew, 1963). Similar to chimpanzees, pigtailed macaques (Macaca nemestrina) produce a facial expression (silent bared-teeth display) that varies in its meaning (Flack & De Waal, 2007). This finding suggests that monkey facial expressions may be used in a flexible manner, which is considered to be an important gesture property (Byrne et al., 2017). Mice produce a variety of facial expressions (Langford et al., 2010; Dolensek et al., 2020) and distinct muscle movements, which include bulging of the nose and cheeks (Langford et al., 2010). These muscle movements are similar to those observed in chimpanzees, which include the nose wrinkler (AU9) and cheek raiser (AU6, Parr et al., 2007), and they have also been observed in humans, macaques, gibbons, orangutans, dogs, and cats (Waller, Julle-Daniere & Micheletta, 2020). If chimpanzee facial expressions and their corresponding muscle movements are gestural, it is possible that these other species with shared expressions and muscle movements are also capable of using their face to gesture. Conversely, species outside of the great apes may lack the control or freedom of movement needed to gesture with their faces. Direct tests for facial gestures outside of great apes are needed to resolve these competing hypotheses.

Research on the gesture properties of mammal facial expressions and vocalizations would help to determine whether the ability to gesture with the face is uniquely derived in hominids or more widespread than previously assumed. We may find that rather than the product of recent evolution, the mechanisms needed for language to emerge have been gradually evolving through much, if not all, of the mammalian lineage. In this case, an additional step would need to be added to the “hand to mouth” theory of language evolution (Corballis, 2002) to include the emergence of mammalian facial gestures. In this revised theory, mammalian facial (and possibly vocal) gestures provided the basis for great ape manual gestures to evolve. The ability to produce manual gestures then led to more symbolic forms of communication (such as sign language), and subsequently to the development of spoken language (Corballis, 2002). It remains to be determined whether facial gestures will be found outside of chimpanzees, but chimpanzees provide a potentially important bridge from facial to manual gestures.

Future studies should also address whether vocalizations are capable of taking on important gesture properties. In the current study, we examined the properties of nine types of facial expressions. Some of these facial expressions are associated with vocalizations (such as the lipsmacking face, pant hoot face, raspberry face, and scream face), whereas others are not often associated with vocalizations (such as the ambiguous face, bared teeth face, lower lip relaxer face, and pout face). When separating these eight facial expression types from one another based on modality, interesting patterns emerge. The four facial expression types associated with vocalizations exhibited a higher average context tie index score (average = 0.68, median = 0.62, range = 0.49−0.99) when compared to the four facial expression types which are seldom associated with vocalizations (average = 0.30, median = 0.29, range = 0.25−0.36); this difference was statistically significant (Mann–Whitney U = 0, p = 0.029). Facial expressions associated with vocalizations had a lower average composite gesture score (average = 7.01, median = 6.91, range = 6.08−8.13) when compared to the four facial expressions that are not typically associated with vocalizations (average = 8.29, median = 8.74, range = 6.57−9.10); however, this difference was not statistically significant (Mann–Whitney U = 14, p = 0.114). These results suggest that facial expressions associated with vocalizations exhibit reduced contextual flexibility and fewer gesture variables on average compared to facial expressions without vocalizations. However, it is difficult to draw conclusions from these results alone, since there were instances recorded where facial expressions not often associated with vocalizations were produced with one and vice versa. Some facial expression types (such as the play face) also fall between both categories, since they can be produced with a vocalization but do not require one.
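
With only four signal types per group, the Mann–Whitney comparison of CTI scores can be checked by exact enumeration. The sketch below is a Python illustration (the study used base R functions), the function names are hypothetical, and the CTI values are those listed for the eight facial expression types in Table 3.

```python
from itertools import combinations


def mann_whitney_u(sample_a, sample_b):
    """U statistic for sample_a: number of pairs with a > b, ties counted as 0.5."""
    return sum(
        1.0 if a > b else 0.5 if a == b else 0.0
        for a in sample_a
        for b in sample_b
    )


def exact_two_sided_p(sample_a, sample_b):
    """Exact p-value: share of all group splits at least as extreme as the observed one."""
    pooled = list(sample_a) + list(sample_b)
    n_a = len(sample_a)
    center = n_a * len(sample_b) / 2.0
    observed = abs(mann_whitney_u(sample_a, sample_b) - center)
    extreme = 0
    total = 0
    for indices in combinations(range(len(pooled)), n_a):
        chosen = set(indices)
        group_a = [pooled[i] for i in chosen]
        group_b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        if abs(mann_whitney_u(group_a, group_b) - center) >= observed - 1e-12:
            extreme += 1
        total += 1
    return extreme / total


# CTI scores from Table 3.
with_vocalization = [0.99, 0.49, 0.72, 0.52]      # lipsmacking, pant hoot, raspberry, scream
without_vocalization = [0.36, 0.25, 0.31, 0.27]   # ambiguous, bared teeth, lower lip relaxer, pout

print(mann_whitney_u(without_vocalization, with_vocalization))
print(round(exact_two_sided_p(with_vocalization, without_vocalization), 3))
```

Because every score in one group exceeds every score in the other, only 2 of the 70 possible splits are as extreme as the observed one, which is the smallest two-sided p-value attainable with two groups of four.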

Ultimately, the focus on the hands as the origins of gesturing may be a narrow, anthropocentric view. Outside of the great apes, animals are much more dependent upon their hands for locomotion, limiting the ability and opportunity to use them to communicate. But the face has no such restrictions. To take a deeper look into the origins of human flexible and intentional communication, we need to look to the faces and vocalizations of other mammals for gesture properties through a quantitative approach. Our newly devised scoring metric (composite gesture scores) can be applied to a variety of species, including both primate and non-primate mammals. According to Graham et al. (2019), it is currently difficult to evaluate the gesture properties of signals without a more rigorous and quantified approach. In addition, very few studies have examined multiple gesture properties and corresponding variables at once. We hope that our composite gesture scores will be useful for cross-species comparisons and for ensuring replicability in future studies.

Conclusion

We conclude that chimpanzees are capable of using their faces to gesture. Current theories about the gestural origins of human language propose that this process began with great ape manual gestures. But, if other mammals are also gesturing with their faces, then the properties associated with human language may have been gradually evolving through much, if not all, of the mammalian lineage. We propose that researchers look to the faces of other mammals for gesture properties to examine this possibility.

Supplemental Information

Supplementary Tables

A list of all chimpanzees who were housed at the Los Angeles Zoo and the inter-observer reliability scores for all variables of interest.

ELAN Template Part 1

Used to code gesture properties and their corresponding variables.

ELAN Template Part 2

Used to code gesture properties and their corresponding variables.

First video example of chimpanzee facial and manual gestures

An example of a social interaction featuring both facial and manual gestures. The manual gestures were described by Byrne et al. (2017) and Hobaiter (2011), and the facial expression was described by Parr et al. (2007). Ben (left at 00:03) is following Uki (right at 00:03) as she runs away from him. Ben tries to stop Uki’s retreat by producing a ‘grab gesture’, which is directed at Uki’s back leg in a mechanically ineffective, receiver specific manner (00:04). Uki spins away, eventually turning to face Ben. While Uki is facing him, Ben produces a ‘lower lip relaxer’ while bobbing his head up and down (00:06). Uki does not respond to Ben’s facial expression, and eventually looks away for a brief moment. When Uki turns to face him again, Ben sits on the ground and produces a ‘reach gesture’ towards Uki (00:14) followed by a low intensity ‘pant hoot’ (00:15). After a few seconds, Uki finally responds by approaching Ben and making bodily contact (00:20). We coded Ben as having the goal of ‘give affiliation’ because Uki’s approach and bodily contact is when Ben ceased his communicative attempts. In this interaction, Ben’s facial expressions are communicative (i.e., mechanically ineffective and directed at a specific receiver), intentional (i.e., exhibit response waiting in between signaling attempts and while holding signals), flexible (i.e., show persistence by holding the signals and elaboration by following up with a new facial expression and a manual gesture), and goal-oriented (i.e., associated with the goal of wanting to give affiliation to Uki). Ben’s manual gestures are also communicative (i.e., mechanically ineffective and directed at a specific receiver), intentional (i.e., exhibit response waiting in between signaling attempts and while holding signals), flexible (i.e., show persistence by holding the ‘reach gesture’ and elaboration by following up with a facial expression and a new manual gesture), and goal-oriented (i.e., associated with the goal of wanting to give affiliation to Uki). Information on this social interaction can be found in the raw data (see Florkiewicz and Campbell Data S1.xlsx) in rows 1015-1018. Video credit: Brittany Florkiewicz.

Second video example of chimpanzee facial and manual gestures

An example of a social interaction featuring both facial and manual gestures. The manual gestures were described by Byrne et al. (2017) and Hobaiter (2011), and the facial expression was described by Parr et al. (2007). Julie (right) approaches Jean (left) and makes contact with Jean’s back using a ‘touch other gesture’ in a mechanically ineffective, receiver-specific manner (00:03). Julie sits on the rock that Jean was initially occupying and Jean begins to move away (00:05). Julie then produces a ‘grab pull gesture’, making contact with Jean’s left wrist (00:11), but Jean moves away (00:13). Julie shows persistence by producing a ‘grab gesture’ multiple times (00:14 and 00:17). Julie also shows elaboration by producing a ‘lower lip relaxer’ (00:15). In between gestures, Julie looks directly at Jean, waiting for her to respond. Finally, after Julie’s final ‘grab gesture’ (00:17), Jean approaches Julie, Julie directs an ‘embrace gesture’ to Jean, and Julie also begins to produce a ‘rocking gesture’. We coded Julie as having the goal of ‘give affiliation’ because the embrace is when Julie ceased her communicative attempts. In this interaction, Julie’s facial expression is communicative (i.e., mechanically ineffective and directed at a specific receiver), intentional (i.e., exhibits response waiting while she is persisting), flexible (i.e., shows persistence by holding the signal and elaboration by following up with a manual gesture), and goal-oriented (i.e., associated with the goal of wanting to give affiliation to Jean). Julie’s manual gestures are also communicative (i.e., mechanically ineffective and directed at a specific receiver), intentional (i.e., exhibit response waiting while she is persisting), flexible (i.e., show persistence by repeating the signals and elaboration by adding a facial expression and changing manual gestures), and goal-oriented (i.e., associated with the goal of wanting to give affiliation to Jean).
Information on this social interaction can be found in the raw data (see Florkiewicz and Campbell Data S1.xlsx) in rows 1173-1178. Video credit: Brittany Florkiewicz.