A target tracking method based on adaptive occlusion judgment and model updating strategy

- Academic Editor

- Imran Ashraf

- Subject Areas

- Artificial Intelligence, Computer Vision, Real-Time and Embedded Systems, Robotics, Visual Analytics

- Keywords

- Target tracking, Adaptive occlusion judgment, Four evaluation indicators, Model updating, Double thresholds

- Copyright

- © 2023 Cai et al.

- Licence

- This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ Computer Science) and either DOI or URL of the article must be cited.

- Cite this article

- 2023. A target tracking method based on adaptive occlusion judgment and model updating strategy. PeerJ Computer Science 9:e1562 https://doi.org/10.7717/peerj-cs.1562

Abstract

Target tracking is an important research topic in the field of computer vision. Despite rapid technological progress, it remains difficult to balance overall performance under challenges such as target occlusion and motion blur. To address this issue, we propose an improved kernelized correlation filter tracking algorithm with an adaptive occlusion judgment and model updating strategy (called Aojmus) to achieve robust target tracking. Firstly, the algorithm fuses color-naming (CN) and histogram of oriented gradients (HOG) features for feature extraction and introduces a scale filter to estimate the target scale, which reduces the tracking error caused by variations in target features and scale. Secondly, Aojmus introduces four evaluation indicators and a double-threshold mechanism to determine whether the target is occluded and the degree of occlusion, respectively. The four evaluation results are weighted and fused into a final value. Finally, the model updating strategy is adaptively adjusted based on the weighted fusion value and the result of the scale estimation. Experimental evaluations on the OTB-2015 dataset compare Aojmus with four comparable algorithms in terms of tracking precision, success rate, and speed. The results show that Aojmus outperforms all the compared algorithms in tracking precision. It also exhibits excellent success-rate performance on attributes such as target occlusion and motion blur. In addition, its processing speed reaches 74.85 fps, demonstrating good real-time performance.

Introduction

Motion target tracking (Lu & Xu, 2019; Wang et al., 2021) is one of the most active research areas in computer vision. With continuous improvements in hardware and the rapid development of artificial intelligence, motion target tracking is widely used in intelligent video surveillance (Zeng et al., 2020), human-computer interaction (Zhou & Liu, 2021), medical diagnosis (Al-Battal et al., 2021) and other fields. In intelligent video surveillance, target tracking is commonly used to monitor vehicle violations and has proved effective. In medical diagnosis, tracking is frequently used to follow microscopic objects such as cells. In human-computer interaction, tracking is mostly used in robot vision and virtual environments, where visual techniques provide a tracking effect similar to that of human eyes. Target tracking has developed tremendously over the past two decades. However, tracking is often limited by complex application environments, such as illumination changes, cluttered backgrounds, scale changes of the target itself, and occlusion by other objects. Therefore, improving the precision and robustness of tracking algorithms in complex environments while satisfying real-time requirements has become an important research topic in visual target tracking.

Nowadays, mainstream target tracking algorithms fall into two categories. The first is based on correlation filtering (Wei & Kang, 2017; Meng & Li, 2019): it establishes a correlation filter, finds the maximum correlation response between two adjacent frames, and thereby locks onto the target. Compared with earlier tracking algorithms based on optical flow (Xiao et al., 2016) and feature matching (Uzkent et al., 2015), this category is fast and robust to target occlusion, illumination change and motion blur (Liu et al., 2017). The second is based on deep learning (Li, Li & Porikli, 2016): convolutional neural networks are trained to extract object features in the previous frame and match the object in the next frame, so the object is tracked continually as the network is trained. On the one hand, correlation filter trackers are inferior to deep learning trackers in complex scenarios such as target occlusion, out-of-view and scale variation. On the other hand, deep learning trackers are slower. Therefore, finding a solution that both tracks accurately in a variety of complex scenarios and runs fast remains an active research area. In this work, a target tracking algorithm based on an adaptive occlusion judgment and model updating strategy, called Aojmus, is proposed to address the poor tracking performance in the complex scenarios mentioned above. Aojmus is built on the KCF algorithm and therefore retains the processing-speed advantage of correlation filtering.

The contributions of this work are threefold:

1. We fuse CN and HOG features as the feature representation of the tracked target, which improves its discrimination and re-detection ability. Meanwhile, a scale filter is introduced to remedy the poor tracking precision caused by changes in target scale.

2. We design four occlusion judgment indicators to prevent tracking failure caused by occlusion. These four indicators adaptively judge the occlusion of the target during tracking. A double-threshold mechanism is introduced to judge the degree of occlusion, which determines the update strategy of the tracker.

3. We use a weighted fusion strategy to fuse the results of the occlusion judgments so that the model update rate of the tracker changes dynamically with the judgment results of each frame, avoiding the tracking drift caused by the fixed model update rate used in most trackers.

The rest of this article is organized as follows. In “Literature Review”, related work on target tracking is surveyed. In “Preliminaries”, some prerequisites of the methodology are introduced. In “Methodology”, we describe the architecture of the proposed algorithm, including statistical analysis, algorithm design and related parameter settings. In “Experiments and analysis”, we compare and analyze the performance of the algorithm quantitatively and qualitatively. In “Conclusions”, we summarize this study and discuss possible future work.

Literature review

Bolme et al. (2010) were the first to apply correlation filtering to target tracking and proposed the MOSSE algorithm, which achieves a tracking speed of 669 fps but a relatively low precision of 43.1%. To address the insufficient training samples of MOSSE, Henriques et al. (2012) proposed the CSK algorithm, which acquires a large number of samples through cyclic shifts. Moreover, CSK reduces the computational complexity by processing in the frequency domain, and thereby obtains a robust and accurate filter. Subsequently, Henriques et al. (2015) proposed the kernelized correlation filter (KCF) tracking algorithm on the basis of CSK, which uses histogram of oriented gradients (HOG) features instead of grayscale features and exploits the circulant matrix to reduce the computational effort. The algorithm also incorporates multi-channel data while remaining fast enough to meet the real-time requirement of tracking. Inspired by the scale pooling technique, Yang & Zhu (2014) and Danelljan et al. (2014a) proposed the SAMF and DSST algorithms respectively, which address the scale adaptation problem of KCF. SAMF fused HOG and color-naming (CN) (Danelljan et al., 2014b) features on the basis of KCF for the first time, which improved tracking precision but significantly reduced speed. DSST also achieved scale adaptation, but its overall performance is inferior. Danelljan et al. (2017a) used a convolutional neural network (CNN) to extract deep features for the target's feature model while keeping the motion model (circulant matrix) and observation model (correlation filter) unchanged, achieving a significant increase in precision and success rate.
The research interest in correlation filtering-based target tracking has declined because the precision is difficult to improve further when dealing with target occlusion, disappearance, or non-rigid object tracking. In recent years, some representative studies have still emerged, such as ASRCF (Dai et al., 2019), ARCF (Huang et al., 2019), and PRCF (Sun et al., 2019).

Another class of target tracking algorithms is based on deep learning (Li, Li & Porikli, 2016); these algorithms use convolutional neural networks for feature extraction and target classification. Some of them combine correlation filtering with deep learning, such as HCF (Ma et al., 2015). The MDNet algorithm proposed by Nam & Han (2016) was one of the early algorithms that used deep learning alone for target tracking. It trains each domain separately while updating the parameters of the shared layers during training, so that these parameters adapt to all datasets. During tracking, MDNet uses the pre-trained CNN to locate the target. Similar algorithms include SiamDW (Zhang & Peng, 2019), SiamCAR (Guo et al., 2020) and HiFT (Cao et al., 2021). Despite their superior tracking precision, deep learning-based tracking algorithms still suffer from insufficient initial training samples and slow tracking speed.

Preliminaries

In this section, to establish an understanding of the essential elements of the proposed methodology, the kernelized correlation filter (KCF), classifier training, fast detection and model updating are reviewed first.

Kernelized correlation filter

The core idea of the kernelized correlation filter (KCF) algorithm is to measure how well a candidate region matches the target with a kernel function built on ridge regression. By moving the expensive computation to the frequency domain with the fast Fourier transform, it achieves fast target tracking. Like most discriminative tracking algorithms, KCF alternates between filter model training and target detection. It first trains a model at the initial position of the target, then searches for the target in the prediction region of the next frame, using a Gaussian kernel to compute the correlation between the two adjacent frames, and determines the target position from the maximum response value in the region. The basic principles of KCF, namely classifier training, fast detection and model updating, are described below.

Classifier training

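The classifier is obtained by training a ridge regression. Let $\{(x_i, y_i)\}$ be the training set, where $y_i$ is the regression target corresponding to sample $x_i$. Ridge regression on the training samples yields the linear regression function $f(x) = w^{T}x$. To prevent overfitting, the classifier is regularized as follows:

$$\min_{w} \sum_{i} \left( f(x_i) - y_i \right)^{2} + \lambda \left\| w \right\|^{2} \tag{1}$$

where $w$ is the classifier parameter and $\lambda$ is the regularization parameter. The closed-form solution of the above equation is:

$$w = \left( X^{T}X + \lambda I \right)^{-1} X^{T} y \tag{2}$$

where each row of $X$ is a training sample and $y$ collects the regression targets.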

When the circulant matrix is used to generate a large number of target and background samples, the samples are generally not linearly separable in the original feature space. Therefore, the Gaussian kernel function is introduced to map the samples into a high-dimensional space in which linear regression can be applied, so that $w = \sum_{i} \alpha_i \varphi(x_i)$, where $\varphi(\cdot)$ is the nonlinear mapping induced by the kernel. The solution for $w$ is thus transformed into the solution for the coefficients $\alpha$, which yields:

$$\alpha = \left( K + \lambda I \right)^{-1} y \tag{3}$$

where $K$ is the kernel correlation matrix with elements $K_{ij} = \kappa(x_i, x_j)$. To reduce the computational complexity, Eq. (3) is transformed into the frequency domain with the discrete Fourier transform (DFT), and the solution becomes:

$$\hat{\alpha} = \frac{\hat{y}}{\hat{k}^{xx} + \lambda} \tag{4}$$

The purpose of classifier training is to solve for the weight coefficient $\alpha$, where $k^{xx}$ is the first row of the kernel circulant matrix $K$, and $\hat{\cdot}$ denotes the DFT of a vector.
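To make Eq. (4) concrete, here is a minimal 1-D sketch in pure Python; it is an illustration, not the authors' implementation. It assumes a single-channel toy signal `x`, a Gaussian regression target `y` peaked at shift 0, and illustrative values σ = 0.5 and λ = 1e-4. The helper names (`dft`, `gaussian_correlation`, `train`) are hypothetical, and normalizing the kernel exponent by the number of samples is an assumption of this sketch. A naive O(n²) DFT keeps the example dependency-free; a real tracker would use an FFT on 2-D multi-channel features.

```python
import cmath
import math
import random


def dft(x):
    """Naive O(n^2) discrete Fourier transform (toy sizes only)."""
    n = len(x)
    return [sum(x[m] * cmath.exp(-2j * math.pi * k * m / n) for m in range(n))
            for k in range(n)]


def idft(X):
    """Inverse of dft()."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * m / n) for k in range(n)) / n
            for m in range(n)]


def gaussian_correlation(x, z, sigma):
    """Gaussian kernel between x and every cyclic shift of z (the vector k^{xz})."""
    n = len(x)
    # Circular cross-correlation: cross[s] = sum_m x[m] * z[(m + s) % n].
    cross = idft([a.conjugate() * b for a, b in zip(dft(x), dft(z))])
    xx = sum(v * v for v in x)
    zz = sum(v * v for v in z)
    # ||x - shift_s(z)||^2 = xx + zz - 2 * cross[s], normalized by n (assumption).
    return [math.exp(-max(0.0, xx + zz - 2.0 * c.real) / (sigma ** 2 * n)) for c in cross]


def gaussian_label(n, sigma_y):
    """Regression target y: Gaussian peak at shift 0, using cyclic distance."""
    return [math.exp(-0.5 * (min(s, n - s) / sigma_y) ** 2) for s in range(n)]


def train(x, y, sigma=0.5, lam=1e-4):
    """Eq. (4): alpha_hat = y_hat / (k_hat^{xx} + lambda), element-wise."""
    k_hat = dft(gaussian_correlation(x, x, sigma))
    return [yk / (kk + lam) for yk, kk in zip(dft(y), k_hat)]


rng = random.Random(0)
n = 64
x = [rng.gauss(0.0, 1.0) for _ in range(n)]  # toy 1-D "feature" of the target
y = gaussian_label(n, sigma_y=2.0)
alpha_hat = train(x, y)

# Sanity check: applying the trained regressor to its own training sample,
# IDFT(k_hat^{xx} * alpha_hat), should approximately reproduce y.
k_hat_xx = dft(gaussian_correlation(x, x, 0.5))
fit = [v.real for v in idft([k * a for k, a in zip(k_hat_xx, alpha_hat)])]
print("peak at shift", max(range(n), key=fit.__getitem__))
```

Because the division in Eq. (4) is element-wise, training costs a few transforms instead of the matrix inversion in Eq. (3).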

Fast detection and model updating

After classifier training, to locate the target in the current frame, the KCF algorithm uses the target position in the previous frame as a template, searches the candidate region of the current frame, and determines the target position by finding the maximum value of the response $f(z)$. To increase the calculation speed, the KCF algorithm computes the response in the frequency domain as follows:

$$f(z) = \mathcal{F}^{-1}\left( \hat{k}^{xz} \odot \hat{\alpha} \right) \tag{5}$$

where $k^{xz}$ denotes the kernel correlation between the target sample $x$ and the candidate detection region $z$, $\mathcal{F}^{-1}$ denotes the inverse DFT, $\odot$ denotes element-wise multiplication, and $f(z)$ represents the response distribution in the candidate region; the position of its maximum value indicates the actual position of the target in the current frame.
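A minimal 1-D sketch of Eq. (5) in pure Python, under toy assumptions: the template `x` is random, the candidate `z` is the same signal cyclically shifted by a known 5 samples, and σ = 0.5, λ = 1e-4 are illustrative values rather than settings from this article. The hypothetical helpers are repeated so the block runs on its own. If detection works, the response peak should land exactly at the shift.

```python
import cmath
import math
import random


def dft(x):
    """Naive O(n^2) DFT (toy sizes only)."""
    n = len(x)
    return [sum(x[m] * cmath.exp(-2j * math.pi * k * m / n) for m in range(n))
            for k in range(n)]


def idft(X):
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * m / n) for k in range(n)) / n
            for m in range(n)]


def gaussian_correlation(x, z, sigma):
    """k^{xz}: Gaussian kernel between x and each cyclic shift of z."""
    n = len(x)
    cross = idft([a.conjugate() * b for a, b in zip(dft(x), dft(z))])
    xx, zz = sum(v * v for v in x), sum(v * v for v in z)
    return [math.exp(-max(0.0, xx + zz - 2.0 * c.real) / (sigma ** 2 * n)) for c in cross]


def train(x, y, sigma, lam):
    """Eq. (4): alpha_hat = y_hat / (k_hat^{xx} + lambda)."""
    k_hat = dft(gaussian_correlation(x, x, sigma))
    return [yk / (kk + lam) for yk, kk in zip(dft(y), k_hat)]


def detect(alpha_hat, x, z, sigma):
    """Eq. (5): f(z) = IDFT(k_hat^{xz} * alpha_hat); returns the real response."""
    k_hat = dft(gaussian_correlation(x, z, sigma))
    return [v.real for v in idft([k * a for k, a in zip(k_hat, alpha_hat)])]


n, sigma, lam = 64, 0.5, 1e-4
rng = random.Random(1)
x = [rng.gauss(0.0, 1.0) for _ in range(n)]                          # template
y = [math.exp(-0.5 * (min(s, n - s) / 2.0) ** 2) for s in range(n)]  # label peaked at 0
alpha_hat = train(x, y, sigma, lam)

true_shift = 5
z = [x[(m - true_shift) % n] for m in range(n)]  # target moved 5 samples to the right
response = detect(alpha_hat, x, z, sigma)
estimated_shift = max(range(n), key=response.__getitem__)
print("estimated shift:", estimated_shift)
```

The whole search over all cyclic shifts is one element-wise product in the frequency domain, which is where KCF gets its speed.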

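To keep the model up to date across the frames of a video sequence, the KCF algorithm updates the filter template and the target feature template by linear interpolation:

$$\hat{\alpha}_{t} = (1 - \eta)\,\hat{\alpha}_{t-1} + \eta\,\hat{\alpha}, \qquad \hat{x}_{t} = (1 - \eta)\,\hat{x}_{t-1} + \eta\,\hat{x} \tag{6}$$

where $\eta$ denotes the model update rate, $t$ is the frame index (time stamp), and $\hat{\alpha}$ and $\hat{x}$ are the filter and feature templates computed from the current frame.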
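Eq. (6) is an exponential moving average of the templates. A minimal sketch, assuming templates are stored as flat lists of (possibly complex) coefficients; the function name `interpolate` and the η values are illustrative only.

```python
def interpolate(previous, current, eta):
    """Eq. (6): template_t = (1 - eta) * template_{t-1} + eta * template_current."""
    if not 0.0 <= eta <= 1.0:
        raise ValueError("update rate eta must lie in [0, 1]")
    return [(1.0 - eta) * p + eta * c for p, c in zip(previous, current)]


# One update step with an illustrative eta of 0.25.
one_step = interpolate([1.0, 0.0], [0.0, 1.0], eta=0.25)

# Repeated updates: the weight of the initial template decays as (1 - eta)^k.
template = [1.0]
for _ in range(3):
    template = interpolate(template, [0.0], eta=0.5)
print(one_step, template)
```

Because old information decays geometrically, features learned from an occluder keep influencing the model for on the order of 1/η frames, which is the drift mechanism addressed in the Methodology section.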

Methodology

Target occlusion, scale variation and illumination variation all degrade tracking performance, so addressing these problems is of great significance. The KCF algorithm increases the number of training samples through the circulant matrix, which in turn improves tracking accuracy. Meanwhile, by moving the computation to the frequency domain, it avoids matrix inversion and greatly reduces the computational cost.

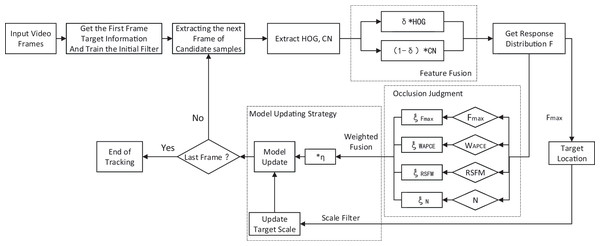

However, the KCF algorithm often fails when the target is occluded or lost, because the model update learns the features of the occluding object; as these wrong features accumulate over subsequent frames, tracking eventually fails. In addition, the tracking box of the KCF algorithm cannot adapt to scale variation of the target, which can also greatly reduce tracking precision. To address these problems, this article proposes a target tracking algorithm, called Aojmus, based on an adaptive occlusion judgment and model updating strategy. The flow chart of the algorithm is shown in Fig. 1.

Figure 1: Flow chart of Aojmus algorithm.

In this section, we describe the specific implementation of Aojmus, including feature fusion, scale estimation, occlusion judgment and model updating.

Feature fusion design

HOG features effectively depict the local contour and shape information of the target and are very robust to illumination changes, but adapt poorly to target deformation and fast motion. CN features represent the global color information of the target well and are very stable under target deformation and fast motion, but are sensitive to illumination and color changes. Therefore, we linearly fuse the HOG and CN features (Xie & Zhao, 2021) so that they complement each other and improve tracking precision.

The linear weighted fusion of the two feature vectors is:

$$F_{\mathrm{fuse}} = \mu F_{\mathrm{HOG}} + (1 - \mu) F_{\mathrm{CN}} \tag{7}$$

where $F_{\mathrm{HOG}}$, $F_{\mathrm{CN}}$ and $F_{\mathrm{fuse}}$ represent the HOG feature, the CN feature and the fused feature respectively, and $\mu$ is the weighting coefficient of the feature fusion. In this article, $\mu$ is set so that the advantages of both HOG and CN features can be fully exploited.
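A minimal sketch of Eq. (7), assuming the HOG and CN feature maps have already been brought to the same shape and flattened to lists. In practice the two descriptors have different channel counts, so some resampling or stacking step is needed first; that step is not specified here. The function name and μ = 0.5 are illustrative only.

```python
def fuse_features(f_hog, f_cn, mu):
    """Eq. (7): F_fuse = mu * F_HOG + (1 - mu) * F_CN, element-wise."""
    if len(f_hog) != len(f_cn):
        raise ValueError("HOG and CN features must have the same length")
    if not 0.0 <= mu <= 1.0:
        raise ValueError("mu must lie in [0, 1]")
    return [mu * h + (1.0 - mu) * c for h, c in zip(f_hog, f_cn)]


fused = fuse_features([1.0, 2.0, 0.0], [3.0, 4.0, 2.0], mu=0.5)
print(fused)
```

Keeping μ in [0, 1] makes the fused feature a convex combination, so μ = 1 falls back to pure HOG and μ = 0 to pure CN.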

Multi-scale estimation

Scale variation of the target is one of the important factors affecting tracking results. Because the change in position between two consecutive frames is usually larger than the change in scale, this article follows DSST (Danelljan et al., 2014a): it first uses a two-dimensional position filter to determine the target position and then estimates the scale by training a one-dimensional scale filter.

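Let $f$ be the training sample, with feature channels $f^{l}$, $l = 1, \dots, d$, and let $h$ be the optimal correlation filter. The filter is obtained by minimizing the following ridge-regression cost:

$$\varepsilon = \left\| \sum_{l=1}^{d} h^{l} \star f^{l} - g \right\|^{2} + \lambda \sum_{l=1}^{d} \left\| h^{l} \right\|^{2} \tag{8}$$

where $d$ is the feature dimension, $\star$ denotes circular correlation, $g$ represents the regression target corresponding to the training sample $f$, and $\lambda$ is the regularization factor. Solving the above equation in the Fourier domain gives the scale filter:

$$H^{l} = \frac{\bar{G} F^{l}}{\sum_{k=1}^{d} \bar{F}^{k} F^{k} + \lambda} \tag{9}$$

where capital letters denote DFTs, $\bar{G}$ represents the complex conjugate of the DFT of the correlation output, and $\lambda$ is introduced to avoid division by zero at frequencies where all $F^{k}$ vanish. Applying the filter to the image block $z$ extracted in a new frame gives the response of the scale filter:

$$y = \mathcal{F}^{-1}\left\{ \sum_{l=1}^{d} \bar{H}^{l} Z^{l} \right\} \tag{10}$$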
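Eqs. (9) and (10) can be illustrated with a toy multi-channel 1-D scale filter in pure Python. This is a sketch under stated assumptions, not the article's code: `f` holds d = 8 random feature channels over S = 17 scale samples, the desired output g is a Gaussian peaked at the middle scale, λ = 0.01, and the helper names are hypothetical. Shifting the test sample by one scale step should move the response peak by exactly one index.

```python
import cmath
import math
import random


def dft(x):
    """Naive O(n^2) DFT (toy sizes only)."""
    n = len(x)
    return [sum(x[m] * cmath.exp(-2j * math.pi * k * m / n) for m in range(n))
            for k in range(n)]


def idft(X):
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * m / n) for k in range(n)) / n
            for m in range(n)]


def train_scale_filter(f, g, lam):
    """Eq. (9): H^l = conj(G) F^l / (sum_k conj(F^k) F^k + lambda) for every channel l."""
    F = [dft(channel) for channel in f]
    G = dft(g)
    S = len(g)
    denom = [sum(abs(Fk[i]) ** 2 for Fk in F) + lam for i in range(S)]
    return [[G[i].conjugate() * Fl[i] / denom[i] for i in range(S)] for Fl in F]


def scale_response(H, z):
    """Eq. (10): y = IDFT(sum_l conj(H^l) Z^l)."""
    Z = [dft(channel) for channel in z]
    S = len(z[0])
    summed = [sum(Hl[i].conjugate() * Zl[i] for Hl, Zl in zip(H, Z)) for i in range(S)]
    return [v.real for v in idft(summed)]


S, d, lam = 17, 8, 1e-2
centre = S // 2
rng = random.Random(2)
f = [[rng.gauss(0.0, 1.0) for _ in range(S)] for _ in range(d)]      # training sample
g = [math.exp(-0.5 * ((i - centre) / 1.0) ** 2) for i in range(S)]  # desired output
H = train_scale_filter(f, g, lam)

y_same = scale_response(H, f)                           # same sample: peak at centre
z = [[ch[(i - 1) % S] for i in range(S)] for ch in f]   # features moved by one scale step
y_shift = scale_response(H, z)
print(max(range(S), key=y_same.__getitem__), max(range(S), key=y_shift.__getitem__))
```

The denominator of Eq. (9) is shared by all channels, so the filter needs only one division per frequency regardless of d.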

The response of the scale filter is thus computed from Eq. (10), and the new scale estimate is the scale at which this response attains its maximum. The sizes of the target samples used for scale estimation are selected as follows:

$$a^{n}P \times a^{n}R, \qquad n \in \left\{ \left\lfloor -\frac{S-1}{2} \right\rfloor, \dots, \left\lfloor \frac{S-1}{2} \right\rfloor \right\} \tag{11}$$

where $P$ and $R$ are respectively the width and height of the target in the previous frame, $a$ is the scale factor, and $S$ is the length of the scale filter.
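Eq. (11) enumerates a geometric ladder of sample sizes around the previous target size. A minimal sketch with illustrative values: a 40 × 60 target, a = 1.02 and S = 33 (settings commonly used with DSST; the values used by Aojmus are not given here). The function name is hypothetical.

```python
import math


def scale_sample_sizes(P, R, a, S):
    """Eq. (11): sizes a^n P x a^n R for n = floor(-(S-1)/2), ..., floor((S-1)/2)."""
    half = (S - 1) / 2
    exponents = range(math.floor(-half), math.floor(half) + 1)
    return [(a ** n * P, a ** n * R) for n in exponents]


sizes = scale_sample_sizes(40, 60, a=1.02, S=33)
print(len(sizes), sizes[0], sizes[len(sizes) // 2], sizes[-1])
```

The middle entry (n = 0) is the previous size itself, and the floor in Eq. (11) still yields exactly S sizes when S is even.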

Adaptive occlusion judgment and model updating strategy

Target occlusion often occurs during tracking. In this section, we analyze it in detail and propose an adaptive judgment method. Taking the FaceOcc1 image sequence of the OTB-2015 dataset (Wu, Lim & Yang, 2015) as an example, the response under occlusion is analyzed with the original model update rate of the KCF algorithm.

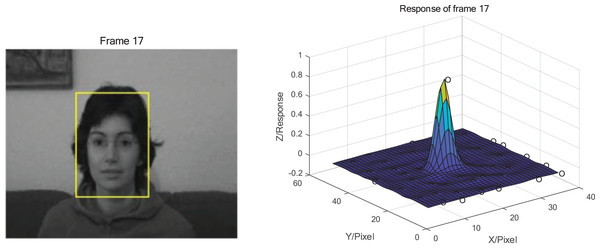

As the response distribution in Fig. 2 shows, without occlusion the main peak dominates the response in the target box and there are no other obvious peaks; the maximum peak response is close to 1. When occlusion exists, as shown in Fig. 3, the maximum peak response decreases significantly, and the other peaks increase and become more prominent. This suggests that, under a fixed model update strategy, the model learns the features of the occluder and applies them when searching the next frame, which produces peaks besides the main peak. Hence, the presence of occlusion can be determined from the response distribution of the target.

Figure 2: Tracking results and response distribution at frame 17 of the FaceOcc1 sequence without occlusion.

Photo credit: Visual Tracker Benchmark.

Figure 3: Tracking results and response distribution when occlusion appears at frame 91 of the FaceOcc1 sequence.

Photo credit: Visual Tracker Benchmark.

Let $F_{\max}$ be the maximum peak response in each frame. The number of peak response points whose value exceeds a certain proportion of the maximum peak response, denoted as $N$, can be expressed as:

$$N = \left| \left\{ F_{p} \in S : F_{p} > \theta F_{\max} \right\} \right| \tag{12}$$

where $F_{p}$ denotes a peak response other than the maximum peak, $S$ represents the target area, and $\theta$ is a proportionality coefficient, which is set to 0.1 in this article.
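A minimal sketch of Eq. (12), assuming the response map is a 2-D list, that the target area S is the whole map, and that a "peak" is a strict local maximum over its 8 neighbours. The article does not specify the peak detector, so that definition is an assumption. θ = 0.1 follows the text; the function names and the toy map are hypothetical.

```python
def local_peaks(resp):
    """Return (value, row, col) for every strict local maximum (8-neighbourhood)."""
    rows, cols = len(resp), len(resp[0])
    peaks = []
    for i in range(rows):
        for j in range(cols):
            v = resp[i][j]
            neighbours = [resp[a][b]
                          for a in range(max(0, i - 1), min(rows, i + 2))
                          for b in range(max(0, j - 1), min(cols, j + 2))
                          if (a, b) != (i, j)]
            if all(v > u for u in neighbours):
                peaks.append((v, i, j))
    return peaks


def count_secondary_peaks(resp, theta=0.1):
    """Eq. (12): number of peaks, other than the main one, above theta * F_max."""
    peaks = sorted(local_peaks(resp), reverse=True)
    if not peaks:
        return 0
    f_max = peaks[0][0]
    return sum(1 for v, _, _ in peaks[1:] if v > theta * f_max)


resp = [[0.0] * 7 for _ in range(7)]
resp[3][3] = 1.0    # main peak
resp[0][0] = 0.3    # distractor above 0.1 * F_max
resp[6][6] = 0.05   # weak bump below the threshold
print(count_secondary_peaks(resp))
```

Flat regions are not counted because a peak must be strictly higher than every neighbour.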

In addition to $F_{\max}$ and $N$, two further judgment indicators, the average peak-to-correlation energy (APCE) (Wang, Liu & Huang, 2017) and the ratio between the second and first major mode (RSFM) (Lukevic et al., 2017), are introduced in this article to make the occlusion judgment robust.

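The APCE reflects the fluctuation of the response map and changes significantly when the target is occluded. It is defined as:

$$\mathrm{APCE} = \frac{\left| F_{\max} - F_{\min} \right|^{2}}{\operatorname{mean}\left( \sum_{w,h} \left( F_{w,h} - F_{\min} \right)^{2} \right)} \tag{13}$$

where $F_{\min}$ denotes the minimum value of the response, and $F_{w,h}$ denotes the response value of the pixel in the $w$-th row and $h$-th column. When occlusion appears, the value of APCE decreases significantly.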
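A minimal sketch of Eq. (13), reading the mean in the denominator as the average over all pixels of the response map. The 5 × 5 maps are made-up examples: one with a single dominant peak and one with a strong secondary peak such as an occluder might cause. The function name is hypothetical.

```python
def apce(resp):
    """Eq. (13): |F_max - F_min|^2 / mean((F_{w,h} - F_min)^2) over the response map."""
    values = [v for row in resp for v in row]
    f_max, f_min = max(values), min(values)
    mean_sq = sum((v - f_min) ** 2 for v in values) / len(values)
    if mean_sq == 0.0:  # flat map: no informative peak at all
        return 0.0
    return (f_max - f_min) ** 2 / mean_sq


sharp = [[0.0] * 5 for _ in range(5)]
sharp[2][2] = 1.0                      # single dominant peak
cluttered = [row[:] for row in sharp]
cluttered[0][4] = 0.8                  # strong secondary peak
print(apce(sharp), apce(cluttered))
```

The secondary peak inflates the denominator while the numerator stays the same, so the APCE drops, matching the behaviour described for occlusion.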

The RSFM reflects how prominent the main peak of the response map is and is defined as follows:

$$\mathrm{RSFM} = 1 - \min\left( \frac{F_{\mathrm{sec}}}{F_{\max}}, \frac{1}{2} \right) \tag{14}$$

where $F_{\mathrm{sec}}$ represents the response value of the second peak. The larger the RSFM, the more prominent the main peak and the more reliable the tracking, and vice versa.
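A minimal sketch of the RSFM, assuming the form 1 - min(F_sec / F_max, 1/2), which saturates at 0.5 when the second peak becomes strong, as described for severe occlusion. Peaks are taken to be strict local maxima over the 8-neighbourhood, which is an assumption of this sketch; the function names and test maps are hypothetical.

```python
def local_peak_values(resp):
    """Values of all strict local maxima (8-neighbourhood) of a 2-D response map."""
    rows, cols = len(resp), len(resp[0])
    values = []
    for i in range(rows):
        for j in range(cols):
            neighbours = [resp[a][b]
                          for a in range(max(0, i - 1), min(rows, i + 2))
                          for b in range(max(0, j - 1), min(cols, j + 2))
                          if (a, b) != (i, j)]
            if all(resp[i][j] > u for u in neighbours):
                values.append(resp[i][j])
    return values


def rsfm(resp):
    """RSFM = 1 - min(F_sec / F_max, 1/2); 1 means a single clean peak, 0.5 is the floor."""
    peaks = sorted(local_peak_values(resp), reverse=True)
    if not peaks or peaks[0] <= 0:
        raise ValueError("response map needs a positive main peak")
    f_max = peaks[0]
    f_sec = peaks[1] if len(peaks) > 1 else 0.0
    return 1.0 - min(f_sec / f_max, 0.5)


def make_map(second_peak):
    """Toy 5 x 5 response with a main peak and an optional secondary peak."""
    resp = [[0.0] * 5 for _ in range(5)]
    resp[2][2] = 1.0            # main peak
    resp[0][0] = second_peak    # secondary peak (0.0 means none)
    return resp


print(rsfm(make_map(0.0)), rsfm(make_map(0.3)), rsfm(make_map(0.9)))
```

The clamp at 1/2 bounds the indicator to [0.5, 1], which is why a fixed lower threshold of 0.5 is a natural choice for it.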

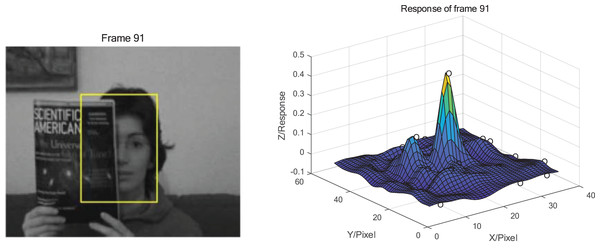

In this article, the four evaluation indicators above, $F_{\max}$, $N$, APCE and RSFM, are used to determine whether the target is occluded. To verify them, we test on the first 200 frames of the FaceOcc1 sequence. The four indicators are calculated for each frame, and their cumulative averages are shown in Fig. 4.

Figure 4: Line graphs of $F_{\max}$, APCE, RSFM and $N$ for the first 200 frames of FaceOcc1.

Photo credit: Visual Tracker Benchmark.

As shown in Fig. 4, the evaluation indicators fluctuate significantly between frames 85 and 100: $F_{\max}$, APCE and RSFM are relatively low whereas $N$ is relatively high, which indicates severe occlusion. Comparison with the frames in which occlusion appears in the original video sequence shows that the four indicators are well suited to occlusion judgment, as they complement one another to some extent.

To determine accurately whether occlusion exists and how severe it is, this article performs adaptive evaluation with dynamic double thresholds. The thresholds are set from the average value of each evaluation indicator over the previous frames, namely:

$$T_{k}^{(j)} = \omega_{k}^{(j)} \cdot \frac{1}{t-1} \sum_{i=1}^{t-1} V_{k}(i), \qquad j = 1, 2 \tag{15}$$

where $V_{k}(i)$ is the value of the $k$-th evaluation indicator in frame $i$, $\omega_{k}^{(j)}$ denotes the weighting coefficient, and $T_{k}^{(j)}$ denotes the $j$-th threshold of the corresponding indicator; every indicator thus has two thresholds. The weighting coefficients of the evaluation indicators, obtained by analyzing the cumulative average curves in Fig. 4, are listed in Table 1.

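Table 1: Weighting coefficients of the evaluation indicators.

| Indicator | $\omega^{(1)}$ | $\omega^{(2)}$ |
|---|---|---|
| $F_{\max}$ | 1 | 0.85 |
| APCE | 1 | 0.7 |
| RSFM | 1 | — |
| $N$ | 1 | — |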

As Eq. (14) shows, when the current frame is severely occluded, the second peak rises sharply and the RSFM saturates at 0.5; for this case, the lower threshold of RSFM in Table 1 is fixed at 0.5. Because $N$ is usually less sensitive to occlusion, it is given a single threshold for simplicity.
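A sketch of the dynamic double-threshold judgment of Eq. (15). It assumes the thresholds are the coefficients times the mean of each indicator over all previous frames, that F_max, APCE and RSFM signal occlusion by falling while N signals it by rising, and that the rows of Table 1 correspond to F_max, APCE, RSFM and N in that order. The three-level output (none / partial / severe), the mapping of N's single threshold onto it, and all names are hypothetical.

```python
# Weighting coefficients (omega_1, omega_2) per indicator. ASSUMPTION: the rows of
# Table 1 correspond to F_max, APCE, RSFM and N, in that order.
TABLE_1 = {
    "F_max": (1.0, 0.85),
    "APCE": (1.0, 0.7),
    "RSFM": (1.0, None),   # lower threshold fixed at 0.5 instead
    "N": (1.0, None),      # single threshold
}
RSFM_LOWER_LIMIT = 0.5
NO_OCCLUSION, PARTIAL, SEVERE = 0, 1, 2


def occlusion_level(name, value, history):
    """Judge one indicator against thresholds built from its mean over earlier frames."""
    if not history:
        return NO_OCCLUSION  # no statistics yet; an assumption of this sketch
    mean = sum(history) / len(history)
    omega1, omega2 = TABLE_1[name]
    upper = omega1 * mean
    if name == "N":
        # N rises under occlusion; its single threshold is mapped here to
        # "no occlusion" or "severe" (hypothetical mapping).
        return SEVERE if value > upper else NO_OCCLUSION
    # F_max, APCE and RSFM fall under occlusion.
    lower = RSFM_LOWER_LIMIT if name == "RSFM" else omega2 * mean
    if value >= upper:
        return NO_OCCLUSION
    if value > lower:
        return PARTIAL
    return SEVERE


fmax_levels = [occlusion_level("F_max", v, [1.0, 1.0, 1.0]) for v in (1.0, 0.9, 0.5)]
rsfm_levels = [occlusion_level("RSFM", v, [0.9, 0.9]) for v in (0.95, 0.7, 0.5)]
n_levels = [occlusion_level("N", v, [2, 2]) for v in (5, 1)]
print(fmax_levels, rsfm_levels, n_levels)
```

Because the thresholds track each indicator's own running mean, the same coefficients can serve sequences whose typical response levels differ.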

When occlusion appears, a fixed model update rate causes the tracker to learn the information of the occluding object, which leads to tracking drift. In this article, Aojmus adaptively generates a suitable model update rate according to the degree of occlusion in the current frame. When occlusion occurs, the model update rate is appropriately increased so that the model fully learns the information of the unoccluded part of the target inside the tracking box, which enhances the model's recognition capability and supports accurate localization in the next frame. The update strategy is defined as:

$$\xi_{k} = \begin{cases} \xi_{k}^{(0)}, & \text{no occlusion} \\ \xi_{k}^{(1)}, & \text{partial occlusion} \\ \xi_{k}^{(2)}, & \text{severe occlusion} \end{cases} \tag{16}$$

where $\xi_{k}$, $k = 1, \dots, 4$, represent the output values of the occlusion judgment by the four indicators, with the occlusion state determined by comparing each indicator with its thresholds in Eq. (15). These values were determined experimentally and are listed in Table 2.

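Table 2: Output values of the occlusion judgment.

| Indicator | No occlusion | Partial occlusion | Severe occlusion |
|---|---|---|---|
| $F_{\max}$ | 1 | 1.2 | 1.5 |
| APCE | 1 | 0.8 | 0.5 |
| RSFM | 1 | 1.2 | 1.5 |
| $N$ | 1.2 | — | 1 |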

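The improved algorithm thus produces four judgment results. To ensure that the final update rate accurately reflects the degree of occlusion, this article fuses the four outputs by weighting to obtain the final model update rate:

$$\eta_{t} = \eta \sum_{k=1}^{4} w_{k}\,\xi_{k} \tag{17}$$

where $\xi_{k}$ is the output value of the occlusion judgment by the $k$-th indicator (Table 2), $w_{k}$ is its fusion weight, and $\eta$ is the initial model update rate. Finally, substituting the final model update rate into Eq. (6) gives:

$$\hat{\alpha}_{t} = (1 - \eta_{t})\,\hat{\alpha}_{t-1} + \eta_{t}\,\hat{\alpha}, \qquad \hat{x}_{t} = (1 - \eta_{t})\,\hat{x}_{t-1} + \eta_{t}\,\hat{x} \tag{18}$$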
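A sketch of the adaptive update, assuming the final rate has the form η_t = η · Σ w_k ξ_k. The equal weights, the base rate η = 0.02 and the assignment of the ξ values 1.0 and 1.5 (both of which appear in Table 2) to "calm" and "occluded" frames are hypothetical; the clip of η_t to [0, 1] is a safeguard added in this sketch so that Eq. (18) stays a convex combination.

```python
def fused_update_rate(eta0, xis, weights):
    """Assumed form of Eq. (17): eta_t = eta0 * sum_k w_k * xi_k, clipped to [0, 1]."""
    if len(xis) != len(weights):
        raise ValueError("need one weight per indicator")
    eta_t = eta0 * sum(w * xi for w, xi in zip(weights, xis))
    return min(max(eta_t, 0.0), 1.0)  # safety clip added in this sketch


def adaptive_update(prev_template, new_template, eta_t):
    """Eq. (18): Eq. (6) with the fused rate eta_t in place of a fixed eta."""
    return [(1.0 - eta_t) * p + eta_t * n for p, n in zip(prev_template, new_template)]


weights = [0.25, 0.25, 0.25, 0.25]  # hypothetical equal weights
eta0 = 0.02                         # hypothetical base update rate
calm = fused_update_rate(eta0, [1.0, 1.0, 1.0, 1.0], weights)
occluded = fused_update_rate(eta0, [1.5, 1.5, 1.5, 1.5], weights)
template = adaptive_update([1.0, 1.0], [0.0, 2.0], occluded)
print(calm, occluded, template)
```

With all ξ_k = 1 the tracker reduces to the fixed-rate KCF update, so the fusion only changes behaviour in frames the indicators flag.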

The proposed algorithm, Aojmus, is presented in Algorithm 1.

| Algorithm 1: Aojmus |
|---|
| Input: |
| Current frame $I_t$; the video for tracking $S_{\mathrm{videos}}$; |
| Target position $p_{t-1}$ and scale $s_{t-1}$ of the previous frame; |
| The target feature template $\hat{x}_{t-1}$ and the filter template $\hat{\alpha}_{t-1}$; |
| The scale model of the previous frame. |
| Output: |
| Target position $p_t$ and scale $s_t$ of the current frame; |
| The updated target feature template $\hat{x}_t$ and filter template $\hat{\alpha}_t$; |
| The updated scale model. |
| 1: for each frame $I_t$ in $S_{\mathrm{videos}}$ do |
| 2: Sample the new patch $z_t$ from $I_t$ at $p_{t-1}$; |
| 3: Extract a scale sample $z_{\mathrm{scale}}$ from $I_t$ at $p_t$ and $s_{t-1}$; |
| 4: Extract the HOG and CN features and fuse them with Eq. (7); |
| 5: Calculate the response with Eq. (5), and get $F_{\max}$ at the new target position $p_t$; |
| 6: Calculate $N$, APCE and RSFM with Eqs. (12)–(14), and adaptively judge whether there is occlusion and its degree; |
| 7: Get the occlusion outputs $\xi_k$ and the final update rate $\eta_t$ by Eqs. (16) and (17); |
| 8: Compute the scale correlations $y_{\mathrm{scale}}$ using $z_{\mathrm{scale}}$ and the scale model in Eq. (10); |
| 9: Set $s_t$ to the scale that maximizes $y_{\mathrm{scale}}$; |
| 10: Use Eq. (18) to update $\hat{x}_t$ and $\hat{\alpha}_t$ adaptively; |
| 11: Use Eq. (9) to update the scale model; |
| 12: Return $p_t$ and the updated $\hat{x}_t$, $\hat{\alpha}_t$ and scale model. |
| 13: end for |

Experiments and analysis

In this section, the proposed Aojmus algorithm is compared with four other well-performing tracking algorithms. Three metrics are used to evaluate performance, and representative video sequences are selected to compare and analyze the tracking results.

Experimental environment and parameters

The platform for the experiments in this article is Matlab 2018a, and the hardware environment is a computer with Intel(R) Xeon(R) CPU E5-2620 v3 @ 2.40 GHz and 16 GB RAM. The parameters of the algorithm are set as follows: the regularization parameter and the initial model update rate .

In this article, 66 video sequences provided by OTB-2015 are used for experimental verification. The dataset contains 11 attributes of common scenarios in target tracking, such as occlusion (OCC), deformation (DEF), illumination variation (IV), motion blur (MB), out-of-plane rotation (OPR), fast motion (FM), out-of-view (OV), in-plane rotation (IPR), low resolution (LR), scale variation (SV), and background clutter (BC). The performance evaluations are carried out with quantitative and qualitative analysis.

Experimental comparison and analysis

Quantitative analysis

To evaluate the performance of the proposed algorithm, the high-performing MSCF (Zheng et al., 2021), Staple (Bertinetto et al., 2016), fDSST (Danelljan et al., 2017b) and KCF algorithms were selected for comparison. Three criteria were used: precision (Pr) (Wu, Lim & Yang, 2013), success rate (Sr) (Wu, Lim & Yang, 2013) and tracking speed ($v$). Pr is based on the center location error $d$, the Euclidean distance in pixels between the center $(x_{p}, y_{p})$ of the tracking box in each frame and the ground-truth center $(x_{g}, y_{g})$ in the benchmark:

$$d = \sqrt{\left( x_{p} - x_{g} \right)^{2} + \left( y_{p} - y_{g} \right)^{2}} \tag{19}$$

The errors can be averaged over a sequence to give the mean center error, and Pr is reported as the proportion of frames whose $d$ lies within a location error threshold. The smaller $d$ is, the closer the tracked target center is to the actual location and the better the algorithm performs in terms of precision.
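A minimal sketch of Eq. (19) and the threshold-based precision, using a 20-pixel threshold (the value used for the precision plots in this article). The three frames of centre coordinates are made-up data, and the function names are hypothetical.

```python
import math


def center_errors(pred_centers, gt_centers):
    """Eq. (19): Euclidean distance (pixels) between predicted and ground-truth centres."""
    return [math.dist(p, g) for p, g in zip(pred_centers, gt_centers)]


def precision(pred_centers, gt_centers, threshold=20.0):
    """Fraction of frames whose centre error is within `threshold` pixels."""
    errors = center_errors(pred_centers, gt_centers)
    return sum(e <= threshold for e in errors) / len(errors)


pred = [(100, 100), (103, 104), (130, 140)]
gt = [(100, 100), (100, 100), (100, 100)]
errors = center_errors(pred, gt)
print(errors, sum(errors) / len(errors), precision(pred, gt))
```

The mean error (about 18.3 px here) is dominated by the single 50-pixel failure, which is one reason threshold-based precision is usually reported instead.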

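Let $O$ be the overlap between the tracking box $R_{t}$ in the current frame and the ground-truth box $R_{g}$ in the benchmark:

$$O = \frac{\left| R_{t} \cap R_{g} \right|}{\left| R_{t} \cup R_{g} \right|} \tag{20}$$

where $|\cdot|$ denotes the number of pixels in a region. The success rate $Sr$ is the proportion of frames in the whole video sequence whose overlap $O$ exceeds a given threshold $T_{o}$:

$$Sr = \frac{1}{N_{f}} \sum_{t=1}^{N_{f}} \mathbb{1}\left[ O_{t} > T_{o} \right] \tag{21}$$

where $N_{f}$ is the number of frames and $\mathbb{1}[\cdot]$ is the indicator function. $Sr$ therefore reflects how many frames have a tracking box close to the ground-truth box; the larger $Sr$ is, the better the algorithm performs.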
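A minimal sketch of Eqs. (20) and (21), assuming axis-aligned boxes given as (x, y, width, height) and a 0.5 overlap threshold (the value used for the success plots in this article). The boxes are made-up data and the function names are hypothetical.

```python
def overlap(a, b):
    """Eq. (20): intersection-over-union of boxes given as (x, y, width, height)."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    iw = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    ih = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = iw * ih
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def success_rate(pred_boxes, gt_boxes, threshold=0.5):
    """Eq. (21): fraction of frames whose overlap exceeds `threshold`."""
    overlaps = [overlap(p, g) for p, g in zip(pred_boxes, gt_boxes)]
    return sum(o > threshold for o in overlaps) / len(overlaps)


gt = [(0, 0, 10, 10)] * 3
pred = [(0, 0, 10, 10), (5, 0, 10, 10), (20, 20, 10, 10)]  # IoU = 1, 1/3, 0
print([overlap(p, g) for p, g in zip(pred, gt)], success_rate(pred, gt))
```

A box shifted by half its width already drops to an IoU of 1/3, so the success rate penalizes drift more sharply than the centre-error precision does.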

The tracking speed $v$ is the number of video frames the algorithm processes per second, i.e., the frame rate in FPS (frames per second). It is defined as follows:

$$v = \frac{N_{f}}{T} \tag{22}$$

where $N_{f}$ indicates the total number of frames of the video sequence, and $T$ is the time the algorithm takes to process the whole video sequence.

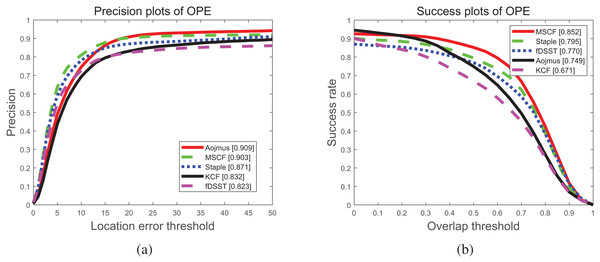

To evaluate the performance of Aojmus, we compare it with the other four algorithms, MSCF, Staple, fDSST and KCF, on OTB-2015, as shown in Figs. 5 and 6 and Tables 3–5. The evaluation uses one-pass evaluation (OPE): the tracker is initialized with the target in the first frame and then run through the whole video sequence once. The location error threshold for the precision plots and the overlap threshold for the success rate plots are set to 20 pixels and 0.5, respectively.

Figure 5: The tracking precision plots (A) and success rate plots (B) of our algorithm and others on OTB-2015.

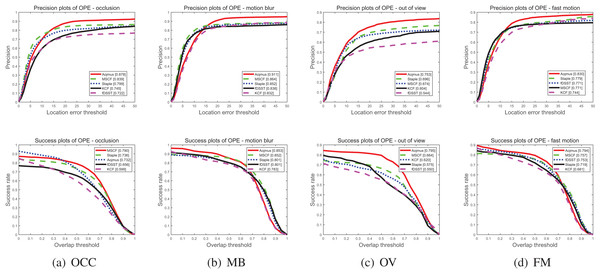

Figure 6: Precision plots (above) and success rate plots (below) under OCC (A), MB (B), OV (C), FM (D).

Table 3: Precision of the five algorithms under the 11 attributes on OTB-2015.

| Algorithms | OCC | DEF | FM | IPR | MB | OV | OPR | SV | IV | BC | LR |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Aojmus | 0.878 | 0.900 | 0.830 | 0.916 | 0.911 | 0.753 | 0.895 | 0.992 | 0.906 | 0.892 | 0.996 |
| MSCF | 0.839 | 0.941 | 0.771 | 0.878 | 0.864 | 0.674 | 0.881 | 0.868 | 0.876 | 0.877 | 0.988 |
| Staple | 0.799 | 0.878 | 0.779 | 0.860 | 0.852 | 0.696 | 0.816 | 0.851 | 0.863 | 0.860 | 0.797 |
| fDSST | 0.722 | 0.777 | 0.771 | 0.799 | 0.838 | 0.544 | 0.759 | 0.796 | 0.876 | 0.891 | 0.731 |
| KCF | 0.722 | 0.813 | 0.744 | 0.818 | 0.832 | 0.604 | 0.803 | 0.805 | 0.863 | 0.882 | 0.785 |

Table 4: Success rate of the five algorithms under the 11 attributes on OTB-2015.

| Algorithms | OCC | DEF | FM | IPR | MB | OV | OPR | SV | IV | BC | LR |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Aojmus | 0.732 | 0.727 | 0.794 | 0.762 | 0.853 | 0.795 | 0.718 | 0.651 | 0.684 | 0.744 | 0.662 |
| MSCF | 0.790 | 0.891 | 0.757 | 0.805 | 0.852 | 0.664 | 0.799 | 0.794 | 0.851 | 0.863 | 0.931 |
| Staple | 0.736 | 0.798 | 0.719 | 0.765 | 0.801 | 0.575 | 0.724 | 0.726 | 0.793 | 0.787 | 0.604 |
| fDSST | 0.656 | 0.710 | 0.753 | 0.733 | 0.801 | 0.550 | 0.678 | 0.715 | 0.815 | 0.827 | 0.705 |
| KCF | 0.599 | 0.661 | 0.681 | 0.662 | 0.783 | 0.620 | 0.628 | 0.520 | 0.644 | 0.750 | 0.285 |

Table 5: Tracking speed of the five algorithms.

| Algorithms | Frames per second (FPS) |
|---|---|
| Aojmus | 74.85 |
| MSCF | 16.01 |
| Staple | 9.28 |
| fDSST | 83.29 |
| KCF | 219.03 |

Figure 5 shows the precision and success rate of the five algorithms when each is run once through the whole sequences of the OTB-2015 dataset; the precision and success rate of Aojmus are 0.909 and 0.749, respectively. Aojmus performs best in precision, 0.5% higher than the second-ranked MSCF algorithm. Although its success rate is not outstanding, Aojmus still performs well on OCC, MB, OV and FM, as shown in Fig. 6.

Tables 3 and 4 show the precision and success rate, respectively, of the five algorithms under the 11 attributes. In precision, Aojmus performs best on 10 of the 11 attributes. In success rate, Aojmus is more robust to fast motion, motion blur and out-of-view problems, and on the remaining attributes it is not much inferior to the other strong algorithms. In tracking speed, Aojmus is slower than fDSST and KCF (Table 5), but it outperforms them in the other respects, as shown in Figs. 5 and 6. Overall, these comparisons show that Aojmus has good overall performance.

Qualitative analysis

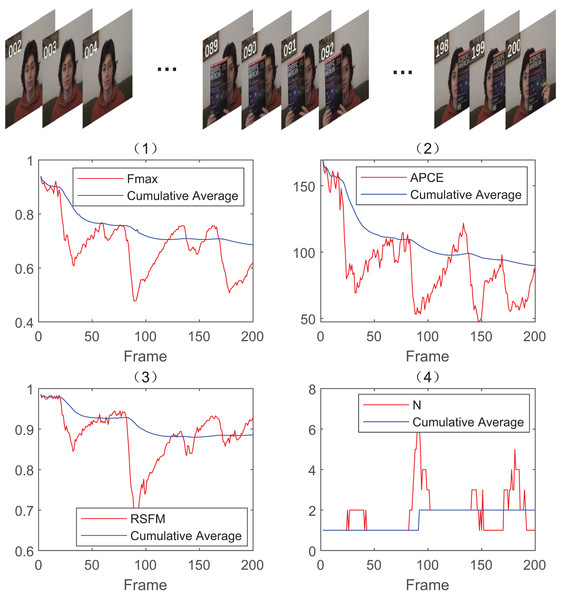

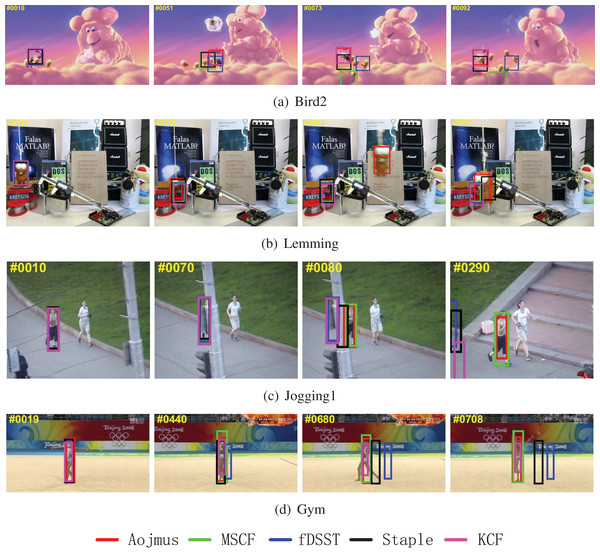

As in our previous work (Wang et al., 2022), to better verify the advantages of the proposed algorithm, four representative video sequences are selected for analysis, as shown in Fig. 7.

Figure 7: Tracking results of different algorithms on (A) Bird2, (B) Lemming, (C) Jogging1 and (D) Gym.

Photo credit: Visual Tracker Benchmark.

The Bird2 sequence contains the OCC, DEF, FM, IPR and OPR attributes. At frame 10, all five tracking algorithms work properly. As tracking progresses, the MSCF, fDSST and KCF algorithms begin to drift significantly at frame 51 because of occlusion, deformation and rotation. From frame 73, after the target (a bird) flips, only Aojmus and Staple track accurately.

The Lemming sequence contains the IV, SV, OCC, FM, OPR and OV attributes. From frame 10 to frame 370, the target is not significantly affected by occlusion, fast motion or illumination change, and its position does not change after the occlusion, so all the algorithms track it accurately. After frame 370, the occluded target reappears. Because the other algorithms use a fixed update rate and have learned non-target information, they cannot relocate the target in subsequent frames, whereas Aojmus maintains accurate localization until the end of tracking. In addition, the tracking boxes at frame 900 show that Aojmus also adapts well to the scale change of the target.

The Jogging1 sequence contains the OCC, DEF and OPR attributes. The target is heavily occluded from frame 70 to frame 80. When the target reappears, the Aojmus accurately relocates it through adaptive occlusion judgment and resumes model updating, so that tracking continues reliably. Apart from MSCF, the other algorithms fail to cope with this occlusion.

The Gym sequence contains the SV, DEF, IPR and OPR attributes. At frame 19, the target begins to rotate and deform, and all the algorithms still track it essentially normally. By frame 440, the fDSST algorithm drifts noticeably and loses the target. As deformation and rotation increase, only the Aojmus, MSCF and KCF keep tracking properly up to frame 680. Moreover, the Aojmus dynamically adjusts the tracking box to the scale of the target, whereas MSCF and KCF do not.
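The scale adaptation seen in Gym comes from the algorithm's scale filter. One common design, used in DSST, evaluates the filter over a geometric set of candidate scales a^n and keeps the scale with the highest response. The sketch below assumes DSST's published defaults (33 scales, a = 1.02) and a precomputed response per scale; these parameters, the synthetic responses and the function names are illustrative assumptions, not settings confirmed for Aojmus.

```python
import numpy as np

def scale_factors(num_scales=33, step=1.02):
    """Geometric candidate scales step**n, centred on n = 0 (no scale change)."""
    n = np.arange(num_scales) - (num_scales - 1) / 2
    return step ** n

def select_scale(responses, factors, size):
    """Pick the candidate scale with the highest filter response and resize the box."""
    best = int(np.argmax(responses))
    w, h = size
    return factors[best], (w * factors[best], h * factors[best])

factors = scale_factors()
# Hypothetical scale-filter responses peaking at index 20, i.e. n = +4.
responses = -np.abs(np.arange(33) - 20.0)
factor, new_size = select_scale(responses, factors, (40.0, 60.0))
print(round(float(factor), 4))  # 1.0824
```

Because only a one-dimensional set of scales is searched after the position is found, this kind of scale estimation adds little cost to a correlation-filter tracker.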

These experimental results show that the proposed Aojmus copes well with a variety of challenges that arise during tracking, especially occlusion, scale change and deformation. On the whole, the Aojmus tracks robustly in complex scenes and offers a new approach to handling occlusion.

Conclusions

In target tracking, researchers have studied many aspects in depth in order to predict the position of moving targets more accurately. However, because the tracked target and scene vary widely, it is difficult to develop an algorithm that accounts for all 11 of the above influencing factors simultaneously, especially target occlusion, deformation and scale variation. Previous studies, which typically use a single indicator to judge occlusion, cannot achieve outstanding overall performance. In this study, considering complex scenarios and the need for complementary techniques, we propose four indicators, , N, and RSFM as conditions to make the occlusion judgment more accurate. Moreover, we introduce an adaptive model updating strategy that fuses the results of the occlusion judgment and applies them to model updating, which improves the precision of target position prediction. Because tracking proceeds frame by frame and different influencing factors may be encountered, this study presents a dynamic dual-threshold mechanism as part of the update strategy, which accurately judges both the existence and the degree of occlusion and thereby addresses the problem of tracking drift. To make full use of the target's feature information and reduce the influence of scale variation, we also incorporate a multi-feature fusion scheme and a scale estimation model into the backbone of the algorithm, which provides a good basis for the subsequent occlusion judgments and model updates.
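The occlusion-aware update described above can be sketched as a weighted fusion of four normalized indicator scores followed by a two-threshold decision that sets the learning rate. In this sketch each score is assumed to lie in [0, 1], with 1 meaning the response looks like an unoccluded target, and the scores are passed positionally. The equal weights, the fixed thresholds (the paper describes dynamic ones), the base learning rate and the linear damping between the thresholds are hypothetical choices for illustration, not the paper's formulas.

```python
from dataclasses import dataclass
from typing import Sequence

@dataclass
class DualThresholdUpdate:
    # All defaults are hypothetical illustration values, not Aojmus parameters.
    weights: tuple = (0.25, 0.25, 0.25, 0.25)  # one weight per indicator
    t_low: float = 0.4     # fused value below this: heavy occlusion
    t_high: float = 0.7    # fused value at or above this: no occlusion
    base_rate: float = 0.02

    def fuse(self, scores: Sequence[float]) -> float:
        """Weighted sum of the four normalized indicator scores."""
        if len(scores) != len(self.weights):
            raise ValueError("expected one score per indicator")
        return sum(w * s for w, s in zip(self.weights, scores))

    def learning_rate(self, scores: Sequence[float]) -> float:
        """Map the fused value to a model learning rate using two thresholds."""
        fused = self.fuse(scores)
        if fused >= self.t_high:   # no occlusion: normal update
            return self.base_rate
        if fused >= self.t_low:    # partial occlusion: damped update
            ratio = (fused - self.t_low) / (self.t_high - self.t_low)
            return self.base_rate * ratio
        return 0.0                 # heavy occlusion: freeze the model

policy = DualThresholdUpdate()
print(policy.learning_rate([1.0, 1.0, 1.0, 1.0]))  # 0.02
print(policy.learning_rate([0.1, 0.2, 0.1, 0.2]))  # 0.0
```

The middle band is what distinguishes a double-threshold scheme from a single on/off occlusion flag: a partially occluded target still contributes to the model, but at a reduced rate.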

The experimental results show that the Aojmus outperforms the other typical tracking algorithms in tracking precision, improving on the strong MSCF and Staple algorithms by 0.6 and 3.8, respectively. Although the Aojmus is not the best in terms of success rate, it surpasses the other four compared algorithms on the target occlusion, scale variation, fast motion, out-of-plane rotation and deformation attributes. Because the Aojmus is based on the kernel correlation filter, it runs in real time at 74.85 frames per second, striking a good balance between tracking effectiveness and speed. We conclude that the kernel correlation filter-based multi-indicator occlusion judgment mechanism and adaptive model updating strategy can address common problems in target tracking while maintaining good overall performance. In future work, we plan to investigate combining our method with convolutional neural networks to further improve overall performance, and to extend its application to indoor mobile robots and vehicle violation scenarios.