Abstract

While past research has demonstrated the power of defaults to nudge decision makers toward desired outcomes, few studies have examined whether people understand how to strategically set defaults to influence others’ choices. A recent paper (Zlatev et al., Proceedings of the National Academy of Sciences, 114, 13643–13648, 2017) found that participants exhibited “default neglect,” or the failure to set optimal defaults at better than chance levels. However, we show that this poor performance is specific to the complex and potentially confusing paradigms they used, and does not reflect a general lack of understanding regarding defaults. Using simple scenarios, Experiments 1A and 1B provide clear evidence that people can optimally set defaults given their goals. In Experiment 2, we conducted both a direct and a conceptual replication of one of Zlatev et al.’s original studies, in which participants had selected the optimal default significantly less often than chance. While our direct replication found results similar to those in the original study, our conceptual replication, which simplified the task, found the opposite. Experiment 3 manipulated the framing of the option attributes, which was confounded with the default in the original study, and found that the original framing led to below-chance performance while the alternate framing led to above-chance performance. Together, our results cast doubt on the prevalence and generalizability of default neglect, and instead suggest that people are capable of setting optimal defaults in attempts at social influence.

Introduction

Setting defaults is an effective and increasingly popular behavioral intervention to “nudge” or influence people’s choices in desired directions (Benartzi et al., 2017; Thaler & Sunstein, 2008). A default is an option that is imposed on people unless they actively select a different option. For example, people in some countries are not considered organ donors unless they choose to be, or opt in, while people in other countries are considered organ donors unless they actively choose not to be, or opt out. Although people face the same options under both defaults, the effective consent rate is much higher when organ donation is the default than when it is not (Johnson & Goldstein, 2003; Steffel, Williams, & Tannenbaum, 2019). Such default effects have been found to occur in a variety of important domains beyond organ donation, including retirement plan participation (Madrian & Shea, 2001), green energy use (Pichert & Katsikopoulos, 2008), and flu vaccination (Chapman, Li, Colby, & Yoon, 2010).

Default effects are powerful, but can people harness this power by strategically setting defaults to influence others’ choices? For example, if a company wants their employees to participate in a retirement plan, would the company know to make participation, rather than non-participation, the default? Zlatev, Daniels, Kim, and Neale (2017) recently claimed that the answer is often “no.” In their paradigm, participants played the role of “choice architect” (CA) and chose one of two options to present as the default to the “choice maker” (CM). After testing over 2,800 total participants across 11 studies, Zlatev et al. (2017) concluded that CAs often exhibit “default neglect” in their attempts at social influence, selecting the optimal default at chance level. Furthermore, this default neglect appears to persist despite experience and feedback.

These results are surprising for three reasons. First, the relationship between the CA’s default-selection (set default to X) and the CM’s resulting behavior (accept X) is exceedingly simple and direct. Second, McKenzie, Liersch, and Finkelstein (2006) found that, when asked to play the role of policymakers, participants’ own preferences influenced their choice of default. For example, participants who were willing to be organ donors, and who thought that others ought to be organ donors, were more likely to choose the organ donor default. Relatedly, participants drew different inferences about policymakers depending on which default the policymakers had selected. For example, participants inferred that policymakers who selected the organ donor default were more likely to be organ donors themselves and to think that people ought to be organ donors. This view of defaults as “implicit recommendations” suggests that CAs choose defaults that correspond to their preferred outcome, and that CMs are aware of this when deciding whether to stay with, or switch from, the default.¹

Third, Altmann, Falk, and Grunewald (2013) tested whether different incentive structures affect how CAs use defaults to communicate implicit information to CMs in a repeated default-setting game. When the CAs’ and CMs’ interests were fully aligned, CAs selected the optimal default 98% of the time. However, when their interests were misaligned, CAs no longer selected informative defaults, but instead responded nearly at random (56%). Rather than showing default neglect, these results demonstrate that CAs not only can set defaults optimally, but that they can adaptively change this behavior depending on their goals in a strategic social interaction.

Thus, the evidence strongly suggests that CAs are aware of how defaults influence decision makers and can choose defaults accordingly. Why, then, did Zlatev et al. (2017) find “a striking failure to use and understand a simple and powerful social influence tactic” (p. 13647)? Jung, Sun, and Nelson (2018) proposed that this poor performance reflects the particular paradigm and stimulus presentation that were used rather than a general failure to understand defaults. When Jung et al. (2018) attempted to conceptually replicate the “default game” of Zlatev et al. (2017) in three new contexts, they instead found that CAs chose the optimal default better than chance (65.3% overall). Zlatev, Daniels, Kim, and Neale (2018), however, argued that these conceptual replications did not examine default-setting behavior per se, but rather beliefs about the default effect.

We agree with Jung et al.’s (2018) general contention that the conflicting findings arise from the different materials that were used. We argue, more specifically, that the tasks in which default neglect was observed by Zlatev et al. (2017) are atypically complex, potentially confusing, and feature an important confound – all factors that would systematically tend to obscure default-setting competence. First, the default option is confounded with the framing of the options (i.e., when X [Y] is the default, the choice is verbally framed by highlighting the advantages and disadvantages of a switch to Y [X]; see Fig. 1A for an example). Thus, CAs must select default-frame pairs, a more complex task in which the default is not the sole relevant factor. (Notably, in the one task version without a default-framing confound – the “preselect” radio-button conditions of Study 3, which mention advantages/disadvantages for both default and non-default options – 79% of CAs chose the optimal default.) Second, in several tasks (Studies 1f–h and 2), CAs are told that they will select a “piece of advice” to give the CM, but then, rather than selecting advice or a stand-alone default, they must select one of two descriptions of imaginary scenarios (Fig. 1A); this scenario-selection task may be difficult for CAs to interpret. Finally, in some studies (1a–c, 1i, 1k, and 3), CAs do not simply choose a default, but rather choose to be paired, for purposes of an incentive game, with another respondent who saw one of two scenarios, adding a layer of strategic complexity to the task. If people are capable of selecting optimal defaults, their competence may be masked in tasks that are unclear, complex, and/or highlight extraneous information. A more suitable test of strategic default selection would employ unambiguous tasks, free of extraneous complexity, in which only the default (and not the accompanying framing of advantages and disadvantages) is at issue.

In four experiments, we examined whether CAs can set optimal defaults in such paradigms. Unlike McKenzie et al. (2006), we randomly assigned participants to prefer one of two target outcomes, and unlike Jung et al. (2018), we asked participants to choose one of two options to set as the default. Experiment 1A used an interpersonal context that participants likely have experience with, while Experiment 1B employed a policy-setting context. In Experiment 2, we more directly investigated whether default neglect is specific to the complex materials used by Zlatev et al. (2017). This experiment included both a direct and a conceptual replication of one of the original studies, in which below-chance default-setting performance was reported. We predicted that default-setting performance would improve when the task is clarified and simplified to provide a more straightforward test of default understanding. In Experiment 3, we orthogonally varied the default and the framing of the options, to determine the effect of the default-framing confound in the original paradigm. Together, these experiments clarify the scope of people’s default-setting competence, as well as the special conditions in which their understanding is not expressed in task performance. All data and materials can be found on this project’s OSF page (https://osf.io/659k8/).

Experiment 1A

Experiment 1A used a simple interpersonal scenario to test whether people understand how to set defaults to influence others toward choosing a particular option.

Method

Participants were 146 University of California San Diego undergraduate students (mean age = 19.8 years; 84 females, 59 males, one other, two declined to state) who received partial course credit. Participants in this experiment, as well as in Experiments 1B and 2, were recruited from the Psychology Department subject pool and run at individual computer stations in groups of up to six.

Participants were asked to imagine that they were meeting a friend for lunch, but the restaurant (X or Y) had not been decided. Participants were randomly assigned to one of two conditions: they were told that they preferred eating at either Restaurant X or Restaurant Y. Participants then chose between two text messages to send to their friend: “I’ll meet you at Restaurant X at noon. If you would rather meet at Restaurant Y, just let me know.” and “I’ll meet you at Restaurant Y at noon. If you would rather meet at Restaurant X, just let me know.” Afterward, participants were asked to explain why they chose to send the text message that they did.

Results and discussion

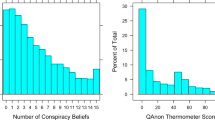

Participants assigned to prefer Restaurant X chose the optimal default (“I’ll meet you at Restaurant X…”) 87.8% of the time, which was significantly greater than chance, χ²(1, N = 74) = 40.88, p < .001, 95% CI [77.7%, 93.9%], and participants who were assigned to prefer Restaurant Y chose the optimal default 87.5% of the time, which was significantly greater than chance, χ²(1, N = 72) = 39.01, p < .001, 95% CI [77.1%, 93.8%].² Overall, participants selected the optimal default 87.7% of the time, χ²(1, N = 146) = 81.38, p < .001, 95% CI [81.0%, 92.3%] (see Fig. 2), clearly demonstrating default sensitivity.
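The text reports a χ² test against 50% and a 95% CI for each proportion but does not name the software. The reported values appear consistent with two procedures: a one-sample chi-square test with Yates' continuity correction, and a continuity-corrected Wilson score interval. These are the single-proportion defaults of R's `prop.test`. Below is a minimal Python sketch under that assumption. The helper name `prop_test_vs_chance` is hypothetical. The counts are back-calculated from the reported percentages and Ns (e.g., 87.8% of 74 implies 65 optimal choices); they are not taken from the raw data.

```python
import math
from statistics import NormalDist


def prop_test_vs_chance(successes: int, n: int, p0: float = 0.5, conf: float = 0.95):
    """One-sample chi-square test of a proportion against p0 with Yates'
    continuity correction, plus a continuity-corrected Wilson score interval.

    Hypothetical helper written to follow R's prop.test defaults for a single
    proportion; returns (chi2, p_value, (lower, upper)).
    """
    # Observed vs. expected counts for the two cells (optimal, non-optimal).
    observed = (successes, n - successes)
    expected = (n * p0, n * (1 - p0))

    # Yates correction: shrink each |O - E| by 0.5, but never past zero.
    yates = min(0.5, abs(successes - n * p0))
    chi2 = sum((abs(o - e) - yates) ** 2 / e for o, e in zip(observed, expected))

    # Upper tail of a chi-square with 1 df: P(X > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))

    # Wilson score interval, shifting the estimate by the same correction.
    z = NormalDist().inv_cdf((1 + conf) / 2)
    z22n = z ** 2 / (2 * n)
    p_hat = successes / n

    def wilson_bound(p_c: float, sign: int) -> float:
        half_width = z * math.sqrt(p_c * (1 - p_c) / n + z22n / (2 * n))
        return (p_c + z22n + sign * half_width) / (1 + 2 * z22n)

    p_up = p_hat + yates / n
    upper = 1.0 if p_up >= 1 else wilson_bound(p_up, +1)
    p_lo = p_hat - yates / n
    lower = 0.0 if p_lo <= 0 else wilson_bound(p_lo, -1)
    return chi2, p_value, (lower, upper)


# Counts back-calculated from the reported percentages (87.8% of 74).
chi2, p, (lo, hi) = prop_test_vs_chance(65, 74)
print(f"chi2 = {chi2:.2f}, p = {p:.2g}, 95% CI [{lo:.1%}, {hi:.1%}]")
```

Run on the Restaurant X condition, the sketch gives χ² ≈ 40.88 and a CI of about [77.7%, 93.9%], matching the reported values. Without the continuity correction, the statistic would instead be about 42.38. This suggests the reported tests include it.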

Experiment 1B

While Experiment 1A examined interpersonal communication and influence, Experiment 1B targeted policy-making.

Method

Participants were 167 University of California San Diego undergraduate students (mean age = 19.8 years; 125 females, 42 males) who received partial course credit. They were asked to imagine that they were involved in designing an academic program that requires taking both a statistics and a methods course. Participants were randomly assigned to one of two conditions: they were either told that they preferred that students take the statistics course first and the methods course second, or the methods course first and the statistics course second. Participants were then asked whether they would automatically enroll students in the statistics course the first quarter and the methods course the second quarter, or automatically enroll students in the methods course the first quarter and the statistics course the second quarter. Both options also mentioned that if students wanted, they could change the assigned order by making a request. Afterward, participants were asked to explain why they chose to automatically enroll students in the order that they did.

Results and discussion

Participants who were assigned to prefer that students take the statistics course first chose the optimal default 79.8% of the time, which was significantly greater than chance, χ²(1, N = 84) = 28.58, p < .001, 95% CI [69.3%, 87.4%], and participants who were assigned to prefer that students take the methods course first chose the optimal default 75.9% of the time, which was significantly greater than chance, χ²(1, N = 83) = 21.25, p < .001, 95% CI [65.0%, 84.3%]. Overall, participants selected the optimal default 77.8% of the time, χ²(1, N = 167) = 50.68, p < .001, 95% CI [70.6%, 83.7%] (see Fig. 2), and again demonstrated default sensitivity.

While most participants in Experiment 1B selected the optimal default, a substantial minority (22.2%) made seemingly “non-optimal” selections. Do these selections indicate that around one in five participants have false beliefs about how defaults work? To explore this question, we examined the explanations given by the 37 participants who selected non-optimal defaults. The large majority of these explanations (30/37) revealed that participants were not selecting defaults based on their task-assigned preferences, instead expressing the opposite preference. In some cases, participants evidently substituted their true personal preference for the preference assigned to them in the task (e.g., invoking a reason for taking statistics prior to methods, even though a methods-first preference was instructed). It is also possible that some participants simply misunderstood the preference instructions. In any event, most “non-optimal” default selections apparently arose from failure to accept task-assigned preferences, rather than false beliefs about default effects. As high as the optimal default selection rate is in Experiment 1B, it likely underestimates the proportion of participants who understand how defaults work in this setting.³

Experiment 2

To directly examine whether default neglect depends on the complexity of the experimental materials, Experiment 2 includes both a direct and a conceptual replication of Zlatev et al.’s (2017) Study 1h. In that study, participants (CAs) were asked to influence a new employee (CM) toward taking a job with higher pay or a job with more vacation days (Fig. 1A). Surprisingly, CAs selected the optimal default at a rate significantly below chance. As noted above, however, this surprising finding may reflect specific features of the default-setting task that Zlatev et al. (2017) used. The task instructions (giving advice) do not clearly match the task structure (select an imaginary scenario), and within the scenarios, the choice of a default is confounded with the choice of a problem frame – i.e., whether the (dis)advantages of one option or the other are highlighted. Participants might interpret this task in different ways, and some might focus more on the framing of the trade-offs than on the choice of a default.

In our conceptual replication (Fig. 1B), the same job context and options are presented in a paradigm that is simpler, clearer, and free of the default-framing confound. Specifically, CAs are explicitly asked to assign the employee to an automatic default (not to give advice), and their task only involves the selection of a default (not an imaginary scenario or a trade-off framing). We predicted that the presentation of the scenario and options in the conceptual replication would be easier to understand, and would result in improved default-setting performance.

Method

Participants were 524 University of California San Diego undergraduate students (mean age = 20.0 years, two did not report age; 339 females, 185 males) who received partial course credit.

Participants were randomly assigned to one of four conditions in a 2 (Replication: direct vs. conceptual) × 2 (Target option: higher pay vs. more vacation days) between-subjects design. The direct replication conditions used the original materials from Study 1h of Zlatev et al. (2017). In that study, participants were asked to imagine that they are the CEO of a firm, and to choose which one of two pieces of advice to give a new employee who is deciding between two jobs at their firm. One piece of advice tells the employee to imagine already having the job with higher pay, and the other tells the employee to imagine already having the job with more vacation days. For both pieces of advice, the advantage and drawback of switching to the other job are listed. Participants were randomly assigned the goal of convincing the new employee to choose the job with higher pay or the job with more vacation days.

In the conceptual replication conditions, we retained the job context, but changed the presentation of the information and options. First, we removed the advice-giving aspect of the problem that requires imagining already having one of the two jobs. Instead, we simply listed the same trade-offs between the two jobs. Second, participants now chose between automatically assigning the new employee to the job with higher pay and the job with more vacation days. In both cases, participants were also told that the employee can switch to the other job by making a simple request. Again, participants were randomly assigned the goal of getting the new employee to choose the job with higher pay or the job with more vacation days.

After making their choice, participants were asked on the following screen to explain why they chose the option that they did, and to rate how difficult it was to understand the scenario and options that they read. For the two measures of understanding, participants were asked “How easy or hard was it to understand the scenario?” on a 7-point scale ranging from 1 (Very hard to understand) to 7 (Very easy to understand), and “How well did you understand the options you were choosing between?” on a 7-point scale ranging from 1 (Not at all well) to 7 (Very well).

Results and discussion

Figure 2 shows the percentage of participants who chose the optimal default in our direct and conceptual replications. First, we were able to replicate Zlatev et al.’s (2017) original results. Participants assigned to prefer that the new employee take the job with higher pay chose the optimal default 34.6% of the time, which was significantly less than chance, χ²(1, N = 130) = 11.70, p < .001, 95% CI [26.6%, 43.5%], while participants assigned to prefer that the new employee take the job with more vacation days chose the optimal default 42.6% of the time, which was not significantly different from chance, χ²(1, N = 129) = 2.51, p = .113, 95% CI [34.1%, 51.6%]. Overall, participants chose the optimal default 38.6% of the time, which was significantly less than chance, χ²(1, N = 259) = 12.99, p < .001, 95% CI [32.7%, 44.9%].

In our conceptual replication, however, we found the opposite pattern. In contrast to the original results, participants assigned to prefer that the new employee take the job with higher pay now chose the optimal default 59.8% of the time, which was significantly greater than chance, χ²(1, N = 132) = 4.73, p = .03, 95% CI [50.9%, 68.2%], and participants assigned to prefer that the new employee take the job with more vacation days similarly chose the optimal default 63.2% of the time, which was again significantly greater than chance, χ²(1, N = 133) = 8.69, p = .003, 95% CI [54.3%, 71.2%]. Overall, participants chose the optimal default 61.5% of the time, which was significantly greater than chance, χ²(1, N = 265) = 13.59, p < .001, 95% CI [55.3%, 67.3%]. The modifications of the paradigm had a dramatic impact on how often participants chose the optimal default, χ²(1, N = 524) = 26.57, p < .001. Finally, participants were also asked to rate how easy or hard it was to understand the scenario, and how well they understood the options they were choosing between. As expected, participants rated both the scenario (M = 6.11 in the conceptual vs. 5.42 in the direct replication), t(481.18) = 6.33, p < .001, and the options (M = 6.22 vs. 5.71), t(485.78) = 5.09, p < .001, as easier to understand in the conceptual replication than in the direct replication.
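The non-integer degrees of freedom reported here, t(481.18) and t(485.78), are characteristic of Welch's unequal-variances t test rather than Student's pooled test. The text does not name the test, so this is an inference. For two groups with means x̄₁ and x̄₂, standard deviations s₁ and s₂, and sizes n₁ and n₂, Welch's statistic and its Welch–Satterthwaite degrees of freedom are:

```latex
t = \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{s_1^2/n_1 + s_2^2/n_2}},
\qquad
\nu \approx \frac{\left(s_1^2/n_1 + s_2^2/n_2\right)^2}
{\dfrac{\left(s_1^2/n_1\right)^2}{n_1 - 1} + \dfrac{\left(s_2^2/n_2\right)^2}{n_2 - 1}}
```

The approximate ν always lies between min(n₁, n₂) − 1 and n₁ + n₂ − 2. Values somewhat below the pooled-test df are therefore expected when the two groups' rating variances differ.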

Experiment 3

Experiment 2 showed that by simplifying the materials and eliminating the default-framing confound in Zlatev et al.’s (2017) Study 1h, the percentage of participants selecting the optimal default reversed from significantly below chance to significantly above chance. While the complex and potentially confusing character of the original task can naturally explain why optimal default selection drops toward chance, what explains performance significantly below chance? One possibility is the default-framing confound already mentioned: In Zlatev et al.’s Study 1h, when selecting one job as the default, the CA is also electing to frame their description in terms of the advantages and disadvantages of the other job (see Fig. 1A). That is, the CA must choose between two confounded default-frame pairs: [set default to higher-pay job + highlight (dis)advantages of more-vacation job] vs. [set default to more-vacation job + highlight (dis)advantages of higher-pay job]. If, in persuading CMs to choose a target job, CAs prefer to highlight the attributes of the target job rather than the alternative, then optimal default selection and preferred framing would push in opposite directions, and the overall rate of optimal default selection would depend on the relative strength of these conflicting effects. It is also possible that the emphasis on “advice-giving” in the instructions may tend to draw CAs’ attention to the frame over the default, increasing the likelihood of below-chance optimal default selection.
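The competing-tendencies argument can be made concrete with a toy mixture model. This is a hypothetical illustration, not a model the authors propose or fit. A share `d` of CAs picks the default matching their goal, and a share `f` picks the option whose wording highlights their target job. The remaining CAs are assumed to choose at random. The function name `p_optimal` and both shares are illustrative assumptions.

```python
def p_optimal(d: float, f: float, framing: str) -> float:
    """Hypothetical toy model of default selection under a default-framing confound.

    d: share of CAs who choose the default that matches their assigned goal.
    f: share of CAs who instead choose the option whose wording highlights
       the (dis)advantages of their target job.
    The remaining 1 - d - f are assumed to choose at random (50/50).
    """
    if d < 0 or f < 0 or d + f > 1:
        raise ValueError("d and f must be non-negative shares summing to at most 1")
    random_share = 1 - d - f
    if framing == "switch":
        # Original Study 1h wording: the listed (dis)advantages belong to the
        # non-default job, so highlighting the target job means making the
        # other job the default; frame-driven CAs pick the non-optimal default.
        return d + random_share / 2
    if framing == "stay":
        # Stay wording: the listed (dis)advantages belong to the default job,
        # so frame-driven CAs also end up choosing the optimal default.
        return d + f + random_share / 2
    raise ValueError("framing must be 'switch' or 'stay'")


# Illustrative (made-up) shares in which frame-driven CAs outnumber default-driven ones.
for framing in ("switch", "stay"):
    print(framing, round(p_optimal(d=0.1, f=0.2, framing=framing), 2))
```

With these made-up shares, the model gives 0.45 in the switch framing and 0.65 in the stay framing. In general, the switch framing falls below 50% exactly when f > d, while the stay framing equals 0.5 + (d + f)/2 and so can never fall below 50%.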

To test this possibility, we conducted a preregistered experiment that manipulated whether differences between the jobs are framed in terms of the (dis)advantages of switching to the new job or of staying with the current (default) job. We expected to replicate the below-chance performance in the former framing, but to find above-chance performance in the latter framing.

Method

Participants were 779 University of California San Diego undergraduate students recruited from the Rady School of Management participant pool (mean age = 20.99 years; three did not report age and six did not provide a valid number; 394 females, 383 males, two did not report gender) who received partial course credit. Data for this experiment were collected during a pandemic in the USA, with 440 participants completing the study on a computer in the lab and 339 participants completing it fully online. The experiment was preregistered.

Participants were randomly assigned to one of four conditions in a 2 (Target option: higher pay vs. more vacation days) × 2 (Framing: switch vs. stay) between-subjects design. In the novel “stay-framing” conditions, the stimuli were closely modeled after those in Zlatev et al.’s (2017) original Study 1h (see Fig. 1A), except that the wording of Options A and B was modified to highlight the advantage and drawback of the current job. Accordingly, the second paragraph for Option A (where the current job pays $630 a week and has 10 paid vacation days) was rewritten as follows:

You can switch to another job in a different office of the same company. Your current job is the same as the new job, except for one distinct advantage and one distinct drawback:

- The advantage of your current job is that it pays you $30 more per week ($630 rather than $600).
- The drawback of your current job is that it offers you 10 fewer vacation days per year (10 rather than 20 days).

The advantage and drawback in Option B (where the current job pays $600 a week and has 20 paid vacation days) were similarly reframed as follows:

- The advantage of your current job is that it offers you 10 more vacation days per year (20 rather than 10 days).
- The drawback of your current job is that it pays you $30 less per week ($600 rather than $630).

The “switch-framing” conditions were essentially identical to Zlatev et al.’s (2017) Study 1h, highlighting the advantage and drawback of the new job rather than the current job, with minor wording changes to maximize comparability to the novel stay-framing conditions. For example, in Option A, the new job’s advantage was expressed as follows: “The advantage of the new job is that it offers you 10 more vacation days per year (20 rather than 10 days).” (See the OSF page for full materials and preregistration for this experiment.)

Independent of framing condition, participants were randomly assigned the goal of convincing the new employee to choose the job with higher pay or the job with more vacation days. Finally, after making their choice, all participants were asked to explain why they chose the option that they did.

Results and discussion

Figure 2 shows the percentage of participants who chose the optimal default as a function of how the advantages and drawbacks were framed. First, we again replicated Zlatev et al.’s (2017) original results when the (dis)advantages were framed in terms of switching to the new job. Participants assigned to prefer that the new employee take the job with higher pay chose the optimal default 42.6% of the time, which was significantly less than chance, χ²(1, N = 195) = 4.02, p = .045, 95% CI [35.6%, 49.8%], while participants assigned to prefer that the new employee take the job with more vacation days chose the optimal default 45.9% of the time, which was not significantly different from chance, χ²(1, N = 196) = 1.15, p = .284, 95% CI [38.8%, 53.2%]. Overall, participants chose the optimal default 44.2% of the time, which was significantly less than chance, χ²(1, N = 391) = 4.95, p = .026, 95% CI [39.3%, 49.3%].

When the (dis)advantages were framed in terms of staying with the current job, however, we found the opposite pattern. In contrast to the original results, participants assigned to prefer that the new employee take the job with higher pay now chose the optimal default 53.6% of the time, which was not significantly different from chance, χ²(1, N = 194) = 0.87, p = .35, 95% CI [46.3%, 60.7%], and participants assigned to prefer that the new employee take the job with more vacation days chose the optimal default 60.8% of the time, which was significantly greater than chance, χ²(1, N = 194) = 8.66, p = .003, 95% CI [53.5%, 67.7%]. Overall, participants chose the optimal default 57.2% of the time, which was significantly greater than chance, χ²(1, N = 388) = 7.80, p = .005, 95% CI [52.1%, 62.2%]. Simply changing the framing of the option attributes had a large impact on how often participants chose the optimal default, χ²(1, N = 779) = 12.60, p < .001.
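The between-condition comparisons (χ² with N = 779 here, N = 524 in Experiment 2) appear consistent with a 2 × 2 chi-square test of independence with Yates' continuity correction. That is an assumption, since the text does not name the test. The sketch below uses a hypothetical helper `chi2_2x2_yates`. Its cell counts are back-calculated from the reported percentages: 173 of 391 optimal in the switch framing and 222 of 388 in the stay framing.

```python
import math


def chi2_2x2_yates(a: int, b: int, c: int, d: int):
    """Chi-square test of independence for the 2x2 table [[a, b], [c, d]]
    with Yates' continuity correction (1 df). Returns (chi2, p_value)."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    # Closed form of the corrected statistic; clipping at zero ensures the
    # correction never exceeds |O - E| (which is identical in all four cells).
    diff = max(0.0, abs(a * d - b * c) - n / 2)
    chi2 = n * diff ** 2 / (row1 * row2 * col1 * col2)
    # Upper tail of a chi-square with 1 df.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Rows: framing condition; columns: optimal vs. non-optimal default.
# Counts back-calculated from the reported percentages (Experiment 3).
switch_opt, switch_non = 173, 218
stay_opt, stay_non = 222, 166
chi2, p = chi2_2x2_yates(switch_opt, switch_non, stay_opt, stay_non)
print(f"chi2(1, N = 779) = {chi2:.3f}, p = {p:.4f}")
```

The sketch yields χ² ≈ 12.595, consistent with the reported 12.60 up to rounding. With Experiment 2's back-calculated counts (100 of 259 vs. 163 of 265), it gives about 26.57.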

Default selection rates in all conditions are close to 50%, registering either slightly below chance (in the switch framing) or slightly above chance (in the stay framing). This observation is unsurprising, because we retained the complex and potentially confusing original “advice” task in this experiment. While these task properties may pull default selection toward chance, the results of Experiment 3 suggest that below-chance performance is driven by the default-framing confound.

General discussion

In contrast to prior claims of default neglect (Zlatev et al., 2017, 2018), the present findings suggest that people understand that decision makers are more likely to stay with the default option and are able to select defaults accordingly. We agree with Zlatev et al. (2018) that default-setting performance should not be conceptualized dichotomously, but as a spectrum from total default neglect (50% optimal or chance) to perfect optimality (100% optimal). However, where results fall on this continuum can, at least in part, be explained by differences in the complexity of the experimental materials, with simpler materials resulting in better performance. Furthermore, simple scenarios focusing on default selection without confounding factors (e.g., the selection of different defaults paired with different wordings of the problem) provide a more meaningful test of default sensitivity or neglect.

Zlatev et al.’s (2017) original results across 11 studies found widespread default neglect, with Study 1h in particular demonstrating that CAs selected the optimal default less often than chance. If, as their results suggested, default neglect were a robust phenomenon, that would be both consequential and highly surprising. (Indeed, when Zlatev et al. (2017) asked members of the Society for Judgment and Decision Making to predict whether people could successfully use defaults to influence others’ choices, 90% believed they could.) It is thus not entirely surprising (cf. Wilson & Wixted, 2018) that this effect does not consistently hold up in the conceptual replications reported here and elsewhere (e.g., Jung et al., 2018). When we presented participants with simple scenarios in Experiments 1A and 1B, we instead found that they were excellent at setting defaults, with performance closer to perfect optimality than to chance (87.7% and 77.8% optimal, respectively). Interestingly, Zlatev et al. (2017) themselves also tested default selection using a different – and simpler – paradigm (in Study 3) in which CAs chose an option to initially pre-select for the CM, with no default-framing confound. Similar to our results, CAs selected the optimal default 79% of the time. Even more telling, not only were we able to directly replicate the worse-than-chance performance (39%) of Study 1h using the original materials, but we also found that most participants (62%) selected the optimal default in our simplified and unconfounded version of the same scenario (Experiment 2), indicating that default neglect depends on special features of the original paradigm. Furthermore, when we inverted the original confound between the default and the framing of (dis)advantages, we were able to turn below-chance performance into above-chance performance (Experiment 3).

The fact that CAs select optimal defaults when randomly assigned specific persuasive preferences provides additional support for the suggestion (McKenzie et al., 2006) that the choice of default can convey an implicit recommendation. Notably, if defaults provide choice-relevant information to the CM, then default effects are not necessarily irrational when CMs are influenced by this informative cue. Broadly speaking, the CA’s decision to present the options in a particular way can signal implicit information, and CMs can infer this information from the given choice context. The present findings also align with prior evidence (Sher & McKenzie, 2006) that a speaker’s choice of frame “leaks” information about the speaker’s attitudes, with more favorable attitudes prompting the selection of more positive frames. Thus, while defaults, frames, and other nudges are often regarded as ways to leverage our cognitive biases for good, choice architecture can alternatively be viewed as an implicit social interaction where choice-relevant information is transmitted and used (Krijnen, Tannenbaum, & Fox, 2017; McKenzie, Sher, Leong, & Müller-Trede, 2018).

Zlatev et al. (2017) correctly note that widespread default neglect could have significant social implications, and they propose that in real-world settings CAs may require external assistance if they are to understand how to harness the power of defaults. Before intervening to correct a purported bias or shortcoming, however, it is important to first establish its scope and generality. The current results, in tandem with convergent findings in the literature (Altmann et al., 2013; Jung et al., 2018; McKenzie et al., 2006), indicate that default neglect is unlikely to be a robust phenomenon. Of course, these findings leave open many questions concerning people’s finer-grained understanding of default effects (i.e., whether realistic moderators that influence the size of default effects similarly moderate intuitive predictions of default effectiveness). But the available evidence, taken together, does not suggest a need to educate people on how to set optimal defaults.

Notes

In addition, McKenzie et al. (2006) asked participants how they thought that employee participation in a retirement plan would be influenced by the default. When participants were told that the company selected being enrolled in the plan as the default, 86% thought that more employees would be enrolled, and when they were told that the company had selected not being enrolled as the default, 89% thought that fewer employees would be enrolled.

To maximize comparability, we report the same analyses as those in Zlatev et al. (2017) when evaluating default-setting performance in each experiment.

The explanations given by the smaller number (18) of participants who selected the non-optimal default in Experiment 1A are more difficult to interpret. While some seem to suggest a reluctance to nudge CMs in one-on-one social interactions and/or the operation of indirect pragmatic inferences, these explanations are generally more ambiguous and less uniform than those in Experiment 1B (see the OSF page for all explanations).

References

Altmann, S., Falk, A., & Grunewald, A. (2013). Incentives and information as driving forces of default effects. IZA Discussion Paper No. 7610.

Benartzi, S., Beshears, J., Milkman, K. L., Sunstein, C. R., Thaler, R. H., Shankar, M., Tucker-Ray, W., Congdon, W. J., & Galing, S. (2017). Should governments invest more in nudging? Psychological Science, 28, 1041–1055.

Chapman, G. B., Li, M., Colby, H., & Yoon, H. (2010). Opting in vs. opting out of influenza vaccination. Journal of the American Medical Association, 304, 43–44.

Johnson, E. J., & Goldstein, D. (2003). Do defaults save lives? Science, 302, 1338–1339.

Jung, M. H., Sun, C., & Nelson, L. D. (2018). People can recognize, learn, and apply default effects in social influence. Proceedings of the National Academy of Sciences, 115, E8105–E8106.

Krijnen, J. M. T., Tannenbaum, D., & Fox, C. R. (2017). Choice architecture 2.0: Behavioral policy as an implicit social interaction. Behavioral Science & Policy, 3, 1–18.

Madrian, B. C., & Shea, D. F. (2001). The power of suggestion: Inertia in 401(k) participation and savings behavior. Quarterly Journal of Economics, 116, 1149–1187.

McKenzie, C. R. M., Liersch, M. J., & Finkelstein, S. R. (2006). Recommendations implicit in policy defaults. Psychological Science, 17, 414–420.

McKenzie, C. R. M., Sher, S., Leong, L. M., & Müller-Trede, J. (2018). Constructed preferences, rationality, and choice architecture. Review of Behavioral Economics, 5, 337–360.

Pichert, D., & Katsikopoulos, K. V. (2008). Green defaults: Information presentation and pro-environmental behaviour. Journal of Environmental Psychology, 28, 63–73.

Sher, S., & McKenzie, C. R. M. (2006). Information leakage from logically equivalent frames. Cognition, 101, 467–494.

Steffel, M., Williams, E. F., & Tannenbaum, D. (2019). Does changing defaults save lives? Effects of presumed consent organ donation policies. Behavioral Science & Policy, 5(1), 69–88.

Thaler, R. H., & Sunstein, C. R. (2008). Nudge: Improving decisions about health, wealth, and happiness. New York: Penguin.

Wilson, B. M., & Wixted, J. T. (2018). The prior odds of testing a true effect in cognitive and social psychology. Advances in Methods and Practices in Psychological Science, 1, 186–197.

Zlatev, J. J., Daniels, D. P., Kim, H., & Neale, M. A. (2017). Default neglect in attempts at social influence. Proceedings of the National Academy of Sciences, 114, 13643–13648.

Zlatev, J. J., Daniels, D. P., Kim, H., & Neale, M. A. (2018). Default neglect persists over time and across contexts. Proceedings of the National Academy of Sciences, 115, E8107–E8108.

Author note

All data, materials, and preregistration are available at https://osf.io/659k8/. Experiment 3 was preregistered. Funding was provided by a Scholar Award from the James S. McDonnell Foundation to Shlomi Sher. The authors have no conflicts of interest to disclose.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

McKenzie, C.R.M., Leong, L.M. & Sher, S. Default sensitivity in attempts at social influence. Psychon Bull Rev 28, 695–702 (2021). https://doi.org/10.3758/s13423-020-01834-4

DOI: https://doi.org/10.3758/s13423-020-01834-4