Abstract

Cognitive and physical effort are typically regarded as costly, but demands for effort also seemingly boost the appeal of prospects under certain conditions. One contextual factor that might influence choices for or against effort is the mix of different types of demand a decision maker encounters in a given environment. In two foraging experiments, participants encountered prospective rewards that required equally long intervals of cognitive effort, physical effort, or unfilled delay. Monetary offers varied per trial, and the two experiments differed in whether the type of effort or delay cost was the same on every trial, or varied across trials. When each participant faced only one type of cost, cognitive effort persistently produced the highest acceptance rate compared to trials with an equivalent period of either physical effort or unfilled delay. We theorized that if cognitive effort were intrinsically rewarding, we would observe the same pattern of preferences when participants foraged for varying cost types in addition to rewards. Contrary to this prediction, in the second experiment, an initially higher acceptance rate for cognitive effort trials disappeared over time amid an overall decline in acceptance rates as participants gained experience with all three conditions. Our results indicate that cognitive demands may reduce the discounting effect of delays, but not because decision makers assign intrinsic value to cognitive effort. Rather, the results suggest that a cognitive effort requirement might influence contextual factors such as subjective delay duration estimates, which can be recalibrated if multiple forms of demand are interleaved.

Introduction

Evaluating whether to pursue a desirable outcome often entails assessing the time and effort it will require. In psychology and economics, demands for both time and effort have traditionally been identified as costs that detract from the net subjective value of rewards. Decision makers tend to prefer low-effort and immediate rewards over larger rewards that demand longer delays and higher effort (Ainslie, 1975; Frederick et al., 2002; Hull, 1943; Kool et al., 2010; Kool & Botvinick, 2018; Shenhav et al., 2017; Treadway et al., 2009; Walton et al., 2006; Westbrook et al., 2013; Westbrook & Braver, 2015). However, people also sometimes appear to favor effortful courses of action in everyday decisions. Experimental and theoretical work has chiefly explained this phenomenon by suggesting a reciprocal relationship between effort and outcomes, whereby exerting effort boosts the perceived value of ensuing outcomes (Hernandez Lallement et al., 2014; Kacelnik & Marsh, 2002; Mochon et al., 2012), and positive outcomes imbue value into the preceding effortful behavior (i.e. learned industriousness, Eisenberger, 1992). These proposals raise questions about what features can make an effortful task attractive in its own right, and how contextual factors can alter its perceived costs (Inzlicht et al., 2018). Understanding what governs the intrinsic costs and rewards of effort could improve our ability to motivate completion of effortful daily activities such as schoolwork or physical exercise.

Recent work has proposed that the subjective costs of delay and cognitive effort are modulated by the value of the alternative ways one’s time and cognitive resources could otherwise be used, implying that decision makers track opportunity costs (Fawcett et al., 2012; Kurzban et al., 2013; Otto & Daw, 2019). Preferences for or against effortful courses of action could therefore depend on the presence of other types of demands and opportunities in the same choice environment (Kool & Botvinick, 2018). For example, exerting high effort for a reward might be aversive, but the same level of work might become appealing if the only alternative is the boredom of an equivalent period of passive waiting (e.g., a person might choose to take a cumbersome way home rather than wait for a delayed train, even if the resulting time of arrival is the same). Effects of context on effort preferences have been illustrated by experiments on charitable giving, in which a high-effort donation method (such as a run) attracted larger donations than a low-effort method (such as a picnic) when either was available alone (the “martyrdom effect”), but the high-effort event was disfavored when both options were offered as alternatives (Olivola & Shafir, 2013).

Standard models in neuroeconomics and behavioral economics formalize the effects of effort or delay on valuation in terms of discounting functions (Ainslie, 1975; Frederick et al., 2002; Green et al., 1994; Kable & Glimcher, 2007; Klein-Flügge et al., 2015; Kool et al., 2010; Westbrook et al., 2013). Discounting functions appear to have a similar form for delay and effort (Massar et al., 2015; Prevost et al., 2010; Seaman et al., 2018), although there is ongoing debate about how the shape of the discounting function might vary across types of demands (Arulpragasam et al., 2018; Białaszek et al., 2017; Chong et al., 2017; Hartmann et al., 2013; Klein-Flügge et al., 2016; Kool & Botvinick, 2018; Prevost et al., 2010) and the degree to which discount rates correlate across cost domains (Seaman et al., 2018; Westbrook et al., 2013). A limitation of the discounting approach is that it does not directly probe how aspects of the decision context, such as opportunity costs, might influence cost evaluation, and it constrains our understanding of behavioral demands to a framework in which such demands necessarily reduce the value of prospects.

Single-choice foraging paradigms, in which decision makers choose to accept or reject individually presented prospects, provide a natural way to investigate contextual influences on cost evaluation (Constantino & Daw, 2015; Garrett & Daw, 2020; Krebs et al., 1977; Mobbs et al., 2018; Stephens & Krebs, 1986). Such paradigms require weighing a prospective reward against the perceived opportunity cost of time. Specifically, foragers must decide whether to spend their limited time harvesting a currently available resource or seeking out more profitable (or less costly) alternatives instead. This could involve, for example, deciding when to leave a patch of depleting richness (e.g., when to quit a job that provides diminishing opportunities for growth), or choosing whether to pursue or forgo offers presented serially (e.g., whether to accept your first job offer or wait for new potential ones). Here we focus on this latter type of foraging, traditionally called prey selection (Krebs et al., 1977), as it provides a means to examine how choices to accept or reject individual prospects are influenced by contextual knowledge about other potentially obtainable alternatives in the same environment.

Foraging has recently attracted considerable scientific interest due to its ecological validity, which is rooted in evolutionarily conserved choice behaviors (Hayden, 2018; Mobbs et al., 2018). The prey selection foraging paradigm allows for the estimation of optimal, reward-maximizing choice patterns on the basis of the opportunity cost of time (Charnov, 1976; Krebs et al., 1977). The opportunity cost depends on the richness of the environment, which can be experimentally manipulated by balancing the amount of time it takes to harvest an offer (handling time) and to search for a new one (travel time). In nature, this balance is exemplified when a squirrel weighs the handling time needed to crack a nut open against the time it takes to travel to a new one. We can then interpret observed deviations from such optimal behavior, and examine how foraging behavior changes when the handling time is filled with cognitive or physical effort in comparison with an unfilled delay (thus disentangling effort from delay duration). In contrast to multi-alternative economic choice paradigms, which often treat choices as independent events, the sequential nature of foraging encourages individuals to consider both focal and global elements of the decision context. Extensive previous work on modeling foraging decisions provides computational tools to formalize relevant decision variables (Stephens & Krebs, 1986).

Across two behavioral experiments, we tested the overarching hypothesis that preferences would vary depending on whether individuals faced a single type or multiple types of behavioral demands (i.e. cognitive effort, physical effort, and delay). Experiment 1 used a between-subject design in which each participant foraged for rewards of varying magnitudes that imposed varying durations of a single form of demand. Experiment 2 used a within-subject design in which individuals foraged for varying forms of demand in addition to varying reward magnitudes. First, we examined whether the discounting effects consistently observed in multi-alternative economic choice paradigms persisted when these options were presented serially, and whether the resulting foraging patterns depended on the demands imposed to obtain rewards. The existence of a well-defined optimal, reward-maximizing choice strategy helped us identify when demands boosted or detracted from the attractiveness of rewards (signified by tendencies to overharvest poor offers or underharvest advantageous offers, respectively). Second, we probed whether the perceived cost (or value) of demands was intrinsic or context-dependent. Varying the level of exposure to multiple types of demands across experiments allowed us to assess whether cost evaluation was dependent on the availability of alternative avenues of action. Further, including cognitive demands and physical demands let us examine whether such effects might apply differently to distinct types of effort.

Our results provided mixed support for our pre-registered hypotheses, which are detailed at https://osf.io/2rsgm/registrations. For Experiment 1, we hypothesized that 1) decision makers would integrate reward and timing information in order to approximate optimal foraging strategies, as reflected by acceptance rates (based on previous reports of human foragers that approximate optimality, albeit with a tendency to overharvest; e.g., Constantino & Daw, 2015; Garrett & Daw, 2020); 2) acceptance rates would be higher for trials that demanded physical effort and cognitive effort than for unfilled delay or effortless engagement (based on pilot data and previous findings from single-alternative choice paradigms; e.g., Olivola & Shafir, 2013; see also Eisenberger, 1992; Inzlicht et al., 2018); and 3) acceptance rates for each type of demand would remain stable over time, and participants would rarely exhibit within-trial reversals (accepting a trial but then quitting without receiving the reward). This last prediction stemmed from the intuition that participants would arrive at a decision strategy that was generally consistent with the task’s stable statistical structure, as seen in previous studies of human foragers. Consistent with our predictions, we found that individuals could approximate optimality by favoring higher rewards and shorter delays. We also found that people in the Cognitive group accepted more trials than optimal, whereas the Physical and Wait groups under-accepted, partially matching our predictions but raising the possibility that cognitive effort might add value to outcomes (or attenuate subjective temporal costs). Finally, acceptance rates (and differences in acceptance rates across demands) were stable within each cost condition, in line with expectations. These findings motivated the design of Experiment 2, in which effort and delay trials were intermixed so that rejecting a trial of one type could potentially lead to a trial of another type.
For Experiment 2, we hypothesized that 1) acceptance rates would once again approximate optimality and would show effects of reward magnitude similar to those seen in Experiment 1; 2) in contrast to Experiment 1, participants would accept unfilled-delay trials at a higher rate than trials with either form of effort (given that people often opt for the easiest way to achieve a desired outcome; e.g., Hull, 1943; Kool et al., 2010; Olivola & Shafir, 2013); and 3) choices would once again be consistent and stable over time. As before, decision makers approached the optimal strategy for the foraging environment, resembling the pattern observed in Experiment 1. Contrary to our second and third hypotheses, we found that acceptance rates were initially higher for cognitive effort than for delay, but this apparent preference disappeared over time as decision makers foraged for types of demands in addition to rewards.

Computational modeling results suggested that the normative predictions of traditional foraging models were insufficient to explain our data. Instead, the data supported a model in which demands imposed a bias on the estimated opportunity cost of time, which converged as individuals gained experience with interleaved types of demands. We hypothesize that this bias arises from modulations of subjective time. For instance, cognitive effort could have become appealing by virtue of compressing the perceived trial time, a perception that could be recalibrated with exposure to alternative demands. A subjective shortening of experienced durations during cognitive effort could be due to the ongoing recruitment of working memory (e.g. preventing individuals from estimating the elapsed time), or because the discrete events within the cognitive task subdivided the time interval. These results expand our understanding of the attractiveness of effort, suggesting potential reward-independent factors that could be leveraged to motivate effort engagement in diverse, everyday scenarios.

Experiment 1: Between-subject Manipulation of Subjective Costs During Foraging

Methods

Participants

The study was preregistered with the Open Science Framework (https://osf.io/2rsgm/registrations). The data and code for all analyses can be found at https://github.com/ctoroserey/Cost_studies. All experimental procedures were approved by the Boston University Institutional Review Board, and written consent was obtained from all participants. For Experiment 1, we recruited individuals until the planned number of 84 eligible participants was reached (58 female, median age = 21, range = 18 - 31; number excluded before reaching goal = 8). The sample size was determined by means of a power analysis (ANOVA), using a significance level of 0.05, power of 0.8, an effect size of f = 0.45 (estimated from a pilot study), and three groups (one for each cost type). The resulting per-group sample was 20, which we increased to 21 in order to match three possible block orders. This power analysis therefore gave us an initial sample size goal of 63 total participants. We then added a fourth group of 21 participants who experienced a minimally effortful condition in order to determine whether effort or mere task engagement was driving our results, bringing the total sample size to n = 84.

Participant data sets could be excluded on the basis of any of the following preregistered criteria: 1) Withdrawal: if the participant did not complete the study (1 participant); 2) Inattentiveness: a catch trial was placed at the end of each experimental block, asking participants to press a key within 3 seconds (time requirement based on pilot study response times). A participant who failed two or more of these checks was excluded and replaced (5 participants). 3) Improbable choice behavior: The task was structured so that the largest reward amount should always be accepted. A participant who quit every trial in at least one block was assumed not to have followed or understood task instructions, or to have disengaged from the task altogether (0 participants). 4) Performance: Participants were forced to travel if they made 2 mistakes in a cognitive effort trial (see task procedures below), or if they gripped below threshold during physical trials. Any participant with more than 30% forced travels was excluded (1 participant).
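The four preregistered exclusion criteria can be expressed as a simple screening function. This is a minimal sketch rather than the authors' code: the `ParticipantSummary` record and its field names are hypothetical, and the denominator for the forced-travel rate (all trials rather than accepted trials) is an assumption, since the text does not specify it.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ParticipantSummary:
    """Hypothetical per-participant summary used only for this illustration."""
    completed_study: bool
    catch_trials_failed: int
    # One list per block; True = trial accepted, False = trial quit.
    block_choices: List[List[bool]] = field(default_factory=list)
    n_forced_travels: int = 0
    n_trials: int = 0


def exclusion_reasons(s: ParticipantSummary) -> List[str]:
    """Return the preregistered criteria a participant violates (empty list = keep)."""
    reasons = []
    # 1) Withdrawal: the participant did not finish the session.
    if not s.completed_study:
        reasons.append("withdrawal")
    # 2) Inattentiveness: two or more failed end-of-block catch trials.
    if s.catch_trials_failed >= 2:
        reasons.append("inattentiveness")
    # 3) Improbable choice behavior: every trial quit in at least one (non-empty) block.
    if any(block and not any(block) for block in s.block_choices):
        reasons.append("improbable choice behavior")
    # 4) Performance: more than 30% forced travels (assumed here: out of all trials).
    if s.n_trials and s.n_forced_travels / s.n_trials > 0.30:
        reasons.append("performance")
    return reasons
```

Returning every violated criterion, rather than stopping at the first, makes it easy to tally exclusions per criterion as reported above.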

Foraging Task

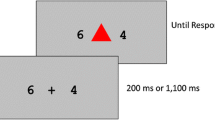

The experimental task was run using PsychoPy 2 (v1.85.1, Peirce et al., 2019) on a MacBook Pro laptop. Participants foraged for monetary rewards in an environment in which each trial required physical effort, cognitive effort, or passive waiting for a set period of time (the “handling time”) in order to obtain the reward (Fig. 1A). Their goal was to maximize their gains within a fixed total amount of playing time (six 7-minute blocks). On each trial, a monetary offer was displayed for 2 s, and participants had the opportunity to expend time and/or effort during the handling time in order to earn it. Upon completion of a trial, participants saw a 2 s feedback message displaying the reward obtained, which was followed by a travel time to the next offer. Alternatively, the participant could quit at any point during the handling time by pressing the spacebar, and immediately start traveling. Participants received their earnings in the form of a real monetary bonus that was added to a fixed $12 participation amount (possible range of task-based earnings = $0 - $15.50, rounded up to the nearest quarter).

Fig. 1 Task design and optimal behavior. A: General foraging trial structure. On each trial, participants were offered an opportunity to earn money by sustaining effort or waiting during the handling time (2, 10, or 14 s). The end of a trial was followed by a travel time (handling and travel times always added up to 16 s). Participants could skip unfavorable trials and immediately start traveling to a potentially better offer. In Experiment 1, the type of demand was fixed per participant and handling time varied per block. In Experiment 2, handling time was fixed at 10 s, but the mix of effort and delay trials changed per block. B: Possible earnings per second for each acceptance threshold (i.e., the smallest amount accepted) for each handling time in Experiment 1. Circles denote the reward-maximizing threshold for each block, which is described in the table. Experiment 2 presented only the 10 s handling time, for which it was optimal to skip all 4-cent offers.

Participants were divided into four groups. Each participant in the first three groups was assigned to one of three types of costs (cognitive effort, physical grip-force effort, or delay), and a fourth group experienced a low-effort physical task that required gripping above a negligible force level. This last condition was added to test the possibility that any differences in acceptances between effort and delay were driven by task engagement alone. Each group was unaware that other cost conditions existed. Participants exerted physical effort by gripping a handheld dynamometer (Biopac Systems, United States) using their dominant hand. Gripping requirements in the physical effort condition were calibrated at 20% of maximum voluntary contraction (acquired at the beginning of the session). Participants could maintain any force level above this threshold to remain in the trial. The low-effort condition was equivalent except that the grip-force threshold was set to 5%. Cognitive effort entailed switching among Stroop, dot motion judgment, and flanker tasks. Task switching has previously been shown to impose subjective costs (Kool et al., 2010). In the Stroop task, one of three color names was displayed on the screen (red, blue or green), with a font color that was either congruent (e.g. the word red presented in red) or incongruent (e.g. the word red presented in blue). Participants had to select the color of the font (i.e., they had to suppress their tendency to read the word). For the motion judgment task, 100 solid white dots moved on the screen. A fraction of these dots moved coherently to the left or right, while the rest moved in random directions (coherence could be either 30% or 40%, uniformly sampled). Participants had to respond with the direction of the coherent movement. In the flanker task, rows of arrowheads pointed either to the left or the right (maximum of 3 rows, 3 to 13 arrowheads per row). 
Participants responded with the direction of the center arrowhead, which could point in the same direction as its neighbors or in the opposite direction. The tasks were configured so that responses always involved a left or right key press (e.g., for Stroop, two colored circles were presented, one on each side of the screen). During the handling time, cognitive tasks and their configurations were randomly sampled, and each was presented for 1 s followed by a 1 s inter-stimulus interval. Participants were asked to respond within each task’s presentation time (i.e., there was a 1 s response deadline). Before the experiment, participants trained in each cognitive task until they correctly performed six consecutive trials of each type. During the handling time, if participants failed to maintain an above-threshold grip in the physical effort task (after a 1 s grace period) or made two mistakes in the cognitive task, they were forced to travel and missed the reward.
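The forced-travel rules for the two effort conditions can be sketched as follows. This is a hedged illustration with hypothetical function names; it assumes that a missed 1 s response deadline counts as a mistake and that the grip grace period is measured from the onset of the handling time, neither of which is stated explicitly in the text.

```python
from typing import Optional, Sequence, Tuple


def cognitive_trial_outcome(correct: Sequence[bool],
                            max_errors: int = 2) -> Tuple[str, Optional[int]]:
    """Classify a cognitive-effort trial from per-stimulus accuracy.

    `correct` holds one entry per task presented during the handling time
    (a missed deadline is assumed to be recorded as False).
    Returns ("forced_travel", index of the deciding error) or ("completed", None).
    """
    errors = 0
    for i, ok in enumerate(correct):
        if not ok:
            errors += 1
            if errors >= max_errors:
                return "forced_travel", i
    return "completed", None


def physical_trial_outcome(samples: Sequence[Tuple[float, float]], mvc: float,
                           fraction: float = 0.20,
                           grace_s: float = 1.0) -> Tuple[str, Optional[float]]:
    """Classify a grip-force trial from (time_s, force) samples.

    The threshold is `fraction` of the participant's maximum voluntary
    contraction (0.20 for the Physical group, 0.05 for the Easy group).
    Samples recorded before `grace_s` seconds are ignored.
    """
    threshold = fraction * mvc
    for t, force in samples:
        if t >= grace_s and force < threshold:
            return "forced_travel", t
    return "completed", None
```

With a 10 s handling time and a 2 s cycle per stimulus (1 s presentation plus 1 s interval), a cognitive trial would contain five stimuli under these assumptions.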

There were three block types, in which handling times of 2, 10, or 14 seconds were paired with travel times of 14, 6, and 2 seconds, respectively. All combinations added up to 16 seconds, meaning that accepted trials took the same amount of time in all conditions, but rejected trials were shorter in environments with shorter travel times. Timing parameters were held constant within each 7-min block. Each block type was presented twice in pseudorandom order (total session length = 42 minutes). Reward amounts varied per trial (4, 8, or 20 cents with equal probability), with the constraint that every reward was presented twice every six trials. This prevented sequences from being dominated by a single amount during any window of time. Timing information was disclosed at the beginning of each block, and each trial’s reward amount was displayed during a 2 s offer window before the trial began. Participants received training prior to the experimental session and were told about all possible timing conditions and reward amounts in order to avoid any biases that could arise through experience-dependent learning (Dundon et al., 2020; Garrett & Daw, 2020). Finally, we encouraged participants to carefully evaluate their options by informing them that accepting all offers would not maximize their rewards.
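One way to satisfy the constraint that every reward is presented twice every six trials is to concatenate independently shuffled six-trial chunks. This sketch assumes non-overlapping chunks and a hypothetical `reward_sequence` helper; the original implementation may differ (for example, in whether chunks restart at block boundaries).

```python
import random
from typing import List

REWARDS = (4, 8, 20)  # cents, each equally likely


def reward_sequence(n_trials: int, rng: random.Random) -> List[int]:
    """Build a reward sequence in which each 6-trial chunk contains every reward twice."""
    seq: List[int] = []
    while len(seq) < n_trials:
        chunk = list(REWARDS) * 2  # e.g. [4, 8, 20, 4, 8, 20]
        rng.shuffle(chunk)         # randomize order within the chunk only
        seq.extend(chunk)
    return seq[:n_trials]


if __name__ == "__main__":
    print(reward_sequence(12, random.Random(0)))
```

Shuffling within chunks guarantees the local balance described above while still making the next offer unpredictable from trial to trial.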

Reward-maximizing Strategy

Foraging theory posits that the decision to accept a delayed reward should depend on the opportunity cost of time to be incurred in obtaining it, which in turn depends on the richness of the environment (Charnov, 1976; Stephens & Krebs, 1986). In this study, the richness of the environment was manipulated by the length of the handling and travel times. Shorter travel times produced richer environments by making it possible to save more time by skipping low-reward trials. Since time combinations were fixed per block, we calculated each block’s optimal accept/reject strategy by computing the expected rate of gain ($g$) from all decision strategies according to the following equation:

$$g = \frac{\sum_i p_i R_i}{\sum_i \left[ T_{\text{offer}} + p_i \left( T_{\text{handling}} + T_{\text{reward}} \right) + T_{\text{travel}} \right]}$$

where $R_i$ and $p_i$ are the reward magnitude and acceptance probability of offer $i$, respectively, $T_{\text{handling}}$ is the handling time for the block, $T_{\text{travel}}$ is the travel time, $T_{\text{offer}}$ is the duration of the offer window (2 s), and $T_{\text{reward}}$ is the duration of the window displaying the reward earned (2 s, only presented for completed trials). This gives the average reward per second attainable in each block as a function of the acceptance threshold (i.e., the lowest amount the participant is willing to accept in a given timing environment). Since an ideal forager should always either accept or reject any given category of prey (Krebs et al., 1977), probabilities other than 0 and 1 were not considered. The resulting possible strategies were to accept only 20 cents, accept 8 and 20 cents, or accept everything. Fig. 1B shows possible earnings per second for each choice strategy, as well as the lowest amount participants should accept in order to maximize their rewards (circled dots). For example, a participant in a 10 s handling-time block should accept 8-cent and 20-cent rewards (and reject 4-cent rewards) to maximize their reward rate; a participant in a 14 s handling-time block should accept only 20-cent rewards; and a participant in a 2 s handling-time block should accept everything. Note that accepting every offer was often detrimental to earnings.
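The block-wise optimum can be checked numerically. The sketch below evaluates the expected rate of gain for each deterministic acceptance threshold, assuming (as described in the task procedure) that a rejected offer costs only the offer window plus the travel time, because quitting at the start of the handling time leads straight to travel, and that the three reward amounts are equally likely. The function and constant names are hypothetical.

```python
REWARDS = (4, 8, 20)   # cents, each offered with equal probability
T_OFFER = 2.0          # s, offer window
T_REWARD = 2.0         # s, feedback window (completed trials only)
BLOCKS = {2: 14, 10: 6, 14: 2}  # handling time (s) -> travel time (s)


def reward_rate(accept, t_handling, t_travel, rewards=REWARDS):
    """Expected cents per second for a vector of acceptance probabilities.

    Every offer costs the offer window and the travel time; accepted offers
    additionally cost the handling time and the feedback window.
    """
    gain = sum(p * r for p, r in zip(accept, rewards))
    time = sum(T_OFFER + t_travel + p * (t_handling + T_REWARD) for p in accept)
    return gain / time


def optimal_threshold(t_handling, t_travel, rewards=REWARDS):
    """Return the reward-maximizing acceptance threshold and the rate for every threshold."""
    rates = {}
    for threshold in rewards:
        # Deterministic policy: accept every offer at or above the threshold.
        accept = [1 if r >= threshold else 0 for r in rewards]
        rates[threshold] = reward_rate(accept, t_handling, t_travel, rewards)
    best = max(rates, key=rates.get)
    return best, rates


if __name__ == "__main__":
    for t_handling, t_travel in BLOCKS.items():
        best, rates = optimal_threshold(t_handling, t_travel)
        print(t_handling, best, {k: round(v, 3) for k, v in rates.items()})
```

Under these assumptions the optimal thresholds come out as 4, 8, and 20 cents for the 2, 10, and 14 s blocks, matching the strategies described above. Accepting everything yields the same rate (32/60 cents per second) in every block, because every accepted trial lasts 20 s regardless of how the 16 s is split between handling and travel.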

Analyses

All analyses were performed in R 3.5.2 (R Core Team, 2017). First, we tested whether decision makers integrated delay and reward information. To address the prediction that participants would be more likely to accept trials with higher rewards and shorter handling times, we fit a mixed-effects logistic regression to predict trial-wise acceptances (using the lme4 package, Bates et al., 2015), with a random intercept for each subject to account for individual differences in overall acceptance tendency. We included continuous regressors for handling time and reward, as well as two covariates that probed the influence of recent history on choices. The first was the number of consecutive unobtained offers prior to a given trial (offers could go unobtained because of quits or forced travels). The second tracked the sum of the reward amounts on the previous n offers. We identified the n that minimized Akaike’s Information Criterion (AIC) among 6 versions of the model (ranging from 2 up to 7 previous trials, beyond which the model failed to converge), and report all coefficients from the winning model, which used the sum of the previous 6 offers.
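The two recent-history covariates can be computed from the trial sequence as shown below. This is a sketch with a hypothetical `history_regressors` helper; it assumes that early trials with fewer than n predecessors simply sum the offers available, and it does not reset counts at block boundaries, details the text leaves unspecified.

```python
from typing import List, Sequence, Tuple


def history_regressors(offers: Sequence[int], obtained: Sequence[bool],
                       n_back: int = 6) -> Tuple[List[int], List[int]]:
    """Build the two recent-history covariates for each trial.

    offers: reward offered on each trial (cents), in presentation order.
    obtained: True if the reward was earned, False after a quit or forced travel.
    Returns (consecutive unobtained offers before each trial,
             sum of the previous `n_back` offers before each trial).
    """
    consecutive_missed, recent_sum = [], []
    run = 0
    for i in range(len(offers)):
        # Both covariates use only trials that preceded trial i.
        consecutive_missed.append(run)
        recent_sum.append(sum(offers[max(0, i - n_back):i]))
        run = 0 if obtained[i] else run + 1
    return consecutive_missed, recent_sum
```

Both lists align with the trial order, so they can be added as columns next to handling time and reward before fitting the mixed-effects model.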

Our foraging task was configured such that both over- and under-accepting were detrimental to total earnings. To confirm this, we fit a general linear model with constant, linear, and quadratic terms to estimate the relationship between the proportion of trials completed (independent variable) and total earnings (dependent variable). A significant negative quadratic coefficient (i.e., an inverted-U relationship) would thus signal that the task statistics operated as expected. Next, to determine the optimality of each group’s decisions, we examined whether each cost type produced a bias to over- or under-accept offers of 4 and 8 cents (assuming that 20-cent offers would always be accepted, given our design). The reward-maximizing strategy was to always reject these offers for handling times of 14 s, to reject 4 cents and accept 8 cents for 10 s, and to accept both for 2 s, yielding a combined optimal acceptance rate of 50% for these offers. We performed a two-sided one-sample chi-squared test of proportions against the null probability of 0.5 for each type of cost (with trials pooled across participants in each group). A significant difference would therefore indicate that participants either over- or under-accepted rewards.
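The one-sample chi-squared test of a proportion has a closed form with 1 degree of freedom, which the stdlib-only sketch below illustrates. It is not the authors' R code: the optional Yates continuity correction mirrors what R's `prop.test` applies by default, and whether the original analysis used it is not stated. The counts in the assertions are hypothetical.

```python
import math
from typing import Tuple


def prop_chisq_test(successes: int, n: int, p0: float = 0.5,
                    correct: bool = False) -> Tuple[float, float]:
    """Two-sided one-sample chi-squared test of a proportion against p0 (1 df)."""
    expected = n * p0
    diff = abs(successes - expected)
    if correct:
        # Yates continuity correction, capped so the difference never goes negative.
        diff = max(0.0, diff - 0.5)
    stat = diff ** 2 / (n * p0 * (1 - p0))
    # Chi-square(1) is the square of a standard normal, so
    # P(X > stat) = P(|Z| > sqrt(stat)) = erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value
```

For example, 60 acceptances out of 100 pooled 4- and 8-cent offers would give a statistic of 4.0 and p ≈ 0.0455 without the correction.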

The analyses above differed slightly from the pre-registered plan. Rather than pooling coefficients from individual logistic regressions, we favored obtaining a single coefficient from a mixed model that accounted for participant-level random effects. The chi-squared test was adopted from the Experiment 2 preregistration, as we found it better suited to the question than the original formulation (i.e. individual tests for each combination of experimental parameters).

Next, we compared acceptance rates across cost conditions. We first performed a one-way ANOVA on the proportion of trials accepted, with group as a factor. We then compared the proportion completed across all 4 groups using non-parametric permutation contrasts (6 pairwise tests). For each test, on each of 5000 permutation iterations, participants’ group labels were randomly shuffled without replacement, and the differences in group mean acceptance rates obtained across iterations formed an empirical null distribution. The observed (unpermuted) group mean difference was then evaluated against this permuted distribution. The same approach was used to test differences in total earnings.
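A single permutation contrast between two groups can be sketched as follows. The text does not state whether the tests were two-sided or how p-values were computed from the null distribution, so the absolute-difference comparison and the add-one correction below are assumptions; the function name is hypothetical.

```python
import random
from statistics import mean
from typing import Sequence, Tuple


def permutation_test(group_a: Sequence[float], group_b: Sequence[float],
                     n_iter: int = 5000, seed: int = 0) -> Tuple[float, float]:
    """Two-sided permutation test for a difference in group means."""
    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_iter):
        # Shuffle group labels (without replacement) by permuting the pooled data.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction so the estimated p-value is never exactly zero.
    p_value = (extreme + 1) / (n_iter + 1)
    return observed, p_value
```

The same function applies unchanged to total earnings by passing per-participant earnings instead of acceptance rates.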

To further examine the effects of handling time, offer amount, and cost condition, we modeled the probability of accepting a trial with a mixed-effects logistic regression. Our a priori model of interest included all three variables as fixed main effects and a random intercept per subject. Cost condition was modeled with three dummy-coded terms, with the fourth condition serving as the reference. We ran three versions of the model with different reference conditions in order to test all pairwise differences among the four cost conditions. We then examined whether this a priori model outperformed both simpler and more complex models, using both AIC and the Bayesian Information Criterion (BIC) to identify the model that minimized the negative log-likelihood while penalizing the addition of parameters. The regressors included in the 8 candidate models were: 1) intercept only; 2) cost condition only; 3) handling time only; 4) offer amount only; 5) handling time and offer amount; 6) cost condition, handling time, and offer amount main effects (a priori model from above); 7) adding a handling-time-by-offer-amount interaction; and 8) all possible two-way interactions. We predicted that model 6 would have the lowest AIC and BIC, as it was the simplest model that included information about all three trial-varying factors (reward amount, timing, and effort). Nested models with similar AIC were statistically compared using an analysis of deviance, in which the reduction in deviance was evaluated against a chi-square distribution with degrees of freedom equal to the difference in the number of parameters between models.
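The model-comparison quantities are standard: AIC = 2k - 2 ln L and BIC = k ln(n) - 2 ln L, and the analysis of deviance compares twice the log-likelihood gain against a chi-square distribution. In R this comparison is typically obtained by calling `anova()` on the fitted models; the stdlib-only sketch below is an illustrative assumption, using closed-form chi-square tail probabilities for integer degrees of freedom. The log-likelihoods in the assertions are hypothetical.

```python
import math
from typing import Tuple


def aic(log_lik: float, k: int) -> float:
    return 2 * k - 2 * log_lik


def bic(log_lik: float, k: int, n_obs: int) -> float:
    return k * math.log(n_obs) - 2 * log_lik


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability of a chi-square distribution with integer df >= 1."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i < df/2} (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= (x / 2) / i
            total += term
        return math.exp(-x / 2) * total
    # Odd df: 1-df tail plus sqrt(2/pi) exp(-x/2) sum_j x^(j - 1/2) / (1*3*...*(2j-1)).
    total = math.erfc(math.sqrt(x / 2))
    term = math.sqrt(2 * x / math.pi) * math.exp(-x / 2)
    for j in range(1, (df + 1) // 2):
        total += term
        term *= x / (2 * j + 1)
    return total


def deviance_test(log_lik_reduced: float, k_reduced: int,
                  log_lik_full: float, k_full: int) -> Tuple[float, float]:
    """Likelihood-ratio (analysis-of-deviance) test for nested models."""
    deviance_reduction = 2 * (log_lik_full - log_lik_reduced)
    return deviance_reduction, chi2_sf(deviance_reduction, k_full - k_reduced)
```

Here k counts all estimated parameters of a model, which for a random-intercept model includes the intercept variance as well as the fixed effects.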

Reward-maximizing prey foragers should either accept or reject offers based on their profitability relative to the available alternatives (Krebs et al., 1977; Stephens & Krebs, 1986). However, decision makers often curtail persistence before reaching a delayed prospect based on a continuous reevaluation of the prospect’s value or a lapse in self control (Ainslie, 1975; McGuire & Kable, 2012, 2015; Mischel & Ebbesen, 1970). Therefore, we examined whether participants were consistent (not quitting trials that were originally accepted) and stable (maintaining similar acceptance rates over time) in their choices. To evaluate consistency, we visually examined survival curves indicating at what point during the handling time participants quit a trial; large numbers of late quit times would indicate a lack of consistency. We interrogated stability by computing each participant’s total proportion of acceptances in the first and second half of the experiment for every block type, comparing the mean proportion of acceptances using paired permutations (5000 iterations). We then tested whether the observed differences in costs were still present in each half of the experiment by applying the winning model from the mixed-effects logistic regression analysis to each half separately.
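A common way to run a paired permutation test is to randomly flip the sign of each participant's first-half versus second-half difference. The text does not describe how pairs were permuted, so the sign-flipping scheme, the two-sided comparison, and the add-one correction below are assumptions, and the function name is hypothetical.

```python
import random
from statistics import mean
from typing import Sequence, Tuple


def paired_permutation_test(first_half: Sequence[float], second_half: Sequence[float],
                            n_iter: int = 5000, seed: int = 0) -> Tuple[float, float]:
    """Sign-flip permutation test on per-participant (second - first) differences."""
    diffs = [b - a for a, b in zip(first_half, second_half)]
    observed = mean(diffs)
    rng = random.Random(seed)
    extreme = 0
    for _ in range(n_iter):
        # Under the null, each participant's half labels are exchangeable.
        flipped = [d if rng.random() < 0.5 else -d for d in diffs]
        if abs(mean(flipped)) >= abs(observed):
            extreme += 1
    return observed, (extreme + 1) / (n_iter + 1)
```

Flipping signs keeps each participant's two halves paired, which preserves between-participant differences in overall acceptance while testing only the within-participant change over time.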

Results

Decision Makers Integrate Delay and Reward Information

Four groups of participants faced with different behavioral demands (physical effort, cognitive effort, low-effort task engagement, or passive delay) chose their preferred strategy to maximize rewards in foraging environments of varying richness. We will refer to the four groups below as Physical, Cognitive, Easy, and Wait. Environmental richness was dictated by the time it took to obtain a reward (the handling time) and the time between trials (the travel time). We hypothesized 1) that participants would approximate reward-maximizing behavior by preferentially accepting higher rewards and shorter delays; 2) that participants confronted with effortful demands would be more likely to accept trials than those faced with passive delay, regardless of handling time and reward amount; and 3) that choices would be stable over time and consistent within trials.

We performed a mixed-effects logistic regression to address the first hypothesis, pooling across the four conditions. Because participants in the effort groups were forced to travel when they failed to perform above threshold (see Methods), we distinguish between acceptances (not explicitly quitting a trial during the handling period) and completions (successfully obtaining a reward). In practice, forced travels were rare: they accounted for 5% of trials on average across participants in the Cognitive group and did not occur at all in the Physical group. Overall, participants accepted an average of 64% of trials (SD = 17%, range = 35% to 100%). In line with our predictions, larger reward amounts significantly increased acceptance probabilities (β = 0.49, SE = 0.01, p < 0.001), whereas longer delays decreased them (β = -0.34, SE = 0.01, p < 0.001). Two additional regressors showed that having missed out on consecutive rewards decreased the probability of acceptance (β = -0.06, SE = 0.03, p < 0.001), and that participants became more selective when recent offer history was richer (β = -0.01, SE = 0, p < 0.001). The counterintuitive reduction in acceptances following consecutive unobtained rewards was driven by the Wait group: with this group removed from the model, the effect was no longer significant (p > 0.1).
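The two history regressors can be computed per participant roughly as follows. This sketch makes two assumptions. First, "consecutive unobtained rewards" counts the preceding trials (rejections or forced travels) since the last obtained reward. Second, the offer-history sum excludes the current offer. The function name is hypothetical, and `n_back` stands for the history length n chosen by AIC comparison.

```python
def history_regressors(offers, obtained, n_back):
    """Per-trial history regressors for one participant.

    offers: offer amounts in trial order
    obtained: booleans, True if that trial's reward was obtained
    Returns (consecutive_misses, recent_offer_sum), one value per trial.
    """
    if len(offers) != len(obtained):
        raise ValueError("offers and obtained must have equal length")
    misses, sums = [], []
    streak = 0
    for i, amount in enumerate(offers):
        misses.append(streak)  # unobtained rewards immediately before trial i
        sums.append(sum(offers[max(0, i - n_back):i]))  # excludes offer i
        streak = 0 if obtained[i] else streak + 1
    return misses, sums
```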

The next set of analyses examined the hypothesis that both Cognitive and Physical groups would uniformly accept more trials than the Wait and Easy groups. Fig. 2A shows that the Cognitive group consistently accepted more offers, which resulted in lower earnings (Fig. 2B). One-way ANOVAs showed that differences among groups were significant both for overall proportion accepted (F(3, 80) = 6.94, p < 0.001) and for total earnings (F(3, 80) = 8.07, p < 0.001). Post-hoc permutations (5000 iterations) comparing mean acceptance rates between every pair of costs confirmed that acceptance rates of the Cognitive group (mean = 0.74, SD = 0.13) were higher than those of the Physical (mean = 0.6, SD = 0.15; p = 0.003, Cohen’s D = 1.02) and Wait groups (mean = 0.55, SD = 0.11; p < 0.001, Cohen’s D = 1.55). They also showed that the Easy group accepted more offers than the Wait group, although responses in the Easy group were more variable (mean Easy = 0.68, SD = 0.19; p = 0.01, Cohen’s D = 0.85) (all other p > 0.05). In line with its higher acceptance rates, the Cognitive group had significantly lower earnings (mean = 13.55, SD = 0.84) than either the Physical group (mean = 14.03, SD = 0.53; p = 0.035, Cohen’s D = 0.69) or the Wait group (mean = 14.5, SD = 0.58; p < 0.001, Cohen’s D = 1.32). In addition, the Wait group earned more than the Physical (p = 0.007, Cohen’s D = 0.85) and Easy groups (mean Easy = 13.94, SD = 0.52; p = 0.002, Cohen’s D = 1.02).

Overall choice behavior in Experiment 1. A: Proportion accepted per cost. B: Total number of dollars earned by each group by the end of the experiment (not including the participation reward). C: The relationship between proportion accepted and total earned. Consistent with the foraging design, participants who over- or under-accepted earned the least. These results suggest that people were willing to pursue cognitively effortful actions at the expense of earnings.

We next examined the optimality of these decisions. The foraging task was configured so that there was a single reward-maximizing strategy per block type, and participants were informed that accepting everything would not maximize their gains. Accordingly, participants who over- or under-accepted earned the least (Fig. 2C; general linear model with quadratic term, F = 25.65, β = -8.99, SE = 2.42, R2 = 0.39, p < 0.001). We performed group-level chi-squared tests of proportions (with trials pooled across participants) to examine whether each cost biased decision makers to under- or over-accept 4 and 8 cent offers, which collectively should have been accepted 50% of the time across the three timing environments. We found significant over-acceptance of these trials by the Cognitive group (proportion accepted = 0.59; χ2 = 73.89, p < 0.001), but significant under-acceptance by the Physical group (proportion accepted = 0.39; χ2 = 123.81, p < 0.001) and the Wait group (proportion accepted = 0.31; χ2 = 359.13, p < 0.001), with no significant deviation for the Easy group. Together, the findings discussed so far indicate that although participants reacted appropriately to reward and timing contingencies, departures from optimality were systematically influenced by the form of cost at stake. The findings raise the possibility that cognitive effort can boost the value of an offer, as reflected by higher acceptance rates at the expense of earnings.
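The group-level test against the 50% benchmark can be written out directly. This sketch assumes a Pearson chi-squared goodness-of-fit test with one degree of freedom and no continuity correction. R's `prop.test`, for example, applies Yates' correction by default, so its values would differ slightly. `chisq_proportion_test` is a hypothetical helper name.

```python
import math


def chisq_proportion_test(successes, n, p0=0.5):
    """One-sample chi-squared test of a proportion (df = 1, no correction)."""
    if n <= 0 or not 0 < p0 < 1:
        raise ValueError("need n > 0 and 0 < p0 < 1")
    expected_yes = n * p0
    expected_no = n * (1 - p0)
    chi2 = ((successes - expected_yes) ** 2 / expected_yes
            + ((n - successes) - expected_no) ** 2 / expected_no)
    # Upper tail of chi-squared with 1 df: P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value
```

Here `successes` would be the number of accepted 4- and 8-cent offers pooled across a group's participants, and `n` the total number of such offers.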

We next probed whether these results held uniformly across reward and timing parameter combinations. Fig. 3A shows the mean proportion of acceptances (± SEM) per combination of handling time, reward, and cost type, with reward-maximizing acceptance rates depicted by the gray circles. Qualitatively, the figure is consistent with two important predictions. First, participants adapted to the richness of each timing block, gravitating towards optimality regardless of the cost they faced. Second, effects of cost type were consistent across the three timing conditions, and acceptances were uniformly highest for the Cognitive group. We tested these observations with a mixed-effects logistic regression model, which estimated the probability of accepting an offer as a function of handling time, reward amount, and cost type (see Methods for details). The model showed significant main effects of handling time (β = -0.34, SE = 0.01, p < 0.001) and offer amount (β = 0.49, SE = 0.01, p < 0.001). Comparisons among all conditions are shown in Fig. 3B. The Cognitive group was significantly more likely to accept offers than the Physical group (β = 1.5, p = 0.005) or the Wait group (β = 2.11, p < 0.001), but not the Easy group.

Comparison of acceptance rates across cost types for each combination of reward amount and handling time. A: Mean acceptance rate in each group for every combination of handling time and reward (with SEM). Grey points indicate the reward-maximizing acceptance rate for each combination, which was always either 0 or 1. B: Matrix of coefficients that resulted from switching the reference cost condition in a mixed-effects logistic regression model. Each element represents how much more likely a group was to accept offers compared to the reference condition (row). White asterisks mark significant comparisons (one, two, and three asterisks correspond to p < 0.05, p < 0.01, and p < 0.001, respectively). C: AIC and BIC values for a mixed-effects model comparison, ranging from a baseline intercept-only model to a full-interaction model. Both metrics yielded similar values across models.

We next asked whether these observations were better explained by simpler or more complex regression models. Fig. 3C shows AIC and BIC values for models of increasing complexity, starting with an intercept-only configuration (see Methods for details). Here we focus on AIC, as both metrics yielded comparable results. As predicted, the a priori model with all main effects performed better than those relying on a single parameter (a priori model pseudo R2 = 0.82), as well as the model with only main effects for handling time and reward amount (AICa-priori = 3416, AIChandling/reward = 3427, χ2(3) = 16.87, p < 0.001).

Contrary to our expectations, the a priori model was outperformed by models that added an interaction between handling and reward (AICHR_interaction = 3394, χ2(1) = 24.16, p < 0.001) as well as one that considered all possible interactions (AICall_interactions = 3300, χ2(7) = 129.58, p < 0.001). However, upon examination, neither of these models offered further interpretable insight into the observed differences across costs. The overall pattern of results supported the hypotheses that handling and reward amounts were integrated in the expected fashion, and that the rate of acceptances was affected by the demand faced. The results offered only partial support for the hypothesis that effort would induce higher acceptance rates: higher acceptance rates were observed for cognitive effort but not for physical effort.
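Because AIC = 2k - 2 log L, the analysis-of-deviance statistic for two nested models can be recovered from their AIC values as the AIC difference plus twice the number of added parameters. The pure-Python sketch below does this and computes the chi-squared upper-tail probability in closed form for integer degrees of freedom. The function names are ours, and the sketch is a cross-check rather than the original analysis code.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-squared variable, integer df >= 1."""
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{j < df/2} (x/2)^j / j!
        term, total = 1.0, 1.0
        for j in range(1, df // 2):
            term *= half / j
            total += term
        return math.exp(-half) * total
    # Odd df: erfc term plus half-integer gamma terms.
    total = math.erfc(math.sqrt(half))
    for j in range(1, (df - 1) // 2 + 1):
        total += math.exp(-half) * half ** (j - 0.5) / math.gamma(j + 0.5)
    return total


def lr_test_from_aic(aic_reduced, aic_full, extra_params):
    """Likelihood-ratio statistic and p-value implied by two nested models' AICs."""
    stat = aic_reduced - aic_full + 2 * extra_params
    return stat, chi2_sf(stat, extra_params)
```

For the handling/reward-only model versus the a priori model, 3427 - 3416 + 2 × 3 gives a statistic of about 17, close to the reported χ2(3) = 16.87; the small gap is consistent with the AIC values having been rounded.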

Consistency and Stability of Choices

Next, we tested the hypothesis that foragers would exhibit within-trial consistency and across-trial stability in their choices. Participants were deemed consistent if they tended not to quit after engaging in a trial (that is, if they pressed the spacebar to reject a trial either quickly or not at all). To examine this, we plotted survival curves of trial rejection times across all participants (Fig. 4A), with black crosses marking censored observations, either completed trials (at 2 s, 10 s, and 14 s) or forced travels. These curves show that most decisions to quit happened within the first second of the handling time, and that participants rarely quit afterwards (only 4% of quits occurred later than 1 s). In the Physical and Easy groups, participants sometimes quit by letting the initial 1 s grace period expire without gripping (rather than by pressing the spacebar); such trials were coded as quits rather than as forced travels. Plotting the quitting time distributions across blocks for the first 1 s of the handling time (ECDFs in Fig. 4B) showed that participants in the Wait and Cognitive groups responded faster as the experiment progressed. These response-time patterns suggested that participants made their choices decisively.
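Survival curves with censored observations are typically estimated with the Kaplan-Meier product-limit estimator. Assuming that estimator underlies the curves (the text does not name it), a minimal sketch treats quits as events and completed trials or forced travels as censored observations. The function name is hypothetical.

```python
def kaplan_meier(times, quit_flags):
    """Kaplan-Meier survival estimate for within-trial quitting.

    times: time (s) at which each trial ended
    quit_flags: True if the trial ended with a quit (event); False if it was
        censored (completed trial or forced travel)
    Returns a list of (time, survival) pairs at each distinct quit time.
    """
    data = sorted(zip(times, quit_flags))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        quits = censored = 0
        # Group all trials that ended at the same time point.
        while i < len(data) and data[i][0] == t:
            if data[i][1]:
                quits += 1
            else:
                censored += 1
            i += 1
        if quits:
            # Trials censored at t still count as at risk at t.
            survival *= 1.0 - quits / at_risk
            curve.append((t, survival))
        at_risk -= quits + censored
    return curve
```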

Choice consistency and stability in Experiment 1. A: Survival curve plots of trial rejection times. B: Distributions of trial rejection times within the first 1 s of the handling time. C: Acceptance rate in the second half of the session as a function of acceptance rate in the first half of the session. Dashed lines indicate the mean for each group. D: Proportion accepted over time per group.

Stability was defined as the maintenance of similar acceptance rates throughout the experimental session. The scatterplot in Fig. 4C shows the overall proportion accepted by each participant before and after the midpoint of the session. Paired permutations showed no change in acceptance rates for the Wait and Easy groups (p > 0.05), but we found significantly lower acceptance rates in the second half of the session for the Physical group (p = 0.024, Cohen’s D = 0.27) and the Cognitive group (p < 0.001, Cohen’s D = 0.56). Fig. 5A shows that the reduction in acceptance rates in effort groups over time was steeper for longer handling times, a hallmark of effortful demands (Treadway et al., 2009). We then replicated the mixed-effects logistic regression from the previous section separately for each half of the session, which confirmed that differences among demands were mostly stable (Fig. 5B; a significant difference between Physical and Easy groups during the second half of the session was due to the decrease in acceptance rates by the Physical group). Together, the consistency and stability of behavior suggested that decision makers had a clear representation of the environment.

Acceptance behavior over time in Experiment 1. A: Offer acceptance rates per handling time, reward amount, cost type, and session half, showing that effortful demands (Cognitive and Physical) were less frequently accepted over time, especially for the longest handling time (14 s). B: Mixed-effects logistic regression model coefficients denoting the comparison of acceptance rates across cost conditions for each half of the experimental session separately (mirrored along the diagonal). Even though acceptance rates decreased in the effort groups, the differences in acceptance rates across cost conditions were preserved

Experiment 2: Within-subject Comparison of Costs

Experiment 1 demonstrated that human decision makers could appropriately integrate reward and timing information to make single-alternative accept/reject decisions in a foraging environment. In addition, acceptance rates were higher in a group of participants for whom rewards were associated with demands for cognitive effort than in groups for whom rewards were associated with physical effort or mere delay. One interpretation of Experiment 1 is that cognitively effortful trials were intrinsically rewarding, either because participants placed value on effort per se or because they found the cognitive tasks more interesting than a boring, unfilled delay or hand-grip task. An alternative interpretation is that cognitive effort led to higher acceptance rates by altering the task context in a more general way, such as by influencing the perception of temporal durations or opportunity costs. We reasoned that if the effortful tasks were truly preferred, participants ought to continue preferentially accepting cognitive effort trials in an environment in which effort and non-effort trials were randomly intermixed within-subject, where rejecting a delay trial would potentially lead to an opportunity to accept a subsequent effort trial. On the other hand, if cognitive effort were not preferred, interleaving different types of demand in the same environment might reveal a pattern of effort avoidance, given that participants now could effectively substitute low-effort trials for high-effort trials. Experiment 2 tested which types of trials decision makers would preferentially accept when they were allowed to forage for different types of demand in addition to different sizes of rewards. The objective was to address how the presence of multiple alternative forms of demand in the same choice environment would impact the subjectively assessed costs of effort and delay.

Methods

Participants

We collected data from a new sample of 48 participants (39 female, median age = 21, range = 18 - 36; number excluded before reaching goal = 6). Sample size was again determined by a power analysis for a repeated-measures ANOVA, using a significance level of 0.05, power of 0.8, an anticipated effect size of f = 0.5, and four repeated measurements (one for each demand-type condition; see below for details). The resulting sample size was 45, which we increased to 48 to counterbalance block order. We excluded participants using the same criteria as in Experiment 1 (4 participants were excluded because they failed the inter-block attention checks).

Within-participant Manipulation of Cost Type

The original between-subjects task from Experiment 1 was modified so that participants foraged for cost types in addition to rewards. Every participant experienced all forms of cost (delay, physical effort, and cognitive effort). There were two block types, in which delay trials were interspersed with either physical-effort or cognitive-effort trials. This setup prevented participants from having to rapidly switch between response modalities across trials (i.e. keyboard and handgrip). Each block combination (Physical/Wait and Cognitive/Wait) was experienced three times by each participant in 7-minute-long, interleaved blocks, for a total session duration of 42 minutes. Half of the participants experienced a block sequence that started with the Physical/Wait block and the other half experienced a sequence that started with the Cognitive/Wait block. Timing parameters were held fixed throughout the experiment and were matched to the middle condition from Experiment 1 (10 s handling time and 6 s travel time), and participants were told before the experiment began that this timing combination would remain constant. The upcoming effort type was disclosed at the beginning of each block, and reward/cost offers were displayed at the start of each trial (e.g. “8 cents for physical effort”). Unlike the previous experiment, participants could express their decision to quit by pressing the spacebar during the offer window (not only during the handling time), in which case the travel time would begin immediately at the end of the 2 s offer window. Participants were trained in each type of demand until they reached the same criteria as in Experiment 1.

Analyses

The analytical pipeline was mostly preserved from Experiment 1. We first tested whether decision makers integrated reward information. We performed a mixed-effects logistic regression with regressors for offer amount, number of consecutive offer misses, and the sum of the n previously observed offers (we identified n by comparing the AIC of different history lengths). We then confirmed that under- and over-acceptance were detrimental to total earnings by fitting the same quadratic linear model discussed above. The optimal strategy was to reject 4-cent offers and accept 8-cent offers, yielding an ideal overall acceptance rate of 50% (not counting 20-cent offers, which should have always been accepted). For these trials, we performed a two-sided one-sample chi-squared test of proportions against the null probability of 0.5 for each cost condition.
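The reward-maximizing policy for a fixed timing environment can be found by enumerating which offer amounts to accept and picking the set with the highest long-run rate of earnings (the prey-selection logic of optimal foraging theory). This sketch assumes equally frequent 4-, 8-, and 20-cent offers and counts only travel and handling time per trial; both are simplifying assumptions, and the helper names are hypothetical.

```python
from itertools import combinations


def reward_rate(accepted, offers, handling, travel):
    """Long-run earnings per second when accepting only amounts in `accepted`.

    offers: dict mapping offer amount -> probability of that offer
    handling, travel: durations in seconds; handling is spent only on accepted trials
    """
    p_accept = sum(p for r, p in offers.items() if r in accepted)
    gain = sum(r * p for r, p in offers.items() if r in accepted)
    return gain / (travel + p_accept * handling)


def best_policy(offers, handling, travel):
    """Return the set of offer amounts whose acceptance maximizes reward rate."""
    amounts = sorted(offers)
    candidates = [set(c) for k in range(1, len(amounts) + 1)
                  for c in combinations(amounts, k)]
    return max(candidates, key=lambda s: reward_rate(s, offers, handling, travel))
```

With the Experiment 2 timing (10 s handling, 6 s travel), the best set is {8, 20}, with a rate of 28/38 ≈ 0.74 cents per second. The opportunity cost over a 10 s handling time (about 7.4 cents) lies between 4 and 8 cents, matching the strategy of rejecting 4-cent and accepting 8-cent offers. Adding a 2 s offer window to `travel` leaves the chosen set unchanged in this toy case.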

To compare acceptance rates among cost conditions, we first estimated acceptance probabilities using a mixed-effects logistic regression. The model included cost condition and reward amount as fixed main effects, and participant as a random intercept (handling time was not modeled, as participants experienced a single timing combination). There were four cost conditions (the two effort conditions and the delay condition paired with each effort type), which we coded with three categorical terms relative to a reference condition. We ran three versions of the model with different reference conditions in order to test all relevant pairwise differences among the four cost conditions. We compared the a priori model to alternative parameterizations using AIC and BIC, varying model complexity as follows: 1) intercept only; 2) cost condition only; 3) reward only; 4) cost condition and reward main effects (a priori model); and 5) adding a two-way interaction of cost condition and reward. We predicted that model 4 would show the last appreciable decrease in the negative log-likelihood, as it was the simplest model that contained information about both reward and effort. Models with similar AIC were formally compared using the analysis-of-deviance approach from Experiment 1.

Next, we probed within-trial consistency and across-trial stability of choice behavior. First, we visualized the number of quit responses during the handling time with a survival curve, and plotted the quit response time distribution as a function of block to examine choice consistency over time. Next, we computed the proportion of quits that occurred during the choice window versus during the handling time. Earlier quits were taken to signify greater consistency. We then computed each participant’s total acceptance rate in the first two and last two blocks, and compared them using a paired permutation analysis (5000 iterations). This analysis excluded the third and fourth blocks because, with the alternating, counterbalanced sequence of block types, splitting the session into its first three and last three blocks would have given individual participants unequal numbers of each block type in each half.

Results

Decision Makers Integrate Reward Information

In Experiment 2, we tested decision makers’ preferences for cognitive effort or physical effort relative to unfilled delay. We modified the prey-selection foraging task so that each participant experienced every type of demand in blocks that interleaved wait trials with either physical or cognitive effort (with a fixed 10 s handling time and 6 s travel time, to allow for comparisons between experiments). We separately analyzed Wait trials from the two block types, labeling the two sets of Wait trials according to the type of effort with which they were paired (“Wait-C” for cognitive, and “Wait-P” for physical). We hypothesized that 1) reward offer amounts would influence decisions in the same way as in Experiment 1; 2) interleaving effort and delay would produce higher acceptance rates for wait trials than for cognitive or physical effort trials, in contrast to the pattern observed in Experiment 1; and 3) choice patterns would remain stable over time and consistent within trials.

We first tested the hypothesis that participants would successfully integrate reward information. A mixed-effects logistic regression showed that larger reward amounts significantly increased acceptance probabilities (β = 0.762, SE = 0.024, p < 0.001), and that participants became more selective if they had recently observed large offers (β = -0.015, SE = 0.002, p < 0.001). Model comparisons suggested that participants were influenced by the 7 most recent offers. Unlike Experiment 1, participants were unaffected by the preceding number of consecutive unobtained rewards (p = 0.434). As before, a linear model predicting earnings showed that participants who completed too few or too many trials earned the least, in line with the experimental design (Fig. 6A; general linear model with quadratic term, F = 11.21, β = -5.53, SE = 2.71, R2 = 0.33, p = 0.047). When assessing the optimality of acceptances for 4 and 8 cents (99% of 20-cent offers were correctly accepted), chi-squared tests against the optimal rate of 50% showed that participants under-accepted physical effort trials (proportion accepted = 0.46, χ2 = 6.53, p = 0.01; all other p > 0.05). Despite this deviation, participants earned a similar total amount per cost condition on average (mean Cognitive = 3.13, SD = 0.52; mean Wait-C = 3.56, SD = 0.27; mean Physical = 3.44, SD = 0.25; mean Wait-P = 3.59, SD = 0.24). These results convey that, in contrast to Experiment 1, participants were similarly successful across the different cost conditions.
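The inverted-U relationship between completions (or acceptances) and earnings can be checked with an ordinary least-squares fit that includes a quadratic term: a negative quadratic coefficient means earnings peak at an intermediate level. Below is a dependency-free sketch. The name `fit_quadratic` is hypothetical, and the function returns coefficients only, without the F, SE, or R2 statistics reported above.

```python
def fit_quadratic(x, y):
    """Ordinary least-squares fit of y = b0 + b1*x + b2*x**2; returns (b0, b1, b2)."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need at least three paired observations")
    # Augmented normal equations (X^T X | X^T y) for design columns 1, x, x**2.
    aug = [
        [sum(xi ** (r + c) for xi in x) for c in range(3)]
        + [sum(yi * xi ** r for xi, yi in zip(x, y))]
        for r in range(3)
    ]
    # Gaussian elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, 3):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, 4):
                aug[r][c] -= factor * aug[col][c]
    # Back substitution.
    b = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):
        acc = sum(aug[r][c] * b[c] for c in range(r + 1, 3))
        b[r] = (aug[r][3] - acc) / aug[r][r]
    return tuple(b)
```

For example, data generated from y = 1 + 2x - 3x² return coefficients close to (1, 2, -3), and the implied peak lies at -b1 / (2 b2) = 1/3.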

Within-subject acceptance behavior. A: Quadratic relationship between trial completions and total earnings. B: Proportion of acceptances of each reward per cost condition. C: Matrix of mixed-effects coefficients showing comparisons among cost conditions (while controlling for reward amount). Cognitive and Physical trials were compared to one another and were each compared to Wait trials from the same block (Wait-C and Wait-P, respectively). We omitted comparisons to Wait trials in the opposite block type (gray cells), which have little interpretability and were not addressed in our predictions. Asterisks mark statistically significant comparisons. D: AIC and BIC values for models of increasing complexity (AllMain refers to the a priori model which included main effects of cost condition and reward offer amount.)

Next, we addressed the hypothesis that passive waiting trials would be preferentially accepted relative to effortful trials, reflecting a pattern of effort minimization. Even though the overall reward acceptance pattern was similar to the 10 s handling time condition in Experiment 1 (Fig. 6B), differences among cost conditions were less evident. Moreover, these differences contradicted our predictions, as cognitive effort continued to be accepted at the highest rate. A mixed effects logistic regression confirmed once again that increases in offer amount made acceptances significantly more likely (β = 0.76, SE = 0.02, p < 0.001), but that the only significant difference across cost conditions was a higher acceptance rate for cognitive-effort offers than physical-effort offers (Fig. 6C; β = 0.38, p < 0.001). Neither Cognitive trials nor Physical trials had significantly different acceptance rates from the Wait trials that were interleaved in the same block (Cognitive versus Wait-C: β = 0.06, p = 0.565; Physical versus Wait-P: β = -0.18, p = 0.11). Model comparisons showed that a model with all main effects explained a large portion of the variance (R2 = 0.82), and there were similar AIC and BIC values for any model including reward amount (Fig. 6D). An analysis of deviance among the three models showed that adding cost condition to reward amount significantly improved the fit (AICreward = 1859, AICa-priori = 1852, χ2(3) = 13.24, p = 0.004) and that including a term for the interaction between reward amount and cost condition improved the fit still further (AICInteraction = 1830, χ2(3) = 28.34, p < 0.001). These results contradicted our hypothesis that Wait trials would be favored, but also did not indicate participants had a straightforward preference for Cognitive trials relative to the corresponding Wait-C trials. Instead, cost condition had only relatively small effects on acceptance rates when cost conditions varied within-participant. 
To shed further light on the dynamics underlying the small overall effect, we next examined how it varied over the course of the experimental session.

Consistency and Stability of Choices

Consistent with Experiment 1, a survival analysis showed that participants made most of their choices quickly within the offer window (Fig. 7A). The median percentage of quits during the handling time was 2.61% (SD = 7.67), with only 2 participants quitting over 20% of trials during the handling time. Cumulative quitting time distributions showed that responses during the offer window became faster over time (Fig. 7B). Once in the handling time, the overall percentage of forced travels was low for both Cognitive trials (median = 5.99%, SD = 9.59) and Physical trials (median = 0%, SD = 3.62). The results suggest that decision makers were consistent in their adopted strategies, and were able to perform the tasks well.

Choice consistency and stability in Experiment 2. A: Survival curves showing trial rejection times for each cost condition. B: Distribution of trial rejection times within the offer window. C: Acceptance rate in the last two blocks as a function of acceptance rate in the first two blocks. Dashed lines indicate the mean for each cost. D: Acceptance rates in the three successive occurrences of each block type

However, contrary to our prediction that choices would reflect a stable cognitive representation of task structure, participants accepted significantly fewer trials in the second half of the experiment, regardless of cost condition (paired permutations; Cognitive: pre mean = 0.76 (SD = 0.17), post mean = 0.65 (SD = 0.21), p < 0.001, Cohen’s D = 0.6; Physical: pre mean = 0.71 (SD = 0.2), post mean = 0.65 (SD = 0.21), p = 0.011, Cohen’s D = 0.27; Wait-C: pre mean = 0.73 (SD = 0.2), post mean = 0.66 (SD = 0.22), p = 0.004, Cohen’s D = 0.31; Wait-P: pre mean = 0.72 (SD = 0.2), post mean = 0.66 (SD = 0.22), p = 0.01, Cohen’s D = 0.3; Fig. 8). The reduction in acceptances was not driven by a performance decline, as the proportion of forced travels did not differ significantly between the first and second half of the experiment (paired permutation with 5000 iterations, p = 0.141). Notably, the pattern of choices from the first part of the experiment resembled that from the corresponding 10 s handling-time condition in Experiment 1 (compare the middle panel of Fig. 5A with Fig. 8A). Mixed-effects logistic regressions performed on each half separately confirmed that the relative preference for cognitive effort seen previously was preserved at the beginning of the experiment (Fig. 8B, lower triangle), but that it became undetectable over time amid an overall decline in acceptance rates as participants gained experience with cognitive effort, physical effort, and passive waiting trials (upper triangle). This fading preference for cognitive effort was observed regardless of which block type participants experienced first, although its magnitude appeared to be reduced for those who experienced the Physical/Wait block first (Fig. 8C).

Acceptance rates over time in Experiment 2. A: Proportion accepted in the first two and last two blocks of the experiment. An initial preference for cognitive effort trials faded amid a global decrease in acceptance rates. B: Mixed effects coefficient matrix for early and late trials separately. The pattern for early trials – but not for late trials – resembled what was observed in Experiment 1 (Fig. 3B). C: Results presented separately for participants who experienced the Cognitive/Wait block type first or who experienced the Physical/Wait block type first

Together, our findings show that the stable preferential acceptance of cognitive effort trials that we observed during single-demand foraging slowly faded if alternative demands were introduced. This finding raises the question of how features of the cognitive task might be re-evaluated as a function of other experiences in the same decision context. We probed this question by fitting a series of computational models to data from both Experiments 1 and 2.

Computational Modeling of Foraging Behavior

We developed a computational model to identify cognitive operations that could explain the complex patterns observed across Experiments 1 and 2. We identified four key aspects of the behavioral results that an adequate computational model should be able to capture: 1) modest under- and over-harvesting of offers relative to the optimal strategy as a function of reward offer amount (e.g. Fig. 3A); 2) dependence of choices on recent offer history; 3) higher acceptance rates if all reward offers were associated with cognitive effort (Experiment 1); and 4) gradual disappearance of the tendency to over-accept cognitive effort trials if other forms of demand were interleaved in the same environment (Experiment 2). These conditions excluded a number of potential explanatory candidates that were not modeled, such as learned industriousness, boredom, fatigue, or asymmetric learning of environmental richness (see Discussion).

Methods

Model Fitting and Model Comparison Procedures

For each model, both population-level and individual subject-level parameters were fit simultaneously using an Expectation-Maximization algorithm (Huys et al., 2011, 2012). Briefly, it was assumed that, at the population level, subject-level values for each parameter were distributed according to a Gaussian distribution, defined by a mean and standard deviation. Accordingly, point estimates for the individual subject parameters were found via maximum a posteriori (MAP) estimation using the population distribution for each parameter as the priors, and the variance in each individual subject parameter estimate was calculated by taking the inverse of the Hessian matrix at the MAP estimate. Next, the mean of the prior was updated by taking the weighted mean of individual subject estimates, and the variance of the prior was updated by taking the weighted variance across the parameter estimates of individual subjects. This process was repeated until the mean and variance of the prior for all parameters converged (a change of less than 0.1% across successive iterations). Full mathematical details of the algorithm can be found in Huys et al. (2011, 2012), and the algorithm was implemented using a custom-written R package, GaussExMax, available on GitHub (github.com/gkane26/GaussExMax).
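A sketch of the prior-update (M) step and the convergence check for a single parameter. It assumes the common Gaussian-prior update in which μ is the mean of the subjects' MAP estimates m_i and σ² = mean(m_i² + v_i) - μ², where v_i is each subject's inverse-Hessian variance. This uses equal subject weights, with estimation uncertainty entering only through v_i. Whether GaussExMax weights subjects this way is an assumption, and the function names are hypothetical.

```python
def m_step(estimates, variances):
    """Update the Gaussian prior for one parameter from subject-level MAP fits.

    estimates: MAP estimates m_i for each subject
    variances: posterior variances v_i (inverse Hessian at each m_i)
    Returns (prior_mean, prior_variance).
    """
    n = len(estimates)
    mu = sum(estimates) / n
    # Second moment includes each subject's posterior uncertainty.
    second_moment = sum(m * m + v for m, v in zip(estimates, variances)) / n
    return mu, second_moment - mu * mu


def converged(old, new, tol=0.001):
    """True if every prior moment changed by at most 0.1% relative to its old value."""
    return all(abs(n - o) <= tol * abs(o) for o, n in zip(old, new))
```

In a full fit, the E-step would recompute each subject's MAP estimate and Hessian under the updated prior, and the two steps would alternate until `converged` returns True.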

Model comparison was performed by calculating the Bayesian Information Criterion at the group level (integrated BIC or iBIC; Huys et al., 2011, 2012; Kane et al., 2019). iBIC penalizes the likelihood of the data given the model, \(p(d \mid \mathrm{model})\), for model flexibility (the number of parameters, k), and the size of the penalty depends on the number of observations o:

\[ \mathrm{iBIC} = -2 \log p(d \mid \mathrm{model}) + k \log o \]

A Laplace approximation was used to find the log marginal likelihood, the likelihood of the data for each subject given the population level distribution over each parameter (Huys et al., 2011; Huys et al., 2012; Kane et al., 2019).
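The two quantities in this procedure can be sketched as follows. The Laplace step approximates each subject's log marginal likelihood from the log joint density (likelihood times population prior) at the mode and the determinant of the Hessian of the negative log joint there. iBIC then sums these over subjects and adds the k log o penalty. The function names are hypothetical; in practice the Hessian would come from the MAP fit.

```python
import math


def laplace_log_marginal(log_joint_at_mode, neg_hessian_det, dim):
    """Laplace approximation to log of the integral of p(d | h) p(h | population) dh.

    log_joint_at_mode: log p(d | h*) + log p(h* | population) at the mode h*
    neg_hessian_det: determinant of the Hessian of the negative log joint at h*
    dim: number of subject-level parameters
    """
    return (log_joint_at_mode
            + 0.5 * dim * math.log(2 * math.pi)
            - 0.5 * math.log(neg_hessian_det))


def ibic(subject_log_marginals, k, o):
    """Integrated BIC: -2 * summed log marginal likelihood + k * log(o)."""
    return -2.0 * sum(subject_log_marginals) + k * math.log(o)
```

For a Gaussian-shaped integrand such as exp(-(h - 1)²/2), the Laplace approximation is exact: with a log joint of 0 at the mode and a Hessian of 1, it returns log √(2π).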

Model Definitions

We began by fitting a base model derived from the normative predictions of the Marginal Value Theorem (MVT, Charnov, 1976), which states that a delayed reward should be accepted if its magnitude surpasses the opportunity cost of time incurred in obtaining it (see Reward-maximizing Strategy for details). This evaluation rule can be expressed as

\[ R_i > \gamma \, T_{\mathrm{handling}_i} \]

such that the reward \(R_i\) of trial i is favorable if it exceeds what one could expect to earn during the handling time \(T_{\mathrm{handling}_i}\) at the environment’s average rate of earnings per second, γ (the opportunity cost), with high values of γ promoting selectivity. We fit this and all subsequent models using a softmax decision rule to determine the probability of accepting the offer on a given trial

The value of γ in this base model was computed as an individual’s empirical rate of earnings by the time of each offer, with the free temperature parameter β indexing decision noise. Differences among the between-subject cost conditions in Experiment 1 could then be due to the impact of each cost on decision noise, which could have also driven the gradual convergence of acceptance rates in Experiment 2.
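The base model can be sketched as follows. The sketch assumes that the softmax takes a logistic form over the MVT value difference \(R_i - \gamma T_{handling_i}\), with β acting as a temperature that divides the value, so larger β means noisier choices. That parameterization is an assumption; β could equally be defined as an inverse temperature. The empirical γ is computed as cumulative earnings divided by elapsed time before each offer. The input lists and the starting value `gamma0` are hypothetical.

```python
import math


def p_accept(reward, handling_time, gamma, beta):
    """Probability of accepting an offer under a logistic softmax (assumed form).

    The MVT value of accepting is R_i - gamma * T_handling_i; beta is treated as a
    temperature, so larger beta flattens the curve (noisier choices).
    """
    z = (reward - gamma * handling_time) / beta
    if z >= 0:                       # numerically stable logistic for large |z|
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def empirical_gammas(earnings, durations, gamma0=0.0):
    """Empirical rate of earnings (reward per second) available at each offer.

    earnings[i] and durations[i] are what trial i yielded and how long it lasted
    (hypothetical inputs); before any time has elapsed the rate defaults to gamma0.
    """
    gammas, total_r, total_t = [], 0.0, 0.0
    for r, t in zip(earnings, durations):
        gammas.append(total_r / total_t if total_t > 0 else gamma0)
        total_r += r
        total_t += t
    return gammas
```

For example, with γ = 0.55 cents per second and a hypothetical 10-second handling time, an offer of 5.5 cents sits exactly at the opportunity cost, so `p_accept(5.5, 10, 0.55, 1.0)` is approximately 0.5.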

A second possibility is that individuals updated the estimate of γ at different rates when faced with different demands. Previous accounts have demonstrated biases that can affect learning of the opportunity cost in foraging paradigms (Dundon et al., 2020; Garrett & Daw, 2020). We therefore tested a second candidate model, an adaptive model that allowed γ to evolve over time by adapting a learning rule from Constantino and Daw (2015)

where \(A_i\) is an acceptance indicator (0 or 1) and \(\tau_i\) is the duration of the trial, with the handling time counted only for accepted trials. Higher values of the learning rate α (ranging from 0 to 1) resulted in a larger update toward the current trial's rate of earnings weighted by its duration (first term in Eq. 3). Free parameters for this adaptive model were the learning rate α and the initial value of γ, in addition to the temperature β. These modifications allowed recent reward history to be tracked while maintaining the same evaluation rule

\[ R_i > \gamma_i \, T_{handling_i} \]

where values of γ were now updated on each trial i according to Eq. 3, rather than by the empirical rate of earnings.
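The adaptive update can be sketched as follows. It assumes the continuous-time delta rule of Constantino and Daw (2015): the previous estimate decays by \((1-\alpha)^{\tau_i}\), and the remainder moves toward the trial's rate of earnings \(A_i R_i / \tau_i\). Two further choices are assumptions about the trial structure: \(\tau_i\) is split into a travel component and a handling component (the latter counted only when \(A_i = 1\)), and `travel_time` is assumed to be positive. The function name is hypothetical.

```python
def update_gamma(gamma, alpha, accepted, reward, handling_time, travel_time):
    """One step of a Constantino & Daw (2015)-style opportunity-cost update (assumed form).

    accepted is A_i (0 or 1); the handling time enters tau only when A_i = 1.
    """
    tau = travel_time + accepted * handling_time
    decay = (1.0 - alpha) ** tau          # weight kept on the previous estimate
    trial_rate = accepted * reward / tau  # rate of earnings on this trial
    return (1.0 - decay) * trial_rate + decay * gamma
```

With α = 0 the estimate never changes, and with α = 1 it jumps to the latest trial's rate. Intermediate values produce a recency-weighted average whose effective window depends on trial durations.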

A third possibility was that, rather than affecting the learning rate, features specific to each cost condition additively biased the estimate of γ. Such a bias could remain stable if only one type of demand was experienced (Experiment 1), but adjust over time as biases from other tasks were experienced (Experiment 2). For example, such biases could reflect:

- an individual's global estimate of the richness of the environment,
- any direct utility or disutility of the experience during the handling time, or
- a focal estimate of the passage of time during performance of each task (e.g., how long 10 seconds subjectively feels).

Altered perceived durations have occasionally been associated with levels of cognitive engagement (Csikszentmihalyi, 2014; Wearden, 2016), and would be expected to affect the subjective estimate of a trial's reward rate. Intuitively, the subjective duration of a fixed handling time could be recalibrated toward a consensus estimate as decision makers experienced different types of pre-reward demands. Our third candidate model, the biased model, added a demand-specific term \(C_i\) to γ that was gradually integrated across tasks for participants who experienced multiple cost conditions. The overall model took the following form

\[ R_i > (\gamma + C_i) \, T_{handling_i} \]

where γ was each subject's mean total rate of earnings (mean = 0.55 cents per second, SD = 0.02). Negative values of \(C_i\) would therefore suggest a boost in offer value produced by a particular form of demand. On each trial i, \(C_i\) was the cost bias produced by the current demand, \(c_{demand}\), weighted against a global bias estimate, \(c_{global}\),

with \(w_i\) controlling how early participants started integrating the consensus cost \(c_{global}\) into their decisions. The value of this weight was a function of the proportion of the experiment completed so far (note that the organization of the experimental session was fully disclosed to participants). High values of the free parameter \(\alpha_w\) denoted late integration of the converging global bias.

Because participants in Experiment 1 experienced a single type of demand, the values of \(c_{demand}\) and \(c_{global}\) were the same and \(\alpha_w\) played no role. Only two free parameters, \(c_{demand}\) and β, were therefore fit to that data set. For Experiment 2, we fixed each value of \(c_{demand}\) (i.e., \(c_{cognitive}\), \(c_{wait}\), and \(c_{physical}\)) to the mean of the fitted parameters of the corresponding demand group in Experiment 1. We then fit three free parameters: the global bias \(c_{global}\), the convergence rate \(\alpha_w\), and the temperature β. This structure allowed differences in acceptance rates across cost conditions to remain stable in Experiment 1 but to collapse gradually in Experiment 2.
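The time-varying bias can be sketched as below. The exact form of the weight \(w_i\) is not recoverable from the text, so this sketch assumes \(w_i = 1 - p_i^{\alpha_w}\), where \(p_i\) is the proportion of the session completed. Under this form, \(w_i\) stays near 1 for longer when \(\alpha_w\) is large, which matches the described late integration. The function names are hypothetical. The example bias values are the Experiment 1 group means and the \(c_{global}\) mean reported in the Results.

```python
def bias_weight(progress, alpha_w):
    """Weight on the demand-specific bias; assumed form w = 1 - progress ** alpha_w.

    progress is the fraction of the session completed (0 to 1); a larger alpha_w
    keeps w near 1 for longer, i.e., later integration of the global bias.
    """
    return 1.0 - progress ** alpha_w


def cost_bias(c_demand, c_global, progress, alpha_w):
    """C_i: the demand-specific bias weighted against the consensus bias."""
    w = bias_weight(progress, alpha_w)
    return w * c_demand + (1.0 - w) * c_global


def biased_value(reward, handling_time, gamma, c):
    """Net value under the biased rule R_i - (gamma + C_i) * T_handling_i."""
    return reward - (gamma + c) * handling_time


# Trajectory of C_i at the start, middle, and end of a session (alpha_w = 1.75)
for demand, c in {"cognitive": -0.08, "wait": 0.11, "physical": 0.09}.items():
    print(demand, [round(cost_bias(c, -0.02, p, 1.75), 3) for p in (0.0, 0.5, 1.0)])
```

A negative \(C_i\) lowers the effective opportunity cost, so the same offer has a higher net value under the cognitive bias than under the wait bias.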

Finally, we asked whether the biases imposed by each form of demand could be isolated from a flexible computation of the opportunity cost of time. Our fourth candidate model, the adaptive + biased model, combined the main features of the adaptive and biased models. In this model, γ was estimated on each trial per Eq. 3, and demands imposed distinct and potentially converging biases as described in Eqs. 6-8. The form of this fourth model was

\[ R_i > (\gamma_i + C_i) \, T_{handling_i} \]

We fit four free parameters to Experiment 1 data: the temperature β, learning rate α, initial value of γ, and demand-specific bias \(c_{demand}\). We fit five free parameters to Experiment 2 data: the temperature β, learning rate α, initial value of γ, consensus bias \(c_{global}\), and rate of bias integration \(\alpha_w\). The resulting parameters were compared across cost groups (in Experiment 1) and across experiments using ANOVAs, and the \(c_{demand}\) biases estimated in Experiment 1 were compared against 0 using one-sample t-tests.
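Putting the pieces together, a per-subject objective for the adaptive + biased model in the Experiment 2 setting might look like the negative log-likelihood below, which an optimizer (or the EM procedure described above) would minimize. Three functional forms are assumptions rather than the authors' equations:

- a logistic softmax with temperature β,
- a Constantino and Daw (2015)-style update of γ, and
- a progress-based weight \(w_i = 1 - (i/n)^{\alpha_w}\).

The trial tuple layout, demand labels, and function names are hypothetical.

```python
import math


def log_sigmoid(z):
    """Numerically stable log(1 / (1 + exp(-z)))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def adaptive_biased_nll(params, trials, c_demand):
    """Negative log-likelihood of accept/reject choices (adaptive + biased sketch).

    params:   (beta, alpha, gamma0, c_global, alpha_w), the Experiment 2 setting
    trials:   list of (reward, handling_time, travel_time, demand, accepted)
    c_demand: dict mapping demand label to a bias fixed from Experiment 1
    """
    beta, alpha, gamma, c_global, alpha_w = params
    n = len(trials)
    nll = 0.0
    for i, (reward, t_handle, t_travel, demand, accepted) in enumerate(trials):
        # Bias: demand-specific term gradually replaced by the consensus term
        w = 1.0 - (i / n) ** alpha_w
        c = w * c_demand[demand] + (1.0 - w) * c_global
        # Choice probability from the biased MVT value, temperature beta
        z = (reward - (gamma + c) * t_handle) / beta
        nll -= log_sigmoid(z) if accepted else log_sigmoid(-z)
        # Opportunity-cost update; handling time counts only if accepted
        tau = t_travel + accepted * t_handle
        decay = (1.0 - alpha) ** tau
        gamma = (1.0 - decay) * (accepted * reward / tau) + decay * gamma
    return nll


biases = {"cognitive": -0.08, "wait": 0.11, "physical": 0.09}
example_trials = [(10, 10, 2, "cognitive", 1), (1, 10, 2, "wait", 0),
                  (8, 10, 2, "physical", 1)]
print(adaptive_biased_nll((0.5, 0.1, 0.55, -0.02, 1.75), example_trials, biases))
```

Choices that agree with the biased opportunity-cost rule yield a small negative log-likelihood, while choices that contradict it are penalized steeply when β is small.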

Results

The fourth (adaptive + biased) model produced the lowest iBIC in both experiments (Fig. 9A; Experiment 1 iBIC = 3552.2; Experiment 2 iBIC = 1818.57). This indicates that the tenets of the Marginal Value Theorem were necessary but not sufficient to explain the observed adaptive behavior. Fig. 9B-C show parameter fits from the winning model for both experiments.

The learning rate α and the bias \(c_{demand}\) were of particular interest. These parameters distinguished whether different forms of demand globally affected the integration of environmental richness (α) or directly modulated an offer's perceived cost (\(c_{demand}\)). Analyses of variance showed significant differences among Experiment 1 groups only for \(c_{demand}\) (F(2, 60) = 7.01, p = 0.002); α was similar across all groups. Pairwise post-hoc permutation tests confirmed that the Cognitive group (mean = -0.08, SD = 0.14) had lower values of the bias parameter than either the Wait group (mean = 0.11, SD = 0.18; p < 0.001; Cohen's d = 1.16) or the Physical group (mean = 0.09, SD = 0.21; p = 0.006; Cohen's d = 0.95). Parameter estimates were significantly greater than zero for the Wait group (t(20) = -2.51, p = 0.021), and marginally lower than zero for the Cognitive group (t(20) = 1.99, p = 0.06).
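The effect sizes and post-hoc comparisons above can be sketched as follows. Cohen's d is computed with a pooled standard deviation, assuming 21 participants per group as implied by the reported t(20) degrees of freedom. The permutation test is a generic two-sided test on the difference in group means. The study's test statistic and number of permutations are not stated here, so both are assumptions.

```python
import math
import random


def cohens_d(mean1, sd1, n1, mean2, sd2, n2):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    pooled = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))
    return (mean1 - mean2) / pooled


def permutation_p(x, y, n_perm=10000, seed=0):
    """Two-sided permutation p-value for a difference in group means."""
    rng = random.Random(seed)
    observed = abs(sum(x) / len(x) - sum(y) / len(y))
    pooled = list(x) + list(y)
    count = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # randomly reassign group labels
        a, b = pooled[:len(x)], pooled[len(x):]
        # Small tolerance so float rounding does not miss ties with the observed split
        if abs(sum(a) / len(a) - sum(b) / len(b)) >= observed - 1e-12:
            count += 1
    return (count + 1) / (n_perm + 1)


# Physical vs Cognitive bias estimates, from the rounded summary statistics
print(round(cohens_d(0.09, 0.21, 21, -0.08, 0.14, 21), 2))
```

The rounded summary statistics reproduce the reported d of 0.95 for the Cognitive vs Physical comparison. Recomputing other values from rounded means and SDs can shift them slightly from those obtained with the raw estimates.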

Fig. 9 Computational modeling results. A: iBIC values for the four candidate models of choice behavior in both experiments; lower values indicate better fit. B: Estimates of the bias parameter \(c_{demand}\) for participants in the Physical, Cognitive, and Wait cost conditions of Experiment 1. Negative values suggest that the Cognitive group acted as if cognitive demands attenuated the perceived opportunity cost of the delay. C: Estimates of the other model parameters indicated consistent foraging behavior across conditions and experiments. D: Synthetic behavior for Experiment 2 generated by the winning model. Error bars correspond to the empirical mean acceptance rate ± SEM per cost type, whereas lines and shaded areas show the model-generated mean acceptance rate and its SEM, respectively. The model's behavior recapitulated the initial apparent preference for cognitive effort and the eventual convergence of acceptance rates by the end of the session.

The mean values of \(c_{demand}\) from each group were carried over to the model of Experiment 2 and aggregated over time, according to a weighted decay, into a final \(c_{global}\) value. As can be seen in Fig. 9C, other parameter values were comparable across experiments, pointing to consistency in the way decision makers approached our foraging scenarios. In addition, values of \(\alpha_w\) (mean = 1.75, SD = 2.07) suggested that the \(c_{demand}\) estimates converged during the second half of the experiment, gradually reducing bias by the end of the experimental session (\(c_{global}\) mean = -0.02, SD = 0.06). Model fits provided good qualitative resemblance to the observed choice dynamics in each experiment. This is illustrated in Fig. 9D, in which 95% confidence intervals from within-subject fits are overlaid on empirical choices from Experiment 2.

Discussion

Why effort is sometimes attractive remains poorly understood. In this study, we sought to identify factors underlying this phenomenon. We tested the hypothesis that the perceived cost or value of effort could depend on whether the decision environment contains opportunities to achieve the same outcome through different means. We conducted two foraging experiments that exposed individuals to either a single type of demand or intermixed types of demand (cognitive effort, physical effort, or passive delay). When individuals faced a single form of demand (Experiment 1), we found a tendency toward engaging with cognitively effortful prospects. When other costs were also experienced (Experiment 2), an initially similar choice pattern faded over time.

Our findings provided partial support for our pre-registered hypotheses (https://osf.io/2rsgm/registrations). In accord with our predictions, decision makers in both experiments successfully approximated reward-maximizing strategies, as evidenced by acceptance rates near optimal levels. This is in line with previous foraging experiments, where the main deviation from optimality has been a tendency to overharvest (Cash-Padgett & Hayden, 2020; Constantino & Daw, 2015; Garrett & Daw, 2020; Le Heron et al., 2020; Wikenheiser et al., 2013). In contrast, our prediction that effort requirements would lead to greater acceptance rates held only for the Cognitive group, and this tendency did not reverse as predicted in Experiment 2.

These hypotheses originally stemmed from two prevalent proposals. The first is that incentivized work should become more appealing when presented in isolation (Eisenberger, 1992; Inzlicht et al., 2018). The second is that people opt for the easiest way to achieve desired outcomes when multiple alternatives are available (Hull, 1943; Kool et al., 2010; Olivola & Shafir, 2013). Instead, our novel use of a foraging paradigm, together with our inclusion of types of effort that are often studied separately, highlighted that reward-independent task characteristics can promote or inhibit the pursuit of effortful prospects.

The observed choice dynamics ruled out a number of candidate explanations.

Intrinsic value and boredom. If the subjective costs and benefits of effort or delay were intrinsic (or if participants merely found the cognitive tasks enjoyable), we would not have expected the gradual convergence in acceptance rates when all three forms of demand were intermixed in the same environment. This line of reasoning also speaks against a direct role of boredom in our results. The aversiveness of boredom can lead people to attempt any available activity in order to avoid it (Westgate, 2020; Wilson et al., 2014). In our paradigm, such a tendency would have been expected to produce a persistent preference for either effortful condition.

Individual differences and practice. The gradual convergence of acceptance rates across cost conditions also rules out the possibility that our sample was mostly composed of demand seekers. Some individuals express a strong need for cognition, which can bias studies of mental labor (Cacioppo et al., 1996; Sayalı & Badre, 2019). This trait may be prevalent in our college-level population and could have driven the preferences for cognitive effort observed in both experiments. However, we would have expected such individuals to maintain this preference in the face of alternatives. Similarly, people may have been driven to improve on the cognitive tasks, a motivation that might have boosted acceptance rates. In our view, the consistently high performance on the cognitive tasks (i.e., relatively few forced travels) makes this possibility less likely, but it cannot be ruled out.

Fatigue. Previous accounts have distinguished between short-term fatigue, which operates in brief bursts that require interleaved rest, and long-term fatigue, which produces a slow, unrecoverable decay in performance (Bächinger et al., 2019; Meyniel et al., 2013; Müller et al., 2021). Regarding short-term fatigue, we found no sequential effects of quitting on choices, which speaks against the periodic rest that is characteristic of short-term fatigue. Regarding long-term fatigue, participants did decrease their acceptance rates for effort in both experiments (a hallmark of effortful exertion). However, we would then have expected fatigued participants in Experiment 2 to increase engagement with Wait trials, substituting them for effort trials in order to preserve their earnings.

Failure risk and learned value. Our observations are unlikely to be due to the risk of failing to complete effortful trials. Participants remained accurate throughout both experiments, and efficacy was consistent across cost conditions and experiments (Frömer et al., 2021). Finally, we cannot conclude that cognitive effort gained value from its rewarding outcomes, as has been proposed in the past (Eisenberger & Cameron, 1996). Such learned value would not have been expected to benefit a single type of demand differentially, because cognitive effort, physical effort, and unfilled delay were rewarded at the same rate.