Abstract

Traditional theories of vision assume that figures and grounds are assigned early in processing, with semantics being accessed later and only by figures, not by grounds. We tested this assumption by showing observers novel silhouettes with borders that suggested familiar objects on their ground side. The ground appeared shapeless near the figure’s borders; the familiar objects suggested there were not consciously perceived. Participants’ task was to categorize words shown immediately after the silhouettes as naming natural versus artificial objects. The words named objects from the same or from a different superordinate category as the familiar objects suggested in the silhouette ground. In Experiment 1, participants categorized words faster when they followed silhouettes suggesting upright familiar objects from the same rather than a different category on their ground sides, whereas no category differences were observed for inverted silhouettes. This is the first study to show unequivocally that, contrary to traditional assumptions, semantics are accessed for objects that might be perceived on the side of a border that will ultimately be perceived as a shapeless ground. Moreover, although the competition for figural status results in suppression of the shape of the losing contender, its semantics are not suppressed. In Experiment 2, we used longer silhouette-to-word stimulus onset asynchronies to test whether semantics would be suppressed later in time, as might occur if semantics were accessed later than shape memories. No evidence of semantic suppression was observed; indeed, semantic activation of the objects suggested on the ground side of a border appeared to be short-lived. Implications for feedforward versus dynamical interactive theories of object perception are discussed.

Although seemingly effortless, visually perceiving the world around us requires extensive processing. To produce a coherent percept, the visual system must organize the information it receives, and perceptual organization entails selection (Peterson & Kimchi, 2013). For instance, when two abutting regions of the visual field share a border, only one of them is typically perceived as an object (i.e., a figure); the other is not. The figure is perceived as having a definite shape, whereas the other region appears to continue behind the figure as its local background (or ground) and is perceived as shapeless near the figure’s borders. An important question regarding perceptual organization is “How much processing occurs for the regions ultimately perceived as shapeless grounds before figure–ground assignments are made?” Clearly, some processing of both figures and grounds must occur in order for perceptual organization to take place, but it remains unclear whether such processing is restricted to image-based factors that are processed at low levels in the visual hierarchy, or whether higher-level processing occurs as well.

According to traditional views, figure–ground segregation occurs at a low-level stage of a serial, hierarchical processing stream (e.g., Hebb, 1949; Koffka, 1935; Köhler, 1929/1947; Riesenhuber & Poggio, 1999; Zhou, Friedman, & von der Heydt, 2000). Image-based factors, including symmetry, convexity, small area, and closure, determine which regions will be perceived as figures; regions possessing these features are more likely to be perceived as figures than are abutting regions that are asymmetric, concave, larger in area, or surrounding. According to these traditional views, representations that depend upon the past experience of the individual, such as memories of object structure and meaning, are accessed only after figure–ground assignment has occurred, and then only for figures, not for grounds.

Contrary to this traditional view, research has indicated that past experience exerts an influence on figure assignment: A region on the side of a border where a well-known object is sketched is more likely to be perceived as figure when it depicts that object in its canonical, upright orientation rather than an inverted orientation (e.g., Gibson & Peterson, 1994; Peterson & Gibson, 1994b; Peterson, Harvey, & Weidenbacher, 1991; for other demonstrations of past experience effects, see Navon, 2011; Vecera & Farah, 1997). The orientation dependency of these effects is critical to the claim that past experience is involved, inasmuch as, by definition, objects that have a canonical orientation have been experienced far more often in that orientation than in an inverted orientation. Therefore, orientation inversion changes their familiarity but leaves lower-level features known to influence figure assignment unchanged.

Evidence that object memories exert an influence on figure assignment indicates that such memories are accessed prior to figure assignment, or simultaneously with it (see Grill-Spector & Kanwisher, 2001). These early experiments could not speak directly to the important question of whether object memories are accessed for regions perceived as grounds, however, because the displays used initially were otherwise ambiguous, in that the abutting regions were matched as closely as possible for image-based factors. Thus, it was possible that object memories were accessed only for regions perceived as figures. Peterson and Gibson (1994a) took a further step by manipulating the symmetry versus asymmetry of two abutting regions sharing a central border in a 2 × 2 design in which one region sketched a familiar object and the other region did not. Familiarity effects were evident in figure–ground reports made by observers who viewed these displays for short durations: When both regions were symmetric, participants reported perceiving the figure lying on the side of the border where a familiar object was sketched more often when the displays were upright than when they were inverted (77% vs. 66% of trials). Hence, familiarity enhanced the effects of symmetry. Familiarity also seemed to compete with symmetry: Participants saw the figure on the familiar side of the border less often in upright displays when the region depicting the familiar object was asymmetric and the abutting region was symmetric (48% of trials, which was still more often than in inverted displays: 38% of trials). Taken together, Peterson and Gibson’s (1994a) results suggested that figure assignment is based on competition and cooperation between image-based factors and past experience embodied in access to object memories. 
When the two compete, as they do when the region depicting an upright familiar object is asymmetric and the abutting region is symmetric, each wins the competition approximately half the time and is perceived as the figure. This interpretation assumes that object memories were accessed for regions that were ultimately perceived as grounds when past experience lost the competition to symmetry. However, another explanation remained possible—that on trials on which the figure was perceived on the symmetric (unfamiliar) side of the border, object memories for the opposite side were not accessed in time to affect figure assignment. Thus, the question of whether object memories are accessed for regions perceived as grounds remained unanswered.

Peterson and Skow (2008; see also Peterson & Kim, 2001) directly tested whether memories of object structure are accessed for regions perceived as grounds. To do so, they used stimuli in which portions of well-known objects were suggested on the ground side of a figure’s border. Participants were unaware of these well-known objects because they lay on the ground side, which appeared shapeless near the silhouette’s border. Reasoning within an inhibitory competition model of figure assignment in which the region that loses the competition for figural status is suppressed (Grossberg, 1994; Kienker, Sejnowski, Hinton, & Schumacher, 1986; Sejnowski & Hinton, 1987), Peterson and Skow expected that if memories of the well-known objects suggested on the ground side were accessed before the figure was assigned on the opposite side of the border, then those object memories should be inhibited when the figure was perceived on the opposite side of the border. Accordingly, the researchers predicted that responses requiring activation of the object memories matching the objects suggested on the ground side of their figures would be slowed (reflecting the inhibition).

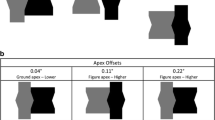

Peterson and Skow (2008) tested this prediction using silhouettes that were small, enclosed, symmetrical, and surrounded; these image-based features all favored the interpretation that the region enclosed by the silhouette borders was the figure. All of the silhouettes were novel in shape (see Fig. 1). The vertical borders of half of these silhouettes suggested a novel, meaningless shape on the ground side as well (control silhouettes, Fig. 1a). The vertical borders of the other half of the silhouettes suggested portions of real-world objects on the ground side (experimental silhouettes, Fig. 1b). Importantly, participants perceived the outsides of all silhouettes as shapeless grounds; they did not consciously perceive the real-world objects suggested on the ground side of the experimental silhouettes (see Footnote 1). These silhouettes were shown individually and briefly at fixation and were followed immediately by a line drawing of either a well-known or a novel object. Participants’ task was to press a key as quickly as possible to classify the line drawing as portraying a real-world or a novel object. The critical condition involved line drawings of real-world objects shown after experimental silhouettes: The line drawing depicted either another version of the object suggested on the outside of the silhouette or an object from a different superordinate category.
As would be expected if memories of the well-known objects suggested on the ground side of the experimental silhouettes were first accessed and then inhibited when the figure was assigned on the opposite side, participants were slower to accurately classify line drawings depicting another version of the object suggested in the ground of the preceding experimental silhouette than an object from a different superordinate category (see Footnote 2). Peterson and Skow’s (2008) line drawings depicted objects with the same name and same general shape as the object on the ground side of the silhouette, yet the line-drawn object and the silhouette had different borders. Hence, their results index more than the suppression of the edge units activated by the preceding silhouette. Therefore, Peterson and Skow’s experiments demonstrated that, contrary to the traditional assumption, memories of object structure are accessed for regions ultimately perceived as shapeless grounds.

Fig. 1. Samples of novel silhouettes used in Peterson and Skow (2008). (a) Control silhouettes in which the ground sides (white sides) of the silhouettes suggested novel, meaningless shapes. (b) Experimental silhouettes in which portions of familiar, real-world objects were suggested on the ground sides of the black silhouettes. Shown here, from left to right, are boots, butterflies, and bunches of grapes.

Important questions remained, including “Are the superordinate semantics of the object suggested on the ground side of a border activated as well as its shape structure? And if so, are they, like memories of object structure, suppressed?” Research has shown that coarse category distinctions (e.g., between figures representing living and nonliving things) are evident in neural responses within the first 150 ms of processing (Clarke, Taylor, Devereux, Randall, & Tyler, 2013; Dell’Acqua et al., 2010; Fabre-Thorpe, 2011; Liu, Agam, Madsen, & Kreiman, 2009; Thorpe, Fize, & Marlot, 1996). Given this fast access to category representations, it is possible that, in addition to memories of object structure, coarse category knowledge regarding the objects suggested on the ground sides of the borders of the experimental silhouettes used by Peterson and Skow (2008) was activated before perceptual organization had determined that side of the border to be shapeless. To further understand what type of processing occurs before figure assignment, Peterson, Cacciamani, Mojica, and Sanguinetti (2012) adapted Peterson and Skow’s paradigm to investigate whether the meaning (semantics) as well as the shape of objects suggested on the ground side of borders was accessed.

Peterson et al.’s (2012) participants categorized common words as naming natural or artificial objects. The words were presented one at a time; each was preceded by a novel silhouette with a portion of a real-world object suggested on the ground side of its border (i.e., one of the experimental silhouettes used by Peterson & Skow, 2008). Peterson et al. manipulated the relationship between the word and the object suggested in the ground of the preceding silhouette. The word named either the same object as the real-world object suggested in the ground of the silhouette (and hence an object from the same conceptual category, natural or artificial), a different object from the same conceptual category, or a different object from a different conceptual category (see Fig. 2).

Fig. 2. Stimuli and conditions used in Peterson et al. (2012). The two rightmost columns (the different-object conditions) were also used in the present study. The arrows represent time. The words were presented centered on the same location as the previously displayed silhouettes. Portions of real-world objects are suggested in the ground regions of all of the novel silhouettes. The real-world objects shown here, from left to right, are (top row) hands, leaves, and axes; (bottom row) anchors, umbrellas, and seahorses.

Evidence that semantics are accessed for regions ultimately determined to be grounds could take one of two forms: (1) responses to same-category words could be slowed relative to responses to different-category words, indicating that inhibition of the semantics of objects suggested by regions ultimately determined to be grounds is similar to the inhibition of shape reported by Peterson and Skow (2008), or (2) responses to same-category words could be speeded relative to responses to different-category words, indicating that the semantics of objects suggested by regions ultimately determined to be grounds are accessed but not inhibited. The latter result could be obtained if the competition involved in figure assignment were for the perception of shape near a border, not for meaning.

As would be expected if semantic knowledge is activated before figure assignment but, for the most part, is not inhibited, Peterson et al.’s (2012) participants were faster to categorize words naming objects from the same category as the object suggested on the ground side of the preceding silhouette, relative to words naming objects from a different category. The semantic system represents shape properties as well as other semantic features (Tyler & Moss, 2001). Consistent with this result and those of Peterson and Skow (2008), Peterson et al. also found evidence that the shape features of the object suggested in the ground were inhibited: For same-category words, participants categorized words naming the same object that was suggested in the silhouette’s ground more slowly than words naming a different object from the same category. Thus, Peterson et al. provided supporting evidence that shape features for objects on the ground side of a figure are inhibited, but importantly, their results also suggest that this inhibition does not apply to non-shape aspects of semantics.

Although Peterson et al.’s (2012) findings imply that coarse category knowledge is activated for shapes on the ground side of borders and, unlike shape properties, is not suppressed, there remains a compelling alternative explanation: that the features of the silhouette borders per se, rather than the semantics of the objects suggested on the ground side, activated natural versus artificial object semantics and were responsible for the shorter word categorization response times in the same- versus different-category conditions. Natural objects are more likely to have curved borders, whereas artificial objects are more likely to have straight borders (Kurbat, 1997; Zusne, 1975). If the silhouette borders per se had these different features by virtue of suggesting different categories of objects on their ground sides, then the presentation of silhouettes with these different features just before the words may have activated the coarse semantics of natural versus artificial objects, thereby speeding categorization responses to same-category words and slowing responses to different-category words. According to this alternative account, semantic access from the objects suggested on the ground sides did not play a role in the results reported by Peterson et al. Because this alternative explanation of their results cannot be ruled out, those results do not challenge the traditional assumption that semantic activation occurs for figures and not for grounds.

Preliminary experiment

As a preliminary test of the plausibility of this alternative interpretation, we asked eight naive viewers to rate the curvature of the borders of Peterson et al.’s silhouettes on a scale from 0 (completely straight) to 100 (completely curved). These pilot data showed that silhouettes that suggested portions of natural objects on their ground sides were indeed rated as being curvier than those that suggested portions of artificial objects on their ground sides [mean ratings of 67.0 and 36.9, respectively; t(7) = 6.75, p < .001]. Therefore, taken alone, Peterson et al.’s (2012) study cannot answer the question of whether semantics are accessed for an object suggested on the ground side of a border: Their participants’ responses may have been primed by features of the borders of the figures themselves, rather than by the semantics of the objects suggested on the ground side of the borders. If the alternative interpretation is correct, the previous results cannot speak to whether semantics are accessed for the objects suggested on the ground side, nor can they speak to the question of whether or not semantics are suppressed, if activated. Because the answers to these questions are critically important for understanding how object perception occurs, the present study was designed to address them more directly.

The present study

In the present study, we sought evidence that the semantics of objects that were suggested on the ground side of the borders of a figure were accessed in the course of figure assignment by presenting the silhouettes in two orientations: their original orientation, in which the object suggested on the ground side of the border was in its familiar, upright orientation (henceforth, the “upright orientation”), and an orientation in which the object suggested on the ground side of the border was upside down (henceforth, the “inverted orientation”). The features of the borders (curved vs. straight) remain the same over the change in orientation from upright to inverted. Hence, if those features are responsible for the faster response times in the same- versus different-category conditions tested by Peterson et al. (2012), the same pattern should be obtained regardless of the orientation of the silhouette preceding the word. However, inverting stimuli that depict objects with a typical upright orientation slows access to memories of object structure (Jolicœur, 1985; Oram & Perrett, 1992). Moreover, Peterson and colleagues found that influences from object memories on figure assignment are substantially reduced by stimulus inversion (Gibson & Peterson, 1994; Peterson & Gibson, 1994a, b; Peterson et al., 1991), leading them to conclude that only object memories accessed quickly after stimulus onset can influence figure–ground perception. We reasoned that if the semantics of objects suggested on the ground side of a silhouette’s borders are accessed, then we should observe faster word categorization response times in the same- versus the different-category conditions when upright, but not inverted, silhouettes precede the words. Since Peterson and Skow (2008) and Peterson et al. (2012) have already shed light on access to and the suppression of structural information pertaining to grounds, the present study focuses solely on semantics; thus, only the two different-object conditions were tested here: same-category and different-category (see the two rightmost columns of Fig. 2).

The present study had a second aim: if semantics are accessed for grounds, to gauge whether they are subsequently suppressed (as object features are), and if not, to gauge how long semantic activation lasts. To this end, we manipulated the silhouette-to-word stimulus onset asynchrony (SOA). Peterson et al. (2012) used a silhouette-to-word SOA of 83 ms. Here, we tested longer SOAs, so that if we replicated their results with upright but not inverted silhouette primes, we could simultaneously extend them to a longer SOA.

Moreover, using longer SOAs allowed us to test the possibility that the semantics of objects suggested on the ground side of a figure’s borders are accessed and, like structural information, ultimately suppressed. This might be the case if it takes longer to access semantic information than structural object properties. Given that it is likely that activation precedes inhibition, the 83-ms SOA used by Peterson et al. (2012) may have assayed the time when semantic information was activated. Testing longer SOAs would allow us to better investigate whether nonshape attributes of the object suggested on the ground side of a border (i.e., semantics/meaning) are not suppressed when that object loses the competition for figural status, as might be expected if the competition occurs between shape properties only, or instead, whether semantics are suppressed, albeit later in time.

Précis

In Experiment 1, participants were significantly more efficient at categorizing words when they followed silhouettes that suggested upright portions of real-world objects from the same rather than from a different category on their ground sides; importantly, this pattern was not observed when the silhouettes were inverted. Thus, Experiment 1 replicated and extended the results reported by Peterson et al. (2012). Here, replication was obtained under conditions designed to allow us to rule out an interpretation in which responses were affected by the features of the silhouette figures per se, rather than by activation of the semantics of the objects suggested in the grounds of the silhouettes. Experiment 1 showed that semantic activation from grounds can be assayed using a silhouette-to-word SOA of 133 ms—longer than the 83-ms SOA at which Peterson et al. observed semantic facilitation and Peterson and Skow (2008) observed shape suppression, and longer than the 100-ms SOA at which Peterson and Skow were no longer able to measure suppression of responses to the shape of the object suggested on the ground side of their stimuli. Experiment 2 used silhouette-to-word SOAs of 166 and 250 ms to further test whether suppression of the semantics of the shape suggested on the ground side of a border occurs later in time. Once again, no evidence of suppression was observed at either SOA. Moreover, the 166- and 250-ms SOAs were not sufficient to produce a measurable facilitation of semantic categorization responses, either. Therefore, Experiment 2 sets limits on the longevity of semantic activation initiated by objects suggested on the ground side of a border.

Experiment 1

Method

Participants

Thirty-four undergraduate students (25 female, 9 male) from the University of Arizona participated in this experiment in order to partially fulfill course requirements. Participants gave informed consent before taking part in the experiment. All participants were native English speakers and reported normal or corrected-to-normal visual acuity. An additional 13 participants were removed from the analysis: Of these, three had error rates that exceeded our criterion (>15% incorrect), six had a mean score in at least one condition that differed from the condition mean by more than two standard deviations (these participants were classified as “outliers”), and four were removed because they indicated in post-experiment questioning that they were aware of the real-world objects in the grounds of at least one of the silhouettes (see the Procedure section for a discussion of our post-experiment questions).
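
The three exclusion rules described above can be sketched in code. This is an illustration only, not the authors' analysis script: the data layout, the key names, and the function name are hypothetical, and we assume that the outlier rule compares each participant's condition mean with the mean and sample standard deviation computed across all participants for that condition. The order in which the rules are checked is also an arbitrary choice.

```python
from statistics import mean, stdev

MAX_ERROR_RATE = 0.15  # criterion stated in the text (>15% incorrect)
SD_CUTOFF = 2.0        # condition mean more than two SDs from the group mean


def exclusion_reason(pid, participants):
    """Return why participant `pid` would be excluded, or None if retained.

    `participants` maps an ID to a dict with hypothetical keys:
      'error_rate' - overall proportion of incorrect responses
      'aware'      - True if post-experiment questioning suggested awareness
      'cond_means' - dict of condition name -> that participant's mean score
    """
    p = participants[pid]
    if p["error_rate"] > MAX_ERROR_RATE:
        return "error rate"
    if p["aware"]:
        return "aware of ground objects"
    for cond, score in p["cond_means"].items():
        # Assumption: group statistics include every tested participant.
        scores = [q["cond_means"][cond] for q in participants.values()]
        if abs(score - mean(scores)) > SD_CUTOFF * stdev(scores):
            return "outlier"
    return None
```

Under these assumptions, a participant is retained only when the function returns `None`.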

Stimuli and apparatus

The stimuli consisted of both words and silhouettes (listed by condition in the Appendix). The words (N = 32) were those used by Peterson et al. (2012); all of the words named concrete objects that were either natural (N = 16) or artificial (N = 16). Extreme care was taken in these experiments to ensure that the words used in the same-category condition were equated with those used in the different-category condition with regard to length, word frequency, and baseline response time—factors that have been shown to affect categorization response times (see Peterson et al., 2012, for more information). The words subtended an average of 0.6° × 1.7° of visual angle in height (H) and width (W), respectively, and were presented in black Times New Roman font centered on a white background.

The silhouettes (N = 32) were those used by Peterson et al. (2012). The silhouettes were small, enclosed, and symmetric, such that classic Gestalt configural cues favored perceiving the inside, black region as the figure (see the stimuli in the rightmost two columns of Fig. 2). The black region inside the silhouette’s borders always portrayed a novel shape; however, portions of real-world objects were suggested in the white region on the outside of the vertical borders of each silhouette. The stimuli were designed so that participants would see the white region as the ground to the black silhouettes and be unaware of the real-world objects suggested in the grounds. (See below for the post-experiment questioning procedure used to ascertain that participants were indeed unaware of the objects suggested in the silhouette grounds.) Half of the real-world objects suggested on the ground side of the silhouettes were natural objects (N = 16), and half were artificial objects (N = 16). All of the silhouettes subtended an average visual angle of 2.9° H × 3.4° W and were presented centered on a white background that was 17.4° H × 22.6° W, adding surroundedness to the cues favoring the inside of the silhouette as being the figure.

All of the words named different objects than those shown on the outside of the silhouettes. The silhouettes and words were paired in two conditions (see the right two columns of Fig. 2): same-category (N = 16) and different-category (N = 16). In the same-category condition, the real-world object suggested on the ground side of the silhouette was from the same conceptual category (natural or artificial) as the object named by the word shown afterward. In the different-category condition, these conceptual categories differed in that one was natural whereas the other was artificial.

A 21-in. Sony CRT monitor and a personal computer were used to present the stimuli and record responses. Participants viewed the monitor from a distance of 96 cm and utilized a chinrest to maintain their head position and viewing distance. Participants used a foot pedal to initiate each trial and to advance through the instructions. Responses were recorded using a custom response box with two horizontally arranged buttons. The presentation software was DMDX (Forster & Forster, 2003).
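
For readers who wish to reproduce the stimulus sizes, the visual angles reported above can be converted to physical extents on the screen using the 96-cm viewing distance. The sketch below uses the standard geometric relation, size = 2 × distance × tan(angle / 2), and assumes a stimulus centered on the line of sight and a flat display perpendicular to it; the function and variable names are ours.

```python
import math


def visual_angle_to_cm(angle_deg, distance_cm):
    """Physical extent (cm) that subtends `angle_deg` at `distance_cm`."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


VIEWING_DISTANCE_CM = 96.0  # viewing distance reported in the text

# Average silhouette size reported in the text: 2.9 deg high x 3.4 deg wide.
silhouette_h_cm = visual_angle_to_cm(2.9, VIEWING_DISTANCE_CM)
silhouette_w_cm = visual_angle_to_cm(3.4, VIEWING_DISTANCE_CM)
print(f"{silhouette_h_cm:.2f} cm high x {silhouette_w_cm:.2f} cm wide")
```

At this distance the average silhouette works out to roughly 4.9 cm high by 5.7 cm wide.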

Design and procedure

Instructions were presented on the computer screen and were simultaneously read to the participant by the experimenter. Participants were told that their task was to categorize words as naming natural or artificial objects as quickly and as accurately as possible. Natural objects were defined as items found in nature (i.e., animals and plants), and artificial objects were defined as man-made items (i.e., tools, appliances, and instruments). The participants responded using a button box with the left and right buttons labeled “natural” and “artificial” (assignments of the buttons to these categories were balanced across participants).

A sample trial is shown in Fig. 3. Each trial began with a fixation cross. When the participant’s eyes were fixated on the cross and they were ready for a trial to begin, they pressed the foot pedal. Upon foot pedal press, a black silhouette was displayed for 50 ms, followed by a blank white screen for 83 ms (producing a silhouette-to-word SOA of 133 ms). A word then appeared in the center of the screen, and participants pressed a button to indicate their category response. Response times (RTs) were recorded from the onset of the word. After participants responded (or after 1,500 ms had elapsed), the fixation cross for the next trial appeared.

Fig. 3. Trial structure used in both Experiments 1 and 2. Shown here is an upright different-category trial. The object suggested by the ground side of the black silhouette is an anchor, which is an artificial object, whereas the word “celery” requires a “natural object” response. The size of the screen frame is reduced here for illustrative purposes.

Before the experimental trials, participants were given 16 practice trials (eight words naming natural objects and eight words naming artificial objects, randomly intermixed). The silhouettes presented during the practice trials portrayed novel shapes on the outside as well as the inside of their borders (i.e., no real-world objects were suggested in the grounds of the silhouettes used on practice trials). The words and silhouettes presented in the practice trials were not used during the experimental trials. Participants were given feedback (“correct” or “wrong”) on their performance on the practice trials, but not the experimental trials.

Thirty-two experimental trials followed the practice trials. The silhouettes were upright for half of these trials (N = 16; eight same-category, eight different-category) and inverted for the remaining half (N = 16; eight same-category, eight different-category). Upright and inverted trials were randomly intermixed. The orientation of a given stimulus was balanced across participants, with half of the participants viewing a given stimulus upright and half viewing it inverted.
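
One way to realize this counterbalancing scheme is sketched below. It is a hypothetical construction and not the DMDX script used in the experiment: we assume that items 0 to 15 are the same-category pairs and items 16 to 31 the different-category pairs, and that two counterbalancing lists (selected here by the parity of a participant index) swap which items appear upright.

```python
import random


def build_trials(participant_index, seed=None):
    """Return 32 shuffled (item_id, category_condition, orientation) trials."""
    rng = random.Random(seed)
    trials = []
    for item_id in range(32):
        condition = "same" if item_id < 16 else "different"
        # Alternate items between orientations within each condition, then
        # flip the assignment for odd-numbered participants so that each
        # item is upright for half of the participants.
        upright = (item_id % 2 == 0) == (participant_index % 2 == 0)
        trials.append((item_id, condition, "upright" if upright else "inverted"))
    rng.shuffle(trials)  # upright and inverted trials are randomly intermixed
    return trials
```

Each participant then receives eight trials in each of the four cells (same or different category by upright or inverted orientation), and any given item appears in opposite orientations for even- and odd-numbered participants.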

Post-experiment questioning

After the experimental trials, participants were asked a series of detailed questions to determine whether they saw (or thought they saw) any of the real-world objects suggested on the ground sides of the silhouettes. Participants typically reported that they did not see any real-world objects, because the silhouette presentation time was short and their attention was focused on the words rather than the silhouettes. Many remarked that if they noticed anything before the word, it was simply a black meaningless shape. To ascertain that participants did not perceive the real-world objects in the ground, the experimenter showed each participant a sample silhouette (not used in the experimental trials) and identified and traced the portion of the real-world object suggested on the ground side. While doing so, the experimenter first asked whether the participant was able to recognize the real-world object that was being traced. After a few moments, all participants were able to switch their percept such that they perceived the outside of the silhouette as the figure, and subsequently were able to recognize the real-world object (after which they often expressed surprise at its presence). The experimenter then directly asked whether the participant had ever noticed anything similar during the experiment—that is, real-world objects suggested on the outsides of the silhouettes. The data from participants who reported that they saw or thought they saw any real-world objects suggested on the ground sides of the silhouettes at any point during the experiment were eliminated from the analysis. Our threshold for elimination was highly conservative: Participants were removed from the analysis for displaying even the slightest hint of having seen the real-world objects during the experiment, and they did not have to name any objects that they saw. The data from four participants were not analyzed for this reason. 
The remaining participants reported barely even noticing the presence of the black silhouettes, because their attention was focused on the words. Thus, we are reasonably confident that those participants whose data we analyzed perceived the inside black region as the figure in the briefly exposed silhouette, as was expected given the Gestalt configural cues in play (symmetry, small area, closure, and surroundedness), as well as expectation, attention, and fixation (Peterson & Gibson, 1994b; Vecera, Flevaris, & Filapek, 2004).

Results

In our data analysis, we observed that high error rates accompanied low RTs in some conditions but not in others (see Table 1). Accordingly, we combined these two measures into inverse efficiency scores (Townsend & Ashby, 1983; for recent uses, see Gould, Rushworth, & Nobre, 2011; Kennett & Driver, 2014; Kimchi & Peterson, 2008; Romei, Driver, Schyns, & Thut, 2011; Salvagio, Cacciamani, & Peterson, 2012; Urner, Schwarzkopf, Friston, & Rees, 2013). Inverse efficiency (IE) scores are determined by dividing the mean RT by the proportion correct. IE scores combine both speed and accuracy into one simplified measure; in effect, they adjust RTs upward for high errors. Thus, a lower IE score indicates better performance (equivalent to faster RTs).
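
As a brief illustration of the IE computation just described, the following sketch divides a mean RT by the proportion correct. The function name and the example values are hypothetical and are not taken from Table 1.

```python
def inverse_efficiency(mean_rt_ms, proportion_correct):
    """Inverse efficiency score: mean RT divided by proportion correct."""
    if not 0 < proportion_correct <= 1:
        raise ValueError("proportion_correct must be in (0, 1]")
    return mean_rt_ms / proportion_correct


# Hypothetical values, for illustration only.
fast_but_error_prone = inverse_efficiency(600.0, 0.75)  # 800.0
slower_but_accurate = inverse_efficiency(650.0, 1.00)   # 650.0
```

In this made-up case the condition with the faster raw RT ends up with the higher (worse) IE score, because its RT is adjusted upward for its errors.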

Mean IE scores for correct categorization responses are shown in Fig. 4. Categorization responses were more efficient when the word named an object from the same category as, rather than a different category from, the object suggested on the ground side of the preceding silhouette. Importantly, this difference was evident when the silhouettes were upright but not when they were inverted. A 2 × 2 within-subjects analysis of variance (ANOVA) on mean IE scores revealed a significant interaction between category condition (same/different) and orientation (upright/inverted), F(1, 33) = 5.98, p < .03, with a larger difference in IE scores between the same-category and different-category conditions for upright (625 vs. 680) than for inverted (656 vs. 666) silhouettes. Follow-up t tests revealed that the difference between the same-category and different-category conditions was statistically significant for upright silhouettes, t(33) = 4.36, p < .001, but not for inverted silhouettes, p > .54. Additionally, performance in the same-category condition was significantly better when the silhouettes were upright than when they were inverted, t(33) = 2.04, p = .05, whereas performance in the different-category condition was not changed by stimulus inversion, p > .42. A significant main effect of category condition, F(1, 33) = 8.196, p < .01, was subsumed by the interaction between orientation and category condition (see Footnote 3).
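
The logic of the interaction can be made concrete with a short sketch. In a fully within-subjects 2 × 2 design, the interaction is equivalent to testing each participant's difference of differences against zero, with F(1, n − 1) equal to the squared t statistic. The function names and the per-participant scores below are hypothetical; only the four cell means come from the text.

```python
from math import sqrt
from statistics import mean, stdev


def interaction_contrast(upright_same, upright_diff, inverted_same, inverted_diff):
    """Per-participant category effect (different - same), upright minus inverted."""
    return [
        (u_diff - u_same) - (i_diff - i_same)
        for u_same, u_diff, i_same, i_diff in zip(
            upright_same, upright_diff, inverted_same, inverted_diff
        )
    ]


def one_sample_t(values):
    """t statistic and degrees of freedom for testing mean(values) against zero."""
    n = len(values)
    return mean(values) / (stdev(values) / sqrt(n)), n - 1


# Cell means reported in the text (upright: 625 vs. 680; inverted: 656 vs. 666).
reported_contrast = interaction_contrast([625], [680], [656], [666])[0]  # 55 - 10 = 45

# Hypothetical per-participant IE scores, for illustration only.
contrasts = interaction_contrast(
    [600, 640, 610, 650],  # upright, same category
    [660, 690, 655, 700],  # upright, different category
    [640, 660, 650, 670],  # inverted, same category
    [650, 665, 660, 690],  # inverted, different category
)
t_value, df = one_sample_t(contrasts)
f_value = t_value ** 2  # matches the interaction F(1, df) in a 2 x 2 within design
```

Applied to the reported cell means, the contrast is (680 − 625) − (666 − 656) = 45 IE units, which is the quantity the interaction test evaluates.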

Experiment 1 results. Error bars represent standard errors of the means of the difference scores (different-category minus same-category). * p < .05, ** p < .01

The above analyses did not include the three participants who exceeded our error criterion (>15% incorrect) or the six participants who were classified as outliers (responses more than two standard deviations above or below the mean). In order to determine whether excluding these participants changed the pattern of our results, a separate IE analysis was conducted in which these high-error and outlier participants were included. The same pattern was observed as when those participants had been removed. Specifically, t tests revealed that, for upright stimuli, performance was still significantly better in the same- than in the different-category condition, t(42) = 4.00, p < .001 (IE scores of 657 and 716, respectively), and this difference was not statistically significant for inverted silhouettes, p > .24 (IE scores of 687 and 707, respectively). Additionally, IE scores in the upright same-category condition were lower than those in the inverted same-category condition, though with these high-variability participants included, the difference was only marginally significant, t(42) = 1.76, p = .08. This analysis shows that applying our standard procedure of excluding high-error participants and outliers did not substantially alter the pattern of results; it simply reduced the variability of our measures.
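
The two exclusion criteria can be sketched as follows. The thresholds (more than 15% errors; more than two standard deviations from the mean) come from the text. Everything else is an assumption made for illustration: the function name, the use of one summary score per participant, the choice to compute the mean and standard deviation after removing high-error participants, and the data themselves.

```python
from statistics import mean, stdev

ERROR_CRITERION = 0.15  # from the text: more than 15% incorrect
OUTLIER_SD = 2.0        # from the text: more than two SDs from the mean


def screen_participants(records):
    """records maps participant id -> (error_rate, summary_score).

    Returns (kept, high_error, outliers) as sorted lists of ids.
    Assumes outliers are defined on one summary score per participant,
    relative to the participants who passed the error criterion.
    """
    high_error = {pid for pid, (err, _) in records.items() if err > ERROR_CRITERION}
    remaining = {pid: score for pid, (_, score) in records.items()
                 if pid not in high_error}
    scores = list(remaining.values())
    m, sd = mean(scores), stdev(scores)
    outliers = {pid for pid, s in remaining.items() if abs(s - m) > OUTLIER_SD * sd}
    kept = sorted(set(remaining) - outliers)
    return kept, sorted(high_error), sorted(outliers)


# Hypothetical participants, for illustration only.
records = {
    "p01": (0.03, 640), "p02": (0.06, 655), "p03": (0.00, 630),
    "p04": (0.09, 660), "p05": (0.03, 645), "p06": (0.06, 650),
    "p07": (0.03, 665), "p08": (0.00, 635), "p09": (0.12, 670),
    "p10": (0.06, 1200),  # far slower than the rest
    "p11": (0.25, 600),   # exceeds the error criterion
}
kept, high_error, outliers = screen_participants(records)
```

Rerunning the analysis with and without the excluded ids, as in the check reported above, shows whether the exclusions change the pattern of results or only its variability.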

Discussion

In Experiment 1, we found that word categorization responses were more efficient when words followed upright silhouettes that suggested real-world objects from the same rather than from a different category on their ground sides; inverting the silhouettes eliminated this effect.

The orientation dependency of our results rules out the hypothesis that the same- versus different-category effects arise from the relationship between the degree of curvature of the silhouette borders and the features of objects in the category of the paired word. Thus, Experiment 1 shows that the coarse semantics of the object suggested on the ground side of the silhouette figure are processed, even though that region is ultimately perceived as a locally shapeless ground to the black silhouette figure. Recall that the participants whose data we analyzed were unaware of the real-world objects suggested on the ground side of the silhouettes, as ascertained by rigorous post-experiment questioning. Thus, the present results provide the first unequivocal behavioral evidence that coarse category knowledge is activated for portions of real-world objects suggested on the ground side of the borders of figures, contrary to the traditional view that semantic knowledge is accessed by figures only and not by grounds. Indeed, we have definitively shown that coarse categorization responses are facilitated when the words that follow upright silhouettes name objects from the same coarse category (natural vs. artificial) as the object suggested on the ground side of the silhouette, rather than a different category, and critically, that this facilitation is observed for same-category words that follow an upright silhouette but not an inverted silhouette. The orientation dependence of our results is essential for ruling out the alternative interpretation that responses are facilitated due to the correspondence between the features of the borders of the figure per se and the object category named by the test word. 
The features of the borders are unchanged with an orientation change from upright to inverted, yet the speed of access to object memories and semantic knowledge regarding the object suggested on the ground side of the silhouette is slowed by stimulus inversion (Peterson & Gibson, 1994a, 1994b; Peterson et al., 1991). Previous results have shown that this slowing substantially reduces the influence of object memory on figure assignment. We assumed that access to semantic associations would also be slowed by stimulus inversion, and the results of Experiment 1 show that it was.

The results of Experiment 1 show that the semantic knowledge activated by the side of a border ultimately deemed to be ground rather than figure remains activated for some time after the silhouette appears—at least long enough to affect categorization responses to words that onset 133 ms after the silhouette (the mean RTs were approximately 625 ms). The continued activation of the semantics associated with the object suggested on the ground side of a border contrasts with the suppression of responses to the shape of that object. Peterson and Skow (2008; Peterson & Kim, 2001) asked participants to decide whether line drawings shown after silhouettes like those we used here depicted real-world or novel objects. Their participants’ object-decision RTs were slowed when the line drawing depicted the same object as was suggested on the ground side of the border of the preceding silhouette, rather than a different object. They took their data as evidence that responses to the shape of the object that loses the competition to be seen as figure are suppressed. Our data suggest that, although suppression is applied to the shape properties of the losing competitor (which have a direct bearing on object perception across the border), it is not applied to other related aspects of the losing competitor, such as its semantics.

A remaining question, however, was whether it simply takes longer for suppression to be applied to semantic than to shape properties. Peterson and Skow (2008) observed evidence of suppression of shape properties using a silhouette-to-line-drawing SOA of 83 ms, but not with a 100-ms SOA. Peterson et al. (2012) observed evidence of semantic facilitation (rather than suppression) using an 83-ms silhouette-to-word SOA. In Experiment 1, we extended the SOA by 50 ms, and once again observed evidence of semantic facilitation using a 133-ms silhouette-to-word SOA. To provide a stringent test of whether semantic information is suppressed somewhat later in time, in Experiment 2 we tested silhouette-to-word SOAs of 166 ms (Exp. 2A) and 250 ms (Exp. 2B).

There were three possible outcomes of Experiment 2: (1) Participants’ performance might be worse for same- than for different-category words following upright but not inverted silhouettes, indicating that the semantics of the losing competitor for figural status are accessed and suppressed as shape is; it just required a longer silhouette-to-word SOA to observe the suppression. (2) Participants’ performance might remain better for same- than for different-category words following upright but not inverted silhouettes, indicating that semantic activation persists beyond that measured with a 133-ms silhouette-to-word SOA. (3) Participants’ responses might no longer be different for same-category than for different-category words following upright silhouettes; such a result would place a limit on the temporal persistence of semantic activation arising from an object suggested by grounds, at least as measured by word categorization responses.

An extensive masked-priming literature has suggested that semantic facilitation decays over time, favoring the third possible outcome. For instance, many studies have found evidence of semantic priming with short prime-to-target SOAs (under 150 ms; Dehaene et al., 1998; Forster & Davis, 1984; see Forster, Mohan, & Hector, 2003 for a review), but not with longer SOAs (Bueno & Frenck-Mestre, 2008; Greenwald, Draine, & Abrams, 1996), although factors such as prime duration, task, prime-target similarity, and target duration can affect the SOAs over which priming is observed (Bueno & Frenck-Mestre, 2008; Hutchinson, 2003; Lucas, 2000; McRae & Boisvert, 1998; Plaut, 1995). Hence, if no evidence of suppression were to be observed, Experiment 2 might begin to identify the temporal limits of semantic activation from objects suggested on the ground side of the silhouette borders.

Experiment 2

Method

Participants

Thirty-four (24 female, 10 male) and 27 (19 female, 8 male) undergraduate students from the University of Arizona participated in Experiments 2A and 2B, respectively, in partial fulfillment of course requirements, after giving informed consent. All of the participants were native English speakers and reported normal or corrected-to-normal visual acuity. In Experiment 2A, the data from an additional 19 participants were removed from the analysis: Of these, four had an error rate greater than 15%, 13 were classified as outliers, and two were removed because they indicated in post-experiment questioning that they were aware of (or might have been aware of) the real-world objects on the ground side of the silhouettes. In Experiment 2B, the data from an additional 18 participants were removed: Four were classified as outliers, eight exceeded our error criterion, and six reported being aware of the real-world objects in the ground.

Stimuli and apparatus

The stimuli and apparatus in Experiment 2 were identical to those of Experiment 1.

Design and procedure

The design and procedure were the same as in Experiment 1, with one exception: After the silhouette had appeared on the screen for 50 ms, the blank white screen was presented for 116 ms in Experiment 2A and 200 ms in Experiment 2B. Hence, the silhouette-to-word SOAs were 166 and 250 ms, respectively.
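
The SOA arithmetic is simple enough to check in a few lines: the silhouette-to-word SOA is the silhouette exposure plus the blank interval. The durations are taken from the text; the names are illustrative.

```python
SILHOUETTE_MS = 50  # silhouette exposure duration, from the text


def soa_ms(blank_ms, silhouette_ms=SILHOUETTE_MS):
    """Silhouette-to-word SOA: silhouette exposure plus blank interval."""
    return silhouette_ms + blank_ms


blank_intervals_ms = {"2A": 116, "2B": 200}  # blank white screen durations
soas = {exp: soa_ms(blank) for exp, blank in blank_intervals_ms.items()}
# soas == {"2A": 166, "2B": 250}
```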

Results and discussion

The mean IE scores for correct categorization responses are shown in Fig. 5, where it is clear that evidence of semantic activation arising from objects suggested on the ground side of the border of the silhouette was not detected with silhouette-to-word SOAs of 166 or 250 ms, nor did we find evidence of semantic suppression (Figs. 5A and 5B, respectively).

Experiment 2A

Mean IE scores in the same- and different-category conditions for the upright condition were approximately equal (637 and 639, respectively). A 2 × 2 within-subjects ANOVA with factors of match between the categories of the word and the object suggested on the ground side of the silhouette (same/different) and orientation (upright/inverted) revealed no significant main effects or interactions, all ps > .46 (see Footnote 4).

A separate analysis was conducted that included the four high-error participants and the 13 outliers, in order to determine whether removing these participants changed our pattern of results. A 2 × 2 within-subjects ANOVA on participants’ IE scores with the factors Orientation (upright/inverted) and Category Condition (same/different) revealed no significant main effects or interactions, ps > .15. Additionally, follow-up t tests revealed no significant differences between any two conditions, ps > .10. Thus, as in Experiment 1, we can be certain that removing those participants from our primary analysis did not substantially alter our pattern—in this case, a lack of significance.

Experiment 2B

Mean IE scores in the same- and different-category conditions for the upright condition were approximately equal (680 and 690, respectively). A 2 × 2 ANOVA on IE scores with the factors orientation (upright/inverted) and category condition (same/different) revealed no significant main effects or interactions, ps > .15. Additionally, t tests showed no significant differences between any of the conditions, ps > .12, indicating that performance was equivalent across both conditions and both orientations (see Footnote 5).

A separate analysis was conducted that included the 18 high-error participants removed from the main analysis in Experiment 2B. A 2 × 2 within-subjects ANOVA on IE scores with the factors orientation (upright/inverted) and category condition (same/different) revealed no significant main effects or interactions, ps > .23. Additionally, follow-up t tests revealed no significant differences between any two conditions, ps > .32. This lack of significance indicates that removing these participants did not alter our pattern of results.

Comparison to Experiment 1

In order to compare the mean IE scores in Experiment 2 to those in Experiment 1, we conducted two three-way ANOVAs, one for Experiment 2A and one for Experiment 2B, each with the within-subjects factors category condition (same/different) and orientation (upright/inverted) and the between-subjects factor experiment (Exp. 1 vs. Exp. 2A or 2B). A significant three-way interaction was obtained in both ANOVAs [i.e., comparing Exp. 1 to 2A, F(1, 66) = 3.99, p = .05, and Exp. 1 to 2B, F(1, 59) = 3.95, p = .05]. This finding indicates that unlike the participants in Experiment 1, the participants in Experiments 2A and 2B did not perform better on same- than on different-category trials in the upright condition (compare Fig. 4 with Figs. 5A and 5B), whereas participants in all experiments showed no difference in performance in the inverted condition. In other words, semantic activation was no longer evident in the categorization responses to words that appeared 166 ms after silhouette onset, nor did we find any evidence of semantic suppression. The results of Experiment 2B show that the 166-ms SOA condition (Exp. 2A) is not just a null point between facilitation and suppression. The three-way ANOVAs also revealed no main effect of experiment (ps > .30 and .12 for Exps. 2A and 2B, respectively), indicating that overall performance was not significantly different between the experiments (the mean IE scores were 656, 637, and 692 for Experiments 1, 2A, and 2B, respectively).

Our results, together with those of Peterson et al. (2012), show that the semantics of the object suggested on the ground side of the silhouette are activated and are not suppressed. Although the present experiment cannot assess exactly how long semantic activation lasts, on the basis of the results of Experiment 2, we can conclude that it decays to a level such that it can no longer be measured in semantic categorization responses within 800 ms (determined by adding the 166-ms SOA to the mean IE scores).

General discussion

The primary aim of the present study was to provide a stringent test of whether semantic information can be accessed for the side of a border ultimately determined to be a shapeless ground. The traditional, serial-processing view of object perception states that visual information is processed in a unidirectional, feedforward-only manner, and semantics are accessed only for the perceived object (the figure), not for the ground (Hebb, 1949; Koffka, 1935; Köhler, 1929/1947; Riesenhuber & Poggio, 1999; Serre, Oliva, & Poggio, 2007; Zhou et al., 2000). To rigorously test this assumption, we presented novel silhouettes with portions of real-world objects suggested (but not consciously perceived) on the ground side of the borders to naive participants whose task was to categorize subsequently presented words as naming natural or artificial objects. Using an SOA of 133 ms, we found that participants’ natural/artificial categorization responses were significantly more efficient for words following upright silhouettes whose grounds suggested an object from the same category versus a different category as the word; no differences were observed for inverted silhouettes. Because the orientation of the silhouettes was varied in Experiment 1, it provides the first unambiguous behavioral demonstration that prior to figure assignment, semantics are accessed for regions that suggest portions of upright well-known objects, even though those regions are ultimately perceived as shapeless, meaningless grounds.

A previous study (Peterson et al., 2012) had addressed the same question without manipulating the orientation of the silhouette. Using an 83-ms silhouette-to-word SOA, they too found that participants’ natural/artificial categorization responses were more efficient for words following silhouettes whose grounds suggested an object from the same category rather than a different category as the word. However, these previous results were equivocal, because the authors used upright stimuli only and not inverted stimuli. Therefore, it was impossible to know whether the results truly assayed semantic activation by the object suggested on the ground side of the silhouette, or whether the different features of the silhouette borders per se activated natural versus artificial objects. Our addition of the orientation manipulation allowed us to confidently attribute our results to semantic access for the objects suggested on the ground side of the silhouette border. Indeed, inverting the stimuli leaves the curved versus straight border features of the silhouettes unchanged, but slows access to object memories such that they do not exert an influence on figure assignment (Peterson & Gibson, 1994a, 1994b; Peterson et al., 1991), nor do they robustly activate semantics. Therefore, the results of Experiment 1 contradict the traditional view that semantic access occurs for figures only and not for grounds, and thus contribute significantly to our understanding of object perception.

Recent neurophysiological evidence has supported our claim that the meaning of objects suggested on the ground side of a border is assessed in the course of figure assignment. Sanguinetti, Allen, and Peterson (2014) found that a neural marker of semantic processing, the N400 event-related potential, was modulated in a repetition paradigm by meaningful objects suggested in the ground regions of silhouettes like the ones used in the present study, despite the fact that participants were not consciously aware of them. The present results provide the necessary behavioral evidence that allows the N400 waveforms to be interpreted as evidence that the semantics of the objects suggested on the ground side of the border of the silhouette figures were accessed. It is important to note that without the behavioral results that we report here, the neurophysiological evidence is insufficiently constrained. Conversely, Sanguinetti et al.’s data provide converging neurophysiological evidence for our behavioral data.

These results also substantially extend the existing research on unconscious semantic access. Although evidence of implicit semantic influences from unperceived items has been found in many different paradigms (e.g., Costello, Jiang, Baartman, McGlennen, & He, 2009; Dell’Acqua & Grainger, 1999; Goodhew, Visser, Lipp, & Dux, 2011; Greenwald et al., 1996; Huckauf, Knops, Nuerk, & Willmes, 2008; Koivisto & Revonsuo, 2007; Luck, Vogel, & Shapiro, 1996; Van den Bussche, Van den Noortgate, & Reynvoet, 2009), no previous behavioral studies had investigated whether semantic activation arises from objects suggested in regions that perceptual organizing processes have determined to be shapeless grounds. Thus, the previous studies did not challenge the traditional assumption that semantic access occurs for figures and not for grounds, as the present study does.

An alternative to the traditional sequential-processing view is that perception entails a nonselective fast, feedforward pass of processing in which objects that might be perceived are processed to the highest levels, and that perceptual organization operating via interactive feedforward and feedback processing loops later selects objects that will be perceived (Bullier, 2001; Lamme & Roelfsema, 2000; see Peterson & Cacciamani, 2013, for a review). Our findings demonstrate that grounds are processed at higher levels than has been supposed in the traditional account. Therefore, our results are consistent with the proposal that the first pass of processing is nonselective. The present results do not provide evidence that feedback is involved in figure–ground perception, although other research has (e.g., Likova & Tyler, 2008; Salvagio et al., 2012; Strother, Lavell, & Vilis, 2012).

It is interesting to consider why Peterson and Skow (2008) found evidence that the shape features of unperceived objects in grounds are suppressed, such that responses to subsequently presented line drawings containing those features are slowed, whereas we found evidence that the semantics of objects suggested in grounds are not suppressed, in that responses to subsequently presented words are speeded. These differing findings may be due to the fact that shape features undergo inhibitory competition for figural status, which leads to suppression of the loser (see Likova & Tyler, 2008; Salvagio et al., 2012), but this competition, and the resulting suppression, does not extend to high-level semantic information (see Footnote 6).

Additionally, the present study and Peterson and Skow’s (2008) study employed different tasks, which may also account for the differing findings. Peterson and Skow’s participants reported whether a line drawing depicted a real-world or a novel object—a task designed to probe object shape. The categorization task used in the present experiments may, too, have required access to object shape, since shape information may be included in a larger semantic network (Tyler & Moss, 2001); however, given the high level of categorization needed (natural vs. artificial), activation of the broader semantic system was also necessary. Thus, it is likely that both semantics and shape information were accessed in both studies, but the resulting effects on responses (speeding or slowing) might have depended on the information required for the task and conditions. Consistent with this, Peterson et al. (2012) found evidence suggesting that both shape information and semantic information are accessed for grounds but have differential effects on behavior. They included a condition in which the word named the same object that was suggested on the ground side of the preceding silhouette. In this condition, responses were slowed, as compared to a condition in which a different object from the same category as the upcoming word was suggested in the silhouette ground (625 vs. 613 ms), consistent with the hypothesis that the part of the semantic system representing the specific shape suggested on the ground side was suppressed, and this suppression reduced activation of the broader natural or artificial object category. Note, however, that responses in both of these same-category conditions were faster than those in the different-category condition (645 ms), which indicates that semantics were accessed, thereby facilitating responses. 
Conversely, in Peterson and Skow (2008), semantics may have been accessed, and the resulting response facilitation (though not observed in RTs, due to the object decision task) might have reduced the degree to which shape suppression slowed RTs. These interactions between semantics and shape are interesting to consider and should be explored further in future studies.

It is not clear whether a lexical decision task or a task requiring basic-level categorization of the words would show a pattern similar to the one that we report here. Recent work has shown that access to superordinate categorical information may be faster than access to basic- and subordinate-level information (Macé, Joubert, Nespoulous, & Fabre-Thorpe, 2009; Mohan & Arun, 2012). Moreover, neuroimaging studies have shown that access to superordinate versus subordinate category information recruits different neural resources, with the former involving the posterior inferior temporal cortex, and the latter activating more anterior regions, including areas of the medial temporal lobe (Kriegeskorte et al., 2008; Raposo, Mendes, & Marques, 2012; Tyler et al., 2004). Thus, using a task requiring superordinate categorization, as we did in our study, may be most likely to produce an observable effect, although it would be interesting to investigate whether effects similar to ours would be observed with a task that required more fine-grained word categorizations.

Experiment 1 showed that the semantics of the object suggested on the ground side of the border of a figure are accessed and, unlike shape properties, are not suppressed. Experiment 2 used longer silhouette-to-word SOAs in order to further test whether semantics are suppressed, but at a later point in time. We found no evidence that semantics are suppressed, even with silhouette-to-word SOAs as long as 166 and 250 ms. We did find, however, that semantic activation decays over time, such that when the category of the word matched that of the object suggested in the ground of an upright silhouette that preceded the word by 166 ms, we were no longer able to measure facilitation in word categorization responses.

These results should not be taken as evidence that semantic activation decays completely between 133 and 166 ms post silhouette onset. The only conclusion that can be reached is that semantic activation has decayed sufficiently by 166 ms after silhouette onset that it no longer exerts a measurable influence on natural/artificial word categorization performance (with IE scores averaging 625–680). It is not known where in the course of word categorization semantic priming exerts its influence. We can say that semantic activation almost certainly lasts longer than 133 ms, but how much longer is unknown, in light of the present data. Our results coincide nicely with the finding in the masked-priming literature that semantic priming is evident at short but not long SOAs (Bueno & Frenck-Mestre, 2008; Dehaene et al., 1998; Forster & Davis, 1984; Forster et al., 2003).

Converging evidence has come from psychophysiology studies, in which it has been shown that coarse categorical information pertaining to objects (i.e., figures) is activated within the first 150 ms of processing (Clarke et al., 2013; Dell’Acqua et al., 2010; Fabre-Thorpe, 2011; Liu et al., 2009; Thorpe et al., 1996). Moreover, the semantic activation is not sustained: The signal dissipates within 150–200 ms of stimulus onset (Dell’Acqua et al., 2010; Kiefer & Spitzer, 2000). That the effects of semantic access to ground regions are evident very early and diminish over time is consistent with the hypothesis that the semantics of ground regions are accessed on an initial, fast, feedforward pass through the visual system, prior to the completion of figure assignment. Ultimately, the ground region is deemed shapeless and meaningless, and the high-level semantics that had been originally activated are no longer active. Hence, our effects are only observable in participants’ categorization behavior at short SOAs.

Could it be that, at the longer SOAs tested in Experiment 2, the silhouette fell outside a temporal attentional window centered on the target, and therefore could not influence categorization performance (see Naccache, Blandin, & Dehaene, 2002)? We think not, because according to Naccache et al., this attentional window extends 200–300 ms prior to the target; our SOAs fell within this attentional window.

The results of the present study hinge on our assumption that the insides of the silhouettes were perceived as the figure, whereas the outsides were perceived as shapeless grounds. The silhouettes were carefully designed such that the inside would be perceived as the figure, due to the presence of Gestalt configural cues such as small area, closure, symmetry, and surroundedness. Moreover, the silhouettes were centered on the participant’s fixation point, and fixated regions are more likely to be perceived as figures (Peterson & Gibson, 1994b). In addition, expectation has an effect on perception, and participants expected to see a closed silhouette on each trial. To be certain that we included data only from participants who perceived the insides of the silhouettes as figures, we asked participants in rigorous post-experiment questioning whether they had perceived any of the real-world objects in the ground, as we have done in previously published studies using these stimuli (Peterson & Kim, 2001; Peterson & Skow, 2008; Salvagio et al., 2012; Sanguinetti et al., 2014; Trujillo, Allen, Schnyer, & Peterson, 2010). If participants reported that they saw any real-world objects (or even thought they had), their data were eliminated from the analysis (four participants in Exp. 1, two participants in Exp. 2A, and six participants in Exp. 2B). Because we asked these questions only after the experiment, the possibility that participants may have noticed the real-world objects during a trial but simply forgot them by the end of the experiment can never be fully ruled out. We could not ask observers whether they saw the real-world objects on the ground side of the borders after each trial. Had we done so, we would have alerted participants to their presence, which likely would have changed their behavior such that they began to look for objects in the grounds, and this would have sabotaged our intention to probe semantic access for objects suggested in the grounds. 
Given our extensive questioning and conservative elimination threshold, we are reasonably confident that the participants whose data were included in the analyses did not consciously perceive the real-world objects suggested on the ground sides of the silhouettes. In a related experiment, Sanguinetti et al. (2014) found that an event-related potential (the P600) that indexes recognition memory was observed for repetitions of silhouettes that portrayed real-world objects on the figure sides of their borders, but not for repetitions of silhouettes that suggested real-world objects on the ground sides of their borders. Had their participants been aware of the real-world objects suggested in the grounds, even on a trial-by-trial basis, the P600 should have been larger with repetition, and yet it was not. This is corroborating evidence for participants’ self-reports at the end of our study.

Conclusion

This study has uncovered two important findings that significantly increase our understanding of figure–ground segregation. First, we have demonstrated that the semantics of objects that are suggested on the ground side of a figure are activated in the course of figure assignment. This novel result contradicts traditional views of object perception that state that semantic access occurs only for regions ultimately perceived as figures, not for regions determined to be grounds at the borders they share with figures. Instead, our study supports the notion that object perception entails a nonselective fast pass of processing during which the regions vying for figural status are processed to high levels. Indeed, we have shown that regions ultimately perceived as shapeless, meaningless grounds are processed to the level of semantics prior to or during figure assignment. Second, we have demonstrated that semantic information activated for objects suggested on the side of a border that is ultimately perceived as a ground is not suppressed, unlike the shape properties of those objects. The activation of semantic knowledge is short-lived, although at least as assayed by categorization responses, it seems to be longer-lived than the suppression of shape properties. This result is further evidence in support of a fast, nonselective evaluation of regions that could be perceived as objects, regardless of final figural status. Together, these results support a dynamical view of object processing that posits that high and low levels of the visual hierarchy interact in evaluating both figures and grounds in order to produce a final percept.

Notes

Although readers of this article might easily be able to perceive these real-world objects, the participants in this study were naïve: They were not informed about figure–ground segregation prior to the experiment. For more information, see the Method section.

Peterson and Skow (2008) found evidence of inhibition only when the well-known objects were suggested on the ground side of the silhouette’s borders; evidence of facilitation was obtained when they were sketched on the figure side, ruling out alternative explanations of their results.

Similar patterns were evident in the RTs and errors. RTs were significantly faster for same- versus different-category words when the silhouettes were upright, t(33) = 3.64, p < .01, but not when they were inverted, p > .11. The interaction between condition and orientation was not significant in an ANOVA performed on RTs alone, however, F(1, 33) = 2.51, p > .12. Error rates were significantly lower for same- than for different-category trials when the silhouettes were upright, t(33) = 2.23, p < .05, but not when they were inverted, p > .10. In addition, error rates were significantly lower for same-category trials when the silhouettes were upright than when they were inverted, t(33) = 2.42, p < .05. The interaction between condition and orientation was not significant in an ANOVA performed on errors alone, however, F(1, 33) = 2.73, p > .11.
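For readers who want to see how statistics of this form are computed, the following is a minimal sketch, not the analysis code used in this study. It computes a paired-samples t statistic and the F(1, n − 1) for the interaction in a 2 × 2 within-subjects design, which with two levels per factor equals the squared one-sample t on each participant's difference of differences. The function names and the five sets of RT values are hypothetical and were made up purely for illustration.

```python
import math
import statistics


def paired_t(a, b):
    """Return (t, df) for a paired-samples t test on a - b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return statistics.mean(diffs) / se, n - 1


def interaction_f(up_same, up_diff, inv_same, inv_diff):
    """Return (F, (df1, df2)) for the 2 x 2 within-subjects interaction.

    Each participant contributes one contrast score: the category effect
    for upright silhouettes minus the category effect for inverted ones.
    """
    contrast = [
        (ud - us) - (idf - isa)
        for us, ud, isa, idf in zip(up_same, up_diff, inv_same, inv_diff)
    ]
    n = len(contrast)
    se = statistics.stdev(contrast) / math.sqrt(n)
    t = statistics.mean(contrast) / se
    return t * t, (1, n - 1)


# Hypothetical mean RTs (ms) for five imaginary participants; NOT data from this study.
up_same = [600, 620, 580, 640, 610]
up_diff = [630, 640, 615, 660, 650]
inv_same = [615, 630, 600, 650, 625]
inv_diff = [620, 628, 610, 655, 630]

t_upright, df_upright = paired_t(up_diff, up_same)
f_inter, df_inter = interaction_f(up_same, up_diff, inv_same, inv_diff)
print(f"upright category effect: t({df_upright}) = {t_upright:.2f}")
print(f"condition x orientation: F{df_inter} = {f_inter:.2f}")
```

Obtaining p values would additionally require the t and F distributions (e.g., from a statistics package), which this sketch deliberately omits.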

A 2 × 2 within-subjects ANOVA on mean RTs with the factors Category Condition (same/different) and Orientation (upright/inverted) revealed no significant main effects or interactions, ps > .18. Moreover, t tests revealed no significant differences between any two conditions, ps > .10. The same analysis conducted on participants’ error rates also showed no significant main effects or interactions, ps > .73. Additionally, t tests revealed no significant differences between any of the conditions, ps > .80. See Table 1 for the mean RTs and error rates.

A 2 × 2 within-subjects ANOVA on mean RTs with the factors Category Condition (same/different) and Orientation (upright/inverted) revealed no significant differences, ps > .14. The same analysis conducted on participants’ error rates also showed no significant main effects or interactions, ps > .65. See Table 1 for the mean RTs and error rates.

Even if competition does extend to semantics, there would be little competition in our stimuli, because the insides of the silhouettes portrayed novel shapes that lacked meaning, and thus, their engagement in competition with the meaningful grounds at the level of semantics would be negligible.

References

Bueno, S., & Frenck-Mestre, C. (2008). The activation of semantic memory: Effects of prime exposure, prime–target relationship, and task demands. Memory & Cognition, 36, 882–898. doi:10.3758/MC.36.4.882

Bullier, J. (2001). Integrated model of visual processing. Brain Research Reviews, 36, 96–107.

Clarke, A., Taylor, K. I., Devereux, B., Randall, B., & Tyler, L. K. (2013). From perception to conception: How meaningful objects are processed over time. Cerebral Cortex, 23, 187–197.

Costello, P., Jiang, Y., Baartman, B., McGlennen, K., & He, S. (2009). Semantic and subword priming during binocular suppression. Consciousness and Cognition, 18, 375–382. doi:10.1016/j.concog.2009.02.003

Dehaene, S., Naccache, L., Le Clec’H, G., Koechlin, E., Mueller, M., Dehaene-Lambertz, G., & Le Bihan, D. (1998). Imaging unconscious semantic priming. Nature, 395, 597–600. doi:10.1038/26967

Dell’Acqua, R., & Grainger, J. (1999). Unconscious semantic priming from pictures. Cognition, 73, B1–B15. doi:10.1016/S0010-0277(99)00049-9

Dell’Acqua, R., Sessa, P., Peressotti, F., Mulatti, C., Navarrete, E., & Grainger, J. (2010). ERP evidence for ultra-fast semantic processing in the picture–word interference paradigm. Frontiers in Psychology, 1, 177. doi:10.3389/fpsyg.2010.00177

Fabre-Thorpe, M. (2011). The characteristics and limits of rapid visual categorization. Frontiers in Psychology, 2, 243. doi:10.3389/fpsyg.2011.00243

Forster, K. I., & Davis, C. (1984). Repetition priming and frequency attenuation in lexical access. Journal of Experimental Psychology: Learning, Memory, and Cognition, 10, 680–698. doi:10.1037/0278-7393.10.4.680

Forster, K. I., & Forster, J. C. (2003). DMDX: A windows display program with millisecond accuracy. Behavior Research Methods, Instruments, & Computers, 35, 116–124. doi:10.3758/BF03195503

Forster, K. I., Mohan, K., & Hector, J. (2003). The mechanics of masked priming. In S. Kinoshita & S. J. Lupker (Eds.), Masked priming: The state of the art (pp. 2–21). New York, NY: Psychology Press.

Gibson, B. S., & Peterson, M. A. (1994). Does orientation-independent object recognition precede orientation-dependent recognition? Evidence from a cueing paradigm. Journal of Experimental Psychology: Human Perception and Performance, 20, 299–316.

Goodhew, S. C., Visser, T. A., Lipp, O. V., & Dux, P. E. (2011). Competing for consciousness: Prolonged mask exposure reduces object substitution masking. Journal of Experimental Psychology: Human Perception and Performance, 37, 588–596. doi:10.1037/a0018740

Gould, I. C., Rushworth, M. F., & Nobre, A. C. (2011). Indexing the graded allocation of visuospatial attention using anticipatory alpha oscillations. Journal of Neurophysiology, 105, 1318–1326. doi:10.1152/jn.00653.2010

Greenwald, A. G., Draine, S. C., & Abrams, R. L. (1996). Three cognitive markers of unconscious semantic activation. Science, 273, 1699–1702. doi:10.1126/science.273.5282.1699

Grill-Spector, K., & Kanwisher, N. (2001). Common cortical mechanisms for different components of visual object recognition: A combined behavioral and fMRI study. Journal of Vision, 1(3), 474. doi:10.1167/1.3.474

Grossberg, S. (1994). 3-D vision and figure–ground separation by visual cortex. Perception & Psychophysics, 55, 48–120. doi:10.3758/BF03206880

Hebb, D. O. (1949). The organization of behavior: A neuropsychological approach. New York, NY: Wiley.

Huckauf, A., Knops, A., Nuerk, H. C., & Willmes, K. (2008). Semantic processing of crowded stimuli? Psychological Research, 72, 648–656.

Hutchison, K. A. (2003). Is semantic priming due to association strength or feature overlap? A microanalytic review. Psychonomic Bulletin & Review, 10, 785–813. doi:10.3758/BF03196544

Jolicœur, P. (1985). The time to name disoriented natural objects. Memory & Cognition, 13, 289–303.

Kennett, S., & Driver, J. (2014). Within-hemifield posture changes affect tactile–visual exogenous spatial cueing without spatial precision, especially in the dark. Attention, Perception, & Psychophysics, 76, 1121–1135. doi:10.3758/s13414-013-0484-3

Kiefer, M., & Spitzer, M. (2000). Time course of conscious and unconscious semantic brain activation. NeuroReport, 11, 2401–2407.

Kienker, P. K., Sejnowski, T. J., Hinton, G. E., & Schumacher, L. E. (1986). Separating figure from ground with a parallel network. Perception, 15, 197–216. doi:10.1068/p150197

Kimchi, R., & Peterson, M. A. (2008). Figure–ground segmentation can occur without attention. Psychological Science, 19, 660–668. doi:10.1111/j.1467-9280.2008.02140.x

Koffka, K. (1935). Principles of Gestalt psychology. New York, NY: Harcourt.

Köhler, W. (1947). Gestalt psychology: An introduction to new concepts in modern psychology. New York, NY: Liveright. (Original work published 1929)

Koivisto, M., & Revonsuo, A. (2007). How meaning shapes seeing. Psychological Science, 18, 845–849. doi:10.1111/j.1467-9280.2007.01989.x

Kriegeskorte, N., Mur, M., Ruff, D. A., Kiani, R., Bodurka, J., Esteky, H., & Bandettini, P. A. (2008). Matching categorical object representations in inferior temporal cortex of man and monkey. Neuron, 60, 1126–1141. doi:10.1016/j.neuron.2008.10.043

Kurbat, M. A. (1997). Can the recognition of living things really be selectively impaired? Neuropsychologia, 35, 813–827.

Lamme, V. A. F., & Roelfsema, P. R. (2000). The distinct modes of vision offered by feedforward and recurrent processing. Trends in Neurosciences, 23, 571–579. doi:10.1016/S0166-2236(00)01657-X

Likova, L. T., & Tyler, C. W. (2008). Occipital network for figure/ground organization. Experimental Brain Research, 189, 257–267. doi:10.1007/s00221-008-1417-6

Liu, H., Agam, Y., Madsen, J. R., & Kreiman, G. (2009). Timing, timing, timing: Fast decoding of object information from intracranial field potentials in human visual cortex. Neuron, 62, 281–290.

Lucas, M. (2000). Semantic priming without association: A meta-analytic review. Psychonomic Bulletin & Review, 7, 618–630. doi:10.3758/BF03212999

Luck, S. J., Vogel, E. K., & Shapiro, K. L. (1996). Word meanings can be accessed but not reported during the attentional blink. Nature, 383, 616–618. doi:10.1038/383616a0

Macé, M. J.-M., Joubert, O. R., Nespoulous, J.-L., & Fabre-Thorpe, M. (2009). The time-course of visual categorizations: You spot the animal faster than the bird. PLoS ONE, 4(e5927), 1–12. doi:10.1371/journal.pone.0005927

McRae, K., & Boisvert, S. (1998). Automatic semantic similarity priming. Journal of Experimental Psychology: Learning, Memory, and Cognition, 24, 558–572. doi:10.1037/0278-7393.24.3.558

Mohan, K., & Arun, S. P. (2012). Similarity relations in visual search predict rapid visual categorization. Journal of Vision, 12(11), 19, 1–24. doi:10.1167/12.11.19

Naccache, L., Blandin, E., & Dehaene, S. (2002). Unconscious masked priming depends on temporal attention. Psychological Science, 13, 416–424. doi:10.1111/1467-9280.00474

Navon, D. (2011). The effect of recognizability on figure–ground processing: Does it affect parsing or only figure selection? Quarterly Journal of Experimental Psychology, 64, 608–624. doi:10.1080/17470218.2010.516834

Oram, M. W., & Perrett, D. I. (1992). Time course of neural responses discriminating different views of the face and head. Journal of Neurophysiology, 68, 70–84.

Peterson, M. A., & Cacciamani, L. (2013). Toward a dynamical view of object perception. In S. J. Dickinson & Z. Pizlo (Eds.), Shape perception in human and computer vision: An interdisciplinary perspective (pp. 443–457). London, UK: Springer.

Peterson, M. A., Cacciamani, L., Mojica, A. J., & Sanguinetti, J. S. (2012). Meaning can be accessed for the ground side of a figure. Journal of Gestalt Theory, 34, 297–314.

Peterson, M. A., & Gibson, B. S. (1994a). Must figure–ground organization precede object recognition? An assumption in peril. Psychological Science, 5, 253–259.

Peterson, M. A., & Gibson, B. S. (1994b). Object recognition contributions to figure–ground organization: Operations on outlines and subjective contours. Perception & Psychophysics, 56, 551–564.

Peterson, M. A., Harvey, E. M., & Weidenbacher, H. J. (1991). Shape recognition contributions to figure–ground reversal: Which route counts? Journal of Experimental Psychology: Human Perception and Performance, 17, 1075–1089. doi:10.1037/0096-1523.17.4.1075

Peterson, M. A., & Kim, J. H. (2001). On what is bound in figures and grounds. Visual Cognition, 8, 329–348. doi:10.1080/13506280143000034

Peterson, M. A., & Kimchi, R. (2013). Perceptual organization. In D. Reisberg (Ed.), The Oxford handbook of cognitive psychology (pp. 9–31). Oxford, UK: Oxford University Press.

Peterson, M. A., & Skow, E. (2008). Inhibitory competition between shape properties in figure–ground perception. Journal of Experimental Psychology: Human Perception and Performance, 34, 251–267. doi:10.1037/0096-1523.34.2.251

Plaut, D. C. (1995). Semantic and associative priming in a distributed attractor network. In J. D. Moore, J. F. Lehman, & A. Lesgold (Eds.), Proceedings of the Seventeenth Annual Conference of the Cognitive Science Society (Vol. 17, pp. 37–42). Hillsdale, NJ: Erlbaum.

Raposo, A., Mendes, M., & Marques, J. F. (2012). The hierarchical organization of semantic memory: Executive function in the processing of superordinate concepts. NeuroImage, 59, 1870–1878.

Riesenhuber, M., & Poggio, T. (1999). Hierarchical models of object recognition in cortex. Nature Neuroscience, 2, 1019–1025. doi:10.1038/14819

Romei, V., Driver, J., Schyns, P. G., & Thut, G. (2011). Rhythmic TMS over parietal cortex links distinct brain frequencies to global versus local visual processing. Current Biology, 21, 334–337.

Salvagio, E., Cacciamani, L., & Peterson, M. A. (2012). Competition-strength-dependent ground suppression in figure–ground perception. Attention, Perception, & Psychophysics, 74, 964–978. doi:10.3758/s13414-012-0280-5