Author Contributions

Conceptualization, V.C. and M.R.; Methodology, V.C.; Software, V.C., A.P. (Agnese Pasqualetti) and D.B.; Validation, D.B. and A.P. (Agnese Pasqualetti); Formal analysis, D.B. and A.P. (Agnese Pasqualetti); Investigation, V.C., D.B. and A.P. (Agnese Pasqualetti); Resources, M.R.; Data curation, M.R., V.C., D.B. and A.P. (Agnese Pasqualetti); Writing—original draft preparation, V.C. and M.R.; Writing—review and editing, V.C., M.G.B., G.C., A.P. (Andrea Piemonte) and M.R.; Visualization, M.R., V.C., D.B. and A.P. (Agnese Pasqualetti); Supervision, V.C., M.G.B., G.C., A.P. (Andrea Piemonte) and M.R.; Project administration, M.G.B., G.C., A.P. (Andrea Piemonte) and M.R. The draft preparation was divided as follows:

Section 1. Introduction, V.C.; 2.1. State-of-the-art: 3D surveying and modeling of grid structures of the late 19th–20th–21st century, G.C. and A.P. (Andrea Piemonte); 2.2. State-of-the-art: Supervised ML and CNN-based classification methods, V.C.; 3.1. Materials: La Vela spatial grid structure, M.R.; 3.2. 3D surveying and data acquisition, M.R.; 4.1. Supervised machine learning for the classification of laser scan data, A.P. (Agnese Pasqualetti); 4.2. Deep learning for automated masking of UAV images prior to photogrammetric processing, D.B.; 4.3. Data fusion and construction of the BIM model, V.C.; 5.1. Multi-level classification of laser scan data, A.P. (Agnese Pasqualetti); 5.2. Classification of UAV images and improvement of the photogrammetric point cloud, D.B.; 5.3. Data fusion and construction of the BIM model, V.C.; 6. Conclusions, M.G.B. All authors have read and agreed to the published version of the manuscript.

Figure 1.

Examples of architectural structures based on space grid structures.

Figure 2.

La Vela spatial grid structure.

Figure 3.

Original project panels of La Vela.

Figure 4.

Schematic diagram of the structure with nodes and grids classification (left image), topographic network, reference system, and GCP (right image).

Figure 5.

Diagram of the RPAS survey with the photogrammetric blocks acquired in the eight flights, and three images (two nadir and one oblique, from block 4) showing high contrast between the structure and the background.

Figure 6.

Distribution diagram of the 3D scanning stations and the complete range-based point cloud.

Figure 7.

Integration of the active (intrados) and passive (extrados) survey techniques. The diagram highlights the variable GSD and the filtering sphere (dashed red circle) applied in the range-based data cleaning, with a radius fixed at 30 m.
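The 30 m filtering sphere can be sketched as a distance threshold around a scanner station. This is a minimal sketch, assuming that points farther than 30 m from the station that captured them are discarded during range-based data cleaning; the function name `filter_by_station_radius` and the NumPy array layout are hypothetical, not the authors' implementation.

```python
import numpy as np


def filter_by_station_radius(points: np.ndarray, station: np.ndarray, radius: float = 30.0) -> np.ndarray:
    """Keep only the points lying inside a sphere centred on the scanner station.

    points  -- (N, 3) array of XYZ coordinates in metres (hypothetical layout)
    station -- (3,) XYZ position of the scanner station in the same reference system
    radius  -- sphere radius in metres; 30 m as stated in the Figure 7 caption
    """
    # Euclidean distance of every point from the station centre
    distances = np.linalg.norm(points - station, axis=1)
    # Assumption: points outside the sphere are treated as too noisy and dropped
    return points[distances <= radius]


if __name__ == "__main__":
    station = np.array([0.0, 0.0, 1.5])
    scan = np.array([
        [10.0, 0.0, 1.5],   # 10 m from the station: kept
        [0.0, 29.0, 5.0],   # about 29.2 m from the station: kept
        [40.0, 0.0, 1.5],   # 40 m from the station: discarded
    ])
    print(filter_by_station_radius(scan, station))
```

In practice each scan would be filtered against its own station position before registration into the common reference system.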

Figure 8.

Comparison of a single mesh between range-based and image-based data, and the resulting integration of the two systems. Outliers without overlap were excluded from the comparison.

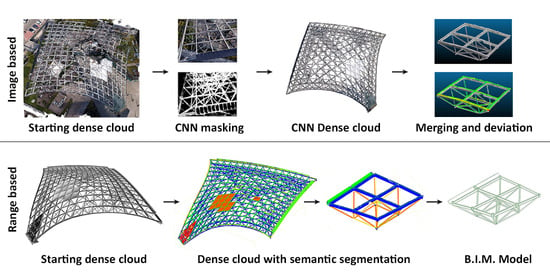

Figure 9.

Research process pipeline highlighting the main development steps.

Figure 10.

Tree diagram of the structure classification phase.

Figure 11.

Photogrammetric blocks divided into eight flight missions.

Figure 12.

Workflow for the automated masking of UAV images in photogrammetric processing.

Figure 13.

Creation of the training set masks.

Figure 14.

Two selected mesh grids for the LoR analysis.

Figure 15.

Training set at the first classification level.

Figure 16.

Classified point cloud, first level of classification.

Figure 17.

Training set and classification results at the second classification level: Classes 12 and 13.

Figure 18.

Training set and classification results at the second classification level: Classes 10 and 11.

Figure 19.

Training set and classification results at the third classification level: Classes 12 and 13.

Figure 20.

Spherical and cylindrical nodes of the TLS point cloud.

Figure 21.

Timing comparison between the manual and ML-MR approaches.

Figure 22.

Masks resulting from the first attempt.

Figure 23.

The most significant masks resulting from the second attempt compared to those from the first attempt; images related to flight 8.

Figure 24.

Accuracy and loss graphs for the first training set.

Figure 25.

Brightness and contrast adjustment of the images from the UAV platform.
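The images were edited in Lightroom (Table 3); as a rough stand-in, a global brightness/contrast change can be written as the linear pixel transform out = α·in + β. This is an illustrative sketch only: the function name `adjust_brightness_contrast` and the parameter values are hypothetical, and Lightroom's own tone adjustments are not this simple linear mapping.

```python
import numpy as np


def adjust_brightness_contrast(image: np.ndarray, contrast: float = 1.2, brightness: float = 15.0) -> np.ndarray:
    """Apply out = contrast * in + brightness to an 8-bit image and clip to [0, 255].

    contrast   -- multiplicative gain (alpha); values > 1 stretch the tonal range
    brightness -- additive offset (beta) in grey levels
    Both defaults are arbitrary illustration values, not those used for the UAV images.
    """
    # Work in float to avoid uint8 overflow (wrap-around) during the arithmetic
    adjusted = image.astype(np.float32) * contrast + brightness
    # Round, clip back into the valid 8-bit range and restore the uint8 dtype
    return np.clip(np.rint(adjusted), 0, 255).astype(np.uint8)


if __name__ == "__main__":
    pixels = np.array([[0, 100, 250]], dtype=np.uint8)
    print(adjust_brightness_contrast(pixels))
```

Raising contrast this way saturates the brightest pixels (250 maps to 255 here), which is the trade-off to watch when trying to separate a light structure from a bright sky.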

Figure 26.

Masks resulting from the third attempt compared to those from the second attempt. Images related to flight 3.

Figure 27.

Masks obtained by summing the third-attempt masks with those of the second attempt.
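If the masks are binary (1 = structure, 0 = background), one plausible reading of the "sum" of the second- and third-attempt masks is a pixel-wise union: a pixel is kept whenever either attempt labels it as structure. This interpretation, the 0/1 encoding, and the function name `combine_masks` are assumptions made for illustration.

```python
import numpy as np


def combine_masks(mask_a: np.ndarray, mask_b: np.ndarray) -> np.ndarray:
    """Pixel-wise union of two binary (0/1) masks of identical shape.

    Summing 0/1 masks and clipping at 1 is equivalent to a logical OR,
    so a pixel is 1 whenever either attempt predicted the structure there.
    """
    if mask_a.shape != mask_b.shape:
        raise ValueError("masks must have the same shape")
    # Sum in uint8 (max value 2), then clip so the result stays binary
    summed = mask_a.astype(np.uint8) + mask_b.astype(np.uint8)
    return np.minimum(summed, 1)


if __name__ == "__main__":
    second_attempt = np.array([[1, 0], [0, 0]])
    third_attempt = np.array([[0, 0], [1, 0]])
    print(combine_masks(second_attempt, third_attempt))
```

A union recovers structure pixels that either network run missed, at the cost of also keeping either run's false positives.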

Figure 28.

Classification predictions on the test set for selected flights, for all of the attempts made.

Figure 29.

From left to right: dense cloud obtained with no prior image masking; dense cloud obtained via CNN-based image masking; dense cloud via the final rectification process.

Figure 30.

Comparison between the manual and AI masking times.

Figure 31.

Merging of the two total dense clouds from the TLS and RPAS surveys.

Figure 32.

Deviation analysis on the total dense point cloud between the TLS and RPAS data.
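A deviation between two clouds is commonly measured as a cloud-to-cloud (C2C) distance: for each point of the compared cloud, the distance to its nearest neighbour in the reference cloud. The sketch below uses a brute-force NumPy search suitable only for small samples; it is not necessarily the metric or software used for this analysis, and the function name is hypothetical.

```python
import numpy as np


def cloud_to_cloud_distances(compared: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Nearest-neighbour distance from each compared point to the reference cloud.

    compared  -- (N, 3) XYZ array, e.g. a sample of the RPAS cloud (metres)
    reference -- (M, 3) XYZ array, e.g. a sample of the TLS cloud (metres)
    Brute force: builds an (N, M) distance matrix, so keep N and M small;
    a k-d tree would be the usual choice for full-size clouds.
    """
    # Pairwise coordinate differences via broadcasting: shape (N, M, 3)
    diff = compared[:, None, :] - reference[None, :, :]
    # Euclidean distances, then the minimum over all reference points
    return np.linalg.norm(diff, axis=2).min(axis=1)


if __name__ == "__main__":
    tls = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
    rpas = np.array([[0.0, 0.0, 0.005], [1.0, 0.02, 0.0]])
    print(cloud_to_cloud_distances(rpas, tls))  # distances in metres
```

Multiplying the result by 1000 gives millimetres, the unit used for the reliability thresholds in Table 1.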

Figure 33.

Elements with a high deviation in the total dense cloud.

Figure 34.

The TLS and RPAS merged dense clouds for the two grids, with respective analysis of the deviation and reconstructed mesh.

Figure 35.

Examples of mesh reconstruction for the rectangular and tubular beam classes.

Figure 36.

Solid geometries built starting from the approximate mesh.

Figure 37.

Reconstructed model deviation analysis with respect to the survey data for the first mesh grid.

Figure 38.

Classified point cloud and corresponding BIM model for the two selected mesh grids.

Table 1.

Classes of reliability identified to evaluate the 3D reconstruction process.

| Level of Reliability | Deviation |
|---|---|
| High | ≤ 10 mm |
| Medium | > 10 mm and ≤ 20 mm |
| Low | > 20 mm |

Table 2.

Estimate of the ML-MR classification time via RF for a single classification level (total time required in bold).

| ML-MR Classification via Machine Learning | Minutes |
|---|---|
| 1. Creation of the training set | 120 |
| 2. Feature extraction and selection | 60 |
| 3. RF training | 60 |
| 4. Back interpolation | 30 |
| **Total time required** | **270 (4.5 h)** |
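Step 4 of Table 2, back interpolation, transfers the labels predicted on a subsampled cloud back to the full-resolution cloud. A minimal sketch, assuming each full-resolution point simply inherits the class of its nearest classified point (the exact interpolation rule is not stated here); the function name and arrays are hypothetical, and the brute-force search only suits small examples.

```python
import numpy as np


def back_interpolate_labels(full_points, sub_points, sub_labels):
    """Assign each full-resolution point the label of its nearest subsampled point.

    full_points -- (N, 3) XYZ array of the original, full-resolution cloud
    sub_points  -- (M, 3) XYZ array of the subsampled cloud that was classified
    sub_labels  -- (M,) class labels predicted on the subsampled cloud
    """
    full_points = np.asarray(full_points, dtype=float)
    sub_points = np.asarray(sub_points, dtype=float)
    sub_labels = np.asarray(sub_labels)
    # Squared distances between every full-res point and every subsampled point: (N, M)
    d2 = ((full_points[:, None, :] - sub_points[None, :, :]) ** 2).sum(axis=2)
    # Index of the nearest classified point for each full-res point
    nearest = d2.argmin(axis=1)
    return sub_labels[nearest]


if __name__ == "__main__":
    sub = [[0.0, 0.0, 0.0], [10.0, 0.0, 0.0]]
    labels = [12, 13]  # illustrative class numbers
    full = [[0.1, 0.0, 0.0], [9.5, 0.0, 0.0], [4.0, 0.0, 0.0]]
    print(back_interpolate_labels(full, sub, labels))
```

Classifying a subsampled cloud and then back-interpolating keeps the RF training and prediction cheap, which is consistent with this step taking the least time in the table.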

Table 3.

Time required for the automated image masking activities (total time in bold).

| AI Masking | Minutes | Working Days * |
|---|---|---|
| 1. Creation of the training set | 9,695 | 21 |
| 2. Editing in Lightroom | 80 | - |
| 3. Deep learning network training | 270 | - |
| 4. Mask rectification | 135 | - |
| **Total time required** | **10,180** | **21** |
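The total in Table 3 can be cross-checked from its rows, and the breakdown shows where the effort goes: creating the training set accounts for roughly 95% of the time. A short check using only the values reported in the table:

```python
# Minutes per activity, copied from Table 3
ai_masking_minutes = {
    "Creation of the training set": 9695,
    "Editing in Lightroom": 80,
    "Deep learning network training": 270,
    "Mask rectification": 135,
}

total = sum(ai_masking_minutes.values())
print(f"Total: {total} min")  # matches the 10,180 min reported
for step, minutes in ai_masking_minutes.items():
    # Share of the total time taken by each activity
    print(f"{step}: {minutes / total:.1%}")
```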

Table 4.

Comparison of the annotation times between manual masking and AI masking.

| Masking Method | Annotation Time |
|---|---|
| Manual masking | ≈100 working days (manual annotation of a single image ≈ 35 min) |
| AI masking | ≈21 working days |
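From the approximate figures in Table 4, the reduction in annotation effort can be expressed as a ratio and as a percentage saving. Both inputs are the rounded values from the table, so the results are approximate as well.

```python
manual_days = 100  # ≈100 working days, manual masking (Table 4)
ai_days = 21       # ≈21 working days, AI masking (Table 4)

speedup = manual_days / ai_days     # how many times faster AI masking is
saving = 1 - ai_days / manual_days  # fraction of annotation time saved

print(f"Speed-up: {speedup:.1f}x, time saved: {saving:.0%}")
```

With these inputs the script prints a speed-up of about 4.8x and a saving of about 79%.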

Table 5.

The LoR evaluation for the first grid, based on deviation from the survey data.

| Element | Deviation from Survey Data (mm) | Level of Reliability |
|---|---|---|
| Rectangular beams | 10 | High |
| Tubular beams | 4 | High |
| Spherical nodes | 13 | Medium |
| Cylindrical nodes | 40 | Low |
| Gutter | 15 | Medium |
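The thresholds of Table 1 map a deviation directly onto a level of reliability. The sketch below encodes them and reapplies them to the deviations of the first grid as a consistency check; the function name is illustrative, and values falling exactly on a boundary follow the "≤" convention of Table 1.

```python
def level_of_reliability(deviation_mm: float) -> str:
    """Map a deviation from the survey data (mm) to the LoR classes of Table 1."""
    if deviation_mm <= 10:
        return "High"
    if deviation_mm <= 20:
        return "Medium"
    return "Low"


# Deviations for the first grid, taken from Table 5
first_grid = {
    "Rectangular beams": 10,
    "Tubular beams": 4,
    "Spherical nodes": 13,
    "Cylindrical nodes": 40,
    "Gutter": 15,
}

for element, deviation in first_grid.items():
    print(f"{element}: {deviation} mm -> {level_of_reliability(deviation)}")
```

Every element receives the same level as listed in Table 5, so the reported classification is consistent with the Table 1 thresholds.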