High-Throughput Phenotyping of Soybean Maturity Using Time Series UAV Imagery and Convolutional Neural Networks

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Setup

2.2. Image Acquisition

2.3. Image Processing

2.4. Resolution

2.5. Data Augmentation

2.6. Model Development

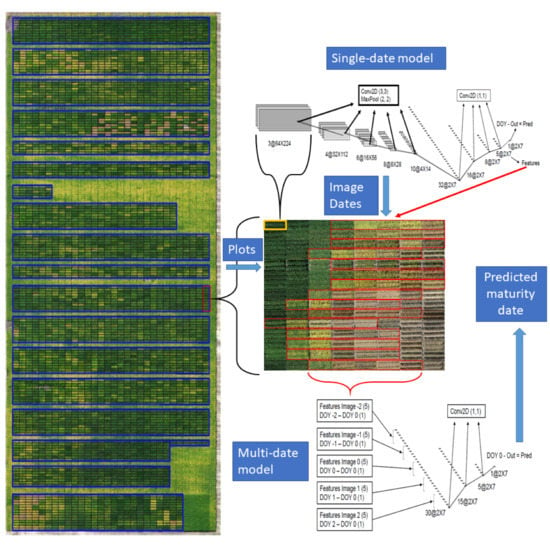

2.7. Single-Date Model Architecture

2.8. Multi-Date Model Architecture

2.9. Model Parameters

2.10. Model Training

2.11. Model Validation

2.12. Model Uncertainty

3. Results

3.1. Single-Date Model

3.2. Overall Performance

3.3. Resolution

3.4. Data Augmentation

3.5. Uncertainty

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Trial | Year | Location | Plot Length (m) | Plot Width (m) | #Plot * | #GTM * |
|---|---|---|---|---|---|---|
| T1 | 2018 | Savoy, IL-USA | 2.20 | 1 × 0.76 | 9360 | 9230 |
| T2 | 2019 | Champaign, IL-USA | 5.50 | 2 × 0.76 | 8608 | 1421 |
| T3 | 2019 | Arcola, IL-USA | 5.50 | 2 × 0.76 | 6272 | 1408 |
| T4 | 2019 | Litchfield, IL-USA | 5.50 | 2 × 0.76 | 6400 | 883 |
| T5 | 2019 | Rolândia, PR-Brazil | 5.50 | 2 × 0.50 | 7170 | 2680 |

| Trial | Images | Dates | Height (px/plot) | Width (px/plot) | Raw Data (GB) | Processed Data (GB) |
|---|---|---|---|---|---|---|
| T1 | 100 | 9 | 32 | 96 | 7.2 | 0.10 |
| T2 | 250 | 10 | 64 | 224 | 20.0 | 0.53 |
| T3 | 150 | 9 | 64 | 224 | 10.8 | 0.32 |
| T4 | 200 | 6 | 64 | 224 | 9.6 | 0.22 |
| T5 | 200 | 12 | 40 | 224 | 19.2 | 0.30 |

| Layer | Kernel Dim | Tensor Shape | Param # |
|---|---|---|---|
| Conv2D-S1 | [3,3,3] | [−1, 3, h, w] | 112 |
| Conv2D-S2 | [4,3,3] | [−1, 4, h/2, w/2] | 222 |
| Conv2D-S3 | [6,3,3] | [−1, 6, h/4, w/4] | 440 |
| Conv2D-S4 | [8,3,3] | [−1, 8, h/8, w/8] | 730 |
| Conv2D-S5 | [10,3,3] | [−1, 10, h/16, w/16] | 2912 |
| Conv2D-S6 | [32,1,1] | [−1, 32, h/32, w/32] | 528 |
| Conv2D-S7 | [16,1,1] | [−1, 16, h/32, w/32] | 136 |
| Conv2D-S8 | [8,1,1] | [−1, 8, h/32, w/32] | 45 |
| Conv2D-S9 | [5,1,1] | [−1, 5, h/32, w/32] | 6 |
| Total Single | | [−1, 1, h/32, w/32] | 5131 |
| Conv2D-M1 | [30,1,1] | [−1, 30, h/32, w/32] | 465 |
| Conv2D-M2 | [15,1,1] | [−1, 15, h/32, w/32] | 80 |
| Conv2D-M3 | [5,1,1] | [−1, 5, h/32, w/32] | 6 |
| Total Multi | | [−1, 1, h/32, w/32] | 551 |
| Total | | | 5682 |
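
The parameter counts in the architecture table can be checked by hand: a 2-D convolution with bias has c_in · c_out · k² + c_out parameters. The Python sketch below recomputes every row, assuming that "Kernel Dim" lists [input channels, kernel height, kernel width], that each layer's output channels equal the next layer's input channels, and that there are no batch-normalization parameters; under those assumptions each computed count matches the table. It also propagates the per-plot image sizes through the five halvings implied by the "Tensor Shape" column, assuming floor rounding (as with 2 × 2 max pooling), which the table does not specify. The names `SINGLE`, `MULTI`, `count_block`, and `output_size` are hypothetical helpers for illustration, not the authors' code.

```python
# Rows of the architecture table: (layer, input channels, kernel size, reported params).
# Assumption: "Kernel Dim" [c, k, k] gives the layer's input channels and kernel size.
SINGLE = [
    ("Conv2D-S1", 3, 3, 112),
    ("Conv2D-S2", 4, 3, 222),
    ("Conv2D-S3", 6, 3, 440),
    ("Conv2D-S4", 8, 3, 730),
    ("Conv2D-S5", 10, 3, 2912),
    ("Conv2D-S6", 32, 1, 528),
    ("Conv2D-S7", 16, 1, 136),
    ("Conv2D-S8", 8, 1, 45),
    ("Conv2D-S9", 5, 1, 6),
]
MULTI = [
    ("Conv2D-M1", 30, 1, 465),
    ("Conv2D-M2", 15, 1, 80),
    ("Conv2D-M3", 5, 1, 6),
]


def conv2d_params(c_in, c_out, k):
    """Weights (c_in * c_out * k * k) plus one bias per output channel."""
    return c_in * c_out * k * k + c_out


def count_block(rows, final_out=1):
    """Recompute per-layer parameter counts for a stack of convolutions.

    Assumption: a layer's output channels equal the next layer's input
    channels, and the last layer outputs `final_out` channels.
    """
    counts = []
    for i, (name, c_in, k, _reported) in enumerate(rows):
        c_out = rows[i + 1][1] if i + 1 < len(rows) else final_out
        counts.append((name, conv2d_params(c_in, c_out, k)))
    return counts


def output_size(h, w, n_halvings=5):
    """Spatial size after S1-S5, assuming each layer halves h and w with
    floor rounding (e.g. 2x2 max pooling); the text does not say how."""
    for _ in range(n_halvings):
        h, w = h // 2, w // 2
    return h, w


if __name__ == "__main__":
    for block in (SINGLE, MULTI):
        for (name, computed), row in zip(count_block(block), block):
            print(f"{name}: computed {computed}, reported {row[3]}")
    single_total = sum(p for _, p in count_block(SINGLE))
    multi_total = sum(p for _, p in count_block(MULTI))
    print(single_total, multi_total, single_total + multi_total)  # 5131 551 5682
    # Per-plot image sizes (height, width) taken from the image-acquisition table.
    for h, w in [(32, 96), (64, 224), (40, 224)]:
        print((h, w), "->", output_size(h, w))
```

Under the floor-rounding assumption, a 64 × 224 plot image yields a 2 × 7 output map and a 32 × 96 image a 1 × 3 map; a strided convolution with padding would round up instead, so sizes that are not multiples of 32 (such as the 40 px height of T5) depend on the implementation.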

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Trevisan, R.; Pérez, O.; Schmitz, N.; Diers, B.; Martin, N. High-Throughput Phenotyping of Soybean Maturity Using Time Series UAV Imagery and Convolutional Neural Networks. Remote Sens. 2020, 12, 3617. https://doi.org/10.3390/rs12213617