Challenges in UAS-Based TIR Imagery Processing: Image Alignment and Uncertainty Quantification

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Sites

2.2. Image Acquisition

2.3. Geometric Camera Calibration

2.4. Image Preprocessing

- For each individual image capture, the camera records multiple frames. To reduce the probability of blurry images, the frame with the highest image quality according to the Agisoft Image Quality tool was selected for further processing. This tool estimates image quality by returning a parameter that reflects the sharpness of the most focused part of the image [31].

- For flights performed in Fendt, where sufficient meteorological background data were available, the extracted frames were temperature corrected as proposed by Maes et al. [15]. In the correction equation, $T_c$ and $T_u$ are the corrected and uncorrected temperatures measured by the camera, respectively; $T_a$ is the air temperature at the moment of image capture; and $\overline{T_a}$ is the mean air temperature during the flight. Air temperature from the local weather station, recorded at a temporal resolution of 1 min, served as input for the correction.

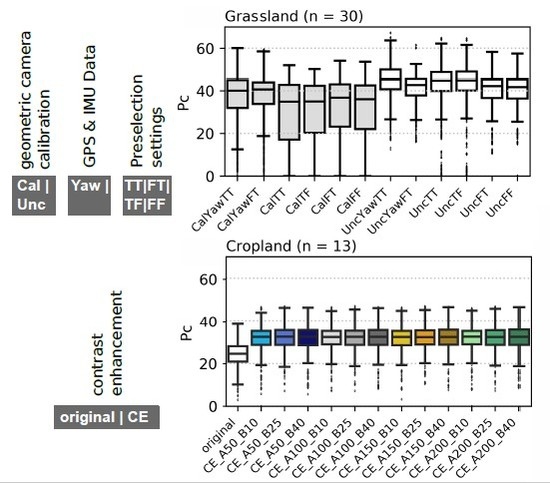

- We applied a contrast enhancement (CE) to the images using the adapted Wallis filter of Brad [24]. Based on a pre-analysis, we chose a window size of 17 for the local statistics calculation. We then tested different settings of the edge enhancement factor A and the uniform enhancement factor B within the range proposed by Brad [24]. The chosen parameter combinations are listed in Table 1.
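The two numerical preprocessing steps above can be illustrated with a minimal sketch. It rests on several assumptions that the text does not confirm: the temperature correction is taken to have the additive form $T_c = T_u - T_a + \overline{T_a}$, and the contrast enhancement is the textbook Wallis statistical differencing operator rather than the adapted variant of Brad [24]. The parameters `a_max`, `mean_weight`, `target_mean` and `target_sd` are hypothetical names and need not correspond to the factors A and B of the study; all function names are illustrative.

```python
from statistics import fmean, pstdev


def correct_flight_temperatures(frame_temps, capture_minutes, station_air_temp):
    """Apply the assumed form T_c = T_u - T_a + mean(T_a) to one flight.

    frame_temps      -- uncorrected camera temperatures T_u, one per frame
    capture_minutes  -- capture time of each frame as an integer minute index
    station_air_temp -- dict: minute index -> station air temperature (1 min steps)
    """
    flight_minutes = range(min(capture_minutes), max(capture_minutes) + 1)
    t_air_mean = fmean(station_air_temp[m] for m in flight_minutes)
    return [t_u - station_air_temp[m] + t_air_mean
            for t_u, m in zip(frame_temps, capture_minutes)]


def wallis_textbook(image, window, a_max, mean_weight, target_mean, target_sd):
    """Textbook Wallis filter on a 2D list of floats (not Brad's adapted variant).

    For every pixel, the local mean m and standard deviation s are computed in
    a window that is clipped at the image border. target_sd must be positive.
    """
    half = window // 2
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            patch = [image[i][j]
                     for i in range(max(0, r - half), min(rows, r + half + 1))
                     for j in range(max(0, c - half), min(cols, c + half + 1))]
            m = fmean(patch)
            s = pstdev(patch)
            # The gain is bounded by a_max in flat regions where s approaches 0.
            gain = a_max * target_sd / (a_max * s + target_sd)
            out[r][c] = ((image[r][c] - m) * gain
                         + mean_weight * target_mean + (1.0 - mean_weight) * m)
    return out


if __name__ == "__main__":
    station = {0: 20.0, 1: 21.0, 2: 22.0}  # illustrative values only
    print(correct_flight_temperatures([30.0, 30.0, 30.0], [0, 1, 2], station))
    frame = [[10.0, 12.0, 11.0], [13.0, 30.0, 12.0], [11.0, 12.0, 10.0]]
    print(wallis_textbook(frame, 3, 2.0, 0.5, 20.0, 5.0))
```

The pure-Python loops keep the sketch free of dependencies; for full-size frames an array library with box filters for the local statistics would be the practical choice.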

2.5. Image Alignment Analysis

2.6. Camera Uncertainty Quantification

3. Results

3.1. Impact of Image Preprocessing, IMU Data Inclusion, Geometric Camera Pre-Calibration, and Pre-Selection Options

3.2. Influence of Land Cover

3.3. Influence of Image Acquisition (Weather, SEA)

3.4. Camera Uncertainty Quantification from Tiepoint Extraction

4. Discussion

4.1. Contrast Enhancement

4.2. Camera Calibration

4.3. Pre-Selection Methods

4.4. SEA and Weather

4.5. Land Cover Effect

4.6. Camera Uncertainty Quantification

5. Conclusions

- For the creation of orthomosaics, high SEA are favorable because they yield higher image contrast. This is especially important for land cover types and weather conditions that are problematic for image alignment, such as forest or overcast sky conditions.

- When analyzing temperature differences throughout the day, the probability of aligned imagery is higher in the evening than in the morning for the same SEA.

- Results concerning land cover types reveal major problems of image alignment over forested areas. The analysis of our data set suggests acquiring images under clear sky conditions at maximal SEA. Related research on RGB imagery further proposes a higher resolution or flying at lower altitude with lower velocity. A high forward and side overlap yields better results.

- We propose a stabilization time of at least 60 s, or the addition of extra flight lines, to allow the sensor temperature to adapt to the air temperature at flight altitude [13].

- Contrast enhancement of the imagery significantly increases the number of detected features.

- The inclusion of geometric camera calibration estimates using a regular image pattern hampers image alignment and should be avoided.

- We recommend the application of both pre-selection methods supplied in Agisoft Metashape for aligning the imagery. If GPS information is not available for each image, we propose to only match features within overlapping areas, as implemented in the Generic Pre-selection option of Agisoft Metashape.

- We are further able to show that the inclusion of UAS orientation background data is not necessary for SEA higher than 30° and 40° on sunny and cloudy days, respectively. For SEA < 15°, the inclusion of UAS orientation information increases the probability of a high number of points in the point cloud.

- The proposed method of temperature correction after Maes et al. [15] did not lead to a reduction in camera uncertainty in either long-term or short-term noise.

- We strongly advocate for the uncertainty quantification of the camera and recommend the approach presented here. This approach also has the potential to identify and remove single images with high measurement uncertainty.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| CE | Contrast enhancement |
| UAS | Unmanned aerial system |
| SfM | Structure from Motion |
| NUC | Nonuniformity correction |
| FPA | Focal plane array |
| TIR | Thermal infrared |
| SIFT | Scale-Invariant Feature Transform |
| IQR | Interquartile range |
| IR | Infrared |
| RGB | Red Green Blue |
| SAZ | Sun azimuth angle |
| SEA | Sun elevation angle |

Appendix A

| f | cx | cy | B1 | B2 | k1 | k2 | k3 | k4 | P1 | P2 | P3 | P4 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 124.64 | 17.44 | 16.99 | 0.27 | 0.034 | −0.033 | −0.0014 | 0.005 | −0.001 | −1.092 × 10⁻⁶ | −1.001 × 10⁻⁶ | −20.51 | 89 |

References

1. Pachauri, R.K.; Allen, M.R.; Barros, V.R.; Broome, J.; Cramer, W.; Christ, R.; Church, J.A.; Clarke, L.; Dahe, Q.; Dasgupta, P.; et al. Climate Change 2014: Synthesis Report. Contribution of Working Groups I, II and III to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change; IPCC: Geneva, Switzerland, 2014.
2. Hu, T.; Renzullo, L.J.; van Dijk, A.I.; He, J.; Tian, S.; Xu, Z.; Zhou, J.; Liu, T.; Liu, Q. Monitoring agricultural drought in Australia using MTSAT-2 land surface temperature retrievals. Remote Sens. Environ. 2020, 236, 111419.
3. Langer, M.; Westermann, S.; Boike, J. Spatial and temporal variations of summer surface temperatures of wet polygonal tundra in Siberia-implications for MODIS LST based permafrost monitoring. Remote Sens. Environ. 2010, 114, 2059–2069.
4. Bai, L.; Long, D.; Yan, L. Estimation of surface soil moisture with downscaled land surface temperatures using a data fusion approach for heterogeneous agricultural land. Water Resour. Res. 2019, 55, 1105–1128.
5. Kumar, K.S.; Bhaskar, P.U.; Padmakumari, K. Estimation of land surface temperature to study urban heat island effect using LANDSAT ETM+ image. Int. J. Eng. Sci. Technol. 2012, 4, 771–778.
6. Masoudi, M.; Tan, P.Y. Multi-year comparison of the effects of spatial pattern of urban green spaces on urban land surface temperature. Landsc. Urban Plan. 2019, 184, 44–58.
7. Egea, G.; Verhoef, A.; Vidale, P.L. Towards an improved and more flexible representation of water stress in coupled photosynthesis–stomatal conductance models. Agric. Forest Meteorol. 2011, 151, 1370–1384.
8. Berni, J.; Zarco-Tejada, P.; Sepulcre-Cantó, G.; Fereres, E.; Villalobos, F. Mapping canopy conductance and CWSI in olive orchards using high resolution thermal remote sensing imagery. Remote Sens. Environ. 2009, 113, 2380–2388.
9. Brenner, C.; Thiem, C.E.; Wizemann, H.D.; Bernhardt, M.; Schulz, K. Estimating spatially distributed turbulent heat fluxes from high-resolution thermal imagery acquired with a UAV system. Int. J. Remote Sens. 2017, 38, 3003–3026.
10. Nieto, H.; Kustas, W.P.; Torres-Rúa, A.; Alfieri, J.G.; Gao, F.; Anderson, M.C.; White, W.A.; Song, L.; del Mar Alsina, M.; Prueger, J.H.; et al. Evaluation of TSEB turbulent fluxes using different methods for the retrieval of soil and canopy component temperatures from UAV thermal and multispectral imagery. Irrig. Sci. 2019, 37, 389–406.
11. Pádua, L.; Vanko, J.; Hruška, J.; Adão, T.; Sousa, J.J.; Peres, E.; Morais, R. UAS, sensors, and data processing in agroforestry: A review towards practical applications. Int. J. Remote Sens. 2017, 38, 2349–2391.
12. Olbrycht, R.; Więcek, B. New approach to thermal drift correction in microbolometer thermal cameras. Quant. InfraRed Thermogr. J. 2015, 12, 184–195.
13. Kelly, J.; Kljun, N.; Olsson, P.O.; Mihai, L.; Liljeblad, B.; Weslien, P.; Klemedtsson, L.; Eklundh, L. Challenges and best practices for deriving temperature data from an uncalibrated UAV thermal infrared camera. Remote Sens. 2019, 11, 567.
14. Mesas-Carrascosa, F.J.; Pérez-Porras, F.; Meroño de Larriva, J.E.; Mena Frau, C.; Agüera-Vega, F.; Carvajal-Ramírez, F.; Martínez-Carricondo, P.; García-Ferrer, A. Drift correction of lightweight microbolometer thermal sensors on-board unmanned aerial vehicles. Remote Sens. 2018, 10, 615.
15. Maes, W.H.; Huete, A.R.; Steppe, K. Optimizing the processing of UAV-based thermal imagery. Remote Sens. 2017, 9, 476.
16. Berni, J.A.; Zarco-Tejada, P.J.; Suárez, L.; Fereres, E. Thermal and narrowband multispectral remote sensing for vegetation monitoring from an unmanned aerial vehicle. IEEE Trans. Geosci. Remote Sens. 2009, 47, 722–738.
17. Ribeiro-Gomes, K.; Hernández-López, D.; Ortega, J.F.; Ballesteros, R.; Poblete, T.; Moreno, M.A. Uncooled thermal camera calibration and optimization of the photogrammetry process for UAV applications in agriculture. Sensors 2017, 17, 2173.
18. Torres-Rua, A. Vicarious calibration of sUAS microbolometer temperature imagery for estimation of radiometric land surface temperature. Sensors 2017, 17, 1499.
19. Schönberger, J.L.; Frahm, J. Structure-from-Motion Revisited. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 4104–4113.
20. Canon Deutschland GmbH. Unsere Auswahl an EOS DSLR-Kameras. Available online: https://www.canon.de/cameras/dslr-cameras-range (accessed on 30 March 2020).
21. Hoffmann, H.; Nieto, H.; Jensen, R.; Guzinski, R.; Zarco-Tejada, P.; Friborg, T. Estimating evaporation with thermal UAV data and two-source energy balance models. Hydrol. Earth Syst. Sci. 2016, 20, 697–713.
22. Pech, K.; Stelling, N.; Karrasch, P.; Maas, H. Generation of multitemporal thermal orthophotos from UAV data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, 1, 305–310.
23. Wallis, R.H. An approach for the space variant restoration and enhancement of images. In Proceedings of the Symposium on Current Mathematical Problems in Image Science, Monterey, CA, USA, 10–12 November 1976.
24. Brad, R. Satellite Image Enhancement by Controlled Statistical Differentiation. In Innovations and Advanced Techniques in Systems, Computing Sciences and Software Engineering; Elleithy, K., Ed.; Springer: Dordrecht, The Netherlands, 2008; pp. 32–36.
25. Brenner, C.; Zeeman, M.; Bernhardt, M.; Schulz, K. Estimation of evapotranspiration of temperate grassland based on high-resolution thermal and visible range imagery from unmanned aerial systems. Int. J. Remote Sens. 2018, 39, 5141–5174.
26. Luhmann, T.; Piechel, J.; Roelfs, T. Geometric calibration of thermographic cameras. In Thermal Infrared Remote Sensing; Springer: Dordrecht, The Netherlands, 2013; pp. 27–42.
27. Harwin, S.; Lucieer, A.; Osborn, J. The impact of the calibration method on the accuracy of point clouds derived using unmanned aerial vehicle multi-view stereopsis. Remote Sens. 2015, 7, 11933–11953.
28. Kiese, R.; Fersch, B.; Bassler, C.; Brosy, C.; Butterbach-Bahl, K.; Chwala, C.; Dannenmann, M.; Fu, J.; Gasche, R.; Grote, R.; et al. The TERENO Pre-Alpine Observatory: Integrating Meteorological, Hydrological, and Biogeochemical Measurements and Modeling. Vadose Zone J. 2018, 17, 180060.
29. Sands, P.J. Prediction of vignetting. J. Opt. Soc. Am. 1973, 63, 803–805.
30. Ciocia, C.; Marinetti, S. In-situ emissivity measurement of construction materials. In Proceedings of the 11th International Conference on Quantitative InfraRed Thermography, Naples, Italy, 11–14 June 2012.
31. Agisoft LLC. Agisoft Metashape User Manual; Professional edition; Version 1.5; AgiSoft LLC: Petersburg, Russia, 2019.
32. DWD Climate Data Center (CDC). Auswahl von 81 über Deutschland verteilte Klimastationen, im Traditionellen KL-Format, Version Recent. Available online: https://opendata.dwd.de/climate_environment/CDC/observations_germany/climate/subdaily/standard_format/ (accessed on 7 May 2020).
33. Turner, D.; Lucieer, A.; Wallace, L. Direct georeferencing of ultrahigh-resolution UAV imagery. IEEE Trans. Geosci. Remote Sens. 2013, 52, 2738–2745.
34. Fraser, B.T.; Congalton, R.G. Issues in Unmanned Aerial Systems (UAS) data collection of complex forest environments. Remote Sens. 2018, 10, 908.
35. Earth System Research Laboratory, NOAA. NOAA Solar Calculator. Available online: http://www.esrl.noaa.gov/gmd/grad/solcalc (accessed on 15 January 2020).
36. Wierzbicki, D.; Kedzierski, M.; Fryskowska, A. Assesment of the influence of uav image quality on the orthophoto production. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 1.
37. Ley, A.; Hänsch, R.; Hellwich, O. Reconstructing white walls: Multi-view, multi-shot 3d reconstruction of textureless surfaces. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 3, 91–98.
38. Ballabeni, A.; Apollonio, F.I.; Gaiani, M.; Remondino, F. Advances in Image Pre-processing to Improve Automated 3D Reconstruction. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 315–323.
39. Lagouarde, J.; Kerr, Y.; Brunet, Y. An experimental study of angular effects on surface temperature for various plant canopies and bare soils. Agric. Forest Meteorol. 1995, 77, 167–190.
40. Bellvert, J.; Zarco-Tejada, P.J.; Girona, J.; Fereres, E. Mapping crop water stress index in a ‘Pinot-noir’ vineyard: Comparing ground measurements with thermal remote sensing imagery from an unmanned aerial vehicle. Precis. Agric. 2014, 15, 361–376.
41. Dandois, J.P.; Ellis, E.C. High spatial resolution three-dimensional mapping of vegetation spectral dynamics using computer vision. Remote Sens. Environ. 2013, 136, 259–276.
42. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.
43. Deardorff, J.W. Efficient prediction of ground surface temperature and moisture, with inclusion of a layer of vegetation. J. Geophys. Res. Oceans 1978, 83, 1889–1903.
44. Highnam, R.; Brady, M. Model-based image enhancement of far infrared images. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 410–415.
45. Duffour, C.; Lagouarde, J.P.; Roujean, J.L. A two parameter model to simulate thermal infrared directional effects for remote sensing applications. Remote Sens. Environ. 2016, 186, 250–261.
46. Duffour, C.; Lagouarde, J.P.; Olioso, A.; Demarty, J.; Roujean, J.L. Driving factors of the directional variability of thermal infrared signal in temperate regions. Remote Sens. Environ. 2016, 177, 248–264.
47. Lark, R. Geostatistical description of texture on an aerial photograph for discriminating classes of land cover. Int. J. Remote Sens. 1996, 17, 2115–2133.
48. Dandois, J.P.; Olano, M.; Ellis, E.C. Optimal altitude, overlap, and weather conditions for computer vision UAV estimates of forest structure. Remote Sens. 2015, 7, 13895–13920.
49. Seifert, E.; Seifert, S.; Vogt, H.; Drew, D.; Van Aardt, J.; Kunneke, A.; Seifert, T. Influence of drone altitude, image overlap, and optical sensor resolution on multi-view reconstruction of forest images. Remote Sens. 2019, 11, 1252.
50. Jensen, A.M.; McKee, M.; Chen, Y. Procedures for processing thermal images using low-cost microbolometer cameras for small unmanned aerial systems. In Proceedings of the 2014 IEEE Geoscience and Remote Sensing Symposium, Quebec City, QC, Canada, 13–18 July 2014; pp. 2629–2632.

| | CE | A | B | Yaw | Geom. cal. | Gen. Pres. | Ref. Pres. | Example |
|---|---|---|---|---|---|---|---|---|
| Combinations | No, Yes | 50, 100, 150, 200 | 10, 25, 40 | Yes, No | calibrated, on-job | Yes, No | Yes, No | CE = Yes, A = 100, B = 10, No, No, T, F |
| Naming | original, CE | Ax | By | Yaw, (none) | Cal, Unc | T, F | T, F | CE_A100_B10 UncTF |
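The naming scheme in the table above can be made concrete with a short sketch that enumerates the option combinations and builds a run name for each. Two points are assumptions, not statements from the table: that the options were combined as a full factorial design, and that A and B only apply when contrast enhancement is switched on. The function and constant names are hypothetical.

```python
from itertools import product

A_VALUES = (50, 100, 150, 200)  # edge enhancement factor A
B_VALUES = (10, 25, 40)         # uniform enhancement factor B

# None stands for the original imagery without contrast enhancement;
# every other entry is an (A, B) pair used for contrast enhancement.
PREPROCESSING = [None] + list(product(A_VALUES, B_VALUES))


def run_name(ce_params, yaw, calibrated, generic_presel, reference_presel):
    """Build a run name following the pattern of the example in the table."""
    prefix = "original" if ce_params is None else "CE_A{}_B{}".format(*ce_params)
    flags = "".join([
        "Yaw" if yaw else "",              # yaw data included or omitted
        "Cal" if calibrated else "Unc",    # pre-calibrated or on-job calibration
        "T" if generic_presel else "F",    # generic pre-selection
        "T" if reference_presel else "F",  # reference pre-selection
    ])
    return f"{prefix} {flags}"


def all_runs():
    """Enumerate every combination, assuming a full factorial design."""
    switches = (True, False)
    return [run_name(ce, yaw, cal, gen, ref)
            for ce, yaw, cal, gen, ref
            in product(PREPROCESSING, switches, switches, switches, switches)]


if __name__ == "__main__":
    print(run_name((100, 10), False, False, True, False))
    print(len(all_runs()))
```

Under these assumptions the grid holds 13 preprocessing variants times 16 switch combinations; the study may have run only a subset.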

| Land Cover Class | Mean Pc | Median Pc | SD Pc | Mean SD within Images | # Flights |
|---|---|---|---|---|---|
| Grassland | 47.3 | 45.5 | 3.5 | 1.43 | 7 |
| Cropland | 35.8 | 35.7 | 1.6 | 2.46 | 5 |
| Forest | 24.8 | 25.8 | 9.5 | 2.87 | 4 |

| Temperature Correction | t | mMean | m\|Mean\| | mSD |
|---|---|---|---|---|
| Yes | Max 4 s | −0.01 | 1.20 | 0.94 |
| Yes | Min 50 s | −0.10 | 1.61 | 0.96 |
| No | Max 4 s | −0.01 | 1.20 | 0.94 |
| No | Min 50 s | −0.09 | 1.61 | 0.96 |
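One plausible reading of the column headers in the table above is sketched below; it is an interpretation, not the documented procedure. It assumes that each image pair contributes a list of temperature differences measured at shared tie points, that t is the time gap between the two captures, and that mMean, m|Mean| and mSD are averages over the per-pair mean, absolute mean and standard deviation. The population standard deviation is used here as a further assumption, and all names are hypothetical.

```python
from statistics import fmean, pstdev


def summarise_pairs(pairs, max_gap_s=None, min_gap_s=None):
    """Average per-pair statistics over the image pairs within a time-gap range.

    pairs -- list of (time_gap_s, diffs), where diffs holds the temperature
             differences at the tie points shared by the two images
    Returns (mMean, m|Mean|, mSD) as a tuple of floats.
    """
    selected = [diffs for gap, diffs in pairs
                if (max_gap_s is None or gap <= max_gap_s)
                and (min_gap_s is None or gap >= min_gap_s)]
    if not selected:
        raise ValueError("no image pairs fall inside the requested time gap")
    pair_means = [fmean(d) for d in selected]
    pair_sds = [pstdev(d) for d in selected]
    return (fmean(pair_means),
            fmean(abs(m) for m in pair_means),
            fmean(pair_sds))


if __name__ == "__main__":
    # Illustrative numbers only, not data from the study.
    demo = [(2, [1.0, -1.0]), (3, [2.0, 2.0]), (60, [-3.0, -1.0])]
    print(summarise_pairs(demo, max_gap_s=4))   # short-term noise
    print(summarise_pairs(demo, min_gap_s=50))  # long-term noise
```

Separating short and long time gaps in this way distinguishes frame-to-frame noise from slower sensor drift, which matches the two rows per correction setting in the table.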

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Döpper, V.; Gränzig, T.; Kleinschmit, B.; Förster, M. Challenges in UAS-Based TIR Imagery Processing: Image Alignment and Uncertainty Quantification. Remote Sens. 2020, 12, 1552. https://doi.org/10.3390/rs12101552