1. Introduction

Flooding at a regional scale poses a serious risk to lives and property, especially in or near low-lying areas; therefore, accurate diagnosis and prediction of flooding conditions (i.e., water depth and extent), as well as of factors that can modify these conditions (e.g., built features, surface water storage, and complex channel geometry), are critical for accurate forecasts of river conditions. Unfortunately, many of these factors exist at, and influence hydrologic characteristics over, small spatial scales, where they can modify and augment larger-scale processes related to atmospheric conditions. As a result, scale must be carefully considered when developing prediction and diagnostic strategies for microscale hydrologic applications, especially because high-spatial-resolution applications require more data in both space and time for initial and boundary conditions.

While river gauges provide invaluable point-scale water level/discharge estimates at select locations, the lack of real-time data on inundated areas adjacent to river channels is a major limitation to accurate assessment of existing flood conditions. Satellite-based products are often used to enhance the situational awareness of operational hydrologic forecasters, although issues related to low spatial and temporal resolution and cloud obstruction limit the usefulness of the products. Additionally, with the advent of operational distributed hydrologic models at high spatial resolution, such as the National Water Model (NWM), it is necessary to recognize and incorporate small-scale hydrologic and hydraulic features for improved model performance and accuracy.

Unmanned aerial vehicles (UAVs), along with the imaging and sensing equipment mounted on them (which together comprise an unmanned aerial system, or UAS), offer a compromise to the scale-dependent data requirements of hydrologic applications. The data are available at extremely high spatial resolution (centimeter scale), comparable to surface-based observations, yet can be collected over larger areas, comparable to satellite coverage. Given this combination of spatial extent and resolution, UAS-based observations provide a platform for data collection between the point scale (e.g., surface stations) and the regional scale (e.g., satellite data) [1]. As a result, UASs have proven to be valuable tools for monitoring surface characteristics, features, and processes in a variety of hydrologic and geomorphological applications, especially in regions that are difficult to access. [2] demonstrated the utility of UAV-based imagery in defining surface characteristics associated with flood risk in difficult-to-reach areas, namely high-resolution elevation data. Furthermore, [3] and [4] showed that UAV-based imagery played an important role in characterizing elevation, aspect, and stream distribution at high spatial resolution, which was useful in interpolating surface-based observations and spatially analyzing hydrologic response.

Many studies have used UAV imagery and photogrammetric techniques to observe topographic and geomorphological features and processes over landscapes with varying levels of environmental complexity [5,6,7,8,9]. [10] employed a small UAV to construct a high-spatial-resolution (5 cm) orthomosaic image and digital elevation model (DEM) to map a variety of surface and hydrologic features over a limited reach of the Elbow River in Alberta, Canada; these products were then used to initialize a two-dimensional hydrodynamic model. The authors noted that the primary disadvantages of using UAVs to map river features are vegetation obstruction of the surface and immature regulations, while the specific advantages include low cost, high efficiency and flexibility, and high spatial resolution.

In terms of direct applications of UAV platforms to hydrologic data collection and monitoring, [11] and [12] described the utility and uses of UAV technologies for river mapping and floodplain characterization. Additionally, [13,14] discussed the advantages, uses, and applications of UAV platforms for monitoring river levels. [14] applied image recognition technology to UAV-based imagery to determine water level in a complex hydrologic environment (a spillway downstream of a hydropower station) and found that the method recognized water level with high accuracy. This result, along with the flexibility and low cost of UAV platforms, indicates that the procedure could be used to determine water level and water surface variability in a variety of environments with complex topography, hydrologic characteristics, and/or flow regimes. Because floodplain characterization and elevation mapping are critical to developing accurate and representative hydrologic modeling frameworks, UAVs have also proven useful in surveying riverbanks and floodplains [15,16] and in generating DEMs over various scales and landscapes [17,18]. [19,20,21] further illustrated the accuracy and utility of UAV-based photogrammetry for mapping river systems and river conditions rapidly and at high resolution.

Along with their usefulness in monitoring hydrologic conditions and surface characteristics, UAVs also offer advantages over other remote sensing platforms (e.g., satellites and aircraft) in terms of initial and long-term cost [22,23]. While the various remote sensing platforms are often applied to different research objectives or serve specific data collection purposes, [24] noted a substantial decrease in the cost of UAV-based products relative to similar satellite-based products, while [25] quantified the savings of using unmanned vehicles rather than manned aircraft for repeated surface monitoring. Although satellites are superior in providing spatially extensive coverage with a high revisit frequency, the variety of available UAV and imaging assets allows for a variable cost–benefit ratio depending on the specific mission being planned and the type, extent, and quality of data required by the research objectives. Additionally, the increasing availability of payloads for UAV platforms (e.g., thermally calibrated infrared cameras, gas sensors, and microwave soil moisture sensors) provides multiple customization options to fit a variety of research frameworks, operational missions, and budgets. As more models reach the market and the cost of the underlying technology falls, UAS equipment is becoming a viable option for a wider range of research teams and applications. Further information on the cost–benefit aspects of UAVs, especially for hydrologic applications, can be found in [26].

The purpose of this paper is to outline and illustrate methods by which high-resolution data collected by UASs can be used beyond their initial operational applications, namely for microscale hydrologic analysis. The results not only highlight the advantages of applying UAS technology to operational hydrologic prediction but also quantify how and where additional improvements can be made to maximize the effectiveness and cost–benefit of UAS-based imagery for flood assessment and forecasting at the local scale. The work is based on imagery collected during missions conducted around Greenwood, MS, as part of a larger National Oceanic and Atmospheric Administration (NOAA) project, which is described in Section 2. Section 3 presents the results of the study and discusses the potential uses and impacts of UAS data in microscale applications, while Section 4 outlines future plans and paths forward for the use of UAVs and the associated data in hydrologic applications.

3. Results and Discussion

Although UAS-based imagery is becoming a common product for a variety of Earth science applications, its benefits for hydrology are best illustrated through microscale applications. While some of the benefits and uses of the data are well established, defining these applications with actual imagery helps to justify and illustrate how UASs can be used in various hydrologic research approaches.

3.1. Assess Current Conditions of River Channels and Surface Runoff

The first step in any approach to diagnosing and predicting hydrologic conditions is recognizing current river conditions, including flow rate, stage, and/or inundated extent. Although discharge cannot easily be measured directly from aerial imagery, inundated extent is readily measured from such visual data. From these inundation data, stage can be inferred, provided that a sufficient number and density of georeferenced elevation points are available across the landscape. Combined with river gauges that provide discharge, aerial data can be critical in defining and assessing current river conditions along and adjacent to a water body at a much finer spatial scale than gauges alone can provide.

The number of georeferenced ground control points (GCPs) needed to adequately define water height across a landscape depends on the accuracy required by the user and the variability of local topographic features. [31] suggest spacing GCPs at between 1/5 and 1/10 of the flight altitude, with the points distributed evenly, though not necessarily on a grid (especially where heights change substantially). Given the 4500 ft (1372 m) flight level of the study missions, this guidance indicates that GCPs should be placed roughly every 450–900 ft (137–274 m) to achieve the minimum vertical accuracy needed for water level determination. This was not feasible during the missions, especially given the existing flood conditions; however, future missions could use known objects with positional data as GCPs. Furthermore, [32] note that adding GCPs along the edges of flight runs can help improve accuracy if an evenly spaced network of points is not available.
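The spacing guidance attributed to [31] can be turned into a quick planning check. The sketch below reads that guidance as a distance between neighboring GCPs of 1/10 to 1/5 of flight altitude and counts how many points an evenly spaced rectangular layout would need; the function names and the 2 km by 1 km block in the usage example are hypothetical.

```python
import math

FT_TO_M = 0.3048  # international foot


def gcp_spacing_range(altitude_m: float) -> tuple[float, float]:
    """(closest, widest) GCP spacing in meters, taken as 1/10 and 1/5 of altitude."""
    return altitude_m / 10.0, altitude_m / 5.0


def gcp_count(width_m: float, length_m: float, spacing_m: float) -> int:
    """Points needed for an evenly spaced grid that includes both edges of the block."""
    nx = math.ceil(width_m / spacing_m) + 1
    ny = math.ceil(length_m / spacing_m) + 1
    return nx * ny


altitude = 4500 * FT_TO_M  # study flight level, about 1372 m
fine, coarse = gcp_spacing_range(altitude)
print(f"spacing {fine:.1f}-{coarse:.1f} m")
print(gcp_count(2000, 1000, fine), gcp_count(2000, 1000, coarse))
```

A regular grid is only a convenient upper bound; in practice points would be added where relief changes and along run edges, as noted above.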

For microscale hydrologic applications, high-resolution imagery is required to define detailed flow patterns within a watershed. This is especially true in areas with low topographic relief, where a small change in stage can produce a large change in horizontal flooded area, and in areas with complex surface features, such as cities or road intersections, where flow direction changes over small spatial scales. By mapping areas that are flooded at a given stage and/or discharge, microscale surface conditions related to surface runoff direction, floodplain storage, and active routing channels (including channel structure) can be identified and tied to the corresponding flow conditions.
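The sensitivity of flooded area to stage in low-relief terrain can be made concrete with an idealized floodplain that rises at a uniform cross-slope S: a stage rise Δh moves the water edge laterally by Δh/S on each bank. The slopes and reach length below are illustrative values, not measurements from the study area, and the function names are hypothetical.

```python
def lateral_advance_m(stage_rise_m: float, cross_slope: float) -> float:
    """Horizontal distance the water edge moves on a uniform planar slope (m/m)."""
    if cross_slope <= 0:
        raise ValueError("cross_slope must be positive")
    return stage_rise_m / cross_slope


def added_area_km2(stage_rise_m: float, cross_slope: float, reach_length_m: float) -> float:
    """Extra flooded area along a straight reach, counting both banks."""
    return 2 * lateral_advance_m(stage_rise_m, cross_slope) * reach_length_m / 1e6


# A 10 cm rise along a 1 km reach: gently sloping fields vs. a steeper valley side.
for slope in (0.0005, 0.01):
    advance = lateral_advance_m(0.1, slope)
    area = added_area_km2(0.1, slope, 1000)
    print(f"S={slope}: edge moves {advance:.0f} m, adds {area:.2f} km^2")
```

The same 10 cm rise floods twenty times more ground on the gentler slope, which is why gauge stage alone says little about extent in flat agricultural terrain.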

Using the Feb. 24, 2019 flood near Greenwood, MS as an example, Figure 4 shows the inundated area over an agricultural landscape with minimal topographic relief, relative to non-flood conditions on Jan. 16, 2019. On Feb. 24, the Yazoo River at Greenwood, MS was at 38.1 ft (11.6 m), just below the record flood stage of 40.1 ft (12.2 m) [30]; however, it is difficult to relate river stage at the gauge location to flood conditions along minor tributaries and in low-lying areas. While the defined river channels provide some information about where inundation can be expected during over-bank conditions, the high-resolution imagery clearly shows the complex spatial patterns of flooded area over fields adjacent to the flow paths. Although the inundation is likely quite shallow, the aggregate volume of water stored on the landscape can quickly become substantial, potentially leading to errors in predictive models that neither recognize nor account for the stored water. Further examples are shown in Figure 5, where inundated areas show varying spatial correlation with recognized river channels (in this case, the National Hydrography Dataset). In Figure 5a, some channels show no surface water, while in Figure 5b there is surface water well removed from the channels. Such inconsistencies can lead to substantial errors in hydrologic modeling and planning, and for agricultural or emergency management, diagnosing and predicting flooding in areas like this is critical for a variety of economic, environmental, and safety reasons.

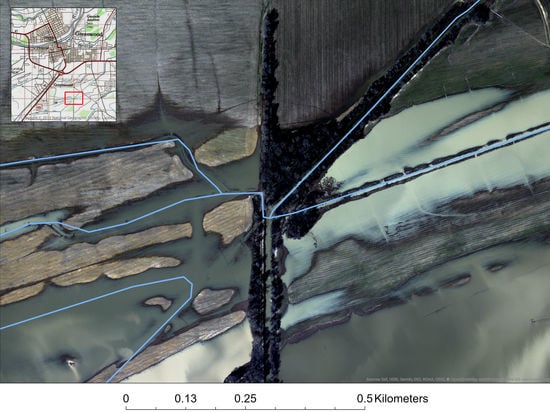

To better illustrate the utility of UAS data in assessing current surface hydrologic conditions, Figure 6 shows an example of a land-water mask denoting inundated area over an agricultural area northeast of Greenwood, MS. The low topographic relief and heavy vegetation in this area reduce the potential accuracy and utility of satellite estimates of inundation; however, applying an adjusted NDVI filter to the UAV imagery yields a highly detailed map of inundated area both along a river channel and across the adjacent floodplain. As mentioned previously, surface water storage in flooded fields poses a substantial problem when quantifying the volume of water moving along a channel, so defining both extent and depth, using elevation data together with the detailed land-water mask, allows for improved estimates of water volume at the field scale. Although such estimates are highly sensitive to the vertical accuracy of the elevation data, the areal extent of inundation does allow an approximate diagnosis of water stored within a floodplain (especially if GCPs with known elevations lie within the imaged area), supporting improved analysis of risk and damage.
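The land-water mask and storage estimate described above can be sketched as follows. NDVI is computed as (NIR - Red)/(NIR + Red), and open water typically has negative values; the threshold of 0.0 used here is an assumption standing in for the adjusted NDVI filter, whose settings are not given in this section. The storage function uses a simple "bathtub" assumption: depth is the difference between an estimated water-surface elevation and ground elevation, summed over wet cells. All names and sample values are hypothetical.

```python
import numpy as np


def ndvi(nir: np.ndarray, red: np.ndarray) -> np.ndarray:
    """Normalized difference vegetation index; cells with NIR + Red == 0 are set to 0."""
    nir = nir.astype(float)
    red = red.astype(float)
    total = nir + red
    out = np.zeros_like(total)
    np.divide(nir - red, total, out=out, where=total != 0)
    return out


def water_mask(nir: np.ndarray, red: np.ndarray, threshold: float = 0.0) -> np.ndarray:
    """Flag cells as water where NDVI falls below an (assumed) threshold."""
    return ndvi(nir, red) < threshold


def stored_volume_m3(dem: np.ndarray, wet: np.ndarray, water_surface_m: float,
                     cell_size_m: float) -> float:
    """Bathtub storage: sum of positive depths over wet cells times cell area."""
    depth = np.clip(water_surface_m - dem, 0.0, None)
    return float(depth[wet].sum() * cell_size_m ** 2)


nir = np.array([[10, 80], [12, 90]])
red = np.array([[30, 20], [25, 15]])
dem = np.array([[10.0, 10.6], [10.2, 10.8]])
wet = water_mask(nir, red)  # left column is classified as water
vol = stored_volume_m3(dem, wet, water_surface_m=10.5, cell_size_m=1.0)
print(wet)
print(f"stored volume: {vol:.2f} m^3")
```

Because every wet cell contributes (water surface minus ground) times cell area, a vertical error of a few centimeters across a large flooded field translates directly into a large volume error, consistent with the sensitivity noted above.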

High-resolution georeferenced imagery from UASs and the associated inundation maps, combined with observed gauge records at select points (if available and representative of the location), can provide a relatively complete description of the microscale hydrologic characteristics of a location. Furthermore, these data can be used to build an event database for future reference, which is critical for post-event analysis and for highlighting areas at risk under specific flow criteria. Current databases offer limited qualitative information for select events, such as “flooding of courthouse steps” or “inundated parking lot”; therefore, including high-resolution images, stage/discharge information, and maps and/or shapefiles of inundated area (along with estimated water elevation) would provide critical reference information during future events. This information could also be used to verify or calibrate hydrologic models to improve microscale water level prediction over select areas. Such data could be stored and disseminated through a dedicated web portal, although the size of the processed imagery may require tiling for easier delivery. As the number and types of applications using these data increase, specific products can be derived from the initial imagery and made available to various stakeholders. Although it may not always be feasible, the database would ideally be updated during and after each major flood to document inundation and any resulting modifications to the landscape.
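As one example of how archived UAS inundation maps could support model verification, the sketch below scores a modeled flood-extent grid against an observed land-water mask using the critical success index, CSI = hits / (hits + misses + false alarms). CSI is a common choice for flood-extent comparison, but it is our choice here rather than a metric used in this study; both grids are assumed to be co-registered boolean arrays, and the function name is hypothetical.

```python
import numpy as np


def flood_extent_scores(observed, modeled) -> dict:
    """Contingency counts and critical success index for two wet/dry grids."""
    obs = np.asarray(observed, dtype=bool)
    mod = np.asarray(modeled, dtype=bool)
    hits = int(np.sum(obs & mod))            # wet in both
    misses = int(np.sum(obs & ~mod))         # observed wet, modeled dry
    false_alarms = int(np.sum(~obs & mod))   # observed dry, modeled wet
    total = hits + misses + false_alarms
    return {
        "hits": hits,
        "misses": misses,
        "false_alarms": false_alarms,
        "csi": hits / total if total else float("nan"),
    }


observed_mask = [[1, 1, 0],
                 [0, 1, 0]]
modeled_mask = [[1, 0, 0],
                [0, 1, 1]]
print(flood_extent_scores(observed_mask, modeled_mask))
```

CSI ignores correctly predicted dry cells, which keeps large dry areas from inflating the score when only a small part of the domain floods.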

3.2. Define Areas With Complex Hydrologic and/or Hydraulic Processes

Recognizing and assessing the influence of microscale features on surface water patterns during a flood is essential for assessing water storage and inundation, because this information can be used to identify features that alter hydraulic conditions along a channel and lead to non-linear hydrologic responses. UAS platforms are especially useful for identifying areas with complex hydrologic responses, including built structures, river confluences (which produce backwater effects), and submerged vegetation within a floodplain. As a flood event progresses, repeated imaging of an area with these features could help forecasters understand how microscale processes affect the progression of flood waters, improving their understanding of variations in the speed and magnitude of a flood peak as it moves downstream.

Figure 7 provides two examples of hydraulic influences on microscale hydrologic patterns, both of which are easily identified in UAS imagery. The first (Figure 7a) is a dam at the confluence of the Tallahatchie River and the Yalobusha River west of Greenwood, MS. As a flood event progresses south along the Tallahatchie River, this dam can be used to dampen the progression of the flood wave around Greenwood. Although known discharge through the dam can be used to adjust hydrologic model simulations, recognizing and understanding the extent of inundation around the structure is important for both flood control and dam safety.

Figure 7b, which shows the confluence of the Yalobusha River with a tributary northwest of Greenwood, MS, is a good example of how changes in built structures, combined with natural hydraulic processes, can lead to complex and often dangerous microscale hydrologic responses. When a flood wave advances from the north, this area becomes inundated and acts as storage, slowing the progression of flood waters and decreasing the potential for flooding within Greenwood, MS. Despite the tiled nature of the images and the variability in lighting caused by changing conditions and the orientation of the UAS during flight, the transition from urban to rural areas and the specific surface characteristics of the area (e.g., vegetation and the orientation of roads and embankments) are easily identified. As the UAS imagery shows, there are flooded residential properties at this location, where backwater effects from the confluence of the rivers make hydrologic prediction difficult. Recognizing the existence and influence of these structures on river flow and surface water storage, combined with knowledge of the backwater effects during flood conditions, can greatly enhance the diagnosis and prediction of microscale river properties and inundation over the area.

3.3. Improve Surface and Channel Representation in Model Frameworks

A critical issue in microscale hydrologic simulations is correctly representing surface topography and channel features within a modeling framework. This is especially true in areas with low topographic relief or complex stream networks, where stream density and meandering can substantially alter the speed and volume of surface runoff. During flood conditions, the complexity of a river system can change due to the development of backwater processes, the inundation of smaller tributaries to form fewer (albeit larger) flow paths, the connection of previously independent storage areas (such as ponds or areas adjacent to levees), and so on. Given the ability of UAVs to operate under cloud decks and fly along specific river channels, the resulting imagery can be extremely useful in providing detailed information about stream networks, such as channel width, shape, and curvature. Additionally, imagery of inundated areas during high-water events can reveal ephemeral streams and floodplain storage areas, which can support future risk assessment and UAV mission planning by outlining areas at risk during flood conditions. Knowing where stream complexity is high allows future missions to focus on those regions, maximizing the value of the resulting imagery for hydrologic analysis.

Figure 8 shows two examples where UAV imagery reveals potential issues in existing larger-scale channel networks, which are easily recognized owing to the high spatial resolution of the data. Figure 8a shows a creek running around Greenwood-Leflore Airport alongside the channel network defined by the National Hydrography Dataset (NHD). While the NHD flow lines are considered representative in regional hydrologic simulations, this image clearly shows that they are not spatially accurate at the microscale. By using the UAV imagery to develop a microscale hydrologic prediction framework, a more realistic channel network can be generated (or an existing network modified) to route surface water more appropriately. This would improve not only the simulated timing and volume of surface runoff but also the assessment of streamflow at specific geographic locations within the simulation domain (such as near built structures).
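One way to quantify how far NHD flow lines sit from the channel visible in the imagery is to measure, for each NHD vertex, the distance to the nearest segment of a channel centerline digitized from the orthomosaic. The sketch assumes both lines are in the same projected coordinate system in meters (e.g., a UTM zone); the coordinates in the example are made up for illustration, and the function names are hypothetical.

```python
import math

Point = tuple[float, float]


def point_to_segment_m(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the segment a-b (same units as the inputs)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0.0:
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment and clamp the projection to the segment's ends.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len2))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def vertex_offsets_m(line: list[Point], reference: list[Point]) -> list[float]:
    """Distance from each vertex of `line` to the nearest segment of `reference`."""
    segments = list(zip(reference, reference[1:]))
    return [min(point_to_segment_m(p, a, b) for a, b in segments) for p in line]


channel_from_imagery = [(0.0, 0.0), (100.0, 0.0)]
nhd_flowline = [(0.0, 30.0), (50.0, 40.0), (100.0, 30.0)]
offsets = vertex_offsets_m(nhd_flowline, channel_from_imagery)
print(f"mean offset {sum(offsets) / len(offsets):.1f} m, max {max(offsets):.1f} m")
```

Offsets of tens of meters are negligible on a regional grid but can place routed water on the wrong side of a road or building in a microscale domain.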

Building on this concept, at high water levels the channel network over small areas can and does change; therefore, defining a more realistic and representative hydrologic framework is critical for proper discharge and inundation estimation. Figure 8b illustrates why UAV-generated imagery is necessary for producing such a framework: it allows surface water flow paths to be determined among existing waterways, ephemeral channels, and areas of water storage (such as oxbow lakes, which are disconnected from river channels at normal flow levels). Improving channel representation in numerical hydrologic models, especially those developed specifically for microscale flood prediction, can increase the potential accuracy and overall usefulness of model output. It should be noted that the degree of model improvement depends not only on the representativeness of the underlying hydrologic network but also on the initial data used to force the models. As a result, the final determination of how accurate the river network should be, and what level of spatial detail should be included in the model framework, depends on the experience and needs of forecasters and decision makers.
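The change in connectivity described above, for example an oxbow lake joining the channel only at high water, can be checked directly on land-water masks from two dates. The sketch flood-fills the water mask outward from a seed cell on the main channel (4-neighbor connectivity) and reports water cells that are not reached, i.e., storage that is disconnected from the channel in the mask. The masks, seed location, and function names are hypothetical.

```python
from collections import deque

import numpy as np


def reachable_water(water, seed: tuple[int, int]) -> np.ndarray:
    """Cells of `water` connected to `seed` through 4-neighbor water cells."""
    wet = np.asarray(water, dtype=bool)
    reached = np.zeros_like(wet)
    if not wet[seed]:
        return reached
    rows, cols = wet.shape
    reached[seed] = True
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and wet[nr, nc] and not reached[nr, nc]:
                reached[nr, nc] = True
                queue.append((nr, nc))
    return reached


def disconnected_storage(water, channel_seed: tuple[int, int]) -> np.ndarray:
    """Water cells with no water-only path to the main channel."""
    wet = np.asarray(water, dtype=bool)
    return wet & ~reachable_water(wet, channel_seed)


# Column 0 is the main channel; the water at the top right is an oxbow lake.
low_water = [[1, 0, 0, 1],
             [1, 0, 0, 1],
             [1, 0, 0, 0]]
high_water = [[1, 1, 1, 1],
              [1, 0, 0, 1],
              [1, 0, 0, 0]]
for name, mask in (("low", low_water), ("high", high_water)):
    print(name, int(disconnected_storage(mask, (2, 0)).sum()), "isolated water cells")
```

Cells that switch from isolated to connected between dates mark storage areas that a routing network built for normal flow would miss during a flood.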

Along with direct imagery, a digital surface model (DSM) can be generated using photogrammetric methods, given a suitable flight plan and sufficient image overlap. Guidance from the Agisoft software used to post-process the mission data recommends a minimum of 60% cross-track and 80% in-track overlap for “good quality” results. These recommendations have changed over time and can vary with the availability of surface tie points and image resolution. For the study missions, the guidance for the Overwatch sensors was followed, yielding a minimum cross-track and in-track overlap of 40% and 60%, respectively, with a 20% scan-frame overlap (the overlap between the five images along the cross-track direction) for orthomosaic generation. Although a detailed quantification of the influence of image overlap on orthomosaic accuracy is beyond the scope of this study, proper representation of topography within the simulation domain is critical in a microscale hydrologic simulation to account for overland flow and runoff. Additionally, if the elevation data are to be used to define channel networks, an accurate, high-resolution representation of microscale topographic relief is needed.
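The effect of the overlap settings on flight planning can be estimated from the image footprint: adjacent flight lines are separated by the footprint width times (1 - side overlap), and successive exposures by the footprint length times (1 - forward overlap). The footprint dimensions, block width, ground speed, and function names below are hypothetical, and the sketch treats each exposure as a single nadir frame, which simplifies the five-image cross-track scan of the sensors used in this study.

```python
import math


def line_spacing_m(footprint_width_m: float, side_overlap_pct: int) -> float:
    """Distance between adjacent flight lines for a given cross-track (side) overlap."""
    return footprint_width_m * (100 - side_overlap_pct) / 100


def photo_base_m(footprint_length_m: float, forward_overlap_pct: int) -> float:
    """Ground distance between successive exposures for a given in-track overlap."""
    return footprint_length_m * (100 - forward_overlap_pct) / 100


def flight_lines(block_width_m: float, footprint_width_m: float, side_overlap_pct: int) -> int:
    """Lines needed so overlapping swaths cover the full block width."""
    spacing = line_spacing_m(footprint_width_m, side_overlap_pct)
    extra = max(0.0, block_width_m - footprint_width_m)
    return 1 + math.ceil(extra / spacing)


FOOTPRINT_W, FOOTPRINT_L = 1000.0, 600.0  # hypothetical ground footprint (m)
BLOCK_W, SPEED = 5000.0, 50.0             # hypothetical block width (m), ground speed (m/s)

for label, side, fwd in (("Agisoft guidance", 60, 80), ("study missions", 40, 60)):
    n = flight_lines(BLOCK_W, FOOTPRINT_W, side)
    interval = photo_base_m(FOOTPRINT_L, fwd) / SPEED
    print(f"{label}: {n} lines, one exposure every {interval:.1f} s")
```

Under these assumed numbers, the higher overlap needs more flight lines and twice the exposure rate, which is the cost traded against DSM quality.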

To illustrate the potential impact of UAV-generated elevation data in defining microscale topographic features, Figure 9 compares a ~30 m DEM from the NHD with a ~16 cm DSM produced from the Feb. 25, 2019 UAV mission. Because the primary focus of the UAV flights for this work was obtaining imagery of hydrologic features, namely the main river channels, the flight paths were not organized to maximize cross-track image overlap. Despite this, and noting that the two datasets represent different quantities (ground elevation and elevation of surface features, respectively), the figure illustrates the variability in elevation that influences surface water flow direction, speed, and path at the microscale. Within the DSM (Figure 9b), small-scale features that could contribute to accelerated surface runoff are more clearly defined within otherwise flat agricultural fields, and the topographic variability in and adjacent to known channels is also more clearly defined. While hydrologic applications of UAVs generally focus on flood conditions, the ability to generate a DSM highlights the utility of flights at low water levels during the winter season, when vegetation obstructions are minimized.
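Part of the contrast between the two datasets comes from resolution alone: at ~30 m versus ~16 cm, each coarse cell spans roughly (30/0.16)^2, or about 35,000, fine cells, so narrow features are averaged away. The sketch below mimics this with block averaging of a synthetic DSM containing a single narrow ditch; block averaging and the function name are our own simplifications for illustration, not how the NHD elevation data were produced.

```python
import numpy as np


def block_mean(grid: np.ndarray, factor: int) -> np.ndarray:
    """Aggregate a 2-D grid by averaging non-overlapping factor x factor blocks."""
    rows, cols = grid.shape
    r, c = rows - rows % factor, cols - cols % factor  # drop incomplete edge blocks
    trimmed = grid[:r, :c]
    return trimmed.reshape(r // factor, factor, c // factor, factor).mean(axis=(1, 3))


fine = np.full((8, 8), 30.0)  # flat field at 30 m
fine[:, 2] -= 0.3             # one-cell-wide ditch, 0.3 m deep
coarse = block_mean(fine, 4)
print("fine relief:  ", round(float(fine.max() - fine.min()), 3))
print("coarse relief:", round(float(coarse.max() - coarse.min()), 3))
```

Even at a modest aggregation factor of 4, the ditch's relief shrinks to a quarter of its true depth; at the ~190x factor separating the two datasets in Figure 9, such features effectively disappear.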

4. Conclusions

As unmanned aerial vehicles (UAVs) and associated sensor and imaging packages become more readily available for scientific applications, it is important to define the utility of the associated data for specific applications. In this research, the objective was to define the role of UAVs and associated imagery in meeting the data requirements of microscale hydrologic analysis. A series of missions conducted over Greenwood, Mississippi in January and February 2019, during both normal and extreme flood conditions, was used to illustrate the use of high-resolution UAV-based imagery in identifying microscale hydrologic impacts. While the data were initially applied to increase situational awareness of hydrologic conditions during operational river forecasting, using the data and derived products to analyze high-resolution features and processes revealed a much broader set of benefits after the missions were completed.

The primary application of UAV-based imagery in hydrology is the assessment of water levels within river channels, as well as of areas of surface water storage across the landscape. This information defines the existence and extent of storage, which helps in diagnosing and forecasting river levels before and after maximum flood stage and in delineating inundated areas. The ability of UAV platforms to operate beneath cloud decks is an added benefit compared to satellite imagery, especially during rapidly evolving high-water conditions when extensive cloud cover is in place. Additionally, owing to the high spatial resolution of the imagery, it is possible to identify areas with complex hydrologic or hydraulic conditions, such as river confluences or dams, respectively. The non-linear flow patterns in and around these areas make diagnosis of river conditions difficult; therefore, high-resolution imagery collected during a flood event helps define the relative impact of these processes on water levels and flow paths.

Beyond direct image analysis, UAV-based imagery can be used to develop important derived products useful for microscale hydrologic model development. With proper flight planning to maximize image overlap along and across the flight path, photogrammetric techniques can be used to generate digital surface models (DSM) for development of gridded flow paths and channel networks. This information can be used to develop model frameworks over select microscale regions, potentially improving accuracy and/or precision of model output.
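Gridded flow paths are commonly derived from elevation grids with the D8 method, which sends each cell's flow to whichever of its eight neighbors gives the steepest downhill drop per unit distance. The sketch below is a minimal, unoptimized version for illustration only: it does not fill depressions, resolve flats, or remove vegetation and buildings from a DSM, all of which a practical workflow would need, and the direction codes and function name are our own convention.

```python
import math

import numpy as np

# (row, col) offsets of the eight neighbors; a cell's direction code is an index into this list.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]


def d8_directions(dem: np.ndarray, cell_size: float = 1.0) -> np.ndarray:
    """D8 flow direction codes; -1 marks pits and flats with no lower neighbor."""
    rows, cols = dem.shape
    codes = np.full((rows, cols), -1, dtype=int)
    for r in range(rows):
        for c in range(cols):
            best_slope, best_code = 0.0, -1
            for code, (dr, dc) in enumerate(OFFSETS):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols:
                    # Diagonal neighbors are sqrt(2) cell widths away.
                    slope = (dem[r, c] - dem[nr, nc]) / (cell_size * math.hypot(dr, dc))
                    if slope > best_slope:
                        best_slope, best_code = slope, code
            codes[r, c] = best_code
    return codes


# A small surface that slopes down toward the east (right-hand column).
dem = np.array([[3.0, 2.0, 1.0],
                [3.0, 2.0, 1.0],
                [3.0, 2.0, 1.0]])
print(d8_directions(dem))  # code 4 = east; -1 along the lowest column
```

On a centimeter-scale DSM, the "-1" cells become numerous (flats, small pits, vegetation artifacts), which is one reason the preprocessing omitted here matters.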

Although there are numerous benefits to using UAV imagery and associated data in hydrologic applications, there are distinct limitations that must be considered. First and foremost is the limited spatial coverage of UAV imagery relative to existing satellite remote sensing datasets, which is directly tied to the cost of, and legal restrictions on, UAV deployment. Considerable planning is required before a mission can be performed, as a certificate of authorization (CoA) must be obtained from the Federal Aviation Administration (FAA) before flights can be conducted. Depending on the rules outlined in the CoA, additional resources may be needed (e.g., chase planes) or restrictions may apply (e.g., no flights over specific points), which increase the cost and/or decrease the utility of the data. As UAVs are used in more scientific applications and the usefulness of the associated data is demonstrated, some of these limitations may be relaxed for certain times or areas. For example, permission to fly beyond visual line of sight (BVLoS) over some areas would allow a substantial increase in the imagery collected during a single flight, thereby improving the cost–benefit ratio of a mission. Additionally, although UAVs can fly under cloud decks that would otherwise obscure satellite imagery, other weather considerations such as high wind speeds, precipitation, or even extreme temperatures can limit their ability to operate. Therefore, the planning and implementation of a UAV mission depend on meteorological conditions.

Given the defined uses of UAV imagery in microscale hydrologic diagnosis and prediction, there are several clear paths forward for future research and data development. First and foremost, future work should focus on expanding the collection of UAV imagery over various locations and hydrologic conditions. This will improve understanding of when and where high-resolution aerial data are most useful, both in terms of landscape conditions (e.g., agricultural vs. forested, high vs. low relief) and river conditions (e.g., flood vs. low flow). As in any scientific framework, more data generally lead to more robust conclusions. Building on this, future missions could be planned with imagery from multiple platforms (e.g., satellite, aircraft, and multiple UAVs), along with established GCPs and georeferenced objects, specifically to assess the accuracy of the various derived products.

In terms of prediction, future work should focus on the use of UAV imagery and derived topographic data within the hydrologic forecast pipeline for microscale simulation domains. Derived elevation and stream channel information can improve model domain configuration and setup, potentially increasing the physical representativeness of the model at the microscale and thereby improving the precision and accuracy of the output. Additionally, gridded estimates of inundated area from land-water masks of the UAV imagery could be used for model verification or model nudging, helping to improve subsequent model forecasts. Building on the concept of microscale hydrologic models and prediction, one important question that remains to be answered is how high-resolution data affect the accuracy of simulations. Although the idea of microscale forecasts of water level and inundation is intriguing, it is necessary to quantify the impact of spatial data resolution on model performance. Knowing the threshold beyond which finer resolution no longer improves model accuracy can help define future UAV missions and products and could set the stage for guidelines on microscale hydrologic data collection. This information would then propagate backward through the data collection pipeline, improving mission planning and implementation. For example, if a maximum acceptable horizontal resolution is defined as a criterion, UAV flight altitude can be standardized, allowing more precise determination of areal coverage and imagery extent.
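For a nadir frame camera, ground sample distance (GSD) scales with altitude as GSD = H × p / f, where H is height above ground, p is the detector pixel pitch, and f is the focal length. Inverting this gives the altitude that meets a target resolution, and the swath width follows as GSD times the number of pixels across track. The camera parameters and function names below are hypothetical and are not the specifications of the sensors used in this study.

```python
def gsd_m(altitude_m: float, pixel_pitch_m: float, focal_length_m: float) -> float:
    """Ground sample distance of a nadir frame camera."""
    return altitude_m * pixel_pitch_m / focal_length_m


def altitude_for_gsd_m(target_gsd_m: float, pixel_pitch_m: float, focal_length_m: float) -> float:
    """Highest altitude that still achieves the target GSD."""
    return target_gsd_m * focal_length_m / pixel_pitch_m


def swath_m(gsd: float, pixels_across: int) -> float:
    """Ground width covered by one image row."""
    return gsd * pixels_across


PITCH = 5e-6    # hypothetical 5 µm pixel pitch
FOCAL = 0.05    # hypothetical 50 mm lens
PIXELS = 8000   # hypothetical pixels across track

h = altitude_for_gsd_m(0.16, PITCH, FOCAL)
print(f"altitude for 16 cm GSD: {h:.0f} m, swath {swath_m(0.16, PIXELS):.0f} m")
```

Once a resolution criterion fixes the altitude, swath width and therefore the number of flight lines per unit area follow directly, which is the standardization described above.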