RealPoint3D: Generating 3D Point Clouds from a Single Image of Complex Scenarios

Abstract

1. Introduction

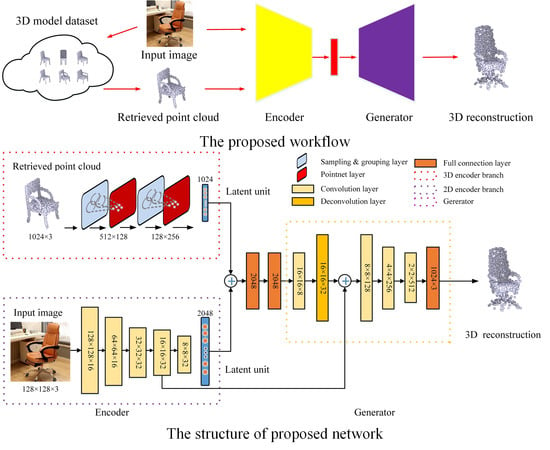

- We design RealPoint3D, a novel end-to-end network that combines a 2D image and a 3D point cloud for 3D object reconstruction. By using prior knowledge, RealPoint3D reconstructs objects from an image with a complex background and changes in viewpoint.

- We conducted extensive experiments on images of five common object categories; the results demonstrate the effectiveness of the RealPoint3D framework.

2. Related Work

2.1. 3D Reconstruction from a Single Image

2.2. Shape Prior Guided 3D Reconstruction

2.3. Deep Learning on Point Clouds

3. Methodology

3.1. Overview of the Workflow

3.2. Nearest Shape Retrieval

3.3. RealPoint3D

3.3.1. Encoder Net

3.3.2. Generator

3.3.3. Influence of the 3D Encoder

3.4. Loss Function

4. Experiments

4.1. Datasets and Implementation Details

4.2. Results and Comparisons

4.2.1. Object Reconstruction on Rendered Images

4.2.2. Reconstructed 3D Points Using Real Images

4.3. Model Analysis

4.4. Time Complexity

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Category | PSGN | Retrieval | RealPoint3D |
|---|---|---|---|
| Sofa | 2.20 | 6.83 | 1.95 |
| Airplane | 1.00 | 3.67 | 0.79 |
| Bench | 2.51 | 2.11 | 2.11 |
| Car | 1.28 | 1.96 | 1.26 |
| Chair | 2.38 | 6.91 | 2.13 |

| Category | OGN | Retrieval | RealPoint3D |
|---|---|---|---|
| Sofa | 0.11 | 0.12 | 0.22 |
| Airplane | 0.15 | 0.36 | 0.53 |
| Bench | 0.05 | 0.17 | 0.36 |
| Car | 0.44 | 0.24 | 0.54 |
| Chair | 0.14 | 0.13 | 0.27 |

| Category | PSGN | OGN | RealPoint3D |
|---|---|---|---|
| Sofa | - | - | 0.63 |
| Airplane | 0.60 | 0.59 | 0.67 |
| Bench | 0.55 | 0.48 | 0.58 |
| Car | 0.83 | 0.82 | 0.83 |
| Chair | 0.54 | 0.48 | 0.58 |

| Category | Non-Guidance | Random-Guidance | Searched-Guidance |
|---|---|---|---|
| Sofa | 2.46 | 2.10 | 1.95 |
| Airplane | 1.38 | 0.88 | 0.79 |
| Bench | 3.55 | 2.39 | 2.11 |
| Car | 1.31 | 1.28 | 1.26 |
| Chair | 2.53 | 2.35 | 2.13 |
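
To make the guidance comparison above easier to read, the following minimal sketch computes the relative change from the Non-Guidance column to the Searched-Guidance column for each category. The metric and its units are not stated in the table, so the sketch only assumes that lower values are better, which the ordering of the columns suggests. The names `guidance`, `relative_reduction`, and `reduction` are illustrative and not part of the original work.

```python
# Values copied from the guidance table above, in column order:
# (Non-Guidance, Random-Guidance, Searched-Guidance).
# Assumption: the metric is an error measure, so lower is better.
guidance = {
    "Sofa": (2.46, 2.10, 1.95),
    "Airplane": (1.38, 0.88, 0.79),
    "Bench": (3.55, 2.39, 2.11),
    "Car": (1.31, 1.28, 1.26),
    "Chair": (2.53, 2.35, 2.13),
}


def relative_reduction(baseline: float, value: float) -> float:
    """Fraction by which `value` is lower than `baseline`."""
    return (baseline - value) / baseline


# Relative reduction of Searched-Guidance with respect to Non-Guidance.
reduction = {
    category: relative_reduction(non_guided, searched)
    for category, (non_guided, _random_guided, searched) in guidance.items()
}

for category, fraction in reduction.items():
    print(f"{category:<9}{fraction:6.1%}")
```

Under that assumption, the largest relative gains from searched guidance fall on Airplane and Bench, while Car changes the least.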

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Xia, Y.; Wang, C.; Xu, Y.; Zang, Y.; Liu, W.; Li, J.; Stilla, U. RealPoint3D: Generating 3D Point Clouds from a Single Image of Complex Scenarios. Remote Sens. 2019, 11, 2644. https://doi.org/10.3390/rs11222644
