1. Introduction

Forest inventory and mapping are essential for the sustainability of forest ecosystems and for forest management. Forests often extend into less accessible areas, which may be hazardous for human access. This is the case, for example, in disturbed areas, but also in areas with steep slopes and a risk of landslides, avalanches and other hazards. Mapping inaccessible or hazardous areas using terrestrial surveying methods is a challenging task. Remote sensing techniques can be helpful in such cases and have been commonly used for this purpose [1,2,3,4]. Such studies mostly focus on the estimation of canopy height, since this is the most critical factor for predicting risk [1,5,6,7,8,9], but they also estimate other inventory parameters such as tree basal area and volume (e.g., [3]) or ecosystem services [10]. The detection of individual trees in dense forests is also possible, shifting forest inventory from the stand and plot levels to the single-tree level [11,12,13]. The increasing availability of UAVs is also contributing to such studies [14].

In most studies, the georeferencing of remote sensing data depends on ground control points (GCPs) [15,16]. These are mostly acquired using terrestrial methods, which negates the advantage of contactless surveying with UAV technology. Efforts to eliminate this dependency can be observed especially in the increasing use and development of digital photogrammetry methods. Most current large-extent photogrammetric systems (carried by piloted aircraft) provide data for interior orientation and for exterior orientation, the latter acquired using Global Navigation Satellite Systems (GNSS) and inertial measurement units (IMU) (e.g., [17]).
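The GNSS/IMU-based exterior orientation mentioned above can be illustrated with the lever-arm correction used in direct georeferencing: the camera projection centre equals the GNSS antenna position plus the antenna-to-camera offset rotated by the IMU attitude. The sketch below is a minimal illustration under stated assumptions: a Z-Y-X (yaw-pitch-roll) rotation convention, a local metric east-north-up frame and a known lever arm. It ignores boresight misalignment and time synchronization, and the function names are hypothetical.

```python
import numpy as np


def rotation_zyx(roll, pitch, yaw):
    """Body-to-local-level rotation R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians."""
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    rx = np.array([[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]])
    ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    rz = np.array([[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]])
    return rz @ ry @ rx


def camera_centre(antenna_xyz, roll, pitch, yaw, lever_arm_body):
    """Projection centre = antenna position + lever arm rotated into the local frame.

    lever_arm_body: vector from the GNSS antenna to the camera centre in the
    UAV body frame (metres). Boresight misalignment is ignored in this sketch.
    """
    r = rotation_zyx(roll, pitch, yaw)
    return np.asarray(antenna_xyz, dtype=float) + r @ np.asarray(lever_arm_body, dtype=float)
```

Different platforms and software define the body axes and rotation order differently, so the convention has to be matched to the actual IMU output before such a correction is applied.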

The miniaturization of digital photographic equipment enabled the use of smaller platforms, especially unmanned aerial vehicles (UAVs, also referred to as remotely piloted aircraft systems (RPAS) or drones). These systems can currently carry a wide range of sensors, which provide a variety of tools for forestry purposes: RGB cameras used for inventory tasks [18,19,20], plantation assessments [21,22], gap detection [23,24] and tree stump identification [25]; multi- and hyperspectral sensors used for forest health assessment [26,27,28,29]; and UAV laser scanners (LiDAR) for the estimation of geometric parameters at ultrahigh resolution [30,31,32].

Simple visible-range (RGB) cameras are the most commonly used, partly due to the emergence of new computer vision techniques: Structure-from-Motion (SfM) and Multiview Stereopsis (MVS). SfM is used to reconstruct the camera positions and the scene geometry. In contrast to traditional photogrammetric methods, SfM does not require the 3D camera positions or multiple control points to be known prior to image acquisition, because the position, orientation and geometry are reconstructed by automatically matching features in multiple images [33]. The MVS technique is subsequently used to densify the resulting point cloud. However, without any spatial reference information, the resulting models lack a proper scale. Various scaling techniques are therefore used, often depending on the software. If absolute orientation is needed (e.g., to overlay the model output with other GIS layers), georeferencing via GCPs is the standard approach. GNSS-tagged imagery could be an alternative.
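The scale and absolute-orientation problem can be illustrated with a 3D similarity (seven-parameter Helmert) transformation that maps an arbitrarily scaled SfM model onto GCP coordinates. The following is a minimal NumPy sketch of the closed-form least-squares solution of Umeyama (1991); it is not the procedure of any particular photogrammetric package, which typically refines georeferencing inside the bundle adjustment. At least three non-collinear GCPs are assumed, and the function names are hypothetical.

```python
import numpy as np


def similarity_transform(src, dst):
    """Least-squares s, R, t such that dst ≈ s * R @ src + t (Umeyama, 1991).

    src: (n, 3) model coordinates of GCPs (arbitrary scale), n >= 3, non-collinear
    dst: (n, 3) surveyed GCP coordinates in the target system
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n = src.shape[0]
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    xs, xd = src - mu_src, dst - mu_dst
    cov = xd.T @ xs / n                      # cross-covariance of centred coordinates
    u, d, vt = np.linalg.svd(cov)
    fix = np.eye(3)
    if np.linalg.det(u) * np.linalg.det(vt) < 0:
        fix[2, 2] = -1.0                     # enforce a proper rotation, not a reflection
    r = u @ fix @ vt
    var_src = (xs ** 2).sum() / n
    s = np.trace(np.diag(d) @ fix) / var_src
    t = mu_dst - s * r @ mu_src
    return s, r, t


def apply_similarity(s, r, t, points):
    """Transform (n, 3) model points into the target coordinate system."""
    return s * np.asarray(points, dtype=float) @ r.T + t
```

Applying `apply_similarity` to independent checkpoints and comparing the result with their surveyed coordinates gives the residuals from which accuracy measures such as RMSE are computed.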

Even the most common hobby-grade UAVs (e.g., the DJI Phantom series) use a GNSS receiver for navigation and can add positional information (coordinates) to the EXIF metadata of images acquired during flight. The typical accuracy of autonomously operating single-frequency GNSS receivers is in the range of metres, which is insufficient for higher accuracy demands. Therefore, differential GNSS solutions are currently being adopted for UAVs. Such a solution requires two receivers: a base station operating under ideal GNSS signal reception conditions, which provides differential correction data, and a rover moving between points of interest, whose positions are refined using the correction data from the base station [34]. The base station is often replaced by the services of continuously operating reference stations (CORS). With differential kinematic GNSS solutions, rover positioning can achieve an accuracy of a few centimetres. The advantage of UAV GNSS receivers is that they almost always operate under ideal signal reception conditions, in contrast to terrestrial receivers, whose accuracy in forests is decreased by signal blocking and multipath effects [35,36].
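The base/rover principle can be sketched in the position domain: the error observed at the base station (measured minus known coordinates) is subtracted from the simultaneous rover fix. This is a deliberate simplification for illustration only; actual RTK/PPK processing works on carrier-phase and pseudorange observations and resolves integer ambiguities, and the shared-error assumption holds only approximately over short baselines. All coordinates and names below are hypothetical.

```python
def differential_correction(base_known, base_measured, rover_measured):
    """Simplified position-domain DGNSS correction (illustration only).

    The error seen at the base (measured - known) is assumed to affect the rover
    identically at the same epoch and is subtracted from the rover fix.
    Coordinates are (easting, northing, height) tuples in metres.
    """
    error = [m - k for m, k in zip(base_measured, base_known)]
    return tuple(r - e for r, e in zip(rover_measured, error))


# Hypothetical epoch: the base reads 1.2 m east, 0.8 m south and 2.0 m high of its
# known position; the corrected rover fix is approximately (100.0, 50.0, 10.0).
corrected = differential_correction((0.0, 0.0, 0.0), (1.2, -0.8, 2.0), (101.2, 49.2, 12.0))
```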

Two primary variants of kinematic GNSS measurement are being adopted for UAV applications, differing in whether correction data are available immediately during the UAV flight. If the UAV GNSS receiver can communicate with the reference station in real time (using a radio link), corrections can be applied during the flight. This mode is referred to as Real-Time Kinematic (RTK) correction. If the corrections from the CORS or a virtual reference station (VRS) are applied after the flight, the mode is referred to as Post-Processed Kinematic (PPK).
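In PPK workflows, the corrected antenna trajectory is typically computed after the flight and then sampled at the camera exposure (event) times to geotag each image. The sketch below assumes a trajectory with strictly increasing timestamps on the same time scale as the camera events and uses simple linear interpolation per coordinate; the function name is hypothetical, and PPK software may use higher-order interpolation and additionally apply lever-arm corrections.

```python
import numpy as np


def geotag_events(traj_t, traj_xyz, event_t):
    """Interpolate post-processed antenna positions at camera exposure times.

    traj_t:   (m,) trajectory timestamps in seconds, strictly increasing
    traj_xyz: (m, 3) corrected positions (e.g., easting, northing, height)
    event_t:  (k,) camera event timestamps on the same time scale
    """
    traj_t = np.asarray(traj_t, dtype=float)
    traj_xyz = np.asarray(traj_xyz, dtype=float)
    event_t = np.asarray(event_t, dtype=float)
    if np.any(np.diff(traj_t) <= 0):
        raise ValueError("trajectory timestamps must be strictly increasing")
    if event_t.min() < traj_t[0] or event_t.max() > traj_t[-1]:
        raise ValueError("camera events fall outside the trajectory time span")
    # linear interpolation, one coordinate at a time
    return np.column_stack([np.interp(event_t, traj_t, traj_xyz[:, k]) for k in range(3)])
```

Rejecting events outside the trajectory avoids `np.interp` silently clamping them to the first or last position.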

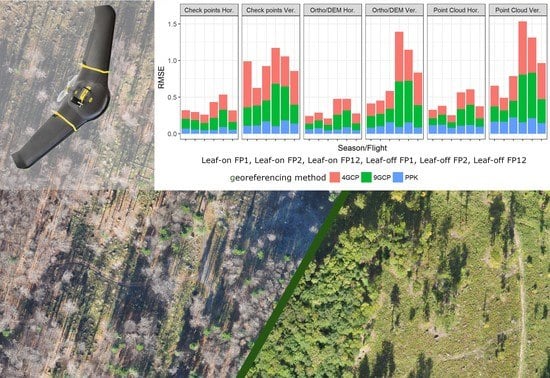

The objective of this study is to evaluate the geospatial accuracy of photogrammetric products from the UAV RTK/PPK solution in a forested area. The results are compared with the traditional approach, i.e., the application of ground control points (GCPs) to georeference the data into a proper coordinate system. In addition, we evaluate the influence of leaf-off versus leaf-on vegetation conditions, as well as the impact of differing flight patterns, on the accuracy.

4. Discussion

The influence of GCP number and spatial distribution has been widely studied, but conclusions often differ significantly. In terms of accuracy assessment and GCP configuration, this study partially follows our previous study [40], where we reported $\mathrm{RMSE}_{xy}$ from 0.04 m to 0.11 m for the 4GCP pattern, while the RMSEs of the 9GCP pattern ranged from 0.04 m to 0.08 m. However, a DJI Phantom 3 UAV was used at significantly lower flight altitudes (50–60 m) and the test plot areas were smaller. Vertical RMSEs ranged from 0.08 to 0.17 m. Aguera-Vega et al. [41] studied the influence of GCP count (4–20 GCPs) and pattern on a ~18 ha plot and achieved the best accuracy with 15 GCPs. They report a wide range of slope values; the elevation range was about 60 m. For smaller areas (2.8–4.1 ha), He et al. [42] used 9–10 GCPs and achieved horizontal RMSEs of 1–3 cm and a vertical RMSE of 4 cm. The authors designed an algorithm to improve the accuracy of automated aerial triangulation; however, the flight height was significantly lower (40 to 60 m). Fewer studies have been conducted on areas larger than 100 ha. Rangel et al. [43] used multiple flights of an S500 multicopter and studied differing GCP configurations on an area of 400 ha. They did not find any significant increase in orthophoto accuracy when the GCP count exceeded 18. Tahar [44] conducted a photogrammetric survey of a 150 ha area using 4–9 GCPs and achieved an $\mathrm{RMSE}_{xy}$ of 0.50 m and an $\mathrm{RMSE}_{z}$ of 0.78 m. Küng et al. [45] used 19 GCPs on an area of 210 ha and reported a horizontal accuracy of 0.38 m and a vertical accuracy of 1.07 m for a flight height of 262 m AGL.
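The horizontal and vertical RMSE values quoted above are commonly computed on independent checkpoints as $\mathrm{RMSE}_{xy} = \sqrt{\mathrm{RMSE}_x^2 + \mathrm{RMSE}_y^2}$, where each component RMSE is the square root of the mean squared coordinate difference. The cited studies may use slightly different definitions. A minimal NumPy sketch, assuming measured and reference coordinates are given as matched (n, 3) arrays in the same projected system (the function name is hypothetical):

```python
import numpy as np


def checkpoint_rmse(measured, reference):
    """RMSE_x, RMSE_y, RMSE_xy and RMSE_z from matched (n, 3) coordinate arrays."""
    diff = np.asarray(measured, dtype=float) - np.asarray(reference, dtype=float)
    rmse_x, rmse_y, rmse_z = np.sqrt(np.mean(diff ** 2, axis=0))
    rmse_xy = np.sqrt(rmse_x ** 2 + rmse_y ** 2)   # equals sqrt(mean(dx^2 + dy^2))
    return rmse_x, rmse_y, rmse_xy, rmse_z
```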

In terms of accuracy, increasing the number of GCPs and distributing them regularly has a positive effect. However, from a practical point of view, simply increasing the number of GCPs makes the survey more labour-intensive and less efficient. This is particularly evident in forests, where the use of GNSS for GCP measurement is complicated. In such cases (but not limited to these), RTK/PPK technology applied with UAVs can be a feasible solution. Tests of this technology outside forest ecosystems have already been conducted. Gerke and Przybilla [46] tested the technology on a stockpile area. For this 1100 × 600 m area with a maximum height difference of 50 m, they achieved horizontal and vertical RMSEs under 10 cm. Another result of this test was that adding GCPs did not improve accuracy when RTK was enabled. Benassi et al. [47] used a senseFly eBee RTK UAV to test the accuracy over a 400 × 500 m area comprising part of a university campus with buildings up to 35 m high. On 14 checkpoints, they achieved an average horizontal RMSE of 2.2 cm across different software packages. The elevation accuracy was more than two times worse; the authors suggest using at least one GCP to gain control over the biases affecting elevation. Another test of the UAV RTK solution was conducted on an 80 ha area with flat terrain and buildings [48]. Reported horizontal RMSEs were under 4 cm for both the real-time and post-processed variants of GNSS measurement. However, in this case, the authors suggest that “classical” aerotriangulation with GCPs is better than direct georeferencing. The accuracy achievable with RTK/PPK therefore seems higher than that achieved in our study; however, our results relate to much more complicated terrain conditions. When comparing the RTK/PPK georeferencing approach with georeferencing using GCPs, the significantly higher number of precise positions entering the bundle adjustment must also be considered. In our study, the PPK solution provided more than 600 such positions, while only four and nine GCPs were used in our configurations.
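The weight of the many camera positions can be illustrated with a deliberately simplified model: estimating only a common 3D shift of the photogrammetric block by weighted least squares from independent, unbiased observations, for which the standard deviation of the estimate is $1/\sqrt{\sum_i n_i/\sigma_i^2}$. The per-observation precisions in the example (2 cm for GCPs, 5 cm for PPK camera positions) are hypothetical values, not results of this study. A real bundle adjustment also estimates rotation, scale and camera calibration, and systematic errors such as a vertical bias do not average out with more observations, which is consistent with the recommendation of Benassi et al. [47] to add at least one GCP.

```python
import math


def shift_sigma(groups):
    """Standard deviation of a common shift estimated by weighted least squares.

    groups: iterable of (n_observations, sigma_per_observation_in_m). Observations
    are assumed independent and unbiased and are weighted by 1 / sigma**2.
    """
    weight_sum = sum(n / sigma ** 2 for n, sigma in groups)
    return 1.0 / math.sqrt(weight_sum)


if __name__ == "__main__":
    print(round(shift_sigma([(9, 0.02)]), 4))    # 9 GCPs at 2 cm      -> 0.0067
    print(round(shift_sigma([(600, 0.05)]), 4))  # 600 positions at 5 cm -> 0.002
```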

The influence of flight patterns on the achieved accuracy has been tested in multiple studies. In our case, the patterns were adapted to the main terrain lines (valleys, ridges). Despite this design, an influence of flight patterns on vertical accuracy was not confirmed. The differences in horizontal accuracy between the individual flight patterns FP1 and FP2 were not significant, but the combined FP12 pattern provided significantly better accuracy than either of them. This effect of combined patterns, often referred to as cross-flights, is described, for example, by Manfreda et al. [49], who used six flight patterns, some with a tilted camera, to achieve higher accuracy. The authors report the combination of patterns as the most accurate in terms of both horizontal and vertical accuracy. An increasing influence of cross-flights with a decreasing number of GCPs is reported in another study [46]. Its authors also report a small but visible increase in accuracy for RTK UAV technology when employed with cross-flights. A practical problem of combined patterns is the increased amount of redundant data. This can cause problems, especially in more complex projects, where the computational power needed to process the data can be very high.
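Single and combined (cross-flight) patterns and the resulting data redundancy can be sketched with a serpentine (boustrophedon) line generator over a rectangular block. This is a simplification: the patterns in our study followed the main terrain lines, whereas here the lines are simply parallel to the block axes. The block size, line spacing and photo base in the example are hypothetical, and the image count is approximated as one exposure every photo-base metres along each line.

```python
import math


def lawnmower(width, height, spacing, along="x"):
    """Return flight-line segments ((x0, y0), (x1, y1)) over a width x height block."""
    if along == "x":
        n = math.floor(height / spacing) + 1
        lines = [((0.0, i * spacing), (width, i * spacing)) for i in range(n)]
    elif along == "y":
        n = math.floor(width / spacing) + 1
        lines = [((i * spacing, 0.0), (i * spacing, height)) for i in range(n)]
    else:
        raise ValueError("along must be 'x' or 'y'")
    # reverse every other line so the aircraft flies a serpentine path
    return [seg if i % 2 == 0 else (seg[1], seg[0]) for i, seg in enumerate(lines)]


def image_count(lines, photo_base):
    """Approximate number of exposures: one every photo_base metres along each line."""
    total = 0
    for (x0, y0), (x1, y1) in lines:
        total += math.floor(math.hypot(x1 - x0, y1 - y0) / photo_base) + 1
    return total
```

For a hypothetical 1000 × 600 m block with 50 m line spacing and a 25 m photo base, the pattern along x yields 533 images, the pattern along y 525, and their combination 1058, i.e., roughly twice the data volume to process.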

The significant negative impact of the leaf-off season, evident already during image alignment, can limit the possibilities of constructing digital terrain models (DTMs) from UAV imagery. The limited ability to reconstruct terrain under a full forest canopy is one of the main disadvantages of SfM photogrammetry compared with LiDAR. However, multiple studies reported some success during the leaf-off season or under a partially open canopy. Graham et al. [50] reconstructed up to 60% of the terrain with an RMSE lower than 1.5 m in a disturbed conifer forest. Guerra-Hernández et al. [12] studied terrain under a Eucalyptus plantation with canopy cover higher than 60% and reported terrain height overestimation of more than two metres. Moudrý et al. [51] mapped a post-mining site under leaf-off conditions and achieved point cloud accuracy between 0.11 and 0.19 m. A similar team of authors [52] reported a DTM acquired in forest during the leaf-off season as the most accurate when compared with DTMs of aquatic vegetation and steppe ecosystems. By applying Best Available Terrain Pixel (BATP) compositing to multi-temporal UAV imagery, Goodbody et al. [53] obtained DTMs with a mean error of 0.01 m, a standard deviation of 0.14 m and a relative coverage of 86.3% compared with the reference DTM. The stem density was relatively low (50 stems/ha). The authors suggest that the timing of image acquisition has a significant impact on DTM error, with acquisitions in spring, late fall and early winter being the most accurate. This contrasts with our results. We attribute this to the monotonous appearance of the mostly monocultural, fully stocked beech forests during the leaf-off season. This lack of distinctive features resulted in failure of image alignment in parts with continuous forest. During the leaf-on season, the tree crowns and the gaps between them provided sufficient texture for the alignment.
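The metrics reported by Goodbody et al. [53] (mean error, standard deviation and relative coverage against a reference DTM) can be computed on co-registered rasters as sketched below, with unreconstructed cells stored as NaN. The multi-temporal composite uses a per-cell minimum elevation, which is our own simplifying assumption for illustration and not necessarily the selection criterion of the cited compositing method; the sample standard deviation (ddof=1) is likewise an assumed convention, and the function names are hypothetical.

```python
import numpy as np


def min_composite(dtms):
    """Per-cell minimum over several epochs, ignoring NaN gaps (hypothetical rule)."""
    stack = np.stack([np.asarray(d, dtype=float) for d in dtms])
    # fmin ignores NaN unless every epoch is NaN in that cell
    return np.fmin.reduce(stack, axis=0)


def dtm_error_stats(dtm, reference):
    """Mean error, standard deviation and relative coverage of a DTM vs. a reference.

    Coverage is the share of valid reference cells that the DTM also covers.
    """
    dtm = np.asarray(dtm, dtype=float)
    reference = np.asarray(reference, dtype=float)
    ref_valid = ~np.isnan(reference)
    both = ref_valid & ~np.isnan(dtm)
    diff = dtm[both] - reference[both]
    coverage = both.sum() / ref_valid.sum()
    return diff.mean(), diff.std(ddof=1), coverage
```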

Besides the actual accuracy, the ability to identify points of interest can significantly influence the results of photogrammetric evaluation. Point identification requires some operator experience with image interpretation, especially when interpreting natural features [54]. Even though the points in our study were marked with crosses, we observed some difficulties during identification on point clouds and orthomosaics (Figure 10). Slightly shaded conditions were ideal, as the shape and dimensions of the crosses were clearly distinguishable. In contrast, sharply illuminated bright surfaces caused deformation of the pixels and their spectral values during orthorectification. Special cases occurred at vegetation canopy boundaries. Identifying the point centres was even more complicated in the point clouds. Despite a total count of hundreds of millions of points, the point clouds were too sparse to allow proper zooming in on the point centre. We consider this the reason for the lower accuracy of point clouds compared with orthophotos.
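Why hundreds of millions of points may still be too few to pinpoint a cross centre can be checked by estimating the local point density and nominal spacing around a target. A minimal sketch, assuming planimetric point coordinates in metres and a circular search radius; the nominal spacing $\sqrt{A/n}$ is a rough measure that ignores the irregular distribution of points, and the function name is hypothetical. If the spacing is comparable to the width of the cross arms, the centre cannot be located precisely.

```python
import numpy as np


def local_spacing(points_xy, centre_xy, radius):
    """Point count, density (pts/m^2) and nominal spacing (m) within `radius` of a target."""
    pts = np.asarray(points_xy, dtype=float)
    dist = np.hypot(pts[:, 0] - centre_xy[0], pts[:, 1] - centre_xy[1])
    n = int((dist <= radius).sum())
    area = np.pi * radius ** 2
    density = n / area
    spacing = np.sqrt(area / n) if n else float("inf")
    return n, density, spacing
```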

During the orthophoto evaluation, we experienced difficulties identifying particular points at the edges of forest stands. In some cases, trees were rendered over such points in the orthophoto, even when the points were situated outside the canopy during the field survey. This is because the product used is a standard orthophoto, not a “true orthophoto” [55,56]. This is particularly visible when the flight pattern is perpendicular to the forest edge (Figure 11). As the identification of forest gaps is a frequent task in forestry (e.g., [23,24]), this can introduce errors, especially when high accuracy is required. Agisoft PhotoScan allows editing of the seamlines and thus the production of true orthophotos, but the labour intensity increases significantly. In contrast to the orthophotos, the point clouds allowed unproblematic identification of points partially occluded by trees. However, in a few cases, the point was snapped to a point belonging to a tree instead of the ground.
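The displacement of tree crowns over nearby points can be approximated with the relief displacement of a vertical central-projection image. For an object whose base lies at horizontal distance $D$ from the nadir point, with height $h$ above the rectification surface and flying height $H$ above that surface, the top is displaced radially outward by $d = D\,h/(H - h)$ in ground units. This sketch assumes an orthophoto rectified on a surface that does not contain the canopy edge; the displacement in a mosaic built on a smoothed surface model will differ, and the function name and example values are hypothetical.

```python
def treetop_displacement(base_distance, object_height, flying_height):
    """Radial relief displacement d = D * h / (H - h) of an object's top, in metres.

    base_distance: horizontal distance D of the object's base from the nadir point
    object_height: height h of the object above the rectification surface
    flying_height: flying height H above the same surface (must exceed h)
    """
    if object_height >= flying_height:
        raise ValueError("object height must be below the flying height")
    return base_distance * object_height / (flying_height - object_height)
```

For example, a 30 m tall tree whose base is 50 m from the nadir, imaged from 150 m, has its top displaced by 12.5 m, which is enough to cover a point lying just outside the stand edge.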

Overall, the RTK/PPK solution used in this study could be suitable for mapping inaccessible areas if horizontal errors of up to 10 cm and vertical errors of up to 20 cm are acceptable. Besides accuracy, other practical challenges must be considered if the technology is to be applied effectively. For example, if the possible flight time of the platform is short or the UAV must operate within the operator's line of sight, the feasibility of mapping inaccessible or hazardous areas is significantly limited. However, UAVs with a flight time of about one hour can reach more distant areas and thus, in combination with RTK/PPK technology, can provide remote sensing data independent of terrestrial measurements. Higher initial costs must also be considered. In the case of eBee UAVs, the RTK/PPK technology costs several thousand euros more than the UAV without it. However, the use of GCPs also requires a GNSS receiver, whose price must be considered as well.
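The effect of flight endurance on reachable areas can be estimated with simple arithmetic: the time left after mapping and a safety reserve is split between the outbound and return transit. A minimal sketch with hypothetical endurance, speed and reserve values and a hypothetical function name; it ignores wind, climb and descent, and line-of-sight regulations may cap the distance far below this value.

```python
def max_survey_distance(endurance_min, mapping_min, speed_ms, reserve_min=5.0):
    """One-way distance (m) to a survey block that still leaves time to map it and return."""
    transit_s = (endurance_min - mapping_min - reserve_min) * 60.0
    if transit_s <= 0:
        return 0.0                      # no time left for transit
    return speed_ms * transit_s / 2.0   # half of the transit time for each direction
```

With 60 min endurance, 30 min of mapping, a 12 m/s cruise speed and a 5 min reserve, the block may lie up to 9 km away, whereas a platform with 20 min endurance and 18 min of mapping cannot reach any remote block at all.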