JointNet: A Common Neural Network for Road and Building Extraction

Abstract

1. Introduction

- (1) A novel neural network was proposed for road and building extraction. The network can accurately extract both linear-shaped objects and large-scale objects.

- (2)

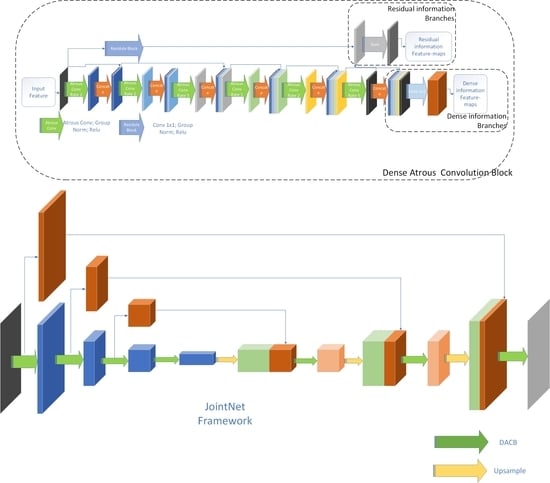

- A novel network module, dense atrous convolution block, was proposed. The module overcame the problem of the small receptive field of dense connectivity pattern by effectively organizing its atrous convolution layers with crafted rate settings. The module not only maintained the feature propagation efficiency of dense connectivity but also achieved a larger receptive field.

- (3) By using the focal loss [9], the imbalance between the road centerline target and its background was addressed. This change to the loss function improved the correctness of road extraction and enhanced the network's ability to find unlabeled roads.

- (4) By replacing the batch normalization (BN) [10] layers in the network with group normalization (GN) [11] layers, the degradation of network performance under small training batch sizes was resolved. Neural networks generally use BN as the standard normalization method to improve training. However, when the batch size is too small, the performance of BN decreases significantly. With GN as the normalization method, the training results of the proposed network were no longer affected by the batch size, so the model itself can grow larger, with more modules, to achieve better performance.
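To make the batch-size argument in contribution (4) concrete, the following is a minimal pure-Python sketch, not the authors' implementation. It omits the learnable scale and shift, represents a sample as a list of channels (each a flat list of values), and uses hypothetical toy inputs `a` and `b`. Group normalization computes its statistics inside a single sample, so the output for `a` is the same whether or not `b` shares the batch, whereas training-mode batch normalization changes.

```python
import math


def group_norm(sample, num_groups, eps=1e-5):
    """Normalize one sample (list of channels) using per-group statistics."""
    channels = len(sample)
    assert channels % num_groups == 0, "channels must divide evenly into groups"
    size = channels // num_groups
    out = []
    for g in range(num_groups):
        group = sample[g * size:(g + 1) * size]
        values = [v for ch in group for v in ch]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        scale = 1.0 / math.sqrt(var + eps)
        out.extend([[(v - mean) * scale for v in ch] for ch in group])
    return out


def batch_norm(batch, eps=1e-5):
    """Training-mode batch norm: per-channel statistics over the whole batch."""
    channels = len(batch[0])
    out = [[None] * channels for _ in batch]
    for c in range(channels):
        values = [v for sample in batch for v in sample[c]]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        scale = 1.0 / math.sqrt(var + eps)
        for n, sample in enumerate(batch):
            out[n][c] = [(v - mean) * scale for v in sample[c]]
    return out


# Hypothetical toy samples: 4 channels with 2 values each.
a = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
b = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0], [2.0, 8.0]]

gn_alone = group_norm(a, num_groups=2)
gn_in_batch = [group_norm(s, num_groups=2) for s in [a, b]][0]
bn_alone = batch_norm([a])[0]
bn_in_batch = batch_norm([a, b])[0]

print(gn_alone == gn_in_batch)  # True: GN ignores the rest of the batch
print(bn_alone == bn_in_batch)  # False: BN statistics shift with the batch
```

The group count of 2 is chosen only to keep the example small; the number of groups used in the proposed network is not stated in this section.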

2. Related Works

3. Methodology

3.1. Dense Atrous Convolution Blocks

3.2. JointNet Architecture

3.3. Group Normalization and Focal Loss

3.3.1. Group Normalization

3.3.2. Focal Loss
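As a rough illustration of why the focal loss [9] helps with the imbalance between thin road targets and background, the sketch below compares it with binary cross-entropy for a single pixel. The focusing parameter `gamma=2.0` and balance weight `alpha=0.25` are the defaults reported in [9]; the values used for the proposed network are not given here, so they are assumptions, as are the function names and the two example probabilities.

```python
import math


def binary_cross_entropy(p, y, eps=1e-7):
    """Cross-entropy for one pixel; p is the predicted road probability, y is 0 or 1."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -math.log(p if y == 1 else 1.0 - p)


def binary_focal_loss(p, y, gamma=2.0, alpha=0.25, eps=1e-7):
    """Focal loss for one pixel: -alpha_t * (1 - p_t)^gamma * log(p_t)."""
    p = min(max(p, eps), 1.0 - eps)
    p_t = p if y == 1 else 1.0 - p              # probability given to the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class balance weight
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


# An easy, well-classified background pixel versus a hard road pixel.
easy_bce = binary_cross_entropy(0.05, 0)
hard_bce = binary_cross_entropy(0.30, 1)
easy_fl = binary_focal_loss(0.05, 0)
hard_fl = binary_focal_loss(0.30, 1)

# The hard pixel dominates far more strongly under the focal loss.
print(hard_bce / easy_bce)
print(hard_fl / easy_fl)
```

Because the many easy background pixels are scaled down by the `(1 - p_t)^gamma` factor, the few hard road pixels contribute a larger share of the total loss.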

4. Experiment and Analysis

4.1. Datasets

4.1.1. Massachusetts Road and Building Datasets

4.1.2. National Laboratory of Pattern Recognition (NLPR) Road Datasets

4.2. Data Augmentation

4.3. Baseline Methods

4.4. Experimental Metrics

4.5. Results

4.5.1. Experimental Results on the Massachusetts Road Dataset

4.5.2. Experimental Results on the NLPR Road Dataset

4.5.3. Experimental Results on the Massachusetts Building Dataset

5. Discussion

- (1) In the road extraction task, the ground truth is a linear target only a few pixels wide. If the prediction deviates from the target location by even a few pixels, the evaluation results can differ substantially. The extraction accuracy for such targets therefore depends on how closely the shape and position of the prediction match the target. In the high-level features of a convolutional network, the spatial location information of the target becomes unstable after several rescaling operations. Reusing low-level features then becomes key: because they have not been rescaled, their spatial location information is more accurate than that of the high-level features. For this reason, the encoder-decoder network, which reuses low-level features through skip connections, played an important role in the road extraction task.

- (2) In building extraction, the key requirement for the network is a large receptive field. Accurate building extraction depends on capturing the complete edge information of a building, which is spread over a certain range of the remote sensing image. A network whose receptive field covers this range can extract the context information of the building, such as its edges. As the evaluation results show, when the receptive field is too small to cover the building target, a typical symptom is discontinuity in the central area of extracted large buildings. The semantic information in the high-level features covers a wider receptive field than that in the low-level features, so for building extraction the high-level features are more critical than the low-level ones.
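Point (1) explains why offsets of a few pixels matter so much for thin road targets; the relaxed scores reported with ρ = 3 in the result tables tolerate such offsets. The following is a minimal sketch of relaxed precision, assuming a predicted pixel counts as correct when it lies within ρ pixels of any ground-truth pixel. The use of Chebyshev distance, the function name, and the toy data are assumptions for illustration.

```python
def relaxed_precision(pred, truth, rho):
    """Fraction of predicted pixels within rho pixels of any ground-truth pixel."""
    if not pred:
        return 0.0
    hits = sum(
        1
        for (r, c) in pred
        if any(max(abs(r - tr), abs(c - tc)) <= rho for (tr, tc) in truth)
    )
    return hits / len(pred)


# A one-pixel-wide horizontal road on row 10, and a prediction shifted by two rows.
truth = {(10, c) for c in range(50)}
pred = {(12, c) for c in range(50)}

strict = relaxed_precision(pred, truth, rho=0)   # exact overlap only
relaxed = relaxed_precision(pred, truth, rho=3)  # tolerance of three pixels
print(strict, relaxed)  # 0.0 1.0
```

A prediction with the right shape but a two-pixel offset scores zero under the strict measure and one under the relaxed measure, which is the sensitivity described in point (1).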

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: New York, NY, USA, 2012; pp. 1097–1105.

2. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

3. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

4. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

5. Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv, 2017; arXiv:1706.05587.

6. Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. arXiv, 2018; arXiv:1802.02611.

7. Mnih, V. Machine Learning for Aerial Image Labeling. Ph.D. Thesis, University of Toronto, Toronto, ON, Canada, 2013.

8. Marcu, A.; Leordeanu, M. Dual local-global contextual pathways for recognition in aerial imagery. arXiv, 2016; arXiv:1605.05462.

9. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2017, PP, 2999–3007.

10. Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

11. Wu, Y.; He, K. Group Normalization. arXiv, 2018; arXiv:1803.08494.

12. Salakhutdinov, R.; Mnih, A.; Hinton, G. Restricted Boltzmann machines for collaborative filtering. In Proceedings of the 24th International Conference on Machine Learning, Corvallis, OR, USA, 20–24 June 2007; pp. 791–798.

13. Nair, V.; Hinton, G.E. Rectified linear units improve restricted boltzmann machines. In Proceedings of the 27th International Conference on Machine Learning (ICML-10), Haifa, Israel, 21–24 June 2010; pp. 807–814.

14. Mnih, V.; Larochelle, H.; Hinton, G.E. Conditional restricted boltzmann machines for structured output prediction. arXiv, 2012; arXiv:1202.3748.

15. Mnih, V.; Hinton, G.E. Learning to detect roads in high-resolution aerial images. In European Conference on Computer Vision; Springer: New York, NY, USA, 2010; pp. 210–223.

16. Saito, S.; Aoki, Y. Building and road detection from large aerial imagery. In Proceedings of the Image Processing: Machine Vision Applications VIII, San Francisco, CA, USA, 8–12 February 2015; Volume 9405, p. 94050K.

17. Saito, S.; Yamashita, Y.; Aoki, Y. Multiple Object Extraction from Aerial Imagery with Convolutional Neural Networks. Electron. Imaging 2016, 60, 10402–10402:9.

18. Zhang, Z.; Liu, Q.; Wang, Y. Road extraction by deep residual u-net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753.

19. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the International Conference on Medical Image Computing & Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015.

20. Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

21. Drăguţ, L.; Blaschke, T. Automated classification of landform elements using object-based image analysis. Geomorphology 2006, 81, 330–344.

22. Myint, S.W.; Gober, P.; Brazel, A.; Grossman-Clarke, S.; Weng, Q. Per-pixel vs. object-based classification of urban land cover extraction using high spatial resolution imagery. Remote Sens. Environ. 2011, 115, 1145–1161.

23. Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Fully convolutional neural networks for remote sensing image classification. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 5071–5074.

24. Marcu, A.; Costea, D.; Slusanschi, E.; Leordeanu, M. A Multi-Stage Multi-Task Neural Network for Aerial Scene Interpretation and Geolocalization. arXiv, 2018; arXiv:1804.01322.

25. Vincent, L.; Soille, P. Watersheds in Digital Spaces: An Efficient Algorithm Based on Immersion Simulations. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 13, 583–598.

26. Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC Superpixels Compared to State-of-the-Art Superpixel Methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.

27. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 8–12 June 2015; pp. 3431–3440.

28. Eigen, D.; Fergus, R. Predicting depth, surface normals and semantic labels with a common multi-scale convolutional architecture. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2650–2658.

29. Pinheiro, P.H.; Collobert, R. Recurrent convolutional neural networks for scene labeling. In Proceedings of the 31st International Conference on Machine Learning (ICML), Beijing, China, 21–26 June 2014; pp. 82–90.

30. Lin, G.; Shen, C.; Van Den Hengel, A.; Reid, I. Efficient piecewise training of deep structured models for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3194–3203.

31. Chen, L.C.; Yang, Y.; Wang, J.; Xu, W.; Yuille, A.L. Attention to scale: Scale-aware semantic image segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3640–3649.

32. Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. arXiv, 2015; arXiv:1511.00561.

33. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

34. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.

35. He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In European Conference on Computer Vision; Springer: New York, NY, USA, 2016; pp. 630–645.

36. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 8–12 June 2015; pp. 1–9.

37. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

38. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the 31st AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 4278–4284.

39. Wang, P.; Chen, P.; Yuan, Y.; Liu, D.; Huang, Z.; Hou, X.; Cottrell, G. Understanding convolution for semantic segmentation. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 1451–1460.

40. Frajka, T.; Zeger, K. Downsampling dependent upsampling of images. Signal Process. Image Commun. 2004, 19, 257–265.

41. Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807.

42. Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer Normalization. arXiv, 2016; arXiv:1607.06450.

43. Ulyanov, D.; Vedaldi, A.; Lempitsky, V. Instance Normalization: The Missing Ingredient for Fast Stylization. arXiv, 2016; arXiv:1607.08022.

44. Cheng, G.; Ying, W.; Xu, S.; Wang, H.; Xiang, S.; Pan, C. Automatic Road Detection and Centerline Extraction via Cascaded End-to-End Convolutional Neural Network. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3322–3337.

45. OpenStreetMap Contributors. OpenStreetMap. Available online: https://www.openstreetmap.org (accessed on 20 March 2019).

46. Simard, P.; Steinkraus, D.; Platt, J.C. Best Practices for Convolutional Neural Networks Applied to Visual Document Analysis. In Proceedings of the International Conference on Document Analysis Recognition, Edinburgh, UK, 3–6 August 2003.

47. Zhou, L.; Zhang, C.; Wu, M. D-linknet: Linknet with pretrained encoder and dilated convolution for high resolution satellite imagery road extraction. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018.

48. Demir, I.; Koperski, K.; Lindenbaum, D.; Pang, G.; Huang, J.; Basu, S.; Hughes, F.; Tuia, D.; Raska, R. Deepglobe 2018: A challenge to parse the earth through satellite images. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018.

49. Iglovikov, V.I.; Seferbekov, S.; Buslaev, A.V.; Shvets, A. TernausNetV2: Fully Convolutional Network for Instance Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018.

50. Ehrig, M.; Euzenat, J. Relaxed precision and recall for ontology matching. In Proceedings of the K-Cap 2005 Workshop on Integrating Ontology, Banff, AB, Canada, 2 October 2005.

51. Chollet, F. Keras. Available online: https://keras.io (accessed on 20 March 2019).

52. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv, 2014; arXiv:1412.6980.

53. Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic differentiation in PyTorch. NIPS-W. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

54. Hamaguchi, R.; Fujita, A.; Nemoto, K.; Imaizumi, T.; Hikosaka, S. Effective Use of Dilated Convolutions for Segmenting Small Object Instances in Remote Sensing Imagery. arXiv, 2017; arXiv:1709.00179.

55. Alshehhi, R.; Marpu, P.R.; Wei, L.W.; Mura, M.D. Simultaneous extraction of roads and buildings in remote sensing imagery with convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2017, 130, 139–149.

| Layers | Input | Kernel Size | Growth Rate | Atrous Conv Rate | Output |
|---|---|---|---|---|---|
| Atrous Conv 1 | α | 3 × 3 | k | 1 | k |
| Atrous Conv 2 | α + k | 3 × 3 | k | 2 | k |
| Atrous Conv 3 | α + 2k | 3 × 3 | k | 5 | k |
| Atrous Conv 4 | α + 3k | 3 × 3 | k | 1 | k |
| Atrous Conv 5 | α + 4k | 3 × 3 | k | 2 | k |
| Atrous Conv 6 | α + 5k | 3 × 3 | k | 5 | k |
| Conv RB(1) | α | 1 × 1 | None | 1 | k |
| Conv RB(2) | α + 6k | 1 × 1 | None | 1 | 4k |
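The effect of the rate pattern in this table can be checked with a short calculation. The sketch below assumes stride-1 convolutions and measures the theoretical receptive field along the deepest path through the six 3 × 3 atrous layers; it also lists the input widths α, α + k, ... produced by dense connectivity. The function names and the example values α = 3, k = 32 are chosen for illustration.

```python
def receptive_field(rates, kernel=3):
    """Receptive field of stacked stride-1 convolutions with the given dilation rates."""
    rf = 1
    for rate in rates:
        rf += (kernel - 1) * rate  # each layer widens the field by (kernel - 1) * rate
    return rf


def dense_block_inputs(alpha, k, layers=6):
    """Input channels seen by each densely connected layer: alpha, alpha + k, ..."""
    return [alpha + i * k for i in range(layers)]


dacb_rates = [1, 2, 5, 1, 2, 5]  # rates listed in the table
plain_rates = [1] * 6            # same depth without dilation

print(receptive_field(dacb_rates))   # 33
print(receptive_field(plain_rates))  # 13
print(dense_block_inputs(alpha=3, k=32))
```

Under these assumptions, the six atrous layers reach a 33 × 33 receptive field, compared with 13 × 13 for six ordinary 3 × 3 layers of the same depth.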

| Name | Module Type | Spatial Size | Input | Stride | GR(1) | RO(2) | DO(3) |
|---|---|---|---|---|---|---|---|
| Encoder Level 1 | DACB(4) | | 3 | 1 | 32 | 32 | 128 |
| Encoder Level 2 | DACB | | 32 | 2 | 64 | 64 | 256 |
| Encoder Level 3 | DACB | | 64 | 2 | 128 | 128 | 512 |
| Network Bridge | DACB | | 128 | 2 | 256 | 256 | None |
| Decoder Level 3 | DACB | | 768 | 1 | 128 | 128 | None |
| Decoder Level 2 | DACB | | 384 | 1 | 64 | 64 | None |
| Decoder Level 1 | DACB | | 192 | 1 | 32 | 32 | 128 |
| Classification layer | 1 × 1 Conv | | 128 | 1 | None | Class number | None |
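The channel counts in this table appear to follow a simple skip-connection rule, which the sketch below checks. It reads the numeric cells of each row, in order, as input channels, stride, GR, RO, and DO, and assumes that each decoder input is the RO of the preceding stage concatenated with the DO of the mirrored encoder level. Both the column reading and the rule are inferred from the numbers rather than stated in the text.

```python
# Rows as (name, input, GR, RO, DO), copied from the numeric cells of the table.
ENCODER = [
    ("Encoder Level 1", 3, 32, 32, 128),
    ("Encoder Level 2", 32, 64, 64, 256),
    ("Encoder Level 3", 64, 128, 128, 512),
]
BRIDGE = ("Network Bridge", 128, 256, 256, None)
DECODER = [
    ("Decoder Level 3", 768, 128, 128, None),
    ("Decoder Level 2", 384, 64, 64, None),
    ("Decoder Level 1", 192, 32, 32, 128),
]


def expected_decoder_inputs(encoder, bridge, decoder):
    """Assumed rule: decoder input = RO of previous stage + DO of mirrored encoder level."""
    inputs = []
    previous_ro = bridge[3]
    for dec_row, enc_row in zip(decoder, reversed(encoder)):
        inputs.append(previous_ro + enc_row[4])
        previous_ro = dec_row[3]
    return inputs


computed = expected_decoder_inputs(ENCODER, BRIDGE, DECODER)
listed = [row[1] for row in DECODER]
print(computed, listed)  # [768, 384, 192] [768, 384, 192]
```

The same reading also gives DO = 4 × GR wherever DO is listed, which matches the 4k output of the last 1 × 1 convolution in the block definition.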

| Methods | BEP(1) | Relaxed (ρ = 3) BEP | COR(2) | COM(3) | QUA(4) |
|---|---|---|---|---|---|
| Mnih-RBM(5) [7] | −−− | 0.8873 | −−− | −−− | −−− |
| Mnih-RBM+Post-processing [7] | −−− | 0.9006 | −−− | −−− | −−− |
| Saito et al. [17] | −−− | 0.9047 | −−− | −−− | −−− |
| U-Net (Keras, MSE(6)) [19] | 0.7628 | 0.9053 | 0.8269 | 0.6980 | 0.6102 |
| Res-U-Net (Keras, MSE) [18] | | | | | |
| CasNet (PyTorch, DA(7), BCE) [44] | | | | | |
| U-Net (PyTorch, DA, BCE(8)) [19] | | | | | |
| Res-U-Net (PyTorch, DA, BCE) [18] | | | | | |
| DLinkNet101 (PyTorch, DA, BCE) [47] | | | | | |
| Ours (PyTorch, DA, BCE) | | | | | |
| Ours (PyTorch, DA, FL(9)) | | | | | |
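For readers unfamiliar with the column abbreviations, the sketch below assumes that COR, COM, and QUA denote correctness, completeness, and quality as commonly defined in the road extraction literature, computed from true positives (TP), false positives (FP), and false negatives (FN). The function name and the example counts are hypothetical and are not taken from the experiments.

```python
def road_metrics(tp, fp, fn):
    """Return (correctness, completeness, quality) from pixel counts."""
    correctness = tp / (tp + fp) if (tp + fp) else 0.0     # share of predictions that are right
    completeness = tp / (tp + fn) if (tp + fn) else 0.0    # share of the target that was found
    quality = tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
    return correctness, completeness, quality


# Hypothetical counts for illustration only.
cor, com, qua = road_metrics(tp=80, fp=20, fn=20)
print(round(cor, 4), round(com, 4), round(qua, 4))  # 0.8 0.8 0.6667
```

Quality penalizes both kinds of error at once, so it is never higher than either correctness or completeness.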

| Methods | BEP(1) | Relaxed (ρ = 3) BEP | COR(2) | COM(3) | QUA(4) |
|---|---|---|---|---|---|
| CasNet (PyTorch, DA(5), BCE) [44] | | | | | |
| U-Net (PyTorch, DA, BCE(6)) [19] | | | | | |
| Res-U-Net (PyTorch, DA, BCE) [18] | | | | | |
| Ours (PyTorch, DA, BCE) | | | | | |
| Ours (PyTorch, DA, BCE(7)) | | | | | |

| Methods | BEP(1) | Relaxed (ρ = 3) BEP | COR(2) | COM(3) | QUA(4) |
|---|---|---|---|---|---|
| Mnih et al. [7] | −−− | −−− | −−− | −−− | |
| Saito et al. [17] | −−− | −−− | −−− | −−− | |
| Hamaguchi et al. [54] | −−− | −−− | −−− | −−− | |
| U-Net (Keras, BCE(5)) [19] | | | | | |
| Res-U-Net (Keras, BCE) [18] | | | | | |
| MTMS-Stage-1 (report) [24] | 0.8339 | 0.9604 | −−− | −−− | −−− |
| MTMS-Stage-1 (Keras, BCE) [24] | 0.8345 | 0.9595 | | | |
| TernausNetV2 (Keras, BCE) [49] | 0.8481 | 0.9643 | | | |
| Ours (Keras, BCE) | | | | | |
| Ours (Keras, MSE(7)) | | | | | |
| Ours (Keras, @448 × 448, BCE) | | | | | |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, Z.; Wang, Y. JointNet: A Common Neural Network for Road and Building Extraction. Remote Sens. 2019, 11, 696. https://doi.org/10.3390/rs11060696
