Using Multi-Spectral UAV Imagery to Extract Tree Crop Structural Properties and Assess Pruning Effects

Abstract

1. Introduction

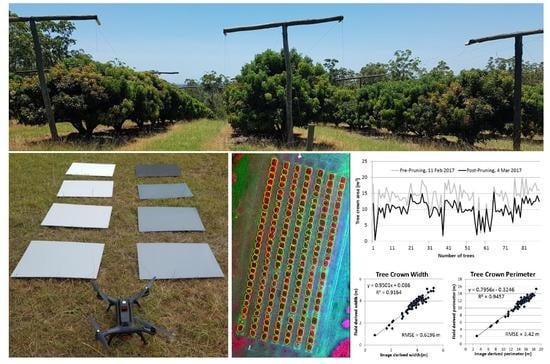

2. Study Area

3. Materials and Methods

3.1. Field Data

3.2. UAV Data and Pre-Processing

3.3. Geographic Object-Based Image Analysis

3.4. Tree Crown Parameter Extraction

4. Results and Discussion

4.1. Tree Crown Delineation

4.2. Mapping of Tree Structure

4.3. Pre- and Post-Pruning Tree Structure Comparison

4.4. Effects of Flying Height Differences

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Flying Height (m) | Overall Accuracy (%) | User's Accuracy (%) | Producer's Accuracy (%) |
|---|---|---|---|
| 30 | 96.5 | 97.8 | 98.6 |
| 50 | 96.4 | 97.6 | 98.8 |
| 70 | 96.2 | 96.9 | 99.3 |

| Flying Height (m) | Tree Height (m) | Crown Width (m) | Crown Perimeter (m) |
|---|---|---|---|
| 30 | 0.3860 | 0.2280 | 2.5105 |
| 50 | 0.3934 | 0.2839 | 2.6700 |
| 70 | 0.6374 | 0.2604 | 2.3672 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Johansen, K.; Raharjo, T.; McCabe, M.F. Using Multi-Spectral UAV Imagery to Extract Tree Crop Structural Properties and Assess Pruning Effects. Remote Sens. 2018, 10, 854. https://doi.org/10.3390/rs10060854