Optical Flow-Aware-Based Multi-Modal Fusion Network for Violence Detection

Abstract

1. Introduction

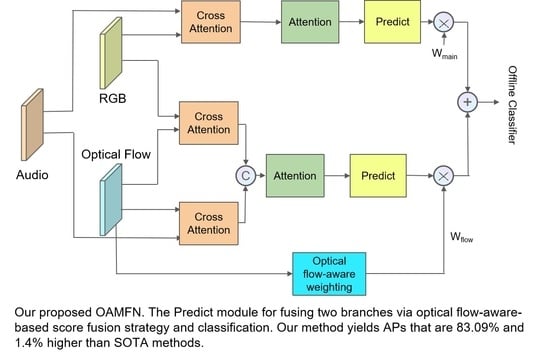

- We propose a novel two-branch, optical flow-aware multi-modal fusion network for violence detection that integrates audio, optical flow, and RGB features into a unified framework;

- We introduce three fusion strategies that extract important information and suppress unnecessary audio and visual information: an input fusion strategy, an attention-based halfway fusion strategy, and an optical flow-aware score fusion strategy;

- We propose an optical flow-aware score weighting mechanism that controls the contributions of the main branch and the optical flow branch under different optical flow conditions and improves the average precision (AP) of violence detection;

- We propose a novel cross-modal information fusion module (CIFM) and a novel channel attention module to weight the combined features, retaining useful information while suppressing redundancy and noise.
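The optical flow-aware score weighting listed in the contributions can be pictured as a gated blend of two branch scores. The sketch below is only an illustration under stated assumptions: the logistic gate, the `threshold` and `sharpness` parameters, the direction of the gate (more motion gives more weight to the flow branch), and the function names are all hypothetical, since this section does not give the exact weighting formula.

```python
import math


def flow_aware_weight(flow_magnitude, threshold=1.0, sharpness=4.0):
    """Map a clip's mean optical flow magnitude (>= 0) to a weight in (0, 1).

    Hypothetical gate: the logistic form, `threshold`, and `sharpness`
    are illustrative assumptions, not values from the paper.
    """
    return 1.0 / (1.0 + math.exp(-sharpness * (flow_magnitude - threshold)))


def fuse_scores(main_score, flow_score, flow_magnitude):
    """Blend the two branch scores with a flow-dependent weight.

    The result is a convex combination, so it always lies between
    the main-branch score and the optical-flow-branch score.
    """
    w = flow_aware_weight(flow_magnitude)
    return (1.0 - w) * main_score + w * flow_score
```

With these assumed defaults, a clip with little motion keeps the fused score close to the main-branch score, while a clip with strong motion moves it toward the optical flow branch score.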

2. Related Work

2.1. Multi-Modal Fusion Strategies

2.2. Multi-Modal Fusion Methods

2.2.1. Single Modal Attention

2.2.2. Cross-Modal Attention

3. Proposed Work

3.1. The Cross-Modal Information Fusion Module

3.1.1. Modal Combination

3.1.2. Adaptive Fusion

3.2. Channel Attention Module

3.3. Prediction Module

3.3.1. Transition Layer

3.3.2. Backbone

3.3.3. Optical Flow-Aware Weighting

3.3.4. Offline Detection

3.3.5. Online Detection

3.3.6. Optical Flow-Aware Score Fusion

3.4. Loss Function

3.4.1. Online Prediction Loss

3.4.2. Offline Prediction Loss

3.4.3. Cross Prediction Loss

3.4.4. Total Loss

4. Experiments

4.1. Datasets

4.2. Evaluation Criteria

4.3. Implementation Details

4.4. Ablation Study: Comparison of Modules in Our Method

4.5. A Comparison of the AP Performance with the Existing Methods on the XD-Violence Dataset

4.6. A Comparison of the Offline AP Performance on the Different Violent Classes

4.7. Qualitative Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Wan, S.; Ding, S.; Chen, C. Edge Computing Enabled Video Segmentation for Real-Time Traffic Monitoring in Internet of Vehicles. Pattern Recognit. 2022, 121, 108146.

- Bertini, M.; Del Bimbo, A.; Seidenari, L. Multi-scale and real-time non-parametric approach for anomaly detection and localization. Comput. Vis. Image Underst. 2012, 116, 320–329.

- Lu, C.; Shi, J.; Jia, J. Abnormal Event Detection at 150 FPS in MATLAB. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013.

- Xie, S.; Guan, Y. Motion instability based unsupervised online abnormal behaviors detection. Multimed. Tools Appl. 2016, 75, 7423–7444.

- Biswas, S.; Babu, R.V. Real time anomaly detection in H.264 compressed videos. In Proceedings of the 2013 Fourth National Conference on Computer Vision, Pattern Recognition, Image Processing and Graphics (NCVPRIPG), Jodhpur, India, 18–21 December 2013.

- Zhou, S.; Shen, W.; Zeng, D.; Fang, M.; Wei, Y.; Zhang, Z. Spatial-temporal convolutional neural networks for anomaly detection and localization in crowded scenes. Signal Process. Image Commun. Publ. Eur. Assoc. Signal Process. 2016, 47, 358–368.

- Sabokrou, M.; Fayyaz, M.; Fathy, M.; Klette, R. Deep-Cascade: Cascading 3D Deep Neural Networks for Fast Anomaly Detection and Localization in Crowded Scenes. IEEE Trans. Image Process. 2017, 26, 1992–2004.

- Sultani, W.; Chen, C.; Shah, M. Real-world Anomaly Detection in Surveillance Videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

- Zhu, Y.; Newsam, S. Motion-Aware Feature for Improved Video Anomaly Detection. arXiv 2019, arXiv:1907.10211.

- Hu, X.; Dai, J.; Huang, Y.; Yang, H.; Zhang, L.; Chen, W.; Yang, G.; Zhang, D. A Weakly Supervised Framework for Abnormal Behavior Detection and Localization in Crowded Scenes. Neurocomputing 2020, 383, 270–281.

- Nguyen, T.N.; Meunier, J. Anomaly Detection in Video Sequence with Appearance-Motion Correspondence. arXiv 2019, arXiv:1908.06351.

- Liu, W.; Luo, W.; Lian, D.; Gao, S. Future Frame Prediction for Anomaly Detection - A New Baseline. arXiv 2017, arXiv:1712.09867.

- Zaheer, M.Z.; Lee, J.H.; Astrid, M.; Lee, S.I. Old is Gold: Redefining the Adversarially Learned One-Class Classifier Training Paradigm. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Rodrigues, R.; Bhargava, N.; Velmurugan, R.; Chaudhuri, S. Multi-timescale Trajectory Prediction for Abnormal Human Activity Detection. In Proceedings of the 2020 IEEE Winter Conference on Applications of Computer Vision (WACV), Snowmass Village, CO, USA, 1–5 March 2020.

- Nam, J.; Alghoniemy, M.; Tewfik, A.H. Audio-visual content-based violent scene characterization. In Proceedings of the 1998 International Conference on Image Processing, ICIP98 (Cat. No.98CB36269), Chicago, IL, USA, 7 October 1998.

- Giannakopoulos, T.; Makris, A.; Kosmopoulos, D.; Perantonis, S.; Theodoridis, S. Audio-Visual Fusion for Detecting Violent Scenes in Videos. In Proceedings of the Artificial Intelligence: Theories, Models and Applications, Proceedings of the 6th Hellenic Conference on AI, SETN 2010, Athens, Greece, 4–7 May 2010; Springer: Berlin/Heidelberg, Germany, 2010.

- Jian, L.; Wang, W. Weakly-Supervised Violence Detection in Movies with Audio and Video Based Co-training. In Proceedings of the Advances in Multimedia Information Processing - PCM 2009, Proceedings of the 10th Pacific Rim Conference on Multimedia, Bangkok, Thailand, 15–18 December 2009; Springer: Berlin/Heidelberg, Germany, 2009.

- Giannakopoulos, T.; Pikrakis, A.; Theodoridis, S. A Multimodal Approach to Violence Detection in Video Sharing Sites. In Proceedings of the International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010.

- Zou, X.; Wu, O.; Wang, Q.; Hu, W.; Yang, J. Multi-modal Based Violent Movies Detection in Video Sharing Sites. In Proceedings of the Third Sino-Foreign-Interchange Conference on Intelligent Science and Intelligent Data Engineering, Nanjing, China, 15–17 October 2012; Springer: Berlin/Heidelberg, Germany, 2012.

- Cristani, M.; Bicego, M.; Murino, V. Audio-Visual Event Recognition in Surveillance Video Sequences. IEEE Trans. Multimed. 2007, 9, 257–267.

- Zajdel, W.; Krijnders, J.D.; Andringa, T.; Gavrila, D.M. CASSANDRA: Audio-video sensor fusion for aggression detection. In Proceedings of the 2007 IEEE Conference on Advanced Video and Signal Based Surveillance, London, UK, 5–7 September 2007.

- Wu, P.; Liu, J.; Shi, Y.; Sun, Y.; Shao, F.; Wu, Z.; Yang, Z. Not only Look, but also Listen: Learning Multimodal Violence Detection under Weak Supervision. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020.

- Gong, Y.; Wang, W.; Jiang, S.; Huang, Q.; Gao, W. Detecting Violent Scenes in Movies by Auditory and Visual Cues. In Proceedings of the Advances in Multimedia Information Processing - PCM 2008, Proceedings of the 9th Pacific Rim Conference on Multimedia, Tainan, Taiwan, 9–13 December 2008; Springer: Berlin/Heidelberg, Germany, 2008.

- Wu, P.; Liu, J. Learning Causal Temporal Relation and Feature Discrimination for Anomaly Detection. IEEE Trans. Image Process. 2021, 30, 3513–3527.

- Li, S.; Liu, F.; Jiao, L. Self-Training Multi-Sequence Learning with Transformer for Weakly Supervised Video Anomaly Detection. In Proceedings of the AAAI, Virtual, 24 February 2022.

- Pang, W.F.; He, Q.H.; Hu, Y.J.; Li, Y.X. Violence Detection In Videos Based On Fusing Visual And Audio Information. In Proceedings of the ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021.

- Li, C.; Song, D.; Tong, R.; Tang, M. Illumination-aware Faster R-CNN for Robust Multispectral Pedestrian Detection. arXiv 2018, arXiv:1803.05347.

- Lin, T.; Roychowdhury, A.; Maji, S. Bilinear CNN Models for Fine-grained Visual Recognition. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

- Liu, S.; Huang, D.; Wang, Y. Learning Spatial Fusion for Single-Shot Object Detection. arXiv 2019, arXiv:1911.09516.

- Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual Attention Network for Image Classification. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Zhong, Z.; Lin, Z.Q.; Bidart, R.; Hu, X.; Daya, I.B.; Li, Z.; Zheng, W.S.; Li, J.; Wong, A. Squeeze-and-Attention Networks for Semantic Segmentation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

- Li, C.; Wu, X.; Zhao, N.; Cao, X.; Tang, J. Fusing Two-Stream Convolutional Neural Networks for RGB-T Object Tracking. Neurocomputing 2017, 281, 78–85.

- Gao, Y.; Li, C.; Zhu, Y.; Tang, J.; He, T.; Wang, F. Deep Adaptive Fusion Network for High Performance RGBT Tracking. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), Seoul, Korea, 27 October–2 November 2019.

- Zhang, T.; Liu, X.; Zhang, Q.; Han, J. SiamCDA: Complementarity-and distractor-aware RGB-T tracking based on Siamese network. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 1403–1417.

- Lu, A.; Li, C.; Yan, Y.; Tang, J.; Luo, B. RGBT Tracking via Multi-Adapter Network with Hierarchical Divergence Loss. IEEE Trans. Image Process. 2021, 30, 5613–5625.

- Dou, Z.Y.; Xu, Y.; Gan, Z.; Wang, J.; Wang, S.; Wang, L.; Zhu, C.; Zhang, P.; Yuan, L.; Peng, N.; et al. An Empirical Study of Training End-to-End Vision-and-Language Transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022.

- Liu, Y.; Li, S.; Wu, Y.; Chen, C.W.; Shan, Y.; Qie, X. UMT: Unified Multi-modal Transformers for Joint Video Moment Retrieval and Highlight Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022.

- Badamdorj, T.; Rochan, M.; Wang, Y.; Cheng, L. Joint Visual and Audio Learning for Video Highlight Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021.

- Jiang, H.; Lin, Y.; Han, D.; Song, S.; Huang, G. Pseudo-Q: Generating Pseudo Language Queries for Visual Grounding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022.

- Hendricks, L.A.; Mellor, J.; Schneider, R.; Alayrac, J.B.; Nematzadeh, A. Decoupling the Role of Data, Attention, and Losses in Multimodal Transformers. Trans. Assoc. Comput. Linguist. 2021, 9, 570–585.

- Biswas, K.; Kumar, S.; Banerjee, S.; Pandey, A.K. SMU: Smooth activation function for deep networks using smoothing maximum technique. arXiv 2021, arXiv:2111.04682.

- Goodfellow, I.; Warde-Farley, D.; Mirza, M.; Courville, A.; Bengio, Y. Maxout Networks. Int. Conf. Mach. Learn. 2013, 28, 1319–1327.

- Carreira, J.; Zisserman, A. Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Gemmeke, J.F.; Ellis, D.P.; Freedman, D.; Jansen, A.; Lawrence, W.; Moore, R.C.; Plakal, M.; Ritter, M. Audio Set: An ontology and human-labeled dataset for audio events. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017.

- Hershey, S.; Chaudhuri, S.; Ellis, D.P.; Gemmeke, J.F.; Jansen, A.; Moore, R.C.; Plakal, M.; Platt, D.; Saurous, R.A.; Seybold, B.; et al. CNN Architectures for Large-Scale Audio Classification. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Tian, Y.; Pang, G.; Chen, Y.; Singh, R.; Verjans, J.W.; Carneiro, G. Weakly-supervised Video Anomaly Detection with Contrastive Learning of Long and Short-range Temporal Features. In Proceedings of the 2021 International Conference on Computer Vision (ICCV), Virtual, 11–17 October 2021.

- Scholkopf, B.; Williamson, R.C.; Smola, A.J.; Shawe-Taylor, J.; Platt, J.C. Support vector method for novelty detection. Adv. Neural Inf. Process. Syst. 1999, 12, 582–588.

- Hasan, M.; Choi, J.; Neumann, J.; Roy-Chowdhury, A.K.; Davis, L.S. Learning Temporal Regularity in Video Sequences. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

| Cross-Attention | Channel Attention | Optical Flow-Aware Fusion | AP (%) |
|---|---|---|---|
| 🗸 | | | 80.69 |
| | 🗸 | | 81.39 |
| | | 🗸 | 80.80 |
| 🗸 | 🗸 | | 82.58 |
| 🗸 | | 🗸 | 81.87 |
| | 🗸 | 🗸 | 82.43 |
| 🗸 | 🗸 | 🗸 | 83.09 |

| Supervision | Method | Feature | Online AP (%) | Offline AP (%) |
|---|---|---|---|---|
| Unsupervised | SVM | - | - | 50.78 |
| Unsupervised | OCSVM [48] | - | - | 27.25 |
| Unsupervised | Hasan et al. [49] | - | - | 30.77 |
| Weakly Supervised | Sultani et al. [8] | RGB | - | 73.20 |
| Weakly Supervised | Wu et al. [22] | RGB + Audio | 73.67 | 78.64 |
| Weakly Supervised | Tian et al. [47] | RGB | - | 77.81 |
| Weakly Supervised | CRFD [24] | RGB | - | 75.90 |
| Weakly Supervised | Pang et al. [26] | RGB + Audio | - | 81.69 |
| Weakly Supervised | MSL [25] | RGB | - | 78.59 |
| Weakly Supervised | Ours (without OASFM) | RGB + Flow + Audio | 77.24 | 82.58 |
| Weakly Supervised | Ours (with OASFM) | RGB + Flow + Audio | 78.09 | 83.09 |
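Both tables report average precision (AP). As a rough illustration of the metric, and not the authors' evaluation code, the sketch below computes AP from binary labels and predicted violence scores in plain Python. The function name and the tie-breaking behaviour (ties keep their input order) are assumptions made for the example.

```python
def average_precision(labels, scores):
    """Average precision for binary labels (1 = violent, 0 = normal).

    Items are ranked by descending score; AP is the mean of the
    precision values measured at the rank of each positive item.
    """
    if len(labels) != len(scores):
        raise ValueError("labels and scores must have the same length")
    positives = sum(labels)
    if positives == 0:
        raise ValueError("AP is undefined without positive labels")

    # Stable sort: items with equal scores keep their input order.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)

    hits = 0
    precision_sum = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precision_sum += hits / rank  # precision at this positive
    return precision_sum / positives
```

For example, with labels `[1, 0, 1, 0]` and scores `[0.9, 0.8, 0.7, 0.1]`, the positives sit at ranks 1 and 3, so the AP is (1/1 + 2/3) / 2 = 5/6, or about 83.33%.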


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xiao, Y.; Gao, G.; Wang, L.; Lai, H. Optical Flow-Aware-Based Multi-Modal Fusion Network for Violence Detection. Entropy 2022, 24, 939. https://doi.org/10.3390/e24070939
