Traceability Analyses between Features and Assets in Software Product Lines

Abstract

:1. Introduction

- Check if at least one of the pre-configured machines covers the needs of a new user configuration.

- Check if at least one of the pre-configured machines realizes (exactly) the needs of a new user configuration.

- Check if there are dead packages, i.e., packages that cannot be in any of the virtual machines.

- A simple and abstract set-theoretic formal semantics of SPL with variability and traceability constraints is proposed.

- A number of new analysis problems, useful for relating the features and core assets in an SPL, are described.

- Quantified Boolean Formulae (QBFs) are proposed as a natural and efficient way of modeling these problems. The evidence of the scalability of QSAT for the analysis problems in large SPLs (compared to SAT) is also provided.

- We present a tool named SPLAnE that enables SPL developers to perform existing operations in the literature over feature diagrams [6] and many new operations proposed in this paper. It also allows one to perform analysis operations on a component model and SPL model. We used the FaMa framework to develop SPLAnE that makes it flexible to extend with new analyses of specific needs.

- We experimented on our approach with a number of models, i.e.,: (i) real and large Debian models; (ii) randomly-generated SPL models from ten features to twenty thousand features with different levels of cross-tree constraints; and (iii) SPLOTrepository models. The experimental results also give the comparison across two QSAT solvers (CirQit and RaReQS) and three SAT solvers (Sat4j, PicoSAT and MiniSAT).

- An example from the cloud computing domain is presented to motivate the practical usefulness of the proposed approach.

2. Motivating Example

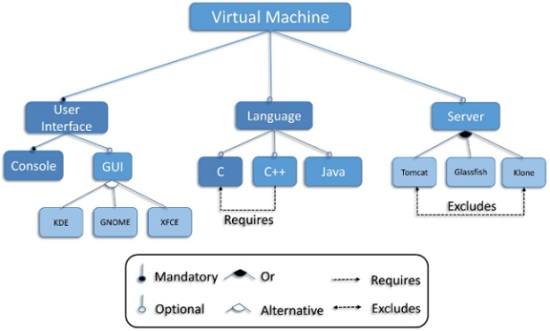

Feature Models

- mandatory: this relationship refers to features that have to be in the product if its parent feature is in the product. Note that a root feature is always mandatory in feature models.

- optional: this relationship states that a child feature is an option if its parent feature is included in the product.

- alternative: it relates a parent feature and a set of child features. Concretely, it means that exactly one child feature has to be in the product if the parent feature is included.

- or: this relationship refers to the selection of at least one feature among a group of child features, having a similar meaning to the logical OR.

- requires: this relationship implies that if the origin feature is in the product, then the destination feature should be included.

- excludes: this relationship between two features implies that, only one of the feature can be present in a product.

- Check if at least one of the pre-configured machines covers the needs of a new user configuration: In VMPL, there is always a need to check the existence of any virtual machine as per the given user specification. For example, the specification F= should be first analyzed to check the existence of any implementation that implements F. The implementation C= (equivalent to preconfigured Virtual Machine 2 in Figure 3) provides all the features in the specification F, it means that there exists a pre-configured machine which covers the user specification F.

- Check if at least one of the pre-configured machines realizes (exactly) the needs of a new user configuration: Multiple implementations may cover a given user specification F. We can analyze the VMPL to find the realized implementation for the user specification. For example, the implementation C= (equivalent to preconfigured Virtual Machine 3 in Figure 3) exactly provides all the feature in the specification F.

- Check if there are dead packages: Actual VMPLs contain a huge number of components for Linux systems. The components that are not present in any of the products are termed as dead elements in the product line. In the given VMPL, none of the components is dead.

3. SPLAnE Framework: Traceability and Implementation

3.1. Specification and Implementation

- Scope ++, }

- Core Assets }

- PL Specification ={} or ,} or },} or },} or },} or }}, where , and are some specifications.

- PL Implementation ={} or} or} or} or} or} or} or }}, where to are some implementations.

3.2. Traceability

3.3. The Implements Relation

4. Analysis Operations

4.1. SPL Model Verification

4.2. Complete and Sound SPL

4.3. Product Optimization

4.4. SPL Optimization

4.5. Generalization and Specialization in SPL

5. Validation

5.1. SPLAnE

5.2. Experimentation

5.2.1. Experiment 1: Validating SPLAnE with Feature Models from the SPLOT Repository

5.2.2. Experiment 2: Validating SPLAnE with Randomly Generated Large Size SPL Models

5.2.3. Experiment 3: Comparing SPLAnE and FaMa Approach in Front of Real and Large Debian Models

5.2.4. Experiment 4: Comparing SPLAnE and FaMa Scalability in Front of Randomly Generated Large Size Models

5.2.5. Experiment 5: Comparing SPLAnE with FaMa based Reasoning Techniques

5.3. Threats to Validity

- Ecological validity: While external validity, in general comes with the generalization of the results to other contexts (e.g., using other models), the ecological validity faces the threats affecting the experiment materials and tools. To prevent the threats of third party threads running on the machines, SPLAnE analyses were executed 10 times and then averaged.

6. Related Work

7. Future Work

- More solvers: Currently, we have implemented SPLANE analysis operations using a reduced number of QSAT and SAT solvers. In the future we plan to add some SMT (Satisfiability Modulo Theories) solvers to this list and proceed with comparative study detecting the pros and cons of each approach.

- Granularity: In this paper we have considered that the traceability relation exists at the level of features and components. However, A traceability relation can be extended to map a feature with a part of components or a component can be decompose into sub-component to perform a granular mapping or multi-level mapping.

- Logic paradigms: We have focused on SAT solving techniques, however, there are some other approaches such as BDD that are appealing for the same usage. In the future, we plan to do a comparison between a QSAT approach presented in paper and quantification over BDD with the implementation across all the analysis operation.

- Experimentation: In this paper, we have evaluated our approach in a diverse set of scenarios however, we focused in examples containing only 1:m relationships. In the future work we plan to extend the experimentation to n:m relationships to see if this has implications in the scalability of our solution.

8. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| SPL | Software Product Line |

| QBF | Quantified Boolean Formulae |

| QSAT | Quantified Satisfiability |

| SPLE | Software Product Line Engineering |

| GUI | Graphical User Interface |

| KDE | K desktop environment |

| GNOME | GNU Network Object Model Environment |

| XFCE | XForms Common Environment |

| CSP | Constraint Satisfaction Problem |

| BDD | Binary Decision Diagrams |

| CM | Component Model |

| FM | Feature Model |

| QCIR | Quantified CIRcuit |

| SPLOT | Software Product Line Online Tools |

| CTC | Cross Tree Constraints |

References

- Czarnecki, K.; Wasowski, A. Feature diagrams and logics: There and back again. In Proceedings of the 11th International Software Product Line Conference (SPLC 2007), Kyoto, Japan, 10–14 September 2007; pp. 23–34.

- Berg, K.; Bishop, J.; Muthig, D. Tracing software product line variability: From problem to solution space. In Proceedings of the 2005 Annual Research Conference of the South African Institute of Computer Scientists and Information Technologists on IT Research in Developing Countries, White River, South Africa, 20–22 September 2005; pp. 182–191.

- Czarnecki, K.; Eisenecker, U.W. Generative Programming: Methods, Tools, and Applications; Addison-Wesley Publishing: New York, NY, USA, 2000. [Google Scholar]

- Pohl, K.; Böckle, G.; van der Linden, F.J. Software Product Line Engineering—Foundations, Principles, and Techniques; Springer: Berlin/Heidelberg, Germany, 2005. [Google Scholar]

- Clements, P.C.; Northrop, L.M. Software Product Lines: Practices and Patterns; Addison-Wesley: Boston, MA, USA, 2001. [Google Scholar]

- Benavides, D.; Segura, S.; Cortés, A.R. Automated analysis of feature models 20 years later: A literature review. Inf. Syst. 2010, 35, 615–636. [Google Scholar] [CrossRef] [Green Version]

- Pohl, K.; Metzger, A. Variability management in software product line engineering. In Proceedings of the 28th International Conference on Software Engineering, Shanghai, China, 20–28 May 2006; ACM: New York, NY, USA, 2006; pp. 1049–1050. [Google Scholar]

- Anquetil, N.; Grammel, B.; Galvao Lourenco da Silva, I.; Noppen, J.A.R.; Shakil Khan, S.; Arboleda, H.; Rashid, A.; Garcia, A. Traceability for model driven, software product line engineering. In Proceedings of the ECMDA Traceability Workshop, Berlin, Germany, 12 June 2008; pp. 77–86.

- Beuche, D.; Papajewski, H.; Schröder-Preikschat, W. Variability management with feature models. Sci. Comput. Program. 2004, 53, 333–352. [Google Scholar] [CrossRef]

- Czarnecki, K.; Antkiewicz, M. Mapping features to models: A template approach based on superimposed variants. In Proceedings of the 4th International Conference on Generative Programming and Component Engineering, Tallinn, Estonia, 29 September–1 October 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 422–437. [Google Scholar]

- Czarnecki, K.; Pietroszek, K. Verifying feature-based model templates against well-formedness OCL constraints. In Proceedings of the 5th International Conference on Generative Programming and Component Engineering, Portland, OR, USA, 22–26 October 2006; ACM: New York, NY, USA, 2006; pp. 211–220. [Google Scholar]

- DeBaud, J.M.; Schmid, K. A systematic approach to derive the scope of software product lines. In Proceedings of the 21st International Conference on Software Engineering, Los Angeles, CA, USA, 16–22 May 1999; ACM: New York, NY, USA, 1999; pp. 34–43. [Google Scholar]

- Eisenbarth, T.; Koschke, R.; Simon, D. A formal method for the analysis of product maps. In Proceedings of the Requirements Engineering for Product Lines Workshop, Essen, Germany, 9–13 September 2002.

- Satyananda, T.K.; Lee, D.; Kang, S.; Hashmi, S.I. Identifying traceability between feature model and software architecture in software product line using formal concept analysis. In Proceedings of the International Conference on Computational Science and its Applications (ICCSA 2007), Kuala Lumpur, Malaysia, 26–29 August 2007; pp. 380–388.

- Zhu, C.; Lee, Y.; Zhao, W.; Zhang, J. A feature oriented approach to mapping from domain requirements to product line architecture. In Proceedings of the 2006 International Conference on Software Engineering Research and Practice, Las Vegas, NV, USA, 26–29 June 2006; pp. 219–225.

- Anquetil, N.; Kulesza, U.; Mitschke, R.; Moreira, A.; Royer, J.C.; Rummler, A.; Sousa, A. A model-driven traceability framework for software product lines. Softw. Syst. Model. 2010, 9, 427–451. [Google Scholar] [CrossRef] [Green Version]

- Cavalcanti, Y.A.C.; do Carmo Machado, I.; da Mota, P.A.; Neto, S.; Lobato, L.L.; de Almeida, E.S.; de Lemos Meira, S.R. Towards Metamodel Support for Variability and Traceability in Software Product Lines. In Proceedings of the 5th Workshop on Variability Modeling of Software-Intensive Systems, Namur, Belgium, 27–29 January 2011; ACM: New York, NY, USA, 2011; pp. 49–57. [Google Scholar]

- Ghanam, Y.; Maurer, F. Extreme product line engineering: Managing variability and traceability via executable specifications. In Proceedings of the 2009 Agile Conference, Chicago, IL, USA, 24–28 August 2009; pp. 41–48.

- Riebisch, M.; Brcina, R. Optimizing design for variability using traceability links. In Proceedings of the 15th Annual IEEE International Conference and Workshop on the Engineering of Computer Based Systems, Belfast, Northern Ireland, 31 March–4 April 2008; IEEE: Washington, DC, USA, 2008; pp. 235–244. [Google Scholar]

- Metzger, A.; Heymans, P.; Pohl, K.; Schobbens, P.Y.; Saval, G. Disambiguating the documentation of variability in software product lines: A separation of concerns, formalization and automated analysis. In Proceedings of the 15th IEEE International Requirements Engineering Conference (RE 2007), Delhi, India, 15–19 October 2007; pp. 243–253.

- SAT4J-Solver. Available online: http://www.sat4j.org/ (accessed on 7 July 2016).

- MiniSAT-Solver. Available online: http://minisat.se/ (accessed on 7 July 2016).

- PicoSAT. Available online: http://fmv.jku.at/picosat/ (accessed on 7 July 2016).

- CirQit. Available online: http://www.cs.utoronto.ca/~alexia/cirqit/ (accessed on 7 July 2016).

- RAReQS-NN. Available online: http://sat.inesc-id.pt/~mikolas/sw/rareqs-nn/ (accessed on 7 July 2016).

- Mohalik, S.; Ramesh, S.; Millo, J.V.; Krishna, S.N.; Narwane, G.K. Tracing SPLs precisely and efficiently. In Proceedings of the 16th International Software Product Line Conference–Volume 1, Salvador, Brazil, 2–7 September 2012; pp. 186–195.

- Benavides, D.; Segura, S.; Trinidad, P.; Cortés, A.R. FAMA: Tooling a framework for the automated analysis of feature models. In Proceedings of the First International Workshop on Variability Modelling of Softwareintensive Systems, Limerick, Ireland, 16–18 January 2007; pp. 129–134.

- SPLAnE. Available online: http://www.cse.iitb.ac.in/~splane/ (accessed on 7 July 2016).

- Kang, K.C.; Cohen, S.G.; Hess, J.A.; Novak, W.E.; Peterson, A.S. Feature-Oriented Domain Analysis (FODA) Feasibility Study; Technical Report CMU/SEI-90-TR-21; Software Engineering Institute of Carnegie Mellon University: Pittsburgh, PA, USA, 1990. [Google Scholar]

- Schobbens, P.Y.; Heymans, P.; Trigaux, J.C.; Bontemps, Y. Generic semantics of feature diagrams. Comput. Netw. 2007, 51, 456–479. [Google Scholar] [CrossRef]

- Batory, D. Feature models, grammars, and propositional formulas. In Proceedings of the 9th International Conference on Software Product Lines, Rennes, France, 26–29 September 2005; pp. 7–20.

- The Yices SMT Solver. Available online: http://yices.csl.sri.com/ (accessed on 7 July 2016).

- BDDSolve. Available online: http://www.win.tue.nl/~wieger/bddsolve/ (accessed on 7 July 2016).

- Stockmeyer, L.J. The polynomial-time hierarchy. Theor. Comput. Sci. 1976, 3, 1–22. [Google Scholar] [CrossRef]

- SPLAnE-TR. Available online: http://www.cse.iitb.ac.in/~krishnas/tr2012.pdf (accessed on 7 July 2016).

- Roos-Frantz, F.; Galindo, J.A.; Benavides, D.; Ruiz-Cortés, A. FaMa-OVM: A tool for the automated analysis of OVMs. In Proceedings of the 16th International Software Product Line Conference, Salvador, Brazil, 2–7 September 2012; pp. 250–254.

- Galindo, J.A.; Benavides, D.; Segura, S. Debian packages repositories as software product line models. Towards automated analysis. In Proceedings of the 1st International Workshop on Automated Configuration and Tailoring of Applications, Antwerp, Belgium, 20 September 2010; pp. 29–34.

- Seidl, M. The qpro Input Format: A Textual Syntax for QBFs in Negation Normal Form. Available online: http://qbf.satisfiability.org/gallery/qpro.pdf (accessed on 7 July 2016).

- Gallery, Q. QCIR-G14: A Non-Prenex Non-CNF Format for Quantified Boolean Formulas. Available online: http://qbf.satisfiability.org/gallery/qcir-gallery14.pdf (accessed on 7 July 2016).

- Peschiera, C.; Pulina, L.; Tacchella, A.; Bubeck, U.; Kullmann, O.; Lynce, I. The Seventh QBF Solvers Evaluation (QBFEVAL’10). In Proceedings of the 13th International Conference (SAT 2010), Edinburgh, UK, 11–14 July 2010; pp. 237–250.

- Mendonca, M.; Branco, M.; Cowan, D. SPLOT: Software product lines online tools. In Proceedings of the 24th ACM SIGPLAN Conference Companion on Object Oriented Programming Systems Languages and Applications, Orlando, FL, USA, 25–29 October 2009; ACM: New York, NY, USA, 2009; pp. 761–762. [Google Scholar]

- Segura, S.; Galindo, J.A.; Benavides, D.; Parejo, J.A.; Ruiz-Cortés, A. BeTTy: Benchmarking and testing on the automated analysis of feature models. In Proceedings of the Sixth International Workshop on Variability Modeling of Software-Intensive Systems, Leipzig, Germany, 25–27 January 2012; pp. 63–71.

- Thüm, T.; Batory, D.S.; Kästner, C. Reasoning about edits to feature models. In Proceedings of the 31st International Conference on Software Engineering (ICSE 2009), Vancouver, BC, Canada, 16–24 May 2009; pp. 254–264.

- SPLAnE. Available online: http://www.cse.iitb.ac.in\~splane (accessed on 7 July 2016).

- White, J.; Benavides, D.; Schmidt, D.; Trinidad, P.; Dougherty, B.; Ruiz-Cortes, A. Automated diagnosis of feature model configurations. J. Syst. Softw. 2010, 83, 1094–1107. [Google Scholar] [CrossRef] [Green Version]

- Bagheri, E.; di Noia, T.; Ragone, A.; Gasevic, D. Configuring software product line feature models based on stakeholders’ soft and hard requirements. In Proceedings of the 14th International Conference on Software Product Lines: Going Beyond, Jeju Island, Korea, 13–17 September 2010; pp. 16–31.

- Soltani, S.; Asadi, M.; Hatala, M.; Gasević, D.; Bagheri, E. Automated planning for feature model configuration based on stakeholders’ business concerns. In Proceedings of the 26th IEEE/ACM International Conference on Automated Software Engineering, Lawrence, KS, USA, 6–10 November 2011; pp. 536–539.

- Borba, P.; Teixeira, L.; Gheyi, R. A theory of software product line refinement. Theor. Comput. Sci. 2012, 455, 2–30. [Google Scholar] [CrossRef]

- Mendonca, M.; Wasowski, A.; Czarnecki, K. SAT-based analysis of feature models is easy. In Proceedings of the 13th International Software Product Lines (SPLC 2009), San Francisco, CA, USA, 24–28 August 2009; pp. 231–240.

- She, S.; Lotufo, R.; Berger, T.; Wasowski, A.; Czarnecki, K. Reverse engineering feature models. In Proceedings of the 33rd International Conference on Software Engineering (ICSE 2011), Waikiki, HI, USA, 21–28 May 2011; pp. 461–470.

- Janota, M.; Kiniry, J. Reasoning about feature models in higher-order logic. In Proceedings of the 11th International Software Product Lines (SPLC 2007), Kyoto, Japan, 10–14 September 2007; pp. 13–22.

- Acher, M.; Collet, P.; Lahire, P.; France, R.B. Separation of concerns in feature modeling: Support and applications. In Proceedings of the 11th International Conference on Aspect-Oriented Software Development (AOSD 2012), Potsdam, Germany, 25–30 March 2012; pp. 1–12.

| Feature | Components | Feature | Components |

|---|---|---|---|

| C | |||

| ++ | |||

| Properties | Formula |

|---|---|

| Valid Model | |

| Complete Traceability | |

| Void Product Model | ⋯ ∧∧ |

| , | |

| Ψ complete | |

| Ψ sound | ⇒⋯ |

| ∧ | |

| F existentially explicit | |

| F universally explicit | |

| . | |

| F has unique implementation = | |

| ⋯ ⋯∧ | |

| ⇒ | |

| common | |

| live | |

| c dead | |

| C superfluous | |

| redundant | |

| critical for | |

| Union | ⋯ ⇔ |

| ∧ | |

| Intersection | ⇔∧ ⇔ |

| Hypotheses of Experiment 1 | |

|---|---|

| Null Hypothesis () | SPLAnE does not scale when coping with SPLOT model repository. |

| Alt. Hypothesis () | SPLAnE does scale when coping with SPLOT model repository. |

| Models used as input | Feature Model for TPL, MPPL and ESPL were taken from [41]. ECPL is taken from [26]. VMPL is presented in current paper. SPLOT repository. The 69,800 SPL models were generated from 698 SPLOT Models. |

| Blocking variables | For each SPLOT model, we used 10 different topology and 10 level of cross-tree constraints to get 100 SPL models. Percentages of cross-tree constraints were 5%, 10%, 15%, 20%, 25%, 30%, 35%, 40%, 45% and 50%. |

| Hypotheses of Experiment 2 | |

| Null Hypothesis () | SPLAnE does not scale when coping with randomly generated SPL models. |

| Alt. Hypothesis () | SPLAnE does scale when coping with randomly generated SPL models. |

| Model used as input | 1000 Randomly generated SPL Models. |

| Blocking variables | We generated 10 random feature models with the number of features as 10, 50, 100, 500, 1000, 3000, 5000, 10,000, 15,000 and 20,000. For each feature model, 100 SPL models were generated by changing it to 10 different topology across 10 different cross tree constraints. Number of components in each model were three-times the number of features. Percentages of cross-tree constraints: 5%, 10%, 15%, 20%, 25%, 30%, 35%, 40%, 45% and 50%. |

| Hypotheses of Experiment 3 | |

| Null Hypothesis () | The use of SPLAnE will not result in a faster executions of operations than SAT-based techniques in front of a real very-large SPL models. |

| Alt. Hypothesis () | The use of SPLAnE will result in a faster executions of operations than SAT-based techniques in front of a real very-large SPL models. |

| Model used as input | We used as input the Debian variability model extracted from [37] that you can find at [28] |

| Hypotheses of Experiment 4 | |

| Null Hypothesis () | The use of SPLAnE will not result in a faster executions of operations than SAT-based techniques in front of randomly generated SPL models. |

| Alt. Hypothesis () | The use of SPLAnE will result in a faster executions of operations than SAT-based techniques in front of randomly generated SPL models. |

| Model used as input | We used as input random models varying from ten features to twenty thousand features. |

| Hypotheses of Experiment 5 | |

| Null Hypothesis () | The QSAT based reasoning technique is not faster as compare to SAT based technique for operations like completeness and soundness. |

| Alt. Hypothesis () | The QSAT based reasoning technique is faster as compare to SAT based technique for operations like completeness and soundness. |

| Model used as input | We used as input random models varying from ten features to twenty thousand features and SPLOT repository models. |

| Constants | |

| QSAT and SAT solvers | CirQit solver [24], RaReQS solver [25], Sat4j [21], PicoSAT [23] and MiniSAT [22] |

| Heuristic for variable selection in the QSAT and SAT solver | Default |

| SPL name | ECPL | VMPL | MPPL | TPL | ESPL |

|---|---|---|---|---|---|

| #Features | 8 | 15 | 25 | 34 | 290 |

| #Components | 12 | 20 | 41 | 40 | 290 |

| Analysis Operations | Time (ms) | Time (ms) | Time (ms) | Time (ms) | Time (ms) |

| 10 | 14 | 14 | 20 | 35 | |

| 12 | 15 | 13 | 12 | 37 | |

| 14 | 16 | 15 | 28 | 40 | |

| 18 | 25 | 23 | 29 | 55 | |

| 7 | 13 | 11 | 17 | 45 | |

| 9 | 15 | 13 | 12 | 48 | |

| 0 | 1 | 0 | 1 | 1 | |

| 8 | 10 | 9 | 10 | 22 | |

| 10 | 26 | 24 | 14 | 78 | |

| 12 | 13 | 10 | 14 | 74 | |

| 18 | 30 | 26 | 284 | 2135 | |

| 350 | 2120 | 1323 | 730 | 6550 | |

| 15 | 22 | 19 | 28 | 74 | |

| 18 | 45 | 61 | 25 | 82 | |

| 11 | 20 | 18 | 27 | 65 | |

| 14 | 24 | 19 | 21 | 38 | |

| 10 | 14 | 15 | 19 | 34 | |

| 14 | 18 | 16 | 26 | 45 | |

| 18 | 30 | 25 | 35 | 37 | |

| 18 | 24 | 20 | 32 | 48 | |

| 9 | 14 | 11 | 22 | 37 | |

| 11 | 17 | 16 | 28 | 28 | |

| 15 | 20 | 21 | 19 | 46 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Narwane, G.K.; Galindo, J.A.; Krishna, S.N.; Benavides, D.; Millo, J.-V.; Ramesh, S. Traceability Analyses between Features and Assets in Software Product Lines. Entropy 2016, 18, 269. https://doi.org/10.3390/e18080269

Narwane GK, Galindo JA, Krishna SN, Benavides D, Millo J-V, Ramesh S. Traceability Analyses between Features and Assets in Software Product Lines. Entropy. 2016; 18(8):269. https://doi.org/10.3390/e18080269

Chicago/Turabian StyleNarwane, Ganesh Khandu, José A. Galindo, Shankara Narayanan Krishna, David Benavides, Jean-Vivien Millo, and S. Ramesh. 2016. "Traceability Analyses between Features and Assets in Software Product Lines" Entropy 18, no. 8: 269. https://doi.org/10.3390/e18080269