Personalizing Care Through Robotic Assistance and Clinical Supervision

- 1Scuola Superiore Sant’Anna, Pisa, Italy

- 2Department of Industrial Engineering, University of Florence, Florence, Italy

- 3CNR–Institute of Cognitive Sciences and Technologies (CNR-ISTC), Rome, Italy

By 2030, the World Health Organization (WHO) foresees a worldwide workforce shortfall of healthcare professionals, with dramatic consequences for patients, economies, and communities. Research in assistive robotics has received increasing attention during the last decade, demonstrating its utility in the realization of intelligent robotic solutions for healthcare and social assistance, also to compensate for such workforce shortages. Nevertheless, a challenge for effective assistive robots is dealing with a high variety of situations and contextualizing their interactions according to the living contexts and habits (or preferences) of assisted people. This study presents a novel cognitive system for assistive robots that relies on artificial intelligence (AI) representation and reasoning features/services to support the decision-making processes of healthcare assistants. We propose an original integration of AI-based features, that is, knowledge representation and reasoning and automated planning, to 1) define a human-in-the-loop continuous assistance procedure that helps clinicians in evaluating and managing patients and 2) dynamically adapt robot behaviors to the specific needs and interaction abilities of patients. The system is deployed in a realistic assistive scenario to demonstrate its feasibility in supporting a clinician taking care of several patients with different conditions and needs.

1 Introduction

By 2030, the World Health Organization (WHO) foresees a worldwide workforce shortfall of about 18 million healthcare professionals, with dramatic consequences for patients, economies, and communities (Liu et al., 2017). The development of ICT-based integrated care solutions offers a variety of ways to address this issue. Research in assistive robotics has received increasing attention during the last decade, aiming at the realization of intelligent robotic solutions for healthcare and social assistance, also to compensate for such workforce shortages. The potential impact of healthcare and assistive robots is also witnessed by their deployment to deal with the COVID-19 pandemic (Murphy et al., 2022). Remarkable results have been achieved by integrating social robots in realistic assistive scenarios with human users (see, e.g., Cavallo et al., 2018; Angelini et al., 2019; D’Onofrio et al., 2016; Bertolini et al., 2016; Coradeschi et al., 2013), also including the case of assistance and monitoring of impaired and frail people (see, e.g., Casey et al., 2016; Fiorini et al., 2017; Mancioppi et al., 2019). Assistive robots can then be used to support healthcare professionals in their activities, augmenting their capacity to deal with a large number of patients. Moreover, human–robot interaction (HRI) is now a very compelling field, also used to better understand how humans perceive, interact with, or accept these machines in social contexts (Wykowska et al., 2016). Several studies investigated the relationships between user needs and assistive robot features when deployed inside integrated care solutions for older adults living alone in their homes (see, e.g., Cesta et al., 2018; Cortellessa et al., 2021).
A crucial requirement for effective assistive robotic systems is their ability to deal with a high variety of situations and contextualize their interactions according to living contexts and habits (or preferences) of assisted people (Rossi et al., 2017; Bruno et al., 2019; Umbrico et al., 2020c). A key current challenge consists in realizing advanced control systems endowing assistive robots with a rich portfolio of high-level cognitive and interaction capabilities (Nocentini et al., 2019; Fiorini et al., 2020a) to realize personalized and adaptive assistance (Tapus et al., 2007; Umbrico et al., 2020a; Andriella et al., 2022) and thus achieve a good level of acceptance (Rossi et al., 2017; Moro et al., 2018).

This study presents a cognitive system for assistive robots that relies on ontology-based representation and reasoning capabilities to support healthcare professionals and elderly users during assessment and therapy administration. More specifically, the presented approach pursues a human-in-the-loop methodology that leverages “robot-based” user profiling and artificial intelligence (AI) representation and reasoning features/services to support the decision-making processes of healthcare assistants. The objective is, on the one hand, to support healthcare professionals during patient assessment and therapy administration and, on the other hand, to provide assistive robots with personalization and adaptability features to support patients characterized by heterogeneous health-related needs. Taking inspiration from cognitive architecture research (Langley et al., 2009; Lieto et al., 2018; Kotseruba and Tsotsos, 2020), we propose the integration of AI-based features, that is, knowledge representation and reasoning and automated planning, to 1) define a human-in-the-loop process for continuous evaluation and treatment of patients and 2) dynamically adapt robot behaviors to the specific needs and interaction abilities of patients.

The system is deployed on a social assistive robot and validated in a realistic scenario. We show how an assistive robot endowed with cognitive control features is able to autonomously contextualize its behavior and effectively support both patients and clinicians in the synthesis of personalized cognitive interventions. A key point lies in the mutual assistance between the clinician and the robot through a “mixed-initiative” workflow. The role of the clinician is essential to refine and validate decisions made by the robot. In turn, the robot supports the clinician in the screening and monitoring of patients as well as in the administration of a therapy. In this regard, the main contribution of the work concerns the correlation between the standard screening practices used by therapists and the internal user model used by the robot. This correlation allows a robot to correctly interpret health-related data about patients provided by therapists. In particular, it enables the transfer of knowledge from clinicians to robots and is thus crucial to synthesizing effective and personalized assistive behaviors.

A profiling procedure is performed through a robotic platform during the administration of the Mini-Mental State Examination (MMSE) to patients with suspected cognitive decline. As shown in Rossi et al. (2018) and Di Nuovo et al. (2019), the use of a robot guarantees test neutrality and attainable standardization for the administration of cognitive tests. Data about the quality of interaction are extracted to refine interaction modalities and thus shape robot behaviors when interacting with users. User modeling capabilities of the robot rely on an ontological reification of the International Classification of Functioning, Disability and Health1 (ICF). The obtained ontological model defines a well-structured and general reference framework suitable to autonomously reason about the health status of a person and elicit fitting interaction parameters. Many works in the literature deal with user modeling and propose different frameworks, depending on the specific application needs (Lemaignan et al., 2010; Awaad et al., 2015; Tenorth and Beetz, 2015; Lemaignan et al., 2017; Porzel et al., 2020).

Concerning healthcare and assistive domains, user modeling is particularly crucial to support a user-centered design and realize effective assistive technologies (LeRouge et al., 2013). Other works have used the ICF framework as a reference to characterize cognitive and physical conditions of users. For example, Kostavelis et al. (2019) introduced a novel robot-based methodology for assessing users’ skills in order to characterize the needed level of daily assistance. Filippeschi et al. (2018) used the ICF to characterize cognitive and physical skills of users and accordingly represent the outcomes of the implemented robot-based assessment procedures. Similarly, García-Betances et al. (2016) used the ICF to represent needs and requirements of different types of users and support the user-centered design of ICT technologies. In particular, the latter work integrates an ontological model of the ICF into the cognitive architecture ACT-R (Anderson et al., 1997) to simulate the behaviors of different types of users.

Nevertheless, the aforementioned works present a “rigid” and static representation, as they usually do not rely on a well-structured ontological formalism to characterize knowledge about users (i.e., user profiles) in different situations. Related works usually do not integrate online reasoning mechanisms that allow assistive robots to autonomously reason about the specific needs of a user and autonomously (or partially autonomously) decide the kind of intervention that best fits such needs. Conversely, our approach pursues a highly flexible solution implementing the cognitive capabilities needed to understand the health conditions of users and (autonomously) personalize assistance accordingly, under the supervision of a human expert.

2 Continuous Assessment and Monitoring

We aim at leveraging the interaction capabilities of socially assistive robots to support clinicians in assessing and monitoring the cognitive state of patients. We envisage a multi-actor HRI approach in which an assistive robot can facilitate the interactions. In particular, we propose a continuous assessment and monitoring procedure in which a robot supports a clinician by 1) proposing a set of tests suitable for the specific needs of a patient, 2) administering the chosen tests, and 3) monitoring (and reasoning over) the performance of the patient. In this way, the employment of assistive robots can relieve clinicians of some of their activities and thus support them in dealing with a larger number of patients. In addition, a robot can continuously and proactively stimulate patients by administering suitable exercises and generally motivating participation in and adherence to the therapy.

2.1 Mixed-Initiative Design of Cognitive Stimulation Therapy

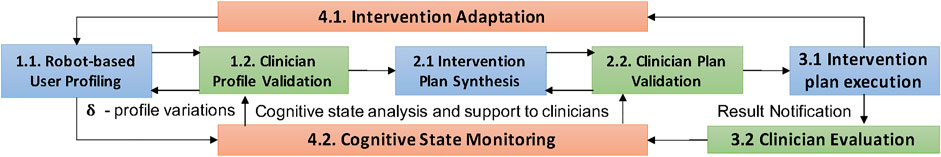

We envisage a novel cognitive intervention program where a robot constantly supports clinicians in evaluating/monitoring the cognitive state of a patient and in making decisions about the intervention plan to follow. The general structure is depicted in Figure 1. The process fosters a continuous “feedback loop” between the robot and the clinician. It interleaves patient–robot interactions (i.e., steps 1.1, 2.1, and 3.1 in Figure 1) with direct clinician validation and involvement (i.e., steps 1.2, 2.2, and 3.2 in Figure 1). The interleaving of steps performed by the two actors aims at achieving a fruitful synergy that combines the computational capabilities of the robot with the analytical capabilities of the clinician.

It is worth noting that the clinician is constantly involved in the decisional process and maintains control over the decisions made by the robot by validating them. Each cycle consists of a number of human–robot interaction steps aiming at 1) profiling the health state of a person (steps 1.1 and 1.2), 2) defining an intervention plan suitable for the specific health needs of a patient (steps 2.1 and 2.2), and 3) executing the plan by administering exercises within a certain temporal horizon (e.g., a week or a month) and evaluating outcomes (steps 3.1 and 3.2).

The cyclic repetition of these phases allows a clinician to continuously monitor and assess the evolving cognitive state of a patient with the support of a robot. Two “feedback chains” are considered as shown in Figure 1. One feedback chain assesses and (if necessary) updates the profile of the user at the end of each cycle. In this way, it is possible to keep track of the outcomes of synthesized plans, keep track of changes in the health state of a person, and adapt the next cycle accordingly. Another feedback chain concerns the continuous construction of a dataset containing information about the evolution of user profiles and the related outcomes of the cognitive interventions. This information would, in particular, allow the clinician to analyze the evolution over time of the state of a user and thus make better decisions about next steps.

2.2 An AI-Based Cognitive Architecture

To implement the process of Figure 1, an assistive robot should be able to reason about the health state/conditions of a patient and autonomously make suitable decisions. In particular, a robot needs a number of properly designed and integrated cognitive capabilities in order to contextualize assistive behaviors and effectively support both patients and clinicians. Taking inspiration from cognitive architectures (Langley et al., 2009; Kotseruba and Tsotsos, 2020), we focus on the development and integration of AI-based technologies supporting knowledge representation and reasoning as well as decision making and problem solving. The integration of knowledge representation and reasoning with automated planning has been shown to be effective for the synthesis of flexible robot behaviors. Such capabilities are particularly crucial to realize advanced (cognitive) controllers capable of (autonomously) personalizing and adapting robot behaviors to the specific features of different application scenarios, for example, service robots (Awaad et al., 2015; Tenorth and Beetz, 2015; Porzel et al., 2020), daily assistance (Umbrico et al., 2020a; Cortellessa et al., 2021), and manufacturing (Borgo et al., 2019).

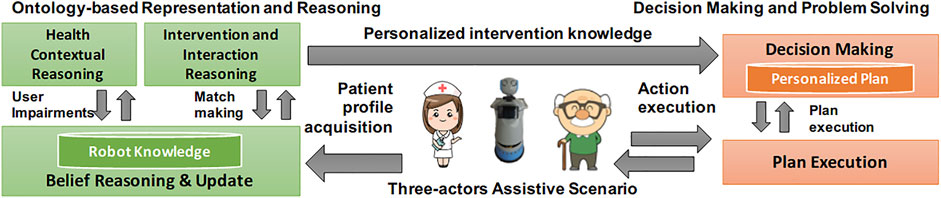

Figure 2 provides an overview of modules developed to support the considered capabilities and their integration within a “cognitive loop.” On the one hand, an ontology-based representation and reasoning module allows an assistive robot to internally represent cognitive and physical information about an assisted person and contextualize its interaction and intervention capabilities accordingly. Pursuing a foundational approach (Guarino, 1998), we defined a domain ontology based on the ICF classification to represent user profiles and reason on the health state of a person. It relies on DOLCE2 as a theoretical foundation, and was written in OWL (Antoniou and Harmelen, 2009) using Protégé3. The robot knowledge and related knowledge-reasoning modules have been developed in Java using the open-source library Apache Jena4. On the other hand, a decision making and problem solving module allows an assistive robot to synthesize and execute intervention plans, personalized according to the “recommendations” extracted from the robot knowledge. The synthesis and execution of such plans rely on PLATINUm (Umbrico et al., 2017), a timeline-based planning and execution framework deployed in assistive scenarios (Umbrico et al., 2020a) and concrete human–robot collaboration manufacturing scenarios (Pellegrinelli et al., 2017). The contribution of this work specifically focuses on the developed ontology-based representation and reasoning capabilities.

3 Ontology-Based Modeling of Health-Related Needs

A user profile should encapsulate a rich and heterogeneous set of information characterizing the general health state of a person. We proposed an ontological model of health needs based on the International Classification of Functioning, Disability and Health (ICF), defined by the World Health Organization (WHO) (World Health Organization, 2001). User profiles are thus represented on top of such ICF-based ontological models in order to provide an assistive robot with a complete characterization of patients’ needs.

3.1 Modeling Health-Related Knowledge About Patients

There are several factors that can be considered when modeling users. Different choices would support different robot behaviors and different levels/types of adaptation. Broadly speaking, the creation of a complete and effective user model is crucial to realize human–robot interactions characterized by adaptability, trust building, effective communication, and explainability (Tabrez et al., 2020). In the context of cognitive assessment with assistive robots, many works tend to focus on personality, emotions, and engagement as aspects of the user profile that the robot should take into account (Sorrentino et al., 2021). For example, Tapus et al. (2008) described a socially assistive robot therapist designed to monitor, assist, encourage, and socially interact with post-stroke users engaged in rehabilitation exercises. This work investigated the role of the robot’s personality (i.e., introvert–extrovert) in the therapy process, taking into account the personality traits of a user. Similarly, Rossi et al. (2018) investigated the influence of the user’s personality traits on the perception of the Pepper robot administering a cognitive test. Their results suggested that the usage of a robot in this context improved socialization among the participants. On the other hand, the works of Desideri et al. (2019) and Pino et al. (2020) showed that the usage of a robotic platform for cognitive stimulation engaged more participants in the therapy. In the mentioned works, the influence of each aspect was mostly investigated offline and was mostly related to the observed quality of the interaction. In addition, the robotic platform was adopted as a medium for the administration of the clinical protocol, without any indication of how the information collected by the robot could be used for planning future interventions.
The assumption behind this work is that the robot should be able to adapt its intervention by focusing not only on the quality of the interaction but also on the user’s cognitive profile.

Concerning our contribution, other works have used the ICF framework to characterize cognitive and physical conditions of users. Kostavelis et al. (2019) introduced a novel robot-based methodology for assessing users’ skills to characterize the needed level of daily assistance. Filippeschi et al. (2018) used the ICF to characterize cognitive and physical skills of users and accordingly represent the outcomes of the implemented robot-based assessment procedures. Similarly, García-Betances et al. (2016) used the ICF to represent needs and requirements of different types of users and support a user-centered design of ICT technologies. The latter work integrates an ontological model of the ICF into the cognitive architecture ACT-R (Anderson et al., 1997) to simulate the behaviors of different types of users. Nevertheless, these works present a “rigid” and static representation, as they usually do not rely on a well-structured ontological formalism to dynamically contextualize knowledge about users (i.e., user profiles) in different situations. Such works usually do not integrate online reasoning mechanisms to allow assistive robots to autonomously reason about the specific needs of a user and autonomously (or partially autonomously) decide the kind of intervention that best fits such needs. Conversely, our approach pursues a highly flexible solution implementing the cognitive capabilities needed to understand the health conditions of users and (autonomously) personalize assistance accordingly, under the supervision of a human expert.

3.2 ICF-Based Representation of User Profiles

The ICF classification aims at organizing and documenting information on functioning and disability. It pursues the interpretation of functioning as a dynamic interaction among the health conditions of a person, environmental factors, and personal factors. Each defined concept characterizes a specific aspect concerning the physical or cognitive functioning of a person. The level of functioning of each physical/cognitive aspect is represented by the following scale: 1) the value 0 denotes no impairment, 2) the value 1 denotes soft impairment, 3) the value 2 denotes medium impairment, 4) the value 3 denotes serious impairment, and 5) the value 4 denotes full impairment.

The ICF classification is organized into two parts. One part deals with functioning and disabilities, while the other deals with contextual factors. The former is further organized into the components body functions and body structures, which are the ones considered in the design of the ontological model. The body is an integral part of human functioning, and the bio-psychosocial model considers it in interaction with other components. Body functions are thus the physiological aspects of body systems, while structures are the anatomical support (e.g., sight is a function while the eye is a structure). Several ICF concepts describe the functioning of mental faculties and have been used to define user profiles. The concept OrientationFunctioning characterizes the general mental functions of knowing and ascertaining one’s relation to time, to place, to self, to objects, and to space. The concept AttentionFunctioning characterizes specific mental functions of focusing on an external stimulus or internal experience for the required period of time. The concept MemoryFunctioning characterizes specific mental functions of encoding and storing information and retrieving it as needed.

Other ICF concepts have instead been used to characterize the interaction capabilities of a person and thus identify interaction preferences determining the way a robot should interact with a person while administering exercises. The concept SeeingFunctioning models specific functions related to sensing the presence of light and sensing the form, size, shape, and color of visual stimuli. The concept HearingFunctioning models sensory functions related to sensing the presence of sounds and discriminating the location, pitch, loudness, and quality of sounds.

4 Knowledge Reasoning for Personalization

Information gathered during the profiling phase and its representation based on ICF allow an assistive robot to autonomously reason about the intervention plan that “best fits” the specific needs of a person (e.g., what kind of cognitive exercise a person needs) and the way such actions should be executed (e.g., how a robot should interact with a person to effectively administer cognitive exercises).

4.1 From Impairments to Intervention Actions

Following the ICF classification, the ontological model defines a number of concepts that represent the different FunctioningQuality types of a person. As mentioned in Section 2.2, we rely on DOLCE as foundational ontology. The ICF qualities are then modeled as subclasses of DOLCE:Quality and are associated with entities of type DOLCE:Person. The concept Profile defines a descriptive context of the overall functioning qualities of a particular person. It represents the outcome of a profiling phase and consists of a number of Measurements. Each measurement associates the evaluation of a functioning quality with a value representing the assigned ICF score (i.e., the outcome of the evaluation). Knowledge-reasoning processes analyze such measurements (i.e., a user profile) to autonomously infer the physical or cognitive impairments characterizing the functioning state of a person. Eq. 1 in the following section shows a general inference rule used to detect such impairments.
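As a rough illustration of the structure just described, the sketch below represents a Profile as a collection of Measurements and derives the impaired functioning qualities from it. It is not the ontology or the inference rule used by the system (which is implemented in OWL and Java): the class layout, the function name `infer_impairments`, and the rule "any score above 0 counts as an impairment" are assumptions made only for this example.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import Dict, List


class IcfScore(IntEnum):
    """ICF impairment scale as described in Section 3.2."""
    NO_IMPAIRMENT = 0
    SOFT = 1
    MEDIUM = 2
    SERIOUS = 3
    FULL = 4


@dataclass(frozen=True)
class Measurement:
    """Associates a functioning quality with its assigned ICF score."""
    quality: str          # e.g., "MemoryFunctioning"
    score: IcfScore


@dataclass
class Profile:
    """Outcome of a profiling phase for one person (hypothetical layout)."""
    person: str
    measurements: List[Measurement] = field(default_factory=list)


def infer_impairments(profile: Profile) -> Dict[str, IcfScore]:
    """Assumed rule: a quality is impaired when its ICF score is above 0."""
    return {
        m.quality: m.score
        for m in profile.measurements
        if m.score > IcfScore.NO_IMPAIRMENT
    }


example_profile = Profile(
    person="patient-01",  # hypothetical identifier
    measurements=[
        Measurement("MemoryFunctioning", IcfScore.SOFT),
        Measurement("AttentionFunctioning", IcfScore.NO_IMPAIRMENT),
        Measurement("HearingFunctioning", IcfScore.MEDIUM),
    ],
)
impairments = infer_impairments(example_profile)
```

In this toy profile only MemoryFunctioning and HearingFunctioning are reported as impaired, since AttentionFunctioning has score 0.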

In addition to this knowledge, the ontology characterizes properties of intervention plans. A robot can indeed be endowed with a number of “programs” implementing known tests suitable to evaluate/stimulate different functioning qualities, for example, the Free and Cued Selective Reminding Test for episodic long-term memory assessment or the Trail Making Test form A for selective attention assessment.

Taking inspiration from some works in manufacturing that define the concept of function (Borgo and Leitão, 2004; Borgo et al., 2009), we characterized intervention actions of a robot in terms of their effects on the functioning qualities of a person. The defined semantics characterizes these “programs” according to the functioning qualities they address. For example, an interactive program implementing the Free and Cued Selective Reminding Test is classified as an intervention action whose effects can “improve” (i.e., has positive effects on) the functioning quality MemoryFunctioning. The obtained ontological model fosters an integrated representation of knowledge about the health state of a person and the intervention capabilities of a robot. Knowledge processing mechanisms then use this integrated knowledge to infer a set of actions suited to address the inferred impairments of a patient (Umbrico et al., 2020b). For example, if MemoryFunctioning is inferred as soft impairment (value 1) and AttentionFunctioning as no impairment (value 0), only actions implementing the Free and Cued Selective Reminding Test (or other similar tests) are inferred as suitable for a patient.
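The example at the end of this paragraph can be reproduced with a small lookup, sketched below under simplifying assumptions. Each intervention action is tagged with the single functioning quality it is assumed to improve, and an action is selected when that quality has an ICF score above 0. The names `InterventionAction`, `CATALOG`, and `suitable_actions` are hypothetical, and the actual system derives this result through ontological reasoning rather than a list filter.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class InterventionAction:
    """A robot 'program' and the functioning quality it has positive effects on."""
    name: str
    improves: str


# Two-entry catalog used only for this illustration.
CATALOG = [
    InterventionAction("Free and Cued Selective Reminding Test", "MemoryFunctioning"),
    InterventionAction("Trail Making Test form A", "AttentionFunctioning"),
]


def suitable_actions(icf_scores: Dict[str, int],
                     catalog: List[InterventionAction] = CATALOG) -> List[str]:
    """Return the actions whose target quality is impaired (score above 0)."""
    impaired = {quality for quality, score in icf_scores.items() if score > 0}
    return [action.name for action in catalog if action.improves in impaired]


# Soft memory impairment (1), no attention impairment (0).
selected = suitable_actions({"MemoryFunctioning": 1, "AttentionFunctioning": 0})
```

With these inputs, `selected` contains only the Free and Cued Selective Reminding Test, matching the case described in the text.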

4.2 Reasoning on Interaction Preferences

A Profile encapsulates a rich set of information that can be analyzed to infer the interaction capabilities of a person and define robot interaction preferences accordingly. If the analysis of a profile infers a medium impairment of the quality HearingFunctioning, then the interactions between the patient and the robot should rely mainly on visual and textual messages rather than voice and audio. In cases where audio interactions cannot be avoided (e.g., recorded audio instructions and recommendations or video conferences), it would be possible to properly set the sound level of the robot in order to help the assisted person as much as possible.

Knowledge-reasoning mechanisms thus also infer how intervention plans should be carried out by the robot in order to effectively interact with the considered patient. In this regard, we have defined four interaction parameters characterizing the execution of robot actions: 1) sound level, 2) subtitle, 3) font size, and 4) explanation. The sound level is an enumeration parameter with values {none, regular, high} specifying the volume of audio communications and messages from the robot to the patient. Patients with soft or medium impairment of HearingFunctioning would need a high sound level, while audio would be completely excluded for persons with serious impairments in order to use different interaction modalities. The subtitle is an enumeration parameter with values {none, yes, no} specifying the need to support audio messages through text. Patients with no, soft, or medium impairment of SeeingFunctioning and medium or serious impairment of HearingFunctioning would need subtitles to better understand instructions and messages from the robot.

The font size is a binary parameter with values {regular, large} specifying the size of the font of text messages and subtitles, if used. Patients with medium impairment of SeeingFunctioning would need large fonts in text messages in order to better read their content. Finally, explanation is a binary parameter (i.e., yes or no) specifying the need to explain an exercise to a patient before its execution. Such instructions would be particularly needed for patients with impaired MemoryFunctioning or OrientationFunctioning. Clearly, the way such explanations are carried out complies with the interaction parameters described earlier.
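The four parameters and the conditions stated above can be summarized as a small decision function. The following is only a sketch: the function name, the dictionary layout, and the string values are chosen for illustration. Where the text leaves a case open, the code makes an explicit assumption: full hearing impairment (value 4) is treated like serious impairment for the sound level, only medium SeeingFunctioning impairment triggers large fonts, and the third subtitle value ("none") is not modeled because the text does not say when it applies.

```python
from typing import Dict

# ICF scale values from Section 3.2.
NO_IMPAIRMENT, SOFT, MEDIUM, SERIOUS, FULL = range(5)


def interaction_parameters(profile: Dict[str, int]) -> Dict[str, str]:
    """Map ICF scores (0..4) to interaction parameters.

    Qualities missing from the profile are assumed to be unimpaired (0).
    """
    hearing = profile.get("HearingFunctioning", NO_IMPAIRMENT)
    seeing = profile.get("SeeingFunctioning", NO_IMPAIRMENT)
    memory = profile.get("MemoryFunctioning", NO_IMPAIRMENT)
    orientation = profile.get("OrientationFunctioning", NO_IMPAIRMENT)

    # Sound level: high for soft/medium hearing impairment, no audio from
    # serious impairment upward (treating FULL like SERIOUS is an assumption).
    if hearing in (SOFT, MEDIUM):
        sound_level = "high"
    elif hearing >= SERIOUS:
        sound_level = "none"
    else:
        sound_level = "regular"

    # Subtitles: sight at most medium impaired AND hearing medium or serious.
    needs_subtitle = seeing <= MEDIUM and hearing in (MEDIUM, SERIOUS)

    # Font size: large only for medium sight impairment, as stated in the text.
    font_size = "large" if seeing == MEDIUM else "regular"

    # Explanation: needed when memory or orientation is impaired.
    needs_explanation = memory > NO_IMPAIRMENT or orientation > NO_IMPAIRMENT

    return {
        "sound_level": sound_level,
        "subtitle": "yes" if needs_subtitle else "no",
        "font_size": font_size,
        "explanation": "yes" if needs_explanation else "no",
    }


params = interaction_parameters({"HearingFunctioning": MEDIUM, "MemoryFunctioning": SOFT})
```

For a patient with medium hearing impairment and soft memory impairment, this sketch yields a high sound level, subtitles, regular fonts, and an explanation before each exercise.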

5 Feasibility Assessment

To demonstrate the feasibility of our cycle-based approach, we considered ASTRO, an assistive robot equipped with several sensors (i.e., laser, RGB-D camera, microphones, speakers, and force sensors) and two tablets (Fiorini et al., 2020b) (see Figure 3). We deployed on ASTRO the architecture proposed in Section 2, augmenting its capabilities with the cognitive functionalities presented in Section 2.2 in order to implement the human-in-the-loop cycle proposed in Section 2.1. Then, we demonstrated the feasibility of such robotic functionalities to support a clinician while responding to the specific needs of some older adult users. In particular, eight elderly persons, 3 males and 5 females (avg. age 82.25 years old, range 72–91 years old), were enrolled for this study. All the recruited subjects live in a residential facility in the same geographical region5.

The ASTRO robot equipped with the new proposed functionalities was tested to demonstrate its ability to support a clinician in realizing the functionalities to 1) represent user profiles with respect to ICF (who you are), 2) synthesize personalized intervention plans (what you need) considering a set of 10 cognitive tests typically used to further investigate and evaluate the cognitive state of a person, and 3) select the appropriate interaction modalities of the robot (how you like it) among the ones supported by the robotic platform. An off-line analysis and discussion of the results with the clinicians are reported at the end of the section to emphasize the importance of the human-in-the-loop approach (see again Figure 1).

5.1 Demonstrating User Profiling and Profile Representation

As shown in Figure 1, user profiling is necessary at the beginning of each intervention cycle to set/update robot knowledge about the health state of an assisted person. The outcome of this step is a profile describing the cognitive state of a person with respect to the developed ontological model. A correct acquisition of this information is crucial for the efficacy of the synthesized intervention plan. The robot indeed relies on the user profile to infer the set of cognitive tests (i.e., stimuli) that are suitable for the considered user and then decide on a personalized intervention plan (i.e., the further assessment).

The user profile is generated according to the scoring obtained through the administration of the Mini-Mental State Examination (MMSE). The MMSE is the most widely used screening test for cognitive status, and it is adopted worldwide by clinicians to briefly assess persons with suspected dementia. It encompasses 21 items which cover tests of orientation, recall, registration, naming, comprehension, calculation and attention, writing, repetition, drawing, and reading (Folstein et al., 1983). The cognitive status level is obtained by summing the scores of the individual items and normalizing the result based on the educational level and the age of the patient. Decreasing scores over repeated tests highlight deterioration in cognition. In particular, the participants were asked to undergo the MMSE administered by ASTRO. The assessment was performed with a Wizard-of-Oz (WOz) method. A clinician guides the robot through the examination phases using a dedicated web interface. The patient is not aware of the presence of the clinician and interacts directly with the robot. The web interface allows the clinician to select the appropriate MMSE tests to perform. The tests require different interaction modalities between the user and the robot, for example, asking questions to the user or showing images to the user through the front tablet. The clinician can ask the robot to repeat the test if necessary.

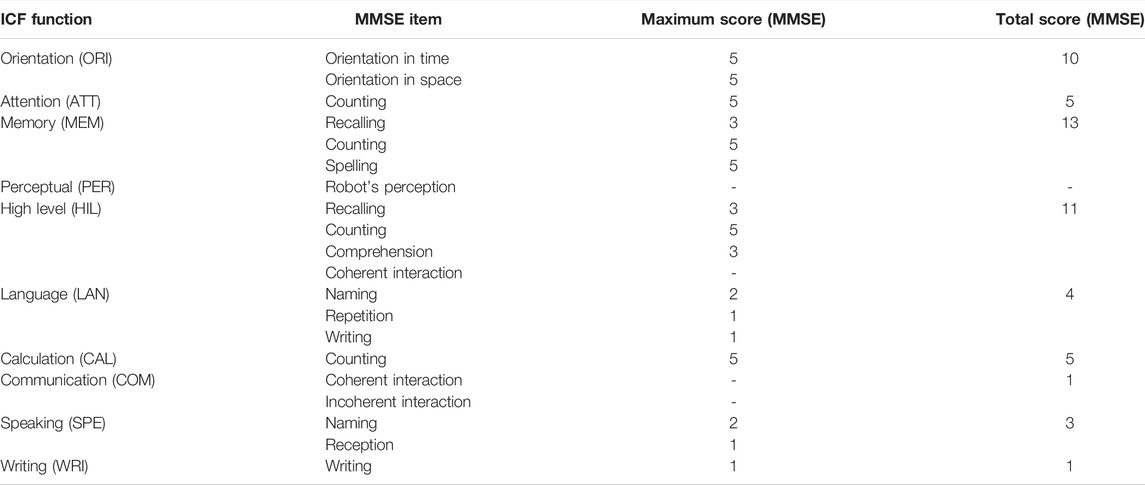

The caregiver stores the results of the assessment into the robot knowledge base through the same dedicated technical interface. The overall score of the MMSE is automatically processed by the robot at the end of the session by parsing the annotated answers. The robot automatically correlates MMSE items with the (relevant) ICF functions that it uses to represent the cognitive state of a patient. According to this correlation, the robot then builds a user profile by mapping the received MMSE scores to ICF scores. Table 1 shows how each ICF function can be described by one or multiple MMSE categories. This is an original mapping performed by a clinician between MMSE scores and ICF for generating a user profile representation. For instance, the ICF concept MemoryFunctioning is defined as specific mental functions of registering and storing information and retrieving it as needed. This ICF function can be described by the MMSE items which cover the recalling, counting, and spelling tests. Based on the same similarity approach, the overall mapping shown in Table 1 is obtained. In order to convert the MMSE scoring of each category into the measured level of impairment of the ICF profiling, a proportional method is used. The current MMSE score (i.e., the number of tasks correctly performed) in one category is compared to the maximum MMSE score achievable in the same category and then converted into the ICF scoring. This mapping is based on an inverted scale of values. For example, if the patient correctly accomplished the requested task of MMSE items (high values of MMSE), he/she gets a lower ICF score (no impairment). If the patient partially accomplished the task, an intermediate value of the ICF score is assigned (mild impairment). If the patient did not accomplish the task, a higher value of ICF score is attributed (hard impairment).
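The proportional, inverted conversion described above can be sketched as follows. The text does not give the exact formula or rounding used, so the linear mapping onto the 0–4 ICF scale with round-half-up is an assumption made purely for illustration, as is the function name `mmse_to_icf`.

```python
def mmse_to_icf(score: int, max_score: int) -> int:
    """Convert an MMSE category score into an ICF impairment level (0..4).

    Assumed rule: the fraction of missed tasks is scaled linearly onto the
    ICF range, so a full MMSE score gives 0 (no impairment) and a zero MMSE
    score gives 4 (full impairment). Integer arithmetic implements
    round-half-up without floating-point surprises.
    """
    if max_score <= 0:
        raise ValueError("max_score must be positive")
    if not 0 <= score <= max_score:
        raise ValueError("score must lie between 0 and max_score")
    missed = max_score - score
    return (2 * 4 * missed + max_score) // (2 * max_score)


# Hypothetical category with three tasks.
levels = [mmse_to_icf(s, 3) for s in (3, 2, 1, 0)]
```

Under this assumption, a category with three tasks maps the scores 3, 2, 1, and 0 to ICF levels 0, 1, 3, and 4, respectively, which reflects the inverted scale: the more tasks accomplished, the lower the impairment score.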

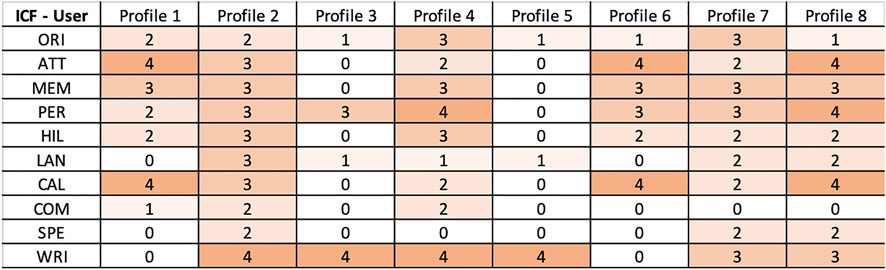

The video and audio of the administration sessions were recorded by the robot’s frontal camera. The recorded videos were analyzed off-line by the clinician to assess the quality of the interaction with the robot (e.g., the number of the robot’s repetitions) and to extract additional evidence of cognitive decline (e.g., coherent and incoherent interaction) (Sorrentino et al., 2021). Table 1 reports the complete list of extracted parameters. These data were then merged with the individual MMSE scores returned by the robot and manually mapped into ICF scores, following the proposed mapping. Figure 4 reports the final results for each user. The data were then analyzed with the proposed framework and discussed with the clinician to corroborate the analysis. The clinician judged the profiles to be an adequate representation of the users and validated all of them.

5.2 Demonstrating Intervention Personalization

The subsequent actions consist of selecting a number of cognitive tests to administer, according to the impaired functioning qualities inferred for a patient. The assistive capabilities of such actions depend on the features of the associated cognitive tests and thus on the functioning qualities they stimulate. A number of these tests are selected for administration according to the inferred impairments and the recommendations generated by the developed knowledge processing mechanisms.

We have considered a total of 10 cognitive tests that are typically used to further investigate and evaluate the cognitive state of a person. The Free and Cued Selective Reminding Test, the Rey’s Figure Test, the Forward Digit Test, and the Backward Digit Span Test evaluate and stimulate the functioning quality MemoryFunctioning. The Trail Making Test form A and the Trail Making Test form B evaluate and stimulate the functioning quality AttentionFunctioning. The Stroop Test evaluates and stimulates the functioning quality OrientationFunctioning. The Boston Naming Test 40-item, the Animals Test, and the Denomination Test evaluate and stimulate the functioning quality LanguageFunctioning.
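The association between tests and functioning qualities listed above can be captured in a simple lookup structure. The Python sketch below is illustrative only: the names `TESTS_BY_FUNCTION` and `candidate_tests` are hypothetical, the rule "any non-zero ICF score makes a function relevant" is an assumption, and a plain dictionary stands in for the knowledge base used by the system.

```python
# Tests grouped by the functioning quality they evaluate and stimulate.
TESTS_BY_FUNCTION = {
    "MemoryFunctioning": [
        "Free and Cued Selective Reminding Test",
        "Rey's Figure Test",
        "Forward Digit Test",
        "Backward Digit Span Test",
    ],
    "AttentionFunctioning": [
        "Trail Making Test form A",
        "Trail Making Test form B",
    ],
    "OrientationFunctioning": ["Stroop Test"],
    "LanguageFunctioning": [
        "Boston Naming Test 40-item",
        "Animals Test",
        "Denomination Test",
    ],
}


def candidate_tests(icf_profile):
    """Return the tests addressing every function with a non-zero ICF score."""
    tests = []
    for function, score in icf_profile.items():
        if score > 0:  # assumed rule: any impairment makes the function relevant
            tests.extend(TESTS_BY_FUNCTION.get(function, []))
    return tests


# Hypothetical profile with an attention impairment only.
example = candidate_tests({"MemoryFunctioning": 0, "AttentionFunctioning": 2})
```

This only selects candidates; it does not rank them, which the system does with dedicated knowledge processing mechanisms.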

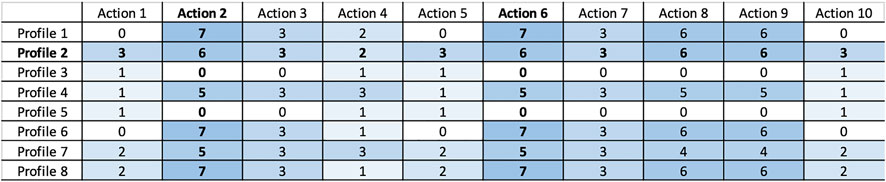

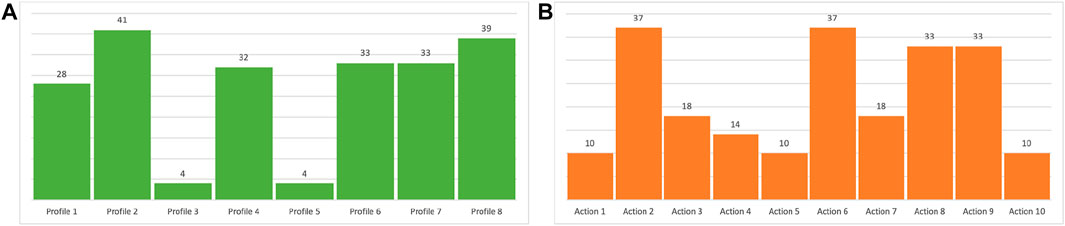

The experiments have been performed to demonstrate the capability of combining this knowledge with the ICF scores of Figure 4 (i.e., the user profiles) to determine actions that fit the cognitive status of the profiled users and thus achieve personalization. Figure 5 shows the results of the experiments as a heat-map with the ranking values of the known cognitive tests (i.e., actions) for each user profile. Each value expresses the significance of a specific test/action considering the cognitive impairments inferred for a particular user profile. The higher the computed ranking value, the more relevant the action is for the corresponding user. Figure 6 then shows aggregated numbers, pointing out the total level of impairment of the considered users in Figure 6A and the inferred impact of each action in Figure 6B. These figures show the most compromised users (i.e., the users with the highest level of impairment) and the most useful actions (i.e., the actions that address the highest number of impairments and the most significant ones) according to the robot knowledge.

FIGURE 5. Ranking intervention actions for different profiles. Action enumeration: (1) Denomination Test; (2) Forward Digit Test; (3) Free and Cued Selective Reminding Test; (4) Stroop Test; (5) Animals Test; (6) Backward Digit Span Test; (7) Rey’s Figure Test; (8) Trail Making Test form B; (9) Trail Making Test form A; (10) Boston Naming Test 40-item.

FIGURE 6. Charts show (A) the inferred (cumulative) impairment state of patients and (B) the inferred impact of known actions on patients.

The higher the ranking value, the higher the “seriousness” of the associated impairments and consequently the significance of a test with respect to the cognitive state of a user. An example is profile 8, whose cognitive state is characterized by several impairments, as can be seen from the ICF scores resulting from the outcome of the MMSE in Figure 4. Consequently, as shown in Figure 5, many of the considered tests have been computed as relevant to the cognitive state of this patient. Higher values have been computed for tests addressing impaired qualities, for example, the Forward Digit Test addressing MemoryFunctioning (medium impairment in Figure 4) or the Trail Making Test form A addressing AttentionFunctioning (serious impairment in Figure 4).

Conversely, low ranking values have been computed for less serious impairments. An example is user profile 5, whose cognitive state is characterized by a few soft impairments (see again the ICF scores resulting from the outcome of the MMSE in Figure 4). In this case, a “minimum” ranking value has been computed only for the Denomination Test, the Stroop Test, and the Boston Naming Test 40-item, which address the softly impaired functioning qualities OrientationFunctioning and LanguageFunctioning. The outcome of the knowledge-based reasoning mechanisms has been assessed by an expert clinician. The results of this validation are reported in Section 5.4.

5.3 Demonstrating Interaction Personalization

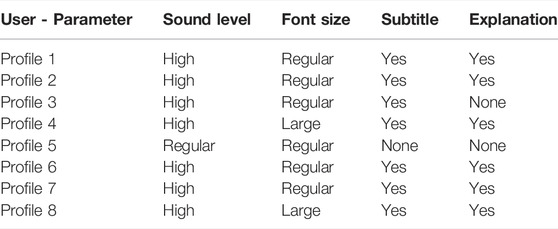

Once a personalized set of interventions has been defined, user profiles are further evaluated to decide how such actions should be performed (i.e., interaction preferences). This reasoning step relies on a number of inference rules that link ICF scores to the interaction preferences introduced in Section 4.2. We have considered ICF scores concerning PerceptualFunctioning (PER) and MemoryFunctioning (MEM). MEM is linked to the interaction preference explanation as described in Section 4.2. PER is linked to the interaction preferences sound level, font size, and subtitles. As shown in Table 1, a clinician assigns a score to PER by evaluating the number of the robot’s repetitions. Given the lack of a precise evaluation of the hearing and seeing capabilities of users, we have used PER scores to implicitly measure the functioning qualities HearingFunctioning and SeeingFunctioning and infer the related interaction preferences as described in Section 4.2.

Table 2 shows the interaction preferences inferred for the considered users. The results show the capability of the developed knowledge-reasoning mechanisms to contextualize the execution of intervention actions (and thus robot behaviors) by defining a number of coherent interaction parameters. Users with soft or no impairment conditions of PerceptualFunctioning would not require particular interaction preferences for the execution of the associated intervention actions.

An example is profile 5, which has no impairment of PerceptualFunctioning (PER = 0 in Figure 4) and is associated with the interaction parameters ⟨ regular, regular, none, and none ⟩ in Table 2. Conversely, users with medium or serious impairment conditions of PerceptualFunctioning would require a specific configuration of robot behaviors for the execution of the associated intervention actions. Examples are profile 4 and profile 8, which have serious impairments of PerceptualFunctioning (PER = 4 in Figure 4) and are both associated with the interaction parameters ⟨ high, large, yes, and yes ⟩ in Table 2. These experiments show the feasibility of the developed knowledge-reasoning mechanisms in personalizing and adapting robot assistive behaviors to the health needs of different patients.
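The kind of inference rule that links ICF scores to interaction parameters can be sketched in Python. The tuple order (sound level, font size, subtitles, explanation) is assumed from the order in which the preferences are named in this section. The cut-off of 2 separating soft from medium or serious impairment, and the names `InteractionPreferences` and `infer_preferences`, are assumptions made for illustration; the actual rules are those defined in Section 4.2.

```python
from typing import NamedTuple


class InteractionPreferences(NamedTuple):
    sound_level: str
    font_size: str
    subtitles: str
    explanation: str


# Assumed cut-off: ICF scores at or above this value count as medium or serious.
MEDIUM_IMPAIRMENT = 2


def infer_preferences(per: int, mem: int) -> InteractionPreferences:
    """Derive interaction parameters from PER and MEM ICF scores (0 to 4)."""
    # PER drives the perceptual parameters; MEM drives the explanation flag.
    perceptual_support = per >= MEDIUM_IMPAIRMENT
    memory_support = mem >= MEDIUM_IMPAIRMENT
    return InteractionPreferences(
        sound_level="high" if perceptual_support else "regular",
        font_size="large" if perceptual_support else "regular",
        subtitles="yes" if perceptual_support else "none",
        explanation="yes" if memory_support else "none",
    )
```

With PER = 0 and an unimpaired MEM the sketch returns the ⟨ regular, regular, none, none ⟩ configuration, and with PER = 4 and an impaired MEM it returns ⟨ high, large, yes, yes ⟩. The MEM values used here are hypothetical.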

It is worth noting that the use of domain-dependent rules like the ones defined for PER would not limit the generality of the developed knowledge-reasoning approach. Rather, this situation shows how developed reasoning behaviors can be easily tailored to specific needs and features of different assistive scenarios.

5.4 Off-Line Result Discussion With the Clinician

The system identifies the subjects with a higher level of impairment, who need more attention during further comprehensive neuropsychological testing. Therefore, based on the stored profiles, the system suggests a broader set of further cognitive tests for the users with a higher average of cognitive issues, to provide more informative support for the clinician. We refer in particular to subjects 1, 2, 4, 6, 7, and 8, who report raw MMSE scores of 16, 17, 12, 18, 15, and 17 out of 30, respectively. For example, subjects 1, 6, and 8 were strongly suggested to undergo a similar set of tests. In particular, those subjects, who showed a mild to medium cognitive impairment, were asked to undergo tests related to executive functions and working memory (forward and backward digit span, and Trail Making Test forms A and B). Such cognitive impairments represent a crucial risk factor for the transition from mild cognitive impairment to a full-blown dementia syndrome. In addition, subject 8, who showed a more severe language-related impairment, was also suggested to undergo the Denomination Test and the Boston Naming Test, both of which address the language domain. These tests were not suggested for the other two subjects. Moreover, the memory function, tested by the Free and Cued Selective Reminding Test, was suggested for further study in almost all the subjects, except for the non-impaired subjects 3 and 5.

Interestingly, the system reports less need for further test administration for subjects 4 and 7, the two subjects with the worst cognitive performance. This is an expected outcome, as the implemented knowledge processing mechanisms assign ranks to intervention actions in a “non-linear way.” In particular, lower ranks are assigned to tests addressing functions that are too compromised, as these are supposed to be managed separately. This may seem counter-intuitive, but it reflects common clinical routine: when a subject’s condition is too compromised, the clinical picture is already clear and further analysis is fruitless. Clinical practice is therefore a balance between the need to obtain an explicit picture of the case and the economy of time and resources. On the other hand, for the subjects with a non-impaired neuro-cognitive profile (i.e., subjects 3 and 5, with scores of 27 and 29 out of 30), the system proposed fewer tests as informative. In conclusion, these results are aligned with standard clinical practice; thus, the assistive robot could represent a useful tool for refining the assessment process.
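One way to obtain such a non-linear ranking is to weight each ICF score so that relevance grows with impairment up to a point and then drops for functions that are too compromised. The Python sketch below only illustrates that idea: the weight table `RELEVANCE_BY_SCORE`, the function `rank_tests`, and the example profile are assumptions, not the weights or rules used by the system.

```python
# Assumed relevance per ICF score (0 to 4): it rises with impairment and then
# drops for the most compromised functions, which are managed separately.
RELEVANCE_BY_SCORE = {0: 0.0, 1: 0.25, 2: 0.75, 3: 1.0, 4: 0.5}


def rank_tests(icf_profile, functions_by_test):
    """Rank each test by the summed relevance of the functions it addresses."""
    ranking = {}
    for test, functions in functions_by_test.items():
        ranking[test] = sum(
            RELEVANCE_BY_SCORE[icf_profile.get(function, 0)]
            for function in functions
        )
    # Most relevant tests first.
    return dict(sorted(ranking.items(), key=lambda item: item[1], reverse=True))


# Hypothetical data: three tests and a profile with one fully compromised function.
functions_by_test = {
    "Forward Digit Test": ["MemoryFunctioning"],
    "Trail Making Test form A": ["AttentionFunctioning"],
    "Stroop Test": ["OrientationFunctioning"],
}
profile = {"MemoryFunctioning": 2, "AttentionFunctioning": 4, "OrientationFunctioning": 0}
ranking = rank_tests(profile, functions_by_test)
```

In this hypothetical profile the test addressing the fully compromised attention function is ranked below the test addressing the moderately impaired memory function, which mirrors the behavior discussed with the clinician.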

6 Conclusion and Future Works

This study presents an original cyclic procedure to support healthcare assistance with robots endowed with a novel integration of AI-based technologies supporting knowledge representation and reasoning as well as decision making and problem solving, two crucial capabilities to achieve personalization and adaptation of assistive behaviors. A human-in-the-loop approach is pursued to define a process in which a clinician is involved in the decisional process, and the interleaving of cognitive state evaluation and test administration allows the clinician to maintain control over the decisions made by a robot and its resulting assistive behaviors. The approach was demonstrated to be feasible and effective in a realistic scenario with eight participants. A clinician supervised the procedure, evaluating the robot’s behavior.

This study presented a first concrete result of a research initiative whose long-term goal is to foster the development of intelligent assistive robots capable of supporting healthcare professionals in dealing with a larger number of patients. Despite the small sample size, the results suggest how the robot’s interaction parameters can be fine-tuned to the residual abilities and the cognitive profile of the person with whom it is interacting. In this sense, a better understanding of patients’ social, cognitive, and biological aspects will allow assistive robots to represent such information in their cognitive system and use it to autonomously take more initiative to support both clinicians and patients. According to the feedback obtained during the off-line discussion with the clinicians, the decision-making module can suggest/schedule an appropriate personalized care plan. This finding suggests that the proposed ontologies based on ICF scores can be generalized and applied to social robots to improve and personalize the human–robot interaction as well as to provide a care plan to the caregiver.

Future work will address two main aspects to overcome current limitations. First, from a technical perspective, it will investigate user assessment and profile-building functions (e.g., via machine learning) to better identify user needs, and it will extend the set of assistive services supported by the cognitive architecture to enlarge the application opportunities. Second, from the user perspective, future work should involve a larger cohort of participants so as to perform a systematic evaluation of the concrete effectiveness, usability, and acceptance of the system in real contexts.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics Statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

All authors contributed to the study conception and design. Material preparation, data collection, and analysis were performed by AS, GM, and AU. The first draft of the manuscript was written by AU and AO and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Funding

This research is partially supported by Italian M.I.U.R. under project "SI-ROBOTICS: SocIal ROBOTICS for active and healthy ageing" (PON Ricerca e Innovazione 2014-2020 - G.A. ARS01_01120) and by Regione Toscana under project "Cloudia" (POR FESR 2014-2020). CNR authors are also supported by the EU project "TAILOR: Foundations of Trustworthy AI - Integrating Learning, Optimisation and Reasoning" (G.A. 952215).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1 https://www.who.int/standards/classifications

2 http://www.loa.istc.cnr.it/dolce/overview.html

4 https://jena.apache.org/index.html

5 All procedures were in accordance with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

References

Anderson, J. R., Matessa, M., and Lebiere, C. (1997). ACT-R: A Theory of Higher Level Cognition and its Relation to Visual Attention. Hum.-Comput. Interact. doi:10.1207/s15327051hci1204_5

Andriella, A., Torras, C., Abdelnour, C., and Alenyà, G. (2022). Introducing CARESSER: A Framework for In Situ Learning Robot Social Assistance from Expert Knowledge and Demonstrations. User Model. User-Adapted Interact. doi:10.1007/s11257-021-09316-5

Angelini, L., Mugellini, E., Khaled, O. A., Röcke, C., Guye, S., Porcelli, S., et al. (2019). “The Nestore E-Coach: Accompanying Older Adults through a Personalized Pathway to Wellbeing,” in PETRA ’19: Proceedings of the 12th ACM International Conference on PErvasive Technologies Related to Assistive Environments (New York, NY, USA: Association for Computing Machinery), 620–628.

Antoniou, G., and Harmelen, F. v. (2009). Web Ontology Language: OWL. Berlin, Heidelberg: Springer Berlin Heidelberg, 91–110. doi:10.1007/978-3-540-92673-3_4

Awaad, I., Kraetzschmar, G. K., and Hertzberg, J. (2015). The Role of Functional Affordances in Socializing Robots. Int J Soc Robotics 7, 421–438. doi:10.1007/s12369-015-0281-3

Bertolini, A., Salvini, P., Pagliai, T., Morachioli, A., Acerbi, G., Trieste, L., et al. (2016). On Robots and Insurance. Int J Soc Robotics 8, 381–391. doi:10.1007/s12369-016-0345-z

Borgo, S., Carrara, M., Garbacz, P., and Vermaas, P. (2009). A Formal Ontological Perspective on the Behaviors and Functions of Technical Artifacts. Aiedam 23, 3–21. doi:10.1017/s0890060409000079

Borgo, S., Cesta, A., Orlandini, A., and Umbrico, A. (2019). Knowledge-based Adaptive Agents for Manufacturing Domains. Eng. Comput. 35, 755–779. doi:10.1007/s00366-018-0630-6

Borgo, S., and Leitão, P. (2004). The Role of Foundational Ontologies in Manufacturing Domain Applications. Berlin, Heidelberg: Springer Berlin Heidelberg, 670–688. doi:10.1007/978-3-540-30468-5_43

Bruno, B., Recchiuto, C. T., Papadopoulos, I., Saffiotti, A., Koulouglioti, C., Menicatti, R., et al. (2019). Knowledge Representation for Culturally Competent Personal Robots: Requirements, Design Principles, Implementation, and Assessment. Int J Soc Robotics 11, 515–538. doi:10.1007/s12369-019-00519-w

Casey, D., Felzmann, H., Pegman, G., Kouroupetroglou, C., Murphy, K., Koumpis, A., et al. (2016). “What People with Dementia Want: Designing MARIO an Acceptable Robot Companion,” in Computers Helping People with Special Needs. Editors K. Miesenberger, C. Bühler, and P. Penaz (Berlin, Germany: Springer), 318–325. doi:10.1007/978-3-319-41264-1_44

Cavallo, F., Esposito, R., Limosani, R., Manzi, A., Bevilacqua, R., Felici, E., et al. (2018). Acceptance of Robot-Era System: Results of Robotic Services in Smart Environments with Older Adults. J. Med. Int. Res.

Cesta, A., Cortellessa, G., Fracasso, F., Orlandini, A., and Turno, M. (2018). User Needs and Preferences on AAL Systems that Support Older Adults and Their Carers. Ais 10, 49–70. doi:10.3233/ais-170471

Coradeschi, S., Cesta, A., Cortellessa, G., Coraci, L., Gonzalez, J., Karlsson, L., et al. (2013). “GiraffPlus: Combining Social Interaction and Long Term Monitoring for Promoting Independent Living,” in The 6th International Conference on Human System Interactions (HSI), 578–585. doi:10.1109/hsi.2013.6577883

Cortellessa, G., De Benedictis, R. D., Fracasso, F., Orlandini, A., Umbrico, A., and Cesta, A. (2021). AI and Robotics to Help Older Adults: Revisiting Projects in Search of Lessons Learned. Paladyn, J. Behav. Robotics 12, 356–378. doi:10.1515/pjbr-2021-0025

Desideri, L., Ottaviani, C., Malavasi, M., di Marzio, R., and Bonifacci, P. (2019). Emotional Processes in Human-Robot Interaction during Brief Cognitive Testing. Comput. Hum. Behav. 90, 331–342. doi:10.1016/j.chb.2018.08.013

Di Nuovo, A., Varrasi, S., Lucas, A., Conti, D., McNamara, J., and Soranzo, A. (2019). Assessment of Cognitive Skills via Human-Robot Interaction and Cloud Computing. J. Bionic Eng. 16, 526–539. doi:10.1007/s42235-019-0043-2

D’Onofrio, G., Sancarlo, D., Ricciardi, F., Ruan, Q., Yu, Z., Giuliani, F., et al. (2016). Cognitive Stimulation and Information Communication Technologies (ICT) in Alzheimer’s Diseases: A Systematic Review. Int. J. Med. Biol. Front. 22, 97.

Filippeschi, A., Peppoloni, L., Kostavelis, I., Gerlowska, J., Ruffaldi, E., Giakoumis, D., et al. (2018). “Towards Skills Evaluation of Elderly for Human-Robot Interaction,” in 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), 886–892. doi:10.1109/roman.2018.8525843

Fiorini, L., Mancioppi, G., Semeraro, F., Fujita, H., and Cavallo, F. (2020). Unsupervised Emotional State Classification through Physiological Parameters for Social Robotics Applications. Knowledge-Based Syst. 190, 105217. doi:10.1016/j.knosys.2019.105217

Fiorini, L., Tabeau, K., D’Onofrio, G., Coviello, L., De Mul, M., Sancarlo, D., et al. (2020). Co-creation of an Assistive Robot for Independent Living: Lessons Learned on Robot Design. Int. J. Interact. Des. Manuf. 14, 491–502. doi:10.1007/s12008-019-00641-z

Fiorini, S. R., Bermejo-Alonso, J., Goncalves, P., Pignaton de Freitas, E., Olivares Alarcos, A., Olszewska, J. I., et al. (2017). A Suite of Ontologies for Robotics and Automation [Industrial Activities]. IEEE Robot. Autom. Mag. 24, 8–11. doi:10.1109/mra.2016.2645444

Folstein, M. F., Robins, L. N., and Helzer, J. E. (1983). The Mini-Mental State Examination. Arch. Gen. Psychiatry 40, 812. doi:10.1001/archpsyc.1983.01790060110016

García-Betances, R. I., Cabrera-Umpiérrez, M. F., Ottaviano, M., Pastorino, M., and Arredondo, M. T. (2016). Parametric Cognitive Modeling of Information and Computer Technology Usage by People with Aging- and Disability-Derived Functional Impairments. Sensors 16. doi:10.3390/s16020266

Guarino, N. (1998). “Formal Ontology in Information Systems,” in Proceedings of the first international conference (FOIS’98), Trento, Italy, June 6-8 (Amsterdam, Netherlands: IOS press). vol. 46.

Kostavelis, I., Vasileiadis, M., Skartados, E., Kargakos, A., Giakoumis, D., Bouganis, C.-S., et al. (2019). Understanding of Human Behavior with a Robotic Agent through Daily Activity Analysis. Int J Soc Robotics 11, 437–462. doi:10.1007/s12369-019-00513-2

Kotseruba, I., and Tsotsos, J. K. (2020). 40 Years of Cognitive Architectures: Core Cognitive Abilities and Practical Applications. Artif. Intell. Rev. 53, 17–94. doi:10.1007/s10462-018-9646-y

Langley, P., Laird, J. E., and Rogers, S. (2009). Cognitive Architectures: Research Issues and Challenges. Cognitive Syst. Res. 10, 141–160. doi:10.1016/j.cogsys.2006.07.004

Lemaignan, S., Ros, R., Mosenlechner, L., Alami, R., and Beetz, M. (2010). “ORO, a Knowledge Management Platform for Cognitive Architectures in Robotics,” in Intelligent Robots and Systems (IROS), 2010 IEEE/RSJ International Conference on, 3548–3553. doi:10.1109/iros.2010.5649547

Lemaignan, S., Warnier, M., Sisbot, E. A., Clodic, A., and Alami, R. (2017). Artificial Cognition for Social Human-Robot Interaction: An Implementation. Artif. Intell. 247, 45–69. doi:10.1016/j.artint.2016.07.002

LeRouge, C., Ma, J., Sneha, S., and Tolle, K. (2013). User Profiles and Personas in the Design and Development of Consumer Health Technologies. Int. J. Med. Inf. 82, e251–e268. doi:10.1016/j.ijmedinf.2011.03.006

Lieto, A., Bhatt, M., Oltramari, A., and Vernon, D. (2018). The Role of Cognitive Architectures in General Artificial Intelligence. Cognitive Syst. Res. 48, 1–3. doi:10.1016/j.cogsys.2017.08.003

Liu, J. X., Goryakin, Y., Maeda, A., Bruckner, T., and Scheffler, R. (2017). Global Health Workforce Labor Market Projections for 2030. Hum. Resour. Health 15, 11. doi:10.1186/s12960-017-0187-2

Mancioppi, G., Fiorini, L., Timpano Sportiello, M., and Cavallo, F. (2019). Novel Technological Solutions for Assessment, Treatment, and Assistance in Mild Cognitive Impairment. Front. Neuroinform. 13, 58. doi:10.3389/fninf.2019.00058

Moro, C., Nejat, G., and Mihailidis, A. (2018). Learning and Personalizing Socially Assistive Robot Behaviors to Aid with Activities of Daily Living. ACM Trans. Human-Robot Interact. doi:10.1145/3277903

Murphy, R. R., Gandudi, V. B., Amin, T., Clendenin, A., and Moats, J. (2022). An Analysis of International Use of Robots for Covid-19. Robotics Aut. Syst. 148, 103922. doi:10.1016/j.robot.2021.103922

Nocentini, O., Fiorini, L., Acerbi, G., Sorrentino, A., Mancioppi, G., and Cavallo, F. (2019). A Survey of Behavioral Models for Social Robots. Robotics 8, 54. doi:10.3390/robotics8030054

Pellegrinelli, S., Orlandini, A., Pedrocchi, N., Umbrico, A., and Tolio, T. (2017). Motion Planning and Scheduling for Human and Industrial-Robot Collaboration. CIRP Ann. 66, 1–4. doi:10.1016/j.cirp.2017.04.095

Pino, O., Palestra, G., Trevino, R., and De Carolis, B. (2020). The Humanoid Robot Nao as Trainer in a Memory Program for Elderly People with Mild Cognitive Impairment. Int J Soc Robotics 12, 21–33. doi:10.1007/s12369-019-00533-y

Porzel, R., Pomarlan, M., Beßler, D., Malaka, R., Beetz, M., and Bateman, J. (2020). “A Formal Model of Affordances for Flexible Robotic Task Execution,” in ECAI 2020 - 24th European Conference on Artificial Intelligence, 629–636.

Rossi, S., Ferland, F., and Tapus, A. (2017). User Profiling and Behavioral Adaptation for HRI: A Survey. Pattern Recognit. Lett. 99, 3–12. doi:10.1016/j.patrec.2017.06.002

Rossi, S., Santangelo, G., Staffa, M., Varrasi, S., Conti, D., and Di Nuovo, A. (2018). “Psychometric Evaluation Supported by a Social Robot: Personality Factors and Technology Acceptance,” in 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN) (Nanjing, China: IEEE), 802–807. doi:10.1109/roman.2018.8525838

Sorrentino, A., Mancioppi, G., Coviello, L., Cavallo, F., and Fiorini, L. (2021). Feasibility Study on the Role of Personality, Emotion, and Engagement in Socially Assistive Robotics: A Cognitive Assessment Scenario. Informatics 8, 23. doi:10.3390/informatics8020023

Tabrez, A., Luebbers, M. B., and Hayes, B. (2020). A Survey of Mental Modeling Techniques in Human-Robot Teaming. Curr. Robot. Rep. 1, 259–267. doi:10.1007/s43154-020-00019-0

Tapus, A., Mataric, M. J., and Scassellati, B. (2007). Socially Assistive Robotics [Grand Challenges of Robotics]. IEEE Robot. Autom. Mag. 14, 35–42. doi:10.1109/mra.2007.339605

Tapus, A., Ţăpuş, C., and Matarić, M. (2008). User-robot Personality Matching and Assistive Robot Behavior Adaptation for Post-stroke Rehabilitation Therapy. Intel. Serv. Robot. 1, 169–183. doi:10.1007/s11370-008-0017-4

Tenorth, M., and Beetz, M. (2015). Representations for Robot Knowledge in the KnowRob Framework. Artif. Intell. 247, 151.

Umbrico, A., Cesta, A., Cialdea Mayer, M., and Orlandini, A. (2017). PLATINUm: A New Framework for Planning and Acting. Lect. Notes Comput. Sci. 2017, 498–512. doi:10.1007/978-3-319-70169-1_37

Umbrico, A., Cesta, A., Cortellessa, G., and Orlandini, A. (2020). A Holistic Approach to Behavior Adaptation for Socially Assistive Robots. Int J Soc Robotics 12, 617–637. doi:10.1007/s12369-019-00617-9

Umbrico, A., Cortellessa, G., Orlandini, A., and Cesta, A. (2020). “Modeling Affordances and Functioning for Personalized Robotic Assistance,” in Principles of Knowledge Representation and Reasoning: Proceedings of the Sixteenth International Conference (AAAI Press). doi:10.24963/kr.2020/94

Umbrico, A., Cortellessa, G., Orlandini, A., and Cesta, A. (2020). Toward Intelligent Continuous Assistance. J. Ambient. Intell. Hum. Comput. 12, 4513–4527. doi:10.1007/s12652-020-01766-w

World Health Organization (2001). International Classification of Functioning, Disability and Health: ICF. Geneva, Switzerland: World Health Organization.

Keywords: socially assistive robot (SAR), knowledge representation and reasoning (KRR), automated planning (AP), user modeling (UM), human–robot interaction (HRI)

Citation: Sorrentino A, Fiorini L, Mancioppi G, Cavallo F, Umbrico A, Cesta A and Orlandini A (2022) Personalizing Care Through Robotic Assistance and Clinical Supervision. Front. Robot. AI 9:883814. doi: 10.3389/frobt.2022.883814

Received: 25 February 2022; Accepted: 22 June 2022;

Published: 12 July 2022.

Edited by:

Alessandro Freddi, Marche Polytechnic University, Italy

Reviewed by:

Daniele Proietti Pagnotta, Marche Polytechnic University, Italy

Luca Romeo, Marche Polytechnic University, Italy

Copyright © 2022 Sorrentino, Fiorini, Mancioppi, Cavallo, Umbrico, Cesta and Orlandini. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Alessandro Umbrico, alessandro.umbrico@istc.cnr.it
