- 1 Shanghai Municipal Center for Disease Control and Prevention, Shanghai, China

- 2 Shanghai Institutes of Preventive Medicine, Shanghai, China

- 3 Melbourne School of Population and Global Health, The University of Melbourne, Melbourne, VIC, Australia

- 4 Faculty of Medicine, The George Institute for Global Health, University of New South Wales, Sydney, NSW, Australia

- 5 The George Institute for Global Health, New Delhi, India

- 6 Vital Strategies, New York, NY, United States

- 7 Department of Health Metrics Sciences, IHME, University of Washington, Seattle, WA, United States

Approximately 30% of deaths in Shanghai either occur at home or are not medically attended, and the recorded cause of death (COD) for these deaths may not be reliable. We applied the Smart Verbal Autopsy (SmartVA) tool to assign the COD for a representative sample of home deaths certified by 16 community health centers (CHCs) in three districts of Shanghai, from December 2017 to June 2018. The results were compared with diagnoses from routine practice to ascertain the added value of using SmartVA. Overall, cause-specific mortality fraction (CSMF) accuracy improved from 0.93 (93%) to 0.96 after the application of SmartVA. A comparison with “gold standard (GS)” diagnoses obtained from a parallel medical record review found that 86.3% of the initial diagnoses made by the CHCs assigned the correct COD, increasing to 90.5% after the application of SmartVA. We conclude that routine application of SmartVA is not indicated for general use in CHCs, although the tool did improve diagnostic accuracy for residual causes, such as other or ill-defined cancers and non-communicable diseases.

Introduction

Accurate data on causes of death are essential for policymakers and public health experts to plan appropriate health policies and interventions to improve population health. In Shanghai, a megacity with a population of 24 million, the vital statistics registration system registers almost all deaths of the resident (Hukou) population (1). Deaths that occur in hospital are certified by the attending doctor. For the 30% of deaths in Shanghai that occur at home or are otherwise not medically attended, family members of the deceased report the death to a Community Health Center (CHC), usually bringing available medical documentation such as discharge summaries, medical records, and laboratory test results; the CHC doctor on duty reviews these records and issues a death certificate. In such cases, the recorded cause of death (COD) may be less reliable than for hospital deaths.

Verbal Autopsy (VA) is a practical method that can help determine causes of death in regions where most deaths occur at home or where medical certification is limited or unreliable (2, 3). In VA, a structured questionnaire is administered to a family member to ascertain the signs and symptoms that preceded death. Automated VA does not require physicians to review the questionnaire responses; rather, the most probable COD is predicted by a diagnostic algorithm. Where physicians are available to review the outputs of a verbal autopsy immediately and certify the COD, a specific tool, SmartVA for Physicians, has been developed to facilitate physician diagnosis. It produces a summary of all symptoms endorsed by family members, giving the certifying physician more information to determine the COD for people who die outside hospitals (4, 5). The validity of SmartVA as a diagnostic tool has been demonstrated in a diverse range of low- and middle-income populations (6–10).

To ascertain whether routine application of the method would improve the quality (i.e., diagnostic accuracy) of death certification in Shanghai (especially for deaths occurring outside health facilities), SmartVA for Physicians was applied to a sample of community deaths for which the true cause had been separately established via an independent medical record review study. The findings were compared with diagnoses from routine practice to ascertain the value, if any, of incorporating SmartVA into the diagnostic practices of physicians in Shanghai who certify the cause of home deaths.

Materials and Methods

SmartVA Auto-Analyze Package

SmartVA Auto-Analyze is a software package that builds on SmartVA Analyze and includes the Population Health Metrics Research Consortium (PHMRC) shortened VA questionnaire, the Open Data Kit (ODK) suite for data collection, and the modified Tariff 2.0 algorithm for computer analysis of the VA interview responses (11–13). SmartVA Auto-Analyze was developed for use by physicians in real time and produces, for each death, a list of up to three most likely causes; this configuration is commonly referred to as SmartVA for Physicians (for brevity, we use the term SmartVA in this article). The PHMRC shortened questionnaire was validated by quantifying, with formal item reduction methods, the decline in diagnostic accuracy as symptom questions were removed from the long form of the questionnaire (14). The shortened questionnaire has since been applied at selected China CDC sites and validated against local diagnostic practices at these sites (15).
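To illustrate the general idea behind tariff-based scoring, the sketch below follows the original Tariff method described by James et al. (4): for each cause and symptom, a tariff is the endorsement rate of that symptom among training deaths from that cause, minus the median endorsement rate across causes, divided by the interquartile range (IQR); a death's score for each cause is the sum of the tariffs of the symptoms its respondent endorsed, and the highest-scoring causes are listed. This is a simplified illustration, not the modified Tariff 2.0 implementation inside SmartVA Auto-Analyze, which adds further steps (such as ranking scores against a training dataset) that are omitted here. The endorsement rates, symptom names, and function names are hypothetical.

```python
import statistics

# Hypothetical training summary: share of training deaths from each cause whose
# respondent endorsed each symptom (illustrative numbers only).
EXAMPLE_ENDORSEMENT = {
    "Stroke":      {"paralysis": 0.80, "cough": 0.10, "chest_pain": 0.20},
    "IHD":         {"paralysis": 0.10, "cough": 0.10, "chest_pain": 0.90},
    "CRD":         {"paralysis": 0.05, "cough": 0.90, "chest_pain": 0.30},
    "Lung cancer": {"paralysis": 0.05, "cough": 0.70, "chest_pain": 0.40},
}


def tariff_matrix(endorsement):
    """Return tariffs[cause][symptom] = (rate - median rate) / IQR of rates across causes."""
    causes = list(endorsement)
    symptoms = list(endorsement[causes[0]])
    tariffs = {cause: {} for cause in causes}
    for symptom in symptoms:
        rates = [endorsement[cause][symptom] for cause in causes]
        median = statistics.median(rates)
        q1, _, q3 = statistics.quantiles(rates, n=4)  # quartiles (Python 3.8+)
        iqr = q3 - q1
        for cause in causes:
            # A symptom with no spread across causes carries no diagnostic information.
            if iqr == 0:
                tariffs[cause][symptom] = 0.0
            else:
                tariffs[cause][symptom] = (endorsement[cause][symptom] - median) / iqr
    return tariffs


def rank_causes(tariffs, endorsed_symptoms, top_n=3):
    """Sum the tariffs of the endorsed symptoms for each cause; return the top_n causes."""
    scores = {
        cause: sum(cause_tariffs.get(s, 0.0) for s in endorsed_symptoms)
        for cause, cause_tariffs in tariffs.items()
    }
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


if __name__ == "__main__":
    tariffs = tariff_matrix(EXAMPLE_ENDORSEMENT)
    print(rank_causes(tariffs, {"cough"}))      # CRD first, Lung cancer second
    print(rank_causes(tariffs, {"paralysis"}))  # Stroke first
```

Returning a ranked list of up to three causes, rather than a single label, mirrors the SmartVA for Physicians output that the certifying doctor then weighs against other evidence.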

Training and Administration

A local VA team, trained by SmartVA experts from the University of Melbourne, trained 32 CHC doctors as VA interviewers. User manuals with detailed instructions and Standard Operating Procedures (SOPs) were introduced during the training and made available to project staff of the Shanghai Municipal Center for Disease Control and Prevention (SCDC). In addition, the interviewers were trained in correct death certification practices and in operating Android-based tablets to conduct SmartVA interviews and troubleshoot problems. After the training, the interviewers underwent supervised field practice to ensure that they had the skills and conceptual knowledge required to carry out VAs.

A local information technology (IT)/data management staff member, with support from the University of Melbourne technical team, installed the ODK Collect software, the electronic SmartVA questionnaire, and the associated media file on the tablets, installed SmartVA Auto-Analyze on computers, and prepared all devices for SmartVA data collection.

Data Collection and Diagnostic Procedures

Previous experience with similar validation studies suggests that at least 20 gold standard (GS) cases per cause are required to establish COD accuracy and validity within acceptable uncertainty bounds (4). To investigate the diagnostic accuracy of the top 20 causes of death, at least 400 GS cases were therefore required. To allow for VA interview refusals, medical records too poor to establish a GS, and similar losses, we applied multistage sampling to select 16 CHCs from three districts, chosen as representative of urban, suburban, and urban-suburban areas in Shanghai. First, Minhang, Songjiang, and Pudong districts, each of which contains urban, suburban, and urban-suburban areas, were selected. Then, five CHCs from Minhang district, five from Songjiang district, and six from Pudong district were selected to meet our stratification criteria. All 1,648 home deaths in these CHCs that met our inclusion criteria were eligible for the study, although the final number of cases was expected to be lower because of refusals and limited medical record quality and availability.
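The sample-size reasoning above can be checked with simple arithmetic. The sketch below uses only figures stated in the text to compute the minimum number of GS cases and the minimum share of the 1,648 eligible home deaths that would need to yield a usable GS case. It treats the 20-cases-per-cause rule as a simple product and ignores the uneven distribution of causes, so it gives a lower bound rather than a formal power calculation.

```python
MIN_GS_PER_CAUSE = 20        # minimum GS cases per cause (from the text)
N_TARGET_CAUSES = 20         # top 20 causes of death targeted
ELIGIBLE_HOME_DEATHS = 1648  # eligible home deaths in the 16 CHCs

required_gs = MIN_GS_PER_CAUSE * N_TARGET_CAUSES
# Minimum share of eligible deaths that must end up as usable GS cases.
min_yield = required_gs / ELIGIBLE_HOME_DEATHS

print(f"GS cases required: {required_gs}")
print(f"Minimum yield from eligible deaths: {min_yield:.1%}")
```

Because causes of death are skewed (a few causes dominate), meeting the total of 400 does not by itself guarantee 20 GS cases for every one of the 20 target causes.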

Each home death that occurred between December 2017 and June 2018 was investigated by a trained CHC doctor on duty. Doctors identified an appropriate respondent (>18 years of age, cared for the deceased, or most familiar with the symptoms and terminal phase of the deceased) from among the family members who came to report the death to the CHC, requested their consent to participate in the pilot study, and interviewed them.

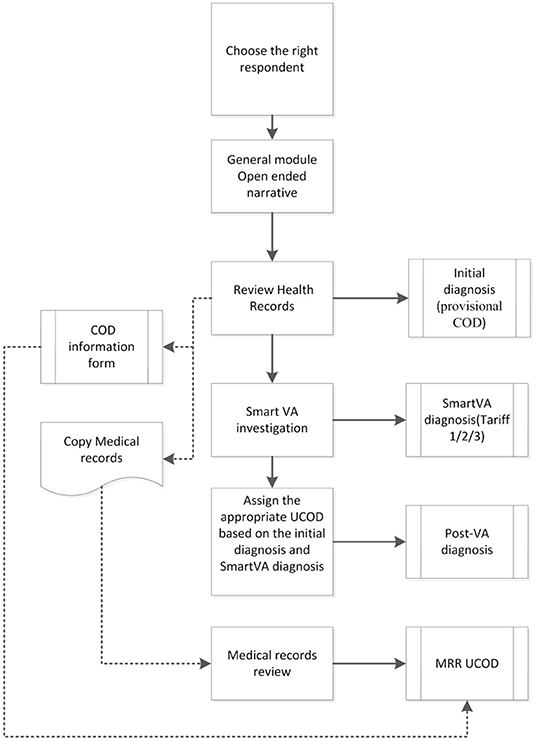

The diagnoses associated with each case included in the study are shown in Figure 1. At the end of the interview, the CHC doctor assigned an Initial diagnosis, with the underlying cause of death (UCOD) selected according to the usual practice and procedures in place, which included a review of the outpatient clinical records and any other documentation brought by the family when reporting the death to the CHC. Next, the physician ran the SmartVA Auto-Analyze program for each death, which suggested up to three possible UCODs; these predictions from the Tariff diagnostic algorithm are labeled the SmartVA diagnosis (Tariff COD 1, 2, and 3). Finally, the physician reviewed the Initial diagnosis in light of the additional information provided by the SmartVA diagnoses, including the list of endorsed symptoms provided by SmartVA, and used this information to assign a Post-VA diagnosis (Figure 1).

Ethics Approval

Ethics approval was obtained from Shanghai CDC (Ethics ID: 2016-28) and the University of Melbourne Ethics Committees (Ethics ID: 1647517.1.1). All participants were provided with a participant information sheet and consent forms in the local language.

Monitoring and Evaluation

Each CHC doctor was asked to complete a Microsoft Excel spreadsheet (“COD information form” box in Figure 1) with the data on demographics, initial diagnosis, SmartVA (Tariff) diagnosis, and the post-VA diagnosis of the UCOD for each case. This spreadsheet was submitted to SCDC by the CHC doctor at the end of each month, for monitoring the progress and quality of the study implementation. After 6 months of data collection, a program manager from SCDC integrated the data from all 16 CHCs and performed further analysis.
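Each row of the COD information form links several diagnoses to one death. A minimal sketch of such a record is shown below; the field and method names are hypothetical, since the actual spreadsheet columns are not given in the text. The MRR UCOD is added later, after the independent review, and the `changed` property flags cases in which the post-VA diagnosis differs from the initial one.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class CodRecord:
    """One row of a hypothetical COD information form."""
    case_id: str
    age: int
    sex: str
    initial_dx: str                 # initial diagnosis (usual CHC practice)
    post_va_dx: str                 # diagnosis after reviewing the SmartVA output
    tariff_dx: List[str] = field(default_factory=list)  # up to three SmartVA (Tariff) causes
    mrr_ucod: Optional[str] = None  # filled in after the medical record review

    def __post_init__(self):
        if len(self.tariff_dx) > 3:
            raise ValueError("SmartVA suggests at most three causes per death")

    @property
    def changed(self) -> bool:
        """True if SmartVA led the physician to change the UCOD."""
        return self.post_va_dx != self.initial_dx

    def is_correct(self, stage: str) -> Optional[bool]:
        """Compare the 'initial' or 'post_va' diagnosis with the MRR UCOD, if available."""
        dx = {"initial": self.initial_dx, "post_va": self.post_va_dx}[stage]
        if self.mrr_ucod is None:
            return None
        return dx == self.mrr_ucod


if __name__ == "__main__":
    record = CodRecord("case-001", 83, "F", initial_dx="Other CVD", post_va_dx="Stroke",
                       tariff_dx=["Stroke", "IHD"], mrr_ucod="Stroke")
    print(record.changed, record.is_correct("initial"), record.is_correct("post_va"))
```

Keeping the MRR field optional reflects the study timeline: monthly forms were submitted before the gold standard diagnoses existed.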

GS UCOD and Data Analysis

The medical records of all deaths for which a VA was carried out in the three districts were evaluated by an independent Medical Record Review (MRR) team. The medical records of each home death were audited according to ex-ante study protocols adopted from the PHMRC study. The MRR team members were experienced district CDC coders and physicians, who were trained by the University of Melbourne team in how to review a medical record and in the definition and interpretation of the standard diagnostic criteria and GS levels. The MRR team assigned each death a “GS” UCOD, which we refer to here as the MRR UCOD, based on the GS criteria for each COD developed by the PHMRC and applied in several studies (4, 16–18). Under these criteria, GS1 denotes the highest confidence in the diagnostic accuracy of the UCOD, decreasing to GS4, for which diagnostic confidence following the MRR was lowest. For example, the GS1 criterion for lung cancer is histological confirmation, whereas GS4 is used for cases where the MRR concluded that there was an unsupported clinical diagnosis.

Causes of death from the application of SmartVA, as well as the MRR UCOD, were mapped to the SmartVA cause list (Supplementary File 1) to facilitate comparison, as this is an abbreviated cause list appropriate for VA. Using the SmartVA cause list, we carried out the following comparisons: (i) concordance between the initial diagnosis and MRR UCOD (to ascertain the accuracy of current diagnostic practice); and (ii) concordance between the post-VA diagnosis and MRR UCOD (to ascertain the impact of applying SmartVA on diagnostic accuracy). In addition, we constructed misclassification matrices by cause to identify the pattern and extent of certification errors. To facilitate interpretation, only the 16 leading causes of death based on MRR UCODs were included in the misclassification matrices; all other diseases were merged into a residual group labeled “others.”
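The construction of the misclassification matrices can be sketched as follows: causes are ranked by frequency in the reference (MRR) labels, every cause outside the leading k is collapsed into "Others", and each (MRR cause, assigned cause) pair is counted. This is a minimal sketch assuming the labels have already been mapped to the SmartVA cause list; the function names are hypothetical, and ties in the ranking are broken by first appearance. Overall accuracy is computed on the uncollapsed labels, so two different residual causes are not counted as agreeing.

```python
from collections import Counter


def leading_causes(reference, k):
    """The k most frequent causes in the reference (MRR) labels."""
    return [cause for cause, _ in Counter(reference).most_common(k)]


def misclassification_matrix(reference, assigned, k=16, other="Others"):
    """Count (MRR cause, assigned cause) pairs, collapsing non-leading causes into `other`."""
    if len(reference) != len(assigned):
        raise ValueError("reference and assigned must describe the same deaths")
    keep = set(leading_causes(reference, k))

    def collapse(cause):
        return cause if cause in keep else other

    return Counter((collapse(r), collapse(a)) for r, a in zip(reference, assigned))


def overall_accuracy(reference, assigned):
    """Share of deaths whose assigned cause equals the MRR cause (uncollapsed labels)."""
    return sum(r == a for r, a in zip(reference, assigned)) / len(reference)


if __name__ == "__main__":
    mrr = ["Stroke", "Stroke", "IHD", "Falls"]
    initial = ["Stroke", "IHD", "IHD", "Stroke"]
    print(misclassification_matrix(mrr, initial, k=2))
    print(overall_accuracy(mrr, initial))  # 0.5
```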

Standard validation metrics, such as sensitivity, positive predictive value (PPV), Cohen's kappa, chance-corrected concordance (CCC), and cause-specific mortality fraction (CSMF) accuracy, were calculated to assess concordance. The statistical analysis was performed using R 3.6 software.
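For readers less familiar with these metrics, the sketch below shows how sensitivity, PPV, and Cohen's kappa can be computed from paired cause labels (reference = MRR UCOD; assigned = initial or post-VA diagnosis). It uses the standard textbook definitions written in Python; it is not the authors' R code, which is not reproduced in the article, and the function names are hypothetical.

```python
from collections import Counter


def sensitivity_ppv(reference, assigned, cause):
    """Sensitivity and PPV of `assigned` against `reference` for one cause."""
    true_pos = sum(r == cause and a == cause for r, a in zip(reference, assigned))
    n_reference = sum(r == cause for r in reference)  # deaths truly due to the cause
    n_assigned = sum(a == cause for a in assigned)    # deaths certified as the cause
    sensitivity = true_pos / n_reference if n_reference else float("nan")
    ppv = true_pos / n_assigned if n_assigned else float("nan")
    return sensitivity, ppv


def cohens_kappa(reference, assigned):
    """Observed agreement corrected for the agreement expected by chance."""
    n = len(reference)
    observed = sum(r == a for r, a in zip(reference, assigned)) / n
    ref_counts, asg_counts = Counter(reference), Counter(assigned)
    expected = sum(ref_counts[c] * asg_counts[c] for c in ref_counts) / n**2
    if expected == 1:
        return float("nan")  # kappa is undefined when chance agreement is perfect
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    mrr = ["Stroke", "Stroke", "IHD", "IHD"]
    initial = ["Stroke", "IHD", "IHD", "IHD"]
    print(sensitivity_ppv(mrr, initial, "Stroke"))  # (0.5, 1.0)
    print(cohens_kappa(mrr, initial))               # 0.5
```

A low PPV for a cause means that many deaths certified as that cause were attributed to other causes by the reference standard, whereas a low sensitivity means that many true deaths from that cause were certified as something else.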

Results

Of the 1,648 deaths reported to the study CHCs during the study period, only 619 (37.6%) could be included in this study, because many cases did not meet the study's inclusion criteria for eligible respondents or the family refused to participate. Of the 619 deaths for which a SmartVA interview was conducted, 570 also had medical records that allowed a GS1 or GS2 diagnosis to be established following MRR.

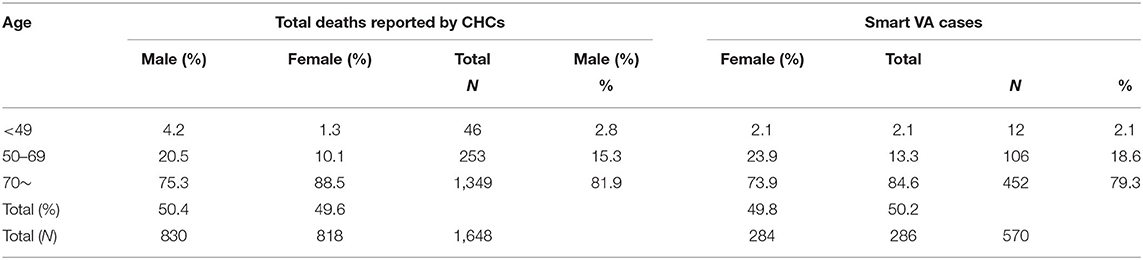

There was no significant difference in age and sex composition between the 570 deaths and all CHC-certified deaths in the same area and time period (Table 1; p = 0.862 for sex and p = 0.135 for age). Most deaths were among people aged 70 years and above.

Table 1. Age-sex distribution of home deaths and deaths investigated by Smart Verbal Autopsy (SmartVA) in the 16 community health centers (CHCs).

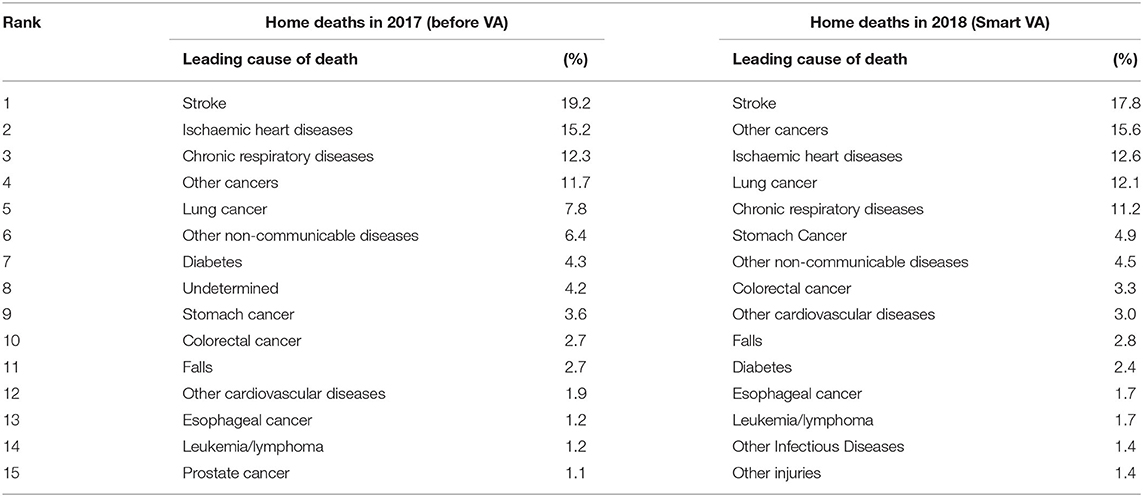

The CSMFs for all the home deaths in the 16 CHCs in 2017, and the CSMFs based on the VA results from this study, conducted in the same 16 regions in 2018, showed a similar COD distribution based on the common SmartVA cause list (as shown in Table 2).

Table 2. The cause-specific mortality fractions (CSMFs) of home deaths in the 16 regions of Shanghai in 2017 and in 2018.

From the MRR of the deaths analyzed by SmartVA for Physicians, stroke was the leading COD, accounting for 17.8% of deaths, followed by other cancers (15.6%) and ischemic heart disease, lung cancer, and chronic respiratory diseases (CRDs), accounting for 12.6, 12.1, and 11.2% of deaths, respectively. All other causes accounted for <5% of deaths. Broadly speaking, CSMFs for causes of death diagnosed by SmartVA were similar to those based on existing diagnostic practices in Shanghai, with only slight changes in the ranking of causes of death (Table 2).

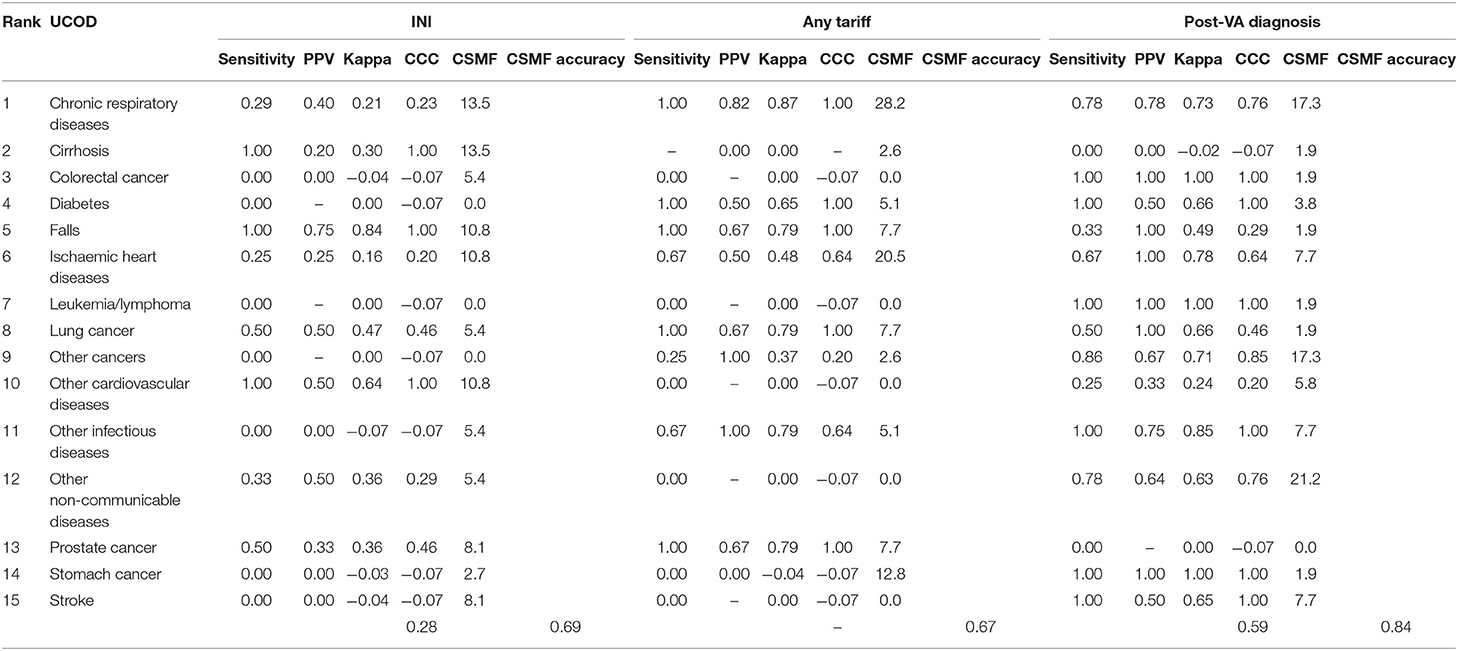

The concordance between the initial diagnosis and the MRR UCOD (assessing the accuracy of existing diagnostic practices) and between the post-VA diagnosis and the MRR UCOD (assessing the impact of SmartVA on diagnostic accuracy) was measured using chance-corrected concordance (CCC; Tables 3–5). This metric measures the agreement of individual diagnoses between the two sources (sensitivity), corrected for chance. Additionally, CSMF accuracy was evaluated by measuring the absolute deviation of the CSMFs based on the initial diagnoses, or on the post-VA diagnoses, from the CSMFs based on the MRR UCOD (12, 19, 20). For both metrics, the closer the value is to 1, the higher the concordance.
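The two metrics can be written out explicitly. The sketch below assumes the definitions commonly used in PHMRC validation studies: for cause j among N causes, CCC_j = (TP_j / (TP_j + FN_j) − 1/N) / (1 − 1/N), and CSMF accuracy = 1 − Σ_j |CSMF_j(true) − CSMF_j(pred)| / (2 × (1 − min_j CSMF_j(true))). By default, the cause list is taken to be the union of observed labels, which is an assumption; in practice a fixed cause list (such as the SmartVA list) would be passed in. Function names are hypothetical.

```python
from collections import Counter


def ccc(reference, assigned, cause, n_causes):
    """Chance-corrected concordance for one cause: sensitivity rescaled so chance = 0."""
    true_pos = sum(r == cause and a == cause for r, a in zip(reference, assigned))
    n_true = sum(r == cause for r in reference)
    sensitivity = true_pos / n_true
    return (sensitivity - 1 / n_causes) / (1 - 1 / n_causes)


def mean_ccc(reference, assigned):
    """Average CCC over the causes present in the reference labels."""
    causes = sorted(set(reference))
    return sum(ccc(reference, assigned, c, len(causes)) for c in causes) / len(causes)


def csmf_accuracy(reference, assigned, causes=None):
    """1 minus total absolute CSMF error, scaled by its maximum possible value."""
    if causes is None:
        causes = sorted(set(reference) | set(assigned))
    ref_counts, asg_counts = Counter(reference), Counter(assigned)
    true_f = {c: ref_counts[c] / len(reference) for c in causes}
    pred_f = {c: asg_counts[c] / len(assigned) for c in causes}
    total_abs_error = sum(abs(true_f[c] - pred_f[c]) for c in causes)
    return 1 - total_abs_error / (2 * (1 - min(true_f.values())))


if __name__ == "__main__":
    mrr = ["Stroke", "Stroke", "IHD", "IHD"]
    post_va = ["Stroke", "IHD", "IHD", "IHD"]
    print(ccc(mrr, post_va, "Stroke", 2), ccc(mrr, post_va, "IHD", 2))  # 0.0 1.0
    print(csmf_accuracy(mrr, post_va))                                  # 0.5
```

Note that CSMF accuracy compares population-level fractions only: two sets of diagnoses can reach a CSMF accuracy of 1 while disagreeing on many individual deaths, which is why it is reported alongside CCC.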

Table 3. Validation metrics comparing initial diagnosis or post-VA diagnosis with Medical Record Review (MRR) underlying cause of death (UCOD) (top 15 specific UCOD).

Sensitivity and positive predictive value (PPV) were both high for the top six CODs. PPV was low for diabetes and other infectious diseases, indicating that some deaths initially diagnosed as diabetes or other infectious diseases were not due to these causes, and were reallocated to other causes after the VA investigation.

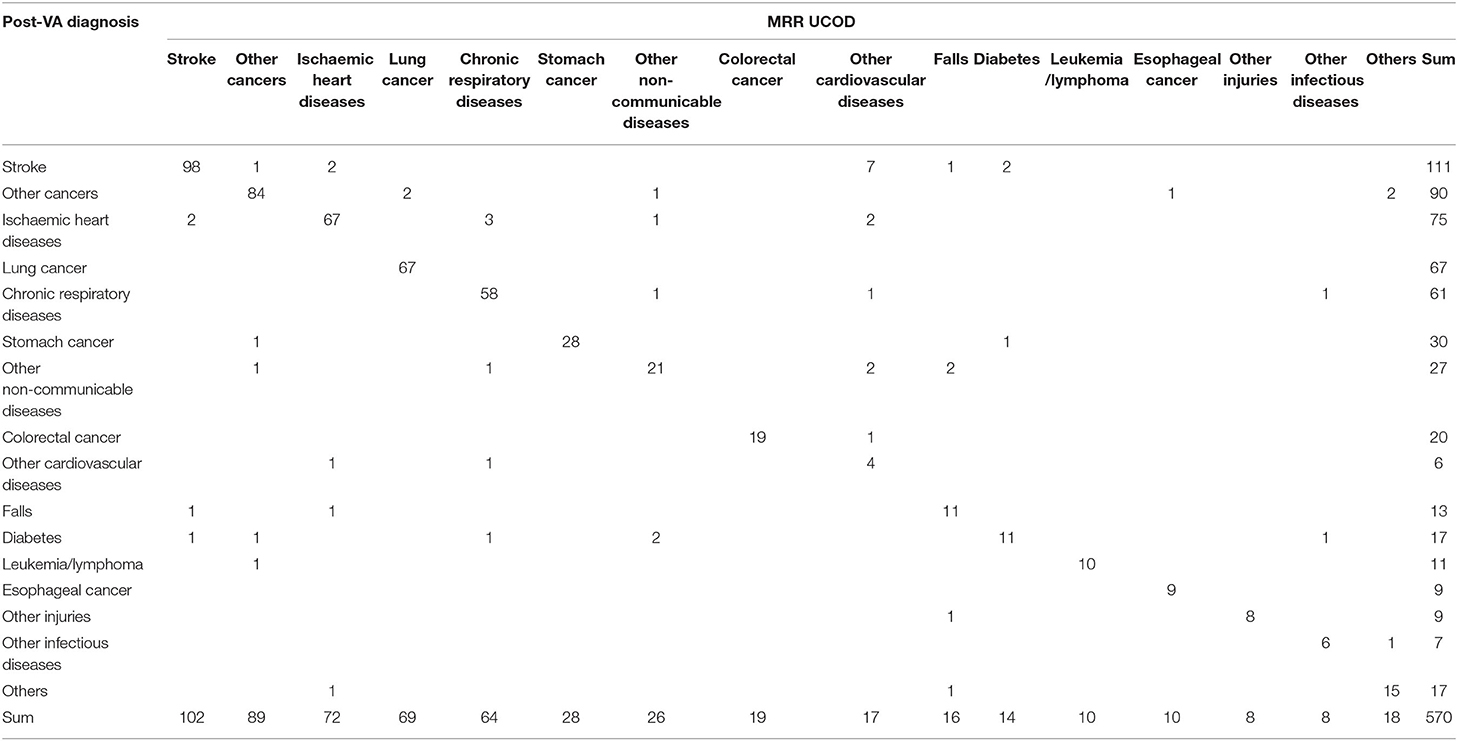

Although the gain was modest, overall CSMF accuracy improved from 0.93, based on the initial diagnoses, to 0.96 after the application of SmartVA (Table 3). For specific causes, the CCCs for the top six causes of death (stroke, other cancers, ischemic heart disease (IHD), lung cancer, CRD, and stomach cancer, together accounting for over 75% of deaths) all increased to more than 0.90 after VA-assisted diagnosis. Detailed metrics are shown in Tables 3–5. Some CODs, especially other non-communicable diseases (NCDs) and other infectious diseases, showed noticeable increases in CCC following the application of SmartVA. Of interest is the change in CCC for other cardiovascular diseases (CVDs) and falls, both of which decreased after the VA investigation. This suggests that other CVDs are being used as a convenient diagnostic category for some deaths, possibly those for which it was difficult to establish the UCOD from the outpatient clinical records, and which were subsequently reclassified following an investigation with SmartVA.

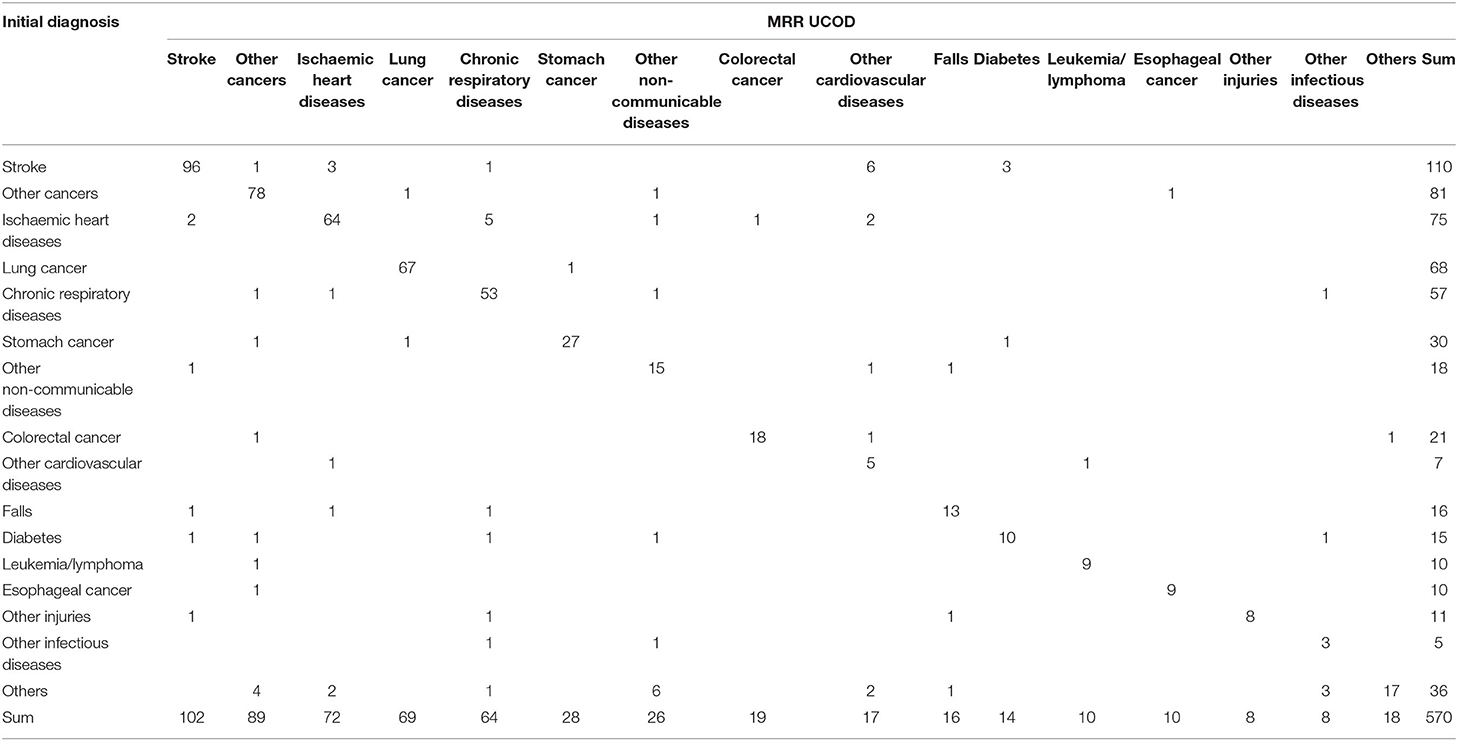

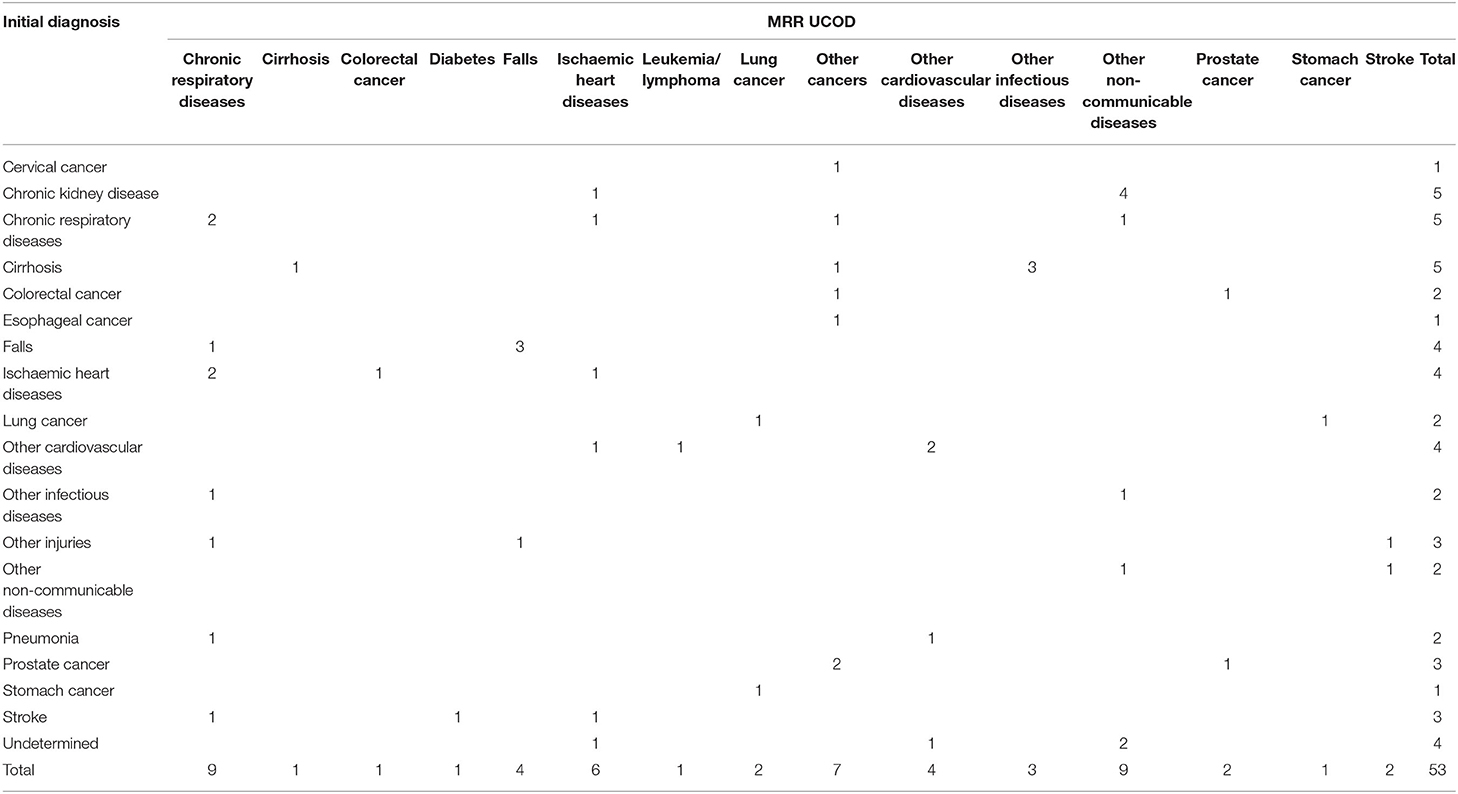

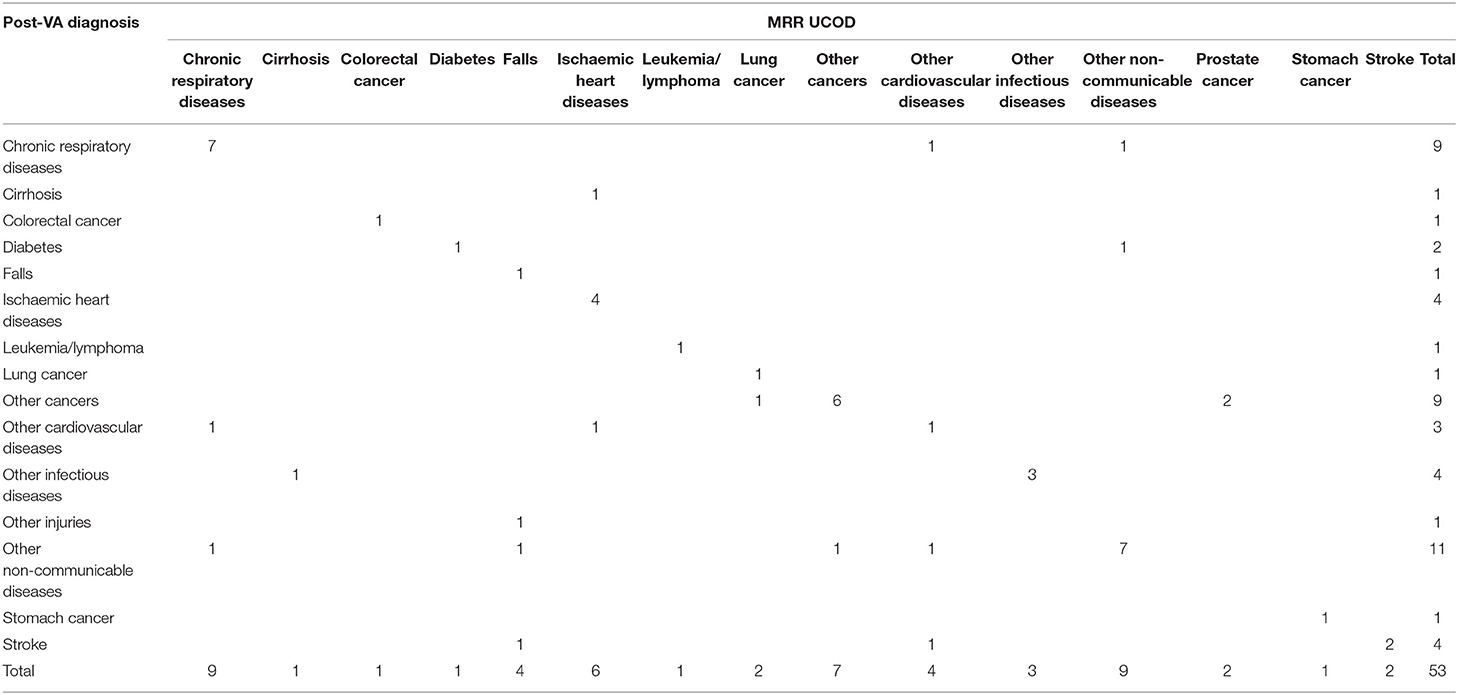

Table 4 shows the misclassification matrix based on the initial diagnosis compared with that from the MRR and is thus a rigorous test of the diagnostic accuracy of existing practices in the CHCs; 86.3% (492/570) of cases were correctly diagnosed by the initial diagnosis. The extent of misclassification was reduced following the VA investigation (Tables 4, 5), with overall diagnostic accuracy increasing to 90.5% (516/570) among the post-VA diagnoses.

Based on the initial diagnoses made before the VA investigation, other CVDs and other infectious diseases were more likely to be misassigned to other causes; more than one-third of other CVDs were misclassified as stroke (6/17; Table 4).

The accuracy of CSMFs increased following the application of SmartVA, except for the categories of other CVDs and falls (Table 3). As mentioned, other CVDs were often (6/17 or 35.3%) misclassified cases of stroke, when compared with the MRR diagnoses (Table 5).

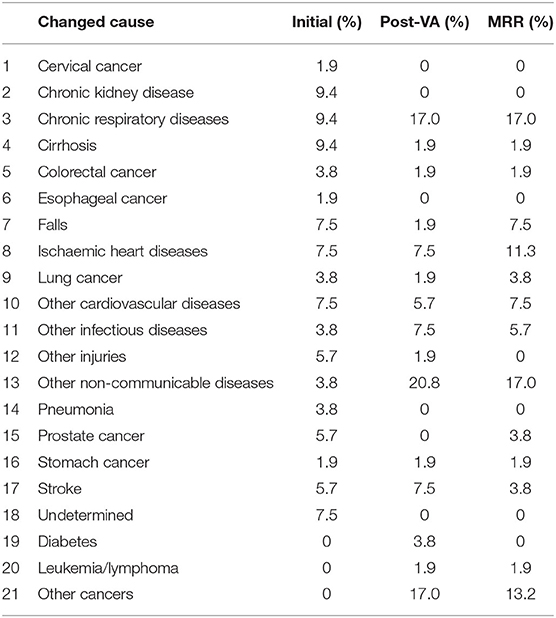

Analysis of the VA results with SmartVA Auto-Analyze led to the causes of 53 deaths (9.3%, just under 10% of the sample) being reclassified from their initially assigned causes. This was particularly the case for chronic kidney diseases (CKDs), CRD, and cirrhosis, as well as falls, IHDs, other CVDs, and undetermined causes.

Among the 53 cases where the method led to a change in the COD, only 22.6% (12/53) were assigned correctly before VA (Table 6), whereas 67.9% (36/53) of the new CODs were assigned correctly according to MRR (Table 7). The number of misclassified conditions, compared with MRR, was also reduced. Among the 53 cases with a change in COD, all the causes assigned before VA (Table 6) had a high degree of misclassification, except for cirrhosis and falls. After VA, the misclassification was greatly reduced, except for falls and other CVDs (Table 7). Four undetermined deaths were reallocated to other diagnoses (as shown in Tables 3–8).
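The overall and subgroup figures reported above are internally consistent, and the link between them can be made explicit. By definition, deaths whose diagnosis did not change keep the same correctness status, so the number correct after VA equals the number correct initially, minus the reassigned deaths that had been correct, plus the reassigned deaths that became correct. The short check below uses only counts stated in the text.

```python
TOTAL = 570                  # deaths with a SmartVA interview and a GS1/GS2 MRR diagnosis
INITIAL_CORRECT = 492        # initial diagnosis agreed with the MRR UCOD
CHANGED = 53                 # deaths whose UCOD changed after SmartVA
CHANGED_CORRECT_BEFORE = 12  # of the 53, correct before VA
CHANGED_CORRECT_AFTER = 36   # of the 53, correct after VA

# Unchanged deaths keep the same diagnosis, so only the 53 changed deaths move the total.
post_va_correct = INITIAL_CORRECT - CHANGED_CORRECT_BEFORE + CHANGED_CORRECT_AFTER

for label, num, den in [
    ("Initial accuracy", INITIAL_CORRECT, TOTAL),
    ("Post-VA accuracy", post_va_correct, TOTAL),
    ("Share of deaths reassigned", CHANGED, TOTAL),
    ("Reassigned, correct before VA", CHANGED_CORRECT_BEFORE, CHANGED),
    ("Reassigned, correct after VA", CHANGED_CORRECT_AFTER, CHANGED),
]:
    print(f"{label}: {num}/{den} = {num / den:.1%}")
```

The computed post-VA total, 516, matches the 516/570 (90.5%) reported for the post-VA diagnoses, so the net gain of 24 correct diagnoses comes entirely from the 53 reassigned deaths.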

Table 6. The concordance between initial diagnosis and MRR results of the 53 changed cases where SmartVA led to a change in diagnosis.

Table 7. The concordance between post-VA diagnosis and MRR results of the 53 changed cases where SmartVA led to a change in diagnosis.

Table 8. Validation metrics comparing initial diagnosis, any Tariff or post-VA diagnosis, with MRR UCOD (top 15 specific UCOD) of the 53 cases where diagnosis changed.

For the 53 deaths where the UCOD changed after the application of SmartVA, the initially assigned CODs (initial diagnoses) were distributed reasonably randomly across the 15 causes. In the initially assigned CODs, no cases were assigned to leukemia/lymphoma, diabetes, or other cancers. However, according to the MRR results, other cancers should be the third leading COD in this sample of 53 deaths. SmartVA suggested that the fraction was 17%. CRD was only half as important as a cause (9.4 vs. 17%) according to the initial diagnosis compared with both SmartVA and the MRR. CKD, undetermined causes, other injuries, pneumonia, cervical cancer, and esophageal cancer were not among the UCOD identified by the MRR, or by SmartVA (except for other injuries), while the CHC doctors assigned them as UCODs after initial diagnosis. Overall, the CSMF pattern identified by the application of SmartVA for these 53 cases was much closer to the true pattern suggested from MRR than the initial diagnosis. This suggests a need for greater care when assigning these diseases as UCODs (Tables 6, 7).

With the assistance of SmartVA, the majority of misdiagnosed deaths were assigned to other NCDs (20.8%), CRD (17.0%), and other cancers (17.0%). Though a small degree of misclassification persisted, the post-VA diagnosis of the UCOD agreed more closely with the reference standard (MRR) than the initial diagnosis (Table 9).

Discussion

Although Shanghai has an established and well-functioning civil registration and vital statistics (CRVS) system, SmartVA for Physicians contributed to an improvement in the accuracy of death certification, with CSMF accuracy increasing from 0.93 to 0.96 following the introduction of SmartVA. In addition, SmartVA may be a useful tool for inferring some special causes of death, such as those classified as undetermined; while this is less of an issue in Shanghai, it is a common problem in civil registration systems worldwide (20–24). In our study, four deaths with an undetermined COD were reclassified after the application of SmartVA. With the help of this tool, the Shanghai CRVS system could reduce the fraction of undetermined deaths.

Among the 53 cases where the UCOD was changed following the VA investigation, the largest impact was for CRD (17 vs. 9.4% suggested by the initial diagnosis), other NCDs (17 vs. 3.8%), and other cancers (13.2 vs. 0%), suggesting that for causes such as these, the certifier may need to examine the available medical history more carefully before assigning the UCOD. The fact that only 53 of the 570 cases were reassigned reflects the rigor of the diagnostic practices routinely applied in Shanghai, but given the clustering of these cases around certain causes of death (CRD, residual NCDs, and residual cancers), selective application of the method might help to improve diagnostic accuracy even further.

The improvement in COD data following the application of SmartVA in this study could be attributed to several factors. First, the SOPs for COD assignment followed during the SmartVA investigation ensured a structured and consistent approach, leading to more accurate COD assignment. Second, the SmartVA procedure includes systematic and comprehensive questions about symptoms, which help to ensure that all relevant medical information about the decedent's morbid conditions is captured at the time of COD certification. Third, the improvement attributed to SmartVA could in part be due to the comprehensive training in eliciting information about symptoms and signs from the family, which is more systematic and comprehensive than current procedures.

As Shanghai is highly developed, with a relatively advanced CRVS system, routine use of SmartVA is unlikely to result in a significant improvement in the accuracy of COD data in the Shanghai system, nor would it be a cost-effective way to do so. Routine application of SmartVA would add a further 15–30 min to the diagnostic process for each death, which is not justifiable given the high diagnostic standards and procedures already in place. The routine work of Shanghai CHC doctors already includes checking and correcting medical certification of cause of death (MCCOD) data, including re-interviewing the family of the deceased. In contrast, SmartVA may be more beneficial in other cities in China, especially in remote areas in the west that do not have a well-functioning death registration system.

There are several reasons why not all home deaths could be investigated. First, the high refusal rate undoubtedly reflects the fact that urban, comparatively well-off populations engaged in non-agricultural occupations have little time or inclination to respond to questionnaires, particularly while the family of the deceased is still grieving. Second, conducting the SmartVA investigation requires systematic training from an expert team. In addition to regular training in medical certification of COD, the courses cover how to install the SmartVA software, how to connect the tablet to a computer to transmit the survey data, and similar tasks. This is further complicated by the high mobility (turnover) of CHC physicians, whose workload is heavy. In 2021, Shanghai CDC developed a WeChat version of the SmartVA questionnaire and is conducting a new round of home death investigations for deaths whose UCOD was initially assigned an R code (ICD-10 codes for symptoms, signs, and abnormal clinical and laboratory findings, not elsewhere classified).

Our SmartVA study has some limitations. First, the SmartVA tool, and especially its cause list, is not perfectly matched to the cause of death pattern observed in Shanghai (25). For example, liver cancer is not on the SmartVA cause list, so the program cannot assign it as a COD, whereas liver cancer accounted for more than 2.6% of all deaths in Shanghai in 2018. This is because validation metrics for liver cancer in the original PHMRC study were considered too low to justify its inclusion as a target cause for SmartVA (11, 12). Second, in this study, the VA investigations were conducted after the certifier had reviewed the previous outpatient medical histories of the deceased, which may have biased how the certifier considered the diagnostic information suggested by SmartVA. Third, the SmartVA procedure was implemented in only 16 communities in Shanghai. As the number of deaths in these communities was not very high and may not be representative of the whole population, further research is needed to determine the generalizability of SmartVA for Physicians before it can be extended to all districts and counties in China where home deaths are common. Fourth, the GS dataset used in the MRR to establish true causes of death is not without errors, because it was derived from available medical records, which can themselves contain errors. Autopsy is the surest way to obtain an error-free GS, but it is not practical or affordable in most settings. Instead, by adopting ex-ante diagnostic procedures with clearly defined diagnostic criteria for specific causes of interest, the PHMRC methods and exclusion criteria applied in this study are likely to dramatically reduce, but not eliminate, diagnostic uncertainty and subjectivity. Last, the cause pattern identified by SmartVA is constrained to the causes associated with the symptom questions asked in SmartVA. While these causes collectively would likely account for the vast majority of deaths in most low- and middle-income countries, important local causes, such as liver cancer in China, may be omitted because of the criteria and methods used to validate the tool.

While we have focused on the applicability of SmartVA in the Shanghai context, there are alternative automated (electronic) VA methods, such as InSilicoVA and InterVA, that could also be applied to assist physician diagnoses (26–28). We did not compare the strengths and weaknesses of different automated diagnostic methods; rather, we focused on the applicability of generic automated VA methods as a diagnostic aid for physicians who need to certify the cause of home deaths, often in the absence of good clinical records (29–33).

The SCDC plans to adapt the workflow and operational specifications of SmartVA to maximize the effectiveness of the method in improving COD diagnosis and lowering the proportion of undetermined causes of death. This will most likely be through selective application and integration into the existing CRVS system. In addition, SCDC is also considering using SmartVA to identify the possible causes of death in cases with incomplete medical history information.

Increasing the diagnostic accuracy of any dataset that is likely to be used to guide public policy is, or should be, a priority for data custodians. Our research has demonstrated that COD accuracy in Shanghai is very good, but it is not without errors. Furthermore, our study has shown that the application of SmartVA can improve diagnostic accuracy even further, if only marginally. As a result, the routine use of SmartVA is unlikely to be a cost-effective strategy to further improve the diagnostic accuracy of an already well-performing system, but its application to improve the diagnoses of certain conditions appears justified. This marginal application would likely further improve confidence in the use of Shanghai COD data for some public health purposes.

Conclusion

This research illustrates that although Shanghai has an established and well-functioning CRVS system, SmartVA for Physicians contributed to an improvement in the accuracy of death certification. In addition, SmartVA may be a useful tool for inferring some special causes of death, such as those CODs classified as undetermined.

Data Availability Statement

The data that support the findings of this study are available upon request from the Shanghai Municipal Center for Disease Control and Prevention (Shanghai CDC) but restrictions apply to the availability of these data, which were used under license for the current study, and so are not publicly available. Data are available from the authors upon reasonable request and with the permission of Shanghai CDC.

Ethics Statement

The studies involving human participants were reviewed and approved by the Ethics Committees of Shanghai CDC (Ethics ID: 2016-28) and the University of Melbourne (Ethics ID: 1647517.1.1). The patients/participants provided their written informed consent to participate in this study.

Author Contributions

RJ and AL devised the study and were responsible for the study design. ZY, TX, and CW oversaw the research. LC and HL were members of the writing group. HL, TA, CW, HY, and AL provided feedback on data analysis, results, and discussion. RR, ZG, BF, AL, and DM revised the manuscript critically for important intellectual content. All authors contributed to the framework construction, results interpretation, manuscript revision, and approved the final version of the manuscript. The corresponding authors attest that all listed authors meet authorship criteria and that no others meeting the criteria have been omitted.

Funding

The research was funded by the Bloomberg Philanthropies Data for Health Initiative and by the Clinical Research Project of the Health Industry of Shanghai Health Commission in 2020 (Award number: 20204Y0205). The funders had no role in study design, data collection and analysis, decision to publish, or the preparation of the manuscript.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors would like to acknowledge the following people who contributed to the design and conduct of the research and provided comments on various iterations of this article: Megha Rajasekhar (the University of Melbourne), Romain Santon (Vital Strategies), and Chen Jun (Shanghai Putuo District Center for Disease Control and Prevention).

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpubh.2022.842880/full#supplementary-material

Abbreviations

CHC, community health centers; VA, verbal autopsy; COD, cause of death; PHMRC, Population Health Metrics Research Consortium; ODK, Open Data Kit; SOPs, Standard Operating Procedures; SCDC, Shanghai Municipal Center for Disease Control and Prevention; UCOD, underlying cause of death; GS, gold standard; MRR, medical record review; CSMF, cause-specific mortality fraction; CCC, chance-corrected concordance; CKD, chronic kidney diseases; IHD, ischemic heart diseases; CVD, cardiovascular diseases; CRD, chronic respiratory diseases; CRVS, civil registration and vital statistics; MCCOD, medical certification of cause of death; NCD, non-communicable diseases; PPV, positive predictive value.

References

1. Shanghai Municipal Statistics Bureau. 2020 Shanghai Statistical Yearbook. Shanghai: China Statistics Press; 2020.

2. Nichols EK, Byass P, Chandramohan D, Clark SJ, Flaxman AD, Jakob R, et al. The WHO 2016 verbal autopsy instrument: an international standard suitable for automated analysis by InterVA, InSilicoVA, and Tariff 2.0. PLoS Med. (2018) 15:e1002486. doi: 10.1371/journal.pmed.1002486

3. Leitao J, Desai N, Aleksandrowicz L, Byass P, Miasnikof P, Tollman S, et al. Comparison of physician-certified verbal autopsy with computer-coded verbal autopsy for cause of death assignment in hospitalized patients in low- and middle-income countries: systematic review. BMC Med. (2014) 12:22. doi: 10.1186/1741-7015-12-22

4. James SL, Flaxman AD, Murray CJ. Performance of the Tariff Method: validation of a simple additive algorithm for analysis of verbal autopsies. Popul Health Metr. (2011) 9:31. doi: 10.1186/1478-7954-9-31

5. Lozano R, Lopez AD, Atkinson C, Naghavi M, Flaxman AD, Murray CJ. Performance of physician-certified verbal autopsies: multisite validation study using clinical diagnostic gold standards. Popul Health Metr. (2011) 9:32. doi: 10.1186/1478-7954-9-32

6. Yokobori Y, Matsuura J, Sugiura Y, Mutemba C, Nyahoda M, Mwango C, et al. Analysis of causes of death among brought-in-dead cases in a third-level Hospital in Lusaka, Republic of Zambia, using the tariff method 2.0 for verbal autopsy: a cross-sectional study. BMC Public Health. (2020) 20:473. doi: 10.1186/s12889-020-08575-y

7. Hazard RH, Buddhika MPK, Hart JD, Chowdhury HR, Firth S, Joshi R, et al. Automated verbal autopsy: from research to routine use in civil registration and vital statistics systems. BMC Med. (2020) 18:60. doi: 10.1186/s12916-020-01520-1

8. Reeve M, Chowdhury H, Mahesh PKB, Jilini G, Jagilly R, Kamoriki B, et al. Generating cause of death information to inform health policy: implementation of an automated verbal autopsy system in the Solomon Islands. BMC Public Health. (2021) 21:2080. doi: 10.1186/s12889-021-12180-y

9. Hart JD, Kwa V, Dakulala P, Ripa P, Frank D, Lei T, et al. Mortality surveillance and verbal autopsy strategies: experiences, challenges and lessons learnt in Papua New Guinea. BMJ Glob Health. (2020) 5:e003747. doi: 10.1136/bmjgh-2020-003747

10. Mikkelsen L, de Alwis S, Sathasivam S, Kumarapeli V, Tennakoon A, Karunapema P, et al. Improving the policy utility of cause of death statistics in Sri Lanka: an empirical investigation of causes of out-of-hospital deaths using automated verbal autopsy methods. Front Public Health. (2021) 9:591237. doi: 10.3389/fpubh.2021.591237

11. Serina P, Riley I, Stewart A, James SL, Flaxman AD, Lozano R, et al. Improving performance of the Tariff Method for assigning causes of death to verbal autopsies. BMC Med. (2015) 13:291. doi: 10.1186/s12916-015-0527-9

12. Murray CJ, Lopez AD, Black R, Ahuja R, Ali SM, Baqui A, et al. Population Health Metrics Research Consortium gold standard verbal autopsy validation study: design, implementation, and development of analysis datasets. Popul Health Metr. (2011) 9:27. doi: 10.1186/1478-7954-9-27

13. Murray CJ, Lozano R, Flaxman AD, Serina P, Phillips D, Stewart A, et al. Using verbal autopsy to measure causes of death: the comparative performance of existing methods. BMC Med. (2014) 12:5. doi: 10.1186/1741-7015-12-5

14. Serina P, Riley I, Stewart A, Flaxman AD, Lozano R, Mooney MD, et al. A shortened verbal autopsy instrument for use in routine mortality surveillance systems. BMC Med. (2015) 13:302. doi: 10.1186/s12916-015-0528-8

15. Qi J, Adair T, Chowdhury HR, Li H, McLaughlin D, Liu Y, et al. Estimating causes of out-of-hospital deaths in China: application of SmartVA methods. Popul Health Metr. (2021) 19:25. doi: 10.1186/s12963-021-00256-1

16. Murray CJ, James SL, Birnbaum JK, Freeman MK, Lozano R, Lopez AD. Population Health Metrics Research Consortium (PHMRC). Simplified symptom pattern method for verbal autopsy analysis: multisite validation study using clinical diagnostic gold standards. Popul Health Metr. (2011) 9:30. doi: 10.1186/1478-7954-9-30

17. McCormick TH, Li ZR, Calvert C, Crampin AC, Kahn K, Clark SJ. Probabilistic cause-of-death assignment using verbal autopsies. J Am Stat Assoc. (2016) 111:1036–49. doi: 10.1080/01621459.2016.1152191

18. Chen L, Xia T, Yuan Z-A, Rampatige R, Chen J, Li H, et al. Are cause of death data for Shanghai fit for purpose? A retrospective study of medical records. BMJ Open. (2022) 12:e046185. doi: 10.1136/bmjopen-2020-046185

19. Hernandez B, Ramirez-Villalobos D, Romero M, Gomez S, Atkinson C, Lozano R. Assessing quality of medical death certification: Concordance between gold standard diagnosis and underlying cause of death in selected Mexican hospitals. Popul Health Metr. (2011) 9:38. doi: 10.1186/1478-7954-9-38

20. Flaxman AD, Serina PT, Hernandez B, Murray CJ, Riley I, Lopez AD. Measuring causes of death in populations: a new metric that corrects cause-specific mortality fractions for chance. Popul Health Metr. (2015) 13:28. doi: 10.1186/s12963-015-0061-1

21. Breiding MJ, Wiersema B. Variability of undetermined manner of death classification in the US. Inj Prev. (2006) 12(Suppl 2):ii49–54. doi: 10.1136/ip.2006.012591

22. Fanaroff AC, Clare R, Pieper KS, Mahaffey KW, Melloni C, Green JB, et al. Frequency, regional variation, and predictors of undetermined cause of death in cardiometabolic clinical trials: a pooled analysis of 9259 deaths in 9 trials. Circulation. (2019) 139:863–73. doi: 10.1161/CIRCULATIONAHA.118.037202

23. Advenier AS, Guillard N, Alvarez JC, Martrille L, Lorin de la Grandmaison G. Undetermined manner of death: an autopsy series. J Forensic Sci. (2016) 61:S154–8. doi: 10.1111/1556-4029.12924

24. Baumert JJ, Erazo N, Ladwig KH. Sex- and age-specific trends in mortality from suicide and undetermined death in Germany 1991-2002. BMC Public Health. (2005) 5:61. doi: 10.1186/1471-2458-5-61

25. Yang G, Rao C, Ma J, Wang L, Wan X, Dubrovsky G, et al. Validation of verbal autopsy procedures for adult deaths in China. Int J Epidemiol. (2006) 35:741–8. doi: 10.1093/ije/dyi181

26. Flaxman AD, Joseph JC, Murray CJL, Riley ID, Lopez AD. Performance of InSilicoVA for assigning causes of death to verbal autopsies: multisite validation study using clinical diagnostic gold standards. BMC Med. (2018) 16:56. doi: 10.1186/s12916-018-1039-1

27. Lozano R, Freeman MK, James SL, Campbell B, Lopez AD, Flaxman AD, et al. Performance of InterVA for assigning causes of death to verbal autopsies: multisite validation study using clinical diagnostic gold standards. Popul Health Metr. (2011) 9:50. doi: 10.1186/1478-7954-9-50

28. Byass P, Hussain-Alkhateeb L, D'Ambruoso L, Clark S, Davies J, Fottrell E, et al. An integrated approach to processing WHO-2016 verbal autopsy data: the InterVA-5 model. BMC Med. (2019) 17:102. doi: 10.1186/s12916-019-1333-6

29. Joshi R, Hazard RH, Mahesh PKB, Mikkelsen L, Avelino F, Sarmiento C, et al. Improving cause of death certification in the Philippines: implementation of an electronic verbal autopsy decision support tool (SmartVA auto-analyse) to aid physician diagnoses of out-of-facility deaths. BMC Public Health. (2021) 21:563. doi: 10.1186/s12889-021-10542-0

30. Thomas LM, D'Ambruoso L, Balabanova D. Use of verbal autopsy and social autopsy in humanitarian crises [published correction appears in BMJ Glob Health. 2018 Jun 9;3:e000640corr1]. BMJ Glob Health. (2018) 3:e000640. doi: 10.1136/bmjgh-2017-000640

31. The University of Melbourne. Guidelines For Interpreting Verbal Autopsy Data. Melbourne, VIC: Bloomberg Philanthropies Data for Health Initiative, Civil Registration and Vital Statistics Improvement, the University of Melbourne (2019). Available online at: https://crvsgateway_info.myudo.net/file/11243/3231 (accessed May 25, 2022).

32. Sankoh O, Byass P. Time for civil registration with verbal autopsy. Lancet Glob Health. (2014) 2:e693–4. doi: 10.1016/S2214-109X(14)70340-7

33. Shawon MTH, Ashrafi SAA, Azad AK, Firth SM, Chowdhury H, Mswia RG, et al. Routine mortality surveillance to identify the cause of death pattern for out-of-hospital adult (aged 12+ years) deaths in Bangladesh: introduction of automated verbal autopsy. BMC Public Health. (2021) 21:491. doi: 10.1186/s12889-021-10468-7

Keywords: Smart Verbal Autopsy, cause of death, CRVS system, demography, epidemiology

Citation: Chen L, Xia T, Rampatige R, Li H, Adair T, Joshi R, Gu Z, Yu H, Fang B, McLaughlin D, Lopez AD, Wang C and Yuan Z (2022) Assessing the Diagnostic Accuracy of Physicians for Home Death Certification in Shanghai: Application of SmartVA. Front. Public Health 10:842880. doi: 10.3389/fpubh.2022.842880

Received: 24 December 2021; Accepted: 03 May 2022;

Published: 17 June 2022.

Edited by:

Oyelola A. Adegboye, James Cook University, Australia

Reviewed by:

Shubash Shander Ganapathy, Ministry of Health, Malaysia

Rajesh Kumar, Health Systems Transformation Platform, India

Copyright © 2022 Chen, Xia, Rampatige, Li, Adair, Joshi, Gu, Yu, Fang, McLaughlin, Lopez, Wang and Yuan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zheng'an Yuan, yuanzhengan@scdc.sh.cn

†These authors have contributed equally to this work and share first authorship

‡These authors have contributed equally to this work and share senior authorship
