- 1School of Health and Exercise Sciences, University of British Columbia, Kelowna, BC, Canada

- 2Department of Recreational and Leisure Studies, Brock University, St. Catharines, ON, Canada

Background: Training programs must be evaluated to understand whether the training was successful at enabling staff to implement a program with fidelity. This is especially important when the training has been translated to a new context. The aim of this community case study was to evaluate the effectiveness of the in-person Small Steps for Big Changes training for fitness facility staff using the 4-level Kirkpatrick training evaluation model.

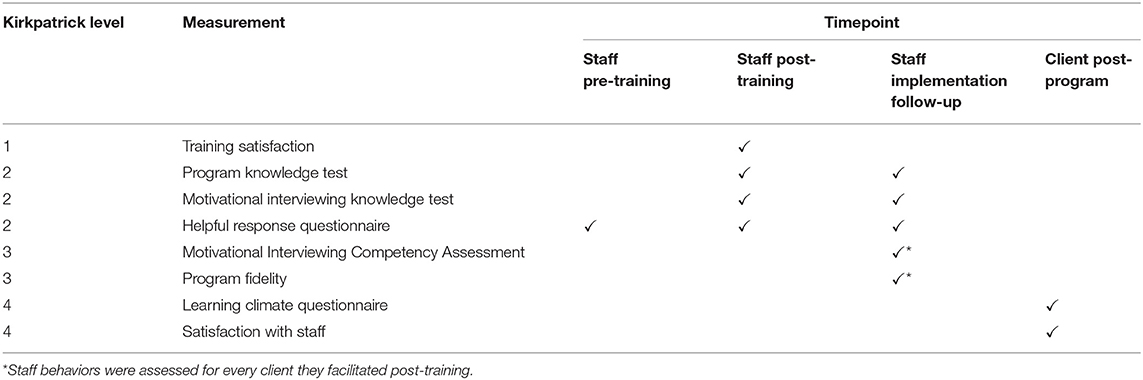

Methods: Eight staff were trained to deliver the motivational interviewing-informed Small Steps for Big Changes program for individuals at risk of developing type 2 diabetes. Between August 2019 and March 2020, 32 clients enrolled in the program and were allocated to one of the eight staff. The Kirkpatrick 4-level training evaluation model was used to guide this research. Level one assessed staff satisfaction with the training on a 5-point scale. Level two assessed staff program knowledge and motivational interviewing knowledge/skills. Level three assessed staff behaviors by examining their use of motivational interviewing with each client. Level four assessed training outcomes using clients' perceived satisfaction with their staff and basic psychological needs support, both on 7-point scales.

Results: Staff were satisfied with the training (M = 4.43; SD = 0.45; range = 3.86–4.71). All learning measures demonstrated high post-training scores that were retained at implementation follow-up. Staff used motivational interviewing skills in practice and delivered the program at a client-centered level (≥6; M = 6.34; SD = 0.83; range = 3.75–7.80). Overall, clients perceived staff supported their basic psychological needs (M = 6.55; SD = 0.64; range = 6.17–6.72) and reported high staff satisfaction scores (M = 6.88; SD = 0.33; range = 6–7).

Conclusion: The Small Steps for Big Changes training was successful and fitness facility staff delivered a motivational interviewing-informed program. While not all staff operated at a client-centered level, clients perceived their basic psychological needs to be supported. Findings support the training for future scale-up sites. Community fitness staff represent a feasible resource through which to run evidence-based counseling programs.

Introduction

The prevalence of diabetes continues to rise, with 1 in 3 Canadians affected (1). Prediabetes acts as an early warning sign for type 2 diabetes (T2D), when individuals have blood glucose levels that are higher than normal, but not high enough to be diagnosed as T2D. This window of opportunity is important as individuals with prediabetes have up to 70% increased risk of developing T2D in the future (2). Large clinical trials have demonstrated that diet and exercise modifications are effective at reducing T2D risk by up to 58% in individuals with prediabetes (3), with risk-reducing effects lasting long after intervention end (4). Thus, a focus on reducing risks of developing T2D is critical to address this public health priority.

There have been multiple translations of T2D prevention programs into real-world settings with demonstrated effectiveness (5, 6). Many countries have developed large-scale translational studies [see (7) for an overview]. Despite the effectiveness of diabetes prevention programs in community settings (8), there remains a paucity of available programs in Canada. To reach and positively impact Canadians living at risk of T2D, effective programs need to be implemented in community settings and scaled-up across the country.

Several translational studies have been modeled on the United States Diabetes Prevention Program, adopting a group-based format. While group-based approaches demonstrate one method to reduce overall program costs (9), use of community members represents another. A recent systematic review indicated that overall, diabetes prevention programs are cost-effective in any setting, but the review contained no studies examining an individual format with community members (9). A systematic review assessing real-world impact of global diabetes prevention interventions identified 63 studies, of which only three adopted an in-person, individual approach with a health care provider (6). No studies adopted an in-person, individual approach led by a community member. More research on programs for reducing T2D risk using an individualized approach with community members, such as fitness facility staff, is needed.

Training is a core component of implementation with implications on program fidelity and subsequent effectiveness (10). Understanding the effectiveness of a training program, especially for fitness facility staff without experience in counseling and diabetes prevention, is integral to the development of scalable, cost-effective diabetes prevention programs. Assessing training is one of the five domains of the Behavioral Change Consortium's best practice guidelines for assessing fidelity in health behavior change trials (11). A training program must sufficiently teach the required knowledge and skills for attendees to be successful in implementing the program.

Motivational interviewing (MI) has been used in numerous health-related programs to help individuals change various health-related behaviors, including the management of diabetes and obesity (12, 13). When evaluated, MI training has been shown to be effective among health care practitioners (14). Evaluated training programs typically include a 1.5–3-day workshop with individual MI skill practice, role-play scenarios, and post-training support (14). To our knowledge, no study to date has examined MI training for fitness facility staff, and few researchers have examined MI training for non-health care practitioners. Of the limited research, one study examined teaching community health agents to lead a T2D self-management program using an MI-informed approach in Brazilian primary care centers (15). The researchers examined MI fidelity and most community health agents performed at medium-to-high levels. However, MI fidelity was assessed using researcher-completed MI fidelity checklists, a less reliable method compared to audio or video recording sessions (16). The current study builds on previous work by examining the effectiveness of training fitness facility staff (hereafter referred to as staff) to deliver an MI-informed program using independently coded audio recordings to examine MI fidelity.

Recently, Phillips and Guarnaccia (17) recommended researchers who study MI could benefit from measuring self-determination theory (18) variables, such as basic psychological needs. Self-determination theory has been identified as a valuable theory to understand the mechanisms behind MI (19). There are three basic psychological needs: autonomy (i.e., client-centered approach, elicit client ideas), competence (i.e., confidence in the capacity to change), and relatedness (i.e., understand client perspective and non-authoritarian position) which overlap with core MI components (19). The learning climate questionnaire (20, 21) is a self-determination theory measure used to examine whether clients felt their basic psychological needs were met through program participation. To our knowledge, this is the first study to assess the learning climate questionnaire as an indicator of client perceptions of staff MI skills.

Background and Rationale

The Small Steps for Big Changes (SSBC) program uses an individual approach to deliver behavior change techniques coupled with MI techniques to bolster clients' self-regulatory skills in an autonomy-supportive manner (22). The program's main behavior change techniques include goals and planning, feedback and monitoring, and repetition and substitution in relation to diet and exercise topics. The free program is housed in a community not-for-profit organization, the YMCA of Okanagan, and consists of six sessions over 3 weeks [for full program overview see (22)]. Prior to this current translation project, SSBC was running as a research project in a community site with research staff implementing the program. In the first 2 years, the program had 213 participants enrolled with a 95% completion rate (23). Clients demonstrated improved self-reported physical activity and dietary behaviors, improved distance on a 6-minute walk test, and reduced weight and waist circumference (23). To accelerate the benefit of this program reaching the 6 million Canadians at risk for developing T2D (24), translation to the community is necessary.

Translating the program to be run by a community organization presents an ideal opportunity to supplement gaps in primary healthcare and provide a cost-efficient program using YMCA staff. Hosting the program in a community organization has numerous benefits including increasing reach to those underserved by the healthcare system and enabling clients to have ongoing access to facilities (including their staff) post-program. The YMCA of Okanagan was actively looking for a program to adopt and inquired whether SSBC could be implemented within their facility (25). YMCA staff are well-suited to deliver such a program with their demonstrated interest in the health and fitness industry and expertise in physical activity (Dineen et al., unpublished). Through their established community presence, dedication to supporting healthy communities and availability of assisted membership post-program, the YMCA is an ideal partner to increase reach, accessibility, and long-term support. As part of a national organization, there are opportunities for scale-up across Canada. However, it is unknown if YMCA staff can effectively learn the program's counseling strategy (MI), diabetes prevention concepts and implement the program with fidelity. This current case study was needed to examine the effectiveness of training YMCA staff to implement a MI-informed diabetes prevention program.

As noted, SSBC centers on the delivery of diabetes prevention topics using MI skills and specific behavior change techniques (e.g., goal setting) to support clients' basic psychological needs and program success. The goal of the training was to teach staff core program components and skills necessary to be successful in facilitating the program to clients. The Kirkpatrick Evaluation Model (26) is a frequently used model to evaluate training programs. The model consists of four levels: (a) reaction, (b) learning, (c) behavior, and (d) results. The first level measures reactions to the training received. The second level measures the knowledge and skills learned. The third level measures whether the knowledge and skills acquired from the training are used in practice. The fourth level measures the effect from the use of the skills/information in practice. Despite the popularity of Kirkpatrick's evaluation model, all four levels are rarely assessed—with levels one and two being most reported [e.g., (27)]. Levels three and four are more challenging to measure as they require post-training follow-up to examine both the application of training skills in practice and the effect of those skills that were used. The current study delivers a comprehensive training evaluation using all four levels of the model. The purpose of this case was to evaluate the effectiveness of the in-person SSBC training using the Kirkpatrick 4-level training evaluation model. In line with this model, four research questions guided the case: (a) How satisfied are staff with the training?, (b) To what extent do staff learn the material taught in the training?, (c) To what extent do staff enact what they learned in practice?, (d) To what extent are clients satisfied with their staff and feel their basic psychological needs are supported?

Case Description

A 1-year collaborative planning process with the YMCA of Okanagan was undertaken to support uptake and sustainability of the program (25). Two local YMCA sites were selected to implement the SSBC program and staff were invited to engage in the 1-year planning process. Following this planning process, interested staff volunteered to attend a 3-day (17-h) training workshop that covered MI principles, program content, and standard operating procedures. Two master trainers from the SSBC team (TD and KC) facilitated the training. The training workshop was previously developed and tested by the SSBC study team and refined prior to the current case (28, 29).

The workshop included didactic teaching of intervention content (e.g., effect of physical activity on diabetes prevention), a variety of role-play activities to practice use of skills (e.g., MI skills), demonstration of skills (e.g., exercise protocol), videos of session content delivered using MI skills, and how-to videos for common program procedures (e.g., how to review client's app-based diet and exercise tracking). All staff were given an implementation manual, including standard operating procedures, sample session scripts and checklists for the six sessions, and had ongoing access to the training videos through an online platform. All staff were shadowed by a research team member for their first client and given feedback after each session. Finally, ongoing monthly meetings with staff from each site were led by the first author to provide study updates, support, and review successes, challenges, and lessons learned.

Assessing training is an important fidelity assessment to gain confidence that staff are adequately trained to implement a program with high fidelity. This is a crucial step for effective program implementation, especially prior to scaling-up an intervention. Results will support refining a standardized training program for a community behavior change program for individuals at risk of T2D and support future scale-up of the training to other YMCAs.

Methods

Participants

Staff and Clients

All staff and clients provided written informed consent prior to participating. Staff who facilitated a minimum of three clients through the program were included in the present study (n = 8). Staff were, on average, 31.88 ± 9.05 years of age (range 24–51), predominantly self-identified as female (75%), and white (88%). There was a wide range in years of employment (50% had worked for 10+ years, 38% for 1–5 years, and 13% for <1 year) and education (75% had a university degree, 25% had obtained a college diploma) in staff. The eight staff facilitated clients (n = 32) through the program between August 2019 and March 2020. Clients were on average 59.56 ± 6.88 years of age (range 43–70), and predominantly self-identified as female (68%) and white (95%).

Data Collection

Staff completed a pre-training, post-training, and implementation follow-up survey measuring various Kirkpatrick levels further described in the following sections. The pre- and post-training survey links were emailed to staff immediately before and after the 3-day workshop. The implementation follow-up survey link was emailed to staff after facilitating three participants through the program (M = 5 months post-training). Clients were emailed a pre-program survey link after their baseline appointment and a post-program survey link after completion of the program. All surveys were hosted on Qualtrics. The following sections describe how each level was measured and with what measure (see Table 1 for the measurement time-point table).

Level 1: Reaction

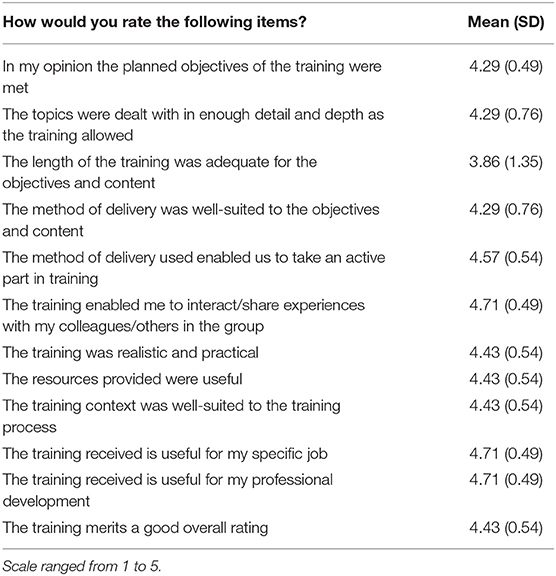

A post-training satisfaction survey was used to assess staff reaction to the training. A 12-item training satisfaction measure with demonstrated reliability was used [α = 0.88; (30)] whereby staff were asked to rate their agreement on a 5-point scale (totally disagree to totally agree; e.g., “In your opinion, the planned objectives were met”). Overall scale means were used in the analyses and demonstrated strong internal consistency (α = 0.89).

Level 2: Learning

To assess staff learning of program content and MI knowledge, two measures were administered post-training and at implementation follow-up. First, staff knowledge of program content and standard operating procedures was assessed using a 39-item measure developed for this study. The measure was developed by the first author and pilot tested among the study team for readability, relevance, and suitability, resulting in minor modifications to questions to increase question comprehension (e.g., “How much sugar is recommended for women daily?”). Second, staff MI knowledge was assessed using a modified MI knowledge test (31). The original measure was modified from 23 to 17 items to reflect the MI content taught in the SSBC training. Responses to both knowledge measures were scored for each staff.

A third measure was used to assess staff knowledge of MI skills. The helpful response questionnaire (HRQ) is a brief measure to assess MI skills (32) and was administered to staff at pre-training, post-training and at implementation follow-up. Staff were asked to document how they would respond to six hypothetical patient statements. Staff were presented with the pre- and post-HRQ in person at the training workshop. The implementation follow-up HRQ was completed as part of the implementation follow-up online survey. An adapted scoring method was used for this study to reflect the level of MI taught and the skills in which staff were expected to be proficient. One point was given for a response with the presence of an MI communication skill (open-ended question, affirmation, and/or reflection) and zero points were given for an MI non-adherent response. A final score was calculated out of six. Two coders (TD and KC) scored all responses. Any disagreements were discussed by both coders.
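
The adapted HRQ scoring rule described above can be illustrated with a minimal sketch. The label names (`open_question`, `affirmation`, `reflection`, and the non-adherent examples) and the function `score_hrq` are hypothetical and are not part of the study materials. The sketch also assumes that a response containing at least one of the three MI communication skills earns the point, which is one reading of the rule; the study's coders may have handled mixed responses differently.

```python
# Hypothetical labels a coder might assign to one written HRQ response.
MI_SKILLS = {"open_question", "affirmation", "reflection"}


def score_hrq(response_codes):
    """Return an HRQ score out of six.

    response_codes: a list of six collections of labels, one per
    hypothetical patient statement. A response earns one point if it
    contains at least one MI communication skill (assumption), and
    zero points otherwise.
    """
    if len(response_codes) != 6:
        raise ValueError("The HRQ has six patient statements.")
    return sum(1 for codes in response_codes if MI_SKILLS & set(codes))


# Illustrative (made-up) coding for one staff member.
example = [
    {"reflection"},
    {"advice"},                       # MI non-adherent
    {"open_question", "affirmation"},
    set(),                            # no MI skill present
    {"closed_question"},              # MI non-adherent
    {"affirmation"},
]
print(score_hrq(example))
```

In this made-up example, three of the six responses contain an MI communication skill, so the score is 3 out of 6.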

Level 3: Behavior

To assess if staff used their program knowledge and skills in practice, an in-depth fidelity assessment was conducted and has been reported elsewhere [see (33)]. The study assessed whether staff implemented the program as intended by examining staff self-reported fidelity to session-specific checklists; two independent coders cross-checked a sub-sample of audio-recorded sessions to ensure accuracy of staff self-report. In addition, goal setting fidelity (a key program component that relates to the goal setting and action planning behavior change techniques) was examined.

In this current study, staff use of MI-specific skills and strategies was assessed using the MI Competency Assessment (MICA) (34). The MICA has been used in past research to assess use of MI during a session and has demonstrated reliability and validity (35). Two coders (KC and MM) listened to 20 minutes of one randomly selected program session for each client (n = 32). Coders rated the staff's use of five MI intentions (supporting autonomy and activation, guiding, expressing empathy, partnering, and evoking) and two MI strategies (strategically responding to change talk and strategically responding to sustain talk) on a 5-point scale from 1 (inconsistent with MI) to 5 (proficient with MI) for each audio recording. Items could be given a half-point. The five MI intentions aim to capture the overall spirit of MI. The coders also counted the frequency of two MI micro-skills (open-ended questions and reflections) within each coded session. A random 25% of sessions were double-coded. Based on the MICA double-coding standards (34), the coders discussed any disagreements and came to consensus.

Level 4: Training Outcomes

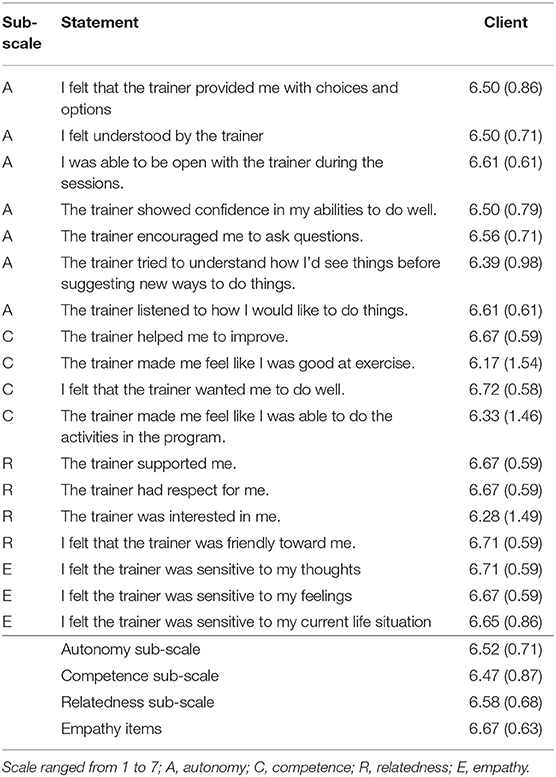

To assess training outcomes, two client measures were examined post-program that reflected staff program delivery. The first measure was the 15-item learning climate questionnaire (20, 21), which examined whether clients felt their basic psychological needs were met through program participation. The learning climate questionnaire has been used in various learning settings, such as within university and physical education contexts (20, 36). Three additional items were included to examine empathy, a key aspect of MI, including one item for each of the three elements of empathy: ability to understand another's thoughts, feelings, and condition (e.g., “I felt the trainer was sensitive to my thoughts”). Clients were asked post-program to respond on a 7-point Likert scale (1 = strongly disagree; 7 = strongly agree). The learning climate questionnaire sub-scales (autonomy, competence, relatedness) demonstrated good internal consistency (α = 0.74–0.97) in addition to the empathy sub-scale (α = 0.91).

Finally, clients were asked to rate their overall satisfaction with the YMCA staff who facilitated them through the program. One item from a 10-item measure of program quality (program and structures), developed for this study, was reported. The item measured overall staff satisfaction: “Please rate your satisfaction with your trainer?”. Staff satisfaction was chosen as a training outcome to represent whether clients felt their staff had delivered program components in a satisfactory manner. Clients were asked post-program to rate their satisfaction on a 7-point Likert scale from extremely dissatisfied to extremely satisfied. The entire 10-item program quality measure is available for reference in Supplementary File A.

Data Analysis

A mean score was calculated for each measure and sub-scale. A paired-samples t-test was run to test for sustained effects on the program and MI knowledge measures, and the HRQ. As recommended, multiple agreement statistics were calculated for the HRQ including percent agreement, Cohen's kappa (K), and the prevalence- and bias-adjusted kappa (PABAK) statistic (37, 38). For MICA coding, the five MI intentions were averaged and added to the average of the two MI strategies to have one overall score to represent each staff's use of the spirit of MI. Intraclass correlation coefficients (ICC) were used to measure consistency in MICA coding across both coders (39). All MICA sub-scales and the question to reflection ratio were averaged for each staff and are presented in Supplementary File B. All measures demonstrated normality with the Shapiro-Wilk test and no outliers were identified based on Tabachnick and Fidell's (40) criteria.
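
The overall MICA score described above (mean of the five MI intentions plus mean of the two MI strategies) can be sketched as follows. This is an illustration only: the function names and the example ratings are hypothetical, and the threshold of 6 is taken from the client-centered cut-off (≥6) reported in this paper. Because each component is rated from 1 to 5, the overall score ranges from 2 to 10.

```python
from statistics import mean

# Client-centered cut-off as reported in the text (>= 6).
CLIENT_CENTERED_THRESHOLD = 6.0


def _check_rating(rating):
    """MICA items are rated 1-5, and half-points are allowed."""
    if not (1 <= rating <= 5) or (rating * 2) != int(rating * 2):
        raise ValueError(f"Invalid MICA rating: {rating}")


def mica_overall(intentions, strategies):
    """Mean of the five MI intentions plus mean of the two MI strategies."""
    if len(intentions) != 5 or len(strategies) != 2:
        raise ValueError("Expected five intentions and two strategies.")
    for rating in list(intentions) + list(strategies):
        _check_rating(rating)
    return mean(intentions) + mean(strategies)


def is_client_centered(score):
    """True when the overall score meets the reported cut-off."""
    return score >= CLIENT_CENTERED_THRESHOLD


# Made-up ratings for a single coded session.
intentions = [3.5, 3.0, 3.5, 3.0, 3.0]  # mean 3.2
strategies = [3.0, 3.5]                 # mean 3.25
score = mica_overall(intentions, strategies)
print(round(score, 2), is_client_centered(score))
```

For these made-up ratings the overall score is about 6.45, which would fall at a client-centered level under the reported cut-off.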

Results

Overall, missing data for staff were low. One staff did not complete the post-training survey and one staff did not complete the implementation follow-up HRQ. Overall, post-program survey completion was moderate with 18 clients completing the learning climate questionnaire survey (56% response rate) and 17 clients completing the program quality survey (53% response rate).

Level 1: Reaction

Table 2 presents the mean and standard deviation for staff satisfaction with the 3-day training. Overall, staff were satisfied with the training (M = 4.43, SD = 0.45). Staff agreed or totally agreed with 95% of all statements. For two statements, one staff responded neutrally: “the topics were dealt with in enough detail and depth as the training allowed” and “the method of delivery was well-suited to the objectives and content.” Two staff disagreed with the item, “the length of the training was adequate for the objectives and content.”

Level 2: Learning

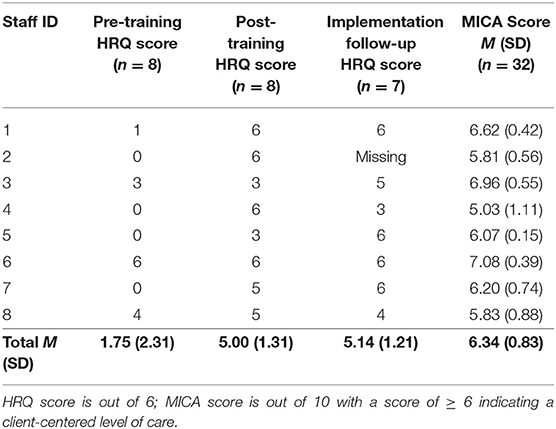

Similar trends occurred for all three learning measures; all demonstrated high post-training scores that were retained at implementation follow-up. A paired-samples t-test [t(6) = −0.13, p = 0.90] demonstrated staff's program knowledge test scores did not significantly differ between post-training (M = 18.74, SD = 2.20) and implementation follow-up (M = 18.84, SD = 1.40). Similarly, a paired-samples t-test [t(6) = −1.00, p = 0.36] demonstrated staff MI knowledge test scores did not significantly differ between post-training (M = 13.17, SD = 1.33) and implementation follow-up (M = 14.00, SD = 1.41). Finally, a paired-samples t-test [t(7) = −4.04, p = 0.01] demonstrated staff HRQ scores significantly improved from pre- to post-training and there were no differences [t(6) = −0.18, p = 0.86] between post-training and implementation follow-up (see Table 3 for individual staff HRQ scores). Overall, coders had high agreement on all consensus markers for HRQ coding (84.78% agreement, K = 0.64; PABAK = 0.70).
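
The three agreement statistics reported for HRQ coding can be related to one another with a short sketch. The function names and the two coder lists below are hypothetical and are not the study data. For two coding categories, PABAK reduces to 2 × (observed agreement) − 1, so the reported 84.78% agreement corresponds to a PABAK of about 0.70, consistent with the value reported above.

```python
def percent_agreement(coder_a, coder_b):
    """Proportion of items on which the two coders gave the same code."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Coders must rate the same non-empty set of items.")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa: agreement corrected for chance agreement."""
    n = len(coder_a)
    p_o = percent_agreement(coder_a, coder_b)
    categories = set(coder_a) | set(coder_b)
    # Chance agreement from each coder's marginal proportions.
    p_e = sum((coder_a.count(c) / n) * (coder_b.count(c) / n)
              for c in categories)
    if p_e == 1:
        return 1.0  # both coders used a single identical category
    return (p_o - p_e) / (1 - p_e)


def pabak(p_o, n_categories=2):
    """Prevalence- and bias-adjusted kappa from observed agreement."""
    return (n_categories * p_o - 1) / (n_categories - 1)


# Made-up binary HRQ codes (1 = MI skill present, 0 = non-adherent).
coder_a = [1, 1, 1, 0, 1, 0, 1, 1]
coder_b = [1, 1, 0, 0, 1, 1, 1, 1]

p_o = percent_agreement(coder_a, coder_b)
print(p_o, round(cohens_kappa(coder_a, coder_b), 2), pabak(p_o))

# Check against the agreement reported in the text (84.78%).
print(round(pabak(0.8478), 2))
```

In the made-up example, kappa is lower than PABAK because most codes fall in one category; the same pattern appears in the reported values (K = 0.64 versus PABAK = 0.70).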

Table 3. Helpful response questionnaire (HRQ) and motivational interviewing competency assessment (MICA) scores per staff.

Level 3: Behavior

On average staff delivered the program at a client-centered level (M = 6.34, SD = 0.83; Range: 3.75–7.80). Based on staff availability, staff facilitated between 3 and 6 clients during the study timeframe. When assessed at the individual staff level, 5 staff operated above a client-centered level and 3 were below (see Table 3 for individual staff MICA scores). Overall, coders demonstrated excellent consistency with an ICC of 0.93.

Level 4: Training Outcomes

Table 4 presents descriptive statistics for client responses to the learning climate questionnaire. Overall, clients perceived staff supported their basic psychological needs (M = 6.55, SD = 0.64). The means for all learning climate questionnaire sub-scales and the added empathy items were similarly high (autonomy: 6.52, competence: 6.47, relatedness: 6.58, empathy: 6.67). Overall, clients reported high staff satisfaction scores (M = 6.88, SD = 0.33).

Table 4. The mean and standard deviation for client responses to the learning climate questionnaire.

Discussion

Training evaluations are an important component of assessing program fidelity and a vital implementation strategy during program translation and scale-up. Assessing training is a necessary factor to understand if a program was delivered as planned to have confidence in program outcomes (16). As a program is translated from being delivered by research staff to community members, researchers must examine whether the training is effective in the new context. Thus, the present study examined the SSBC training for YMCA fitness facility staff and had positive results. This evaluation was guided by the Kirkpatrick Evaluation Model (26), a model commonly used in training evaluations. Study strengths include evaluating all four levels of Kirkpatrick's model and using multiple perspectives. The training was effective for staff and demonstrated community members are a feasible resource to implement evidence-based counseling programs.

Past diabetes prevention programs have used MI [e.g., (41, 42)], including the original United States Diabetes Prevention Program (3). However, MI training and subsequent fidelity have not been explicitly evaluated in these programs. Rather, training effectiveness was determined using client outcomes as a proxy. In doing so, it is impossible to determine if staff were using MI skills in practice. One related study that targeted diet, exercise, or smoking behaviors among individuals at risk for diabetes and/or cardiovascular disease did include an MI evaluation (43). Practice nurses underwent 12 h of MI training, received a treatment manual and on-the-job coaching halfway through the intervention. Overall, nurses' skills in practice were low, despite their self-reported confidence. The researchers hypothesized the training was not long enough to improve counseling techniques and suggested the shift in practice (i.e., it is common for nurses to give advice and explain things to patients, which is avoided when using MI) may require more time to implement. While the above examples took place in healthcare contexts, there remains a lack of research examining the use of MI in non-healthcare related fields. Prior research has demonstrated that the YMCA is a promising organization to disseminate a group-based diabetes prevention program achieving positive program outcomes (44). The current study extends this notion by demonstrating YMCA staff can learn MI during a 3-day (17-h) in-person training workshop and implement MI within their program sessions. When examining MI specific skills, staff HRQ scores increased from pre- to post-training and staff maintained their skills at the implementation follow-up timepoint, which is in line with previous MI training evaluations (14). Fitness facilities may be a promising community-based venue to deliver an MI-informed brief-counseling diabetes prevention program.

Although the fidelity of MI skills has not been examined in diabetes prevention programs, it has been examined in other fields. For pragmatic reasons, staff did not complete a pre-training audio recording of a counseling session. Therefore, comparisons of staff MICA scores from pre- to post-training were not done, as has been done in past training evaluations (4). Rather, the MICA tool was used to assess if staff reached the client-centered level of care, a less reported and valuable statistic. Overall, staff used MI skills in practice with an average MICA score of 6.3, indicating a client-centered level of care (≥6). When examining individual staff scores, some staff achieved higher MICA scores than others. Overall, five staff were operating above a client-centered level of care and three staff were operating close to, but below, a client-centered level of care. These results are similar to a sample of community health agents from Brazil who underwent MI training. Overall, 13 community health agents were categorized as medium-or-high performance and 3 were categorized as low performance when MI fidelity was assessed using a researcher-completed checklist of 10 MI techniques (15). It is important to recognize that some staff may learn and apply MI more quickly than others. Thus, more research is needed on how to move individuals operating at a level below client-centered care to a level at or above client-centered care, or how to effectively screen for competency prior to training.

Self-determination theory has been suggested as a potential conceptual framework for explaining how and why MI works (17, 19). While the learning climate questionnaire was developed for assessing the basic psychological needs (autonomy, competence, and relatedness), it has been argued that MI is a complementary approach that also aims to support closely related constructs (20, 31, 44). Therefore, in response to a recommendation in the literature (17), the learning climate questionnaire was used as a marker of MI use. To our knowledge, this is the first study to use the learning climate questionnaire to assess basic psychological needs in an MI study. Results demonstrated that clients felt supported by their staff on all three basic psychological need sub-scales and on the added empathy measure. Although some staff may not have reached a client-centered level of care on their MICA coded sessions, the staff were able to foster a positive learning environment throughout the program that was perceived by clients as empathetic and supporting clients' autonomy, competence, and relatedness. Future research should consider similar analyses to assess MI use.

Limitations

This research was affected by COVID-19 (YMCA and associated programming were forced to close due to the pandemic), is limited by a small sample size and results may lack generalizability. A pre-training knowledge test was not administered as all staff were naïve to working with individuals at risk for developing T2D and the program's standard operating procedures prior to the workshop. Similarly, there was no pre-training audio recorded session to capture staff pre-training MICA scores. These decisions were made for pragmatic reasons. In evaluation research, data collection methods (e.g., knowledge test) can act as part of the training to increase the likelihood that staff demonstrate a minimum level of knowledge gained (45). In doing so, after the evaluation is over, the data collection tools used remain a component of the training, increasing feasibility (i.e., reduce staff burden) and supporting future scale-up. All staff were naïve to MI prior to the training except one who completed a university level MI course. No staff had previous counseling experience. The HRQ gauged pre-training MI levels and indicated all staff had low levels of MI skills prior to the training, except the one staff with prior MI knowledge. In the current training, all staff had a research team member shadow all sessions with their first client and provide feedback post-session. As a conservative measure, these clients were included in the MICA scoring. In general, MICA scores for the shadowed clients were consistent with future client scores. While not all staff reached a client-centered level, clients perceived staff to support their basic psychological needs. The current study was pragmatic in nature and aimed to examine the training effectiveness. Therefore, all staff continued regardless of MI quality. On completion of the program, clients were sent a post-program survey through an email from the research team and had a moderate response rate (~50%). Future research should consider completing surveys during the final appointment or offer an incentive to increase response rates.

Lessons Learned

Staff praised the shadowing experience for providing comfort during their first experience implementing the program and an opportunity to receive direct feedback on their program delivery. However, shadowing was difficult to schedule and is not feasible for scale-up. Similarly, finding time for all staff to attend a 3-day in-person training workshop was challenging due to conflicting staff work schedules. Translating the training into a self-paced digital format may better support scale-up and sustainability of the training for broad scale-out. Future research should examine novel ways to mimic the reverse shadow experience to adequately prepare staff, such as incorporating a mock session.

Conducting a training evaluation was invaluable for learning how the training was received and implemented by YMCA staff, and how clients were affected by their staff's execution of the program. The current study demonstrates that MI can be taught to fitness facility staff. Previous research has suggested MI was difficult to learn, with those struggling wishing to have more training (42). Indeed, the one item that two staff disagreed with related to training length. Interestingly, current results indicate that staff who complete training at a client-centered level of care generally maintain that level post-training. Markers for staff competency or booster training sessions should be explored to better achieve a client-centered level of care for all staff prior to graduating from the training.

Conclusion

Implementing a program as it was intended to be implemented gives confidence that program outcomes are a result of the program structure and delivery (16). All staff attended a standardized training to learn how to implement the SSBC program effectively and reliably. Results demonstrated staff were satisfied with the training, developed the knowledge and skills to implement the program effectively, and applied the knowledge and skills in practice. In turn, clients felt their basic psychological needs were supported and were satisfied with their staff. While this study does not assess client outcomes, a successful training can positively affect clinical outcomes (46). Although client outcomes from the current study have been affected by the COVID-19 pandemic and are ongoing, evidence of program effectiveness when implemented by research staff is high (23). Current and prior results [i.e., (33)] indicate that staff implemented the program with fidelity, which provides confidence that similar outcomes may result. Overall, this study demonstrated that the SSBC training was effective for fitness facility staff and is suitable to use for program scale-up. Future research should consider community members as a feasible resource to implement community-based counseling programs.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by Conjoint Health Research Ethics Board, University of British Columbia: H16-02028. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

TD, CB, and MJ contributed to conception and design of the study. TD and KC completed the HRQ coding. KC and MM completed the MICA coding. TD organized the database, performed the statistical analysis, and wrote the first draft of the manuscript. All authors contributed to manuscript revision, read, and approved the submitted version.

Funding

This research was funded by a Social Sciences and Humanities Research Council Doctoral Scholarship (#767-2020-2130) and Partnership Engage Grant (#892-2018-3065), the Canadian Institutes of Health Research (#333266), and a Michael Smith Foundation for Health Research Reach Grant (#18120).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpubh.2021.728612/full#supplementary-material

Abbreviations

T2D, Type 2 diabetes; SSBC, Small Steps for Big Changes; MI, Motivational interviewing; MICA, Motivational Interviewing Competency Assessment; HRQ, Helpful response questionnaire; ICC, Intraclass correlation coefficients; PABAK, Prevalence and bias adjusted Kappa.

Footnotes

1. The YMCA waived their rights to anonymity.

References

1. Diabetes Canada. Prediabetes. Canadian Diabetes Association (2021). Available online at: https://www.diabetes.ca/about-diabetes/prediabetes-1 (accessed May 15, 2021).

2. Nathan DM, Davidson MB, DeFronzo RA, Heine RJ, Henry RR, Pratley R, et al. Impaired fasting glucose and impaired glucose tolerance. Diabetes Care. (2007) 30:753–9. doi: 10.2337/dc07-9920

3. Knowler WC, Barrett-Connor E, Fowler SE, Hamman RF, Lachin JM, Walker EA, et al. Reduction in the incidence of type 2 diabetes with lifestyle intervention or metformin. N Engl J Med. (2002) 346:393–403. doi: 10.1056/NEJMoa012512

4. Haw JS, Galaviz KI, Straus AN, Kowalski AJ, Magee MJ, Weber MB, et al. Long-term sustainability of diabetes prevention approaches: a systematic review and meta-analysis of randomized clinical trials. JAMA Intern Med. (2017) 177:1808–17. doi: 10.1001/jamainternmed.2017.6040

5. Ali MK, Echouffo-Tcheugui J, Williamson DF. How effective were lifestyle interventions in real-world settings that were modeled on the Diabetes Prevention Program? Health Aff (Millwood). (2012) 31:67–75. doi: 10.1377/hlthaff.2011.1009

6. Galaviz KI, Weber MB, Straus A, Haw JS, Narayan KMV, Ali MK. Global diabetes prevention interventions: a systematic review and network meta-analysis of the real-world impact on incidence, weight, and glucose. Diabetes Care. (2018) 41:1526–34. doi: 10.2337/dc17-2222

7. Gruss SM, Nhim K, Gregg E, Bell M, Luman E, Albright A. Public health approaches to type 2 diabetes prevention: the US National Diabetes Prevention Program and beyond. Curr Diab Rep. (2019) 19:78. doi: 10.1007/s11892-019-1200-z

8. Bilandzic A, Rosella L. The cost of diabetes in Canada over 10 years: applying attributable health care costs to a diabetes incidence prediction model. Health Promot Chronic Dis Prev Can. (2017) 37:49–53. doi: 10.24095/hpcdp.37.2.03

9. Zhou X, Siegel KR, Ng BP, Jawanda S, Proia KK, Zhang X, et al. Cost-effectiveness of diabetes prevention interventions targeting high-risk individuals and whole populations: a systematic review. Diabetes Care. (2020) 43:1593–616. doi: 10.2337/dci20-0018

10. Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation Research: A Synthesis of the Literature. Tampa, FL: National Implementation Research Network (2005).

11. Borrelli B. The assessment, monitoring, and enhancement of treatment fidelity in public health clinical trials. J Public Health Dent. (2011) 71:S52–63. doi: 10.1111/j.1752-7325.2011.00233.x

12. Lundahl B, Moleni T, Burke BL, Butters R, Tollefson D, Butler C, et al. Motivational interviewing in medical care settings: a systematic review and meta-analysis of randomized controlled trials. Patient Educ Couns. (2013) 93:157–68. doi: 10.1016/j.pec.2013.07.012

13. Christie D, Channon S. The potential for motivational interviewing to improve outcomes in the management of diabetes and obesity in paediatric and adult populations: a clinical review. Diabetes Obes Metab. (2014) 16:381–7. doi: 10.1111/dom.12195

14. Barwick MA, Bennett LM, Johnson SN, McGowan J, Moore JE. Training health and mental health professionals in motivational interviewing: a systematic review. Children Youth Serv Rev. (2012) 34:1786–95. doi: 10.1016/j.childyouth.2012.05.012

15. do Valle Nascimento TM, Resnicow K, Nery M, Brentani A, Kaselitz E, Agrawal P, et al. A pilot study of a community health agent-led type 2 diabetes self-management program using motivational interviewing-based approaches in a public primary care center in São Paulo, Brazil. BMC Health Serv Res. (2017) 17:32. doi: 10.1186/s12913-016-1968-3

16. Bellg AJ, Borrelli B, Resnick B, Hecht J, Minicucci DS, Ory M, et al. Enhancing treatment fidelity in health behavior change studies: best practices and recommendations from the NIH Behavior Change Consortium. Health Psychol. (2004) 23:443–51. doi: 10.1037/0278-6133.23.5.443

17. Phillips AS, Guarnaccia CA. Self-determination theory and motivational interviewing interventions for type 2 diabetes prevention and treatment: a systematic review. J Health Psychol. (2020) 25:44–66. doi: 10.1177/1359105317737606

18. Ryan RM, Deci EL. Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. Am Psychol. (2000) 55:68–78. doi: 10.1037/0003-066X.55.1.68

19. Vansteenkiste M, Sheldon KM. There's nothing more practical than a good theory: integrating motivational interviewing and self-determination theory. Br J Clin Psychol. (2006) 45:63–82. doi: 10.1348/014466505X34192

20. Standage M, Duda JL, Ntoumanis N. A test of self-determination theory in school physical education. Br J Educ Psychol. (2005) 75:411–33. doi: 10.1348/000709904X22359

21. Williams GC, Deci EL. Internalization of biopsychosocial values by medical students: a test of self-determination theory. J Pers Soc Psychol. (1996) 70:767–79. doi: 10.1037/0022-3514.70.4.767

22. MacPherson MM, Dineen TE, Cranston KD, Jung ME. Identifying behaviour change techniques and motivational interviewing techniques in small steps for big changes: a community-based program for adults at risk for type 2 diabetes. Can J Diabetes. (2020) 44:719–26. doi: 10.1016/j.jcjd.2020.09.011

23. Bean C, Dineen T, Locke S, Bouvier B, Jung M. An evaluation of the reach and effectiveness of a diabetes prevention behaviour change program situated in a community site. Can J Diabetes. (2021) 45:360–8. doi: 10.1016/j.jcjd.2020.10.006

24. Diabetes Canada. About Diabetes. Canadian Diabetes Association (2021). Available online at: https://www.diabetes.ca/about-diabetes (accessed May 12, 2021).

25. Bean C, Sewell K, Jung ME. A winning combination: collaborating with stakeholders throughout the process of planning and implementing a type 2 diabetes prevention programme in the community. Health Soc Care Community. (2019) 28:681–9. doi: 10.1111/hsc.12902

26. Kirkpatrick DL, Kirkpatrick JD. Evaluating Training Programmes: The Four Levels. San Francisco, CA: Berrett-Koehler (2006).

27. Campbell K, Taylor V, Douglas S. Effectiveness of online cancer education for nurses and allied health professionals; a systematic review using Kirkpatrick evaluation framework. J Cancer Educ. (2019) 34:339–56. doi: 10.1007/s13187-017-1308-2

28. Dineen TE, Bean C, Ivanova E, Jung M. Evaluating a motivational interviewing training for facilitators of a prediabetes prevention program. J Exerc Mov Sport. (2018) 50:234.

29. Cranston KD, Ivanova E, Davis C, Jung M. How long do motivational interviewing skills last? Evaluation of the sustainability of MI skills in newly trained counsellors in a diabetes prevention program. J Exerc Mov Sport. (2018) 50:229.

30. Holgado-Tello F, Moscoso S, Barbero-García I, Sanduvete-Chaves S. Training satisfaction rating scale: development of a measurement model using polychoric correlations. Eur J Psychol Assess. (2006) 22:268–79. doi: 10.1027/1015-5759.22.4.268

31. Moyers BT, Martin T, Christopher P. Motivational Interviewing Knowledge Test (2005). Available online at: https://casaa.unm.edu/download/ELICIT/MI%20Knowledge%20Test.pdf (accessed March 26, 2019).

32. Miller WR, Hedrick KE, Orlofsky DR. The helpful responses questionnaire: a procedure for measuring therapeutic empathy. J Clin Psychol. (1991) 47:444–8. doi: 10.1002/1097-4679(199105)47:3<444::AID-JCLP2270470320>3.0.CO

33. Dineen TE, Banser T, Bean C, Jung ME. Fitness facility staff demonstrate high fidelity when implementing an evidence-based diabetes prevention program. Transl Behav Med. (2021) 11:1814–22. doi: 10.1093/tbm/ibab039

34. Jackson C, Butterworth S, Hall A, Gilbert J. Motivational Interviewing Competency Assessment (MICA). (2015). Unpublished manual. Available online at: http://www.ifioc.com/wp-content/uploads/2021/02/MICA-Manual_v3.2_Sept_2019_Final.pdf (accessed September 20, 2021).

35. Vossen J, Burduli E, Barbosa-Leiker C. Reliability and Validity Testing of the Motivational Interviewing Competency Assessment (MICA). (2018). Available online at: www.micacoding.com (accessed February 24, 2021).

36. Black AE, Deci EL. The effects of instructors' autonomy support and students' autonomous motivation on learning organic chemistry: a self-determination theory perspective. Sci Educ. (2000) 84:740–56. doi: 10.1002/1098-237X(200011)84:6<740::AID-SCE4>3.0.CO

37. Byrt T, Bishop J, Carlin JB. Bias, prevalence and kappa. J Clin Epidemiol. (1993) 46:423–9. doi: 10.1016/0895-4356(93)90018-V

38. Chen G, Faris P, Hemmelgarn B, Walker RL, Quan H. Measuring agreement of administrative data with chart data using prevalence unadjusted and adjusted kappa. BMC Med Res Methodol. (2009) 9:5. doi: 10.1186/1471-2288-9-5

39. Koch GG. Intraclass correlation coefficient. In: Kotz S, Johnson NL, editors. Encyclopedia of Statistical Sciences. New York, NY: John Wiley & Sons (1982). p. 212–7.

40. Tabachnick BG, Fidell LS. Using Multivariate Statistics. 6th ed. Boston, MA: Pearson Education (2013).

41. Greaves CJ, Middlebrooke A, O'Loughlin L, Holland S, Piper J, Steele A, et al. Motivational interviewing for modifying diabetes risk: a randomised controlled trial. Br J Gen Pract. (2008) 58:535–40. doi: 10.3399/bjgp08X319648

42. Whittemore R, Melkus G, Wagner J, Dziura J, Northrup V, Grey M. Translating the diabetes prevention program to primary care: a pilot study. Nurs Res. (2009) 58:2–12. doi: 10.1097/NNR.0b013e31818fcef3

43. Lakerveld J, Bot S, Chinapaw M, van Tulder M, Kingo L, Nijpels G. Process evaluation of a lifestyle intervention to prevent diabetes and cardiovascular diseases in primary care. Health Promot Pract. (2012) 13:696–706. doi: 10.1177/1524839912437366

44. Ackermann RT, Finch EA, Brizendine E, Zhou H, Marrero DG. Translating the Diabetes Prevention Program into the community. The DEPLOY Pilot Study. Am J Prev Med. (2008) 35:357–63. doi: 10.1016/j.amepre.2008.06.035

Keywords: training evaluation, implementation science (MeSH), health behavior (MeSH), prediabetic state, diet, exercise

Citation: Dineen TE, Bean C, Cranston KD, MacPherson MM and Jung ME (2021) Fitness Facility Staff Can Be Trained to Deliver a Motivational Interviewing-Informed Diabetes Prevention Program. Front. Public Health 9:728612. doi: 10.3389/fpubh.2021.728612

Received: 21 June 2021; Accepted: 26 October 2021; Published: 07 December 2021.

Edited by:

Katherine Henrietta Leith, University of South Carolina, United States

Reviewed by:

Larry Kenith Olsen, Logan University, United States

Paul Cook, University of Colorado Denver, United States

Copyright © 2021 Dineen, Bean, Cranston, MacPherson and Jung. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mary E. Jung, mary.jung@ubc.ca

Tineke E. Dineen, Corliss Bean, Kaela D. Cranston, Megan M. MacPherson, Mary E. Jung