- ¹IMT Nord Europe, Institut Mines-Télécom, Univ. Lille, Centre for Digital Systems, Lille, France

- ²Faculty of Psychology and Educational Sciences, KU Leuven, Kortrijk, Belgium

- ³IMEC Research Group ITEC, KU Leuven, Kortrijk, Belgium

- ⁴ICL, Junia, Université Catholique, LITL, Lille, France

Over the last decade, Higher Education has focused more of its attention on soft skills compared to traditional technical skills. Nevertheless, there are not many studies concerning the relation between the courses followed within an academic program and the development of soft skills. This work presents a practical approach to model the effects of courses on soft skills proficiency. Multiple Membership Ordinal Logistic Regression models are trained with real data from students of the 2021, 2022, and 2023 cohorts from the general engineering program in a French Higher Education institution. The results show that attending a postgraduate course on average increases the odds of being more proficient in terms of soft skills. Nonetheless, there is considerable variability in the individual effect of courses, which suggests there can be large differences between courses. Moreover, the data also suggest great dispersion in the students' initial soft skill proficiency.

1 Introduction

Soft skills have gathered considerable attention, especially in Higher Education Institutions (HEI). International organizations, such as the European Union with the Bologna process, have pushed HEI to consider competencies, life-learning skills or soft skills in their curricula (European Higher Education Area, 2016; Council of the European Union, 2018). The work by Caeiro Rodriguez et al. (2021) studied the European perspective of soft skill development in Higher Education investigating the different practices in 5 European countries, describing the current pedagogical methodologies (e.g., problem, project, or simulation-based methods) that aim to support soft skills, their advantages and limitations. Moreover, Almeida and Morais (2021) analyzed 4 case studies of HEI in Portugal addressing soft skills in their curricula. They realized that although the number of courses that explicitly took into account soft skills in their pedagogical activities was rather small, there was growing pressure and interest from HEI in incorporating such skills as core parts of the curricula.

Arvanitis et al. (2022) studied, through focus groups with stakeholders from HEI in Greece, strategies to fill the soft skills “gap.” This is the difference between the soft skill proficiency demanded in industry compared to the one developed in HEI. A possible strategy for HEI towards reforming and changing curricula across all levels and degrees could be to analyze their current state, that is, how their curricula help students develop their soft skills. Since courses are amongst the most common modular parts of any curriculum (there could be other elements that award credits such as projects or internships), they could serve as valuable information to help explain the students' soft skill proficiency across time. This would allow the quantification of the effect of the courses of the curricula on the students' soft skills. In other words, we could inspect whether a particular course helps more or less, compared to other courses, the students in terms of their soft skills. Therefore, in this context, the following research questions are examined.

RQ1: How can we model the effect of courses on the soft skills of postgraduate students?

Secondary research questions are:

RQ2: How does soft skill proficiency behave throughout the academic program?

RQ3: How do the course effects on soft skills behave?

RQ4: What are the degrees of linear correlation of course effects across different soft skills?

Nevertheless, before attempting to answer any of these questions, it is important to underline that there is not a full consensus regarding the definition of “soft skills.” The work by Touloumakos (2020) describes how these expanded from the overall definition of “skills”, encompassing a variety of attributes and traits, which do not require specific contexts in contrast to more technical skills. Their increasing relevance has prompted many researchers to try to define them, engaging in a long debate about what may or may not be considered as soft skills (Hurrell et al., 2013). According to Almonte (2021), soft skills are difficult to conceptualize in a cohesive and clear way without considering their elasticity and multifaceted perspectives. Furthermore, there are other terms that may be pseudo-interchangeable (depending on the context and definition) with soft skills such as 21st century skills (Partnership for 21st Century Skills, 2007), 4C Skills (Ye and Xu, 2023), graduate skills (Barrie, 2007), competencies, life skills (Council of the European Union, 2018), generic skills (Tuononen et al., 2022), transferable skills, and employability skills.

These other terms, however, may focus on other aspects, which do not necessarily concern soft skills. For example, employability skills generally relate to skills that help increase the chances of employment whereas transferable skills stress the skills that can be developed in a particular situation and transferred into another (Jardim et al., 2022; van Ravenswaaij et al., 2022). On the other hand, graduate skills emphasize the skills expected from university graduates whereas generic skills mostly relate to the generic aspect of skills that are not context-specific and can be used in different situations. For the purposes of this study, the term “soft skills” refers to non-technical, personal and social skills that can be used in various situations. For instance, Leadership and Stress Management are non-technical skills with a personal and interpersonal nature. In a situation such as an industrial interdisciplinary project, the participants may need to take full advantage of these skills to manage their stress and lead the groups they are in charge of. Moreover, these same skills could also be used in academic settings, such as group coursework within courses where students would need to work together to accomplish the requirements of the pedagogical activity.

The pressure to focus on soft skills has not only been present in HEI, but also in industry. There are several studies investigating the requirements of the industry in terms of soft skills along with the perceptions of both students and employers of soft skills as a means to improve graduate employability (Ellis et al., 2014; Succi and Canovi, 2020; Xu et al., 2022). Moreover, the work by Cacciolatti et al. (2017) studied the clash between the employers' needs of both technical and soft skills along with the universities' policies towards these skills in the United Kingdom.

Since skills, in general, are latent variables (i.e., variables that cannot be directly observed, and can only be estimated with a model that links latent variables with one or more manifest variables), the estimation of soft skills is an important part to consider. There are some strategies that may be followed to estimate soft skills. A questionnaire consisting of binary or multiple choice questions, designed to estimate a soft skill in particular (e.g., Stress Management), could be deployed. Then, either Classical Test Theory (CTT) (Novick et al., 1968), or Item Response Theory (IRT) (Lord, 1952; Rasch, 1960) could be used. With CTT, the questionnaire could be validated [e.g., with item-total correlations and Cronbach's alpha (Novick and Lewis, 1966)] in order to ensure that the questions reliably measure the construct, and the soft skill could then be estimated under the assumption that the computed score comprises the true soft skill proficiency alongside an error residual. In contrast, IRT models the probability of correct responses non-linearly, based on underlying person and item characteristics, where the person characteristic is, in this case, the soft skill proficiency. Another strategy could be to develop a marking scheme or rubric that describes in depth the levels of proficiency that are expected of the individuals to be assessed, thereby having pre-defined ordered levels of proficiency.
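As a minimal illustration of the CTT validation step mentioned above, Cronbach's alpha can be computed from item-level responses as sketched below. The function name `cronbach_alpha` and the response matrix are hypothetical and serve only to illustrate the formula; they are not taken from the study's data.

```python
from statistics import variance

def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(item_scores[0])
    # Sample variance of each item across respondents
    item_vars = [variance([resp[j] for resp in item_scores]) for j in range(k)]
    # Sample variance of the total (summed) score across respondents
    total_var = variance([sum(resp) for resp in item_scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)

# Hypothetical responses of five students to three Likert-type items
responses = [
    [3, 4, 3],
    [2, 2, 3],
    [4, 4, 4],
    [1, 2, 1],
    [3, 3, 4],
]
alpha = cronbach_alpha(responses)
```

Values of alpha closer to 1 indicate that the items vary together, which is usually read as higher internal consistency of the questionnaire.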

Several studies have estimated soft skills or their perception through some of the previously mentioned strategies. For instance, Chamorro-Premuzic et al. (2010) performed various studies in United Kingdom universities, one of which was based on the students' perceptions of the influence of soft skills proficiency towards obtaining first class degrees (a distinction degree awarded at United Kingdom universities). They used a 7-point Likert-type scale (from 1 to 7, where 1 was “Not at all” and 7 “Extremely useful”) with an inventory of 15 soft skills, and computed a total score per soft skill by calculating the mean score across items. Other studies have also taken a similar approach towards the estimation of soft skills with the computation of Likert-type scale measures across various items (Feraco and Meneghetti, 2022; Salem, 2022). Moreover, the work by Zendler (2019) studied the modeling of competencies in Computer Science Education using IRT. Furthermore, multidimensional IRT (Adams et al., 1997; Reckase, 2009), which considers the interplay between multiple abilities through compensatory or partially compensatory methods, has been used to model competencies (Hartig and Höhler, 2008, 2009), and could be used, in principle, to estimate latent soft skills based on soft skill item responses.

There have also been a few studies which investigated the interplay between courses and soft skills. The work by Muukkonen et al. (2022) studied the students' self-perceptions towards competence gains in 28 courses from two large Finnish universities. The study showed the effects of courses on skill growth to be statistically significant between courses. We follow this line of thought, in which students, besides achieving technical learning objectives, may also develop their soft skills by attending courses. We further assume that this development occurs in varying degrees across the entire curriculum (given their multidisciplinary nature, multiple courses could affect the same soft skill, e.g., a course from computer science, such as mobile application development, and another course from chemical engineering, such as organic chemistry, could both affect the problem solving skills of students). However, optimizing the development of soft skills throughout the program requires understanding the relation between courses and soft skills. An exploration of the effect of courses on soft skills can serve as a tool to study whether it is appropriate to adjust the curriculum (e.g., add, remove or adapt courses with soft skills centered pedagogical activities) if the curriculum does not sufficiently foster soft skills according to the aims of the HEI.

Continuous data can take any of an infinite number of possible values between two specified limits. These measurements can include decimals. For instance, a continuous scale where the minimum is 1 and the maximum is 4 can comprise measurements such as 1.01 or 3.85. Continuous models are based on distributions that can predict continuous data. Nonetheless, these models can be adapted to account for the discrete nature of categorical data. Soft skill proficiency can be estimated categorically (e.g., Leadership could be assessed in levels of proficiency such as Satisfactory, Good, Very Good, and Excellent). A first modeling approach could be to use mixed regression models to explain the soft skill proficiency with courses as predictors and random effects across students regarding their individual soft skill traits. In this approach, there would be a fixed effect, the same for all students, of each course on the students' soft skills proficiency. Nonetheless, a sizeable dataset would be needed to obtain stable fixed course effect estimates, particularly in HEI where the number of courses offered to students is considerably high. Therefore, in order to account for this issue, an overall general fixed effect of courses can be estimated instead of individual course effects. Moreover, random effects of courses and students can also be included to consider their variability. Students may not begin the program with the same level of soft skill proficiency. Similarly, courses may differ from one another in their effects on soft skills. For instance, course x may be more effective in helping students develop Project Management skills compared to course y.

The general aim of this study is to explore the relation between courses and the soft skill proficiency of students, and to showcase a practical approach to model these course effects, which could serve as valuable input for the overall analysis of soft skills development within an academic program. In order to do so, we leverage longitudinal data from a French engineering school, IMT Nord Europe, which is interested in redesigning its current general engineering program by incorporating soft skills. The engineering school developed a marking scheme to estimate the soft skills proficiency of the general engineer profile. This marking scheme is used throughout the academic program after each of the students' yearly internships, providing a longitudinal dataset describing their progress across time in terms of soft skill proficiency.

Section 2 describes in more detail the data used in this study as well as the statistical treatment of missing data from a part of the 2021 cohort. The models are also presented along with our Bayesian approach to estimate the parameters.

Section 3 presents the descriptive results of the students' soft skills proficiency across the last three years of the engineering program. Afterwards, the estimates of the parameters are presented, both continuously in the logit scale and through odds.

In Section 4, a discussion regarding the results is presented. Finally, the conclusions, limitations and possible alternatives for future work are described in Section 5.

2 Materials and methods

2.1 Data

The data was collected from the cohorts of students that graduated in 2021, 2022, and 2023 from the general engineering program (884 students in total) at IMT Nord Europe. The 5-year general engineering program includes 5 internships (one per year) for the students. Besides their regular studies, the students are therefore in constant contact with industry throughout the entire length of the program. After each internship, the students have their soft skills proficiency assessed by internship tutors with the previously mentioned marking scheme (see Table 1), which considers 10 soft skills and classifies their proficiency in 4 ordered categories. The marking scheme was developed by IMT Nord Europe in 2016, and later deployed by their Professional Development department in 2019.

Moreover, the students share the same curriculum up until the end of the third year. In the fourth and fifth years, they must choose a specialization, for which there is a catalogue of courses (104 different courses in the dataset, some of which are exclusive to specific specializations) to choose from, with no prerequisites other than the hard skills we expect they have developed in previous courses. In addition, there are courses which are transversal and can be chosen across all specializations. The specializations are Energy and Environment, Digital, Industry and Services, and Materials and Civil Engineering. By taking advantage of the soft skill assessments at the end of the third year as an initial reference, we can focus our interest on the last two years (years 4 and 5), where the students can freely choose their courses (a maximum of 11 courses are followed up until the end), and therefore may end up with different courses by the end of the program. If the students were to follow the exact same curriculum, the variability in soft skill proficiency could be explained by a number of factors such as the initial differences between students or how the same course affects students differently. Nonetheless, in this case where students may follow different courses, their variability in terms of soft skill proficiency could also be affected by their heterogeneous course history.

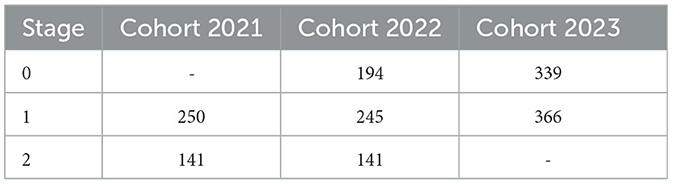

We categorized the years as stages (Stage = 0 at the end of the third year, which is the reference for the models, Stage = 1 at the end of the fourth year, and Stage = 2 at the end of the fifth year, i.e., the end of the academic program). Table 2 shows the number of students whose soft skill proficiency was assessed, per student cohort. It can be seen that there is no data from the 2021 cohort at the initial stage (the soft skill assessment program had not yet started), nor from the last stage of the 2023 cohort, which was still ongoing. Given the importance of having sufficient data to model the effects of courses on soft skills, statistical techniques are used to handle the missing data from stage 0 of the 2021 cohort. As the same data will be collected in future years, we plan to update the analyses each year.

The dataset structure, which comprises the longitudinal annual soft skills proficiency and course history of the last three years of the general engineering program, is shown in Table 3. The first column represents the student identifiers. The Stage column describes the year during which the data from the student in question were collected, whereas the $W_c$ columns represent dummy variables with $w_{cst}$ equal to 1 if student $s$ has followed course $c$ by stage $t$, and equal to 0 otherwise. The dummy variables are cumulative: they retain the courses followed at previous stages up until stage $t$. The $N$ column describes the cumulative number of courses followed by student $s$ up until stage $t$ (it can also be thought of as the row sum of the $W_c$ columns). $sskill_{ist}$ is the soft skill score of student $s$ at stage $t$ on soft skill $i$. For instance, the student with identifier 884 had, by stage 2, followed a total of $N_{s=884,\,t=2} = 11$ courses, amongst which were courses 1, 2, and 104, and was assessed on soft skills 1 and 10 with scores of 4 and 3, respectively.
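The cumulative dummy coding described above can be sketched as follows. The enrolment records, the helper `build_rows`, and the assumption that no elective course has been completed at stage 0 are illustrative only; the actual preprocessing used in the study is not described in the text.

```python
# Hypothetical enrolment records: (student id, stage at which the course was completed, course id)
enrolments = [
    (884, 1, 1),
    (884, 1, 2),
    (884, 2, 104),
]
N_COURSES = 104      # number of distinct courses in the dataset
STAGES = (0, 1, 2)   # end of third, fourth and fifth year

def build_rows(enrolments, student):
    """Return one row per stage with cumulative course dummies W and their row sum N."""
    rows = []
    for t in STAGES:
        # w[c - 1] = 1 if course c has been followed by stage t (earlier courses are kept)
        w = [0] * N_COURSES
        for s, stage, c in enrolments:
            if s == student and stage <= t:
                w[c - 1] = 1
        rows.append({"student": student, "stage": t, "W": w, "N": sum(w)})
    return rows

rows = build_rows(enrolments, 884)
```

In this toy example the student accumulates 0, 2, and 3 courses across the three stages, and the dummy for course 1 remains equal to 1 at stage 2 because it was already followed by stage 1.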

It is important to note that aside from the missing data at stage 0 from Cohort 2021, there were cases of soft skill scores which were not assessed during the internships, and were therefore treated as missing data for the models.

2.2 Modeling approach

Since our outcome of interest is an ordinal variable (i.e., ordered categorical data such as the levels of soft skill proficiency from Table 1), we use ordinal logistic regression. Its general form is presented in Equation 1, where $Y$ is a variable with $K$ categories. The cumulative probability $P(Y \le k)$ is the probability of $Y$ being in category 1, 2, ..., or $k$. Furthermore, the odds of $Y$ being less than or equal to category $k$ are expressed by the ratio $P(Y \le k) / P(Y > k)$. This model allows us to predict, via a logit link function, the probabilities of the $K$ categories (which sum to 100% across all categories) based on the linear combination of the explanatory variables $W$.
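For reference, a general form consistent with this description can be sketched as follows. The minus sign in front of the linear combination is an assumption: it follows the common convention (also used by the ordered logistic distribution in Stan) under which positive coefficients shift probability toward higher categories, in line with the interpretation of the results given later.

```latex
\operatorname{logit}\big(P(Y \le k)\big)
  = \log\frac{P(Y \le k)}{P(Y > k)}
  = \alpha_{k0} - \left(\beta_1 W_1 + \beta_2 W_2 + \dots + \beta_n W_n\right)
```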

where k = 1, 2, ..., K − 1

The $\alpha_{k0}$ intercepts represent the $K − 1$ thresholds against which the linear combination of effects is compared [the probability of the last category, $P(Y = K)$, is calculated as the complement, $1 − P(Y \le K − 1)$]. The $\beta$ parameters are fixed effects that, in our case, could describe the individual effects of courses, with the $W$ being binary dummy variables that indicate whether or not the student followed that particular course by that time. Nonetheless, this model would use only fixed effects, the same for all students. Because some students may begin with different soft skill proficiency levels, random intercepts can be considered to account for the students' individual characteristics [i.e., by adding a random residual $\theta_s$ to the intercepts $\alpha_{k0}$, with $\theta_s \sim N(0, \sigma_{\theta_s})$]. In this way, the probability of a student being assessed at soft skill proficiency category $k$ would be a function of both the student's individual characteristic and the course history. However, due to the relatively small size of the dataset compared to the number of courses that can be chosen by students, it may be quite difficult to adequately estimate the effects of individual courses ($\beta_1, \dots, \beta_n$). Therefore, we consider a general course effect, and a random deviation of individual courses from this mean course effect. These random deviations are assumed to follow a normal distribution. Because each of the courses can contribute to the soft skill proficiency, a multiple membership model was used (Hill and Goldstein, 1998).
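To make the role of the thresholds concrete, the sketch below converts a set of thresholds and a linear predictor into category probabilities, with the last category obtained as the complement. The threshold values, the student intercept, and the sign convention (threshold minus linear predictor) are assumptions made for illustration.

```python
import math

def inv_logit(x):
    """Inverse of the logit link."""
    return 1.0 / (1.0 + math.exp(-x))

def category_probs(thresholds, eta):
    """Probabilities of the K ordered categories given K-1 increasing thresholds.

    Assumes logit(P(Y <= k)) = threshold_k - eta, so larger eta favours higher categories.
    """
    # Cumulative probabilities P(Y <= k) for k = 1..K-1, then P(Y <= K) = 1
    cum = [inv_logit(a - eta) for a in thresholds] + [1.0]
    # Differences of consecutive cumulative probabilities give P(Y = k)
    return [cum[0]] + [cum[k] - cum[k - 1] for k in range(1, len(cum))]

alphas = [-1.0, 0.5, 2.0]  # hypothetical thresholds for 4 proficiency levels
theta_s = 0.3              # hypothetical random intercept of one student
probs = category_probs(alphas, theta_s)
```

The four probabilities sum to 1, and increasing the linear predictor moves probability mass from the lower categories toward the highest one.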

Multiple membership models arose from the need to properly model data structures that do not fit traditional hierarchical data clusters. For instance, in our case, the soft skill scores for Leadership of student s belong to that student and no one else. This means that those scores are nested within students. Nonetheless, the same cannot be said regarding the relation between students and courses, because students follow multiple courses (and not just one), each of which would have an effect on the students' soft skill scores. The students would therefore belong to multiple courses. Consequently, Multiple Membership Ordinal Logistic Regression models are used to explain the cumulative probability of the proficiency on soft skill i of student s at stage t being classified in category k. It is important to remark that the linear combination of effects is performed in the logit scale, and can be interpreted either with odds or continuously in the logit scale. In addition, it is assumed that the course effects remain the same throughout time (regardless of lecturer or syllabus changes) in order to limit the complexity of the models. Moreover, the models are uni-dimensional, in the sense that each soft skill is modeled separately, resulting in an ensemble of 10 models, each explaining one particular soft skill.

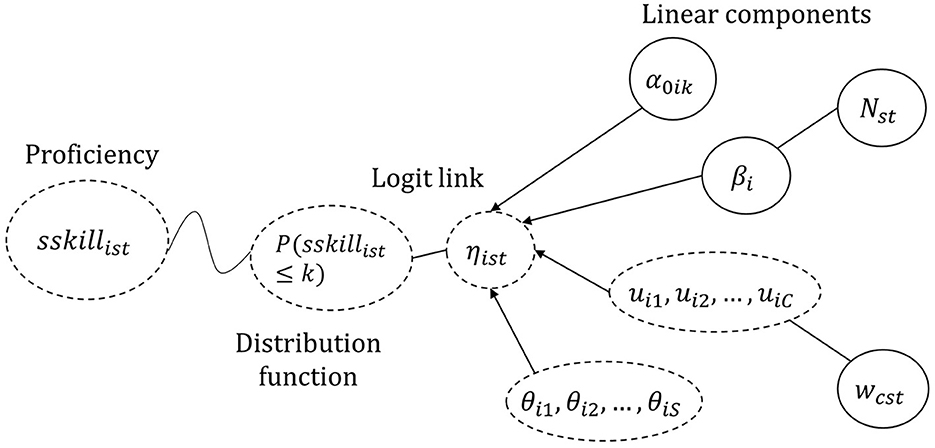

Equation 2 describes the model, where $\alpha_{0ik}$ represents the various thresholds against which the linear combination of effects is compared. $\beta_i$ is the fixed average course effect (the same for all students) on soft skill $i$, and $N_{st}$ is the number of courses followed by student $s$ by stage $t$. $u_{ic}$ is the random effect of course $c$ on soft skill $i$, whereas $w_{cst}$ represents the contribution weights of the course random effects on soft skill $i$. Finally, $\theta_{is}$ is a random effect across students towards soft skill $i$.
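Based on the symbols defined in this paragraph, the model can be sketched as follows. As before, the minus sign in front of the linear combination is an assumption chosen so that positive effects correspond to higher odds of greater proficiency; $C$ denotes the total number of courses.

```latex
\operatorname{logit}\big(P(sskill_{ist} \le k)\big)
  = \alpha_{0ik} - \Big(\beta_i N_{st} + \sum_{c=1}^{C} u_{ic}\, w_{cst} + \theta_{is}\Big)
```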

where $k = 1, 2, 3$ represents the soft skill proficiency categories. Regarding the weights, $w_{cst} = 1$ if student $s$ followed course $c$ by stage $t$, otherwise it is equal to 0. Moreover, the random effects are assumed to follow normal distributions, $u_{ic} \sim N(0, \sigma_{ic})$ and $\theta_{is} \sim N(0, \sigma_{is})$.

Figure 1 shows a graphical representation of the model, following the visualizations of Generalized Linear Mixed Models by De Boeck and Wilson (2004). The probability of the soft skill of a student being assessed at a certain proficiency category depends on the linear combination, $\eta_{ist}$, linked to the expected outcome by a logit link function. This $\eta_{ist}$ results from the interplay between fixed and random variables from the courses as well as the individual characteristics of student $s$.

Figure 1. Schema of the model. The dotted circles represent random variables whereas the non-dotted circles represent fixed parameters and variables. $\eta_{ist}$ is the linear combination of the $\alpha$ thresholds with the general fixed effect of the average course along with the random effects of courses ($u_{ic}$) and students ($\theta_{is}$).

According to the model in Equation 2, if a student followed 4 courses with identifiers (7, 12, 29, 44), the general fixed effect $\beta_i$ towards soft skill $i$ would be multiplied by 4, and 4 random effects (one for each course identifier) would contribute to the overall effect of courses for this student. Since the weights of the random effects would be equal to 1 for those courses, the overall effect could be expressed as $(\beta_i + u_{i7}) + (\beta_i + u_{i12}) + (\beta_i + u_{i29}) + (\beta_i + u_{i44}) = 4\beta_i + u_{i7} + u_{i12} + u_{i29} + u_{i44}$. This means that the effect of a course $c$ on soft skill $i$ can be expressed as the average course effect plus the individual random effect of that course, $\beta_i + u_{ic} w_{cst}$.
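The worked example above can be reproduced numerically with the short sketch below. The value of the average effect and the four course deviations are hypothetical and chosen only to show how the terms add up on the logit scale.

```python
def course_contribution(beta_i, u_i, followed):
    """Total course contribution to the linear predictor for one student and soft skill.

    beta_i:   average course effect on soft skill i
    u_i:      dict mapping course id -> random deviation of that course
    followed: ids of the courses followed by the student by stage t (weights equal to 1)
    """
    n_st = len(followed)
    return beta_i * n_st + sum(u_i[c] for c in followed)

beta_i = 0.20                                    # hypothetical average course effect
u_i = {7: 0.05, 12: -0.10, 29: 0.02, 44: 0.08}   # hypothetical course deviations
total = course_contribution(beta_i, u_i, [7, 12, 29, 44])
```

With these values the contribution is 4 × 0.20 plus the sum of the four deviations (0.05), that is, 0.85 on the logit scale.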

A previous study by Arreola and Wilson (2020) used an approach similar to ours, although their models predicted dichotomous academic performance rather than soft skills proficiency. They defined the outcome as success if students achieved a Grade Point Average greater than 2 or 3. Moreover, their models considered the students as members of multiple instructors or lecturers (where each student may have been affected by one or multiple instructors during their studies), whereas in our particular case, the students are considered members of multiple courses. This means that students follow various courses, and the students' course history varies in both the number and the type (identifier) of courses.

2.3 Bayesian analysis

A Bayesian approach was used considering its flexibility in terms of model implementation. The software Stan by Carpenter et al. (2017), the R programming language (R Core Team, 2021), and the R package RStan by Stan Development Team (2023) were used to code, compile and fit the models to the data.

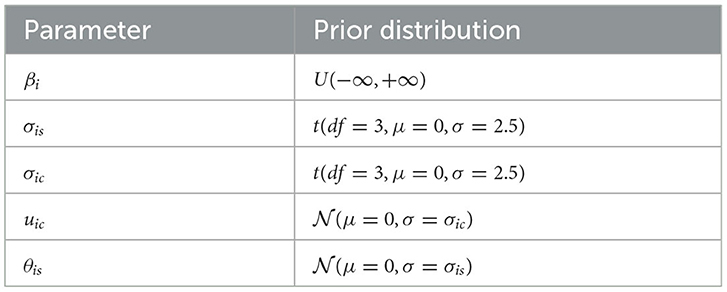

Since we have no previous data regarding course effects on soft skills, non-informative priors (i.e., priors that have a negligible effect on the posterior) and weakly-informative priors (i.e., priors that have a mild influence on the posterior distribution in order to keep the estimates in sensible ranges) were considered for all parameters. Table 4 shows the parameter priors, which were chosen based on the default priors in the R package brms by Bürkner (2018). A standard deviation $\sigma = 2.5$ is used for the priors of the standard deviations $\sigma_{is}$ and $\sigma_{ic}$ of the student and course random intercepts. It is important to add that $\sigma_{is}$ and $\sigma_{ic}$ are constrained to be positive in the Bayesian implementation.

Furthermore, the models were fitted with 4 chains, where each chain ran for 5,000 iterations including 1,000 warm-up (burn-in) samples, giving a total of 16,000 post-warm-up iterations per model fit. The models were fitted for each of the ten soft skill dimensions and each of the 5 imputed datasets (see Section 2.4), resulting in 50 model fits. The empirical $\hat{R}$ estimate was equal to 1 for all fits, which suggests convergence.
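The sampling budget and the idea behind a convergence check can be illustrated with the sketch below. It implements the basic (non-split) Gelman-Rubin potential scale reduction factor on simulated chains; this is a simplified stand-in for the diagnostic reported by Stan, and the simulated draws are hypothetical.

```python
import random
from statistics import mean, variance

# Sampling budget stated in the text: 4 chains, 5,000 iterations, 1,000 of them warm-up
chains_n, iterations, warmup = 4, 5000, 1000
post_warmup_draws = chains_n * (iterations - warmup)

def gelman_rubin(chains):
    """Basic potential scale reduction factor for equally long chains of one parameter."""
    n = len(chains[0])
    w = mean([variance(c) for c in chains])      # mean within-chain variance
    b = n * variance([mean(c) for c in chains])  # between-chain variance
    var_hat = (n - 1) / n * w + b / n            # pooled estimate of the posterior variance
    return (var_hat / w) ** 0.5

# Hypothetical well-mixed chains drawn from the same distribution
random.seed(0)
chains = [[random.gauss(0.27, 0.05) for _ in range(iterations - warmup)]
          for _ in range(chains_n)]
rhat = gelman_rubin(chains)
```

Chains that explore the same distribution give a value very close to 1, whereas chains stuck in different regions give values clearly above 1.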

2.4 Multiple imputation

In our experiment, multiple imputation was used to impute, 5 times, the missing soft skill scores from stage 0 of the 2021 student cohort. The imputation was made possible with a model that depends on the data from later stages (as well as stage 0 from the 2022 and 2023 cohorts), comprising the student and soft skill scores. Multiple imputation is a highly popular approach to handle missing data (Rubin, 1987, 1996). It comprises a range of techniques that generate values to fill in the missing data, based on other known variables from the dataset. We used the R package mice (van Buuren and Groothuis-Oudshoorn, 2011), which allows flexible implementations of various multiple imputation methods, such as predictive mean matching [the imputation method proposed by Rubin and Schenker (1986) and Little (1988), and used in our study]. By imputing the missing data several times, we end up with several datasets. These datasets are identical except for the generated values corresponding to the 2021 students at stage 0. It is also important to note that, by having multiple datasets, there are several estimates (1 for each dataset) of the course effects on the same soft skill dimension i. In this case, a course c would have 5 estimated effects for soft skill i. Rubin's rules (Rubin, 1987), which were proposed to pool parameter results from multiple imputation, are used in Section 3 to combine the different parameter estimates from the imputed datasets, and to account for the uncertainty about the imputed values in the standard errors of the regression parameters.
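The pooling step can be sketched as follows, using the standard form of Rubin's rules: the pooled estimate is the mean of the per-dataset estimates, and the total variance combines the average within-imputation variance with the between-imputation variance inflated by (1 + 1/m). The five estimates and standard errors below are hypothetical, and the function name `pool_rubin` is illustrative.

```python
import math
from statistics import mean, variance

def pool_rubin(estimates, std_errors):
    """Pool m per-dataset estimates and their standard errors with Rubin's rules."""
    m = len(estimates)
    q_bar = mean(estimates)                       # pooled point estimate
    u_bar = mean([se ** 2 for se in std_errors])  # average within-imputation variance
    b = variance(estimates)                       # between-imputation variance
    total_var = u_bar + (1 + 1 / m) * b           # total variance of the pooled estimate
    return q_bar, math.sqrt(total_var)

# Hypothetical estimates of one parameter from 5 imputed datasets
estimates = [0.25, 0.27, 0.28, 0.26, 0.29]
std_errors = [0.05, 0.05, 0.05, 0.05, 0.05]
pooled_mean, pooled_se = pool_rubin(estimates, std_errors)
```

The pooled standard error is larger than the individual standard errors because it also reflects how much the estimates vary between the imputed datasets.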

While the computational cost of multiple imputation is relatively low, the cost of estimating the model parameters with each of the imputed datasets is considerable. On a computer with an Intel(R) Core(TM) i7-10810U CPU @ 1.10GHz 1.61 GHz and 32 GB of RAM, the Bayesian estimation needed approximately 35 to 40 min to fit a model (running the chains in parallel) given the current number of parameters and size of the dataset. This means that, in order to estimate the effects (40 min × 5 imputed datasets × 10 soft skills), 2,000 min or approximately 33 h were necessary. Therefore, the number of imputed datasets was chosen to keep the overall computational cost feasible. Nonetheless, a higher number of imputations would, in principle, provide more robust estimates.

3 Results

Before interpreting the results, it is important to note that they should be read with caution, given the complexity of the data as well as the considerable number of parameters to estimate relative to the comparatively low number of students.

3.1 Descriptive results

3.1.1 Soft skill proficiency

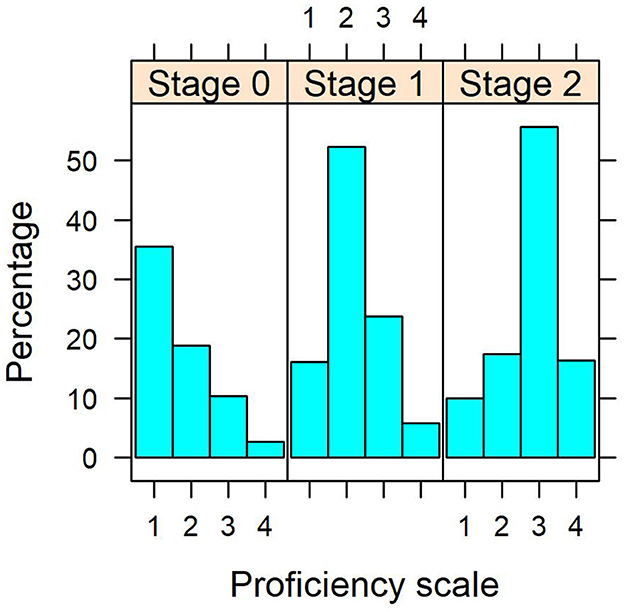

The discrete distributions of all soft skill dimensions show similar tendencies, with the probability of higher proficiency increasing towards the end of the program. An example is shown in Figure 2, which depicts the histograms of the students' organization skill proficiency across stages. It can be seen that the distribution at stage 0 tends to be right skewed (more probability on the lowest soft skill scores), whereas stage 1 is less skewed and appears to be somewhat symmetric (more probability on the 2nd and 3rd levels). On the other hand, stage 2 tends to have a left skewed distribution (higher probability on the last categories and lower on the first levels). This suggests that the probability of higher scores increases across stages.

Figure 2. Organization skills proficiency histograms across stages. Please note proportions may not amount to 100% due to missing data from the assessments aside from the imputed data at stage 0 from Cohort 2021.

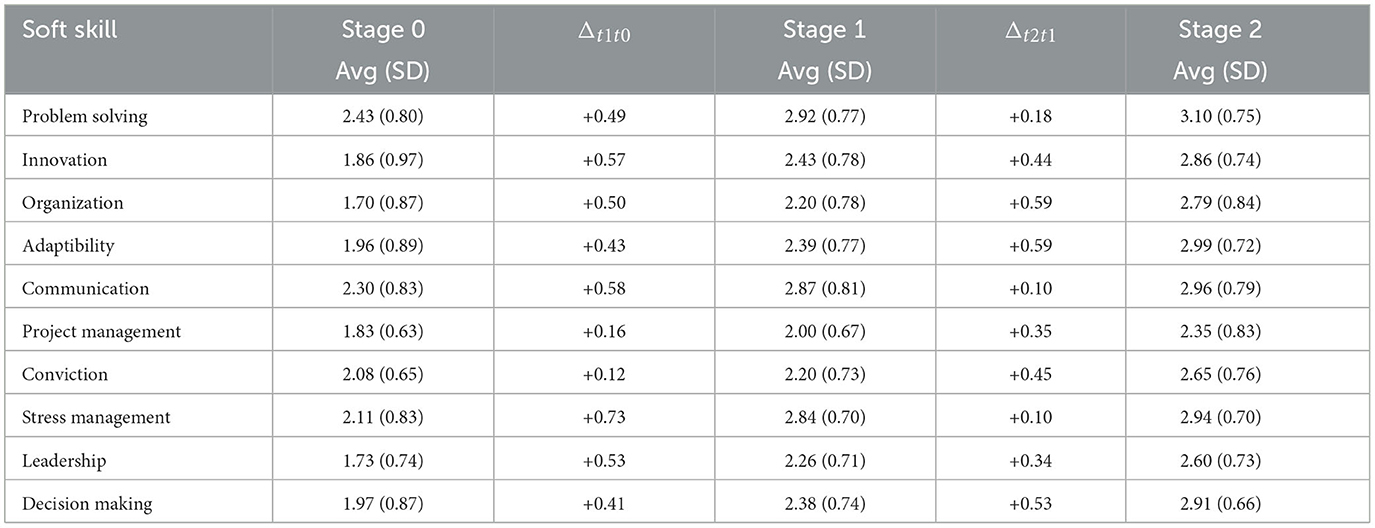

A more detailed descriptive analysis of the soft skill proficiency across time is shown in Table 5. It can be seen that the highest average proficiency was observed for Problem Solving, with average values of 2.43, 2.92, and 3.10 (on the 4-point scale) across the three stages. The lowest average proficiency was observed, at stage 0, for Organization, followed closely by Leadership. It can also be seen that the standard deviations of several soft skills decrease across the stages (e.g., Decision Making with 0.87 at stage 0, 0.74 at stage 1 and 0.66 at stage 2), although the decreasing trend presents itself in varying degrees. Moreover, the average change ($\Delta_{t+1,t}$) is the difference between the average proficiency at stage $t + 1$ and at stage $t$. It can be noted that all these changes are positive, albeit some are very small at certain stages.

Table 5. Average, standard deviation, and average changes $\Delta_{t+1,t}$ of the soft skill scores across stages.
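As a small worked example of the average change, the sketch below applies the definition to the Problem Solving averages quoted in the text; the variable names are illustrative.

```python
# Average Problem Solving proficiency at stages 0, 1 and 2, as quoted in the text
problem_solving_means = [2.43, 2.92, 3.10]

# Average change between consecutive stages: mean at stage t + 1 minus mean at stage t
deltas = [round(later - earlier, 2)
          for earlier, later in zip(problem_solving_means, problem_solving_means[1:])]
```

This gives changes of 0.49 between stages 0 and 1 and 0.18 between stages 1 and 2, both positive, with the larger gain occurring in the first of the two years.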

3.2 Multiple membership ordinal logistic regression results

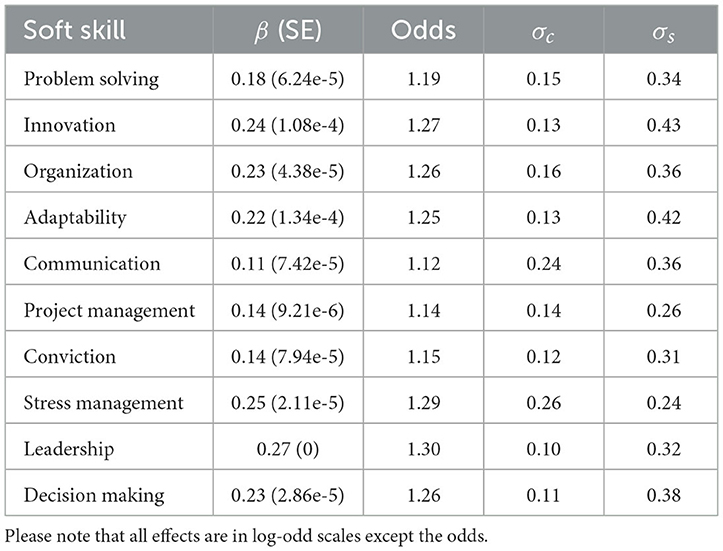

Table 6 presents the pooled mean results from the imputed datasets on each of the soft skills. It can be seen that the average effect of attending an additional course, on the logit scale, ranges from 0.11 in Communication to 0.27 in Leadership. This also means that for each average course (a course whose effect does not deviate from the mean effect $\beta_i$, i.e., $u_{ic} = 0$) that an average student (a student whose random intercept equals the zero mean amongst all students, $\theta_{is} = 0$) follows, holding the other variables constant, the odds of having gained proficiency after attending the course increase more for Leadership ($e^{0.27} = 1.30$, or a 30% increase) than for Communication ($e^{0.11} = 1.12$, or a 12% increase).

Table 6. Pooled mean parameter results from the imputed dataset model fits (with the standard errors SE in parentheses).
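The conversion from the logit scale to odds can be sketched as below, using the two rounded coefficients quoted in the text. The helper `odds_multiplier` and its `n_courses` argument are illustrative; the latter relies on the model assumption that the average effects of several courses add up on the logit scale.

```python
import math

def odds_multiplier(beta, n_courses=1):
    """Multiplicative change in the odds for n_courses average courses on the logit scale."""
    return math.exp(beta * n_courses)

leadership = odds_multiplier(0.27)      # rounded coefficient quoted in the text
communication = odds_multiplier(0.11)   # rounded coefficient quoted in the text
leadership_three = odds_multiplier(0.27, n_courses=3)
```

Because the inputs here are rounded coefficients, the resulting odds multipliers may differ in the last digit from figures computed with unrounded estimates.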

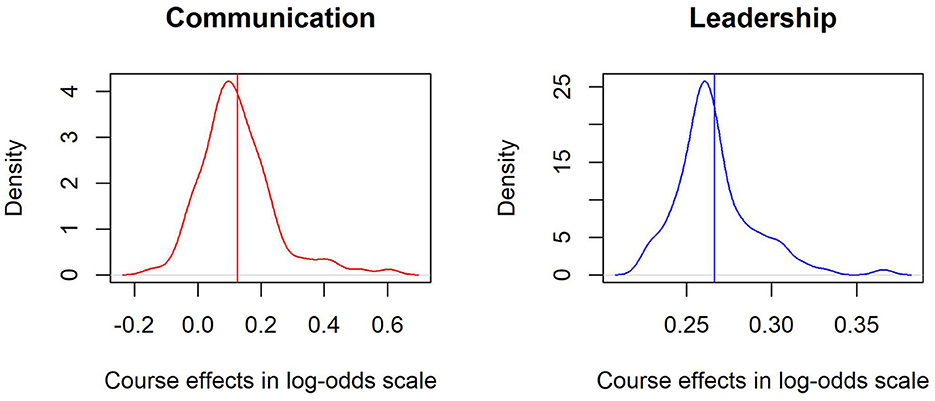

The standard deviations of the random effects of the courses range from 0.10 in Leadership to 0.26 in Stress Management. These values are considerable given that $\beta_i$ is measured on the logit scale. Under the assumption of a normal distribution, approximately 95% of the course effects lie within 1.96 standard deviations of the mean. For Leadership, whose mean effect is 0.27, this interval runs from 0.074 to 0.466 [0.27 − 1.96(0.10) = 0.074, 0.27 + 1.96(0.10) = 0.466]. For some soft skills, such as Communication, a substantial part of the interval lies below zero, which further suggests there can be large differences between courses: although attending a course has a positive effect on average, not all courses have a positive effect.

Figure 3 shows the kernel density distributions of the random effects across courses for Communication (red) and Leadership (blue), centered around their average course effects ($\beta$). Both distributions resemble normal distributions, although the Communication distribution reaches negative values on the logit scale, which again suggests that not all courses have positive effects. Furthermore, the highest standard deviations of the random effects correspond to the students, ranging from 0.24 in Stress Management to 0.43 in Innovation, which suggests even greater variability amongst students in terms of their initial soft skill proficiency.
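The 95% range of course effects described above can be reproduced with a short sketch. It assumes, as the text does, that course effects are normally distributed around the mean effect. The function names are hypothetical, and only the Leadership values (mean 0.27, standard deviation 0.10) are taken from the text.

```python
from statistics import NormalDist


def course_effect_range(mean, sd, coverage=0.95):
    """Central range expected to contain `coverage` of the course effects."""
    z = NormalDist().inv_cdf(0.5 + coverage / 2.0)  # about 1.96 for 95%
    return mean - z * sd, mean + z * sd


def share_below_zero(mean, sd):
    """Expected share of courses whose effect is negative under normality."""
    return NormalDist(mu=mean, sigma=sd).cdf(0.0)


# Leadership: mean effect and course standard deviation quoted in the text.
low, high = course_effect_range(0.27, 0.10)
print(round(low, 3), round(high, 3))  # 0.074 0.466
print(share_below_zero(0.27, 0.10))   # well below 1% of courses
```

The same two functions can be applied to any other soft skill by passing its pooled mean effect and course standard deviation from Table 6.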

Figure 3. Kernel density plot of the course effects over Communication and Leadership skills. The vertical lines describe the pooled mean average course effect in the logit scale whereas the dispersion represents the variability amongst the individual effects of courses.

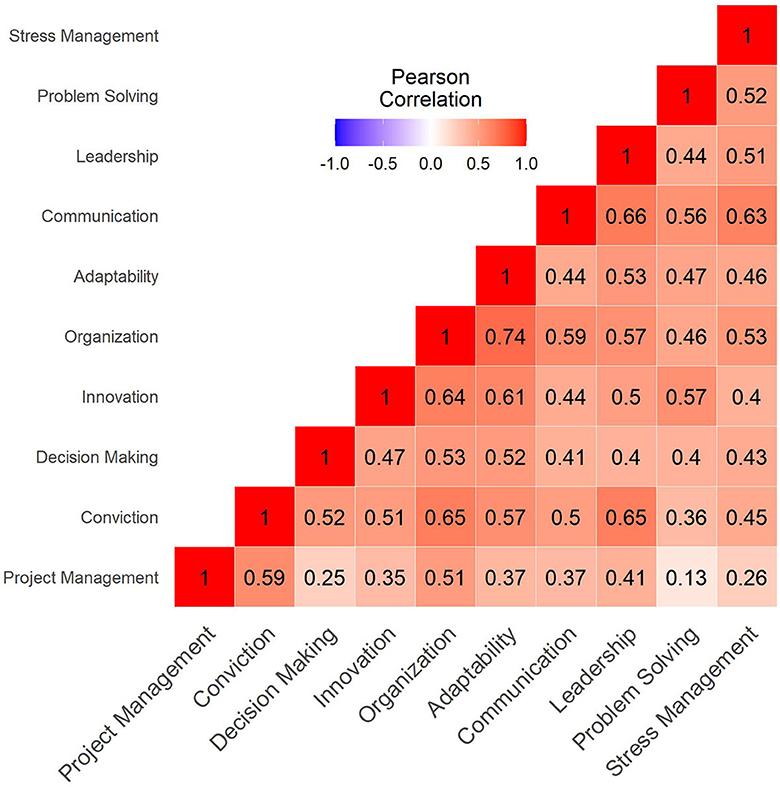

Additionally, Figure 4 shows a heat map of the Pearson correlation between the course random residual effects across the various soft skill dimensions, denoted $\rho_{sskill_x, sskill_y}$ for dimensions x and y. All soft skill dimensions are positively correlated, which suggests that courses with an above-average effect on one dimension tend to have above-average effects on the others. Nonetheless, the degree of correlation varies. While several pairs of dimensions are considerably correlated ($\rho > 0.40$), a few pairs have lower correlations, such as Project Management and Problem Solving ($\rho = 0.13$), Project Management and Decision Making ($\rho = 0.25$), and Project Management and Stress Management ($\rho = 0.26$). There are also some highly correlated pairs, such as Organization and Adaptability ($\rho = 0.74$), Communication and Leadership ($\rho = 0.66$), and Organization and Conviction ($\rho = 0.65$).

Figure 4. Correlation matrix of course random effects across soft skill dimensions. The higher the correlation the more red it becomes. Similarly, the less correlated, the less red it turns. Perfect linear correlations are depicted as fully red.
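The kind of correlation matrix summarized in Figure 4 can be sketched as follows. The course effects here are simulated placeholders, not the study's estimates: each hypothetical course receives a shared component plus a skill-specific component, so the resulting correlations are positive by construction. The function names and the three skills chosen are assumptions made only for illustration.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def correlation_matrix(effects_by_skill):
    """Pairwise correlations of course effects, keyed by skill name."""
    skills = list(effects_by_skill)
    return {a: {b: pearson(effects_by_skill[a], effects_by_skill[b])
                for b in skills} for a in skills}


# Simulated (hypothetical) course random effects, one value per course.
random.seed(0)
n_courses = 200
shared = [random.gauss(0, 1) for _ in range(n_courses)]
effects = {
    skill: [0.1 * (s + random.gauss(0, 1)) for s in shared]
    for skill in ("Communication", "Leadership", "Organization")
}

matrix = correlation_matrix(effects)
for skill, row in matrix.items():
    print(skill, {other: round(rho, 2) for other, rho in row.items()})
```

With real data, the simulated lists would be replaced by the estimated random effect of each course on each soft skill, taken in the same course order for every skill.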

4 Discussion

The descriptive results suggest a positive evolution of the average soft skill proficiency of the engineering students across their curriculum. The discrete probability distribution of the soft skill proficiency changes over time, being positively skewed at the beginning of the free-choice part of the program. This means that at the end of the third year, most students are in the first levels of soft skill proficiency. By the end of the program, however, the distribution becomes negatively skewed and most students tend to be in the last levels of proficiency, which is a good sign for the academic program.

The model results show Leadership to be the soft skill most positively affected by an average course, followed closely by Innovation and Stress Management. The least positively affected soft skill is Communication, followed by Project Management. Furthermore, there is a considerable dispersion of course effects, which may suggest that not all courses help students develop their soft skills with the same intensity. The correlation matrix of the random effects shows that the course effects on soft skills are positively related, though to varying degrees. This may suggest that not all courses focus their pedagogical activities evenly on all soft skills. For instance, the modest correlation coefficient between Project Management and Problem Solving ($\rho_{sskill_x, sskill_y} = 0.13$) suggests that courses that strongly promote one of those soft skills may not necessarily support the other with the same intensity.

While other approaches such as Charoensap-Kelly et al. (2016) and Muukkonen et al. (2022) focus, respectively, on the perception of competence gain of individual courses and the change of behavior after following a training program, our approach is centered around the relation between courses and the soft skill proficiency of the students, that is, on how the course history of the students can help explain their soft skill proficiency. In addition, our approach leveraged the longitudinal assessments of soft skill proficiency of students throughout the academic program. It is important to note, however, that assembling the dataset, which comprises the longitudinal history of both soft skills and courses from 884 engineering students from 3 different cohorts, involves considerably high costs in terms of logistics, time (in this case, at least the last 3 years of study of a cohort), management, communication, data cleaning and pre-processing. The dataset is all the more important given that no public datasets of a similar nature are available.

Nevertheless, there are several limitations to consider. First, we must be cautious about interpreting the estimated course effects as causal effects, since students were not randomly assigned to courses. The students chose their own courses, which makes student groups difficult to compare. If the proficiency of a student group that followed a course increased considerably, we cannot say that this is due to the course itself. It may be due to other activities that the students performed on top of the course (and that they would have done even if they had followed another course). Second, the soft skill assessment is performed only once per year, which makes the dataset difficult to enlarge longitudinally (in terms of soft skill assessments across time) unless assessments were also performed between semesters. Third, due to convergence issues it is currently impossible to include fixed effects of courses as well as additional random effects corresponding to specializations and tutors (4 effects of specialization, their standard deviation, and 1,608 tutors alongside their variance, which gives a total of 1,612 additional random effects and 2 variance parameters). Fourth, in favor of simplicity it is assumed that the course effects remain the same regardless of changes in lecturer, syllabus or pedagogical design across time, which may not necessarily be the case for most institutions. Fifth, HEI in Europe may include semesters abroad (e.g., Erasmus exchange programs), which makes the integration of course history data across multiple institutions quite difficult.

5 Conclusions and future work

In this work, we answered our research questions by proposing the use of Multiple Membership Ordinal Logistic Regression models to understand the relation between attending postgraduate courses and the soft skill proficiency of students. Moreover, we have shown practical methods that may provide useful insight for HEI (such as IMT Nord Europe) interested in adapting their curriculum towards soft skills development. It is our hope that this article inspires practitioners and other researchers to further explore and propose other methodologies to model the effect of postgraduate courses on soft skills.

There are several alternatives that could be considered for future work. First, the addition of random intercept effects per specialization, which are not currently included due to the size of the dataset. Second, the inclusion of random intercept effects across internship tutors, who assess the students' soft skills proficiency. Third, the addition of the internship organization, possibly categorized by type (e.g., technological, pharmaceutical, clothing), in order to analyze whether the type of organization affects the soft skill proficiency of the students differently. Fourth, the study of the students' self-perception of their soft skills, and of whether these self-assessments correlate with the tutor assessments. Finally, another strategy could be to study the relation between soft skills and not the courses themselves, but the pedagogical designs they are based on (e.g., problem, project, or simulation-based), which could reduce the complexity and also provide valuable feedback for curriculum analysis. Nonetheless, it would require more work to discern which methodologies are applied, and how similar they are across the different courses, in order to adequately define the weights of the multiple membership design, where students would be multiple members of one or various methodologies.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author.

Ethics statement

Ethical approval was not required for the study involving humans in accordance with the local legislation and institutional requirements. Written informed consent to participate in this study was not required from the participants or the participants' legal guardians/next of kin in accordance with the national legislation and the institutional requirements.

Author contributions

LP: Conceptualization, Data curation, Formal analysis, Methodology, Writing – original draft, Writing – review & editing, Validation. AL: Methodology, Writing – review & editing. AK: Methodology, Writing – review & editing. MV: Methodology, Writing – review & editing. AF: Funding acquisition, Methodology, Supervision, Writing – review & editing. WV: Formal analysis, Methodology, Supervision, Validation, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was funded by I-SITE ULNE (42 Rue Paul Duez, 59800 Lille, France) under the project SUCCESS. The SUCCESS project aims to support the assessment of soft skill proficiency of postgraduate students, and to provide personalized recommendations of courses based on their soft skills.

Acknowledgments

We would like to thank the Pôle Développement Professionnel of IMT Nord Europe for its collaboration in the collection of the soft skills data, especially Cécile Labarre and Cécile Leroy. We would also like to thank Etienne Leblanc, IT officer at the direction of programmes at IMT Nord Europe, for the course history data.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Adams, R. J., Wilson, M., and Wang, W.-C. (1997). The multidimensional random coefficients multinomial logit model. Appl. Psychol. Measur. 21, 1–23. doi: 10.1177/0146621697211001

Almeida, F., and Morais, J. (2021). Strategies for developing soft skills among higher engineering courses. J. Educ. 203, 103–112. doi: 10.1177/00220574211016417

Almonte, R. (2021). A Practical Guide to Soft Skills: Communication, Psychology, and Ethics for Your Professional Life. London: Routledge, Taylor & Francis Group. doi: 10.4324/9781003212942

Arreola, E. V., and Wilson, J. R. (2020). Bayesian multiple membership multiple classification logistic regression model on student performance with random effects in university instructors and majors. PLoS ONE 15, 1–19. doi: 10.1371/journal.pone.0227343

Arvanitis, A., Touloumakos, A. K., Dimitropoulou, P., Vlemincx, E., Theodorou, M., and Panayiotou, G. (2022). Learning how to learn in a real-life context: Insights from expert focus groups on narrowing the soft-skills gap. Eur. J. Psychol. Open 81, 71–77. doi: 10.1024/2673-8627/a000027

Barrie, S. C. (2007). A conceptual framework for the teaching and learning of generic graduate attributes. Stud. High. Educ. 32, 439–458. doi: 10.1080/03075070701476100

Bürkner, P.-C. (2018). Advanced Bayesian multilevel modeling with the R package brms. R J. 10, 395–411. doi: 10.32614/RJ-2018-017

Cacciolatti, L., Lee, S. H., and Molinero, C. M. (2017). Clashing institutional interests in skills between government and industry: an analysis of demand for technical and soft skills of graduates in the UK. Technol. Forec. Soc. Change 119, 139–153. doi: 10.1016/j.techfore.2017.03.024

Caeiro Rodriguez, M., Manso Vázquez, M., Fernández, A., Llamas Nistal, M., Fernández Iglesias, M. J., Tsalapatas, H., et al. (2021). Teaching soft skills in engineering education: a European perspective. IEEE Access 9, 29222–29242. doi: 10.1109/ACCESS.2021.3059516

Carpenter, B., Gelman, A., Hoffman, M. D., Lee, D., Goodrich, B., Betancourt, M., et al. (2017). Stan: a probabilistic programming language. J. Stat. Softw. 76, 1–32. doi: 10.18637/jss.v076.i01

Chamorro-Premuzic, T., Arteche, A., Bremner, A., Greven, C., and Furnham, A. (2010). Soft skills in higher education: importance and improvement ratings as a function of individual differences and academic performance. Educ. Psychol. 30, 221–241. doi: 10.1080/01443410903560278

Charoensap-Kelly, P., Broussard, L., Lindsly, M., and Troy, M. (2016). Evaluation of a soft skills training program. Bus. Profess. Commun. Quart. 79, 154–179. doi: 10.1177/2329490615602090

Council of the European Union (2018). Key competences for lifelong learning. Available online at: https://op.europa.eu/en/publication-detail/-/publication/297a33c8-a1f3-11e9-9d01-01aa75ed71a1/language-en (accessed December 15, 2023).

De Boeck, P., and Wilson, M. (2004). “A framework for item response models,” in Explanatory Item Response Models: A Generalized Linear and Nonlinear Approach (New York, NY: Springer New York) 3–41. doi: 10.1007/978-1-4757-3990-9_1

Ellis, M., Kisling, E., and Hackworth, R. (2014). Teaching soft skills employers need. Commun. Coll. J. Res. Pract. 38, 433–453. doi: 10.1080/10668926.2011.567143

Feraco, T., and Meneghetti, C. (2022). Sport practice, fluid reasoning, and soft skills in 10- to 18-year-olds. Front. Hum. Neurosci. 16. doi: 10.3389/fnhum.2022.857412

Hartig, J., and Höhler, J. (2008). Representation of competencies in multidimensional IRT models with within-item and between-item multidimensionality. Zeitschrift Psychol. 216, 89–101. doi: 10.1027/0044-3409.216.2.89

Hartig, J., and Höhler, J. (2009). Multidimensional IRT models for the assessment of competencies. Stud. Educ. Eval. 35, 57–63. doi: 10.1016/j.stueduc.2009.10.002

Hill, P. W., and Goldstein, H. (1998). Multilevel modelling of educational data with cross-classification and missing identification for units. J. Educ. Behav. Statist. 23, 117–128. doi: 10.2307/1165317

Hurrell, S. A., Scholarios, D., and Thompson, P. (2013). More than a “humpty dumpty” term: strengthening the conceptualization of soft skills. Econ. Ind. Democr. 34, 161–182. doi: 10.1177/0143831X12444934

Jardim, J., Pereira, A., Vagos, P., Direito, I., and Galinha, S. (2022). The soft skills inventory: developmental procedures and psychometric analysis. Psychol. Rep. 125, 620–648. doi: 10.1177/0033294120979933

Little, R. (1988). Missing-data adjustments in large surveys: Reply. J. Bus. Econ. Stat. 6, 300–301. doi: 10.2307/1391881

Muukkonen, H., Lakkala, M., Ilomäki, L., and Toom, A. (2022). Juxtaposing generic skills development in collaborative knowledge work competences and related pedagogical practices in higher education. Front. Educ. 7. doi: 10.3389/feduc.2022.886726

Novick, M., Birnbaum, A., and Lord, F. (1968). Statistical Theories of Mental Test Scores. Boston: Addison-Wesley.

Novick, M. R., and Lewis, C. (1966). Coefficient alpha and the reliability of composite measurements. ETS Res. Bull. Ser. 1966, i–28. doi: 10.1002/j.2333-8504.1966.tb00356.x

Partnership for 21st Century Skills (2007). P 21 framework definitions. Available online at: https://www.marietta.edu/sites/default/files/documents/21st_century_skills_standards_book_2.pdf (accessed December 15, 2023).

R Core Team (2021). R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing.

Rasch, G. (1960). Probabilistic models for some intelligence and attainment tests. Danish Institute for Educational Research.

Reckase, M. D. (2009). Multidimensional Item Response Theory. New York, NY: Springer Publishing Company, Incorporated, 1st edition. doi: 10.1007/978-0-387-89976-3

Rubin, D. B. (1987). Multiple Imputation for nonresponse in surveys. Wiley series in probability and mathematical statistics. Applied probability and statistics. New York, NY: Wiley. doi: 10.1002/9780470316696

Rubin, D. B. (1996). Multiple imputation after 18+ years. J. Am. Stat. Assoc. 91, 473–489. doi: 10.1080/01621459.1996.10476908

Rubin, D. B., and Schenker, N. (1986). Efficiently simulating the coverage properties of interval estimates. J. R. Stat. Soc. C 35, 159–167. doi: 10.2307/2347266

Salem, A. A. M. S. (2022). The impact of webquest-based sheltered instruction on improving academic writing skills, soft skills, and minimizing writing anxiety. Front. Educ. 7, 799513. doi: 10.3389/feduc.2022.799513

Succi, C., and Canovi, M. (2020). Soft skills to enhance graduate employability: comparing students and employers' perceptions. Stud. High. Educ. 45, 1834–1847. doi: 10.1080/03075079.2019.1585420

Touloumakos, A. K. (2020). Expanded yet restricted: A mini review of the soft skills literature. Front. Psychol. 11, 2207. doi: 10.3389/fpsyg.2020.02207

Tuononen, T., Hyytinen, H., Kleemola, K., Hailikari, T., Männikkö, I., and Toom, A. (2022). Systematic review of learning generic skills in higher education–enhancing and impeding factors. Front. Educ. 7, 885917. doi: 10.3389/feduc.2022.885917

van Buuren, S., and Groothuis-Oudshoorn, K. (2011). Mice: multivariate imputation by chained equations in R. J. Statist. Softw. 45, 1–67. doi: 10.18637/jss.v045.i03

van Ravenswaaij, H., Bouwmeester, R. A. M., van der Schaaf, M. F., Dilaver, G., van Rijen, H. V. M., and de Kleijn, R. A. M. (2022). The generic skills learning systematic: evaluating university students' learning after complex problem-solving. Front. Educ. 7, 1007361. doi: 10.3389/feduc.2022.1007361

Xu, L., Zhang, J., Ding, Y., Sun, G., Zhang, W., Philbin, S. P., et al. (2022). Assessing the impact of digital education and the role of the big data analytics course to enhance the skills and employability of engineering students. Front. Psychol. 13, 974574. doi: 10.3389/fpsyg.2022.974574

Ye, P., and Xu, X. (2023). A case study of interdisciplinary thematic learning curriculum to cultivate “4C skills”. Front. Psychol. 14, 1080811. doi: 10.3389/fpsyg.2023.1080811

Keywords: soft skills, courses, modeling, multiple membership, multiple imputation

Citation: Pinos Ullauri LA, Lebis A, Karami A, Vermeulen M, Fleury A and Van Den Noortgate W (2024) Modeling the effect of postgraduate courses on soft skills: a practical approach. Front. Psychol. 14:1281465. doi: 10.3389/fpsyg.2023.1281465

Received: 22 August 2023; Accepted: 08 December 2023;

Published: 05 January 2024.

Edited by:

María del Carmen Pérez-Fuentes, University of Almeria, Spain

Reviewed by:

Lene Graupner, North-West University, South Africa

Anna K. Touloumakos, Panteion University, Greece

Copyright © 2024 Pinos Ullauri, Lebis, Karami, Vermeulen, Fleury and Van Den Noortgate. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Luis Alberto Pinos Ullauri, luis.pinos-ullauri@imt-nord-europe.fr
