- 1 Department of Health Sciences, Hull York Medical School, University of York, York, United Kingdom

- 2 Department of Social Policy and Social Work, University of York, York, United Kingdom

- 3 Bradford Institute for Health Research, Bradford Teaching Hospitals NHS Foundation Trust, Bradford, United Kingdom

- 4 Rotherham Doncaster and South Humber NHS Foundation Trust, Doncaster, United Kingdom

- 5 Little Minds Matter Bradford Infant Mental Health Service, Bradford District Care NHS Foundation Trust, Bradford, United Kingdom

Introduction: The National Institute for Health and Care Excellence (NICE) guidelines acknowledge the importance of the parent–infant relationship for child development but highlight the need for further research to establish reliable tools for assessment, particularly for parents of children under 1 year. This study explores the acceptability and psychometric properties of a co-developed tool, ‘Me and My Baby’ (MaMB).

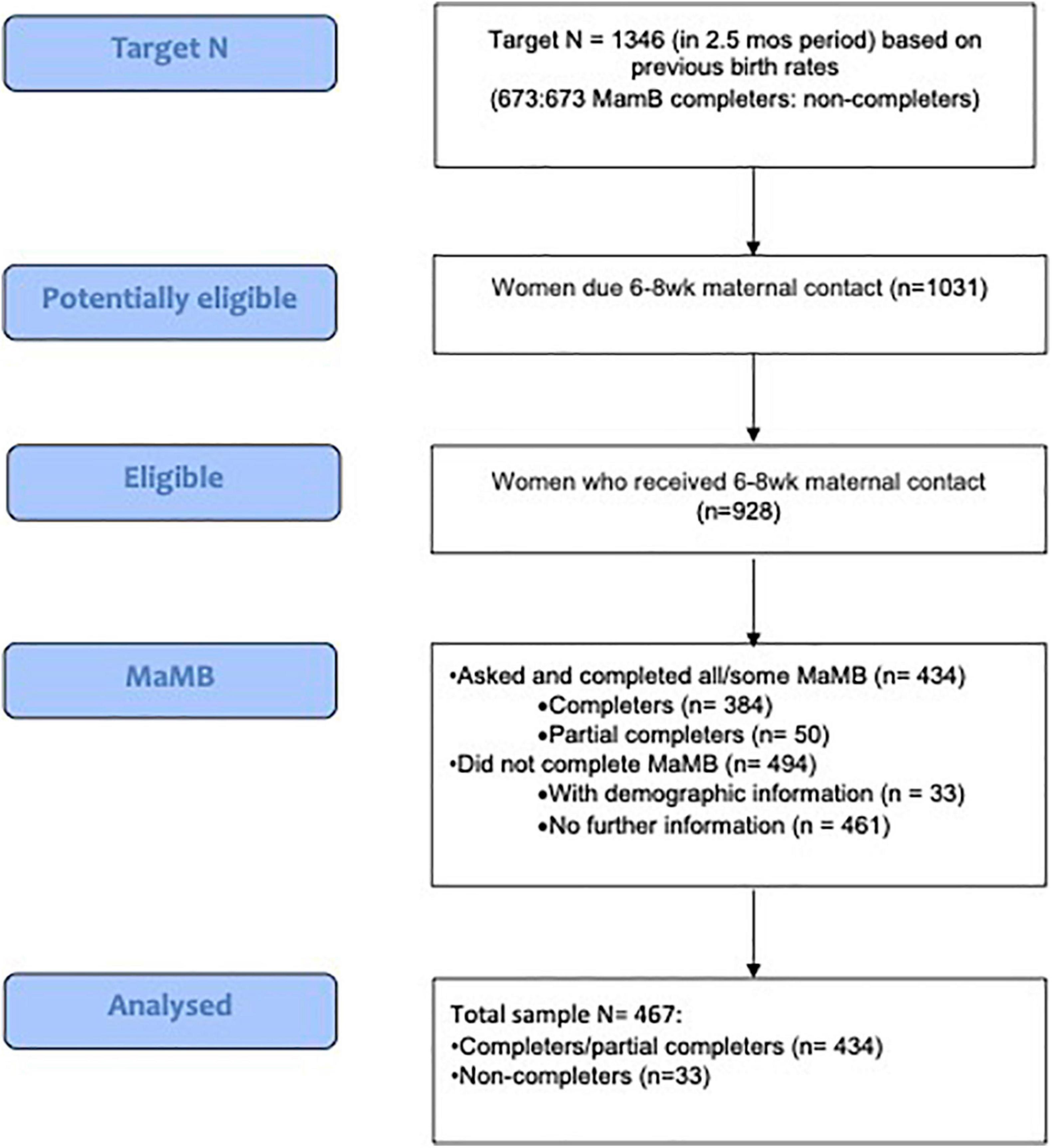

Study design: A cross-sectional design was applied. The MaMB was administered universally (in two sites) to mothers during routine 6–8-week Health Visitor contacts. The sample comprised 467 mothers (434 MaMB completers and 33 ‘non-completers’). The dimensionality of instrument responses was evaluated via exploratory and confirmatory ordinal factor analyses. Item response modeling was conducted via a Rasch calibration to evaluate how well the tool conformed to the principles of ‘fundamental measurement’. Tool acceptability was evaluated via completion rates and by comparing ‘completers’ and ‘non-completers’ on age, parity, ethnicity, and English as an additional language. Free-text comments were summarized. Data sharing agreements and data management were compliant with the General Data Protection Regulation and University of York data management policies.

Results: High completion rates suggested the MaMB was acceptable. Psychometric analyses showed an acceptable fit of the response data to a unidimensional confirmatory factor analytic model. All items loaded statistically significantly and substantially (>0.4) on a single underlying factor (latent variable). The item response modeling showed that most MaMB items fitted the Rasch model. Rasch item reliability was high (0.94), yet the test yielded little information on each respondent, as highlighted by the very low ‘person separation index’ of 0.1.

Conclusion and next steps: MaMB reliably measures a single construct, likely to be infant bonding. However, further validation work is needed, preferably with ‘enriched population samples’ that include higher-need/risk families. The MaMB tool may benefit from fewer response categories (three rather than four) and some modest item wording amendments. Following further validation and reliability appraisal, the MaMB may ultimately be used with fathers/other primary caregivers and may prove useful in research, in universal health settings as part of a referral pathway, and in clinical practice, to identify dyads in need of additional support/interventions.

Introduction

As mothers are typically primary caregivers, the current study evaluated the Me and My Baby (MaMB) tool for use with mothers. Maternal bonding can be defined as a mother’s (typically self-reported) emotional connection and feelings toward her child (Condon, 1993). Bonding is often conflated with attachment; whilst the constructs are related, they are distinct (Bowlby, 1982; Redshaw and Martin, 2013). Attachment, by contrast, refers to an infant’s expectations of their caregiver’s responses and the pattern of their own behavior, e.g., when activated in response to a perceived threat. Attachment typically develops from 6 months, whereas a mother’s bond to the infant begins to develop during pregnancy. Stronger bonding is theoretically linked to more frequent expression of behaviors such as maternal sensitivity and emotional availability (Feldman et al., 1999), which in turn foster positive interactions within the dyad and promote social and emotional development, including the development of secure attachment in the infant (Ainsworth et al., 1978; Le Bas et al., 2019).

Two systematic reviews (Branjerdporn et al., 2017; Le Bas et al., 2019) indicate that strong maternal bonding in pregnancy is associated with optimal child developmental outcomes. The Le Bas et al. (2019) review also suggested that a stronger affective postnatal parent–infant bond was predictive of positive child development outcomes. Both reviews suggested the findings should be interpreted with caution due to the relative paucity of studies in this area, and highlighted the need for more robust self-report measures of bonding.

There are currently no agreed, standardized methods in England for identifying mother/parent–infant dyads who may benefit from additional support around bonding and relationships. Although Health Visitors (HVs) work directly with parents, some research suggests that they may struggle to consistently identify problems in the parent–infant relationship (Wilson et al., 2010; Appleton et al., 2013; Kristensen et al., 2017; Elmer et al., 2019). Relevant National Institute for Health and Care Excellence (NICE) guidelines acknowledge the importance of the parent–infant relationship for child development and parent mental health, but highlight the need for further research to establish reliable tools for assessment, particularly for parents of children under the age of 1 year (NICE, 2012; NICE, 2015).

There is a distinct need for validated, robust measures that can be administered universally to identify and support families who may struggle with their parent–infant relationship. The parent–infant relationship is a key focus in the Early Years High Impact Area 2: supporting good parental mental health (Public Health England [PHE], 2020), due to the risks to subsequent child social and emotional development arising from poor parent–infant relationships (Fearon et al., 2010; Cassidy et al., 2013). A reliable, valid identification tool could allow services to more confidently signpost parents who may benefit to one of the emerging evidence-based interventions (Barlow et al., 2010, 2016; Wright et al., 2015; Facompre et al., 2018).

A very limited number of brief parent self-report tools exist that assess maternal–infant bonding, are freely available, and have some evidence of reliability and validity (Kane, 2017; Blower et al., 2019; Gridley et al., 2019; Wittkowski et al., 2020), for example, the Maternal Attachment Inventory (MAI; Müller, 1994), the Maternal Postnatal Attachment Scale (MPAS; Condon and Corkindale, 1998), the Postpartum Bonding Questionnaire (PBQ; Brockington et al., 2006), and the Mother Infant Bonding Scale (MIBS; Taylor et al., 2005). However, most are not widely used or have been validated only with small samples (for further discussion see Le Bas et al., 2019; Wittkowski et al., 2020). A further two reviews, Blower et al. (2019) and Gridley et al. (2019), were undertaken to explore which measures would be acceptable, reliable, and valid for a large randomized controlled trial of a parenting intervention for parents of infants and toddlers, and found that the choice of measures was very limited (the trial was led by TB, the first author; for the protocol see Bywater et al., 2018).

The 19-item MPAS, which has preliminary evidence of reliability and validity (Kane, 2017; Wittkowski et al., 2020), is the most used tool when linking maternal–infant bonding to later child development outcomes (Le Bas et al., 2019). The MPAS was piloted (with the involvement of the first and second authors) with 347 mothers in universal health visiting services (Bird et al., 2021; Dunn et al., 2021) as part of Better Start Bradford, a 10-year National Lottery Community Fund project aimed at improving the socio-emotional development, nutrition and communication skills of children aged 0–3 living in deprived multi-ethnic communities (Dickerson et al., 2016). The pilot concluded that the MPAS could not be recommended for use in health visiting services in Bradford to assess the parent–infant relationship due to: little variation in the responses of the 225 who completed the MPAS in English; an unexpected ceiling effect; and issues with scoring, parental acceptability and understanding. The E-SEE trial reported similar findings, with a lack of variation in scores in a sample of 341 (Bywater et al., 2018).

Using the learning from the MPAS pilot, the study team co-developed a new tool, MaMB, in an iterative process via workshops and interviews with Health Visitors, Clinical Psychologists, service staff and Managers, with parental input, to address the issues highlighted in the MPAS pilot. Prior to a measure being recommended for use in any context, evidence of its measurement properties should be established (Cooper, 2019). Psychometric properties comprise two overarching dimensions: validity and reliability. Validity is defined as the degree to which an instrument measures the construct(s) it purports to measure, and reliability as the degree to which a measure is free from measurement error (de Vet et al., 2015). Because scores dominated by measurement error cannot accurately reflect the intended construct, acceptable reliability is a necessary, though not sufficient, condition for achieving valid scores from an instrument. ‘Reliability’ also relates to the important concept of ‘test information’, that is, how precisely the instrument measures at each trait level, and hence the trait levels at which it is most capable of discriminating between test takers/respondents. Thus, a test’s ‘information curve’ has important implications for how it is optimally used in practice, for example, when identifying a screening cut-off score.

This study was therefore intended to evaluate the measurement model of the MaMB, and its acceptability when implemented in routine practice, as a prerequisite to further studies aiming to establish the validity of the tool. The main aim was to address the paucity and limited quality of available tools to assess the parent (mother)–infant relationship, specifically bonding, by developing a measure for use in research, in universal health settings as part of a referral pathway, and potentially in clinical practice, to identify dyads in need of additional support or interventions. The research objectives for this study were:

(1) To explore MaMB pilot data to determine the item and test properties in relation to dimensionality and reliability, in terms of both internal consistency and test information; and

(2) To identify any necessary revisions to MaMB following the results of our psychometric analysis.

These findings would have implications for which items would be retained in a final version of the instrument, and how the scores might best be summarized and used in practice. The work also paves the way for validation studies.

Materials and Methods

The Tool Under Investigation

The MaMB questionnaire (for further information see Supplementary Measure 1, and the protocol at https://osf.io/q3hmf/) has 11 items presented in a user-friendly format. Responses are indicated using a four-point Likert scale (‘never,’ ‘sometimes,’ ‘often,’ or ‘always,’ scored 0–3, with four reverse-scored items), giving a possible summed score of 0–33; lower scores indicate a stronger affective bond. The language of items is simple to understand, with a reading age of approximately 12, similar to that for popular magazines. A free text box is also included to give mothers the opportunity to record any comments or concerns they have about their relationship with their infant.
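A minimal scoring sketch, assuming responses are stored in item order as integers 0–3 (never to always). This section does not say which four items are reverse-scored, so `REVERSED_ITEMS` is a hypothetical placeholder to be replaced with the tool's actual reversed items. Incomplete forms return `None`, mirroring the complete-case summaries reported in the Results.

```python
from typing import Optional, Sequence

N_ITEMS = 11
MAX_CATEGORY = 3  # 'never'=0, 'sometimes'=1, 'often'=2, 'always'=3
# Hypothetical placeholder: the actual reverse-scored items are not listed here.
REVERSED_ITEMS = {1, 3, 6, 11}  # 1-based item numbers

def score_mamb(responses: Sequence[Optional[int]]) -> Optional[int]:
    """Sum 11 item responses (0-3) into a 0-33 total; lower = stronger bond.

    Returns None if any item is missing (complete-case scoring).
    """
    if len(responses) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} responses, got {len(responses)}")
    total = 0
    for item_number, raw in enumerate(responses, start=1):
        if raw is None:
            return None
        if not 0 <= raw <= MAX_CATEGORY:
            raise ValueError(f"item {item_number}: response {raw} outside 0-3")
        # Reverse-scored items contribute 3 - raw so all items point the same way.
        total += MAX_CATEGORY - raw if item_number in REVERSED_ITEMS else raw
    return total
```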

Research Questions

RQ1: Is the MaMB acceptable to mothers of infants (aged 6–8 weeks) and HVs when administered in a universal healthcare setting?

(a) As a proportion of all eligible dyads, how many complete the MaMB?

(b) What are the reasons given for non-completion?

(c) Are the free text boxes completed by parents and what information is being recorded/reported in them?

RQ2: What are the measurement properties of the MaMB?

(a) What is the most plausible dimensionality (factor structure) of the MaMB?

(b) Does the scale (or subscales if applicable) of the MaMB demonstrate acceptable levels of internal consistency?

(c) According to item response modeling, do the items demonstrate an acceptable fit to the Rasch model, implying that the summed scores from the instrument can be used as a ‘sufficient summary statistic’?

(d) What is the relative level of information yielded for respondents by the test (or putative scales), and where might a potential cut-off score be best placed that most accurately differentiates between two groups of test-takers?

Design

A cross-sectional design was applied.

A briefing was prepared in partnership with Rotherham Doncaster and South Humber NHS Foundation Trust (RDaSH) to support the training of HVs in the use of the tool. The briefing covered the purpose of the tool, how to introduce it to families, how to score it and how to interpret the scores.

The MaMB was implemented universally (in two RDaSH localities) with eligible mothers during the routine 6–8-week HV contact, following completion of the core mandated elements of the visit.

Health visitors asked mothers to complete a paper version of the tool, with support if needed or requested. During tool completion, HVs were expected to use their professional skills to discuss with parents their relationship with their infant. If HVs were unable to administer the tool (e.g., due to time constraints), they would record the reason(s) for non-completion.

Health visitors entered the responses electronically into a co-developed template within the case management software (SystmOne). The template recorded whether tool administration was attempted (and, if not, why) and whether administration was abandoned prior to completion. It also captured responses to all 11 items, the free text responses to the open question on the back page of the paper tool, and HVs’ comments on the interaction. Key demographic variables were also recorded to adequately describe the sample’s characteristics and to support subgroup analyses.

The research team received anonymized (numerical and free text) data extracted from SystmOne, and a small number of key demographic characteristics such as age, ethnicity, and parity.

Study Setting

Two RDaSH sites in Northern England implemented the MaMB at the universally mandated 6–8-week HV contact.

Inclusion/Exclusion Criteria

All mothers of a child aged 6–8 weeks living in the sites were eligible for the study.

If a parent had opted out via NHS Digital, they may still have completed the MaMB, but their data were not included in the study (in England, NHS patients can choose to opt out of their confidential patient information being used for research and planning).

Consent

This study received ethical approval on 21st August 2020 from South Central - Berkshire B Research Ethics Committee, United Kingdom, Ref: 20/SC/0266, Integrated Research Application System (IRAS) 201, project ID: 273708.

Parents were given a MaMB Participant Information Sheet (V2.0 17th August 2020; see Supplementary Information Sheet 1) at a visit prior to the 6–8-week check, to give them time to read and understand why they would be asked to complete the MaMB.

Written consent was not required from mothers, either to complete the MaMB or for their fully anonymized, non-identifiable data to be shared with the research team. This was because:

(1) The research team only accessed anonymized data. Data were restricted to the minimum needed to describe the sample and to conduct the proposed analyses of measurement properties and acceptability. Free text boxes, where completed, were screened by an authorized RDaSH employee to remove any identifiable information prior to data sharing.

(2) There was no risk of harm to participants from completing the MaMB. The tool was one of several used by HVs to conduct a broad needs assessment, as is standard at the 6–8-week contact. The MaMB supplemented existing tools and was implemented in addition to standard care. HVs are trained and well equipped to support mothers who may be struggling to bond with their baby.

(3) It was deemed essential that the MaMB sample was representative of mothers of young infants in the research site so that the study findings are generalizable. Introducing an informed consent process would likely have led to selection bias, arising from parent and practitioner characteristics and attitudes.

(4) There is clear value and benefit in doing the research, i.e., a need for a short, easy-to-administer, valid and reliable measure to support practitioners to identify families experiencing difficulties in their parent–infant relationship. The MaMB has been co-developed by academics, psychologists and HVs with parental input to address this gap; it is vital that this measure is tested before it can be recommended for wider use.

Sample Size

The number of live births in the year prior to the study was 3460 in Site 1 (Doncaster) and 3000 in Site 2 (North Lincolnshire), approximately 538 births per month across both sites. Assuming a conservative 50% completion rate (allowing for potential implementation/uptake barriers such as time constraints, parent refusal or practitioner non-compliance, time lag in implementation and data entry), we anticipated that 269 MaMBs would be completed per month. To construct a sample large enough to support the analysis of psychometric properties, we proposed a sample of 673 completers over a 10-week period. Based on a 50% completion rate, the overall sample would also include a further 673 non-completers to explore acceptability (total n = 1346). Please note that this sample size was calculated before the COVID-19 pandemic.
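The arithmetic behind these targets can be reproduced as below. One step is our inference rather than something stated in the text: going from about 269 per month to 673 over 10 weeks only matches if a month is treated as 4 weeks (10 weeks = 2.5 months); using 52/12 weeks per month would give roughly 620 instead.

```python
import math

births_per_year = {"Site 1 (Doncaster)": 3460, "Site 2 (North Lincolnshire)": 3000}
completion_rate = 0.50   # conservative assumption stated in the text
recruitment_weeks = 10
weeks_per_month = 4      # our assumption: reproduces the reported 673

births_per_month = sum(births_per_year.values()) / 12      # about 538
completers_per_month = births_per_month * completion_rate  # about 269
# Round the completer target up, as is usual for sample sizes.
completers_target = math.ceil(completers_per_month * recruitment_weeks / weeks_per_month)
# At a 50% completion rate there is one non-completer per completer.
non_completers = round(completers_target * (1 - completion_rate) / completion_rate)
total = completers_target + non_completers
print(round(births_per_month), round(completers_per_month), completers_target, total)
```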

Psychometric Analyses

RQ1

To assess acceptability of the tool, we reported the proportion of participants who were recorded as being offered the tool but either refused or failed to complete it. Where data were available, a descriptive analysis of the reasons for refusal was to be produced.

Key demographic characteristics (age, parity, ethnicity, English as an additional language) of completers and non-completers were to be presented in contingency tables as either frequency counts or means for descriptive purposes.
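A small sketch of such a descriptive contingency table: frequency counts with within-group percentages for a categorical characteristic, and group means for a continuous one. The records are invented for illustration, and the tuple layout `(completed_mamb, ethnicity, age)` is a hypothetical data format, not the SystmOne extract.

```python
from collections import Counter, defaultdict
from statistics import mean

# Hypothetical records, invented for illustration: (completed_mamb, ethnicity, age)
records = [
    (True, "White British", 29), (True, "White British", 33),
    (True, "White other", 27), (True, "Asian", 35),
    (False, "White other", 31), (False, "White British", 24),
]

def group_of(record):
    return "completer" if record[0] else "non-completer"

def crosstab(rows, category_of):
    """Frequency count and within-group percentage of each category."""
    counts = defaultdict(Counter)
    for row in rows:
        counts[group_of(row)][category_of(row)] += 1
    return {
        group: {cat: (k, 100 * k / sum(counter.values())) for cat, k in counter.items()}
        for group, counter in counts.items()
    }

ethnicity_table = crosstab(records, lambda r: r[1])
ages = defaultdict(list)
for record in records:
    ages[group_of(record)].append(record[2])
mean_age = {group: mean(values) for group, values in ages.items()}
print(ethnicity_table)
print(mean_age)
```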

A frequency count was intended to determine the proportion of completers who used the free-text box to expand on their answers. Free-text comments were to be summarized in a brief narrative.

RQ2

Dimensionality and Internal Consistency Reliability

The sample was originally intended to be randomly split into exploratory and confirmatory (‘validation’) datasets, if the data obtained were sufficient to support this approach. Dimensionality was initially to be explored in the exploratory subset using parallel analysis (Horn, 1965; see below for details), followed by an exploratory factor analysis (EFA) of the exploratory portion of the response data. The potential factor structures elicited would then be tested using confirmatory factor analyses (CFA) on the confirmatory (validation) dataset, and the internal consistency reliability of the postulated subscales examined. The findings of these analyses were intended to indicate whether it is appropriate to summarize bonding via several subscales or simply by a single overall total score for the MaMB.

The parallel analysis would be performed using unweighted least squares (ULS) as the estimation method (Horn, 1965; Lorenzo-Seva and Ferrando, 2006). In a parallel analysis, factors are retained as long as the eigenvalues of the actual data exceed those of the randomly generated data; the maximum plausible number of factors is therefore indicated at the point where the random-data eigenvalues first exceed those of the actual data. A series of EFAs was then expected to be performed to aid interpretation of any factors underlying the observed response patterns. Oblique (geomin) rotations were to be used in the factor analyses, on the assumption that, as in almost all psychological measures, underlying latent traits would be correlated with each other to some extent. The EFAs were to be repeated, again using a geomin rotation, to derive standard errors (and thus standardized Z scores) for the factor loadings to evaluate their statistical significance (Asparouhov and Muthén, 2009). All EFAs and CFAs were to be conducted in Mplus version 6.1, employing robust weighted least squares (WLSMV) or full information maximum likelihood as the estimation method, as appropriate.
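The retention rule of parallel analysis can be sketched as follows. This is a simplification under stated assumptions: it uses Pearson correlations and principal-component eigenvalues (Horn's original formulation), compares against the mean random eigenvalue, and simulates normal data with hypothetical loadings of 0.7; it does not reproduce the polychoric/ULS procedure run in FACTOR. Eigenvalues are computed with cyclic Jacobi rotations so the sketch needs only the standard library.

```python
import math
import random

def correlation_matrix(data):
    """Pearson correlation matrix for rows of respondents x columns of items."""
    n, p = len(data), len(data[0])
    cols = [[row[j] for row in data] for j in range(p)]
    devs = [[x - sum(c) / n for x in c] for c in cols]
    norms = [math.sqrt(sum(d * d for d in dv)) for dv in devs]
    r = [[1.0] * p for _ in range(p)]
    for i in range(p):
        for j in range(i + 1, p):
            cross = sum(a * b for a, b in zip(devs[i], devs[j]))
            r[i][j] = r[j][i] = cross / (norms[i] * norms[j])
    return r

def symmetric_eigenvalues(matrix, max_sweeps=50, tol=1e-12):
    """Eigenvalues of a symmetric matrix via cyclic Jacobi rotations, largest first."""
    a = [row[:] for row in matrix]
    n = len(a)
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                theta = (a[q][q] - a[p][p]) / (2 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1))
                c = 1 / math.sqrt(t * t + 1)
                s = t * c
                for k in range(n):  # rotate columns p and q
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # rotate rows p and q
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
    return sorted((a[i][i] for i in range(n)), reverse=True)

def parallel_analysis(data, n_reps=20, seed=1):
    """Count leading real eigenvalues that exceed the mean random-data eigenvalues."""
    rng = random.Random(seed)
    n, p = len(data), len(data[0])
    real = symmetric_eigenvalues(correlation_matrix(data))
    sums = [0.0] * p
    for _ in range(n_reps):
        noise = [[rng.gauss(0, 1) for _ in range(p)] for _ in range(n)]
        for k, ev in enumerate(symmetric_eigenvalues(correlation_matrix(noise))):
            sums[k] += ev
    random_mean = [s / n_reps for s in sums]
    n_factors = 0
    for actual, simulated in zip(real, random_mean):
        if actual <= simulated:  # stop where random eigenvalues first exceed real ones
            break
        n_factors += 1
    return n_factors, real, random_mean

# Simulated one-factor data: 300 respondents, 11 items each loading 0.7 (hypothetical).
rng = random.Random(2024)
demo = []
for _ in range(300):
    trait = rng.gauss(0, 1)
    demo.append([0.7 * trait + math.sqrt(1 - 0.49) * rng.gauss(0, 1) for _ in range(11)])
n_factors, real_ev, random_ev = parallel_analysis(demo)
print(n_factors, [round(v, 2) for v in real_ev[:3]], [round(v, 2) for v in random_ev[:3]])
```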

Internal consistency reliability for the putative subscales based on the CFA structure was to be evaluated using Cronbach’s alpha and McDonald’s omega. Cronbach’s alpha may be a poor index of internal consistency where tau-equivalence (equality of factor loadings across items in a scale) does not hold, in which case it typically underestimates reliability (Raykov, 1997). McDonald’s omega, which is computed from the estimated factor loadings, is therefore reported to represent a more accurate estimate of the extent to which items in a scale measure a unidimensional underlying construct.
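The contrast between the two coefficients can be shown with a standardized one-factor model. The sketch below computes alpha from the model-implied correlation matrix and omega from the loadings, ω = (Σλ)² / ((Σλ)² + Σ(1 − λ²)). The loadings are hypothetical: with unequal loadings (tau-equivalence violated) alpha falls below omega, and with equal loadings the two coincide.

```python
def cronbach_alpha(cov):
    """Alpha from an item covariance (or correlation) matrix."""
    k = len(cov)
    total_variance = sum(sum(row) for row in cov)  # variance of the summed score
    item_variance = sum(cov[i][i] for i in range(k))
    return k / (k - 1) * (1 - item_variance / total_variance)

def mcdonald_omega(loadings):
    """Omega for a one-factor model with standardized loadings."""
    common = sum(loadings) ** 2
    unique = sum(1 - lam ** 2 for lam in loadings)
    return common / (common + unique)

def implied_correlations(loadings):
    """Model-implied correlation matrix of a standardized one-factor model."""
    k = len(loadings)
    return [[1.0 if i == j else loadings[i] * loadings[j] for j in range(k)]
            for i in range(k)]

congeneric = [0.9, 0.8, 0.5, 0.3]      # hypothetical unequal loadings
tau_equivalent = [0.6, 0.6, 0.6, 0.6]  # hypothetical equal loadings

for name, lams in [("congeneric", congeneric), ("tau-equivalent", tau_equivalent)]:
    alpha = cronbach_alpha(implied_correlations(lams))
    omega = mcdonald_omega(lams)
    print(f"{name}: alpha={alpha:.3f} omega={omega:.3f}")
```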

Item Response Modeling

Item response theory (IRT) models are closely related to factor analyses of binary and categorical data. Within the family of IRT models, Rasch analysis was originally developed for the exploration of dichotomous responses to test items (Rasch, 1960), though it was subsequently extended to accommodate polytomous data. Rasch analysis can be used to create interval metrics of both item difficulty and respondent ability from ordinal (ordered categorical) or binary (dichotomous) response data. The Rasch model assumes that all items are identical in their ability to discriminate between respondents according to ability/trait (i.e., equality of item factor loadings in classical factor analytic terms). For the present Rasch analysis, the software package Winsteps version 4.01 was used (Linacre, 2017), and a partial credit model was applied to the categorical MaMB item responses. In a Rasch analysis, reliability can be appraised in several ways. Specifically, the person reliability coefficient relates to the replicability of the ranking of abilities, while the person separation index represents the signal-to-noise ratio and estimates the ability of a test to reliably differentiate different levels of ability within a cohort (Wright and Masters, 1982).
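The relationship between separation and reliability can be sketched under Winsteps-style definitions, which we assume here: separation G is the "true" standard deviation of the measures divided by their root-mean-square standard error, reliability is true variance over observed variance (equivalently G²/(1 + G²)), and the commonly used strata formula (4G + 1)/3 approximates the number of statistically distinct levels.

```python
import math
from statistics import pvariance

def separation_and_reliability(measures, standard_errors):
    """Separation G and reliability from Rasch measures (logits) and their SEs.

    True variance = observed variance - mean error variance;
    G = sqrt(true variance / mean error variance).
    """
    observed_var = pvariance(measures)
    error_var = sum(se ** 2 for se in standard_errors) / len(standard_errors)
    true_var = max(observed_var - error_var, 0.0)
    g = math.sqrt(true_var / error_var)
    reliability = true_var / observed_var if observed_var > 0 else 0.0
    return g, reliability

def reliability_from_separation(g):
    return g ** 2 / (1 + g ** 2)

def separation_from_reliability(r):
    return math.sqrt(r / (1 - r))

def strata(g):
    """Approximate number of statistically distinct levels, (4G + 1) / 3."""
    return (4 * g + 1) / 3

# Converting the values reported in the Results under these definitions.
print(round(reliability_from_separation(0.10), 3))  # person reliability
print(round(strata(0.10), 2))                       # distinguishable person levels
print(round(separation_from_reliability(0.94), 2))  # item separation
```

Under these definitions a person separation of 0.10 corresponds to a person reliability of about 0.01 and fewer than one distinguishable level, whereas an item reliability of 0.94 corresponds to an item separation of about 4.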

Power issues in Rasch analysis are a matter for debate, with some authors suggesting that around 200 respondents are required to accurately estimate item difficulty, whilst others suggest as few as 30 participants may suffice in well-targeted tests (i.e., those where difficulty is well matched to ability) (Goldman and Raju, 1986; Linacre, 1994; Baur and Lukes, 2009). Thus, this study should be adequately powered to estimate item properties from both the Rasch analysis and the factor analyses, the latter of which could be considered re-parameterized two-parameter IRT models. The fit of items to the Rasch model was to be assessed and any potential sources of misfit diagnosed; this was important in deciding whether it is appropriate to summarize the scores on the scale/s as summed totals. Moreover, the Rasch calibration was intended to allow the evaluation of test information, which would indicate to what extent the test is able to differentiate test-takers across the putative trait levels under evaluation (assumed to be ‘perceived bonding with baby’).
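Item fit to the Rasch model is usually summarized with infit and outfit mean-squares. For brevity the sketch below uses a dichotomous Rasch item (Winsteps applies analogous formulae to partial credit items); the person measures and response patterns are hypothetical, and the z-standardized versions of these statistics are not computed here. Mean-squares near 1 indicate fit, values well below 1 overfit (too predictable) and values above 1 underfit (too erratic).

```python
import math

def rasch_probability(theta, difficulty):
    """Probability of endorsing a dichotomous Rasch item."""
    return 1.0 / (1.0 + math.exp(-(theta - difficulty)))

def item_fit(responses, thetas, difficulty):
    """Infit and outfit mean-squares for one dichotomous item.

    Outfit: unweighted mean of squared standardized residuals, so surprising
    responses from persons far from the item's difficulty weigh heavily.
    Infit: sum of squared residuals over the sum of model variances
    (information-weighted), dominated by persons near the item's difficulty.
    """
    sq_resid, variances, z_sq = [], [], []
    for x, theta in zip(responses, thetas):
        p = rasch_probability(theta, difficulty)
        var = p * (1 - p)
        sq_resid.append((x - p) ** 2)
        variances.append(var)
        z_sq.append((x - p) ** 2 / var)
    infit = sum(sq_resid) / sum(variances)
    outfit = sum(z_sq) / len(z_sq)
    return infit, outfit

thetas = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]  # hypothetical person measures
guttman = [0, 0, 0, 1, 1, 1]               # perfectly ordered: overfits
reversed_pattern = [1, 1, 1, 0, 0, 0]      # contradicts the ordering: underfits
print(item_fit(guttman, thetas, 0.0))
print(item_fit(reversed_pattern, thetas, 0.0))
```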

Data Handling and Sharing

Fully anonymized data were exported from SystmOne and shared with the study team via the University of York secure drop-off service, which encrypts data. Data management was compliant with the General Data Protection Regulation (GDPR) and University of York data management policies. The custodian of data, Professor Tracey Bywater (Chief Investigator), is the contact point for any data management queries.

Results

The pilot ran from 10th September 2020 to 1st December 2020, and the MaMB was administered either face to face or over the telephone, depending on the COVID-19 restrictions in place at the time of administration.

See Figure 1 for a flow of participants through the study.

The 434 completed MaMBs from 928 eligible women equate to a 47% response rate, close to the predicted 50%.

The target sample size of 673 MaMB completers was not achieved, and data were available for only 33 of the 494 women who did not complete the MaMB, rather than the proposed 673 non-completers. The birth rate was lower than expected, and HVs changed from face-to-face to telephone visits during the study due to COVID-19.
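As a quick consistency check, the participant-flow figures reported in this section fit together as follows (all inputs are numbers stated in the text).

```python
eligible = 928
completers = 434
non_completers = eligible - completers            # women who did not complete
non_completer_data = 33                           # non-completers with data
non_completer_missing = non_completers - non_completer_data
analysed = completers + non_completer_data        # total study sample
response_rate = completers / eligible
print(f"response rate {response_rate:.1%}; non-completers {non_completers}; "
      f"missing non-completer records {non_completer_missing}; sample {analysed}")
```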

Results will be presented in order of the research questions.

RQ1: Is the MaMB acceptable to mothers of infants (aged 6–8 weeks) and HVs when administered in a universal healthcare setting?

(a) As a proportion of all eligible dyads, how many complete the MaMB?

(b) What are the reasons given for non-completion?

(c) Are the free text boxes completed by parents and what information is being recorded/reported in them?

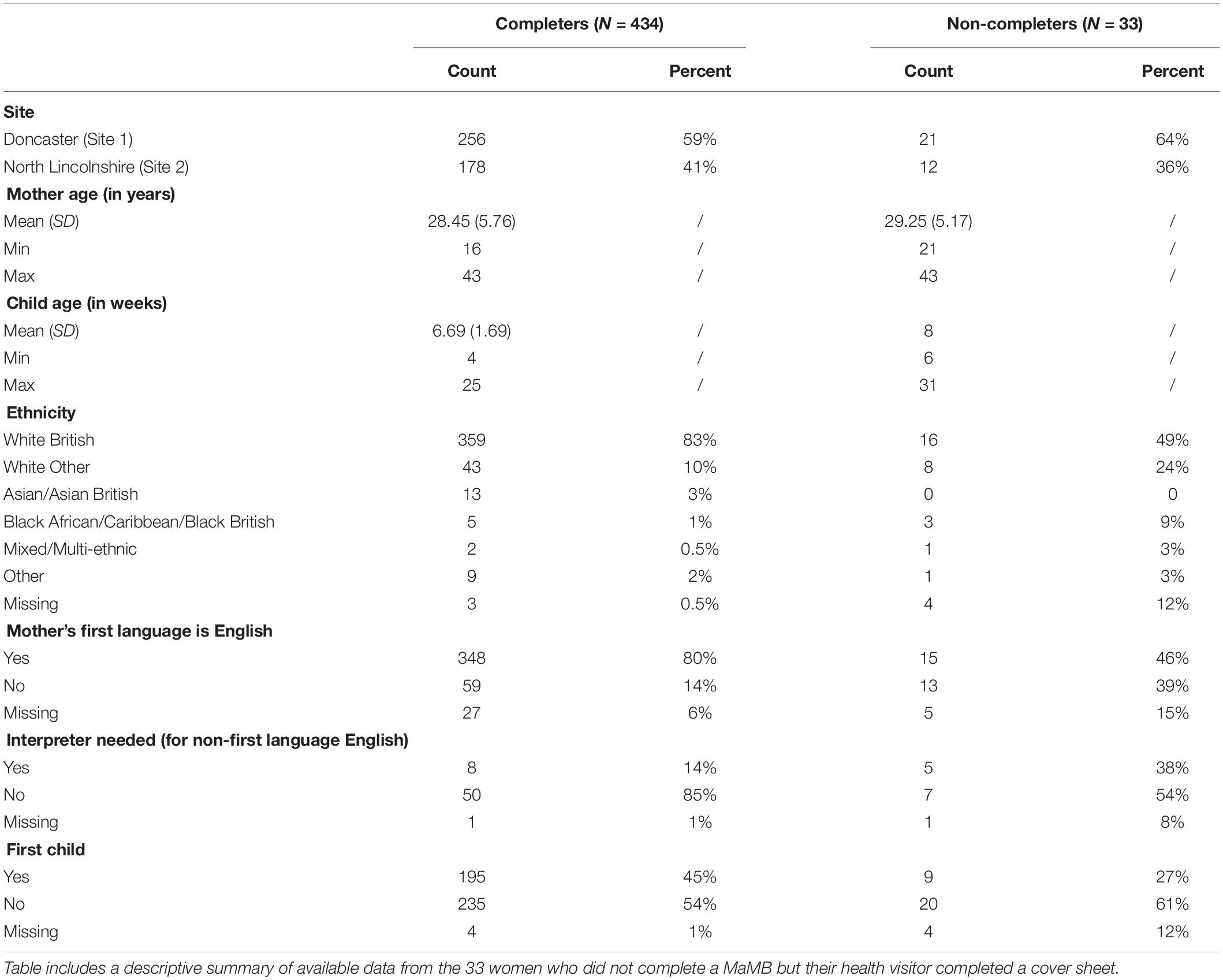

Table 1 shows the characteristics of the sample who completed the MaMB. The sample appears to represent the local population regarding ethnicity (83% White British, 10% White other, 7% Black, Asian, Multi-ethnic and other) and language (80% English as a first language, 6% missing). Although the numbers are too small to draw conclusions, the 33 non-completers appeared to differ on ethnicity and language, which may partly explain non-completion: 24% of non-completers were White ‘other’, compared with 10% of completers, and 38% of non-completers needed an interpreter, compared with 14% of completers. Although 461 cover sheets for non-completers were missing, there was minimal item-level missing data in those that were returned.

Of the 434 respondents who completed a MaMB, 50 had one or more missing items. Scores from the 384 who fully completed the MaMB suggest that the sample had positive relationships with their baby: mean = 1.2 (SD 1.6), median summed score 1 (inter-quartile range 0–2) out of a possible 33, and range 0–15 (lower scores indicate a more positive perception of the mother–baby relationship).
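A complete-case summary of this kind can be sketched as below, with `None` marking a form that has one or more missing items. The totals are hypothetical, and quartile conventions differ between packages; the sketch uses the standard library's "inclusive" method, so other software may report a slightly different inter-quartile range.

```python
from statistics import mean, median, quantiles, stdev

def summarize_totals(totals):
    """Complete-case summary of summed scores (None = incomplete form)."""
    complete = [t for t in totals if t is not None]
    q1, _, q3 = quantiles(complete, n=4, method="inclusive")
    return {
        "n_complete": len(complete),
        "n_incomplete": len(totals) - len(complete),
        "mean": mean(complete),
        "sd": stdev(complete),
        "median": median(complete),
        "iqr": (q1, q3),
        "range": (min(complete), max(complete)),
    }

demo_totals = [0, 0, 0, 1, 1, 2, 2, 3, 5, None]  # hypothetical summed scores
summary = summarize_totals(demo_totals)
print(summary)
```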

Twenty-nine respondents (parents and HVs) completed the free-text box, with some mothers saying they felt guilty that they could not give more time to their baby, or that they felt less positive toward their child at times, e.g.:

“I feel guilty for having less positive feelings especially when he is screaming”

“I feel I need time by myself sometimes, but feel guilty that I feel like that as a mum”

Four mothers mentioned that they had not been separated from their baby yet, so items 8 and 10 were not applicable.

RQ2: What are the measurement properties of the MaMB?

Of 467 mothers, 33 had no MaMB questionnaire data whatsoever, leaving 434 participants with some response data. The original plan was to divide the data randomly into a training and a validation set (see section “Materials and Methods”). However, due to lack of variance in some of the item responses, this was not possible: dividing the dataset into two portions produced items with little or no variation in responses in some cases, rendering estimation of factor models impossible. Therefore, the entire dataset was used to explore dimensionality.
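The problem described above can be checked before attempting a split. The sketch randomly halves the respondents and lists items whose variance in either half falls below a threshold; the threshold value and the function name are hypothetical, since the text does not state the criterion used. For ordinal factor models it is also worth checking for empty response categories within each half.

```python
import random
from statistics import pvariance

def split_and_check(rows, min_variance=0.01, seed=42):
    """Randomly halve respondents and flag (1-based) items with near-zero variance."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    halves = {"training": shuffled[:half], "validation": shuffled[half:]}
    problems = {}
    for name, subset in halves.items():
        n_items = len(subset[0])
        problems[name] = [j + 1 for j in range(n_items)
                          if pvariance([r[j] for r in subset]) < min_variance]
    return problems
```

An item endorsed in a non-zero category by only one respondent, for example, will show zero variance in whichever half does not contain that respondent.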

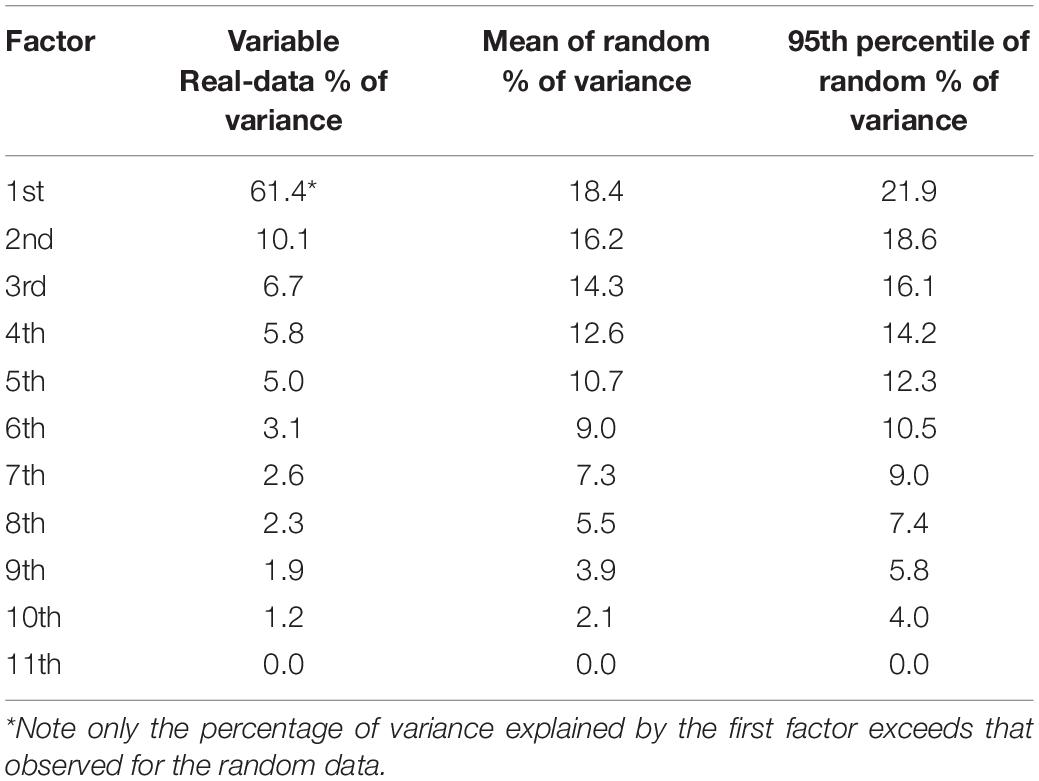

Dimensionality

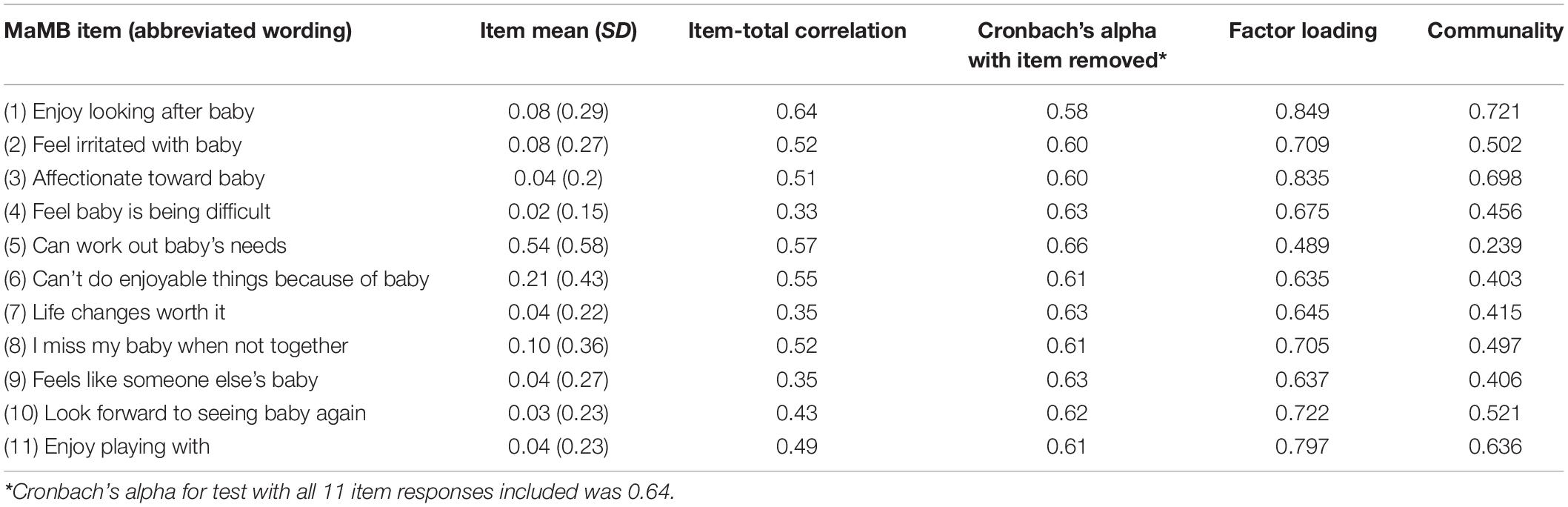

Firstly, a parallel analysis was conducted using the software FACTOR. This generates pseudorandom data with the same dimensions as the real data; the process was adapted for the ordinal response data using polychoric correlation matrices. Missing data values were handled using hot deck multiple imputation (Lorenzo-Seva and Van Ginkel, 2016). The results of the parallel analysis are shown in Table 2. These clearly indicate that there is a maximum of one factor (latent variable) underlying the response structure: the first latent variable explains around 60% of the variance in the indicators (item responses), whereas a second postulated latent variable explains less variance than the second latent variable in the pseudorandom data. The reliability, as indexed by Cronbach’s alpha, was 0.64 (standardized Cronbach’s alpha 0.92), and McDonald’s omega was 0.92. The goodness of fit index for the one-factor EFA was 0.985 (95% confidence interval, derived via bootstrapping, 0.985–0.989). The psychometric properties of the items are shown in Table 3. The standardized covariance matrix (polychoric correlations) estimated from an ordinal factor analysis of the MaMB items in the FACTOR software package is provided in Supplementary Table 1.
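The general idea of hot deck multiple imputation can be sketched as follows: each missing value is filled with an observed value borrowed from a "donor" respondent, and this is repeated to create several completed datasets that are analyzed separately and then pooled. The procedure implemented in FACTOR (Lorenzo-Seva and Van Ginkel, 2016) is more elaborate; this is a plain random hot deck within each item, a deliberate simplification, and the function name is hypothetical.

```python
import random

def random_hot_deck(rows, n_imputations=5, seed=7):
    """Return n_imputations completed copies of rows (None = missing).

    Each missing value is replaced by the observed value of a randomly chosen
    donor on the same item (simple random hot deck, no donor matching).
    Assumes every item has at least one observed value.
    """
    rng = random.Random(seed)
    n_items = len(rows[0])
    observed = [[r[j] for r in rows if r[j] is not None] for j in range(n_items)]
    completed_sets = []
    for _ in range(n_imputations):
        completed = [[v if v is not None else rng.choice(observed[j])
                      for j, v in enumerate(r)] for r in rows]
        completed_sets.append(completed)
    return completed_sets
```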

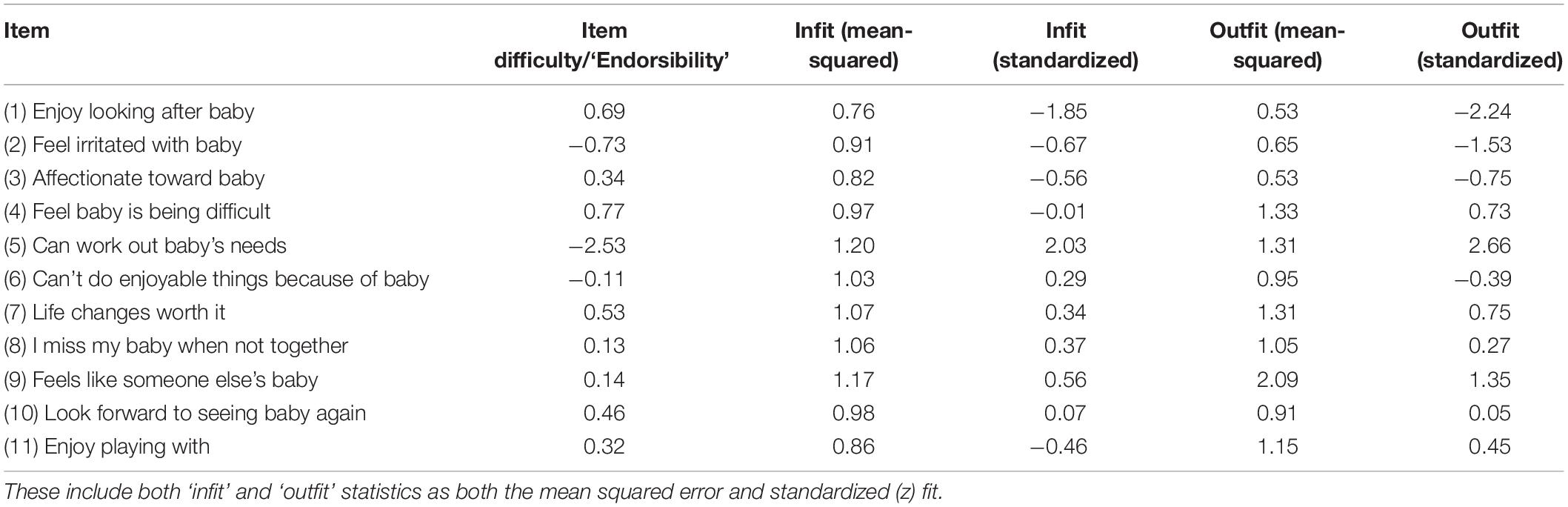

Table 3. Psychometric properties of the MaMB items, including exploratory factor analysis results, assuming one underlying factor (dimension).

This unidimensional structure was confirmed by examining the fit of a single-factor confirmatory factor analytic model in the Mplus v8.4 software environment. This confirmatory factor analysis (CFA) was adapted for the ordinal nature of the response data, using robust weighted least squares (WLSMV) as the estimation method. There were technical difficulties estimating a one-factor model due to the low variance in items 4 and 5 and their collinearity with responses to items 10 and 11, respectively (that is, responses to the latter items were almost perfectly predictable from responses to the former). Specifically, the correlation between item 4 (‘difficult’) and item 10 (‘apart’) was 0.987, as was that between item 5 (‘need’) and item 11 (‘play’). Consequently, items 4 and 5 (the lower-variance item in each pair) were dropped from the CFA. When the CFA was repeated with the remaining nine items, the one-factor model showed an acceptable fit to the data: the Comparative Fit Index (CFI) and Tucker-Lewis Index (TLI) were 0.94 and 0.92, respectively (values ≥0.90 are usually taken as acceptable fit, whilst values over 0.95 indicate good fit). Combining positively and negatively worded items in a single scale can sometimes produce artificial method effects; that is, these item types can show dependency on each other that manifests as correlated model residuals or ‘artifactors’ (Marsh, 1996). For this reason, the residuals of negatively worded items were permitted to correlate within the CFA model to evaluate whether this improved fit. It did not; if anything, fit deteriorated slightly (the TLI fell from 0.92 to 0.91). Moreover, the modification indices did not suggest that fit would be significantly improved by permitting correlated residuals between items. Dependency between items was also evaluated as part of the Rasch calibration (see below).
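The rule applied above (for each near-collinear item pair, drop the lower-variance member) can be sketched as follows. The sketch uses Pearson correlations on complete-case raw responses and a hypothetical threshold, whereas the reported correlations of 0.987 come from the ordinal analysis; the demo columns are invented and only borrow the item labels.

```python
import math
from itertools import combinations
from statistics import pvariance

def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def collinear_items_to_drop(columns, threshold=0.95):
    """For each item pair with |r| above threshold, flag the lower-variance item.

    columns: dict mapping item label -> list of complete-case responses.
    """
    to_drop = set()
    for a, b in combinations(columns, 2):
        if abs(pearson(columns[a], columns[b])) > threshold:
            to_drop.add(a if pvariance(columns[a]) < pvariance(columns[b]) else b)
    return to_drop

# Invented responses using the item labels from the text.
demo = {
    "difficult": [0] * 18 + [1, 1],
    "apart": [0] * 18 + [1, 2],
    "enjoy": [0, 1, 2, 3] * 5,
}
print(collinear_items_to_drop(demo, threshold=0.9))
```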

The factor loadings of all nine MaMB items included were substantial (>0.4), positive and statistically significant (p < 0.01). Negatively worded items were reverse coded so that the latent variable and the item factor loadings were interpretable. Having established the unidimensional structure of the data, it appeared appropriate to progress to a Rasch calibration of the MaMB items.

Rasch Analysis

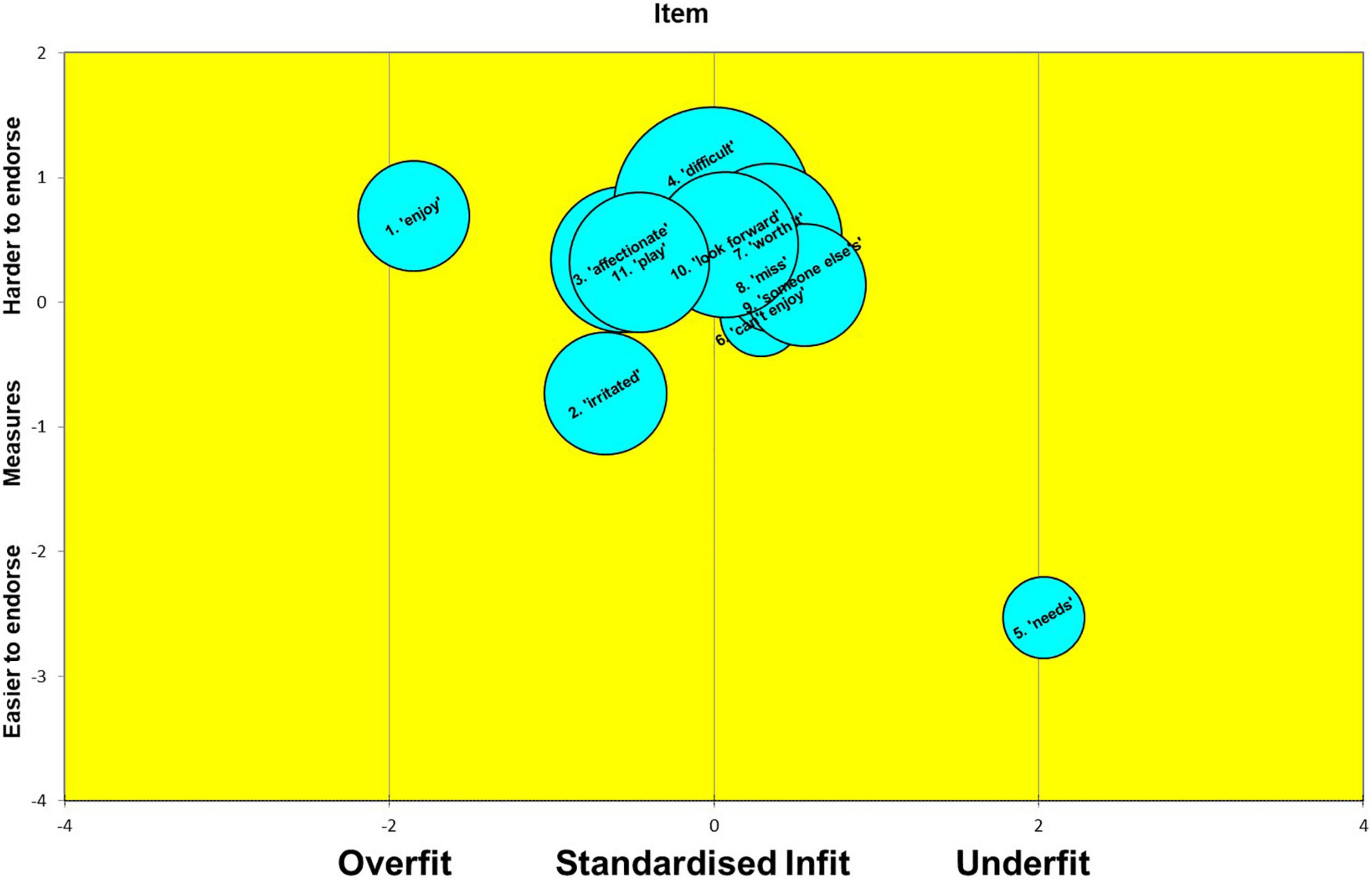

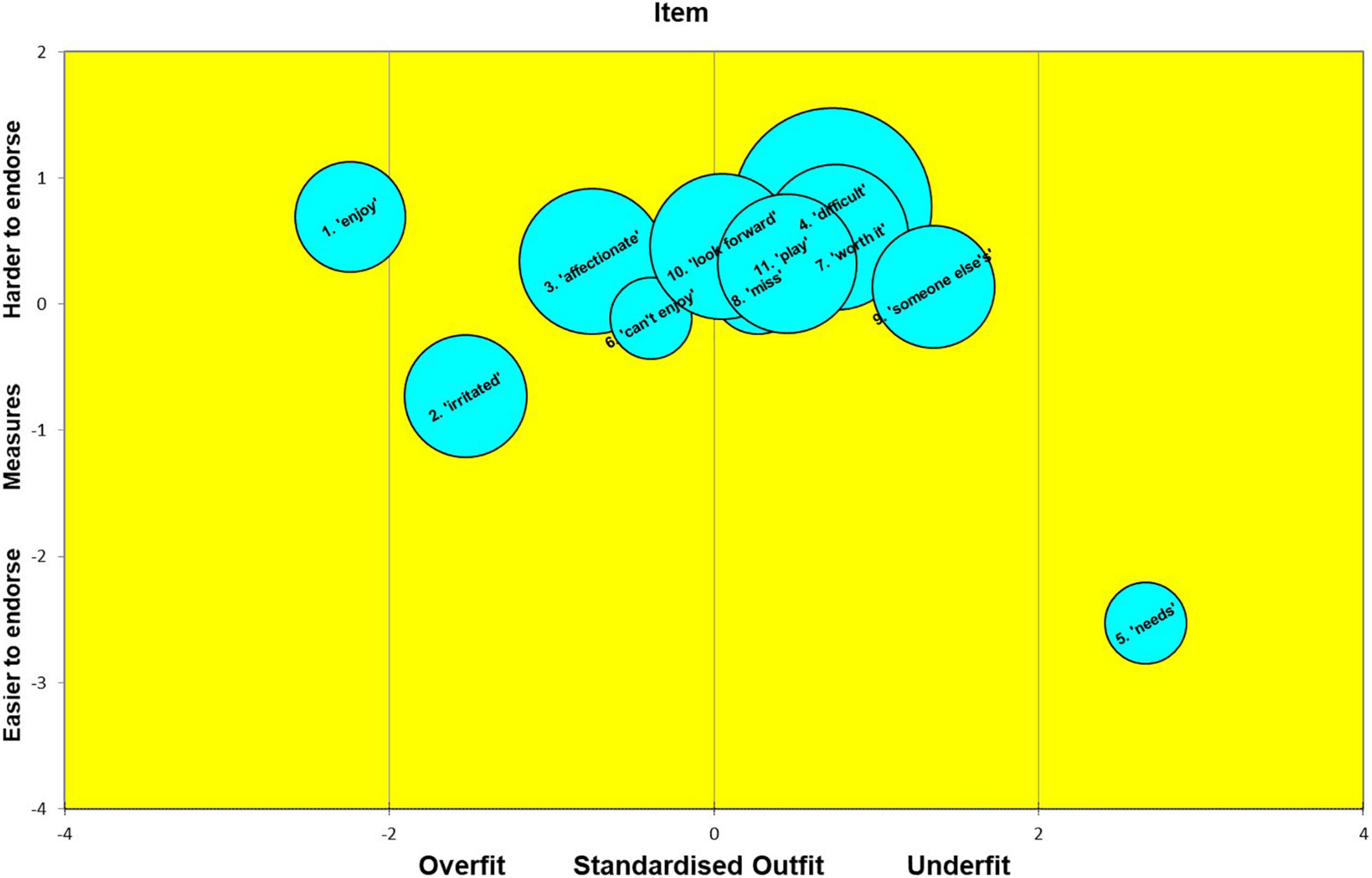

The Rasch calibration yielded much useful diagnostic information on the MaMB questionnaire. As highlighted earlier, the scale reliability itself was moderate to high; indeed, the item reliability estimated by the Rasch calibration was 0.94. However, the person separation index (which includes ‘extreme’ and ‘non-extreme’ persons) was only 0.10. The person separation index is a signal-to-noise ratio that reflects the number of groups the scale can plausibly differentiate with acceptable precision. Thus, the MaMB scale had virtually no ability to differentiate respondents, which most likely reflects the lack of observed variance in responses in the study sample. Nevertheless, for scale development and future research it is useful to explore the item ‘difficulties’ (or ‘endorsability’ in this case), as well as the fit statistics. These are shown in Table 4. The z-standardized fit, along with the difficulty/endorsability and standard error (reflected in the diameter of each bubble), are also shown in the ‘bubble plots’ in Figures 2, 3.

In the Rasch context, ‘fit’ refers to the extent to which item responses follow a Guttman sequence (Rasch, 1960). That is, as the ability or trait increases, the respondent or test-taker tends to give a higher-scoring category of response, allowing for the play of chance, e.g., 0010101112221221222223323333. Items whose responses are too predictable ‘overfit’ the model; those whose responses are more erratic ‘underfit’ it. Overfit tends to indicate redundant items that may be dependent on responses to other items, whereas underfitting items can distort or degrade the measurement properties of the scale. ‘Infit’ refers to fit where an item’s ‘difficulty’ is well matched to the trait or ability level of a test taker. For example, on a right/wrong maths question, a well-matched person would have a 50:50 chance of answering correctly; likewise, ‘well-targeted’ items would tend to show a reasonable spread of responses among test takers whose trait levels match the item endorsability. Conversely, ‘outfit’ refers to fit (conformity to the Rasch model) where item difficulty is not well matched to the test taker’s trait or ability level. These distinctions between infit and outfit tend, however, to be more pertinent to knowledge tests than to trait assessments. As can be seen from Table 4 and Figures 2, 3, overall the MaMB items conform reasonably well to the Rasch model. However, there are four key issues.

Figure 2. Bubble plot of the MaMB items, showing each item’s estimated endorsability (‘measure’), the standard error of this estimate (bubble diameter), and its degree of ‘infit’ according to the Rasch model.

Figure 3. Bubble plot of the MaMB items, showing each item’s estimated endorsability (‘measure’), the standard error of this estimate (bubble diameter), and its degree of ‘outfit’ according to the Rasch model.

(1) The items seem very easy to endorse (or, in the case of negatively worded items, very hard to endorse). This can be seen from the ‘measure’ estimates, which tend to be around or above the zero point (a standardized trait estimate derived from the item responses).

(2) Two items tend to overfit the model: ‘enjoy’ (item 1) and ‘irritated’ (item 2). Responses to these items tend to be somewhat too predictable from the responses to the other items. However, this observation should be viewed cautiously, as only the z-standardized fit showed misfit, and this statistic can be sensitive to relatively large numbers of observations (e.g., >300).

(3) One item (item 9, ‘I feel like I’m looking after my baby for someone else’) tends to show poor outfit. This suggests some erratic ratings by those respondents whose estimated trait level was some distance from the item ‘measure’ (endorsability).

(4) One item (‘I can work out what my baby needs from me’) showed poor infit and outfit, at least on the ‘z’ fit statistics. This suggests the item may have been answered relatively erratically. It may be that different respondents read or interpreted the item differently from each other; for example, some may have interpreted it in terms of basic needs, whilst others interpreted it more in terms of emotional needs. It may be useful to explore whether this item shows any item bias or differential item functioning in relation to demographic factors.
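To make the infit/outfit distinction concrete, the sketch below computes both mean squares (MNSQ) and their z-standardized (ZSTD) values for a single item. It assumes the Andrich rating scale model, with person measures, the item measure and category thresholds already estimated (all inputs here are hypothetical), and follows the commonly used Wright and Masters formulas with the Wilson–Hilferty cube-root transformation. The function names are illustrative, and Winsteps’ own implementation may differ in detail.

```python
import math


def category_probs(theta, delta, taus):
    """Andrich rating scale model: P(X = k) for k = 0..len(taus)."""
    logits = [0.0]
    for tau in taus:
        logits.append(logits[-1] + (theta - delta - tau))
    top = max(logits)  # subtract the max for numerical stability
    expo = [math.exp(v - top) for v in logits]
    total = sum(expo)
    return [e / total for e in expo]


def wilson_hilferty(mnsq, q):
    """Cube-root transform of a mean square into an approximate unit-normal z."""
    return (mnsq ** (1 / 3) - 1) * (3 / q) + q / 3


def item_fit(responses, thetas, delta, taus):
    """Infit/outfit mean squares and ZSTD for one item across persons."""
    n = len(responses)
    sum_y2 = sum_w = sum_z2 = sum_c_over_w2 = sum_c_minus_w2 = 0.0
    for x, theta in zip(responses, thetas):
        probs = category_probs(theta, delta, taus)
        e = sum(k * p for k, p in enumerate(probs))  # expected score
        w = sum((k - e) ** 2 * p for k, p in enumerate(probs))  # model variance
        c = sum((k - e) ** 4 * p for k, p in enumerate(probs))  # 4th central moment
        y2 = (x - e) ** 2  # squared score residual
        sum_y2 += y2
        sum_w += w
        sum_z2 += y2 / w  # squared standardized residual
        sum_c_over_w2 += c / w ** 2
        sum_c_minus_w2 += c - w ** 2
    outfit = sum_z2 / n  # unweighted: every person counts equally
    infit = sum_y2 / sum_w  # information-weighted: well-matched persons count most
    # Model standard deviations of the mean squares (small floor avoids q = 0).
    q_out = math.sqrt(max(sum_c_over_w2 / n ** 2 - 1 / n, 1e-12))
    q_in = math.sqrt(max(sum_c_minus_w2 / sum_w ** 2, 1e-12))
    return {
        "infit_mnsq": infit,
        "infit_zstd": wilson_hilferty(infit, q_in),
        "outfit_mnsq": outfit,
        "outfit_zstd": wilson_hilferty(outfit, q_out),
    }


if __name__ == "__main__":
    thetas = [-2.0, -1.0, 1.0, 2.0]
    # Dichotomous toy item (one threshold at 0) with hypothetical responses.
    print(item_fit([0, 0, 1, 1], thetas, delta=0.0, taus=[0.0]))  # Guttman-like: overfit
    print(item_fit([1, 1, 0, 0], thetas, delta=0.0, taus=[0.0]))  # reversed: underfit
```

The Guttman-like pattern (low measures score 0, high measures score 1) gives mean squares below 1 and negative ZSTD (overfit); the reversed pattern gives mean squares above 1 and positive ZSTD (underfit). Because q shrinks roughly in proportion to 1/√N, the same mean square yields a larger |ZSTD| as the number of observations grows, which is why z-only misfit in a sample of several hundred respondents warrants caution.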

In terms of ‘person fit’, only 16 of the 438 (3.7%) participants showed marked underfit to the Rasch model, as indicated by a standardized infit or outfit greater than 2.0. That is, their responses were more erratic than the Rasch model would have predicted. In contrast, only one respondent showed marked overfit, defined as a standardized infit and/or outfit of less than –2.0.
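The person-fit criteria above amount to a simple classification rule. The sketch below assumes each person’s standardized infit and outfit (ZSTD) values have already been computed; the function name and the toy data are hypothetical, constructed only to reproduce the 16-of-438 arithmetic rather than the study data.

```python
def summarize_person_fit(person_zstd, cutoff=2.0):
    """Count persons with marked underfit or overfit.

    person_zstd: list of (infit_zstd, outfit_zstd) pairs, one per person
    Underfit: infit or outfit ZSTD above +cutoff (more erratic than expected).
    Overfit: infit or outfit ZSTD below -cutoff (more predictable than expected).
    """
    n = len(person_zstd)
    underfit = sum(1 for i, o in person_zstd if i > cutoff or o > cutoff)
    overfit = sum(1 for i, o in person_zstd if i < -cutoff or o < -cutoff)
    return {
        "n": n,
        "underfit": underfit,
        "underfit_pct": 100 * underfit / n,
        "overfit": overfit,
    }


if __name__ == "__main__":
    # Toy data: 16 erratic persons, 1 overly predictable, 421 unremarkable.
    toy = [(2.5, 1.0)] * 16 + [(-2.4, -2.1)] + [(0.0, 0.0)] * 421
    summary = summarize_person_fit(toy)
    print(summary["underfit"], round(summary["underfit_pct"], 1), summary["overfit"])
```

The script prints `16 3.7 1`, matching the proportions reported above.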

The potential for item responses to be dependent on each other was investigated by examining the matrix of correlated residuals from the Rasch model between pairs of items. In general, the magnitude of these correlations was very small (average 0.08). The only more substantial correlated residual (magnitude ≥0.3) was between item 5 (‘I can work out what my baby needs from me’) and item 6 (‘I feel like I can’t do things I enjoy because of my baby’), at –0.31. It is not clear why this dependency was observed, though given that only one of the 55 item pairs exceeded 0.3 in magnitude, this could be a chance finding.
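The local-dependence check described above can be sketched as follows: correlate the standardized residuals, (observed − expected)/√(model variance), of every pair of items and flag pairs whose correlation reaches 0.3 in magnitude. This assumes a persons × items residual matrix has already been obtained from the fitted Rasch model. The function names are hypothetical, and ‘average’ is taken here as the mean absolute correlation, which is an assumption about how the reported 0.08 was summarized.

```python
import math
from itertools import combinations


def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def residual_correlations(std_resid, threshold=0.3):
    """Correlate standardized Rasch residuals for every pair of items.

    std_resid: list of rows (one per person), each a list of residuals per item
    Returns (all pair correlations, flagged pairs, mean absolute correlation).
    """
    n_items = len(std_resid[0])
    columns = [[row[j] for row in std_resid] for j in range(n_items)]
    # k items give k * (k - 1) / 2 distinct pairs.
    pairs = {(i, j): pearson(columns[i], columns[j])
             for i, j in combinations(range(n_items), 2)}
    flagged = {pair: r for pair, r in pairs.items() if abs(r) >= threshold}
    mean_abs = sum(abs(r) for r in pairs.values()) / len(pairs)
    return pairs, flagged, mean_abs


if __name__ == "__main__":
    print(math.comb(11, 2))  # number of distinct pairs among 11 items
```

The script prints 55, the number of item pairs quoted in the text (11 × 10 / 2).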

Item Category Probabilities

It was apparent that most of the items were not operating as four-point Likert scales. That is, for many items not all four response categories were observed in this sample of respondents. Moreover, some intermediate response categories were rarely observed. In effect, this means that even a respondent with a higher trait level may still be observed choosing a lower response category. This is sometimes referred to as ‘Rasch-Andrich threshold suppression.’ The effect is illustrated by the item category probability curves for item 11. Although respondents who selected a response scored ‘2’ had higher trait levels than those who scored ‘1’ (‘0’ was not observed), in practice respondents were more likely to be observed choosing the ‘1’ category, as so few chose ‘2.’ These findings suggest that, at least for the kind of general population sample used in this study, four Likert scale points may be too many; that is, they may not yield more information on a test-taker and may introduce some risk of extreme response style (ERS) bias. Supplementary Figure 1 shows the probability of a respondent choosing each response category according to their overall trait level (‘baby bonding’). Note that the curves do not always follow the order of the responses (0→1→2→3).
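The pattern described for item 11 is what a Rasch-Andrich model produces when its thresholds are disordered. The sketch below assumes the Andrich rating scale parameterization and uses made-up threshold values (not the MaMB estimates) to show that, when a higher threshold is estimated below a lower one, the intermediate category is never the most probable response at any trait level. The function names and the grid of trait values are illustrative choices.

```python
import math


def category_probs(theta, delta, taus):
    """Andrich rating scale model: P(X = k) for k = 0..len(taus)."""
    logits = [0.0]
    for tau in taus:
        logits.append(logits[-1] + (theta - delta - tau))
    top = max(logits)  # subtract the max for numerical stability
    expo = [math.exp(v - top) for v in logits]
    total = sum(expo)
    return [e / total for e in expo]


def thresholds_ordered(taus):
    """True if each Rasch-Andrich threshold is higher than the one before."""
    return all(a < b for a, b in zip(taus, taus[1:]))


def never_modal_categories(delta, taus, lo=-6.0, hi=6.0, steps=1201):
    """Categories that are never the most probable response on a theta grid."""
    modal = set()
    for s in range(steps):
        theta = lo + (hi - lo) * s / (steps - 1)
        probs = category_probs(theta, delta, taus)
        modal.add(max(range(len(probs)), key=probs.__getitem__))
    return sorted(set(range(len(taus) + 1)) - modal)


if __name__ == "__main__":
    ordered = [-1.5, 0.0, 1.5]  # hypothetical, well-behaved item scored 0-3
    disordered = [0.5, -0.5]    # hypothetical item scored 0-2: threshold 2 below threshold 1
    print(thresholds_ordered(ordered), never_modal_categories(0.0, ordered))
    print(thresholds_ordered(disordered), never_modal_categories(0.0, disordered))
```

The script prints `True []` for the ordered item and `False [1]` for the disordered one: in the second case category 1 is never the modal response anywhere on the trait continuum, mirroring the rarely chosen intermediate categories described above.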

Test Information

As would be expected for a test composed mainly of easily endorsed items, most of the area under the test information curve lay over trait levels slightly below the average, that is, for test takers likely to give midrange responses to easily endorsed items. This can be seen from the peak of the test information curve lying just below zero on the x-axis. This suggests the item calibration is not ideal for picking out mothers who may be struggling to bond with their babies (i.e., those who are likely to obtain a lower total score on the MaMB scale). The test information curve is depicted in Supplementary Figure 2.

Discussion

There is a paucity of high-quality tools to assess parent–infant relationships. The MaMB was co-developed to address this gap and to act as a tool for measuring bonding in research and universal health settings.

The results suggest that it is feasible for HVs to administer the MaMB with mothers in universal services. HVs successfully completed the MaMB with approximately 50% of the universal population at the 6–8-week visit, in the context of services under heavy pressure due to the COVID-19 global pandemic. Given the low rates of missing data, the MaMB appears to be acceptable to parents.

The psychometric analyses suggest that the MaMB tool responses, in this sample of test takers, were unidimensional. The MaMB showed relatively high internal consistency reliability, and the items generally fitted the Rasch model. However, the high reliability may be partly an artifact of the lack of variation in responses observed; almost all respondents gave high-scoring categories on the items. The items did not generally behave as four-point response format questions, as it was common for some response categories to go unobserved. Consequently, test information was relatively low, much less than may be required to identify at least two separate groups of respondents, e.g., if the MaMB were to be used as a screening tool.
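The requirement to separate at least two groups can be expressed with the standard Rasch separation statistics. The sketch below illustrates the commonly used definitions (separation G = ‘true’ SD of the measures divided by their root mean square standard error; reliability = G²/(1 + G²); statistically distinct strata = (4G + 1)/3), assuming person measures and their standard errors in logits are already available from a calibration. The function names are hypothetical, and Winsteps may differ in details such as how extreme scores are handled.

```python
import math
from statistics import pvariance


def strata_from_separation(g):
    """Number of statistically distinct levels implied by separation index g."""
    return (4 * g + 1) / 3


def separation_and_reliability(measures, standard_errors):
    """Return (separation G, reliability, strata) for a set of Rasch measures.

    measures: estimated person (or item) measures, in logits
    standard_errors: the standard error of each measure, same order
    """
    if len(measures) != len(standard_errors) or len(measures) < 2:
        raise ValueError("need at least two measures with matching standard errors")
    observed_var = pvariance(measures)  # observed spread = signal + noise
    error_var = sum(se ** 2 for se in standard_errors) / len(standard_errors)  # noise
    if error_var <= 0:
        raise ValueError("standard errors must be positive")
    true_var = max(observed_var - error_var, 0.0)  # signal, floored at zero
    g = math.sqrt(true_var) / math.sqrt(error_var)  # separation: true SD / RMSE
    reliability = g ** 2 / (1 + g ** 2)  # equals true_var / observed_var when not floored
    return g, reliability, strata_from_separation(g)


if __name__ == "__main__":
    print(round(strata_from_separation(0.10), 2))  # reported person separation
```

With the person separation index of 0.10 reported in the results, (4 × 0.10 + 1)/3 ≈ 0.47 strata, fewer than one; setting the strata formula equal to 2 gives G = 1.25 as the separation needed to distinguish two groups.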

For the 29 parents who completed the free-text section, it appeared to be a useful part of the MaMB, offering an opportunity to expand on their item responses and to voice feelings or concerns. Responses suggest parents were engaging in a meaningful discussion about bonding with their health visitor. This suggests the MaMB could be considered a potential catalyst for opening discussions about sensitive aspects of parenting, such as experiencing guilt for wanting some ‘alone’ time, or for feeling less positive when their baby is screaming. Such open conversations suggest that the tool could fit well within a pathway for accessing specialist services, such as infant mental health services.

Strengths

The MaMB was co-developed over a series of workshops and interviews, using an iterative process with HVs, Clinical Psychologists, service staff and managers from different localities, and included parental input. It was piloted within routine HV contacts and, although the pilot was delivered during the COVID-19 pandemic with many visits taking place remotely or with restrictions, completed MaMBs were obtained from 50% of the eligible population. The pilot study was classed as research as opposed to service design and had ethical approval as such. Previous psychometric analyses have focused on exploratory and confirmatory factor analysis; however, this study also included item response theory (IRT) modeling, which affords additional rigor and confidence in the results.

Limitations

Some HV teams would have conducted some core 6–8-week contacts over the telephone rather than in the family home due to COVID-19; however, we do not have data on how many. This may have led to a lower completion rate for the MaMB.

A much smaller comparison group of non-completers than anticipated was achieved. This was because HVs appeared not to complete, or only partially completed, a cover sheet with demographic information if a mother did not wish to complete the MaMB. The pilot was conducted during the COVID-19 pandemic, during which time HVs were under enormous pressure to continue delivering statutory support to families despite adverse circumstances, which likely contributed to the non-completion of cover sheets.

Deviations From the Registered Protocol

Due to the limited information on non-completers, we were unable to conduct the planned statistical analyses comparing the characteristics of completers and non-completers. The limited amount of free-text data in completed MaMBs also prevented a planned thematic analysis, though it was sufficient to provide useful information in a descriptive summary.

Future Research

The findings of this study suggest that the MaMB is a promising tool to assess parent–infant relationships. Future research directions fall across three domains: (1) understanding practitioner experiences, (2) expanding the sample of users, and (3) refining the approach to measurement.

Understanding Practitioner Experiences

Practitioners such as health visitors are a key component of using a measure of parent–infant relationships. A better understanding of their experience supporting mothers to complete the MaMB tool would help to further refine the tool. Obtaining ethical approval to ask HVs from the current study their views on completing the MaMB would be a priority for future research.

Expanding Sample of Users

This study found that most participants responded similarly to items on the MaMB. Further piloting of the tool with an expanded sample of users would help to understand the reason for this limited range of responses. For example, use with mothers experiencing mental health difficulties in the perinatal period would be particularly valuable. We might hypothesize that those within the clinical range of depression measures may respond differently when asked about their bond with their baby. This would be highly likely to result in more observed variance in the items. It may also show whether the tool is able to discriminate, with any precision, between at least two different groups of respondents. Note that, in theory, Rasch estimates are ‘sample free’ (that is, the estimates should be the same irrespective of the sample of test takers used for the calibration). However, in practice, precise estimates of item fit and difficulty may not be achieved, even with large samples, if some response categories are rarely or never observed.

It was appropriate for this first pilot to target mothers, who are typically primary caregivers. However, we know that there is increasing variability in those who take on the primary caregiver role across society. Piloting the MaMB tool with a diverse range of caregivers would enable exploration of differences and similarities across responses for wider parent–infant relationships. It would also support use of the tool in practice, where fathers, same-sex parents, or other kinship carers may be caring for a baby.

Refining Approach to Measurement

To increase the variation across responses, future research could test the MaMB tool with amended items (as highlighted in the results) that are more subtle. This could be helpful in picking up difficulties with bonding and attachment in parents or caregivers. Moreover, future research could evaluate the tool with a three-point Likert scale, as opposed to the four-point scale used in the current study. This could help to increase variation across items.

Conclusion

Health visitors successfully administered the MaMB in universal services, and the MaMB appears to be acceptable to parents. The MaMB demonstrated good internal consistency and may support HV signposting decisions for additional support. However, as the more robust analysis shows, if the MaMB were to be used as a screening tool, with a cut-off or ranges of ‘concern’, then additional work is needed; this will need to include more families with risk factors such as depression in an enriched sample.

Regarding our objectives, we consider the MaMB to be feasible for use in routine practice with some amendments, and future piloting of such amendments.

Associated Protocol

Version 2, 7th December 2020 – to access the protocol for further information please visit: https://osf.io/q3hmf/.

Bywater, T., Blower, S. L., Dunn, A., Endacott, C., Smith, K., and Tiffin, P. (2020, December 7). Me and My Baby Protocol: The measurement properties and acceptability of a new mother-infant bonding tool designed for use in a universal healthcare setting in the United Kingdom. Retrieved from osf.io/6br2e.

Study Sponsor

The University of York. Data sharing agreements and data management were compliant with the General Data Protection Regulation (GDPR) and University of York data management policies.

Research Reference Numbers

• IRAS Number: 273708

• This study received ethical approval on 21st August 2020 by South Central - Berkshire B Research Ethics Committee, United Kingdom, Ref: 20/SC/0266, Integrated Research Application System (IRAS) 201, project ID: 273708.

• Funder References: HEIF - H0026802, ARC-YH: NIHR200166, The National Lottery Community Fund (previously the Big Lottery Fund) – 0094849, National Institute for Health Research (NIHR) Public Health Research (PHR) (ref 13/93/10).

• OSF Study Registration Number: osf.io/6br2e.

Data Availability Statement

The datasets presented in this manuscript are not readily available because the data sharing agreement stipulated RDaSH anonymized data could be shared with UoY, but no agreement exists for UoY to share the data externally. Additional agreements would possibly be required to do this. Requests to access the datasets should be directed to tracey.bywater@york.ac.uk.

Ethics Statement

The studies involving human participants were reviewed and approved by Berkshire REC. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author Contributions

TB secured funding, wrote the initial draft, will act as guarantor and affirms that the manuscript is an honest, accurate, transparent, and full account. TB and AD conceived the study. TB, AD, CE, KS, PT, and SB designed various aspects of the study. TB and SB provided supervision of the study from the academic perspective (UoY) and KS from the practitioner perspective (RDaSH). PT conducted the statistical analysis and provided psychometric expertise. KS and MP participated in co-developing the tool and provided clinical and practitioner expertise. AD and CE were research fellows on this study and coordinated and conducted various activities. All authors have contributed and commented on subsequent drafts of this manuscript, meet authorship criteria and no others meeting the criteria have been omitted.

Funding

Higher Education Innovation Fund (HEIF; Ref H0026802), Research and Enterprise, University of York provided funding for some research fellow time and expenses. National Institute for Health Research; Applied Research Collaboration – Yorkshire and Humber (NIHR; ARC-YH; Ref NIHR200166) supported TB and SB and provided additional in-kind costs. National Institute for Health Research (NIHR) Public Health Research (PHR) (ref 13/93/10) funded Open Access fees and some staff time. RDaSH provided their staff time and other in-kind costs. The National Lottery Community Fund (previously the Big Lottery Fund) funded some staff time, and other in-kind costs (Ref 10094849).

Author Disclaimer

The views expressed in this publication are those of the authors and not necessarily those of the NHS, the NIHR, or the Department of Health and Social Care. This study also received funding from the National Lottery Community Fund (previously the Big Lottery Fund) as part of the ‘A Better Start’ program. The National Lottery Community Fund have not had any involvement in the design or writing of the manuscript.

Conflict of Interest

TB and SB are supported by the NIHR Yorkshire and Humber Applied Research Collaboration (ARC–YH). KS is an employee of RDaSH.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

This study could not be conducted without the support of RDaSH, the health visiting teams from Doncaster and North Lincolnshire, and of course the families – we extend our gratitude to these key stakeholders, and to other professionals and parents across Yorkshire and Lincolnshire who participated in the co-development of the MaMB. We would also like to thank the funders (see funding section for information), and the ARC-YH management group for their oversight.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2022.804885/full#supplementary-material

References

Ainsworth, M., Blehar, M., Waters, E., and Wall, S. N. (1978). Patterns of Attachment: A Psychological Study of the Strange Situation. Hove, UK: Psychology Press.

Appleton, J. V., Harris, M., Oates, J., and Kelly, C. (2013). Evaluating health visitor assessments of mother-infant interactions: a mixed methods study. Int. J. Nurs. Stud. 50, 5–15. doi: 10.1016/j.ijnurstu.2012.08.008

Asparouhov, T., and Muthén, B. (2009). Exploratory Structural Equation Modeling. Struct. Equ. Modeling 16, 397–438. doi: 10.1080/10705510903008204

Barlow, J., McMillan, A. S., Kirkpatrick, S., Ghate, D., Barnes, J., and Smith, M. (2010). Health-Led Interventions in the Early Years to Enhance Infant and Maternal Mental Health: a Review of Reviews. Child Adolesc. Mental Health 15, 178–185. doi: 10.1111/j.1475-3588.2010.00570.x

Barlow, J., Schrader-McMillan, A., Axford, N., Wrigley, Z., Sonthalia, S., Wilkinson, T., et al. (2016). Review: attachment and attachment-related outcomes in preschool children – a review of recent evidence. Child Adolesc. Mental Health 21, 11–20. doi: 10.1111/camh.12138

Baur, T., and Lukes, D. (2009). An Evaluation of the IRT Models Through Monte Carlo Simulation. Madison, WI: University of Wisconsin.

Bird, P. K., Hindson, Z., Dunn, A., de Chavez, A. C., Dickerson, J., Howes, J., et al. (2021). Implementing the Maternal Postnatal Attachment Scale in universal services: qualitative interviews with health visitors. (Submitted). medRxiv [preprint]. doi: 10.1101/2021.11.30.21267065

Blower, S. L., Gridley, N., Dunn, A., Bywater, T., Hindson, Z., and Bryant, M. (2019). Psychometric Properties of Parent Outcome Measures Used in RCTs of Antenatal and Early Years Parent Programs: a Systematic Review. Clin. Child Fam. Psychol. Rev. 22, 367–387. doi: 10.1007/s10567-019-00276-2

Bowlby, J. (1982). Attachment and loss: retrospect and prospect. Am. J. Orthopsych. 52, 664–678. doi: 10.1111/j.1939-0025.1982.tb01456.x

Branjerdporn, G., Meredith, P., Strong, J., and Garcia, J. (2017). Associations Between Maternal-Foetal Attachment and Infant Developmental Outcomes: a Systematic Review. Maternal Child Health J. 21, 540–553. doi: 10.1007/s10995-016-2138-2

Brockington, I. F., Fraser, C., and Wilson, D. (2006). The Postpartum Bonding Questionnaire: a validation. Arch. Womens Ment. Health 9, 233–242. doi: 10.1007/s00737-006-0132-1

Bywater, T. J., Berry, V., Blower, S. L., Cohen, J., Gridley, N., Kiernan, K., et al. (2018). Enhancing social-emotional health and wellbeing in the early years (E-SEE). A study protocol of a community-based randomised controlled trial with process and economic evaluations of the Incredible Years infant and toddler parenting programmes, delivered in a Proportionate Universal Model. BMJ Open 8:e026906. doi: 10.1136/bmjopen-2018-026906

Cassidy, J., Jones, J. D., and Shaver, P. R. (2013). Contributions of attachment theory and research: a framework for future research, translation, and policy. Devel. Psychopathol. 25, 1415–1434. doi: 10.1017/s0954579413000692

Condon, J. T. (1993). The assessment of antenatal emotional attachment: development of a questionnaire instrument. Br. J. Med. Psychol. 66, 167–183. doi: 10.1111/j.2044-8341.1993.tb01739.x

Condon, J. T., and Corkindale, C. J. (1998). The assessment of parent-to-infant attachment: development of a self-report questionnaire instrument. J. Reproduct. Infant Psychol. 16, 57–76. doi: 10.1080/02646839808404558

de Vet, H. W. W., Terwee, C. B., Mokkink, L. B., and Knol, D. L. (2015). Measurement in Medicine. Cambridge: Cambridge University Press.

Dickerson, J., Bird, P. K., McEachan, R. R., Pickett, K. E., Waiblinger, D., Uphoff, E., et al. (2016). Born in Bradford’s Better Start: an experimental birth cohort study to evaluate the impact of early life interventions. BMC Public Health 16:711. doi: 10.1186/s12889-016-3318-0

Dunn, A., Bird, P., Bywater, T., Dickerson, J., and Howes, J. (2021). Implementing the Maternal Postnatal Attachment Scale (MPAS) in universal services: Qualitative interviews with health visitors. medRxiv [preprint].

Elmer, J. R. S., O’Shaughnessy, R., Bramwell, R., and Dickson, J. M. (2019). Exploring health visiting professionals’ evaluations of early parent-infant interactions. J. Reproduct. Infant Psychol. 37, 554–565. doi: 10.1080/02646838.2019.1637831

Facompre, C. R., Bernard, K., and Waters, T. E. (2018). Effectiveness of interventions in preventing disorganized attachment: a meta-analysis. Devel. Psychopathol. 30, 1–11. doi: 10.1017/s0954579417000426

Fearon, R. P., Bakermans-Kranenburg, M. J., van Ijzendoorn, M. H., Lapsley, A. M., and Roisman, G. I. (2010). The significance of insecure attachment and disorganization in the development of children’s externalizing behavior: a meta-analytic study. Child Dev. 81, 435–456. doi: 10.1111/j.1467-8624.2009.01405.x

Feldman, R., Weller, A., Leckman, J. F., Kuint, J., and Eidelman, A. I. (1999). The nature of the mother’s tie to her infant: maternal bonding under conditions of proximity, separation, and potential loss. J. Child Psychol. Psychiatry. Allied Discipl. 40, 929–939. doi: 10.1111/1469-7610.00510

Goldman, S. H., and Raju, N. S. (1986). Recovery of One- and Two-Parameter Logistic Item Parameters: an Empirical Study. Educat. Psychol. Measur. 46, 11–21. doi: 10.1177/0013164486461002

Gridley, N., Blower, S., Dunn, A., Bywater, T., and Bryant, M. (2019). Psychometric Properties of Child (0-5 Years) Outcome Measures as used in Randomized Controlled Trials of Parent Programs: a Systematic Review. Clin. Child Fam. Psychol. Rev. 22, 388–405. doi: 10.1007/s10567-019-00277-1

Horn, J. L. (1965). A Rationale And Test For The Number Of Factors In Factor Analysis. Psychometrika 30, 179–185. doi: 10.1007/bf02289447

Kane, K. (2017). Assessing the Parent-Infant Relationship Through Measures of Maternal-Infant Bonding. A Concept Analysis and Systematic Review. PhD thesis, England, UK: University of York.

Kristensen, I. H., Trillingsgaard, T., Simonsen, M., and Kronborg, H. (2017). Are health visitors’ observations of early parent-infant interactions reliable? A cross-sectional design. Infant Ment. Health J. 38, 276–288. doi: 10.1002/imhj.21627

Le Bas, G. A., Youssef, G. J., Macdonald, J. A., Rossen, L., Teague, S. J., Kothe, E. J., et al. (2019). The role of antenatal and postnatal maternal bonding in infant development: a systematic review and meta-analysis. Soc. Devel. [Epub online ahead of print] doi: 10.1111/sode.12392

Linacre, J. (1994). Sample Size and Item Calibration (or Person Measure) Stability. Available online at: https://www.rasch.org/rmt/rmt74m.htm (accessed October 27, 2021).

Linacre, J. M. (2017). Winsteps Rasch Measurement Computer Programme (Version 4.0.0). Available online at: https://www.winsteps.com/

Lorenzo-Seva, U., and Ferrando, P. J. (2006). FACTOR: a computer program to fit the exploratory factor analysis model. Behav. Res. Methods 38, 88–91. doi: 10.3758/bf03192753

Lorenzo-Seva, U., and Van Ginkel, J. R. (2016). Multiple imputation of missing values in exploratory factor analysis of multidimensional scales: estimating latent trait scores. Anal. Psicol. Anna. Psychol. 32, 596–608.

Marsh, H. W. (1996). Positive and negative global self-esteem: a substantively meaningful distinction or artifactors? J. Pers. Soc. Psychol. 70, 810–819. doi: 10.1037//0022-3514.70.4.810

Müller, M. E. (1994). A questionnaire to measure mother-to-infant attachment. J. Nurs. Meas. 2, 129–141.

NICE. (2015). Children’s Attachment: Attachment in Children and Young People Who Are Adopted from Care, in Care or at High Risk of Going Into Care. London: NICE.

Public Health England [PHE] (2020). Early Years High Impact Area Two: Supporting Good Parental Mental Health. London: Public Health England.

Rasch, G. (1960). Probabilistic Models for Some Intelligence and Attainment Tests. Chicago: The University of Chicago Press.

Raykov, T. (1997). Scale Reliability, Cronbach’s Coefficient Alpha, and Violations of Essential Tau-Equivalence with Fixed Congeneric Components. Multiv. Behav. Res. 32, 329–353. doi: 10.1207/s15327906mbr3204_2

Redshaw, M., and Martin, C. (2013). Babies, ‘bonding’ and ideas about parental ‘attachment’. J. Reproduct. Infant Psychol. 31, 219–221.

Taylor, A., Atkins, R., Kumar, R., Adams, D., and Glover, V. (2005). A new Mother-to-Infant Bonding Scale: links with early maternal mood. Arch. Womens Ment. Health 8, 45–51. doi: 10.1007/s00737-005-0074-z

Wilson, P., Thompson, L., Puckering, C., McConnachie, A., Holden, C., Cassidy, C., et al. (2010). Parent-child relationships: are health visitors’ judgements reliable? Commun. Pract. 85, 22–25.

Wittkowski, A., Vatter, S., Muhinyi, A., Garrett, C., and Henderson, M. (2020). Measuring bonding or attachment in the parent-infant-relationship: a systematic review of parent-report assessment measures, their psychometric properties and clinical utility. Clin. Psychol. Rev. 82:101906. doi: 10.1016/j.cpr.2020.101906

Wright, B., Barry, M., Hughes, E., Trépel, D., Ali, S., Allgar, V., et al. (2015). Clinical effectiveness and cost-effectiveness of parenting interventions for children with severe attachment problems: a systematic review and meta-analysis. Health Technol. Assess. 19:52. doi: 10.3310/hta19520

Keywords: parent, baby, measure, psychometrics, bonding

Citation: Bywater T, Dunn A, Endacott C, Smith K, Tiffin PA, Price M and Blower S (2022) The Measurement Properties and Acceptability of a New Parent–Infant Bonding Tool (‘Me and My Baby’) for Use in United Kingdom Universal Healthcare Settings: A Psychometric, Cross-Sectional Study. Front. Psychol. 13:804885. doi: 10.3389/fpsyg.2022.804885

Received: 29 October 2021; Accepted: 03 January 2022;

Published: 14 February 2022.

Edited by:

Paola Gremigni, University of Bologna, Italy

Reviewed by:

María De La Fe Rodriguez, Universidad Nacional de Educación a Distancia, Spain

Mike Horton, University of Leeds, United Kingdom

Copyright © 2022 Bywater, Dunn, Endacott, Smith, Tiffin, Price and Blower. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Tracey Bywater, tracey.bywater@york.ac.uk
