- 1Université de Poitiers, Poitiers, France

- 2Université de Tours, Tours, France

- 3Centre de Recherches sur la Cognition et l’Apprentissage, UMR 7295, Poitiers, France

- 4Centre National de la Recherche Scientifique (CNRS), Paris, France

- 5Institut Pprime UPR 3346, Poitiers, France

Facial emotion recognition occupies a prominent place in emotion psychology. How perceivers recognize messages conveyed by faces can be studied in either an explicit or an implicit way, and using different kinds of facial stimuli. In the present study, we explored for the first time how facial point-light displays (PLDs) (i.e., biological motion with minimal perceptual properties) can elicit both explicit and implicit mechanisms of facial emotion recognition. Participants completed tasks of explicit or implicit facial emotion recognition from PLDs. Results showed that point-light stimuli are sufficient to allow facial emotion recognition, be it explicit or implicit. We argue that this finding could encourage the use of PLDs in research on the perception of emotional cues from faces.

Introduction

Presumably because of their crucial role in everyday life, facial expressions have become an important research topic in the field of human interactions. They are key to social communication, as they can promote mental state inference and emotion induction in the perceiver (e.g., Wood et al., 2016). In this context, research on point-light displays (PLDs) imitating biological motion has expanded considerably. The aim of the present study was to investigate whether facial PLDs can elicit two important processes: explicit (explicit judgment) and implicit (via a priming task) emotion recognition.

Researchers study facial emotion recognition using either explicit or implicit tasks. In the former, which are widely used, participants have to explicitly judge emotions conveyed by target faces (e.g., Calvo and Lundqvist, 2008; Adolph and Alpers, 2010). In the latter, they perform a priming task, where instead of responding about a face representing the prime stimulus, they respond to a probe stimulus following the presentation of the prime (e.g., McLellan et al., 2010; Wentura et al., 2017). Here, the congruency effect implies that the probe stimulus judgment is influenced by the emotional information conveyed by the prime. For instance, a probe stimulus is judged to be more negative, and the reaction time is shorter, when it is preceded by a negative rather than a positive prime. Implicit perception is thought to rely on an early stage of processing (i.e., automatic mechanisms) that is nonconscious and functional even with poor stimuli. By contrast, explicit recognition involves the linking of raw perceptions to relevant conceptual knowledge and inference processes (Adolphs, 2002). Neuroscience studies (see Dricu and Frühholz, 2016, for a meta-analysis) and behavioral findings in individuals with psychiatric disorders (e.g., Wagenbreth et al., 2016) argue in favor of this distinction.

Another issue in the field of emotional faces concerns the properties of the stimuli that are used. As summarized in a recent review (Dobs et al., 2018), different types of facial stimuli can be considered (e.g., static vs dynamic; real vs synthetic). Although most research has looked at static faces, a number of studies have suggested that dynamic facial expressions benefit recognition in certain conditions (for a review, see Krumhuber et al., 2013), even if interpretations (e.g., attention driven, facilitation of mimicry behavior) are still sparse (e.g., Calvo et al., 2016). Given their respective advantages and disadvantages (Dobs et al., 2018), stimuli need to be chosen according to the research objective. In this vein, studies featuring PLDs are growing in popularity.

A PLD is a stylised depiction of the articulated motion (i.e., intrinsic properties) of a living creature (human or other animal) (Troje and Aust, 2013). PLDs can be useful in social research, as they provide a way of studying core social cues, while controlling other perceptual dimensions (e.g., color, image attractiveness). Since the introduction of the point-light motion methodology in the early 1970s (Johansson, 1973), numerous studies have shown that humans are very sensitive to kinematic information. Simply by watching moving dots representing an actor performing an action, people can recognize the action being produced (Johansson, 1973), or access different features of the actor, such as the sex, intention, or identity (see Decatoire et al., 2018, for a review). Importantly, this ability may be compromised in patients with social cognition disorders such as autism (e.g., Blake et al., 2003) or schizophrenia (e.g., Kim et al., 2005), suggesting that visual body motion processing is a hallmark of social cognition (Pavlova, 2012). Consequently, some authors have used PLDs to study the recognition of emotional states (e.g., Atkinson et al., 2004; Alaerts et al., 2011), and have shown that emotion can be accurately recognized simply from watching a moving body. In a recent review, Okruszek (2018) summarized the performances of patients with different disorders on emotion recognition from body PLDs. He noted that recognition of emotion from body PLDs seems to be specifically affected in patients, suggesting that this is a relevant methodology for studying social problems in patients with psychiatric disorders.

Although body PLDs are now used to assess the recognition of emotions, to our knowledge, very few studies have specifically investigated the ability to recognize emotions from facial PLDs (Bassili, 1978, 1979; Dittrich, 1991; Pollick et al., 2003; Atkinson et al., 2012). In these studies, the authors showed that adults are able to recognize basic emotional expressions from dynamic stimuli. Crucially, these studies only investigated explicit emotion recognition.

The aim of the present study was to assess whether emotional facial PLD stimuli can elicit both explicit and implicit facial emotion recognition processes. In addition, we explored the possible effect of sex, as some studies have reported a female advantage both for emotional faces (Hall et al., 2000; Fischer et al., 2018) and for threatening biological-motion stimuli (Alaerts et al., 2011; Pavlova et al., 2015). In the present study, participants performed two kinds of task: an explicit emotional facial PLD recognition task (open-ended and multiple-choice questionnaires), and a priming paradigm with PLDs as the primes. We expected to observe good performance on the explicit emotion recognition tasks, similar to the rates of recognition usually observed in the literature for full-light emotional faces (Nelson and Russell, 2013) and PLD faces (Bassili, 1979), and a congruency effect in the implicit task, with both effects being potentially modulated by the perceiver’s sex.

Methods

Participants

Participants were thirty-seven French university students¹ (Mage = 20.9 years, SD = 1.61; 17 male; nine left-handed). They all provided their written informed consent in accordance with the Declaration of Helsinki, and took part in exchange for course credit. All participants had normal or corrected-to-normal vision, and no history of motor, perceptual or neurological disorders, as assessed with a short questionnaire. The protocol was approved by a local human subject committee.

Material

Eight PLDs depicting an emotional facial expression (happiness, anger, disgust, or surprise) of a man or woman were used in the experiment. These PLDs were taken from the PLAViMoP database (Decatoire et al., 2018; https://plavimop.prd.fr/en/motions). They were each made up of 41 points representing the position of the actors’ eyes, eyebrows, mouth, nose, outline of their face, shoulders and sternum. Hemispherical markers (4 mm) were used for the face, and spherical markers (6.4 mm) for the sternum and shoulders (see Appendix 1 and Image 1 in Supplementary Material for a complete description). Importantly, the eyes’ position was not recorded directly but was calculated a posteriori from the position of dots placed at the edge of each eye. This choice was made to render our PLDs more “face like”, because a pre-test with 15 adult participants showed that, without eyes, our face PLDs seemed strange and ghostly. We extracted 60 neutral nouns composed of one (30 nouns) or two (30 nouns) syllables from the validated French Affective Norms database (Monnier and Syssau, 2014). These French words were selected for their neutral valence (M = 4.88, SD = 1.53) and level of arousal (M = 3.30, SD = 2.22), as rated on the nine-point scale of the database.

Procedure

The participants were tested individually in a dark, soundproof room, seated in front of a computer screen (spatial resolution: 1280 × 800 pixels; temporal resolution: 60 Hz). Three tasks were administered in the following order: priming task (implicit emotion evaluation), and the open-ended and multiple-choice questionnaires (explicit emotion evaluation) for a total duration of 45 min.

Implicit Emotion Evaluation: Priming Task

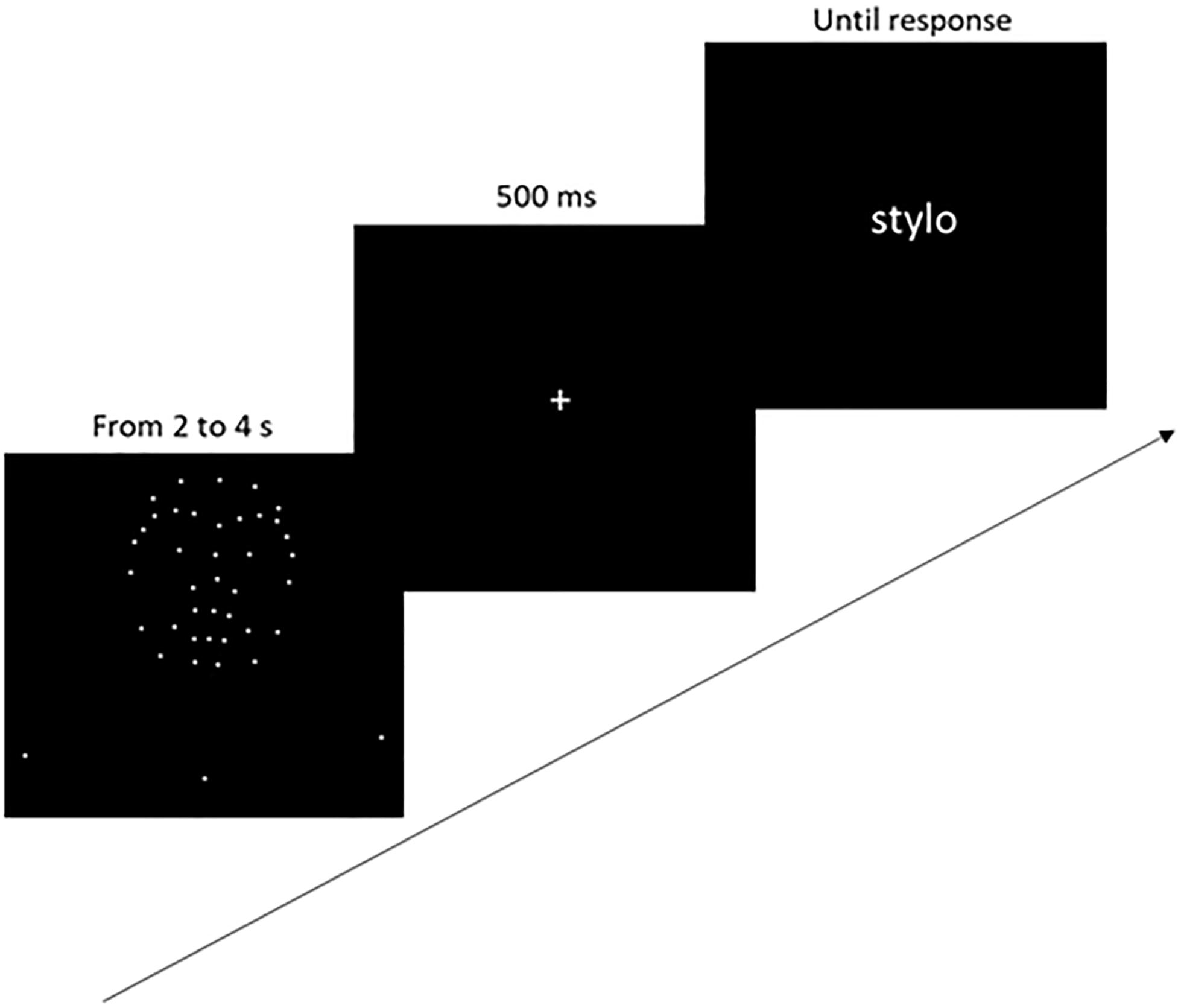

The experimental session featured 240 trials presented in random order (four PLDs × 60 words). The PLDs used in this session were male anger, female anger, male happiness and female happiness. Each trial consisted of the presentation of a PLD (lasting 2–4 s) followed by a fixation cross (500 ms), then a neutral word (see Figure 1). The word remained on the screen until the participant entered a response. The participants’ task was to judge, as quickly and as accurately as possible, whether the word presented was positive or negative. Participants answered by pressing the P or A keys of an AZERTY keyboard with their right or left hand. The keys associated with the positive and negative responses were counterbalanced between participants.

Figure 1. Priming task procedure. The point-light sequence, fixation cross, and word stimulus were successively displayed in the center of the screen. The arrow represents the sequence of one trial.

Before the experimental session, we administered a training session using the other PLD sequences (male surprise, male disgust, female surprise, female disgust) and different target words.
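The trial structure of the priming task can be illustrated with a short sketch. It is not the authors' experiment software: the prime labels, the placeholder word names, and the rule of counterbalancing keys by the parity of a participant number are all assumptions made for illustration.

```python
import random

# Labels are illustrative; the study used two anger and two happiness PLDs.
PRIMES = ["male_anger", "female_anger", "male_happiness", "female_happiness"]
N_WORDS = 60  # neutral French nouns; placeholders stand in for the real items


def build_trials(participant_id, seed=None):
    """Return the randomized trial list and the key mapping for one participant."""
    rng = random.Random(seed)
    words = [f"word_{i:02d}" for i in range(1, N_WORDS + 1)]
    # Fully cross primes and words: 4 PLDs x 60 words = 240 trials.
    trials = [{"prime": p, "word": w} for p in PRIMES for w in words]
    rng.shuffle(trials)
    # Counterbalance response keys across participants
    # (assumed rule: alternate by parity of the participant number).
    if participant_id % 2 == 0:
        keys = {"positive": "P", "negative": "A"}
    else:
        keys = {"positive": "A", "negative": "P"}
    return trials, keys


trials, keys = build_trials(participant_id=1, seed=42)
print(len(trials), keys)
```

Crossing every prime with every word means each neutral word is judged after both a positive and a negative prime, so any shift in judgment can be attributed to the prime rather than to the word itself.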

Explicit Emotion Evaluation: Open-Ended and Multiple-Choice Questionnaires

In the open-ended questionnaire, the PLDs that had been used in either the training or the experimental session were presented in random order, and participants were asked, “What is it?” after each one. No PLD was presented more than twice. We recorded the participants’ responses for each PLD. No time limit was imposed, but the experimenter encouraged participants to be spontaneous.

In the multiple-choice questionnaire, the previously used PLDs were again presented to participants in random order. For each one, participants had to judge which of the four emotions (anger, happiness, disgust or surprise) was featured. They also had to rate the intensity of the PLD on a scale ranging from 1 (Very low) to 7 (Very high) and its valence on a scale ranging from 1 (Very negative) to 7 (Very positive). We recorded the participants’ responses for each PLD. No time limit was imposed, but the experimenter encouraged participants to be spontaneous.

Data Analysis

In the priming task, our first dependent variable (DV) was participants’ binary categorization of the neutral words (i.e., negative vs positive) as a function of PLD prime (i.e., negative or positive expression). Each response was coded 1 when it was congruent with the valence of the PLD prime (i.e., positive response when the PLD represented a positive expression; negative response when the PLD represented a negative expression), and 0 when it was incongruent. The second DV was the corresponding response times. For these two DVs, we fitted mixed models, using the GLIMMIX procedure with SAS Version 9.4 statistical software. For the first DV (i.e., categorization), we ran a logistic mixed model, with participants and items as random-effects factors. Three fixed effects were considered: PLD category (positive/happiness vs negative/anger), sex of participants (male or female), and their interaction (see Baayen et al., 2008). For the second DV (i.e., reaction times), the analysis used a gamma distribution as recommended (Baayen and Milin, 2010). The same fixed effects were considered. For all analyses, we calculated the p values for the reported F values (Type III analysis of variance, ANOVA) with the error degrees of freedom calculated according to the Satterthwaite approximation, as the number of observations varied across conditions. The significance level was set at p = 0.05.
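The congruency coding described above can be sketched as follows. This only illustrates how the first DV is derived from raw responses; it does not reproduce the mixed models fitted in SAS, and the function names and example responses are hypothetical.

```python
# Valence of each prime category (happiness = positive, anger = negative).
PRIME_VALENCE = {"happiness": "positive", "anger": "negative"}


def code_congruency(prime_emotion, response):
    """Return 1 if the word judgment matches the prime's valence, else 0."""
    if response not in ("positive", "negative"):
        raise ValueError(f"unexpected response: {response!r}")
    return int(PRIME_VALENCE[prime_emotion] == response)


def proportion_congruent(trials):
    """Proportion of congruent responses, split by prime valence."""
    totals, hits = {}, {}
    for prime_emotion, response in trials:
        valence = PRIME_VALENCE[prime_emotion]
        totals[valence] = totals.get(valence, 0) + 1
        hits[valence] = hits.get(valence, 0) + code_congruency(prime_emotion, response)
    return {v: hits[v] / totals[v] for v in totals}


# Made-up responses for illustration only (not data from the study).
example = [
    ("happiness", "positive"),
    ("happiness", "positive"),
    ("happiness", "negative"),
    ("anger", "negative"),
    ("anger", "positive"),
    ("anger", "positive"),
]
print(proportion_congruent(example))
```

Because the words are neutral, a proportion of congruent responses that differs between prime valences reflects the influence of the prime on the judgment.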

For the open-ended questionnaire and the multiple-choice questionnaire, the scores were compared using a nonparametric Friedman ANOVA. The difference between males and females was then assessed with Mann-Whitney comparisons. Moreover, for the multiple-choice questionnaire, we compared the mean percentage of correct emotion recognition responses with chance level (25%) using a z test. The data that support the findings of this study are openly available in figshare at http://doi.org/10.6084/m9.figshare.11407611.
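As an illustration of the comparison with chance level, the sketch below implements a one-sample z test for a proportion against 25% (four response options). The exact form of the z test used in the study is not specified, so this is one plausible reading; the counts are hypothetical, and the test treats every response as independent, which is a simplification.

```python
import math


def z_test_vs_chance(n_correct, n_total, chance=0.25):
    """One-sample z test of an observed proportion against a chance level."""
    p_hat = n_correct / n_total
    se = math.sqrt(chance * (1 - chance) / n_total)  # standard error under H0
    z = (p_hat - chance) / se
    # One-sided p value (performance above chance), from the normal survival function.
    p_value = 0.5 * math.erfc(z / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 56 correct answers out of 74 responses.
z, p = z_test_vs_chance(n_correct=56, n_total=74)
print(f"z = {z:.2f}, one-sided p = {p:.2g}")
```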

Results

Implicit Emotion Evaluation: Priming Task

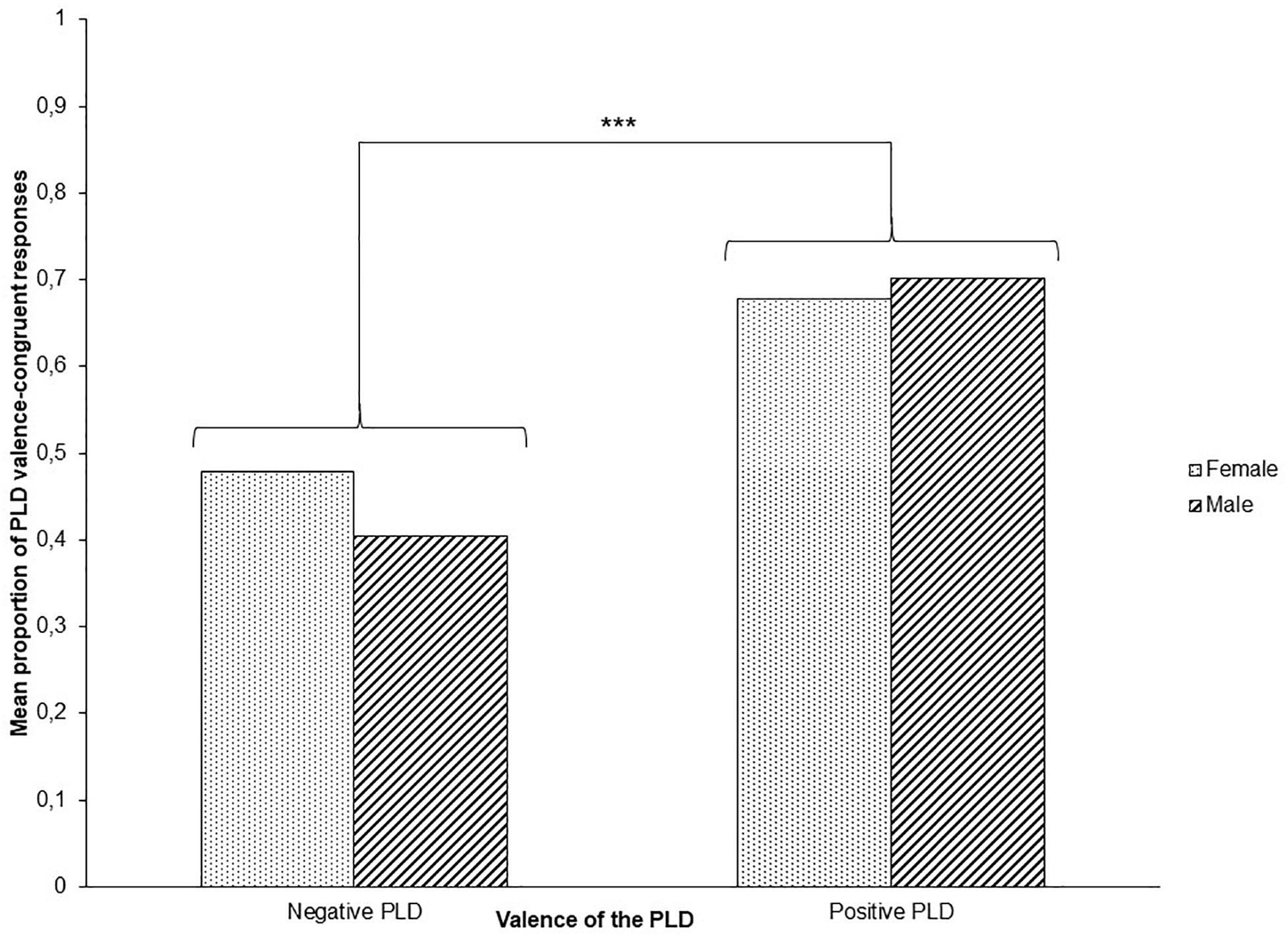

The logistic statistical model revealed that the nature of the PLD was predictive of congruent responses when these were positive (see Figure 2). Participants categorized neutral words as being more positive when they were primed by a positive rather than a negative PLD, F(1,128) = 40.47, p < 0.0001, 95% CI = [0.173, 0.397]. No other effect was significant (all ps > 0.1).

Figure 2. Mean proportion of congruent responses as a function of prime (PLD expression) valence (i.e., negative vs positive). The asterisks indicate a significance level of p < 0.001.

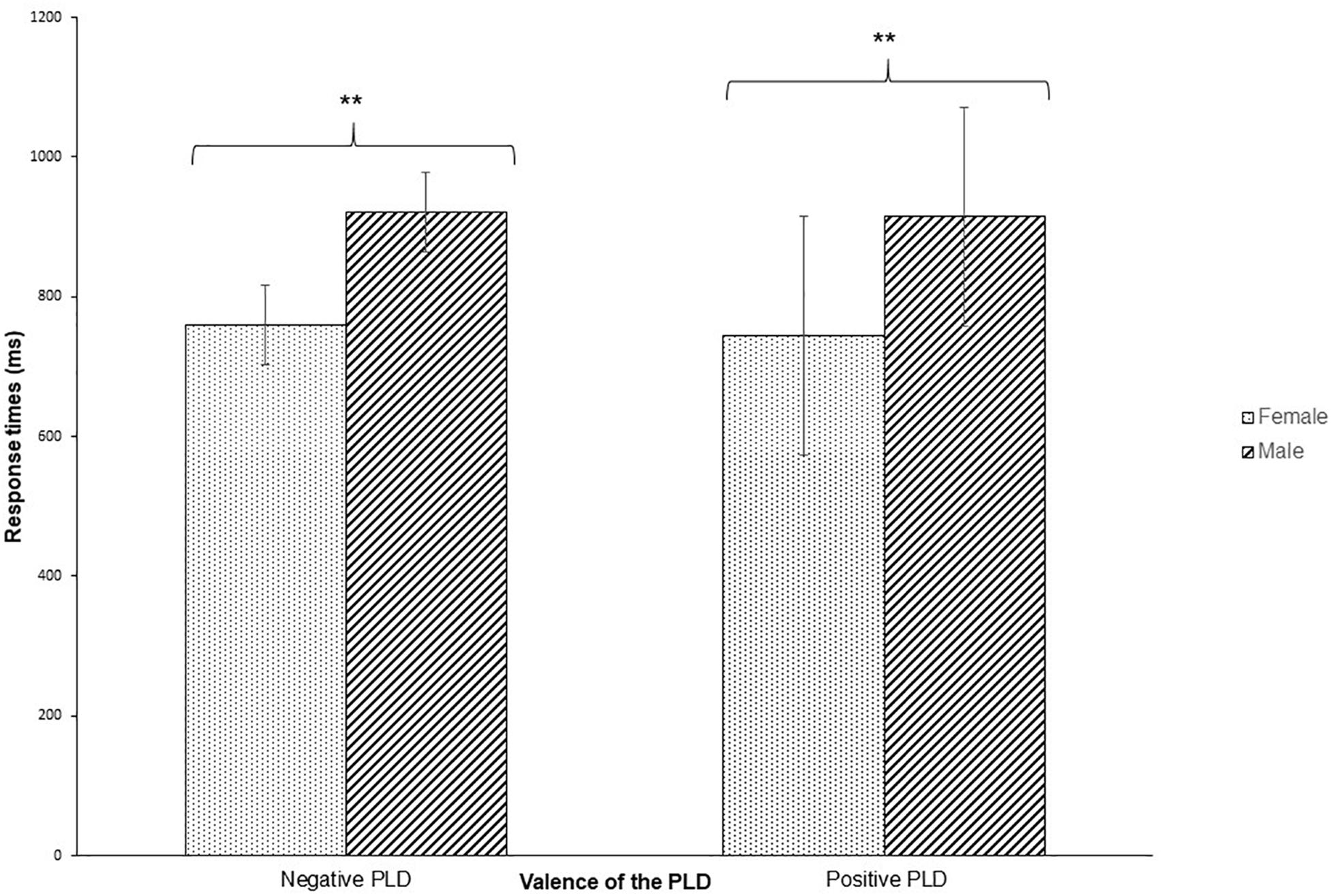

Concerning response times (see Figure 3), analysis showed an effect of sex of participants, F(1,145.8) = 8.86, p = 0.003, with faster responses for females (M = 752.3 ms, SD = 125.7 ms) than for males (M = 917.5 ms, SD = 313.6 ms). No other main or interaction effect was significant (all ps > 0.17).

Figure 3. Mean response times as a function of prime (PLD expression) valence (i.e., negative vs positive). Error bars represent 95% confidence intervals. The asterisks indicate a significance level of p < 0.01.

Explicit Emotion Evaluation: Open-Ended and Multiple-Choice Questionnaires

Concerning responses to the open-ended questionnaire, analysis revealed an effect of emotion, χ²(N = 37, df = 3) = 63.68, p < 0.001, with better recognition for happiness (M = 90.5%, SD = 19.8%) and surprise (M = 93.2%, SD = 20.9%) than for anger (M = 56.8%, SD = 26.8%) and disgust (M = 37.8%, SD = 36.1%). There was no difference between males and females on the percentage of correct emotion recognition responses (all ps > 0.32).

For the multiple-choice questionnaire, results showed an effect of emotion, χ²(N = 37, df = 3) = 34.26, p < 0.001, with better recognition for surprise (M = 100%) and happiness (M = 97.2%, SD = 11.4%) than for disgust (M = 81%, SD = 27.2%) and anger (M = 75.7%, SD = 27.9%). There was no difference between males and females on the percentage of correct emotion recognition responses (all ps > 0.55), and the recognition score was above chance level for all PLDs (all ps < 0.001).

Concerning intensity ratings, there was a significant effect of emotion, χ²(N = 37, df = 3) = 53.27, p < 0.001, with higher ratings for happiness (M = 5.87, SD = 0.58) than for either anger (M = 4.55, SD = 0.90), surprise (M = 4.06, SD = 1.47), or disgust (M = 3.59, SD = 1.08). There was no difference between males and females on judgments of intensity (all ps > 0.12).

Concerning valence ratings, we observed a significant effect of emotion, χ²(N = 37, df = 3) = 86.33, p < 0.001, with higher ratings for happiness (M = 5.43, SD = 0.71) and surprise (M = 3.97, SD = 0.78) than for disgust (M = 3.01, SD = 0.58) and anger (M = 2.45, SD = 0.63). There was no difference between males and females except on surprise (U = 97, p < 0.05), to which males (M = 4.32, SD = 0.64) attributed a higher valence than females did (M = 3.68, SD = 0.78).

Discussion

The aim of the present study was to assess whether the presentation of emotional facial PLDs can elicit both explicit and implicit processes of facial emotion recognition. Concerning explicit emotion recognition, results showed performance similar to that observed in the literature for full-light emotions with comparable methodology (for multiple forced-choice tasks, see, for example, the review by Nelson and Russell (2013), based on 39 sets of data) and for PLD emotions (Bassili, 1979). Moreover, performance was above chance level for all four emotions, which suggests that our stimuli are adequate for assessing emotion recognition. However, this result should be confirmed in future studies, in particular to assess how our calculation of the eyes’ position may affect recognition. Indeed, our calculation gives the impression in all stimuli that the actors are looking at the camera, which may not be natural for some emotions, such as disgust. Moreover, several studies have shown that the recognition of PLD faces depends on spatial and dynamic manipulations (e.g., Pollick et al., 2003) and that modifying the stimuli can affect which cerebral areas are activated (Atkinson et al., 2012). Future studies should assess this point with stimuli combining classical PLD recording and pupil recording. Interestingly, we obtained better recognition of happiness and surprise than of anger and disgust. This difference was observed whether the recognition was open-ended or constrained by multiple choices. Our findings confirm those reported by previous studies using facial PLDs (e.g., Bassili, 1979; Pollick et al., 2003; Atkinson et al., 2012). More importantly, both the high explicit emotion recognition scores and the hierarchy between the different valences (i.e., positive emotions recognized better than negative emotions) were consistent with previous findings for full-light facial stimuli (for a review, see Nelson and Russell, 2013).
As with full-light facial expressions, the easier recognition of positive facial PLDs, generally called the happiness advantage in the literature (e.g., Leppänen and Hietanen, 2004), can be explained by different perceptual or theoretical features/functions of positive faces (e.g., mouth region processing; Sullivan et al., 2007; Calvo and Beltrán, 2013). Overall, the explicit task findings suggest that facial PLDs are recognized well above chance level (for the multiple-choice task) and are just as efficient as full-light facial expressions (Nelson and Russell, 2013). Even though these results should be confirmed by a direct comparison with a control group, they suggest that PLD faces are good stimuli for expressing emotions.

Concerning the implicit recognition of emotional facial PLDs, our findings show that PLDs are sufficient to trigger a congruency effect. When participants saw a positive facial PLD as a prime, they had a significant tendency to attribute a positive valence to subsequent neutral words. However, this effect was not observed for negative emotions. This discrepancy can be explained either by a lower level of recognition of negative emotions or by a specificity of positive emotions, as explained in the previous section dealing with explicit recognition.

Concerning the effect of sex, the analysis of response times indicated that women were more efficient than men in judging emotional facial PLDs in the implicit task. This could be related to the known female advantage observed for both emotional faces (Hall et al., 2000; Kret and De Gelder, 2012; Fischer et al., 2018) and biological-motion stimuli (Alaerts et al., 2011; Pavlova et al., 2015), as mentioned in the Introduction. However, as this advantage was only observed on response times, we can hypothesize that it is related to two kinds of sex differences. First, the literature highlights females’ ability to respond more automatically than males to minimal affective stimuli, as in subliminal presentations (e.g., Hall and Matsumoto, 2004; Hoffmann et al., 2010; Donges et al., 2012). Second, several studies have pointed to sex differences in the time course and topography of the neural circuitry underpinning biological motion processing (Pavlova et al., 2015). Therefore, we suggest that sex differences in the analysis of facial PLDs are related to the automatic capture of women’s attention (Bidet-Ildei and Bouquet, 2014).

More generally, our main finding is that a minimalistic representation of emotional faces such as PLDs seems to convey critical visual information for expression recognition. This result is consistent with the literature showing that humans are skilled at processing different kinds of social information (e.g., gender, intention, affective state) from minimalistic representations (see Troje and Aust, 2013 for a review). Moreover, the present study expands on this literature by suggesting that this efficient processing also holds for emotional facial PLDs. One interpretation is offered by the framework of embodied cognition (Barsalou, 1999, 2010; Niedenthal, 2007), which argues that the processing of emotional stimuli can be supported by somatosensory reactions. PLDs, even if they are minimalistic stimuli, are by definition based on “life motion” (i.e., they correspond to the biological dynamics of human actions). Therefore, emotional PLDs can be considered a privileged route to embodied emotion recognition. More precisely, in simulation theories, the internal simulation of movements is a prominent mechanism of efficient emotion recognition; a growing neuroimaging literature in both typical and atypical populations argues that emotion recognition relies on somatosensory cortices (for a recent review, see Ross and Atkinson, 2020).

Finally, emotional facial PLDs can be employed to efficiently assess both explicit and implicit processes of facial emotion recognition. Therefore, they could serve as socio-emotional cues instead of videos and static materials, especially when researchers wish to control and/or limit the amount of information available. Facial PLDs can provide an easy way to study the dynamics and the number of cues available during a facial emotion recognition task (Decatoire et al., 2019). For instance, they offer the opportunity to examine the role of biological dynamics in emotion recognition (for example, see Atkinson et al., 2007, using upright or inverted body PLDs). In the same manner, they could be used to examine the minimum amount of information required for the recognition of a given emotion (e.g., by changing the color or the size of some dots) (see also Pollick et al., 2003 for spatial and dynamic manipulations of PLDs). Moreover, facial PLDs could be a useful tool to study emotion recognition mechanisms in some patients (e.g., Okruszek, 2018), such as people with autism spectrum disorder (for a review, see Tanaka and Sung, 2016). In conclusion, emotional facial PLDs could be of both theoretical and practical interest for better understanding the mechanisms involved in the recognition of emotions.

Data Availability Statement

The datasets generated for this study are openly available in figshare at http://doi.org/10.6084/m9.figshare.11407611.

Ethics Statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

CB-I and SG both conceptualized the study and analyzed the data. AD participated in building the stimuli. CB-I constructed the software programs, and conducted and supervised data collection. CB-I and SG both contributed to the writing of this manuscript.

Funding

Support for this research was provided by a grant from La Région Nouvelle Aquitaine (CPER-FEDER P-2017-BAFE-68), in partnership with the European Union (FEDER/ERDF, European Regional Development Fund), and by the French Government research program Investissements d’Avenir through the Robotex Equipment of Excellence (ANR-10-EQPX-44).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We are grateful to Morgane Larée, a psychology student at the University of Tours François-Rabelais, for carrying out part of the data collection.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2020.01062/full#supplementary-material

FIGURE S1 | Placement of the markers used to make the PLDs used in the experiment.

VIDEO S1 | Female disgust.

VIDEO S2 | Male disgust.

VIDEO S3 | Female joy.

VIDEO S4 | Male joy.

VIDEO S5 | Female surprise.

VIDEO S6 | Male surprise.

VIDEO S7 | Female anger.

VIDEO S8 | Male anger.

Footnotes

- ¹ Given that we planned to use logistic mixed models for our analysis (see Data Analysis), we did not estimate an a priori sample size, as such estimation is not suited to this type of analysis.

References

Adolph, D., and Alpers, G. W. (2010). Valence and arousal: a comparison of two sets of emotional facial expressions. Am. J. Psychol. 123, 209–219. doi: 10.5406/amerjpsyc.123.2.0209

Adolphs, R. (2002). Recognizing emotion from facial expressions: psychological and neurological mechanisms. Behav. Cogn. Neurosci. Rev. 1, 21–62.

Alaerts, K., Nackaerts, E., Meyns, P., Swinnen, S. P., and Wenderoth, N. (2011). Action and emotion recognition from point light displays: an investigation of gender differences. PLoS One 6:e20989. doi: 10.1371/journal.pone.0020989

Atkinson, A. P., Dittrich, W. H., Gemmell, A. J., and Young, A. W. (2004). Emotion perception from dynamic and static body expressions in point-light and full-light displays. Perception 33, 717–746. doi: 10.1068/p5096

Atkinson, A. P., Tunstall, M. L., and Dittrich, W. H. (2007). Evidence for distinct contributions of form and motion information to the recognition of emotions from body gestures. Cognition 104, 59–72.

Atkinson, A. P., Vuong, Q. C., and Smithson, H. E. (2012). Modulation of the face- and body-selective visual regions by the motion and emotion of point-light face and body stimuli. Neuroimage 59, 1700–1712. doi: 10.1016/j.neuroimage.2011.08.073

Baayen, R. H., Davidson, D. J., and Bates, D. M. (2008). Mixed-effects modeling with crossed random effects for subjects and items. J. Mem. Lang. 59, 390–412. doi: 10.1016/j.jml.2007.12.005

Barsalou, L. W. (1999). Perceptual symbol systems. Behav. Brain Sci. 22, 577–660. doi: 10.1017/S0140525X99002149

Barsalou, L. W. (2010). Grounded cognition: past, present, and future. Top. Cogn. Sci. 2, 716–724. doi: 10.1111/j.1756-8765.2010.01115.x

Bassili, J. N. (1978). Facial motion in the perception of faces and of emotional expression. J. Exp. Psychol. Hum. Percept. Perform. 4, 373–379. doi: 10.1037//0096-1523.4.3.373

Bassili, J. N. (1979). Emotion recognition: the role of facial movement and the relative importance of upper and lower areas of the face. J. Pers. Soc. Psychol. 37, 2049–2058. doi: 10.1037/0022-3514.37.11.2049

Bidet-Ildei, C., and Bouquet, C. A. (2014). Motor knowledge modulates attentional processing during action judgment. Athens J. Soc. Sci. 2, 249–262. doi: 10.30958/ajss.2-4-1

Blake, R., Turner, L. M., Smoski, M. J., Pozdol, S. L., and Stone, W. L. (2003). Visual recognition of biological motion is impaired in children with autism. Psychol. Sci. 14, 151–157. doi: 10.1111/1467-9280.01434

Calvo, M. G., Avero, P., Fernández-Martín, A., and Recio, G. (2016). Recognition thresholds for static and dynamic emotional faces. Emotion 16:1186. doi: 10.1037/emo0000192

Calvo, M. G., and Beltrán, D. (2013). Recognition advantage of happy faces: tracing the neurocognitive processes. Neuropsychologia 51, 2051–2061. doi: 10.1016/j.neuropsychologia.2013.07.010

Calvo, M. G., and Lundqvist, D. (2008). Facial expressions of emotion (KDEF): identification under different display-duration conditions. Behav. Res. Methods 40, 109–115. doi: 10.3758/BRM.40.1.109

Decatoire, A., Beauprez, S. A., Pylouster, J., Lacouture, P., Blandin, Y., and Bidet-Ildei, C. (2018). PLAViMoP Database: A New Collaborative Database for Point-Light Displays. Available online at: https://plavimop.prd.fr/en/motions (accessed September 1, 2018).

Decatoire, A., Beauprez, S. A., Pylouster, J., Lacouture, P., Blandin, Y., and Bidet-Ildei, C. (2019). PLAViMoP: how to standardize and simplify the use of point-light displays. Behav. Res. Methods 51, 2573–2596. doi: 10.3758/s13428-018-1112-x

Dittrich, W. H. (1991). Das erkennen von emotionen aus ausdrucksbewegungen des gesichts [Facial motion and the recognition of emotions]. Psychol. Beiträge 33, 366–377.

Dobs, K., Bülthoff, I., and Schultz, J. (2018). Use and usefulness of dynamic face stimuli for face perception studies–a review of behavioral findings and methodology. Front. Psychol. 9:1355. doi: 10.3389/fpsyg.2018.01355

Donges, U. S., Kersting, A., and Suslow, T. (2012). Women’s greater ability to perceive happy facial emotion automatically: gender differences in affective priming. PLoS One 7:e41745. doi: 10.1371/journal.pone.0041745

Dricu, M., and Frühholz, S. (2016). Perceiving emotional expressions in others: activation likelihood estimation meta-analyses of explicit evaluation, passive perception and incidental perception of emotions. Neurosci. Biobehav. Rev. 71, 810–828. doi: 10.1016/j.neubiorev.2016.10.020

Fischer, A. H., Kret, M. E., and Broekens, J. (2018). Sex differences in emotion perception and self-reported emotional intelligence: a test of the emotion sensitivity hypothesis. PLoS One 13:e0190712. doi: 10.1371/journal.pone.0190712

Hall, J. A., Carter, J. D., and Horgan, T. G. (2000). Gender Differences in Nonverbal Communication of Emotion: Gender and Emotion: Social Psychological Perspectives. Cambridge: Cambridge University Press, 97–117. doi: 10.1017/CBO9780511628191.006

Hall, J. A., and Matsumoto, D. (2004). Gender differences in judgments of multiple emotions from facial expressions. Emotion 4:201. doi: 10.1037/1528-3542.4.2.201

Hoffmann, H., Kessler, H., Eppel, T., Rukavina, S., and Traue, H. C. (2010). Expression intensity, gender and facial emotion recognition: women recognize only subtle facial emotions better than men. Acta Psychol. 135, 278–283. doi: 10.1016/j.actpsy.2010.07.012

Johansson, G. (1973). Visual perception of biological motion and a model for its analysis. Percept. Psychophys. 14, 201–211.

Kim, J., Doop, M. L., Blake, R., and Park, S. (2005). Impaired visual recognition of biological motion in schizophrenia. Schizophrenia Res. 77, 299–307. doi: 10.1016/j.schres.2005.04.006

Kret, M. E., and De Gelder, B. (2012). A review on sex differences in processing emotional signals. Neuropsychologia 50, 1211–1221. doi: 10.1016/j.neuropsychologia.2011.12.022

Krumhuber, E. G., Kappas, A., and Manstead, A. S. (2013). Effects of dynamic aspects of facial expressions: a review. Emot. Rev. 5, 41–46. doi: 10.1177/1754073912451349

Leppänen, J. M., and Hietanen, J. K. (2004). Positive facial expressions are recognized faster than negative facial expressions, but why? Psychol. Res. 69, 22–29. doi: 10.1007/s00426-003-0157-2

McLellan, T., Johnston, L., Dalrymple-Alford, J., and Porter, R. (2010). Sensitivity to genuine vs. posed emotion specified in facial displays. Cogn. Emot. 24, 1277–1292. doi: 10.1080/02699930903306181

Monnier, C., and Syssau, A. (2014). Affective norms for French words (FAN). Behav. Res. Methods 46, 1128–1137. doi: 10.3758/s13428-013-0431-1

Nelson, N. L., and Russell, J. A. (2013). Universality revisited. Emot. Rev. 5, 8–15. doi: 10.1177/1754073912457227

Okruszek, L. (2018). It is not just in faces! Processing of emotion and intention from biological motion in psychiatric disorders. Front. Hum. Neurosci. 12:48. doi: 10.3389/fnhum.2018.00048

Pavlova, M. (2012). Biological motion processing as a hallmark of social cognition. Cereb. Cortex 22, 981–995. doi: 10.1093/cercor/bhr156

Pavlova, M., Sokolov, A. N., and Bidet-Ildei, C. (2015). Sex differences in the neuromagnetic cortical response to biological motion. Cereb. Cortex 25, 3468–3474. doi: 10.1093/cercor/bhu175

Pollick, F. E., Hill, H., Calder, A., and Paterson, H. (2003). Recognising facial expression from spatially and temporally modified movements. Perception 32, 813–826.

Ross, P., and Atkinson, A. P. (2020). Expanding simulation models of emotional understanding: the case for different modalities, body-state simulation prominence, and developmental trajectories. Front. Psychol. 11:309. doi: 10.3389/fpsyg.2020.00309

Sullivan, S., Ruffman, T., and Hutton, S. B. (2007). Age differences in emotion recognition skills and the visual scanning of emotion faces. J. Gerontol. B Psychol. Sci. Soc. Sci. 62, 53–60. doi: 10.1093/geronb/62.1.P53

Tanaka, J. W., and Sung, A. (2016). The “eye avoidance” hypothesis of autism face processing. J. Autism Dev. Disord. 46, 1538–1552. doi: 10.1007/s10803-013-1976-7

Troje, N. F., and Aust, U. (2013). What do you mean with “direction”? Local and global cues to biological motion perception in pigeons. Vision Res. 79, 47–55. doi: 10.1016/j.visres.2013.01.002

Wagenbreth, C., Wattenberg, L., Heinze, H. J., and Zaehle, T. (2016). Implicit and explicit processing of emotional facial expressions in Parkinson’s disease. Behav. Brain Res. 303, 182–190. doi: 10.1016/j.bbr.2016.01.059

Wentura, D., Rohr, M., and Degner, J. (2017). Masked emotional priming: a double dissociation between direct and indirect effects reveals non-conscious processing of emotional information beyond valence. Conscious Cogn. 49, 203–214. doi: 10.1016/j.concog.2017.01.016

Appendix 1

Placement of the markers used to make the PLDs used in the experiment. For more information, please consult https://plavimop.prd.fr/en/motions.

38 markers in the face + 3 (Shoulders and plexus)

8 Eyebrows: REB1/REB2/REB3/REB4/LEB1/LEB2/LEB3/LEB4

2 Eyes: ER/EL

5 Nose: N1/N2/N3/NL/NR

8 Mouth: M1/M2/M3/M4/M5/M6/M7/M8

15 Face: F1/F2/F3/F4/F5/F6/F7/F8/F9/F10/F11/F12/F13/F14/F15

+ RSHO/LSHO/STER. See also Image 1 in Supplementary Material.
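As a quick consistency check, the marker layout listed above can be written as a small data structure. The labels come from the list above; the group names (for example `face_outline` and `body`) are naming assumptions introduced here for illustration.

```python
# Marker labels as listed in Appendix 1 (38 facial markers + 3 body markers).
MARKERS = {
    "eyebrows": [f"{side}EB{i}" for side in ("R", "L") for i in range(1, 5)],
    "eyes": ["ER", "EL"],  # computed a posteriori from dots at the edge of each eye
    "nose": ["N1", "N2", "N3", "NL", "NR"],
    "mouth": [f"M{i}" for i in range(1, 9)],
    "face_outline": [f"F{i}" for i in range(1, 16)],
    "body": ["RSHO", "LSHO", "STER"],
}

n_face = sum(len(labels) for group, labels in MARKERS.items() if group != "body")
n_total = n_face + len(MARKERS["body"])
print(n_face, n_total)  # 38 41
```

The total of 41 matches the number of points per PLD given in the Material section.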

Keywords: emotion, point-light display, face, priming, emotional recognition

Citation: Bidet-Ildei C, Decatoire A and Gil S (2020) Recognition of Emotions From Facial Point-Light Displays. Front. Psychol. 11:1062. doi: 10.3389/fpsyg.2020.01062

Received: 04 March 2020; Accepted: 27 April 2020;

Published: 04 June 2020.

Edited by:

Anthony P. Atkinson, Durham University, United Kingdom

Reviewed by:

Paddy Ross, Durham University, United Kingdom

Cali Bartholomeusz, Orygen, University of Melbourne, Australia

Copyright © 2020 Bidet-Ildei, Decatoire and Gil. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Christel Bidet-Ildei, christel.bidet@univ-poitiers.fr

Christel Bidet-Ildei

Arnaud Decatoire1,4,5

Sandrine Gil