- 1 College of Engineering, China Agricultural University, Beijing, China

- 2 Research Center of Intelligent Equipment, Beijing Academy of Agriculture and Forestry Sciences, Beijing, China

- 3 National Research Center of Intelligent Equipment for Agriculture, Beijing, China

- 4 Nanjing Institute of Agricultural Mechanization, Ministry of Agriculture and Rural Affairs, Nanjing, China

The center coordinate and radius of spherical hedges are the basic phenotypic features for automatic pruning. In this paper, a binocular vision-based shape reconstruction and measurement system is built to acquire front-end visual information. Parallel binocular cameras are used as the detectors. The 2D coordinate sequence of the target spherical hedges is obtained by region segmentation and object extraction. Then, a stereo rectification algorithm is applied so that the two cameras are effectively parallel, and an improved semi-global block matching (SGBM) algorithm is used to obtain a disparity map. From the disparity map and the parallel structure of the binocular vision system, the 3D point cloud of the target is obtained, and from it the center coordinate and radius of the spherical hedges are measured. Laboratory and outdoor tests on shape reconstruction and measurement are conducted. In the detection range of 2,000–2,600 mm, the laboratory test shows that the average error and average relative error of the radius of standard spherical hedges are 1.58 mm and 0.53%, respectively; the average location deviation of the center coordinate of the spherical hedges is 15.92 mm. The outdoor test shows that the average error and average relative error of the radius measured by the proposed system are 4.02 mm and 0.44%, respectively; the average location deviation of the center coordinate of the spherical hedges is 18.29 mm. This study provides important technical support for phenotypic feature detection in the study of automatic trimming.

Introduction

With the vigorous development of urban greening, trimming or pruning hedges into desired regular shapes has become one of the major tasks in urban plant landscape construction. Manual trimming with large scissors or power tools places a significant physical load on the operator. Semi-automated trimmers still need a driver, are time-consuming, and make it difficult to control working accuracy. Therefore, the development of automatic and intelligent pruning robots has drawn increasing attention.

To trim hedges automatically, obtaining their basic phenotypic information is the key. For complex outdoor environments, an adaptive hedge horizontal cross-section center detection algorithm was proposed that obtains the hedge’s horizontal cross-section center in real time from a top-view image of the hedge; this algorithm can be applied in a vehicle-mounted system (Li et al., 2022). The TrimBot2020 robotic platform, equipped with a pentagon-shaped rig of five pairs of stereo cameras, was developed for navigation and 3D reconstruction; it can build a model of bushes or hedges that serves as the input for the trimming operation (Strisciuglio et al., 2018). An arm-mounted vision approach was studied to scan a bush and fit a specified shape into the reconstructed point cloud, after which a co-mounted trimming tool cuts the bush along an automatically planned trajectory; the vision-based shape fitting module allows an arbitrary mesh to be fitted into the bush at hand, which ensures flexibility (Kaljaca et al., 2019a,b). Binocular vision is also widely applied in picking robots for object recognition and localization. A litchi-picking robot based on binocular vision was developed to identify and locate the target and then provide information for collision-free motion planning; the success rate of path determination was 100% in the laboratory picking scene (Ye et al., 2021). Vision sensing has likewise been widely used for recognizing fruits and vegetables such as tomatoes, apples, and Hangzhou White Chrysanthemums, and for the motion navigation of picking robots (Ji et al., 2017; Lili et al., 2017; Yang et al., 2018; Jin et al., 2020). From the above research, it can be concluded that binocular stereo vision has been widely used in agricultural robotics for three-dimensional (3D) reconstruction, measurement, navigation, etc. Because the vision system acts as the “eye” of the pruning robot, the shape reconstruction and dimension measurement of target objects provide crucial information for the follow-up operation.

In this paper, a parallel binocular vision system is constructed to complete the 3D reconstruction of spherical hedges, and high accuracy is achieved in both sphere center positioning and radius measurement. The 3D reconstruction comprises two-dimensional (2D) image extraction, binocular camera calibration, stereo rectification, stereo matching, and shape reconstruction. Stereo matching is the key step of shape reconstruction, and an improved semi-global block matching (SGBM) algorithm is proposed to obtain a good disparity map. On this basis, the center coordinate and radius of the spherical hedges are obtained by processing the point cloud data.

Materials and Methods

Description of the Measurement System

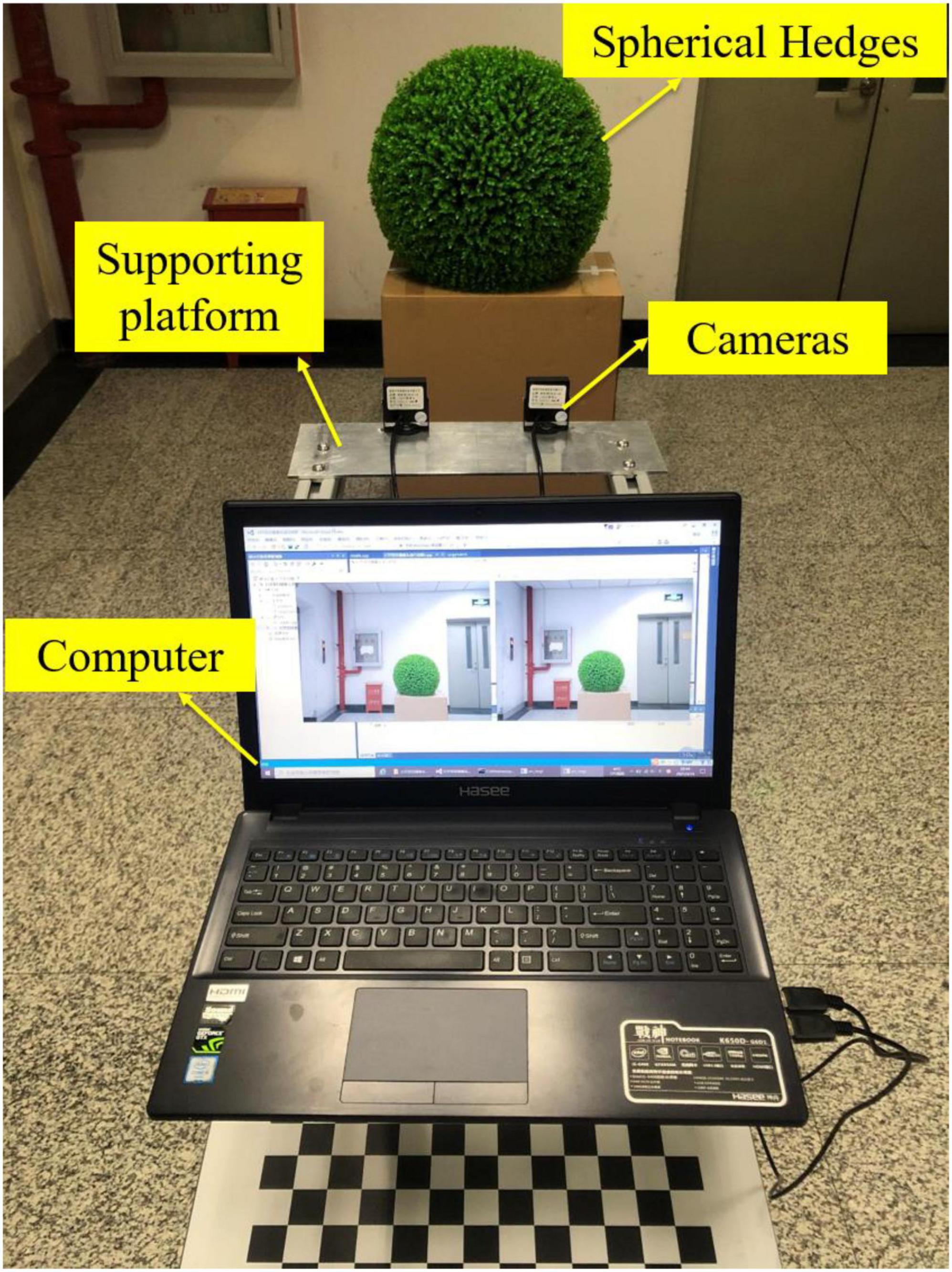

To obtain point cloud information and reconstruct the shape of spherical hedges, a binocular vision system is used for measurement. The system consists of two RMONCAM G200 cameras and a supporting platform. The cameras are mounted on a slider and can be moved along the slider rail, so the distance between the two cameras can be set to 80, 100, 120, 140, or 160 mm. In all experiments in this paper, the distance between the two cameras is set to 140 mm. The shape reconstruction and measurement system is programmed using Microsoft Visual Studio 2015, OpenCV 3.4.10, and MATLAB 2018a. The focal length, maximum frame rate, pixel size, and image resolution of each camera are 2.8 mm, 60 fps, 3.0 μm × 3.0 μm, and 1,920 × 1,200 pixels, respectively. Figure 1 shows the schematic diagram of the binocular vision system.

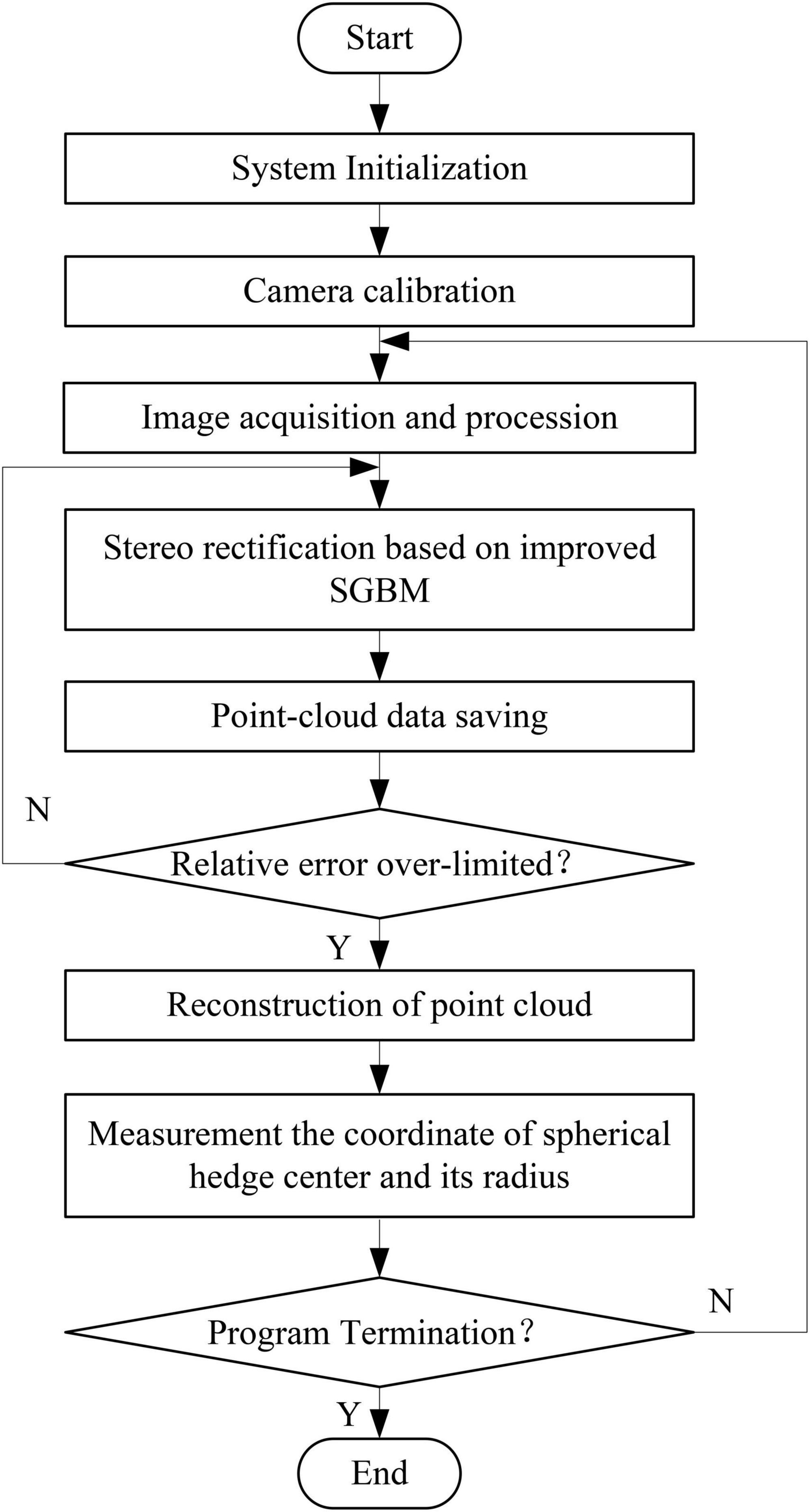

When conducting experiments, the spherical hedges are placed in front of the cameras. Then, the system captures the current images. Next, the images are transmitted to the computer. Afterward, image processing is called to obtain the point cloud data of spherical hedges. Based on this, the shape reconstruction graph is obtained. Finally, the radius and center coordinate of spherical hedges are calculated. Figure 2 shows the flowchart of the measurement system.

Camera Calibration and Image Processing

Monocular Vision Calibration

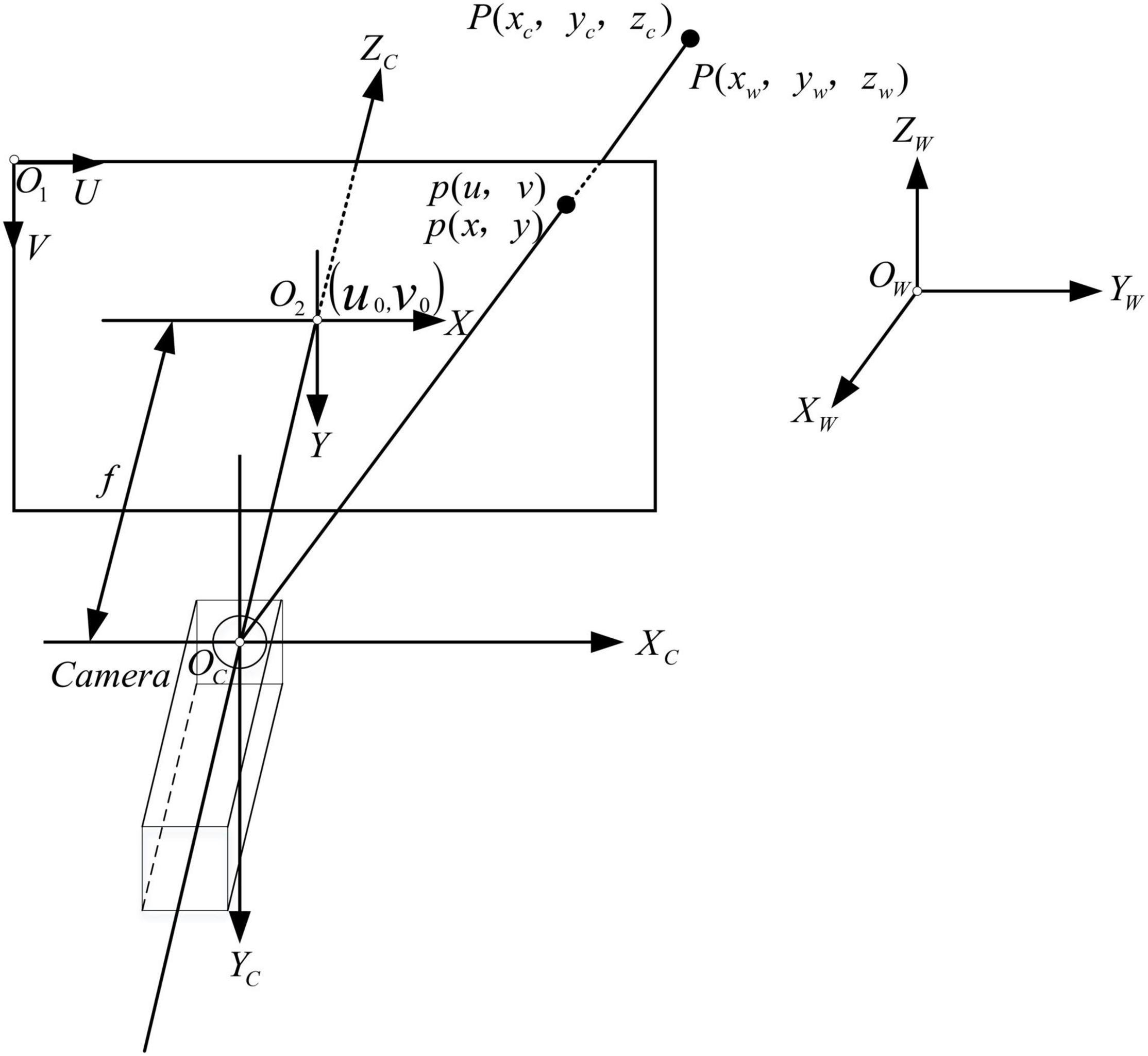

Camera calibration is an important task because it directly determines the accuracy of 3D reconstruction (Long and Dongri, 2019). Following Zhang’s planar camera calibration method, the monocular cameras are calibrated first. Figure 3 presents the schematic diagram of pinhole imaging, in which OC−XCYCZC is the camera coordinate system, OW−XWYWZW is the world coordinate system, O1−UV is the pixel coordinate system, and O2−XY is the image coordinate system. P(xw, yw, zw) is the world coordinate of point P, its corresponding coordinate in the camera coordinate system is P(xc, yc, zc), and its pixel coordinate is p(u, v).

Converting from world coordinate system to pixel coordinate system needs to follow several transformations: transformation between world coordinate system and camera coordinate system; transformation between camera coordinate system and image coordinate system; and transformation between image coordinate system and pixel coordinate system.

The transformation between pixel coordinate system and image coordinate system is expressed as

where sx is the number of pixels per millimeter in the x-direction of O2−XY and sy is the number of pixels per millimeter in the y-direction of O2−XY.

The transformation between the camera coordinate system and image coordinate can be obtained from the pinhole imaging theory. It is formularized as

where f is the focal length of the camera.

The transformation between the camera coordinate system and the world coordinate system can be obtained through rotation and translation. The transformation relationships are expressed as

where R and T represent the rotation matrix and the translation vector, respectively.

Hence, the transformation from the world coordinate system to the pixel coordinate system can be determined by

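where $M_1 = \begin{bmatrix} f_x & 0 & u_0 & 0 \\ 0 & f_y & v_0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix}$, $M_2 = \begin{bmatrix} R & T \\ 0^{T} & 1 \end{bmatrix}$, M = M1 • M2, fx = f • sx, and fy = f • sy. The parameters fx, fy, u0, and v0 are camera intrinsic parameters, and thus, M1 represents the camera’s intrinsic parameter matrix. M2 represents the camera’s extrinsic parameter matrix; hence, M represents the projection matrix of the camera.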
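The chain of transformations above can be sketched numerically. The following Python snippet is a minimal illustration, not the authors' implementation. It assumes the usual pinhole layout in which M1 is a 3 × 4 matrix holding fx, fy, u0, and v0, and M2 is a 4 × 4 matrix holding R and T. The function names, the principal point at the image center, the identity pose, and the test point are illustrative assumptions; fx is derived from the stated focal length (2.8 mm) and pixel size (3.0 μm).

```python
import numpy as np

def projection_matrix(fx, fy, u0, v0, R, T):
    """Build the 3x4 projection matrix M = M1 @ M2 (assumed standard layout)."""
    M1 = np.array([[fx, 0.0, u0, 0.0],
                   [0.0, fy, v0, 0.0],
                   [0.0, 0.0, 1.0, 0.0]])      # intrinsic parameter matrix
    M2 = np.eye(4)                              # extrinsic parameter matrix
    M2[:3, :3] = R
    M2[:3, 3] = np.asarray(T, dtype=float).ravel()
    return M1 @ M2

def world_to_pixel(M, point_w):
    """Project a world point (xw, yw, zw) to pixel coordinates (u, v)."""
    xw_h = np.append(np.asarray(point_w, dtype=float), 1.0)  # homogeneous point
    uvw = M @ xw_h                                            # equals zc * [u, v, 1]
    return uvw[:2] / uvw[2]

# Illustrative values: f = 2.8 mm, pixel size 0.003 mm, so fx = fy = f * sx.
fx = fy = 2.8 / 0.003
u0, v0 = 960.0, 600.0            # assumed: image center of a 1,920 x 1,200 sensor
M = projection_matrix(fx, fy, u0, v0, np.eye(3), np.zeros(3))
u, v = world_to_pixel(M, (100.0, 50.0, 2000.0))   # point 2 m in front of the camera
```

With an identity pose the world and camera frames coincide, so the result reduces to u = fx·xw/zw + u0 and v = fy·yw/zw + v0.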

Moreover, a high-order polynomial model is adopted to correct the image distortion. The high-order polynomial model is expressed as

where $L(r) = 1 + k_1 r + k_2 r^2 + k_3 r^3 + \ldots$, $r = \sqrt{(x - x_0)^2 + (y - y_0)^2}$, x and y refer to the horizontal and vertical coordinate values before correction, respectively, xc and yc refer to the horizontal and vertical coordinate values after correction, respectively, and x0 and y0 refer to the coordinate values of the center of the distorted image. On this basis, the polynomial distortion correction model of the camera can be expressed as

where k1, k2, and k3 are radial distortion coefficients, p1 and p2 are tangential distortion coefficients. Herein, k1, k2, k3, p1, and p2 are also camera intrinsic parameters.
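As an illustration of a model with three radial and two tangential coefficients, the sketch below implements the widely used Brown-Conrady form (the one adopted by OpenCV and by the MATLAB calibration toolbox) on normalized image coordinates. Whether the authors' correction model matches this form term for term is an assumption; the function name and sample values are hypothetical.

```python
def distort_normalized(x, y, k1, k2, k3, p1, p2):
    """Apply radial and tangential distortion to normalized coordinates (x, y).

    Assumed Brown-Conrady form with even powers of r:
      radial     = 1 + k1*r^2 + k2*r^4 + k3*r^6
      tangential = (2*p1*x*y + p2*(r^2 + 2*x^2),  p1*(r^2 + 2*y^2) + 2*p2*x*y)
    """
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_d = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return x_d, y_d

# Pure radial example: only k1 is non-zero.
x_d, y_d = distort_normalized(0.5, 0.0, k1=0.1, k2=0.0, k3=0.0, p1=0.0, p2=0.0)
# All-zero coefficients leave the point unchanged.
x_id, y_id = distort_normalized(0.3, -0.2, 0.0, 0.0, 0.0, 0.0, 0.0)
```

Correcting an image means inverting this mapping, which calibration toolboxes typically do iteratively or through a precomputed remapping table.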

Herein, the camera calibration toolbox (Toolbox_Calib) in MATLAB is used for monocular vision calibration. The calibration process of a monocular vision camera is as follows: image calibration, calibration chessboard extraction, corner points extraction, intrinsic and extrinsic parameters calculation, and calibration error analysis.

Binocular Vision Calibration

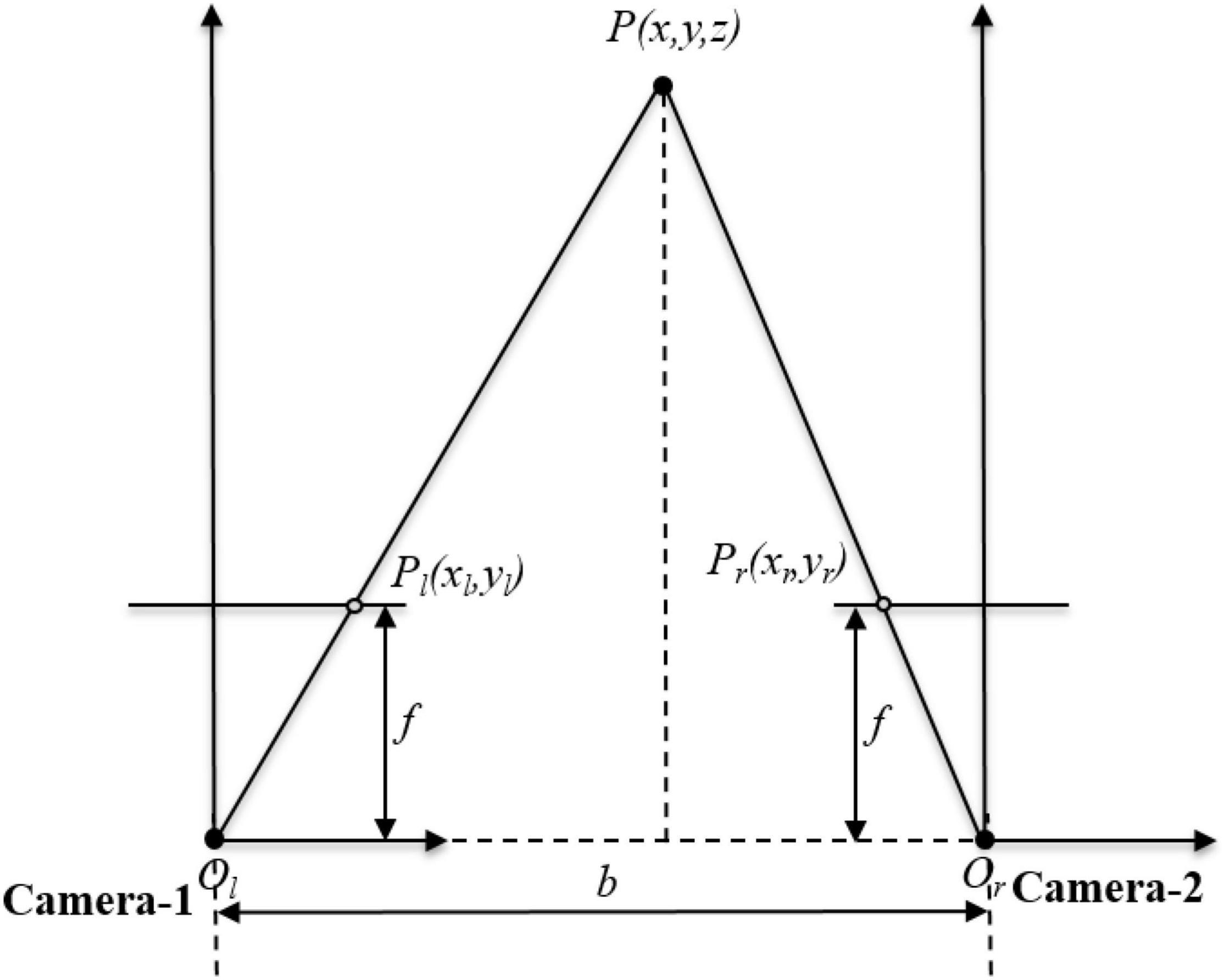

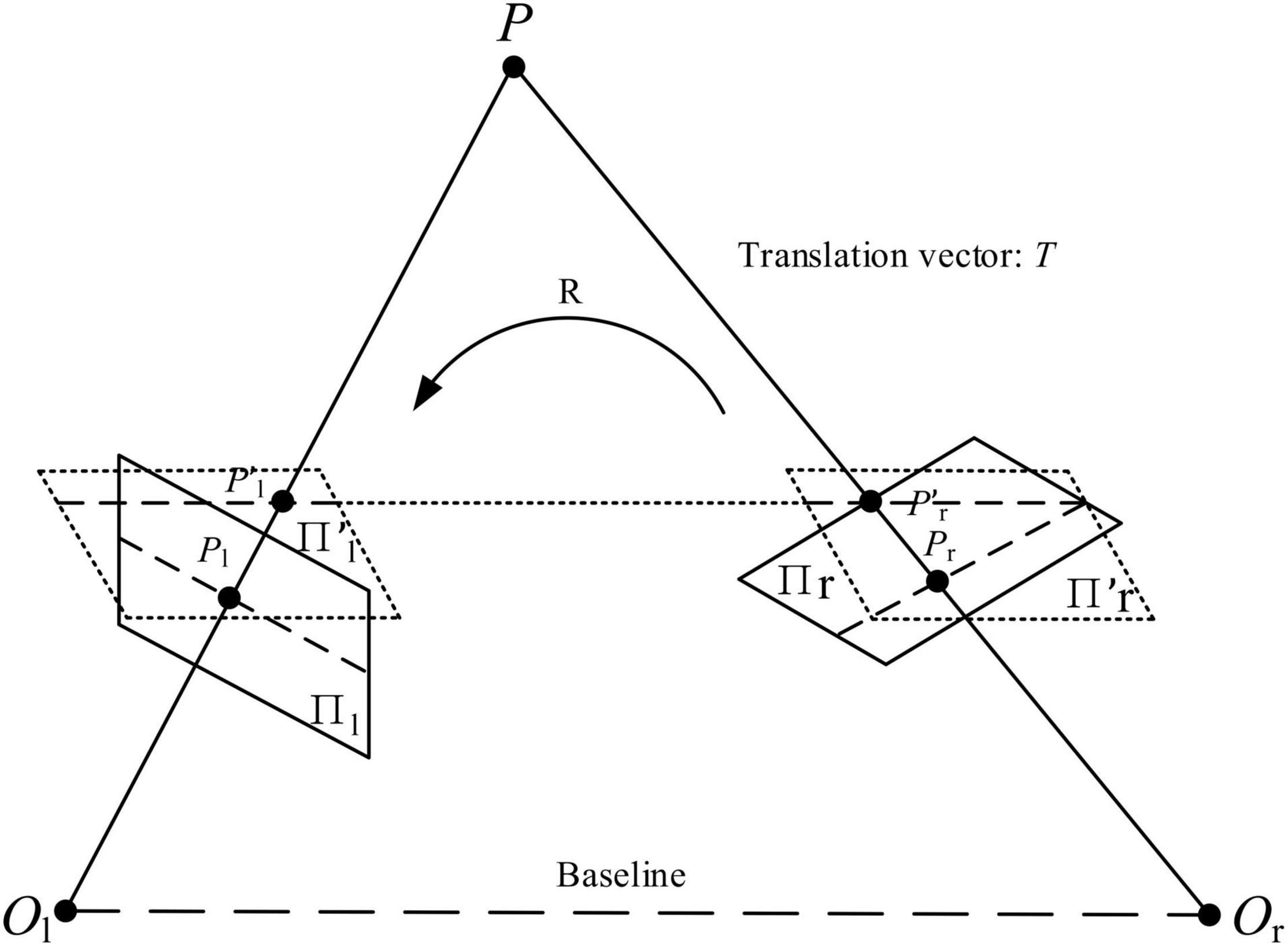

The binocular vision calibration builds on the monocular vision calibration, through which the intrinsic and extrinsic matrices of each camera are obtained. In this paper, a parallel binocular stereo vision system is built. The two cameras are identical and mounted at the same height, with their front ends parallel and level. The parallel structure of the binocular vision system is shown in Figure 4. The left camera is called Camera-1 and the right camera is called Camera-2. The camera coordinate system of Camera-1 is taken as the reference world coordinate system. As indicated above, P(xw, yw, zw) is the world coordinate of point P. Its corresponding image coordinate in Camera-1 is pl(xl, yl), and its corresponding image coordinate in Camera-2 is pr(xr, yr).

According to the principle of similar triangles, the following relation is obtained

where b is the baseline distance of Camera-1 and Camera-2, f is the focal length of the camera, and xl−xr is the disparity value.
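The similar-triangle relation for a parallel rig can be sketched as follows. This is a minimal illustration assuming the standard result z = f·b / (xl − xr), with image coordinates measured from the principal point and expressed in the same unit as f. The function name and the numeric values are hypothetical.

```python
def triangulate_parallel(xl, yl, xr, f, b):
    """Recover (x, y, z) in the Camera-1 frame from a parallel stereo pair.

    xl, yl : image coordinates in Camera-1 (relative to the principal point)
    xr     : x image coordinate in Camera-2 (same row after rectification)
    f      : focal length, in the same unit as xl, yl, xr
    b      : baseline distance; the result is returned in the unit of b
    """
    disparity = xl - xr
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = f * b / disparity        # depth from similar triangles
    x = xl * z / f
    y = yl * z / f
    return x, y, z

# Illustrative numbers: f = 1000 px, b = 140 mm, disparity = 70 px.
x, y, z = triangulate_parallel(xl=100.0, yl=50.0, xr=30.0, f=1000.0, b=140.0)
```

Because depth is inversely proportional to disparity, a fixed disparity error of one pixel produces a larger depth error at 2,600 mm than at 2,000 mm.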

As in Equation 3, the transformation between the camera coordinate system of Camera-1 and the world coordinate system is described by the rotation matrix Rl and translation vector Tl, and that of Camera-2 by the rotation matrix Rr and translation vector Tr. Therefore, the transformation between the camera coordinate systems of Camera-1 and Camera-2 can be represented as
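One common way to write this relation is obtained by eliminating the world point from the two camera equations, assuming each camera follows the form Xc = R·Xw + T. The symbols $X_{cl}$ and $X_{cr}$ (the same point expressed in Camera-1 and Camera-2 coordinates) are notation introduced here for illustration only.

$$
X_{cl} = R_l X_w + T_l, \qquad X_{cr} = R_r X_w + T_r
$$

$$
X_{cr} = R\,X_{cl} + T, \qquad R = R_r R_l^{-1}, \qquad T = T_r - R\,T_l
$$

The second line follows by solving the first camera equation for $X_w = R_l^{-1}(X_{cl} - T_l)$ and substituting it into the second.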

The pixel coordinates of point P in Camera-1 and Camera-2 are pl(ul, vl) and pr(ur, vr), respectively. According to Equation 4, the transformation from the world coordinate system to the pixel coordinate system can be represented as

To solve for the world coordinate [xw, yw, zw]T of point P, taking the optical center of Camera-1 as the origin, an inhomogeneous linear equation is obtained by eliminating Zcl and Zcr from Equations 9 and 10.

Thus, for any point in space, once its pixel coordinates in Camera-1 and Camera-2 are obtained, its world coordinates can be solved by Equation 11.

Region Segmentation and Object Extraction

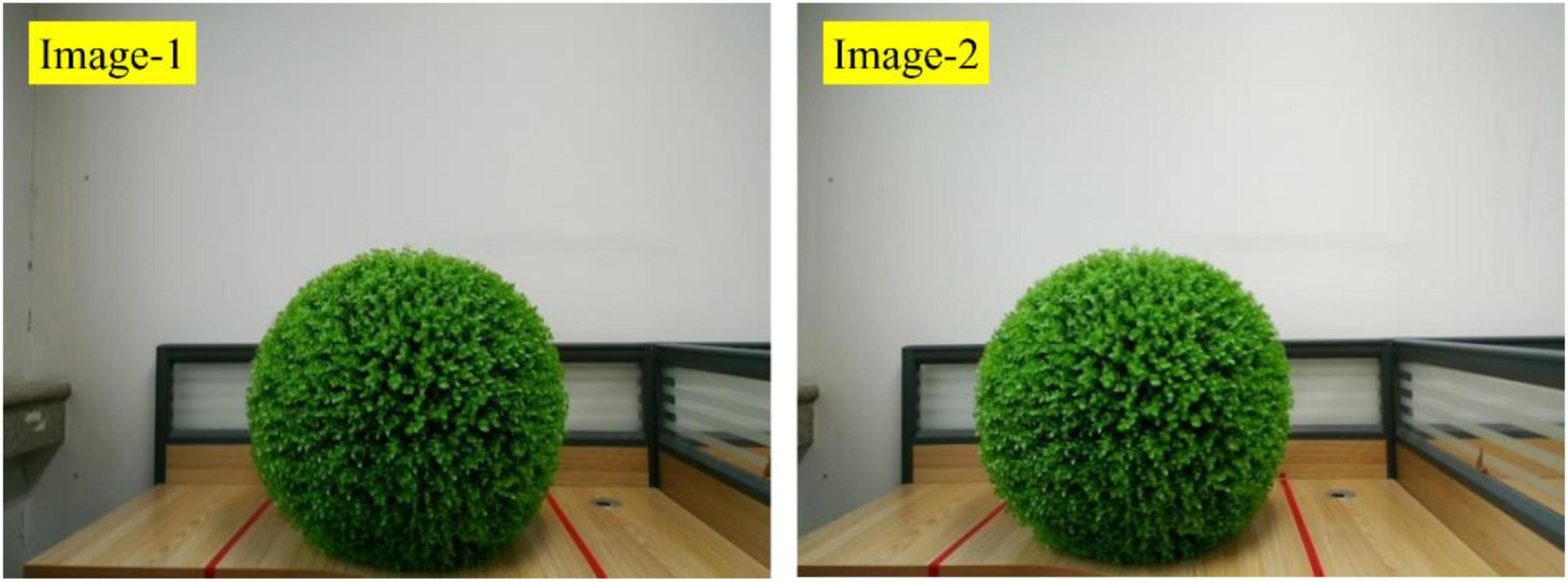

After calibration, the binocular vision system can be used to capture images. The images captured by Camera-1 and Camera-2 are called Image-1 and Image-2, respectively. Figure 5 shows Image-1 and Image-2.

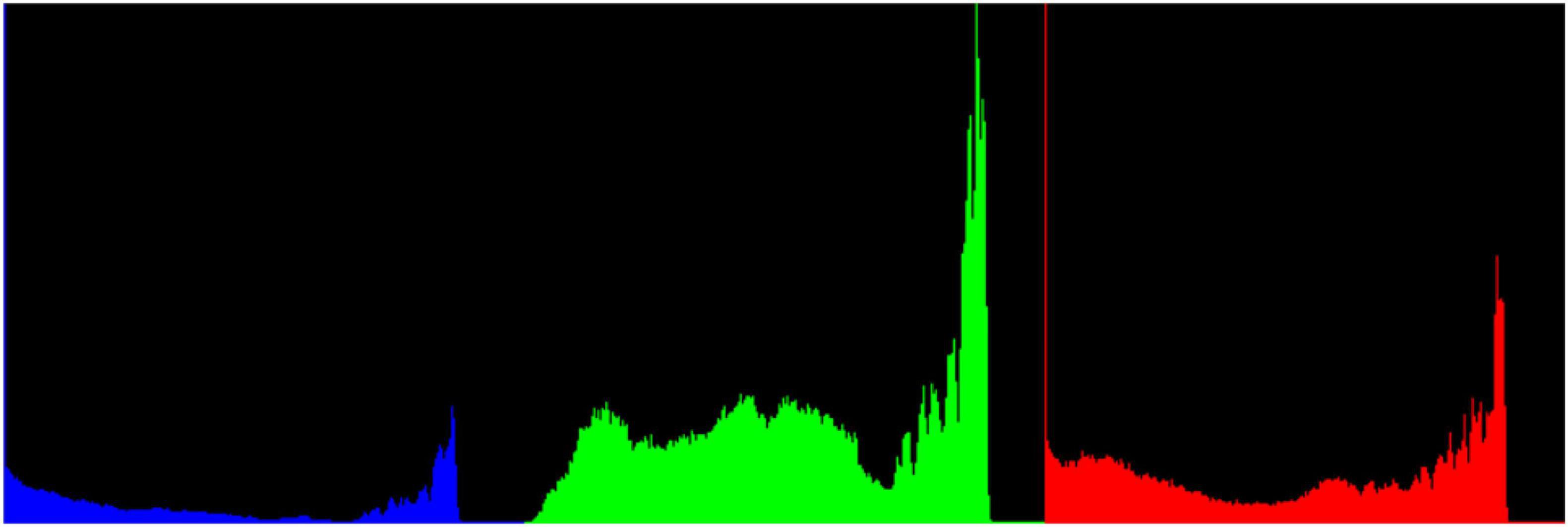

Take Image-2 as an example to introduce the hedges extraction process. The RGB color histogram of Image-2 is shown in Figure 6, which shows that green color accounts for the largest proportion. Ultra-green extraction of green plant images has a good effect on distinguishing the green plants from the surrounding environment, and it is the most commonly used grayscale method for crop recognition or weed recognition. The excess green index (ExG) of ultra-green algorithm is set to ExG = 2G−R−B.
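The excess green index is simple to compute per pixel. The sketch below is a minimal NumPy illustration, not the authors' code. It assumes the image is stored in R, G, B channel order (OpenCV loads images as B, G, R, so the channels would need to be reordered first) and clips the result to the 8-bit range; the function name and sample pixels are hypothetical.

```python
import numpy as np

def excess_green(rgb):
    """Return the ExG = 2G - R - B gray image, clipped to [0, 255]."""
    rgb = rgb.astype(np.float32)          # avoid uint8 overflow during arithmetic
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    exg = 2.0 * g - r - b
    return np.clip(exg, 0, 255).astype(np.uint8)

# Four sample pixels: vivid green, neutral gray, reddish, dark green.
rgb = np.array([[[50, 200, 30], [100, 100, 100]],
                [[120, 100, 90], [10, 60, 20]]], dtype=np.uint8)
exg = excess_green(rgb)
```

Green vegetation maps to high gray values, while gray or reddish background pixels map to zero, which is what makes the subsequent thresholding effective.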

Figure 7A is the 2G−R−B gray image of Image-2. Bilateral filtering is then used to remove image noise. Figure 7B is the bilateral filtered image of Image-2, which shows that the boundary features of the image are largely preserved.

Figure 7. (A) The gray image of Image-2 obtained after the Ultra-green algorithm. (B) The bilateral filtered image of Image-2.

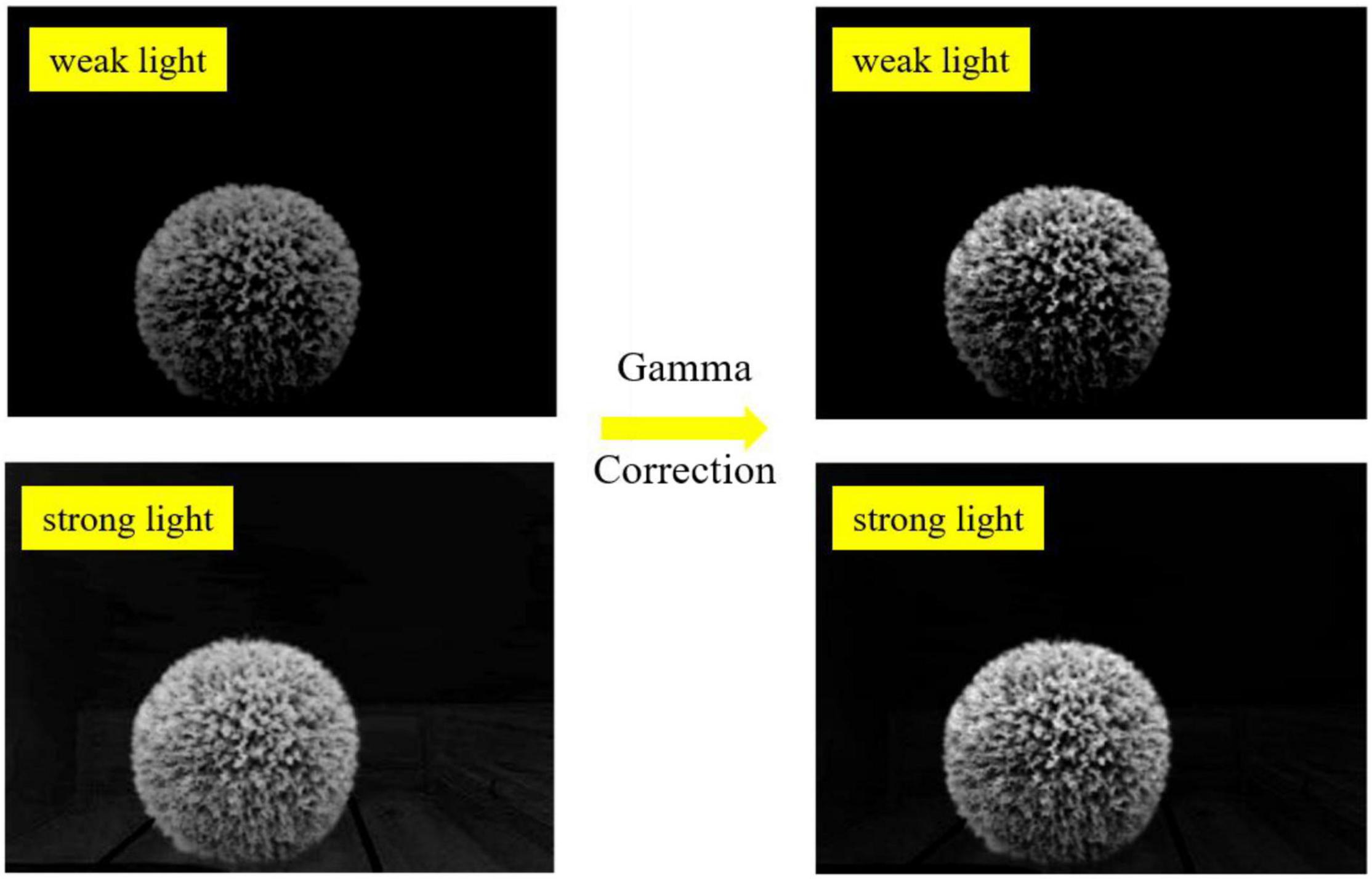

Then, gamma correction is applied to enhance the contrast between the target hedges and the surrounding environment under strong and weak light. The gamma formula can be expressed as

where, x ∈ [0,1], y ∈ [0,1], esp is the compensation factor, and γ is the gamma coefficient.
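A common form of gamma correction that is consistent with these definitions is y = (x + esp)^γ, applied to gray levels normalized to [0, 1]. The sketch below assumes that form; the function name, the default esp = 0, and the sample gray levels are illustrative assumptions.

```python
import numpy as np

def gamma_correct(gray, gamma=1.5, esp=0.0):
    """Gamma-correct an 8-bit gray image, assuming y = (x + esp) ** gamma."""
    x = gray.astype(np.float64) / 255.0          # normalize to [0, 1]
    y = np.clip(x + esp, 0.0, 1.0) ** gamma      # keep the base inside [0, 1]
    return np.round(y * 255.0).astype(np.uint8)

gray = np.array([0, 64, 128, 255], dtype=np.uint8)
out = gamma_correct(gray, gamma=1.5)
```

With γ > 1 the mapping leaves black and white fixed and darkens the mid-tones, which stretches the contrast between bright vegetation pixels and a darker background.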

Figure 8 shows the grayscale mapping relationship between the output image and the input image with different γ values.

From Figure 8, it can be seen that different γ values should be used when performing gamma transformations on images with different grayscale distributions. In this paper, γ is set to 1.5, and the contrast is enhanced to some extent after gamma correction, as shown in Figure 9.

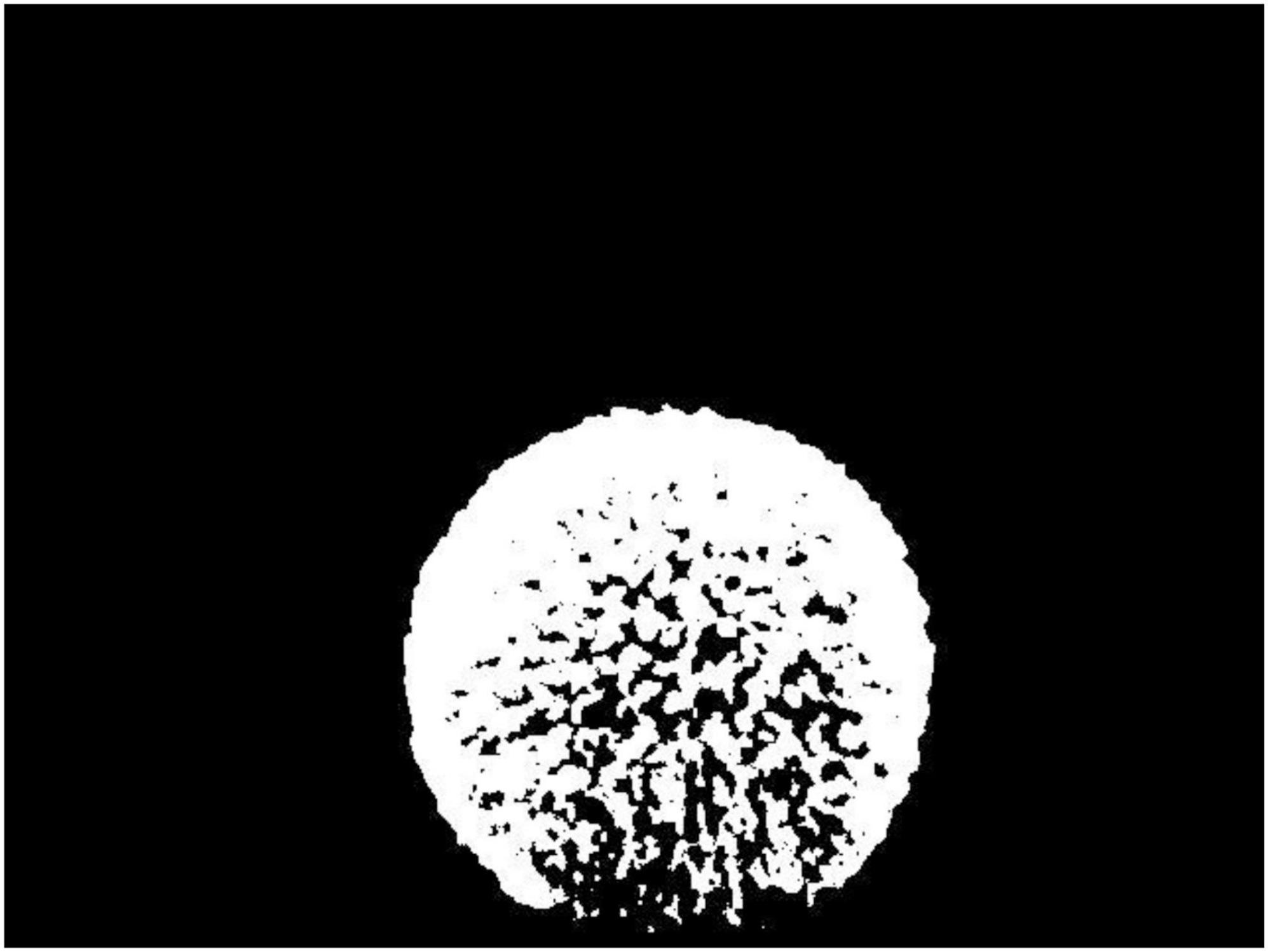

Finally, the optimal binarization threshold is obtained using the maximum between-class variance method (OTSU), after which the 2D coordinate sequence of the spherical hedges can be obtained from Image-2 (Caraffa et al., 2015). Figure 10 shows the binary image of Image-2.
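The maximum between-class variance criterion can be sketched in a few lines of NumPy. This is a minimal illustration of the standard OTSU method on an 8-bit gray image, not the authors' code; the function names and the toy image are hypothetical.

```python
import numpy as np

def otsu_threshold(gray):
    """Return the threshold t that maximizes the between-class variance."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                    # probability of class {0..t}
    mu = np.cumsum(prob * np.arange(256))      # cumulative mean up to t
    mu_total = mu[-1]
    denom = omega * (1.0 - omega)
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b2 = (mu_total * omega - mu) ** 2 / denom
    sigma_b2[denom == 0] = 0.0                 # thresholds with an empty class
    return int(np.argmax(sigma_b2))

def binarize(gray):
    """Binarize with the OTSU threshold; pixels above t become 255."""
    t = otsu_threshold(gray)
    return np.where(gray > t, 255, 0).astype(np.uint8), t

# Toy image with a dark background (20) and a bright target (200).
gray = np.array([[20, 20, 200, 200],
                 [20, 20, 200, 200]], dtype=np.uint8)
mask, t = binarize(gray)
```

The coordinates of the foreground pixels can then be read from the mask, for example with `np.argwhere(mask == 255)`.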

Shape Reconstruction and Measurement

Stereo Image Rectification

It is difficult to align the two cameras in this binocular vision system to be perfectly parallel (Wu et al., 2017). After binocular vision calibration, stereo image rectification based on Bouguet’s algorithm is applied so that the two image planes become coplanar and row-aligned. Figure 11 shows the principle of Bouguet’s algorithm. The planes Πl and Πr are the image planes of Camera-1 and Camera-2 before epipolar rectification, and the planes Π′l and Π′r are the image planes of Camera-1 and Camera-2 after epipolar rectification. The points p′l and p′r are the pixel coordinates of point P in the planes Π′l and Π′r. The rotation matrix R and translation vector T between the camera coordinate systems of Camera-1 and Camera-2 are obtained from the camera calibration results.

In Figure 11, the practical binocular vision system can be corrected to a parallel binocular system by multiplying the coordinate systems of Camera-1 and Camera-2 by their respective stereo rectification matrices (Rrect) as follows

where T = [Tx, Ty, Tz]T is the translation vector between the two cameras, from which the rectification matrix Rrect is constructed.
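In the textbook form of Bouguet's construction, Rrect is built from three orthonormal rows derived from T: e1 along the baseline, e2 orthogonal to e1 and to the optical axis, and e3 = e1 × e2. The sketch below assumes that form and omits the half-rotations that split R between the two cameras; the function name is hypothetical, and T is the calibration result reported in the Results section.

```python
import numpy as np

def rectification_rotation(T):
    """Build R_rect whose first row points along the baseline T (assumed textbook form)."""
    T = np.asarray(T, dtype=float).ravel()
    e1 = T / np.linalg.norm(T)                                  # along the baseline
    e2 = np.array([-T[1], T[0], 0.0]) / np.hypot(T[0], T[1])    # orthogonal to e1 and the optical axis
    e3 = np.cross(e1, e2)                                       # completes the right-handed frame
    return np.vstack([e1, e2, e3])

T = np.array([-119.2486, 0.3206, 3.3474])   # translation vector reported in the calibration results (mm)
R_rect = rectification_rotation(T)
aligned = R_rect @ T                         # baseline expressed in the rectified frame
```

After this rotation the baseline has only an x component, so the epipolar lines become horizontal and corresponding points lie in the same image row.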

Shape Reconstruction

According to the morphological characteristics of spherical hedges, the surface fitting model is established by the SGBM algorithm. SGBM is a classic semi-global matching algorithm that offers both good stereo matching quality and a high processing rate.

Following Romaniuk and Roszkowski (2014), the energy function of the SGBM algorithm can be represented as

where C(p, Dp) is the matching cost, Np is the set of pixels adjacent to pixel p, and P1 and P2 are penalty coefficients.

Considering operating efficiency, Np is set to 8. The 2D search problem is divided into eight one-dimensional problems, thus using dynamic programming to treat each one-dimensional problem separately. When disparity is d, the matching cost value of point P in the r direction can be represented as

where C(p, d) is the matching cost when the disparity equals d; $\min\big(L_r(p-r, d),\ L_r(p-r, d-1) + P_1,\ L_r(p-r, d+1) + P_1,\ \min_i L_r(p-r, i) + P_2\big)$ is the minimum matching cost of the preceding pixel of p along direction r; and $\min_k L_r(p-r, k)$ is the constraint that prevents the accumulated cost from growing without bound.

Then, the matching costs along each path are calculated and summed according to the SGBM algorithm. The sum of the matching costs can be expressed by

Following Hong and Ahn (2020), the optimal disparity d corresponds to the minimum of the summed matching cost.
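The path-wise cost aggregation and the final minimum search can be illustrated on a single scanline. The sketch below implements the standard semi-global recursion along one direction (left to right) and a winner-take-all selection; the full algorithm sums such paths over all eight directions. The function names, penalties, and toy cost volume are illustrative assumptions, not the authors' settings.

```python
import numpy as np

def aggregate_path(cost_row, P1, P2):
    """Aggregate costs along one scanline; cost_row has shape (width, n_disparities)."""
    W, D = cost_row.shape
    L = np.empty((W, D), dtype=np.float64)
    L[0] = cost_row[0]                                          # no predecessor at the path start
    for x in range(1, W):
        prev = L[x - 1]
        prev_min = prev.min()
        minus = np.concatenate(([np.inf], prev[:-1])) + P1      # L(p-r, d-1) + P1
        plus = np.concatenate((prev[1:], [np.inf])) + P1        # L(p-r, d+1) + P1
        best = np.minimum(np.minimum(prev, minus),
                          np.minimum(plus, prev_min + P2))      # large jumps pay P2
        L[x] = cost_row[x] + best - prev_min                    # subtract to bound growth
    return L

def winner_take_all(S):
    """Pick, for each pixel, the disparity with the minimum aggregated cost."""
    return np.argmin(S, axis=-1)

cost = np.full((5, 4), 10.0)
cost[:, 2] = 0.0                       # true disparity is 2 everywhere
cost[2] = [4.0, 10.0, 5.0, 10.0]       # one noisy pixel whose raw minimum is at d = 0
raw = winner_take_all(cost)
smoothed = winner_take_all(aggregate_path(cost, P1=2.0, P2=8.0))
```

In this toy case the raw costs give the disparity sequence 2, 2, 0, 2, 2, whereas the aggregated costs give 2 at every pixel, because the smoothness penalties outweigh the small cost advantage of the outlier.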

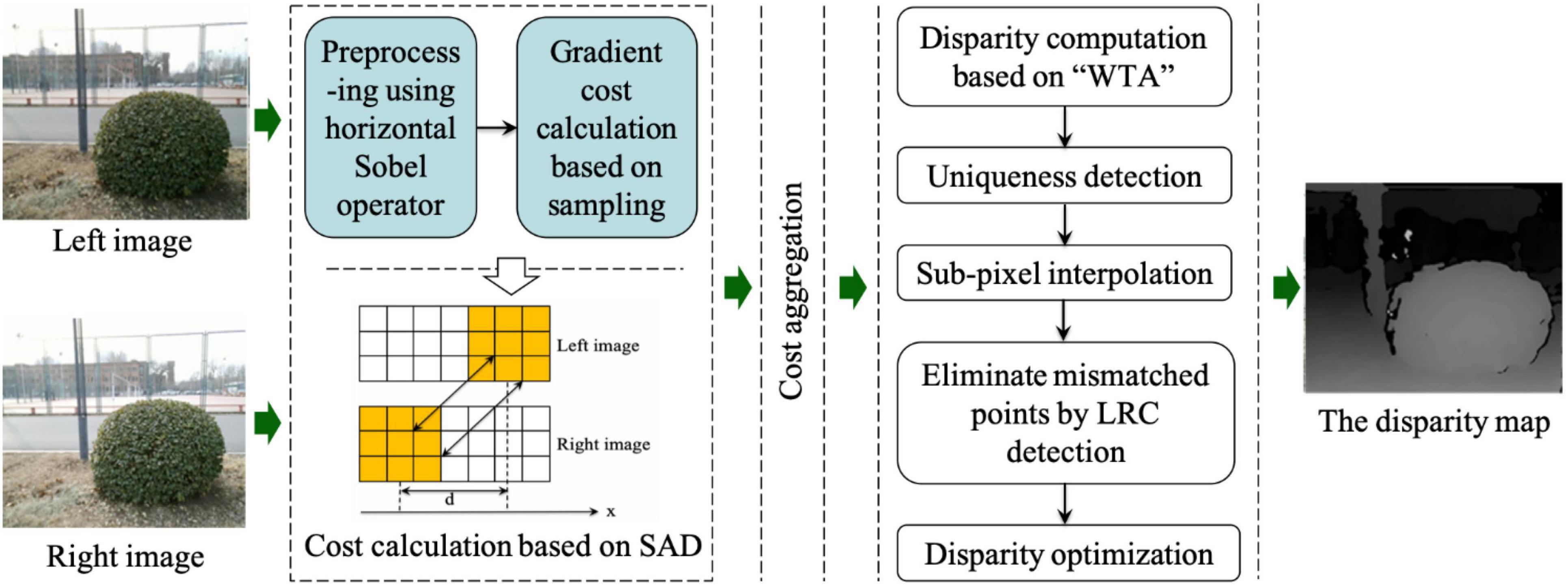

This study improves the SGBM algorithm in two main areas: occlusion detection and disparity optimization. The left-right consistency (LRC) method is used to remove mismatched points, and the bilateral filtering algorithm is used to fill the holes in the disparity map. Then, the point cloud coordinates corresponding to the disparity map are calculated. Figure 12 shows the flowchart of the improved SGBM algorithm.

Occlusion detection based on the LRC checks the disparity of every pixel in an image. When the disparities of a pixel in the left and right imaging planes are inconsistent, the pixel is regarded as an occluded point. To identify occluded points, the disparity error is defined as

where d(q) is the disparity of pixel q in the left imaging plane (Camera-1), d(q + d(q)) is the disparity of the corresponding pixel in the right imaging plane (Camera-2) when the disparity of pixel q is d.
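A left-right consistency check can be sketched as follows. The snippet assumes the common convention that a left pixel at column x with disparity d corresponds to the right pixel at column x − d; the sign convention used in the disparity error above may differ depending on how disparity is defined. The function name, the tolerance `max_diff`, and the toy disparity maps are hypothetical.

```python
import numpy as np

def lrc_mask(disp_left, disp_right, max_diff=1):
    """Return True where left and right disparities agree within max_diff pixels."""
    H, W = disp_left.shape
    cols = np.arange(W)[None, :]
    xr = cols - np.rint(disp_left).astype(int)        # matching column in the right image
    inside = (xr >= 0) & (xr < W)                     # matches that fall inside the image
    xr_safe = np.clip(xr, 0, W - 1)
    d_right = np.take_along_axis(disp_right, xr_safe, axis=1)
    return inside & (np.abs(disp_left - d_right) <= max_diff)

disp_left = np.array([[2, 2, 2, 2, 2, 2]])
disp_right = np.array([[2, 2, 2, 9, 2, 2]])          # one inconsistent right disparity
valid = lrc_mask(disp_left, disp_right)
```

Pixels where the mask is False are treated as mismatched or occluded and are left as holes to be filled in the disparity optimization step.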

Disparity optimization refers to filling the holes in the disparity map. After occlusion detection, mismatched or occluded points are removed, so some pixels have no disparity value. The depth of an occluded point removed by the LRC detection is greater than the depth of the object that occludes it. Therefore, the disparity of an occluded point can be estimated from the non-occluded pixels and filled into the disparity map. Since hole filling in the disparity map easily creates stripes, an edge-preserving filter is used to reduce noise while retaining the edge information of the image. The disparity processed by bilateral filtering can be expressed as

where σs and σr are the smoothing parameters in the spatial domain and the pixel range domain, Ip and Iq are the input disparities of pixel p and pixel q, and Wp is the bilateral filtering weight.

The pixel coordinates of p and q are marked as p(x, y) and q(k, l), respectively. Then, Gσs(||p-q||) and Gσr(Ip−Iq) can be expressed as

where I(x, y) and I(k, l) are the disparity values of pixels p and q in the disparity map.
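For reference, the standard bilateral filter that matches these definitions can be written as below. This is the usual textbook form, given here as an assumption about the authors' expressions; $D_p$ (the filtered disparity), $S$ (the filter window around p), and $W_p$ (the normalizing weight) are notation introduced for illustration.

$$
D_p = \frac{1}{W_p} \sum_{q \in S} G_{\sigma_s}(\lVert p - q \rVert)\, G_{\sigma_r}(I_p - I_q)\, I_q,
\qquad
W_p = \sum_{q \in S} G_{\sigma_s}(\lVert p - q \rVert)\, G_{\sigma_r}(I_p - I_q)
$$

$$
G_{\sigma_s}(\lVert p - q \rVert) = \exp\!\left(-\frac{(x - k)^2 + (y - l)^2}{2\sigma_s^2}\right),
\qquad
G_{\sigma_r}(I_p - I_q) = \exp\!\left(-\frac{\big(I(x, y) - I(k, l)\big)^2}{2\sigma_r^2}\right)
$$

The spatial term favors nearby pixels and the range term favors pixels with similar disparity, so smoothing stops at disparity edges instead of blurring across them.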

Dimension Measurement of Spherical Hedges

After obtaining the disparity map through stereo matching, the 3D point cloud coordinates of the detected spherical hedges can be calculated by Equation 7. Then, the deformed shape of the spherical hedges is mapped, and the error between the actual coordinate and the fitted coordinate of each 3D point is calculated. Finally, the center coordinate and radius of the spherical hedges are obtained when the sum of errors is minimal.

In the calculation process, O(x0, y0, z0) is the center of the fitting sphere, its corresponding radius is r, and (xi, yi, zi) is the coordinate of a point in the point cloud. The error between the actual coordinate and the fitted coordinate of each 3D point can be expressed as (Guo et al., 2020)

Then, the sum of error is demonstrated as

where N is the number of points in the 3D point cloud, and E is the sum of errors.

In Equation 21, E is a function of x0, y0, z0, and r. Thus, the partial derivatives of E with respect to x0, y0, z0, and r are all set to zero, which yields the minimum of E. The extremum condition can be expressed as

Combining Equations 20–22 gives

To solve for x0, y0, and z0, Equation 23 can be transformed into

where,

Then, the radius of spherical hedges is obtained by
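The least-squares sphere fit can be sketched with a linear solve. The snippet below uses the algebraic formulation, in which the sphere equation is rearranged into a system that is linear in the center coordinates and one auxiliary constant. It assumes the per-point error is the difference between the squared distance to the center and r², which leads to the same kind of linear system. The function name and the synthetic point cloud are hypothetical.

```python
import numpy as np

def fit_sphere(points):
    """Algebraic least-squares sphere fit.

    Rewrites (x-x0)^2 + (y-y0)^2 + (z-z0)^2 = r^2 as the linear system
    2*x*x0 + 2*y*y0 + 2*z*z0 + c = x^2 + y^2 + z^2, with c = r^2 - |center|^2.
    """
    P = np.asarray(points, dtype=float)
    A = np.hstack([2.0 * P, np.ones((P.shape[0], 1))])
    b = np.sum(P * P, axis=1)
    sol, *_ = np.linalg.lstsq(A, b, rcond=None)
    center = sol[:3]
    radius = float(np.sqrt(sol[3] + center @ center))
    return center, radius

# Synthetic check: only the half of the sphere facing the camera is visible.
true_center = np.array([50.0, -20.0, 2300.0])   # mm, illustrative
true_radius = 300.0                              # mm, illustrative
theta, phi = np.meshgrid(np.linspace(0.2, np.pi - 0.2, 15),
                         np.linspace(np.pi, 2.0 * np.pi, 20))
directions = np.stack([np.sin(theta) * np.cos(phi),
                       np.cos(theta),
                       np.sin(theta) * np.sin(phi)], axis=-1).reshape(-1, 3)
cloud = true_center + true_radius * directions
center, radius = fit_sphere(cloud)
```

The fit recovers the center and radius even though only one hemisphere is sampled, which mirrors the measurement situation where the cameras see only the front of the hedge. With noisy points the estimate degrades as the visible portion of the sphere shrinks.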

Results

Binocular Vision Calibration Test and Results

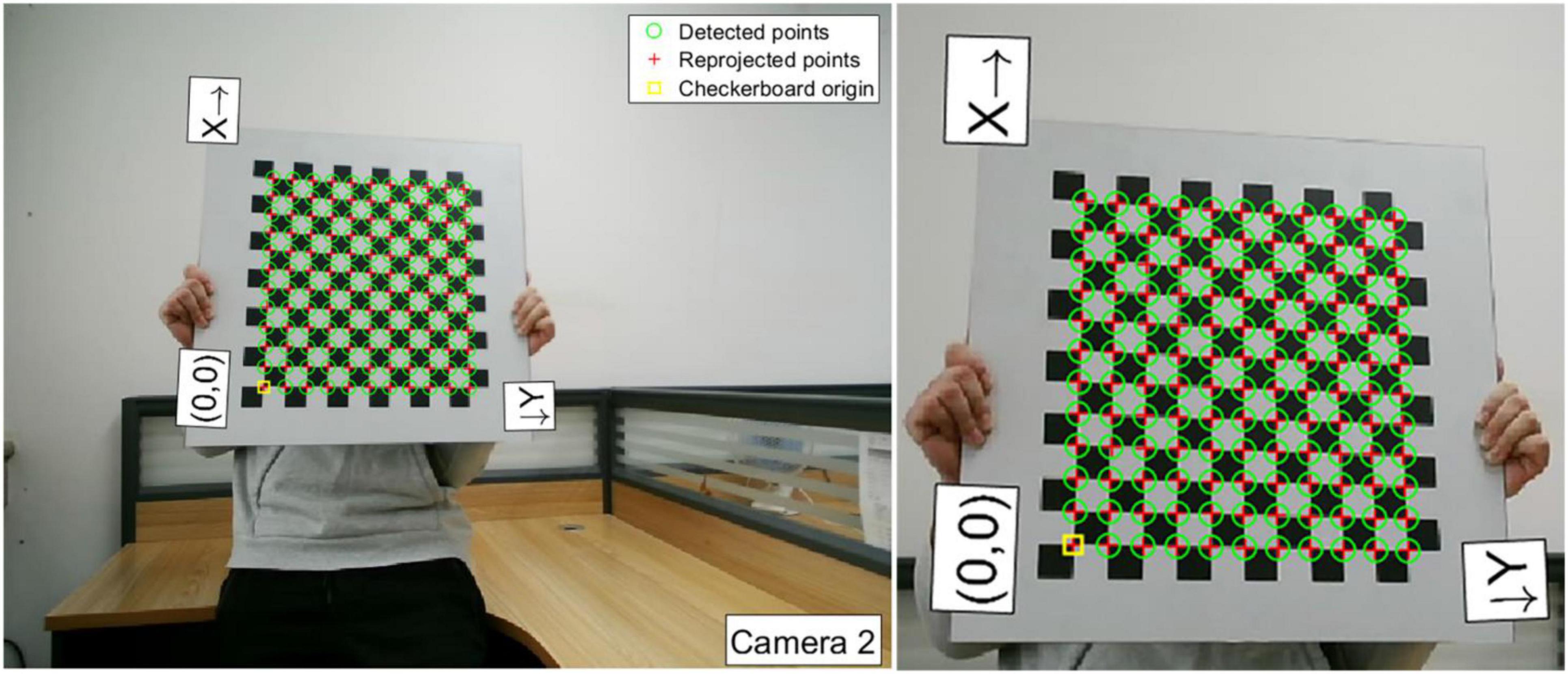

A calibration chessboard is used in the experiment. The chessboard is placed in front of Camera-1 and Camera-2 at different positions and attitudes, and sixteen groups of calibration images are captured. Then, the camera calibration toolbox (Toolbox_Calib) in MATLAB is used to extract the corners of the chessboard. The detailed features of the chessboard are as follows: the material is armored glass; the board size is 500 mm × 500 mm; the chessboard size is 390 mm × 360 mm; the check array is 13 × 12; the check size is 30 mm × 30 mm; and the precision is ±0.01 mm. In the captured calibration images, the number of corners that can be extracted from each image is 12 × 11. Figure 13 shows one of the corner extraction results of Camera-2.

Taking the first corner in the lower left (marked with a yellow square in Figure 13) as the origin, the “X”-“Y” coordinate system is set up in the chessboard plane. The pixel coordinates of each corner can be obtained (Qiu and Huang, 2021). The world coordinates of the corners are obtained from the pixel coordinates of the corners and the check size. Then, the transformation matrix can be calculated by linear calculation. By matrix decomposition, the intrinsic parameters (fx, fy, u0, and v0) of Camera-1 and Camera-2 can be obtained. In addition, a polynomial distortion correction model is built to correct the distortion, and the radial and tangential distortion coefficients (k1, k2, p1, and p2) are given. The intrinsic parameters and distortion coefficients of Camera-1 and Camera-2 are listed in Table 1.

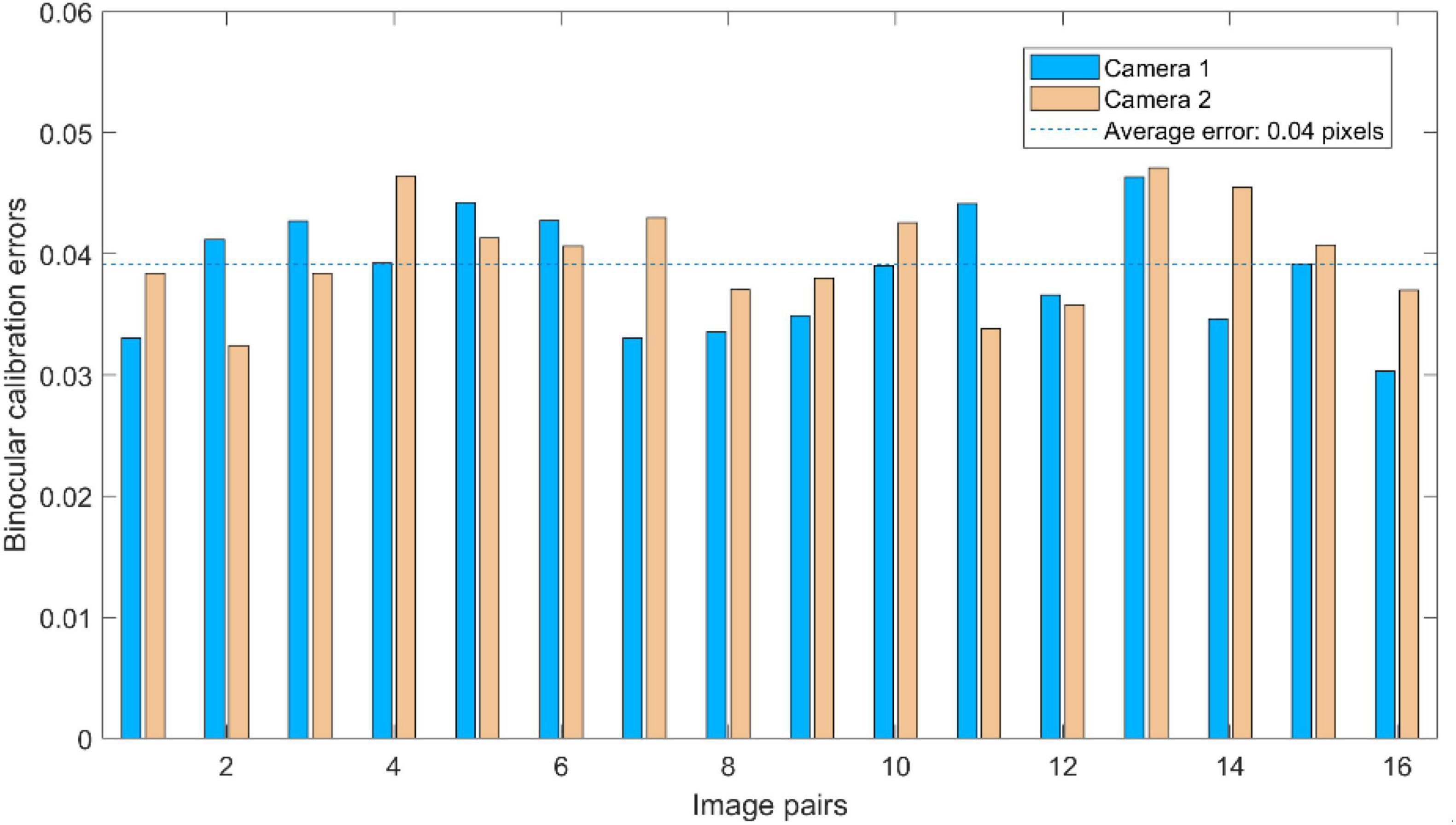

To assess the accuracy of the calibration results listed in Table 1, the calibration errors of the captured calibration images are analyzed. The corner coordinates in the “X”-“Y” coordinate system are back-projected and compared with the corresponding actual pixel positions of the corners in the chessboard to obtain the calibration errors. The binocular calibration errors of each image pair are shown in Figure 14. As can be seen in Figure 14, the binocular calibration errors for each pair of images are less than 0.05 pixels, and the average errors of Camera-1 and Camera-2 are both 0.04 pixels.

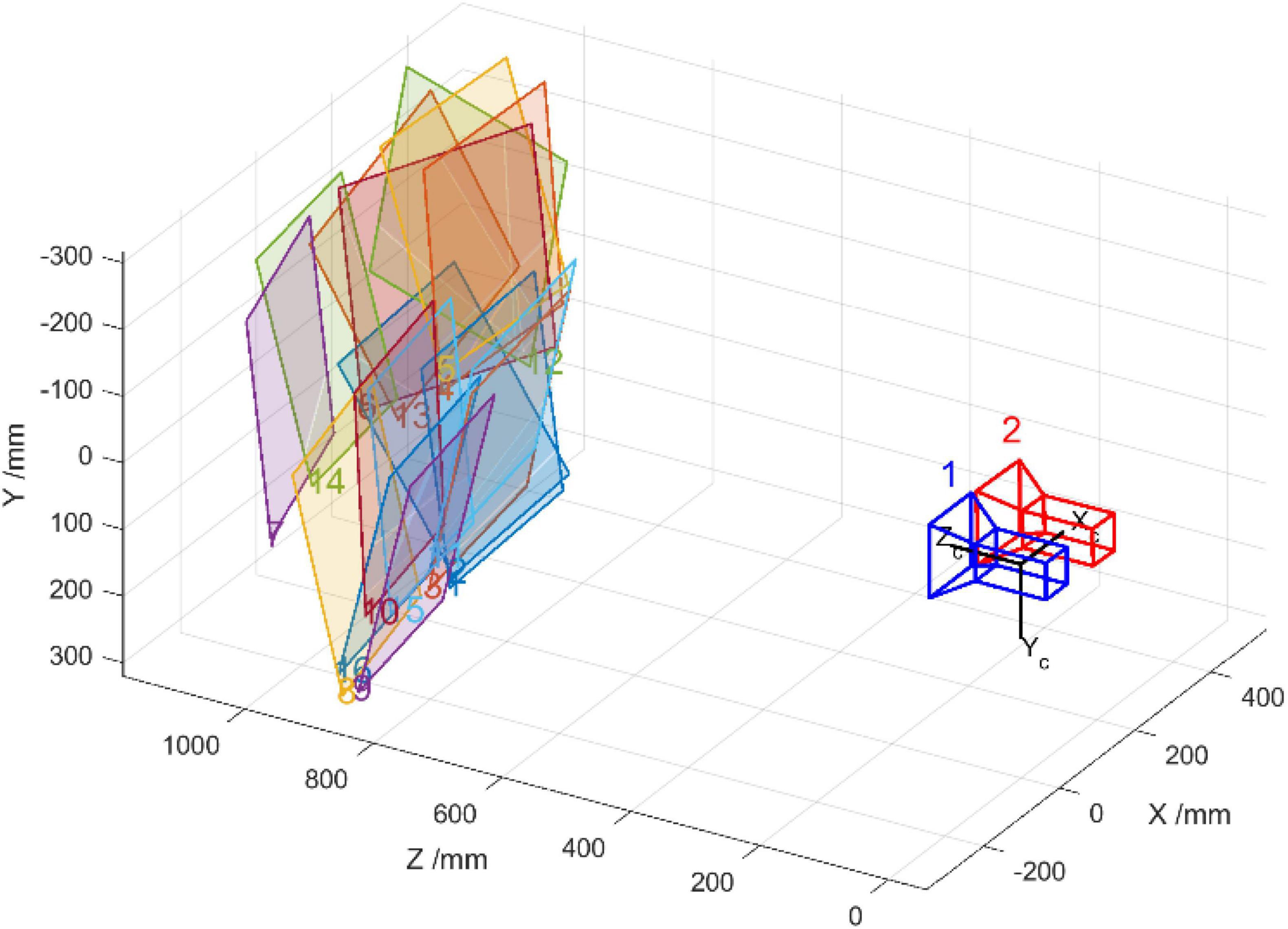

Then, the binocular vision calibration is carried out using the binocular calibration toolbox in MATLAB. The two cameras are installed so that they are close to coplanar and row-aligned. As shown in Figure 15, “1” and “2” represent the positions and attitudes of Camera-1 and Camera-2, respectively. The sixteen colored squares represent the positions and attitudes of the calibration chessboard in the sixteen images. In addition, the relative position between Camera-1 and Camera-2 can be obtained. The intrinsic parameters and distortion coefficients of Camera-1 and Camera-2 obtained by monocular vision calibration are refined iteratively. The translation vector and rotation matrix between Camera-1 and Camera-2 are then obtained; the translation vector is T = [−119.2486 0.3206 3.3474]T.

The binocular calibration errors are also obtained by reverse projection of the spatial coordinates of the corners. The errors for each pair of images are less than 0.07 pixels, and the average error of binocular calibration is less than 0.04 pixels. The calibration accuracy meets the requirements of the binocular vision system in this study.

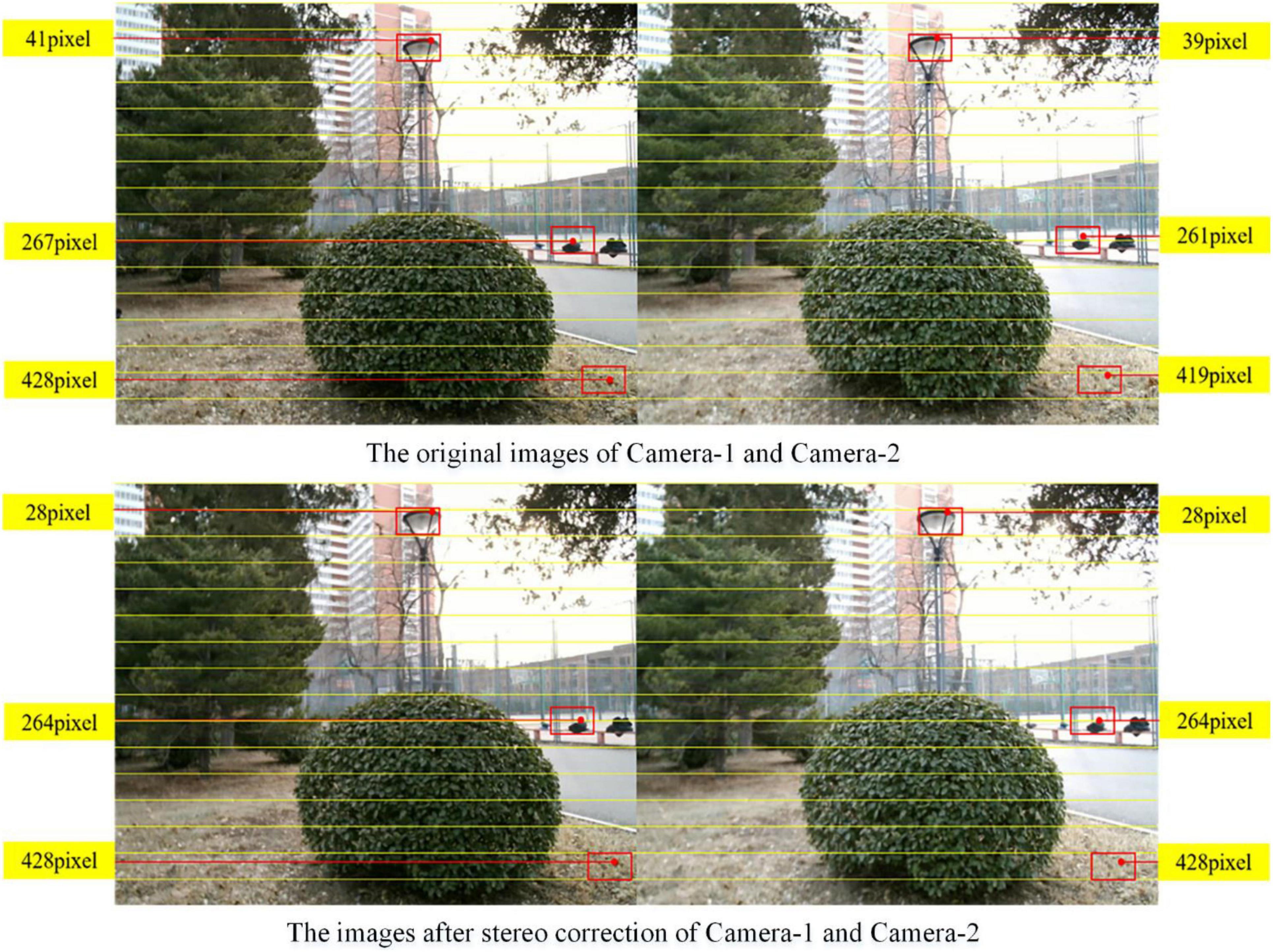

Afterward, the images collected outdoors by this binocular vision system are used for stereo rectification, and the result is shown in Figure 16. Red dots are marked on the images, and their row positions, in pixels from the top of the image, are recorded. In the original image of Camera-1 the marker rows are 41, 267, and 428, whereas in that of Camera-2 they are 39, 261, and 419. After stereo rectification, the pixels of the same object in the images of Camera-1 and Camera-2 lie in the same row, and the marker rows in both images are 28, 264, and 428.

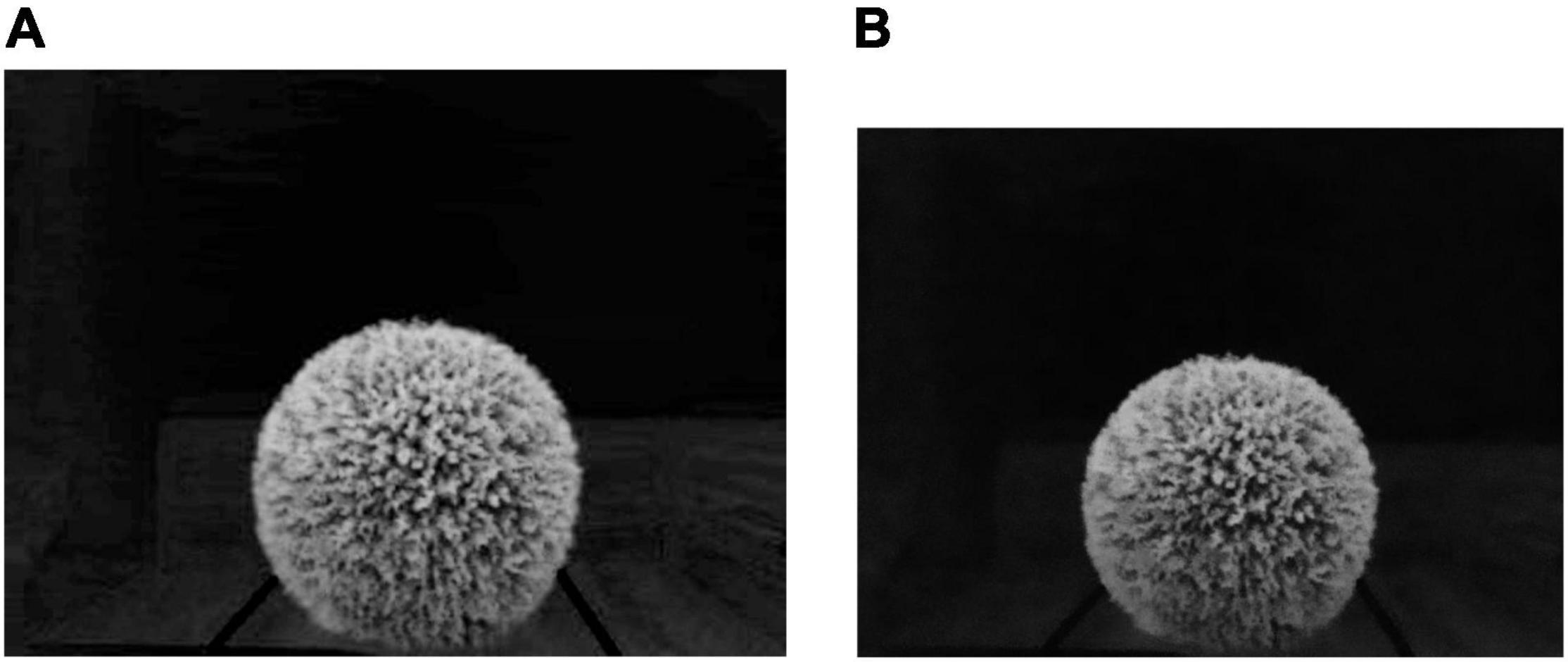

Laboratory Test and Results

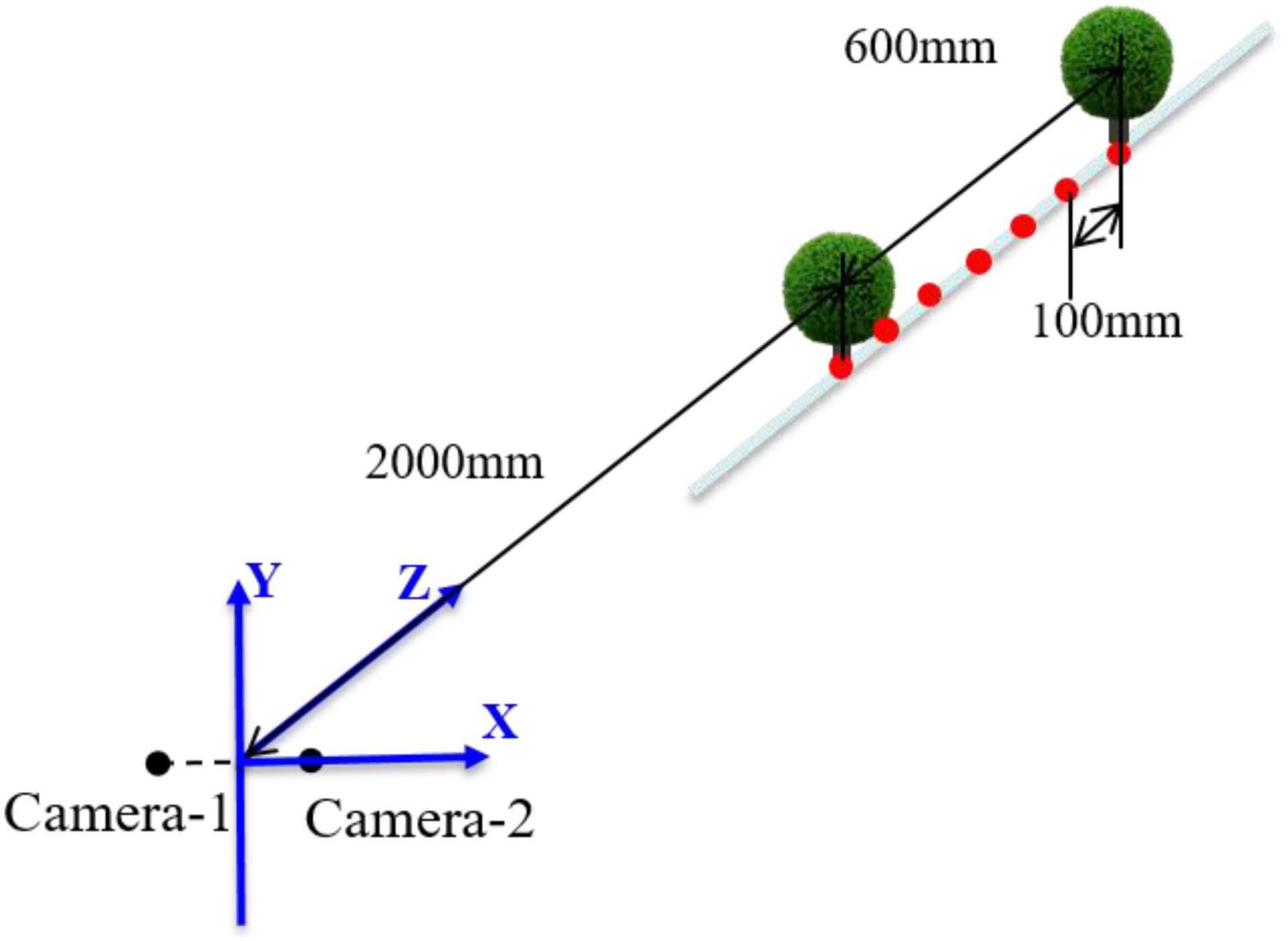

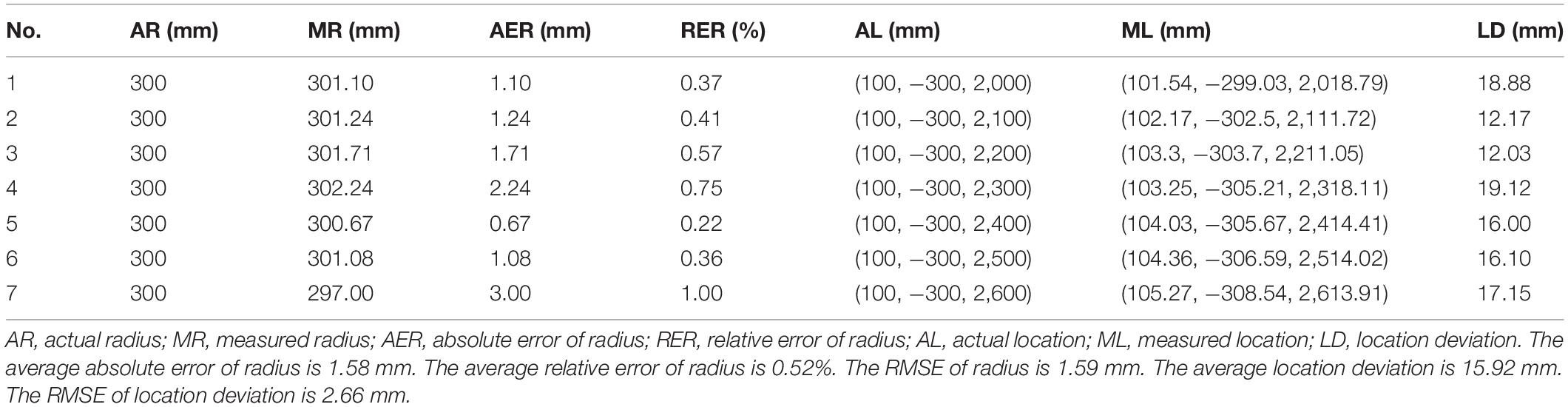

To better reflect the 3D reconstruction effect of spherical hedges, a standard spherical hedge with a diameter of 60 mm was first used in a laboratory test. Different test data sets were obtained by changing the distance between the spherical hedge and the binocular vision system. Then, the stability and accuracy of the measurement system were verified from the errors between the measured and actual values. In the laboratory test, a straight line was marked in front of the binocular vision system, and seven positions were set along the Z direction at 100 mm intervals in the range of 2,000–2,600 mm, shown as red dots in Figure 17. Seven groups of images were captured, and the test values of the sphere center and radius are shown in Table 2.

According to Table 2, the maximum and average errors of the radius of the standard spherical hedge measured by the proposed system were 3.00 mm and 1.58 mm, respectively; the maximum and average relative errors of the radius were 1.00% and 0.52%, respectively; and the root mean square error (RMSE) of the radius was 1.59 mm. Moreover, the error and relative error of the radius increased with the distance in the Z direction, and the maximum relative error was 1.00% at a distance of 2,600 mm, which indicated the high accuracy and stability of the proposed system for radius measurement. The minimum, maximum, and average location deviations were 12.03, 19.12, and 15.92 mm in the range of 2,000–2,600 mm, and the RMSE of the center coordinate of the spherical hedge was 2.66 mm. These results showed that the proposed system had high accuracy in positioning and dimension measurement and remained stable and applicable over different distances within a certain range.

Outdoor Test and Results

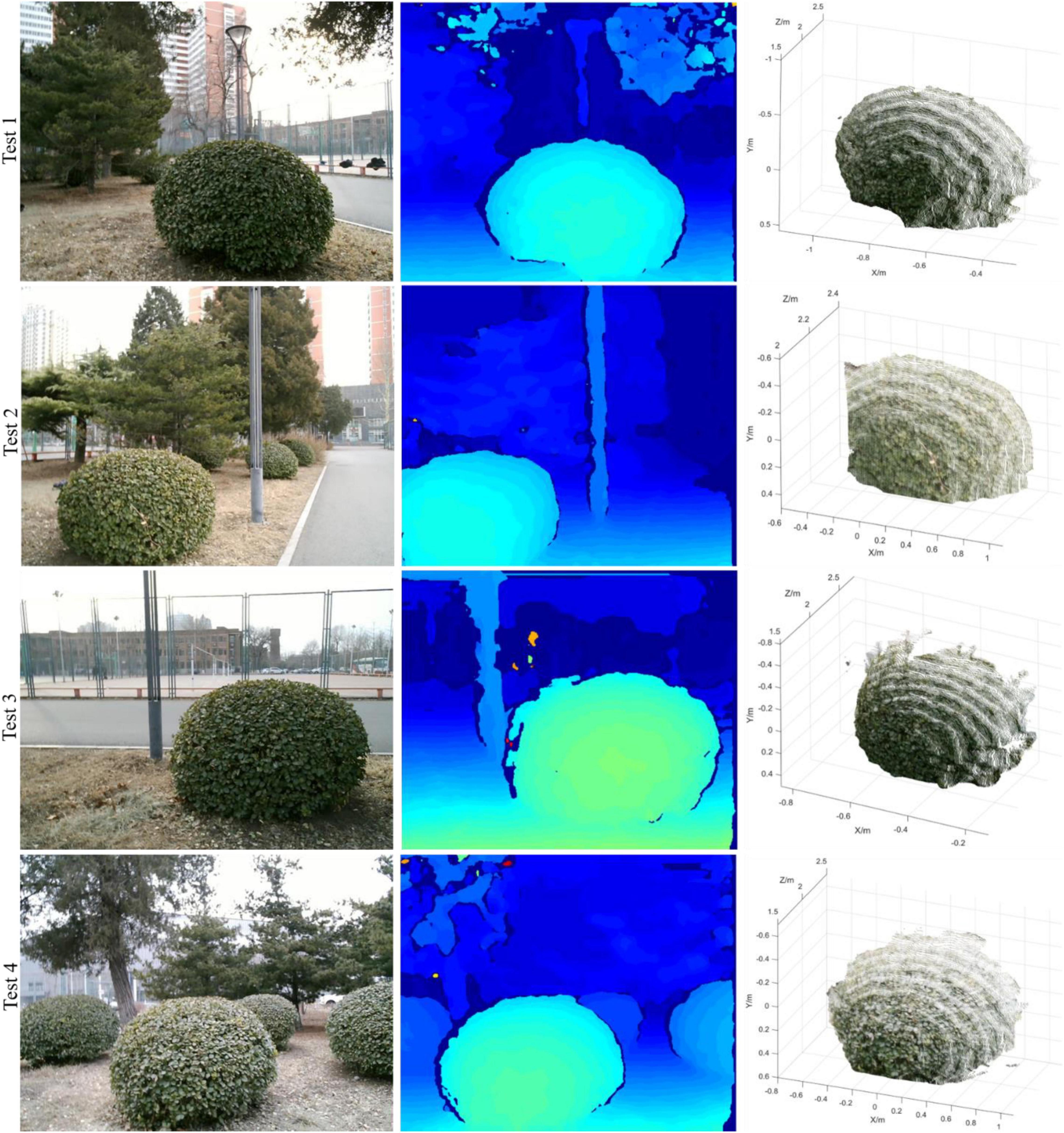

An outdoor test was conducted at China Agricultural University East Campus (Beijing, China). During the test, the weather was overcast and the leaves of the spherical hedges were slightly yellow and sparse. Four spherical hedges were randomly selected on the campus, so the results have a certain generality. The spherical hedges were non-standard spheres and their radii were unknown; therefore, for each spherical hedge, six groups of images were captured at different positions. The distances between the proposed system and the spherical hedges were all around 2,000 mm. The outdoor scene image acquired by the left camera, the disparity map obtained by stereo matching, and the 3D shape reconstruction image of the proposed system are shown in Figure 18.

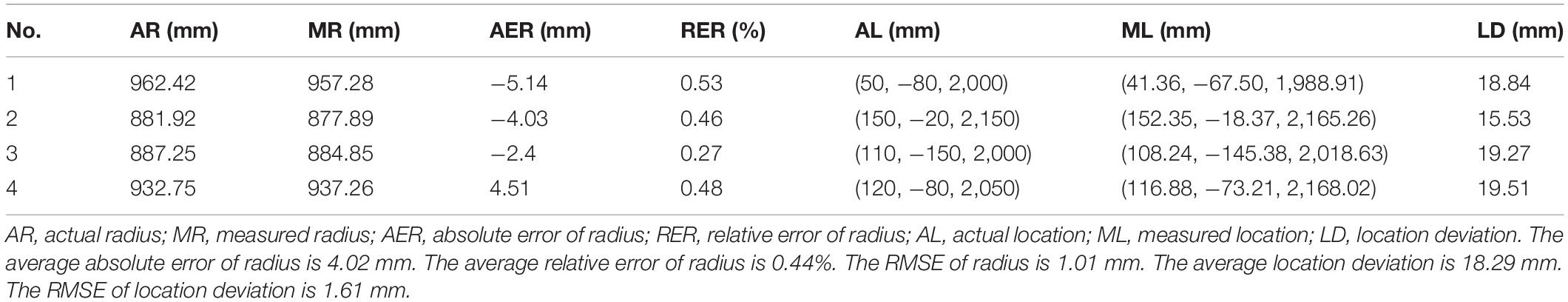

In the outdoor test, the actual center position and radius of the spherical hedges were measured manually using a tape measure. In each test, the actual radius was measured manually at six different positions, and the average value was taken. The results of the center coordinate and radius for the hedges in Figure 18 are shown in Table 3.

According to Table 3, the maximum and average errors of the radius of the measured spherical hedges in the outdoor test were 5.14 and 4.02 mm, respectively; the maximum and average relative errors of the radius were 0.53% and 0.44%, respectively; and the RMSE of the radius was 1.01 mm. At a distance of around 2,000 mm in the Z direction, the maximum and average location deviations were 19.51 and 18.29 mm, respectively. This indicated a high measurement accuracy and stability of the proposed system for outdoor sphere center positioning and radius detection.

Discussion

A binocular vision system for spherical hedge reconstruction and measurement was proposed in this work to provide front-end visual information for pruning robots. Through theoretical analysis and experimental verification, this shape reconstruction and dimension measurement method showed high accuracy in both spherical center positioning and radius measurement. The conclusions of this study were as follows:

(1) The binocular vision platform was built based on the theory of binocular parallel structure. After binocular camera calibration, stereo image correcting was used based on Bouguet’s algorithm to improve the accuracy of shape reconstruction. Meanwhile, the captured 2D images were processed through filtering algorithm, segmentation, edge extraction, etc. Then, an improved SGBM algorithm was applied to obtain a good disparity map.

(2) The shape reconstruction and measurement method was tested in a laboratory and outdoors in the detection range of 2,000–2,600 mm. The laboratory test showed that the average error and average relative error of the radius of standard spherical hedges were 1.58 mm and 0.53%, respectively; the average location deviation of the center coordinate of the spherical hedges was 15.92 mm in the range of 2,000–2,600 mm. The outdoor test showed that the average error and average relative error of the radius measured by the proposed system were 4.02 mm and 0.44%, respectively; the average location deviation of the center coordinate of the spherical hedges was 18.29 mm. Therefore, the proposed system could be employed for the visual information acquisition of various trimming robots due to its excellent applicability.

Future studies may involve expanded tests on different shapes of hedges to clarify the accuracy and stability of the proposed system further. This study provides key technical support for visual detection in studies of trimming robots.

Data Availability Statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author/s.

Author Contributions

YZ, JG, TR, and HL built the system, conducted the experiments, and wrote the manuscript. BZ, JZ, and YY designed the measurement method. All authors discussed the measurement method and designed the laboratory and outdoor experiments.

Funding

This work was supported by the National Key Research and Development Project of China (2019YFB1312305) and the Modern Agricultural Equipments and Technology Demonstration and Promotion project of Jiangsu Province (NJ2020-08). We would like to thank a team of the Beijing Research Center of Intelligent Equipment for Agriculture for financial support of the research.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We would like to thank Taiyu Wu, Lin Gui, Xiao Su, and Jun Liu for helpful discussion and assistance of the research.

References

Caraffa, L., Tarel, J. P., and Charbonnier, P. (2015). The guided bilateral filter: when the joint/cross bilateral filter becomes robust. IEEE Trans. Image Proc. 24, 1199–1208. doi: 10.1109/TIP.2015.2389617

Guo, X., Shi, Z., Yu, B., Zhao, B., Li, K., and Sun, Y. (2020). 3D measurement of gears based on a line structured light sensor. Precision Eng. 61, 160–169. doi: 10.1016/j.precisioneng.2019.10.013

Hong, P. N., and Ahn, C. W. (2020). Robust matching cost function based on evolutionary approach. Exp. Syst. Appl. 161:113712.

Ji, W., Meng, X., Qian, Z., Xu, B., and Zhao, D. (2017). Branch localization method based on the skeleton feature extraction and stereo matching for apple harvesting robot. Int. J. Adv. Robotic Syst. 14:276. doi: 10.1177/1729881417705276

Jin, Z., Sun, W., Zhang, J., Shen, C., Zhang, H., and Han, S. (2020). Intelligent tomato picking robot system based on multimodal depth feature analysis method. IOP Conf. Ser. Earth Environ. Sci. 440:74. doi: 10.1088/1755-1315/440/4/042074

Kaljaca, D., Mayer, N., Vroegindeweij, B., Mencarelli, A., Henten, E. V., and Brox, T. (2019a). “Automated boxwood topiary trimming with a robotic arm and integrated stereo vision,” in Proceeding of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) Macau. (China).

Kaljaca, D., Vroegindeweij, B., and Henten, E. (2019b). Coverage trajectory planning for a bush trimming robot arm. J. Field Robot. 37, 283–308. doi: 10.1002/rob.21917

Li, Z., Xu, E., Zhang, J., Meng, Y., Wei, J., Dong, Z., et al. (2022). AdaHC: adaptive hedge horizontal cross-section center detection algorithm. Comput. Electr. Agric. 192:106582. doi: 10.1016/j.compag.2021.106582

Lili, W., Bo, Z., Jinwei, F., Xiaoan, H., Shu, W., Yashuo, L., et al. (2017). Development of a tomato harvesting robot used in greenhouse. Int. J. Agric. Biol. Eng. 10, 140–149. doi: 10.25165/j.ijabe.20171004.3204

Long, L., and Dongri, S. (2019). “Review of camera calibration algorithms,” in Advances in Computer Communication and Computational Sciences, eds S. Bhatia, S. Tiwari, K. Mishra, and M. Trivedi (Singapore: Springer), 723–732. doi: 10.1007/978-981-13-6861-5_61

Qiu, Z.-C., and Huang, Z.-Q. (2021). A shape reconstruction and visualization method for a flexible hinged plate using binocular vision. Mech. Syst. Signal Proc. 158:107754. doi: 10.1016/j.ymssp.2021.107754

Romaniuk, R. S., and Roszkowski, M. (2014). “Optimization of semi-global stereo matching for hardware module implementation,” in Proceedings of the Symposium on Photonics Applications in Astronomy, Communications, Industry and High-Energy Physics Experiments, Warsaw. doi: 10.1117/12.2075012

Strisciuglio, N., Tylecekg, R., Petkova, N., Biberb, P., Hemmingc, J., Hentenc, E., et al. (2018). TrimBot2020: An Outdoor Robot for Automatic Gardening. Munich: Vde Verlag publisher.

Wu, W., Zhu, H., and Zhang, Q. (2017). Epipolar rectification by singular value decomposition of essential matrix. Multi. Tools Appl. 77, 15747–15771. doi: 10.1007/s11042-017-5149-0

Yang, Q., Chang, C., Bao, G., Fan, J., and Xun, Y. (2018). Recognition and localization system of the robot for harvesting hangzhou white chrysanthemums. Int. J. Agric. Biol. Eng. 11, 88–95. doi: 10.25165/j.ijabe.20181101.3683

Keywords: spherical hedges, shape reconstruction, binocular vision, dimension measurement, 3D point cloud

Citation: Zhang Y, Gu J, Rao T, Lai H, Zhang B, Zhang J and Yin Y (2022) A Shape Reconstruction and Measurement Method for Spherical Hedges Using Binocular Vision. Front. Plant Sci. 13:849821. doi: 10.3389/fpls.2022.849821

Received: 06 January 2022; Accepted: 14 March 2022;

Published: 04 May 2022.

Edited by:

Yongliang Qiao, The University of Sydney, Australia

Reviewed by:

Paul Barry Hibbard, University of Essex, United Kingdom

Lihui Wang, China Conservatory, China

Sixun Chen, Hokkaido University, Japan

Copyright © 2022 Zhang, Gu, Rao, Lai, Zhang, Zhang and Yin. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yanxin Yin, yinyx@nercita.org.cn
