- ¹Zhejiang Engineering Research Center of Intelligent Medicine, The First Affiliated Hospital of Wenzhou Medical University, Wenzhou, China

- ²School of Information and Safety Engineering, Zhongnan University of Economics and Law, Wuhan, China

- ³Wenzhou Data Management and Development Group Co., Ltd., Wenzhou, Zhejiang, China

- ⁴School of Public Health and Management, Wenzhou Medical University, Wenzhou, Zhejiang, China

- ⁵Department of Gastrointestinal Surgery, The First Affiliated Hospital of Wenzhou Medical University, Wenzhou, China

- ⁶Zhejiang Provincial Key Laboratory of Aging and Neurological Disorder Research, Department of Neurosurgery, The First Affiliated Hospital of Wenzhou Medical University, Wenzhou, Zhejiang, China

Background: Gastric cancer is a highly prevalent and fatal disease. Accurate differentiation between early gastric cancer (EGC) and advanced gastric cancer (AGC) is essential for personalized treatment. Currently, the diagnostic accuracy of computed tomography (CT) for gastric cancer staging is insufficient to meet clinical requirements, and many studies rely on manual delineation of lesion areas, which is impractical for routine clinical diagnosis.

Methods: In this study, we retrospectively collected data from 341 patients with gastric cancer at the First Affiliated Hospital of Wenzhou Medical University. The dataset was randomly divided into a training set (n=273) and a validation set (n=68) at an 8:2 ratio. We developed a two-stage deep learning model that enables fully automated EGC screening on CT images. In the first stage, an unsupervised domain adaptation segmentation model was used to automatically segment the stomach on unlabeled portal venous phase CT images. In the second stage, the stomach region was cropped from each image according to the segmentation result and resized to a uniform size, and a classification model distinguishing EGC from AGC was built on these cropped images. Segmentation accuracy was evaluated with the Dice coefficient, and classification performance was assessed with the area under the receiver operating characteristic (ROC) curve (AUC), accuracy, sensitivity, specificity, and F1 score.

Results: The segmentation model achieved a mean Dice coefficient of 0.94 on the manually segmented evaluation set. On the training set, the EGC screening model achieved an AUC, accuracy, sensitivity, specificity, and F1 score of 0.98, 0.93, 0.92, 0.92, and 0.93, respectively. On the validation set, these metrics were 0.96, 0.92, 0.90, 0.89, and 0.93, respectively. Across three rounds of random data regrouping, the model consistently achieved an AUC above 0.90 on both the training set and the validation set.

Conclusion: The results of this study demonstrate that the proposed method can effectively screen for EGC on portal venous phase CT images. Furthermore, the model is stable across different data splits and holds promise for future clinical application.

Introduction

Gastric cancer (GC) is a highly prevalent malignancy and ranks among the top three cancers in terms of mortality (1). Worldwide, more than 1 million new cases of gastric cancer are diagnosed annually (2). The five-year survival rate is less than 30% for advanced gastric cancer (AGC) but reaches 90% for early gastric cancer (EGC) (3, 4). EGC refers to invasive gastric cancer that penetrates no deeper than the submucosa, regardless of lymph node metastasis (5). The mainstay of treatment for EGC is endoscopic resection, whereas AGC is treated with sequential chemotherapy (5), and preoperative and adjuvant chemotherapy improve outcomes (6). Despite the importance of this distinction for prognosis and treatment planning, the detection rate of EGC remains low; even in developed countries, the diagnostic rate of EGC is only 50% (3).

Gastric cancer is diagnosed histologically after endoscopic biopsy and staged using computed tomography (CT), endoscopic ultrasonography (EUS), positron emission tomography (PET), and laparoscopy (5). The 8th edition of the American Joint Committee on Cancer (AJCC) staging manual recommends CT and EUS as preoperative diagnostic techniques for gastric cancer. CT aids in identifying malignant lesions (7), detecting lymph node metastasis (8), and evaluating response to neoadjuvant chemotherapy (9). However, the highest reported accuracy of CT for EGC detection is only 0.757 (10, 11), and its overall accuracy for T staging is only 88.9% (12). Studies indicate that EUS outperforms CT in preoperative T1 and N staging of gastric cancer (13, 14), with an overall T staging accuracy of 77% (15). Double contrast-enhanced ultrasonography (DCEUS) achieves a modest 82.3% accuracy in gastric cancer T staging (16). Additionally, EUS staging is less effective for tumors in special locations such as the gastroesophageal junction (17).

Rapidly advancing artificial intelligence techniques have attracted considerable interest in medical image analysis, where they have been applied successfully to image segmentation (18), disease detection (19), and lesion classification (20). Alam et al. used deep learning to automatically segment the gastrointestinal tract on MRI, helping physicians formulate precise treatment plans for cancer-affected regions of the gastrointestinal tract (21). Arai et al. developed a machine learning-based approach to stratify gastric cancer risk, enabling individualized prediction of gastric cancer incidence (22). Ba et al. compared deep learning with pathologists' diagnoses on 110 whole slide images (WSI) and showed that deep learning enhances the accuracy and efficiency of pathologists in gastric cancer diagnosis (23). Zeng et al. manually delineated gastric cancer lesions on portal venous phase CT images and then selected the slice with the largest tumor area and its adjacent slices to build a deep learning model distinguishing EGC from AGC (24). However, manual delineation of lesions is time-consuming and requires considerable expertise from annotators, making it impractical for routine clinical diagnosis. This study aims to achieve automatic gastric segmentation and EGC detection on portal venous phase CT images by building a deep learning model that accurately distinguishes EGC from AGC.

Materials and methods

Patients

We initially identified 674 patients with gastric cancer (GC) who underwent CT and pathological examinations at the First Affiliated Hospital of Wenzhou Medical University between January 2020 and April 2023; all diagnoses were confirmed by pathological examination. Exclusion criteria were: (1) no contrast-enhanced CT scan, (2) insufficient CT image quality, (3) concurrent malignant tumors, and (4) neoadjuvant chemotherapy before the CT examination. Supplementary Figure 1 presents the flow chart of inclusion and exclusion, which resulted in a final sample of 341 patients. Based on pathological examination results, patients were categorized as having early gastric cancer (EGC, n=124) or advanced gastric cancer (AGC, n=217). They were randomly assigned to a training set (n=273) and a validation set (n=68) at an 8:2 ratio. Pathological findings served as the gold standard for gastric cancer staging. The Hospital Medical Ethics Committee approved this retrospective study.

Image acquisition and preprocessing

Contrast-enhanced CT scans were performed on a TOSHIBA_MEC_CT3 scanner with the following parameters: tube voltage, 120 kVp; tube current, 90–350 mA; table speed, 69.5 mm/rot; image matrix, 512×512; and reconstruction slice thickness, 2 mm. For the contrast-enhanced scan, 1.5 mL/kg of iodinated contrast agent was injected through the antecubital vein with a syringe at a flow rate of 3.50 mL/s. After the contrast injection, patients held their breath, and images were acquired in the arterial phase (35–40 s), portal venous phase (60–90 s), and equilibrium phase (110–130 s).
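The weight-based dosing above translates into a total contrast volume and an injection duration. The short Python sketch below works through that arithmetic for a hypothetical 70 kg patient; the body weight is an illustrative assumption, and the calculation assumes the flow rate stays constant for the whole injection.

```python
from typing import Tuple


def contrast_protocol(weight_kg: float,
                      dose_ml_per_kg: float = 1.5,
                      flow_rate_ml_per_s: float = 3.5) -> Tuple[float, float]:
    """Return (total contrast volume in mL, injection duration in s)."""
    volume_ml = weight_kg * dose_ml_per_kg        # weight-based dose
    duration_s = volume_ml / flow_rate_ml_per_s   # time to deliver it at a constant flow rate
    return volume_ml, duration_s


# Hypothetical 70 kg patient (illustrative weight, not taken from the study)
volume, duration = contrast_protocol(70.0)
print(f"{volume:.1f} mL injected over {duration:.1f} s")  # 105.0 mL injected over 30.0 s
```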

Portal venous phase imaging is useful for assessing invasion of adjacent organs and for detecting lymph node metastasis, peritoneal metastasis, and extramural vascular invasion, and previous studies have used this phase for tumor lesion segmentation (25, 26). To limit the influence of extreme intensity values on the model, we truncated the CT grayscale values using a window width of 350 Hounsfield units (HU) and a window level of 50 HU, i.e., to the range −125 to 225 HU. We then normalized the grayscale values of all images to [0, 1].
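A window width of 350 HU centred on a level of 50 HU corresponds to clipping intensities to [−125, 225] HU before rescaling. The sketch below assumes the input array is already in HU (DICOM rescale slope and intercept applied) and that normalization maps the fixed window, rather than each image's own minimum and maximum, onto [0, 1]; the text does not specify which variant was used.

```python
import numpy as np


def window_and_normalize(hu: np.ndarray, level: float = 50.0, width: float = 350.0) -> np.ndarray:
    """Clip CT intensities (in HU) to a display window and rescale them to [0, 1]."""
    lower = level - width / 2.0   # 50 - 175 = -125 HU
    upper = level + width / 2.0   # 50 + 175 = 225 HU
    clipped = np.clip(hu.astype(np.float32), lower, upper)
    return (clipped - lower) / (upper - lower)


# Toy voxels: air, fat, soft tissue at the window level, window maximum, bone
voxels = np.array([-1000.0, -100.0, 50.0, 225.0, 1000.0])
print(window_and_normalize(voxels))
```

Everything below −125 HU maps to 0 and everything above 225 HU maps to 1, so air and bone no longer dominate the intensity range seen by the network.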

Two-stage deep learning model development

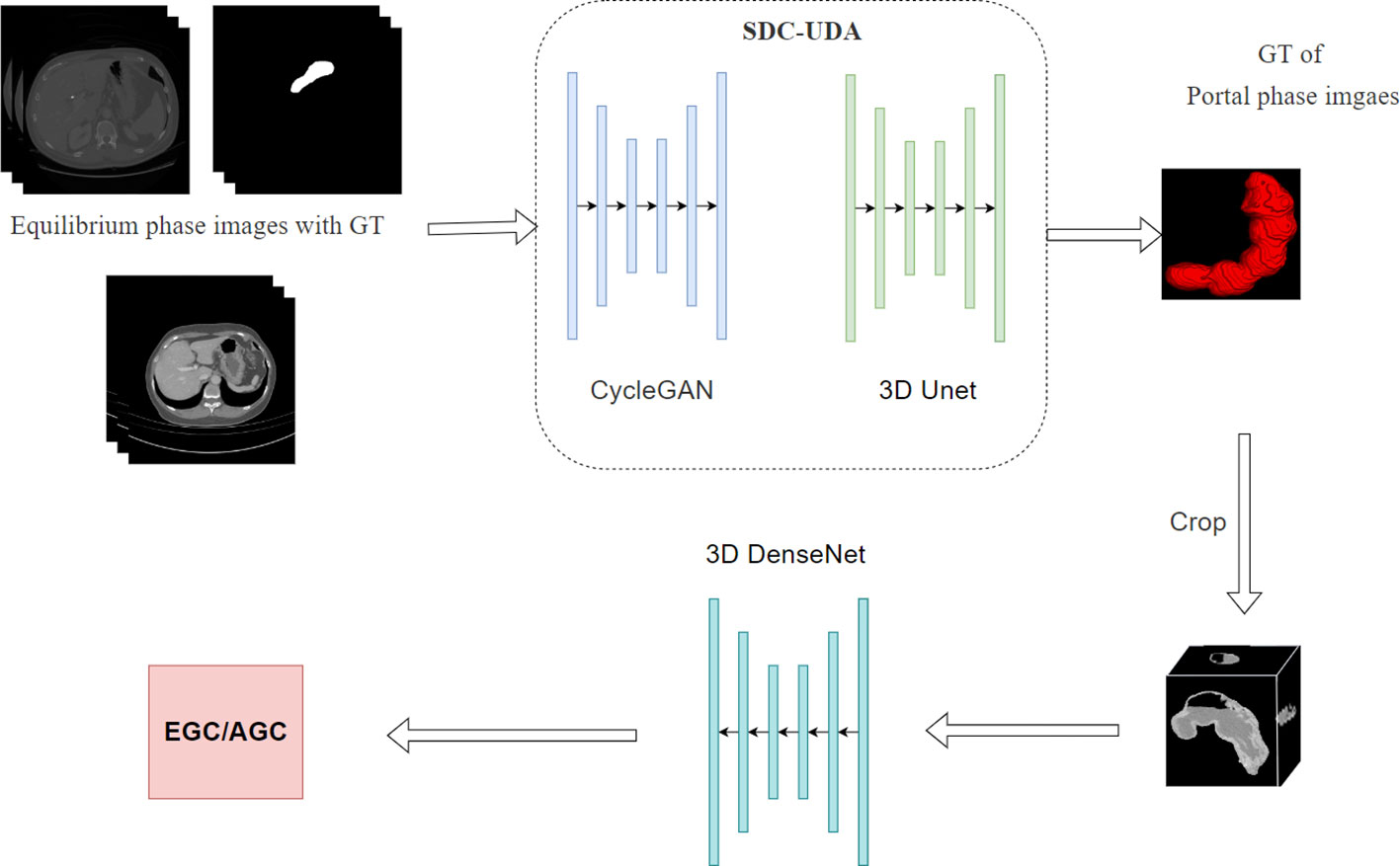

To enable fully automated differentiation between early gastric cancer (EGC) and advanced gastric cancer (AGC), we developed a two-stage deep learning model. As illustrated in Figure 1, the first stage is a two-dimensional segmentation network that segments the stomach in contrast-enhanced CT images, and the second stage is a three-dimensional classification network for EGC screening.

Segmentation model development

For the first-stage segmentation network, we adopted the Slice-Direction Continuous Unsupervised Domain Adaptation framework (SDC-UDA) (27). This framework uses an equilibrium phase CT dataset with ground truth (GT) labels to segment a portal venous phase CT dataset without GT. The labeled equilibrium phase dataset, comprising 50 cases, was obtained from the MICCAI FLARE 2022 challenge (https://flare22.grand-challenge.org/).

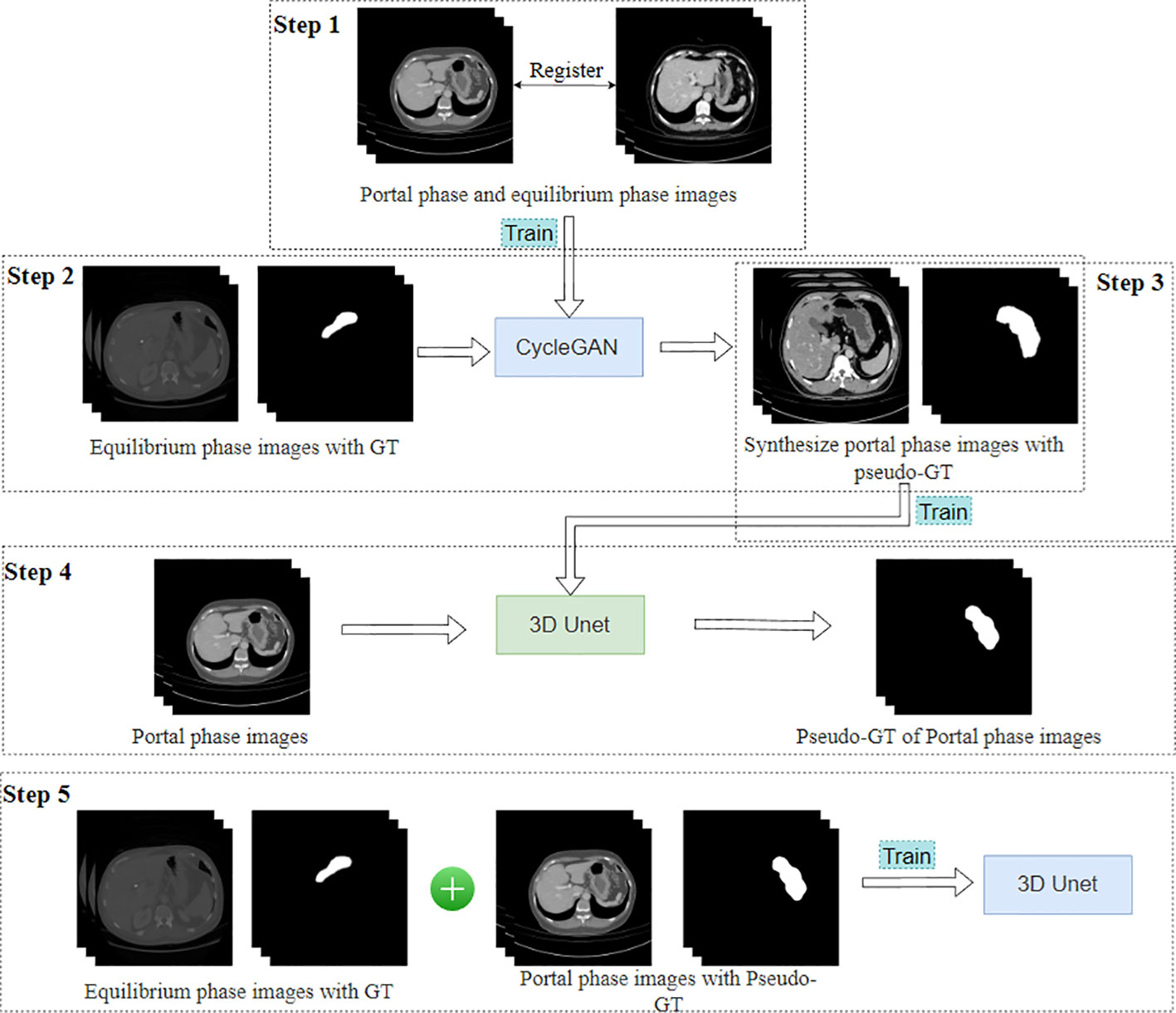

The SDC-UDA framework consists of five stages (Figure 2). First, using our dataset, we registered the portal venous and equilibrium phase images and trained an unsupervised image translation generator based on CycleGAN (28) with intra- and inter-slice self-attention. Second, we used this generator to translate the labeled equilibrium phase images into synthetic portal venous phase images through 2D-to-3D image translation, with the original equilibrium phase labels serving as pseudo-GT. Third, we trained a 3D U-Net (29) on the synthetic portal venous phase images with pseudo-GT. Fourth, we used this 3D U-Net to generate pseudo-GT for the real, unlabeled portal venous phase images and improved it through uncertainty-constrained pseudo-GT refinement. Finally, we jointly trained a 3D U-Net segmentation model on the equilibrium phase images with GT and the real portal venous phase images with pseudo-GT.
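The fourth stage refines pseudo-GT by discarding uncertain predictions. The exact uncertainty criterion of SDC-UDA is given in (27) and is not reproduced here; the Python sketch below is a generic, simplified stand-in. It assumes several foreground-probability maps per volume are available (for example from test-time augmentation, which is an assumption), averages them, and marks voxels whose binary entropy exceeds a hypothetical threshold as "ignore" so they can be excluded from the segmentation loss.

```python
import numpy as np

IGNORE = -1  # hypothetical label for voxels excluded from the loss


def refine_pseudo_labels(prob_maps: np.ndarray, max_entropy: float = 0.5) -> np.ndarray:
    """Entropy-based pseudo-label filtering (generic sketch, not the exact SDC-UDA rule).

    prob_maps: (n_samples, D, H, W) stomach probabilities for one volume.
    Returns (D, H, W) labels: 1 = stomach, 0 = background, IGNORE = too uncertain.
    """
    eps = 1e-7
    p = np.clip(prob_maps.mean(axis=0), eps, 1 - eps)        # mean foreground probability
    entropy = -(p * np.log2(p) + (1 - p) * np.log2(1 - p))    # binary entropy in bits, in [0, 1]
    labels = (p >= 0.5).astype(np.int64)                      # hard pseudo-label
    labels[entropy > max_entropy] = IGNORE                    # drop uncertain voxels
    return labels


# Three predictions for a toy 1x1x3 volume: confident stomach, ambiguous, confident background
probs = np.array([[0.95, 0.4, 0.02],
                  [0.90, 0.6, 0.05],
                  [0.97, 0.5, 0.03]]).reshape(3, 1, 1, 3)
print(refine_pseudo_labels(probs).ravel())
```

The middle voxel averages to 0.5 (maximal entropy), so it is excluded rather than forced into either class.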

To evaluate the SDC-UDA model, 50 cases were randomly selected from our hospital's CT dataset, and the stomach was manually segmented by a doctor with 8 years of clinical experience using ITK-SNAP (version 3.8.0, USA). These manual segmentations served as the reference for evaluating segmentation performance.

Classification model development

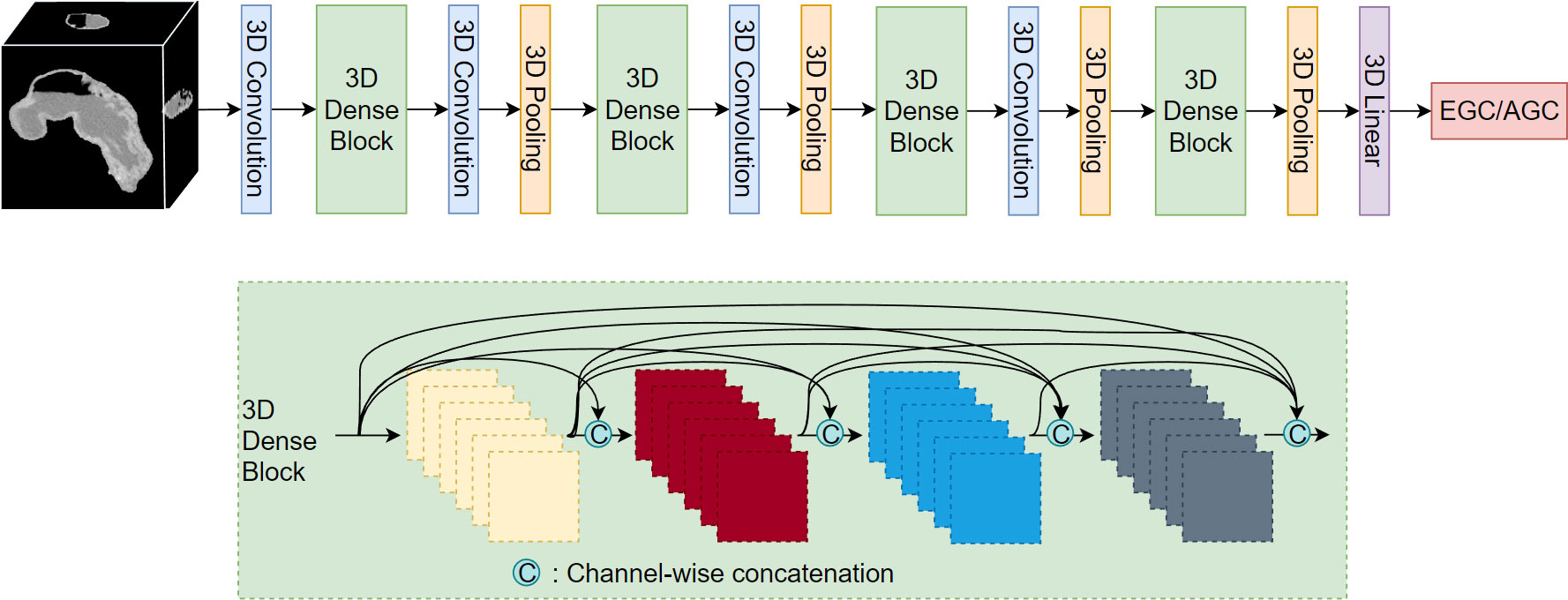

After segmenting the stomach, we cropped the stomach region from the CT volume according to the segmentation result and resized it to 128×128×128 voxels. We then used a 3D DenseNet (30) to classify EGC versus AGC. The network comprises four dense blocks (Figure 3) connected by transition layers consisting of convolution and pooling. The output of the last dense block is pooled and passed through a linear layer to produce the final classification. Each dense block consists of four convolutional blocks, each containing multiple convolutional layers; within a dense block, the output of each convolutional block is concatenated channel-wise with the inputs of all subsequent convolutional blocks. All convolution kernels in the model are 3D.
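The crop-and-resize step between the two stages can be sketched as follows. The text does not state the bounding-box margin or the interpolation method, so both are assumptions here: the hypothetical `margin` parameter defaults to zero, and nearest-neighbour resampling is used only to keep the example dependency-free (trilinear interpolation, e.g. `scipy.ndimage.zoom` with `order=1`, would be a common alternative for image intensities). Each axis is rescaled independently to 128 voxels, so the aspect ratio is not preserved.

```python
import numpy as np


def crop_to_mask(volume: np.ndarray, mask: np.ndarray, margin: int = 0) -> np.ndarray:
    """Crop a (D, H, W) volume to the bounding box of the nonzero voxels in mask."""
    coords = np.argwhere(mask > 0)
    if coords.size == 0:
        raise ValueError("segmentation mask is empty")
    lo = np.maximum(coords.min(axis=0) - margin, 0)                 # inclusive start per axis
    hi = np.minimum(coords.max(axis=0) + 1 + margin, volume.shape)  # exclusive end per axis
    return volume[lo[0]:hi[0], lo[1]:hi[1], lo[2]:hi[2]]


def resize_nearest(volume: np.ndarray, size=(128, 128, 128)) -> np.ndarray:
    """Nearest-neighbour resampling of a 3D array to a fixed shape."""
    idx = [np.minimum(((np.arange(n) + 0.5) * s / n).astype(int), s - 1)
           for n, s in zip(size, volume.shape)]
    return volume[np.ix_(idx[0], idx[1], idx[2])]


# Toy example with a hypothetical box-shaped stomach mask
vol = np.random.default_rng(0).random((60, 200, 200)).astype(np.float32)
mask = np.zeros(vol.shape, dtype=np.uint8)
mask[10:40, 50:150, 60:120] = 1
roi = crop_to_mask(vol, mask)
print(roi.shape)                   # (30, 100, 60)
print(resize_nearest(roi).shape)   # (128, 128, 128)
```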

Model evaluation

We evaluated the segmentation model with the Dice coefficient (31). For the classification model, we computed accuracy, sensitivity, specificity, and F1 score, plotted the receiver operating characteristic (ROC) curve, and calculated the area under the ROC curve (AUC).
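The evaluation metrics can be written compactly as below. This NumPy sketch assumes EGC is coded as the positive class (1) and that predicted labels come from thresholding a score; the 0.5 threshold in the usage example is an assumption, since the text does not report the operating point. AUC is computed through its rank interpretation (the probability that a randomly chosen positive case scores higher than a randomly chosen negative one, counting ties as one half), which equals the area under the empirical ROC curve.

```python
import numpy as np


def dice(pred: np.ndarray, gt: np.ndarray) -> float:
    """Dice = 2|A ∩ B| / (|A| + |B|) for binary masks (1.0 if both are empty, by convention)."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    denom = pred.sum() + gt.sum()
    return 1.0 if denom == 0 else 2.0 * np.logical_and(pred, gt).sum() / denom


def binary_metrics(y_true, y_pred) -> dict:
    """Accuracy, sensitivity, specificity and F1 with EGC coded as the positive class (1)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = np.sum((y_true == 1) & (y_pred == 1))
    tn = np.sum((y_true == 0) & (y_pred == 0))
    fp = np.sum((y_true == 0) & (y_pred == 1))
    fn = np.sum((y_true == 1) & (y_pred == 0))
    sens = tp / (tp + fn)
    spec = tn / (tn + fp)
    prec = tp / (tp + fp)
    return {"accuracy": (tp + tn) / y_true.size, "sensitivity": sens,
            "specificity": spec, "f1": 2 * prec * sens / (prec + sens)}


def roc_auc(y_true, scores) -> float:
    """AUC as P(score of a random positive > score of a random negative), ties counted as 1/2."""
    y_true, scores = np.asarray(y_true), np.asarray(scores, dtype=float)
    pos, neg = scores[y_true == 1], scores[y_true == 0]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (pos.size * neg.size)


# Toy data (not study results): 3 EGC cases, 5 AGC cases
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.3, 0.6, 0.2, 0.1, 0.4, 0.05]
y_pred = [int(s >= 0.5) for s in scores]   # assumed 0.5 threshold
print(binary_metrics(y_true, y_pred), roc_auc(y_true, scores))
```

Because accuracy equals sensitivity × P/N + specificity × (N − P)/N, where P is the number of positive cases and N the total, accuracy always lies between sensitivity and specificity, which is a convenient consistency check on any reported set of metrics.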

To assess the model's stability, we repeated the random 8:2 division of the data into training and validation sets three times and compared the results across the three validation sets.
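The repeated random 8:2 splitting can be sketched as below. The seed is a hypothetical value, and the text does not say whether splits were stratified by EGC/AGC label; this version draws plain random partitions, and a stratified variant would instead permute each class separately before taking 80% of each.

```python
import numpy as np


def random_splits(n_cases: int, n_repeats: int = 3, train_frac: float = 0.8, seed: int = 0):
    """Yield (train_idx, val_idx) for independent random train/validation partitions."""
    rng = np.random.default_rng(seed)              # hypothetical seed
    n_train = int(round(train_frac * n_cases))     # 0.8 * 341 = 272.8 -> 273
    for _ in range(n_repeats):
        perm = rng.permutation(n_cases)            # fresh shuffle for every repeat
        yield np.sort(perm[:n_train]), np.sort(perm[n_train:])


for train_idx, val_idx in random_splits(341):
    print(len(train_idx), len(val_idx))            # 273 68 for each repeat
```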

Statistical analysis

All computations and statistical analyses were performed in a Linux environment (Ubuntu 7.5.0) on a workstation with an Intel 4215FR CPU (3.20 GHz), 64 GB of DDR4 memory, and an RTX 4060 Ti graphics card. The code was written in Python 3.6.13 (Python Software Foundation) using the PyTorch deep learning framework (https://pytorch.org/) and key packages including torch (version 1.10.1), torchvision (version 0.11.2), and scikit-learn (version 0.20.4).

Results

Patients

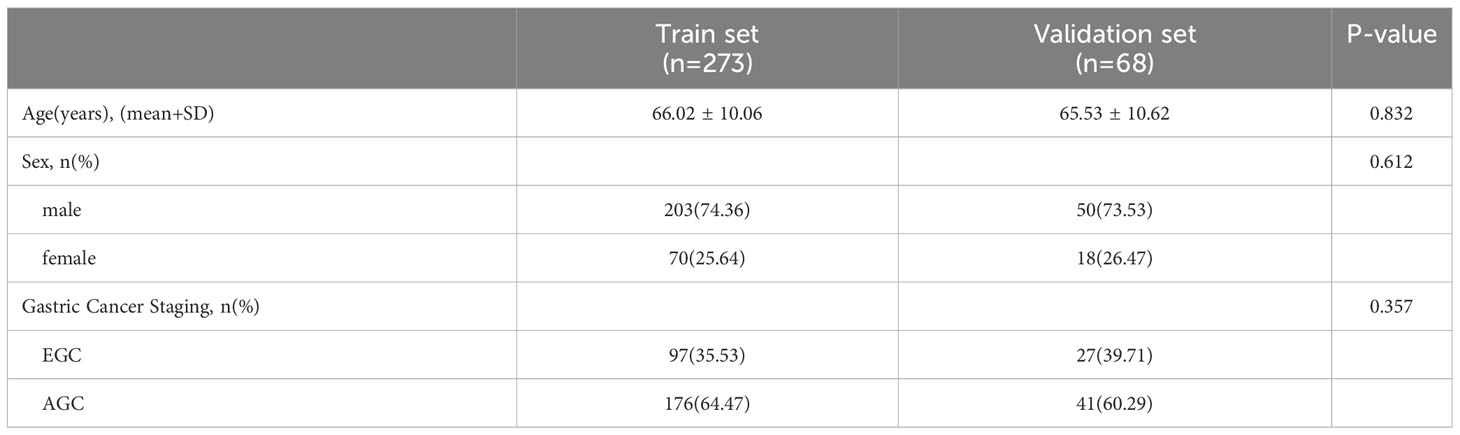

The study included a total of 341 cases of gastric cancer (GC). The training set comprised 273 participants (mean [SD] age: 66.02 [10.06] years), while the validation set consisted of 68 participants (mean [SD] age: 65.53 [10.62] years). Further details regarding the distribution of cases within the training and validation sets are presented in Table 1.

Model building

Hyperparameters were determined empirically through several experiments. For the CycleGAN in SDC-UDA, we used 100 training epochs, a beta value of 0.5, a learning rate of 0.0001, and learning rate updates every 50 epochs. For the 3D U-Net in SDC-UDA, we used 200 training epochs, a batch size of 2, a learning rate of 0.0005, and learning rate updates every 100 epochs. For the 3D DenseNet, we used DenseNet121 as the base network, with a dropout rate of 0.5, a growth rate of 4, 1000 training epochs, a batch size of 20, a learning rate of 0.1, and learning rate updates every 500 epochs.
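For reference, the reported hyperparameters can be collected into a single configuration. The text says only that the learning rate was "updated" every 50, 100, or 500 epochs; the sketch below assumes a step schedule with a hypothetical decay factor of 0.1, which in PyTorch would correspond to `torch.optim.lr_scheduler.StepLR(optimizer, step_size=..., gamma=...)`. The optimizer term behind the reported CycleGAN "beta" is likewise not stated.

```python
# Hyperparameters as reported in the text. GAMMA (the decay factor) is NOT
# reported and is a hypothetical placeholder.
HPARAMS = {
    "cyclegan": {"epochs": 100, "beta": 0.5,  # "beta" as reported; optimizer term not stated
                 "lr": 1e-4, "lr_step_epochs": 50},
    "unet3d": {"epochs": 200, "batch_size": 2, "lr": 5e-4, "lr_step_epochs": 100},
    "densenet3d": {"backbone": "DenseNet121", "dropout": 0.5, "growth_rate": 4,
                   "epochs": 1000, "batch_size": 20, "lr": 0.1, "lr_step_epochs": 500},
}
GAMMA = 0.1  # hypothetical multiplicative decay applied at every step


def step_lr(base_lr: float, epoch: int, step_epochs: int, gamma: float = GAMMA) -> float:
    """Assumed step schedule: base_lr * gamma ** (number of completed steps)."""
    return base_lr * gamma ** (epoch // step_epochs)


cfg = HPARAMS["densenet3d"]
for epoch in (0, 499, 500, 999):
    print(epoch, step_lr(cfg["lr"], epoch, cfg["lr_step_epochs"]))
```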

Model performance evaluation

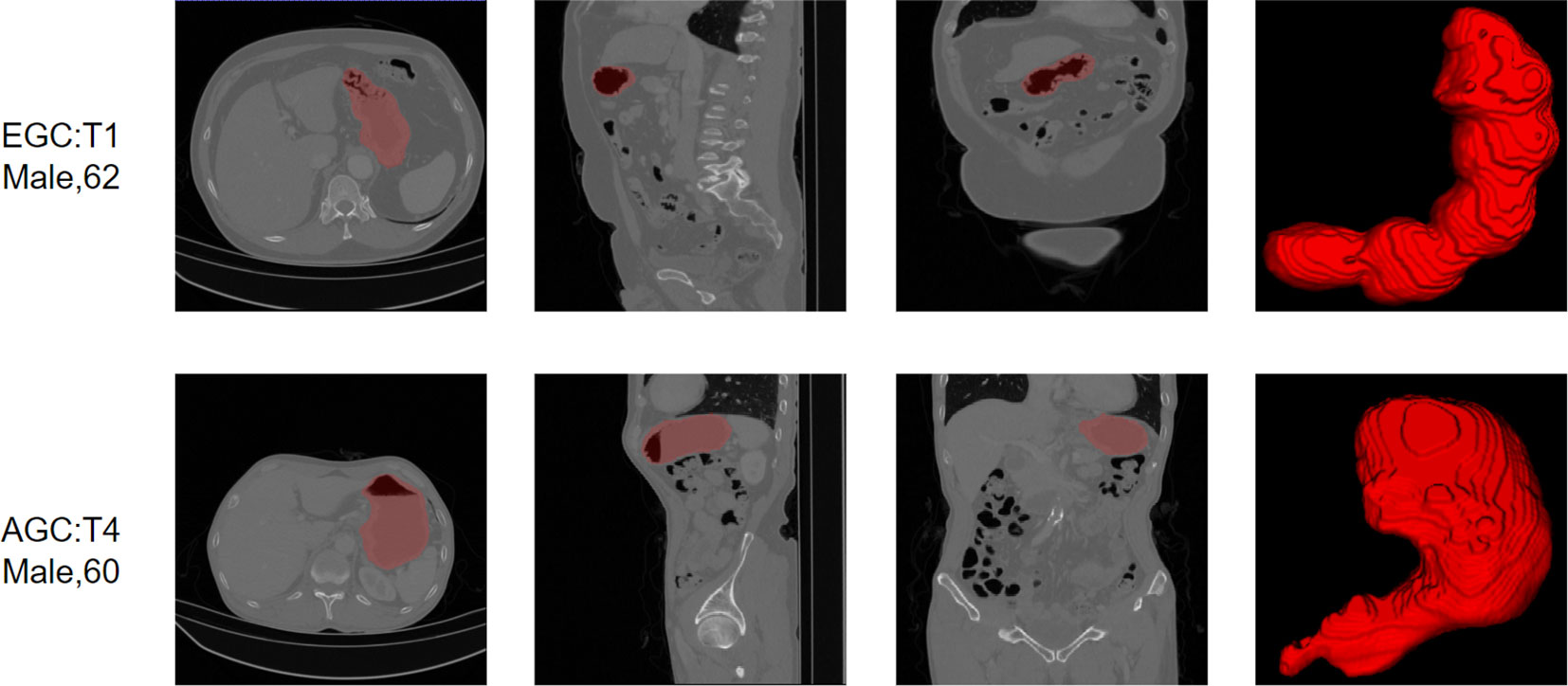

On the 50 manually annotated cases, the segmentation model achieved a mean Dice coefficient of 0.94 (range, 0.90–0.97). Figure 4 shows example segmentation results, in which the model's output closely matches the actual stomach outline.

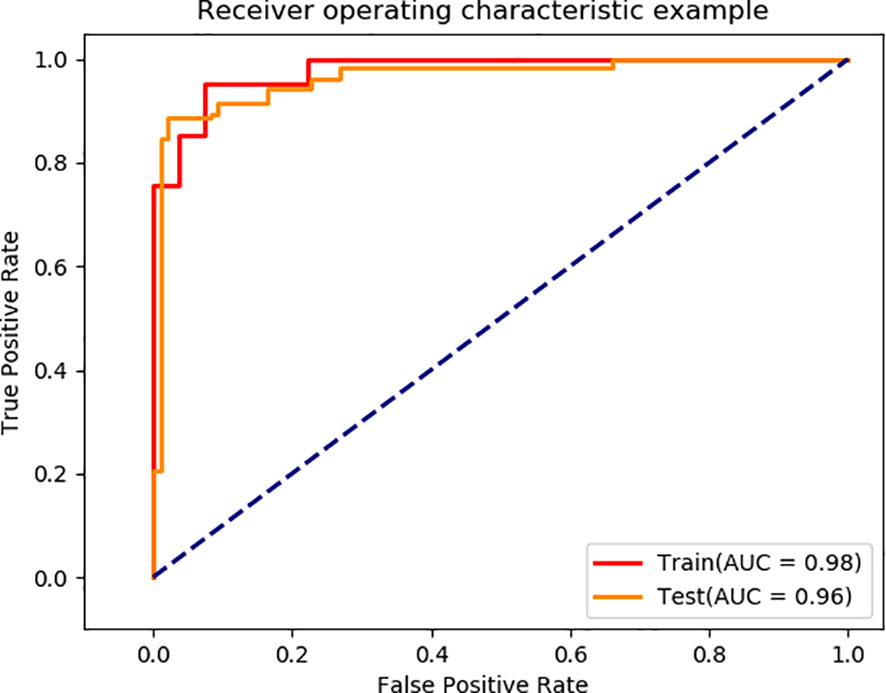

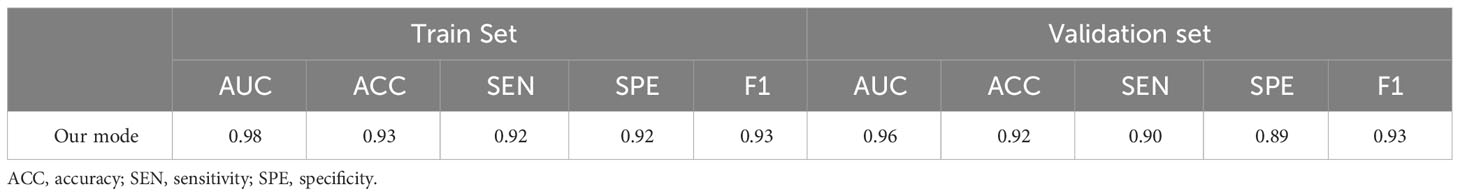

Using the cropped stomach images as input for EGC detection, the final model achieved AUC values of 0.98 and 0.96 on the training and validation sets, respectively (Figure 5). On the training set, the model achieved an accuracy of 0.93, sensitivity of 0.92, specificity of 0.92, and F1 score of 0.93 (Table 2). On the validation set, it achieved an accuracy of 0.92, sensitivity of 0.90, specificity of 0.89, and F1 score of 0.93. These results indicate that the proposed model discriminates well between EGC and AGC.

Model robustness assessment

To examine the impact of different data distributions on the model, we randomly divided the data into training and validation sets at an 8:2 ratio, trained and tested the model, and repeated this process three times. Model robustness was evaluated based on the performance of the three resulting models. Supplementary Figure 2 and Supplementary Table 1 present the model's performance on the different training and validation sets. The model achieved an AUC greater than 0.90 on both the training and validation sets in every round, supporting the robustness of the proposed deep learning model.

Discussion

In this study, we developed a deep learning model for accurate EGC screening on CT images that requires no manual annotation. The model consists of two stages: automatic gastric segmentation and EGC diagnosis. It achieved AUC values of 0.98 and 0.96 on the training and validation sets, respectively, and its performance remained stable across repeated training with different random data groupings. Our study provides a clinically applicable preoperative GC staging model that can assist doctors in formulating more accurate diagnosis and treatment plans for patients with GC.

Preoperative diagnosis of GC has long been a focus of research. Accurate assessment of T stage is crucial for determining treatment options and prognosis: understaging may lead to incomplete tumor resection, whereas overstaging may result in unnecessary overtreatment. EUS can help distinguish superficial tumors that do not penetrate beyond the submucosa (T1) or muscularis propria (T2) from advanced cancers (T3–T4) (5). Studies have reported that EUS achieves high overall accuracy in T stage assessment, with a sensitivity of 86% (32). Zhao et al. reported that combining multi-slice spiral CT and gastroscopy achieved a staging accuracy of 83.67% (33). Guan et al. used a YOLOv5-based DetectionNet to stage gastric cancer on arterial phase CT images, achieving an average accuracy of 0.909 (34). Wang et al. achieved an accuracy of 90% in diagnosing T1 EGC using the gastric window on CT images (35). In our study, the model achieved an accuracy of 94.6% in diagnosing EGC in the training set and 90% in the internal validation set, and the repeated-split analysis demonstrated the stability of the model.

Artificial intelligence technologies such as radiomics and deep learning have attracted considerable attention in gastric cancer research, supporting tasks such as preoperative TNM staging prediction, differential diagnosis, treatment response assessment, and prognosis estimation (36). Radiomics in particular has been used to predict treatment response and survival in gastric cancer; although studies in this area are heterogeneous and of relatively low quality, radiomics holds promise for predicting clinical outcomes because of its high interpretability (37). An essential initial step in radiomics is segmentation of the region of interest (ROI), which is time-consuming and demanding for annotators (38, 39), so automatic ROI segmentation is of great value for radiomics research. Hu et al. proposed a multi-task deep learning framework for automatic segmentation of gastric cancer in whole slide images (WSI) of tissue sections (40). Zhang et al. presented a 3D multi-attention-guided multi-task learning network for gastric tumor segmentation on CT images, achieving a Dice score of 62.7% by leveraging complementary information from different dimensions, scales, and tasks (41). However, few studies have addressed gastric cancer segmentation on CT, and the reported Dice scores remain too low for reliable downstream analysis. Therefore, the first stage of our model focuses on automatic segmentation of the stomach.

This study used a two-stage deep learning framework that first segments and then classifies, an approach widely used in medical imaging. In brain disease research, many researchers first remove the skull by segmentation and then build classification models (42, 43). To classify 18 types of brain tumors more accurately, Gao et al. (44) adopted a framework that first segmented the tumor area and then performed multi-class classification. In chest imaging, segmentation models are often used to extract the lung fields before nodule detection (45), COVID-19 detection (46), or interstitial lung disease diagnosis (47). Compared with models that classify the original images directly, a two-stage framework first isolates the region containing the target of interest so that the classifier focuses on it, which improves both efficiency and classification accuracy.

Despite the promise of AI in gastric cancer, its clinical application has been hindered by low interpretability (48, 49). In our study, we used Gradient-weighted Class Activation Mapping (Grad-CAM) to visualize the regions on which the model focused. However, this technique only shows where the model concentrates, without revealing the specific features it relies on to distinguish EGC from AGC (Supplementary Figure 3). Further analysis of the model's interpretability and verification of its reliability from a clinical perspective are therefore needed.
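The limitation noted above follows from how Grad-CAM is computed: the heat map is a gradient-weighted sum of a convolutional layer's feature maps, so it shows where evidence for a class is located but not what that evidence is. The NumPy sketch below assumes the activations and gradients of one 3D convolutional layer have already been captured for a single input (in PyTorch, typically with forward and backward hooks); the target layer used in this study is not stated, and upsampling the map to the 128×128×128 input is omitted.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM for one 3D conv layer and one input.

    activations: (C, D, H, W) feature maps A^k.
    gradients:   (C, D, H, W) gradients of the class score y^c w.r.t. A^k.
    Returns a (D, H, W) map scaled to [0, 1].
    """
    spatial_axes = tuple(range(1, activations.ndim))
    weights = gradients.mean(axis=spatial_axes)             # alpha_k: global-average-pooled gradients
    cam = np.tensordot(weights, activations, axes=(0, 0))   # sum_k alpha_k * A^k
    cam = np.maximum(cam, 0.0)                               # ReLU keeps positive evidence only
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy layer with 2 channels: channel 0 supports the class, channel 1 opposes it
acts = np.zeros((2, 2, 2, 2))
acts[0, 0, 0, 0] = 1.0
acts[1, 1, 1, 1] = 1.0
grads = np.stack([np.ones((2, 2, 2)), -np.ones((2, 2, 2))])
print(grad_cam(acts, grads))
```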

This study has several limitations. First, it is a retrospective study, which may introduce bias; subsequent studies will include prospective investigations. Second, all data came from a single center, so future research should incorporate multicenter data to develop a more generalizable and robust system. Third, only patients with pathologically confirmed gastric cancer were included; consequently, the proposed EGC detection model may not be suitable for detecting EGC in CT images from patients without gastric cancer. Lastly, this study used only portal venous phase CT images; future research will explore joint modeling of multi-phase CT images.

Conclusion

We developed a deep learning model that automates EGC screening in CT images of patients with GC. The model follows a three-step process: it first segments the stomach, then crops the segmented stomach region, and finally feeds the cropped images into the classification network for EGC screening. Because the entire process is fully automated, the model holds clinical value by assisting doctors in assessing the gastric cancer status of patients and devising personalized treatment plans.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by The Ethics Review Board of the First Affiliated Hospital of Wenzhou Medical University and Wenzhou Central Hospital. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

ZG: Conceptualization, Formal Analysis, Funding acquisition, Investigation, Project administration, Writing – original draft. ZY: Data curation, Methodology, Writing – original draft. XZ: Software, Writing – original draft. CC: Writing – original draft. ZP: Writing – original draft. XC: Writing – original draft. WL: Writing – original draft. JC: Writing – original draft. QZ: Writing – review & editing. XS: Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was supported by grants from Zhejiang Engineering Research Center of Intelligent Medicine (2016E10011).

Conflict of interest

Author XZ was employed by the company Wenzhou Data Management and Development Group Co., Ltd.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fonc.2023.1265366/full#supplementary-material

References

1. Bray F, Ferlay J, Soerjomataram I, Siegel RL, Torre LA, Jemal A. Global cancer statistics 2018: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin (2018) 68(6):394–424. doi: 10.3322/caac.21492

2. Siegel RL, Miller KD, Fuchs HE, Jemal A. Cancer statistics, 2021. CA Cancer J Clin (2021) 71(1):7–33. doi: 10.3322/caac.21654

3. Luo M, Li L. Clinical utility of miniprobe endoscopic ultrasonography for prediction of invasion depth of early gastric cancer: A meta-analysis of diagnostic test from PRISMA guideline. Med (Baltimore) (2019) 98:e14430. doi: 10.1097/MD.0000000000014430

4. Machlowska J, Baj J, Sitarz M, Maciejewski R, Sitarz R. Gastric cancer: epidemiology, risk factors, classification, genomic characteristics and treatment strategies. Int J Mol Sci (2020) 21(11):4012. doi: 10.3390/ijms21114012

5. Smyth EC, Nilsson M, Grabsch HI, van Grieken NC, Lordick F. Gastric cancer. Lancet (2020) 396:635–48. doi: 10.1016/S0140-6736(20)31288-5

6. Marano L, Verre L, Carbone L, Poto GE, Fusario D, Venezia DF, et al. Current trends in volume and surgical outcomes in gastric cancer. J Clin Med (2023) 12(7):2708. doi: 10.3390/jcm12072708

7. Feng L, Liu Z, Li C, Li Z, Lou X, Shao L, et al. Development and validation of a radiopathomics model to predict pathological complete response to neoadjuvant chemoradiotherapy in locally advanced rectal cancer: a multicentre observational study. Lancet Digit Health (2022) 4:e8–17. doi: 10.1016/S2589-7500(21)00215-6

8. Zeng Q, Li H, Zhu Y, Feng Z, Shu X, Wu A, et al. Development and validation of a predictive model combining clinical, radiomics, and deep transfer learning features for lymph node metastasis in early gastric cancer. Front Med (2022) 9:986437. doi: 10.3389/fmed.2022.986437

9. Cui Y, Zhang J, Li Z, Wei K, Lei Y, Ren J, et al. A CT-based deep learning radiomics nomogram for predicting the response to neoadjuvant chemotherapy in patients with locally advanced gastric cancer: A multicenter cohort study. EClinicalMedicine (2022) 46:101348. doi: 10.1016/j.eclinm.2022.101348

10. Li J, Fang M, Wang R, Dong D, Tian J, Liang P, et al. Diagnostic accuracy of dual-energy CT-based nomograms to predict lymph node metastasis in gastric cancer. Eur Radiol (2018) 28:5241–9. doi: 10.1007/s00330-018-5483-2

11. Kim JW, Shin SS, Heo SH, Lim HS, Lim NY, Park YK, et al. The role of three-dimensional multidetector CT gastrography in the preoperative imaging of stomach cancer: emphasis on detection and localization of the tumor. Korean J Radiol (2015) 16(1):80–9. doi: 10.3348/kjr.2015.16.1.80

12. Ma T, Li X, Zhang T, Duan M, Ma Q, Cong L, et al. Effect of visceral adipose tissue on the accuracy of preoperative T-staging of gastric cancer. Eur J Radiol (2022) 155:110488. doi: 10.1016/j.ejrad.2022.110488

13. Nie RC, Yuan SQ, Chen XJ, Chen S, Xu LP, Chen YM, et al. Endoscopic ultrasonography compared with multidetector computed tomography for the preoperative staging of gastric cancer: a meta-analysis. World J Surg Oncol (2017) 15:1–8. doi: 10.1186/s12957-017-1176-6

14. Ungureanu BS, Sacerdotianu VM, Turcu-Stiolica A, Cazacu IM, Saftoiu A. Endoscopic ultrasound vs. computed tomography for gastric cancer staging: a network meta-analysis. Diagnostics (2021) 11(1):134. doi: 10.3390/diagnostics11010134

15. Chen J, Zhou C, He M, Zhen Z, Wang J, Hu X. A meta-analysis and systematic review of accuracy of endoscopic ultrasound for N staging of gastric cancers. Cancer Manage Res (2019) 11:8755. doi: 10.2147/CMAR.S200318

16. Wang L, Liu Z, Kou H, He H, Zheng B, Zhou L, et al. Double contrast-enhanced ultrasonography in preoperative T staging of gastric cancer: a comparison with endoscopic ultrasonography. Front Oncol (2019) 9:66. doi: 10.3389/fonc.2019.00066

17. Hagi T, Kurokawa Y, Mizusawa J, Fukagawa T, Katai H, Sano T, et al. Impact of tumor-related factors and inter-institutional heterogeneity on preoperative T staging for gastric cancer. Future Oncol (2022) 18(20):2511–9. doi: 10.2217/fon-2021-1069

18. Chai C, Wu M, Wang H, Cheng Y, Zhang S, Zhang K, et al. CAU-net: A deep learning method for deep gray matter nuclei segmentation. Front Neurosci (2022) 16. doi: 10.3389/fnins.2022.918623

19. Alsubai S, Khan HU, Alqahtani A, Sha M, Abbas S, Mohammad UG. Ensemble deep learning for brain tumor detection. Front Comput Neurosci (2022) 16:1005617. doi: 10.3389/fncom.2022.1005617

20. Jiang H, Guo W, Yu Z, Lin X, Zhang M, Jiang H, et al. A comprehensive prediction model based on MRI radiomics and clinical factors to predict tumor response after neoadjuvant chemoradiotherapy in rectal cancer. Acad Radiol (2023) 30:S185–98. doi: 10.1016/j.acra.2023.04.032

21. Alam MJ, Zaman S, Shill PC, Kar S, Hakim MA. (2023). Automated gastrointestinal tract image segmentation of cancer patient using leVit-UNet to automate radiotherapy, in: 2023 International Conference on Electrical, Computer and Communication Engineering (ECCE), pp. 1–5. Chittagong, Bangladesh: IEEE.

22. Arai J, Aoki T, Sato M, Niikura R, Suzuki N, Ishibashi R, et al. Machine learning–based personalized prediction of gastric cancer incidence using the endoscopic and histologic findings at the initial endoscopy. Gastrointest Endoscopy (2022) 95(5):864–72. doi: 10.1016/j.gie.2021.12.033

23. Ba W, Wang S, Shang M, Zhang Z, Wu H, Yu C, et al. Assessment of deep learning assistance for the pathological diagnosis of gastric cancer. Modern Pathol (2022) 35(9):1262–8. doi: 10.1038/s41379-022-01073-z

24. Zeng Q, Feng Z, Zhu Y, Zhang Y, Shu X, Wu A, et al. Deep learning model for diagnosing early gastric cancer using preoperative computed tomography images. Front Oncol (2022) 12:1065934. doi: 10.3389/fonc.2022.1065934

25. Ma T, Cui J, Wang L, Li H, Ye Z, Gao X. A multiphase contrast-enhanced CT radiomics model for prediction of human epidermal growth factor receptor 2 status in advanced gastric cancer. Front Genet (2022) 13:968027. doi: 10.3389/fgene.2022.968027

26. Xie K, Cui Y, Zhang D, He W, He Y, Gao D, et al. Pretreatment contrast-enhanced computed tomography radiomics for prediction of pathological regression following neoadjuvant chemotherapy in locally advanced gastric cancer: a preliminary multicenter study. Front Oncol (2022) 11:770758. doi: 10.3389/fonc.2021.770758

27. Shin H, Kim H, Kim S, Jun Y, Eo T, Hwang D. (2023). SDC-UDA: volumetric unsupervised domain adaptation framework for slice-direction continuous cross-modality medical image segmentation, in: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (Vancouver, Canada: IEEE), pp. 7412–21.

28. Zhu JY, Park T, Isola P, Efros AA. (2017). Unpaired image-to-image translation using cycle-consistent adversarial networks, in: Proceedings of the IEEE international conference on computer vision (Venice, Italy: IEEE), pp. 2223–32.

29. Çiçek Ö, Abdulkadir A, Lienkamp SS, Brox T, Ronneberger O. (2016). 3D U-Net: learning dense volumetric segmentation from sparse annotation, in: Medical Image Computing and Computer-Assisted Intervention – MICCAI 2016: 19th International Conference, Athens, Greece, October 17–21, 2016, Proceedings, Part II. Springer International Publishing, pp. 424–32.

30. Mehta NK, Prasad SS, Saurav S, Saini R, Singh S. Three-dimensional DenseNet self-attention neural network for automatic detection of student’s engagement. Appl Intell (2022) 52(12):13803–23. doi: 10.1007/s10489-022-03200-4

31. Chen L, Yu Z, Huang J, Shu L, Kuosmanen P, Shen C, et al. Development of lung segmentation method in x-ray images of children based on TransResUNet. Front Radiol (2023) 3:1190745. doi: 10.3389/fradi.2023.1190745

32. Pei Q, Wang L, Pan J, Ling T, Lv Y, Zou X. Endoscopic ultrasonography for staging depth of invasion in early gastric cancer: a meta-analysis. J Gastroenterol Hepatol (2015) 30(11):1566–73. doi: 10.1111/jgh.13014

33. Zhao S, Bi Y, Wang Z, Zhang F, Zhang Y, Xu Y. Accuracy evaluation of combining gastroscopy, multi-slice spiral CT, Her-2, and tumor markers in gastric cancer staging diagnosis. World J Surg Oncol (2022) 20(1):1–10. doi: 10.1186/s12957-022-02616-z

34. Guan X, Lu N, Zhang J. Accurate preoperative staging and HER2 status prediction of gastric cancer by the deep learning system based on enhanced computed tomography. Front Oncol (2022) 12:950185. doi: 10.3389/fonc.2022.950185

35. Wang ZL, Li YL, Tang L, Li XT, Bu ZD, et al. Utility of the gastric window in computed tomography for differentiation of early gastric cancer (T1 stage) from muscularis involvement (T2 stage). Abdominal Radiol (2021) 46:1478–86. doi: 10.1007/s00261-020-02785-z

36. Qin Y, Deng Y, Jiang H, Hu N, Song B. Artificial intelligence in the imaging of gastric cancer: current applications and future direction. Front Oncol (2021) 11:631686. doi: 10.3389/fonc.2021.631686

37. Chen Q, Zhang L, Liu S, You J, Chen L, Jin Z, et al. Radiomics in precision medicine for gastric cancer: opportunities and challenges. Eur Radiol (2022) 32(9):5852–68. doi: 10.1007/s00330-022-08704-8

38. Van Timmeren JE, Cester D, Tanadini-Lang S, Alkadhi H, Baessler B. Radiomics in medical imaging – “how-to” guide and critical reflection. Insights into Imaging (2020) 11(1):1–16. doi: 10.1186/s13244-020-00887-2

39. Scapicchio C, Gabelloni M, Barucci A, Cioni D, Saba L, Neri E. A deep look into radiomics. La radiologia Med (2021) 126(10):1296–311. doi: 10.1007/s11547-021-01389-x

40. Hu Z, Deng Y, Lan J, Wang T, Han Z, Huang Y, et al. A multi-task deep learning framework for perineural invasion recognition in gastric cancer whole slide images. Biomed Signal Process Control (2023) 79:104261. doi: 10.1016/j.bspc.2022.104261

41. Zhang Y, Li H, Du J, Qin J, Wang T, Chen Y, et al. 3D multi-attention guided multi-task learning network for automatic gastric tumor segmentation and lymph node classification. IEEE Trans Med Imaging (2021) 40(6):1618–31. doi: 10.1109/TMI.2021.3062902

42. Jang J, Hwang D. M3T: three-dimensional medical image classifier using multi-plane and multi-slice transformer. Proc IEEE/CVF Conf Comput Vision Pattern Recogn (2022), pp. 20718–29. doi: 10.1109/CVPR52688.2022.02006

43. Tiwari A, Srivastava S, Pant M. Brain tumor segmentation and classification from magnetic resonance images: review of selected methods from 2014 to 2019. Pattern Recogn Lett (2020) 131:244–60. doi: 10.1016/j.patrec.2019.11.020

44. Gao P, Shan W, Guo Y, Wang Y, Sun R, Cai J, et al. Development and validation of a deep learning model for brain tumor diagnosis and classification using magnetic resonance imaging. JAMA Netw Open (2022) 5(8):e2225608. doi: 10.1001/jamanetworkopen.2022.25608

45. Li R, Xiao C, Huang Y, Hassan H, Huang B. Deep learning applications in computed tomography images for pulmonary nodule detection and diagnosis: A review. Diagnostics (2022) 12(2):298. doi: 10.3390/diagnostics12020298

46. Harmon SA, Sanford TH, Xu S, Turkbey EB, Roth H, Xu Z, et al. Artificial intelligence for the detection of COVID-19 pneumonia on chest CT using multinational datasets. Nat Commun (2020) 11(1):4080. doi: 10.1038/s41467-020-17971-2

47. Pawar SP, Talbar SN. Two-stage hybrid approach of deep learning networks for interstitial lung disease classification. BioMed Res Int (2022). doi: 10.1155/2022/7340902

48. Zhou SK, Greenspan H, Davatzikos C, Duncan JS, Van Ginneken B, Madabhushi A, et al. A review of deep learning in medical imaging: Imaging traits, technology trends, case studies with progress highlights, and future promises. Proc IEEE (2021) 109(5):820–38. doi: 10.1109/JPROC.2021.3054390

Keywords: early gastric cancer (EGC), deep learning, CT, automatic stomach segmentation, gastric cancer classification

Citation: Gao Z, Yu Z, Zhang X, Chen C, Pan Z, Chen X, Lin W, Chen J, Zhuge Q and Shen X (2023) Development of a deep learning model for early gastric cancer diagnosis using preoperative computed tomography images. Front. Oncol. 13:1265366. doi: 10.3389/fonc.2023.1265366

Received: 22 July 2023; Accepted: 15 September 2023;

Published: 06 October 2023.

Edited by:

Zequn Li, The Affiliated Hospital of Qingdao University, China

Reviewed by:

Wei Xu, The First Affiliated Hospital of Soochow University, China

Natale Calomino, University of Siena, Italy

Michela Giulii Capponi, Santo Spirito in Sassia Hospital, Italy

Beishi Zheng, Metropolitan College of New York, United States

Copyright © 2023 Gao, Yu, Zhang, Chen, Pan, Chen, Lin, Chen, Zhuge and Shen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Qichuan Zhuge, qc.zhuge@wmu.edu.cn; Xian Shen, 13968888872@163.com

†These authors have contributed equally to this work

Zhihong Gao1†, Zhuo Yu, Chun Chen, Zhifang Pan, Xiaodong Chen, Qichuan Zhuge, Xian Shen