Naturalistic Stimuli in Affective Neuroimaging: A Review

- Human Information Processing Laboratory, Faculty of Social Sciences, Tampere University, Tampere, Finland

Naturalistic stimuli such as movies, music, and spoken and written stories elicit strong emotions and allow brain imaging of emotions in close-to-real-life conditions. Emotions are multi-component phenomena: relevant stimuli lead to automatic changes in multiple functional components including perception, physiology, behavior, and conscious experiences. Brain activity during naturalistic stimuli reflects all these changes, suggesting that parsing emotion-related processing during such complex stimulation is not a straightforward task. Here, I review affective neuroimaging studies that have employed naturalistic stimuli to study emotional processing, focusing especially on experienced emotions. I argue that to investigate emotions with naturalistic stimuli, we need to define and extract emotion features from both the stimulus and the observer.

Introduction

A touching movie, a horror story, or a vivid discussion all evoke strong emotions. What are the neural dynamics of emotional processing in such natural conditions? Affective neuroimaging has traditionally used simple, controlled paradigms that rely on varying one aspect of a stimulus at a time and often use short, prototypical emotion stimuli such as static images, movie clips, or facial expressions. Naturalistic paradigms have emerged as an approach in neuroscience with great potential for deciphering the neural dynamics in close-to-real-life settings (see, e.g., Sonkusare et al., 2019; Nastase et al., 2020). Compared to controlled paradigms, naturalistic stimuli, such as longer movies and stories, are complex and evoke multimodal processing and strong emotions. However, investigating the neural mechanisms underlying time-varying, higher-order phenomena such as emotions in naturalistic paradigms requires that the mixture of emotion-related signals and their dynamics are extracted and modeled reliably (Simony and Chang, 2020).

Emotions are generally defined as momentary processes caused by internal or external stimuli and leading to automatic changes in multiple functional components, including physiology, behavior, motivation, and conscious experiences (e.g., Barrett et al., 2007; Scherer, 2009; LeDoux, 2012; Anderson and Adolphs, 2014). Compared to semantic and object features that have been successfully extracted from naturalistic stimuli to model dynamic changes in brain activity (Huth et al., 2012, 2016; de Heer et al., 2017), emotion features need to be extracted both from the stimulus and the observer. This results from the abstract nature of emotions: they require higher-order processing of stimuli, integrating information across modalities and processing levels, and emerge at higher areas along the processing hierarchy (Chikazoe et al., 2014). Emotions are not bound only to the sensory features but depend on context, previous experiences, and personal relevance. Thus, for investigating emotions with complex, naturalistic paradigms, a clear framework is necessary for defining what aspect of emotion is studied (see also Adolphs, 2017).

In this review, I describe such a framework based on a consensual, componential view of emotions (for similar approaches, see Mauss and Robinson, 2009; Sander et al., 2018; Vaccaro et al., 2020) and apply this framework to review how naturalistic stimuli have been employed to investigate emotions. Excellent recent reviews introduce methods for naturalistic paradigms in neuroscience (Nastase et al., 2019; Sonkusare et al., 2019) and summarize results across different domains of human cognition (Jääskeläinen et al., 2021). The focus of the current review is on emotions and especially on methodology: how emotions have been extracted from naturalistic, dynamic stimuli, and how emotion-related information has been integrated with brain imaging data. The current paper aims to serve as an introduction for affective scientists interested in embarking on naturalistic brain imaging studies. To limit the scope, the focus is on how movies, stories, music, and text have been used to study the neural basis of emotional experiences in combination with functional magnetic resonance imaging. I will focus on studies that have modeled both the emotion-related information and brain activity continuously.

The Neural Basis of Emotional Experiences

An open question in affective neuroscience is how conscious emotional experiences emerge in the brain (for a review, see LeDoux and Hofmann, 2018). In affective science, emotion is usually broadly defined as a relatively short-lasting state of the nervous system caused by a relevant internal memory or external stimulus and leading to a set of complex changes in behavior, cognition, and body (Mobbs et al., 2019). Emotional experience refers to the subjectively felt, conscious part of emotion (Barrett et al., 2007; Adolphs, 2017). Different emotion theories emphasize either the distinctness of emotion categories (Ekman, 1992; Panksepp, 1992), bodily sensation in emotional experience (Craig, 2002; Damasio and Carvalho, 2013), the functional role of different emotions (Anderson and Adolphs, 2014; Sznycer et al., 2017), or construction of emotions from affective dimensions (Russell, 1980; Barrett, 2017). However, we are far from understanding how neural activity leads to a conscious emotional experience.

Despite their other differences, most current emotion theories agree that emotions are multicomponent phenomena (see e.g., Sander et al., 2018). A consensual, componential view of emotions suggests that they are elicited as a response to personally relevant stimuli and lead to responses in multiple functional component systems (Figure 1A; see e.g., Mauss and Robinson, 2009; Sander et al., 2018; Vaccaro et al., 2020). When relevant emotional input arrives in the brain, several automatic changes take place in split seconds: changes in autonomic nervous system activity including heart rate and respiration, in interoception, in behavior including facial behaviors, voice, bodily behaviors, and actions, in systems controlling behavior, in memories and expectations, and in the interpretation of our current state and that of the environment. These functional components correspond to brain regions or functional networks that all serve different roles in emotional processing and that activate during different emotions (Kober et al., 2008; Sander et al., 2018). For instance, distinct brain networks are responsible for processing somatosensory information, salience, and conceptualization. Brain networks underlying different functional components are activated in an emotion-specific way, leading to emotion-related changes in the state of the central nervous system, visible as different activity patterns of the whole nervous system (Damasio et al., 2000; Kragel and LaBar, 2015; Wager et al., 2015; Saarimäki et al., 2016, 2018). Currently, there is no consensus regarding how emotional experiences evolve in the central nervous system (for a review, see LeDoux and Hofmann, 2018). However, several theories posit that conscious emotional experience emerges as an integration of information from other functional components (Scherer, 2009; Sander et al., 2018). This integration potentially takes place in the default mode network, which has emerged as a candidate network for integrating information and holding emotion-specific experiences (Satpute and Lindquist, 2019).

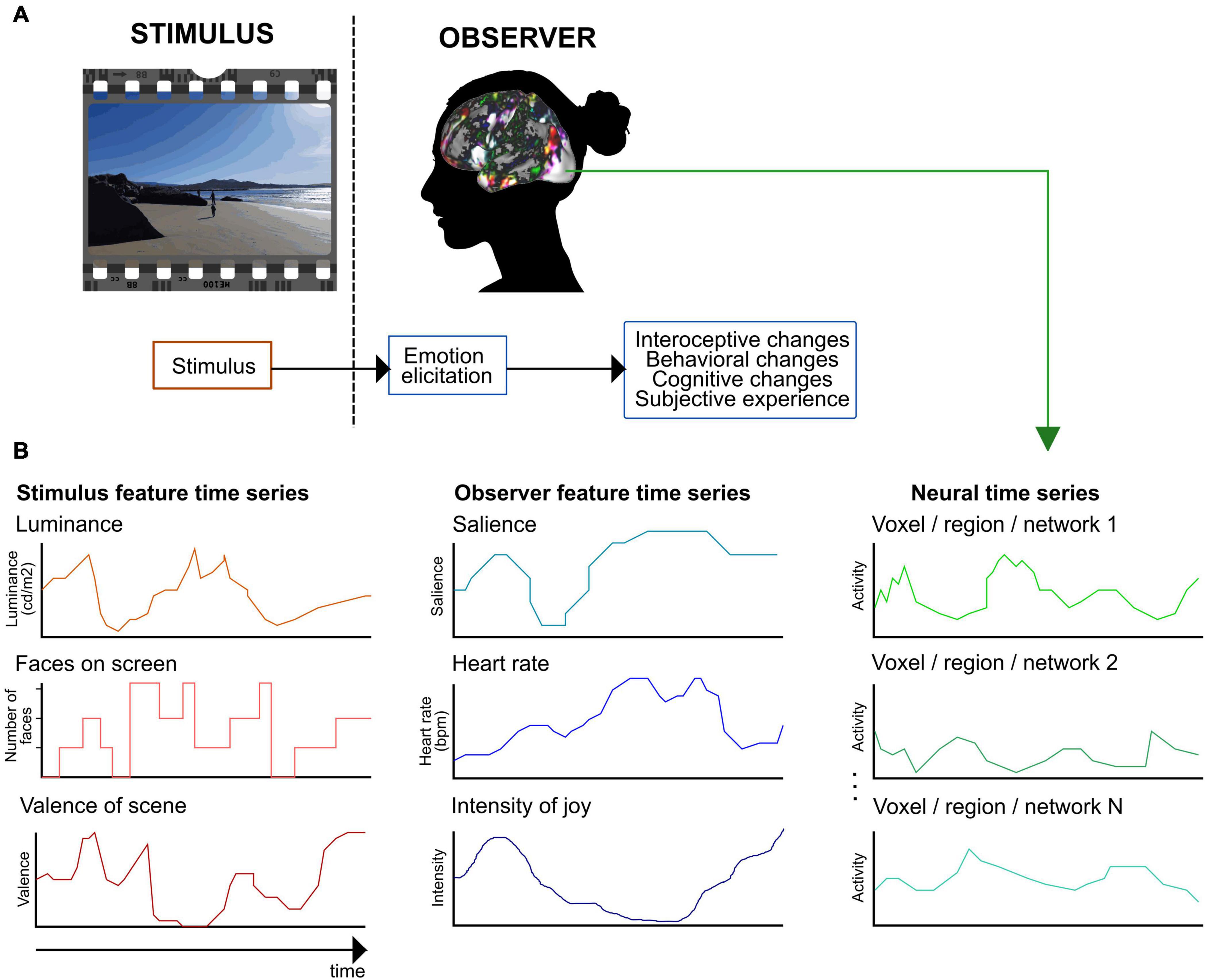

Figure 1. A framework for extracting emotion features in naturalistic paradigms. (A) Defining emotion features with a consensual component model of emotional processing (see, e.g., Mauss and Robinson, 2009; Anderson and Adolphs, 2014; Sander et al., 2018). First, the observer evaluates the stimulus’s relevance during an emotion elicitation step. Second, the elicited emotion leads to automatic changes in several functional components. (B) Extracting emotion features and example feature time series. With naturalistic paradigms, emotion features can be extracted both from the stimulus and the observer. Stimulus features are related to perceived emotions (here, depicted for a movie stimulus), observer features model experienced emotions. The figure shows examples of potential emotion features and their time series. In the next methodological step, the stimulus and observer feature time series are used to model the neural time series.

Despite the relevance of functional components in several current emotion theories, most studies have focused on one component at a time. To advance the understanding of how emotional experience emerges and how the different components interact dynamically, studies would need to extract and model variation in multiple components simultaneously. The few studies with such a multicomponent modeling approach show promising results. For instance, studies extracting physiological changes and experienced emotions have revealed candidate brain regions for integrating interoceptive and exteroceptive representations during emotions (Nguyen et al., 2016) or extracted separate neural representations of a stimulus’s sensory and affective characteristics (Chikazoe et al., 2014). Multicomponent modeling requires paradigms that elicit multiple aspects of emotional processing. Here, naturalistic stimuli seem promising.

Emotions in Naturalistic Paradigms

Despite decades of emotion research, we still know relatively little about the neural dynamics of emotions in real life. Traditionally, emotions have been induced in brain imaging studies using controlled paradigms, such as short videos, music excerpts, or pictures. Controlled studies model emotional responses as static averages across participants and across the whole duration of the stimulus. Emotions are inherently dynamic, and global post-stimulus ratings are not simple averages of the emotion fluctuations during the stimulus (Metallinou and Narayanan, 2013). Similarly, while emotional responses for prototypical, strong stimuli might be consistent across participants, emotions also show sizable individual variation. Thus, while the controlled paradigms are powerful in investigating the neural processes underlying a specific emotional component, such as the recognition of facial expressions, they lack the complicated dynamics of real-life emotional encounters.

Naturalistic stimuli such as longer movies and stories have emerged as a possibility to merge ecologically valid stimuli and laboratory settings to study human cognition (e.g., Hasson et al., 2004; Bartels and Zeki, 2005). Movies and stories are among the most effective stimuli for inducing strong negative and positive emotions in an ethically acceptable way (Gross and Levenson, 1995; Westermann et al., 1996). Thus, naturalistic, complex stimuli are increasingly used in affective neuroscience as they induce reliable, representative neural responses during emotional stimulation (Adolphs et al., 2016). Naturalistic stimuli are vivid, have a narrative structure, and provide a context, thus reflecting the nature of emotional experiences in real life (Goldberg et al., 2014). Importantly, naturalistic stimuli introduce various emotions in a rapidly changing fashion and evoke multisensory processing that preserves the natural timing relations between functional components (De Gelder and Bertelson, 2003). Thus, naturalistic stimuli seem ideal for combining the assets of both ecologically valid emotion elicitation and variation in emotional content, which is well suited to brain imaging studies with limited stimulation time.

The term “naturalistic” is used in neuroimaging to refer to complex stimuli including movies or spoken narratives, even though these stimuli are created by humans rather than occurring naturally (DuPre et al., 2020). Thus, naturalistic paradigms have characteristics that might help us assess how emotions are evoked with the stimuli. For instance, film theory illustrates that movie makers take advantage of local and global narrative cues to induce emotions. Horror films typically use local, visual cues to categorize a character’s behavior and appearance as unnatural or aversive and employ global narrative cues to generate expectations of negative outcome probability for the protagonist (Carroll and Seeley, 2013). On the other hand, empathy toward the protagonist is often induced by close-up scenes displaying a face in distress (Plantinga, 2013). Techniques such as framing, narration, and editing induce strong emotional reactions in viewers, and reciprocally affect the audience’s attention, including how they track actions and events in the film (Carroll and Seeley, 2013). A well-directed movie compared to an unstructured video clip results in more robust synchronization of viewers’ brain activity, suggesting that the stimulus guides their attention similarly (Hasson et al., 2008). Accordingly, the emotionally intense moments of the movies synchronize viewers’ brain activity, supporting the role of emotions in guiding the audience’s attention (Nummenmaa et al., 2012). Parsing the techniques used in creating the stimuli can thus help us better model the emotion features of the naturalistic stimulus.

Each type of naturalistic stimuli also has its unique characteristics, thus providing different tools for studying emotional processing. The current review largely focuses on movies and stories, which are by far the most widely used naturalistic stimuli in affective neuroscience (see Table 1), but other types of naturalistic stimuli such as music and literature have also been used to study the neural basis of emotions (for reviews, see Koelsch, 2010; Jacobs, 2015; Sachs et al., 2015). Movies and stories are forms of narratives where knowledge of emotion elicitation in other domains – including music and literature – is necessary for building complete models of emotional content (see Jääskeläinen et al., 2020). For instance, movies are often accompanied by music as a soundtrack, or by text and stories as dialogue. Music evokes powerful and consistent emotional reactions (Scherer, 2004; Juslin, 2013). The musical soundtrack in movies provides the emotional tone of otherwise neutral or ambiguous scenes (Boltz, 2001; Eldar et al., 2007) and changes experienced emotions in romantic movies (e.g., happy vs. sad soundtrack; Pehrs et al., 2013). On the other hand, spoken stories resemble our everyday interaction (Baumeister et al., 2004; Dunbar, 2004) and, in addition to the textual processing, allow investigation of prosody and interaction. Finally, literature can be used to model story comprehension and social representation of the characters (Mason and Just, 2009; Speer et al., 2009; Mar, 2011).

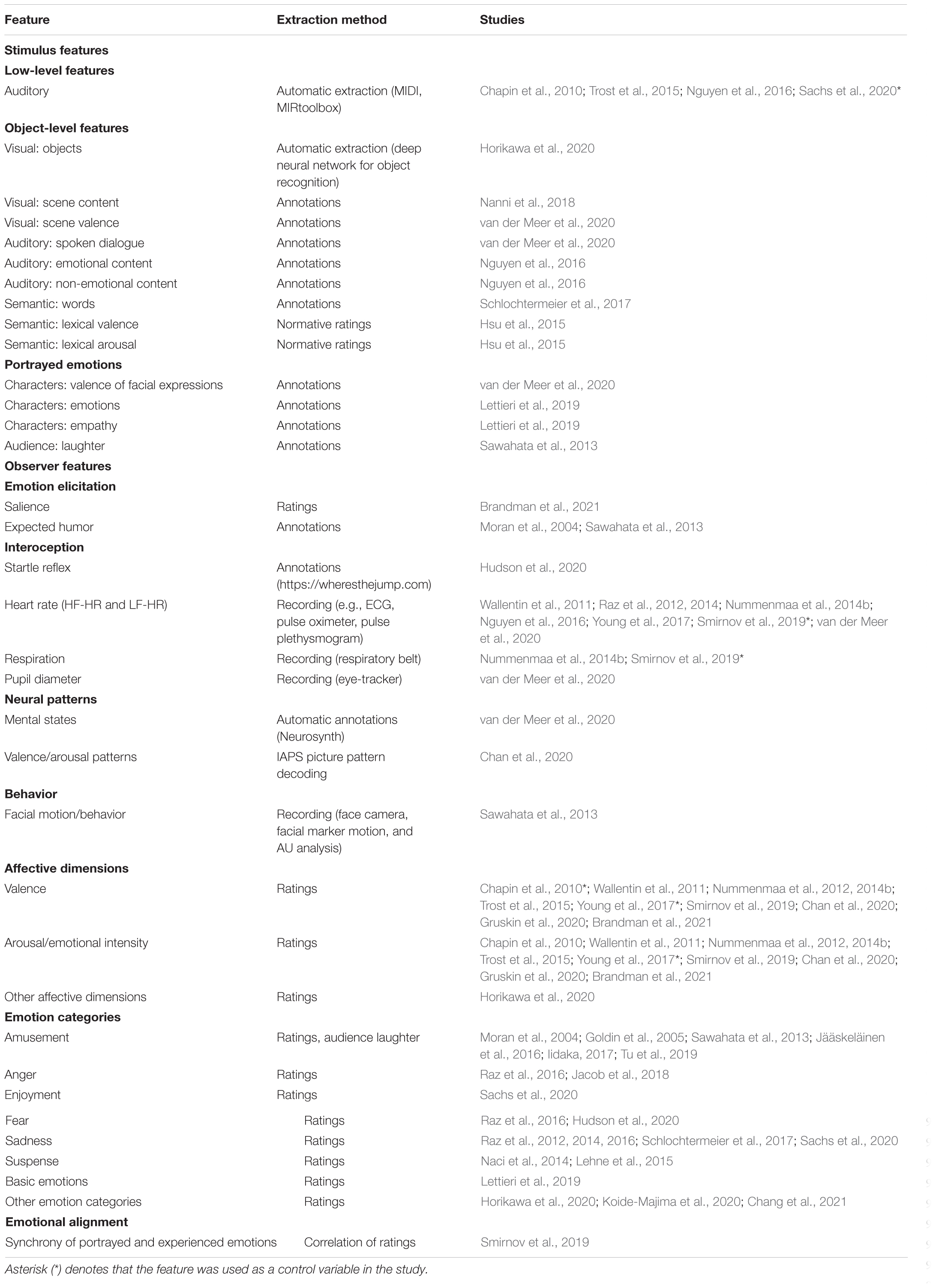

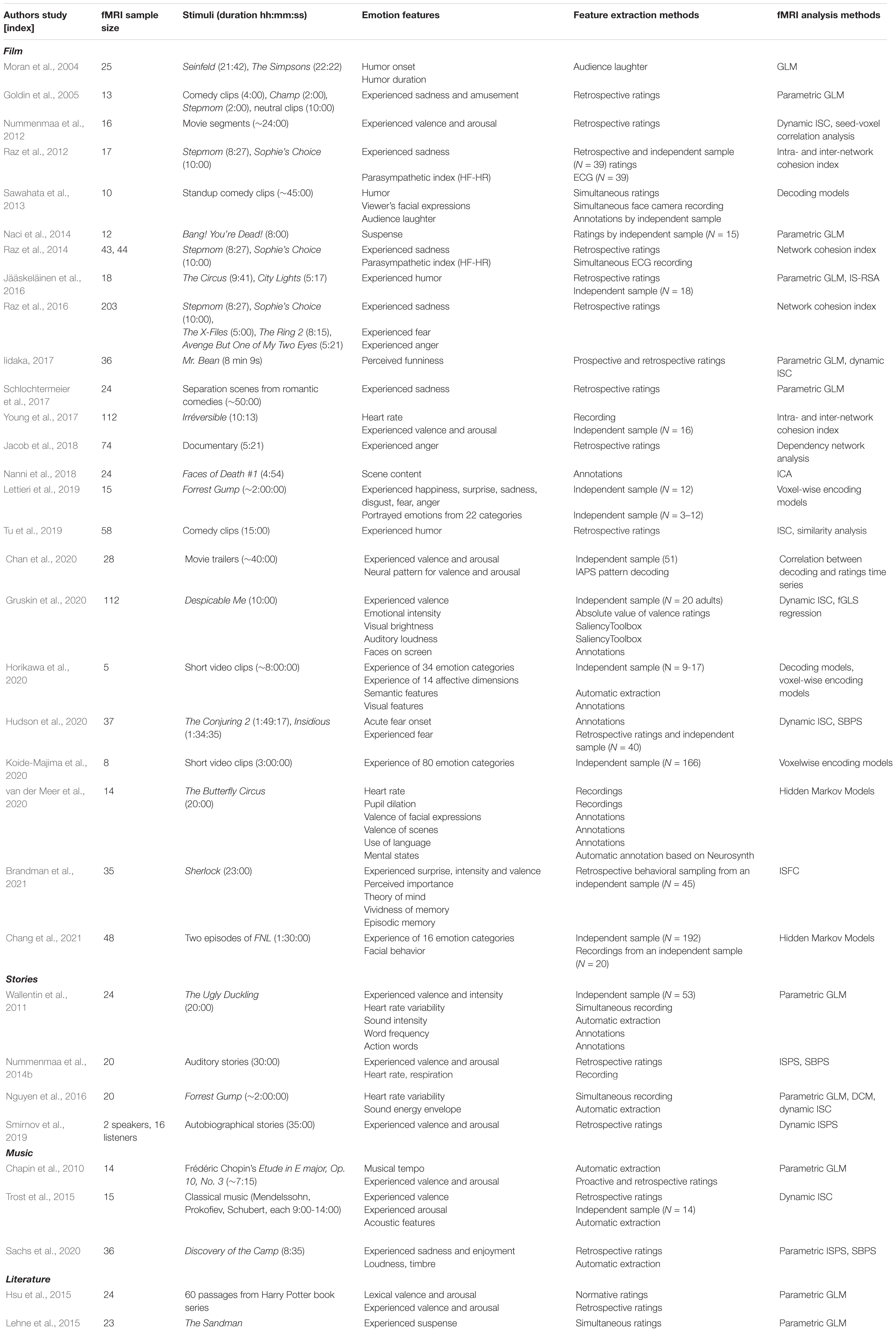

Table 1. Affective neuroimaging studies with naturalistic stimuli included in the review (organized by stimulus type and year of publication).

Taken together, naturalistic paradigms provide means for evoking multicomponent, dynamic emotion processes during brain imaging. However, the less controlled, complex nature of naturalistic paradigms also complicates the modeling of emotion-related information. The critical challenge is that brain activation during naturalistic stimuli reflects multimodal processing, not necessarily related to emotions. The signal reflects various functional component processes happening at different temporal and spatial scales, including but not restricted to sensory processing, evaluation, bodily changes, motor actions, integration, and labeling. Thus, we need to (1) define emotion-related functional components of interest and emotion features reflecting fluctuations in them, (2) extract the emotion features continuously across the stimulus, and (3) integrate the features with brain imaging data to model brain responses underlying different functional components. The current review highlights how these challenges have been solved in affective neuroimaging so far.
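As an illustration of step (3), the following minimal sketch regresses a single brain time series on one stimulus feature and one observer feature using ordinary least squares. It is written in plain Python to stay dependency-free; the function name `fit_two_feature_model`, the variable names, and the toy values are all hypothetical, and the sketch ignores issues such as hemodynamic delay, noise, and nuisance regressors that a real analysis would need to address.

```python
def fit_two_feature_model(x1, x2, y):
    """Ordinary least squares for y ~ b1*x1 + b2*x2 on mean-centred series."""
    def centre(v):
        m = sum(v) / len(v)
        return [a - m for a in v]

    x1, x2, y = centre(x1), centre(x2), centre(y)
    s11 = sum(a * a for a in x1)
    s22 = sum(b * b for b in x2)
    s12 = sum(a * b for a, b in zip(x1, x2))
    s1y = sum(a * c for a, c in zip(x1, y))
    s2y = sum(b * c for b, c in zip(x2, y))
    det = s11 * s22 - s12 * s12
    if det == 0:
        raise ValueError("features are constant or collinear")
    # Closed-form solution of the 2x2 normal equations.
    b1 = (s1y * s22 - s2y * s12) / det
    b2 = (s2y * s11 - s1y * s12) / det
    return b1, b2


# Hypothetical toy data: one value per scan.
stimulus_feature = [0, 1, 0, 2, 1, 3]   # e.g., portrayed emotion intensity
observer_feature = [1, 0, 2, 1, 3, 0]   # e.g., rated experienced arousal
# Simulated noise-free voxel response built from known weights (2 and -1).
voxel = [2 * s - o + 3 for s, o in zip(stimulus_feature, observer_feature)]

b_stimulus, b_observer = fit_two_feature_model(stimulus_feature, observer_feature, voxel)
```

Because the simulated response is noise-free, the fitted weights recover the values used to generate it; with real data, the weights would only estimate each feature's unique contribution.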

Defining Emotion Features in Naturalistic Paradigms

Modeling the stimulus and task properties is a central challenge with naturalistic paradigms and one that has great potential if solved (Simony and Chang, 2020). Compared to controlled paradigms, naturalistic stimuli allow investigating multiple functional components simultaneously, modeling emotion dynamics at different timescales, and studying individual variation. However, modeling the naturalistic stimulus requires a way to extract time-varying, emotion-related processes. The interpretations based on combining stimulus-driven features with brain imaging data are based on two critical assumptions: first, that the researcher knows which features of the stimulus are driving the brain activity, and second, that these features have been modeled accurately (Chang et al., 2020). Following the terminology in naturalistic studies focusing on visual, object, and semantic features (e.g., Huth et al., 2012, 2016), the term emotion feature refers to any emotion-related variable that we can continuously extract from either the stimulus or the observer (Figure 1).

Emotions have several characteristics that guide the definition of features of interest. First, emotions should be modeled across the processing hierarchy. Emotional content leads to modality-specific changes across all functional systems, including separate sensory systems (Miskovic and Anderson, 2018; Kragel et al., 2019). The functional components participating in emotional processing range across the different levels of processing hierarchy, moving from lower, sensory-related processing toward a higher level of abstraction and binding of several lower-level features. Higher-level features in this context refer to socio-emotional representations that are more abstract and consist of integrating different processes including perception, memory, prediction, language, and interoception (Barrett, 2017; Satpute and Lindquist, 2019; Jolly and Chang, 2021). Thus, changes in low-level stimulus features, scene contents, and portrayed emotions are equally crucial for driving emotional responses. However, the low-level features are often treated as nuisance variables, and their effect is removed from the data to guarantee that the brain activity is not solely related to perceptual processing. Yet, the emotional experience depends on integration across all functional components spanning various processing levels including perception (Sander et al., 2018; Satpute and Lindquist, 2019). Features across the processing hierarchy are necessary for a comprehensive model of emotional processing.

Second, emotions result from both the stimulus and the dynamic processes elicited in the observer. Unlike other time-varying features that have been successfully modeled from naturalistic stimuli, including visual, object, and semantic features (Huth et al., 2012, 2016; de Heer et al., 2017), emotion features cannot be modeled only from the stimulus: emotion elicitation also depends on the observer’s evaluation processes. Furthermore, emotional processing includes activating wide-spread functional components within the observer, such as those corresponding to bodily changes and motor planning, which are not directly related to the incoming stimulus but affect the resulting emotional experience (Damasio and Carvalho, 2013; Barrett, 2017; Nummenmaa and Saarimäki, 2019). Thus, accurate modeling of emotion features requires that features are extracted both from the stimulus and the observer.

Third, naturalistic stimuli might lead to various types of emotional processing, which might be necessary to define and model separately (Adolphs, 2017). Emotion features might reflect an emotional state (current state of the central nervous system), emotional experiences (subjectively felt part of the emotional state), semantic processing of emotion concepts (such as fear or love), emotional expressions (automatic or voluntary behaviors associated with the emotional state, including facial behavior), or perception of emotions (understanding the emotional states of other people). For instance, the distinction between perceived and experienced emotions is critical (Bordwell and Thompson, 2008): perceived emotions refer to the emotions portrayed by the characters in the naturalistic stimuli as perceived by the observer, while experienced emotions refer to the emotions elicited in the observer. Perceived emotions are guided by low-level features, such as movie soundtrack or the characters’ behaviors (Tarvainen et al., 2014), and elicit automatic socio-emotional processing such as empathy and sympathy, thus leading to emotional experiences. Perceived and experienced emotions often align, but not always: observing a sad facial expression might lead to pity or satisfaction depending on the context and the character (Labs et al., 2015). Furthermore, a horror film or a sad film without a happy ending can be enjoyed (Schramm and Wirth, 2010). Thus, the different aspects of emotional processing elicited by the stimulus need to be distinguished and potentially modeled separately.

Once the emotion features of interest have been defined, the next step is to obtain a continuous time series characterizing the dynamic variation of the feature during the stimulation.

Extracting Emotion Features

Data-driven analyses during naturalistic paradigms often obtain only one, global estimate of stimulus-induced brain activity for the whole duration of the stimulus. This averaging approach has two drawbacks (Bolton et al., 2020): first, it is impossible to know which emotion features drove the estimate, and second, it lowers the sensitivity as temporally localized alignment of feature and brain activity might be concealed by the other time points where feature and brain activity are not aligned. For instance, if we extract the average, stimulus-driven activity across whole emotional movies or narratives, we see mostly primary sensory areas responding. However, adding a continuous, dynamic model of the stimulus features reveals how emotion-related brain networks vary in time throughout the stimulation (Goldin et al., 2005; Nummenmaa et al., 2012, 2014b; Smirnov et al., 2019).
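The second drawback can be made concrete with a small simulation. In the sketch below, a toy "brain" signal follows an emotion feature only during the first third of the stimulus; the correlation over the whole run is modest, whereas a sliding-window correlation exposes the temporally localized alignment. The function names, window width, and synthetic sinusoids are illustrative assumptions, not a method taken from the cited studies.

```python
import math


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def windowed_correlation(feature, signal, width):
    """Correlation within each sliding window of `width` samples."""
    return [pearson(feature[i:i + width], signal[i:i + width])
            for i in range(len(feature) - width + 1)]


# Toy data: the signal equals the feature for the first 20 samples
# and follows an unrelated oscillation afterwards.
feature = [math.sin(0.6 * t) for t in range(60)]
signal = feature[:20] + [math.cos(1.7 * t + 0.3) for t in range(20, 60)]

global_r = pearson(feature, signal)                       # one estimate for the whole run
local_r = windowed_correlation(feature, signal, width=20)  # one estimate per window
```

Here the first window yields a perfect correlation while the whole-run estimate is far lower, which is the concealment effect described above.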

Thus, for accurate modeling of emotion-related variation during naturalistic stimuli, emotion features should be extracted continuously (Figure 1B). The optimal extraction method depends on the feature (Mauss and Robinson, 2009). In affective neuroscience, human ratings and annotations, automatic extraction tools, physiological recordings, and detection of neural activity patterns have been employed to extract continuous emotion features from naturalistic stimuli (see Table 2).

Human Ratings and Annotations

Traditionally, emotion features have been extracted using human ratings or annotations. The words ‘ratings’ and ‘annotations’ are used somewhat interchangeably to describe any manually extracted descriptions of the stimuli, but ratings often refer to more subjective features (i.e., observer features) while annotations refer to features that are considered relatively consistent across individuals (i.e., stimulus features). Continuous emotion rating tools originate from the intensity profile tracking approach, which modeled emotion-related changes during stimulation using manual drawings (Frijda et al., 1991; Sonnemans and Frijda, 1994). More recently, various computerized tools have been developed for collecting the ratings (e.g., Nagel et al., 2007; Nummenmaa et al., 2012). Usually, continuous rating tools include moving a slider to indicate dynamic changes in some emotion feature, such as emotional intensity, during the stimulus presentation.
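Slider positions are typically logged at irregular or high sampling rates, so they need to be brought onto the time grid of the brain imaging data before the two can be combined. The following sketch linearly interpolates a rating trace at the onset of each scan. The function name, the repetition time of 2 s, and the toy rating values are assumptions for illustration, and sample times are assumed to be strictly increasing.

```python
from bisect import bisect_right


def resample_to_scans(times, values, tr, n_scans):
    """Linearly interpolate (time, value) samples at the onset of each scan."""
    out = []
    for k in range(n_scans):
        t = k * tr
        if t <= times[0]:
            out.append(values[0])        # before the first sample: hold first value
        elif t >= times[-1]:
            out.append(values[-1])       # after the last sample: hold last value
        else:
            j = bisect_right(times, t)   # index of first sample strictly later than t
            t0, t1 = times[j - 1], times[j]
            w = (t - t0) / (t1 - t0)
            out.append(values[j - 1] + w * (values[j] - values[j - 1]))
    return out


# Hypothetical slider log: time in seconds, rated intensity between 0 and 1.
slider_times = [0.0, 0.5, 1.5, 3.0, 4.0, 6.0]
slider_values = [0.0, 0.0, 0.4, 1.0, 0.8, 0.2]

rating_per_scan = resample_to_scans(slider_times, slider_values, tr=2.0, n_scans=4)
# approximately [0.0, 0.6, 0.8, 0.2]
```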

Human annotations have been used to extract various emotion, semantic, and object features from naturalistic stimuli, ranging from low-level visual features to scene contents and portrayed emotions. Manual annotation systems of stimulus-related emotion features have been developed for different social features (Lahnakoski et al., 2012), emotional behaviors, including facial and bodily expressions of emotions (for a review, see Witkower and Tracy, 2019), moments of acute fear onset leading to startle reflex (Hudson et al., 2020), laughter soundtrack during comedy shows (Sawahata et al., 2013), and character’s emotions and empathy (Lettieri et al., 2019).

Human ratings are currently the main method for accessing and modeling observer’s emotional experiences. Most naturalistic studies in affective neuroimaging have collected self-report ratings of some experienced aspect of emotions, including affective dimensions (valence and arousal: Wallentin et al., 2011; Nummenmaa et al., 2012, 2014b; Young et al., 2017; Smirnov et al., 2019; Gruskin et al., 2020; multiple affective dimensions: Horikawa et al., 2020; Koide-Majima et al., 2020) or intensity of categorical emotion experiences (Goldin et al., 2005; Raz et al., 2012; Naci et al., 2014; Jacob et al., 2018; Lettieri et al., 2019; Horikawa et al., 2020; Hudson et al., 2020). Furthermore, interoceptive observer features, including moments of acute fear onset leading to startle reflex (Hudson et al., 2020), have also been extracted with ratings. However, extracting experience-related emotion features using ratings has several challenges that affect how reliably the emotion features can be modeled.

Ideally, accurate modeling of experience-related emotion features requires measuring brain activity and ratings from the same participant. However, there are several methodological obstacles for this. First, ratings could be collected either simultaneously with brain imaging or retrospectively. However, collecting ratings during scanning affects neural processing (Taylor et al., 2003; Lieberman et al., 2007; Borja Jimenez et al., 2020). For instance, labeling an emotion requires a conceptual representation of the emotional state, and activating this representation might alter the experience itself (Lindquist et al., 2015; Satpute et al., 2016). On the other hand, the contents of consciousness might only be accessible during the experience itself (Nisbett and Wilson, 1977; Barrett et al., 2007; but see Petitmengin et al., 2013). Collecting ratings retrospectively relies on autobiographical memory and raises concerns of bias due to repetitive viewing effects. However, studies comparing retrospective and simultaneous ratings have shown no differences for the rated intensity of emotion categories such as sadness and amusement (Hutcherson et al., 2005; Raz et al., 2012), although the lack of observed differences might depend on the emotion feature being rated. For instance, while arousal ratings are consistent between repeated presentations of musical pieces, valence shows more variation (Chapin et al., 2010). At the neural level, repetitive viewing of the same stimulus does not alter local activity. However, the network configurations between repetitions differ, suggesting higher-level processing differences between the two viewings (Andric et al., 2016). Moreover, a memory effect on the behavioral similarity between repetitions of ratings cannot be ruled out: the fact that the participants had to perform the rating task already once increases the probability that they later remember what they rated and felt.

Another option is to collect ratings from an independent sample. Here, the assumption is that naturalistic stimuli elicit similar emotional processing across individuals. However, the rating task is often subjective and challenging, which leads to difficulties in obtaining consistent annotations (Devillers et al., 2006; Malandrakis et al., 2011). Uncertainties are caused by motivation, experience with ratings, the definition of emotional attributes, learning curve with the rating tool, shifting the baseline of emotion ratings when becoming more familiar with the dataset, and variation in annotation delays due to individual differences in perceptual processing (Metallinou and Narayanan, 2013). Annotating emotional content is a subjective task and depends on the individual’s perception, experiences, and culture. Continuous ratings increase the complexity of the emotion rating task, requiring a higher amount of attention and cognitive processing (Metallinou and Narayanan, 2013). Furthermore, individual trait differences, for instance, in social desirability or alexithymia, can affect the ratings (Mauss and Robinson, 2009). To ensure the quality, rating data collection requires careful design. Relative ratings are more straightforward to give than absolute ones, so focusing on change rather than absolute values might increase reliability (Metallinou and Narayanan, 2013). Also, instructions should be clear and unambiguous: for instance, when rating portrayed emotions, instructions to empathize with the character compared to detaching from them leads to stronger negative and positive peaks in ratings (Borja Jimenez et al., 2020).
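When ratings come from an independent sample, one simple way to check the assumption of shared emotional processing is to quantify how well the raters agree before averaging them into a group time series. The sketch below uses the mean pairwise Pearson correlation as an agreement index; this particular index, the function names, and the toy ratings are my illustrative choices rather than a procedure prescribed by the cited studies.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def mean_pairwise_agreement(ratings):
    """Average correlation over all pairs of raters."""
    pairs = list(combinations(ratings, 2))
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)


def group_average(ratings):
    """Mean rating across raters at each time point."""
    return [sum(col) / len(col) for col in zip(*ratings)]


# Hypothetical continuous ratings from three raters (one value per time point).
raters = [
    [1, 2, 4, 5, 3, 2],
    [3, 4, 6, 7, 5, 4],   # same time course as the first rater, higher baseline
    [2, 2, 5, 4, 3, 1],   # partly different time course
]

agreement = mean_pairwise_agreement(raters)
group_rating = group_average(raters)
```

Because correlation is insensitive to each rater's baseline and scale, the first two raters agree perfectly despite their different absolute values, which is in the spirit of focusing on change rather than absolute ratings.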

Automatic Feature Extraction

The rapid developments in automatic feature extraction techniques allow replacing part of the human ratings with automatic tools. Automatic feature extraction usually relies on computer vision or machine learning to find stimulus features that together represent a category. Especially, clearly defined categories might benefit from automatic extraction (McNamara et al., 2017): object categories that were formerly tediously manually annotated can now be extracted automatically from naturalistic stimuli. Going beyond simple low-level sensory features, object and semantic categories, automatic modeling of emotional stimulus content is a central goal in affective computing.

Automatic tools have been developed for extracting salient low-level visual features (e.g., SaliencyToolbox, Walther and Koch, 2006), auditory features (e.g., MIRtoolbox, Lartillot and Toiviainen, 2007; MIDI Toolbox, Eerola and Toiviainen, 2004), and higher-level features such as faces, semantics, speech prosody, and actions (e.g., pliers, McNamara et al., 2017). In affective neuroimaging of emotions, low-level visual features including brightness (Gruskin et al., 2020) and auditory features such as loudness, tempo, and sound energy envelope (Chapin et al., 2010; Trost et al., 2015; Nguyen et al., 2016; Gruskin et al., 2020; Sachs et al., 2020) have been modeled automatically mainly to control for effects solely driven by sensory features. Object categories such as faces and bodies have been extracted and modeled with automated object recognition tools either from the stimulus (Horikawa et al., 2020) or from the observers (Sawahata et al., 2013; Chang et al., 2021). Sentiment analysis tools have been employed, especially in the study of text-evoked emotions, to model normative valence and arousal of lexical units (Hsu et al., 2015). Finally, tools have recently been developed to automatically extract higher-level prototypical emotion scenes building on ratings of portrayed emotions (Kragel et al., 2019).
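To give a sense of what the simplest of these low-level features amount to, the sketch below computes a brightness time series as the mean pixel intensity of each frame and a loudness-like envelope as the root-mean-square energy of consecutive audio windows. These are generic textbook definitions written in plain Python; the function names are hypothetical and do not reproduce the interfaces of the toolboxes named above, and the frames and audio samples are toy values.

```python
import math


def frame_brightness(frame):
    """Mean pixel intensity of one grayscale frame given as a list of rows."""
    pixels = [p for row in frame for p in row]
    return sum(pixels) / len(pixels)


def rms_envelope(samples, window):
    """Root-mean-square energy in consecutive, non-overlapping windows."""
    return [math.sqrt(sum(s * s for s in samples[i:i + window]) / window)
            for i in range(0, len(samples) - window + 1, window)]


# Three toy 2x2 grayscale frames (0 = black, 255 = white).
frames = [
    [[0, 0], [0, 0]],
    [[100, 120], [80, 100]],
    [[255, 255], [255, 255]],
]
brightness = [frame_brightness(f) for f in frames]

# Toy audio waveform whose amplitude grows over time.
audio = [0.0, 0.0, 0.0, 0.0, 0.5, -0.5, 0.5, -0.5, 1.0, -1.0, 1.0, -1.0]
loudness = rms_envelope(audio, window=4)
```

Time series of this kind can then be entered as nuisance regressors or, as argued earlier, as features of interest in their own right.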

Importantly, automatic emotion-related feature extraction often relies on human ratings in the first instance: samples rated by humans are used to train an algorithm to extract features automatically (see, e.g., Kragel et al., 2019). Thus, automatic extraction of emotional content is only as reliable as the original definition of the emotion feature. For instance, automatic recognition of facial expressions (e.g., specific emotion categories from faces) assumes that a correct emotion label was assigned to the facial expressions in the training set, a task that is often underspecified (Barrett et al., 2019).

Automatic feature extraction usually operates at a momentary single-unit level, for instance, on single frames, words, or sounds. However, naturalistic stimuli might elicit emotional processing at various temporal scales. For instance, film makers use methods with different time-scales for enhancing emotional effects (Carroll and Seeley, 2013): the pacing of attention span within short sequences is employed to enhance perceived tension, and global narrative cues build moral expectations of characters or events to drive sustained emotional engagement. Thus, automatic emotion feature extraction currently seems most plausible for prototypical scenes with distinct features that vary in short time intervals.

Recordings of Peripheral and Central Nervous System Changes

Finally, changes in peripheral and central nervous system activity can be measured directly. Autonomic nervous system activity is routinely measured in brain imaging studies and can be employed to model bodily changes associated with emotions. On the other hand, brain imaging data also allows automatic recognition of neural patterns associated with the stimulus’s emotional content.

In naturalistic neuroimaging, emotion features related to peripheral nervous system activity have been extracted from recordings of heart rate (Wallentin et al., 2011; Raz et al., 2012; Nummenmaa et al., 2014b; Eisenbarth et al., 2016; Nguyen et al., 2016; Young et al., 2017; Smirnov et al., 2019; van der Meer et al., 2020), respiration (Nummenmaa et al., 2014b; Smirnov et al., 2019), skin conductance (Eisenbarth et al., 2016), and pupil dilation (van der Meer et al., 2020). Since the BOLD signal measured with fMRI also contains physiological noise, recordings of heart rate and respiration are often used to remove noise from brain imaging data (Särkkä et al., 2012). However, autonomic nervous system activity also correlates with emotions and signals bodily changes associated with emotions. Recent studies have demonstrated that combining physiological recordings with other emotion features opens intriguing possibilities for investigating the interplay between bodily and other components of emotions (Nguyen et al., 2016).

Central nervous system activity patterns provide an indirect way to measure various aspects of emotions. For instance, brain states during movie viewing have been automatically labeled as mental states using meta-analytic maps (van der Meer et al., 2020). Given that different emotion categories can be decoded from neural activity (Kragel and LaBar, 2016; Saarimäki et al., 2016, 2018), one possibility for accessing emotional effects during stimulation is to look at the brain of the observer. Continuous pattern decoding tools have been employed, for instance, to demonstrate the utility of brain imaging as an additional predictive measure of human behavior on top of self-report measures (Genevsky et al., 2017; Chan et al., 2019). In affective neuroimaging, a recent application of the technique extracted valence and arousal patterns using an emotional picture viewing task and normative ratings for experienced valence and arousal, and identified corresponding neural activity patterns during a movie viewing task to extract a probability time series for experienced valence and arousal (Chan et al., 2020). In future, pattern decoding approaches seem especially useful for accessing emotion features that are difficult to measure with self-reports.

Integrating Emotion Features and Brain Imaging Data

Once the emotion features have been extracted, the next methodological step is to combine them with brain imaging data. Multiple methods have been suggested for this purpose. The overall logic in all of them is that the feature time series are used to model the brain response time series to identify the regions where activation varies similarly with the feature. Different approaches focus either on (1) encoding single voxels or regions corresponding to single or multiple features, (2) employing inter-subject synchrony measures to detect stimulus-induced brain activity, (3) decoding feature time series from the activity pattern time series of multiple voxels (multi-voxel pattern analysis), or (4) extracting changes in functional connectivity related to different features.

Encoding Models

Encoding models in brain imaging use the stimulus to predict brain responses, while the complementary decoding models (see section “Decoding Models”) use the brain activity to predict information regarding the stimulus (e.g., Naselaris et al., 2011). In general, encoding models employ regression to describe how information is represented in the activity of each voxel separately.

By far, the most used approach for combining emotion features with brain imaging data is a univariate approach employing a general linear model (GLM) with parametric regressors (see, e.g., Nummenmaa et al., 2012; Sachs et al., 2020). With a standard univariate regression approach, the feature time series is added as a parametric regressor and applied to all voxel time series to identify the voxels whose activation follows time-varying changes in the feature. Estimates are taken first at an individual level, and group level averages are calculated based on the test statistics. The resulting brain map shows the voxels that follow the feature time course. The traditional GLM approach performs well with models comprising one or few features. Linear regression relies on the assumption that feature vectors are not correlated. However, this is rarely the case with stimulus models comprising multiple features: the feature vectors are linearly dependent on each other, that is, they suffer from multicollinearity. In traditional GLM, multicollinearity is addressed by orthogonalizing the feature vectors to avoid biased estimates, a practice that is often problematic due to order effects that are difficult to interpret.
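The regression step described above can be sketched with ordinary least squares on simulated data. This is a minimal illustration, not the pipeline of any cited study: the function name `fit_glm`, the two features (`arousal`, `loudness`), and all numbers are hypothetical, and the regressors are assumed to be already convolved with a hemodynamic response function.

```python
import numpy as np

def fit_glm(features, bold):
    """Ordinary least squares fit of feature regressors to every voxel.

    features: (n_timepoints, n_features) regressors, assumed to be
              already convolved with a hemodynamic response function.
    bold:     (n_timepoints, n_voxels) voxel time series.
    Returns betas of shape (n_features, n_voxels); the intercept is dropped.
    """
    n_timepoints = features.shape[0]
    design = np.column_stack([np.ones(n_timepoints), features])
    betas, *_ = np.linalg.lstsq(design, bold, rcond=None)
    return betas[1:]

rng = np.random.default_rng(0)
n_timepoints = 200
arousal = rng.standard_normal(n_timepoints)
# Hypothetical second feature built to correlate strongly with the first,
# which mimics multicollinearity between emotion and sensory features.
loudness = 0.9 * arousal + 0.1 * rng.standard_normal(n_timepoints)
features = np.column_stack([arousal, loudness])

# Simulated data: voxel 0 follows arousal, voxel 1 is pure noise.
bold = rng.standard_normal((n_timepoints, 2))
bold[:, 0] += 2.0 * arousal

betas = fit_glm(features, bold)
feature_correlation = np.corrcoef(arousal, loudness)[0, 1]
# Only this combination of the two betas is well constrained by the data.
combined_effect = betas[0, 0] + 0.9 * betas[1, 0]
```

With regressors this strongly correlated, the individual betas for voxel 0 are poorly constrained, while their combined effect along the shared direction stays close to the simulated value. This is the instability that motivates orthogonalization or regularized alternatives.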

Voxel-wise encoding models relying on ridge regression provide a step forward by including multiple features in the regression model simultaneously (e.g., Huth et al., 2012, 2016; Kauttonen et al., 2015). Compared to standard linear regression, ridge regression yields more stable and accurate models of data with multicollinearity, at the cost of a small bias in the estimated weights. Also, voxel-wise encoding models allow direct comparison of multiple feature models (Naselaris et al., 2011). For instance, low-level visual feature models and semantic models could be compared to test whether the voxel represents low-level stimulus properties or more abstract semantic information. Voxel-wise encoding models have proven useful in a few studies, for instance, in comparing the suitability of categorical or dimensional models in explaining brain activity during short, emotional movie clips (Horikawa et al., 2020; Koide-Majima et al., 2020) or in modeling individual emotion rating time series across a full-length feature movie (Lettieri et al., 2019).
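A comparison of two feature models with ridge regression might look like the following sketch. It is an illustration under stated assumptions rather than the procedure of the cited studies: the feature sets (`low_level`, `semantic`), the penalty `alpha=10.0`, and the simulated voxels are all hypothetical, and the data are zero-mean by construction so no intercept is fitted.

```python
import numpy as np

def ridge_fit(features, bold, alpha):
    """Closed-form ridge weights for all voxels at once.

    Assumes features and bold are (approximately) zero-mean per column.
    """
    n_features = features.shape[1]
    gram = features.T @ features + alpha * np.eye(n_features)
    return np.linalg.solve(gram, features.T @ bold)

def prediction_accuracy(features, bold, weights):
    """Correlation between predicted and measured time series, per voxel."""
    predicted = features @ weights
    return np.array([
        np.corrcoef(predicted[:, v], bold[:, v])[0, 1]
        for v in range(bold.shape[1])
    ])

rng = np.random.default_rng(1)
n_train, n_test = 300, 100
n_total = n_train + n_test
low_level = rng.standard_normal((n_total, 3))   # hypothetical sensory features
semantic = rng.standard_normal((n_total, 3))    # hypothetical semantic features

# Simulated voxels: voxel 0 follows the semantic model, voxel 1 the low-level one.
bold = rng.standard_normal((n_total, 2))
bold[:, 0] += semantic @ np.ones(3)
bold[:, 1] += low_level @ np.ones(3)

train, test = slice(0, n_train), slice(n_train, n_total)
accuracy = {}
for name, feats in {"low_level": low_level, "semantic": semantic}.items():
    weights = ridge_fit(feats[train], bold[train], alpha=10.0)
    # Models are compared on held-out data, not on the data used for fitting.
    accuracy[name] = prediction_accuracy(feats[test], bold[test], weights)
```

Comparing `accuracy["semantic"]` and `accuracy["low_level"]` voxel by voxel indicates which feature space better predicts each voxel. In practice the penalty would be chosen by cross-validation instead of being fixed.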

Inter-Subject Synchronization Analyses

Inter-subject correlation analyses include data-driven approaches that assess the similarity of regional time courses from separate subjects exposed to the same time-locked paradigms (Hasson et al., 2004; Jääskeläinen et al., 2008). Inter-subject correlation methods are based on the assumption that the brain signal we measure is a combination of stimulus-driven, idiosyncratic, and noise signal components. If we present the same continuous stimulus to a group of participants and calculate the correlation between subjects’ voxel activation time series, we should be left with the stimulus-driven signal (for recent reviews, see Nummenmaa et al., 2018; Nastase et al., 2019). Thus, inter-subject analyses combined with stimulus and (averaged) observer features can identify the shared emotional responses elicited with naturalistic stimuli.
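The logic of inter-subject correlation can be illustrated with a leave-one-out variant, in which each subject's time series is correlated with the average of all other subjects. This is a sketch on simulated data; the function name and the signal and noise levels are assumptions, and pairwise correlations are an equally common alternative.

```python
import numpy as np

def leave_one_out_isc(data):
    """Leave-one-out inter-subject correlation for one voxel or region.

    data: (n_subjects, n_timepoints) time series, time-locked to the stimulus.
    Returns one correlation value per subject.
    """
    n_subjects = data.shape[0]
    isc = np.empty(n_subjects)
    for s in range(n_subjects):
        others_mean = np.delete(data, s, axis=0).mean(axis=0)
        isc[s] = np.corrcoef(data[s], others_mean)[0, 1]
    return isc

rng = np.random.default_rng(2)
n_subjects, n_timepoints = 10, 200
stimulus_signal = rng.standard_normal(n_timepoints)

# Stimulus-driven voxel: shared signal plus idiosyncratic noise per subject.
driven_voxel = stimulus_signal + rng.standard_normal((n_subjects, n_timepoints))
# Control voxel: idiosyncratic noise only.
noise_voxel = rng.standard_normal((n_subjects, n_timepoints))

isc_driven = leave_one_out_isc(driven_voxel)
isc_noise = leave_one_out_isc(noise_voxel)
```

Averaging across the other subjects suppresses their idiosyncratic components, so `isc_driven` is clearly positive while `isc_noise` hovers around zero.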

While standard ISC approaches calculate the inter-subject synchronization across the whole stimulus, dynamic ISC allows tracking the moment-to-moment changes (see, e.g., Bolton et al., 2018). Dynamic ISC requires defining a temporal sliding window for a pair of time series and calculating the average inter-subject correlation for each voxel in each sliding window. Sliding through the whole time series, one can extract the ISC time series and model that with emotion features. However, depending on the window size, the time resolution is compromised. Also, the optimal window size might not be straightforward to estimate.
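The sliding-window computation can be sketched for one pair of subjects as follows. The window length of 30 samples and the simulated signals are arbitrary assumptions chosen for illustration.

```python
import numpy as np

def sliding_window_isc(x, y, window, step=1):
    """Correlation between two subjects' time series in each sliding window."""
    starts = range(0, len(x) - window + 1, step)
    return np.array([
        np.corrcoef(x[s:s + window], y[s:s + window])[0, 1] for s in starts
    ])

rng = np.random.default_rng(3)
n_timepoints, window = 200, 30
shared = rng.standard_normal(100)

# Two simulated subjects: synchronized in the first half of the stimulus,
# independent in the second half.
subject_a = np.concatenate([shared, rng.standard_normal(100)])
subject_b = np.concatenate([shared, rng.standard_normal(100)])
subject_a = subject_a + 0.5 * rng.standard_normal(n_timepoints)
subject_b = subject_b + 0.5 * rng.standard_normal(n_timepoints)

dynamic_isc = sliding_window_isc(subject_a, subject_b, window)
# Windows lying entirely in the first or entirely in the second half.
first_half = dynamic_isc[:71]
second_half = dynamic_isc[100:]
```

Each value of `dynamic_isc` summarizes `window` consecutive samples, which makes the trade-off concrete: longer windows give more stable correlations but blur the timing of changes in synchronization.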

Another option is to use inter-subject phase synchronization (ISPS), which provides a continuous measure of inter-subject similarity (Glerean et al., 2012). ISPS corresponds to dynamic ISC, but unlike ISC, which relies on correlation as a measure of similarity, ISPS first transforms the signal to its analytic form and then calculates the similarity of phase for each time point without the need for sliding windows. Therefore, it also has the maximum temporal resolution (1 TR of fMRI acquisition) and can be used to estimate instantaneous synchronization of regional BOLD signals across individuals. Previous studies showed that ISPS analysis gives spatially similar results to ISC but has better sensitivity (Glerean et al., 2012; Nummenmaa et al., 2014b). Emotion features combined with inter-subject phase synchrony have been used in affective neuroimaging of stories and music (Nummenmaa et al., 2014b; Sachs et al., 2020).
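The two steps, analytic signal and per-time-point phase similarity, can be sketched as below. Two assumptions should be flagged: the signals are taken to be already band-pass filtered, since instantaneous phase is only meaningful for narrow-band data, and phase agreement is summarized here with the mean resultant length across subjects, which may differ from the exact similarity measure used in the cited work.

```python
import numpy as np

def analytic_signal(x):
    """Analytic signal along the last axis, computed with the FFT."""
    n = x.shape[-1]
    spectrum = np.fft.fft(x, axis=-1)
    multiplier = np.zeros(n)
    multiplier[0] = 1
    if n % 2 == 0:
        multiplier[n // 2] = 1
        multiplier[1:n // 2] = 2
    else:
        multiplier[1:(n + 1) // 2] = 2
    # Zero the negative frequencies and double the positive ones.
    return np.fft.ifft(spectrum * multiplier, axis=-1)

def phase_synchrony(data):
    """Phase agreement across subjects at every time point.

    data: (n_subjects, n_timepoints), assumed band-pass filtered.
    Returns values between 0 (no agreement) and 1 (identical phase).
    """
    phases = np.angle(analytic_signal(data))
    return np.abs(np.exp(1j * phases).mean(axis=0))

rng = np.random.default_rng(4)
n_subjects, n_timepoints = 8, 300
t = np.arange(n_timepoints)

# Synchronized subjects: same oscillation with small phase offsets.
offsets = rng.uniform(-0.2, 0.2, (n_subjects, 1))
synced = np.sin(2 * np.pi * 0.05 * t + offsets)
synced = synced + 0.05 * rng.standard_normal((n_subjects, n_timepoints))

# Unsynchronized subjects: different frequencies and random starting phases.
freqs = np.linspace(0.03, 0.08, n_subjects)[:, None]
unsynced = np.sin(2 * np.pi * freqs * t + rng.uniform(0, 2 * np.pi, (n_subjects, 1)))

isps_synced = phase_synchrony(synced)
isps_unsynced = phase_synchrony(unsynced)
```

The output has one value per time point, which is what allows the synchrony time series to be modeled with emotion features at the resolution of the acquisition.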

Decoding Models

Complementary to encoding models, decoding models start with the entire pattern of activity across multiple voxels to predict information regarding the stimulus (Kay and Gallant, 2009). Encoding models and dynamic inter-subject synchronization methods fit the feature time series, be it a single feature or multiple features, to a single voxel’s or region’s activity at a time. Thus, they are univariate, ignoring the simultaneous contributions of several voxels or regions (e.g., Norman et al., 2006). Decoding approaches such as multivariate pattern analysis were developed to overcome this problem. With a dynamic stimulus, multivariate analyses predict the feature model from multiple voxels simultaneously (see, e.g., Sawahata et al., 2013; Horikawa et al., 2020).

For instance, Chan et al. (2020) extracted shared neural patterns for valence and arousal during emotional picture viewing within a region of interest. They applied the resulting classifier for volume-by-volume classification of valence and arousal during movie trailer viewing. Time series for average classification accuracies were extracted and compared to continuous ratings of experienced valence and arousal. Using a different decoding approach, van der Meer et al. (2020) first extracted dynamic brain states during movie viewing using Hidden Markov Models and identified the mental states corresponding to the brain states using Neurosynth’s meta-analysis library.
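The general train-on-one-task, apply-to-another logic can be sketched with a simple nearest-centroid classifier on simulated multi-voxel patterns. The classifier is a stand-in chosen for brevity and is not the one used in the studies above; the voxel count, labels, and noise level are likewise hypothetical.

```python
import numpy as np

def train_centroids(patterns, labels):
    """Mean multi-voxel pattern for each class in the training task."""
    classes = np.unique(labels)
    centroids = np.stack([patterns[labels == c].mean(axis=0) for c in classes])
    return classes, centroids

def classify_volumes(volumes, classes, centroids):
    """Assign every volume to the class with the nearest centroid."""
    distances = ((volumes[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[distances.argmin(axis=1)]

rng = np.random.default_rng(5)
n_voxels = 50
valence_pattern = rng.standard_normal(n_voxels)  # hypothetical valence code

# Training task: picture trials labeled as negative (-1) or positive (+1).
picture_labels = np.repeat([-1, 1], 50)
picture_patterns = picture_labels[:, None] * valence_pattern
picture_patterns = picture_patterns + rng.standard_normal((100, n_voxels))

# Test task: movie volumes whose simulated valence changes in blocks.
movie_valence = np.repeat([1, -1, 1, -1], 30)
movie_volumes = movie_valence[:, None] * valence_pattern
movie_volumes = movie_volumes + rng.standard_normal((120, n_voxels))

classes, centroids = train_centroids(picture_patterns, picture_labels)
decoded_valence = classify_volumes(movie_volumes, classes, centroids)
accuracy = (decoded_valence == movie_valence).mean()
```

The decoded label for each volume forms a time series that can then be compared with continuous ratings.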

Functional Connectivity

Finally, emotion features might also modulate functional connectivity. Once the network time series have been extracted, the methods for combining network time series with emotion features are the same as for voxel or region time series, including encoding, decoding, and inter-subject approaches. In BOLD-fMRI studies, functional connectivity is defined as stimulus-dependent co-activation of different brain regions, usually measured as a correlation between two regions’ time series. Thus, functional connectivity does not necessarily reflect the structural connectivity between two brain regions – two regions might not be structurally connected but still share the same temporal dynamics. In contrast, effective connectivity can be used to investigate the influence one neural system exerts over another (Friston, 2011).

Network modulations of emotion features have been studied with functional connectivity methods including seed-based phase synchronization (SBPS, corresponding to ISPS between regions; Glerean et al., 2012), inter-subject functional connectivity with sliding windows (ISFC, corresponding to ISC between regions; Simony et al., 2016), and inter-network cohesion indices (NCI; Raz et al., 2012). Calculating the connectivity for each pair of voxels is impractical due to the large number of connections this results in. Therefore, functional connectivity is typically calculated as the average similarity of voxel time series either for selected seed regions of interest or for whole-brain parcellations. To obtain time series of functional connectivity, ISFC and NCI rely on correlations and are calculated with a sliding window approach, while SBPS defines similarity as phase synchrony and can be extracted for each time point directly. Compared to the other two methods, NCI has an additional feature of penalizing higher within-region variance, yielding higher cohesion values for regions that show consistently high correlations (Raz et al., 2012). Affective neuroimaging studies focusing on functional connectivity have revealed emotion-related modulations in various networks (Nummenmaa et al., 2014b; Raz et al., 2014).
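As a rough sketch of the ISFC idea, each subject's regional time series can be correlated with the average regional time series of all other subjects, giving a region-by-region matrix. The version below is static for brevity and runs on simulated data; applying the same function within sliding windows would yield a connectivity time series. The symmetrization step and all simulation settings are assumptions of this sketch.

```python
import numpy as np

def isfc(data):
    """Inter-subject functional connectivity between regions.

    data: (n_subjects, n_timepoints, n_regions) regional time series.
    Returns an (n_regions, n_regions) matrix averaged over subjects.
    """
    n_subjects, _, n_regions = data.shape
    matrices = []
    for s in range(n_subjects):
        others_mean = np.delete(data, s, axis=0).mean(axis=0)
        # Rows of the inputs are regions; the off-diagonal block holds the
        # correlations between this subject's regions and the others' regions.
        full = np.corrcoef(data[s].T, others_mean.T)
        matrices.append(full[:n_regions, n_regions:])
    mean_matrix = np.mean(matrices, axis=0)
    return (mean_matrix + mean_matrix.T) / 2

rng = np.random.default_rng(6)
n_subjects, n_timepoints, n_regions = 10, 200, 3
stimulus_signal = rng.standard_normal(n_timepoints)

# Regions 0 and 1 share a stimulus-driven signal; region 2 is noise only.
data = rng.standard_normal((n_subjects, n_timepoints, n_regions))
data[:, :, 0] += stimulus_signal
data[:, :, 1] += stimulus_signal

connectivity = isfc(data)
```

Because the correlation is computed across brains, idiosyncratic fluctuations within a single subject do not inflate the estimate, and only the stimulus-driven coupling between regions 0 and 1 survives.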

Furthermore, the direction of influence between network nodes, that is, effective connectivity, can be investigated with dependency network analysis, which has revealed causal effects between brain regions during emotional states such as anger (Jacob et al., 2018), or with dynamic causal modeling, which has been employed to identify the causal links between regions underlying interoception and exteroception (Nguyen et al., 2016). Dynamic causal modeling can be used to investigate the direction of relations between averaged regions, called nodes, within the functional network, and requires an a priori model of the nodes and their directions of influence (Friston et al., 2003). Thus, it cannot be easily implemented in networks including more than a few nodes. On the other hand, dependency network analysis evaluates a node’s impact on the network based on its correlation influence, quantified with partial correlations between the time courses of the node and all other pairs of nodes (Jacob et al., 2016).
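The partial-correlation building block of dependency network analysis can be illustrated on simulated node time series. The sketch assumes that a node's influence on a pair of other nodes is quantified as the drop from the pair's correlation to its partial correlation given that node; this reading, the function names, and the simulated network are assumptions and not a reproduction of the cited implementation.

```python
import numpy as np

def partial_correlation(c_ik, c_ij, c_kj):
    """First-order partial correlation of nodes i and k given node j."""
    return (c_ik - c_ij * c_kj) / np.sqrt((1 - c_ij ** 2) * (1 - c_kj ** 2))

def correlation_influence(time_series, i, k, j):
    """Drop in the i-k correlation once node j is partialled out.

    time_series: (n_timepoints, n_nodes).
    """
    c = np.corrcoef(time_series.T)
    return c[i, k] - partial_correlation(c[i, k], c[i, j], c[k, j])

rng = np.random.default_rng(7)
n_timepoints = 500
driver = rng.standard_normal(n_timepoints)

# Node 0 drives nodes 1 and 2; node 3 is unrelated to all of them.
node_1 = driver + 0.5 * rng.standard_normal(n_timepoints)
node_2 = driver + 0.5 * rng.standard_normal(n_timepoints)
unrelated = rng.standard_normal(n_timepoints)
time_series = np.column_stack([driver, node_1, node_2, unrelated])

influence_of_driver = correlation_influence(time_series, i=1, k=2, j=0)
influence_of_unrelated = correlation_influence(time_series, i=1, k=2, j=3)
```

Partialling out the driver removes most of the correlation between nodes 1 and 2, so its influence is large, whereas partialling out the unrelated node changes almost nothing. Summing such influences over all pairs gives one possible index of a node's impact on the network.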

Finally, as studies have demonstrated that combinations of functional networks correlate with emotion features, the relationship between network activation and emotion features is likely more complicated than the single-network approaches can reveal (Nummenmaa et al., 2014b; van der Meer et al., 2020).

Neural Correlates of Perceived Emotion Features: Modeling the Stimulus

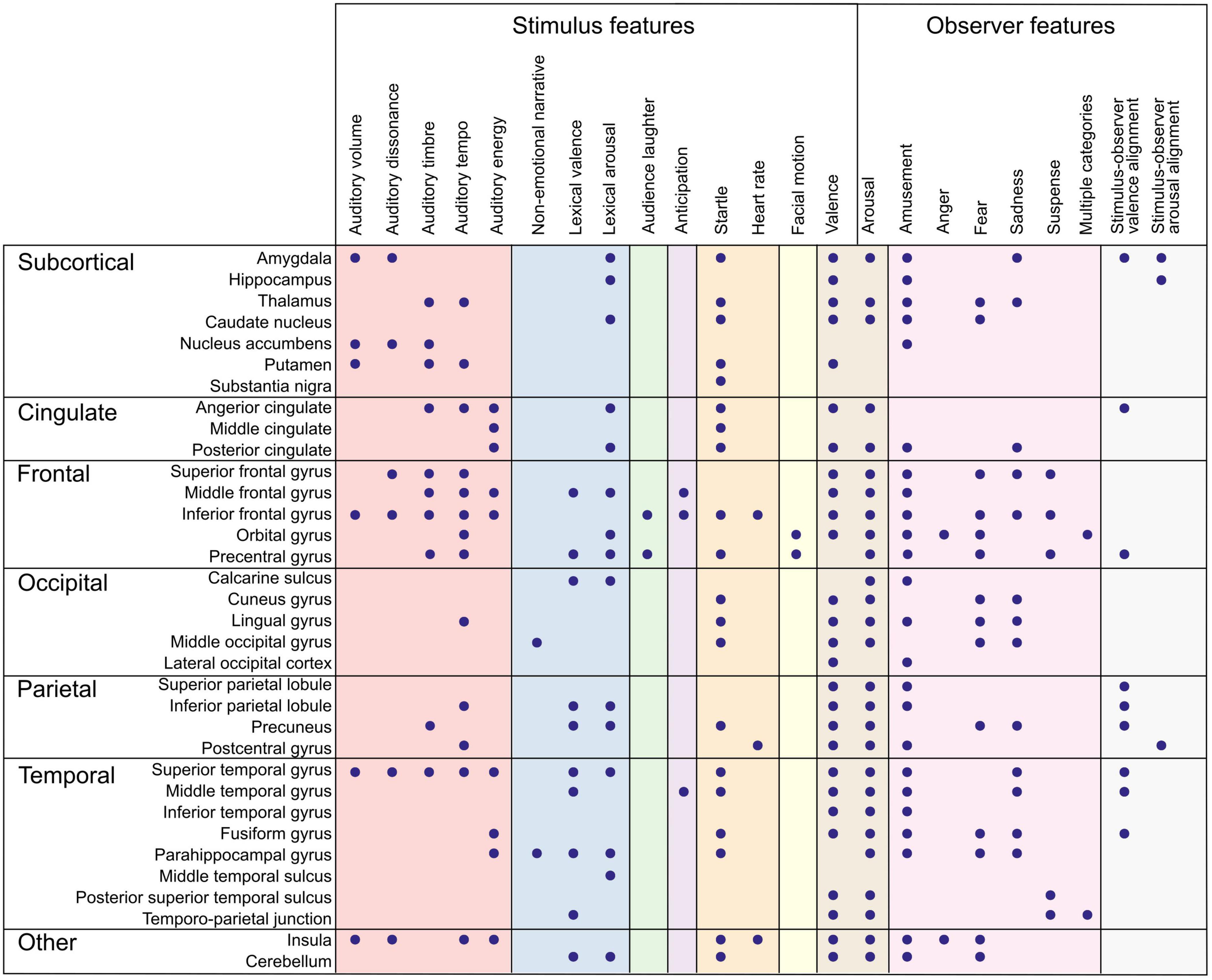

What has affective neuroimaging with naturalistic paradigms revealed regarding the neural correlates of emotion features so far? As suggested in Figure 1, parsing the emotion features to those related to the perceived emotion (the stimulus) and to the experienced emotion (the observer) helps to clarify what aspect of emotion we are modeling with a specific feature. In the next two sections, I will summarize the results so far, proceeding from lower levels along the processing hierarchy to higher ones, and from stimulus features to observer features. The feature-specific results are presented in Figures 2, 3 and in Supplementary Tables 1, 2.

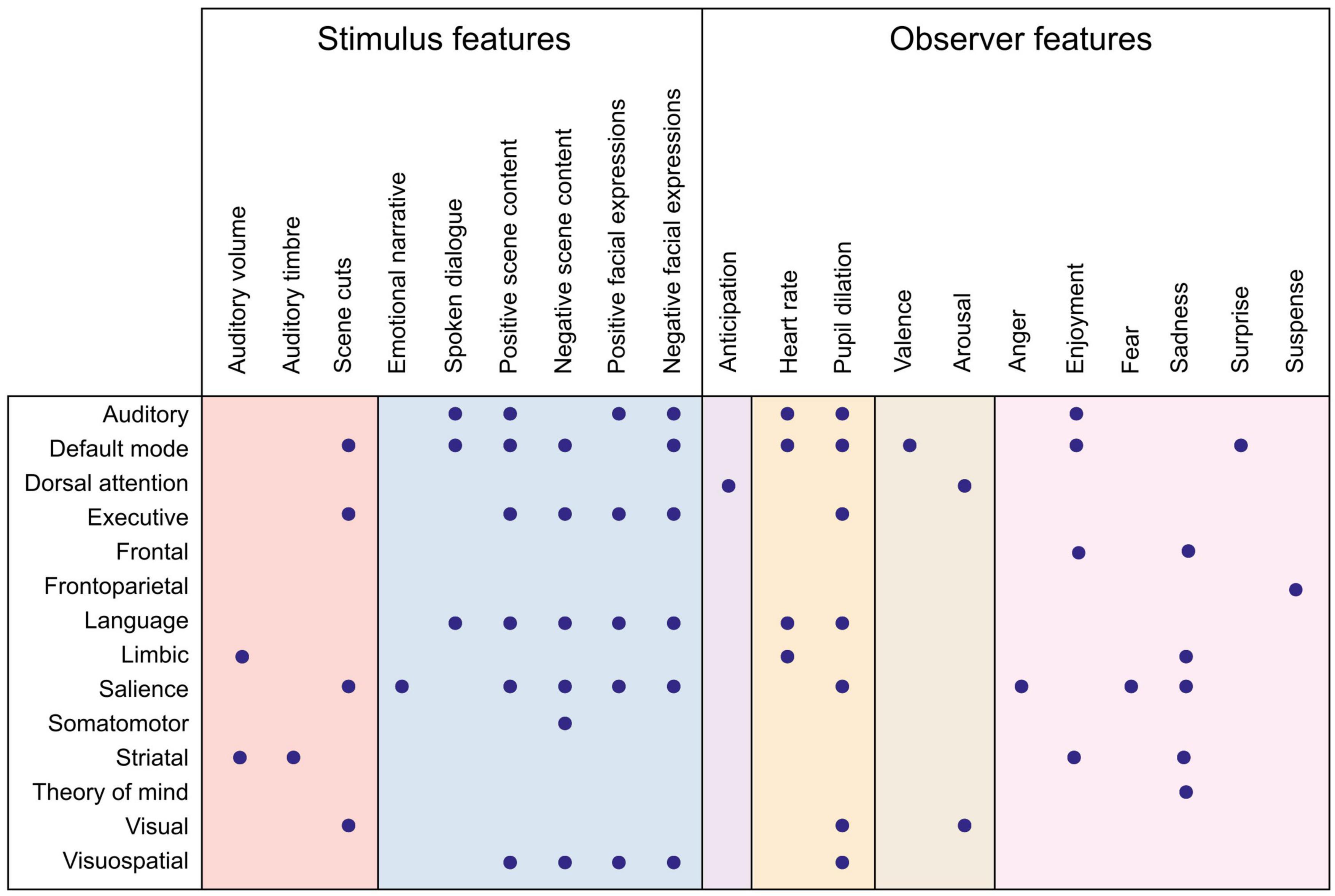

Figure 2. Summary of brain regions correlating with emotion features. Dots denote an observed association between the brain region (rows) and the emotion feature (columns). Color panels denote feature categories (from left to right): low-level auditory features (red), object-level features (blue), portrayed emotions (green), emotion elicitation (violet), interoception (orange), behavior (yellow), affective dimensions (brown), emotion categories (pink), and emotional alignment (gray). Directionality of association and more detailed anatomical locations are listed in Supplementary Table 1.

Figure 3. Summary of functional networks correlating with emotion features. Dots denote an observed association between the network (rows) and the emotion feature (columns). Color panels denote feature categories (from left to right): low-level auditory and visual features (red), object-level features (blue), emotion elicitation (violet), interoception (orange), affective dimensions (brown), and emotion categories (pink). Directionality of association and more detailed anatomical locations are listed in Supplementary Table 2.

For perceived emotions, we can distinguish between three types of emotion features in the past studies: low-level stimulus features, object-level features, and portrayed emotions.

Low-Level Stimulus Features

Low-level stimulus features comprise the visual, auditory, and temporal features that evoke sensory processing in the observer. They can usually be extracted automatically and are often included as nuisance variables to control that the emotion-related activation is not solely related to perceptual features (see Table 2). However, low-level stimulus features might be important for evoking emotions. For instance, affective experiences in music vary with loudness, pitch level, contour, tempo, texture, and sharpness (Coutinho and Cangelosi, 2011).

In affective neuroimaging with naturalistic stimuli, low-level auditory features have been extracted and modeled from music and auditory stories (Figures 2, 3). As expected, auditory features are related to activity in auditory regions, but importantly, also in regions traditionally linked with emotions, including subcortical areas (amygdala, thalamus, nucleus accumbens, putamen), anterior cingulate cortex, fronto-parietal regions, and insula (Chapin et al., 2010; Trost et al., 2015; Nguyen et al., 2016; Sachs et al., 2020). In particular, musical tempo increased activation in limbic and striatal networks and in executive and somatomotor areas (Chapin et al., 2010). Similarly, behavioral and psychophysiological studies have shown that tempo and loudness in music induce higher experienced and physiological arousal (Schubert, 2004; Etzel et al., 2006; Gomez and Danuser, 2007). Finally, auditory features such as brightness and loudness mediated the correlation between evoked emotions (enjoyment and sadness) and striatal and limbic network activity (Sachs et al., 2020).

So far, low-level visual features have been modeled rarely, but associations of scene switching in movies have been found both with primary visual areas and in default mode and salience networks (van der Meer et al., 2020).

Importantly, some low-level features might directly affect the perceptual pleasantness of the stimulus (Koelsch et al., 2006). For instance, in a study modeling acoustic features from classical music, the activity of nucleus accumbens, an area known to process rewards, was negatively correlated with acoustic features denoting noisiness and loudness (Trost et al., 2015).

Taken together, the regions following dynamic changes in stimulus features include both sensory regions and regions related to higher-level processing. Variation in auditory tempo correlates with somatomotor regions, which might, in turn, correlate with the experienced arousal of the stimulus. The overall stimulus mood, often resulting from variation in low-level stimulus features, also affects the perceived and experienced emotions (Tarvainen et al., 2015). Therefore, removing low-level stimulus features as control variables might remove parts of the emotion-related activation.

Object-Level Features

Object-level features refer to visual or auditory objects, general scene content, semantic categories, and other intermediate features that bind together multiple low-level sensory features but do not directly reflect the emotions portrayed by the stimulus. For instance, visual object categories such as dogs, houses, and children can be visually present on the screen, identified as separate object categories in the brain, and processed without any reference to emotions. However, object categories often carry emotional connotations and contribute to the contextual emotion cues that affect emotion perception (Skerry and Saxe, 2014). For instance, normative ratings show consistency in perceived affective value of object categories including visual objects (i.e., pictures; Lang and Bradley, 2007; Marchewka et al., 2014; Cowen and Keltner, 2017), auditory objects (i.e., sounds; Bradley and Lang, 2007b; Yang et al., 2018), words (Bradley and Lang, 2007a; Redondo et al., 2007; Stevenson et al., 2007; Yao et al., 2017), and sentences and phrases (Socher et al., 2013; Imbir, 2016; Pinheiro et al., 2017). Oftentimes, annotations of object categories including action words and faces have been included as nuisance regressors (Wallentin et al., 2011; Gruskin et al., 2020). However, the neural underpinnings of object-level features during emotional stimulation have also been investigated.

Object-level features in movies can be distinguished especially within the visual areas in the occipital lobe (Horikawa et al., 2020). Importantly, emotional connotations affect the activation related to visual objects. For instance, aversive visual scene content including injuries evokes activation in the somatomotor network, while different networks (dorsal attention network and default mode network, respectively) are activated before and after the injury scene (Nanni et al., 2018). Emotional (positive and negative) scenes in movies are associated with high default mode, salience, sensory, and language network activation (van der Meer et al., 2020). Furthermore, general positive scene content has been associated with high executive, sensory, and language network activity and low salience network activity, while negative scene content has been associated with higher default mode and language network activation and lower sensory network activation (van der Meer et al., 2020).

Also, emotional auditory content differs from non-emotional content. Using the audio film Forrest Gump, Nguyen et al. (2016) extracted both the original, emotionally salient film soundtrack containing background music and dialogue and an audio commentary track with neutral prosody describing the visual content of the movie. The emotionally salient film soundtrack strongly activated the salience network. However, the commentary correlated with middle occipital gyrus and parahippocampal gyrus activation, probably reflecting the narrated environmental scene descriptions and evoking spatial imagery.

Semantic features in movies are represented especially within brain networks related to auditory, language, and executive processing in lateral frontal, temporal, and parietal regions (Horikawa et al., 2020). Also in the case of semantic features, affective characteristics at various levels affect the feature representation, and can be extracted with sentiment analysis (for a review, see Jacobs, 2015). Word-level affective value based on normative ratings of valence and arousal predicts the BOLD response to affective text passages in emotion-associated regions more strongly and widely than the experienced emotion ratings from the same passages. Besides the traditional emotion-related regions, such as amygdala and cingulate cortices, effects of normative lexical valence and arousal were also found in this study in regions associated with situation model building, multi-modal semantic integration, motor preparation, and theory of mind, suggesting that it is not solely the early emotion areas that activate for words with different affective values (Hsu et al., 2015).

Portrayed Emotions

Portrayed emotions have been extracted mainly with manual annotations (Ekman and Oster, 1979; Lahnakoski et al., 2012; Witkower and Tracy, 2019). Emotion cues used in labeling portrayed emotions include, for instance, facial behaviors (e.g., frowning, Volynets et al., 2020), bodily behaviors (e.g., Witkower and Tracy, 2019), verbal and vocal cues (e.g., trembling voice, Ethofer et al., 2009), actions, and contextual cues (Skerry and Saxe, 2014). Perception of emotions operates at an abstract level regardless of the stimulus modality: both inferred emotions (from contextual descriptions) and perceived emotions (from facial expressions) have shared neural codes (Skerry and Saxe, 2014).

Social feedback alters emotional responses (Golland et al., 2017). Thus, another aspect to consider is the third-person view of the portrayed emotions: the depicted reaction of the audience or another character. For instance, in comedy clips, audience laughter might guide observers’ emotional reactions (Moran et al., 2004; Sawahata et al., 2013). Similarly, movies might portray other people’s reactions to the character’s emotion, potentially affecting also the emotion elicited in the observer. The third-person effect has been approached by categorizing portrayed emotions either to self-directed emotions (i.e., how the character feels) or other-directed emotions (i.e., how the character feels for other characters) (Labs et al., 2015; Lettieri et al., 2019). For instance, Lettieri et al. (2019) showed that ratings of subjective experience share 11% of the variance with the self-directed emotion attribution model and 35% with the other-directed model. Thus, it seems that empathic responses between the observer and characters align better than portrayed and experienced emotions.

Furthermore, emotional alignment and misalignment are important aspects to distinguish. Studies using naturalistic stimuli for emotional induction sometimes assume that we automatically align with the characters’ emotions. Accordingly, similar brain activity might underlie affective judgments of experienced emotions and emotions portrayed by the protagonist (Schnell et al., 2011). Also, higher emotional alignment is related to activation within the mentalizing networks and regions associated with the mirror neuron system (Zaki et al., 2009). However, misalignment between portrayed and experienced emotions is equally possible and might be relevant in naturalistic stimuli where longer-term contextual cues modulate the interpretation of characters’ motivation. For instance, a happy expression on the villain’s face might lead to misalignment. Also, the stimulus’s general mood is not necessarily congruent with the emotions expressed by the characters (Plantinga, 1999).

Neural Correlates of Experienced Emotion Features: Modeling the Observer

The other aspect of emotions we can parse from naturalistic stimuli relates to the emotional processing evoked in the observer. As the consensual, componential approach to emotions suggests, emotions evoke both unconscious and conscious changes ranging from emotion elicitation to interoceptive and motor changes and to integration of overall activation into conscious emotional experiences and labeling the emotional experience using emotion concepts (Scherer, 2009; Satpute and Lindquist, 2019). The neural activation during naturalistic emotional stimuli reflects all these functional components.

Thus, for emotional processing in the observer, we can identify five types of emotion features in the past studies, each corresponding roughly to a different functional component: emotion elicitation, interoception, behavior, affective dimensions, and emotion categories.

Emotion Elicitation

When sensory input arrives in the brain, it is evaluated for personal relevance (see, e.g., Ellsworth and Scherer, 2003; Okon-Singer et al., 2013). This process, emotion elicitation, comprises several overlapping processes, including saliency detection and cognitive appraisals (for a review, see Moors, 2009). Emotion elicitation pairs the incoming stimulus with the individual’s prior experiences, beliefs, and values (Scherer, 2009). The salience network, consisting of anterior cingulate cortex and anterior insula, is probably the most well-established functional brain network associated with emotion elicitation (Menon, 2015; Sander et al., 2018). However, salience detection associated with the salience network is only one aspect of emotion elicitation, and other appraisal features have also proven useful in distinguishing between brain activity underlying different emotion elicitation processes (Skerry and Saxe, 2015; Horikawa et al., 2020).

Stimulus salience effects can be extracted either automatically or using ratings, which potentially depend on partly different processes related to salient sensory features and more cognitive detection such as ratings for relevance, respectively. While biologically salient stimuli usually activate the salience network (Sander et al., 2003; Pessoa and Adolphs, 2010), relevant goal-directed hand actions during a movie have been linked to activity in the posterior parietal cortex (Salmi et al., 2014).

Anticipation, or expectancy, is another appraisal feature that has been successfully modeled in affective neuroimaging. For instance, activity in the dorsolateral prefrontal cortex, inferior frontal gyrus, ventromedial cortex, and temporal areas predicts humor onset already a few seconds before the amusing episode starts (Moran et al., 2004; Sawahata et al., 2013). In a study with aversive stimuli, the dorsal attention network was active when scenes with injuries were anticipated (Nanni et al., 2018).

Importantly, individual appraisals reflect different perspectives on the same stimulus (Okon-Singer et al., 2013). Thus, modeling appraisals might be essential for understanding individual differences in emotion elicitation. For instance, perspective-taking studies have shown that the observer’s perspective modulates the neural responses already in the visual cortex (Lahnakoski et al., 2014). Similarly, visual cortex activation differs between experienced emotions and might partially relate to the role of appraisals in guiding the sensory activation underlying different emotions (Kragel et al., 2019). Because affective neuroimaging studies rely largely on average responses, the individual effects of appraisal processes remain poorly understood. Naturalistic paradigms combined with feature modeling provide a way forward in this domain.

Interoception

Interoception refers to the central processing of physiological signals and allows perceiving the bodily state both consciously and unconsciously (Khalsa et al., 2018). Interoceptive information regarding bodily changes plays a vital role in emotional experiences according to several emotion theories (e.g., Critchley et al., 2004; Damasio and Carvalho, 2013; Seth, 2016; Barrett, 2017). Naturalistic stimuli, including movies, stories, or merely imagining an emotional event, induce strong physiological reactions (Carroll and Seeley, 2013; Nummenmaa et al., 2014a; Saarimäki et al., 2016). While the physiological changes related to emotions are usually measured externally, I will adopt the term interoception to emphasize that part of the brain activity patterns related to emotional experiences reflect the central processing of physiological signals.

Autonomic nervous system activity, including heart rate and respiration rate, is routinely measured during functional magnetic resonance imaging to account for physiological “noise” in brain activity (Chang et al., 2008). However, the physiological measures also correlate with emotion features, and reflect the variation in bodily changes, which in turn constitute an important functional component contributing to the overall experienced emotion. Especially, the experienced arousal or emotional intensity has been linked with increases in heart rate variability (Wallentin et al., 2011; Young et al., 2017). Heart rate is associated with variation in salience and default mode network activity (Young et al., 2017). In a seminal naturalistic study, the insula was identified as a hub for integrating sensory and interoceptive information during emotional moments of an audio narrative consisting of dialogue and music (Nguyen et al., 2016).

The neural basis of different autonomic responses is not unitary. For instance, during stressful situations, heart rate and skin conductance level are associated with partly common and partly unique brain activity patterns (Eisenbarth et al., 2016). For emotions, this means that while one cannot assume that different emotion categories have consistent changes in one measure (Siegel et al., 2018), the overall sum over all physiological measures might differ between emotions. Both heart rate and skin conductance responses correlated with activity in the anterior cingulate, ventromedial prefrontal cortex, cerebellum, and temporal pole, while several frontal regions were more predictive for either heart rate or skin conductance (Eisenbarth et al., 2016). In another study, larger pupil size strongly correlated with scene luminance, but also with brain states of high executive, sensory, and language network activation, and high default mode network and salience network and low executive network activation (van der Meer et al., 2020).

Emotional Behaviors

Brain imaging studies of emotions often show activity in motor regions (Saarimäki et al., 2016, 2018). These patterns probably reflect the emotion-related behaviors that are automatically activated and inhibited in the observer. Moreover, behavioral changes are an essential part of the emotional state and emotional experience (Sander et al., 2018). The observer’s emotional behaviors include the same behaviors as listed earlier for emotions portrayed by the characters: vocal characteristics, eye gaze, facial behaviors, and whole-body behaviors (Mauss and Robinson, 2009). Following Mauss and Robinson (2009), I will use the term ‘behavior’ instead of ‘expression’, as the term ‘expression’ implies that emotions trigger specific behaviors, while the literature is inconsistent on this point (Barrett et al., 2019).

While brain imaging setups restrict most explicit emotional behaviors, the few studies measuring emotional behaviors have focused on changes in facial behaviors (Sawahata et al., 2013; Chang et al., 2021). For instance, Sawahata et al. (2013) showed that facial motion related to amusement during comedy film viewing could be decoded from motor, occipital, temporal, and parietal regions. Furthermore, Chang et al. (2021) showed that changes in facial behaviors were reflected in the activity of ventromedial prefrontal cortex.

Naturalistic stimuli are not interactive, implying less need for communicative behaviors. However, it is possible that the brain activity patterns measured with affective stimuli also reflect automatic emotional behaviors and their inhibition, as suggested by the automatic facial behaviors evoked during emotional movie viewing (Chang et al., 2021). For instance, displaying and observing facial behaviors activate partly overlapping brain regions (Volynets et al., 2020). Similarly, observing actions in movies and in real life automatically activates overlapping motor regions (Nummenmaa et al., 2014c; Smirnov et al., 2017).

Affective Dimensions: Valence and Arousal

Dynamic ratings of experienced valence and arousal serve as emotion features in several studies (Nummenmaa et al., 2012, 2014b; Smirnov et al., 2019). Valence and arousal refer to the dimensions of pleasantness–unpleasantness and calm–excited, respectively, observed initially in studies comparing emotional experiences (Russell, 1980). However, valence might not be a unitary concept as previously thought, and it might be found and evaluated at different processing levels (Man et al., 2017). For instance, representations of valence and arousal in pictures and movies have been found in lower (LOC; Chan et al., 2020) and higher areas along the processing hierarchy (OFC; Chikazoe et al., 2014). In particular, valence-related evaluations can focus on appraisal-level stimulus features, on interoceptive sensations, or on the emerging emotional experience resulting from the integration of multiple functional components. Similarly, the unitary concept of arousal has been questioned (Sander, 2013): arousal can refer to, for instance, bodily changes such as muscle tension or to cortical states of vigilance (e.g., Borchardt et al., 2018). Thus, arousal is closely linked with interoceptive variation during emotional stimuli. Therefore, valence and arousal are best understood as broad dimensions, and variation in them might be linked to emotional processing in different functional components.

Valence has been associated with activity in a wide range of regions, including subcortical regions, cingulate cortex, insula, and most cortical areas ranging from early sensory areas to areas associated with higher-level processing (Figures 2, 3 and Supplementary Tables 1, 2; Wallentin et al., 2011; Nummenmaa et al., 2012, 2014b; Trost et al., 2015; Smirnov et al., 2019; Chan et al., 2020; Gruskin et al., 2020). Such widespread activity further suggests that the experienced valence ratings reflect several changes in underlying components, leading to an umbrella label of valence. Supporting this view, subcortical responses including decreases in amygdala and caudate activity during music were driven mainly by low-level, energy-related musical features, including root mean square and dissonance (Trost et al., 2015). Besides low-level stimulus features, valence also correlates with other emotion features. Especially, experienced negative valence during emotional stories correlates with increased heart rate and respiration rate; however, this effect can be difficult to distinguish from arousal, as valence and arousal time series in this study were highly correlated (Nummenmaa et al., 2014b).
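
The difficulty of separating valence from arousal when their time series are highly correlated can be illustrated with a toy example. The sketch below uses synthetic data only: the variable names, the effect sizes, and the assumption that heart rate is driven solely by arousal are all illustrative and are not taken from Nummenmaa et al. (2014b). It shows that a raw correlation between negative valence and heart rate can largely disappear once arousal is regressed out of both.

```python
import numpy as np

def partial_corr(x, y, z):
    """Correlation between x and y after regressing z (plus an intercept) out of both."""
    Z = np.column_stack([np.ones_like(z), z])
    rx = x - Z @ np.linalg.lstsq(Z, x, rcond=None)[0]
    ry = y - Z @ np.linalg.lstsq(Z, y, rcond=None)[0]
    return float(np.corrcoef(rx, ry)[0, 1])

# Synthetic rating and physiology time series (assumed values, for illustration only).
rng = np.random.default_rng(0)
n_timepoints = 300
arousal = rng.standard_normal(n_timepoints)
# Negative valence is constructed to be strongly collinear with arousal.
negative_valence = 0.9 * arousal + 0.3 * rng.standard_normal(n_timepoints)
# In this toy world, heart rate depends on arousal only.
heart_rate = 1.0 * arousal + 0.5 * rng.standard_normal(n_timepoints)

r_raw = float(np.corrcoef(negative_valence, heart_rate)[0, 1])
r_partial = partial_corr(negative_valence, heart_rate, arousal)
print(f"raw r = {r_raw:.2f}, partial r (arousal removed) = {r_partial:.2f}")
```

With real ratings, the direction of dependence is unknown, so a small partial correlation would only indicate that the two dimensions cannot be told apart in that dataset, not which of them drives the physiological change.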

Experienced arousal has been linked with widespread brain activity spanning most of the brain and, especially, sensory and attention-related brain regions (Figures 2, 3 and Supplementary Tables 1, 2; Wallentin et al., 2011; Nummenmaa et al., 2012, 2014b; Trost et al., 2015; Smirnov et al., 2019; Chan et al., 2020; Gruskin et al., 2020). Similarly to valence, low-level stimulus features can drive arousal. For instance, the correlation between experienced high arousal and activity in subcortical (amygdala, caudate nucleus) and limbic (insula) regions was driven by low-level, energy-related features in music (Trost et al., 2015). In line with this, physiological arousal during movies correlates both with experienced arousal and with activity in the salience network, linking arousal to detection of emotionally relevant stimulus segments (Young et al., 2017). Arousal also varies with autonomic nervous system activity, supporting the role of arousal as a physiological component (Mauss and Robinson, 2009). Subjectively rated arousal peaks coincide with increased heart rate during movie viewing (Golland et al., 2014; Young et al., 2017). However, experienced arousal correlated only with respiration rate and not with heart rate when using emotional stories with neutral prosody (Nummenmaa et al., 2014b). Furthermore, continuous ratings of experienced arousal are associated with increased activity in somatosensory and motor cortices and activity in frontal, parietal, and temporal regions and precuneus (Smirnov et al., 2019).

Affective dimensions also dynamically modulate functional networks. In a study using positive, negative, and neutral stories, higher experienced emotional arousal and negative valence were correlated with widespread increases in the connectivity of frontoparietal, limbic (insula, cingulum), and fronto-opercular (motor cortices, lateral prefrontal cortex) regions for valence and those of subcortical, cerebellar and frontocortical regions for arousal, while connectivity changes due to positive valence and low arousal were minor and involved mostly subcortical regions (Nummenmaa et al., 2014b).

Taken together, affective dimensions including valence and arousal are linked to widespread activity changes across the brain. Thus, parsing their effects in specific functional components by extracting and modeling other, lower-level emotion features is necessary for understanding what aspect of emotional processing the dynamic ratings of valence and arousal are measuring.

Emotion Categories

Besides affective dimensions, another option for directly extracting the emotional experiences is to collect ratings of experienced intensity for various emotion categories, such as joy or fear. Based on previous results in multivariate pattern recognition studies, experienced emotion categories modulate brain activity across a wide range of regions (Saarimäki et al., 2016, 2018). Furthermore, if the experience of emotions emerges from the integration of activity across functional components, activation in component-specific regions might also follow the variation in experience. Thus, compared to other emotion features, experienced emotions should show modulations in multiple brain regions. For instance, time-series of experienced joy might correlate with lower-level feature time series such as brightness. Thus, the activation patterns we see during episodes of joy might also reflect lower-level sensory processing.

Most studies with categorical emotions have focused on modeling the dynamic variation of intensity for one emotion category at a time. Thus, the stimulus materials in affective neuroimaging studies have often targeted one emotion category (e.g., horror movies to elicit fear in Hudson et al., 2020; comedy clips to elicit amusement in Iidaka, 2017; or separation scenes to elicit sadness in Schlochtermeier et al., 2017). Emotion categories that have been studied with naturalistic stimuli include amusement (Moran et al., 2004; Sawahata et al., 2013; Iidaka, 2017; Tu et al., 2019), sadness (Goldin et al., 2005; Schlochtermeier et al., 2017; Sachs et al., 2020), enjoyment (Sachs et al., 2020), fear (Hudson et al., 2020), suspense (Naci et al., 2014; Lehne et al., 2015), and anger (Jacob et al., 2018). Overall, as expected, categorical emotions lead to widespread brain activity and connectivity changes similarly to valence and arousal (see Figures 2, 3 and Supplementary Tables 1, 2). The emotion-specific differences might result from differential activation in underlying functional components, which results in differences in the consciously experienced emotion.

The experienced intensity of multiple emotion categories has recently been modeled simultaneously, allowing the investigation of shared and unique responses across categories. For instance, Lettieri et al. (2019) extracted ratings of experienced happiness, sadness, disgust, surprise, anger, and fear during a full-length movie. Associations with rating time series were found in frontal regions, motor areas, temporal and occipital areas, and especially in temporo-parietal junction, which showed peak activation across all emotion categories. Temporo-parietal junction has been identified as a hub for various types of social processing in movies (Lahnakoski et al., 2012). Accordingly, the categorical emotion ratings in Lettieri et al. (2019) were mainly correlated with social, other-directed emotions portrayed by the characters.

Interestingly, neural correlates of experienced emotion categories might vary depending on the content of the movie. Raz et al. (2014) found that unfolding sadness-related events during a movie led to correlations between limbic network and sadness ratings, while sadness-related discussions of future events in a movie led to correlations between theory-of-mind network and sadness ratings. This demonstrates the value in carefully evaluating and modeling the content of stimuli.

Dimensional emotion theories posit that emotion categories can be represented along a few dimensions. Thus, if dimensions such as valence and arousal are enough for explaining the variance in emotional experiences, collecting data from multiple emotion categories might not bring additional value. To test this, Horikawa et al. (2020) compared a dimensional model (14 features representing affective dimensions) and a categorical model (34 features representing the intensity of emotion categories). They found that the categorical model explained the variance in brain activity better than the dimensional model.
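
The logic of such a model comparison can be sketched with a cross-validated encoding model. The example below is a minimal illustration on synthetic data and is not the pipeline of Horikawa et al. (2020): the ridge penalty, the fold structure, the number of simulated voxels, and the helper names `ridge_fit` and `heldout_accuracy` are all assumptions. The synthetic responses are deliberately generated from the categorical features, with the dimensional features defined as a lossy linear projection of them, so the outcome is built in; the point is only to show how two feature spaces are scored on held-out data.

```python
import numpy as np

def ridge_fit(X, Y, alpha):
    """Closed-form ridge regression weights for (centered) X and Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

def heldout_accuracy(X, Y, alpha=1.0, n_folds=5):
    """Mean correlation between predicted and measured held-out responses."""
    n_samples = X.shape[0]
    # Contiguous folds, so that neighboring time points stay in the same fold.
    folds = np.array_split(np.arange(n_samples), n_folds)
    scores = []
    for test_idx in folds:
        train = np.ones(n_samples, dtype=bool)
        train[test_idx] = False
        mu_x, mu_y = X[train].mean(axis=0), Y[train].mean(axis=0)
        W = ridge_fit(X[train] - mu_x, Y[train] - mu_y, alpha)
        pred = (X[test_idx] - mu_x) @ W + mu_y
        for v in range(Y.shape[1]):
            scores.append(np.corrcoef(pred[:, v], Y[test_idx, v])[0, 1])
    return float(np.mean(scores))

# Synthetic data (all sizes except 34 and 14 are arbitrary assumptions).
rng = np.random.default_rng(1)
n_timepoints, n_categories, n_dimensions, n_voxels = 400, 34, 14, 20
categories = rng.standard_normal((n_timepoints, n_categories))
# Dimensional features as a lossy projection of the categorical features.
dimensions = categories @ rng.standard_normal((n_categories, n_dimensions))
brain = categories @ rng.standard_normal((n_categories, n_voxels))
brain = brain + 2.0 * rng.standard_normal((n_timepoints, n_voxels))

acc_categorical = heldout_accuracy(categories, brain)
acc_dimensional = heldout_accuracy(dimensions, brain)
print(f"categorical: {acc_categorical:.2f}, dimensional: {acc_dimensional:.2f}")
```

Because the models are scored on held-out data, the larger number of features in the categorical model does not by itself guarantee a higher score, which is why out-of-sample prediction is a reasonable basis for comparing feature spaces of different sizes.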

Taken together, the widespread brain activity changes associated with emotion categories support their role in integrating activation across functional components. In particular, studies with more fine-grained analysis between experienced and portrayed emotions (Lettieri et al., 2019) and parsing the same emotion category into different appraisals (Raz et al., 2014) suggest that more detailed modeling of stimulus and observer features is necessary for understanding how the category-specific brain activity emerges.

Future Directions

Temporal Dynamics of Emotion Features

Emotions are momentary by definition (see, e.g., Frijda, 2009; Mulligan and Scherer, 2012). Several emotion theories involve changes that evolve across time and components dynamically affecting each other (e.g., Scherer, 2009; Gross, 2015). Despite the growing theoretical interest in the dynamics of emotions, the empirical studies regarding the dynamics of neural circuitries in human neuroscience remain sparse (for a review, see Waugh et al., 2015). Yet, the few studies that have extracted and directly compared time series from multiple brain regions suggest that different functional components might have different time-scales during emotional stimulation (Sawahata et al., 2013).

The time-varying feature time series have the potential of informing us regarding the neural dynamics of emotions. The two most prominent sources of emotion dynamics are their degrees of explosiveness (i.e., profiles having a steep vs. a gentle start) and accumulation (i.e., profiles increasing over time vs. going back to baseline; Verduyn et al., 2009). Similarly, movies elicit two types of affective responses: involuntary, automatic reflexive responses that produce intense autonomic responses and grab our attention, and more cognitively nuanced emotional reactions (Carroll and Seeley, 2013). In a recent study, Hudson et al. (2020) modeled acute and sustained fear separately. They found differences between brain regions underlying acute (explosive) and sustained (accumulated) fear. Sustained fear led to increased activity in sensory areas while activity in interoceptive regions decreased. Acute fear led to increased activity in subcortical and limbic areas, including the brainstem, thalamus, amygdala, and cingulate cortices. Dynamic approaches have also proven useful in detecting the brain regions and networks responsible for processing at consecutive stages of evolving disgust (Nanni et al., 2018; Pujol et al., 2018), sadness (Schlochtermeier et al., 2017), and humor (Sawahata et al., 2013). For instance, using the temporal dynamics of humor ratings, predicting the upcoming humor events on a volume-by-volume basis was possible before they happened (Sawahata et al., 2013), and cumulative viewing of sad movies increased activation in midline regions including anterior and posterior cingulate and medial prefrontal cortex (Schlochtermeier et al., 2017). Thus, parsing the functional components using emotion feature models allows investigation of temporal variation between components, and opens new possibilities for naturalistic neuroimaging of emotions.
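
To make the notions of explosiveness and accumulation concrete, the sketch below computes two simple proxies from an intensity profile. These proxies are assumptions chosen for illustration and are not the operationalization used by Verduyn et al. (2009) or Hudson et al. (2020): explosiveness is approximated by the steepest rise within a hypothetical onset window, and accumulation by the difference between the mean intensity in the second and first halves of the episode. The two example profiles are synthetic.

```python
import numpy as np

def profile_dynamics(intensity, onset_window=5):
    """Return (explosiveness, accumulation) proxies for one intensity profile.

    explosiveness: largest sample-to-sample increase within the onset window.
    accumulation:  mean of the second half minus mean of the first half.
    """
    intensity = np.asarray(intensity, dtype=float)
    explosiveness = float(np.max(np.diff(intensity[: onset_window + 1])))
    half = len(intensity) // 2
    accumulation = float(intensity[half:].mean() - intensity[:half].mean())
    return explosiveness, accumulation

# Steep start followed by a return toward baseline (an "acute" profile).
acute = np.concatenate([[0.0], np.exp(-np.arange(19) / 4.0)])
# Gentle start that keeps increasing over time (a "sustained" profile).
sustained = np.linspace(0.0, 1.0, 20)

acute_expl, acute_acc = profile_dynamics(acute)
sustained_expl, sustained_acc = profile_dynamics(sustained)
print(f"acute:     explosiveness={acute_expl:.2f}, accumulation={acute_acc:.2f}")
print(f"sustained: explosiveness={sustained_expl:.2f}, accumulation={sustained_acc:.2f}")
```

Descriptors of this kind could in principle be computed per episode from continuous ratings and then used to group episodes or to build separate regressors, although the appropriate window lengths would depend on the stimulus and the sampling rate of the ratings.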