Face-sensitive processes one hundred milliseconds after picture onset

- 1 School of Psychology, University of Wales, Bangor, UK

- 2 Department of Technology, Universitat Pompeu Fabra, Barcelona, Spain

- 3 Faculty of Psychology, Universitat de Barcelona, Barcelona, Spain

- 4 Laboratory of Experimental Neuropsychology, Neuropsychology Unit, Department of Neurology, Geneva University Hospitals, Geneva, Switzerland

The human face is the most studied object category in visual neuroscience. In a quest for markers of face processing, event-related potential (ERP) studies have debated whether two peaks of activity – P1 and N170 – are category-selective. Whilst most studies have used photographs of unaltered images of faces, others have used cropped faces in an attempt to reduce the influence of features surrounding the “face–object” sensu stricto. However, results from studies comparing cropped faces with unaltered objects from other categories are inconsistent with results from studies comparing whole faces and objects. Here, we recorded ERPs elicited by full front views of faces and cars, either unaltered or cropped. We found that cropping artificially enhanced the N170 whereas it did not significantly modulate P1. In a second experiment, we compared faces and butterflies, either unaltered or cropped, matched for size and luminance across conditions, and within a narrow contrast bracket. Results of Experiment 2 replicated the main findings of Experiment 1. We then used face–car morphs in a third experiment to manipulate the perceived face-likeness of stimuli (100% face, 70% face and 30% car, 30% face and 70% car, or 100% car) and the N170 failed to differentiate between faces and cars. Critically, in all three experiments, P1 amplitude was modulated in a face-sensitive fashion independent of cropping or morphing. Therefore, P1 is a reliable event sensitive to face processing as early as 100 ms after picture onset.

Introduction

The human face is probably the most biologically significant stimulus encountered by humans in the environment because it provides critical information about other individuals (e.g., identity, age, sex, mood, direction of attention, intention, etc.). One fundamental question in visual neuroscience is whether or not the human ability to process face information relies on specific neural mechanisms qualitatively distinct from those involved in the perception of other classes of visual stimuli. A number of event-related potential (ERP) and magnetoencephalography (MEG) studies have been carried out to determine the time-course of category-selective¹ effects during visual object perception and recognition. A particular peak of ERPs, the N170, which has a latency of ∼170 ms after stimulus onset and is characterized by a vertex positive and bilateral temporal negative deflection (Bentin et al., 1996; Linkenkaer-Hansen et al., 1998), and its magnetic equivalent, the M170 (Liu et al., 2002; Xu et al., 2005), have been frequently reported as face-selective in the literature. In particular, it has been claimed that no stimulus category other than the human face elicits negativities as pronounced as faces in the 140- to 180-ms time-range after stimulus presentation (Itier and Taylor, 2004).

On the other hand, the P1, a peak with a latency of 100 ms, has also been suggested as a category-sensitive peak, albeit by a minority of authors (Herrmann et al., 2005; Thierry et al., 2007). Although P1 category-sensitivity has been repeatedly challenged (Bentin et al., 2007b; Rossion and Jacques, 2008; Kuefner et al., 2010, but see also Thierry et al., 2007b; Dering et al., 2009), converging evidence from MEG, ERP, and transcranial magnetic stimulation (TMS) has highlighted face-sensitive processes occurring around 100 ms post-stimulus onset (Liu et al., 2002; Herrmann et al., 2005; Pitcher et al., 2007). In particular, double TMS pulses have been shown to disrupt visual processing selectively for faces when stimulation is delivered over the occipital face area (OFA) 60 and 100 ms after picture presentation, but no measurable disruption is observed for double TMS pulses applied at later latencies (Pitcher et al., 2007), nor when applied to nearby extrastriate areas.

Studies of intracranial recordings in patients with implanted electrodes have also yielded inconsistent results. Whilst face-selective responses from the inferior temporal lobe have been recorded within 200 ms of stimulus onset (Allison et al., 1994, 1999), other studies have suggested face-sensitive responses as early as 50 ms after stimulus onset (Seeck et al., 1997, 2001), similar to some ERP studies (Braeutigam et al., 2001; Mouchetant-Rostaing and Giard, 2003). However, cortical activity in pharmacoresistant epileptic individuals can be affected by cognitive impairment after repeated seizures, anticonvulsant medication consumption, or functional reorganization subsequent to the presence of epileptic foci, making comparisons of intracranial recordings to ERPs only tentative (Bennett, 1992; Allison et al., 1999; Liu et al., 2002; Krolak-Salmon et al., 2004).

A number of ERP studies have measured the sensitivity of the N170 peak to various stimulus manipulations in an attempt to determine which stage(s) of visual structural encoding are functionally reflected by the modulation of its amplitude. For instance, the N170 is sensitive to vertical orientation (Bentin et al., 1996), isolation of internal features (Bentin et al., 1996), scrambled facial features (George et al., 1996) as well as contrast (Itier and Taylor, 2002), spatial frequency (Goffaux et al., 2003), and gaussian noise (Jemel et al., 2003). Since the N170 component is affected by the lack of internal (eyes, nose, mouth) and external (hair, ears, neck) features, it is likely to reflect – at least in part – configurational analysis of visual objects (Eimer, 2000b). Surprisingly, the sensitivity of the N170 to the external integrity of faces has rarely been investigated. Moreover, many studies of visual object categorization have compared face and object perception using cropped faces (i.e., faces without hair, ears, or neck) and “intact” objects (Goffaux et al., 2003; Rossion et al., 2003; Kovacs et al., 2006; Jacques and Rossion, 2007; Righart and de Gelder, 2007; Rousselet et al., 2007; Vuilleumier and Pourtois, 2007). Therefore, it is unclear whether differences between experimental conditions found earlier are indeed driven by categorical differences or artificially influenced by differences between experimental conditions in terms of stimulus integrity.

Why should stimulus integrity modulate N170 amplitude? Since eyes presented in isolation have been shown to elicit N170 as large as those elicited by pictures of complete faces, it may be that N170 is not sensitive to stimulus integrity. In fact, this result has led to the hypothesis that N170 may index the activity of an eye-detection system (Eimer, 1998). However, other studies have shown even greater N170 amplitude to isolated eyes (Bentin et al., 1996), which suggests that N170 may be increased in amplitude when stimulus integration is more demanding. Furthermore, the N170 is highly sensitive to stimulus interpretability. That is, the same object can elicit larger N170 amplitudes when interpreted as part of a face (e.g., two dots interpreted as dots or as eyes; Bentin and Golland, 2002).

Overall, because cropped faces and unaltered faces have often been used without distinction (Bentin et al., 2007b; Rossion, 2008; Rossion and Jacques, 2008; Zhao and Bentin, 2008; Anaki and Bentin, 2009), it is unknown whether differences between faces and other object categories may be affected by spurious differences in stimulus integrity. More specifically, a review paper by Rossion and Jacques (2008) has reported unpublished data as evidence against the findings of Thierry et al. (2007a). These results were based on faces and cars presented full front and repeated six times. Critically, the pictures of faces used were cropped but the pictures of cars were unaltered. Here, we investigated the effect of stimulus cropping and repetition to account for the discrepancies between the results obtained by Rossion and Jacques (2008) and those of Thierry et al. (2007a).

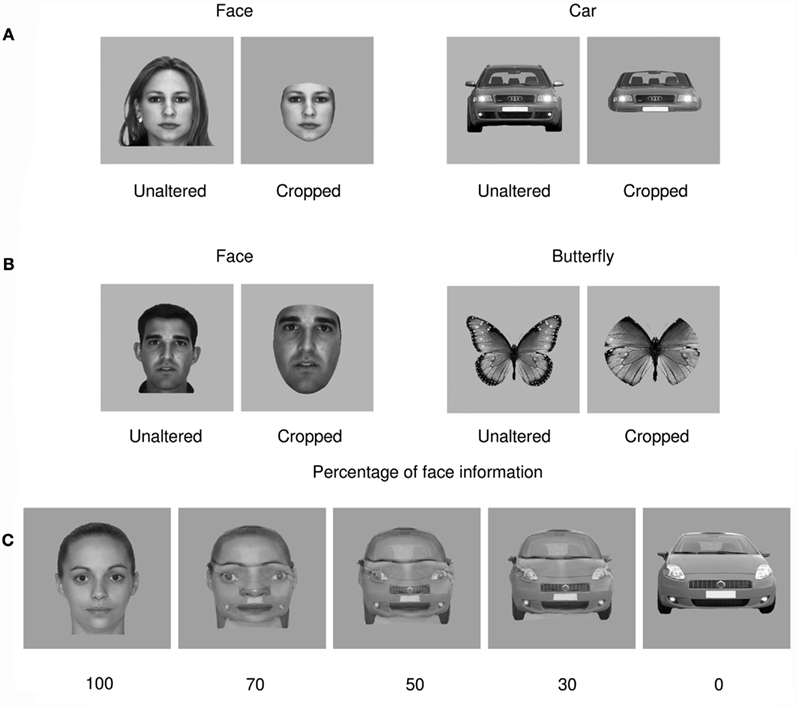

We presented participants with a stream of pictures featuring faces and cars (full front, symmetrical, centered, and of similar size within each condition). In the first block, all the stimuli were presented once complete and once cropped, i.e., faces without hair, ears, or neck and cars without rooftop, rear-view mirrors, or wheels (Figure 1A). In a second block, all stimuli used were repeated six times in order to test for potential repetition effects, since repetition is a factor inherent to previous studies on face categorization (e.g., Rossion and Jacques, 2008). This resulted in a within-participants 2 × 2 × 2 factorial design (face/car vs. cropped/unaltered vs. repetition/no repetition). Participants performed a forced binary choice categorization task. We predicted (a) an effect of cropping on N170 amplitude for faces, which would account for the discrepancy between results obtained by Rossion and Jacques (2008) and Thierry et al. (2007a), without a category effect for the comparison of unaltered faces and cars; (b) a significant category effect on P1 amplitude replicating previous results (Thierry et al., 2007a; Dering et al., 2009; Boehm et al., 2011); and (c) an increase in P1 amplitude, and/or delayed P1 latency, by cropping, since categorization is arguably more difficult when peripheral information is missing, and given that we previously observed P1 amplitude increase with task difficulty (Dering et al., 2009).

Figure 1. Examples of stimuli used in all experiments. (A) Example of a cropped face stimulus of the kind often used in experiments testing object categorization presented next to the unaltered source face and a similar example comparison for a car stimulus. (B) Examples of cropped and unaltered faces and butterflies after matching for luminance, contrast, and size. (C) Examples of progressive morphing between a face and a car stimulus. Note that 50% face–50% car morphs were used as target stimuli and not analyzed.

In a second experiment, we sought to discard the hypothesis that effects of cropping on P1 or N170 amplitude could be due to residual differences in stimulus size, luminance, or contrast between experimental conditions by matching pictures with regard to all of these characteristics. We took this opportunity to compare the processing of faces to that of a third category, butterflies, which have been investigated previously (Schweinberger et al., 2004; Thierry et al., 2007a). The second experiment therefore had a 2 (faces/butterflies) × 2 (cropped/unaltered) design and featured no significant difference in size or luminance between experimental conditions while keeping contrast variance within a narrow bracket² (Figure 1B). The predictions for Experiment 2 were the same as those for Experiment 1.

In a third experiment, we manipulated stimulus interpretability. Full front views of faces and cars were morphed to produce images that contained face and car information in various proportions: 100% face, 70% face–30% car, 50% face–50% car, 30% face–70% car, and 100% car (Figure 1C). The ambiguous 50% face–50% car condition was highlighted by a frame, required a response from participants, and was discarded at the analysis stage. This resulted in a 2 (face vs. car) × 2 (morphed vs. unaltered) design. Any component that is presumed to be face-sensitive was predicted to be significantly larger for face-like stimuli as compared to car-like stimuli.

Materials and Methods

Experiment 1

Participants

Twenty-two participants (mean age = 24.5, SD = 5.5, 15 females, 1 left-handed) with normal or corrected-to-normal vision gave written informed consent to participate in the experiment that was approved by the ethics committee of Bangor University.

Stimuli

Ninety-six images of full front faces were modified digitally so as to remove features considered peripheral to the face–object sensu stricto, i.e., hair, ears, and neck. Ninety-six images of full front cars were modified in a similar way by removing roof top, wing mirrors, and wheels. After cropping, all images were transposed onto a gray background (Figure 1). All images in each of the four groups generated (cars and faces, unaltered and cropped) were centered on the screen, scaled to fit a standard size template, and had the same orientation (thus reducing stimulus variability as much as possible; Thierry et al., 2007a). Cropping images resulted in slight variations in luminance (cropped faces 42.1 cd/m²; cropped cars 40.1 cd/m²; unaltered faces 34.9 cd/m²; unaltered cars 39.8 cd/m²) and contrast (cropped faces 0.7 cd/m²; cropped cars 1.5 cd/m²; unaltered faces 3.6 cd/m²; unaltered cars 3.5 cd/m²) between conditions. Luminance on the screen was measured using a Minolta CS-100 colorimeter and contrast values corresponded to the root mean square contrast over the whole image including background.
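As an illustration of the root mean square contrast mentioned above, the following minimal sketch computes it as the standard deviation of pixel intensities over the whole image, background included. The function name `rms_contrast`, the list-of-rows image format, and the toy images are assumptions made for this example; the values reported in the text were derived from on-screen measurements and the exact procedure may have differed.

```python
import math

def rms_contrast(image):
    """Return the RMS contrast of a grayscale image.

    `image` is a list of rows of intensity values (hypothetical format);
    RMS contrast is taken here as the population standard deviation of
    all pixel values, background included.
    """
    pixels = [value for row in image for value in row]
    mean = sum(pixels) / len(pixels)
    variance = sum((value - mean) ** 2 for value in pixels) / len(pixels)
    return math.sqrt(variance)

# Toy images (not data from the study): a uniform patch has zero contrast,
# a checkerboard alternating 30 and 50 deviates by 10 from its mean of 40.
uniform = [[40.0, 40.0], [40.0, 40.0]]
checker = [[30.0, 50.0], [50.0, 30.0]]
```

Because the background is included, enlarging the uniform gray area around an object lowers this measure even if the object itself is unchanged, which is one reason cropping can shift contrast between conditions.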

Procedure

In the first part of the experiment, stimuli were presented in a randomized order in four blocks of 96 trials such that each block featured 24 pictures from each of the four experimental conditions. In the second part of Experiment 1, conducted with the same participants, a selection of 16 images repeated six times each was presented in the same randomized fashion to test for a potential effect of repetition. All stimuli were presented for 200 ms within 8.6° of vertical and 8.7° of horizontal visual angle on a Viewsonic G90FB 19″ calibrated CRT monitor with a resolution of 1024 × 768, at a refresh rate of 100 Hz. Participants sat 100 cm from the monitor. Inter-stimulus interval was 1300 ms, and participants categorized each of the stimuli as face or car by pressing keys on a keyboard, a task shown to elicit ERP patterns similar to those of a one-back task (Dering et al., 2009). Response sides were counterbalanced between participants.
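The presentation parameters above can be cross-checked with a few lines of arithmetic. The sketch below converts the reported visual angles into on-screen extents at the 100 cm viewing distance, converts the 200 ms duration into refresh cycles at 100 Hz, and recomputes the trial count of the first part. All function and variable names are illustrative assumptions, not part of the original procedure.

```python
import math

def size_from_visual_angle(angle_deg, distance_cm):
    """On-screen extent (cm) subtending `angle_deg` at `distance_cm`."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)

def frames_for_duration(duration_ms, refresh_hz):
    """Number of refresh cycles needed to display a stimulus for `duration_ms`."""
    return round(duration_ms * refresh_hz / 1000.0)

# Values taken from the Procedure paragraph
height_cm = size_from_visual_angle(8.6, 100.0)   # vertical extent, about 15 cm
width_cm = size_from_visual_angle(8.7, 100.0)    # horizontal extent, about 15 cm
frames = frames_for_duration(200, 100)           # refresh cycles per stimulus

# Four blocks of 96 trials, 24 pictures per condition in each block
trials_part_one = 4 * 96
pictures_per_block = 4 * 24
```

The trial count is consistent with the stimulus set: 96 faces and 96 cars, each shown once unaltered and once cropped, give 384 trials.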

Experiment 2

Participants

Twenty participants (mean age = 22.1, SD = 3.7, 11 females, 1 left-handed) with normal or corrected-to-normal vision gave written informed consent to participate in the experiment that was approved by the ethics committee of Bangor University.

Stimuli

Eighty images of full front faces were modified as in Experiment 1, by removing features considered peripheral to the face–object. Eighty images of full front butterflies were modified in a similar way by cropping the wings to approximately half of their length using a circular mask. After cropping, the images were enlarged without distortion to fit the maximum x and y dimensions of their counterpart unaltered image, and then transposed onto a gray background (Figure 1). All images in each of the four groups generated (butterflies and faces, unaltered or cropped) were centered on the screen, matched for size, and had the same orientation (thus reducing stimulus variability as much as possible; Thierry et al., 2007a). Luminance was set at 42 cd/m², and contrast was 17.8 ± 2.2 cd/m². Luminance on the screen was measured using a Minolta CS-100 colorimeter and contrast values corresponded to the root mean square contrast over the whole image including background.

Procedure

All stimuli were presented for 200 ms within 8.6° of vertical and 8.7° of horizontal visual angle on a Viewsonic G90FB 19″ calibrated CRT monitor with a resolution of 1024 × 768, at a refresh rate of 100 Hz. Participants sat 100 cm from the monitor. Inter-stimulus interval was 1300 ms, and participants categorized each of the stimuli as a face or butterfly by pressing keys on a stimulus response box. Response sides were counterbalanced between participants. Stimuli were presented in a randomized order in 4 blocks of 160 trials such that all images in the experiment were presented twice.

Experiment 3

Participants

Eighteen participants (mean age = 19.8, SD = 1.99, 13 females, 0 left-handed) with normal or corrected-to-normal vision gave written consent to participate in the experiment that was approved by the ethics committee of Bangor University.

Stimuli

Forty images of full front neutral faces aged between 18 and 30 years old were obtained from the Productive Aging lab’s face database (Minear and Park, 2004). These images, centered on the screen, scaled to fit a standard size template, and with the same orientation, were transposed onto a uniform gray background. Forty pictures of full front faces were paired with 40 pictures of full front cars and transformed using a morphing algorithm (Sqirlz Morph 2.0) to produce a series of face–car morphs varying in the percentage of face information embedded in each image (Figure 1): 100% face, 70% face–30% car, 50% face–50% car, 30% face–70% car, and 100% car. The morphing procedure produced slight variations in luminance such that across all conditions average luminance was 37.2 ± 1 cd/m² (contrast 1.5 cd/m²). Luminance on the screen was measured using a Minolta CS-100 colorimeter and contrast values corresponded to the root mean square contrast over the whole image including background.

Procedure

Stimuli were presented, in a randomized order, for 500 ms within 8.8° of vertical and 8.5° of horizontal visual angle on a Viewsonic G90FB 19″ calibrated CRT monitor with a resolution of 1024 × 768, at a refresh rate of 100 Hz. Participants sat 100 cm from the monitor. Inter-stimulus interval was 1500 ms, allowing for the participant response. Each picture was presented six times throughout the experiment. Participants only responded to ambiguous target stimuli (50% face–50% car), which were presented in a distinctive black frame, by indicating whether the picture was perceived rather as a face or as a car. The task was a forced-choice binary task and response sides were counterbalanced between participants.

Event-related potentials

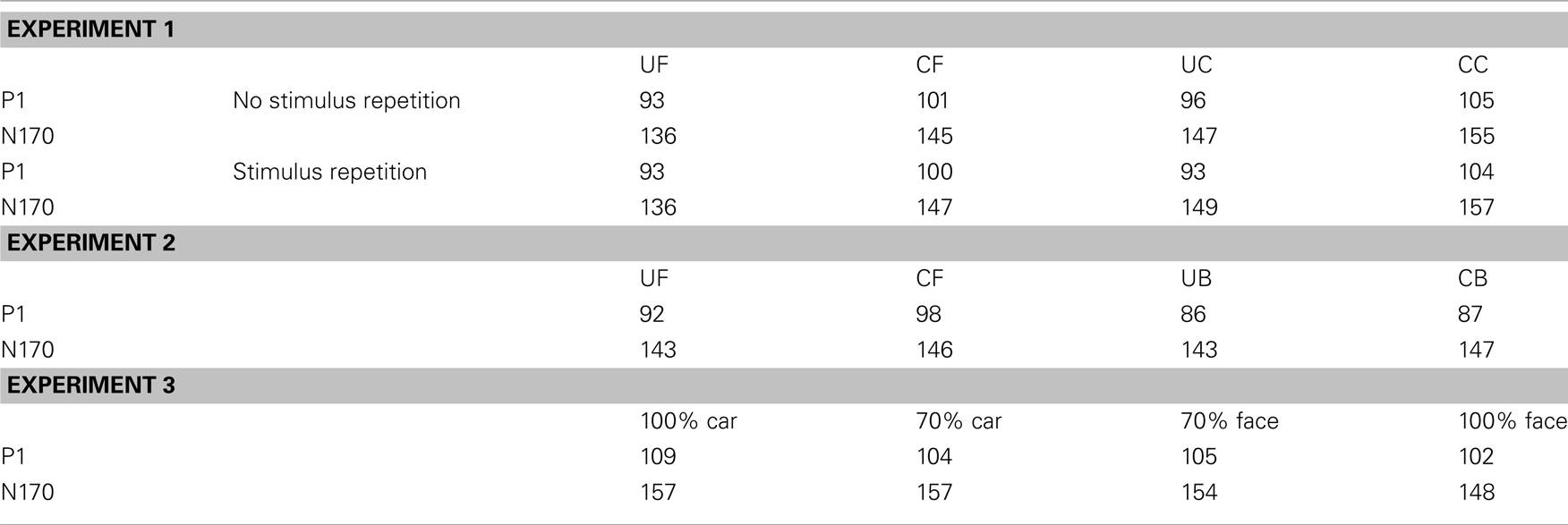

Using Cz as a reference, scalp activity was recorded using SynAmps2™ (Neuroscan, Inc., El Paso, TX, USA) amplifiers with a sampling rate of 1 kHz from 64 Ag/AgCl electrodes (Easycap™, Brain Products, Germany) distributed across the scalp according to the extended 10–20 system. Impedances were kept below 5 kΩ. The electroencephalogram was filtered on-line between 0.01 and 200 Hz and off-line with a low-pass zero phase shift digital filter set to 30 Hz (48 dB/octave slope). Eye-blink artifacts were mathematically corrected³ using a model blink artifact computed for each individual following the procedure recommended by Gratton et al. (1983). Epochs in which the signal exceeded ±75 μV were automatically discarded. EEG recordings were cut into epochs ranging from −100 to 500 ms relative to stimulus onset and averaged for each individual in all experiments according to the experimental conditions. Grand-averages were calculated after re-referencing individual ERPs to the common average reference. Mean amplitudes for each condition were analyzed at eight posterior occipital electrodes for Experiment 1. Global field power was calculated to guide classification of ERP components (Koenig and Melie-Garcia, 2010). Peak latencies were measured at the electrode of maximal amplitude in each condition and each participant. The P1 was identified as a positive peak occurring between 80 and 120 ms and analyzed at sites O1, O2, PO7, PO8, PO9, and PO10. Due to significant differences between latencies for conditions at the P1, mean amplitude analyses were conducted 20 ms around the peak of maximal activity for each condition of the experiment (Table 1). The N170 peaked between 120 and 200 ms at electrode sites P7, P8, PO7, PO8, PO9, and PO10. Mean amplitude analyses for the N170 were conducted 40 ms around the peak for each condition of the experiment (Table 1).

The data were subjected to repeated measures analyses of variance (ANOVAs) with three factors – category (face/car), alteration (unaltered/cropped), and electrode (six levels). A Greenhouse–Geisser correction was used where applicable. To demonstrate the magnitude of effects, partial eta squared (ηp²) is reported. In the analyses reported here, the electrode factor was systematically significant but such effects are not discussed since the focus of this paper was on mean peak amplitude differences at electrodes of predicted (and observed) maximal sensitivity. For a contribution addressing the issue of topographical comparisons, see Boehm et al. (2011).
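For readers who wish to check the reported effect sizes, partial eta squared can be recovered from an F statistic and its degrees of freedom using the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The sketch below applies it to two values reported in the Results; the function name is an illustrative assumption.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# Values reported for Experiment 1 (P1 analyses)
category_effect = partial_eta_squared(15.87, 1, 21)  # reported as 0.43
latency_effect = partial_eta_squared(15.4, 1, 21)    # reported as 0.423
```

This identity only holds for the effect and error terms of the same test, so it should be applied to each F value with its own degrees of freedom.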

For Experiments 2 and 3, P1 and N170 components peaked within the same time windows used for analysis in Experiment 1, as indicated by calculation of the global field power. P1 and N170 were examined at the same electrode sites as in Experiment 1, with mean amplitude analyses run 20 ms around each peak for P1 and 40 ms around each peak for N170 in each condition (Table 1). Experiment 2 was analyzed by repeated measures ANOVAs with three factors of category (face/butterfly), cropping (unaltered/cropped), and electrode (six levels). Experiment 3 had three factors of category (face/car), morphing (morphed images/normal images), and electrode (six levels). We used a 2 × 2 × 6 ANOVA in Experiment 3 because a one-way ANOVA would imply a “clean” perceptual continuum between the 0% face and 100% face conditions. Rather than a linear relationship between percentage of face/car information and P1 amplitude modulation, we expected morphing to add perceptual difficulty and therefore artificially boost P1 mean amplitude. Effect sizes (ηp²) are also reported where relevant.
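The measures described in this section can be sketched as follows: global field power as the spatial standard deviation across electrodes at each time point, peak latency as the extreme sample within a search window, and mean amplitude over a window centered on that peak. This is a minimal illustration only. It assumes that "20 ms around the peak" means a 20-ms window centered on the peak, and the function names and the synthetic waveform are hypothetical rather than taken from the analysis software actually used.

```python
import math

def global_field_power(maps):
    """Spatial standard deviation across electrodes at each time point.

    `maps` is a list of time points, each a list of electrode voltages (µV).
    """
    gfp = []
    for voltages in maps:
        mean = sum(voltages) / len(voltages)
        gfp.append(math.sqrt(sum((v - mean) ** 2 for v in voltages) / len(voltages)))
    return gfp

def peak_latency(waveform, times_ms, start_ms, end_ms, polarity=1):
    """Latency (ms) of the most positive (polarity=1) or most negative
    (polarity=-1) sample between start_ms and end_ms, inclusive."""
    candidates = [i for i, t in enumerate(times_ms) if start_ms <= t <= end_ms]
    best = max(candidates, key=lambda i: polarity * waveform[i])
    return times_ms[best]

def mean_amplitude_around(waveform, times_ms, peak_ms, width_ms):
    """Mean amplitude in a window of width_ms centered on peak_ms (assumption)."""
    half = width_ms / 2.0
    window = [v for v, t in zip(waveform, times_ms) if abs(t - peak_ms) <= half]
    return sum(window) / len(window)

# Synthetic example: 1 kHz sampling, epoch from -100 to 499 ms,
# with a positive deflection centered on 100 ms (not real data).
times_ms = list(range(-100, 500))
toy_p1 = [5.0 * math.exp(-((t - 100) / 20.0) ** 2) for t in times_ms]
p1_latency = peak_latency(toy_p1, times_ms, 80, 120)
p1_mean = mean_amplitude_around(toy_p1, times_ms, p1_latency, 20)
```

For the N170, the same functions would be called with `polarity=-1`, a 120 to 200 ms search window, and a 40-ms averaging window.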

Temporal segmentation

This analysis tracked scalp topographies that remain stable for periods of time in the order of tens to hundreds of milliseconds (Michel et al., 2001). These so-called microstates are thought to represent specific phases of neural processing (Lehmann and Skrandies, 1984; Brandeis and Lehmann, 1986; Michel et al., 1999, 2001). We identified the microstates using a hierarchical cluster analysis technique (Murray et al., 2008) to determine the segmented maps accounting for the greatest amount of variance in the ERP map series. The optimal number of segment maps explaining the greatest amount of variance was obtained using a cross-validation criterion (Pascual-Marqui et al., 1995; Pegna et al., 1997, 2004; Michel et al., 2001; Thierry et al., 2006, 2007a; Vuilleumier and Pourtois, 2007; Murray et al., 2008). Then, we calculated the statistical validity of maps extracted from grand-averages by determining the amount of variance explained by each map in the ERPs of each individual in each condition. Repeated measures ANOVAs were then performed on measures of explained amounts of variance to compare the statistical probability of each microstate explaining each experimental condition (Pegna et al., 1997, 2004; Thierry et al., 2006, 2007a; Murray et al., 2008).
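The step of quantifying how much variance a segmentation map explains in an individual's ERP can be illustrated with the global explained variance measure commonly used in microstate analysis: the squared spatial correlation between the template map and each data map, weighted by the squared global field power of the data. The sketch below is a simplified illustration under that assumption; the function names are hypothetical, and the software and exact fitting procedure used for the analyses reported here may differ.

```python
import math

def average_reference(voltages):
    """Subtract the mean across electrodes from each electrode."""
    mean = sum(voltages) / len(voltages)
    return [v - mean for v in voltages]

def spatial_correlation(map_a, map_b):
    """Correlation between two scalp maps after average referencing."""
    a = average_reference(map_a)
    b = average_reference(map_b)
    numerator = sum(x * y for x, y in zip(a, b))
    denominator = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return numerator / denominator if denominator else 0.0

def global_explained_variance(template, data_maps):
    """Share of variance in `data_maps` (list of maps over time) explained
    by `template`. Squaring the correlation ignores map polarity, which is
    a simplification made for this sketch."""
    weighted = 0.0
    total = 0.0
    for voltages in data_maps:
        centered = average_reference(voltages)
        gfp_squared = sum(v * v for v in centered) / len(centered)
        r = spatial_correlation(template, voltages)
        weighted += gfp_squared * r * r
        total += gfp_squared
    return weighted / total if total else 0.0

# Toy three-electrode maps (not real data)
template = [1.0, -1.0, 0.0]
same_shape = [[2.0, -2.0, 0.0], [4.0, -4.0, 0.0]]  # scaled copies of the template
orthogonal = [[1.0, 1.0, -2.0]]                    # spatially uncorrelated map
```

Values of this kind, computed per participant and condition, are what would then enter the repeated measures ANOVAs described above.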

Results

Cropping Faces Artificially Increases N170 Amplitude but Does Not Affect P1 Category-sensitivity

In Experiment 1, the mean reaction time was 381 ± 77 ms across all conditions and mean accuracy was 92 ± 6.6%. Neither reaction times nor accuracy was affected by stimulus category or cropping (all ps > 0.05).

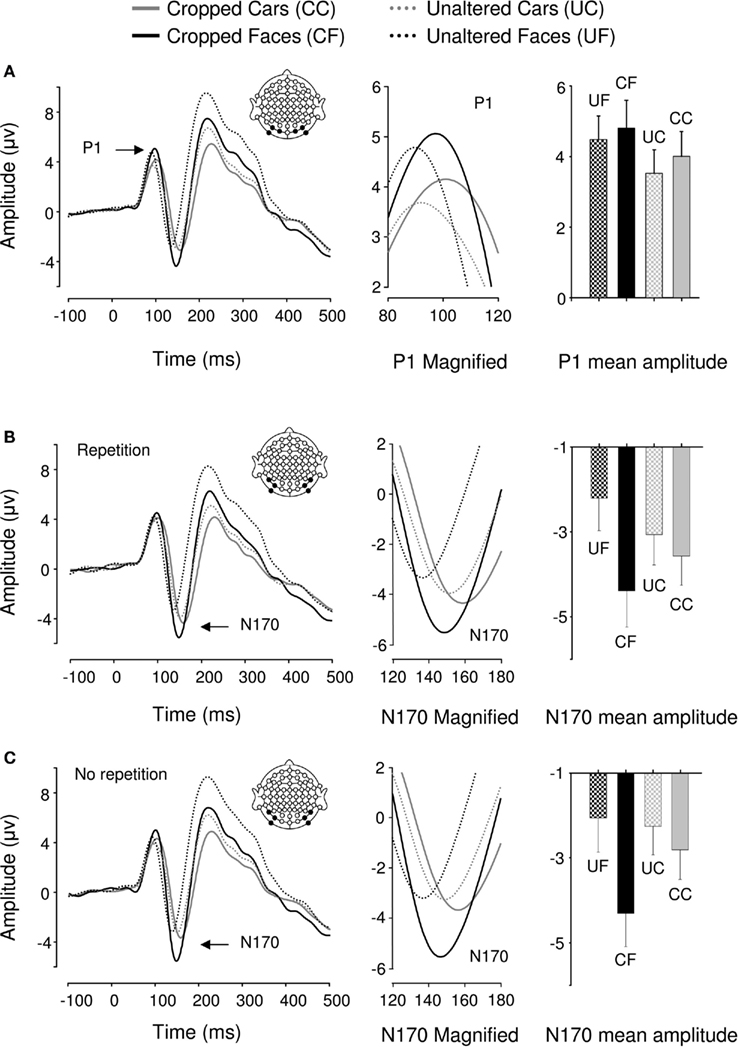

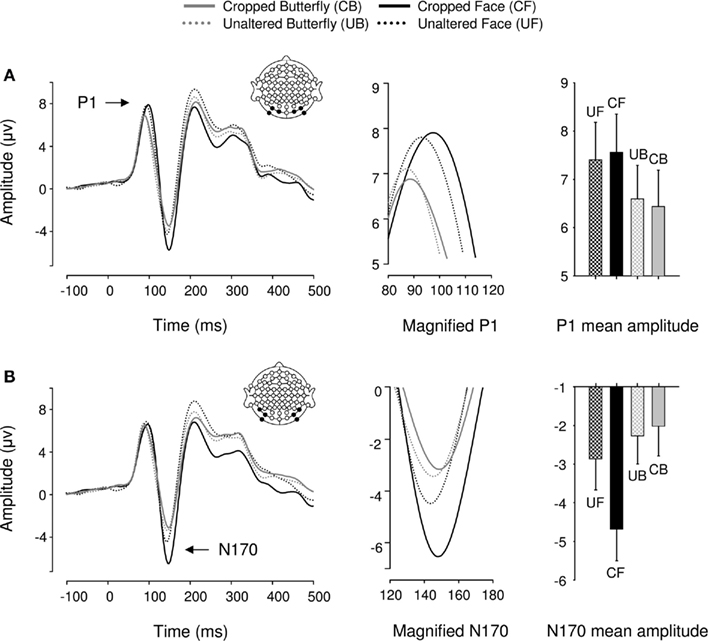

Event-related potentials for all 22 participants displayed a typical P1–N1–P2 complex in all experimental conditions (Figure 2). Analysis of P1 amplitudes revealed a pattern of response sensitive to face information present in the stimulus. Repeated measures ANOVA over 6 posterior occipital electrodes revealed no main effect of repetition [F(1,21) = 2.482, p > 0.05] or interactions [p > 0.1] affecting P1 mean amplitude, allowing data for the unrepeated and repeated blocks to be combined for further analysis. There was a main effect of object category on P1 mean amplitudes [F(1,21) = 15.87, p < 0.05, ηp² = 0.43], showing that the P1 elicited by faces was significantly larger than the P1 elicited by cars, but there was no effect of cropping on P1 mean amplitude [F(1,21) = 2.507, p > 0.05] and, critically, no interaction between the two factors [F(1,21) = 0.621, p > 0.05]. Conversely, P1 peak latency was significantly delayed by cropping [F(1,21) = 15.4, p < 0.05, ηp² = 0.423] but was not affected by any other experimental factor (all ps > 0.1).

Figure 2. Grand averaged event-related brain potentials recorded in the four conditions of Experiment 1. Waveforms depict a linear derivation of the electrodes used in the statistical analysis for the P1 and N170, respectively. (A) From left to right: linear derivation of electrodes O1, O2, PO7, PO8, PO9, and PO10 regardless of stimulus repetition (factor non-significant), magnification of the P1, and bar plot of P1 mean amplitudes. (B) From left to right: linear derivation of electrodes P7, P8, PO7, PO8, PO9, and PO10 in the experimental block featuring stimulus repetitions, magnification of the N170, and N170 mean amplitudes. (C) Linear derivation of electrodes P7, P8, PO7, PO8, PO9, and PO10 in the experimental block without stimulus repetition, magnification of the N170, and N170 mean amplitudes. Error bars depict SEM.

We found a main effect of repetition on N170 mean amplitude [F(1,21) = 7.13, p < 0.05, ηp² = 0.253]. Stimulus repetition increased N170 amplitude and this effect was greater for cars than faces as indicated by a significant repetition by category interaction [F(1,21) = 14.04, p < 0.05, ηp² = 0.401]. The repetition factor did not interact with any other factors. As predicted, object category failed to modulate N170 mean amplitude [F(1,21) = 0.799, p > 0.1; Figures 2B,C]. However, there was a main effect of cropping [F(1,21) = 43.001, p < 0.0001, ηp² = 0.672], such that cropped stimuli elicited greater N170 mean amplitudes than unaltered stimuli. Also, cropping and object category interacted [F(1,21) = 43.37, p < 0.0001, ηp² = 0.675], showing that the difference in N170 mean amplitude between the cropped and unaltered conditions was greater for faces than cars (Figures 2B,C). Bonferroni-corrected pair-wise comparisons between cropped and unaltered objects were significant both in the case of faces (p < 0.0001) and in that of cars (p < 0.001). It is noteworthy that the contrast often reported in the literature, i.e., cropped faces vs. unaltered cars, was highly significant (p < 0.0001)⁴.

There was no main effect of repetition on N170 latencies [F(1,21) = 0.54, p > 0.1]. Repetition interacted with category [F(1,21) = 8.401, p < 0.05, ηp² = 0.286], reducing latencies for repeated in comparison to unrepeated cars [F(1,21) = 6.53, p < 0.05, ηp² = 0.237]; however, this latency difference was only 2 ms. Repetition did not interact with any other factor [Fs(1,21) ≤ 1.85, ps ≥ 0.188]. N170 latency was significantly modulated by both cropping [F(1,21) = 255.72, p < 0.05, ηp² = 0.924, delayed for cropped as compared to unaltered stimuli] and category [F(1,21) = 123.82, p < 0.05, ηp² = 0.855, delayed for cars as compared to faces], and these factors interacted significantly [F(1,21) = 7.5, p < 0.05, ηp² = 0.263], showing that cropping had a greater influence on N170 latencies for faces than cars.

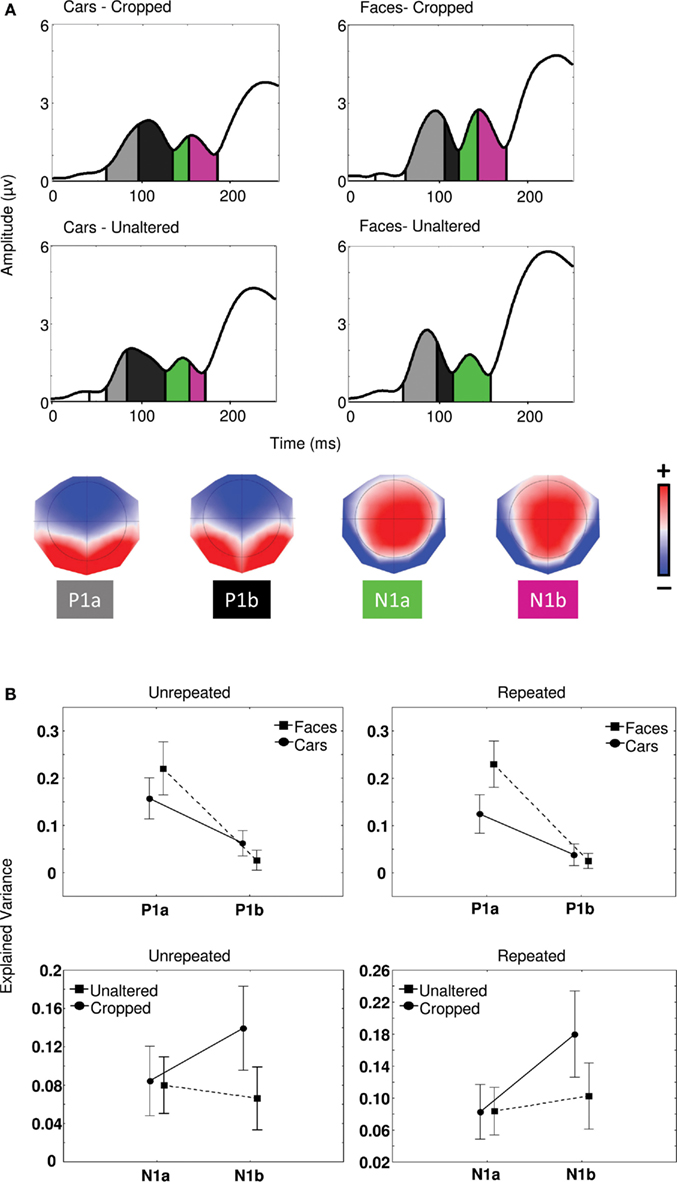

In order to determine the sensitivity of the P1 to object category and that of the N170 to cropping in Experiment 1, a segmentation analysis was performed on the map series elicited in each condition between 0 and 250 ms. This procedure identified two distinct maps for P1 (P1a and P1b) and two distinct maps for N170 (N1a and N1b). The statistical validity of the microstates was tested by evaluating the amount of variance explained by the maps issued from segmentation in each individual participant’s maps in each condition (see Materials and Methods) using a 2 (cropping) × 2 (category) × 2 (maps) repeated measures ANOVA (Figure 3A).

Figure 3. (A) Global field power waveforms in the four conditions of Experiment 1 segmented by microstate and associated topographies identified by the segmentation procedure (see Materials and Methods). Color scale indicates potentials and ranges from −0.17 to +0.17 μV. (B) Proportion of explained variance by maps P1a and P1b regarding object categories and maps N1a and N1b in relation to cropped and unaltered images.

For unrepeated and repeated blocks separately, category by map interactions [Fs(1,21) > 15.5, ps < 0.001] and univariate tests for planned comparisons confirmed that P1a explained a significantly greater proportion of variance for faces than cars [Fs(1,21) > 11.4, ps < 0.01]. Conversely, map P1b better explained individual maps for cars than faces in the unrepeated block only [F(1,21) = 12.0, p < 0.001]. We found no effect of cropping on P1 microstates (all ps > 0.05). In sum, as predicted, microstates in the P1 range patterned with category differences rather than repetition or cropping.

In the N1 range, for both repeated and unrepeated blocks, we found significantly different microstates between cropping conditions [Fs(1,21) > 6.1, ps < 0.05], such that map N1b better explained variance for cropped than unaltered conditions, whereas map N1a failed to distinguish between any of the experimental conditions [ps > 0.1] (Figure 3B). Univariate tests for planned comparisons confirmed that cropping × map interactions were due to map N1b explaining individual maps for cropped stimuli significantly better than individual maps for unaltered stimuli [F(1,21) = 20.3, p < 0.001], while N1a produced no difference [F(1,21) = 0.01, p > 0.05].

N170 Cropping Effects are Not Driven by Low-Level Differences between Experimental Conditions

In Experiment 2, the mean reaction time was 399 ± 54 ms across all conditions and mean accuracy was 95 ± 3.2%. Accuracy was not affected by either stimulus category or cropping (all ps > 0.05). Reaction times differed significantly for category [F(1,19) = 5.3, p < 0.05, ηp² = 0.22] and cropping [F(1,19) = 11.57, p < 0.05, ηp² = 0.38], and these factors interacted [F(1,19) = 13.6, p < 0.05, ηp² = 0.42]. Overall, reaction times were slower to faces than butterflies, with unaltered faces producing the largest delay.

Event-related potentials for all 20 participants displayed a typical P1–N1–P2 complex in all experimental conditions (Figure 4). We found a main effect of object category on P1 mean amplitudes [F(1,19) = 6.29, p < 0.05, ηp² = 0.25] such that faces elicited greater P1s than butterflies. Critically, with stimuli matched for size and luminance across conditions, there was no effect of cropping on P1 mean amplitude, as in Experiment 1 [F(1,19) = 0, p > 0.05]. Furthermore, object category and cropping did not interact [F(1,19) = 0.887, p > 0.05]. P1 peak latency was unaffected by object category but significantly delayed by cropping [F(1,19) = 8.44, p < 0.05, ηp² = 0.31] and the two factors interacted [F(1,19) = 4.17, p < 0.05, ηp² = 0.2] such that cropped faces delayed P1 latencies more than cropped butterflies (although this was a 2-ms difference).

Figure 4. Event-related brain potential results in the four conditions of Experiment 2. Waveforms depict linear derivations of the electrodes used for analysis of the P1 and N170, respectively. (A) From left to right: linear derivation of electrodes O1, O2, PO7, PO8, PO9, and PO10, magnification of the P1, and bar plot of P1 mean amplitudes. (B) From left to right: linear derivation of electrodes P7, P8, PO7, PO8, PO9, and PO10, magnification of the N170, and bar plot of N170 mean amplitudes. Error bars depict SEM.

In the N170 range, as expected from Experiment 1, cropped stimuli elicited greater N170 mean amplitudes than unaltered stimuli [F(1,19) = 21.61, p < 0.0001, ηp² = 0.53; Figure 4B]. Unexpectedly, however, faces elicited significantly greater N170 mean amplitudes overall [F(1,19) = 17.12, p < 0.05, ηp² = 0.47]. This effect was driven by cropping, as indicated by a significant category by cropping interaction [F(1,19) = 40.82, p < 0.0001, ηp² = 0.68], such that the difference in N170 mean amplitude between cropped and unaltered conditions was greater for faces than butterflies (Figure 4B). Critically, unaltered faces and butterflies did not significantly differ in mean amplitude [F(1,19) = 2.13, p > 0.1]. Finally, cropping increased N170 latencies [F(1,19) = 23.8, p < 0.0001, ηp² = 0.556] by 3 ms on average, but no other factor affected N170 latencies [Fs(1,19) < 1.04, ps > 0.1].

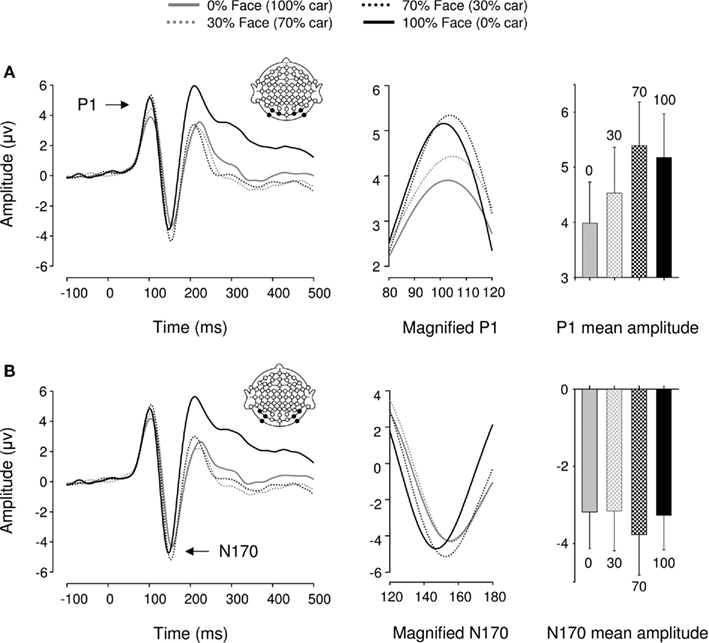

Morphing Pictures across Categories Affects P1 but Not N170 Amplitude

In Experiment 3, a repeated measures ANOVA performed over six posterior occipital electrodes revealed a main effect of category [F(1,17) = 18.09, p < 0.005, ηp² = 0.516] and morphing [F(1,17) = 6.44, p < 0.05, ηp² = 0.275] on P1 mean amplitude. There was no interaction between these factors [F(1,17) = 1.9, p > 0.1], suggesting that category and morphing independently increased P1 amplitude (Figure 5). Previous findings of a categorical difference within the P1 range were confirmed [F(1,17) = 11.92, p < 0.01, ηp² = 0.412] with faces eliciting larger P1 amplitudes than cars. Furthermore, P1 mean amplitude was significantly greater for 100% face and 70% face (30% car) stimuli than 100% car and 70% car (30% face) stimuli, respectively (all ps < 0.05). In other words, P1 mean amplitude was systematically greater for face-like than car-like stimuli. Finally, no differences were found between the 70% face (30% car) and the 100% face conditions [F(1,17) = 1.27, p > 0.1], but there was a difference between 100% car and 70% car (30% face) [F(1,17) = 7.98, p = 0.012, ηp² = 0.32]. Neither morphing nor categorical differences affected P1 latency [Fs(1,17) < 1.244, ps > 0.1].

Figure 5. Event-related brain potential results in the four conditions of Experiment 3. Waveforms depict linear derivations of the electrodes used for analysis of the P1 and N170, respectively. (A) From left to right: linear derivation of electrodes O1, O2, PO7, PO8, PO9, and PO10, magnification of the P1, and bar plot of P1 mean amplitudes. (B) From left to right: linear derivation of electrodes P7, P8, PO7, PO8, PO9, and PO10, magnification of the N170, and bar plot of N170 mean amplitudes. Error bars depict SEM.

We found no significant modulations of amplitude or latency by either category or morphing in the N170 range [Fs(1,17) < 2.881, ps > 0.1].

Discussion

N170

Experiment 1 aimed at testing the effect of discarding peripheral visual information when studying the neurophysiological indices of face processing. While various lines of evidence have challenged the face-selectivity of the N170, this component remains widely regarded as face-selective (Bentin et al., 1996; Carmel and Bentin, 2002; Itier and Taylor, 2002; Blau et al., 2007; Rossion and Jacques, 2008; Sadeh et al., 2008; Mohamed et al., 2009; Eimer et al., 2010). In all three experiments reported here, however, the N170 failed to behave in a face-selective manner (Thierry et al., 2007a; but see Bentin et al., 2007b; Thierry et al., 2007b; Rossion and Jacques, 2008).

Full front views of cars were compared to faces in Experiments 1 and 3 because the two categories have properties in common: they are highly frequent and familiar objects, easy to categorize, amenable to subcategorization (make/ethnic origin), and they have generic internal features, the arrangement of which is critical for identification. We considered cars to be the ideal contrast category for faces precisely because of these shared properties, since a brain response selective to faces should distinguish between the two categories regardless of their similarities. In previous studies involving full front views of faces and cars, N170 selectivity was not measurable (Rossion et al., 2000; Schweinberger et al., 2004; Thierry et al., 2007a). A common account for the finding of similar amplitudes to faces and cars is that they are perceptually highly similar, which could evoke comparable N170 responses (Hadjikhani et al., 2009) but, ultimately, unless neuropsychologically impaired, no one could ever claim that a picture of a car can be confused with that of a face.

A number of studies have resorted to cropping faces from full head pictures, particularly for behavioral testing of face recognition in patients with prosopagnosia (Gauthier et al., 1999; Saumier et al., 2001; Behrmann et al., 2005; Herzmann et al., 2008; Stollhoff et al., 2010). The rationale behind the use of such modified stimuli in neuropsychological testing is to prevent patients relying on the analysis of peripheral cues such as hair color and shape, neck width, or ear size and shape to recognize faces (Duchaine and Nakayama, 2004). The fact that faces are more difficult to recognize when peripheral cues are removed implies that such cues are important in the natural process of face recognition. Surprisingly, however, experimental psychologists and neuroscientists have used such cropped faces as stimuli in experiments testing visual object categorization without first testing whether this alteration would affect the processing of different object categories in different ways (Horovitz et al., 2004; Schiltz et al., 2006; Kuefner et al., 2010). Our findings show a dramatic effect of such stimulus alteration in the case of faces as compared to the case of non-face stimuli, the N170 being increased in amplitude by 1.37 μV on average and 1.39 μV at the peak when peripheral features are deleted. This effect is consistent with modulation of hemodynamic responses from the fusiform face area (FFA) and occipital face area (OFA) found for external features of faces presented in isolation (Andrews et al., 2010).

Interestingly, a significant change in N170 mean amplitude was even found in the case of cars, albeit of smaller amplitude. It is worth noting here that cropping cars is less straightforward than cropping faces, since the internal features of a face are easily identifiable whereas those of a car are uncertain. This is mainly due to the existence of a neutral, featureless area (e.g., forehead, cheeks) between peripheral and inner parts in the case of faces that has no equivalent in the case of cars. In addition, spatial relations of features tend to differ between manufacturers’ models of cars to a greater extent than between individual faces. Therefore, our cropped car stimuli were probably less representative of inner part extraction than cropped face stimuli, which may have accounted for the relatively smaller amplitude modulation by cropping for cars. It is noteworthy that the cropped versions of faces and cars were smaller in size than the unaltered images. ERP amplitudes have been shown to increase with stimulus size (Busch et al., 2004; De Cesarei and Codispoti, 2006), so the smaller cropped stimuli would be expected to elicit smaller, not larger, amplitudes; differences in physical size between cropped and unaltered stimuli are therefore unlikely to account for the increase in amplitude by cropping observed in the N170 range. In Experiment 2, we addressed this issue directly by matching the cropped stimuli with the unaltered stimuli in terms of size (and luminance while keeping to a narrow contrast range). The effect of cropping on N170 amplitude for faces was fully replicated. However, we did not find such an effect for butterflies. This arguably is not surprising since cropped butterflies were perceptually similar to unaltered ones (see Figure 1 for an example). Since no significant information was lost by cropping (i.e., only a portion of the wings), there is no reason why visual processing should have been more difficult in this condition even though cropping obviously engendered ineluctable differences in spatial frequency between conditions.

In addition to a significant modulation by cropping in Experiment 1, we found a main effect of stimulus repetition on N170 mean amplitude. Unexpectedly, the repetition effect was an increase in amplitude with repetition, which was greater for faces than cars. This is inconsistent with the previously reported habituation effect (Campanella et al., 2000; Heisz et al., 2006), which would be expected to result in an N170 amplitude reduction triggered by immediate repetition. However, repetitions in our study were always separated by several intervening trials, possibly supporting a familiarity account of N170 modulation (e.g., Jemel et al., 2010; Leleu et al., 2010; Tacikowski et al., 2011). Indeed, in studies of repetition priming where long lags between repeated stimuli have been used, no reduction of N170 amplitude has been reported (Schweinberger et al., 2002; Boehm et al., 2006). Furthermore, larger amplitudes to repeated stimuli were previously reported in the N1 range when degraded images were used as primes (Doniger et al., 2001).

We can draw two conclusions from the N170 findings: (a) although the N170 was not category-selective when we compared complete faces, cars, and butterflies, it was strongly amplified when features important for face recognition were deleted, and we contend that the N170 is likely to index mechanisms beyond object categorization such as the processing of familiarity, identity, ethnic origin, emotional expression, etc.; (b) the comparison of N170 amplitude elicited by cropped faces and other object categories presented without alteration should not be used to make claims regarding category-selectivity in visual cognition.

P1

We found events compatible with object categorization in the P1 range when comparing faces and cars (Experiment 1) or faces and butterflies (Experiment 2), and sensitivity to stimulus category was independent of other manipulations, e.g., stimulus variability (Thierry et al., 2007a), cropping (Experiments 1 and 2), and morphing (Experiment 3).

The P1, a peak generally regarded as an index of low-level perceptual processing (Picton et al., 2000; Tarkiainen et al., 2002; Cornelissen et al., 2003; Rossion et al., 2003) and repeatedly suggested as being sensitive to differences in contrast, color, luminance, etc. (Nakashima et al., 2008; Thierry et al., 2009), was only sensitive to object category (i.e., to global image features) regardless of stimulus integrity. In Experiment 1, there were residual differences between cropped and unaltered stimuli in terms of luminance and contrast because peripheral features of faces, in particular, tend to have high contrast and low luminance and thus cannot be dismissed without affecting low-level properties of stimuli. Nevertheless, the small difference in luminance between cropped and unaltered stimuli in Experiment 1 should have produced a P1 modulation in the opposite direction to the trend observed (e.g., Thierry et al., 2009; and in any case the effect of cropping on P1 amplitude was not significant). Furthermore, in Experiment 2 in which stimuli were matched for luminance and narrowly controlled in terms of contrast, the effect of category on P1 amplitude was fully replicated.

Overall, category-sensitivity in the P1 range held across all three experiments and was consistent with findings of a critical phase of visual object categorization at around 100 ms post-stimulus presentation in MEG, ERP, and TMS studies (Liu et al., 2002; Herrmann et al., 2005; Pitcher et al., 2007). In Experiment 3, the P1 was not only increased for faces relative to cars but also for stimuli affected by morphing as compared to unaltered stimuli. This result is consistent with the view that P1 amplitude is increased by visual ambiguity (Schupp et al., 2008) and, more generally, task difficulty (Dering et al., 2009) because categorization of morphed stimuli – which contain information from the other category – is more challenging than that of unaltered images.

Conclusion

To our knowledge, the effect of cropping inner parts of faces and objects on visual categorization has never been studied directly using ERPs and the potential effects of this manipulation have not been discussed (Eimer, 2000b; Duchaine and Nakayama, 2004). This leads to the possibility that category-effects previously reported in the N170 range may have been due not only to uncontrolled perceptual variance between conditions (Thierry et al., 2007a) but also to a reduction in the amount of information afforded by artificially impoverished stimuli. Furthermore, the sensitivity of the N170 to stimulus integrity (Bentin and Golland, 2002; Bentin et al., 2002) is consistent with hypotheses that the N170 is involved in higher level integration such as identification (Liu et al., 2002; Itier et al., 2006), a process not exclusive to faces.

Overall, our results stand in contrast to a large number of studies in the literature that have consistently reported face-selective N170 modulations. For instance, the N170 is increased in amplitude for inverted faces as compared to upright faces, independently of stimulus variability (Rossion et al., 2000; Boehm et al., 2011). However, it remains unexplained why the N170 should be increased in amplitude rather than reduced by inversion. It is intriguing that cropping, like inversion, increases N170 amplitude, perhaps because in both cases identification difficulty is increased. Visual expertise has also been repeatedly shown to modulate the N170 elicited by non-face–objects (Tanaka and Curran, 2001) or faces (Rossion et al., 2004) independent of cropping. However, effects of cropping on their own cannot explain all modulations found in the N170 range, just like inter-stimulus variance, symmetry, or other individual manipulations. Furthermore, since cropped faces arguably require being interpreted as faces, our results are not incompatible with conceptual priming effects such as those reported by Bentin et al. (2002) and Bentin and Golland (2002). Finally, we note that some studies have shown a lack of face-selectivity in the N170 range in congenitally prosopagnosic patients (Bentin et al., 2007a). However, Harris et al. (2005) have shown that the association between prosopagnosia and the absence of N170 face-selectivity is not straightforward, notwithstanding the fact that most experiments with prosopagnosic patients have systematically used cropped faces.

In sum, we establish that the N170 peak of visual event-related brain potentials is highly sensitive to stimulus integrity, i.e., it is increased in amplitude when stimuli are missing peripheral information, but fails to display category-selectivity with regard to the two contrast categories used here (cars and butterflies). Future studies will characterize the properties of the N170 that are potentially specific to face processing beyond the level of categorization (Eimer, 2000a; Itier and Taylor, 2002). More importantly, robust category-sensitivity regardless of low-level perceptual differences between conditions is consistently found in the P1 range, within 100 ms after picture presentation.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors wish to thank Ghislain Chavand, Paul Downing, Richard Ivry, and Robert Rafal for assistance and comments. Guillaume Thierry is funded by the Economic and Social Research Council (RES-000-23-0095) and the European Research Council (ERC-StG-209704). Benjamin Dering is funded by a School of Psychology studentship of Bangor University. Clara D. Martin is funded by the Spanish government (grant Juan de la Cierva; Consolider Ingenio 2010 CE-CSD2007-00121). Alan J. Pegna is supported by the Swiss National Science foundation grant no. 320030-125196. Segmentation analysis was performed using Cartool (http://brainmapping.unige.ch/Cartool.htm) programmed by Denis Brunet from the Functional Brain Mapping Laboratory, Geneva, Switzerland, and supported by the Center for Biomedical Imaging of Geneva and Lausanne. Clara Martin was supported by the Spanish Government (grant Juan de la Cierva; PSI2008-01191; Consolider Ingenio 2010 CSD2007-00012) and the Catalan government (Consolidado SGR 2009-1521).

Footnotes

1. Here, we refer to a response as category-selective when it is significantly larger in amplitude to a specific category than to every other object category. Category-sensitivity, on the other hand, refers to significant modulations of the response between any two given categories and is therefore not necessarily selective.

2. Because cropping eliminates high contrast parts of the face–object (e.g., hair), it was not possible to fully control for contrast across experimental conditions. However, significant effects of cropping – where they existed – were always in the opposite direction to that expected from a contrast manipulation.

3. To check the efficacy of our eye-blink correction procedure and to establish that residual noise did not affect our results, we conducted a new analysis excluding all trials containing eye movement artifacts. The net loss of trials was <1.4% and statistical results were unchanged in all three experiments.

4. We found a significant category × electrode interaction, which survived normalization (such as that recommended by McCarthy and Wood, 1985). Category-effects at electrodes P8 and PO8 drove this interaction, such that N170 was significantly greater for cars than faces (similar to effects reported by Dering et al., 2009). Note that N170 amplitude was not significantly greater for faces than cars at any electrode site.
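For readers unfamiliar with the normalization mentioned in the last footnote, the sketch below illustrates one common variant of the vector scaling associated with McCarthy and Wood (1985): amplitudes across electrodes are divided by their vector length within each condition, so that a condition × electrode interaction reflects a difference in scalp distribution rather than in overall amplitude. The footnote does not specify the exact procedure used, so the per-participant scaling, the function name `vector_scale`, and the array layout are all assumptions made for illustration.

```python
import numpy as np


def vector_scale(amplitudes):
    """Scale amplitudes to unit vector length across electrodes.

    amplitudes: array of shape (n_subjects, n_conditions, n_electrodes).
    Each subject-by-condition vector is divided by the square root of the
    sum of its squared amplitudes (assumed variant of vector scaling).
    """
    amplitudes = np.asarray(amplitudes, dtype=float)
    norms = np.sqrt((amplitudes ** 2).sum(axis=-1, keepdims=True))
    return amplitudes / norms


# Hypothetical example: condition 2 is exactly twice condition 1 at every
# electrode, i.e. same topography, different overall amplitude.
example = np.array([[[1.0, 2.0, 2.0],
                     [2.0, 4.0, 4.0]]])
scaled = vector_scale(example)
print(scaled[0, 0], scaled[0, 1])  # identical after scaling
```

After scaling, two conditions that differ only by a multiplicative gain become identical, so any remaining interaction with electrode points to a genuine topographic difference.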

References

Allison, T., Ginter, H., McCarthy, G., Nobre, A. C., Puce, A., Luby, M., and Spencer, D. D. (1994). Face recognition in human extrastriate cortex. J. Neurophysiol. 71, 821–825.

Allison, T., Puce, A., Spencer, D. D., and McCarthy, G. (1999). Electrophysiological studies of human face perception. I: potentials generated in occipitotemporal cortex by face and non-face stimuli. Cereb. Cortex 9, 415–430.

Anaki, D., and Bentin, S. (2009). Familiarity effects on categorization levels of faces and objects. Cognition 111, 144–149.

Andrews, T. J., Davies-Thompson, J., Kingstone, A., and Young, A. W. (2010). Internal and external features of the face are represented holistically in face-selective regions of visual cortex. J. Neurosci. 30, 3544–3552.

Behrmann, M., Avidan, G., Marotta, J. J., and Kimchi, R. (2005). Detailed exploration of face-related processing in congenital prosopagnosia: 1. Behavioral findings. J. Cogn. Neurosci. 17, 1130–1149.

Bennett, T. L. (1992). “Cognitive effects of epilepsy and anticonvulsant medications,” in The Neuropsychology of Epilepsy, ed. T. L. Bennett (New York: Plenum Press), 73–95.

Bentin, S., Allison, T., Puce, A., Perez, E., and McCarthy, G. (1996). Electrophysiological studies of face perception in humans. J. Cogn. Neurosci. 8, 551–565.

Bentin, S., DeGutis, J. M., D’Esposito, M., and Robertson, L. C. (2007a). Too many trees to see the forest: performance, ERP and fMRI manifestations of integrative congenital prosopagnosia. J. Cogn. Neurosci. 19, 132–146.

Bentin, S., Taylor, M. J., Rousselet, G. A., Itier, R. J., Caldara, R., Schyns, P. G., Jacques, C., and Rossion, B. (2007b). Controlling interstimulus perceptual variance does not abolish N170 face sensitivity. Nat. Neurosci. 10, 801–802; author reply 802–803.

Bentin, S., and Golland, Y. (2002). Meaningful processing of meaningless stimuli: the influence of perceptual experience on early visual processing of faces. Cognition 86, B1–B14.

Bentin, S., Sagiv, N., Mecklinger, A., Friederici, A., and von Cramon, Y. D. (2002). Priming visual face-processing mechanisms: electrophysiological evidence. Psychol. Sci. 13, 190–193.

Blau, V. C., Maurer, U., Tottenham, N., and McCandliss, B. D. (2007). The face-specific N170 component is modulated by emotional facial expression. Behav. Brain Funct. 3, 7.

Boehm, S. G., Dering, B., and Thierry, G. (2011). Category-sensitivity in the N170 range: a question of topography and inversion, not one of amplitude. Neuropsychologia 49, 2082–2089.

Boehm, S. G., Klostermann, E. C., and Paller, K. A. (2006). Neural correlates of perceptual contributions to nondeclarative memory for faces. Neuroimage 30, 1021–1029.

Braeutigam, S., Bailey, A. J., and Swithenby, S. J. (2001). Task-dependent early latency (30–60 ms) visual processing of human faces and other objects. Neuroreport 12, 1531–1536.

Brandeis, D., and Lehmann, D. (1986). Event-related potentials of the brain and cognitive processes: approaches and applications. Neuropsychologia 24, 151–168.

Busch, N. A., Debener, S., Kranczioch, C., Engel, A. K., and Herrmann, C. S. (2004). Size matters: effects of stimulus size, duration and eccentricity on the visual gamma-band response. Clin. Neurophysiol. 115, 1810–1820.

Campanella, S., Hanoteau, C., Depy, D., Rossion, B., Bruyer, R., Crommelinck, M., and Guérit, J. M. (2000). Right N170 modulation in a face discrimination task: an account for categorical perception of familiar faces. Psychophysiology 37, 796–806.

Carmel, D., and Bentin, S. (2002). Domain specificity versus expertise: factors influencing distinct processing of faces. Cognition 83, 1–29.

Cornelissen, P., Tarkiainen, A., Helenius, P., and Salmelin, R. (2003). Cortical effects of shifting letter position in letter strings of varying length. J. Cogn. Neurosci. 15, 731–746.

De Cesarei, A., and Codispoti, M. (2006). When does size not matter? Effects of stimulus size on affective modulation. Psychophysiology 43, 207–215.

Dering, B., Martin, C. D., and Thierry, G. (2009). Is the N170 peak of visual event-related brain potentials car-selective? Neuroreport 20, 902–906.

Doniger, G. M., Foxe, J. J., Schroeder, C. E., Murray, M. M., Higgins, B. A., and Javitt, D. C. (2001). Visual perceptual learning in human object recognition areas: a repetition priming study using high-density electrical mapping. Neuroimage 13, 305–313.

Duchaine, B. C., and Nakayama, K. (2004). Developmental prosopagnosia and the Benton Facial Recognition Test. Neurology 62, 1219–1220.

Eimer, M. (1998). Does the face-specific N170 component reflect the activity of a specialized eye processor? Neuroreport 9, 2945–2948.

Eimer, M. (2000a). Effects of face inversion on the structural encoding and recognition of faces. Evidence from event-related brain potentials. Brain Res. Cogn. Brain Res. 10, 145–158.

Eimer, M. (2000b). The face-specific N170 component reflects late stages in the structural encoding of faces. Neuroreport 11, 2319–2324.

Eimer, M., Kiss, M., and Nicholas, S. (2010). Response profile of the face-sensitive N170 component: a rapid adaptation study. Cereb. Cortex 20, 2442–2452.

Gauthier, I., Behrmann, M., and Tarr, M. J. (1999). Can face recognition really be dissociated from object recognition? J. Cogn. Neurosci. 11, 349–370.

George, N., Evans, J., Fiori, N., Davidoff, J., and Renault, B. (1996). Brain events related to normal and moderately scrambled faces. Brain Res. Cogn. Brain Res. 4, 65–76.

Goffaux, V., Gauthier, I., and Rossion, B. (2003). Spatial scale contribution to early visual differences between face and object processing. Brain Res. Cogn. Brain Res. 16, 416–424.

Gratton, G., Coles, M. G., and Donchin, E. (1983). A new method for off-line removal of ocular artifact. Electroencephalogr. Clin. Neurophysiol. 55, 468–484.

Hadjikhani, N., Kveraga, K., Naik, P., and Ahlfors, S. P. (2009). Early (M170) activation of face-specific cortex by face-like objects. Neuroreport 20, 403–407.

Harris, A. M., Duchaine, B. C., and Nakayama, K. (2005). Normal and abnormal face selectivity of the M170 response in developmental prosopagnosics. Neuropsychologia 43, 2125–2136.

Heisz, J. J., Watter, S., and Shedden, J. M. (2006). Progressive N170 habituation to unattended repeated faces. Vision Res. 46, 47–56.

Herrmann, M. J., Ehlis, A. C., Ellgring, H., and Fallgatter, A. J. (2005). Early stages (P100) of face perception in humans as measured with event-related potentials (ERPs). J. Neural Transm. 112, 1073–1081.

Herzmann, G., Danthiir, V., Schacht, A., Sommer, W., and Wilhelm, O. (2008). Toward a comprehensive test battery for face cognition: assessment of the tasks. Behav. Res. Methods 40, 840–857.

Horovitz, S. G., Rossion, B., Skudlarski, P., and Gore, J. C. (2004). Parametric design and correlational analyses help integrating fMRI and electrophysiological data during face processing. Neuroimage 22, 1587–1595.

Itier, R. J., Latinus, M., and Taylor, M. J. (2006). Face, eye and object early processing: what is the face specificity? Neuroimage 29, 667–676.

Itier, R. J., and Taylor, M. J. (2002). Inversion and contrast polarity reversal affect both encoding and recognition processes of unfamiliar faces: a repetition study using ERPs. Neuroimage 15, 353–372.

Itier, R. J., and Taylor, M. J. (2004). N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cereb. Cortex 14, 132–142.

Jacques, C., and Rossion, B. (2007). Electrophysiological evidence for temporal dissociation between spatial attention and sensory competition during human face processing. Cereb. Cortex 17, 1055–1065.

Jemel, B., Schuller, A. M., Cheref-Khan, Y., Goffaux, V., Crommelinck, M., and Bruyer, R. (2003). Stepwise emergence of the face-sensitive N170 event-related potential component. Neuroreport 14, 2035–2039.

Jemel, B., Schuller, A. M., and Goffaux, V. (2010). Characterizing the spatio-temporal dynamics of the neural events occurring prior to and up to overt recognition of famous faces. J. Cogn. Neurosci. 22, 2289–2305.

Koenig, T., and Melie-Garcia, L. (2010). A method to determine the presence of averaged event-related fields using randomization tests. Brain Topogr. 23, 233–242.

Kovacs, G., Zimmer, M., Banko, E., Harza, I., Antal, A., and Vidnyanszky, Z. (2006). Electrophysiological correlates of visual adaptation to faces and body parts in humans. Cereb. Cortex 16, 742–753.

Krolak-Salmon, P., Henaff, M. A., Vighetto, A., Bertrand, O., and Mauguiere, F. (2004). Early amygdala reaction to fear spreading in occipital, temporal, and frontal cortex: a depth electrode ERP study in human. Neuron 42, 665–676.

Kuefner, D., de Heering, A., Jacques, C., Palmero-Soler, E., and Rossion, B. (2010). Early visually evoked electrophysiological responses over the human brain (P1, N170) show stable patterns of face-sensitivity from 4 years to adulthood. Front. Hum. Neurosci. 3:67.

Lehmann, D., and Skrandies, W. (1984). Spatial analysis of evoked potentials in man – a review. Prog. Neurobiol. 23, 227–250.

Leleu, A., Caharel, S., Carre, J., Montalan, B., Snoussi, M., Vom Hofe, A., Charvin, H., Lalonde, R., and Rebaï, M. (2010). Perceptual interactions between visual processing of facial familiarity and emotional expression: an event-related potentials study during task-switching. Neurosci. Lett. 482, 106–111.

Linkenkaer-Hansen, K., Palva, J. M., Sams, M., Hietanen, J. K., Aronen, H. J., and Ilmoniemi, R. J. (1998). Face-selective processing in human extrastriate cortex around 120 ms after stimulus onset revealed by magneto- and electroencephalography. Neurosci. Lett. 253, 147–150.

Liu, J., Harris, A., and Kanwisher, N. (2002). Stages of processing in face perception: an MEG study. Nat. Neurosci. 5, 910–916.

McCarthy, G., and Wood, C. C. (1985). Scalp distribution of event-related potentials: an ambiguity associated with analysis of variance models. Electroencephalogr. Clin. Neurophysiol. 62, 203–208.

Michel, C. M., Seeck, M., and Landis, T. (1999). Spatiotemporal dynamics of human cognition. News Physiol. Sci. 14, 206–214.

Michel, C. M., Thut, G., Morand, S., Khateb, A., Pegna, A. J., Grave de Peralta, R., Gonzalez, S., Seeck, M., and Landis, T. (2001). Electric source imaging of human brain functions. Brain Res. Brain Res. Rev. 36, 108–118.

Minear, M., and Park, D. C. (2004). A lifespan database of adult facial stimuli. Behav. Res. Methods Instrum. Comput. 36, 630–633.

Mohamed, T. N., Neumann, M. F., and Schweinberger, S. R. (2009). Perceptual load manipulation reveals sensitivity of the face-selective N170 to attention. Neuroreport 20, 782–787.

Mouchetant-Rostaing, Y., and Giard, M. H. (2003). Electrophysiological correlates of age and gender perception on human faces. J. Cogn. Neurosci. 15, 900–910.

Murray, M. M., Brunet, D., and Michel, C. M. (2008). Topographic ERP analyses: a step-by-step tutorial review. Brain Topogr. 20, 249–264.

Nakashima, T., Kaneko, K., Goto, Y., Abe, T., Mitsudo, T., Ogata, K., Makinouchi, A., and Tobimatsu, S. (2008). Early ERP components differentially extract facial features: evidence for spatial frequency-and-contrast detectors. Neurosci. Res. 62, 225–235.

Pascual-Marqui, R. D., Michel, C. M., and Lehmann, D. (1995). Segmentation of brain electrical activity into microstates: model estimation and validation. IEEE Trans. Biomed. Eng. 42, 658–665.

Pegna, A. J., Khateb, A., Michel, C. M., and Landis, T. (2004). Visual recognition of faces, objects, and words using degraded stimuli: where and when it occurs. Hum. Brain Mapp. 22, 300–311.

Pegna, A. J., Khateb, A., Spinelli, L., Seeck, M., Landis, T., and Michel, C. M. (1997). Unraveling the cerebral dynamics of mental imagery. Hum. Brain Mapp. 5, 410–421.

Picton, T. W., Bentin, S., Berg, P., Donchin, E., Hillyard, S. A., Johnson, R. Jr., Miller, G. A., Ritter, W., Ruchkin, D. S., Rugg, M. D., and Taylor, M. J. (2000). Guidelines for using human event-related potentials to study cognition: recording standards and publication criteria. Psychophysiology 37, 127–152.

Pitcher, D., Walsh, V., Yovel, G., and Duchaine, B. (2007). TMS evidence for the involvement of the right occipital face area in early face processing. Curr. Biol. 17, 1568–1573.

Righart, R., and de Gelder, B. (2007). Impaired face and body perception in developmental prosopagnosia. Proc. Natl. Acad. Sci. U.S.A. 104, 17234–17238.

Rossion, B. (2008). Picture-plane inversion leads to qualitative changes of face perception. Acta Psychol. (Amst) 128, 274–289.

Rossion, B., Gauthier, I., Tarr, M. J., Despland, P., Bruyer, R., Linotte, S., and Crommelinck, M. (2000). The N170 occipito-temporal component is delayed and enhanced to inverted faces but not to inverted objects: an electrophysiological account of face-specific processes in the human brain. Neuroreport 11, 69–74.

Rossion, B., and Jacques, C. (2008). Does physical interstimulus variance account for early electrophysiological face sensitive responses in the human brain? Ten lessons on the N170. Neuroimage 39, 1959–1979.

Rossion, B., Joyce, C. A., Cottrell, G. W., and Tarr, M. J. (2003). Early lateralization and orientation tuning for face, word, and object processing in the visual cortex. Neuroimage 20, 1609–1624.

Rossion, B., Kung, C.-C., and Tarr, M. J. (2004). Visual expertise with nonface objects leads to competition with the early perceptual processing of faces in the human occipitotemporal cortex. Proc. Natl. Acad. Sci. U.S.A. 101, 14521–14526.

Rousselet, G. A., Husk, J. S., Bennett, P. J., and Sekuler, A. B. (2007). Single-trial EEG dynamics of object and face visual processing. Neuroimage 36, 843–862.

Sadeh, B., Zhdanov, A., Podlipsky, I., Hendler, T., and Yovel, G. (2008). The validity of the face-selective ERP N170 component during simultaneous recording with functional MRI. Neuroimage 42, 778–786.

Saumier, D., Arguin, M., and Lassonde, M. (2001). Prosopagnosia: a case study involving problems in processing configural information. Brain Cogn. 46, 255–259.

Schiltz, C., Sorger, B., Caldara, R., Ahmed, F., Mayer, E., Goebel, R., and Rossion, B. (2006). Impaired face discrimination in acquired prosopagnosia is associated with abnormal response to individual faces in the right middle fusiform gyrus. Cereb. Cortex 16, 574–586.

Schupp, H. T., Stockburger, J., Schmalzle, R., Bublatzky, F., Weike, A. I., and Hamm, A. O. (2008). Visual noise effects on emotion perception: brain potentials and stimulus identification. Neuroreport 19, 167–171.

Schweinberger, S. R., Huddy, V., and Burton, A. M. (2004). N250r: a face-selective brain response to stimulus repetitions. Neuroreport 15, 1501–1505.

Schweinberger, S. R., Pickering, E. C., Burton, A. M., and Kaufmann, J. M. (2002). Human brain potential correlates of repetition priming in face and name recognition. Neuropsychologia 40, 2057–2073.

Seeck, M., Michel, C. M., Blanke, O., Thut, G., Landis, T., and Schomer, D. L. (2001). Intracranial neurophysiological correlates related to the processing of faces. Epilepsy Behav. 2, 545–557.

Seeck, M., Michel, C. M., Mainwaring, N., Cosgrove, R., Blume, H., Ives, J., Landis, T., and Schomer, D. L. (1997). Evidence for rapid face recognition from human scalp and intracranial electrodes. Neuroreport 8, 2749–2754.

Stollhoff, R., Jost, J., Elze, T., and Kennerknecht, I. (2010). The early time course of compensatory face processing in congenital prosopagnosia. PLoS ONE 5, e11482.

Tacikowski, P., Jednorog, K., Marchewka, A., and Nowicka, A. (2011). How multiple repetitions influence the processing of self-, famous and unknown names and faces: an ERP study. Int. J. Psychophysiol. 79, 219–230.

Tanaka, J. W., and Curran, T. (2001). A neural basis for expert object recognition. Psychol. Sci. 12, 43–47.

Tarkiainen, A., Cornelissen, P. L., and Salmelin, R. (2002). Dynamics of visual feature analysis and object-level processing in face versus letter-string perception. Brain 125, 1125–1136.

Thierry, G., Athanasopoulos, P., Wiggett, A., Dering, B., and Kuipers, J. R. (2009). Unconscious effects of language-specific terminology on preattentive color perception. Proc. Natl. Acad. Sci. U.S.A. 106, 4567–4570.

Thierry, G., Martin, C. D., Downing, P., and Pegna, A. J. (2007a). Controlling for interstimulus perceptual variance abolishes N170 face selectivity. Nat. Neurosci. 10, 505–511.

Thierry, G., Martin, C. D., Downing, P. E., and Pegna, A. J. (2007b). Is the N170 sensitive to the human face or to several intertwined perceptual and conceptual factors? Nat. Neurosci. 10, 802–803.

Thierry, G., Pegna, A. J., Dodds, C., Roberts, M., Basan, S., and Downing, P. (2006). An event-related potential component sensitive to images of the human body. Neuroimage 32, 871–879.

Vuilleumier, P., and Pourtois, G. (2007). Distributed and interactive brain mechanisms during emotion face perception: evidence from functional neuroimaging. Neuropsychologia 45, 174–194.

Xu, Y., Liu, J., and Kanwisher, N. (2005). The M170 is selective for faces, not for expertise. Neuropsychologia 43, 588–597.

Keywords: visual object categorization, face processing, event-related brain potentials, N170, P100

Citation: Dering B, Martin CD, Moro S, Pegna AJ and Thierry G (2011) Face-sensitive processes one hundred milliseconds after picture onset. Front. Hum. Neurosci. 5:93. doi: 10.3389/fnhum.2011.00093

Received: 02 February 2011; Accepted: 13 August 2011; Published online: 15 September 2011.

Edited by:

Leon Y. Deouell, The Hebrew University of Jerusalem, Israel

Reviewed by:

Micah M. Murray, Université de Lausanne, Switzerland

Leon Y. Deouell, The Hebrew University of Jerusalem, Israel

Hiroshi Nittono, Hiroshima University, Japan

Martin Eimer, Birkbeck, University of London, UK

Copyright: © 2011 Dering, Martin, Moro, Pegna and Thierry. This is an open-access article subject to a non-exclusive license between the authors and Frontiers Media SA, which permits use, distribution and reproduction in other forums, provided the original authors and source are credited and other Frontiers conditions are complied with.

*Correspondence: Guillaume Thierry, School of Psychology, University of Wales, LL57 2AS Bangor, UK. e-mail: g.thierry@bangor.ac.uk