- 1School of Information Engineering, Huzhou University, Huzhou, China

- 2Zhejiang Province Key Laboratory of Smart Management & Application of Modern Agricultural Resources, Huzhou University, Huzhou, China

- 3The Laboratory of Artificial Intelligence and Bigdata in Ophthalmology, Affiliated Eye Hospital of Nanjing Medical University, Nanjing, China

- 4Advanced Ophthalmology Laboratory (AOL), Robotrak Technologies, Nanjing, China

Purpose: To assess the value of automatic disc-fovea angle (DFA) measurement using the DeepLabv3+ segmentation model.

Methods: A total of 682 normal fundus images were collected from the Eye Hospital of Nanjing Medical University. The following parts of the images were labeled and subsequently reviewed by ophthalmologists: optic disc center, macular center, optic disc area, and virtual macular area. A total of 477 normal fundus images were used to train the DeepLabv3+, U-Net, and PSPNet models, which were used to obtain the optic disc area and virtual macular area. Then, the coordinates of the optic disc center and macular center were obtained by using the minimum outer circle technique. Finally, the DFA was calculated.

Results: In this study, 205 normal fundus images were used to test the models. The experimental results showed that the errors in automatic DFA measurement using the DeepLabv3+, U-Net, and PSPNet segmentation models were 0.76°, 1.4°, and 2.12°, respectively. The mean intersection over union (MIoU), mean pixel accuracy (MPA), average error in the center of the optic disc, and average error in the center of the virtual macula obtained using the DeepLabv3+ model were 94.77%, 97.32%, 10.94 pixels, and 13.44 pixels, respectively. Automatic DFA measurement using DeepLabv3+ yielded a smaller error than measurement using the other segmentation models. Therefore, the DeepLabv3+ segmentation model was finally chosen to measure the DFA automatically.

Conclusions: Automatic segmentation techniques based on the DeepLabv3+ segmentation model can produce accurate and rapid DFA measurements.

Introduction

The optic disc and macula are normal physiological structures of the eye. The physiologic disc-fovea angle (DFA) is the angle between the line connecting the geometric center of the optic disc to the macular center and the horizontal line passing through the geometric center of the optic disc. The DFA of patients with rotational strabismus is usually beyond the normal range; therefore, DFA measurement is an important tool for the diagnosis of rotational strabismus. The rotational strabismus angle can be measured by subjective or objective examination; the former uses the Maddox method (1), Jackson crossed columnoscopy (2), and manual corneal margin marking (3), while the latter uses fundus images (4–8). Currently, DFA measurements based on fundus images are mainly performed manually and suffer from an average error of 2.0° (±1.8°) (9). Manual measurement of the DFA has several disadvantages: poor reproducibility, low accuracy, and long measurement time (6, 10). Therefore, automatic DFA measurement methods need to be investigated.

Artificial intelligence (AI) has applications in various fields. Since ophthalmology has many structured images, it has become one of the frontiers of AI research (11, 12). AI has many applications in classification and segmentation in ophthalmology (13–20). The automatic measurement of DFA is associated with optic disc segmentation (21–24), optic disc centration (25–27), and macular centration (28). Xiong et al. (29) investigated optic disc segmentation using the U-Net model. Bhatkalkar et al. (30) used a deep learning-driven heat map regression model to locate the optic disc center. Cao et al. (31) proposed a macular localization method based on morphological features and k-means clustering. Many related studies describe similar methods (32–35). At present, DFA measurement based on fundus images has received little study. Simiera et al. proposed the Cyclocheck software, which measured DFA by locating the center of the macula and its tangent line to the optic disc (6). Piedrahita et al. (10) designed DFA measurement software in MATLAB that required the optic disc edge and macular center to be located manually before the DFA could be calculated. The above methods were suitable for single-image measurement and required physician participation; their disadvantages include the requirement of step-by-step completion, poor repeatability, and low efficiency. To circumvent these weaknesses, this study designed a fully automated method for measuring the DFA using a deep learning model.

In this study, three segmentation models (DeepLabv3+, U-Net, and PSPNet) were trained to segment the optic disc area and virtual macular area based on 682 normal fundus images. The DFA was calculated by finding the macular and optic disc centers. In this way, automatic DFA measurement based on fundus images was realized.

Materials and methods

Data source

The color fundus images were obtained from the Eye Hospital of Nanjing Medical University using non-mydriatic fundus cameras and were collected from January to June 2021. A total of 682 images were selected, each with a size of 2,584 × 1,985 pixels and a correct image angle. To meet the inclusion criteria of this study, all of the eyes selected had no retinal disease, as confirmed by an ophthalmologist. There were no restrictions based on sex or age for this study. Additionally, all relevant personal information was removed to avoid the inappropriate disclosure of private information.

The study began with the labeling of the optic disc area, optic disc center, and macular center. The true value of the DFA was then measured on the color fundus images by two professional ophthalmologists using Adobe Photoshop. The virtual macular area label was generated as a circle centered on the macular center with a radius of 400 pixels. Finally, all the labels were reviewed and confirmed by an ophthalmologist. The normal fundus image is shown in Figure 1A, the DFA is shown in Figure 1B, and the optic disc and virtual macular area labeling map is shown in Figure 1C.

Figure 1. The normal fundus image and labeling map. (A) Normal fundus image, where the macular area is a virtual macular area labeled with the macular center as the center of the circle and 400 pixels as the radius; (B) DFA; (C) Optic disc and virtual macular area labeling map.
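The construction of the virtual macular area label (a circle around the macular center) can be sketched as follows. This is a minimal illustration rather than the labeling tool used in the study: the function name `circular_label` and the toy image size are hypothetical, and a radius of 2 pixels stands in for the 400-pixel radius used on the full-size images.

```python
def circular_label(width, height, center, radius):
    """Return a binary mask (list of rows) that is 1 inside a circle and 0 elsewhere.

    `center` is an (x, y) pixel coordinate; a pixel belongs to the circle when
    its squared distance to the center is at most radius squared.
    """
    cx, cy = center
    r2 = radius * radius
    return [
        [1 if (x - cx) ** 2 + (y - cy) ** 2 <= r2 else 0 for x in range(width)]
        for y in range(height)
    ]


# Toy example: a 9 x 7 image with the (hypothetical) macular center at (4, 3).
toy_label = circular_label(9, 7, center=(4, 3), radius=2)
labeled_pixels = sum(sum(row) for row in toy_label)
```

For a full-size image the same call would use the annotated macular center and `radius=400`; an array library would be faster, but the logic is the same.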

Automatic measurement methods

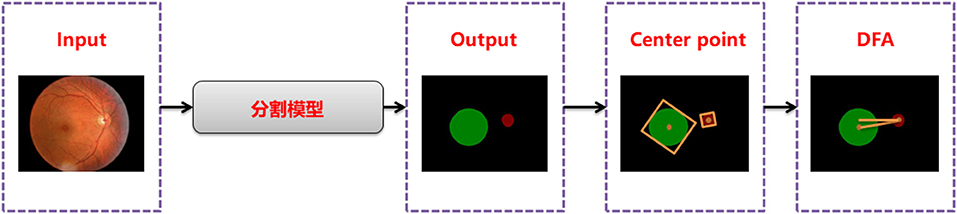

To measure the DFA automatically, a normal fundus image is input into the trained segmentation model to obtain the segmentation map of the optic disc and virtual macular area. Then, the minimum external matrix is used to obtain the center coordinates of the optic disc and macula. Finally, the DFA is obtained by applying the inverse tangent function to the center coordinates and converting the result from radians to degrees. The workflow is shown in Figure 2.
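The center-extraction step can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: it assumes that the "minimum external matrix" is an axis-aligned bounding rectangle around each segmented region and that the midpoint of the rectangle is taken as the center (the abstract instead mentions a minimum outer circle, and the text does not settle which was used). The function name `bounding_box_center`, the class labels, and the toy mask are hypothetical.

```python
def bounding_box_center(mask, label):
    """Return the (x, y) midpoint of the axis-aligned bounding box of `label` pixels.

    `mask` is a list of rows; each entry is an integer class label.
    Returns None when the label does not occur in the mask.
    """
    xs, ys = [], []
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value == label:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return ((min(xs) + max(xs)) / 2.0, (min(ys) + max(ys)) / 2.0)


# Toy segmentation map (assumed labels): 0 = background, 1 = optic disc, 2 = virtual macula.
toy_mask = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 0, 0, 0],
    [0, 1, 1, 1, 0, 2, 2, 2],
    [0, 1, 1, 1, 0, 2, 2, 2],
    [0, 0, 0, 0, 0, 2, 2, 2],
]

disc_center = bounding_box_center(toy_mask, 1)
macula_center = bounding_box_center(toy_mask, 2)
```

For a roughly circular region such as the virtual macula, the bounding-rectangle midpoint and the center of a minimum enclosing circle should be close, so the choice mainly matters for irregular optic disc segmentations.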

Segmentation model training

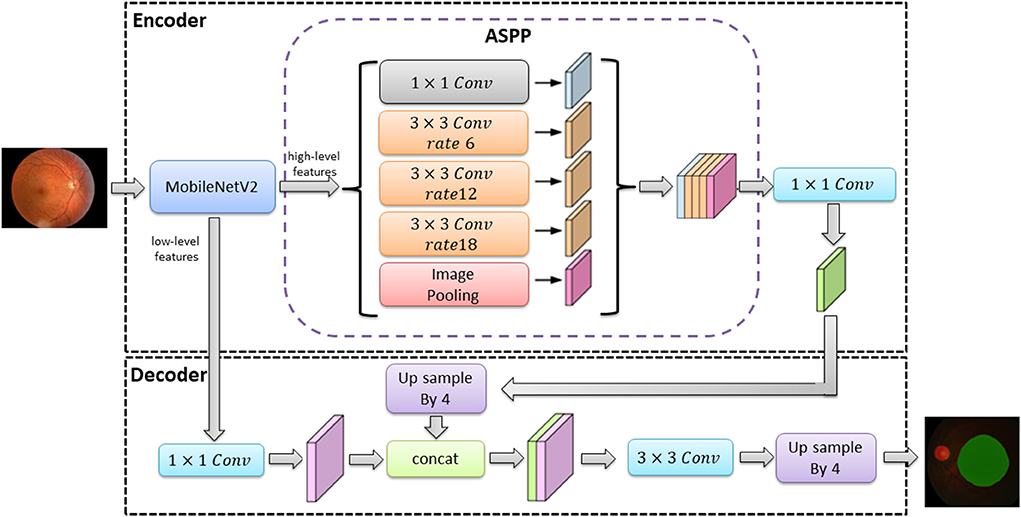

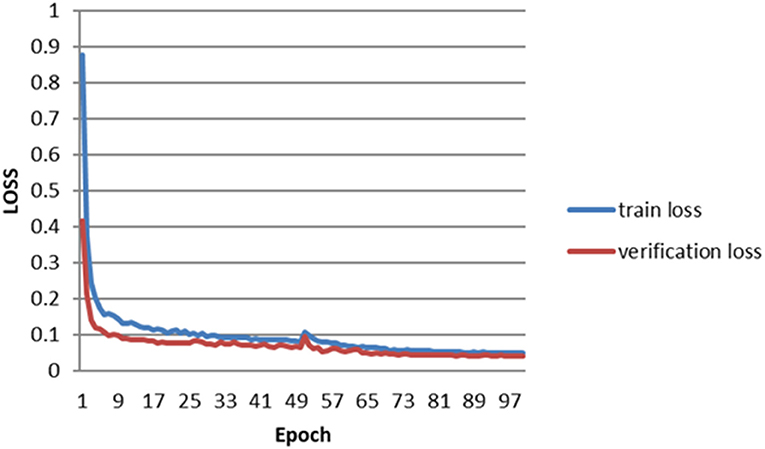

Classical deep learning segmentation models include U-Net, DeepLabv3+, and PSPNet. In this study, the DeepLabv3+ model was trained to segment the optic disc area and virtual macular area using 682 normal fundus images. The images were divided into 477 images for training and 205 images for testing at a ratio of 7:3. The training images were further divided into a training set of 429 images and a validation set of 48 images at a ratio of 9:1. The model was trained with an image size of 512 × 512, a learning rate of 0.00005, and a total of 100 iterations. The optimal optic disc and virtual macular area segmentation model was determined by identifying the model with the lowest loss on the validation set. The network structure of the DeepLabv3+ model is shown in Figure 3.
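The split sizes quoted above follow from the two ratios. The short sketch below reproduces the arithmetic; the helper `split_counts` is hypothetical, and rounding the first part to the nearest integer is an assumption that happens to match the reported counts.

```python
def split_counts(total, ratio_first, ratio_second):
    """Split `total` items by ratio_first:ratio_second.

    The first part is rounded to the nearest integer (an assumption);
    the second part receives the remainder so the counts always sum to `total`.
    """
    first = round(total * ratio_first / (ratio_first + ratio_second))
    return first, total - first


# 682 images split 7:3 into training and test images.
n_train_total, n_test = split_counts(682, 7, 3)

# The training images are split 9:1 into training and validation sets.
n_train, n_val = split_counts(n_train_total, 9, 1)
```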

As shown in Figure 3, the DeepLabv3+ network structure is divided into an encoder and a decoder. First, the encoder extracts high-level and low-level features from the input image via the MobileNetV2 module. The high-level features (the features representing the overall information of the image) are processed by atrous convolutions with different atrous rates in atrous spatial pyramid pooling (ASPP) before upsampling. The upsampled results are concatenated with the low-level features (the features representing image boundary information) extracted by the MobileNetV2 module. The optic disc and virtual macular area prediction map is obtained after convolution and upsampling.

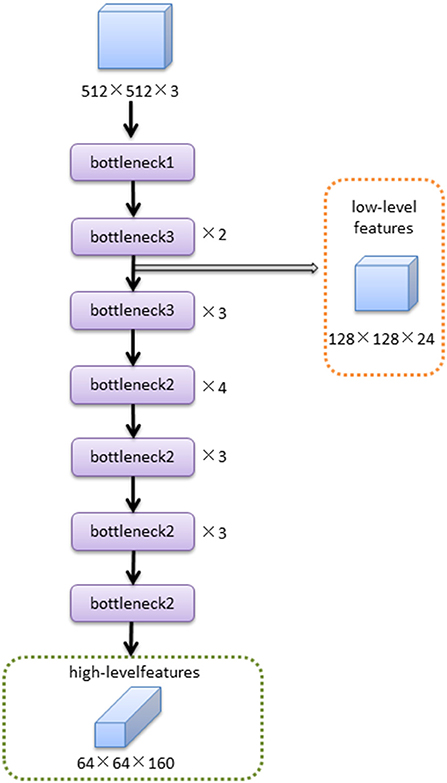

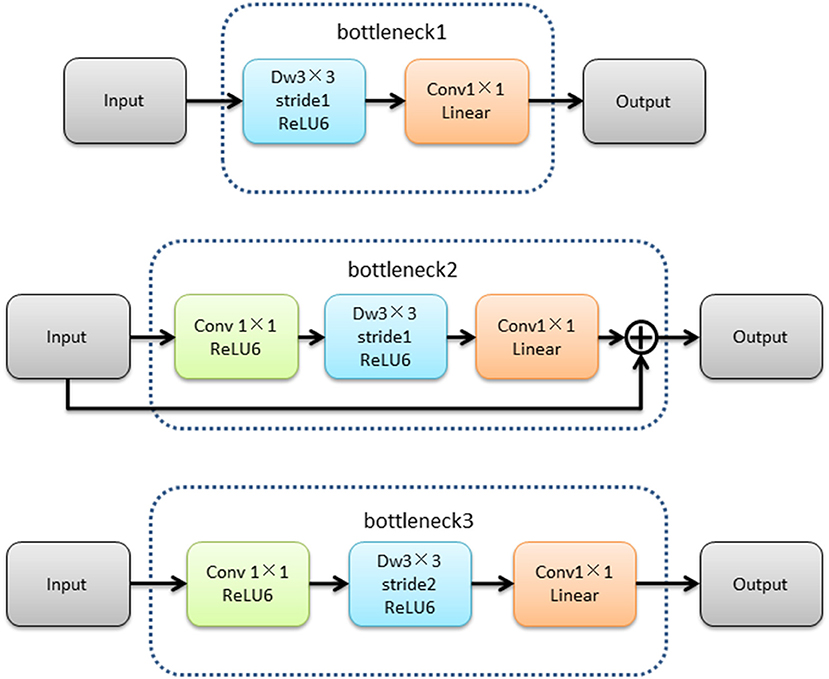

The MobileNetV2 feature extraction network extracted the features of the input image. The low-level features were obtained after the bottleneck1 module and two bottleneck3 modules. The high-level features were obtained after a further 14 bottleneck modules following the low-level features. The structure of the MobileNetV2 feature extraction network is shown in Figure 4. The structures of bottleneck1, bottleneck2, and bottleneck3 in MobileNetV2 are shown in Figure 5; they are all composed of convolution and depthwise convolution. In the bottleneck2 block, when the input and output feature maps had the same size, the output feature map was obtained by adding the input feature map to its convolution result; otherwise, the output feature map was the convolution result alone.

The server used in this study had an Intel(R) Xeon(R) Silver 4214 CPU with a main frequency of 2.2 GHz and a Tesla V100 graphics card with 32 GB of video memory; the operating system was Ubuntu 18.04. The deep learning framework was PyTorch and the programming language was Python.

Calculation method

The DFA calculation formula is shown in Equation (1), where the optic disc center coordinates are (OX, OY) and the macular center coordinates are (MX, MY). If MY > OY, the sign of the DFA is reversed.
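The calculation described above can be sketched in a few lines. Since Equation (1) itself is not reproduced in the text, the form used here is an assumption consistent with the description: the magnitude is arctan(|MY − OY| / |MX − OX|) converted from radians to degrees, and the sign is reversed when MY > OY. The function name `disc_fovea_angle` is hypothetical.

```python
import math


def disc_fovea_angle(ox, oy, mx, my):
    """Return the disc-fovea angle in degrees from the two center coordinates.

    (ox, oy) is the optic disc center and (mx, my) the macular center, in pixels.
    Assumed form: arctan(|my - oy| / |mx - ox|) in degrees, negated when my > oy.
    """
    dx = abs(mx - ox)
    dy = abs(my - oy)
    # atan2 with non-negative arguments returns an angle in [0, pi/2] and
    # avoids a division by zero when the two centers share an x coordinate.
    angle = math.degrees(math.atan2(dy, dx))
    return -angle if my > oy else angle


# Hypothetical centers: macula 100 px to the side of the disc and 100 px lower in the image.
angle_lower = disc_fovea_angle(0, 0, 100, 100)
# Same horizontal offset, macula 100 px higher in the image.
angle_higher = disc_fovea_angle(0, 100, 100, 0)
# Macula level with the disc center.
angle_level = disc_fovea_angle(0, 0, 100, 0)
```

Note that in image coordinates the y axis usually points downward, so MY > OY means the macular center lies below the optic disc center in the image.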

The evaluation indexes for measuring the accuracy of the segmentation model in this study are the intersection over union (IoU), pixel accuracy (PA), MIoU, and MPA, as shown in Equations (2), (3), (4), and (5), respectively. In these equations, TP indicates the number of correctly predicted pixels in the optic disc and virtual macular areas, TN indicates the number of correctly predicted pixels in the background area, FP indicates the number of incorrectly predicted pixels in the optic disc and virtual macular areas, and FN indicates the number of incorrectly predicted pixels in the background area.
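The four indexes can be illustrated with a small sketch. Equations (2) to (5) are not reproduced in the text, so the definitions below are assumptions based on commonly used forms: IoU = TP / (TP + FP + FN) and PA = (TP + TN) / (TP + TN + FP + FN) for each class, with MIoU and MPA taken as the means over the classes. All function names and the toy label vectors are hypothetical.

```python
def class_counts(pred, truth, label):
    """Count TP, FP, FN, and TN for one class over flattened label sequences."""
    tp = fp = fn = tn = 0
    for p, t in zip(pred, truth):
        if p == label and t == label:
            tp += 1
        elif p == label:
            fp += 1
        elif t == label:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def iou(tp, fp, fn, tn):
    """Intersection over union for one class (assumed form)."""
    return tp / (tp + fp + fn)


def pixel_accuracy(tp, fp, fn, tn):
    """Fraction of pixels classified correctly with respect to one class (assumed form)."""
    return (tp + tn) / (tp + fp + fn + tn)


def mean_metric(metric, pred, truth, labels):
    """Average a per-class metric over the given class labels."""
    return sum(metric(*class_counts(pred, truth, c)) for c in labels) / len(labels)


# Toy flattened maps (assumed labels): 0 = background, 1 = optic disc, 2 = virtual macula.
truth = [0, 0, 1, 1, 2, 2, 0, 0]
pred = [0, 1, 1, 1, 2, 0, 0, 0]

miou = mean_metric(iou, pred, truth, labels=[0, 1, 2])
mpa = mean_metric(pixel_accuracy, pred, truth, labels=[0, 1, 2])
```

The sketch assumes every class occurs in the prediction or the ground truth; a class that is absent from both would need a guard against division by zero in `iou`.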

The optic disc center and macular center errors were calculated as follows: DO indicates the optic disc center error, and the formula is shown in Equation (6). DM indicates the macular center error, and the formula is shown in Equation (7). Both errors are measured in pixels. The points are defined as follows: (OX1, OY1) is the true optic disc center, (MX1, MY1) is the true macular center, (OX2, OY2) is the optic disc center obtained from segmentation, and (MX2, MY2) is the macular center obtained from segmentation.
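A minimal sketch of the center-error calculation is given below. Equations (6) and (7) are not reproduced in the text; the sketch assumes that each error is the Euclidean distance in pixels between the true center and the center obtained from segmentation. The function name `center_error` and the coordinates are hypothetical.

```python
import math


def center_error(true_center, predicted_center):
    """Euclidean distance in pixels between two (x, y) center coordinates."""
    (x1, y1), (x2, y2) = true_center, predicted_center
    return math.hypot(x1 - x2, y1 - y2)


# Hypothetical optic disc centers: true (OX1, OY1) and segmented (OX2, OY2).
d_o = center_error((100.0, 200.0), (103.0, 204.0))

# Hypothetical macular centers: true (MX1, MY1) and segmented (MX2, MY2).
d_m = center_error((900.0, 260.0), (900.0, 250.0))
```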

Results

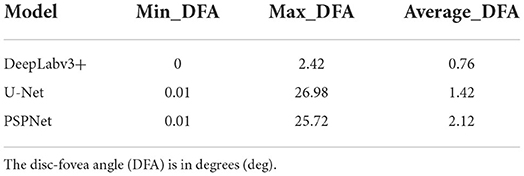

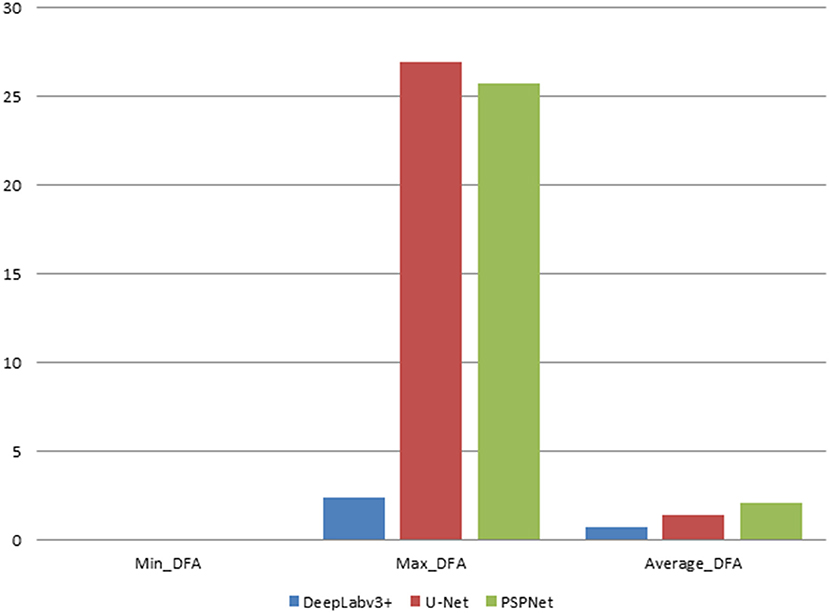

In this study, the automatic DFA measurement method based on DeepLabv3+ was tested using 205 normal fundus images. The results were compared with the DFA angles obtained from the U-Net and PSPNet segmentation-based models. The comparison of the DFA errors obtained from the three models is shown in Table 1 and Figure 6. The automatic DFA measurement method based on the DeepLabv3+ segmentation model achieves the smallest average error of 0.76°, which is 0.66° and 1.36° lower than the errors obtained using the U-Net and PSPNet models, respectively.

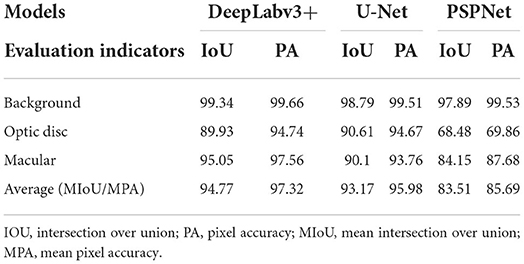

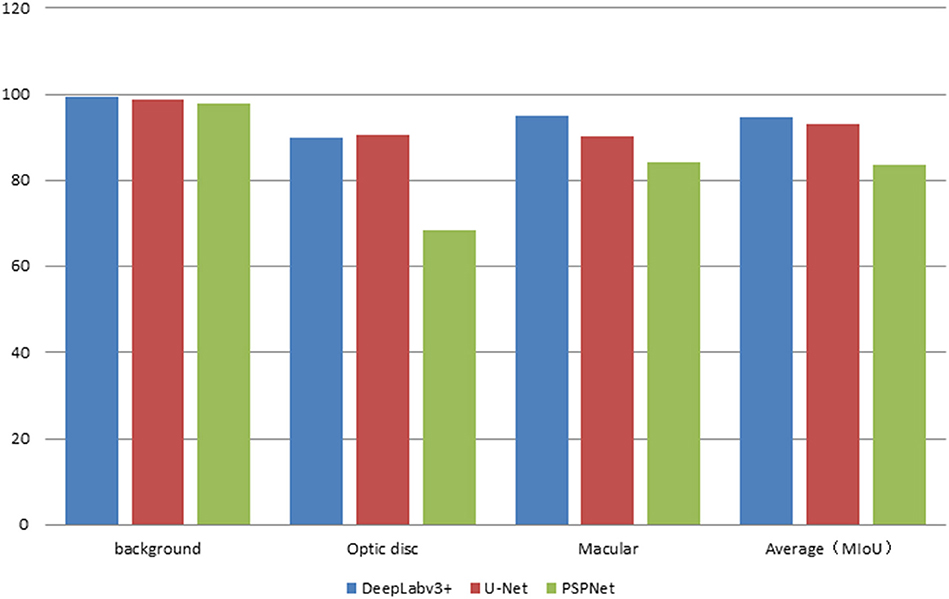

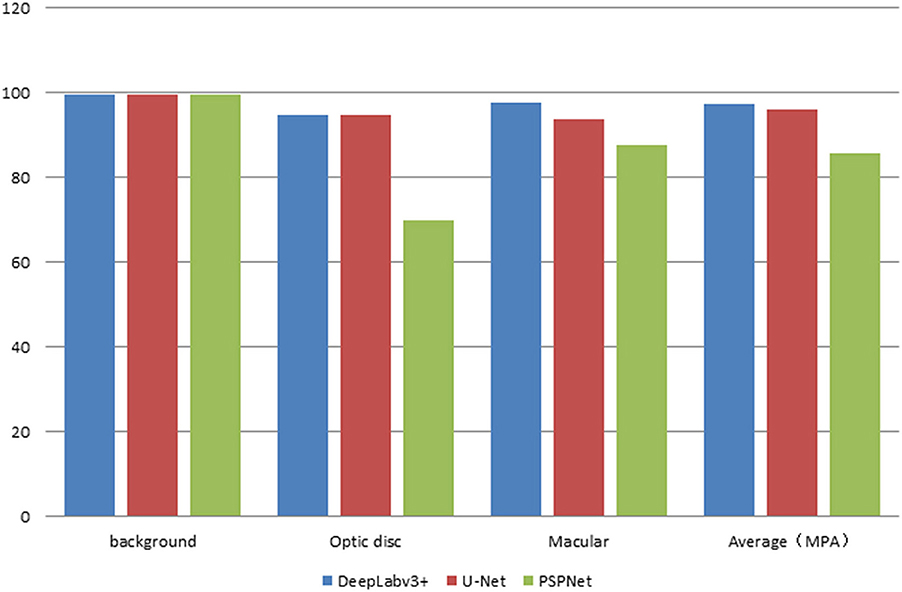

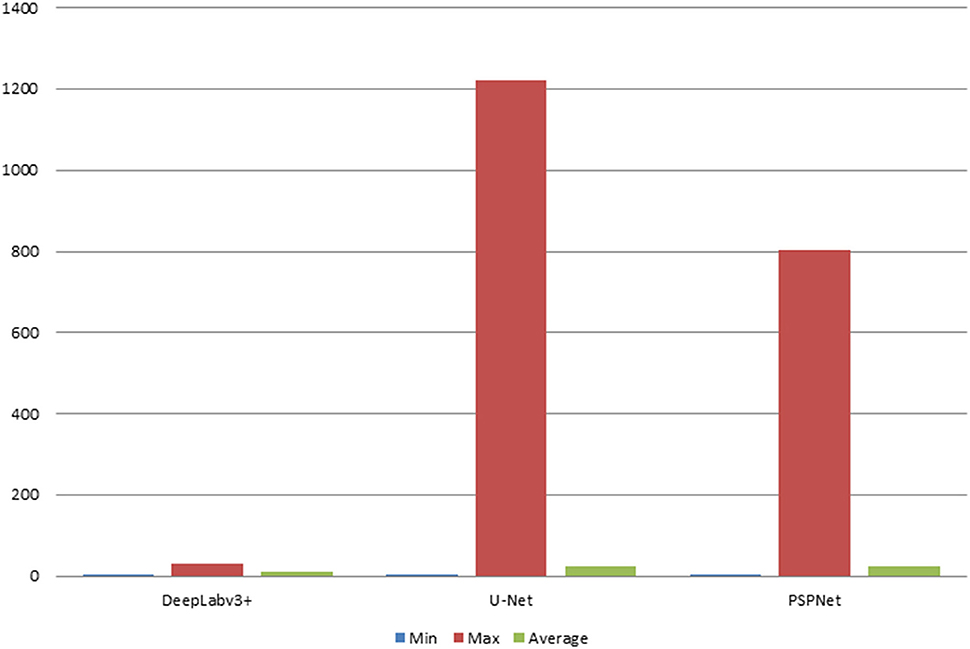

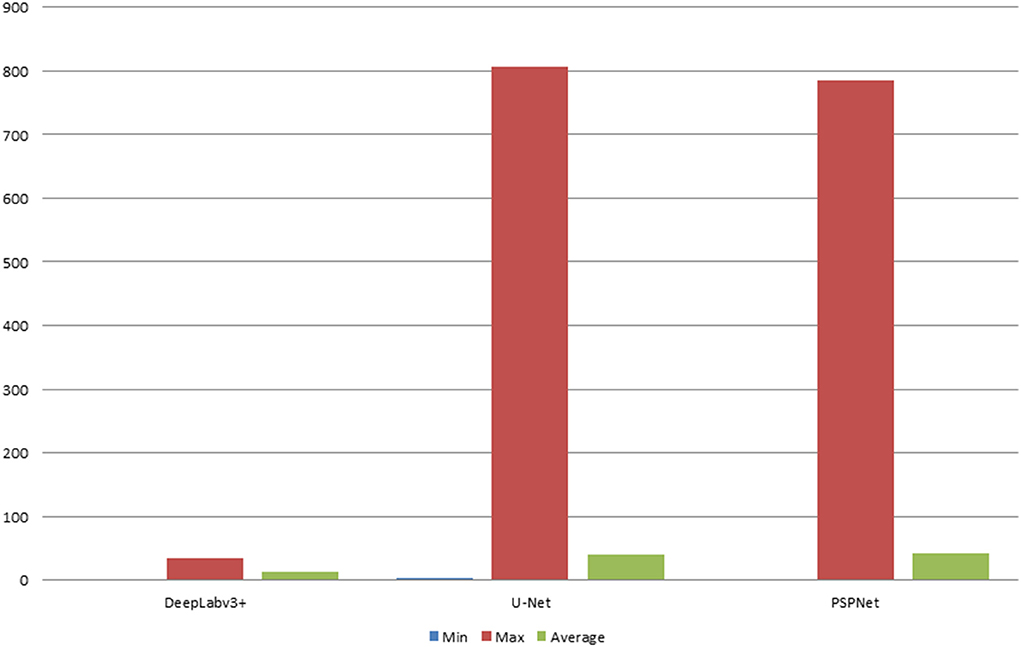

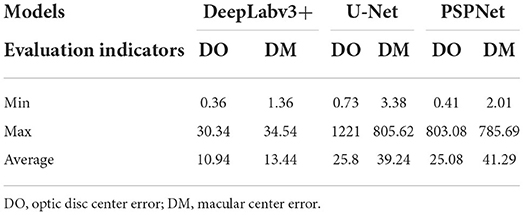

The error of the DFA is closely related to the accuracy of optic disc and virtual macular region segmentation. Therefore, the evaluation indexes of the segmentation results of the three models and the error values of the optic disc center and macular center were also quantified and are presented in Table 2 and Figures 7 and 8. The DeepLabv3+ segmentation model achieved the best results in MIoU and MPA. Its MIoU was 1.6% higher than that of the U-Net segmentation model and 11.26% higher than that of the PSPNet segmentation model. As shown in Table 3 and Figures 9 and 10, the DeepLabv3+ model also achieved the smallest errors at the optic disc center and macular center, where the maximum error at the macular center was 34.54 pixels or ~0.239 mm (36). The loss curves for the DeepLabv3+ segmentation model are shown in Figure 11. The training and validation loss curves gradually stabilized as the number of epochs increased.

Table 3. Comparison of optic disc centroid and macular centroid errors for the three models (pixels).

This study segmented the optic disc and macula in fundus images using three segmentation models. The DFA was calculated by finding the centers of the two regions in the segmentation image. Among the three segmentation methods, the average DFA error obtained by the automatic measurement method based on DeepLabv3+ was 0.76°, which is 1.24° smaller than the average error of 2.0° (±1.8°) for manual measurement.

Discussion

Currently, DFA measurement is still a time-consuming manual procedure performed by ophthalmologists; it is inefficient and poorly reproducible. With the development of artificial intelligence in medicine, the levels of automation and intelligence have increased, and some semi-automatic methods have been established that calculate the DFA after the optic disc and macular center have been positioned manually. However, these methods still require each step to be performed by an ophthalmologist and therefore remain manual measurement techniques. For this reason, fully automated DFA measurement methods have high research value.

The DeepLabv3+-based automatic DFA measurement method obtained the best results when compared with the U-Net and PSPNet models. The DeepLabv3+ model fuses multiscale information in the form of an encoder and decoder. The fundus image is input to MobileNetV2, which produces low-level feature layers (boundary information) of size 64 × 64 and high-level feature layers (semantic information) of size 128 × 128. The features of the two feature layers are extracted and fused to improve the boundary segmentation accuracy. Therefore, the model can extract features more adequately, segment boundaries with higher accuracy, and ultimately obtain smaller DFA measurement errors.

Existing DFA measurement methods are mainly manual or semi-automatic (37). Simiera et al. measured the DFA with the Cyclocheck software: a single fundus image was imported manually, and the DFA was calculated by drawing two separate tangents to the top and bottom of the optic disc based on the localized macular center (6). Piedrahita et al. calculated the DFA by manually acquiring the optic disc edge and macular center; the mean absolute difference between the repeated measurements was 1.64° (10). These semi-automatic methods still need to be operated by an ophthalmologist and suffer from poor repeatability, low accuracy, and long measurement times. The automatic DFA measurement used in this study can directly obtain DFA values after fundus images are input, which makes it more efficient and accurate.

The MIoU and MPA values for segmenting the optic disc and virtual macula using the DeepLabv3+ segmentation model were 95.7 and 97.32%, respectively. The automatic DFA measurement based on this model results in an error of 0.76°, which can be attributed to insufficient training data. Only a few ophthalmology-related public databases exist, which makes deep learning models difficult to train (38). Moreover, this study used only 682 normal fundus images provided by the partner hospital, which contributed to the less accurate segmentation results. In the future, the training data will be increased and the segmentation model improved to raise the accuracy of DFA measurement.

All the images used in this study were obtained from normal eyes. Because fundus images of diseased eyes are difficult to label, the optic disc and macular centers in such images are also difficult to locate accurately. Therefore, this study did not include them in the initial automatic DFA measurement.

Conclusion

Three segmentation models were used to obtain optic disc and virtual macular segmentation results, and DFA values were then obtained by calculation. Among the three segmentation methods, the DFA based on DeepLabv3+ had the smallest average error, which was 0.76°. The automatic measurement of DFA based on DeepLabv3+ can obtain more objective results, help ophthalmologists measure the DFA value quickly, improve measurement efficiency, and reduce the burden on ophthalmologists. This study mainly addressed the automatic DFA measurement of normal fundus images. In the future, data from diseased fundus images will be collected and their automatic DFA measurement will be studied.

Data availability statement

The datasets presented in this article are not readily available at the request of the partner hospital. Requests to access the datasets should be directed to: WY, benben0606@139.com.

Author contributions

BZ and YS wrote the manuscript. BZ planned the experiments and the manuscript. SZ guided the experiments. MW, WY, and CW reviewed the manuscript. YS and XF trained the model. YL, JZ, and LJ collected and labeled the data. All authors issued final approval for the version to be submitted.

Funding

This study was supported by the National Natural Science Foundation of China (No. 61906066), Natural Science Foundation of Zhejiang Province (No. LQ18F020002), Hospital Management Innovation Research Key Project of Jiangsu Provincial Hospital Association (JSYGY-2-2021-467), the Ningbo Medical Science and Technique Program (2021Y71), the Medical Big Data Clinical Research Project of Nanjing Medical University, and the Postgraduate Research and Innovation Project of Huzhou University (No. 2022KYCX35).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Simons K, Arnoldi K, Brown MH. Color dissociation artifacts in double Maddox rod cyclodeviation testing. Ophthalmology. (1994) 101:1897–901. doi: 10.1016/S0161-6420(94)31086-4

2. Smith EM, Talamo JH, Assil KK, Petashnick DE. Comparison of astigmatic axis in the seated and supine positions. J Refract Surg. (1994) 10:615–20. doi: 10.3928/1081-597X-19941101-05

3. Shen EP, Chen WL, Hu FR. Manual limbal markings versus iris-registration software for correction of myopic astigmatism by laser in situ keratomileusis. J Cataract Refract Surg. (2010) 36:431–6. doi: 10.1016/j.jcrs.2009.10.030

4. Seo JM, Kim KK, Kim JH, Park KS, Chung H. Measurement of ocular torsion using digital fundus image. IEEE. (2004) 1:1711–3. doi: 10.1109/IEMBS.2004.1403514

5. Chen X, Zhao KX, Guo X, Chen X, Zhu LN, Han Y, et al. Application of fundus photography in the diagnosis and curative effect evaluation of inferior oblique muscle overaction. Chin J Optom Ophthalmol. (2008) 10:222–4. doi: 10.3760/cma.j.issn.1674-845X.2008.03.018

6. Simiera J, Loba P. Cyclocheck: a new web-based software for the assessment of objective cyclodeviation. J AAPOS. (2017) 21:305–8. doi: 10.1016/j.jaapos.2017.02.009

7. Simiera J, Ordon A J, Loba P. Objective cyclodeviation measurement in normal subjects by means of Cyclocheck® application. Eur J Ophthalmol. (2021) 31:704–8. doi: 10.1177/1120672120905312

8. Zhu W, Wang X, Jiang C, Ling L, Wu LQ, Zhao C. Effect of inferior oblique muscle belly transposition on versions and vertical alignment in primary position. Graefes Arch Clin Exp Ophthalmol. (2021) 259:3461–8. doi: 10.1007/s00417-021-05240-x

9. Resch H, Pereira I, Hienert J, Weber S, Holzer S, Kiss B, et al. Influence of disc-fovea angle and retinal blood vessels on interindividual variability of circumpapillary retinal nerve fibre layer. Br J Ophthalmol. (2016) 100:531–6. doi: 10.1136/bjophthalmol-2015-307020

10. Piedrahita-Alonso E, Valverde-Megias A, Martin-Garcia B, Hernandez-Garcia E, Gomez-de-Liano R. Minimal detectable change of the disc-fovea angle for ocular torsion assessment. Ophthalmic Physiol Opt. (2022) 42:133–9. doi: 10.1111/opo.12897

11. Chen Q, Yu WH, Lin S, Liu BS, Wang Y, Wei QJ, et al. Artificial intelligence can assist with diagnosing retinal vein occlusion. Int J Ophthalmol. (2021) 14:1895–902. doi: 10.18240/ijo.2021.12.13

12. Ruan S, Liu Y, Hu WT, Jia HX, Wang SS, Song ML, et al. A new handheld fundus camera combined with visual artificial intelligence facilitates diabetic retinopathy screening. Int J Ophthalmol. (2022) 15:620–267. doi: 10.18240/ijo.2022.04.16

13. Liu ZY, Li B, Xia S, Chen YX. Analysis of choroidal morphology and comparison of imaging findings of subtypes of polypoidal choroidal vasculopathy: a new classification system. Int J Ophthalmol. (2020) 13:731–6. doi: 10.18240/ijo.2020.05.06

14. Zheng B, Liu Y, He K, Wu M, Jin L, Jiang Q, et al. Research on an intelligent lightweight-assisted pterygium diagnosis model based on anterior segment images. Dis Markers. (2021) 2021:7651462–70. doi: 10.1155/2021/7651462

15. Zheng B, Jiang Q, Lu B, He K, Wu MN, Hao XL, et al. Five-category intelligent auxiliary diagnosis model of common fundus diseases based on fundus images. Transl Vis Sci Technol. (2021) 10:20–30. doi: 10.1167/tvst.10.7.20

16. Wan C, Chen Y, Li H, Zheng B, Chen N, Yang WH, et al. EAD-net: a novel lesion segmentation method in diabetic retinopathy using neural networks. Dis Markers. (2021) 6482665–78. doi: 10.1155/2021/6482665

17. Xu LL, Yang Z, Tian B. Artificial intelligence based on images in ophthalmology. Chin J Ophthalmol. (2021) 57:465–9. doi: 10.3760/cma.j.cn112142-20201224-00842

18. Zhu S, Lu B, Wang C, Wu M, Zheng B, Jiang Q, et al. Screening of common retinal diseases using six-category models based on EfficientNet. Front Med. (2022) 9:808402–11. doi: 10.3389/fmed.2022.808402

19. Zhu SJ, Fang XW, Qian Y, He K, Wu MN, Zheng B. Pterygium screening and lesion area segmentation based on deep learning. J Healthc Eng. (2022) 22:1016–9. doi: 10.3980/j.issn.1672-5123.2022.6.26

20. He K, Wu MN, Zheng B, Yang WH, Zhu SJ, Jin L, et al. Research on the automatic classification system of pterygium based on deep learning. Int Eye Sci. (2022) 22:711–5. doi: 10.3980/j.issn.1672-5123.2022.5.03

21. Escorcia-Gutierrez J, Torrents-Barrena J, Gamarra M, Romero-Aroca P, Valls A, Puig D, et al. A color fusion model based on Markowitz portfolio optimization for optic disc segmentation in retinal images. Expert Syst Appl. (2021) 174:114697–708. doi: 10.1016/j.eswa.2021.114697

22. Kumar ES, Bindu CS. Two-stage framework for optic disc segmentation and estimation of cup-to-disc ratio using deep learning technique. J Ambient Intell Humaniz Comput. (2021) 2021:1–13. doi: 10.1007/s12652-021-02977-5

23. Veena HN, Muruganandham A, Kumaran TS. A novel optic disc and optic cup segmentation technique to diagnose glaucoma using deep learning convolutional neural network over retinal fundus images. J King Saud Univ Comput Inf Sci. (2021) 34:1–12. doi: 10.1016/j.jksuci.2021.02.003

24. Wang L, Gu J, Chen Y, Liang Y, Zhang W, Pu J, et al. Automated segmentation of the optic disc from fundus images using an asymmetric deep learning network. Pattern Recognit. (2021) 112:107810–22. doi: 10.1016/j.patcog.2020.107810

25. Huang Y, Zhong Z, Yuan J, Tang X. Efficient and robust optic disc detection and fovea localization using region proposal network and cascaded network. Biomed Signal Process Control. (2020) 60:101939–49. doi: 10.1016/j.bspc.2020.101939

26. Kim DE, Hacisoftaoglu RE, Karakaya M. Optic disc localization in retinal images using deep learning frameworks. Int Soc Optics Photonics. (2020) 11419:1–8. doi: 10.1117/12.2558601

27. Toptaş B, Toptaş M, Hanbay D. Detection of optic disc localization from retinal fundus image using optimized color space. J Digit Imaging. (2022) 2022:1–18. doi: 10.1007/s10278-021-00566-8

28. Li P, Liu J. Simultaneous Detection of Optic Disc and Macular Concave Center Using Artificial Intelligence Target Detection Algorithms. Cham: Springer (2021) 2021:8–15. doi: 10.1007/978-3-030-81007-8_2

29. Xiong H, Liu S, Sharan R V, Coiera E, Berkovsky S. Weak label based Bayesian U-Net for optic disc segmentation in fundus images. Artif Intell Med. (2022) 126:1–14. doi: 10.1016/j.artmed.2022.102261

30. Bhatkalkar BJ, Nayak SV, Shenoy SV, Arjunan RV. FundusPosNet: a deep learning driven heatmap regression model for the joint localization of optic disc and fovea centers in color fundus images. IEEE Access. (2021) 9:159071–80. doi: 10.1109/ACCESS.2021.3127280

31. Cao XR, Lin JW, Xue LY, Yu L. Detecting and locating the macular using morphological features and k-means clustering. Chin J Biomed Eng. (2017) 36:654–60. doi: 10.3969/j.issn.0258-8021.2017.06.003

32. Kumar S, Adarsh A, Kumar B, Singh AK. An automated early diabetic retinopathy detection through improved blood vessel and optic disc segmentation. Opt Laser Technol. (2020) 121:105815–26. doi: 10.1016/j.optlastec.2019.105815

33. Ramani RG, Shanthamalar JJ. Improved image processing techniques for optic disc segmentation in retinal fundus images. Biomed Signal Process Control. (2020) 58:101832–50. doi: 10.1016/j.bspc.2019.101832

34. Fu Y, Chen J, Li J, Pan D, Yue X, Zhu Y. Optic disc segmentation by U-net and probability bubble in abnormal fundus images. Pattern Recognit. (2021) 117:1–13. doi: 10.1016/j.patcog.2021.107971

35. Hasan MK, Alam MA, Elahi MTE, Roy S, Martí R. DRNet: segmentation and localization of optic disc and fovea from diabetic retinopathy image. Artif Intell Med. (2021) 111:1–16. doi: 10.1016/j.artmed.2020.102001

36. Li N, Shang YQ, Xiong J, Tai BY, Shi CJ. Fundus optic disc segmentation and localization based on improved multi-task learning method. J Appl Sci. (2021) 39:952–60. doi: 10.3969/j.issn.0255-8297.2021.06.006

37. Paques M, Guyomard JL, Simonutti M, Roux MJ, Picaud S, LeGargasson JF, et al. Panretinal, high-resolution color photography of the mouse fundus. Invest Ophthalmol Vis Sci. (2007) 48:2769–74. doi: 10.1167/iovs.06-1099

Keywords: disc-fovea angle, automatic measurement, deep learning, retinal images, artificial intelligence

Citation: Zheng B, Shen Y, Luo Y, Fang X, Zhu S, Zhang J, Wu M, Jin L, Yang W and Wang C (2022) Automated measurement of the disc-fovea angle based on DeepLabv3+. Front. Neurol. 13:949805. doi: 10.3389/fneur.2022.949805

Received: 31 May 2022; Accepted: 06 July 2022;

Published: 27 July 2022.

Edited by:

Peter Koulen, University of Missouri–Kansas City, United States

Reviewed by:

Senthil Ganesh R., Sri Krishna College of Engineering & Technology, India

Ali Y. Al-Sultan, University of Babylon, Iraq

Copyright © 2022 Zheng, Shen, Luo, Fang, Zhu, Zhang, Wu, Jin, Yang and Wang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Weihua Yang, benben0606@139.com; Chenghu Wang, wangchenghu1226@163.com
