Interactive Optimization With Parallel Coordinates: Exploring Multidimensional Spaces for Decision Support

- 1IPESE, Ecole Polytechnique Fédérale de Lausanne, Sion, Switzerland

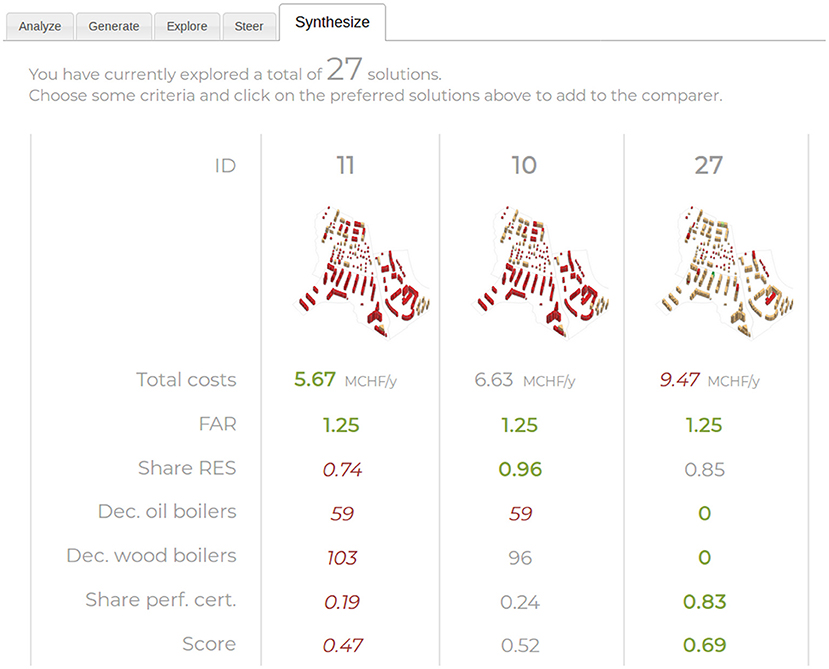

- 2European Institute for Energy Research, Karlsruhe, Germany

Interactive optimization methods are particularly suited for letting human decision makers learn about a problem, while a computer learns about their preferences to generate relevant solutions. For interactive optimization methods to be adopted in practice, computational frameworks are required, which can handle and visualize many objectives simultaneously, provide optimal solutions quickly and representatively, all while remaining simple and intuitive to use and understand by practitioners. Addressing these issues, this work introduces SAGESSE (Systematic Analysis, Generation, Exploration, Steering and Synthesis Experience), a decision support methodology, which relies on interactive multiobjective optimization. Its innovative aspects reside in the combination of (i) parallel coordinates as a means to simultaneously explore and steer the underlying alternative generation process, (ii) a Sobol sequence to efficiently sample the points to explore in the objective space, and (iii) on-the-fly application of multiattribute decision analysis, cluster analysis and other data visualization techniques linked to the parallel coordinates. An illustrative example demonstrates the applicability of the methodology to a large, complex urban planning problem.

1. Introduction

Making a decision involves balancing multiple competing criteria in order to identify a most-preferred alternative. For simple, day-to-day decisions, this can usually be done by relying on intuition and common sense alone.

For larger, more complex decisions, common sense may not suffice, and multicriteria decision analysis (MCDA) can be used to formalize the problem, both improving the decision and making it more transparent (Keeney, 1982).

To make better decisions requires a clear knowledge of the available alternatives. However, research has shown that without adequate support, the identification of alternatives is difficult and often incomplete, even for experts in a field (León, 1999; Bond et al., 2008; Malczewski and Rinner, 2015). MCDA adopts two distinct perspectives on alternatives, depending on the considered branch (Cohon, 1978; Hwang and Masud, 1979). Multiattribute decision analysis (MADA) aims to help select the best alternative from a predetermined subset (Chankong and Haimes, 2008; Malczewski and Rinner, 2015). Such alternative-focused methods have the risk of omitting important alternatives and leading to suboptimal solutions (Keeney, 1992; Beach, 1993; Belton et al., 1997; Feng and Lin, 1999; Belton and Stewart, 2002; Siebert and Keeney, 2015). Multiobjective decision analysis (MODA) methods, on the other hand, systematically generate the alternatives based on the decision maker's (DM) objectives. They can thus be considered to promote value-focused thinking, because they require the DM to think about the driving values first, and consider the means to achieve them only second (Keeney, 1992).

However, solving multiobjective problems implies that, contrary to single-objective problems, not one well-defined solution is found, but a set of equally interesting Pareto optimal solutions. Such solutions cannot be improved in one objective without degrading another objective.

When considered collectively as a Pareto front, they inform about optimal tradeoffs between objectives. In order to make use of such results, some preferences must be articulated by the DM in order to identify a most satisfactory solution from the Pareto front. The articulation of preferences, consisting e.g., in acceptable ranges, tradeoff information, or relative weights of objectives, can be done in one of three ways (Hwang and Masud, 1979; Branke et al., 2008):

i. A posteriori, once all the Pareto optimal solutions have been identified. The advantage is that the decision maker has a complete overview of the available options. On the other hand, the calculation can be extremely long if the solution space is vast, the decision maker may not have the time to wait, and the process will inevitably compute many solutions which are of little interest. Even if time were not an issue, the difficulty of visualizing, interpreting and understanding the Pareto optimal results can compromise the DM's trust, especially when more than three objectives are considered.

ii. A priori, before starting any calculations. This is the most efficient approach, as theoretically only one solution is calculated. However, it is also probably the most difficult from the decision maker's point of view, as it assumes that they are perfectly aware of their preferences and acceptable tradeoffs, and are able to formulate them precisely. In practice, when dealing with complex and interdisciplinary problems, this knowledge is generally unavailable until the solutions are calculated, and therefore the risk of reaching an infeasible or unsatisfactory solution is high (Meignan et al., 2015; Piemonti et al., 2017b).

iii. Interactively, as the optimization progresses. This is a common response to the limitations of a priori and a posteriori approaches. By involving the human decision maker directly in the search process (Kok, 1986), interactive optimization (IO) allows the user to learn from solutions as they are produced, refine their preferences, and in turn restrict the search to the most relevant areas of the solution space.
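To make the notion of Pareto optimality used above concrete, the following minimal Python sketch filters a finite set of candidate solutions down to its non-dominated subset. It assumes that all objectives are minimized; the function names `dominates` and `pareto_front` and the sample points are illustrative assumptions and are not taken from the methodology described in this paper.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]  # one value per objective, all to be minimized


def dominates(a: Point, b: Point) -> bool:
    """True if a is no worse than b in every objective and strictly better in at least one."""
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def pareto_front(points: Sequence[Point]) -> List[Point]:
    """Keep only the points that no other point dominates (quadratic-time filter)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical two-objective data, e.g. (cost, emissions).
points = [(1, 5), (2, 3), (3, 4), (4, 1), (5, 5)]
front = pareto_front(points)
print(front)
```

In this toy data set, (3, 4) and (5, 5) are discarded because (2, 3) is at least as good in both objectives. The remaining points cannot be ranked without preference information from the DM, which is what the three approaches above supply at different moments.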

The goal of this paper is to highlight the current gaps in literature which are limiting the application of IO to large problems, and propose a novel methodology addressing these gaps.

1.1. Background of Interactive Optimization

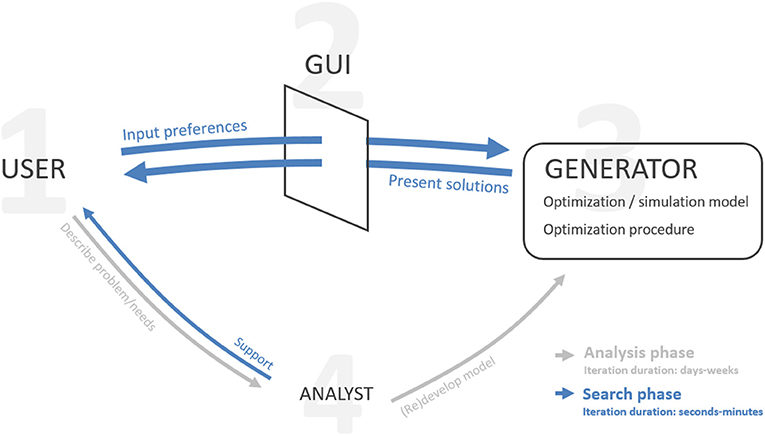

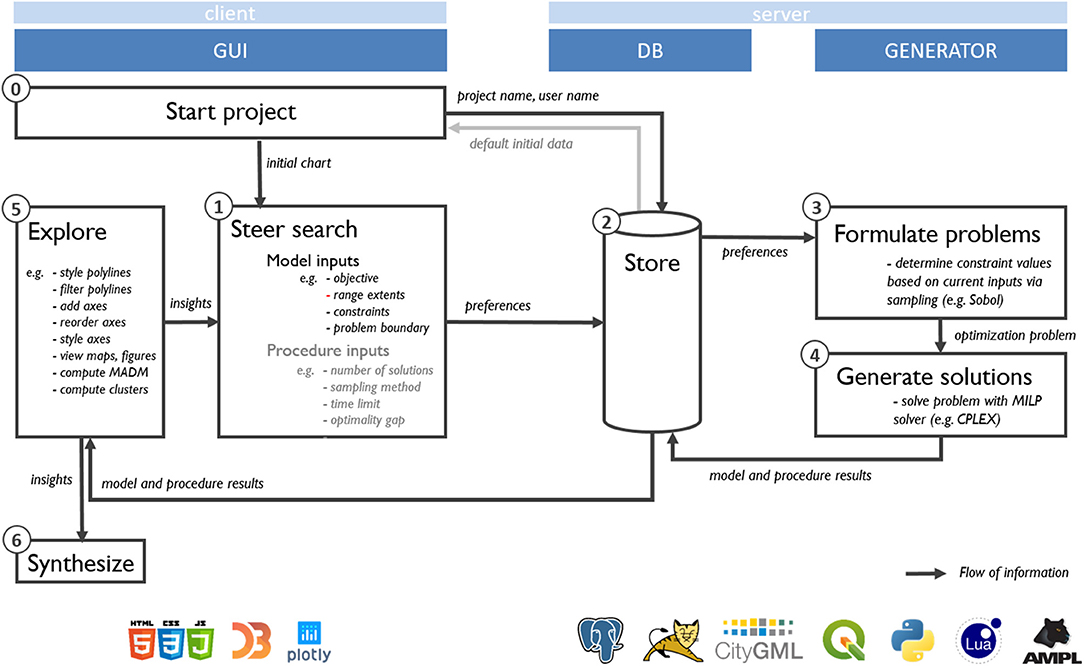

Interactive optimization consists of four main components which are combined to form a human-computer interaction system: a user, a graphical user interface (GUI), a solution generator and an analyst (Figure 1).

Figure 1. Main components and flows of information in interactive optimization. GUI: graphical user interface. Adapted from Spronk (1981) and Meignan et al. (2015).

During a preparatory analysis phase, the user describes the problem and criteria to the best of their knowledge, and the analyst develops the model accordingly. A feedback loop, which can overlap with the search phase, ensures the model captures the user's requirements as these evolve (Fisher, 1985). During the search phase, the user steers the generation of solutions through the GUI. Here, the role of the analyst becomes more passive (Spronk, 1981). The basic mechanism of IO consists in the oscillation between a generation phase, an exploration phase, and a steering phase. Typically, the process begins by generating and presenting one or several predetermined solutions for the user to explore. The user studies and compares their characteristics, which are presented in the GUI, and reacts to them by communicating likes or dislikes, also formalized through the GUI. These inputs are used to steer the subsequent calculations toward desired areas of the solution space. The process repeats until the user is convinced that they have found the most satisfactory solution.

The main premise for human-computer interaction is that complex problems can be better solved by harnessing the respective strengths of each party (Fisher, 1985; do Nascimento and Eades, 2005; Hamel et al., 2012). The relatively superior human capabilities are in the expertise of the problem and subjective evaluations, as well as skills in strategic thinking, learning, pattern recognition and breaking rules consciously (Fisher, 1985; Klau et al., 2010; Shneiderman, 2010; Wierzbicki, 2010; Babbar-Sebens et al., 2015).

The relative strengths of the computer are in counting or combining physical quantities, storing and displaying detailed information and performing repetitive tasks rapidly and simultaneously over long periods of time (Shneiderman, 2010). In IO, the graphical user interface (GUI) enables a dialog with the user, both displaying results visually and receiving the user's preferences as inputs via mouse events or textual entries. The solution generator component consists of an optimization or simulation model describing the problem by its decision variables, objective functions and constraints, and an optimization procedure, which can be either an exact or a heuristic-based algorithm searching for solutions to the optimization problem (Collette and Siarry, 2004; Meignan et al., 2015). Some of the widely used metaheuristic procedures include evolutionary algorithms, simulated annealing, and particle swarm optimization. Such algorithms mimic natural phenomena to explore a solution space toward optimal solutions. Unlike exact methods, they rely on stochastic exploration of solutions, searching for combinations of variables which lead to the best performance of a fitness function, or objective. This often makes their implementation simpler, but at the price of requiring many iterations to reach what is often only an approximation of the Pareto front. In the case of exact methods, such as linear (LP) or mixed-integer linear programming (MILP), the solution space is explored in a deterministic way, making the procedure overall more efficient and guaranteeing that (at least weakly) Pareto optimal solutions are found. When dealing with many objectives, a parametrized scalarization function is commonly used to convert the multiobjective problem into several single-objective ones, making it possible to use widely available and rapid single-objective solvers.
By varying the parameters in the scalarization function, a range of Pareto optimal solutions to the initial multiobjective problem can be generated (Branke et al., 2008; Chankong and Haimes, 2008). Together, the optimizer and GUI provide an efficient and systematic framework to generate and represent a large number of Pareto optimal solutions which are most relevant to the user.
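The scalarization idea described above can be sketched in a few lines of Python. The example below uses the ε-constraint form (minimize one objective while bounding the other), which is only one of several possible scalarizations and is chosen here as an assumption for illustration. A finite list of candidates stands in for the feasible set, so the inner `min` plays the role that an LP or MILP solver would play in practice; all names and data are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]  # (f1, f2), both to be minimized


def solve_epsilon_constraint(candidates: Sequence[Point], eps: float) -> Optional[Point]:
    """Minimize f1 subject to f2 <= eps over a finite candidate set.

    Toy stand-in for a single-objective solve. Ties in f1 are broken by f2,
    so the returned point is Pareto optimal and not only weakly so.
    """
    feasible = [c for c in candidates if c[1] <= eps]
    if not feasible:
        return None  # the bound on f2 is too tight
    return min(feasible, key=lambda c: (c[0], c[1]))


def sweep(candidates: Sequence[Point], eps_values: Sequence[float]) -> List[Point]:
    """Vary the scalarization parameter eps and collect the distinct optima."""
    found: List[Point] = []
    for eps in eps_values:
        best = solve_epsilon_constraint(candidates, eps)
        if best is not None and best not in found:
            found.append(best)
    return found


candidates = [(1, 9), (2, 7), (4, 4), (7, 2), (9, 1), (5, 8), (8, 8)]
solutions = sweep(candidates, eps_values=[9, 7, 5, 3, 1, 0])
print(solutions)
```

Each value of `eps` yields one single-objective problem, and tightening the bound on `f2` moves the optimum along the front. The dominated candidates (5, 8) and (8, 8) are never returned, and the bound `eps = 0` is infeasible and therefore skipped.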

There are several compelling benefits of involving a human user in the interactive optimization process. First, the incorporation of expert knowledge, intuition and experience can compensate for the unavoidable simplifications induced by the model (Meignan et al., 2015; Piemonti et al., 2017a; Liu et al., 2018). Second, computational effort is reduced by focusing on only the most promising regions of the solution space (Balling et al., 1999; do Nascimento and Eades, 2005; Liu et al., 2018). Third, the interaction process promotes trust, facilitates learning, and increases the user's confidence in the solutions and thus their likelihood of actually implementing them (Hwang and Masud, 1979; Spronk, 1981; Shin and Ravindran, 1991; Liu et al., 2018). Finally, this approach avoids the need to specify any explicit a priori preference information (Hwang and Masud, 1979; Allmendinger et al., 2016).

On the other hand, the main drawbacks are that IO methods rely on the assumptions that a human DM is available, that they are willing to devote time to the solution process, and that they are able to understand the process, inputs asked of them, and resulting outcomes (Hwang and Masud, 1979; Spronk, 1981; Collette and Siarry, 2004; Branke et al., 2008; Liu et al., 2018). This implies that developed methodologies should be both easily understandable, and able to quickly generate representative Pareto optimal solutions for the user to explore.

1.2. Related Work

1.2.1. Review of Interactive Optimization Procedures

Over the past decades, a variety of interactive optimization methods have been developed, with efforts to improve both the underlying search procedures and the interaction mechanisms. Kok (1986) and Vanderpooten (1989) provided early attempts at describing and organizing IO methods. They distinguished between search-based methods, in which the DM's preference structure is assumed to be stable and preexisting, and learning-based methods, which promote the discovery of preferences in problems where these are not known or are difficult to express.

Many efforts have been made to review and synthesize the technical developments in the field of interactive optimization. The earlier developments of search-based methods are described by Hwang and Masud (1979), Collette and Siarry (2004), Branke et al. (2008), and Chankong and Haimes (2008). These are often classified according to the preference structures required from the DM (e.g., reference points, weights, bounds, tradeoff quantification) and the implications these have on generating Pareto optimal solutions. More recently, Meignan et al. (2015) provided an extensive review of the technical aspects of existing methods. They classified interactive methods based on the following features: the type of optimization procedure used (exact, heuristic or metaheuristic approaches), the user's contribution to the optimization process (affecting either the model or the procedure), and the characteristics of the optimization system (direct or indirect user feedback integration). Regarding optimization procedures, they found that heuristic- or metaheuristic-based approaches were predominant (25 out of 32 surveyed studies), while exact approaches were the minority. A possible explanation for the popularity of metaheuristics is that in interactive methods, approximations of global optima may be good enough given the expected inaccuracies of the model. Another perspective, however, favors exact methods, especially for large problems with thousands of variables. Chircop and Zammit-Mangion (2013) argue that for unconstrained search spaces, stochastic search procedures will require a large number of objective function evaluations, leading to computationally intensive algorithms. Conversely, exact methods, by relying on scalarization techniques and efficient deterministic single-objective approaches, can quickly find optima of even large-scale multiobjective problems [Branke et al., 2008; Williams, 2013; Schüler et al., 2018b, (p. 61, 64)]. 
This efficiency is critical because the number of solutions required to explore the solution space grows exponentially with the number of objectives (Cohon, 1978; Copado-Méndez et al., 2016). Ultimately however, the question of whether exact or heuristic methods are most efficient is debatable, and depends on the problem considered. For structured, convex, linear or quadratic programming problems, metaheuristics may not be more competitive than exact, gradient-based methods. On the other hand, because they rely on multiple solutions per iteration, evolutionary algorithms can easily benefit from parallel processing to achieve greater search efficiency. For these reasons, Branke et al. (2008, p. 64) nuance their conclusion by suggesting further research to understand the respective niches of each procedure, and to develop hybrid approaches exploiting their respective strengths.
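As a rough illustration of the exponential growth in the number of solutions mentioned above (an assumption made here for exposition, not a result from the cited works), suppose the Pareto front is sampled with an ε-constraint scheme on a uniform grid, using $k$ levels for each of the $m-1$ constrained objectives. The number of single-objective problems to solve is then at most:

```latex
N(k, m) = k^{\,m-1},
\qquad\text{e.g. for } k = 10:\quad
N(10, 3) = 10^{2}, \quad
N(10, 5) = 10^{4}, \quad
N(10, 7) = 10^{6}.
```

Under this assumption, each additional objective multiplies the number of solves by $k$, which is why both fast individual solves and an economical choice of sampling points matter as the number of objectives increases.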

Beyond the underlying technical aspects of interactive optimization, growing interest has been devoted to the learning opportunities which it provides. In this vein, Klau et al. (2010) argued that promoting effective interaction and learning mechanisms is more important than efficient algorithms. The reasoning is that whichever solution is produced, it ultimately is a simplification of reality which isn't directly usable. It is thus crucial that the user is able to correctly interpret and recontextualize the results. Therefore, the insights gained during the process, about the tradeoffs, synergies and feasible boundaries are eventually more useful outcomes than the solution itself.

Allmendinger et al. (2016) employ the term “navigation” to encompass not only the optimization procedure, but also the efforts made on real-time exploration of optimization results. However, among the six so-called navigation methods reviewed by Allmendinger et al. (2016), four are a posteriori methods (meaning solutions are precalculated), while only two are interactive (Korhonen and Wallenius, 1988; Miettinen et al., 2010). Their review further reveals that most of these methods tend to handle only five or fewer objectives, and do not consider problems with more objectives or highly uncertain conditions. They call for the development of new methods which can easily handle complex problems with many objectives, and which provide more intuitive GUIs and interaction mechanisms.

1.2.2. Interfaces for Multiobjective Interactive Optimization

The need for intuitive visualization of multiobjective optimization results and interaction with the optimizer has been recognized as a central issue (Xiao et al., 2007; Branke et al., 2008, p. 15). Meignan et al. (2015) conclude that “the development of more natural and intuitive forms of interaction with the optimization system is essential for the integration of advanced optimization methods in decision support tools.” Branke et al. (2008, p. 52) explicitly mention the importance of user-friendliness in IO as a topic for future research. This is also true for the representation of interaction with the problem, e.g., how the DM inputs their preferences (Miettinen and Kaario, 2003), and the representation of the preferences themselves (Branke et al., 2008, p. 201). Liu et al. (2018) note that despite interactive optimization being “essentially a visual analytics task,” literature is rather silent on the specifics of visuals and interaction approaches, focusing rather on optimization procedures and preference models.

Much attention has been given to advanced visualization methods for results of a posteriori multiobjective optimization methods, although their adoption in interactive methods is still low. Efforts in this area are mainly motivated by the fact that while Pareto fronts of up to three objectives can be mapped in traditional planar or three-dimensional representations, problems with many objectives (i.e., more than three) are more challenging due to both the complexity of the data to display and the space required. Among the many available techniques reviewed by Branke et al. (2008) and Miettinen (2014), parallel coordinates stand out as an intuitive and scalable alternative. Introduced by Inselberg (1985) and extensively described by Inselberg (1997, 2009), parallel coordinates are similar to radar charts, except the dimensions are displayed as vertical side-by-side axes instead of radially. This allows the method to scale well to many dimensions, and facilitates the comparison of values and identification of tradeoffs, trends and clusters in the data (Shenfield et al., 2007; Akle et al., 2017). Data points are depicted as polygonal lines (or polylines), which intersect the axes at their corresponding values. The main drawbacks of parallel coordinates include cluttering of the chart when displaying many alternatives, and the impossibility of visualizing all pairwise relationships between dimensions in a single chart (Heinrich and Weiskopf, 2013; Johansson and Forsell, 2016). Studies also emphasize the need for users to receive basic training to better harness parallel coordinates (Shneiderman, 1996; Wolf et al., 2009; Johansson and Forsell, 2016; Akle et al., 2017). 
The recent developments of interactive data visualizations have greatly alleviated these limitations by allowing the user to filter the displayed solutions, reorder axes by dragging them to explore specific pairwise relationships, or change the visual aspect of lines such as color or opacity to reveal patterns across all dimensions (Bostock et al., 2011; Fieldsend, 2016). Heinrich and Weiskopf (2013) provide an extensive review of parallel coordinates and recent developments in their interactive features.
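The way a data point becomes a polyline in parallel coordinates can be sketched as follows. Each axis is scaled independently to the interval [0, 1], and a solution is represented by one vertex per axis. The function name `to_polylines`, the min-max scaling, and the sample criteria are assumptions chosen for illustration; actual charting libraries handle scaling and rendering in their own way.

```python
from typing import Dict, List, Sequence, Tuple

Row = Dict[str, float]
Polyline = List[Tuple[int, float]]  # (axis index, normalized height in [0, 1])


def to_polylines(rows: Sequence[Row], axes: Sequence[str]) -> List[Polyline]:
    """Map each row to a polyline with one vertex per axis, in the given axis order."""
    lo = {a: min(r[a] for r in rows) for a in axes}
    hi = {a: max(r[a] for r in rows) for a in axes}

    def scale(axis: str, value: float) -> float:
        span = hi[axis] - lo[axis]
        # A constant axis has no spread; place it at mid-height by convention.
        return 0.5 if span == 0 else (value - lo[axis]) / span

    return [[(i, scale(a, r[a])) for i, a in enumerate(axes)] for r in rows]


# Hypothetical alternatives described by three criteria.
rows = [
    {"cost": 10.0, "emissions": 4.0, "area": 1.0},
    {"cost": 20.0, "emissions": 2.0, "area": 1.0},
    {"cost": 30.0, "emissions": 0.0, "area": 1.0},
]
lines = to_polylines(rows, axes=["cost", "emissions", "area"])
```

Reordering the axes, as described above, amounts to passing the same rows with a different `axes` sequence. In this toy data set the lines between `cost` and `emissions` cross each other, which is the typical visual signature of a tradeoff between two adjacent axes.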

Several studies investigated the practical applicability of parallel coordinates in the context of multiobjective optimization. Akle et al. (2017) studied their effectiveness in comparison to radar charts and combined tables for the balancing of multiple criteria and selection of preferred solutions. They found parallel coordinates to be the most effective and engaging approach for exploration, requiring less cognitive load and stress than the other charts. They also remark that parallel coordinates were the least known method, and suggest that training users could further improve the performance and usability of the approach. Stump et al. (2009) also encountered this need, and undertook a study comparing the understanding of users who never used their tool with those who had previous experience (Wolf et al., 2009). They found that novice users showed less certainty in which visualizations to use or actions to perform, and concluded that more training prior to using the tool would be necessary. They also suggest that a simplified interface with fewer steering and visualization features, as well as less densely packed alternatives, might help users focus on relevant information and actions (Shneiderman, 2010). It has furthermore been suggested that parallel coordinates cannot display certain kinds of information and should be complemented (e.g., with spatial representations) for a more complete representation of alternatives (Xiao et al., 2007). Kok (1986), Sato et al. (2015) and Bandaru et al. (2017b) point out that when DMs only have a vague understanding of their preferences, it may be easier to specify loose ranges of preferences in the objective space, rather than exact points of preferences. The action of “brushing” available in interactive parallel coordinates charts addresses this issue. 
Brushing is a common action in interactive data visualization, where the user selects and highlights a subset of data, typically by dragging the pointer over the area of interest (Martin and Ward, 1995). One limitation of parallel coordinates is the visualization of Pareto fronts. It is however possible to make some interpretations, though these differ from traditional 2D plots and require familiarity with the charts. Li et al. (2017) provide some insights on how to translate Cartesian representations of Pareto fronts into parallel coordinates.
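Brushing as described above reduces, on the data side, to keeping the solutions whose values fall inside every selected range. The sketch below shows this filtering step only; the name `brush`, the inclusive bounds, and the sample values are assumptions for illustration and do not reproduce any particular tool.

```python
from typing import Dict, List, Mapping, Sequence, Tuple

Row = Dict[str, float]
Brushes = Mapping[str, Tuple[float, float]]  # axis name -> (lower, upper), inclusive


def brush(rows: Sequence[Row], brushes: Brushes) -> List[Row]:
    """Return the rows that lie inside the brushed range on every brushed axis."""
    return [
        r for r in rows
        if all(lo <= r[axis] <= hi for axis, (lo, hi) in brushes.items())
    ]


# Hypothetical solutions and a loose preference range on each of two axes.
solutions = [
    {"cost": 10.0, "emissions": 8.0},
    {"cost": 15.0, "emissions": 5.0},
    {"cost": 22.0, "emissions": 3.0},
    {"cost": 30.0, "emissions": 1.0},
]
selected = brush(solutions, {"cost": (12.0, 25.0), "emissions": (0.0, 4.0)})
print(selected)
```

Axes without a brush impose no restriction, so an empty `brushes` mapping returns all rows. Ranges of this kind express the loose preferences discussed above without requiring the DM to commit to an exact reference point.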

Given the widespread attention received by parallel coordinates, their adoption in the context of multiobjective optimization is not surprising. However, their use remains predominantly confined to a posteriori exploration of precalculated solutions (Bagajewicz and Cabrera, 2003; Xiao et al., 2007; Kipouros et al., 2008; Franken, 2009; Raphael, 2011; Rosenberg, 2012; Miettinen, 2014; Ashour and Kolarevic, 2015; Fieldsend, 2016; Akle et al., 2017; Bandaru et al., 2017a). Only a few studies suggested using parallel coordinates to steer the optimization procedures, but all adopt meta-heuristic approaches, limiting their applicability to smaller problems with few variables (Fleming et al., 2005; Stump et al., 2009; Sato et al., 2015; Hernández Gómez et al., 2016).

1.2.3. Overview of Existing Interactive Optimization Methods

A selected number of methods are described hereafter, outlining their responses to the issues above as well as the remaining gaps. For a more extensive overview of existing approaches, we refer to Branke et al. (2008), Meignan et al. (2015), and Allmendinger et al. (2016).

The Pareto Race tool developed by Korhonen and Wallenius (1988) is considered a multiobjective linear programming navigation method (Allmendinger et al., 2016; Greco et al., 2016), and uses a visual interactive method to steer the search freely, in real-time. Much effort was dedicated to make the use of the software simple and intuitive to lay users, for example simplifying the preference input to “faster,” or “more/less of this objective,” and letting the program translate this into corresponding parameters (increments, aspiration levels, choice of goals). A later adaptation of the tool allowed handling also non-linear (i.e., quadratic-linear) problems, improving the efficiency of generating a continuous and representative portion of the Pareto front (Korhonen and Yu, 2000). The display in Pareto Race consists in bar charts reflecting the last computed solution, from which the user can request more or less of any of the objectives in the next iteration. The main limitation of this simple and intuitive method lies in the underlying iterative nature: displaying only one solution at a time prevents gaining a clear overview of all solutions and relationships between objectives. Therefore, for large problems with many objectives, the user may not be able to explore all potentially interesting solutions in a realistic amount of time. The larger the problem, the less freedom the user has to change their mind frequently (Korhonen, 1996), which limits the applicability of this method for large problems.

Another navigation method is Pareto Navigation (Monz et al., 2008). However, to achieve quick and responsive interaction between the user and the tool, solutions are precalculated; the user can then explore them, or request recombinations of existing plans, which require less time to compute.

The approach developed by Miettinen and Mäkelä (2000) was reportedly the first interactive optimization method made available online. WWW-NIMBUS is a classification-based interactive multiobjective optimization method, which asks the user to classify the objectives according to whether they should be improved, remain unchanged or be relaxed. The principle is that in each iteration, the current solution should be improved according to the user's specifications. Because the process produces Pareto optimal solutions, it is necessary that at least one objective is allowed to deteriorate. While the original implementation was technically limited to relatively small problems, subsequent versions improved both the optimization procedures and the GUI. Hakanen et al. (2007) developed IND-NIMBUS, which included a new nonlinear solver to tackle large-scale problems such as simulated moving beds, and provided new visualizations to compare results, including 3D histograms and parallel coordinates. In A-GAMS-NIMBUS, Laukkanen et al. (2012) combined linear and nonlinear solvers, as well as a bi-level decomposition for a heat exchanger network synthesis problem in industrial processes. The ambition was to provide quick resolution of solutions, which ranged between 1 and 43 minutes per iteration.

In a separate work, Miettinen et al. (2010) proposed a reference-point interactive method, NAUTILUS, addressing two behavioral biases, namely loss aversion and anchoring effects, by encouraging the user to not interrupt the search before finding a most preferred solution. To do so, the very first solution presented to the user is a dominated one, so that each iteration does not necessarily imply sacrificing one objective in favor of another, as happens in most IO methods, where tradeoffs necessarily occur when moving from one non-dominated solution to another.

Babbar-Sebens et al. (2015) developed WRESTORE, an interactive evolutionary algorithm tool to search for preferred watershed conservation measures. The process follows a predetermined sequence of interactive “sessions” during which the user is presented with maps and quantitative indicators for different alternatives. During a session, the user either explores and learns from previously generated alternatives, is asked to rate the new alternatives with a psychometric scale (e.g., “good,” “bad,” etc.), or is supported by an automated search procedure which relieves the user from providing inputs by relying on deep learning models which mimic the user's preference model. In addition, the tool is web-based and aims to enable multiple users to explore alternatives and steer the search jointly. The visualization of the alternatives is done essentially by displaying decision variables on maps with colored layers and icons, and providing quantitative performance indicators via histogram charts. The authors concluded on the relevance of surrogate models to reduce latencies due to computational time. In their study, they report durations of around 10 min per solution using the current watershed model, and total experiment durations spanning several hours or days. In a follow-up work, Piemonti et al. (2017b) studied the usability of the tool, and identified three main improvement points: (i) time dedicated to preference elicitation should be minimized to avoid user fatigue, (ii) the interface should facilitate the access and comparison of detailed information in areas of interest, and (iii) the accumulated findings of the user should be recapitulated at the end of the process.

Stump et al. (2009) present a trade-space visualization (ATSV) tool, which aims to help designers explore the design space in search of a preferred solution. This is an adaptation of the a posteriori exploration of a precalculated trade space, which Balling et al. (2000) first termed “design by shopping.” This feature-rich tool proposes various multidimensional visualizations (scatterplots, parallel coordinates, 3D glyph plots) to explore the trade space and steer the search for new solutions. The visualization tool is coupled to a simulation model by means of an evolutionary algorithm, and the search is influenced by user inputs consisting in reference points or preference ranges. The tool provides a very flexible exploration of the design space, including infeasible and dominated solutions. While this allows a more exhaustive exploration, it necessarily comes at the expense of additional computation time. The approach is therefore limited to computationally inexpensive simulation models, or depends on intermediate calculation phases which rely on low-fidelity surrogate models to narrow the design space before initiating more intensive calculations.

1.3. Research Gaps and Objectives

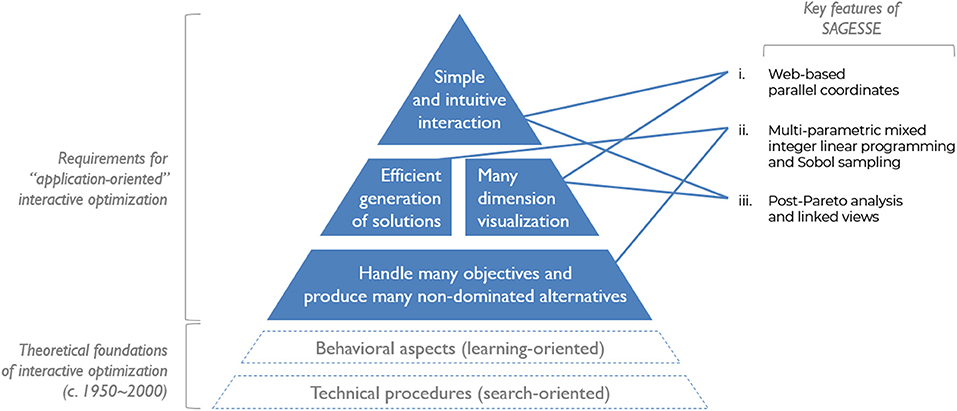

A wide variety of preference types, procedures and interfaces for interactive methods emerge from the existing literature. However, in spite of the early efforts in developing effective search-based procedures, and the more recent efforts in making tools which enable user learning, there remains a slow progression of “application-oriented” methods, which succeed in being adopted outside of academia and for addressing real, large-scale problems (Gardiner and Vanderpooten, 1997; Allmendinger et al., 2016). Four interconnected requirements, which remain only partially achieved in existing methods, can explain this gap (Figure 2):

• First and foremost, methods must have the ability to handle many objectives, and produce many efficient alternatives reflecting the complexity of real-world problems. Xiao et al. (2007, p. 235) noted that most interactive methods still rely on a relatively limited number of solutions, possibly overlooking important Pareto optimal ones, while Allmendinger et al. (2016) found that they typically involve a limited number of objectives, rarely exceeding five. The notion of efficient, or Pareto optimal is also essential, because the goal is to focus the attention of decision makers on the most competitive solutions, and avoid wasting time on less interesting ones (Balling et al., 2000).

• The previous requirement leads to the need for methods which are capable of overcoming the associated computational burden. It is crucial that results are delivered promptly to reduce latency time for users, whose willingness to participate might otherwise be compromised (Collette and Siarry, 2004; Branke et al., 2008; Miettinen et al., 2010). This entails not only that individual solutions are rapidly calculated, but also that they efficiently provide a representative overview of the Pareto optimal front. So far, it appears the underlying trade-off with computational speed has been between either addressing only computationally inexpensive problems (or relying on low-fidelity approximations) (Stump et al., 2009), or facing longer calculation times, possibly disrupting the search and learning experience (Babbar-Sebens et al., 2015).

• Visualization approaches for multiobjective optimization results are equally important, and have been extensively reviewed by Packham et al. (2005), Miettinen (2014), and Fieldsend (2016), clarifying the advantages and limits of the available options (scatterplot matrices, spider charts, Chernoff faces, glyphs…). Among these, parallel coordinates increasingly stand out as one of the most efficient approaches. They are known for their intuitive representations (Packham et al., 2005; Akle et al., 2017), as well as for occupying the least amount of space per criterion, making them highly scalable to many criteria (Fleming et al., 2005). Despite these strengths, like other visualizations, parallel coordinates also suffer from a lack of readability when displaying many solutions, and from the difficulty of viewing all pairwise relationships in a single chart. The development of interactive visualization methods such as the data-driven documents (D3) library has made it possible to partly overcome these issues by filtering solutions and rearranging axes (Bostock et al., 2011; Heinrich and Weiskopf, 2013). While Inselberg (1997) provided valuable guidelines on how to effectively interpret relationships in parallel coordinates, recent studies considered more closely the interpretation of Pareto optimal solutions (Li et al., 2017; Unal et al., 2017). Furthermore, the display of quantitative criteria may not always suffice to make an informed decision, and augmenting the traditional display of results with, for example, maps (Xiao et al., 2007), depictions of physical geometries (Stump et al., 2009), values of decision variables (Gardiner and Vanderpooten, 1997) or qualitative criteria (Cohon, 1978) is advised.

• Finally, a simple and intuitive interface is necessary to complement the aforementioned requirements. The user must be able to not only easily understand the results, but also steer the process with minimal effort. However, complex jargon and difficult inputs are still considered barriers to a wider adoption of interactive methods in practice (Cohon, 1978; Wolf et al., 2009; Akle et al., 2017). Meignan et al. (2015), Allmendinger et al. (2016), and Branke et al. (2008, p. 52) all conclude their reviews with a call for improvements in the development of user-friendly interfaces and methods.

Figure 2. Summary of requirements for “application-oriented” interactive optimization methodologies. The key features from the methodology proposed in this paper (SAGESSE) and their relationship to the requirements are indicated on the right.

While the methods reviewed above address one or several of these requirements, none addresses them all simultaneously. The objectives of this paper are thus (i) to introduce a new interactive optimization methodology addressing the requirements in Figure 2, and (ii) to demonstrate its applicability to a large problem. The case-study used for the second objective relies on the multiparametric mixed integer linear programming approach described by Schüler et al. (2018b), applied to the context of urban planning.

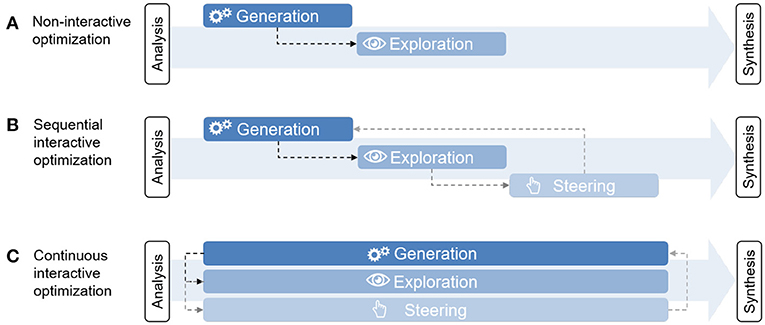

2. Description of the SAGESSE methodology

SAGESSE – for Systematic Analysis, Generation, Exploration, Steering and Synthesis Experience – is an interactive optimization methodology designed to address the requirements summarized in Figure 2. It differs from most traditional sequential or alternating paradigms found in interactive optimization, as the steps of generation, exploration and steering blend together to form a single, continuous, interactive search process capable of tackling large problems (Figure 3). This is made possible by combining three main features in particular (Figure 2): (i) web-based parallel coordinates, as a means to simultaneously explore multiple dimensions and steer the underlying alternative generation process, (ii) deterministic optimization methods coupled with a quasi-random “Sobol” sampling method to efficiently capture large solution spaces, and (iii) on-the-fly application of Post-Pareto analysis techniques (i.e., multiattribute decision analysis and cluster analysis) as well as linked views to various representations of the solutions to support decisions. Preceding the interactive search, an analysis phase is performed by the user and the analyst to translate the constituents of the real-world problem into an optimization model (decision variables, objectives, constraints). Following the interactive search, the methodology provides a way to synthesize the gained knowledge by extracting the subset of most preferred solutions and key criteria. Overall, SAGESSE constitutes an experience: a practical contact with facts, which leaves an impression on its user (Oxford, 2018). This means the user does not merely obtain a final solution suggested by the model, but rather acquires the knowledge of, and confidence in, why certain solutions are preferable to them. As noted by French (1984), “a good decision aid should help the decision maker explore not just the problem, but also himself.” Finally, this confidence is reinforced by the systematic nature of the methodology, which explores the solution space with an optimization model and a rigorous sampling technique, reducing the chances of missing a better alternative.

Figure 3. Novel paradigm for interactive multiobjective optimization, where generation, exploration and steering are performed continuously instead of sequentially.

2.1. Overview of Workflow

Figure 4 describes the general workflow of the methodology, which consists of six main steps. While in principle steps 1 to 5 all occur simultaneously (i.e., generation, exploration and steering tasks happen at the same time), their methodological aspects are explained hereafter sequentially.

Figure 4. Overview of components, workflow and main software involved in the interactive optimization methodology and case-study. Gray text indicates optional tasks.

2.2. Starting a Project

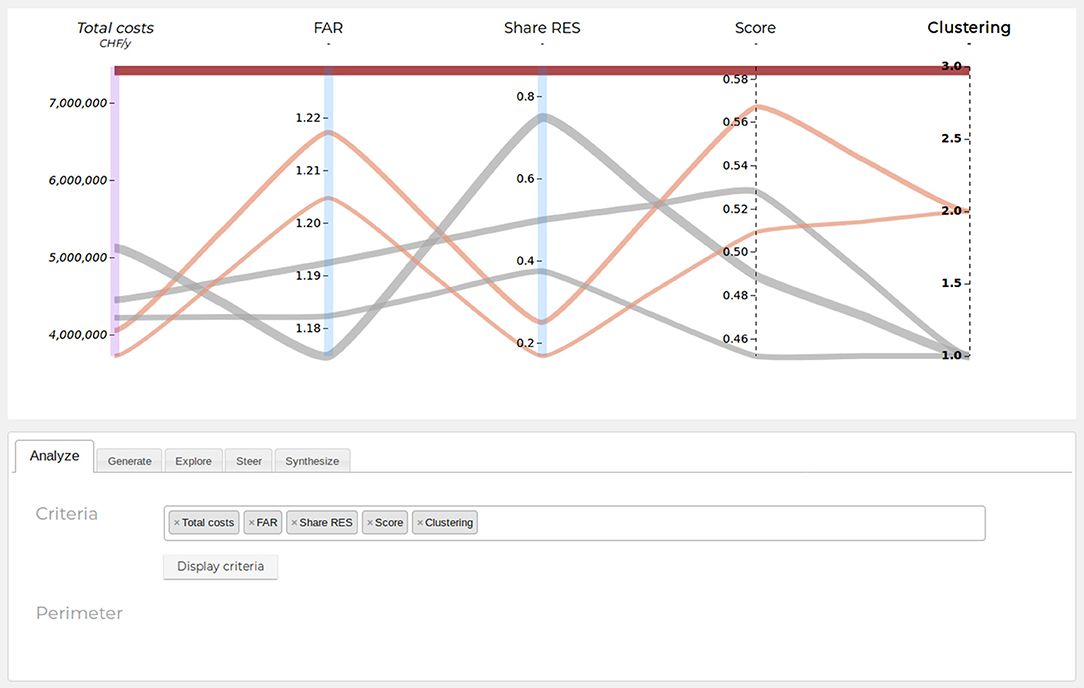

When accessing the interface, the user can either start a new project, or reload an existing one. For a new project, by default an empty parallel coordinates chart with preselected criteria is displayed. An advantage of starting from an empty chart is that it attenuates the risk of anchoring bias, which may cause the user to fixate too soon on possibly irrelevant starting solutions, at the expense of exploring a wider variety of solutions (Miettinen et al., 2010; Meignan et al., 2015). Figure 5 shows the main components of the GUI, namely the parallel coordinates chart, and the tabs from which the user can perform and control the main SAGESSE actions.

Figure 5. Snapshot of the graphical user interface demonstrating several features of the SAGESSE methodology, including axis and polyline styling, multiattribute and cluster analysis results, and the axis selection menu. The line color indicates the belonging of a line to one of the three clusters (bold axis label), while the line thickness is proportional to total costs (italic axis label).

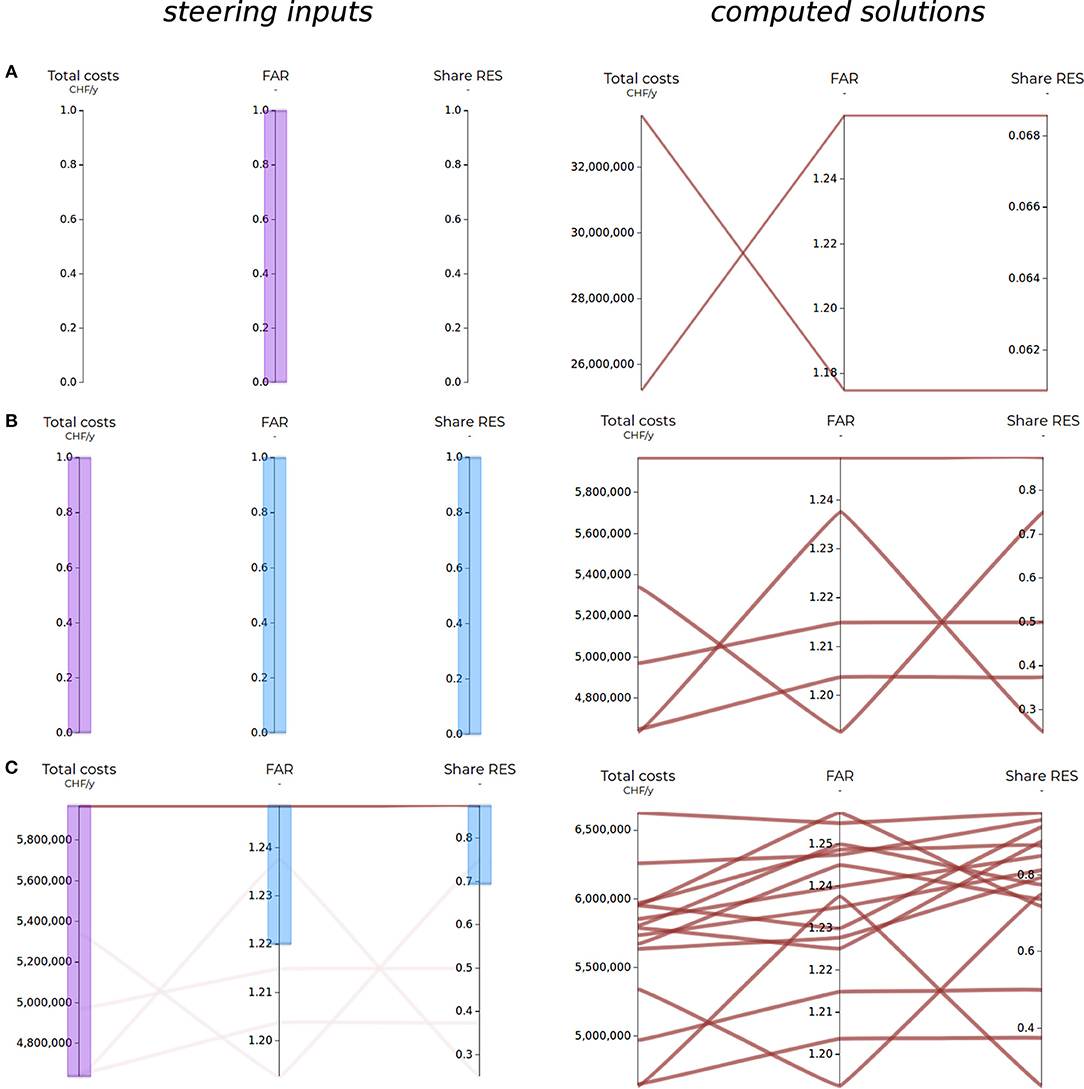

2.3. Steering the Search

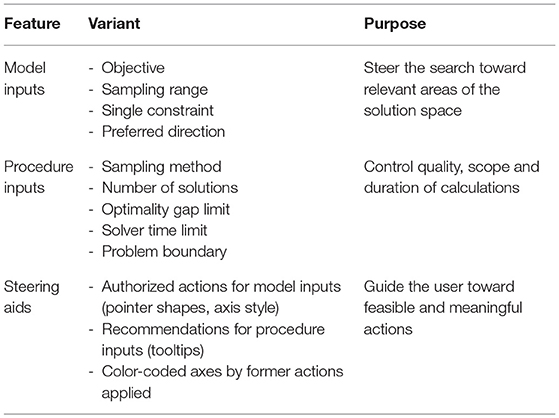

The user can influence the search in two ways: by providing inputs which influence either the optimization model, or the optimization procedure (Figure 4, Step 1). All user inputs are stored in the database, where the generator components can access them for further processing. These inputs, as well as various visual aids, constitute the available steering features, described hereafter and summarized in Table 1.

2.3.1. Optimization Model Inputs

The user specifies their preferences directly on the parallel coordinates chart which is used to display the solutions. This is done by brushing the axes to be optimized or constrained (Martin and Ward, 1995).

There are three associated steering actions performed in Step 1, which will characterize the axes and the role they play in the optimization procedure in Steps 3 and 4 (Figure 4).

• The first action consists in defining the main objective in the ϵ-constraint (epsilon-constraint) formulation described in section 2.5. Exactly one objective is specified for any new problem to be formulated. This is done by brushing the axis with the “objective” brush (colored in purple). For this action, the numeric values of the brush boundaries do not matter.

• The second action consists in marking one or several axes as single constraints so that they achieve at least (or at most, respectively) a specified value. A red brush is used for this action, and either the upper or lower bound of the brush defines the value to achieve, depending on the preferred direction of the criterion (see below).

• The third action systematically varies the value of a parametrized constraint within the boundaries of a brushed range (in blue). The numeric values for these parametrized constraints are automatically determined by the chosen sampling method (cf. section 2.5), based on the requested number of solutions. While specifying single constraints denotes a “satisficing” (satisfy + suffice) behavior, which might arise if the user is certain that there is no tangible gain in achieving a value better than the specified one (Branke et al., 2008, p. 8), specifying ranges instead allows the optimization of multiple objectives (see section 2.5).

Finally, for any criterion marked as either of the above actions, its preferred direction can be specified. For example, a cost criterion's preference will be “less,” indicating that less of that criterion is preferred to more. A benefit criterion will be “more,” as more is preferred to less. Practically, “less” results in minimization for criteria brushed as objectives, and in upper constraints for criteria brushed as ranges or constraints. Conversely, “more” results in maximization for objectives, and lower constraints for ranges or constraints. Typically, default preferences are known and already included during the analysis phase for each criterion, however they can be edited by the user during the search phase, e.g., to test extreme cases.
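The way the three brush types and the preferred direction combine into model inputs can be sketched as follows. This is only an illustration under stated assumptions, not the SAGESSE implementation: the `Brush` class, the `translate` function and the layout of the returned dictionary are hypothetical names introduced here.

```python
from dataclasses import dataclass


@dataclass
class Brush:
    """Hypothetical record of one brushed axis (names are illustrative)."""
    axis: str        # criterion shown on the axis
    kind: str        # "objective", "constraint" or "range"
    lower: float     # lower brush boundary
    upper: float     # upper brush boundary
    direction: str   # preferred direction: "less" or "more"


def translate(brushes):
    """Turn brushes into an objective, single constraints and sampled ranges."""
    objectives = [b for b in brushes if b.kind == "objective"]
    if len(objectives) != 1:
        raise ValueError("exactly one objective brush is required")
    obj = objectives[0]
    spec = {
        # "less" means minimization, "more" means maximization;
        # the numeric brush boundaries of the objective are ignored
        "objective": (obj.axis, "min" if obj.direction == "less" else "max"),
        "constraints": [],
        "ranges": [],
    }
    for b in brushes:
        # "less" yields upper constraints, "more" yields lower constraints
        sense = "<=" if b.direction == "less" else ">="
        if b.kind == "constraint":
            bound = b.upper if b.direction == "less" else b.lower
            spec["constraints"].append((b.axis, sense, bound))
        elif b.kind == "range":
            # the bound is later sampled between lower and upper
            spec["ranges"].append((b.axis, sense, b.lower, b.upper))
    return spec
```

For instance, a purple brush on a cost axis, a red brush on an emissions axis and a blue brush on a benefit axis would yield one minimized objective, one upper constraint and one range in which lower bounds are sampled.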

2.3.2. Optimization Procedure Inputs

The first type of input regarding the optimization procedure is the stopping criteria for the solver, i.e., a solving time limit, and an optimality gap limit. The optimality gap is a useful feature specific to deterministic global optimization, which makes it possible to produce solutions “that differ from the optimum by no more than a prescribed amount” (Lawler and Wood, 1966). Thus, a user can decide a priori if they would be willing to accept a solution differing by no more than e.g., 5% from the theoretical optimum, in exchange for reduced computational time. Setting looser limits, i.e., lower time or larger optimality gap limits, leads to solutions being returned earlier by the solver, but being potentially further away from the global optimum. While the former is always desirable, the latter may be acceptable when a close approximation of the optimum suffices. However, these limits must not be set too loosely, as solutions too far from the optimum can lead to false interpretations. It is also worth emphasizing that because the calculations are continuously performed while the user explores existing solutions, a “waiting time” of up to two minutes seems acceptable. Indeed, the user is likely occupied interpreting the already calculated alternatives across the many criteria available, and is therefore most likely not completely idle.
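The two stopping criteria can be illustrated with a small sketch. The relative gap definition used here (distance between incumbent and best bound, divided by the incumbent) is one common convention and is an assumption, since solvers differ in the exact formula. The function names and default limits are hypothetical, with the 5% gap and two-minute limit taken from the figures mentioned above.

```python
def relative_gap(incumbent, best_bound):
    """Relative optimality gap between the best solution found and the best bound."""
    denom = max(abs(incumbent), 1e-10)  # guard against division by zero
    return abs(incumbent - best_bound) / denom


def should_stop(incumbent, best_bound, elapsed_s, gap_limit=0.05, time_limit_s=120.0):
    """Stop as soon as either the gap limit or the time limit is reached."""
    return relative_gap(incumbent, best_bound) <= gap_limit or elapsed_s >= time_limit_s
```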

A second input is the sampling method to be employed within the specified ranges, and an associated number of solutions to be sampled (see section 2.5). A third input consists in the desired scope or boundary of the problem. For example, in the case of urban planning, the perimeter to be considered in the problem can be increased or reduced.

While in principle these inputs could also be made directly via the parallel coordinates, they are specified here with buttons, forms and drop down menus. Except for the problem boundary, it should be noted that these inputs are typically predefined by the analyst, and do not require particular understanding from the user. They are rather intended for more experienced users and modelers.

2.3.3. Steering Assistance

Given the different types of content that can be displayed on the axes of the parallel coordinates chart, their typology and permitted steering actions must be clearly and intuitively conveyed to the user. Colored brushes, different axis styles and textual tooltips are used for this purpose. The axes can display two main types of information:

i. Methodology-specific information is displayed on axes with a dashed line style (Figure 5). They contain metadata related to the optimization procedure (e.g., iteration number, achieved optimality gap by the solver), or requested by the user in the exploration step (e.g., clustering results or aggregated score, see section 2.7).

ii. Context-specific information generated by the optimization model is displayed on axes with a continuous line style (Figure 5). As such, they can represent an objective function, a constraint, a decision variable, a model parameter or a post-computed criterion (i.e., which is calculated after all decision variables have been determined). Functions expressed as a non-linear combination of decision variables can generally not be optimized with linear solvers, and, depending on the respective formulation, can also not be constrained. They are thus restricted to being post-computed. Axes containing linear functions, model parameters or decision variables can also be brushed as single equality constraints to fix their values, as ranges in which the constraints are systematically varied, or as objectives. To guide the user in the steering process, adapted mouse pointers inform them whether or not an action is allowed on any hovered axis. Furthermore, tooltips briefly explain any forbidden action, and, if possible, how to proceed to achieve an equivalent outcome (for example by specifying a range for a criterion, whose underlying objective function implies a non-linear combination of decision variables, but which can be transformed into a linear constraint).

Another feature to assist the user in steering consists in highlighting the axes which played an active role in the optimization problem (Figure 5). This information is specific to each solution, as the role of an axis is not unique and can change as the search progresses. Thus, when hovering over a polyline, the axes which acted as objective, range or constraint in the generation of that solution are temporarily colored with the respective colors (purple, blue, and red). For example, this helps the user identify criteria which can potentially be further improved because they were so far only post-computed, and those which could be relaxed for the improvement of others.

2.4. Storing Data

A relational database is used to store both the data provided by the user in the interface (e.g., project details, raw steering preferences), and the data produced by the solution generator engine (e.g., problem formulations, solution results and related metadata). The data model for interactive optimization which was developed for the present methodology is described by Schüler et al. (2018a).

2.5. Formulating Problems

Once the user has specified the desired criteria to optimize (i.e., using objective and range brushes in Step 1), the goal is to solve the following generic multiobjective optimization problem, assuming without loss of generality all minimizing objectives (Collette and Siarry, 2004):

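$$\min_{x} \; f(x) = \left[f_1(x), \ldots, f_k(x)\right]^{T} \quad \text{subject to} \quad g(x) \leq 0, \;\; h(x) = 0, \;\; x \in S \tag{1}$$

where the vector $f(x) \in \mathbb{R}^k$ contains the k objective functions to minimize, $g(x) \in \mathbb{R}^q$ are the inequality constraints, $h(x) \in \mathbb{R}^r$ are the equality constraints, and $x \in \mathbb{R}^d$ are the d decision variables in the feasible region $S \subset \mathbb{R}^d$, whose values are to be determined by the optimization procedure.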

In principle, this problem could be solved with either a deterministic or a heuristic method (cf. section 1.1). However, in order to benefit from widely available and efficient optimization algorithms such as the simplex or branch-and-bound algorithms (Lawler and Wood, 1966), a deterministic approach is chosen here. To solve the problem deterministically, a scalarization function is applied to transform the multiobjective optimization problem in Equation (1) into N parametrized single-objective optimization problems which will each return a Pareto optimal solution to the original multiobjective problem (Branke et al., 2008). By varying the parameters of the scalarized function, different solutions from the Pareto front can be produced. In summary, to generate the points on a Pareto front what is needed is (i) an appropriate scalarization function, and (ii) a systematic approach to vary the parameters (Laumanns et al., 2006). The requirements for both of these aspects in the context of interactive optimization are discussed next.

2.5.1. Adopted Scalarization Function

Scalarization functions have three key requirements in the context of interactive methods (Branke et al., 2008): (i) they must have the capability of generating the entire Pareto front, while (ii) relying on intuitive input information which accurately reflects the user's preferences, and (iii) being able to quickly provide an overview of different areas on the Pareto front. Two of the most common and intuitive scalarization techniques are the weighted sum (WSM) and the ϵ-constraint methods (Mavrotas, 2009; Oberdieck and Pistikopoulos, 2016). While both are able to generate Pareto optimal solutions, the WSM only partially meets the above requirements. In the WSM, a new unique objective function is created, which consists of the weighted sum of all original k objective functions (Collette and Siarry, 2004, p. 45):

$$\min_{x \in S} \; \sum_{i=1}^{k} w_{n,i} \, f_i(x) \tag{2}$$

where $w_{n,i} \geq 0$ and $\sum_{i=1}^{k} w_{n,i} = 1$; n = 1, …, N, and N is the number of combinations of the weight parameters $w_{n,i}$ leading to Pareto optimal solutions. A first limitation of the WSM is that if the Pareto front is non-convex, the scalar function cannot generate solutions in that area (Branke et al., 2008; Mavrotas, 2009; Wierzbicki, 2010). Second, the WSM is biased toward extreme solutions, rather than solutions that balance the objectives (Branke et al., 2008; Wierzbicki, 2010). The specification of weights as inputs can have other counterintuitive and error-prone consequences on objectives, and thus be more frustrating to use for controlling the search (Cohon, 1978; Larichev et al., 1987; Tanner, 1991; Laumanns et al., 2006; Branke et al., 2008; Wierzbicki, 2010). For example, Wierzbicki (2010, p. 45) illustrates how, in a three-objective problem where each objective has an equal weight of 0.33, the attempt to strongly increase the first objective, slightly increase the second, and allow the third to decrease will not be reflected accordingly in the change of weights. Indeed, in the proposed example, modifying the weights to 0.55, 0.35, and 0.1 respectively for each objective in fact only leads to an increase of the first objective, while both others are decreased. The larger the number of objectives, the more such issues are expected to occur, and thus the more difficult it becomes for the user to determine weights which accurately reflect their preferences. Finally, the weighted sum also requires some form of normalization of incommensurable criteria toward comparable magnitudes, which can also influence the results and might require the user to specify upper and lower bounds a priori (Mavrotas, 2009).

In the ϵ-constraint method, introduced by Haimes et al. (1971), instead of optimizing all k objectives simultaneously, only one is optimized, while the other objectives are converted to parametrized inequality constraints:

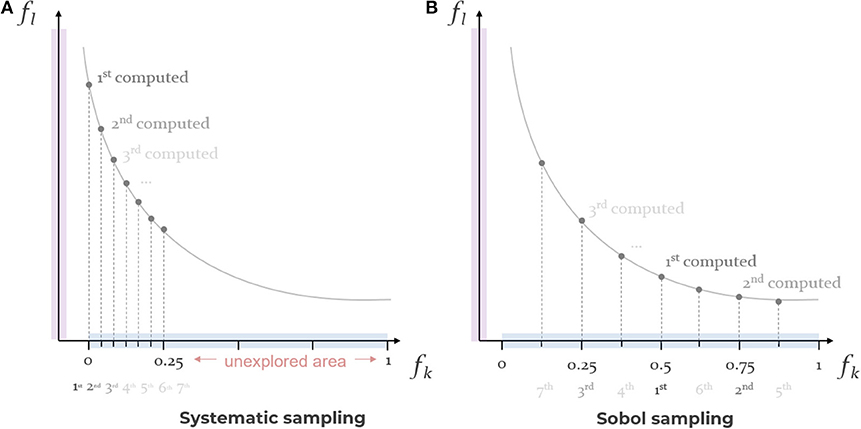

where l ∈ {1, …, k}, n = 1, …, N, N is the total number of points calculated in the Pareto front, and ϵn, j are parameters representing the upper bounds for the auxiliary objectives j ≠ l. In the original method, Nj unique upper bounds for each auxiliary objective are determined within a range of interest, delimited by a minimum and a maximum bound, by incrementing the bound by a fixed value. The minimum and maximum bounds can either be based on the expertise of the DM, or be computed by minimizing and maximizing each objective individually. The problem is solved for each unique combination of ϵn, j, i.e., for a total of N combinations, where N is the product of the Nj over all auxiliary objectives j ≠ l (Chankong and Haimes, 2008, p. 285) (Figure 6A).

Figure 6. Schematic comparison of (A) systematic and (B) Sobol sampling for specifying constraints in an ϵ-constraint problem minimizing two objectives: state after 7 computed samples of a total of 25 requested points. Purple: main ϵ-constraint objective fl. Blue: arbitrary range of interest in the auxiliary objective fj, in which the upper bounds ϵr, j are automatically allocated by the sampling method (note: the ticks indicate the relative position of the constraints for a normalized range, and the subscripts r indicate the order in which each upper bound is used by the solver).

Conceptually, the ϵ-constraint method can be understood as the specification of a virtual grid in the objective space, and solving the single-objective optimization problem for each of the N grid points (Laumanns et al., 2006). The main advantages of the ϵ-constraint method over the weighted-sum method are that: (i) it can handle both convex and non-convex Pareto fronts (avoiding the need to evaluate the convexity of the solution space), (ii) the specification of bounds is a more intuitive and less misleading concept than setting weights (Cohon, 1978; Kok, 1986; Wierzbicki, 2010), and (iii) the variation of constraints leads to a richer and less redundant set of solutions (Branke et al., 2008; Mavrotas, 2009). For these reasons, the ϵ-constraint is adopted in the present interactive optimization methodology.
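The first advantage can be illustrated on a toy problem. The sketch below uses three hypothetical, mutually non-dominated points for two minimized objectives, where point C lies in a non-convex region of the front. The point coordinates and function names are invented for illustration only.

```python
# Three Pareto optimal points (f1, f2), both minimized; C is in a non-convex region
points = {"A": (0.0, 10.0), "B": (10.0, 0.0), "C": (6.0, 6.0)}


def weighted_sum_pick(w1):
    """Return the point minimizing w1*f1 + (1 - w1)*f2."""
    return min(points, key=lambda n: w1 * points[n][0] + (1.0 - w1) * points[n][1])


def eps_constraint_pick(eps):
    """Return the point minimizing f1 subject to f2 <= eps (None if infeasible)."""
    feasible = [n for n in points if points[n][1] <= eps]
    return min(feasible, key=lambda n: points[n][0]) if feasible else None


# Sweep 101 weight combinations and three upper bounds on f2
ws_found = {weighted_sum_pick(i / 100) for i in range(101)}
ec_found = {eps_constraint_pick(eps) for eps in (0.0, 6.0, 10.0)}
```

In this example, no weight combination ever selects C, because either A or B always achieves a weighted sum of at most 5 while C scores 6, whereas the bound f2 ≤ 6 recovers it directly.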

In addition, the original ϵ-constraint method in Equation (3) can be reformulated as a multiparametric optimization problem (Pistikopoulos et al., 2007), in which not only the upper bounds $\epsilon_{n,j}$ of the auxiliary objectives are varied, but also any other model parameter $\theta_t$ in the vector $\theta \in \mathbb{R}^m$. Thus, assuming without loss of generality all minimizing functions, the nth problem being solved can be written as:

where n = 1, …, N, and N is the total number of points calculated in the Pareto front. For simplicity, all parameters to be varied by a sampling scheme (regardless of whether they refer to a function fj or to a model parameter θt) are referred to as ϵn, p, where p = 1, …, P, and where, by definition, P = k − 1 + u is the total number of varied parameters, i.e., the k − 1 auxiliary objectives plus u varied model parameters. Thus, let E be the matrix of all sampled parameters in Equation (4), which contains in each row the sampled parameters of the nth problem being sent from the client to the optimization procedure:
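Under the definitions given in the text, the multiparametric problem and the parameter matrix can be sketched as below. This is a hedged reading rather than the authors' exact notation: the column ordering that places the u varied model parameters after the k − 1 auxiliary objectives, and the symbols $\epsilon_p^{\min}$ and $\epsilon_p^{\max}$ for the bounds of the range of interest, are assumptions made here.

```latex
% n-th single-objective problem (sketch of Equation 4)
\min_{x \in S} \; f_l(x, \theta)
\quad \text{s.t.} \quad
f_j(x, \theta) \leq \epsilon_{n,j} \;\; \forall j \neq l,
\qquad
\theta_t = \epsilon_{n,\,k-1+t} \;\; t = 1, \ldots, u

% matrix of sampled parameters, one row per problem (sketch of Equation 5)
E =
\begin{pmatrix}
\epsilon_{1,1} & \cdots & \epsilon_{1,P} \\
\vdots         & \ddots & \vdots         \\
\epsilon_{N,1} & \cdots & \epsilon_{N,P}
\end{pmatrix},
\qquad
\epsilon_p^{\min} \leq \epsilon_{n,p} \leq \epsilon_p^{\max}
```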

Referring to the steering actions performed by the user and defined in section 2.3.1, the brushed objective here corresponds to fl in Equation (4), while the lower and upper bounds of brushed ranges correspond to the lower and upper bounds of the range of interest in Equation (5). It is worth noting here that for the particular case where fj(x, θ) = xd, the user can also control and vary individual decision variables directly. Finally, single constraints do not require sampling, and are thus handled separately: when brushed on an axis representing a function, a single parameter is fixed as equal to the upper value of the brush (or to the lower value of the brush for a maximizing function). Furthermore, as, from a modeling perspective, parameters do not possess any “preferred direction”, in case a single constraint brush is employed to fix their value, the lower bound of the brush is considered by default.

Despite the advantages of the ϵ-constraint method, Chankong and Haimes (2008, p. 285) noted that it can be inefficient when perturbing the values of the ϵn, p bounds in the incremental fashion described above. As such, and especially when many dimensions are involved, the generation of solutions using the ϵ-constraint method can be time-consuming and uneven across the objective space when interrupted prematurely, leading to a poor representation of the Pareto front (Collette and Siarry, 2004; Chankong and Haimes, 2008; Copado-Méndez et al., 2016). This lack of efficiency is particularly problematic in interactive methods, as the user should be presented with an overview of the Pareto optimal solutions as fast as possible in order to know which areas lead to preferred alternatives. The use of sampling techniques to facilitate and improve the determination of ϵn, p in Equation (4) is discussed next.

2.5.2. Adopted Sampling Method

Several studies have investigated ways to improve the determination of parameters in the ϵ-constraint method. For example, Chircop and Zammit-Mangion (2013) proposed an original algorithm to explore two dimensional problems more efficiently and evenly with the ϵ-constraint method, avoiding sparse Pareto fronts. However, their procedure is restricted to bi-objective problems. The use of various sampling techniques has also been studied as a means to efficiently explore a space with a minimum amount of points. Burhenne et al. (2011) compared various sampling techniques and found that the Sobol sequence (Sobol, 1967) leads to a more efficient and robust exploration of parameter spaces, and is thus recommended when sample sizes must be limited due to time or computational limitations. A Sobol sequence is a quasi-random sampling technique designed to progressively generate points as uniformly as possible in a unit hypercube (Figure 6B) (Burhenne et al., 2011). Closely related to the present approach, Copado-Méndez et al. (2016) tested the use of pseudo- and quasi-random sequences to allow the ϵ-constraint method to handle many objectives more efficiently. They obtained better quality and faster representations of Pareto optimal solutions using a combination of Sobol sequences and objective reduction techniques, compared to the standard ϵ-constraint method and other random sequences. Franken (2009) also uses a Sobol sequence approach for the exploration of promising input parameters for particle swarm optimization in parallel coordinates. However, the adoption of quasi-random sequences for real-time benefits in interactive optimization has not been tried. This approach – rather than a regular systematic sampling typically adopted in the ϵ-constraint method – is particularly relevant when dealing with interactive methods, as the order with which the solutions are explored is critical when the user's time is limited. 
Furthermore, the Sobol sequence relieves the user of a considerable burden: they need only specify loose ranges of approximate preference or interest, and the sequence automatically determines the constraints in the next most efficient location of the solution space.

In SAGESSE, the quasi-random Sobol sampling method (Sobol, 1967) is therefore adopted and can be selected to vary the parameters in Equation (5), ensuring a quick and efficient exploration of the entire space with a minimum amount of solutions (Figure 6B).

With the Sobol sampling approach, the user specifies a number of solutions N, and the corresponding parameters in E are computed as:

where sn, p is an element in the matrix SN×P, whose rows contain the Sobol sequence of N coordinates in a P-dimensional unit hypercube. Various computer-based Sobol sequence generators have been developed and implemented to compute the elements of SN×P. Here, a Python implementation based on Bratley and Fox (1988) was used, allowing the generation of sequences of up to 40 dimensions (Naught101, 2018). Other generators could increase this number, e.g., allowing sequences for up to 1111 dimensions (Joe and Kuo, 2003). As an illustration, the numeric values sampled with the Sobol approach for N = 5 points and P = 3 parameters in ranges [0, 1] are provided in Esob, Equation (7). This choice of range further implies that in this example, the coordinates of the parameters are in fact identical to those of the Sobol sequence in a unit hypercube:
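The mapping from unit-hypercube coordinates to the brushed ranges can be sketched as follows, assuming a linear scaling ϵ = ϵmin + s·(ϵmax − ϵmin). That formula is an assumption, chosen because it agrees with the remark that ranges of [0, 1] leave the coordinates unchanged. To stay free of dependencies, the sketch uses a Halton sequence as a stand-in quasi-random generator. It is not a Sobol sequence and produces different points, so in practice a dedicated Sobol generator would replace `halton`. All function names are hypothetical.

```python
PRIMES = (2, 3, 5, 7, 11, 13)  # one base per sampled dimension (up to 6 here)


def radical_inverse(index, base):
    """Van der Corput radical inverse of a positive integer in the given base."""
    result, fraction = 0.0, 1.0 / base
    while index > 0:
        index, digit = divmod(index, base)
        result += digit * fraction
        fraction /= base
    return result


def halton(n_points, n_dims):
    """Quasi-random points in the unit hypercube (stand-in for a Sobol generator)."""
    return [[radical_inverse(n, PRIMES[p]) for p in range(n_dims)]
            for n in range(1, n_points + 1)]


def scale(unit_points, lower, upper):
    """Map each unit coordinate s to lower + s * (upper - lower), per dimension."""
    return [[lo + s * (hi - lo) for s, lo, hi in zip(row, lower, upper)]
            for row in unit_points]


S = halton(5, 3)                                      # N = 5 points, P = 3 parameters
E = scale(S, [0.0, 10.0, 100.0], [1.0, 20.0, 200.0])  # rows sent to the solver
```

Because the points are generated progressively, interrupting the sequence after any number of rows still leaves the sampled bounds spread across the whole range, which is the property exploited for interactive use.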

Alternatively, a standard systematic sampling method can also be used (Gilbert, 1987), which can in some cases be preferred to the Sobol sampling method. With systematic sampling, the space is systematically explored by dividing the sampled dimensions into regular intervals. An important drawback of this sampling method is that it can lead to misleading or biased insights if the sampled solution space contains “unsuspected periodicities” (Gilbert, 1987). In addition, it is less convenient for real time optimization because of its slower progression throughout the solution space (Figure 6A). Nevertheless, this sampling technique can provide more control to the user than the Sobol approach. For example, it can be used to perform a systematic sensitivity analysis on the parameters in Equation (4), by systematically combining specific values on different axes. Unlike for the Sobol sequence, in this approach, the total number of sampled points is given implicitly by N = N1 × N2 × … × NP, where Np is the number of requested points in the range of interest of each dimension.

Each dimension thus contains Np unique values to sample, spaced by a constant increment within the range of interest of that dimension. The corresponding matrix Esys resulting from systematic sampling is then populated by combining all parameter values in the following order:

As an example, for three dimensions sampled with systematic sampling between [0, 1] and for N1 = 3, N2 = 2, N3 = 2, the resulting matrix of varied parameters is:
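A systematic grid of this kind can be sketched as follows. Two details are assumptions rather than facts taken from the text: the values in each dimension include both ends of the range, and rows are ordered with the last dimension varying fastest. The function name is hypothetical.

```python
from itertools import product
from math import prod


def systematic_grid(lower, upper, counts):
    """Combine evenly spaced values of every dimension into one row per problem."""
    axes = []
    for lo, hi, n in zip(lower, upper, counts):
        # constant increment; assumes both range ends are sampled
        step = (hi - lo) / (n - 1) if n > 1 else 0.0
        axes.append([lo + i * step for i in range(n)])
    # Cartesian product: the last dimension varies fastest (assumed ordering)
    return [list(row) for row in product(*axes)]


E_sys = systematic_grid([0.0, 0.0, 0.0], [1.0, 1.0, 1.0], [3, 2, 2])
n_rows = prod([3, 2, 2])  # N = N1 * N2 * N3 = 12
```

Under this ordering, the first rows all share the same value in the first dimension, which illustrates the slow progression through the solution space noted above.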

2.6. Generating Solutions

In this step, the single-objective optimization problems formulated based on Equation (4) are solved. In particular, the solver receives from the client the main objective to optimize, as well as the values for all specified parameters contained in EN×P. As long as the user has not specified new objectives on the parallel coordinates chart, the generation process continues to add solutions in the current ranges, taking as inputs the rows of EN×P one after another. As soon as a change in objectives occurs, the solver interrupts the current sampling sequence and starts again with the newly provided objective and EN×P.
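The generation loop described here can be sketched as below. The callables `solve` and `objective_changed` are hypothetical placeholders for the solver call and for the check on new user input. How the real system polls the database for such changes is not specified in this section.

```python
def generate(E, solve, objective_changed):
    """Solve one problem per row of E until the user changes the objective."""
    solutions = []
    for row in E:
        if objective_changed():
            # stop the current sequence; the caller restarts with a new E
            break
        solutions.append(solve(row))
    return solutions
```

A caller would typically wrap this in an outer loop that rebuilds the parameter matrix from the latest brushes each time the sequence is interrupted.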

2.7. Exploring Solutions

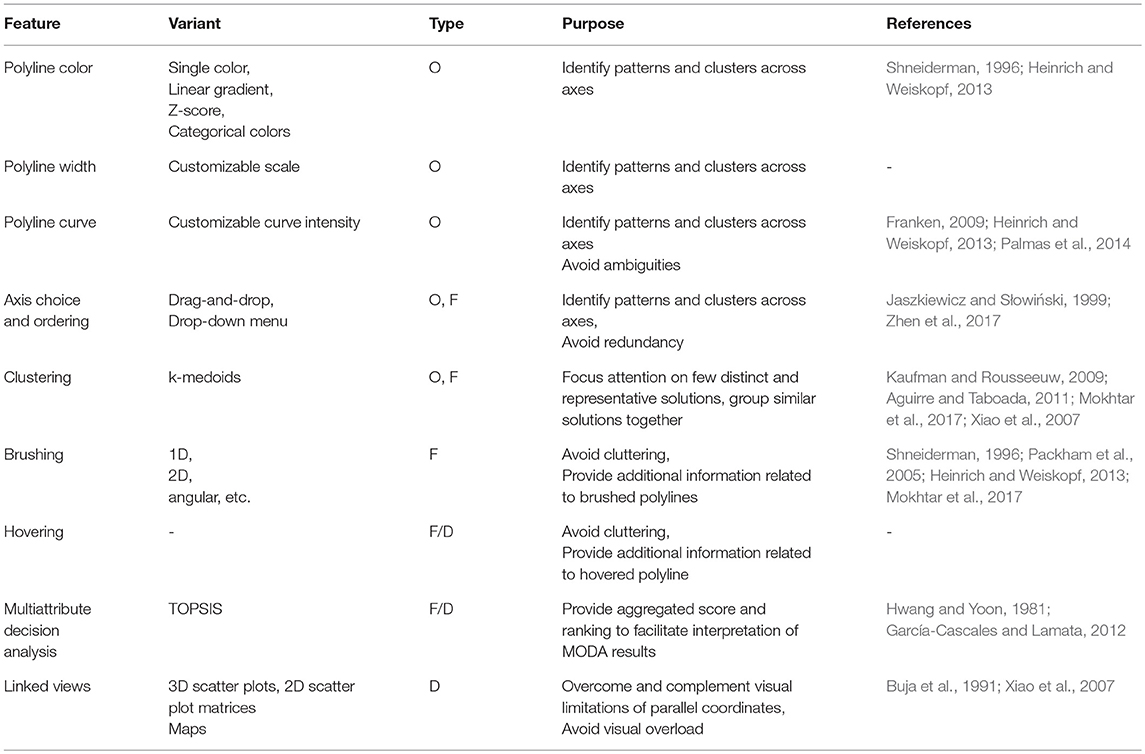

The purpose of exploration is for the user to learn about tradeoffs and synergies between the solutions, and develop their confidence in what qualifies as a good solution. The interface should offer a positive and intuitive experience, respecting the information-seeking mantra “overview, filter, details on demand” (Shneiderman, 1996). Parallel coordinates provide a basis for this mantra (Heinrich and Weiskopf, 2013), allowing the user to develop a feeling for achievable values in competing objectives, and understand the reasons preventing the achievement of goals. The available functionalities supporting exploration are summarized in Table 2 and described below, organized according to their main purpose (i.e., overview, filter or details).

Table 2. Adopted exploration features related to the parallel coordinates interface, classified by type (O: overview, F: filter, D: details on demand).

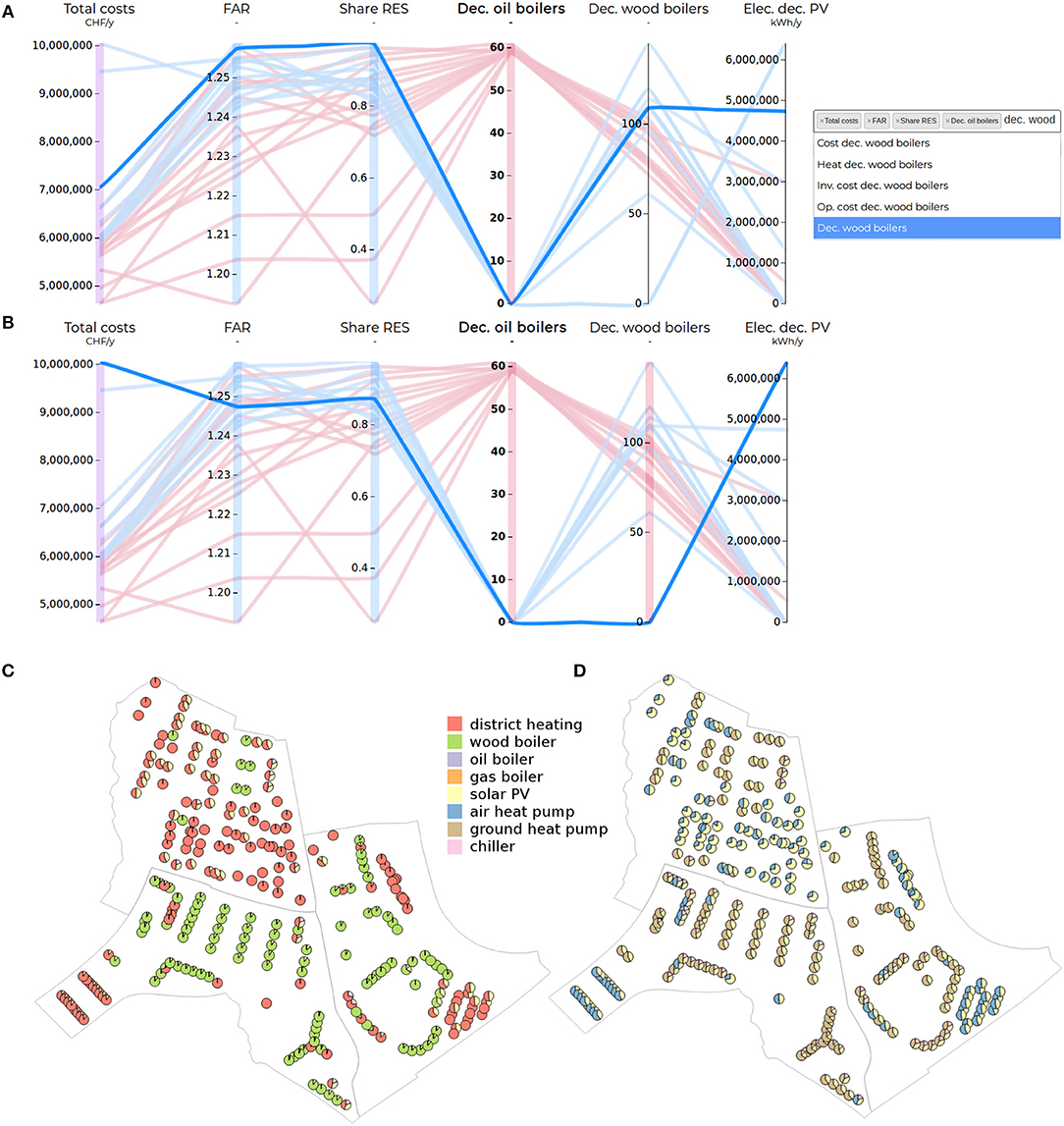

2.7.1. Overview of Relationships Between Criteria

The parallel coordinates reveal tradeoffs (or negative correlations) between two axes as crossing lines, and synergies (or positive correlations) as non-crossing lines (Inselberg, 1997; Li et al., 2017). Because the chart can only show such patterns for pairs of adjacent axes, two approaches are available to explore more relationships. First, the implementation of the chart, which relies on the data-driven documents (D3) library (Bostock et al., 2011; Chang, 2012), allows axes to be dragged and dropped dynamically into various positions, making it possible to quickly investigate specific pairs on demand. Second, different visual encodings for the polylines can be used to emphasize various aspects of the data (Cleveland and McGill, 1985). In addition to their vertical position along each axis, properties such as color, width, line style, transparency, animation etc. can be mapped to polylines to reflect the values of a criterion. Here, color and width are used to reveal the relationships between the criterion being mapped and all other axes. This makes it possible, for example, to highlight high (respectively low) performing solutions, as well as clusters of solutions on any given axis. The available coloring options include linear bi- or multi-color gradients (each color shade indicates increasing values), Z-score gradient (indicating the deviation from the mean value) and categorical (assigning a unique color to each value). The line width property can be assigned to another criterion, so that polylines with high values are thicker than those with low values. Another way to improve readability and identify patterns and clusters is by using curves instead of lines. The user can adjust the intensity of the curvature of polylines in order to balance the readability of correlations (most readable with straight lines), and of overlapping lines and clusters (most readable with curves) (Heinrich and Weiskopf, 2013).
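The link between crossing lines and negative correlation can be made concrete with a short sketch. It assumes both axes are drawn with the same orientation and a monotonic scale, so that two polylines cross between adjacent axes exactly when their order is reversed from one axis to the other. The function name is hypothetical.

```python
from itertools import combinations


def crossing_fraction(left, right):
    """Share of polyline pairs that cross between two adjacent axes."""
    pairs = crossings = 0
    for (a1, b1), (a2, b2) in combinations(zip(left, right), 2):
        if a1 == a2 or b1 == b2:
            continue  # lines meeting on an axis are not counted
        pairs += 1
        if (a1 - a2) * (b1 - b2) < 0:  # order reversed: the two lines cross
            crossings += 1
    return crossings / pairs if pairs else 0.0
```

A value close to 1 indicates a tradeoff between the two criteria, while a value close to 0 indicates a synergy.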

2.7.2. Filtering Solutions and Criteria

The user can filter the polylines to display only those of interest by “brushing” the desired axes (Heinrich and Weiskopf, 2013; Bandaru et al., 2017b). The ability to display only the solutions which satisfy desired values on the different axes is a common response to the problem of cluttering, which causes parallel coordinates to become unreadable when too many lines are present (Johansson and Forsell, 2016; Li et al., 2017). Various brushing options are available (Heinrich and Weiskopf, 2013), including a normal one-dimensional brush (defining upper and lower bounds on an axis), multiple one-dimensional brushes (to select several distinct portions on an axis), an angular brush to filter solutions according to their slope (i.e., correlation) between two axes, and two-dimensional brushes, which allow the selection of solutions based on their path between two axes. Additional information can be computed for the brushed subset of polylines, such as clustering or multiattribute decision analysis. Related to brushing is the action of hovering over a polyline with the pointer, which highlights it across the chart and displays additional information (e.g., its exact numeric values for the different visible axes, information not currently displayed on the axes, or information regarding how the polyline was generated, cf. section 2.3.3). Hovering is also a way to access information in linked views, such as a graphical representation of the solution.
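As an illustration of the one-dimensional brushes described above, the following minimal sketch keeps a solution only if, on every brushed axis, its value falls inside at least one of the selected intervals. The function `brush_filter`, the dictionary layout and the sample solutions are assumptions made for this example, not the data structures of the described tool.

```python
def brush_filter(solutions, brushes):
    """Return the solutions that satisfy every brushed axis.

    `brushes` maps an axis name to a list of (lower, upper) intervals;
    several intervals on one axis model multiple one-dimensional brushes.
    Axes without a brush are left unconstrained.
    """
    def passes(solution):
        return all(
            any(lower <= solution[axis] <= upper for lower, upper in intervals)
            for axis, intervals in brushes.items()
        )

    return [solution for solution in solutions if passes(solution)]


# Hypothetical solutions and brushes (illustration only).
solutions = [
    {"id": "A", "cost": 10.0, "density": 0.8},
    {"id": "B", "cost": 25.0, "density": 1.5},
    {"id": "C", "cost": 40.0, "density": 2.1},
]
brushes = {
    "cost": [(0.0, 15.0), (35.0, 50.0)],  # two distinct portions of one axis
    "density": [(0.5, 2.5)],              # a single normal brush
}
selected_ids = [s["id"] for s in brush_filter(solutions, brushes)]
```

Here solutions A and C remain visible, while B is hidden because its cost lies between the two brushed portions of the cost axis.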

Another way to filter the displayed information concerns the visible axes representing the criteria. While parallel coordinates scale well to large numbers of criteria (Inselberg, 2009), in practice, working simultaneously with up to “seven plus or minus two” axes is advised to account for the user's cognitive ability (Miller, 1956). French (1984) argues that in the case of multicriteria decision analysis, this number may already be too large for simultaneous consideration. Because the relative importance of criteria in the decision process is not necessarily known in advance, and might change as new knowledge is discovered, the user must be able to access and dismiss axes in real time. For this purpose, a drop-down menu allows the user to type or scroll for other criteria, and to easily dismiss currently visible ones. New charts can thus be composed on the fly to reflect the information most relevant to the user at a given point. In the experience of the authors, including criteria incrementally makes it easier to consider even more than seven axes, because the complexity builds up gradually and in a structured way.

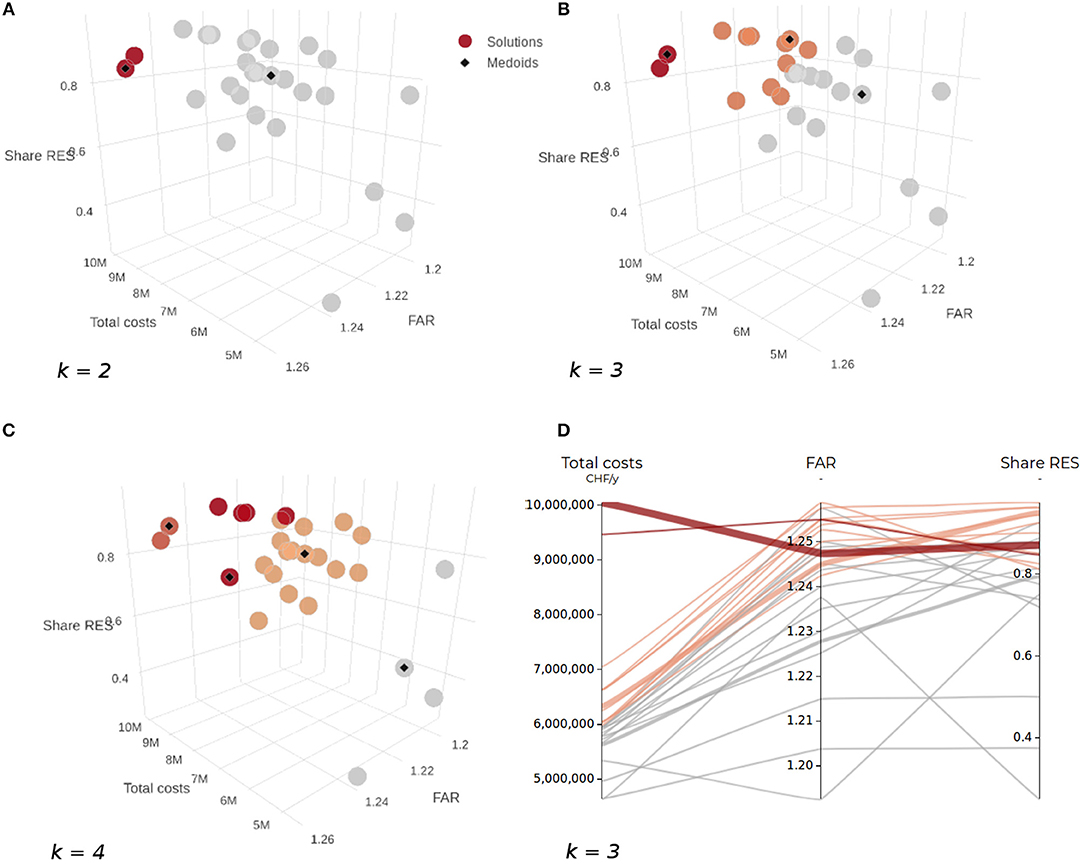

2.7.3. Filtering Representative Solutions With Clustering

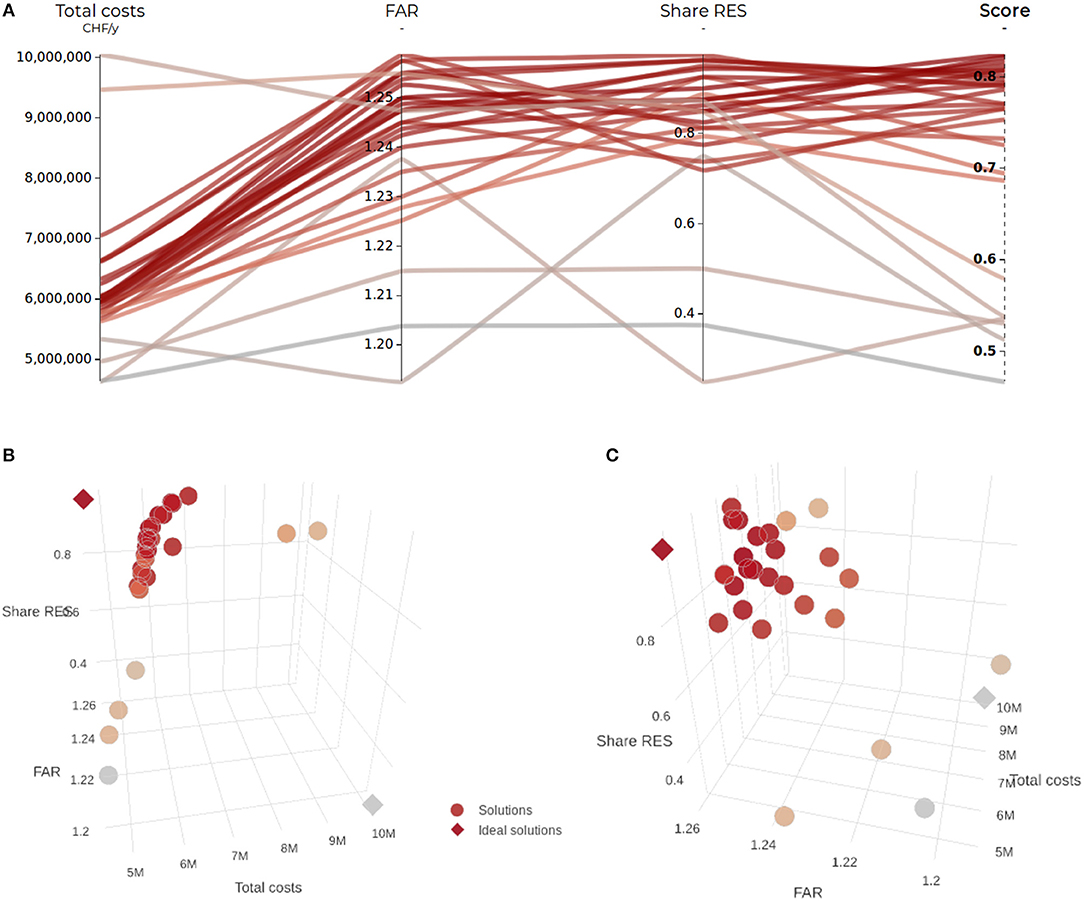

The use of clustering techniques is a common approach to help make the selection of solutions from a large Pareto optimal set more manageable (Aguirre and Taboada, 2011; Zio and Bazzo, 2012; Chaudhari et al., 2013). Clustering aims to group objects with similar characteristics into distinct partitions, or clusters. Practically, an algorithm seeks configurations for which “objects of the same cluster should be close or related to each other, whereas objects of different clusters should be far apart or very different” (Kaufman and Rousseeuw, 2009). A popular k-medoids technique called partitioning around medoids (PAM) is adopted here (Kaufman and Rousseeuw, 2009, p. 68). Unlike the related k-means technique, which computes k virtual cluster centroids, k-medoids directly determines the most representative solutions from the existing data set (Park and Jun, 2009). While this increases the computational effort, it reduces the sensitivity to outliers. Furthermore, the additional computational effort is justified in the case of interactive optimization for decision support, as it focuses the attention of the user on existing representative solutions, instead of virtual points which may not actually be feasible. Another main limitation of both k-means and k-medoids is the need to input a number of clusters a priori (Aguirre and Taboada, 2011). While various quality indices could be applied to assess the quality of the clusters (Goy et al., 2017), the direct feedback from the graphical display in parallel coordinates and 3D scatter plots lets the user easily explore the effect of various inputs on the final clusters (Kaufman and Rousseeuw, 2009, p. 37). The inputs consist of both the number of clusters k (specified by the user in the GUI) and the initial seed medoids, which are chosen randomly by the algorithm from the solution set each time it is executed.
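The principle of selecting existing solutions as cluster representatives can be sketched as follows. This is a simplified, swap-based k-medoids written for illustration, not the implementation used in the methodology: it starts from randomly chosen seed medoids, as described above, and repeatedly applies the single medoid swap that most reduces the total distance, until no swap helps. The function names, the Euclidean distance and the sample points are assumptions.

```python
import random


def euclidean(a, b):
    """Euclidean distance between two points given as tuples."""
    return sum((p - q) ** 2 for p, q in zip(a, b)) ** 0.5


def total_cost(points, medoids):
    """Sum of distances from each point to its nearest medoid."""
    return sum(min(euclidean(p, points[m]) for m in medoids) for p in points)


def k_medoids(points, k, seed=0):
    """Swap-based k-medoids; returns medoid indices and cluster labels."""
    rng = random.Random(seed)
    medoids = rng.sample(range(len(points)), k)  # random seed medoids
    cost = total_cost(points, medoids)
    while True:
        best_cost, best_medoids = cost, None
        # Try replacing each medoid by each non-medoid point.
        for pos in range(k):
            for cand in range(len(points)):
                if cand in medoids:
                    continue
                trial = medoids[:pos] + [cand] + medoids[pos + 1:]
                trial_cost = total_cost(points, trial)
                if trial_cost < best_cost:
                    best_cost, best_medoids = trial_cost, trial
        if best_medoids is None:  # no swap improves the cost: stop
            break
        cost, medoids = best_cost, best_medoids
    labels = [
        min(range(k), key=lambda c: euclidean(p, points[medoids[c]]))
        for p in points
    ]
    return medoids, labels


# Two well-separated groups of hypothetical solutions (illustration only).
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
medoids, labels = k_medoids(points, k=2)
```

Each returned medoid is the index of an actual solution, so the representative shown to the user is always a feasible point of the data set, in contrast to a k-means centroid.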

2.7.4. Filtering Solutions With Multiattribute Decision Analysis Score

When many solutions are compared across many dimensions, it can become overwhelming to distinguish which stand out overall. Psychological studies have emphasized the limited ability of human decision makers in balancing multiple conflicting criteria, even between a limited number of alternatives (French, 1984; Larichev et al., 1987; Jaszkiewicz and Słowiński, 1999). Here an aggregative multiattribute decision analysis (MADA) method is proposed to facilitate this task, by revealing the rank or score of each alternative relative to the others (Cajot et al., 2017a). Each solution is attributed a score that is displayed as an additional axis in the parallel coordinates, and that is used as an additional decision criterion.

As pointed out in the introduction (Hwang and Masud, 1979), multiobjective decision analysis (MODA) methods are designed for the generation of alternatives, while the strength of MADA lies in the evaluation and comparison of predetermined alternatives. Many methods could be adopted, and there is a wide body of literature comparing the similarities, pros and cons of these methods (Zanakis et al., 1998; Cajot et al., 2017a). The often implicit assumption with most MADA methods is that the criteria can compensate each other. While the combined strength of interactive multiobjective optimization and parallel coordinates precisely is to avoid the need to aggregate the different incommensurable criteria, providing the user with a simplified aggregated metric can nevertheless provide useful and reassuring support to make sense of the data. The resulting score is not intended to replace the DM's decision, but rather to focus their attention on a limited number of alternatives, and stimulate questions and learning (e.g., discovering what characterizes a top- or low-ranked alternative). As reviewed in Cajot et al. (2017a), the application of MADA as a way to support decisions on precalculated non-dominated sets generated with MODA is fairly common (e.g., Aydin et al., 2014; Ribau et al., 2015; Fonseca et al., 2016; Carli et al., 2017). However, these applications are typically performed a posteriori. Here, the feedback from the MADA score provides direct insights which the user can use to steer the search with MODA.

To avoid burdening the user with further methodological aspects, the Technique for Order of Preference by Similarity to Ideal Solution (TOPSIS) method is adopted for its most intuitive and understandable principle, limited need for inputs and ability to handle many criteria and solutions (Zanakis et al., 1998; Cajot et al., 2017a). The method ranks each alternative according to its proximity to an ideal solution—which would present the best value in every criterion—and respectively according to its distance from a negative ideal solution—which would present all worst values (Hwang and Yoon, 1981). The final score provided by TOPSIS is a relative closeness metric between 0 and 1, where higher scores reflect higher proximity to the positive ideal solution.

Two methodological aspects in particular must be considered in the TOPSIS method, namely the normalization of data and the choice of ideal solutions.

First, to account for different scales in the criteria, values must be normalized for comparability (Kaufman and Rousseeuw, 2009). Two linear scale normalization methods are implemented here to handle various cases: the “max” and the “max-min” variants (Chakraborty and Yeh, 2009). The normalized values obtained with the “max” variant are $r_{ij} = x_{ij} / \max_i x_{ij}$, where $x_{ij}$ denotes the value of solution $i$ in criterion $j$. This method is advocated by García-Cascales and Lamata (2012), as it reduces the consequences of rank reversal. However, in situations where the spread of values is not consistent across criteria, this normalization tends to neglect the importance of criteria with more compact values. If a criterion is sensitive to such small changes, they should be accounted for in the TOPSIS score. Thus, to avoid this bias, the “max-min” variant distributes all values between 0 and 1, providing not only comparable magnitudes between criteria, but also comparable spread (Chakraborty and Yeh, 2009). With this variant, the normalized values are calculated as $r_{ij} = (x_{ij} - \min_i x_{ij}) / (\max_i x_{ij} - \min_i x_{ij})$. At the expense of being more sensitive to rank reversal, this method more accurately accounts for criteria with small spreads.

Regarding the choice of ideal solutions, while the original TOPSIS method computes them relative to the studied data, García-Cascales and Lamata (2012) propose the adoption of absolute positive and negative ideal points, either defined by the user or by context-specific rules. Here, the relative approach is preferred, in order to avoid asking the user for additional information, especially because of the possibly large number of criteria. The user may however choose to apply the method to all computed solutions, or to just a subset, for example to compare only the solutions selected in the comparer dashboard (cf. section 2.8). If the user wishes to benefit from more reliable and consistent MADA results not subject to rank reversal, they can manually provide reasonable upper and lower bounds for each criterion, and use those absolute values instead. As noted by Wierzbicki (2010, p. 51), there are no fundamental reasons to restrict such analyses to the ranked alternatives, and using more information (e.g., absolute values if known, or historical data), or less (e.g., limiting the definition of ideals only through non-dominated solutions) can affect the strictness of the ratings. The user can also give higher weights to certain criteria to better reflect their subjective preferences, though, again to reduce the need for inputs, equal weights are assumed by default. As illustrated in Figure 9, the scores provided by the TOPSIS method easily reveal the solutions which perform best, i.e., which have both high values in the criteria to maximize, and low values in the criteria to minimize.
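The scoring procedure described in this section can be sketched in a few lines. The code below is an illustrative reading of the text, not the authors' implementation: it assumes the relative ideal points and equal default weights mentioned above, applies either the “max” or the “max-min” linear normalization, and handles criteria to minimize by taking the smallest normalized value as the ideal. The function names and the sample data are hypothetical.

```python
def normalize(column, variant="max-min"):
    """Linear scale normalization of one criterion (a list of values)."""
    hi, lo = max(column), min(column)
    if variant == "max":
        # Assumes strictly positive values, so that hi > 0.
        return [v / hi for v in column]
    if hi == lo:  # constant criterion: carries no ranking information
        return [0.0 for _ in column]
    return [(v - lo) / (hi - lo) for v in column]


def topsis(matrix, maximize, weights=None, variant="max-min"):
    """Relative closeness of each row of `matrix` to the ideal solution.

    `matrix[i][j]` is the value of solution i in criterion j and
    `maximize[j]` tells whether criterion j is to be maximized.
    """
    n_crit = len(maximize)
    weights = weights or [1.0 / n_crit] * n_crit  # equal weights by default
    cols = []
    for j in range(n_crit):
        normalized = normalize([row[j] for row in matrix], variant)
        cols.append([weights[j] * v for v in normalized])
    # Ideal and negative ideal points, relative to the studied data.
    ideal = [max(c) if maximize[j] else min(c) for j, c in enumerate(cols)]
    anti = [min(c) if maximize[j] else max(c) for j, c in enumerate(cols)]
    scores = []
    for i in range(len(matrix)):
        d_pos = sum((cols[j][i] - ideal[j]) ** 2 for j in range(n_crit)) ** 0.5
        d_neg = sum((cols[j][i] - anti[j]) ** 2 for j in range(n_crit)) ** 0.5
        total = d_pos + d_neg
        scores.append(d_neg / total if total > 0 else 0.0)
    return scores


# Hypothetical solutions: (quality to maximize, cost to minimize).
matrix = [(10.0, 1.0), (5.0, 5.0), (1.0, 10.0)]
scores = topsis(matrix, maximize=[True, False])
scores_max = topsis(matrix, maximize=[True, False], variant="max")
```

In this toy example the first solution is best in both criteria and coincides with the ideal point, the last one coincides with the negative ideal point, and the middle one lies in between. Replacing the data-derived `ideal` and `anti` points with user-provided bounds would correspond to the absolute variant discussed above.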

2.7.5. Details on Demand: Linking Views to Parallel Coordinates

In some cases, the content and format of parallel coordinates are not sufficient or adapted to convey certain types of information. Xiao et al. (2007) highlight in particular the need to complement parallel coordinates visualizations with maps, while Stump et al. (2009) suggest displaying physical geometries of generated designs, not just the design variables and performance metrics. Furthermore, Cohon (1978) noted that the communication of qualitative concepts such as aesthetics may be difficult for analysts to handle, although desirable for decision makers. Closely related is the importance of also communicating the decision variables, when requested, because in some cases the objectives and criteria alone may not provide all the necessary information to decide (Gardiner and Vanderpooten, 1997, p. 296; Shenfield et al., 2007).

To address these gaps, two features are implemented. The first makes it possible to visualize all (or subsets of) solutions in interactive 2D scatter plot matrices and 3D scatter plots (Plotly-Technologies-Inc., 2015). While these are restricted to a limited number of dimensions, they offer a more direct and familiar interpretation of distances than parallel coordinates do. The second gives access to additional information regarding individual solutions. For this purpose, polylines are made clickable to allow the user to access other types of information associated with a solution. When a polyline is clicked, a visual dashboard opens below the chart, which can display any additional information such as images, charts, maps, exact numerical values, etc. Both views are linked, so that when the cursor hovers over the information in the dashboard, the corresponding polyline is highlighted. Like the axes, the dashboard offers reorderable containers to allow side-by-side comparisons between graphical representations.

In the urban planning case-study presented in section 3, clicking a polyline triggers the generation of geographic maps, which complement the parallel coordinates chart with spatial and morphological information, providing also a more detailed insight into the decision variables of a solution (location, size, and type of buildings, energy technologies, etc.).

2.8. Synthesizing the Search

The ability to effectively convey key analytical information from the methodology to complement the decision maker's intuitive and emotional thought process is essential to influence the decision process. Studies performed by Trutnevyte et al. (2011) showed for example that combining analytical and intuitive approaches in elaborating municipal energy visions led stakeholders to revise their initial preferences and values and make better-quality decisions. However, parallel coordinates may not be the most effective way to communicate the analytical insights obtained from the search, in particular to stakeholders or decision makers who did not actively take part in the search. Indeed, Wolf et al. (2009) noted how novice users might feel overwhelmed by, or be less likely to exploit, a dense set of solutions in parallel coordinates. Piemonti et al. (2017b) further emphasized the added value of summarizing the most preferred alternatives found in interactive optimization methods to make the selection process easier, while Gardiner and Vanderpooten (1997) suggested the importance of synthesizing the characteristics of obtained solutions to facilitate the communication to others.