Does artificial intelligence enhance physician interpretation of optical coherence tomography: insights from eye tracking

- Division of Cardiology, Hamilton General Hospital, Hamilton Health Sciences, McMaster University, Hamilton, ON, Canada

Background and objectives: The adoption of optical coherence tomography (OCT) in percutaneous coronary intervention (PCI) is limited by the need for real-time image interpretation expertise. Artificial intelligence (AI)-assisted Ultreon™ 2.0 software could address this barrier. We used eye tracking to understand how these software changes affect viewing efficiency and accuracy.

Methods: Eighteen interventional cardiologists and fellows at McMaster University, Canada, were included in the study and categorized as experienced or inexperienced based on lifetime OCT use. They were tasked with reviewing OCT images from both Ultreon™ 2.0 and AptiVue™ software platforms while their eye movements were recorded. Key metrics, such as time to first fixation on the area of interest, total task time, dwell time (time spent on the area of interest as a proportion of total task time), and interpretation accuracy, were evaluated using a mixed multivariate model.

Results: Physicians exhibited improved viewing efficiency with Ultreon™ 2.0, characterized by reduced time to first fixation (Ultreon™ 0.9 s vs. AptiVue™ 1.6 s, p = 0.007), reduced total task time (Ultreon™ 10.2 s vs. AptiVue™ 12.6 s, p = 0.006), and increased dwell time in the area of interest (Ultreon™ 58% vs. AptiVue™ 41%, p < 0.001). These effects were similar for experienced and inexperienced physicians. Accuracy of OCT image interpretation was preserved in both groups, with experienced physicians outperforming inexperienced physicians.

Discussion: Our study demonstrated that AI-enabled Ultreon™ 2.0 software can streamline the image interpretation process and improve viewing efficiency for both inexperienced and experienced physicians. Enhanced viewing efficiency suggests reduced cognitive load, potentially lowering the barriers to OCT adoption in PCI decision-making.

Introduction

To employ intracoronary imaging findings in procedural decisions, physicians require the ability to perform real-time image interpretation with speed and accuracy (1). The lack of such proficiency among many interventional cardiologists limits the adoption of optical coherence tomography (OCT) despite its clinical utility (2). The use of artificial intelligence (AI) to automate substantial portions of the image interpretation task may improve physician experience with imaging (3–6). The image review software Ultreon™ 2.0 improves on AptiVue™ by using AI to detect calcium and external elastic lamina (EEL), reducing the need for physician identification of these structures. In addition, a simplified interface is used that toggles different representations of the vessel lumen rather than simultaneously showing multiple representations, thereby reducing the cognitive load on the physician (7, 8). The goal of these changes is to streamline the interpretation process for less experienced physicians.

Eye-tracking studies offer a way to gain insight into how easily physicians extract information from these two software platforms. Eye tracking has been used to investigate decision-making in other fields such as psychology, neuroscience, marketing, human–computer interaction, and medicine (9–18). It provides detailed information on where and for how long participants focus on different elements of a visual scene, whether their search is strategic, and whether their gaze coincides with the location of the pertinent information in the scene (17, 19).

In this study, we used eye tracking to understand how the visual changes in the OCT software platform from AptiVue™ to Ultreon™ 2.0 affect the viewing efficiency and accuracy of physicians using OCT for percutaneous coronary intervention (PCI) decision-making. We compared experienced and inexperienced physicians to explore whether this impact differed between physicians who were experienced and those who were inexperienced with the AptiVue™ platform.

Methods

We conducted a descriptive study of eye-tracking patterns of interventional cardiologists reviewing OCT console images from both Ultreon™ 2.0 and AptiVue™ software platforms.

Participants

Interventional cardiologists and fellows in training were recruited from a tertiary center in Canada. Operators were considered inexperienced if they self-reported performing fewer than 50 OCT studies as primary operator with the AptiVue™ platform. Written informed consent was obtained from all participants.

Imaging materials and interpretation tasks

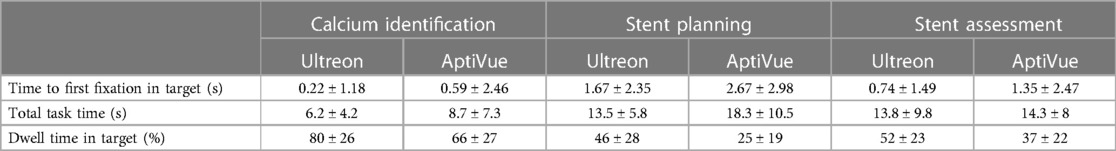

Six static images were selected for each of three OCT imaging tasks: (1) calcium identification, (2) stent sizing, and (3) stent assessment. Two versions of each static image were prepared, one using Ultreon™ 2.0 and the other using AptiVue™. Two counterbalanced sets of 18 images were constructed so that each set contained six images of each task, half on Ultreon™ 2.0 and half on AptiVue™, with only one version of each image in each set so that a participant saw each image only once. Example images for morphology assessment, stent sizing, and stent assessment are shown in Figure 1, contrasting the visual interfaces of Ultreon™ 2.0 and AptiVue™.
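The counterbalancing scheme described above can be sketched as follows. This is a minimal illustration, not the authors' procedure: the task and platform labels, and the rule that images 0 to 2 of each task appear on Ultreon™ 2.0 in set A (and on AptiVue™ in set B), are assumptions made for the example, since the article does not state which images were assigned to which platform in each set.

```python
# Hypothetical sketch of two counterbalanced image sets (assumed assignment rule).
TASKS = ("calcium identification", "stent sizing", "stent assessment")
PLATFORMS = ("Ultreon 2.0", "AptiVue")
IMAGES_PER_TASK = 6


def build_counterbalanced_sets():
    """Return two 18-image sets; each (task, image) appears once per set,
    shown on opposite platforms in the two sets."""
    set_a, set_b = [], []
    half = IMAGES_PER_TASK // 2
    for task in TASKS:
        for image in range(IMAGES_PER_TASK):
            # Assumption: the first half of each task's images use Ultreon 2.0 in set A.
            platform_a = PLATFORMS[0] if image < half else PLATFORMS[1]
            platform_b = PLATFORMS[1] if platform_a == PLATFORMS[0] else PLATFORMS[0]
            set_a.append((task, image, platform_a))
            set_b.append((task, image, platform_b))
    return set_a, set_b


if __name__ == "__main__":
    set_a, set_b = build_counterbalanced_sets()
    print(len(set_a), len(set_b))  # 18 18
```

Across the two sets, every one of the 36 image versions is shown exactly once, while any single participant sees each underlying image only once.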

Figure 1. Comparison of AptiVue™ and Ultreon™ 2.0 interfaces. In the AptiVue™ software, panel A provides a cross-sectional image, with measurement tools (length, area, thickness, angle) available in the curtain menu (*), and a visual estimate of the mean diameter (green text). In Ultreon™ 2.0, all information is available at a glance in panel A1: AI plaque-morphology recognition identifies the calcium burden above a given threshold (orange arc), and the mean EEL diameter (dashed line, E), lumen diameter (L), and mean lumen area (LA) are displayed (green-highlighted boxes). Panels B and C are fused in Ultreon™ 2.0, providing several pieces of information (highlighted green box): the calcium-plaque burden above the preselected threshold (orange), lesion length, EEL diameter, lumen diameter, and LA at the proximal and distal references, and the MLA. (A) Morphology assessment. Compared with AptiVue™, Ultreon™ 2.0 presents AI plaque recognition (A1), with an orange band indicating calcium. Calcium is also shown as orange bands in the longitudinal/lumen profile (B) where it is detected (*) above the preselected threshold (in this case ≥180°). (B) Stent sizing. Compared with AptiVue™, Ultreon™ 2.0 presents AI recognition of the EEL (dashed line, E), which is displayed in the cross-sectional image (A1) and the lumen/longitudinal view (B). In addition, L and LA are displayed in the cross-sectional image (A1) and at the proximal and distal references together with the selected length (B). The MLA is also shown (B). (C) Stent assessment. Compared with AptiVue™, Ultreon™ 2.0 fuses panels B and C and combines expansion, MSA (highlighted box), and apposition (*) bars in one view. Expansion at the selected frame and LA values are also shown in panel A1. In addition, L and LA are displayed in the cross-sectional image (A) and at the proximal and distal references.

For calcium identification, participants were asked to judge whether the calcium arc was greater or less than 180° based on the static image. For stent sizing, participants were required to give the correct stent diameter and length. Stent diameter was determined by rounding down from the distal EEL diameter (if available) or rounding up from the distal lumen diameter. Stent length was based on the length measurement provided by the software platform. For stent assessment, participants needed to identify which of the following OCT-defined concerns were present: (1) significant edge dissection (defined as a 60° arc and more than 3 mm in length), (2) malapposition (defined as more than 300 μm from the stent struts to the lumen), and (3) underexpansion (defined as less than 90% of the tapered reference) (20). If one or more features that were present were not identified, the answer was deemed incorrect.
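The answer keys for the three tasks can be expressed as simple decision rules. The sketch below is illustrative only: the 0.25 mm stent-diameter step, reading "a 60° arc" as an arc of at least 60°, treating exactly 180° as meeting the calcium threshold, and ignoring extra (false-positive) calls when scoring stent assessment are all assumptions, and every function and field name is hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional, Set

STENT_STEP_MM = 0.25  # assumption: stent diameters available in 0.25 mm steps


def calcium_arc_at_least_180(arc_deg: float) -> bool:
    """Calcium task: is the calcium arc at or above the 180 degree threshold?"""
    return arc_deg >= 180.0


def stent_diameter_mm(distal_eel_mm: Optional[float], distal_lumen_mm: float) -> float:
    """Round down from the distal EEL diameter if available,
    otherwise round up from the distal lumen diameter."""
    if distal_eel_mm is not None:
        return math.floor(distal_eel_mm / STENT_STEP_MM) * STENT_STEP_MM
    return math.ceil(distal_lumen_mm / STENT_STEP_MM) * STENT_STEP_MM


@dataclass(frozen=True)
class StentFindings:
    dissection_arc_deg: float     # arc of the largest edge dissection (0 if none)
    dissection_length_mm: float   # length of that dissection
    max_strut_distance_um: float  # largest strut-to-lumen distance
    expansion_pct: float          # expansion relative to the tapered reference


def oct_concerns(f: StentFindings) -> Set[str]:
    """Return the composite stent-assessment concerns present in the findings."""
    concerns = set()
    # Assumption: "a 60 degree arc" is read as an arc of at least 60 degrees.
    if f.dissection_arc_deg >= 60 and f.dissection_length_mm > 3:
        concerns.add("edge dissection")
    if f.max_strut_distance_um > 300:
        concerns.add("malapposition")
    if f.expansion_pct < 90:
        concerns.add("underexpansion")
    return concerns


def assessment_correct(present: Set[str], identified: Set[str]) -> bool:
    """Incorrect if any present concern was missed (extra calls are ignored here)."""
    return present <= identified


if __name__ == "__main__":
    print(stent_diameter_mm(3.1, 2.6))   # 3.0  (EEL available: round down)
    print(stent_diameter_mm(None, 2.6))  # 2.75 (no EEL: round up from lumen)
    print(sorted(oct_concerns(StentFindings(0, 0, 350, 85))))  # ['malapposition', 'underexpansion']
```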

Training

All participants were oriented to both platforms. All inexperienced operators also received formal training in the Ultreon™ 2.0 software, consisting of a standardized tutorial provided by Abbott Vascular and delivered as two separate videos on pre- and post-PCI image review following the MLD MAX algorithm (21).

Image review and eye tracking

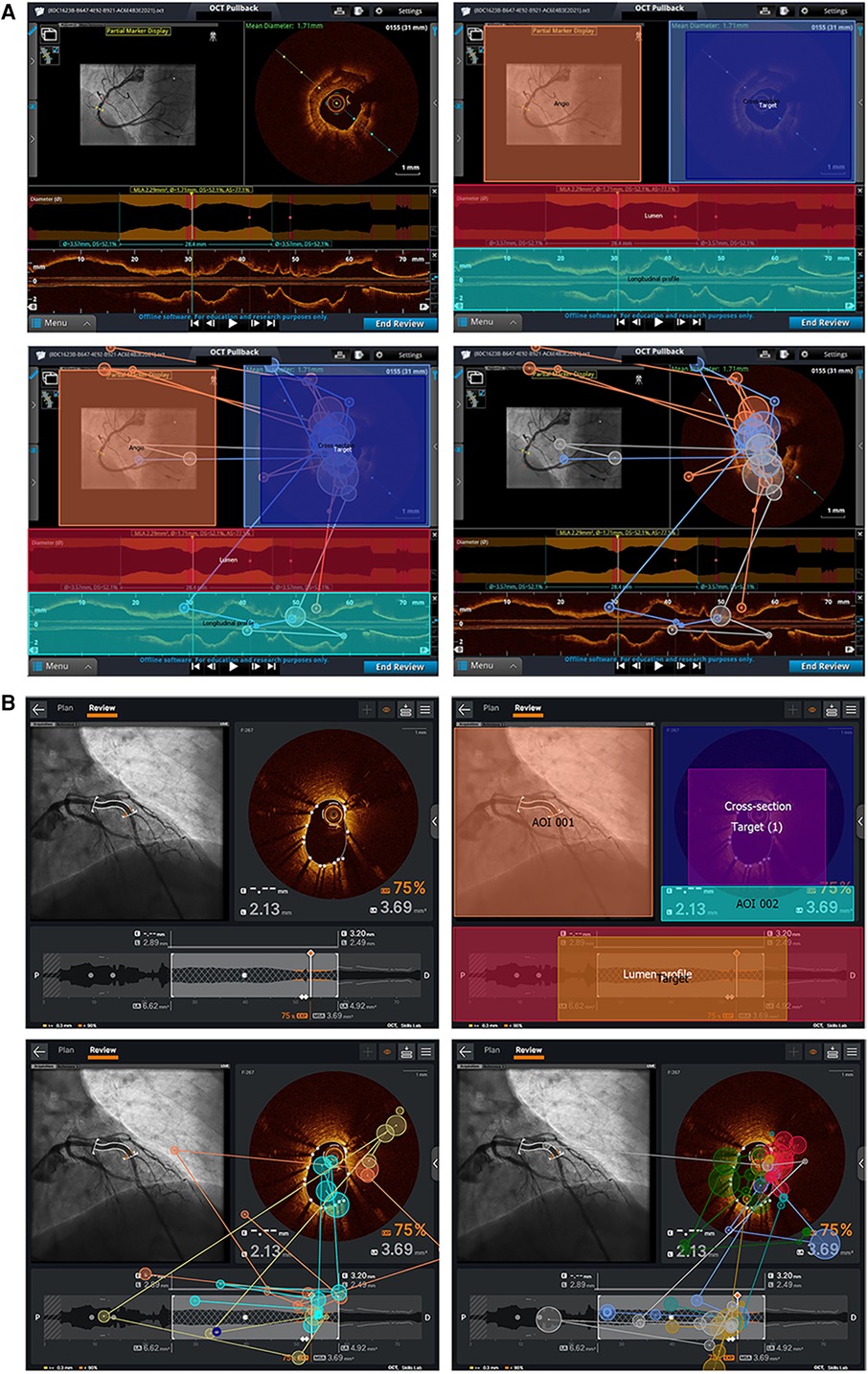

Participants were randomized to review one of the two sets of 18 static images. Images were distributed equally across the three tasks and the two platforms. Participants provided an interpretation of each image while their eye movements were tracked. Eye movements were recorded using an SMI RED 250 Hz eye tracker (SensoMotoric Instruments, Germany), a non-invasive tool that uses a small infrared camera mounted at the bottom of a laptop screen to follow the pupils. Areas of interest (AOIs) specific to each task and software platform were defined graphically within the static images and verified by two experts (GC and MS). For each task, the target AOI (in some cases, multiple AOIs) was defined as the area containing all the information necessary to complete the task correctly, as shown in Figure 2.

Figure 2. AOI and sample eye-tracking data for Ultreon™ 2.0 and AptiVue™ platforms. (A) AOI and target creation for one of the stent result assessment tasks in the Ultreon™ 2.0 group. Right upper picture: The AOI is defined by delimiting specific areas/boxes (Target and Target 1) that the software recognizes as areas where gaze/fixation data are registered. Left and right bottom pictures: Fixation data of inexperienced and experienced operators, respectively, undertaking this stent assessment task, shown without the overlying AOI and target boxes. (B) AOI and target creation for one of the calcium assessment tasks in the AptiVue™ group. Left upper picture: The image is imported into the dedicated eye-tracking computer and defined as a task image. Right upper picture: AOIs are defined by delimiting specific areas/boxes that the software recognizes as areas where gaze/fixation data are registered. The “target” box defines the AOI for the specific analysis. Left bottom picture: Fixation data of experienced operators undertaking this task, with the overlying AOI and target. Right bottom picture: Fixation patterns of experienced operators without the overlying AOI and target boxes.

Outcomes

The primary study outcomes were viewing efficiency and interpretation accuracy. Viewing efficiency was assessed as (a) time to first fixation (the time taken for the participant to first look at the target area of interest for the prescribed task), (b) total task time (the time taken to complete the prescribed task), and (c) dwell time in target (the time spent in the target area of interest as a percentage of total task time). Interpretation accuracy was defined as the proportion of correct responses provided by the participant. Heat maps of eye movements were created to qualitatively compare the eye movements of experienced vs. inexperienced operators.
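To make the three viewing-efficiency metrics concrete, here is a minimal sketch of how they could be computed from a list of fixations and rectangular target AOIs. It assumes fixation onsets are measured from image presentation, that total task time is supplied separately (for example, image onset to response), and that dwell time is accumulated from fixation durations rather than raw gaze samples. The class and function names are hypothetical and do not reflect the SMI software used in the study.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Fixation:
    start_s: float     # onset, seconds after image presentation (assumed t = 0)
    duration_s: float
    x: float           # screen coordinates, pixels
    y: float


@dataclass(frozen=True)
class AOI:
    """Rectangular area of interest in screen pixels."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def in_target(f: Fixation, targets: Sequence[AOI]) -> bool:
    # A task may have more than one target AOI; a hit on any of them counts.
    return any(a.contains(f.x, f.y) for a in targets)


def time_to_first_fixation(fixations: Sequence[Fixation],
                           targets: Sequence[AOI]) -> Optional[float]:
    """Onset of the earliest fixation inside a target AOI, or None if never fixated."""
    onsets = [f.start_s for f in fixations if in_target(f, targets)]
    return min(onsets) if onsets else None


def dwell_time_pct(fixations: Sequence[Fixation], targets: Sequence[AOI],
                   total_task_time_s: float) -> float:
    """Fixation time inside target AOIs as a percentage of total task time."""
    in_target_s = sum(f.duration_s for f in fixations if in_target(f, targets))
    return 100.0 * in_target_s / total_task_time_s


if __name__ == "__main__":
    targets = [AOI(0, 0, 100, 100)]
    fixations = [Fixation(0.0, 0.3, 500, 400), Fixation(0.4, 1.0, 50, 50),
                 Fixation(1.5, 0.5, 60, 70), Fixation(2.1, 0.4, 700, 300)]
    print(time_to_first_fixation(fixations, targets))  # 0.4
    print(dwell_time_pct(fixations, targets, 4.0))     # 37.5
```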

Data analysis

For each participant and task, viewing efficiency and accuracy metrics were averaged and analyzed with a mixed multivariate model. Platform type (AptiVue™ vs. Ultreon™ 2.0) and task type (calcium identification, stent sizing, stent assessment) were treated as within-subject variables, and operator experience (inexperienced vs. experienced) as a between-subject variable. Statistical analysis was performed using SPSS version 26 (IBM Corp., Armonk, NY).
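The averaging step that precedes the mixed model can be sketched as below. This is an illustration under stated assumptions: trials are plain dictionaries with hypothetical field names, averages are taken per participant × platform × task cell (platform and task being within-subject factors), a missing TFF (target never fixated) is excluded from the mean, and accuracy is the proportion of correct responses. The model itself was fit in SPSS and is not reproduced here.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Optional

METRICS = ("tff_s", "ttt_s", "dt_pct")  # hypothetical field names


def _mean_or_none(values: List[Optional[float]]) -> Optional[float]:
    # Skip missing values (e.g., a target AOI that was never fixated).
    kept = [v for v in values if v is not None]
    return mean(kept) if kept else None


def cell_means(trials: Iterable[Dict]) -> List[Dict]:
    """Average trial-level metrics per participant x platform x task."""
    groups = defaultdict(list)
    for t in trials:
        key = (t["participant"], t["experience"], t["platform"], t["task"])
        groups[key].append(t)

    rows = []
    for (participant, experience, platform, task), ts in sorted(groups.items()):
        row = {"participant": participant, "experience": experience,
               "platform": platform, "task": task}
        for m in METRICS:
            row[m] = _mean_or_none([t.get(m) for t in ts])
        # Accuracy = proportion of correct responses in this cell.
        row["accuracy"] = mean(1.0 if t["correct"] else 0.0 for t in ts)
        rows.append(row)
    return rows
```

The resulting long-format rows could then be passed to a linear mixed-effects routine (for example, statsmodels' `mixedlm` with participant as the grouping factor); such a model would be an open-source approximation, not the SPSS analysis reported in this study.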

Heat maps were reviewed by three OCT imaging experts (GC, TS, MS) to identify key themes in the viewing patterns, using an inductive approach with constant iterative comparisons across platforms, experience levels, and tasks.

Results

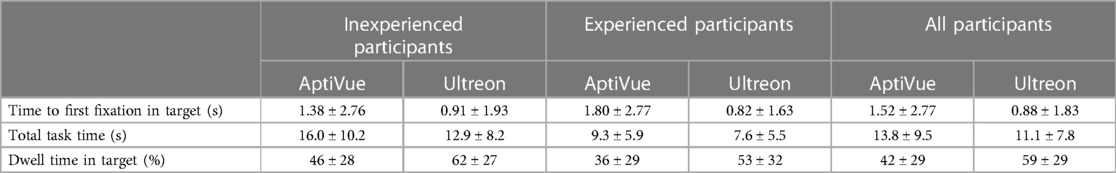

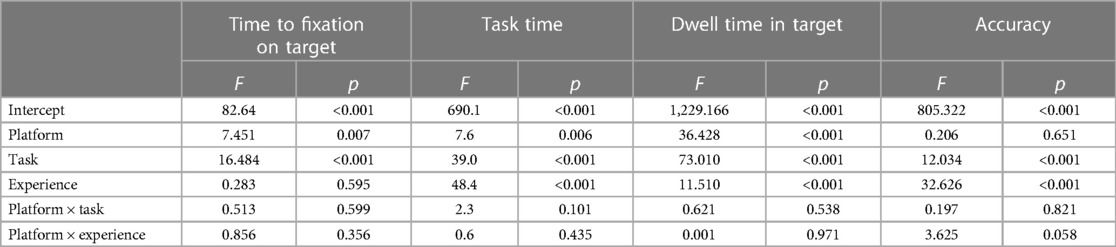

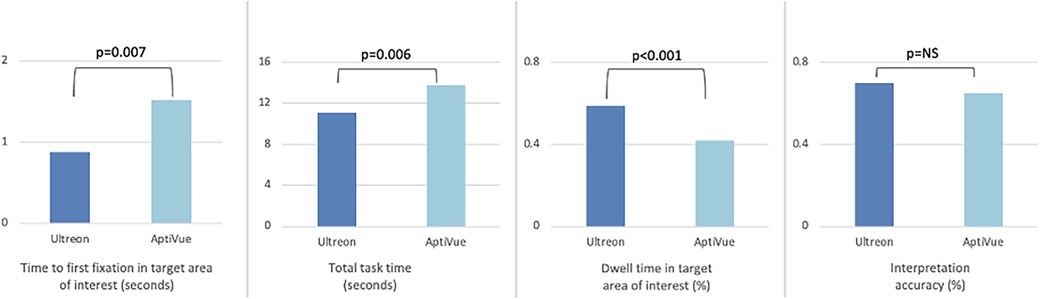

Eighteen physicians participated in the study: 10 (56%) were practicing interventional cardiologists and eight (44%) were fellows. Two participants (11%) were women. Twelve (67%) self-reported performing fewer than 50 OCT studies as primary operator and were considered inexperienced. Tables 1 and 2 summarize the results by experience level and task type. Figure 3 compares average viewing efficiency and interpretation accuracy between the Ultreon™ 2.0 and AptiVue™ platforms.

Figure 3. Comparison of viewing efficiency and interpretation accuracy metrics between Ultreon™ 2.0 and AptiVue™ platforms. The use of the Ultreon™ 2.0 platform compared with AptiVue™ while performing three interpretation tasks relevant to percutaneous coronary intervention (quantifying the arc of calcium, choosing a stent size, assessing a stent result) was associated with reduced time to first fixation in the most relevant target area of interest, reduced total task time, increased dwell time in the target area of interest, and preserved interpretation accuracy.

Viewing efficiency

Time to first fixation (TFF) in the target AOI was short, averaging 1.2 s (95% CI 1.0–1.5 s). In the mixed multivariate analysis, TFF was 0.9 s (95% CI 0.5–1.2 s) with Ultreon™ 2.0 vs. 1.6 s (95% CI 1.2–2.0 s) with AptiVue™ (p = 0.007) (Table 3). The shorter TFF with Ultreon™ 2.0 was present for both inexperienced (0.9 ± 1.9 vs. 1.4 ± 2.8 s) and experienced (0.8 ± 1.6 vs. 1.8 ± 2.8 s) operators. The effect was also consistent across tasks, with lower TFF for calcium assessment (0.2 vs. 0.6 s), stent planning (1.7 vs. 2.7 s), and stent assessment (0.7 vs. 1.3 s). Interactions between software platform and experience level or task type were not significant.

Total task time (TTT) averaged 11.4 s (95% CI 10.6–12.3 s). TTT was lower with the Ultreon™ 2.0 platform (10.2 s, 95% CI 9.0–11.5 s, vs. 12.6 s, 95% CI 11.4–13.9 s, for AptiVue™; p = 0.006) among both inexperienced (12.9 ± 8.2 vs. 16.0 ± 10.2 s) and experienced (7.6 ± 5.5 vs. 9.3 ± 5.9 s) operators. TTT was also lower with Ultreon™ 2.0 in each task (6.2 vs. 8.7 s for calcium assessment, 13.5 vs. 18.3 s for stent planning, and 13.8 vs. 14.3 s for stent assessment).

Dwell time (DT) in the target AOI averaged 49% (95% CI 47%–52%). It was significantly higher with Ultreon™ 2.0 (58%, 95% CI 54%–67%, vs. 41%, 95% CI 37%–44%, for AptiVue™; p < 0.001). DT also varied significantly with experience level and task (both p < 0.001); however, the platform × experience and platform × task interactions were not significant.

Interpretation accuracy

Overall interpretation accuracy of the OCT images was 73% (95% CI 67%–78%). In the mixed multivariate analysis, the software platform did not significantly affect interpretation accuracy (Ultreon™ 74%, 95% CI 67%–81%, vs. AptiVue™ 71%, 95% CI 64%–78%; Table 3). However, experienced participants outperformed inexperienced participants (87%, 95% CI 79%–95%, vs. 58%, 95% CI 52%–64%, p < 0.001). Interpretation accuracy also varied by task type (calcium identification 82%, 95% CI 73%–90%; stent sizing 56%, 95% CI 47%–64%; stent assessment 80%, 95% CI 72%–88%; p < 0.001). Interactions between software platform and experience level or task type were not significant.

Heat maps

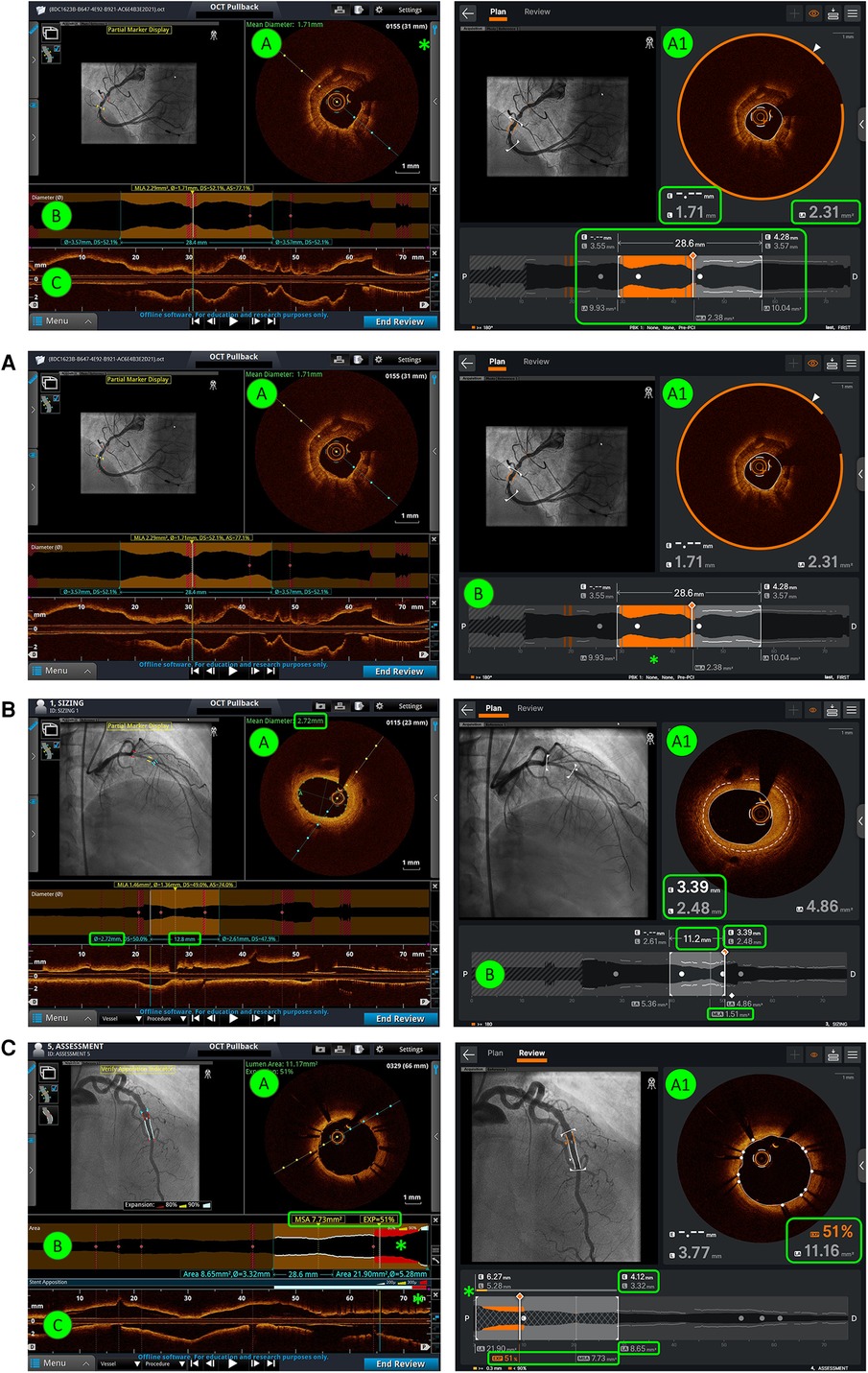

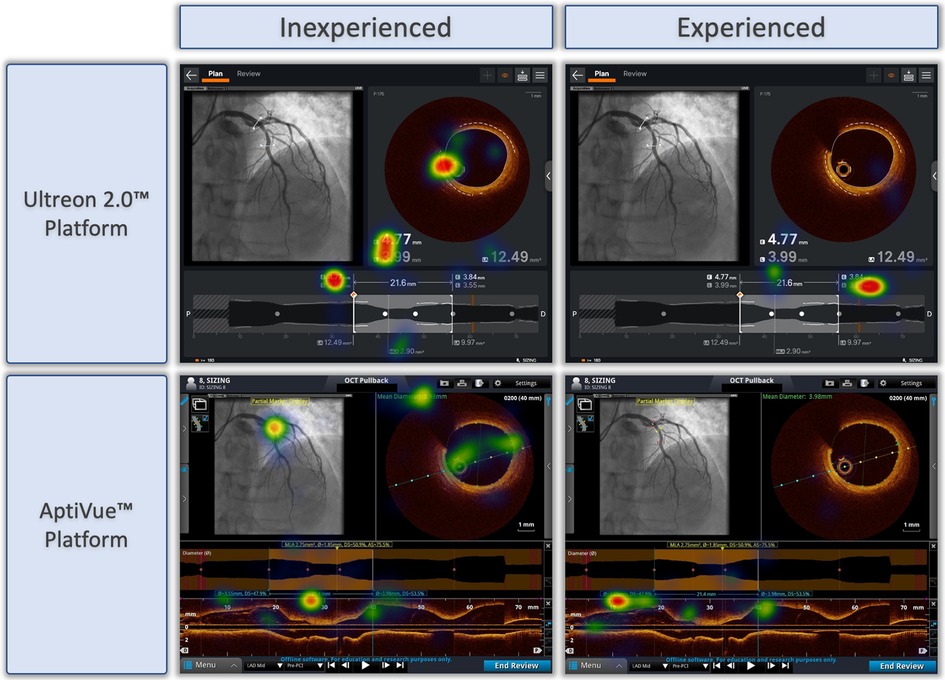

Qualitative review of heat maps showed fewer areas of focus in Ultreon™ 2.0 vs. AptiVue™ platforms and fewer areas of focus among experienced vs. inexperienced participants. A representative sample of heat maps for the stent sizing task is shown in Figure 4. Both experienced and inexperienced participants focused on key numbers in the Ultreon™ 2.0 platform but had more areas of focus in the cross section and longitudinal view in the AptiVue™ platform.

Figure 4. Representative heat maps of eye tracking during the OCT-guided stent sizing task with the Ultreon™ 2.0 and AptiVue™ platforms. Eye-tracking heat maps identify areas of fixation; warmer colors reflect longer gaze duration and cooler colors shorter gaze duration. Representative samples are shown for inexperienced and experienced participants deciding on stent size from OCT imaging with the Ultreon™ 2.0 and AptiVue™ platforms.
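Heat maps of this kind are commonly built by spreading each fixation's duration over nearby screen locations. The article does not describe how its heat maps were generated, so the sketch below shows a generic duration-weighted Gaussian approach, not the eye-tracking vendor's algorithm; the grid cell size and the 25-pixel spread (sigma) are arbitrary assumptions, and the function name is hypothetical.

```python
import math
from typing import Iterable, List, Tuple


def fixation_heatmap(fixations: Iterable[Tuple[float, float, float]],
                     width: int, height: int,
                     cell_px: int = 10, sigma_px: float = 25.0) -> List[List[float]]:
    """Duration-weighted Gaussian heat map on a coarse grid, scaled so the peak is 1.0.

    fixations: (x_px, y_px, duration_s) tuples in screen coordinates.
    """
    cols = math.ceil(width / cell_px)
    rows = math.ceil(height / cell_px)
    grid = [[0.0] * cols for _ in range(rows)]
    two_sigma_sq = 2.0 * sigma_px ** 2
    for x, y, duration in fixations:
        for r in range(rows):
            cy = (r + 0.5) * cell_px  # cell center, y
            for c in range(cols):
                cx = (c + 0.5) * cell_px  # cell center, x
                d2 = (cx - x) ** 2 + (cy - y) ** 2
                # Longer fixations add more "heat" (rendered as warmer colors).
                grid[r][c] += duration * math.exp(-d2 / two_sigma_sq)
    peak = max((v for row in grid for v in row), default=0.0)
    if peak > 0:
        grid = [[v / peak for v in row] for row in grid]
    return grid


if __name__ == "__main__":
    g = fixation_heatmap([(15, 15, 2.0), (85, 85, 0.5)], width=100, height=100)
    print(g[1][1])            # 1.0  (cell under the long fixation)
    print(round(g[8][8], 2))  # 0.25 (cell under the short fixation)
```

Mapping the normalized values onto a warm-to-cool color scale yields the kind of display described in the Figure 4 caption.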

Discussion

To our knowledge, this is the first study to use eye tracking to explore physician interpretation of intravascular imaging with AI-enhanced software. Two key findings are reported: (1) Ultreon™ 2.0 improved the viewing efficiency of both inexperienced and experienced physicians compared with AptiVue™, and (2) physicians experienced in intracoronary imaging with the AptiVue™ platform retained their expertise and accuracy on the Ultreon™ 2.0 platform.

Eye tracking has been used to evaluate physician performance in non-invasive imaging interpretation tasks (13, 15). Much of this literature comes from radiology, where expertise is associated with shorter time on task and quicker focus on relevant areas of interest (13). Both metrics (TTT and TFF) were better among experienced than inexperienced physicians in this study. More importantly, this study also assessed the potential impact of comprehensively integrating AI into the image interpretation process. The Ultreon™ 2.0 software was associated with improvements in TTT, TFF, and DT for both inexperienced and experienced physicians, suggesting that the streamlined interface and AI integration made the viewing patterns of both groups more efficient. Notably, we did not observe the “expertise reversal” effect (22), in which experienced physicians already accustomed to the greater amount of visual information provided by the AptiVue™ software might have performed worse with the streamlined interface.

AI-enabled systems such as Ultreon™ 2.0 hold promise for improving time-sensitive decisions as part of a broader approach of collaboration between physicians and AI (23). Importantly, whether physicians can leverage AI depends on a host of human and technological factors. In this study, physicians leveraged the AI-enhanced OCT data with minimal training, regardless of their level of expertise. This ease of use may relate to the intrinsic interpretability of the AI enhancements, which are displayed as a visual overlay on the raw cross-sectional imaging data, a concept termed explainability in the AI literature (24, 25). Motivations noted for incorporating AI explainability into interface design include helping physicians justify AI decisions, correct errors, and build trust by understanding why the AI produced a given output (26). This approach may account for the success of integrating AI within the Ultreon™ 2.0 platform, in contrast to the mixed success of AI integration in other areas (3).

The enhanced viewing efficiency observed with Ultreon™ 2.0 is relevant to clinical practice and to the cognitive load of physicians seeking to use OCT datasets in planning PCI. Cognitive load theory holds that effective learning and decision-making are limited by the amount of information that working memory can process at any given time (7). The high-resolution, cross-sectional OCT dataset contains a wealth of information to guide PCI. However, to take full advantage of this dataset, physicians must process and interpret the additional information under the time pressures of clinical care, a scenario that may test the limits of their working memory (8). By standardizing the OCT workflow (21) and using AI-enhanced software to focus clinician attention on that standardized workflow, cognitive load barriers to OCT use could be reduced. In the LightLab experience, a standardized OCT workflow meaningfully affected physician PCI decisions and reduced equipment use but increased procedure time by 9 min on average (27). Our eye-tracking findings suggest that Ultreon™ 2.0 may streamline the interpretation process by reducing complex information into manageable chunks, consistent with the principles of cognitive load theory.

Study limitations

Several limitations are worth mentioning. First, our study used static images, so participating physicians had to provide interpretations and answers without the ability to manipulate the images dynamically. This simplifies the task and may underestimate the streamlining provided by Ultreon™ 2.0 compared with AptiVue™. Second, although our participants had diverse training and experience with OCT, all were recruited from a single center.

Conclusions

When using OCT to make PCI decisions, physicians showed improved viewing efficiency with preserved accuracy using the AI-enabled, visually streamlined Ultreon™ 2.0 software compared with the AptiVue™ platform.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

GC: Writing – original draft, Writing – review & editing. NP: Writing – review & editing. TS: Writing – review & editing. MS: Writing – review & editing.

Funding

The authors declare financial support was received for the research, authorship, and/or publication of this article.

This work was funded by an unrestricted educational grant from Abbott Vascular.

Conflict of interest

MS, NP, and TS have received honoraria from Abbott Vascular.

The remaining author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Ali ZA, Galougahi KK, Mintz GS, Maehara A, Shlofmitz R, Mattesini A. Intracoronary optical coherence tomography: state of the art and future directions. EuroIntervention. (2021) 17:e105–23. doi: 10.4244/EIJ-D-21-00089

2. Sung JG, Sharkawi MA, Shah PB, Croce KJ, Bergmark BA. Integrating intracoronary imaging into PCI workflow and catheterization laboratory culture. Curr Cardiovasc Imaging Rep. (2021) 14(6):6. doi: 10.1007/s12410-021-09556-4

3. Asan O, Choudhury A. Research trends in artificial intelligence applications in human factors health care: mapping review. JMIR Hum Factors. (2021) 8(2):e28236. doi: 10.2196/28236

4. Williams LH, Drew T. What do we know about volumetric medical image interpretation?: a review of the basic science and medical image perception literatures. Cogn Res Princ Implic. (2019) 4:21. doi: 10.1186/s41235-019-0171-6

5. Bartuś S, Siłka W, Kasprzycki K, Sabatowski K, Malinowski KP, Rzeszutko Ł, et al. Experience with optical coherence tomography enhanced by a novel software (Ultreon™ 1.0 software): the first one hundred cases. Medicina. (2022) 58(9):1227. doi: 10.3390/medicina58091227

6. Januszek R, Siłka W, Sabatowski K, Malinowski KP, Heba G, Surowiec S, et al. Procedure-related differences and clinical outcomes in patients treated with percutaneous coronary intervention assisted by optical coherence tomography between new and earlier generation software (Ultreon™ 1.0 software vs. AptiVue™ software). J Cardiovasc Dev Dis. (2022) 9(7):218. doi: 10.3390/jcdd9070218

7. Paas F, Tuovinen JE, Tabbers H, Van Gerven PWM. Cognitive load measurement as a means to advance cognitive load theory. Educ Psychol. (2003) 38(1):63–71. doi: 10.1207/S15326985EP3801_8

8. Ehrmann DE, Gallant SN, Nagaraj S, Goodfellow SD, Eytan D, Goldenberg A, et al. Evaluating and reducing cognitive load should be a priority for machine learning in healthcare. Nat Med. (2022) 28(7):1331–3. doi: 10.1038/s41591-022-01833-z

9. Mikhailova A, Raposo A, Sala SD, Coco MI. Eye-movements reveal semantic interference effects during the encoding of naturalistic scenes in long-term memory. Psychon Bull Rev. (2021) 28(5):1601–14. doi: 10.3758/s13423-021-01920-1

10. Tatler BW, Hayhoe MM, Land MF, Ballard DH. Eye guidance in natural vision: reinterpreting salience. J Vis. (2011) 11(5):5. doi: 10.1167/11.5.5

11. Motoki K, Saito T, Onuma T. Eye-tracking research on sensory and consumer science: a review, pitfalls and future directions. Food Res Int. (2021) 145:110389. doi: 10.1016/j.foodres.2021.110389

12. Kooiker MJG, Pel JJM, van der Steen-Kant SP, van der Steen J. A method to quantify visual information processing in children using eye tracking. J Vis Exp. (2016) 113:54031. doi: 10.3791/54031

13. van der Gijp A, Ravesloot CJ, Jarodzka H, van der Schaaf MF, van der Schaaf IC, van Schaik JPJ, et al. How visual search relates to visual diagnostic performance: a narrative systematic review of eye-tracking research in radiology. Adv Health Sci Educ Theory Pract. (2017) 22(3):765–87. doi: 10.1007/s10459-016-9698-1

14. LoGiudice AB, Sherbino J, Norman G, Monteiro S, Sibbald M. Intuitive and deliberative approaches for diagnosing “well” versus “unwell”: evidence from eye tracking, and potential implications for training. Adv Health Sci Educ Theory Pract. (2021) 26(3):811–25. doi: 10.1007/s10459-020-10023-w

15. Al-Moteri MO, Symmons M, Plummer V, Cooper S. Eye tracking to investigate cue processing in medical decision-making: a scoping review. Comput Human Behav. (2017) 66:52–66. doi: 10.1016/j.chb.2016.09.022

16. Ashraf H, Sodergren MH, Merali N, Mylonas G, Singh H, Darzi A. Eye-tracking technology in medical education: a systematic review. Med Teach. (2018) 40(1):62–9. doi: 10.1080/0142159X.2017.1391373

17. Kok EM, Jarodzka H, de Bruin ABH, BinAmir HAN, Robben SGF, van Merriënboer JJG. Systematic viewing in radiology: seeing more, missing less? Adv Health Sci Educ Theory Pract. (2016) 21(1):189–205. doi: 10.1007/s10459-015-9624-y

18. Sibbald M, de Bruin ABH, Yu E, van Merrienboer JJG. Why verifying diagnostic decisions with a checklist can help: insights from eye tracking. Adv Health Sci Educ Theory Pract. (2015) 20(4):1053–60. doi: 10.1007/s10459-015-9585-1

19. Henderson JM. Gaze control as prediction. Trends Cogn Sci. (2017) 21(1):15–23. doi: 10.1016/j.tics.2016.11.003

20. Ali ZA, Landmesser U, Maehara A, Matsumura M, Shlofmitz RA, Guagliumi G, et al. Optical coherence tomography-guided coronary stent implantation compared to angiography: a multicentre randomised trial in PCI—design and rationale of ILUMIEN IV: OPTIMAL PCI. EuroIntervention. (2023) 389:1466–76. doi: 10.4244/EIJ-D-20-00501

21. Shlofmitz E, Croce K, Bezerra H, Sheth T, Chehab B, West NEJ, et al. The MLD MAX OCT algorithm: an imaging-based workflow for percutaneous coronary intervention. Catheter Cardiovasc Interv. (2022) 100(Suppl 1):S7–13. doi: 10.1002/ccd.30395

22. Kalyuga S. The expertise reversal effect. Managing cognitive load in adaptive multimedia learning. IGI Global (2009). p. 58–80.

23. Knop M, Weber S, Mueller M, Niehaves B. Human factors and technological characteristics influencing the interaction of medical professionals with artificial intelligence-enabled clinical decision support systems: literature review. JMIR Hum Factors. (2022) 9(1):e28639. doi: 10.2196/28639

24. Markus AF, Kors JA, Rijnbeek PR. The role of explainability in creating trustworthy artificial intelligence for health care: a comprehensive survey of the terminology, design choices, and evaluation strategies. J Biomed Inform. (2021) 113:103655. doi: 10.1016/j.jbi.2020.103655

25. Loftus TJ, Tighe PJ, Ozrazgat-Baslanti T, Davis JP, Ruppert MM, Ren Y, et al. Ideal algorithms in healthcare: explainable, dynamic, precise, autonomous, fair, and reproducible. PLoS Digital Health. (2022) 1(1):e0000006. doi: 10.1371/journal.pdig.0000006

26. Adadi A, Berrada M. Peeking inside the black-box: a survey on explainable artificial intelligence (XAI). IEEE Access. (2018) 6:52138–60. doi: 10.1109/ACCESS.2018.2870052

27. Bergmark B, Dallan LAP, Pereira GTR, Kuder JF, Murphy SA, Buccola J, et al. Decision-making during percutaneous coronary intervention guided by optical coherence tomography: insights from the LightLab initiative. Circ Cardiovasc Interv. (2022) 15(11):872–81. doi: 10.1161/CIRCINTERVENTIONS.122.011851

Keywords: optical coherence tomography, artificial intelligence, eye tracking, intracoronary imaging, OCT, Ultreon, AptiVue

Citation: Cioffi GM, Pinilla-Echeverri N, Sheth T and Sibbald MG (2023) Does artificial intelligence enhance physician interpretation of optical coherence tomography: insights from eye tracking. Front. Cardiovasc. Med. 10:1283338. doi: 10.3389/fcvm.2023.1283338

Received: 25 August 2023; Accepted: 20 November 2023;

Published: 8 December 2023.

Edited by:

Kenichiro Otsuka, Osaka City University Graduate School of Medicine, Japan

Reviewed by:

Hirotoshi Ishikawa, Kashibaseiki Hospital, Japan

Haibo Jia, The Second Affiliated Hospital of Harbin Medical University, China

© 2023 Cioffi, Pinilla-Echeverri, Sheth and Sibbald. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Matthew Gary Sibbald matthew.sibbald@medportal.ca

Abbreviations AI, artificial intelligence; AOI, area of interest; DT, dwell time; EEL, external elastic lamina; LA, lumen area; MLA, minimal lumen area; MSA, minimal stent area; OCT, optical coherence tomography; PCI, percutaneous coronary intervention; TFF, time to first fixation; TTT, total task time.
