Abstract

Quality by design (QbD) has recently been introduced in pharmaceutical product development in a regulatory context and the process of implementing such concepts in the drug approval process is presently on-going. This has the potential to allow for a more flexible regulatory approach based on understanding and optimisation of how design of a product and its manufacturing process may affect product quality. Thus, adding restrictions to manufacturing beyond what can be motivated by clinical quality brings no benefits but only additional costs. This leads to a challenge for biopharmaceutical scientists to link clinical product performance to critical manufacturing attributes. In vitro dissolution testing is clearly a key tool for this purpose and the present bioequivalence guidelines and biopharmaceutical classification system (BCS) provide a platform for regulatory applications of in vitro dissolution as a marker for consistency in clinical outcomes. However, the application of these concepts might need to be further developed in the context of QbD to take advantage of the higher level of understanding that is implied and displayed in regulatory documentation utilising QbD concepts. Aspects that should be considered include identification of rate limiting steps in the absorption process that can be linked to pharmacokinetic variables and used for prediction of bioavailability variables, in vivo relevance of in vitro dissolution test conditions and performance/interpretation of specific bioavailability studies on critical formulation/process variables. This article gives some examples and suggestions on how clinical relevance of dissolution testing can be achieved in the context of QbD, derived from a specific case study for a BCS II compound.

INTRODUCTION

The regulatory framework surrounding the manufacture of pharmaceutical products ensures patient safety through the use of well-defined processes with specified parameter ranges governed by a change control process, which places a significant burden on industry. As a consequence, it is difficult to implement a continuous improvement culture and some manufacturing processes remain fixed and suboptimal to the detriment of industry, the payer and potentially the patient. However, under the new Quality by Design paradigm (QbD) (1) it will be possible to use knowledge and data from product development studies to create a design space within which continuous improvement can be implemented but where the burden for management of change control lies within Industry without the need to seek further regulatory approval. This represents a fundamental shift in the relationship between regulators and the pharmaceutical scientists and will have a major impact on the way pharmaceutical products and processes are developed and their quality assured.

Traditionally, pharmaceutical development has focused on the delivery of product to the next phase of clinical studies and thus formulation design has tended to be iterative and empirical. Change was driven by the need to modify the process as part of scale up or where an early clinical formulation did not possess the required attributes for a commercial product (e.g. shelf-life). Where possible, change during phase III was kept to a minimum to avoid the need for bioequivalence studies (in vitro or in vivo) to bridge between pivotal clinical trial material and commercial product. Manufacturing processes were therefore fixed early with the intention that material produced from those processes would be equivalent in quality, with quality measured by end product testing to specification. Dissolution testing was then used to demonstrate that batches of product have similar performance to pivotal clinical batches. Generally, there is no relationship established between the in vitro test and in vivo performance for immediate release solid dosage forms.

This approach often leads to the desired clinical attributes provided by the formulation not being considered explicitly at the onset of development and consequently not being designed into the product. In this environment, testing of the product was performed post manufacture to ensure the quality of each clinical or commercial batch. While this approach has been successful in assuring product quality to the public, it has led to a less efficient pharmaceutical development and manufacturing sector. This impacts on the availability and costs of medicines to society (2) and it does not build the understanding that facilitates continuous improvements. In recognition of this, significant opportunities are available currently to improve pharmaceutical development and manufacturing via a shift in approaches to product and process development, analysis, control and regulation. As pharmaceuticals continue to have an increasingly prominent role in healthcare, Quality by Design (QbD) has recently been introduced in pharmaceutical product development in a regulatory context (1).

The aim of QbD is to make more effective use of the latest pharmaceutical science and engineering principles and knowledge throughout the lifecycle of a product. This has the potential to allow for more flexible regulatory approaches where, for example, post-approval changes can be introduced without prior approval and end-product batch testing can be replaced by real time release. Through this understanding, the process and product can be designed to ensure quality and the role of end product testing is reduced. Under QbD, dissolution becomes a key tool for understanding product performance and for measuring the impact of changes in input parameters or process. A design space bounded by clinical quality rather than equivalent dissolution may allow greater opportunity for continuous improvement without regulatory approval. (Clinical quality is defined as the product having attributes that assure the same safety and efficacy as the pivotal clinical trial. For the purpose of this paper, the product will be bioequivalent or have equivalent pharmacokinetics as the pivotal trials product.)

In this context good pharmaceutical quality ensures that the risk of not achieving the desired clinical attributes is acceptably low (3). The pharmaceutical industry has taken steps to embrace QbD via the development of a discussion document known as ‘Mock P.2’ with a fictitious tablet product, Examplain (4,5).

Establishing boundaries based on the clinical impact of the product design and manufacturing process is a challenge for biopharmaceutical scientists, as links need to be established from manufacturing and composition variables to product clinical performance. The different components of this chain of events related to safety/efficacy of the active drug are illustrated in Fig. 1.

Traditional surrogates for measuring clinical quality (i.e. clinical pharmacokinetics studies) are not viable for the large number of batches generated during process establishment, as it is not feasible to test all batches in the clinic. Under QbD, variants would be deliberately made at the extremes of the proposed design space to look for variability rather than trying to make all batches equivalent. The present bioequivalence guidelines and biopharmaceutical classification system (BCS) provide a platform for application of in vitro dissolution as a surrogate for clinical quality. However, to support QbD, the application of dissolution needs to be further developed beyond generic approaches to compound and product specific applications. This can be achieved by presenting a higher level of understanding, which incorporates a link to clinical quality, in regulatory documentation and by applying risk based concepts, which are also an important element in the new regulatory paradigm (6).

Therefore, this paper will review the currently accepted practices for demonstrating clinical quality and then propose further development of these practices to meet the needs of pharmaceutical development, manufacture and regulation in the twenty-first century, particularly focussing on approaches for BCS II compounds. These approaches will focus on the evaluation of the clinical impact of key product and process variables leading to establishment of the clinical boundary of the design space and subsequent potential regulatory flexibility and continuous improvement, which is part of the FDA pilot programme (7). Finally, a generic overview of the approaches for BCS II compounds and suggestions for BCS III and IV compounds will be presented. This review is focussed on understanding the safety and efficacy of a product in relation to bioavailability of the parent drug, as drug pharmacokinetics are widely accepted as a surrogate for safety and efficacy (8,9). Aspects of safety and efficacy related to impurities and degradation products, or other much less common factors such as excipient interactions on pharmacokinetics beyond dissolution effects and local irritation caused by API or excipients, are not discussed in this paper.

Body

Established Approaches to Assure Clinical Quality

Clinical quality in a QbD context should ideally be defined for a certain compound based on a relationship between pharmacokinetics (PK) and pharmacodynamics (PD) of desirable and undesirable effects. However, due to the difficulty of establishing such PK/PD relationships and the need for validation of PD biomarkers against clinical efficacy and safety, this information is not readily available in many development projects. In such cases, the pharmacokinetic limits defined in the regulatory framework within the bioequivalence area provide a conservative basis to define clinical quality. Translated to the QbD situation, this implies that manufacturing acceptance criteria and manufacturing or composition changes should produce products with in vivo performance within the acceptance criteria for bioequivalence. Development of other more novel approaches to establish clinically meaningful quality criteria should be an area for future research and consideration but is outside the scope of the present paper. Also, it is the intention here to present the process to link in vitro methods to clinical outcomes rather than a discussion of the formulation and process parameters that should be investigated.

Currently, the general approach in US and Europe for demonstrating equivalent safety and efficacy for a new or changed product relative to the approved or early phase clinical trial product is shown in Fig. 2. In summary clinical quality (safety and efficacy) can be assessed by comparing drug pharmacokinetics in volunteers after administration of the reference and new product (Bioequivalence studies) or for BCS Class I compounds comparing dissolution across the physiological pH range (8–10). The BCS is used to describe the key factors for predicting drug absorption in man and identify the likelihood that changes to the formulation or manufacturing process will affect clinical pharmacokinetics and therefore safety and efficacy (10). Thus according to BCS, a substance is categorised on the basis of its aqueous solubility (sol) and intestinal permeability (perm) where four classes are defined [Class I (high sol/high perm), II (low sol/high perm), III (high sol/low perm), and IV (low sol/low perm)]. Additionally, the properties of drug substance are combined with the dissolution characteristics of the drug product to control clinical quality. The drugs with high solubility and high permeability are grouped as Class I drugs for which biowaivers are possible (8,9). For the remaining classes, a bioequivalence study is generally required because in vitro tests that could be used to assess the impact of the changes on clinical safety and efficacy are not readily available. However some (smaller) changes, which have been shown to have a low probability of affecting drug dissolution (11) and absorption, thus safety and efficacy, can be assessed using dissolution testing (12–14). A more comprehensive review of possibilities for replacing in vivo bioequivalence studies with in vitro dissolution has been published (15). 
Moving forward towards meaningfully large design spaces (1) will likely require changes greater than those allowed under SUPAC (Scale-Up and Postapproval Changes). It is also not practical to test all potential product variants generated during the establishment of product and manufacturing design space in the clinic. Therefore, using compound specific knowledge it will be necessary to establish a link between in vitro tests and the safety and efficacy (volunteer PK). Once this link has been established, the in vitro (dissolution) test can be used as a surrogate of clinical performance and the clinical performance of all variants from the design space establishment can be assessed. Taking the concept of using volunteer pharmacokinetics to measure safety, efficacy and dissolution, the following Section describes an approach to establish a compound/product specific dissolution test that is a surrogate for bioavailability.
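The four-class BCS categorisation described above can be written as a small lookup. The sketch below is illustrative only: it assumes the two inputs have already been judged "high" or "low" against the applicable regulatory criteria (which are not restated here), and the function names are hypothetical.

```python
def bcs_class(high_solubility: bool, high_permeability: bool) -> str:
    """Return the BCS class (I-IV) from the two binary drug substance attributes."""
    if high_solubility and high_permeability:
        return "I"
    if high_permeability:
        return "II"   # low solubility, high permeability
    if high_solubility:
        return "III"  # high solubility, low permeability
    return "IV"       # low solubility, low permeability


def biowaiver_currently_possible(bcs: str) -> bool:
    """Per the text, dissolution-based biowaivers are currently possible for Class I only."""
    return bcs == "I"


# The case-study compound: low solubility above pH 6, high permeability.
print(bcs_class(high_solubility=False, high_permeability=True))
```

For the remaining classes the text notes that a bioequivalence study is generally required, apart from smaller changes that can be assessed by dissolution testing; that nuance is deliberately left out of this sketch.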

Use of Dissolution Testing as a Surrogate for Clinical Quality Assessment in QbD-Case Example

Compound Properties

The compound has a molecular weight of 475 with a ClogP of 5.7 and LogD (Octanol) 2.6. The drug substance is a weak base with pKa 9.41 and 5.33 (dibasic) that displays high permeability but low solubility due to low solubility above pH 6. As such, it is a BCS Class II compound. It is stable in gastrointestinal fluid. The highest strength is 300 mg given as tablets.

Risk Identification, Analysis and Evaluation

It is important to identify and understand the raw materials and process variables that could impact upon clinical safety and efficacy through effects on dissolution. This is especially relevant for low solubility drugs, such as the presented compound. An Initial Clinical Quality Risk Assessment (6) was performed to identify these risks with an aim to assess the in vivo impact through dissolution of the most relevant raw material attributes and process variables.

The key question asked during this risk assessment process was ‘what is the overall risk of each potential failure mode affecting in vivo pharmacokinetics (and therefore patient safety and efficacy)?’ The potential failure modes identified as part of this process and the associated RPNs (Risk Product Number), which represent the overall magnitude of risk derived from science and prior knowledge, are presented in Fig. 3. The RPN is the product of objective scores for detectability, probability and severity.
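As a minimal sketch of how such a ranking works, the RPN can be computed as the product of the three scores and the failure modes sorted by it. The 1 to 5 scoring scale, the score values and the second failure mode below are assumptions made purely for illustration; they are not taken from Fig. 3.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FailureMode:
    name: str
    severity: int       # assumed 1-5 scale, 5 = most severe
    probability: int    # assumed 1-5 scale, 5 = most likely (or unknown)
    detectability: int  # assumed 1-5 scale, 5 = hardest to detect

    @property
    def rpn(self) -> int:
        """RPN as the product of the three scores."""
        return self.severity * self.probability * self.detectability


def rank_by_rpn(modes: List[FailureMode]) -> List[FailureMode]:
    """Highest-risk failure modes first."""
    return sorted(modes, key=lambda m: m.rpn, reverse=True)


# Illustrative scores only.
modes = [
    FailureMode("hypothetical low-risk mode", severity=2, probability=2, detectability=1),
    FailureMode("drug substance particle size", severity=4, probability=5, detectability=4),
]
for mode in rank_by_rpn(modes):
    print(mode.name, mode.rpn)
```

Scoring detectability so that a higher number means "harder to detect" keeps all three factors pointing the same way, so a larger product always means a larger risk.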

Figure 3 shows that the failure modes with the potential to retard dissolution rate, for example drug substance particle size, carry the highest risk of impacting in vivo pharmacokinetics. The highest risks to the clinical performance of drug product tablets (shown in red) were identified and are presented in Table I with their respective dissolution retardation mechanisms.

The highest risk factors had been identified and covered a broad range of potential in vivo dissolution retardation mechanisms. The next step was to manufacture a range of tablets incorporating these variables that could be tested in a clinical study to assess the impact of varying these variables on in vivo pharmacokinetics. Due to ethical and logistical reasons testing of all potential variables in vivo was impractical. Therefore, the bioavailability of tablet variants manufactured to incorporate relevant retardation mechanisms through changes of only the highest risk raw materials and process variables was assessed in a volunteer study. Future variants (which should be lower risk than those tested in vivo) would then be assessed using a discriminatory in vitro validated dissolution test that would be developed to act as a surrogate for in vivo data. Another advantage of this approach is that it may allow the development of an in vitro in vivo correlation (IVIVC).

Based on the output from the Initial Clinical Quality Risk Assessment, four tablets were selected for study: the standard tablet (Variant A), which represents the commercial tablet formulation, and three modified variants based on it that incorporate the highest risk product and process variables (Table II). The three modified variants consisted of a drug substance particle size variant, a process variant and a formulation variant.

All three tablet variants, plus the standard tablet, were tested using a preliminary discriminatory dissolution test (see below). This assessment ensured that tablets were included that covered both a range of dissolution profiles and all the dissolution retardation mechanisms previously identified. This provided the greatest chance of establishing an IVIVC and ensuring the broad applicability of the selected dissolution test.

Establishment of Dissolution Method Conditions

Initially, consideration was given to the standard approaches to the development of a dissolution method such as that proposed by the FDA (16). Following this approach, pH modification alone would have produced a method that allowed complete release (pH 1.2, USP2, 50 rpm) and also had some physiological relevance (that is, conditions representative of the stomach).

However, this would have been incompatible with the scientific risk-based approach in which the risk assessment and risk evaluation steps of the overall risk assessment stage (6) are fundamental to understanding the risk control strategy to be adopted. Within this framework the following elements were considered particularly important:

1. Identification of hazard
2. Likelihood of hazard occurring
3. Impact of hazard occurring
4. Ability to detect event.

Within this context and by consideration of ICH Q6A guidance (17), the objectives for the initial dissolution method were:

1. To distinguish amongst different processing and formulation variables
2. To have in vivo relevance
3. To complete dissolution within a timescale appropriate for a routine control test (this is a desirable feature, but sometimes separate in vivo predictive in vitro methods may be needed that are not suitable for routine control)

The dissolution of the tablets was assessed in aqueous buffers across the pH range 1.2 to 6.8 (see Fig. 4). At low pH, tablet dissolution was fast, with high variability observed between individual tablets and poor discrimination. At neutral pH, low recoveries were observed due to the low solubility of the drug at pH 6.8. From these studies, it was concluded that aqueous buffers did not provide the optimum conditions for a test capable of differentiating between processing and formulation variables for the tablets.

In accordance with the Dissolution testing guidance for industry (18), the use of surfactants to increase solubility by micellar solubilisation was evaluated. The dissolution of tablets was assessed in surfactant-containing media. Dissolution in one surfactant medium exhibited the potential for differentiation between processing and formulation variables while providing conditions resembling those of the small intestine, where bile acid mixed micelles act as solubilisers (Fig. 4). The optimum surfactant concentration was identified and, at this concentration, the rate of tablet dissolution was sufficiently slow to provide the potential for discrimination between tablet variants whilst affording complete dissolution within a timescale appropriate for use as a routine control test.

The in vitro performance (dissolution rate) of the three tablet variants, plus the standard tablet, was assessed using the preferred method, the dissolution test with surfactant. The dissolution profiles obtained demonstrated differentiation between the tablet variants, indicating that the method can detect changes in the drug product caused by deliberately varying the highest risk process parameters and product attributes identified from the Initial Clinical Quality Risk Assessment.

Evaluation of the Tablet Variants In Vivo

Once these tablet variants had been produced, incorporating the highest risk product and process variables identified from the Initial Clinical Quality Risk Assessment and providing a good range of in vitro dissolution profiles, they were dosed in a clinical volunteer pharmacokinetic study, together with an oral solution. The solution was used as a comparison to the tablet variants, both as a reference to allow calculation of in vivo dissolution of the tablets via deconvolution and to demonstrate whether more rapid dissolution than Variant A (standard tablet) would still produce the same clinical safety and efficacy. The wide dissolution range allowed the best opportunity for an IVIVC to be developed. This could be achieved in accordance with the IVIVC guidance for Industry (14). This guidance is relevant, as the concepts it contains are applicable to the development of an IVIVC for a BCS Class II compound in an immediate release product when dissolution is the rate-determining step.

The plasma concentration-time profiles for the oral solution and each of the 4 drug substance tablet variants are summarized in Fig. 5.

The 4 tablet variants exhibited similar in vivo performance and thus it was concluded that the clinical pharmacokinetics of the drug were not affected significantly by the variations in the tablets assessed in this study. As the 4 tablet variants have similar in vivo performance but different in vitro profiles it was apparent that there would be no direct relationship and so development of an IVIVC was not attempted.

In summary, the in vitro dissolution test was able to differentiate between the tablet variants incorporating the highest risk product and process variables resulting in slower in vitro dissolution. However, the slower in vitro dissolution had no significant impact on the pharmacokinetics of drug substance in human subjects. This lack of dependency could be explained by a high enough drug solubility in the GI tract which means that dissolution is faster than other physiological processes (e.g. gastric emptying and permeation). Also the long elimination half-life makes Cmax less sensitive to changes in absorption rate. The in vivo study demonstrated that:

1. The product and process variables and related retardation mechanisms investigated in this study do not impact on clinical pharmacokinetics within the tested ranges.
2. Tablets manufactured with a dissolution rate greater than or equal to that of the slowest dissolving formulation (Tablet Variant D) will demonstrate equivalent pharmacokinetics to the standard tablets (Tablet Variant A) and to an oral solution.
   - Dissolution rate is not important within the range studied.
   - Tablet variants with dissolution profiles more rapid than that of Tablet Variant A will also produce similar bioavailability, as evidenced by the similar performance of the oral solution.
3. As the variants dosed encompassed three different mechanisms to alter drug release from the tablet, the overly discriminatory dissolution test is a sensitive surrogate to assess clinical quality of all outputs from further processing studies.
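The remark above that a long elimination half-life makes Cmax less sensitive to absorption rate can be illustrated with a textbook one-compartment model with first-order absorption. This is a sketch under that modelling assumption only; the rate constants and half-lives used are hypothetical and are not the pharmacokinetic parameters of the case-study compound.

```python
import math


def cmax_relative(ka: float, ke: float) -> float:
    """Cmax expressed as a fraction of F*Dose/V for a one-compartment model.

    ka and ke are the first-order absorption and elimination rate constants (1/h).
    At tmax = ln(ka/ke)/(ka - ke) the concentration equals (F*Dose/V)*exp(-ke*tmax).
    """
    if math.isclose(ka, ke):
        return math.exp(-1.0)  # limiting case ka -> ke, where tmax = 1/ke
    tmax = math.log(ka / ke) / (ka - ke)
    return math.exp(-ke * tmax)


def cmax_ratio_when_ka_halved(ka: float, half_life_h: float) -> float:
    """Ratio of Cmax after halving the absorption rate constant to the original Cmax."""
    ke = math.log(2.0) / half_life_h
    return cmax_relative(ka / 2.0, ke) / cmax_relative(ka, ke)


# Hypothetical comparison: same slowing of absorption, short versus long half-life.
for half_life in (2.0, 24.0):
    print(half_life, round(cmax_ratio_when_ka_halved(ka=1.0, half_life_h=half_life), 3))
```

In this simplified model the same halving of the absorption rate lowers Cmax much less for the longer half-life, which is consistent with the qualitative explanation given in the text.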

Application of Clinically Relevant Dissolution Criteria in Establishment of a Design Space

QbD principles have been utilised to develop the product and gain an in-depth understanding of the clinical impact of the highest risk product and process variables. Initially, these risks were considered to be relatively high based on unknown probability (default high score), high severity and low detectability (high risk score). However, after execution of a successful risk reduction programme, which included the manufacture of tablet variants incorporating the highest risk variables, the development of a clinically relevant dissolution test, and the dosing of the tablet variants in the in vivo study, it was clear that the absolute risk was low. This is based on the fact that probability is now considered low for the highest risks, with detectability increased, and severity reduced. If future product is shown to have a dissolution profile faster than Variant D, clinical quality will be assured. These concepts were used to establish the design space (1) for the product.

Furthermore the dissolution test will assist in the qualification of future changes such as site, scale, equipment, and method of manufacture using the dissolution method and limit provided by Variant D.
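The acceptance rule described here, that a future batch should dissolve at least as fast as Variant D, can be sketched as a point-by-point comparison of dissolution profiles. The sampling times and percentages below are invented placeholders, not data from the study, and the function name is hypothetical.

```python
from typing import Dict


def within_safe_space(batch: Dict[int, float], slowest_tested: Dict[int, float]) -> bool:
    """True if the batch dissolves at least as fast as the slowest clinically tested profile.

    Both profiles map sampling time (min) to percent dissolved; only shared
    time points are compared.
    """
    shared = sorted(set(batch) & set(slowest_tested))
    if not shared:
        raise ValueError("profiles have no common sampling times")
    return all(batch[t] >= slowest_tested[t] for t in shared)


# Placeholder profile standing in for Variant D (values are illustrative only).
variant_d = {15: 40.0, 30: 65.0, 45: 80.0, 60: 90.0}
new_batch = {15: 55.0, 30: 80.0, 45: 92.0, 60: 98.0}
print(within_safe_space(new_batch, variant_d))
```

A routine specification would more likely be expressed as a limit at one or two time points; the full-profile comparison is used here only because it maps most directly onto the statement in the text.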

Use of Dissolution Testing as a Surrogate for Clinical Quality Assessment in QbD-Recommendations

From examination of the case study five key steps to ascertaining clinical performance by dissolution testing in QbD can be identified. These are:

1. Conduct a Quality Risk Assessment (QRA) to allow the most relevant risks to clinical quality to be identified based on prior knowledge of this and other products.
2. Develop dissolution test(s) with physiological relevance that are most likely to identify changes in the relevant mechanisms for altering drug dissolution, e.g. testing at lowest acceptable solubility/mildest agitation.
3. Understand the importance of changes to these most relevant manufacturing variables on clinical quality based on dissolution data combined with
   a. BCS based prior knowledge
   b. And/or clinical ‘bioavailability’ data
4. Establish the dissolution limit which assures clinical quality (i.e. no effect by changes)
   a. Clinical ‘bioavailability/exposure’ data
      i. Classical IVIVC (already accepted today in SUPAC)
      ii. In vivo “safe space” (see below)
   b. Prior knowledge (BCS).
5. Ensure dissolution within established limits to assure clinical quality used to define a Design Space.

The above example illustrates the approach to be used for a class II drug in connecting dissolution to bioavailability and thereby assuring clinical quality of a product. A BCS based approach to establishing the link for class I, III and IV drugs is proposed in Fig. 6.

For Class I drugs, it is proposed that dissolution within 30 min would provide sufficient assurance of clinical quality. This limit is based on the present understanding that, for such rapid dissolution, product drug dissolution does not affect rate and extent of absorption for a class I drug (10). For BCS class I drugs, in vitro dissolution is already accepted as a surrogate for in vivo studies for IR formulations with sufficiently rapid dissolution (8,10) and should thus be possible to apply also within the context of QbD. Thus, no specific IVIVC studies would be needed to establish a clinically relevant Design Space. The current regulatory guidance suggests testing at three different pH values within the physiological range and the use of mild agitation (100 rpm in USP I method or 50 rpm in USP II method). In the context of QbD, testing at the most challenging pH with respect to drug solubility would be sufficient unless the risk analysis identifies other pH dependent effects on dissolution such as pH dependence of critical excipients.

This simpler approach should also be possible to apply to class III drugs. The suitability of dissolution as a surrogate for bioequivalence studies of class III drugs is generally not accepted in the context of formulation changes or generic products. However, it should be noted that in the context of QbD, a more “knowledge rich” situation where risks are identified, carefully evaluated and mitigated provides more favourable conditions for replacing in vivo studies with in vitro tests. In addition, it has been proposed to extend the use of biowaivers based on dissolution testing to class III drugs (19–22). The scientific rationale is that for products with rapid dissolution, dissolution is not a rate limiting factor in the absorption process and is thereby a low risk factor. This has been illustrated in simulations by Kortejarvi and colleagues which showed that the difference in Cmax was less than 10% between a solid dosage form with complete dissolution after 1 hour and a solution, for a wide range of elimination rates and low permeabilities (23). This insensitivity has also been confirmed by in vivo studies with metformin (22) and ranitidine (24). Thus, applying dissolution testing as a surrogate for bioavailability studies in the context of QbD represents an acceptably low risk provided that excipients that could affect permeability or gastro-intestinal transit are not changed. Similar in vitro testing strategies as described for class I drugs above, i.e. testing at the most discriminating conditions, are judged to be sufficient also for class III drugs. Although simulations show that absorption of class III drugs is less sensitive to dissolution variations within normal limits than that of class I drugs (23), the impact of substantially delayed dissolution in vivo is potentially higher for class III drugs due to the risk of a reduction in extent of absorption if dissolution occurs in more distal parts of the gastro-intestinal tract. As a result, a more conservative dissolution limit of 15 min is proposed for class III drugs. If slower product dissolution than the above proposed limits is desired for class I and III drugs, a bioavailability study is probably needed using the same approach suggested for class II drugs.

The same approach as suggested for class II drugs should also be applicable to class IV drugs, provided that there are no additional effects beyond dissolution that could affect bioavailability, such as excipient changes affecting permeability. It should be noted that the BCS classification is a fairly conservative approach, in which drugs classified as having low solubility could actually behave as high solubility drugs, e.g. as shown by a similar plasma profile for a solution and a solid formulation. In such a case, class IV drugs could be handled similarly to class III drugs.
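The class-by-class proposals above can be condensed into a simple decision helper. This is a simplified reading of the text rather than a reproduction of Fig. 6: the function name is hypothetical, the input is assumed to be the time at which the product meets its dissolution criterion under the most challenging test conditions, and the special case of a class IV drug that behaves like a high solubility drug is not modelled.

```python
from typing import Optional

# Dissolution limits (min) proposed in the text; classes II and IV have no generic limit.
PROPOSED_LIMIT_MIN = {"I": 30.0, "III": 15.0}


def proposed_limit(bcs: str) -> Optional[float]:
    """Return the proposed generic dissolution limit in minutes, or None if there is none."""
    if bcs not in ("I", "II", "III", "IV"):
        raise ValueError(f"unknown BCS class: {bcs!r}")
    return PROPOSED_LIMIT_MIN.get(bcs)


def needs_bioavailability_study(bcs: str, dissolution_time_min: float) -> bool:
    """True if a compound-specific in vivo link is suggested instead of a generic limit."""
    limit = proposed_limit(bcs)
    if limit is None:
        return True  # class II and IV: link dissolution to clinical data
    return dissolution_time_min > limit


print(needs_bioavailability_study("III", 20.0))
```

The helper also assumes that excipients which could affect permeability or gastro-intestinal transit are unchanged, which the text states as a precondition for the class III proposal.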

Setting Specifications within QbD

A generic approach to the establishment of a dissolution test that controls clinical quality and the role of BCS within this has been described. The final aspect to this approach is to set a dissolution specification that assures safety and efficacy (Fig. 7).

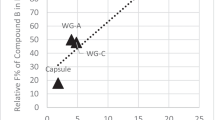

For a BCS Class II compound, as the case example above, 3 scenarios exist as illustrated in Fig. 8, these are:

1. A classical level A IVIVC (14) is established and a specification that controls Cmax and AUC by maximum ±10% is set in accordance with available acceptance criteria.
2. A “safe space” specification is set based on no effect seen in the clinical study and the slowest dissolution profile tested in the clinical study (i.e. what occurred in the case study presented; in this case the specification is set at the boundary of knowledge rather than on a biological effect).
3. The final option is a mixed safe space/IVIVC result in which clinical pharmacokinetics is only affected for a few of the variants tested clinically. Again this would allow a dissolution specification to be set that allowed Cmax and AUC to be controlled to 10%.

Further Investigation of Safe Space Concept by Absorption Simulations

Generally, it is believed that IVIVC is possible for Class II if in vitro dissolution rate is similar to in vivo dissolution rate (10). However, the above case example illustrated a situation where a classical IVIVC was not achieved, not due to a failure of the in vitro dissolution method but as a consequence that dissolution was not a critical factor for peak plasma levels and extent of exposure within the range studied. Modelling by use of absorption simulation software was performed to further explore the likelihood of attaining such “safe space” for class II drugs by probing the role of physiological factors like gastric emptying and permeation rate in relation to the dissolution rate.

In vitro and in vivo simulations were conducted. Dissolution profiles were generated in silico using the Noyes-Whitney/Nernst-Brunner expression based on solubility and other physicochemical inputs. Particle size was varied to mimic different formulation performance (25,26). Disposition pharmacokinetics was described by a compartmental pharmacokinetic model. Plasma profiles were simulated for a neutral compound with poor solubility (0.02 μg/ml, chosen to represent a Class II compound at 30 mg), and with high or very high permeability (Peff 10 and 40 × 10⁻⁴ cm/s). A suspension where particle size was varied to change the dissolution rate and a solution (‘infinite’ solubility) were also modelled. Two gastric emptying rates were studied with both formulation types: fast (0.1 min) and average (15 min). A level A IVIVC across the dissolution range could be established only when the gastric emptying rate was fast and permeability was very high. In the more physiologically relevant simulations, gastric emptying and/or permeability had a dampening effect on absorption such that dissolution was not rate determining, and so a ‘safe space’ was produced for faster dissolving formulations. We conclude from this that for the magnitude of dissolution perturbations that could be expected to be introduced into formulations by competent formulators, ‘safe space’ or ‘mixed safe space/IVIVC’ is the most likely outcome. This conclusion is somewhat at variance with the perceived knowledge and should be the subject of further research.
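To make the role of particle size concrete, the following is a minimal sketch of a Noyes-Whitney/Nernst-Brunner type calculation for monodisperse spherical particles in a fixed volume, integrated with a simple Euler scheme. It is not the absorption simulation software used by the authors. The diffusion coefficient, particle density, diffusion layer thickness, solubility and volume are all assumed values chosen so that the example dissolves on a visible timescale.

```python
import math
from typing import List


def simulate_dissolution(dose_mg: float, radius_um: float, solubility_mg_ml: float,
                         volume_ml: float, diffusivity_cm2_s: float = 7e-6,
                         density_mg_ml: float = 1200.0, layer_um: float = 30.0,
                         t_end_min: int = 60, dt_s: float = 1.0) -> List[float]:
    """Fraction dissolved at each whole minute from 0 to t_end_min.

    Rate law: dM/dt = -(D * A / h) * (Cs - C), with A the total particle
    surface area, h an assumed fixed diffusion layer thickness, Cs the
    solubility and C the bulk concentration.
    """
    r0_cm = radius_um * 1e-4
    h_cm = layer_um * 1e-4
    sphere = (4.0 / 3.0) * math.pi
    n_particles = dose_mg / (density_mg_ml * sphere * r0_cm ** 3)
    steps_per_min = max(1, round(60.0 / dt_s))
    dt = 60.0 / steps_per_min
    solid_mg = dose_mg
    profile = [0.0]
    for _ in range(t_end_min):
        for _ in range(steps_per_min):
            # Particle radius shrinks as solid mass is lost (particle count is constant).
            r_cm = (solid_mg / (n_particles * density_mg_ml * sphere)) ** (1.0 / 3.0)
            area_cm2 = n_particles * 4.0 * math.pi * r_cm ** 2
            conc = (dose_mg - solid_mg) / volume_ml
            rate_mg_s = diffusivity_cm2_s * area_cm2 / h_cm * max(solubility_mg_ml - conc, 0.0)
            solid_mg = max(solid_mg - rate_mg_s * dt, 0.0)
        profile.append(1.0 - solid_mg / dose_mg)
    return profile


# Assumed inputs: 30 mg dose in 900 ml, solubility 0.1 mg/ml, two particle radii.
fine = simulate_dissolution(30.0, radius_um=5.0, solubility_mg_ml=0.1, volume_ml=900.0)
coarse = simulate_dissolution(30.0, radius_um=25.0, solubility_mg_ml=0.1, volume_ml=900.0)
print(round(fine[30], 2), round(coarse[30], 2))
```

Reducing the particle radius increases the total surface area for the same dose, which is why particle size is a convenient single input for generating a family of faster and slower dissolution profiles.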

Polli and co-workers have investigated this subject and developed a model showing that immediate release products may not be expected to yield Level A correlations (27). Instead, the degree of correlation was found to depend, in a gradual and continuous fashion, on the ratio of the first-order permeation rate constant to the first-order dissolution rate constant (α) and on the fraction of dose absorbed. Polli and Ginski went on to investigate the model using three compounds, metoprolol, piroxicam and ranitidine (Class I, II and III compounds respectively). The data generated showed that absorption was dissolution rate limited for large α values (α ≫ 1), permeation rate limited for small α values (α ≪ 1) and ‘mixed’ dissolution rate limited and permeation rate limited when α ≈ 1 (28). The modelling described in this article is consistent with the earlier findings of Polli and co-workers (27). It was noticed that, although piroxicam is a BCS Class II compound with a high permeation rate, the resulting α values for the more rapidly dissolving formulations of piroxicam were below 1 or about 1, emphasising the possibility of a ‘safe space’ or lack of a classical Level A IVIVC for a Class II compound.
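The role of α can be made concrete with a short sketch. It assumes only that dissolution and permeation are two sequential first-order processes; the closed form below is derived from that assumption rather than copied from (27), and it is normalised to the absorbable dose (i.e. it ignores the fraction-of-dose-absorbed term). The function name and the α values are illustrative.

```python
import math


def fraction_absorbed(fd, alpha):
    """Fraction absorbed when a fraction fd of the dose has dissolved.

    alpha = kp / kd (permeation over dissolution rate constant), alpha > 0.
    With r = 1 - fd:  Fa = 1 - (alpha * r - r**alpha) / (alpha - 1).
    """
    if not 0.0 <= fd <= 1.0 or alpha <= 0.0:
        raise ValueError("need 0 <= fd <= 1 and alpha > 0")
    r = 1.0 - fd
    if abs(alpha - 1.0) < 1e-9:
        # Limit alpha -> 1 of the expression above.
        return 1.0 if r == 0.0 else 1.0 - r + r * math.log(r)
    return 1.0 - (alpha * r - r ** alpha) / (alpha - 1.0)


for alpha in (0.01, 1.0, 100.0):
    profile = [round(fraction_absorbed(fd, alpha), 3) for fd in (0.25, 0.5, 0.75, 1.0)]
    print(f"alpha = {alpha:6.2f}: Fa at Fd = 0.25, 0.5, 0.75, 1.0 -> {profile}")
```

For large α the fraction absorbed tracks the fraction dissolved almost one to one, which is the situation in which a Level A correlation is plausible. For small α absorption lags far behind dissolution, so differences in dissolution profile are largely hidden by the permeation step.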

In summary, a large α is required for IVIVC of a Class II compound. However, for many Class II compounds in formulations developed by competent formulators following Quality by Design approaches this is unlikely. A safe space operating model is the more likely outcome, because quality should be higher and, as a consequence, dissolution sufficiently fast to produce a low α. Nevertheless, the formulator is provided with the unique opportunity to elucidate the mechanisms controlling oral absorption of this class of compounds through the formulation approaches.

In the cases (mainly BCS Class II and IV drugs) that merit a specific bioavailability study to settle the link between dissolution testing and clinical quality, it is highly likely that the results could be generalised beyond the specific factors studied. This could be achieved by understanding the mechanism behind the effect on drug dissolution. If the mechanism is known, it should be possible to predict the in vivo performance from in vitro dissolution data for all formulation/manufacturing factors affecting the same mechanism just by including one representative and relevant factor in the in vivo study. For example, if tablet disintegration is expected to be a critical factor, tablets with different disintegration times would be included in the in vivo study. However, disintegration could be affected by several factors such as tablet compression, granule properties and binders/disintegrants. In such a case, the aim of the in vivo study would be to validate the in vitro method against alterations in tablet disintegration rather than against a specific formulation or process factor. This should be achievable by using tablets in the in vivo study with different disintegration times obtained by varying just one factor, e.g. compression force, rather than testing all factors in vivo. Although this is a trivial example, it indicates how a higher level of understanding can be applied in the context of QbD.

Future Perspectives

The application of dissolution testing as a surrogate for in vivo studies in establishing clinically relevant dissolution criteria may be further developed beyond the proposals in the present paper. Three key areas can be identified that could be applied as is or after additional refinement by research work:

1. Development of in vitro dissolution methods that provide more in vivo realistic conditions

2. Establishment of relationships between dissolution and bioavailability variables using mechanistically based computational absorption modelling

3. Mechanistic understanding and modelling of drug dissolution based on drug substance, excipient and formulation properties.

Current dissolution methods are based on providing an acceptable risk level as proven by long term experience rather than on modelling the in vivo situation. More in vivo relevant in vitro test methods would allow more flexible approaches than described above, further reducing the need for additional in vivo studies. Some factors related to medium composition are already well established in the literature, for example the lower surface tension of GI fluids compared with dissolution buffers, intestinal surfactants, ionic composition and GI volumes (29). However, this knowledge is not fully utilised in the dissolution testing that supports product development. More in vivo realistic methods that capture dynamic changes in medium composition are available or under development (30,31) and may play a role in QbD in the future. Other factors that still need more research and understanding in order to establish in vivo relevant dissolution methods are modelling of intestinal hydrodynamics, in vivo drug precipitation/solid state conversions and conditions for dissolution in the more distal parts of the GI tract.

In addition, the use of algorithms that model the absorption process in a more mechanistic manner (32,33) than simple IVIVC approaches would aid the establishment of drug specific definitions of “safe space” dissolution limits (see Fig. 8). This kind of approach would require validation of applied in silico models using bioavailability data for the specific drug obtained in development studies thereby potentially removing the need to conduct specific IVIVC type studies.
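As a sketch of how a drug specific “safe space” dissolution limit might be read off from simulated or observed data, the helper below scans formulation variants from fastest to slowest dissolving and returns the slowest one for which it and every faster variant keep the exposure ratio within chosen bounds. The function name, the data and the choice of dissolution metric are hypothetical; the 0.80 to 1.25 bounds are borrowed from conventional bioequivalence acceptance limits purely as an assumption.

```python
def safe_space_lower_limit(variants, lower=0.80, upper=1.25):
    """Return the lowest dissolution value that still lies in the safe space.

    variants: iterable of (dissolution_pct, exposure_ratio) pairs, where
    exposure_ratio is e.g. Cmax relative to a reference formulation.
    Returns None if even the fastest dissolving variant is out of bounds.
    """
    limit = None
    # Walk from the fastest to the slowest dissolving variant and stop at
    # the first one whose exposure ratio leaves the acceptance range.
    for dissolution_pct, ratio in sorted(variants, reverse=True):
        if not lower <= ratio <= upper:
            break
        limit = dissolution_pct
    return limit


# Hypothetical values: percent dissolved at a fixed time point versus
# Cmax ratio to the reference. These are not data from the case study.
example_variants = [
    (95, 1.00), (85, 0.98), (70, 0.95), (50, 0.86), (35, 0.71), (20, 0.52),
]
print(safe_space_lower_limit(example_variants))
```

In practice the exposure ratios would come from a validated in silico model or from the bioavailability study itself, and the acceptance range would need to be justified for the specific drug.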

Mechanistic understanding of dissolution data can be gained by generating information in dissolution experiments beyond the amount of drug dissolved. This could be obtained, for example, by imaging techniques, analysis of dissolved excipients or particle analysis techniques such as Focused Beam Reflectance Measurement. Identifying dissolution mechanisms in this manner improves the quality of risk assessments and also the ability to define relevant in vitro tests. Computational algorithms integrating the relevant factors controlling drug dissolution from a product may also be utilised in the future, for example to optimise design spaces and focus experimental efforts on relevant topics.

To be cost-efficient, it is important that investments made in the areas above are translated into models that are not only useful in a certain development project but also more generally applicable. Ultimately, this will be achieved by striving for mechanistic models that capture scientific knowledge and are validated by project experience. Thus, there is a need for additional research as well as for better ways to capture and share project learning in order to support a more efficient pharmaceutical development and manufacturing process.

CONCLUSION

In vitro dissolution testing together with BCS considerations could provide a key link between manufacturing/product design variables and clinical safety/efficacy in QbD. Some guidance on how to apply dissolution testing in this context, building on the current BE/BCS guidelines, has been suggested, derived from a specific case study for a BCS II compound.

The concept of IVIVC between in vitro dissolution and in vivo drug plasma concentrations needs to be extended in the context of QbD beyond present Level A targets. “Safe space” regions, where bioavailability is unaffected by pharmaceutical variations that give different dissolution profiles, should be an acceptable or even desirable outcome in an IVIVC type study.

Dissolution testing should be applicable to assure desired clinical performance for a wide range of drugs supporting the use of QbD based on BCS considerations and specific product knowledge. This is ascertained by conducting biorelevant dissolution tests, assessing relevant manufacturing variables and determining a link between dissolution and bioavailability data as exemplified in the present paper.

References

1. ICH Harmonised Tripartite Guideline. International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use. Q8: Pharmaceutical Development. 2003.

2. US Food and Drug Administration, Department of Health and Human Services. Pharmaceutical cGMPs for the 21st Century-A Risk-Based Approach. 2004.

3. J. Woodcock. Pharmaceutical quality in the 21st century—an integrated systems approach at AAPS workshop on pharmaceutical quality—a science and risk based approach in the 21st century. 2005.

4. Mock P2 for “Examplain” Hydrochloride. Available at: http://www.efpia.org/Content/Default.asp?PageID=263&DocID=2933. Accessed March 5, 2008.

5. C. Potter, R. Beerbohm, A. Coupe, F. Erni, G. Fischer, S. Folestad, et al. A guide to EFPIA’s Mock P.2 document. Pharmaceutical Technology Europe. Available at: http://www.ptemag.com/pharmtecheurope/PAT/A-guide-to-EFPIAS-Mock-P2-document/ArticleStandard/Article/detail/392205. Accessed March 5, 2008.

6. ICH Harmonised Tripartite Guideline. International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use. Q9: Quality Risk Management. 2006.

7. US Food and Drug Administration. FDA Docket No. 2005N-0262—Submission of Chemistry, Manufacturing and Controls Information in a New Drug Application Under The New Pharmaceutical Quality Assessment System: Notice of Pilot Program (Federal Register/ Vol.17, No. 134/Thursday, July 14, 2005/pp40719–40720). 2005.

8. US Food and Drug Administration. Guidance for Industry Waiver on In vivo Bioavailability and Bioequivalence Studies for Immediate-Release Solid Oral Dosage forms based on a Biopharmaceutics Classification System. 2000.

9. The European Agency for the Evaluation of Medicinal Products (EMEA) CfPMPC. Note for Guidance on the Investigation of Bioavailability and Bioequivalence. 2002.

10. G. L. Amidon, H. Lennernas, V. P. Shah, and J. P. Crison. A theoretical basis for a biopharmaceutics drug classification: The correlation of in vitro drug product dissolution and in vivo bioavailability. Pharm. Res. 12:413–420 (1995).

11. D. A. Piscitelli, S. Bigora, C. Propst, S. Goskonda, P. Schwartz, L. J. Lesko, L. Augsburger, and D. Young. The impact of formulation and process changes on in vitro dissolution and the bioequivalence of piroxicam capsules. Pharmaceutical Development & Technology. 3(4):443–452 (1998).

12. US Food and Drug Administration. Guidance for Industry Immediate Release Solid Oral Dosage Forms Scale-Up and Postapproval Changes: Chemistry, Manufacturing, and Controls, In Vitro Dissolution Testing, and In Vivo Bioequivalence Documentation. 1995.

13. The European Agency for the Evaluation of Medicinal Products (EMEA) CfPMPC. Note for Guidance on quality of modified release products: A: oral dosage forms, B: transdermal dosage forms (quality). 2000.

14. US Food and Drug Administration. Guidance for Industry Extended Release Oral Dosage Forms: Development, Evaluation, and Application of In Vitro/In Vivo Correlations. 1997.

15. E. Gupta, D. M. Barends, E. Yamashita, K. A. Lentz, A. M. Harmsze, V. P. Shah, et al. Review of global regulations concerning biowaivers for immediate release solid oral dosage forms. Eur. J. Pharm. Sci. 29(3–4 SPEC. ISS.):315–324 (2006).

16. C. Noory, N. Tran, L. Ouderkirk, and V. Shah. Steps for development of a dissolution test for sparingly water-soluble drug product. Dissolution Technologies. 7:16–18 (2000).

17. ICH Harmonised Tripartite Guideline. Specifications: Test procedures and acceptance criteria for new drug substances and new drug products: Chemical substances. 1999.

18. US Food and Drug Administration. Guidance for Industry Dissolution Testing of Immediate Release Solid Oral Dosage Forms. 1997.

19. L. X. Yu, G. L. Amidon, J. E. Polli, H. Zhao, M. U. Mehta, D. P. Conner, et al. Biopharmaceutics Classification System: The Scientific Basis for Biowaiver Extensions. Pharm. Res. 19:921–925 (2002).

20. H. Vogelpoel, J. Welink, G. L. Amidon, H. E. Junginger, K. K. Midha, H. Moeller, et al. Biowaiver monographs for immediate release solid oral dosage forms based on biopharmaceutics classification system (BCS) literature data: Verapamil hydrochloride, propranolol hydrochloride, and atenolol. J. Pharm. Sci. 93:1945–1956 (2004).

21. H. H. Blume, and B. S. Schug. The biopharmaceutics classification system (BCS): Class III drugs - better candidates for BA/BE waiver? Eur. J. Pharm. Sci. 9:117–121 (1999).

22. C.-L. Cheng, L. X. Yu, H.-L. Lee, C.-Y. Yang, C.-S. Lue, and C.-H. Chou. Biowaiver extension potential to BCS Class III high solubility-low permeability drugs: bridging evidence for metformin immediate-release tablet. Eur. J. Pharm. Sci. 22:297–304 (2004).

23. H. Kortejarvi, A. Urtti, and M. Yliperttula. Pharmacokinetic simulation of biowaiver criteria: the effects of gastric emptying, dissolution, absorption and elimination rates. Eur. J. Pharm. Sci. 30:155–166 (2007).

24. J. E. Polli. In vitro–in vivo relationships of several “immediate” release tablets containing a low permeability drug. In Young et al. (eds.), In Vitro–In Vivo Correlations, Plenum Publishing Corp, New York, 1997, pp 191–198.

25. E. Brunner. Velocity of reaction in non-homogeneous systems. Zeit. physikal. Chem. 47:56–102 (1904).

26. W. Nernst. Theory of reaction velocity in heterogeneous systems. Zeit. physikal. Chem. 47:52–55 (1904).

27. J. E. Polli, J. R. Crison, and G. L. Amidon. Novel approach to the analysis of in vitro–in vivo relationships. J. Pharm. Sci. 85:753–760 (1996).

28. J. E. Polli, and M. J. Ginski. Human drug absorption kinetics and comparison to Caco-2 monolayer permeabilities. Pharm. Res. 15:47–52 (1998).

29. E. Galia, E. Nicolaides, D. Horter, R. Lobenberg, C. Reppas, and J. B. Dressman. Evaluation of various dissolution media for predicting in vivo performance of class I and II drugs. Pharm. Res. 15(5):698–705 (1998).

30. S. Blanquet, E. Zeijdner, E. Beyssac, J. P. Meunier, S. Denis, R. Havenaar, et al. A dynamic artificial gastrointestinal system for studying the behavior of orally administered drug dosage forms under various physiological conditions. Pharm. Res. 21:585–591 (2004).

31. M. Wickham, and R. Faulks. Apparatus, system and method. Patent WO/2007/010238. 2007.

32. B. Agoram, W. S. Woltosz, and M. B. Bolger. Predicting the impact of physiological and biochemical processes on oral drug bioavailability. Adv. Drug Deliv. Rev. 50(Suppl. 1):S41–S67 (2001).

33. M. B. Bolger, B. Agoram, R. Fraczkiewicz, and B. Steere. Simulation of absorption, metabolism, and bioavailability. Drug Bioavailability. Methods Princ. Med. Chem. 18:420–443 (2003).

Acknowledgements

The authors wish to thank colleague Iain Grant in Pharmaceutical & Analytical R&D and Colm Farrell from Icon who provided assistance with the research and manuscript preparation.

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License ( https://creativecommons.org/licenses/by-nc/2.0 ), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Dickinson, P.A., Lee, W.W., Stott, P.W. et al. Clinical Relevance of Dissolution Testing in Quality by Design. AAPS J 10, 380–390 (2008). https://doi.org/10.1208/s12248-008-9034-7

DOI: https://doi.org/10.1208/s12248-008-9034-7