Abstract

Over the last 70 years, Feynman diagrams have played an essential role in the development of many theoretical predictions derived from the Standard Model Lagrangian. In fact, today they have become an essential and seemingly irreplaceable tool in quantum field theory calculations. In this article, we propose to explore the development of computational methods for Feynman diagrams with a special focus on their automation, drawing insights from both theoretical physics and the history of science. From the latter perspective, the article particularly investigates the emergence of computer algebraic programs, such as the pioneering SCHOONSCHIP, REDUCE, and ASHMEDAI, designed to handle the intricate calculations associated with Feynman diagrams. This sheds light on the many challenges faced by physicists when working at higher orders in perturbation theory and reveals, as exemplified by the test of the validity of quantum electrodynamics at the turn of the 1960s and 1970s, the indispensability of computer-assisted procedures. In the second part of the article, a comprehensive overview of the current state of the algorithmic evaluation of Feynman diagrams is presented from a theoretical point of view. It emphasizes the key algorithmic concepts employed in modern perturbative quantum field theory computations and discusses the achievements, ongoing challenges, and potential limitations encountered in the application of the Feynman diagrammatic method. Accordingly, we attribute the enduring significance of Feynman diagrams in contemporary physics to two main factors: the highly algorithmic framework developed by physicists to tackle these diagrams and the successful advancement of algebraic programs used to process the involved calculations associated with them.

1 Introduction

The Standard Model of elementary particle physics, which describes three of the four known fundamental forces in the universe—electromagnetic, weak and strong interactions; gravitation is excluded—provides us with an impressively comprehensive framework for understanding the behavior of the constituents of all visible matter, as well as all the unstable elementary particles that have been discovered, for example in cosmic rays or at particle accelerators. As such, it stands as one of the most rigorously tested theories in the realm of fundamental physics. Over the past decades, a myriad of different high-precision measurements have been performed and, remarkably, the nineteen free parameters of the Standard Model align perfectly—within statistical uncertainties—with these various experimental results. Nonetheless, the process of testing such a theory involves not only precise experiments but also accurate theoretical predictions. To achieve this, observables, i.e., measurable quantities like cross sections or the average lifetime of a particle, must be derived from the abstract Lagrangian of the Standard Model. This is done within the theoretical framework of quantum field theory (QFT).

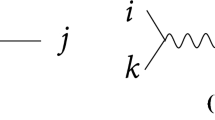

Aimed since the late 1920s at unifying quantum mechanics and special relativity, QFT today builds on groundbreaking developments made in the late 1940s in the framework of quantum electrodynamics (QED), the relativistic theory that deals more specifically with the electromagnetic field. In particular, four leading physicists—Julian Schwinger, Sin-Itiro Tomonaga, Richard Feynman, and Freeman Dyson—developed techniques, known as renormalization, which enabled the infinities that had hitherto hindered the correct development of the theory to be discarded and replaced by finite measured values [1]. Generally, calculations within this framework are intricate and involved. However, driven by conceptual and mathematical issues, Richard Feynman devised an ingenious approach to address this complexity: the intuitive graphical notation known as Feynman diagrams (or graphs) [2]. These diagrams are employed to represent interaction processes, utilizing lines to depict particles and vertices to symbolize their interactions. The external lines in a graph symbolize the initial and final state particles, while the internal lines are associated with virtual particles (see Fig. 1). Thanks to the associated Feynman rules, mathematical terms are then assigned to each element of these diagrams. [Footnote 1] This allows the translation of the visual representation of a particle process [Footnote 2] into an algebraic expression providing its probability amplitude. Although the diagrams were initially met with skepticism by established experts, Dyson succeeded in providing a rigorous derivation of Feynman diagrams and their related rules from the foundations of QED, thereby dispelling doubts [9, 10]. Since then, Feynman graphs have become an indispensable tool for calculations in QFT. In fact, they have played a crucial role in the development of the vast majority of theoretical predictions derived from the Standard Model Lagrangian.

Fig. 1 Leading-order Feynman diagram for the process \(e^+e^-\rightarrow \mu ^+\mu ^-\). An electron–positron pair annihilates into a muon–antimuon pair via a virtual photon \(\gamma \). Throughout this paper, we define the external lines on the left (right) of the diagram to denote the initial (final) state of the process. All Feynman diagrams have been produced with FeynGame [11].

The success of Feynman diagrams has garnered significant attention from historians of physics, resulting in numerous works devoted to their study. Many of them focused on Feynman’s creative contributions to QED, analyzing in detail their context and the various influences received, and unveiling their epistemic novelty [1, 12, 13, 14, 15, 16]. [Footnote 3] In a different vein, David Kaiser, who examined Feynman diagrams as calculation tools, delved into their dispersion in postwar physics as it provides “a rich map for charting larger transformations in the training of theoretical physicists during the decades after World War II” [3, p. xii]. This work remarkably expanded our understanding of the pivotal role Feynman graphs currently hold in high-energy physics. However, despite this existing literature, there are still notable gaps in historical investigations regarding an essential technical aspect related to the application of Feynman’s diagrammatic method in high-energy physics: the algebraic manipulation of the associated equations. Exploring this aspect in more depth would yield valuable insights, as it has long shaped the work of numerous theoretical physicists and remains particularly relevant in contemporary physics due to the many challenges it presents. Indeed, based on the so-called perturbation theory, which assumes the particle interactions to be small, calculations with Feynman diagrams are only approximate within QFT. A single graph represents only an individual term in the perturbative expansion, and to achieve higher accuracy, more and more Feynman diagrams of increasing complexity must be included, leading to more and more complex computations. In modern calculations, for phenomena such as those observed at the Large Hadron Collider (LHC), significant computational efforts on dedicated computer clusters are required, giving rise to a multitude of technical challenges in manipulating the expressions involved.
The algorithmic evaluation of Feynman diagrams has consequently now almost evolved into a field of research in its own right.

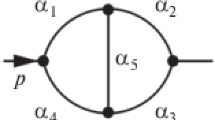

The present paper therefore aims to address directly the topic of algebraic and algorithmic approaches to Feynman diagrams. Our goal is to provide some insights into related issues and the current status of their solution. As we will see, this naturally leads us to considerations on the question of automating the calculations associated with Feynman graphs. Indeed, the rather well-defined algebraic structure of amplitudes derived from Feynman diagrams has long raised the hope that the computation of physical observables like cross sections and decay rates can be automated to a very high degree. This became progressively more desirable as higher orders in perturbation theory were calculated to improve accuracy. As part of this process, the number of mathematical operations required increases dramatically, given that the number of Feynman diagrams can become very large, and that their individual computation involves very complicated mathematical expressions, in particular if some of the internal lines form closed loops (see Fig. 2). The question of automation, which remains particularly relevant today, then naturally throws light on the specific role played by computers in Feynman diagram calculations. This is why special attention will be paid to the development of dedicated algebraic programs. By enabling mathematical expressions to be manipulated at variable level, they have become absolutely crucial to the progress made over the last decades. And in fact, as computer algebra systems such as MATHEMATICA, MAXIMA or MAPLE [17,18,19] are now an integral part of the physicist’s daily toolbox, it is interesting to note—as our developments will illustrate—that questions initially posed by problems linked to the calculation of Feynman diagrams were instrumental in providing the initial impetus for the development of such programs and laid some of their foundations. [Footnote 4]

In line with the special issue it belongs to, this “tandem” article consists of two distinct parts. Section 2, authored by historian of science Jean-Philippe Martinez, delves into the emergence of algebraic programs designed for calculating Feynman diagrams. Specifically, it highlights three pivotal programs, namely SCHOONSCHIP, REDUCE, and ASHMEDAI, whose development began around 1963. These programs played an essential role in testing the validity of QED at higher orders of perturbation theory at the turn of the 1960s and 1970s. Moreover, they brought about significant changes in the practices associated with Feynman diagram computations. This last point is then examined through an analysis of the critical assessment made at this time of the introduction of algebraic programs in theoretical high-energy physics, revealing their success, future challenges and highlighting the theme of automation. Section 3, authored by physicist Robert V. Harlander, offers an overview of the developments toward the automated evaluation of Feynman diagrams since these pioneering days, up until today. Rather than aiming for a historically precise discussion though, the focus of this part is on an outline of the main algorithmic concepts developed for perturbative QFT calculations based on Feynman diagrams. Computer algebra systems play an even bigger role today, as the evaluation of the occurring momentum integrals has been largely mapped onto algebraic operations, as will be discussed in detail in Sect. 3.5. In order to give a realistic impression of the field to the interested non-expert, the presentation includes a number of technical details which should, however, still be accessible to the mathematically inclined layperson. An excellent review for specialists is given by Ref. [21] and pedagogical introductions to calculating Feynman diagrams can be found in Refs. [22, 23], for example. 
Note, however, that two appendices at the end of the paper (Appendices A and B) provide further resources on the structure of Feynman diagrams and the calculation of sample one-loop integrals, respectively. They are designed to provide further insights into the mathematics related to Feynman diagrams, but are not essential for the understanding of the issues explored in the main text of the article.

While each part can be read independently due to its self-contained nature, the article as a whole aims to achieve a sense of unity. However, as the above outline of the contents suggests, this is not attempted by a continuous historical treatment from the beginnings to today. Our main emphasis is on the origins of algebraic and algorithmic approaches to Feynman diagrams, and their current status. The rich and comprehensive developments at the intermediate stages, on the other hand, are beyond the scope of the current format, and shall be left for future investigations. Instead, the article strives to achieve unity not only through its introductory and concluding sections, but also by establishing a continuous link between the technical challenges faced in the early stages (Sect. 2) and those encountered in the present (Sect. 3). Thus, cross-references are used repeatedly to supply context for both the historical and physical developments. They provide a better understanding of the many questions that have arisen since the inception of theoretical evaluation of Feynman diagrams and highlight the transformations and prospects brought about by the advancement of computer algebraic programs.

2 The emergence of computer-aided Feynman diagram calculations

2.1 QED put to experimental test: toward the need of algebraic programs

2.1.1 1950s–1960s: higher-and-higher orders

Since its inception in the late 1920s, the development of QED faced numerous challenges that impeded its progress. [Footnote 5] The most notable obstacle was the occurrence of divergent integrals when dealing with higher-order perturbative calculations. These integrals led to undefined results, in particular when considering the self-energy of the electron and the vacuum zero-point energy of electron and photon fields. As briefly mentioned in the introduction, the development of renormalization techniques in the late 1940s then sought to address the issue of divergent integrals in QED by absorbing infinities into “bare” parameters and replacing them with finite, measurable values (see Sect. 3.4). These techniques paved the way for the development of covariant and gauge invariant formulations of quantum field theories, enabling in principle the computation of observables at any order of perturbation theory. Consequently, in light of these remarkable results, the newly refined QED was promptly subjected to rigorous experimental testing.

The more accurate the experiments became, the more one had to calculate higher orders of the perturbative series to determine corrections to the theoretical values and check for potentially unknown physical effects. In most cases, higher-order irreducible elements appear, which require computation from scratch. Feynman’s diagrammatic approach provides physicists with powerful tools for such an undertaking. Thanks to the Feynman rules, each term of the perturbative expansion can be deduced from graphs whose number of vertices increases with the correction orders. The internal intricacy of the different diagrams, as expressed by the appearance of loops, then reflects the growing complexity of the calculations to be performed (more details in Sect. 3). Moreover, in all instances, it must be emphasized that the number of diagrams involved in each correction increases drastically. To give an overview, let us consider the magnetic moment \({\mathbf {\mu }}_e\) of the electron, defined as \({\mathbf {\mu }}_e=-g_e e{\textbf{S}}/2m_e\), where \(m_e\) is the electron mass and \(\textbf{S}\) is its spin. Taking into account only the tree-level Feynman diagram of Fig. 3a, the gyromagnetic ratio is \(g_e=2\). The QED corrections to this quantity are called the anomalous magnetic moment, also referred to as “\(g-2\).” At order \(e^2\), they are described by the single one-loop diagram of Fig. 3b; at order \(e^4\) by 7 two-loop diagrams (see Fig. 4); at order \(e^6\) by 72 three-loop diagrams; at order \(e^8\) by 891 four-loop diagrams; and so on (see, e.g., Ref. [24]). [Footnote 6] As a result, the close relationship between experiment and theoretical calculations determined much of the dynamics of early postwar high-energy physics. [Footnote 7]

In fact, two essential calculations for the development and confirmation of QED at the lowest significant order were carried out before Feynman developed his diagrammatic method. They were related to the Lamb shift and the anomalous magnetic moment of the electron. The former refers to the energy difference between the \(^2S_{1/2}\) and \(^2P_{1/2}\) levels of the hydrogen atom, first observed in 1947 by Willis E. Lamb and Robert C. Retherford [28]. That same year, to explain this observation, Hans Bethe used non-relativistic quantum mechanics and the novel idea of mass renormalization to calculate the self-interaction of the electron with its own electromagnetic field [29]. His successful approach spurred several decisive developments in QED in the late 1940s [1, Chap. 5]. On the other hand, the successful theoretical approach to the contribution of quantum mechanical effects to the magnetic moment of an electron is due to Julian Schwinger, who in 1948 performed the first calculation of radiative corrections in QED [30]. His results were in good agreement with measurements carried out at the same time by Polykarp Kusch and Henry M. Foley at Columbia University [31, 32].
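
For orientation, Schwinger’s one-loop result can be stated compactly. The expression below is the standard textbook form rather than a formula quoted from the sources discussed here. The symbol \(a_e\) is notation introduced only for this illustration, \(\alpha = e^2/4\pi \) denotes the fine structure constant in natural units, and the numerical value assumes \(\alpha \approx 1/137.036\):

\[ a_e \equiv \frac{g_e-2}{2} = \frac{\alpha }{2\pi } \approx 0.00116 . \]

In other words, the order \(e^2\) correction shifts \(g_e\) away from 2 at the level of about one part in a thousand, which is why every further decimal place on the experimental side called for a further order on the theoretical side.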

The implementation of Feynman diagrams in the theoretical scheme of QED allowed for improved computations and the study of higher-order corrections. Robert Karplus and Norman M. Kroll estimated for the first time in 1950 the anomalous magnetic moment of the electron at order \(e^4\) [33]. A few algebraic errors affecting results to the fifth decimal place were identified in the late 1950s by André Petermann [Footnote 8] and Charles M. Sommerfield, whose new calculations anticipated the precision experiments performed in 1963 by David T. Wilkinson and Horace R. Crane [34,35,36]. Concerning the Lamb shift, the first calculations remained limited to relativistic corrections at the lowest order (see, e.g., Ref. [37]). It was only in 1960 that Herbert M. Fried and Donald R. Yennie, and, independently, Arthur J. Layzer, first calculated radiative corrections of order \(e^2\) [38, 39]. While various considerations to increase sophistication and accuracy were subsequently developed [40,41,42], it was not until 1966 that higher-order calculations were performed analytically by Maximilliano F. Soto Jr. [43]. In detail, he calculated the seven Feynman diagrams for the radiative corrections of order \(e^4\) to the elastic scattering of an electron in a Coulomb potential (Fig. 4).

Initially, Soto Jr.’s results were not independently verified by the community. This can be explained, in part, by the rather tedious nature of the calculations, as we will discuss further in Sect. 2.1.2. But it can also be seen as a lack of interest. Indeed, despite small deviations, the agreement between theory and experiment was generally considered good in the mid-1960s, both for the Lamb shift and for the anomalous magnetic moment of the electron. In fact, the community was in no hurry to pursue research at higher orders of perturbation theory (see, e.g., Ref. [44]). It was only after a rather long pause that advances in low-energy, high-precision QED experiments turned the tide. In particular, a new experimental determination of the fine structure constant \(\alpha \)—the dimensionless coupling constant associated with electromagnetic interaction—was obtained in 1969 using the Josephson effect [45]. Predicted in 1962 by Brian D. Josephson, this tunnel effect manifests itself by the appearance of a current between two superconductors separated by a narrow discontinuity made of a non-superconducting insulating or metallic material [46]. The corresponding measurement of \(\alpha \) in 1969 differed from previous measurements by three standard deviations. The new value was subsequently used to reevaluate different theoretical expressions such as the Lamb shift, fine structure, and hyperfine splitting in different atoms, as well as the anomalous magnetic moments of the electron and the muon (see, e.g., Ref. [47]). From these results and further experimental refinements, theoretical and empirical values of the \(^2S_{1/2}\) and \(^2P_{1/2}\) splitting in hydrogen showed a severe disagreement [48, 49]. This untenable situation explicitly called for the reconsideration of Soto Jr.’s results, as will be discussed in detail in Sect. 2.2.4.

New considerations of the anomalous magnetic moment of leptons were also motivated by parallel experiments aimed directly at measuring it. For the electron, various observations in the late 1960s showed a discrepancy of three standard deviations from the value obtained by Wilkinson and Crane in 1963 (see, e.g., Ref. [50]). However, the situation turned out to be rather confusing, as these results were contradicted in the early 1970s by new experiments that, in addition, improved the accuracy [51, 52]. In the case of the muon, pioneering experiments had been carried out in the late 1950s in the Nevis cyclotron laboratories at Columbia University [53, 54]. [Footnote 9] They not only made possible the observation of parity violation in muon decay, but also provided a first estimate of its anomalous magnetic moment, in good agreement with the theoretical predictions of Vladimir B. Berestetskii, Oleg N. Krokhin, and Aleksandr K. Khlebnikov [56]. Nonetheless, while the \(g_\mu -2\) CERN experiment, launched in 1959, initially confirmed this result, new measurements performed in phase 2 with a precision 25 times higher indicated in 1968 a divergence from the theory [57, 58]. These different results, taken together, stimulated an increased interest in refining the theoretical value of the anomalous magnetic moment of leptons. To this end, higher-order terms in the perturbative series were progressively computed (to be also discussed in Sect. 2.2.4).

The verification of the agreement between experimental measurements and theoretical calculations of the anomalous magnetic moments of the electron and muon became the most critical test of QED in the late 1960s and early 1970s. For the first time, experiments were accurate enough to verify the validity of the renormalization procedure and confirm the perturbative expansion up to three-loop diagrams. As mentioned above, there are in this case 72 Feynman graphs that contribute to the anomalous magnetic moment of the electron (order \(e^6\) corrections). Due to mirror symmetry, only forty of these are distinct, and of these forty, twelve involve fermion loops (Fig. 5). Three of the latter have vacuum polarization insertions of order \(e^4\), seven have vacuum polarization insertions of order \(e^2\), and two have light-by-light scattering insertions. In the case of the muon, the difference with the electronic moment comes specifically from electron loop insertions in the muon vertices of these 12 diagrams. As the order and number of diagrams increase, so do the difficulty and length of the calculation work.

2.1.2 The providential computer

In addition to issues raised by the increasing complexity of the integrations to be performed in the computation of Feynman diagrams (which will be discussed in Sect. 2.3.4), the main concern of physicists in the 1960s stemmed from the presence of Dirac matrices—also called \(\gamma \)-matrices—in the expressions. Although straightforward in principle, the calculation of their traces results in a huge number of terms as the number of matrices grows. To give a rough idea, the trace of a product of twelve different \(\gamma \)-matrix expressions generates 10,395 different terms (more details in Sect. 3.3). The calculations resulting from the need to work at higher orders proved to be “hopelessly cumbersome,” “horrendous,” if not “inhuman” [40, p. 271] [25, p. 167] [59, p. 18]. In this respect, the help of digital computers turned out to be indispensable. The gradual democratization in the scientific field of this relatively new and rapidly evolving technology proved particularly providential in this context: it was the only one that could provide effective solutions for automating the tedious tasks to be performed. [Footnote 10]
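
The term count quoted for twelve \(\gamma \)-matrices can be reproduced with a short sketch. It assumes the standard recursion for the trace of a product of \(\gamma \)-matrices, in which the first matrix is contracted in turn with each of the others, with alternating signs, and the trace of the remaining matrices is expanded in the same way. The function name `trace_terms` and the representation of each term as a signed list of index pairs are choices made for this illustration; they are not taken from any of the historical programs, and the overall factor of 4 is left out.

```python
def trace_terms(indices):
    """Expand Tr(g^{a1} ... g^{an}) / 4 into signed products of metric tensors.

    Each returned term is (sign, pairs), where every pair (a, b) stands for a
    metric tensor g^{ab}. The trace of an odd number of matrices vanishes.
    """
    indices = list(indices)
    if not indices:
        return [(1, [])]          # trace of the identity, with the factor 4 dropped
    if len(indices) % 2 == 1:
        return []                 # odd number of gamma matrices: no terms
    first, rest = indices[0], indices[1:]
    terms = []
    for k, partner in enumerate(rest):
        sign = -1 if k % 2 else 1             # signs alternate: +, -, +, ...
        remaining = rest[:k] + rest[k + 1:]   # drop the contracted partner
        for sub_sign, pairs in trace_terms(remaining):
            terms.append((sign * sub_sign, [(first, partner)] + pairs))
    return terms


# Four matrices: g^{ab} g^{cd} - g^{ac} g^{bd} + g^{ad} g^{bc}
four = trace_terms("abcd")

# Twelve matrices: 11 * 9 * 7 * 5 * 3 * 1 = 10395 terms
twelve_count = len(trace_terms(range(12)))
```

Each recursion step multiplies the number of terms by the number of remaining partners, so a trace of \(2n\) matrices produces \((2n-1)(2n-3)\cdots 3\cdot 1\) terms. This growth is what made bookkeeping by hand impractical.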

The essential role played by computers in QED soon became recognized by different theoretical physicists. In their 1970 report on the state of the field, Stanley J. Brodsky and Sidney D. Drell explicitly mentioned the techniques underlying recent extensions of computational capabilities, along with new experimental measurements, as reasons for progress toward the successful confrontation of theory with experiment [25, p. 189]. Similarly, according to Ettore Remiddi, while the renewed interest at the turn of the 1960s and 1970s in evaluating higher-order radiative corrections found its “natural justification in the recent progress in high precision, low energy QED experiments,” it only “became possible on account of the increasing use of large digital computers” [44, p. 193]. Indeed, the growing complexity of calculations had been accompanied by a corresponding growth in computing facilities, and immediately the benefits proved to be enormous. Compared to the traditional pencil-and-paper approach, three main virtues of digital computers can be highlighted. Deeply interrelated, they proved decisive for the successful development of Feynman diagram computations, as will be described below. First of all, bookkeeping—i.e., the ability of computers to record, track, and manipulate vast data sets—emerged as a direct response to problems such as the massive generation of terms in the trace of Dirac matrices. It freed physicists from the endless collection of notebooks that kept track of intermediate expressions in operations. Moreover, computers considerably improved the accuracy of calculations. Not only do they eliminate human errors in arithmetic, they also handle complex mathematical operations with exceptional precision. Finally, the introduction of digital machines brought unprecedented speed and efficiency, ultimately enabling physicists to conduct more extensive and thorough investigations and to explore a much wider range of theoretical scenarios. 
As one of the pioneers in computer-assisted QFT, Anthony C. Hearn, stated as early as 1966: “At present, a known six months of human work can be done in less than fifteen minutes on an IBM 7090” [61, p. 574].

From a computer science point of view, calculations related to Feynman diagrams require symbolic manipulation, also called algebraic computation. This domain deals with algorithms operating on objects of a mathematical nature through finite and exact representations. For high-energy physics, according to Hearn:

It is really little more than a polynomial manipulation problem involving exotic data structures with well defined rules for simplification. In addition, one needs a mechanism for the substitution for variables and simple expressions. Rational algebra need not be used, because denominators, if they occur, have a very simple structure, and can therefore be replaced by variables or expressions in the numerator. Because one has to program these capabilities as efficiently as possible, successful systems designed for QED usually also make good general-purpose polynomial manipulation systems [59, p. 19].
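
Hearn’s description can be made concrete with a minimal sketch of polynomial manipulation in which a denominator is treated as an ordinary variable. Everything in it is an assumption made for illustration: the variables `s` and `m2`, the symbol `D` standing for \(1/(s-m^2)\), the function names `poly_mul` and `simplify`, and the dictionary representation. It does not reproduce the data structures of any historical system.

```python
from collections import defaultdict

# Monomials are exponent triples (s, m2, D). D is a hypothetical symbol that
# stands for the denominator 1/(s - m2), which implies the rule s*D = 1 + m2*D.


def poly_mul(p, q):
    """Multiply two polynomials stored as {exponent triple: coefficient}."""
    out = defaultdict(int)
    for ea, ca in p.items():
        for eb, cb in q.items():
            out[tuple(x + y for x, y in zip(ea, eb))] += ca * cb
    return {e: c for e, c in out.items() if c != 0}


def simplify(poly):
    """Apply s*D -> 1 + m2*D until no monomial contains both s and D."""
    work, done = dict(poly), defaultdict(int)
    while work:
        (s, m, d), c = work.popitem()
        if s > 0 and d > 0:
            # Replace one factor s*D by the two terms 1 and m2*D.
            for e in ((s - 1, m, d - 1), (s - 1, m + 1, d)):
                work[e] = work.get(e, 0) + c
        else:
            done[(s, m, d)] += c
    return {e: c for e, c in done.items() if c != 0}


s_minus_m2 = {(1, 0, 0): 1, (0, 1, 0): -1}   # the polynomial s - m2
D = {(0, 0, 1): 1}                           # the symbol for 1/(s - m2)
result = simplify(poly_mul(s_minus_m2, D))   # reduces to the constant 1
```

The point of the design is that no rational-function arithmetic is needed: the denominator enters only as one more variable, and a single substitution rule keeps expressions in a reduced form.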

In this framework, the procedure initially followed to compute Feynman diagrams can be briefly depicted as follows:

- (i) A one-to-one mapping between the diagram and the algebra is established following the Feynman rules. We call (a) the resulting expression.
- (ii) A procedure for the automatic recognition of divergences is included.
- (iii) Dirac traces of (a) are computed.
- (iv) Information collected on point (ii) is used to carry out the renormalization program.
- (v) Integration over the internal momenta of (a) is performed.

This procedure gives the diagram’s contributions to the scattering amplitude of the particular process under study in terms of multidimensional integrals. Their resolution then enables us to extract values such as contributions to the anomalous magnetic moment of the leptons, or to the Lamb shift. The product of the amplitude with its Hermitian conjugate also provides us with the contributions to the differential cross sections. [Footnote 11]

Historically, the initial steps toward setting up such a procedure on a computer were taken in the early 1960s through the pioneering development of algebraic programs whose initial purpose was none other than to perform Dirac matrix calculations. The first acknowledged reference to such a program was made by Feynman himself, at the Third International Conference on Relativistic Theories of Gravitation held in Poland in 1962. Relating his failure to compute a diagram as part of developments of a quantum theory of gravitation, he mentioned that “the algebra of the thing was [finally] done on a machine by John Matthews,” being probably for him “the first problem in algebra that [\(\ldots \)] was done on a machine that has not been done by hand” [62, p. 852]. Most likely, Matthews was then working on a Burroughs 220—a vacuum-tube computer with a small amount of magnetic-core memory produced since 1957 by ElectroData Corporation—for which he used the dedicated algebraic language, BALGOL, to write the program [59, p. 20] [63, p. 76]. Nevertheless, it seems that the latter remained confidential, and various independent projects with similar objectives soon appeared. In line with the remarkable character of Matthews’ work, which Feynman emphasized, the new dynamics they triggered proved so powerful that their success played a major role in placing algebraic programs and their development at the heart of scientific practice. Among the various technological innovations of the 1960s, SCHOONSCHIP, REDUCE, and ASHMEDAI proved to be the most impactful and played the most fundamental role in the critical evaluation of QED.

2.2 Technological innovations in the 1960s: SCHOONSCHIP, REDUCE, and ASHMEDAI

2.2.1 SCHOONSCHIP

The program SCHOONSCHIP was developed in the early 1960s by the Dutch theorist Martinus J. G. Veltman, co-recipient with Gerard ’t Hooft of the 1999 Nobel Prize in Physics for elucidating the quantum structure of electroweak interactions. In fact, it is precisely early work dealing with this specific research theme that was at the origin of Veltman’s interest in computer algebra. SCHOONSCHIP initially aimed at facilitating the resolution of a rather basic problem in this framework, that of radiative corrections to photons interacting with a charged vector boson. Various experimental successes in the late 1950s and early 1960s of the “vector-minus-axial vector” theory had indeed led many physicists to believe that vector bosons were mediators of weak interactions. This “V-A” theory, developed in 1957 by George Sudarshan and Robert Marshak, and independently by Feynman and Murray Gell-Mann, introduced in the Hamiltonian operator an axial vector current on which the parity operator has a different effect than on the polar vector currents [64,65,66]. It had been formulated to provide a theoretical explanation for the experimental observation in 1956 at Columbia University of parity violation for the weak interaction by Chien-Shiung Wu and her assistants [67]. They were following the working hypothesis on parity of Tsung-Dao Lee and Chen Ning Yang—a work for which they won the 1957 Nobel Prize—a duo who later began a systematic study of the interactions of vector bosons with photons and worked toward deriving their specific Feynman rules [68, 69]. In this context, Lee independently tackled in 1962 the calculations of the lowest order radiative corrections to the vector–boson–photon coupling [70]. This approach, which Veltman was eager to extend while working in the theory division at CERN, is specifically what inspired the development of SCHOONSCHIP [63, p. 77] [71, p. 342]. 
Indeed, the full calculations resulted in an expression with about 50,000 terms in the intermediate steps.

Designed during an extended stay at the Stanford Linear Accelerator Center, the first operational version of SCHOONSCHIP ran in December 1963 on an IBM 7094—a second-generation (transistorized) mainframe computer—and successfully contributed to solving a problem concerning the radiative corrections to photons interacting with a charged vector boson [63, 72]. According to Veltman’s recollections on receiving his Nobel Prize, its name, rather original and difficult for many to pronounce, was chosen “among others to annoy everybody not Dutch” [71, p. 342]. In Dutch, it literally means “clean ship,” and comes from a naval expression referring to clearing a messy situation. And SCHOONSCHIP was in principle prepared to clear up more messy situations than just electroweak interaction. After all, Veltman had not designed his code to be limited to one specific problem and it soon revealed itself to be a general-purpose symbolic manipulation program capable of supporting the study of a large variety of processes [59, 71] [73, p. 289]. SCHOONSCHIP was written in assembly language, considered a low-level programming language since it consists mainly of symbolic equivalents of the architecture’s machine code instructions. This choice was made partly because Veltman considered compilers—which allow machine-independent (high-level) programming languages to be efficiently converted on a specific computer—to be responsible for wasting memory and slowing down execution speed [20, 63] [74, p. 516]. In 1966, SCHOONSCHIP was ported to the CDC 6600, a machine manufactured by Control Data Corporation and considered the first successful supercomputer [75]. With the development of hard disks and file storage, the program’s distribution to all existing CDC computers gradually became possible over the course of the 1970s [76].

2.2.2 REDUCE

REDUCE was developed from 1963 by Hearn. As a postdoc in theoretical physics at Stanford working on Feynman diagrams, the Australian-born scientist was already interested in automating their calculations [61, 77]. With this in mind, John McCarthy, one of the founders of the field of artificial intelligence, encouraged him to use LISP, the computer language he had been developing at MIT since 1958 [78]. Primarily designed for symbolic data processing, this high-level language had already been used by 1962 for tasks as diverse as electrical circuit theory, mathematical logic, game playing, and—what concerned Hearn more specifically—symbolic calculations in differential and integral calculus [79]. It allowed for the development of a system that proved “easily capable of modification or extension [and also] relatively machine independent” [80, p. 80]. In 1963, McCarthy, who had just joined the Stanford Computer Science Department, also provided Hearn access to hardware facilities.

While Hearn’s work was initially oriented toward the calculation of the algebraic trace of a product of Dirac matrices and its application to various specific problems in high-energy physics, expectations were soon surpassed, as was the case with SCHOONSCHIP [61]. The program developed using batch processing on an IBM 7090 turned out to be a general-purpose algebraic simplification system and was released as such in 1967 [80]. It was capable of handling, among other things, expansion ordering and reduction of rational functions of polynomials, symbolic differentiation, substitutions for variables and expressions appearing in other expressions, simplification of symbolic determinants and matrix expansions, as well as tensor and non-commutative algebraic calculations. As Hearn later acknowledged, the name of the program, REDUCE, was also intended with a touch of playfulness: “algebra systems then as now tended to produce very large outputs for many problems, rather than reduce the results to a more manageable form. ‘REDUCE’ seemed to be the right name for such a system” [77, p. 20].

In addition to the main LISP package, specific facilities were provided to express outputs in the form of a FORTRAN source program [80, p. 88]. Originally developed for IBM in the 1950s by John Backus and his team, FORTRAN (FORmula TRANslating system) is a compiled imperative programming language that proved particularly accessible to engineers and physicists [81]. Indeed, long computational results were converted into a format suitable for numerical computation and graphical display. In 1970, REDUCE 2 was made available. This new version offered increased facilities for simplifying algebraic expressions [82]. It was written in an ALGOL-like syntax, called RLISP. ALGOL, short for “Algorithmic Language,” refers to a family of programming languages for scientific computations originally developed in 1958.Footnote 12 These additions and modifications later contributed to the wide distribution of REDUCE and the creation of a community of users. While in the early 1970s SCHOONSCHIP was available only for the CDC 6000/7000 series, REDUCE ran on the PDP-10, most IBM 360 and 370 series machines, the CDC 6400, 6500, 6600, and 6700, and began to be implemented on new systems such as the UNIVAC 1100 series [84, 85].

2.2.3 ASHMEDAI

The development of ASHMEDAI by Michael J. Levine, an American Ph.D. student at the California Institute of Technology, also began in the early 1960s. Its genesis seems to be closely linked to that of SCHOONSCHIP. Indeed, according to Veltman, the idea of developing such programs arose from a discussion, in which Levine took part, at CERN in the summer of 1963 [63, p. 77]. The two physicists were then working on phenomena related to the development of the V-A theory. More precisely, Levine’s dissertation was dealing with neutrino processes of significance in stars [86]. In particular, he was computing the transition probabilities and rates of dissipation of energy by neutrino–antineutrino pairs for several processes predicted by recent developments in the theory of weak interaction. ASHMEDAI, which supplied assistance for these tasks, was initially written in FAP (Fortran Assembly Program), a machine-oriented symbolic language designed “to provide a compromise between the convenience of a compiler and the flexibility of an assembler” [87, p. 1]. The initial package consisted of about 4000 commands, used 20,000 wordsFootnote 13 of storage, and ran for about 8 minutes per diagram on an IBM 7090 [86, pp. 80–81].

As a symbolic manipulation program, ASHMEDAI was promptly capable of performing operations such as substitution, collection of terms, permutation of Dirac matrices and taking traces of products of Dirac matrices [88, p. 69]. So, while it was developed to “do many of the tedious straightforward operations arising in Weak Interaction and Quantum Electrodynamics calculations,” Levine saw as early as 1963 that it could naturally become a more general algebraic language to be applied to various problems [86] [89, p. 359]. This prospect, however, was never realized to the extent of SCHOONSCHIP and REDUCE. ASHMEDAI continued to be seen as a special-purpose program, with limited long-term success. But it should be noted that some of its features of specific interest to the development of high-energy physics were praised in the 1960s and 1970s (see, e.g., Refs. [26, 63]). In particular, Levine’s work to establish routines performing symbolic algebra manipulations for Dirac matrix and tensor algebra proved particularly effective. Compared to REDUCE, ASHMEDAI had the advantage of being able to compute larger products of \(\gamma \)-matrices and was initially found to be more suitable for higher-order calculations [90].

In the late 1960s and early 1970s, ASHMEDAI experienced a complete makeover. FORTRAN became the high-level source language to “facilitate writing, debugging, modifying and disseminating the code,” and an improved user interface was developed [89, p. 359]. Moreover, some algorithms were degraded to facilitate coding and many features deemed unnecessary were removed. In addition to flexibility, the use of FORTRAN offered better portability as well. Already implemented on a CDC 3600 in the late 1960s, ASHMEDAI was run by Levine on a Univac 1108 in the mid-1970s and was also available on many PDP-10 and IBM 360-370 machines [89, 90].

2.2.4 First applications

Naturally, the first results of calculations performed with the help of algebraic programs were supplied to the community by their creators and concerned electroweak interactions. For instance, motivated by the development of electron–electron and electron–positron colliding-beam projects—most notably at Stanford—Hearn collaborated in 1965 with Yung-su Tsai to compute the differential cross section for the as-of-then hypothetical process \(e^++e^-\rightarrow W^++W^-\rightarrow e^-+\bar{\nu }_e+\mu ^++\nu _\mu \) [91]. Also, Levine, whose PhD results were at odds with some more recent estimations, published in 1967 the calculations to the lowest order in the coupling constants of the cross section contributions of four Feynman diagrams for a nonlocal weak interaction mediated by a charged vector meson [88]. These two cases are examples of a type of problem involving relatively little analytical work for which algebraic programs to compute traces of Dirac matrices gradually gained popularity at the turn of the 1960s and 1970s. The implementation of SCHOONSCHIP on a CDC 6600 computer at the Brookhaven National Laboratory near New York played an important role in this process. In several cases, it proved to be valuable for low order calculations related to projected processes in upcoming electron–positron colliders (see, e.g., Refs. [92, 93]).Footnote 14

Nevertheless, the real test of the usefulness and effectiveness of algebraic programs in high-energy physics would come directly from the situation presented in Sects. 2.1.1 and 2.1.2. The pioneering work of Veltman, Hearn, and Levine in the field of computational physics had actually anticipated the situation in which computer-assisted calculations would prove essential to ensure the validity of the new QED through a careful verification of the adequacy with experiment. Thus, as required by the increasing number and complexity of higher-order Feynman diagrams, these algebraic programs naturally began to be used around 1970 for the computation of the two-loop (order \(e^4\)) contributions to the Lamb shift, and the three-loop (order \(e^6\)) contributions to the anomalous magnetic moment of the electron and the muon. An interesting aspect of this process is that it quickly took on a strong collaborative dimension that allowed for testing not only the theory but also the new computational techniques that had just been developed. Indeed, while different teams were at work to calculate all the necessary Feynman diagrams, the results were systematically cross-checked using different algebraic programs.Footnote 15

As mentioned in Sect. 2.1, with respect to the Lamb shift, the determination of radiative corrections of order \(e^4\) requires the computation of the seven Feynman diagrams shown in Fig. 4.Footnote 16 First done analytically by hand in 1966 by Soto Jr., it was not until 1970 that computer-assisted calculations were performed at Stanford by Thomas Appelquist and Brodsky [43, 48, 49]. REDUCE automatically computed the traces of Dirac matrices, applied projections, and reduced the expressions to Feynman parametric integrals (see Appendix B.2). The new value obtained, in disagreement with Soto Jr.’s, was found to be in excellent conformity with the most recent experimental results. While John A. Fox also recalculated with REDUCE the specific contribution of the “crossed” graph, most of the verifications were made by physicists closely related to CERN, where SCHOONSCHIP was available [97].Footnote 17 Benny Lautrup, Petermann and Eduardo de Rafael computed the contributions at order \(e^4\) for the “corner” diagrams, and Lautrup independently checked the contribution of the vacuum polarization term [98]. Petermann also carried out calculations for the “crossed” graph [99]. Finally, on the CERN CDC 6600 and on that of the University of Bologna, Riccardo Barbieri, Juan Alberto Mignaco, and Remiddi recomputed the contributions from all seven Feynman diagrams [100, 101]. Overall, these SCHOONSCHIP results confirmed those obtained with REDUCE. But as a further verification of the methods, in the first and last cases at CERN, contributions at order \(e^4\) of the anomalous magnetic moment of the electron were also calculated and found in good agreement with the results initially obtained by Petermann in 1957 [34].

With regard to the evaluation of contributions of order \(e^6\) to the anomalous magnetic moment of the electron, as mentioned previously, 72 diagrams had to be calculated (the twelve graphs including fermion loops are reproduced in Fig. 5). Their first approach with algebraic programs then also took place at the turn of the 1960s and 1970s.Footnote 18 With the help of REDUCE, Janis Aldins, Brodsky, Andrew J. Dufner, and Toichiro Kinoshita, split between Stanford and Cornell, determined the corrections due to the two Feynman diagrams with light-by-light scattering insertions [102].Footnote 19 Following the same method, Brodsky and Kinoshita calculated the seven graphs with vacuum polarization insertions of order \(e^2\), while the three with vacuum polarization insertions of order \(e^4\) had been computed previously with SCHOONSCHIP at CERN by Mignaco and Remiddi [104, 105]. These ten diagrams were also investigated separately by Jacques Calmet and Michel Perrottet of the Centre de Physique Théorique of Marseille who found results in good agreement [106]. For this purpose, they used an independent program written in LISP by the former. Finally, in 1971, Levine and Jon Wright presented their results for the sum of the remaining diagrams [107, 108]. Specifically, they computed with ASHMEDAI all 28 distinct graphs that do not involve fermion loops.Footnote 20 This contribution alone justifies the special place occupied by Levine’s program in this historical account. Not only did it prove to be quite “elegant,” but it was also, by its magnitude and complexity, “one of the most spectacular calculations in quantum electrodynamics” performed in the early 1970s [26, p. 14] [63, p. 77]. Indeed, bosonic loop graphs, as opposed to those with closed fermion loops, are generally much more difficult to calculate. 
The results obtained were confirmed in 1972 by Kinoshita and Predrag Cvitanović who used the CDC 6600 version of SCHOONSCHIP at Brookhaven National Laboratory but also verified some of their calculations by hand and with a PDP-10 version of REDUCE 2 [110].

The overall result of this collective effort was found to be in good agreement with the recent precision experiments carried out in the late 1960s. This was also the case for the anomalous magnetic moment of the muon, for which contributions of order \(e^6\) had naturally been calculated together with those of the electron by the various actors mentioned above [96]. As a result, by the early 1970s, the situation was such that trust had not only been improved in the new QED and QFT but also been established in algebraic programs. The achievement of similar results, by separate teams using different machines, programs, and techniques, was the guarantee of their acceptance as indispensable computational tools in theoretical high-energy physics and beyond [59, p. 19] [73] [89, p. 364].

2.3 Early 1970s: initial assessment

The rise of algebraic programs to solve QFT problems, as described above, was in fact part of a much broader movement that saw, from the mid-1940s onward, the emergence of digital computers as fully fledged tools for scientists. As far as experimental high-energy physics is concerned, Peter Galison described in his seminal book Image and Logic: A Material Culture of Microphysics how computers invaded laboratories in the postwar period and profoundly altered practices [111]. This was particularly the case with the development of the Monte Carlo method—whose dissemination in the community, between experiment and theory, was recently reassessed by Arianna Borrelli [112]—a family of algorithmic techniques aimed at calculating approximate numerical values using random processes as inputs. Stemming from work linked to the early post-war developments of the American nuclear project—most notably by Nicholas Metropolis, Stanislaw Ulam, and John von Neumann—it played a major role, particularly from the early 1960s, in the gradual emergence of computer simulations of experiments, but also, for what concerns us more directly, in the numerical estimation of complicated integrals.

All things considered, it appears that the progressive democratization of the computer in the field of physics ultimately led to the creation of a veritable community of specialists. To this end, new scientific journals were created. The first issue of the Journal of Computational Physics appeared in 1966 and that of Computer Physics Communications in 1969. Also, international meetings were soon organized in response to the keen interest aroused. In the early 1970s, a Colloquium on Advanced Computing Methods in Theoretical Physics was held in Marseille [113]. From August 2 to 20, 1971, the International Centre for Theoretical Physics in Trieste organized extended research courses on the theme of “Computing as a Language of Physics” [114]. The European Physical Society also followed the lead and set up a long series of European conferences on computational physics, the first of which was held in Geneva in April 1972 [115]. This dynamic proved to lay new foundations. As Frederick James of CERN later stated:

The advent of these schools, conferences, and journals has been important, not only in advancing the techniques of computational physics, but also in helping to establish the identity of computational physics, and offering a forum for communication between physicists from different fields whose primary working tool is the computer [116, p. 412].

Computational physics, as a field, was born. And with it, the ability of computers to perform large amounts of elementary algebra began to be fully exploited.

Widely understood, computer-assisted algebraic manipulation was a rather fashionable theme in the early 1970s. But there was still a wide gap between aspirations and reality, as Veltman illustrated in his particular style: “Many programs doing this sort of work have been written. In fact, there are probably more programs than problems. And it is a sad thing that most of these programs cannot solve any of the problems” [63, p. 75]. QFT calculations—in addition to other successes in celestial mechanics and general relativity—had nevertheless proved that a promising future was possible. Programs such as SCHOONSCHIP and REDUCE had far exceeded the expectations of their initial goal and had become general-purpose algebraic manipulation systems. Hearn, among others, therefore envisioned a successful application of these techniques in other areas, particularly in quantum physics where they appeared relevant to many subfields, such as semiconductor theory, solid state physics, or plasma theory. However, this process was still in its infancy, and it was clear that it would rely heavily on experience developed up to that point within the specific framework of QFT [59, p. 18].

As a result of the general emulation created in the emerging field of computational physics and the prospect of widespread dissemination of new algebraic methods in physics, the early 1970s proved a propitious time for an initial assessment of the various achievements to date. Several publications and talks were explicitly aimed at establishing the state of the art in computer-assisted Feynman diagram calculations. In many respects, they also addressed the various challenges and prospects for their future development. In addition to the question of automation, which will be considered in Sect. 2.4, four recurring themes were discussed: hardware facilities, the calculation of Dirac matrix traces, the renormalization procedure, and the handling of integrations.

2.3.1 Hardware facilities

As the three examples of Sect. 2.2 show, the development of algebraic programs for QFT required the use of advanced programming techniques and resulted from the dedication of people with access to large computing facilities. The dependence of these programs on such installations led to the recognition of some hardware challenges in the early 1970s. The most obvious was that of dissemination and accessibility to a wider audience. In this regard, as has already been suggested several times, REDUCE was clearly the champion. The flexibility of the high-level language used and its machine independence greatly favored its portability and widespread use. In addition, the development of FORTRAN-like output generation was conducive to its use by non-specialists in digital computing. On the other hand, although ASHMEDAI shared similar characteristics with REDUCE, its dissemination seemed to suffer from its lack of generality. Its use apparently remained limited to Levine’s inner circle at Carnegie Mellon University in Pittsburgh, which he had joined in 1968 [63, p. 77] [117] [118, p. 344]. Finally, as far as SCHOONSCHIP is concerned, although it proved central to the results presented above, the machine dependence induced by the use of a low-level language was a serious obstacle to its rapid expansion. In 1972, its availability was limited to a few strategic centers equipped with CDC 6000/7000 computers: CERN, Bologna, Brookhaven and the French Atomic Energy Commission, at Saclay near Paris [63, p. 77] [74, p. 517]. This problem was nevertheless recognized, and in recently published reminiscences in Veltman’s honor, Remiddi recalled that Hugo Strubbe, a student of the Nobel laureate, was hired by CERN in the early 1970s to work on implementing a version of SCHOONSCHIP for IBM mainframes [74, pp. 518–519] [76].Footnote 21

The distinction between machine-dependent and machine-independent programs (low-level and high-level languages) was also at the heart of one important hardware-related issue raised by developers in the early 1970s, that of memory shortage due to the “blow-up” problem [73, p. 237] [118]. It is well-known that algebraic problems, even the simplest ones beginning and ending with fairly short formulas, often lead to the appearance of long intermediate expressions. As mentioned in Sect. 2.1.2, at low orders this blow-up mainly originates in the calculation of traces of Dirac matrices.Footnote 22 Managing these intermediate expressions proves particularly costly in terms of memory, since they often have to be stored in core during the calculation. Sometimes, the memory capacities of hardware installations are even exceeded, condemning the process to failure. This situation represented a real challenge at the turn of the 1960s and 1970s and was well recognized by the various actors mentioned above. It led Hearn, for example, to explicitly recommend processing algebraic calculations on “a computer with a high speed memory of 40 000 words or more” [120, p. 580].Footnote 23
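The scale of this blow-up can be illustrated with a short sketch. It assumes the textbook recursive expansion of the trace of a product of n Dirac matrices into products of metric tensors, counted before any index contraction or other simplification. The function name `count_trace_terms` is introduced here purely for illustration and is not taken from any of the programs discussed.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def count_trace_terms(n: int) -> int:
    """Number of metric-tensor products in the fully expanded trace of n
    Dirac matrices (illustrative helper, not part of any historical program)."""
    if n % 2 == 1:
        return 0  # the trace of an odd number of gamma matrices vanishes
    if n == 0:
        return 1  # trace of the identity: a single term
    # Tr(g1 g2 ... gn) pairs g1 with each of the other n-1 matrices,
    # leaving a trace of n-2 matrices for every such pairing.
    return (n - 1) * count_trace_terms(n - 2)


for n in range(2, 14, 2):
    print(n, count_trace_terms(n))
```

The count grows as the double factorial (n-1)!!, so a single trace of twelve matrices already expands into 10395 terms before simplification. This is only a rough indication of why intermediate expressions could exhaust core memory.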

This time, in light of this challenge, the advantage clearly went to SCHOONSCHIP, which proved not only incredibly fast but also very economical in terms of storage [121]. Indeed, high-level languages such as LISP and FORTRAN required much more storage than their low-level counterparts, on the order of “about 20 times” more, according to Veltman [63, p. 76]. This led the Dutch physicist to seriously doubt the ability of high-level language programs to contribute extensively to problems considered beyond human capabilities. Nevertheless, before we even consider any hardware improvements that would fully refute this assertion, the developments of algebraic computations in QFT invite us to moderate it. Apart from the implementation of simplifying algorithms that will be discussed in the next section, the challenge posed by memory limitations was not perceived as a dead end by users of high-level languages. For them, it was above all a matter of manipulating data artfully, and for Hearn, a “clever programmer [could] make optimum use of whatever facilities he has at his disposal” [120, p. 580]. ASHMEDAI, which proved more efficient than REDUCE in this respect, was in fact the best example of this. In addition to using auxiliary memory, Levine provided for the possibility of treating the intermediate terms of calculations as “virtual” [89, p. 362]. Consider an initial polynomial \(P_1\), successively transformed into polynomials \(P_2\) and \(P_3\) by two different operations. By performing the operations on separate terms of \(P_1\), it is possible, once a corresponding term of \(P_3\) is obtained, to destroy the created but now useless part of \(P_2\). In this way, \(P_2\) turns out to be virtual, i.e., it never fully exists at any given time, allowing a more memory-efficient execution of ASHMEDAI.
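The idea of a "virtual" intermediate polynomial can be sketched with lazy, term-by-term processing. The example below is a modern analogue under stated assumptions, not a reconstruction of Levine's FAP or FORTRAN code: the two operations (multiplication by x + 1 and differentiation), the term representation, and all function names are hypothetical choices made for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

Term = Tuple[int, int]  # (coefficient, exponent) of a monomial c * x**e


def times_x_plus_one(terms: Iterable[Term]) -> Iterator[Term]:
    """First operation (P1 -> P2): multiply by (x + 1), one term at a time."""
    for c, e in terms:
        yield (c, e + 1)
        yield (c, e)


def derivative(terms: Iterable[Term]) -> Iterator[Term]:
    """Second operation (P2 -> P3): differentiate with respect to x."""
    for c, e in terms:
        if e > 0:
            yield (c * e, e - 1)


def collect(terms: Iterable[Term]) -> Dict[int, int]:
    """Sum the coefficients of like powers; only P3 is ever stored."""
    result: Dict[int, int] = defaultdict(int)
    for c, e in terms:
        result[e] += c
    return {e: c for e, c in result.items() if c != 0}


p1 = [(1, 2), (3, 1), (2, 0)]  # P1 = x**2 + 3*x + 2
# P2 is consumed term by term by the generators and never held in full.
p3 = collect(derivative(times_x_plus_one(p1)))
print(p3)  # coefficients of P3 = 3*x**2 + 8*x + 5, keyed by exponent
```

Because each generator hands its terms on as soon as they are produced, the terms of \(P_2\) can be discarded once the corresponding terms of \(P_3\) have been accumulated, which mirrors the memory saving described above.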

2.3.2 Traces of Dirac matrices

As shown by the QFT computations described in Sect. 2.2.4, it is clear that the digital treatment of Dirac matrices was the great success of the 1960s. SCHOONSCHIP, REDUCE and ASHMEDAI had indeed fulfilled their initial function, enabling physicists to access in a very short time results that would have required years of work before the emergence of algebraic programs. This is why, while Hearn emphasized in the early 1970s that the greatest successes had arisen “from our ability to do polynomial manipulation, simple substitutions and differentiation” and Calmet asserted that “[p]resently, there is no real difficulty to handle the algebra for such calculations,” the revolution in computation times was naturally the subject of much praise [59, p. 19] [96, p. 1]. Hearn pointed out, for example, that the “algebraic reduction of a diagram might take fifteen minutes of computer times,” while Levine put forward a “[time] spent in Dirac Matrix manipulation [of] three minutes” for a single bosonic loop graph contributing at order \(e^6\) to the anomalous magnetic moment of the electron [59, p. 19] [89, p. 360]. Since SCHOONSCHIP proved to be faster than programs in high-level languages, this assessment period naturally proved propitious for Veltman to extol the merits of his program. In addition to the above-mentioned saving of memory by a factor of around twenty, he also suggested that calculations could be performed in an interval thirty times shorter than its competitors written in LISP [63, p. 76].

In fact, it is clear that memory and time were in many ways critical factors in the development of early algebraic programs. In this respect, in addition to hardware considerations and artful manipulation of data, implementing mathematical algorithms to simplify the expression of Dirac matrix traces, and consequently the weight of calculations, was highly valued. This was particularly the case within the REDUCE framework, for which the first algorithm to be put forward on several occasions was proposed by John S. R. Chisholm [122]. It enables a product of traces sharing common indices to be reduced to a sum of single traces. Nonetheless, in the course of its digital implementation, John A. Campbell and Hearn noted that its effectiveness was limited to cases of two shared indices, since for three or more “the same expression arises over and over again in the computation of seemingly different traces” [123, p. 324]. It was therefore superseded by another proposal by Joseph Kahane, which leads to the reduction of any partially contracted product of Dirac matrices to a simple canonical form involving only the uncontracted indices [124]. But despite their interest, in comparison with REDUCE’s competitors, such algorithms remained insufficient solutions to the above-mentioned challenges. Hearn, therefore, set out to improve them. In the early 1970s, he was working with Chisholm on an extension of Kahane’s procedure, with a view to developing a new algorithm which, he claimed, would lead “to further efficiencies in trace calculation and also reduce the amount of computer code necessary” [125] [120, p. 579].
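To give a concrete sense of what reducing a contracted product of Dirac matrices means, the following sketch numerically checks the two simplest textbook contraction identities, \(\gamma ^\mu \gamma ^\alpha \gamma _\mu = -2\gamma ^\alpha \) and \(\gamma ^\mu \gamma ^\alpha \gamma ^\beta \gamma ^\gamma \gamma _\mu = -2\gamma ^\gamma \gamma ^\beta \gamma ^\alpha \). It is not Kahane's algorithm, only a check of elementary cases that such algorithms generalize. The Dirac representation, the metric signature (+, -, -, -), and all helper names are assumptions made for this illustration.

```python
from typing import List

Matrix = List[List[complex]]

I = 1j
# Gamma matrices in the Dirac representation (assumed convention).
GAMMA: List[Matrix] = [
    [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, -1, 0], [0, 0, 0, -1]],  # gamma^0
    [[0, 0, 0, 1], [0, 0, 1, 0], [0, -1, 0, 0], [-1, 0, 0, 0]],  # gamma^1
    [[0, 0, 0, -I], [0, 0, I, 0], [0, I, 0, 0], [-I, 0, 0, 0]],  # gamma^2
    [[0, 0, 1, 0], [0, 0, 0, -1], [-1, 0, 0, 0], [0, 1, 0, 0]],  # gamma^3
]
METRIC = [1, -1, -1, -1]  # diagonal entries of g_{mu nu}


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product of two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def scale(c: complex, a: Matrix) -> Matrix:
    """Multiply every entry of a by the scalar c."""
    return [[c * x for x in row] for row in a]


def contract(inner: Matrix) -> Matrix:
    """Compute gamma^mu * inner * gamma_mu, summed over mu."""
    total: Matrix = [[0] * 4 for _ in range(4)]
    for mu in range(4):
        term = matmul(matmul(GAMMA[mu], inner), GAMMA[mu])
        total = [[total[i][j] + METRIC[mu] * term[i][j] for j in range(4)]
                 for i in range(4)]
    return total


def identities_hold() -> bool:
    """Check both contraction identities for every choice of free indices."""
    for a in range(4):
        if contract(GAMMA[a]) != scale(-2, GAMMA[a]):
            return False
        for b in range(4):
            for c in range(4):
                abc = matmul(matmul(GAMMA[a], GAMMA[b]), GAMMA[c])
                cba = matmul(matmul(GAMMA[c], GAMMA[b]), GAMMA[a])
                if contract(abc) != scale(-2, cba):
                    return False
    return True


print(identities_hold())
```

In both identities the contracted index pair disappears and only the free indices survive, in reversed order in the second case. An algebraic program applies such rules symbolically rather than with explicit matrices, which is where the savings in memory and time arise.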

2.3.3 Renormalization

Among the various stages of the complete Feynman diagram calculation procedure described in Sect. 2.1.2, the question of renormalization has so far not been addressed. And with good reason: despite the hopes raised by the rise of algebraic programs, its consistent approach in a computer context had yielded very few concrete results by the early 1970s. This is illustrated by the various cases examined in Sect. 2.2.4, where the careful handling of divergences had to be carried out by renormalization or regularization methodsFootnote 24 in procedures that required a great deal of effort on the part of physicists, and for which the added value of computers was minimal. There were two main reasons for this. On the one hand, Hearn pointed out that the renormalization process was “the one in which heuristics play the greatest role in deciding how the expression should be manipulated” [120, p. 593]. On the other hand, as Brodsky and Kinoshita in particular recognized, the digital conversion of the standard renormalization procedure was far from being a straightforward process: “[\(\ldots \)] the usual prescription of renormalization found in every textbook of quantum electrodynamics is not necessarily the most convenient for practical calculation, in particular, for computer calculations” [105, p. 360]. In response to these difficulties, the two theorists put forward the development, around 1970, of various alternative renormalization schemes of a more algebraic nature (see also, e.g., Refs. [126, 127]). Nevertheless, their intuition proved somewhat unfortunate: the more algebraic the procedures, the more heuristic they turned out to be, ultimately preventing their easy implementation in computer programs [120, p. 593].

In the spirit of Feynman’s original method, an attempt to apply algebraic computational techniques to renormalization theory should nevertheless be highlighted. In the late 1960s, Calmet and Michel Perrottet developed a set of LISP and FORTRAN programs aimed at generating Feynman diagrams and their relative counterterms at a given order, as well as the integration over internal momenta and resulting suppression of divergences [128]. Nevertheless, their work remained limited to the renormalization of Feynman diagrams in the simplest case of scalar theory, where divergences appear only through vacuum polarization insertions of order \(e^2\). As a minor step toward potential generalization, Calmet also introduced in 1974 a procedure coded within the framework of REDUCE that offered a first advance toward automatic recognition of divergences in a Feynman diagram [129]. This procedure was not only able to recognize whether a graph was divergent or not but also to differentiate between vacuum polarizations, electron self-energies, and vertex divergences.Footnote 25

2.3.4 Integrations

The development of efficient routines for integration was another major issue addressed by physicists in their evaluation of computational methods for Feynman diagrams [44, 59, 73, 96, 118].Footnote 26 They had to deal with particularly messy and complex multidimensional integrals whose integrands present sharp spikes distributed over the integration region. In the early 1970s, numerical methods had to be widely used, but the integrals were so complicated that the usual Monte Carlo procedure failed in the majority of cases, leading to divergent results. The most successful approach was then an adaptive-iterative routine known as SPCINT, LSD, or RIWIAD, which combined the Monte Carlo and Riemann sum methods. It was based on an original idea by G. Clive Sheppey in 1964, subsequently improved and applied to elementary particle physics by Dufner and Lautrup [130] [96, p. 17] [131]. During its execution, the integration domain is divided into smaller subregions, and for each subregion, the value of the integrand and its variance are computed at randomly selected points. The variance obtained is utilized to enhance the selection of intervals along each axis, ensuring that more points are concentrated in regions where the function exhibits insufficient smoothness. This process is then iteratively repeated.Footnote 27
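The adaptive-iterative principle described above can be sketched in one dimension. The code below is a deliberately simplified analogue, not the SPCINT, LSD, or RIWIAD routine: it assumes a single integration variable, refines by bisecting the subregion with the largest variance contribution, and uses hypothetical names and default parameters throughout.

```python
import math
import random
from typing import Callable, List, Tuple


def sample_region(f: Callable[[float], float], a: float, b: float,
                  n: int, rng: random.Random) -> Tuple[float, float]:
    """Return (integral estimate, variance of that estimate) on [a, b]."""
    values = [f(rng.uniform(a, b)) for _ in range(n)]
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / (n - 1)
    width = b - a
    return width * mean, width * width * var / n


def adaptive_mc(f: Callable[[float], float], a: float, b: float,
                n_iter: int = 50, n_per_region: int = 300,
                seed: int = 0) -> Tuple[float, float]:
    """Iteratively refine the subdivision where the sampled variance is largest."""
    rng = random.Random(seed)
    regions: List[Tuple[float, float]] = [(a, b)]
    estimate, variance = 0.0, 0.0
    for _ in range(n_iter):
        results = [sample_region(f, lo, hi, n_per_region, rng)
                   for lo, hi in regions]
        estimate = sum(r[0] for r in results)
        variance = sum(r[1] for r in results)
        # Bisect the subregion contributing most to the total variance, so
        # that later passes concentrate points where the integrand is rough.
        worst = max(range(len(regions)), key=lambda i: results[i][1])
        lo, hi = regions.pop(worst)
        mid = 0.5 * (lo + hi)
        regions.extend([(lo, mid), (mid, hi)])
    return estimate, math.sqrt(variance)


EPS = 0.01  # half-width of the spike (illustrative value)


def spike(x: float) -> float:
    """Sharply peaked test integrand centred at x = 0.5."""
    return 1.0 / (EPS ** 2 + (x - 0.5) ** 2)


exact = 2.0 / EPS * math.atan(0.5 / EPS)  # closed form of the integral on [0, 1]
estimate, error = adaptive_mc(spike, 0.0, 1.0)
print(estimate, error, exact)
```

Since every subregion receives the same number of points, the repeated bisection near the spike automatically raises the sampling density there. The historical routines worked in several dimensions and adjusted the intervals along each axis, which this sketch does not attempt.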

Among the various calculations mentioned in Sect. 2.2.4 as early successes in the development of algebraic programs, once the divergences had been eliminated by a renormalization or regularization procedure (see the discussion in Sect. 2.3.3), amplitudes were always integrated numerically, except in two cases. In calculating the crossed graph contributions of order \(e^4\) to the Lamb shift (see Fig. 4), Fox relied on the hand-operated analytical procedure developed by Soto Jr. in 1966 [43, 97]. Barbieri, Mignaco, and Remiddi also developed an analytical approach to the computation of the Lamb shift contributions from the seven two-loop Feynman diagrams, for which they were partially assisted in some specific algebraic manipulation tasks by SCHOONSCHIP [100, 101]. The singularity of these works does not, however, reflect a lack of interest in analytic integration and its possible computer implementation. It was recognized that the latter could prove faster, more accurate and more powerful than any numerical method [59, 134]. In the words of Antoine Visconti, “analytic integration method should [even] represent the final answer to the evaluation of Feynman integrals” [118, p. 336]. And in fact, according to one of its leading figures, Joel Moses, the field of symbolic integration had just gone through its “stormy decade” [134]. With the development of faster, more reliable computers, advances in integration theory in the 1960s had seen the emergence of procedures that completely solved the problem of integration for the usual elementary functions.

SIN (Symbolic INtegrator), developed by Moses, was probably the most successful program for this purpose at the beginning of the 1970s [134]. Originally written in LISP for the IBM 7094 during 1966–1967,Footnote 28 it was inspired by the pioneering work in artificial intelligence of James R. Slagle, who in 1961 developed a heuristic program, SAINT (Symbolic Automatic INTegrator), for solving basic symbolic integration problems [135, 136]. SIN developed a strategy of successive attempts—in three stages (cheap general method, methods specific to a certain class of integrals, general method)—to solve a problem using different integration methods. But while the results were promising, it could only calculate “simple” functions, proved inflexible for many multiple integrals, and was very demanding on memory [84, p. 35]. As such, it was insufficiently suited to the heavy calculations of QFT, which is why numerical solutions were preferred. The algebraic procedures required were too powerful, sometimes involving breaking the polynomial nature of the calculation to substitute certain known functions for simple variables in the result obtained. And in fact, as the orders of perturbation theory and the internal complexity of Feynman diagrams increased, the symbolic implementation of many integrations proved particularly difficult at the turn of the 1960s and 1970s.

The multiplicity of challenges in this field therefore meant that high-energy physicists had to invest heavily in finding solutions to their specific problems. This was notably the case for Petermann, who collaborated at CERN with Doris Maison on the development of the SINAC (Standard INtegrals Analytic Calculation) program, using the CDC 6600 version of SCHOONSCHIP [137, 138].Footnote 29 Their initial aim was to develop a procedure for calculating subtracted generalized polylogarithms, also known as subtracted Nielsen functions, a class of functions appearing in several physics problems, e.g., electrical network problems or Bose–Einstein and Fermi–Dirac statistics analyses. It was established that the analytical structure of many expressions relating to radiative corrections in QED could be enumerated in terms of Spence functions, a subclass of Nielsen functions [104, 140]. Nevertheless, the impact of Maison and Petermann’s work was rather limited in the early 1970s, as it only proved fully relevant for corrections of two-loop order. Indeed, at three-loop order, the integrals to be manipulated present a higher degree of complexity, and no similar regularity had yet been established for Nielsen functions. It was unclear whether previous methods could be extended, or whether new methods would have to be developed. In short, in Calmet’s words: “the analytical structure of solutions [was] still too poorly known to hope for a general solution” [141, p. 37].Footnote 30 To illustrate these difficulties, it is worth mentioning that in 1973 Levine and Ralph Roskies attempted to calculate analytically the integrals relating to Feynman diagrams without fermion loops contributing to the anomalous magnetic moment of the electron at order \(e^6\) [142]. Working within the algebraic framework of ASHMEDAI, they were only able to find a solution for six of the 28 independent graphs.
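For orientation, the Spence function (dilogarithm) and the Nielsen generalized polylogarithms referred to in this passage are usually defined as follows. The notation \(\mathrm{Li}_2\) and \(S_{n,p}\) is the common textbook one and is an assumption here, not taken from the works cited. The subtracted variants treated by Maison and Petermann are not reproduced.

```latex
\[
\mathrm{Li}_2(x) = -\int_0^x \frac{\ln(1-t)}{t}\,dt ,
\qquad
S_{n,p}(x) = \frac{(-1)^{n+p-1}}{(n-1)!\,p!}
             \int_0^1 \frac{\ln^{n-1}(t)\,\ln^{p}(1-xt)}{t}\,dt ,
\qquad
\mathrm{Li}_2(x) = S_{1,1}(x) .
\]
```

Setting \(n = p = 1\) and substituting \(u = xt\) in the second integral recovers the first, which is the sense in which Spence functions form a subclass of Nielsen functions.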

2.4 Toward complete automation?

2.4.1 Computers in physics: the specter of dehumanization

According to the previous assessment of the development of calculation techniques for Feynman diagrams, it appears that the various successes encountered were tempered by hardware limitations and a combination of heuristic and mathematical problems. However, on the whole, these difficulties were not seen as a dead end from a computer science point of view. On the one hand, physicists were working on “extremely elementary and for the most part rather crude” facilities, for which it was reasonable to foresee progress [73, p. 236]. On the other hand, as might be expected of any field in its formative years, the various algebraic programs used in high-energy physics in the early 1970s were evolving rapidly. In 1971, Hearn simply regarded REDUCE as a “growing system” in its infancy [82, p. 132]. Its updated version, REDUCE 2, had just been released, only 3 years after the initial version. SCHOONSCHIP was also constantly modified to include new features. As a result, its manual had to be thoroughly revised in 1974 [85].

Optimism about possible improvements was certainly warranted, as it actually was for computational physics as a whole. Computers had only recently invaded laboratories, and their significance, importance, and great potential were already widely recognized (see, e.g., Refs. [143, 144]). In 1971, Lew Kowarski described an “intrusion,” a “sudden event” whose impact was “dramatic” and led to “spectacular applications” in the field of nuclear physics [145, p. 27]. For him, this was a great liberation in space and time for the scientist. Nevertheless, as he also suggested, this excitement was in fact dampened by voices expressing a growing fear of the dehumanization of scientific practices. In this respect, in his historical approach to the development of experimental high-energy physics, Galison described how automation and the prospect of its generalization became, in the 1960s, the object of concerns and tensions that have, in many ways, shaped the evolution of the field [111]. Part of the community feared that a form of dependence on the computer would lead physicists to lock themselves into a world of very fine measurements and no discoveries.

Hans Bethe, in his introduction to Computers and their Role in the Physical Sciences published in 1970 by Sidney Fernbach and Abraham Taub, remarkably echoed these debates from the point of view of a theoretical physicist [146]. As a member of the Manhattan Project, he had been a privileged witness to the introduction of digital computers into physics. As such, he deeply appreciated the progress they had made over more than two decades, in both pure and applied physics. But he also clearly saw their role as being “our servant, meant to make our life easier, and that [they] must never become our master” [146, p. 9]. He insisted that computers were not capable of understanding, that they were only there to carry out the orders they were given, in other words, that “[t]heir answers cannot be more intelligent than the questions they are asked” [146, p. 8]. Bethe was not only unoptimistic about the future usefulness of their learning abilities but also unwilling for them to lead to potential independence. In addressing the themes of understanding, intelligence and learning, Bethe was in fact projecting his own abilities as a theoretical physicist onto the computer. And, of course, he could not foresee any success for the latter regarding an activity as abstract as the conceptual development of theories.

For this last area, as a specialist in the development of algebraic programs, Hearn was also convinced that computers had made no contribution by the early 1970s:

In every generation there may arise an Einstein or Feynman who actually develops a new theory of nature completely different from any previously considered. Here is an area where computers so far have made zero impact, primarily, I suppose, because no one yet understands just how an Einstein first obtains that insight which suggests a new theory to him. Perhaps computers could help check some calculational point during the formulation of the work, but, by and large, the insight is produced by some rare quality we call ‘genius’ as yet impossible to classify in terms that a computer can simulate [59, p. 17].Footnote 31

But as the adverbial phrases “so far” and “as yet” in this passage suggest, Hearn was not on the same wavelength as Bethe when it came to future expectations. It should be remembered that the former had developed REDUCE in circles close to McCarthy, a specialist in artificial intelligence. In this context, it appears that the dehumanization of physics did not really concern Hearn. On the contrary, the idea that computers could understand, be intelligent and learn was familiar to him, and he had clearly integrated it, at least in principle, into his framework of thought and research project. Thus, his general assessment of the development of algebraic programs in the early 1970s was marked by a certain enthusiasm for the future and the potential complete automation of Feynman diagram computation. At the Second Symposium on Symbolic and Algebraic Manipulation, held in Los Angeles in 1971, he declared: “At any rate, my dream of a completely automated theoretical physicist now looks a little closer to reality than two or three years ago” [59, p. 21].

2.4.2 The automated high-energy theoretical physicist

Hearn’s ideal of a fully automated theoretical physicist attracted attention at the turn of the 1960s and 1970s. For example, it was explicitly mentioned by David Barton and John Peter Fitch in their review of the applications of algebraic manipulation programs in physics [73, p. 297]. Calmet also readily shared this view. In 1972, at the First European Conference on Computational Physics in Geneva, the French physicist defended the idea that a completely automated treatment of QED seemed “quite reasonable because of the improvement in the algorithms and techniques for algebraic manipulationFootnote 32 and the availability of powerful computers.” In Hearn-inspired terminology, he even saw the “realization of an ‘automated QED physicists’ [as] a goal which [was] no longer out of reach” [84, pp. 202–203]. One good reason for believing in this possibility was provided by an article published by Campbell and Hearn in 1970 [123]. The former had worked a little earlier on extending the initial version of REDUCE in order to allow matrix elementsFootnote 33 to be derived from arbitrary Feynman diagrams [148].Footnote 34 At the end of the 1960s, his direct collaboration with Hearn led to another ambitious extension. They developed a system of programs in LISP 1.5, working within the framework of REDUCE, which handled “all stages of calculation from the specification of an elementary-particle process in terms of a Hamiltonian of interaction or Feynman diagrams to the derivation of an absolute square of the matrix element for the process” [123, p. 280].

To this end, they first worked out the computer representation of a Feynman diagram and the automatic generation of all the graphs required for a given process at a given order. To do this, they relied in particular on Wick’s theorem, a method for reducing arbitrary products of creation and annihilation operators to sums of products of pairs of these operators. Several subroutines were used to examine potential pairs and generate appropriate diagrams. Thereafter, the set of programs developed by Campbell and Hearn was able to handle the specification of the Feynman rules and their application to a given diagram in order to determine physical matrix elements as a unique mapping of one data space onto another. It performed the algebra of Dirac matrices but was also trained to recognize integrals, proceed with their integration and finally calculate cross sections. This impressive result for its time, which demonstrated the theoretical possibility of automating a complete diagram calculation, nevertheless presented some serious limitations. Firstly, Campbell and Hearn’s identification of topologically equivalent diagrams proved to be rather laborious and was subsequently judged to be insufficiently efficient [149].Footnote 35 Also, as only the simplest cases were tackled, no renormalization procedure was carried out.
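The combinatorial core of the Wick-theorem step mentioned above, the enumeration of all ways of grouping operators into pairs, can be sketched in a few lines. This is only an illustration and not Campbell and Hearn's LISP routines: the function name `pairings` is hypothetical, and the sketch ignores fermionic signs, operator types, and the fact that many pairings vanish or lead to equivalent diagrams.

```python
def pairings(items):
    """Yield every way of splitting `items` into unordered pairs.

    For 2n items there are (2n - 1)!! complete pairings; an odd number
    of items admits none, so nothing is yielded in that case.
    """
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for i, partner in enumerate(rest):
        # Pair the first item with `partner`, then pair up what is left.
        remaining = rest[:i] + rest[i + 1:]
        for tail in pairings(remaining):
            yield [(first, partner)] + tail


if __name__ == "__main__":
    for p in pairings(["a", "b", "c", "d"]):
        print(p)
```

Four operators give three pairings and six give fifteen. This factorial-like growth is one reason why the subsequent identification of equivalent diagrams becomes costly.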

With regard to the latter shortcoming, the work of Calmet and Perrottet, as described in Sect. 2.3.3, was a clear and methodical manifestation of a genuine interest in developing a more complete, and therefore more automated, algebraic procedure for calculating Feynman diagrams. For a few years around 1970, their contributions made the Centre de Physique Théorique in Marseille, alongside the University of Utah where Hearn had been appointed in 1969, a leading institution in the progressive automation of theoretical high-energy physics.Footnote 36 There, Calmet and Perrottet benefited from the support of Visconti, an experienced figure in QFT. At the end of the 1960s, he had supervised their doctoral theses on computer-assisted calculations of radiative corrections, and fully embraced the prospect of complete automation [118]. During this period, the number and variety of programs and subroutines used and developed by Calmet and Perrottet were considerable. In addition to the various renormalization packages already mentioned and Calmet’s program for calculating contributions of order \(e^6\) to the electron’s anomalous magnetic moment, mention should also be made of ACOFIS (Algebraic Computation of Feynman Integrals Scalar), written in LISP by Perrottet and designed to calculate diagrams that are at most logarithmically divergent. A FORTRAN program based on the Monte Carlo method was used for the numerical evaluation of numerous integrals on Feynman parameters, while much effort was also devoted to developing routines for generating Feynman diagrams [106, 128, 129]. FRENEY, developed by Perrottet in FORTRAN, was based on combinatorial analysis [151]. DIAG I and DIAG II, written in LISP by Calmet, were based on the generating functional formalism [128, p. 197]. In both cases, the elimination of unnecessary diagrams was achieved by the comparison method, which, however, required excessive memory usage [149].
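One plausible reading of the comparison method mentioned above is that each newly generated diagram is compared with all diagrams already retained and discarded if it is topologically equivalent to one of them. The following minimal sketch works under that assumption. It is not the algorithm of FRENEY or DIAG, the names `canonical_form` and `remove_equivalent` are hypothetical, and the sketch treats all vertices as interchangeable, ignoring external lines and particle types.

```python
from itertools import permutations


def canonical_form(n_vertices, edges):
    """Return the smallest sorted edge list over all vertex relabelings.

    Two diagrams (multigraphs given as lists of vertex pairs) are
    topologically equivalent exactly when their canonical forms agree.
    The brute-force search costs n_vertices! relabelings.
    """
    best = None
    for perm in permutations(range(n_vertices)):
        relabeled = tuple(sorted(
            tuple(sorted((perm[u], perm[v]))) for u, v in edges
        ))
        if best is None or relabeled < best:
            best = relabeled
    return best


def remove_equivalent(n_vertices, diagrams):
    """Keep one representative of each equivalence class of diagrams."""
    kept, seen = [], set()
    for edges in diagrams:
        key = canonical_form(n_vertices, edges)
        if key not in seen:  # compare against everything stored so far
            seen.add(key)
            kept.append(edges)
    return kept
```

The set `seen` has to hold a representative of every diagram kept so far. That storage grows with the number of diagrams, which gives a sense of why an approach of this kind is demanding on memory.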