Abstract

Higgs pair production is a crucial phenomenological process in deciphering the nature of the TeV scale and the mechanism underlying electroweak symmetry breaking. At the Large Hadron Collider, this process is statistically limited. Pushing the energy frontier beyond the LHC’s reach will create new opportunities to exploit the rich phenomenology at higher centre-of-mass energies and luminosities. In this work, we perform a comparative analysis of the \(hh+\text {jet}\) channel at a future 100 TeV hadron collider. We focus on the \(hh\rightarrow b\bar{b} b\bar{b}\) and \(hh \rightarrow b\bar{b} \tau ^+\tau ^-\) channels and employ a range of analysis techniques to estimate the sensitivity that can be gained by including jet-associated Higgs pair production in the list of sensitive collider processes in such an environment. In particular, we observe that \(hh \rightarrow b\bar{b} \tau ^+\tau ^-\) in the boosted regime exhibits a large sensitivity to the Higgs boson self-coupling, which could be constrained at the 8% level in this channel alone.



1 Introduction

The observed lack of any conclusive evidence for new interactions beyond the Standard Model (BSM) during the LHC’s run-1 and the first 13 TeV analyses has tightly constrained a range of well-motivated BSM scenarios. For instance, the ATLAS and CMS collaborations have already set tight limits on top partners in supersymmetric (e.g. [1, 2]) and strongly-interacting theories (e.g. [3, 4]), which makes a natural interpretation of the TeV scale after the Higgs boson discovery more challenging than ever.

With traditional BSM paradigms facing increasing challenges as more data become available, a more bottom-up approach to parametrising potential new physics interactions has recently received attention. By interpreting Higgs analyses using Effective Field Theory (EFT), any heavy new physics scenario that is relevant for the Higgs sector can be investigated largely model-independently [5, 6], at the price of introducing many ad hoc interactions already at the lowest order [7] of the EFT expansion.

Current measurements, as well as first extrapolations to the high-luminosity (HL) phase of the LHC, have provided first constraints on and projections for EFT parameters [8,9,10,11,12]. One parameter that is particularly relevant for the electroweak symmetry breaking potential, yet has poor LHC sensitivity prospects, is the Higgs self-interaction. Constraining the trilinear self-interaction directly requires a measurement of (at least) \(pp \rightarrow hh\) [13,14,15,16,17]; accessing quartic interactions in triple Higgs production is not possible at the LHC [18, 19] and seems challenging at best at future hadron colliders [20, 21]. Early studies of the LHC’s potential to observe Higgs pair production identified the \(hh\rightarrow b\bar{b} \gamma \gamma \) [22] and \(hh\rightarrow b\bar{b} \tau ^+\tau ^-\) [23, 24] channels as the most promising ones. Recent projections by ATLAS [25] and CMS [26], based on an integrated luminosity of 3 ab\(^{-1}\) and on the pileup conditions foreseen for the HL-LHC, estimate a sensitivity to the di-Higgs signal in the range of 1–2\(\sigma \). Recent phenomenological papers [27,28,29], combining the sensitivity of several different di-Higgs final states, reach similar conclusions. ATLAS [25] quotes a sensitivity to the value of the Higgs self-coupling (assuming SM-like values for all other relevant couplings) in the range \(-0.8< \lambda /\lambda _{SM} < 7.7\) at 95% confidence level. Improving this sensitivity baseline is one of the main motivations for future high-energy hadron colliders, and proof-of-principle analyses suggest that a vastly improved extraction of the trilinear Higgs coupling should become possible at a future 100 TeV collider [30,31,32,33,34].

Most of these extrapolations have focused on gluon fusion production \(p(g)p(g)\rightarrow hh\). Owing to the large gluon densities at low momentum fractions, the di-Higgs cross section increases by a factor of \(\sim 39\) compared to 14 TeV collisions [35, 36], with QCD corrections still dominated by additional unsuppressed initial-state radiation [37,38,39,40,41,42,43]. While the kinematic characteristics of Higgs pair production remain qualitatively identical to the LHC environment, extra jet emission becomes significantly less suppressed, leading to an enhancement of the \(pp\rightarrow hhj\) cross section by a factor of \(\sim 80\) (Footnote 1) compared to 14 TeV collisions. This provides another opportunity for a 100 TeV collider: since the sensitivity to the self-coupling is largely driven by the top-quark threshold [17], accessing relatively low di-Higgs invariant masses is key to the self-coupling measurement. Recoiling a collimated Higgs pair against a jet kinematically decorrelates \(p_{T,h}\) and \(m_{hh}\). Compared to \(pp\rightarrow hh\), this configuration therefore exhibits a much higher sensitivity to variations of the Higgs trilinear interaction while keeping \(p_{T,h}\) large [24], which is beneficial for the reconstruction and for the separation from backgrounds. However, such an approach is statistically limited at the LHC. Given the large increase in \(pp\rightarrow hh+\text {jet}\) production in this kinematic regime, as well as the higher luminosity expected at a 100 TeV collider, jet-associated Higgs pair production can be expected to add significant sensitivity to self-coupling studies at a 100 TeV machine.
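The decorrelation of \(p_{T,h}\) and \(m_{hh}\) can be illustrated with a short numerical sketch. It assumes two on-shell Higgs bosons (\(m_h = 125\) GeV) with equal transverse momentum at central rapidity and only varies their azimuthal separation; the helper names are illustrative and not part of this analysis. For \(p_{T,h} = 300\) GeV, a back-to-back pair has \(m_{hh} = 2\sqrt{m_h^2 + p_{T,h}^2} = 650\) GeV, whereas a pair separated by \(\Delta\phi \approx 0.8\), as happens when it recoils against a hard jet, has \(m_{hh} \approx 342\) GeV, already close to \(2m_t\).

```python
import math

M_H = 125.0  # Higgs boson mass in GeV


def four_vector(pt, eta, phi, mass):
    """Return (E, px, py, pz) for transverse momentum pt, pseudorapidity eta and azimuth phi."""
    px, py, pz = pt * math.cos(phi), pt * math.sin(phi), pt * math.sinh(eta)
    energy = math.sqrt(mass**2 + px**2 + py**2 + pz**2)
    return energy, px, py, pz


def invariant_mass(p1, p2):
    """Invariant mass of the sum of two four-vectors."""
    e, px, py, pz = (a + b for a, b in zip(p1, p2))
    return math.sqrt(max(e**2 - px**2 - py**2 - pz**2, 0.0))


def m_hh(pt_h, delta_phi, delta_eta=0.0):
    """Di-Higgs invariant mass for two Higgs bosons with equal pT, separated by (delta_eta, delta_phi)."""
    h1 = four_vector(pt_h, 0.0, 0.0, M_H)
    h2 = four_vector(pt_h, delta_eta, delta_phi, M_H)
    return invariant_mass(h1, h2)


for dphi in (math.pi, 0.8, 0.0):
    print(f"pT,h = 300 GeV, delta_phi = {dphi:.2f}: m_hh = {m_hh(300.0, dphi):.1f} GeV")
```

In the collinear limit the pair mass approaches \(2m_h = 250\) GeV while each Higgs boson keeps \(p_{T,h} = 300\) GeV. This is the configuration that the jet-associated channel can populate.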

Quantifying this sensitivity gain in a range of exclusive final states with different phenomenological techniques is the purpose of this work. More specifically, we consider the final states with the largest accessible branching fractions, \(hh \rightarrow b\bar{b} b\bar{b}\) [23, 44, 45] and \(hh \rightarrow b\bar{b} \tau ^+ \tau ^-\) [23, 24, 46], where we also differentiate between leptonic and hadronic \(\tau \) decays (and consider their combination).
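The relative size of these final states follows from simple branching-fraction arithmetic. The sketch below uses rounded, approximate SM inputs that are assumed here and not taken from this work: \(\mathrm{BR}(h\to b\bar b)\approx 0.58\), \(\mathrm{BR}(h\to\tau\tau)\approx 0.063\), and a leptonic (\(e+\mu\)) tau branching fraction of about 0.352.

```python
# Approximate SM branching ratios for m_h = 125 GeV and the tau leptonic (e + mu)
# branching fraction; these rounded inputs are assumptions, not values from this work.
BR_H_BB = 0.58
BR_H_TAUTAU = 0.063
BR_TAU_LEP = 0.352
BR_TAU_HAD = 1.0 - BR_TAU_LEP


def hh_branching_fractions():
    """Branching fractions of hh into bbbb and bbtautau, split by the tau-pair decay mode."""
    bbtautau = 2.0 * BR_H_BB * BR_H_TAUTAU  # factor 2: either Higgs boson may decay to taus
    fractions = {"bbbb": BR_H_BB**2, "bbtautau": bbtautau}
    tau_pair = {
        "lep-lep": BR_TAU_LEP**2,
        "lep-had": 2.0 * BR_TAU_LEP * BR_TAU_HAD,  # either tau may be the leptonic one
        "had-had": BR_TAU_HAD**2,
    }
    for mode, fraction in tau_pair.items():
        fractions["bbtautau " + mode] = bbtautau * fraction
    return fractions


for channel, value in hh_branching_fractions().items():
    print(f"{channel:18s} {value:.4f}")
```

With these inputs, the fully leptonic tau mode retains only about 12% of the \(b\bar b\tau\tau\) rate (\(0.352^2 \approx 0.124\)), while the mixed leptonic-hadronic mode retains about 46%.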

This work is organised as follows. We consider the \(b\bar{b} \tau \tau \) channel in Sect. 2. In particular, we compare a fully-resolved di-Higgs final-state analysis with one extended by jet-substructure techniques, highlighting the importance of the high-transverse-momentum Higgs pairs that are copious at 100 TeV. We discuss the \(b\bar{b} b\bar{b}\) channel in Sect. 3 and summarise our findings in Sect. 4.

2 The \(jbb\tau \tau \) channels

2.1 General comments

Let us first turn to the \(jbb\tau \tau \) channels. We will see that these are more sensitive to variations of the trilinear Higgs coupling, and they therefore constitute the main result of this work. This is in line with similar studies at the LHC (see Refs. [24, 44, 46]), which show that the signal vs. background ratio can be expected to be better for this channel than for the four-b case.

We study the various decay modes of the taus and consider two exclusive final states: purely leptonic tau decays \(h\rightarrow \tau _{\ell } \tau _{\ell }\) and mixed hadronic-leptonic decays \(h\rightarrow \tau _{\ell } \tau _h\), where the subscripts \(\ell \) and h denote leptonic (to \(e, \mu \)) and hadronic tau decays, respectively. The purely hadronic scenario, \(h\rightarrow \tau _h \tau _h\), would undoubtedly add to the significance. However, final states with two hadronic taus incur larger QCD backgrounds; estimating them would require simulating various fake backgrounds as well as an accurate knowledge of the \(j \rightarrow \tau _j\) fake rate, where j denotes a light jet. At this stage, we do not feel confident that we can reliably estimate these fake backgrounds, and hence neglect this decay mode in the present study.

We consider three categories of backgrounds for this scenario. The dominant background results from \(t\bar{t}j\) with leptonic top decays (\(t \rightarrow b W \rightarrow b \ell \nu \)), including decays to all three charged leptons (Footnote 2). Furthermore, we have the pure EW background and a mixed QCD-EW background of \(j b \bar{b} \tau ^+ \tau ^-\) (Footnote 3). The pure EW and QCD+EW processes consist of various sub-processes. A typical example for the pure EW scenario is \(pp\rightarrow HZ/\gamma ^* + \; \text {jet} \rightarrow b\bar{b}\tau ^+\tau ^- + \; \text {jet}\), whereas for the QCD+EW processes a typical example is \(pp\rightarrow b\bar{b} Z/\gamma ^* + \; \text {jet} \rightarrow b\bar{b}\tau ^+\tau ^- + \; \text {jet}\). In all these background processes, leptons (\(e,\mu \)) can arise either from the \(\tau \) decays or from the W-boson decays (for the \(t\bar{t}j\) background). There are potentially other backgrounds, such as \(W \; (\rightarrow \ell \nu )+\) jets, but these turn out to be completely subdominant compared to the ones above. This has been shown in the context of present and future \(hh \rightarrow bb\tau \tau \) analyses by ATLAS [47] and CMS [48, 49], and similar conclusions hold in the present study; hence we neglect such backgrounds in our analysis. All samples, including the signal, are generated with MadGraph5_aMC@NLO [50] in Born-level mode, and we neglect the effects of merging with higher jet multiplicities. For our signal samples, the Higgs bosons are decayed using MadSpin [51, 52]; the showering is performed using Pythia 8 [53]. To account for QCD corrections we use global K factors for the signal of \(K=1.8\) for the EW contributions (extrapolating from [54]), \(K=1.5\) for the QCD+EW contribution [55] as well as \(K=1.0\) for \(t\bar{t} j\) following [56].

To operate with an efficient Monte Carlo tool chain, we generate the EW and mixed QCD+EW events with the following generator-level cuts: \(p_T^{b} > 23\) GeV, \(p_T^{\ell }> 8\) GeV, \(|\eta ^{b,\ell }| < 3\), \(p_T^j > 100\) GeV, \(|\eta ^j| < 5\), \(\Delta R_{b,b}>0.2\), \(\Delta R_{\ell \ell } > 0.15\), \(\Delta R_{b/j,\ell }>0.3\), \(90~\text {GeV}< M_{b,b} < 160~\text {GeV}\) and \(90~\text {GeV}< M_{\ell , \ell } < 200~\text {GeV}\), where \(\ell = e, \mu , \tau \) and b denotes final-state bottom quarks. \(\Delta R\) is the distance in the azimuthal angle–pseudorapidity (\(\phi \)–\(\eta \)) plane and M denotes invariant masses. The same requirements are imposed on \(t\bar{t} j\), however without a lower bound on \(M_{\ell \ell }\). The only generator-level cut applied to the signal is a transverse momentum cut on the light-flavor jet, \(p_T^j > 100\) GeV.
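As an illustration of how these generator-level requirements combine, here is a minimal filter over parton-level objects. The `Parton` class and `passes_generator_cuts` function are hypothetical helpers; in practice such cuts are set in the event generator itself. Partons are treated as massless when computing \(M_{b,b}\) and \(M_{\ell\ell}\), which is an approximation assumed here.

```python
import math
from dataclasses import dataclass


@dataclass
class Parton:
    pt: float   # transverse momentum in GeV
    eta: float  # pseudorapidity
    phi: float  # azimuthal angle


def delta_r(a, b):
    """Distance in the (phi, eta) plane, with the azimuthal difference wrapped into [-pi, pi)."""
    dphi = (a.phi - b.phi + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(a.eta - b.eta, dphi)


def pair_mass(a, b):
    """Invariant mass of two partons in the massless approximation."""
    return math.sqrt(max(2.0 * a.pt * b.pt * (math.cosh(a.eta - b.eta) - math.cos(a.phi - b.phi)), 0.0))


def passes_generator_cuts(bs, leptons, jets, require_mll_min=True):
    """EW and QCD+EW generator-level cuts; use require_mll_min=False for the ttbar+j sample."""
    if len(bs) != 2 or len(leptons) != 2 or not jets:
        return False
    if any(b.pt <= 23.0 or abs(b.eta) >= 3.0 for b in bs):
        return False
    if any(lep.pt <= 8.0 or abs(lep.eta) >= 3.0 for lep in leptons):
        return False
    if any(j.pt <= 100.0 or abs(j.eta) >= 5.0 for j in jets):
        return False
    if delta_r(*bs) <= 0.2 or delta_r(*leptons) <= 0.15:
        return False
    if any(delta_r(x, lep) <= 0.3 for x in bs + jets for lep in leptons):
        return False
    if not 90.0 < pair_mass(*bs) < 160.0:
        return False
    m_ll = pair_mass(*leptons)
    if m_ll >= 200.0 or (require_mll_min and m_ll <= 90.0):
        return False
    return True
```

The only difference between the signal-like EW/QCD+EW configuration and the \(t\bar t j\) configuration is the `require_mll_min` switch, which mirrors the missing lower bound on \(M_{\ell\ell}\) for the top background.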

Given the discriminating power of \(m_{T2}\), which was motivated in Ref. [46] to reduce the \(t\bar{t}\) background, we consider a similar variable to reduce the dominant \(t\bar{t}+{\text {jet}}\) background. The top background final state can be described schematically through a decay chain

\[ A \rightarrow B\, C\,, \qquad A' \rightarrow B'\, C'\,, \]

where \(B,B'\) (\(C,C'\)) denote the visible (invisible) decay products of the top branching (\(A=t,\bar{t}\)). For such a branching one can construct the \(m_{T2}\) variable [57]
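
\[ m_{T2} = \min _{\mathbf {c}_T + \mathbf {c}'_T = \mathbf {p}_T^{\Sigma }} \left\{ \max \left( m_T,\, m'_T \right) \right\} \]
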
where \(m_T\) denotes the transverse mass constructed from \(\mathbf {b}_T\), \(\mathbf {c}_T\) and the masses \(m_B\) and \(m_C\)

\[ m_T^2 = m_B^2 + m_C^2 + 2\left( e_B\, e_C - \mathbf {b}_T \cdot \mathbf {c}_T \right) \]

with transverse energy \(e_i^2 = m_i^2 + {\mathbf {p}}_{i,T}^2\), \(i=B,C\). \(m'_T\) refers to the same observable calculated from the primed quantities of the decay chain. The minimisation in the definition of \(m_{T2}\) is performed over all momenta \(\mathbf {c}_T\) and \(\mathbf {c}'_T\), subject to the condition that their sum reproduces the correct \({\mathbf {p}}_T^{\Sigma }\), which is normally chosen to coincide with the overall missing transverse momentum \(\mathbf {p}_T^{\text {miss}}\). However, because the tau decay is partially observable, we can modify the \(m_{T2}\) definition to include the visible transverse momenta of the tau leptons by identifying

\[ \mathbf {p}_T^{\Sigma } = \mathbf {p}_T^{\text {miss}} + \mathbf {p}_T^{\tau _1,\text {vis}} + \mathbf {p}_T^{\tau _2,\text {vis}} \,. \]

As we will see below, this modified \(m_{T2}\) plays a crucial role in suppressing the dominant \(t\bar{t}j\) background. We note that many distinct definitions of \(m_{T2}\) have been considered in Ref. [58]. The authors of Ref. [46] studied several such definitions of the \(m_{T2}\) variable and found them to have very similar discriminating power.
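To make the construction concrete, here is a brute-force sketch of \(m_{T2}\). It minimises \(\max(m_T, m'_T)\) over all splittings \(\mathbf{c}_T + \mathbf{c}'_T = \mathbf{p}_T^{\Sigma}\) with an iteratively refined grid search. This is slow but transparent, and dedicated bisection-based algorithms are preferable in practice. The function names, the grid parameters and the invisible test mass `m_inv` are assumptions for illustration. `p_sigma` plays the role of \(\mathbf{p}_T^{\Sigma}\), that is, the missing transverse momentum, optionally augmented by the visible tau momenta as described above.

```python
import math


def transverse_mass(m_vis, vis, m_inv, inv):
    """m_T from a visible system (mass m_vis, transverse momentum vis) and an invisible one (m_inv, inv)."""
    e_vis = math.sqrt(m_vis**2 + vis[0]**2 + vis[1]**2)
    e_inv = math.sqrt(m_inv**2 + inv[0]**2 + inv[1]**2)
    mt_sq = m_vis**2 + m_inv**2 + 2.0 * (e_vis * e_inv - vis[0] * inv[0] - vis[1] * inv[1])
    return math.sqrt(max(mt_sq, 0.0))


def mt2(b, m_b, b_prime, m_b_prime, p_sigma, m_inv=0.0, steps=31, iterations=50):
    """Brute-force m_T2: minimise max(m_T, m_T') over all splittings c_T + c_T' = p_sigma."""

    def objective(cx, cy):
        c = (cx, cy)
        c_prime = (p_sigma[0] - cx, p_sigma[1] - cy)
        return max(transverse_mass(m_b, b, m_inv, c),
                   transverse_mass(m_b_prime, b_prime, m_inv, c_prime))

    # Start from an equal split and scan a square grid that is halved around the best point.
    scale = max(1.0, *(abs(v) for v in (*b, *b_prime, *p_sigma)))
    center = (0.5 * p_sigma[0], 0.5 * p_sigma[1])
    half_width = 4.0 * scale
    best_value = objective(*center)
    for _ in range(iterations):
        best_point = center
        for i in range(steps):
            cx = center[0] - half_width + 2.0 * half_width * i / (steps - 1)
            for j in range(steps):
                cy = center[1] - half_width + 2.0 * half_width * j / (steps - 1)
                value = objective(cx, cy)
                if value < best_value:
                    best_value, best_point = value, (cx, cy)
        center = best_point
        half_width *= 0.5
    return best_value
```

By construction, the result never exceeds the larger of the two true transverse masses of an event. For \(t\bar t j\) events with \(B = b\), the distribution of this variable is bounded near the top mass for a suitable test mass. The signal has no such endpoint, which is what makes the variable useful for suppressing the top background.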

2.2 The resolved \(\tau _{\ell } \tau _{\ell }\) channel

The leptonic di-tau final states are undoubtedly the cleanest of the three di-tau options. We can identify exactly two leptons (\(e, \mu \)), two b-tagged jets and at least one hard non-b-tagged jet. We therefore pre-select the events by requiring the following cuts at reconstruction level (Footnote 4): jets are clustered with size 0.4 and \(p_T^{j}>30\) GeV in \(|\eta |<4.5\); the hardest jet is required to have \(p_T^{j_1}>105~\text {GeV}\). Leptons are required to have \(p_T^{\ell }>10~\text {GeV}\) and \(|\eta | < 2.5\). We require two leptons and select two jets with \(p_T > 30\) GeV and \(|\eta | < 2.5\), which are subsequently b-tagged. All objects need to be well separated, \(\Delta R(b,b/j_1/\ell ),\Delta R(\ell ,j_1) >0.4\) and \(\Delta R(\ell ,\ell )>0.2\). To efficiently suppress the Z-induced background, we demand \(105~\text {GeV}< M_{b,b} < 145 ~\text {GeV}\). Furthermore we require a significant amount of missing energy  GeV.


After these pre-selection requirements, we apply a boosted decision tree (BDT) analysis, which is the experiments’ method of choice when facing a small signal vs. background ratio (see e.g. the recent ATLAS \(t\bar{t} h\) analysis [62]). We include a large amount of (redundant) kinematic information (Footnote 5) in the training phase, as listed in Table 1 (Footnote 6).

We train the boosted decision tree for an SM-like value of the trilinear Higgs coupling, \(\lambda _{\text {SM}}\). We employ the boosted decision tree algorithm of the TMVA framework [63], assuming 30 \(\text {ab}^{-1}\) of data at 100 TeV. Our results are tabulated in Table 2. As can be seen, we can typically expect small signal vs. background ratios together with small signal cross sections. The latter is mostly due to the small fully-leptonic branching ratio of the tau pair.
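For readers less familiar with boosted decision trees, the following pure-Python sketch shows the basic boosting loop: discrete AdaBoost with depth-one trees (decision stumps). It is not the TMVA implementation used here, which trains many deeper trees and offers further options. The function names and the toy data in the usage check are hypothetical.

```python
import math


def stump_predict(x, feature, threshold, polarity):
    """Depth-one decision tree: +polarity above the threshold, -polarity at or below it."""
    return polarity if x[feature] > threshold else -polarity


def train_adaboost(features, labels, n_rounds=20):
    """Discrete AdaBoost with decision stumps; labels are +1 (signal) or -1 (background)."""
    n = len(features)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(n_rounds):
        best = None
        for f in range(len(features[0])):
            for threshold in sorted({x[f] for x in features}):
                for polarity in (1, -1):
                    err = sum(w for x, y, w in zip(features, labels, weights)
                              if stump_predict(x, f, threshold, polarity) != y)
                    if best is None or err < best[0]:
                        best = (err, f, threshold, polarity)
        err, f, threshold, polarity = best
        err = min(max(err, 1e-10), 1.0 - 1e-10)  # keep the logarithm finite
        alpha = 0.5 * math.log((1.0 - err) / err)
        ensemble.append((alpha, f, threshold, polarity))
        # Boosting step: misclassified events gain weight, correctly classified ones lose it.
        weights = [w * math.exp(-alpha * y * stump_predict(x, f, threshold, polarity))
                   for x, y, w in zip(features, labels, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def bdt_score(ensemble, x):
    """Weighted vote of all stumps; positive scores are signal-like."""
    return sum(alpha * stump_predict(x, f, threshold, polarity)
               for alpha, f, threshold, polarity in ensemble)
```

A cut on the resulting score defines the signal region. This is why BDT-selected regions need not correspond to simple rectangular cuts.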

2.3 The resolved \(\tau _{\ell } \tau _{h}\) channel

Given the small S / B of the fully leptonic channel discussed in the previous section, we now consider the case where one tau lepton decays leptonically while the other decays hadronically.

Recently, a major CMS level-1 trigger update has increased the hadronic tau tagging efficiency by a factor of two [65,66,67] for tau candidates with \(p_T\gtrsim 20~\text {GeV}\), in a way that is robust against pile-up. Fully-hadronic di-tau decays of the Higgs boson in 13 TeV collisions can be tagged with an efficiency of 70% at a background rejection of around 0.999. These improvements suggest that a single-tau tagging efficiency of 70% in the busier environment of the hhj final state at 100 TeV is not unrealistic. We adopt this working point in the following, assuming a background rejection large enough for fakes to be negligible.

We follow the analysis of the previous section and employ similar variables for the BDT. The only difference is that we now demand two b-tagged jets, one \(\tau \)-tagged jet, one lepton and at least one hard non-\(b,\tau \)-tagged jet. All the variables listed in Table 1 for the \(\tau _{\ell } \tau _{\ell }\) scenario can be used here, with one lepton replaced by a \(\tau _h\) (Footnote 7). The distributions are shown in Figs. 1, 2 and 3 and the results are tabulated in Table 3. As can be seen, unlike in the fully-leptonic case, the larger signal allows us to suppress the dominant \(t\bar{t}j\) background further without compromising the signal count too much. This leads to a much larger expected sensitivity in the \(\tau _\ell \tau _h\) channel.

Combining the results of the previous section with the \(\tau _\ell \tau _h\) results in a log-likelihood CLs hypothesis test [68,69,70], with the SM as the null hypothesis, yields the following range of values (assuming no systematic uncertainties)

at 68% confidence level. Here, \(\kappa _{\lambda } = \lambda /\lambda _{\text {SM}}\) measures the deviation of the Higgs trilinear coupling from its SM expectation.
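The CLs construction can be illustrated in its simplest form: a single-bin counting experiment without systematic uncertainties, where \(\mathrm{CL}_s = \mathrm{CL}_{s+b}/\mathrm{CL}_b\) is computed from Poisson probabilities. This is a simplified sketch. The analysis above uses a log-likelihood ratio over distributions and scans \(\kappa_\lambda\) with the SM as the null hypothesis, and the function names below are hypothetical.

```python
import math


def poisson_cdf(n, mu):
    """P(N <= n) for a Poisson random variable N with mean mu."""
    term = math.exp(-mu)
    total = term
    for k in range(1, n + 1):
        term *= mu / k
        total += term
    return total


def cls_counting(n_obs, s, b):
    """CLs = CL_{s+b} / CL_b for a single-bin counting experiment (no systematics)."""
    cl_sb = poisson_cdf(n_obs, s + b)  # tail probability under the signal+background hypothesis
    cl_b = poisson_cdf(n_obs, b)       # the same tail probability under background only
    return cl_sb / cl_b


def excluded(n_obs, s, b, cl=0.95):
    """A signal hypothesis is excluded at confidence level cl if CLs < 1 - cl."""
    return cls_counting(n_obs, s, b) < 1.0 - cl
```

For zero observed events, \(\mathrm{CL}_s = e^{-s}\) independently of b, which reproduces the familiar upper limit of \(s \approx 3\) at 95% confidence level. Dividing by \(\mathrm{CL}_b\) is what protects against excluding signals to which the measurement has no sensitivity.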

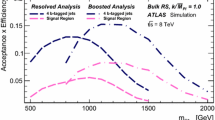

2.4 The significance of high-\(p_T\) final states

So far our strategy has focused on resolved particle-level objects, without exploiting the larger expected sensitivity of high-\(p_T\) final states. Jet-substructure techniques (see e.g. [71]) are expected to be particularly suited to kinematic configurations in which \(h\rightarrow b\bar{b}\) recoils against the hard light-flavor jet [24], while the \(h\rightarrow \tau \tau \) decay happens at relatively low transverse momentum. In this way, although one Higgs boson is hard, low Higgs-pair invariant masses can be accessed from an isotropic \(h\rightarrow \tau \tau \) decay together with a collimated \(b\bar{b}\) pair. This particular kinematic configuration was not specifically targeted in the previous sections, and we can expect the sensitivity obtained there to increase once we use jet-substructure variables to focus on this phase-space region, which is highly relevant for our purposes. The benefit of this analysis is hence two-fold: firstly, we exploit the background rejection of non-Higgs final states through jet-substructure techniques; secondly, we directly focus on a phase-space region where the impact of \(\kappa _\lambda \ne 1\) can be expected to be most pronounced.

To isolate this particular region, we modify the analysis approach of Sects. 2.2 and 2.3. Before passing the events to the BDT, we require at least two so-called fat jets of size \(R=1.5\) and \(p_T^{j} > 110~\text {GeV}\). One of these fat jets is required to contain displaced vertices associated with B mesons. We remove the jet constituents (which can contain leptons) and re-cluster the event with our standard anti-kT choice. We then require either two isolated leptons (\(\tau _\ell \tau _\ell \) case) or one isolated lepton together with one \(\tau \)-tagged jet (\(p_T>30~\text {GeV}\)), again using a tagging efficiency of 70% (\(\tau _\ell \tau _h\) case). All these objects are required to be in the central part of the detector, \(|\eta | <2.5\). Subsequently, we apply substructure techniques to the jet containing displaced vertices, following the by-now standard procedure of Ref. [71] (we refer the reader to this publication for details and limit ourselves to quoting our choices of the mass-drop parameter, 0.667, and \(\sqrt{y}=0.3\)). After jet filtering, we double-b-tag the two hardest subjets with an efficiency of 70% (2% mistag rate) and require the identified B mesons to have \(p_T>25~\text {GeV}\) (Footnote 8). Finally, we require the leptons to be separated by \(\Delta R(\ell \ell )>0.2\) in the \(\tau _\ell \tau _\ell \) case. In the \(\tau _\ell \tau _h\) case, we require the lepton to be sufficiently well separated from the hadronic tau, \(\Delta R(\ell ,\tau _h)>0.4\).
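The mass-drop step can be sketched as follows, using the parameters quoted above: \(\mu = 0.667\) and \(y_{\text{cut}} = (\sqrt{y})^2 = 0.09\). The sketch implements the BDRS form of the criterion, which we assume corresponds to the procedure of Ref. [71]. It also assumes that a Cambridge/Aachen clustering history is already available as a binary tree; the `Node` class is a hypothetical stand-in for a jet library's cluster sequence. The subsequent filtering step is omitted.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

FourVector = Tuple[float, float, float, float]  # (E, px, py, pz)


@dataclass
class Node:
    """One step of a clustering history: a four-vector and the two objects merged into it (if any)."""
    p: FourVector
    children: Optional[Tuple["Node", "Node"]] = None


def mass(p):
    e, px, py, pz = p
    return math.sqrt(max(e**2 - px**2 - py**2 - pz**2, 0.0))


def pt(p):
    return math.hypot(p[1], p[2])


def eta(p):
    return math.asinh(p[3] / pt(p))


def delta_r(p, q):
    dphi = (math.atan2(p[2], p[1]) - math.atan2(q[2], q[1]) + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(eta(p) - eta(q), dphi)


def mass_drop(jet, mu=0.667, y_cut=0.09):
    """Undo clusterings until m_j1 < mu * m_j and y > y_cut; return that node, or None if none is found."""
    while jet.children is not None:
        j1, j2 = sorted(jet.children, key=lambda node: mass(node.p), reverse=True)
        m_j = mass(jet.p)
        if m_j <= 0.0:
            return None
        # Splitting asymmetry y = min(pT_j1^2, pT_j2^2) * DeltaR_12^2 / m_j^2.
        y = min(pt(j1.p), pt(j2.p)) ** 2 * delta_r(j1.p, j2.p) ** 2 / m_j**2
        if mass(j1.p) < mu * m_j and y > y_cut:
            return jet
        jet = j1  # discard the lighter branch and continue with the heavier one
    return None
```

A symmetric two-prong splitting passes the asymmetry requirement. A soft emission carrying only a few percent of the jet momentum, typical of QCD radiation, fails it.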

We use the (jet-substructure) observables of Table 4 as BDT input (Footnote 9); redundancies among these observables are discussed in Sect. 2.5. The signal vs. background discriminating power is shown in Figs. 4 and 5. We can further increase the signal sensitivity by reconstructing the \(\tau \tau \) pair in the collinear approximation outlined in Ref. [72].
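The following sketch shows the standard collinear approximation for a tau pair, which we assume is the approach of Ref. [72]. The neutrinos of each tau are taken to be collinear with its visible decay products. The missing transverse momentum is then decomposed along the two visible directions, yielding the visible momentum fractions \(x_{1,2}\) and \(m_{\tau\tau} \approx m_{\text{vis}}/\sqrt{x_1 x_2}\). The function name is hypothetical, and the visible systems are treated as massless in this approximation.

```python
import math


def collinear_mass(vis1, vis2, met):
    """Collinear-approximation di-tau mass.

    vis1, vis2: (E, px, py, pz) of the visible tau decay products; met: (mex, mey).
    Returns (m_tautau, x1, x2), x_i being the visible momentum fractions, or None.
    """
    det = vis1[1] * vis2[2] - vis2[1] * vis1[2]
    if abs(det) < 1e-9:
        return None  # visible products back-to-back or parallel in the transverse plane
    # Solve met = r1 * pT(vis1) + r2 * pT(vis2), with r_i = 1/x_i - 1, by Cramer's rule.
    r1 = (met[0] * vis2[2] - vis2[1] * met[1]) / det
    r2 = (vis1[1] * met[1] - met[0] * vis1[2]) / det
    if r1 < 0.0 or r2 < 0.0:
        return None  # unphysical solution (x_i outside (0, 1])
    x1, x2 = 1.0 / (1.0 + r1), 1.0 / (1.0 + r2)
    e, px, py, pz = (a + b for a, b in zip(vis1, vis2))
    m_vis = math.sqrt(max(e**2 - px**2 - py**2 - pz**2, 0.0))
    return m_vis / math.sqrt(x1 * x2), x1, x2
```

The approximation breaks down when the two visible tau systems are back-to-back in the transverse plane. In that case the linear system becomes singular and the sketch returns `None`.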

The combined results are tabulated in Table 5. As can be seen, this approach retains larger signal and background cross sections than the fully-resolved approach, which has a combined \(S/B\simeq 0.08\). As expected, the sensitivity to \(\kappa _\lambda \) is slightly more pronounced in the jet-substructure approach. Together with the increased statistical control, we can therefore constrain \(\kappa _\lambda \) slightly more tightly (assuming again no systematic uncertainties)

at 68% confidence level, using the same CLs approach as above.

Before concluding this section, we note that for our \(b\bar{b} \tau ^+ \tau ^-\) analyses the S / B values are 10% or more for the boosted combined (\(\tau _{\ell } \tau _h + \tau _{\ell } \tau _{\ell }\)) analysis and for the resolved \(\tau _{\ell } \tau _h\) analysis. For the \(\tau _{\ell } \tau _{\ell }\) analysis, however, we obtain S / B below 5%. Such values of S / B are not uncommon in Higgs analyses at the LHC. For example, the S / B in the inclusive \(H\rightarrow \gamma \gamma \) search is 1/30, and in the observation of \(VH(\rightarrow b\bar{b})\) the S / B is in the range of 1–2% [73], depending on the vector boson decay mode. Ultimately, what counts is the precision with which the background rate can be determined. In our case, as in the LHC examples given above, the background rate can be extracted directly from data, using the sidebands of the various kinematic distributions that we consider.
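The importance of the background determination can be quantified with a common rough estimate, \(Z \approx S/\sqrt{B + (\delta_B B)^2}\), where \(\delta_B\) is the relative uncertainty on the background rate. This simple formula and the yields below are illustrative assumptions, not results of this analysis.

```python
import math


def significance(s, b, rel_bkg_uncertainty=0.0):
    """Rough counting-experiment estimate Z = S / sqrt(B + (delta_B * B)^2)."""
    return s / math.sqrt(b + (rel_bkg_uncertainty * b) ** 2)


# Hypothetical yields with S/B = 0.1 (illustration only).
s, b = 100.0, 1000.0
for delta in (0.0, 0.01, 0.05, 0.1):
    print(f"delta_B/B = {delta:.2f}: Z = {significance(s, b, delta):.2f}")
```

Once \(\delta_B B\) exceeds \(\sqrt{B}\), the significance saturates at roughly \((S/B)/\delta_B\). For S / B at the percent level, the background normalisation therefore has to be controlled at a comparable level, for example from sidebands.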

2.5 Comments on cut-and-count experiments and redundancies

A possible criticism of BDT-based signal selections is that they cannot be straightforwardly mapped onto cut-and-count analyses, and the resulting signal region does not necessarily consist of connected regions of physical phase space. In a busy collider environment with many competing processes and background rates that exceed the expected signal by orders of magnitude, multivariate methods are nevertheless very powerful tools that allow information to be extracted in various forms (Footnote 10).

The kinematics of \(pp\rightarrow h h j\) is fully determined by five independent parameters. This raises the question of whether the observed correlations among observables allow us to restrict ourselves to subsets of the observables listed above. We investigate this by systematically removing correlated observables and tracing their impact on our final sensitivity; we focus on the boosted selection as it shows the largest physics potential.

When removing observables that exhibit correlations of more than 70%, we find that our signal yields decrease at the percent level, while the background (most notably \(t\bar{t}j\)) increases by \(\gtrsim 15\%\). The impact on the signal, although small in size, is such that the \(\kappa _\lambda \)-dependence of the cross section becomes flatter. In total, restricting ourselves to observables with less than 70% correlation therefore translates into constraints on the trilinear coupling of \( 0.89< \kappa _\lambda < 1.28\) at 30/ab, which is clearly worse than the projection obtained with the full set of observables in Sect. 2.4. Decreasing the correlation threshold to 60%, we find the sensitivity decreased even further. This, together with a uniform relative importance of the observables for the BDT output score, indicates that the comprehensive list of observables indeed provides important discriminating power, in particular against the large \(t\bar{t} j\) background.
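The decorrelation study can be mimicked with a simple greedy filter that keeps an observable only if its absolute correlation with every already-kept observable stays below a threshold. The exact procedure is not specified here. We assume Pearson correlation coefficients and a priority ordering of the observables, both of which are hypothetical choices, as are the function names.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long, non-constant samples."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def prune_correlated(columns, threshold=0.7):
    """Greedily keep observables whose |correlation| with every kept one is <= threshold.

    columns: dict mapping observable name -> values on the same events, in priority order.
    """
    kept = []
    for name, values in columns.items():
        if all(abs(pearson(values, columns[k])) <= threshold for k in kept):
            kept.append(name)
    return kept
```

Lowering `threshold` from 0.7 to 0.6 removes more observables. As stated above, this reduces rather than improves the sensitivity.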

We can test the robustness of our analysis by comparing it against a more traditional cut-and-count approach. As part of the BDT analysis, we can use the BDT’s ranking of observables to choose adapted rectangular cuts. From the cut flow documented in Table 6, we see that we can reproduce the BDT S / B sensitivity within a factor of two.

3 The \(jbbbb\) channel

Finally, for completeness, we consider the \(b \bar{b} b \bar{b} j\) channel. To compete with the large pure QCD background that contributes to this process, and to trigger the event, we need to consider very hard jets, \(p_T^{j_1}\gtrsim 300~\text {GeV}\). For a more efficient background simulation, we therefore again generate the background events with relatively hard cuts already at generator level. We choose the jet transverse momentum \(p_T^j > 250\) GeV, the \(\Delta R\) separation between bottom quarks and the light jet \(\Delta R_{b,j}> 0.4\), the bottom quark transverse momentum threshold \(p_T^b > 15\) GeV, and the bottom rapidity range \(|\eta ^b| < 3.0\). Furthermore, the jet rapidity range is restricted to \(|\eta ^j| < 5.0\), and we require the bottom quarks to be separated by \(\Delta R_{b,b} > 0.2\) and to have an invariant mass \(M_{b,b} > 30\) GeV (Footnote 11). For the signal, we only impose the generator-level cut \(p_T^{j_1} > 200\) GeV. Throughout this part of the analysis, we use a flat b-tagging efficiency of 70% with a mistag rate of 2%.

Discriminating observables contributing to the boosted analysis of Sect. 2.4


To account for QCD corrections, we again use global K factors as described above. In addition to the backgrounds discussed for the \(\tau \) channels, we also need to include a pure QCD background leading to four final-state b quarks. QCD corrections for this highly involved final state are not available, and we choose to use \(K=1\). We note that this is consistent with the range of K factors for inclusive four-jet production discussed in Ref. [75].

3.1 The resolved channel

The signal vs. background ratio is small for such inclusive selections. Therefore, in order to assess the sensitivity that can be reached in principle, we again employ a multivariate analysis strategy. Before passing the events to the multivariate algorithm, we pre-select events according to the \(hh+\text {jet}\) signal event topology. For the resolved analysis, we require four b-tagged jets and at least one hard non-b-tagged jet with \(p_T^{j} > 300\) GeV. The b-tagged jets are required to have a minimum \(p_T\) of 30 GeV and to fall inside the central detector region \(|\eta ^b| < 2.5\). All reconstructed objects need to be separated by \(\Delta R >0.4\). Furthermore, we define two masses: firstly, \(M_h^{\text {min},M}\), the reconstructed Higgs masses obtained by pairing the b-tagged jets such that the pair masses are closest to the Higgs mass of 125 GeV; and secondly, \(M_h^{\text {min},\Delta R}\), which follows from requiring that the first Higgs candidate arises from the pair of b-tagged jets with the smallest \(\Delta R\) separation. We require both \(M_h^{\text {min},M},M_h^{\text {min},\Delta R}>30~\text {GeV}\). Finally, we only use the Higgs boson candidates reconstructed with the minimum-mass-difference procedure.
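The two pairing prescriptions can be sketched for four b-tagged jets given as four-vectors. The distance measure "closest to 125 GeV" is not specified above. The sketch assumes the sum of \(|m_{ij} - m_h|\) over both pairs, and the function names are hypothetical.

```python
import math
from itertools import combinations

M_H = 125.0  # GeV
# The three ways of splitting four b-tagged jets (indices 0-3) into two pairs.
PAIRINGS = (((0, 1), (2, 3)), ((0, 2), (1, 3)), ((0, 3), (1, 2)))


def pair_mass(p, q):
    """Invariant mass of two (E, px, py, pz) four-vectors."""
    e, px, py, pz = (a + b for a, b in zip(p, q))
    return math.sqrt(max(e**2 - px**2 - py**2 - pz**2, 0.0))


def delta_r(p, q):
    def eta_phi(v):
        return math.asinh(v[3] / math.hypot(v[1], v[2])), math.atan2(v[2], v[1])
    (eta1, phi1), (eta2, phi2) = eta_phi(p), eta_phi(q)
    dphi = (phi1 - phi2 + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(eta1 - eta2, dphi)


def pairing_min_mass(jets):
    """Pairing whose two dijet masses are closest to m_h (assumed metric: sum of |m_ij - m_h|)."""
    return min(PAIRINGS, key=lambda pairing: sum(abs(pair_mass(jets[i], jets[j]) - M_H)
                                                 for i, j in pairing))


def pairing_min_delta_r(jets):
    """First Higgs from the closest pair in Delta R, second Higgs from the remaining two jets."""
    i, j = min(combinations(range(4), 2), key=lambda ij: delta_r(jets[ij[0]], jets[ij[1]]))
    rest = tuple(k for k in range(4) if k not in (i, j))
    return (i, j), rest
```

The two prescriptions coincide for clean signal events but can differ for backgrounds. This is why both reconstructed masses are useful as additional inputs.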

Again we input a number of kinematic distributions to the BDT, detailed in Table 7; the results are shown in Table 8. The signal vs. background ratio is extremely small, \(O(10^{-3})\), leaving the analysis highly sensitive to systematic uncertainties, with only little improvement possible using jet-substructure approaches.

3.2 The boosted channel

Here we follow the philosophy of Sect. 2.4 and exploit the fact that most of the sensitivity to the Higgs self-coupling comes from configurations where the di-Higgs system has a small invariant mass. This can be achieved by requiring the di-Higgs system to recoil against one or more high-\(p_T\) jets. If the Higgs bosons have enough transverse momentum, their decay products, the \(b \bar{b}\) pairs, will be collimated and can be clustered into large-radius jets. Such jets can be identified and disentangled from QCD jets using standard substructure techniques.
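How much transverse momentum is "enough" can be estimated from the small-angle relation \(m^2 \approx z(1-z)\,p_T^2\,\Delta R^2\) for a two-body decay, where z is the momentum fraction of one daughter. This gives \(\Delta R_{b\bar b} \approx m_h/(p_T\sqrt{z(1-z)}) \geq 2m_h/p_T\). The sketch below is a rule-of-thumb estimate with hypothetical function names, not part of the selection itself.

```python
import math

M_H = 125.0  # GeV


def opening_angle(mass, pt, z=0.5):
    """Approximate Delta R of a two-body decay, m / (pT * sqrt(z (1 - z))), valid for pT >> m.

    z is the momentum fraction of one daughter; z = 0.5 gives the minimum, 2 m / pT.
    """
    return mass / (pt * math.sqrt(z * (1.0 - z)))


def min_pt_for_containment(mass, radius, z=0.5):
    """pT above which both decay products can fall inside a jet of radius R (inverse of the above)."""
    return mass / (radius * math.sqrt(z * (1.0 - z)))


for radius in (1.5, 0.8):
    print(f"R = {radius}: symmetric h -> bb decays fit for pT,h above ~{min_pt_for_containment(M_H, radius):.0f} GeV")
```

For symmetric decays this gives about 167 GeV for \(R = 1.5\) and 312.5 GeV for \(R = 0.8\). The latter is close to the \(p_T^{j} > 300\) GeV fat-jet requirement used in this section. Asymmetric decays need correspondingly larger transverse momenta.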

Events are first pre-selected by requiring at least two central fat jets with radius parameter \(R=0.8\) that contain at least two b-subjets. The fat jets are selected if \(p_T^{j} > 300\) GeV and \(|\eta ^{j}| < 2.5\). We assume, as previously, a conservative \(70\%\) b-tagging efficiency. We further require the di-fatjet pair to be sufficiently boosted, \(p_T^{jj} > 250\) GeV, and the leading jet to have \(p_T^{j_1} > 400\) GeV. Finally, we require \(\Delta R(j_1,j_2) < 3.0\) as well as \((p_T^{j_1} - p_T^{j_2})/p_T^{jj} < 0.9\).

The last steps of the event selection make use of jet-substructure observables and are designed to identify the collimated Higgs fat jets with high purity. The main background contribution arises from QCD \(g \rightarrow b \bar{b}\) splittings, which are dominated by soft and collinear configurations. The resulting jets are hence often characterized by one hard prong, as opposed to fat jets containing the Higgs decay products, which feature a clear two-prong structure. The “2” versus “1” prong hypotheses of a jet can be tested with the \(\tau _{2,1}\) observable [76]. Moreover, Higgs jets typically have an invariant mass close to \(m_H = 125\) GeV, whereas QCD jets tend to have a small mass. QCD jets can therefore be rejected by requiring a soft-dropped mass \(m_{SD}\) [77] of the order of the Higgs mass. These two observables are shown in Fig. 6 for the leading reconstructed fat jet. The Higgs-jet tag consists of selecting jets with \(\tau _{2,1} < 0.35\) and \(100< m_{SD} < 130\) GeV. This simple selection yields a tagging efficiency of 6% and a mistag rate of 0.1%. We apply the Higgs-jet tagging procedure to both fat jets.
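The following sketch computes N-subjettiness [76] with angular exponent \(\beta = 1\) and applies the Higgs-jet selection quoted above. It assumes that the subjet axes are supplied externally (for example from exclusive \(k_T\) subjets) and that the soft-drop mass [77] is taken as an input rather than computed; the function names are hypothetical.

```python
import math


def delta_r(eta1, phi1, eta2, phi2):
    dphi = (phi1 - phi2 + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(eta1 - eta2, dphi)


def n_subjettiness(constituents, axes, jet_radius):
    """tau_N (beta = 1) for N = len(axes): sum_k pt_k * min_a DeltaR(k, a) / (sum_k pt_k * R0).

    constituents: list of (pt, eta, phi); axes: list of (eta, phi).
    """
    d0 = sum(pt for pt, _, _ in constituents) * jet_radius
    total = sum(pt * min(delta_r(eta, phi, ax_eta, ax_phi) for ax_eta, ax_phi in axes)
                for pt, eta, phi in constituents)
    return total / d0


def higgs_tag(tau21, m_sd, tau21_max=0.35, m_low=100.0, m_high=130.0):
    """Higgs-jet selection quoted in the text: tau_21 < 0.35 and 100 GeV < m_SD < 130 GeV."""
    return tau21 < tau21_max and m_low < m_sd < m_high
```

For an ideal two-prong jet whose radiation lies exactly along two axes, \(\tau_2 = 0\) and hence \(\tau_{2,1} = 0\). A one-prong QCD jet instead has \(\tau_2\) comparable to \(\tau_1\).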

The final results for the boosted analysis are summarized in Table 9. Although we find only a mild improvement in significance compared to the resolved analysis, there is a clear improvement in the signal-to-background ratio, \(S/B \sim 0.02\), which allows better control of background systematics.

4 Summary and conclusions

Di-Higgs searches and their associated interpretation in terms of new, non-resonant physics are a key motivation for a future high-energy pp collider. Recent analyses have mainly focused on direct \(pp\rightarrow hh\) production, which has the shortcoming that back-to-back Higgs production generically accesses a phase-space region with only limited sensitivity to modifications of the trilinear Higgs coupling. This situation can be improved by accessing kinematic configurations where a collinear Higgs pair recoils against a hard jet, thus reaching small invariant masses \(M_{hh}\simeq 2m_t\) over a broad range of final-state kinematics. This is the region where modifications of the trilinear Higgs coupling are most pronounced.

In this work, we have focused on this hhj final state at a 100 TeV collider. As exclusive final-state cross sections are small, we have concentrated in particular on the dominant \(hh\rightarrow b\bar{b} b\bar{b}\) and \(hh\rightarrow b\bar{b} \tau ^+ \tau ^-\) decay channels. Multi-Higgs final states suffer from small rates even in these dominant Higgs decay modes, which necessitates multivariate analysis techniques. We find that, although the four-b final state is challenged by backgrounds with some opportunities to enhance sensitivity at large momenta, the \(hh\rightarrow b\bar{b} \tau \tau \) final states provide a promising avenue to add significant sensitivity to the search for non-standard Higgs interactions. In particular, the hadronic tau decay channels, which can be isolated with cutting-edge reconstruction techniques introduced by the CMS collaboration, drive the sensitivity. Relying on boosted final states, we show that hhj production could in principle constrain the Higgs self-coupling at the 8% level at 30/ab (assuming no systematic uncertainties and other couplings to be SM-like). This precision is worse than the \(\sim 4\%\) result obtained for the inclusive \(hh(\rightarrow b\bar{b}\gamma \gamma )\) channel in Ref. [34]. Given the complexities of analyses involving the Higgs self-coupling, we find it important to have several independent modes that probe its value with a precision below the 10% threshold. Furthermore, the different kinematic regimes probed by the hh and hhj measurements could be sensitive in different ways to possible deviations from the SM expectations. This motivates \(pp\rightarrow hh j\) with semi-leptonic tau decays as an additional main search channel for modified Higgs physics.

Notes

We impose \(p_T(j) > 100\) GeV at the parton level.

The top decays to \(b, \ell \; \text {and} \; \nu \) have been implemented as decay chains in the MadGraph5_aMC@NLO framework, at the production level.

The “EW” and “QCD+EW” processes correspond in MadGraph5_aMC@NLO to the interaction orders QED \(=\) 4 QCD \(=\) 1 (pure EW) and QED \(=\) 2 QCD \(=\) 3 (mixed QCD+EW) respectively. We note that both classes of processes include single-Higgs production contributions.

Jets are defined through the anti-\(k_T\) algorithm [59, 60] with a jet resolution parameter \(R = 0.4\) inside the rapidity range \(|\eta ^j| < 4.5\). For b-tagging we assume an efficiency of 60% at a 2% mistagging rate, which is realistic at the LHC [61]. Isolated muons and electrons are defined by requiring a small hadronic energy deposit in the vicinity of the lepton candidate, \(E^{\text {had}}/p_T^{\ell }<10\%\) within \(\Delta R<0.2\).
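The jet acceptance, lepton isolation and b-tagging prescriptions above can be emulated in a toy detector simulation. The following is a minimal sketch, not the implementation used for this analysis. The dictionary-based event format and all function names are hypothetical. The hadronic energy is summed from a hypothetical list of hadronic deposits. B-tagging is applied as a flat per-jet probability (60% for true b-jets, 2% for all other jets) without any \(p_T\) or \(\eta\) dependence.

```python
import math
import random

JET_ETA_MAX = 4.5          # jet acceptance |eta^j| < 4.5
ISO_CONE = 0.2             # isolation cone Delta R < 0.2
ISO_MAX_FRACTION = 0.10    # E^had / p_T^lepton < 10%
BTAG_EFF = 0.60            # flat b-tagging efficiency (assumed independent of pT, eta)
MISTAG_RATE = 0.02         # flat mistag rate for non-b jets (same assumption)


def delta_r(eta1, phi1, eta2, phi2):
    """Angular distance with the azimuthal difference wrapped into [-pi, pi)."""
    dphi = (phi1 - phi2 + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(eta1 - eta2, dphi)


def in_jet_acceptance(jet):
    """Keep jets inside the assumed rapidity range."""
    return abs(jet["eta"]) < JET_ETA_MAX


def is_isolated(lepton, hadronic_deposits):
    """Sum hadronic energy inside the cone around the lepton and compare it to the lepton pT."""
    e_had = sum(
        dep["E"]
        for dep in hadronic_deposits
        if delta_r(lepton["eta"], lepton["phi"], dep["eta"], dep["phi"]) < ISO_CONE
    )
    return e_had / lepton["pt"] < ISO_MAX_FRACTION


def is_btagged(jet, rng):
    """Tag true b-jets with probability BTAG_EFF and all other jets with MISTAG_RATE."""
    prob = BTAG_EFF if jet["is_b"] else MISTAG_RATE
    return rng.random() < prob


if __name__ == "__main__":
    rng = random.Random(42)
    lepton = {"pt": 50.0, "eta": 0.5, "phi": 1.0}
    deposits = [{"E": 2.0, "eta": 0.55, "phi": 1.05}, {"E": 30.0, "eta": 1.5, "phi": 1.0}]
    print("isolated:", is_isolated(lepton, deposits))  # only the 2 GeV deposit is in the cone
    print("tagged:", is_btagged({"is_b": True, "eta": 0.3}, rng))
```

Passing an explicit `random.Random` instance keeps the tagging reproducible for a fixed seed.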

The redundant variables, which do not affect the significance, are: \(p_T^{b_2}, p_T^{\ell _2}, \Delta R (b_2 \ell _2), \Delta R (\ell _2 j)\) and \(\Delta \phi (bb,\ell \ell )\), where the index refers to the \(p_T\) ordering of an object.

We have checked our results for overtraining.
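The note does not specify how overtraining was checked. A common approach, also offered by the TMVA toolkit cited in the references, is to compare the classifier output on the training sample with that on an independent test sample using a two-sample Kolmogorov–Smirnov test. The sketch below implements that comparison in plain Python. The asymptotic p-value uses the series approximation of the Kolmogorov distribution given in Numerical Recipes (`probks`). The function names and the 0.01 threshold are assumptions, not choices taken from this work.

```python
import math


def ks_statistic(sample_a, sample_b):
    """Maximum distance between the two empirical cumulative distributions."""
    a, b = sorted(sample_a), sorted(sample_b)
    n, m = len(a), len(b)
    i = j = 0
    d_max = 0.0
    while i < n and j < m:
        x = min(a[i], b[j])
        # Step both empirical CDFs past every value equal to x (handles ties).
        while i < n and a[i] <= x:
            i += 1
        while j < m and b[j] <= x:
            j += 1
        d_max = max(d_max, abs(i / n - j / m))
    return d_max


def ks_pvalue(d, n, m):
    """Asymptotic p-value of the two-sample KS statistic (Numerical Recipes series)."""
    n_eff = n * m / (n + m)
    lam = (math.sqrt(n_eff) + 0.12 + 0.11 / math.sqrt(n_eff)) * d
    a2 = -2.0 * lam * lam
    total, sign, prev_term = 0.0, 2.0, 0.0
    for k in range(1, 101):
        term = sign * math.exp(a2 * k * k)
        total += term
        if abs(term) <= 1e-3 * prev_term or abs(term) <= 1e-8 * total:
            return min(max(total, 0.0), 1.0)
        sign = -sign
        prev_term = abs(term)
    return 1.0  # series did not converge: lam is tiny, so the samples are compatible


def overtraining_flag(train_scores, test_scores, alpha=0.01):
    """True if train and test score distributions differ at the (assumed) level alpha."""
    d = ks_statistic(train_scores, test_scores)
    return ks_pvalue(d, len(train_scores), len(test_scores)) < alpha
```

In practice the check would be run separately for signal and background classifier scores.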

The redundant variables for this case are: \(\Delta R (b_2 \ell ), \Delta R (b_2 \tau _h), \Delta R (b_1 j), \Delta R (b_2 j)\) and \(\Delta R (\tau _h j)\).

We also require light jets faking b-jets to have \(p_T > 25\) GeV.

Here, too, removing the redundant variables leaves the sensitivity unchanged. These variables are \(p_{T,2}^{\text {filt}}, p_T^{\tau _{\text {vis},1}}, p_T^j, \Delta R (b_2 j), \Delta R (\tau _{\text {vis},1} j), \Delta R (b_1 j)\) and \(\frac{p_T^{\tau _{\text {vis},2}}}{p_T^{\tau _{\text {vis},1}}}\).

For instance, the recently reported evidence for \(t\bar{t} h\) production [74] relies crucially on neural-network analyses.

Jets are defined as in Sect. 2.2.

References

CMS Collaboration, Search for supersymmetry in events with at least one soft lepton, low jet multiplicity, and missing transverse momentum in proton–proton collisions at \(\sqrt{s}=13~\text{TeV}\). Technical Report. CMS-PAS-SUS-16-052, CERN, Geneva (2017)

ATLAS Collaboration, Search for direct top squark pair production in final states with two leptons in \(\sqrt{s} = 13\) TeV \(pp\) collisions with the ATLAS detector. Technical Report. ATLAS-CONF-2017-034, CERN, Geneva (2017)

ATLAS Collaboration, Search for pair production of vector-like top partners in events with exactly one lepton and large missing transverse momentum in \(\sqrt{s}=13\) TeV \(pp\) collisions with the ATLAS detector. Technical Report. ATLAS-CONF-2016-101, CERN, Geneva (2016)

CMS Collaboration, Search for a vector-like top partner produced through electroweak interaction and decaying to a top quark and a Higgs boson using boosted topologies in the all-hadronic final state. Technical Report. CMS-PAS-B2G-16-005, CERN, Geneva (2016)

W. Buchmuller, D. Wyler, Effective Lagrangian analysis of new interactions and flavor conservation. Nucl. Phys. B 268, 621–653 (1986)

K. Hagiwara, R.D. Peccei, D. Zeppenfeld, K. Hikasa, Probing the weak boson sector in \(e^+ e^- \rightarrow W^+ W^-\). Nucl. Phys. B 282, 253 (1987)

B. Grzadkowski, M. Iskrzynski, M. Misiak, J. Rosiek, Dimension-six terms in the standard model Lagrangian. JHEP 10, 085 (2010). arXiv:1008.4884

C. Englert, A. Freitas, M.M. Muhlleitner, T. Plehn, M. Rauch, M. Spira, K. Walz, Precision measurements of Higgs couplings: implications for new physics scales. J. Phys. G 41, 113001 (2014). arXiv:1403.7191

C. Englert, R. Kogler, H. Schulz, M. Spannowsky, Higgs coupling measurements at the LHC. Eur. Phys. J. C 76(7), 393 (2016). arXiv:1511.05170

A. Falkowski, M. Gonzalez-Alonso, A. Greljo, D. Marzocca, Global constraints on anomalous triple gauge couplings in effective field theory approach. Phys. Rev. Lett. 116(1), 011801 (2016). arXiv:1508.00581

T. Corbett, O.J.P. Eboli, D. Goncalves, J. Gonzalez-Fraile, T. Plehn, M. Rauch, The Higgs legacy of the LHC run I. JHEP 08, 156 (2015). arXiv:1505.05516

T. Corbett, O.J.P. Eboli, D. Goncalves, J. Gonzalez-Fraile, T. Plehn, M. Rauch, The non-linear Higgs legacy of the LHC Run I. arXiv:1511.08188

D.A. Dicus, C. Kao, S.S.D. Willenbrock, Higgs boson pair production from gluon fusion. Phys. Lett. B 203, 457–461 (1988)

E.W.N. Glover, J.J. van der Bij, Higgs boson pair production via gluon fusion. Nucl. Phys. B 309, 282–294 (1988)

A. Djouadi, W. Kilian, M. Muhlleitner, P.M. Zerwas, Production of neutral Higgs boson pairs at LHC. Eur. Phys. J. C 10, 45–49 (1999). arXiv:hep-ph/9904287

T. Plehn, M. Spira, P.M. Zerwas, Pair production of neutral Higgs particles in gluon–gluon collisions. Nucl. Phys. B 479, 46–64 (1996). arXiv:hep-ph/9603205 [Erratum: Nucl. Phys. B 531, 655 (1998)]

U. Baur, T. Plehn, D.L. Rainwater, Measuring the Higgs boson self coupling at the LHC and finite top mass matrix elements. Phys. Rev. Lett. 89, 151801 (2002). arXiv:hep-ph/0206024

T. Plehn, M. Rauch, The quartic Higgs coupling at hadron colliders. Phys. Rev. D 72, 053008 (2005). arXiv:hep-ph/0507321

F. Kling, T. Plehn, P. Schichtel, Maximizing the significance in Higgs boson pair analyses. Phys. Rev. D 95(3), 035026 (2017). arXiv:1607.07441

A. Papaefstathiou, K. Sakurai, Triple Higgs boson production at a 100 TeV proton–proton collider. JHEP 02, 006 (2016). arXiv:1508.06524

B. Fuks, J.H. Kim, S.J. Lee, Scrutinizing the Higgs quartic coupling at a future 100 TeV proton–proton collider with taus and b-jets. Phys. Lett. B 771, 354–358 (2017). arXiv:1704.04298

U. Baur, T. Plehn, D.L. Rainwater, Probing the Higgs selfcoupling at hadron colliders using rare decays. Phys. Rev. D 69, 053004 (2004). arXiv:hep-ph/0310056

U. Baur, T. Plehn, D.L. Rainwater, Examining the Higgs boson potential at lepton and hadron colliders: a comparative analysis. Phys. Rev. D 68, 033001 (2003). arXiv:hep-ph/0304015

M.J. Dolan, C. Englert, M. Spannowsky, Higgs self-coupling measurements at the LHC. JHEP 10, 112 (2012). arXiv:1206.5001

ATLAS Collaboration, Study of the double Higgs production channel \(H(\rightarrow b\bar{b})H(\rightarrow \gamma \gamma )\) with the ATLAS experiment at the HL-LHC. Technical Report. ATL-PHYS-PUB-2017-001, CERN, Geneva (2017)

CMS Collaboration, Higgs pair production at the High Luminosity LHC. Technical Report. CMS-PAS-FTR-15-002, CERN, Geneva (2015)

F. Goertz, A. Papaefstathiou, L.L. Yang, J. Zurita, Higgs boson self-coupling measurements using ratios of cross sections. JHEP 06, 016 (2013). arXiv:1301.3492

A. Adhikary, S. Banerjee, R.K. Barman, B. Bhattacherjee, S. Niyogi, Revisiting the non-resonant Higgs pair production at the HL-LHC. arXiv:1712.05346

J.H. Kim, Y. Sakaki, M. Son, Combined analysis of double Higgs production via gluon fusion at the HL-LHC in the effective field theory approach. arXiv:1801.06093

W. Yao, Studies of measuring Higgs self-coupling with \(HH\rightarrow b\bar{b} \gamma \gamma \) at the future hadron colliders, in Proceedings, 2013 Community Summer Study on the Future of U.S. Particle Physics: Snowmass on the Mississippi (CSS2013): Minneapolis, MN, USA, July 29–August 6, 2013 (2013). arXiv:1308.6302

A.J. Barr, M.J. Dolan, C. Englert, D.E. Ferreira de Lima, M. Spannowsky, Higgs self-coupling measurements at a 100 TeV hadron collider. JHEP 02, 016 (2015). arXiv:1412.7154

A. Azatov, R. Contino, G. Panico, M. Son, Effective field theory analysis of double Higgs boson production via gluon fusion. Phys. Rev. D 92(3), 035001 (2015). arXiv:1502.00539

H.-J. He, J. Ren, W. Yao, Probing new physics of cubic Higgs boson interaction via Higgs pair production at hadron colliders. Phys. Rev. D 93(1), 015003 (2016). arXiv:1506.03302

R. Contino et al., Physics at a 100 TeV pp collider: Higgs and EW symmetry breaking studies. CERN Yellow Report, no. 3 (2017), pp. 255–440. arXiv:1606.09408

LHC Higgs Cross Section Working Group Collaboration, D. de Florian et al., Handbook of LHC Higgs cross sections: 4. Deciphering the nature of the Higgs sector. arXiv:1610.07922

LHC Higgs Cross Section HH Subgroup. https://twiki.cern.ch/twiki/bin/view/LHCPhysics/LHCHXSWGHH. Accessed 20 Apr 2018

S. Dawson, S. Dittmaier, M. Spira, Neutral Higgs boson pair production at hadron colliders: QCD corrections. Phys. Rev. D 58, 115012 (1998). arXiv:hep-ph/9805244

D. de Florian, J. Mazzitelli, Higgs boson pair production at next-to-next-to-leading order in QCD. Phys. Rev. Lett. 111, 201801 (2013). arXiv:1309.6594

S. Borowka, N. Greiner, G. Heinrich, S. Jones, M. Kerner, J. Schlenk, U. Schubert, T. Zirke, Higgs boson pair production in gluon fusion at next-to-leading order with full top-quark mass dependence. Phys. Rev. Lett. 117(1), 012001 (2016). arXiv:1604.06447 [Erratum: Phys. Rev. Lett. 117(7), 079901 (2016)]

D.Y. Shao, C.S. Li, H.T. Li, J. Wang, Threshold resummation effects in Higgs boson pair production at the LHC. JHEP 07, 169 (2013). arXiv:1301.1245

D. de Florian, J. Mazzitelli, Higgs pair production at next-to-next-to-leading logarithmic accuracy at the LHC. JHEP 09, 053 (2015). arXiv:1505.07122

R. Frederix, S. Frixione, V. Hirschi, F. Maltoni, O. Mattelaer, P. Torrielli, E. Vryonidou, M. Zaro, Higgs pair production at the LHC with NLO and parton-shower effects. Phys. Lett. B 732, 142–149 (2014). arXiv:1401.7340

F. Maltoni, E. Vryonidou, M. Zaro, Top-quark mass effects in double and triple Higgs production in gluon–gluon fusion at NLO. JHEP 11, 079 (2014). arXiv:1408.6542

D.E. Ferreira de Lima, A. Papaefstathiou, M. Spannowsky, Standard model Higgs boson pair production in the (\( b\overline{b} \))(\( b\overline{b} \)) final state. JHEP 08, 030 (2014). arXiv:1404.7139

J.K. Behr, D. Bortoletto, J.A. Frost, N.P. Hartland, C. Issever, J. Rojo, Boosting Higgs pair production in the \(b\bar{b}b\bar{b}\) final state with multivariate techniques. Eur. Phys. J. C 76(7), 386 (2016). arXiv:1512.08928

A.J. Barr, M.J. Dolan, C. Englert, M. Spannowsky, Di-Higgs final states augMT2ed—selecting \(hh\) events at the high luminosity LHC. Phys. Lett. B 728, 308–313 (2014). arXiv:1309.6318

ATLAS Collaboration, Higgs pair production in the \(H(\rightarrow \tau \tau )H(\rightarrow b\bar{b})\) channel at the High-Luminosity LHC. Technical Report. ATL-PHYS-PUB-2015-046, CERN, Geneva (2015)

CMS Collaboration, Search for non-resonant Higgs boson pair production in the \(\rm b\overline{b}\tau ^+\tau ^-\) final state. Technical Report. CMS-PAS-HIG-16-012, CERN, Geneva (2016)

CMS Collaboration, A.M. Sirunyan et al., Search for Higgs boson pair production in events with two bottom quarks and two tau leptons in proton–proton collisions at \(\sqrt{s} =13\) TeV. Phys. Lett. B 778, 101–127 (2018). arXiv:1707.02909

J. Alwall, R. Frederix, S. Frixione, V. Hirschi, F. Maltoni, O. Mattelaer, H.S. Shao, T. Stelzer, P. Torrielli, M. Zaro, The automated computation of tree-level and next-to-leading order differential cross sections, and their matching to parton shower simulations. JHEP 07, 079 (2014). arXiv:1405.0301

S. Frixione, E. Laenen, P. Motylinski, B.R. Webber, Angular correlations of lepton pairs from vector boson and top quark decays in Monte Carlo simulations. JHEP 04, 081 (2007). arXiv:hep-ph/0702198

P. Artoisenet, R. Frederix, O. Mattelaer, R. Rietkerk, Automatic spin-entangled decays of heavy resonances in Monte Carlo simulations. JHEP 03, 015 (2013). arXiv:1212.3460

T. Sjöstrand, S. Ask, J.R. Christiansen, R. Corke, N. Desai, P. Ilten, S. Mrenna, S. Prestel, C.O. Rasmussen, P.Z. Skands, An introduction to PYTHIA 8.2. Comput. Phys. Commun. 191, 159–177 (2015). arXiv:1410.3012

T. Binoth, T. Gleisberg, S. Karg, N. Kauer, G. Sanguinetti, NLO QCD corrections to ZZ+ jet production at hadron colliders. Phys. Lett. B 683, 154–159 (2010). arXiv:0911.3181

J.M. Campbell, R.K. Ellis, Next-to-leading order corrections to \(W^+\) 2 jet and \(Z^+\) 2 jet production at hadron colliders. Phys. Rev. D 65, 113007 (2002). arXiv:hep-ph/0202176

G. Bevilacqua, H.B. Hartanto, M. Kraus, M. Worek, Top quark pair production in association with a jet with next-to-leading-order QCD off-shell effects at the large hadron collider. Phys. Rev. Lett. 116(5), 052003 (2016). arXiv:1509.09242

C.G. Lester, D.J. Summers, Measuring masses of semi-invisibly decaying particles pair produced at hadron colliders. Phys. Lett. B 463, 99–103 (1999). arXiv:hep-ph/9906349

P. Baringer, K. Kong, M. McCaskey, D. Noonan, Revisiting combinatorial ambiguities at hadron colliders with \(M_{T2}\). JHEP 10, 101 (2011). arXiv:1109.1563

M. Cacciari, G.P. Salam, G. Soyez, The anti-\(k_t\) jet clustering algorithm. JHEP 04, 063 (2008). arXiv:0802.1189

M. Cacciari, G.P. Salam, G. Soyez, FastJet user manual. Eur. Phys. J. C 72, 1896 (2012). arXiv:1111.6097

ATLAS Collaboration, Measurement of the b-tag efficiency in a sample of jets containing muons with 5 fb\(^{-1}\) of data from the ATLAS detector. Technical Report. ATLAS-CONF-2012-043, CERN, Geneva (2012)

ATLAS Collaboration, M. Aaboud et al., Search for the Standard Model Higgs boson produced in association with top quarks and decaying into a \(b\bar{b}\) pair in \(pp\) collisions at \(\sqrt{s} = 13\) TeV with the ATLAS detector. arXiv:1712.08895

A. Hocker et al., TMVA—toolkit for multivariate data analysis. PoS ACAT, 040 (2007). arXiv:physics/0703039

LHC Higgs Cross Section Working Group Collaboration, J.R. Andersen et al., Handbook of LHC Higgs cross sections: 3. Higgs properties. arXiv:1307.1347

CMS Collaboration, L. Cadamuro, The CMS level-1 tau algorithm for the LHC Run II. PoS EPS-HEP2015, 226 (2015)

CMS Collaboration, L1 calorimeter trigger upgrade: tau performance. CMS-DP-2015-009

L. Mastrolorenzo, The CMS Level-1 Tau identification algorithm for the LHC Run II. Nucl. Part. Phys. Proc. 273–275, 2518–2520 (2016)

T. Junk, Confidence level computation for combining searches with small statistics. Nucl. Instrum. Methods A 434, 435–443 (1999). arXiv:hep-ex/9902006

A.L. Read, Modified frequentist analysis of search results (the CL\(_s\) method), in Workshop on Confidence Limits, CERN, Geneva, Switzerland, 17–18 Jan 2000: Proceedings (2000), pp. 81–101

A.L. Read, Presentation of search results: the CL\(_s\) technique. J. Phys. G 28, 2693–2704 (2002) [11 (2002)]

J.M. Butterworth, A.R. Davison, M. Rubin, G.P. Salam, Jet substructure as a new Higgs search channel at the LHC. Phys. Rev. Lett. 100, 242001 (2008). arXiv:0802.2470

A. Elagin, P. Murat, A. Pranko, A. Safonov, A new mass reconstruction technique for resonances decaying to di-tau. Nucl. Instrum. Methods A 654, 481–489 (2011). arXiv:1012.4686

ATLAS Collaboration, M. Aaboud et al., Evidence for the \( H\rightarrow b\overline{b} \) decay with the ATLAS detector. JHEP 12, 024 (2017). arXiv:1708.03299

ATLAS Collaboration, M. Aaboud et al., Evidence for the associated production of the Higgs boson and a top quark pair with the ATLAS detector. Phys. Rev. D (2017). arXiv:1712.08891 (submitted)

M.L. Mangano et al., Physics at a 100 TeV pp collider: standard model processes. CERN Yellow Report, no. 3 (2017), pp. 1–254. arXiv:1607.01831

J. Thaler, K. Van Tilburg, Identifying boosted objects with N-subjettiness. JHEP 03, 015 (2011). arXiv:1011.2268

A.J. Larkoski, S. Marzani, G. Soyez, J. Thaler, Soft drop. JHEP 05, 146 (2014). arXiv:1402.2657

Acknowledgements

S.B. would like to thank Shilpi Jain for her immense help during various stages of this project. S.B. would also like to thank Biplob Bhattacherjee and Amit Chakraborty for many helpful and insightful discussions. S.B. acknowledges the support of the Indo-French LIA THEP (Theoretical high Energy Physics) of the CNRS, France and is also supported by a Durham Junior Research Fellowship COFUNDed between Durham University and the European Union under Grant agreement number 609412. C.E. is supported by the IPPP Associateship scheme and by the UK Science and Technology Facilities Council (STFC) under Grant ST/P000746/1.

Additional information

IPPP/18/10, LAPTH-003/18, CERN-TH-2018-023.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funded by SCOAP3

About this article

Cite this article

Banerjee, S., Englert, C., Mangano, M.L. et al. \(hh+\text {Jet}\) production at 100 TeV. Eur. Phys. J. C 78, 322 (2018). https://doi.org/10.1140/epjc/s10052-018-5788-y
