Abstract

For obtaining reliable nanostructural details of large amounts of sample—and if it is applicable—small-angle scattering (SAS) is a prime technique to use. It promises to obtain bulk-scale, statistically sound information on the morphological details of the nanostructure, and has thus led to many a researcher investing their time in it over the last eight decades of development. Due to pressure from scientists requesting more details on increasingly complex nanostructures, as well as the ever improving instrumentation leaving less margin for ambiguity, small-angle scattering methodologies have been evolving at a high pace over the past few decades.

As the quality of any results can only be as good as the data that go into these methodologies, the improvements in data collection and all imaginable data correction steps are reviewed here. This work is intended to provide a comprehensive overview of all data corrections, to aid the small-angle scatterer to decide which are relevant for their measurement and how these corrections are performed. Clear mathematical descriptions of the corrections are provided where feasible. Furthermore, as no quality data exist without a decent estimate of their precision, the error estimation and propagation through all these steps are provided alongside the corrections. With these data corrections, the collected small-angle scattering pattern can be made of the highest standard, allowing for authoritative nanostructural characterization through its analysis. A brief background of small-angle scattering, the instrumentation developments over the years, and pitfalls that may be encountered upon data interpretation are provided as well.


Content from this work may be used under the terms of the Creative Commons Attribution 3.0 licence. Any further distribution of this work must maintain attribution to the author(s) and the title of the work, journal citation and DOI.

1. Introduction

1.1. Scattering to small angles

The interaction of radiation with inhomogeneities in matter can cause a small deviation of the radiation from its incident direction, called small-angle scattering (figure 1). Such small-angle scattering (SAS) occurs in all kinds of materials, be they (partially) crystalline or amorphous solids, liquids or even gases, and can take place for a wide variety of radiation, such as electrons (SAES) [159, 21], gamma rays (SAGS) [91, 90], light (LS) [73, 27], x-rays (SAXS) [102, 63, 2] and even neutrons (SANS) [2, 10, 75]. For the purpose of this review, we shall limit ourselves to x-ray scattering. This is one of the more prolific sub-fields of small-angle scattering, though it should be noted that many of the principles and corrections presented here, which apply to x-rays, may be applied to neutrons as well as some of the other forms.

Figure 1. The scattering of radiation to small angles by a sample (small-angle scattering). Angles normally used for diffraction analysis are also shown.

The phenomenon of small-angle scattering can be, and has been, explained in a variety of ways, with many explanations starting from the interaction between a wave and a point-shaped interacting object [63, 51]. For crystallographers, however, this phenomenon may be more readily understood as peak broadening of the [000] reflection (which is present for all materials), whereas for the mathematically inclined, small-angle scattering can be defined as the observation of a slice through the intensity component of the 3D Fourier transform of the electron density [34, 2, 170, 155, 135].

Small-angle x-ray scattering can be applied to a large variety of samples, with the majority consisting of two-phase systems [180]. In multiphase systems where the electron density of one phase is drastically higher than that of the remaining phases, a two-phase approximation can be made [100]. This approximation is justified because the scattering power in SAXS is related to the square of the electron density contrast between the phases, so that the larger the difference in electron density, the larger the scattering contribution. With such a two-phase approximation, SAXS is used to study precipitation in metal alloys [54, 36], structural defects in diamonds [164], pore structures in fibres [186, 25, 135], particle growth in solutions [190], coarsening of catalyst particles on membranes [167], characterization of catalysts [158], soot growth in flames [89], structures in glasses [192], void structure in ceramics [2], and structural correlations in liquids [184], to name but a few besides the plethora of biological studies (which are well discussed in other work [96, 176]).
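The effect of the squared contrast can be illustrated with a small worked example. The sketch below uses hypothetical electron densities (not values from this review) and compares only the squared contrast of two minority phases against the matrix; it deliberately ignores volume fractions and morphology, which also affect the scattering contribution.

```python
def squared_contrasts(densities, matrix):
    """Squared electron-density contrast of each phase relative to the matrix phase."""
    rho_m = densities[matrix]
    return {
        name: (rho - rho_m) ** 2
        for name, rho in densities.items()
        if name != matrix
    }

# Hypothetical electron densities in electrons per cubic nanometre (illustrative only).
densities = {"matrix": 330.0, "light_phase": 350.0, "heavy_phase": 930.0}

contrast = squared_contrasts(densities, "matrix")
ratio = contrast["heavy_phase"] / contrast["light_phase"]
print(f"heavy/light squared-contrast ratio: {ratio:.0f}")
```

With these assumed numbers, the heavy phase outweighs the light phase by a factor of several hundred in squared contrast, which is the situation in which treating the sample as a two-phase system becomes reasonable.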

Small-angle scattering thus has a wide field of applicability in systems with only one or two phases. When the number of phases in the sample is increased to three, the complexity increases dramatically, drastically reducing the range of applications [180]. Some existing examples are studies on the extraction of hydrocarbons from coal [28], absorption studies on carbon fibres [79] and determination of closed versus open pores in geopolymers [112]¹. For multiphase systems straightforward SAXS is rarely attempted, though some groundwork for such applications has recently been laid [181]. Instead, element-specific techniques such as anomalous SAXS (ASAXS) [192, 180] or combinations of SAXS and SANS [129] are used to extract element-specific information.

One additional drawback of SAXS, besides its preference for two-phase systems, is the ambiguity of the resulting data. As in common, straightforward SAXS measurements only the scattering intensity is collected (and not the phase of the photons), critical information is lost which prevents the full retrieval of the original structure (the 'phase problem'). As concisely explained by Shull and Roess [165]: 'Basically it is the distribution of electron density which produces the scattering, and therefore nothing more than this distribution, if that much, can be obtained without ambiguity from the x-ray data'. This means that a multitude of solutions may be equally valid for a particular set of collected intensities which may only be resolved by obtaining structural information from other techniques such as transmission electron microscopy (TEM) [197] or atom probe (AP) [76, 77]. This has drastic effects on the retrievable information.
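The loss of phase information can be made concrete with a minimal sketch. It assumes a hypothetical one-dimensional electron density profile on a small periodic grid (an illustration, not data from this review) and uses a plain discrete Fourier transform. A profile and its mirror image are different structures with different complex amplitudes, yet their intensities are identical, so the intensity alone cannot tell them apart.

```python
import cmath

def dft(values):
    """Plain discrete Fourier transform of a real-valued sequence."""
    n = len(values)
    return [
        sum(v * cmath.exp(-2j * cmath.pi * k * x / n) for x, v in enumerate(values))
        for k in range(n)
    ]

def intensity(values):
    """Scattered intensity: squared modulus of the Fourier amplitude."""
    return [abs(a) ** 2 for a in dft(values)]

# Hypothetical, deliberately asymmetric 1D electron density (arbitrary units).
rho = [0.0, 1.0, 3.0, 0.5, 0.0, 0.0, 2.0, 0.0]
rho_mirrored = rho[::-1]  # a different structure: the mirror image

# The complex amplitudes differ because their phases are not the same ...
amplitudes_differ = any(
    abs(a - b) > 1e-9 for a, b in zip(dft(rho), dft(rho_mirrored))
)
# ... but the intensities agree to within rounding.
intensities_match = all(
    abs(a - b) < 1e-9 for a, b in zip(intensity(rho), intensity(rho_mirrored))
)

print(amplitudes_differ, intensities_match)
```

The mirror image is only the simplest example of such an ambiguity; it is shown here because it can be verified in a few lines.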

In particular, of the three most-wanted morphological aspects, (1) shape, (2) polydispersity, and (3) packing, two must be known or assumed to obtain information on the third [63, 51, 137]². This can be illustrated with a few examples. By making a monodisperse assumption about the particle size distribution and assuming infinite dilution (i.e. no packing effects), the possible particle shapes become limited and can be extracted by low-resolution molecule shape resolving programs [177]. Alternatively, knowledge of the particle size distribution and particle shape can result in a solution for the arrangement of the particles in space, as applied in structure resolving programs [187, 147]. Lastly, by making a low-density packing assumption and given a known particle shape (from TEM), a unique particle size distribution remains [111, 133, 132, 150, 158].

Despite these drawbacks, many practical applications have confirmed the validity of such small-angle scattering-derived information. For example, literature shows good agreement between TEM and SAXS analyses of gold nanoparticles [119], krypton bubbles in copper [139], commercially available silica sphere dispersions [64], coated silica particles [32, 136], zeolite precursor particles [33], spherical precipitates in Ni-alloys [162], and the diameter of rod-like precipitates in MgZn alloys [150], to name but a few.

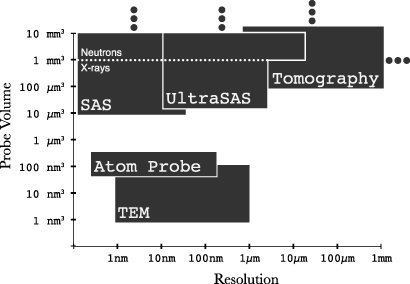

Small-angle x-ray scattering thus needs to be combined with supporting techniques (such as TEM, AP or porosimetry) and is best performed on samples with two main contrasting phases. When these conditions are met, however, it will provide information on morphological features ranging from the sub-nanometre region to several microns. This information is valid for the entire irradiated volume of sample, which can be tuned from cubic micrometres to cubic millimetres and beyond (figure 2). Furthermore, it can quantify the structural details of samples that are more challenging to quantify using electron microscopy, such as structures of glasses, fractal structures and numerous in situ studies, as well as volume fraction and size distribution studies.

Figure 2. Typical size range of distinguishable nanostructural features (horizontal axis) and sampling volume (vertical axis) of various volumetric techniques: transmission electron microscopy (TEM), atom probe (AP), tomography and small-angle and ultra-small-angle scattering techniques (SAS/ultraSAS). Dots indicate straightforward extensibility in the indicated direction.

1.2. The push for better data

From the inception of SAXS around the 1930s, significant effort was expended on improving the data obtained from the instruments, as it became clear to the early researchers that what you get out of it depends on what you put into it (i.e. that the quality of the results depends directly on the quality of the data collected). A good overview of the early efforts is given by Bolduan and Bear [15]. In particular, advances in collimation led to the widespread use of three collimators to reduce background scattering [15, 198], focusing and monochromatization crystals (and even practical point-focusing monochromators [48, 49, 163, 56]), high-intensity x-ray sources and total reflective mirrors. These early developments have led to near-universal adoption of all of these elements in subsequent instruments to improve the flux and signal-to-noise ratio.

X-ray sources in particular have increased drastically in brightness, leading to a similar increase in photon flux at the sample position for many small-angle scattering instruments. Initially, photon fluxes from laboratory sources were on the order of 10³ to 10⁴ photons s⁻¹ (at the SAXS instrument sample position, estimated using Fankuchen [49]). This has increased to the current flux from microsource tubes and rotating anode generators of about 10⁷ photons s⁻¹, useful for most common x-ray scattering experiments. For monitoring of dynamic processes, position-resolved, or SAXS tomography experiments where higher fluxes are required, synchrotron-based instruments can deliver around 10¹¹–10¹³ photons s⁻¹ to the sample environment. On specialized beamlines such as BL19LXU at the SPring-8 synchrotron, fluxes of 10¹⁴ photons s⁻¹ can be obtained. X-ray lasers such as SACLA in Japan, the European XFEL and the LCLS in the US provide very intense pulses of x-rays, but the total flux is typically only about 10¹¹ photons s⁻¹.

The thus obtained reduction of parasitic scattering and increase in flux was further exploited by the advent of new detection systems. The first SAXS instruments employed step-scanning Geiger counters [87] or photographic film (with a notable instrument even using three photographic films simultaneously [72] so that sufficient information could be collected to measure in absolute units [71]), which were rather laborious and time-consuming detection solutions. The photographic films in particular had a very nonlinear response to the incident intensity, necessitating complex corrections [23]. The advent of image plates [29] and 2D gas-filled wire detectors [57] mostly replaced the prior solutions, though image plates have a low time resolution (given the need to read and erase them), and the 2D gas-filled wire detectors suffer from a low spatial resolution due to a considerable point-spread function [108]. Charge coupled device (CCD) detectors enjoy a modicum of success, though they suffer from reduced sensitivity alongside a slew of other issues [7]. A costly but overall relatively problem-free detector came about with the development of the direct-detection photon counting detector systems such as the linear position sensitive MYTHEN detector [156], the 2D PILATUS detector [44], its upcoming successor, the EIGER detector [88], and the Medipix and PIXcel detectors [19]. The required corrections for these detectors will be discussed in section 3.3.

1.3. The next steps

A typical small-angle measurement currently consists of three steps: a rather straightforward data collection step, a data correction step to isolate the scattering signal from sample and instrumental distortions, and an analysis step. While several works exist that detail the measurement procedure as well as the analysis [170], comprehensive reviews of all possible data correction steps are less easy to find. This work therefore discusses the data collection and in particular highlights the possible data correction steps. After the data correction steps, a corrected scattering pattern of the highest of standards is obtained, which can be quite valuable. Good quality data and a good understanding of their accuracy and information content limitations greatly facilitate the process of data analysis and therefore form the basis of any sound structural insights.

2. Data collection

2.1. The importance of good data

At the core of a good small-angle scattering methodology lies the collection of reliable, consistent data with good estimates for the data uncertainty. Once high-quality data have been collected for a particular sample, they can forever be subjected to a variety of analyses. The data collected in the timespan of several days, during sample measurements at synchrotrons in particular, are often subjected to analysis (many) months after the measurement. Ensuring that the collected scattering pattern is an accurate representation of the actual scattering, therefore, is of the utmost importance in any small-angle scattering methodology.

It almost does not need mentioning that, conversely, poorly collected data should be shunned. Such data will confuse at best, and at worst lead to wrong conclusions, which could lead to disaster. Poorly collected small-angle scattering data have little to no information content, and likely consist mostly of background and parasitic scattering. In order to aid the novice researcher in collecting sufficient (and the right) information from a SAXS measurement, a data collection checklist is provided in the appendix.

2.2. Instrumentation

While in the past many instruments were designed and built in-house, nowadays many good instruments can be obtained from a large variety of instrument manufacturers. Given the current ease of obtaining money for a complete instrument rather than instrument development, and the drastic reduction in time required between planning and operation, the extra cost involved may in many cases be offset by the benefits. These instruments come in a variety of flavours and colours, but can essentially be divided into three main classes: (1) pinhole-collimated instruments, (2) slit-collimated instruments, and (3) Bonse–Hart instruments relying on multi-bounce crystals as angle selectors³.

2.2.1. Pinhole-collimated instruments.

The first of these three, pinhole-collimated instruments (schematically shown in figure 3) have become very popular due to their flexibility in terms of samples and easy availability of data reduction and analysis procedures. While initially eschewed for slit-collimated instruments due to the drastically higher primary beam intensity of the latter, improvements in point-source x-ray generators as well as 2D focusing optics have reduced the weight of this argument somewhat. This class of instruments also dominates the small-angle scattering field at synchrotrons as well as neutron sources due to their aforementioned flexibility.

Figure 3. The required components for a small-angle scattering experiment.

These instruments typically consist of a point-based x-ray source followed by x-ray optics. These optics are used either to parallelize the photons emanating from the source, or to focus the x-rays to a spot on the detector or sample. After the x-ray optics, the beam is then further collimated using three or more collimators, made from either round pinholes or sets of slit blades, separated by tens of centimetres to several metres (a particular effect of the collimation on beam properties is given in section 2.2.4). While the third collimator was required to remove slit or pinhole scattering from the second collimator [15, 196, 138], the recent development of single-crystalline 'scatterless' slits removes the need for the third collimator [58, 109].

There are two main instrument variants in circulation as to what happens after the collimation section. One type of instrument ends the in-vacuum collimation section with an x-ray transparent window, allowing for an in-air sample placement and environment before entering another x-ray transparent window delimiting the vacuum section to the detector (this sample-to-detector vacuum section is also known as the 'flight tube'). As this introduction of two x-ray transparent windows and an air path generates a non-negligible amount of small-angle scattering background, it does not lend itself well to samples with low scattering power [43]. The second instrument variant, therefore, consists of a vacuum sample chamber (and often a vacuum valve which can be closed to maintain the vacuum in the flight tube during the sample change procedure), and thus allows an uninterrupted flightpath from collimation through the sample into the flight tube. While this generates the least unwanted scattering, it does add restrictions to the sample and sample environments that can be put in place [138].

At the end of the flight tube sits the in-vacuum beamstop, whose purpose is to prevent the transmitted beam from damaging the detector or causing unwanted parasitic scattering. It can be one of three types. The simplest is a normal beamstop, which blocks all of the transmitted beam. It is useful in many cases, however, to have an estimate for the amount of radiation flux present in the transmitted beam. To this end, the beamstop can be replaced or augmented with a small PIN diode, which measures the flux directly (albeit on an arbitrary scale), or the beamstop can be made 'semitransparent', meaning that it passes a heavily attenuated amount of radiation which subsequently falls onto the detector. Either of the two latter options can benefit the accuracy of the data reduction step, leading to more accurate data and therefore more accurate results.

Finally, the flight tube exits in a window followed (almost) immediately by the detector. For detectors with a large detecting area, this exit window (and the flight tube exit section) must be engineered to be strong and large, sometimes leading to visible parasitic scattering from the window material. It is therefore recommended to keep the detector small, allowing for a small and modular flight tube with very few exit window issues. Alternatively, some very modern detectors can also work in vacuum, which removes this last (small) source of parasitic scattering and allows for step-less translation of the detector and beamstop within this vacuum, drastically increasing the flexibility in angular measurement range.

One alternative to this type of instrument was the 'Huxley–Holmes' camera which contained two separate optical components for monochromatization and focusing, to achieve a very low background [202]. While this instrument is performing well, the authors currently recommend going for a more common configuration instead consisting of focusing optics followed by scatterless slits [201].

2.2.2. Slit-collimated instruments.

A second type of instrument exists which is much more compact than the pinhole-collimated systems, is less expensive and illuminates a larger amount of sample to collect more scattering. This type of instrument is often referred to as a 'Kratky' or 'block-collimated' camera, perhaps best explained by Kratky [103] and Glatter and Kratky [63]. This camera is commonly built on an x-ray source emitting a beam with a line-shaped cross-section, and collimates the x-ray beam using rectangular blocks of metal⁴. While this instrument is sometimes referred to as an ultra-small-angle scattering instrument, it is typically used as a normal small-angle scattering instrument.

The line-shaped cross-section of the x-ray beam does bring with it a major drawback, in that the collected scattering pattern is substantially different from the pattern one would obtain from a pinhole-collimated instrument, and therefore needs a modified data correction procedure. Effectively, the scattering pattern is distorted or blurred due to a superposition of intensity contributions from various scattering points along the line-shaped beam. While the collected 'slit-smeared' scattering patterns can be subjected to a numerical correction to compensate for this smearing effect, such desmearing processes in the best case merely amplify the noise in the system and in the worst case introduce artefacts which could be mistaken for real features [188]. This desmearing procedure will be discussed in more detail in section 3.4.9. Furthermore, analysis of samples containing an anisotropic structure becomes more tedious, leaving the instrument most suited to isotropically scattering samples.

There are a number of instruments preceding the block-collimated camera, which employed a line-shaped x-ray beam collimated with a series of slits [149, 198, 72]. While these formed the basis of the first SAXS instruments, and are by definition slit-collimated instruments, they are no longer in widespread use.

2.2.3. Bonse–Hart instruments.

A third type of instrument is one particularly suitable for ultra-small-angle scattering purposes (for the analysis of larger structures typically from several nanometres to several microns), and is known as the 'Bonse–Hart' camera [17]. These instruments utilize the high angular selectivity of crystalline reflections to single out a very narrow band of scattering angles for observation, i.e. using the crystals as angle selectors both for collimation- as well as analysis purposes. While the idea of using crystalline reflections was not new [49, 149], the advantage of the implementation by Bonse and Hart [17] was the use of multiple reflections in channel-cut crystals to improve the off-angle signal rejection in a straightforward manner [26].

The incident beam is collimated to a highly parallel beam through multiple crystalline reflections rejecting all but the angles in reflection condition. The sample is placed into this parallel beam, where small-angle scattering occurs as the beam passes through the sample. A second crystal (a.k.a. 'analyser crystal') is then used to pick out a single angular band of the scattered radiation. Through rotation of the analyser crystal, the scattered intensity at various angles can be evaluated with an extremely high angular precision or resolution. A few standalone instruments have been constructed on synchrotrons [26, 40, 84, 81], and several more have been built as complementary instruments around laboratory x-ray sources (tube as well as rotating anode sources) [17, 65, 106, 26].

The instrument angular resolution is defined mostly by the rocking curve of the crystalline reflection, also known as the 'Darwin width', which is the angular bandpass window of the crystalline reflection [5, 82]. While a large variety of crystalline materials can be used in the instrument, the channel-cut crystals are usually made from either silicon or germanium due to their high degree of crystalline perfection over large sizes [5]. These crystals have Darwin widths (FWHM) for the common (111) and (220) x-ray reflections of about 0.002°, thus defining the q-resolution for most such instruments to be about 0.001 nm⁻¹ (where the multiple reflections do not change the Darwin width, but improve the off-reflection rejection) [26, 178, 40, 65, 106]. When a higher resolution is required, for example to measure larger structures, the combination of high-energy and higher-order crystalline reflections can lead to a ten-fold increase in resolution [82]. Neutrons can also be used instead of x-rays, as the Darwin width for a neutron reflection is about 0.105 times that of the x-ray counterpart, which can therefore lead to a similar increase in resolution [5].
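The link between an angular acceptance window and a q-resolution can be checked with a short calculation. The sketch below assumes the usual definition q = 4π sin(θ)/λ, with 2θ the scattering angle, Cu Kα radiation (about 0.154 nm) and an angular window of 0.002°; these values are assumptions chosen for illustration.

```python
import math

def q_from_scattering_angle(two_theta_deg, wavelength_nm):
    """Scattering vector magnitude q = 4*pi*sin(theta)/lambda, in nm^-1."""
    theta_rad = math.radians(two_theta_deg) / 2.0
    return 4.0 * math.pi * math.sin(theta_rad) / wavelength_nm

# Assumed values for illustration only.
wavelength_nm = 0.154        # Cu K-alpha, approximately
angular_width_deg = 0.002    # assumed angular acceptance window

q_resolution = q_from_scattering_angle(angular_width_deg, wavelength_nm)
print(f"q resolution ~ {q_resolution:.4f} nm^-1")
```

Under these assumptions the result is on the order of 0.001 nm⁻¹. Because q scales almost linearly with angle in this regime, a ten times narrower window gives a ten times finer q-resolution.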

As the channel-cut crystals only collimate in one direction, these instruments suffer from a similar slit-smearing effect as the Kratky-type instruments discussed in section 2.2.2. Desmearing of the data is therefore required, unless effort and intensity are expended to collimate the beam in the perpendicular direction as well [105, 65]. An additional drawback to these instruments is the requirement for a step-scanning evaluation of the scattering curve⁵, which increases measurement times considerably. Due to the fast intensity falloff at higher angles, and the extremely narrow angular acceptance window of the analyser crystal, this instrument performs best at ultra-small angles but has much reduced efficiency at larger angles. These properties render this type of instrument a useful addition to existing SAXS instrumentation, but it is less frequently encountered as a standalone instrument. Given the particulars of the data, Bonse–Hart data correction may require additional consideration (e.g. for the determination of the sample transmission factor and the effects of the rocking curve on the data) [26, 178, 203].

While the comparison between a Kratky camera and a Bonse–Hart camera initially seemed to be in favour of the Kratky camera [104], it gradually became clear that both instruments have their place in the lab. For small-angle scattering measurements on weakly scattering systems at common small angles (i.e. 0.1 ≤ q (nm⁻¹) ≤ 3), a Kratky camera performs very well, while for measurements to very small angles (i.e. below q (nm⁻¹) ≈ 0.1) the Bonse–Hart approach would be the preferred instrument [37, 26].

2.2.4. A note on collimation and coherence.

In typical scattering measurements, only a fraction of the volume is irradiated with coherent radiation (i.e. with in-phase electromagnetic fields), therefore only that fraction of the irradiated sample volume contributes to the scattered intensity [189]. In other words, the irradiated sample volume typically contains a multitude of so-called 'coherence volumes', each of which contributes to the scattering pattern. As there is no inter-volume coherence, it is the sum of the scattering intensities (as opposed to the sum of the amplitudes) from each of these volumes that is detected [110].

These coherence volumes are defined by two components, the longitudinal component (parallel to the beam direction) and the transversal component (perpendicular to the beam direction, see figure 4). The longitudinal component is dependent on the degree of monochromaticity of the radiation, and is large for monochromatic radiation and quite small for polychromatic beams [110]. The transversal dimension ζt of the coherence volume is defined through the collimation, in particular through the dimensions of the beam-defining collimator and its distance to the sample, and can be estimated as [189]:

$$\zeta_t = \frac{\lambda l}{w}$$

where λ is the wavelength of the radiation, l the distance between the beam-defining collimator and the sample, and w the size of the collimator opening (ζt can be calculated for each direction for collimators with nonuniform openings).
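As a worked illustration, the sketch below evaluates a transversal coherence length under the assumption that the estimate has the form ζt = λl/w, which matches the symbols defined here and the inverse dependence on slit size described in figure 4. The wavelength, distance and opening are hypothetical example values, not taken from this review.

```python
def transversal_coherence_length(wavelength_m, distance_m, opening_m):
    """Estimate zeta_t = wavelength * distance / opening (assumed form), in metres."""
    if opening_m <= 0:
        raise ValueError("collimator opening must be positive")
    return wavelength_m * distance_m / opening_m

# Hypothetical example values (assumptions for illustration only).
wavelength = 0.154e-9   # Cu K-alpha wavelength, about 0.154 nm
distance = 1.0          # beam-defining collimator to sample: 1 m
opening = 0.5e-3        # collimator opening: 0.5 mm

zeta_t = transversal_coherence_length(wavelength, distance, opening)
print(f"zeta_t = {zeta_t * 1e9:.0f} nm")

# Doubling the opening halves the estimated coherence length.
zeta_t_wide = transversal_coherence_length(wavelength, distance, 2 * opening)
```

For these assumed values the estimate comes out at a few hundred nanometres, which gives a feel for the size of scattering object at which the caveats of this section start to matter.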

Figure 4. Coherence volume after a slit. The larger the slit, the smaller the transversal coherence length.

The estimation of the transversal coherence length is an important check for experiments. Scattering objects with dimensions close to or larger than the transversal coherence length may not contribute significantly to the small-angle scattering as the coherence volume will be within a uniform region of material (an effect seen amongst others by Rosalie and Pauw [150]). This effect can be exploited to investigate the actual transversal coherence length in an instrument as shown by Gibaud et al [61]. For a more detailed treatment of coherence (i.e. when it is approaching significance or what happens when a single coherence volume encompasses the sample), the reader is referred to the aforementioned literature.

3. Data reduction and correction

3.1. What corrections?

While a scattering pattern may have been recorded on the best available instrumentation, there are nonetheless some corrections to be done. The corrections must correct (as much as possible) for any data distortions introduced by the x-ray detection system. Further small corrections consist of spherical corrections, polarization correction and sample self-absorption correction. More significant corrections are corrections for background, dark current or natural background, deadtime correction and scaling to absolute units. Many of these steps also need to be done in an appropriate order. These corrections will be discussed in this section, accompanied by magnitude estimates and error propagation methods where appropriate. Note that the data corrections and correction sequences provided here are given with pinhole-collimated instruments in mind. While most corrections translate to the slit-collimated- and Bonse–Hart instrument types as well, data correction for these two may require extra care. In particular for Bonse Hart-specific corrections and considerations, the reader is referred to Zhang and Ilavsky [203] and Chu et al [26].

The goal of all these corrections is to recover the true scattering cross-section (which is often still called the 'intensity' or 'absolute intensity' colloquially) as well as an estimate for its relative σr and absolute uncertainty σa for all datapoints j: Itrue,j ± σr,j ± σa (though more advanced error analysis is possible [74]). Note that the absolute uncertainty is independent of the datapoints as it is the uncertainty estimate for the total scaling of the scattering cross-section.

It is the common consensus in the small-angle scattering community that ensuring the correct implementation of all these data corrections rests on the shoulders of the instrument manufacturer, the beam line responsible (in case of synchrotrons) or the instrument responsible. In other words, the beginning small-angle scatterer should never have to deal with these, and should receive corrected scattering cross-sections with uncertainties. The reasoning behind this is that in order to do most of these corrections a level of instrument understanding and characterization is needed which cannot be expected of the casual user. In reality, however, the user can be left to their own devices and an idea on the required steps and sequence may be of some help. Several data processing packages are available to aid the user with the most pressing data correction steps [13, 80, 95, 93] (not an exhaustive list).

The purpose of this section is to introduce every possible correction, and provide a modular toolbox for constructing data correction sequences. Some corrections are 'turtles all the way down', increasing in complexity the more they are investigated. For these, only the top 'turtles' are given, with enough references to fine-tune the details as required. Finally, example data correction schemes are given of increasing complexity to accommodate the occasional experimentalist, the professional and the SAXS-o-philic perfectionist.

3.2. Data reduction steps and sequence

The required data steps are indicated in table 1, ordered by their approximate position in the data reduction and correction sequence. Where applicable, the paragraph in which the data correction in question is discussed is indicated as well. Convenient two-letter abbreviations have also been provided. While the table includes a fair few corrections and is suitable to a variety of detectors, it should not be considered universal as some detectors are in need of more corrections, or application of the corrections in a slightly different order.
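To give a feel for how such a sequence can be chained, the sketch below applies four of the simpler steps from table 1 (TI, DC, FL and TR) in the listed order. It is a simplified illustration, not the procedure of any particular package: the function name and all input values are hypothetical, the relative uncertainty assumes Poisson counting statistics on the raw counts, and the dark-current rate, flux and transmission are treated as exact.

```python
import math

def normalise(counts, time_s, dark_rate, flux, transmission):
    """Apply steps TI, DC, FL and TR (in that order) to raw detector counts.

    Returns a list of (intensity, sigma_r) pairs. sigma_r assumes Poisson
    statistics (sqrt(N)) on the raw counts and exact scaling factors;
    both are simplifying assumptions.
    """
    scale = 1.0 / (flux * transmission)
    result = []
    for n in counts:
        rate = n / time_s                    # TI: normalise by measurement time
        sigma_rate = math.sqrt(n) / time_s   # Poisson uncertainty, scaled alike
        rate -= dark_rate                    # DC: subtract dark-current rate
        result.append((rate * scale, sigma_rate * scale))  # FL and TR
    return result

# Hypothetical example: three pixels measured for 100 s.
corrected = normalise(
    counts=[10000, 2500, 400],
    time_s=100.0,
    dark_rate=0.5,       # dark-current counts per second (already time-normalised)
    flux=1.0e7,          # incident flux, arbitrary units
    transmission=0.5,    # sample transmission factor
)
for value, sigma in corrected:
    print(f"{value:.3e} +/- {sigma:.1e}")
```

Keeping each step as a separate, clearly labelled operation makes it straightforward to insert further corrections, or to reorder them for a detector that requires a different sequence.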

Table 1. The data reduction and correction steps in approximate order of application. The abbreviations in the 'Abbrv.'-column are listed in the appendix. The columns 'σr' and 'σa' indicate whether a correction affects either the relative uncertainty and/or the absolute uncertainty (if so, column marked with ∘). Also indicated are four types of detectors (CCD: a typical CCD detector with tapered fibres or image intensifier, IP: image plate, DD: direct-detection systems such as the hybrid pixel detectors, and WD: wire detectors and similar), whose columns indicate the severity of the effect of each correction for that detector, where '+' indicates the correction has to be applied, '−' indicates a minor correction that can be ignored. Complexity (Cx) column indicates the approximate complexity of the correction implementation, with 0 being easy, and 3 complicated.

| Step no. | Abbrv. | Description | Section | σr | σa | CCD | IP | DD | WD | Cx |
|---|---|---|---|---|---|---|---|---|---|---|
| (1) | DS | Data read-in corrections for manufacturer's data storage peculiarities | 3.3.1 | | | + | + | + | + | 0–3 |
| (2) | DZ | Dezingering: removing high-energy radiation streaks | 3.3.2 | | | + | − | − | − | 2 |
| (3) | FF | Detector flat-field correction | 3.3.3 | ∘ | | + | − | + | + | 1 |
| (4) | DT | Detector deadtime correction (photon counting detectors) | 3.3.4 | ∘ | | − | − | − | + | 2 |
| (5) | GA | Detector nonlinear response (gamma) correction | 3.3.5 | ∘ | | + | − | − | + | 1 |
| (6) | TI | Normalize by measurement time | 3.4.3 | ∘ | ∘ | + | + | + | + | 0 |
| (7) | DC | Subtraction of natural background or dark current measurement (itself subjected, when applicable, to steps 1–6) | 3.3.6 | | | + | + | + | + | 0 |
| (8) | FL | Normalize by incident flux | 3.4.2 | ∘ | ∘ | + | + | + | + | 0 |
| (9) | TR | Normalize by transmission | 3.4.2 | ∘ | ∘ | + | + | + | + | 0 |
| (10) | GD | Detector geometric distortion correction | 3.3.7 | ∘ | | + | ± | − | + | 3 |
| (11) | SP | Spherical distortion correction (area dilation) | 3.4.6 | ∘ | | − | − | − | − | 1 |
| (12) | PO | Correct for polarization (even for unpolarized beams) | 3.4.1 | ∘ | | − | − | − | − | 1 |
| (13) | SA | Correct for sample self-absorption | 3.4.7 | ∘ | | − | − | − | − | 1–3 |
| (14) | BG | Subtract background (itself subjected to steps 1–11) | 3.4.5 | ∘ | ∘ | + | + | + | + | 0 |
| (15) | TH | Normalize by sample thickness | 3.4.3 | | ∘ | + | + | + | + | 0 |
| (16) | AU | Scale to absolute units | 3.4.4 | | ∘ | + | + | + | + | 1 |
| (17) | MK | Mask dead and/or shadowed pixels | 3.3.8 | | | + | + | + | + | 0 |
| (18) | MS | Correct for multiple scatteringᵃ | 3.4.8 | ∘ | | − | − | − | − | 3 |
| (19) | SM | Correct for instrumental smearing effectsᵃ | 3.4.9 | ∘ | | − | − | − | − | 3 |
| (20) | – | Radial or azimuthal averaging | 3.4.10 | ∘ | | | | | | 0 |

ᵃ These are more robustly dealt with by smearing the data fitting model rather than desmearing the data.

3.3. Detector corrections

In order to detect x-rays, a wide variety of detectors have become available. Depending on the detection method, imperfections and physical limitations may cause a deviation of the detected signal from the true signal (the number of scattered photons). In a perfect case, you would measure the same (true) scattering signal irrespective of the type of detector used.

Real detectors, however, have imperfections, tradeoffs and drawbacks. Some of these detectors and their individual drawbacks will be discussed here, after elaboration on the possible distortions. The distortions can generally be divided into two categories, intensity distortions and geometry distortions. Intensity distortions are deviations in the amount of measured intensity, and geometry distortions are deviations in the location of the detected intensity. First and foremost, there are data read-in corrections to consider.

3.3.1. Data read-in corrections: DS.

The first step for any data correction is to read in the information from detectors. While for point- and linear position sensitive detectors (PSDs), the choice has almost universally been made for the convenience of ASCII data, for image detectors this has not been so straightforward.

Therefore, whenever a detector system is bought, particular attention needs to be paid to the data format of the images one obtains. For some reason, quite a few detector manufacturers worldwide prefer their own image data formats over more standard image formats (a list of some of these formats can be found in the documentation accompanying the NIKA package [80]). This tendency hinders data preservation efforts (though one should preserve corrected and reduced data rather than the original data, a point discussed in section 3.6) and sometimes causes read-in issues of the data in data reduction packages. Two cases in particular have come to the attention of the author, the Rigaku data format and the Bruker data format, which will be used to illustrate the issue.

The Rigaku data format has all the characteristics of a 16-bit TIFF image, and will actually load as such. Without going into details, 16 bits (per image value) give 2¹⁶ = 65 536 distinct values, i.e. a maximum per-pixel value of 65 535. This would be insufficient for storing the number of photons obtained, for example, from the aforementioned PILATUS hybrid pixel detector, which therefore uses a 32-bit image format. The Rigaku format treats such count numbers slightly differently in order to store them in 16 bits: 15 bits behave like normal bits, counting up to 2¹⁵ − 1 (32 767), while the 16th bit acts not as a standard bit but as a 'multiply-by-32' flag⁶. While this is documented [148], the danger lies in the compatibility of this data format with standard binary data: the intensities will be wrong, but the scientist ignorant of this issue will not immediately notice that something is awry.
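To illustrate why such a flag bit is easy to overlook, the following minimal Python sketch decodes a 16-bit value under the layout described above. The exact bit assignment (flag in the most significant bit, count in the lower 15 bits) and the function names are assumptions made for illustration; the manufacturer's documentation [148] remains the authority on the real format.

```python
def decode_flagged_uint16(raw):
    """Decode one 16-bit pixel value with an assumed 'multiply-by-32' flag.

    Assumed layout (illustrative only): bits 0-14 hold the stored number,
    bit 15 signals that the stored number must be multiplied by 32.
    """
    value = raw & 0x7FFF      # lower 15 bits: the stored number
    if raw & 0x8000:          # 16th bit set: scale the stored number
        return value * 32
    return value


def decode_image(raw_pixels):
    """Decode a flat list of raw 16-bit pixel values."""
    return [decode_flagged_uint16(p) for p in raw_pixels]


# A pixel storing 100 with the flag set represents 3200 counts, whereas a
# naive reading as a plain unsigned 16-bit integer would report 32868.
raw = [100, 0x7FFF, 0x8000 | 100]
decoded = decode_image(raw)
```

A reader that ignores the flag returns plausible-looking numbers for weakly exposed pixels and wrong numbers only for strongly exposed ones, which is why the error can go unnoticed.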

The Bruker data format, on the other hand, is unlikely to be compatible with any standard image reading routines, and authoritative information on the image format is not very easy to obtain. Some of their image formats appear to use an 8-bit image format (i.e. with a per-pixel maximum value of 255), with a subsequent 'overflow' list detailing pixels that have exceeded this 8-bit limit. Implementation and read-in of these data is therefore cumbersome, perhaps even unnecessarily complicated given the alternatives.

In the best case, detector systems adhere to known and common image formats [44]. Active development is ongoing for supporting detector data of these and more complicated multi-chip detectors and instruments in the NeXus format [94, 99]. The NeXus format itself is based on the very versatile, portable, well-documented and open HDF5 data storage format [53]. Such standards will hopefully resolve some of the challenges related to data ingestion into data reduction procedures.

3.3.2. Dezingering: DZ.

Spurious signals can be detected for a range of reasons: from external sources such as cosmic rays, nearby x-ray sources or atmospheric radioactive decay, or from internal sources such as the employed electronics. These often appear as spikes or streaks in the detected signal, varying in location and amount from image to image. Integrating (e.g. CCD) detectors without energy discrimination are most heavily affected by these phenomena, whilst photon counting, energy discriminating detectors often only show a single extra count (or streak of 1 extra count) upon event occurrence.

Given their potentially high values, zingers can significantly affect the recorded signal, and should be removed in CCD-based detectors. The trick for their detection and subsequent removal is to record multiple images per measurement and mask all statistically significant differences. A suitable computational procedure is described by Barna et al [7] and Nielsen et al [128].
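The idea of recording several frames and rejecting statistically significant outliers can be sketched as follows. This is a simplified illustration and not the procedure of Barna et al [7] or Nielsen et al [128]: it assumes Poisson-like noise, uses the per-pixel median as the reference, and the function name and the threshold `n_sigma` are hypothetical choices.

```python
import math
import statistics


def dezinger(frames, n_sigma=5.0):
    """Average repeated exposures while rejecting zinger-affected values.

    frames: list of exposures, each a flat list of pixel values of equal
    length and equal exposure time. Returns (cleaned_image, n_rejected).
    """
    n_pixels = len(frames[0])
    cleaned = []
    n_rejected = 0
    for j in range(n_pixels):
        values = [frame[j] for frame in frames]
        med = statistics.median(values)
        # Poisson-like estimate of the spread, with a floor of one count
        sigma = math.sqrt(max(med, 1.0))
        # zingers only add signal, so only high outliers are rejected
        good = [v for v in values if v - med <= n_sigma * sigma]
        n_rejected += len(values) - len(good)
        cleaned.append(sum(good) / len(good))
    return cleaned, n_rejected


frames = [[10, 12, 9], [11, 5000, 10], [9, 11, 8]]
image, n_rejected = dezinger(frames)
```

With only two frames the median offers little protection, so three or more exposures per measurement are assumed here.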

3.3.3. Flat-field correction: FF.

No two detection surfaces (pixels) are exactly the same due to manufacturing tolerances, slight damage or differences in the underlying electronics, to name but a few. Therefore, every detector apart from point detectors (i.e. every spatially resolved detector) has to be corrected for interpixel sensitivity, with the notable exception of image plates⁷. These interpixel sensitivity variations can easily be on the order of 15% for some detectors [182]. The correction is straightforward in theory: collect a uniform, high amount of scattering on the detector, assume the per-pixel detector response should be identical for this scattering, and use the relative difference in detected signal between the pixels as a normalization matrix for future measurements. In practice, though, uniformly distributing a large number of photons (of the right energy) on the detector surface can be a challenge.

One solution is to irradiate directly with a low-power x-ray source placed some distance from the detector, as discussed in detail by Barna et al [7]. This solution needs small corrections for area dilation and air absorption, in addition to a few more detector-specific ones, and needs a separate check of the uniformity of the source. The advantage is that it can be tuned to the energy of interest, and that a sufficient number of photons is easily acquired [146, 41, 59].

Alternatively, doped glasses can be used to obtain a flat-field image, as suggested by Moy et al [121]. This has the advantage of reduced complexity in setting up the flat-field measurement, but may suffer from nonuniform images [7] and has a reduced photon flux. Some use the uniform scattering of water as flat-field measurement data, despite water not scattering uniformly at very small angles (though this can be corrected for), and the scattering intensity at larger angles being quite low for obtaining good per-pixel statistics [125]. Similarly, samples with known scattering behaviour can be used for such purposes [59].

Another solution common in laboratory settings is the use of radioactive sources (emitters) which can be easily accommodated in most instruments [126]. The major drawback of that solution is the differences between the emitter energy and the energy used during normal measurements, and a very low detected count rate necessitating impractically long collection times for decent flat-field images. The alternative suggested by Né et al [126] is the image collection during slow and well-controlled scanning of an emitter over the detector surface, with the challenge of achieving a homogeneous exposure.

The alternatives which place the radiation source at the sample location share one further advantage in case of detectors using phosphorescent screens. The advantage is that through placement of the radiating source at the sample location, one simultaneously corrects for the dependency of the response of phosphorescent screens to the direction of incident photons. If this is not done, one might consider correcting for this effect separately [7, 199].

Given these challenges, it is recommended for (time-stable) detectors to obtain flat-field images from the manufacturer, who should be equipped to record these. The corrected intensity Ij,cor for datapoint j can be retrieved from the input intensity Ij using a flat-field image Fj (which can be normalized to 1 to avoid large numbers):

$$I_{j,\mathrm{cor}} = \frac{I_j}{F_j}$$

If there are uncertainties available when performing this step, they will propagate as:
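As an illustration of the flat-field step, the sketch below divides each datapoint by a flat-field value normalized to a mean of 1 and carries the uncertainties along. The propagation shown is ordinary first-order propagation for a quotient of independent quantities; it is an assumption of this sketch and may differ in form from the expression intended above. The function and argument names are hypothetical.

```python
import math


def flatfield_correct(I, F, sigma_I=None, sigma_F=None):
    """Flat-field correct a flat list of intensities.

    I, F: intensities and flat-field values per datapoint.
    sigma_I, sigma_F: optional standard deviations of I and F.
    Returns (I_cor, sigma_cor); sigma_cor is None without sigma_I.
    Pixels with F == 0 must be masked beforehand (division by zero).
    """
    mean_F = sum(F) / len(F)
    F_norm = [f / mean_F for f in F]   # flat field normalized to a mean of 1
    I_cor = [i / f for i, f in zip(I, F_norm)]
    if sigma_I is None:
        return I_cor, None
    if sigma_F is None:
        sigma_F = [0.0] * len(F)       # treat the flat field as exact
    # first-order propagation for I / F, assuming independent uncertainties
    # (the uncertainty of mean_F itself is neglected)
    sigma_cor = [
        math.sqrt((si / f) ** 2 + (i * (sf / mean_F) / f ** 2) ** 2)
        for i, f, si, sf in zip(I, F_norm, sigma_I, sigma_F)
    ]
    return I_cor, sigma_cor


I_cor, sigma_cor = flatfield_correct([100.0, 200.0], [0.5, 1.5],
                                     sigma_I=[10.0, 14.0])
```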

3.3.4. Deadtime correction: DT.

After arrival of a photon on a detection surface or in a detection volume, a certain amount of time is needed for the detector to recover from this event before a second photon can be detected. This time is called the 'dead time': a photon arriving in this timespan will not be detected. More precisely, the electronic pulses generated by the arrival of two near-simultaneous photons will start to overlap, causing either rejection of both photons due to the compound pulse being too high (energy rejection), or the two pulses being counted as one. This is discussed clearly by Laundy and Collins [107].

This correction can be unnecessary for some of the modern hybrid detectors at the count-rates they are commonly subjected to. The PILATUS detector, for example, only shows a >2% deviation from a linear response at an incident photon rate of more than 450 000 photons per pixel per second [101]. Gas-based detectors, especially 1D and 2D wire detectors, very much need this correction.

One aspect of this correction that is of high importance is that when the data uncertainty is calculated based on counting statistics (i.e. Poisson statistics), these uncertainties should be calculated from the detected photons, not from the deadtime-corrected photons. This implies that there is a count rate characteristic for each detector beyond which the data accuracy decreases! This phenomenon is evident from Laundy and Collins [107].

The number of deadtime-corrected counts Ij,cor can be obtained from the detected number of counts Ij collected in time t by numerically finding a solution for [107]:

with

where τ1 is the minimum time difference required between a prior pulse and the current pulse for the current pulse to be recorded correctly. Similarly, τ2 is the minimum arrival time difference required between the current pulse and a subsequent pulse for the current pulse to be recorded correctly. As pulses follow an asymmetric profile like a log-normal function, these two times can be different (for a 1 μs pulse shaping time this can be τ1 = 3.0 μs and τ2 = 2.0 μs [107]).

At this point we can also estimate the uncertainty (standard deviation) σr,j for the corrected counts through [107]:

Interestingly, if τ1 and τ2 are known, the true uncertainty σr,j can be retrieved from the deadtime-corrected values through insertion of equation (4) into (6), which may be of use in detector systems where the deadtime correction is performed by the detector system itself.
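The numerical inversion can be illustrated with a deliberately simplified stand-in model. The sketch below is not the two-constant expression of Laundy and Collins [107]: it assumes a single dead time `tau` and a paralyzable detector, for which the detected rate m relates to the true rate n as m = n exp(−n·tau). The uncertainty is derived from the detected counts, as recommended above, and propagated to first order through the correction. All names are hypothetical.

```python
import math


def deadtime_correct(counts, t, tau):
    """Deadtime-correct detected counts for a simplified paralyzable model.

    counts: detected counts, t: measurement time (s), tau: dead time (s).
    Returns (corrected_counts, sigma_corrected).
    """
    m = counts / t                           # detected count rate
    if m * tau * math.e >= 1.0:
        raise ValueError("detected rate at or above the maximum of this model")
    lo, hi = m, 1.0 / tau                    # true rate lies on the rising branch
    for _ in range(200):                     # bisection on n * exp(-n * tau) = m
        mid = 0.5 * (lo + hi)
        if mid * math.exp(-mid * tau) < m:
            lo = mid
        else:
            hi = mid
    n = 0.5 * (lo + hi)
    # Poisson uncertainty of the *detected* counts, propagated via dm/dn
    slope = math.exp(-n * tau) * (1.0 - n * tau)
    sigma = math.sqrt(counts) / slope
    return n * t, sigma


corrected, sigma = deadtime_correct(1000, 1.0, 1e-6)
```

In this simplified model the slope dm/dn tends to zero as n·tau approaches 1, so the propagated uncertainty grows without bound: a toy version of the statement that beyond a detector-specific count rate the data accuracy decreases.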

3.3.5. Gamma correction: GA.

Most non-photon counting detectors do not necessarily give an output linearly proportional to the incident amount of radiation. This used to be especially severe for films, which required accurate corrections for each film type [23]. For more modern detection systems the effect appears small (i.e. on the order of 1%), but may nevertheless be considered, especially when approaching the limits of the dynamic range [123, 124, 67]. It is relevant for image plates [118, 29, 126, 8] and may be considered for some CCD detectors as well [67]. It may even be relevant for some gas-based photon counting detectors insofar as it is not already accounted for by the deadtime correction [8].

This correction can be applied by characterizing the detector response for various fluxes of incident radiation, for example through attenuating monochromatic radiation using a series of calibrated foils to reduce the incident flux [67]. Simply collecting radiation for a longer time may obfuscate the detector response to incident flux with other time-dependent effects especially for image plates [29], unless this is explicitly taken into account [67]. Furthermore, the energy of the incident radiation has to be identical to the energy used for normal measurements, as the gamma correction can be energy dependent [85].

One alternative solution to circumvent the need for this correction is to determine the range of incident radiation amounts where the detector response is linear, and to stay within that range. For samples which exhibit scattering covering a wider dynamic range than thus supported, attenuators can be devised in the beam path to locally attenuate the signal [125]. Introducing additional elements into the beam path may, however, cause scattering or act as a high-pass energy filter leading to 'radiation hardening', and such modifications should therefore not be applied without thorough considerations of the consequences.

Lastly, while it cannot be considered a true nonlinearity correction, for image plates the measured intensity is also a function of measurement time (i.e. the delay after exposure before measuring) [126]. Internal decay causes a reduction of the measurable signal over time, with a fast decay component (with a half-time on the order of minutes) and a slow decay component (on the order of hours). Effectively, this can even cause intensity variations on the order of several per cent during the read-out of the image plate. A decay time correction should therefore be considered for accurate reproduction of intensity, and such a correction is described amongst others by Hammersley et al [67]. It should be noted that this time decay is likely also dependent on the energy of the used x-rays as it is for protons [16].

This correction is applied if the nonlinear behaviour of the intensity can be expressed as a function of the incident radiation γ(I):

The relative datapoint uncertainty scales similarly:

3.3.6. Darkcurrent and natural background correction: DC.

Two factors add to the detected signal even without the presence of an x-ray beam: the detector 'dark current' and the omnipresent natural radiation. While these are two separate effects, their correction is identical and they can be considered simultaneously. The cause of the dark current signal depends on the detector. Some detector electronics add their own 'pedestal' bias to prevent negative voltages entering the analogue-to-digital converter (ADC) [7, 125], which can be considered a form of dark current. CCD chips may also exhibit a baseline noise, also known as 'read noise'; photomultiplier tubes (PMTs) in image plate systems detect a small leak current without any incident photons; and ion chambers likewise detect a small current without radiation. Natural background radiation furthermore adds a time-proportional level of noise in any detector [126].

The dark current components are homogeneously distributed over the entire detector, and can thus (for statistical purposes) be corrected for by subtraction of a single value from each detected pixel value. This single value is the sum of three dark current components: a time-independent component, a time-dependent component and a flux-dependent component. To elaborate, the time-independent component is the base ('pedestal') level, applicable to detectors based on PMTs and CCDs [39]. Naturally occurring background radiation can be considered part of the time-dependent component, visible in every detector. One important note here is that image plates start collecting natural radiation from the time of their last erasure rather than from the start of the measurement [126]. Some detectors may also show a time-dependent dark current in addition to the natural background [124]. These first two components can easily be determined by evaluating the total detected signal as a function of exposure time without an applied x-ray beam. The last component, the flux-dependent dark current level, is a specific complication encountered in some image-intensifier-based CCD detectors, and requires the simultaneous determination of the dark current signal alongside the measured signal through partial masking of the detection surface with x-ray absorbent material [143, 125].

This can be expressed mathematically as:

where Da is the time-independent component, Db the time-dependent factor times the measurement time t, and Dc the flux-dependent component for those detectors suffering from that particular complication (determined simultaneously with the measurement). Image plates furthermore have a natural decay which means that the time-dependent component may not be truly linear over large timescales. It is therefore best practice to determine the dark current contribution using exposure times similar to the measurement times. For accurate determination of the dark current contribution when measurement times are small, the averaging of multiple exposures on the timescale of the measurement can improve statistics [125].
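A minimal sketch of this correction is given below. It assumes the subtracted value has the form Da + Db·t + Dc, with Db expressed per unit time, and estimates Da and Db by an unweighted straight-line fit to detector-averaged dark levels recorded at several exposure times without beam. The function names and the example numbers are hypothetical, and uncertainty propagation is left out.

```python
def fit_dark_components(times, mean_dark_levels):
    """Straight-line fit of detector-averaged dark level versus exposure time.

    Returns (D_a, D_b): the time-independent level and the rate per unit time.
    """
    n = len(times)
    mean_t = sum(times) / n
    mean_d = sum(mean_dark_levels) / n
    s_tt = sum((t - mean_t) ** 2 for t in times)
    s_td = sum((t - mean_t) * (d - mean_d)
               for t, d in zip(times, mean_dark_levels))
    D_b = s_td / s_tt
    D_a = mean_d - D_b * mean_t
    return D_a, D_b


def subtract_dark(I, t, D_a, D_b, D_c=0.0):
    """Subtract a single dark level from every datapoint measured for time t."""
    dark = D_a + D_b * t + D_c
    return [i - dark for i in I]


# dark exposures of 10 s, 100 s and 1000 s (made-up numbers)
D_a, D_b = fit_dark_components([10.0, 100.0, 1000.0], [5.1, 6.0, 15.0])
I_cor = subtract_dark([100.0, 50.0], t=100.0, D_a=D_a, D_b=D_b)
```

For image plates, where the time dependence may not be linear over long timescales, the fit would be restricted to exposure times close to the measurement time, in line with the recommendation above.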

As the dark current is ideally pixel-independent, Da, Db and Dc can be determined to high precision when averaged over the entire detector. This should render their relative uncertainties σ(D)/D rather small, thus having only a minor effect on the intensity uncertainty. The uncertainty should propagate (assuming Poisson statistics) approximately as:

3.3.7. Geometric distortion: GD.

Among the more complicated detector corrections is that of the geometric distortion, which can be severe for some detectors (in particular for wire detectors and image-intensifier-based CCD detectors), small for others (i.e. <1% for fibre-optically coupled CCD detectors) [124], to non-existent for direct-detection systems. The electronics and design of image intensifiers in CCD cameras and electronics of wire detectors can give rise to pixels being assigned incorrect geometric positions, leading to geometric distortion [7]. Even image plate readers can show this effect due to the read-out mechanics [108], and it therefore seems a necessary correction for all detectors save those based on direct-detection (e.g. the PILATUS detector). In order to put the detected pixels back in their right 'place', i.e. in a location corresponding to the arrival location of the detected photon on the detector surface, a geometric distortion correction must take place.

The most common method for this is to place a mask with regularly spaced holes in front of the detector, which is subsequently irradiated with more-or-less uniform photons originating from the sample position. This then allows for the evaluation of where on the detector the photons are observed versus where the photons actually arrived through the holes in the mask [108, 185].

These corrections only really can take care of smoothly varying distortions, and are ill-suited for corrections of abrupt distortions as those found upon occurrence of discontinuous shears in fibre-optically coupled detectors [7]. Corrections for these distortions must be considered separately [35]. Rather than trying to correct the actual image by, for example, inserting or interpolating pixel values (e.g. [185]), one good way of dealing with these corrections is to determine a coordinate look-up table ('displacement maps') for each pixel. These maps can subsequently be used during the data averaging procedure (see section 3.4.10) to put the detected datapoints in the right bins [92, 93].

Image plates, besides the small geometric distortion mentioned above, also require a specific correction: one that corrects for variance in subsequent placements of image plates. Since it is mechanically challenging to reproducibly place an image plate to within 50 μm (or approximate grain size), every image plate may be slightly offset. The variance in placement for a given image plate placement and read-out procedure (ideally designed to minimize placement variance) can be quantified and evaluated for significance. If necessary, symmetry in the scattering patterns can be exploited to determine the beam centre of every image. A procedure for achieving this is described by Le Flanchec et al [108].

Due to the detector specificity of the required correction and the relatively complex procedure, the methods for correcting image distortions are not reproduced here. Geometric distortions should not affect the datapoint uncertainties.

3.3.8. Masking of incorrect pixels: MK.

In virtually any detection system there will be 'broken' pixels, either pinned to the maximum or minimum value, or simply giving incorrect response to the incident radiation. Additionally, pixels masked by the beamstop or the beamstop holder should be ignored as well. For masking these, an oft used technique is to record a scattering pattern of a strong scatterer, after which a Boolean array can be manually generated, indicating for each pixel whether it should be masked or not. For space-saving purposes, this Boolean array can be reduced to a list of pixel indices to be masked.

This array (or list of pixel indices) can subsequently be used in the averaging procedure to not consider invalid pixels in the procedure. Such masking does not affect the uncertainties.
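The two representations of the mask, and their use during averaging, can be sketched as follows. The data are treated as a flat list of pixel values, and the function names are illustrative only.

```python
def mask_to_indices(mask):
    """Reduce a Boolean mask (True = masked) to a list of masked pixel indices."""
    return [j for j, masked in enumerate(mask) if masked]


def valid_pixels(I, masked_indices):
    """Return (index, value) pairs of the pixels that are not masked."""
    skip = set(masked_indices)    # set membership keeps the lookup fast
    return [(j, v) for j, v in enumerate(I) if j not in skip]


mask = [False, True, False, False, True]
indices = mask_to_indices(mask)
kept = valid_pixels([10, 65535, 12, 11, 0], indices)
```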

3.4. Other corrections

A range of corrections is independent of the type of detector used. These include corrections for sample transmission (closely related to the background subtraction), polarization and area dilation. Also included is the correction (or rather the scaling) from 'intensity' to scattering cross-section, which can later be used to retrieve volume fractions or the number of scatterers to a reasonably good degree (with an expected accuracy σa/I of about 10%).

3.4.1. Polarization correction: PO.

The scattering effect of photons depends on the polarization of the incident radiation and the direction of the scattered radiation [168]. This phenomenon causes a slight reduction in intensity. While this effect is commonly corrected for in wide angle diffraction studies, it is often considered negligible in small-angle scattering data correction [141, 14, 145, 128]. When quantified, the correction amounts to nearly 1% for scattering angles 2θ of about 5°. This correction applies to unpolarized as well as polarized radiation: in the former case the correction is isotropic, and in the latter anisotropic (as shown in figure 5). Depending on the angular range collected, the polarization correction may be considered for a slight increase in accuracy.

The correction factor for 2D detector images is given by Hura et al [78] as:

where ψ is the azimuthal angle on the detector surface (defined here clockwise, 0 at 12 o'clock), 2θ the scattering angle, and Pi the fraction of incident radiation polarized in the horizontal plane (azimuthal angle of 90°)⁸. The correction for unpolarized radiation is achieved when Pi = 0.5; most synchrotron beam lines have Pi ≈ 0.95⁹. As this is a correction between datapoint values, only the relative uncertainty σr,j is affected, and it scales in the same way as the intensity:
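For illustration, a commonly used form of the polarization factor is sketched below, written to match the angle conventions just given (ψ measured from 12 o'clock, horizontal polarization at ψ = 90°). Whether it agrees term for term with the expression of Hura et al [78] is an assumption of this sketch; the function names are hypothetical.

```python
import math


def polarization_factor(psi_deg, two_theta_deg, P_i=0.5):
    """Relative intensity reduction due to polarization for one datapoint.

    psi_deg: azimuthal angle on the detector (0 at 12 o'clock), in degrees.
    two_theta_deg: scattering angle 2-theta, in degrees.
    P_i: fraction of the incident radiation polarized horizontally.
    """
    psi = math.radians(psi_deg)
    s = math.sin(math.radians(two_theta_deg))
    horizontal = 1.0 - (math.sin(psi) * s) ** 2   # horizontally polarized part
    vertical = 1.0 - (math.cos(psi) * s) ** 2     # vertically polarized part
    return P_i * horizontal + (1.0 - P_i) * vertical


def polarization_correct(I, psi_deg, two_theta_deg, P_i=0.5):
    """Divide a measured intensity by the polarization factor."""
    return I / polarization_factor(psi_deg, two_theta_deg, P_i)


# unpolarized beam: the factor reduces to (1 + cos^2(2 theta)) / 2 for any psi
unpolarized = polarization_factor(37.0, 5.0, P_i=0.5)
# mostly horizontal polarization, scattering in the horizontal direction
synchrotron = polarization_factor(90.0, 5.0, P_i=0.95)
```

At 2θ = 5° this form gives a reduction of roughly 0.4% for an unpolarized beam and roughly 0.7% in the horizontal direction for Pi = 0.95, in line with the magnitude quoted above.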

Figure 5. Correction factor for two-dimensional detectors given 95% in-plane polarization (typical reported value for synchrotrons), and no polarization (i.e. 50% in-plane polarization).

3.4.2. Transmission and flux corrections: TR and FL.

Any material inserted into the beam absorbs a certain amount of radiation [78, 3]. This affects the amount of background scattering impinging on the detector as well as the amount of scattering of the remaining radiation by the sample (differing slightly depending on the path through the sample as well as shown in section 3.4.7). As the amount of radiation scattered by the sample is typically small, the absorption or transmission factor can be determined by measuring the flux directly before and after the sample.

There are three commonly applied methods for measuring the sample absorption, one 'in situ' method commonly found at synchrotrons and two offline methods. At synchrotrons, so-called ionization chamber detectors can be installed (usually in air) directly before and after the sample position. These detectors are very straightforward in their construction, typically consisting of two electrodes suspended in air [161, 179, 117]. They are particularly suitable as they exhibit no parasitic scattering and can be used to monitor the incident flux as well as the absorption over the duration of the measurement. Assuming a non-identical but linear response for both upstream (u) and downstream (d) detectors, the readings without sample (indicated with subscript 0) and after sample insertion (subscripted 1) can be used to calculate the transmission factor Tr through:
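One way to combine the four ionization chamber readings is sketched below. It assumes that each chamber responds linearly with its own unknown proportionality constant, so that taking the downstream-to-upstream ratio with and without sample cancels both constants as well as any change in incident flux between the two measurements. The argument names are illustrative and not the notation of the original expression.

```python
def transmission_from_ion_chambers(u0, d0, u1, d1):
    """Transmission factor from upstream (u) and downstream (d) readings.

    Subscript 0: readings without sample; subscript 1: with sample inserted.
    """
    ratio_without_sample = d0 / u0   # fixes the relative chamber response
    ratio_with_sample = d1 / u1      # the same ratio, reduced by the sample
    return ratio_with_sample / ratio_without_sample


# the incident flux rose by 10% between the two measurements (made-up numbers)
T_r = transmission_from_ion_chambers(u0=1000.0, d0=900.0, u1=1100.0, d1=495.0)
```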

It is not always possible to insert ionization chambers, for example when working with a completely evacuated instrument. In that case, two alternative solutions can be applied to measure the beam flux sequentially before and after insertion of the sample. In the first solution, the beamstop is modified either (1) to allow a small fraction of the direct beam to pass through and be detected by the main detector (i.e. a 'semitransparent beamstop'), or (2) to carry a small¹⁰ pin-diode measuring the direct beam flux [120, 45]. The second solution is to place a strong scatterer in the beam downstream from the sample position, and to measure the integrated scattering signal from the strong scatterer [138]. For the beamstop modification, the ratio of the two fluxes (before and after insertion of the sample) is the transmission factor. For the strong scatterer, the ratio of the two integrated intensities on the detector is the transmission factor:

$$T_r = \frac{I_1}{I_0}$$

where I0 is the intensity of the primary beam without sample, and I1 the intensity of the beam after insertion (and downstream) of the sample. A transmission factor correction for highly absorbing samples scattering to wide angles is discussed in section 3.4.7. The transmission factor is dependent on the linear absorption coefficient μ and thickness d of a material through:

$$T_r = \exp(-\mu d)$$

The transmission correction can be applied by dividing the detected intensity by the transmission factor. Furthermore, the detected intensity is proportional to the incident flux on the material fs, which can be similarly corrected for:

$$I_{j,\mathrm{cor}} = \frac{I_j}{T_r f_s}$$

The relative intensity uncertainty remains largely unaffected by this correction (if the background is small and/or shows little localization), but the absolute uncertainty is directly related to the uncertainties of the measured transmission and flux:
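The normalization and its effect on the absolute uncertainty can be sketched as follows. Adding the relative uncertainties of transmission and flux in quadrature assumes that they are independent; this is an assumption of the sketch and may differ in form from the expression intended above. The last helper simply inverts the exponential relation between transmission, linear absorption coefficient and thickness. All function names are hypothetical.

```python
import math


def normalize_flux_transmission(I, T_r, f_s):
    """Divide every datapoint by the transmission factor and incident flux."""
    return [i / (T_r * f_s) for i in I]


def relative_scaling_uncertainty(T_r, sigma_T, f_s, sigma_f):
    """Relative uncertainty of the scaling factor 1 / (T_r * f_s)."""
    return math.sqrt((sigma_T / T_r) ** 2 + (sigma_f / f_s) ** 2)


def linear_absorption_coefficient(T_r, d):
    """Invert T_r = exp(-mu * d) for mu, given the thickness d."""
    return -math.log(T_r) / d


I_cor = normalize_flux_transmission([100.0, 40.0], T_r=0.5, f_s=2.0)
rel = relative_scaling_uncertainty(T_r=0.5, sigma_T=0.005, f_s=2.0, sigma_f=0.02)
mu = linear_absorption_coefficient(math.exp(-2.0), d=0.001)
```

Because the same factor multiplies every datapoint, this uncertainty shifts the whole curve up or down rather than changing its shape, which is why it enters the absolute and not the relative uncertainty.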

3.4.3. Time and thickness corrections: TI and TH.

The time and thickness corrections are nearly identical to the transmission and flux corrections (section 3.4.2) and equally straightforward: the detected intensity is proportional to the measurement time¹¹, and the amount of scattered radiation is proportional to the amount of material in the beam. The thickness correction is applied to correct for differences in the amount of material in the beam.

These two corrections are applied through normalization of the measured intensity with the sample measurement time ts and thickness ds:

$$I_{j,\mathrm{cor}} = \frac{I_j}{t_s d_s}$$

with uncertainty

3.4.4. Absolute intensity correction: AU.

Scaling the data to reflect the materials' differential scattering cross-section can be a great boon to the value of the data. This scaling allows for the evaluation of the scattering power of the sample in material specific absolute terms which can lead to e.g. the determination of the volume fraction of scatterers or their specific surface area and to check the validity of assumptions made. Furthermore, it allows for proper scaling between techniques, and can help distinguish multiple scattering effects. This scaling gives the scattering profile the units of scattering probability per unit time, per sample volume, per incident flux and per solid angle, which if worked out comes to m−1 sr−1 (though centimetres are sometimes used instead of metres) [195, 14, 42, 204].

This scaling can be achieved in two ways: either through direct calibration with samples whose scattering power can be calculated, or through the use of secondary standards. The benefits and drawbacks of either have been well explained by Dreiss et al [42]. The most straightforward method is to use a secondary standard such as Lupolen or calibrated glassy carbon samples [152, 204], as these do not require detailed knowledge of detector behaviour and beam profiles, and scatter strongly, allowing for rapid collection of sufficient intensity to perform the calibration. The scattering of these samples in absolute intensity units is determined separately, and the calibrated datafile should come with the sample [195, 204]. By comparing the intensity in the calibrated datafile with the locally collected intensity, a calibration factor C can be determined:

where the subscript st denotes the calibration standard, Ist,cor is its measured and corrected intensity, and the reference is the known scattering pattern (calibrated datafile) of the calibration sample. The calibration factor can be determined by a least-squares fit or linear regression (with least squares allowing for inclusion of counting statistics and an optional flat background contribution).
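A weighted least-squares estimate of the calibration factor can be sketched as follows. The sketch assumes that C multiplies the measured intensity to give absolute units, that both curves are sampled at the same q values, and that no flat background term is needed; the function and argument names are hypothetical.

```python
def calibration_factor(I_measured, I_reference, sigma_measured):
    """Least-squares scaling factor C such that C * I_measured ~ I_reference.

    Each point is weighted by 1 / sigma**2 of the measured intensity.
    """
    weights = [1.0 / s ** 2 for s in sigma_measured]
    numerator = sum(w * ref * meas
                    for w, ref, meas in zip(weights, I_reference, I_measured))
    denominator = sum(w * meas ** 2
                      for w, meas in zip(weights, I_measured))
    return numerator / denominator


# made-up standard: the calibrated curve is exactly five times the measured one
C = calibration_factor([2.0, 4.0, 6.0], [10.0, 20.0, 30.0], [1.0, 1.0, 1.0])
```

The closed form follows from minimizing the weighted sum of squared differences between C times the measured curve and the reference curve with respect to C.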

is the known scattering pattern (calibrated datafile) from the calibration sample. The calibration factor can be determined by a least-squares fit or linear regression (with least squares allowing for inclusion of counting statistics and optional flat background contribution).
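The least-squares determination of the calibration factor can be sketched as follows. This is a minimal illustration, not the procedure of any particular software package: the function name `calibration_factor` is hypothetical, the weights are taken as the inverse variance of the measured standard, the measured and reference patterns are assumed to be sampled at the same q values, and the optional flat background term is left out.

```python
def calibration_factor(i_measured, sigma_measured, i_reference):
    """Return the scale factor C that best maps i_measured onto i_reference.

    Minimises sum(((i_reference - C * i_measured) / sigma_measured) ** 2),
    which has the closed-form solution used below. All three sequences are
    assumed (for this sketch) to be sampled at the same q values.
    """
    numerator = 0.0
    denominator = 0.0
    for i_m, s_m, i_r in zip(i_measured, sigma_measured, i_reference):
        weight = 1.0 / s_m ** 2  # inverse-variance weight from counting statistics
        numerator += weight * i_m * i_r
        denominator += weight * i_m * i_m
    return numerator / denominator


# Illustrative numbers only (not real calibration data).
measured = [2.0, 4.0, 8.0]
sigma = [0.1, 0.2, 0.4]
reference = [6.0, 12.0, 24.0]
c = calibration_factor(measured, sigma, reference)
```

Restricting the fit to the q range over which the standard is certified, and over which the local data are reliable, is left to the caller.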

The accuracy of this determination depends on a large number of uncertainties. Practically, though, an accuracy of about 10% can be achieved [122]. It may be approximated as:

The approximation is finally applied to the measured data as:

The absolute uncertainty of the result follows:
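A minimal sketch of applying a calibration factor to measured data is given below. It assumes that the calibrated intensity is simply the product of C and the measured intensity, and that the uncertainties on C and on the intensity are uncorrelated, so that standard first-order propagation applies. The function name `apply_calibration` and the example numbers are hypothetical; the 10% relative uncertainty on C is taken from the practical accuracy quoted above.

```python
import math


def apply_calibration(intensity, sigma, c, sigma_c):
    """Scale intensities by c and propagate both uncertainty sources.

    Assumes uncorrelated uncertainties, so that
    sigma_cal**2 = (c * sigma_i)**2 + (i * sigma_c)**2.
    """
    calibrated = [c * i for i in intensity]
    calibrated_sigma = [
        math.sqrt((c * s) ** 2 + (i * sigma_c) ** 2)
        for i, s in zip(intensity, sigma)
    ]
    return calibrated, calibrated_sigma


# Illustrative values: C = 2 with a 10% relative uncertainty.
cal, cal_sigma = apply_calibration([100.0], [10.0], c=2.0, sigma_c=0.2)
```

Note that the contribution from the calibration factor is common to all datapoints, so it acts as a scaling uncertainty rather than as independent noise per point.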

3.4.5. Background correction: BG.

In any scattering measurement, it is of great importance to isolate the (coherent) scattering of the objects under investigation from all other parasitic scattering contributions such as windows, solvents, gases, collimators, sample holders and any incoherent scattering components. For example, this would be the removal of the scattering of capillaries and solvents from the scattering pattern of a suspension or solution, or the removal of instrumental background (scattering from windows, air spaces, etc) from the scattering pattern collected for a polymer film. A background measurement thus contains as many as possible of the components present in the sample measurement, minus the actual sample. A detailed discussion of suitable background samples can be found in Brûlet et al [18].

In most cases, the background measurement should be performed with as many variables as possible identical to those of the sample measurement, and subjected to the same data corrections12. This means that the background should be measured for the same amount of time as the sample. However, if the detector behaviour is well characterized and the signal-to-noise ratio (i.e. the sample-to-background scattering signal) is large, the sample-to-background measurement time ratio may be skewed (to favour sample measurement time) in order to improve the statistics after background subtraction [169, 134]. The correction is applied as:

$$I_{j,cor} = I_j - I_{j,b}$$

with Ij,b the background measurement intensity for datapoint j. The uncertainties, both absolute and relative, propagate as follows:
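The subtraction and its uncertainty propagation can be sketched as below, assuming that sample and background have already been given identical corrections (including normalisation to measurement time and transmission) and that their uncertainties are uncorrelated. The function name `subtract_background` is hypothetical.

```python
import math


def subtract_background(i_sample, s_sample, i_background, s_background):
    """Subtract background per datapoint j and propagate uncertainties.

    Returns (corrected, absolute_sigma, relative_sigma). Uncorrelated
    uncertainties are assumed, so the absolute uncertainties add in quadrature.
    """
    corrected, absolute, relative = [], [], []
    for i_j, s_j, i_b, s_b in zip(i_sample, s_sample, i_background, s_background):
        value = i_j - i_b
        sigma = math.sqrt(s_j ** 2 + s_b ** 2)
        corrected.append(value)
        absolute.append(sigma)
        # The relative uncertainty diverges where the signal vanishes.
        relative.append(sigma / abs(value) if value != 0.0 else math.inf)
    return corrected, absolute, relative


# Illustrative values only.
cor, sig_abs, sig_rel = subtract_background([100.0], [3.0], [40.0], [4.0])
```

The relative uncertainty grows quickly when the sample and background intensities are similar, which is why a large sample-to-background signal is a precondition for skewing the measurement time ratio.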

3.4.6. Correcting for spherical angles: SP.

Most detectors are flat with uniform, square pixels, but we wish to collect the intensity over a solid angle of a (virtual) sphere. The projection of the detector pixels on the sphere results in a difference in solid angle covered by each pixel (illustrated in figure 6) [14, 7, 108]. Therefore, we need to correct the intensity for the difference between these areas13.

Figure 6. The need for spherical corrections illustrated for straight detectors (as opposed to tilted detectors). One unit angle covers a different number of pixels, which needs to be corrected for.

The correction for this effect is achieved by means of a few geometrical parameters. It is given by [14] as:

where LP is the distance from the sample to the pixel, L0 the distance from the sample to the point of normal incidence (usually identical to the direct beam position except in case of tilted detectors), and px and py are the sizes of the pixels in the horizontal and vertical direction, respectively. As it is unnormalized, this correction factor typically assumes very large values. When normalized to assume a value of 1 at the point of normal incidence, the correction becomes:

Its magnitude is shown in figure 7, and is generally less than 1% for scattering angles lower than 5°. It very quickly becomes more severe beyond those angles.
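The normalized correction can be illustrated with a short sketch. It assumes the standard geometric result that a flat pixel of area px·py at distance LP from the sample, on a detector whose point of normal incidence lies at distance L0, subtends a solid angle of px·py·L0/LP³; normalizing to the point of normal incidence then gives a multiplicative intensity correction of (LP/L0)³. Whether this matches the exact notation of [14] is an assumption, and the function name is hypothetical.

```python
import math


def solid_angle_correction(x, y, l0):
    """Normalized intensity correction factor for a flat, untilted detector.

    x, y: pixel position relative to the point of normal incidence
    l0:   distance from sample to the point of normal incidence
    (all in the same length unit). The factor equals 1 at x = y = 0.
    """
    lp = math.sqrt(l0 ** 2 + x ** 2 + y ** 2)  # sample-to-pixel distance
    return (lp / l0) ** 3


# Factor for a pixel seen at a scattering angle of 3 degrees (l0 = 1 m).
factor_3deg = solid_angle_correction(math.tan(math.radians(3.0)), 0.0, 1.0)
# Factor at 10 degrees, where the correction is clearly no longer negligible.
factor_10deg = solid_angle_correction(math.tan(math.radians(10.0)), 0.0, 1.0)
```

For an untilted detector the factor reduces to 1/cos³(2θ), which makes the rapid growth at wider angles easy to see.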

Figure 7. Area dilation correction showing an increasing need for application of the correction beyond about 5°.

3.4.7. Sample self-absorption correction: SA.

When scattering occurs in a sample, the scattered radiation has to travel some distance through the sample. Depending on the sample geometry and the scattering angle, this scattered radiation has to travel through more or less material. The direction-dependent absorption thus occurring can induce an angle-dependent scattered intensity reduction which is most severe for scattering to wider angles and for samples with a high attenuation coefficient [18, 175, 200, 11]. This is essentially a correction of the transmission factor correction described in section 3.4.2.

For plate-like samples, the correction to the scattering pattern takes the form:

which can be expressed in terms of linear absorption coefficient μ and thickness d as:

where 2θ denotes the scattering angle. As the numerator and denominator of the fraction tend to zero for 2θ = 0, at that point Ij,cor = Ij must be substituted. This correction is only valid for plate-like samples, for which it is still straightforward to derive. For spherical samples and cylindrical samples, the direction-dependent attenuation becomes much more complicated [175, 200], and an extra level of difficulty is added for off-centre beams [11].
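A minimal sketch of the plate-like case follows. It assumes a flat sample normal to the incident beam, single scattering, and transmission geometry (2θ below 90°). Averaging the attenuation over all scattering depths and dividing by the straight-through transmission T = exp(−μd) gives a multiplicative correction x/(1 − exp(−x)) with x = μd(1/cos 2θ − 1). This derivation and the function name are offered as an illustration and may differ in form from the expressions above.

```python
import math


def plate_self_absorption_factor(transmission, two_theta_deg):
    """Multiplicative intensity correction for a flat plate normal to the beam.

    transmission:  straight-through transmission factor T = exp(-mu * d), 0 < T <= 1
    two_theta_deg: scattering angle in degrees (transmission geometry, < 90)
    """
    extra_path = 1.0 / math.cos(math.radians(two_theta_deg)) - 1.0
    x = -math.log(transmission) * extra_path  # mu * d * (1 / cos(2 theta) - 1)
    if x == 0.0:
        # Numerator and denominator both vanish: no correction needed.
        return 1.0
    return x / (-math.expm1(-x))  # x / (1 - exp(-x)), numerically stable


# Illustrative case: T = 1/e (mu * d = 1) at a scattering angle of 60 degrees.
factor = plate_self_absorption_factor(math.exp(-1.0), 60.0)
```

Using `math.expm1` keeps the expression accurate at the very small values of x that are typical for small-angle scattering.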

Figure 8 shows the magnitude of the correction depending on the transmission factor and scattering angle. As previously remarked, the effect is most severe for highly absorbing samples and wide angles.

Figure 8. Absorption due to sample geometry for a range of sample absorptions.

As the correction is rather minimal for small-angle scattering, its effects on the uncertainties are expected to be equally negligible. Given the estimated complexity of the uncertainty propagation in this case, its derivation is omitted here.

3.4.8. Multiple scattering correction: MS.

Multiple scattering occurs when a scattered photon still travelling through the material undergoes a subsequent scattering event. As the probability for any photon to scatter (irrespective of whether it has scattered or not) is proportional to the scattering cross-section of the material and the amount of sample in the beam, multiple scattering becomes more dominant for strongly scattering, thick samples [154, 30, 115, 114]. It effects a 'smearing' of the true scattering profile, which can significantly affect analyses [24, 30].

When the possibility of multiple scattering exists for a particular sample measurement (i.e. with a transmission factor below approximately 1/e and strongly scattering samples), it is prudent to test whether it is a significant contribution. This can be performed experimentally by measuring samples with different thicknesses or by changing the incident wavelength. If the scattering profiles after corrections differ significantly, chances are that multiple scattering may need to be accounted for [114]. Alternatively, the multiple scattering effect can be estimated analytically [154] or using Monte Carlo based procedures [30, 160].
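One simple way to judge whether two fully corrected profiles (for example from two sample thicknesses) differ significantly is a reduced chi-squared comparison, sketched below. This is an illustrative choice of statistic, not a method prescribed by the cited works; it assumes both profiles are on the same q grid, are normalised to thickness and transmission, and carry uncorrelated uncertainties. The function name is hypothetical.

```python
def reduced_chi_squared(i_a, s_a, i_b, s_b):
    """Mean squared difference between two profiles in units of their
    combined variance. Values near 1 indicate agreement within the
    uncertainties; values well above 1 indicate a significant difference.
    """
    terms = [
        (a - b) ** 2 / (sa ** 2 + sb ** 2)
        for a, sa, b, sb in zip(i_a, s_a, i_b, s_b)
    ]
    return sum(terms) / len(terms)


# Illustrative numbers only.
chi2 = reduced_chi_squared([10.0, 20.0], [1.0, 1.0], [13.0, 24.0], [1.0, 1.0])
```

A large value only flags a difference; it does not by itself identify multiple scattering as the cause, so other systematic effects should be excluded first.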

As with any smearing effect, correcting the data for multiple scattering (also known as 'desmearing') is much more involved than smearing the model fitting function. When given the choice, implementing a smearing procedure in the fitting model rather than desmearing the data is preferred [60]. Correcting for multiple scattering is generally a complex, iterative procedure where the multiple scattering smearing profile is estimated and removed from the data [114]. It becomes even more complicated for samples with direction-dependent sample thicknesses and hence different multiple scattering probabilities [12, 174]. One avenue for simplifying the correction and estimation is to approximate the multiple scattering effect as consisting mainly of double scattering [24, 60, 11].

3.4.9. Instrumental smearing effects correction: SM.

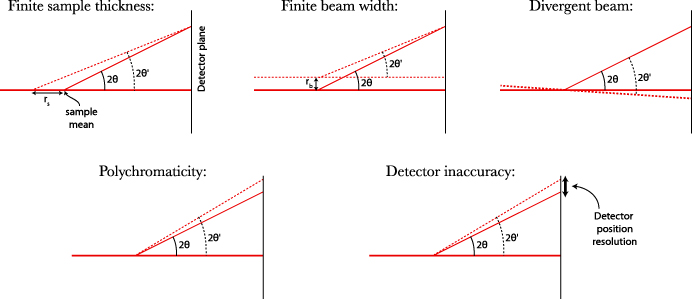

Besides the smearing effect of the multiple scattering phenomenon, there are more aspects which contribute to a reduction in definition, or sharpness, of the scattering pattern (graphically explained in figure 9). These are: (a) the wavelength spread, (b) the beam divergence, (c) the beam profile at the sample, (d) finite sample thickness effects and (e) the finite resolution of the detector due to detection position sensing limitations [140, 6, 70]. All these aspects effect a smearing or 'blurring' of the observed scattering pattern that can reduce the definition of sharp features, which in turn may lead to (for example) an overestimation of polydispersity. This section introduces these five aspects.

Figure 9. Different smearing contributions affecting the scattering pattern.

The wavelength spread Δλ/λ directly affects the smearing in q-space as it introduces a spectrum rather than a single value for the wavelength, leading to a distribution Rw(q,〈q〉) of q around the mean 〈q〉. This smearing effect by Rw(q,〈q〉) is proportional to [140]:

For most pinhole-collimated, crystal-monochromated x-ray instruments, the wavelength spread, and therefore Rw(q,〈q〉), is very small compared to the other resolution-limiting factors such as collimation effects and detector spread. The wavelength spread in these systems typically assumes values smaller than 10−3 and is therefore often not considered [140]. For Bonse–Hart instruments, however, the monochromaticity is tied to the instrument resolution and its consideration is therefore a necessity [26, 178, 203].
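The size of the wavelength contribution can be estimated with the sketch below. Because q = 4π sin θ/λ, the relative spread in q at a fixed angle equals the relative spread in wavelength. Treating the wavelength distribution as Gaussian, with Δλ/λ taken as its full width at half maximum, is an assumption of this sketch, and the function names are hypothetical.

```python
import math

# Conversion from full width at half maximum to standard deviation (Gaussian).
FWHM_TO_SIGMA = 1.0 / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def wavelength_sigma_q(q_mean, relative_spread_fwhm):
    """Standard deviation of q around q_mean caused by the wavelength spread."""
    return q_mean * relative_spread_fwhm * FWHM_TO_SIGMA


def resolution_weight(q, q_mean, sigma_q):
    """Normalized Gaussian resolution function evaluated at q."""
    z = (q - q_mean) / sigma_q
    return math.exp(-0.5 * z * z) / (sigma_q * math.sqrt(2.0 * math.pi))


# Illustrative case: q = 1 nm^-1 with a relative wavelength spread of 1e-3.
sigma_q = wavelength_sigma_q(1.0, 1e-3)
```

With a spread of 10−3 the resulting width in q is well below a tenth of a per cent of q, consistent with this contribution often being neglected for crystal-monochromated instruments.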

The smearing effect due to the sample thickness is similarly very small. This is the smearing effect introduced by a variation in the sample-to-detector distance due to the sample radius (as the exact origin of a scattering event may lie anywhere within the sample). The maximum relative deviation in q, Δq/q, can be easily derived from geometrical considerations, resulting in a function of the scattering angle 2θ, the mean distance from the sample to the detector L0 and the sample radius r:

Evaluation of the deviation given by equation (32) for typical values of 2θ, L0 and r (5°, 1 m and 1 mm, respectively) shows that the resulting uncertainty in q due to the sample thickness is only a fraction of a per cent (0.2% for the example values given). Incidentally, the same equation can be used to determine the uncertainty in q arising from uncertainties in the sample-to-detector distance measurement.
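The quoted magnitude can be checked numerically with a purely geometric sketch. It is not necessarily the form of equation (32): it assumes that a given detector position can be reached from scattering origins lying anywhere between L0 − r and L0 + r from the detector, and it takes the full spread in q between these two extremes, relative to the nominal q. The function name is hypothetical.

```python
import math


def thickness_dq_over_q(two_theta_deg, l0, r):
    """Relative spread in q at one detector position due to the sample radius.

    two_theta_deg: nominal scattering angle in degrees
    l0:            mean sample-to-detector distance
    r:             sample radius along the beam (same unit as l0)
    """
    two_theta = math.radians(two_theta_deg)
    radial = l0 * math.tan(two_theta)       # radial position on the detector
    near = math.atan(radial / (l0 - r))     # origin closest to the detector
    far = math.atan(radial / (l0 + r))      # origin furthest from the detector
    # q is proportional to sin(theta), with theta half the scattering angle.
    return (math.sin(near / 2.0) - math.sin(far / 2.0)) / math.sin(two_theta / 2.0)


# Example values from the text: 5 degrees, 1 m, 1 mm.
spread = thickness_dq_over_q(5.0, 1.0, 0.001)
```

Under these assumptions the example values give a spread of close to 0.2%, in line with the figure quoted above.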

Besides the previous two, the incident beam characteristics (in particular its profile and divergence defined by the collimation) and detector position sensing inaccuracies also cause a smearing of the detected scattering pattern [140, 6, 70]. The finite beam size, defined by the collimation, has a smearing effect similar to the previously discussed sample thickness effect, where the scattering events are distributed in space over the entire irradiated sample cross-section. This leads to a distribution of scattering angles impinging on each detector position rather than a single value. This finite beam width effect, with the beam radius denoted as rb, can be derived in the same way as the sample thickness effect, leading to:

Evaluating this for a variety of configurations shows that this effect may assume significant values for smaller angles and large beams. This is one of the aspects limiting the final resolution of an instrument, along with the beam divergence and detector position resolution.
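The beam width effect can be explored with an analogous geometric sketch. As an assumption of this illustration (which may differ from the expression referred to above), scattering origins are taken to be displaced laterally by up to ±rb from the beam axis, and the full spread in q at one detector position is expressed relative to the nominal q. The function name is hypothetical.

```python
import math


def beam_width_dq_over_q(two_theta_deg, l0, rb):
    """Relative spread in q at one detector position due to the beam radius.

    two_theta_deg: nominal scattering angle in degrees
    l0:            sample-to-detector distance
    rb:            beam radius at the sample (same unit as l0)
    """
    two_theta = math.radians(two_theta_deg)
    radial = l0 * math.tan(two_theta)        # radial position on the detector
    high = math.atan((radial + rb) / l0)     # origin on the far side of the axis
    low = math.atan((radial - rb) / l0)      # origin on the near side of the axis
    return (math.sin(high / 2.0) - math.sin(low / 2.0)) / math.sin(two_theta / 2.0)


# Illustrative configuration: 0.25 mm beam radius, 1 m distance.
spread_1deg = beam_width_dq_over_q(1.0, 1.0, 0.00025)
spread_5deg = beam_width_dq_over_q(5.0, 1.0, 0.00025)
```

For this configuration the relative spread is a few per cent at 1° and several times smaller at 5°, illustrating why the effect matters most at small angles and for large beams.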

The collimation defines both the beam size at the sample position and the divergence of the beam. The divergence of the beam at the sample position similarly effects an angular spread that smears the definition of q. A good derivation of this is available in the literature [140, 138]. Lastly, the finite position sensitivity of the detector introduces yet another smearing contribution, at minimum defined by the size of the individual detection elements, but commonly defined by the point-spread function of the detector [138].

While these beam- and detector-contributions can be considered separately, the compound smearing contributions can be directly evaluated as the image of the direct beam on the detector (with which the 'true' scattering convolves) [141]. It has recently been demonstrated that the compound method produces more accurate results than the separate approach in practice [38].
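The compound approach lends itself to smearing the model rather than desmearing the data. The sketch below shows this for a one-dimensional cut, as a simplification of the two-dimensional case: a model profile on a uniform detector grid is convolved with a normalized direct-beam profile sampled on the same grid. The function name is hypothetical, the beam profile is assumed to have an odd number of points with its centre in the middle, and intensity outside the modelled range is treated as zero.

```python
def smear_with_beam_profile(model, beam_profile):
    """Convolve a model profile with the normalized direct-beam profile.

    model:        model intensities on a uniform grid of detector positions
    beam_profile: direct-beam intensities on the same grid spacing,
                  odd length, centred on its middle element
    """
    total = sum(beam_profile)
    kernel = [b / total for b in beam_profile]  # unit area preserves intensity
    half = len(kernel) // 2
    n = len(model)
    smeared = []
    for i in range(n):
        acc = 0.0
        for k, weight in enumerate(kernel):
            j = i + half - k  # convolution index into the model
            if 0 <= j < n:
                acc += weight * model[j]
        smeared.append(acc)
    return smeared


# A single sharp feature is broadened into the shape of the beam profile.
smeared = smear_with_beam_profile([0, 0, 0, 1, 0, 0, 0], [1, 2, 1])
```

In a fitting procedure the smeared model, rather than the raw model, would then be compared with the measured data.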

These smearing effects are often not considered in the evaluation or correction of data from pinhole-collimated x-ray scattering instruments (if they are considered, they are usually incorporated as a model smearing rather than a data desmearing). There are some notable exceptions by Le Flanchec et al [108] and Stribeck and Nochel [172]; in the latter example, it is applied to allow for improved intercomparability of 2D scattering patterns collected with differing collimation.