Abstract

When an agent invests in new industrial activities, he has limited initial knowledge of his project's returns. Acquiring information allows him both to reduce the uncertainty about how dangerous the project is and to limit the damage it might cause to people's health and to the environment. In this paper, we study whether there are situations in which the agent does not acquire information. We find that an agent with time-consistent preferences, as well as an agent with hyperbolic ones, will acquire information unless its cost exceeds the direct benefit they could get from it. Nevertheless, a hyperbolic agent may remain strategically ignorant and, when he does acquire information, he acquires less of it than a time-consistent type. Moreover, a hyperbolic-discounting type who behaves as a time-consistent agent in the future is more inclined to stay ignorant. We then emphasize that this strategic ignorance depends on the degree of precision of the information. Finally, we analyse the role that existing liability rules could play as an incentive to acquire information under uncertainty, depending on the form of the agent's preferences.


Introduction

Recent environmental policies favour the ‘pollutant-payer’ Principle, which makes the polluter financially liable for any incidents caused by his activities. Investing in technological innovations generates uncertainty about future returns, about the damage that such innovations could cause and about the cost to be paid if problems arise. To reduce this uncertainty, the agent has the opportunity to acquire information, for example through research activities, on his project's potential consequences for human health and the environment. Does the agent systematically exercise this option?

To address this question, we explore whether there are situations in which a private agent chooses not to acquire information when he decides to develop new industrial activities. We define information acquisition as a costly effort exerted by the agent to reduce the existing uncertainty about future payoffs. Each level of this effort is associated with a degree of information precision: a higher effort today implies more precision tomorrow. Acquiring information also allows the agent to update his decisions; in particular, he may decide to stop the project prematurely and thereby limit potential damage in case of an accident. This approach allows us to consider both the management of emerging risks and the trade-off between developing activities and taking precautionary measures.

Moreover, we consider that our agent may have hyperbolic discounting preferences. In other words, he may discount short-term events at a relatively higher rate than long-term events. The discount rate captures all the psychological motives behind the agent's investment choice, such as anxiety, confidence or impatience. Our agent is also ‘sophisticated’, that is, he is perfectly aware that a decision at period t may look different from the perspective of the agent at period t+1 than from the perspective of the agent at period t. In this regard, we view the agent with hyperbolic discounting preferences as a collection of risk-neutral incarnations with conflicting goals.

The assumption of hyperbolic discounting preferences remains controversial (see Read, 2001 and Rubinstein, 2003). However, empirical evidence (Frederick et al., 2002) has persuaded a growing number of economists to adopt this type of preferences. Strotz (1956) was the first to suggest an alternative to exponential discounting. Phelps and Pollak (1968) then introduced the hyperbolic discounted utility function as a functional form for these preferences. Elster (1979) applies this formalization to a decision problem, characterizing time inconsistency by a decreasing discount rate between the present and the future and a constant discount rate between two future periods. Laibson (1997, 1998) applies this formulation to savings and consumption problems, while Brocas and Carrillo (2000, 2004, 2005) consider the problems of information value, irreversible consumption and irreversible investment. More recently, O’Donoghue and Rabin (2008) investigate procrastination on long-term projects by people who have a time-inconsistent preference for immediate gratification.

Our approach relies on two building blocks. First, it is related to real options theory. Acquiring information is costly, and it is a right, not an obligation, for the agent. This real option allows him to stop his project and recover part of his initial investment. This contrasts with the standard literature, in which the investment is irreversible and the flow of information is exogenous (Arrow and Fisher, 1974; Henry, 1974; Brocas and Carrillo, 2000, 2004). Real options theory quantifies the value of management flexibility under uncertainty; our model adds a new dimension to it by making information endogenous.

Second, our approach relates to the literature on hyperbolic discounting preferences and information acquisition. Bénabou and Tirole (2002, 2004) show that “comparative optimism”, or “self-confidence”, is at the origin of time-inconsistent behaviour. Such behaviour inhibits learning, and uncertainty may strengthen this effect. In addition, Carrillo and Mariotti (2000) study intertemporal consumption decisions involving a potential long-run risk and show that hyperbolic discounting preferences may favour strategic ignorance. In our paper, what Carrillo and Mariotti call strategic ignorance can be viewed as dangerous ignorance. Indeed, when our agent refuses information, he gives up the possibility of stopping his project prematurely and thus of limiting the potential cost of damage. His behaviour might therefore be dangerous for people's health and the environment.

Carrillo and Mariotti (2000) point out that a person with hyperbolic preferences might choose not to acquire free information in order to avoid over-consumption or engagement in activities that may require much more fundamental research on the potential social costs or externalities they could involve in the long term. Our model offers a new explanation: a hyperbolic agent does not refuse free information as such, but free information below a certain degree of precision. By making the cost of information depend on its precision, we find that a time-consistent agent as well as a hyperbolic type will acquire information unless the cost exceeds the direct benefit. Nevertheless, a hyperbolic agent may remain ignorant if the information is not precise enough to be relevant for him. Moreover, when a hyperbolic agent does acquire information, he acquires less of it than a time-consistent type. If we further allow the hyperbolic agent to behave as a time-consistent agent in his future actions, we show that he becomes more inclined to remain ignorant. We thus emphasize the relevance of information precision for the information acquisition decision of hyperbolic types.

The asbestos case is a typical example of the relevance of information precision. The Greeks and Romans were the first to notice that slaves in contact with asbestos suffered from a lung disease. Then, in 1898, the annual reports of the Chief Inspector of Factories warned that asbestos created health risks. However, the asbestos industry judged that these reports lacked precision and ignored the available information on asbestos risks. In the 1970s, after much evidence had revealed the link between asbestos and cancer, the first regulations appeared. The use of asbestos in new construction projects is now banned in many developed countries (Henry, 2003). The use of antibiotics as growth promoters in livestock farming is another characteristic example. In 1943, the Luria–Delbrück experiment demonstrated the antibiotic resistance of bacterial populations.Footnote 1 However, the farming and pharmaceutical industries considered that this information was not relevant and preferred to ignore it (Henry and Henry, 2002). Today, additional information has led the European Commission, the World Health Organisation, the Centers for Disease Control and the American Public Health Association to support eliminating the use of antibiotics as growth promoters.

We should also note that the private agent considered in our approach can be viewed as a firm. Although dramatic past examples may suggest that firms' managers and/or shareholders sometimes neglect a potential long-term danger in order to obtain immediate gratification, there is no empirical study supporting the assumption that firms have time-inconsistent preferences when facing uncertainty. Thus, it is not clear that a firm always behaves as a time-inconsistent private agent under uncertainty. One reason is that there are many interactions among people within a firm, particularly between its manager and its shareholders, who have conflicting goals and therefore do not view the time horizon in the same way: the manager might be more interested in the day-to-day performance of the firm, while shareholders might take a long-term view of its development. Such conflicts should affect the behaviour of decision makers, and time-inconsistent behaviour may or may not result from them.

In this regard, we allow the decision maker to have different types of preferences: time-consistent preferences, hyperbolic discounting preferences or hyperbolic discounting preferences with self-control. If the decision maker is a firm, the firm's preferences are those of the decision maker, regardless of the possible interactions within the firm. Indeed, we suppose that the firm is represented by a board of shareholders in charge of all strategic decisions, which only maximizes the firm's profit and may be either time-consistent or time-inconsistent. Under this assumption, the firm can be represented by an arbitrary private individual with time-consistent or hyperbolic discounting preferences.

From an environmental-policy perspective, it is desirable that the agent acquire information. Indeed, this information allows him to give up his project and thus to reduce harmful consequences for the environment in case of an accident. Exercising this option can in fact be interpreted as the agent's voluntary application of the Precautionary Principle. As stated in Principle 15 of the 1992 Rio Declaration, the Precautionary Principle reads: “In order to protect the environment, the precautionary approach shall be widely applied by States according to their capabilities. Where there are threats of serious or irreversible damage, lack of full scientific certainty shall not be used as a reason for postponing cost-effective measures to prevent environmental degradation”. The cost of protection is thus transferred from the State to agents. We study how the State could lead the agent to acquire information when he fails to do so. We find that a strict liability rule, for example one applying the “pollutant-payer” Principle, may not always be a useful tool to encourage information acquisition. On the other hand, to a certain extent, a negligence rule may offer an alternative way to solve this learning problem under uncertainty.

The remainder of the paper is organized as follows. The next section presents the model. The subsequent section investigates the agent's optimal decision making. The penultimate section proposes a sensitivity analysis of the model's results to changes in the parameters. Finally, the last section analyses whether existing liability frameworks, such as the strict liability rule and the negligence rule, encourage the agent to acquire information. All proofs are given in the Appendix.

The model

We consider a three-period model. At period 0, the agent invests a given amount of money I>0 in a project that may cause damage to people's health and/or to the environment. There are two possible states of the world, H and L, associated with different probabilities of damage θH and θL, respectively. We assume that state H is more dangerous than state L; therefore,

$$\theta_H > \theta_L.$$

At period 0, the prior beliefs of the agent are p0 on state H and 1−p0 on state L. Thus, the expected probability of damage is given by:

$$E(\theta) = p_0\,\theta_H + (1 - p_0)\,\theta_L.$$

At period 0, the agent pays C⩾0 to obtain information at period 1 through a signal σ∈{h, l} on the true state of the world. We define the precision of the signal as the probability that the signal corresponds to the true state. We represent it as an increasing and concave function f(C) such that:

$$P(h \mid H, C) = P(l \mid L, C) = f(C),$$

and

$$f(0) = \tfrac{1}{2},$$

and

$$f'(C) > 0, \qquad f''(C) < 0.$$
Hence, the information precision depends on the information cost C. If the agent does not pay, that is, C=0, then the signal is not informative.Footnote 2 On the other hand, a larger cost implies a higher precision.
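A minimal Python sketch of this signal technology may help fix ideas. The functional form f(C) = 1 − ½e^(−C) is a hypothetical choice (the model only requires f to be increasing and concave with f(0) = ½), and the prior p0 = 0.3 is illustrative. The posterior applies Bayes' rule with P(h∣H,C) = P(l∣L,C) = f(C); at C = 0 it simply returns the prior, which is what "the signal is not informative" means.

```python
import math


def precision(cost: float) -> float:
    """Hypothetical precision f(C): increasing, concave, f(0) = 1/2 and f -> 1."""
    if cost < 0:
        raise ValueError("the information cost C must be non-negative")
    return 1.0 - 0.5 * math.exp(-cost)


def posterior_high(signal: str, cost: float, p0: float) -> float:
    """P(H | signal, C) by Bayes' rule, with P(h | H) = P(l | L) = f(C)."""
    f = precision(cost)
    if signal == "h":
        like_h, like_l = f, 1.0 - f      # P(h | H), P(h | L)
    elif signal == "l":
        like_h, like_l = 1.0 - f, f      # P(l | H), P(l | L)
    else:
        raise ValueError("signal must be 'h' or 'l'")
    return p0 * like_h / (p0 * like_h + (1.0 - p0) * like_l)


p0 = 0.3  # illustrative prior on the dangerous state H
for cost in (0.0, 1.0, 3.0):
    print(f"C={cost:.1f}  f={precision(cost):.3f}  "
          f"P(H|h)={posterior_high('h', cost, p0):.3f}  "
          f"P(H|l)={posterior_high('l', cost, p0):.3f}")
```

Raising C pushes P(H∣h,C) up and P(H∣l,C) down, so a costlier signal separates the two states more sharply.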

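According to Bayes’ rule, the probability of being in state H given signal h and C, and the probability of being in state H given signal l and C are, respectively:

$$P(H \mid h, C) = \frac{p_0\, f(C)}{p_0\, f(C) + (1 - p_0)\,(1 - f(C))}$$

and

$$P(H \mid l, C) = \frac{p_0\,(1 - f(C))}{p_0\,(1 - f(C)) + (1 - p_0)\, f(C)}.$$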

At period 1, according to signal σ∈{h, l}, we define xσ∈{0, 1} as the agent's decision to stop the project (xσ=0) or to continue it (xσ=1). We assume that if the agent stops his project (xσ=0), he recovers part of his investment, D, with 0<D<I. In the standard literature, investment is irreversible: under uncertainty about payoffs, flexibility comes from choosing the level of investment today and that of tomorrow. In our model, by contrast, the agent invests and starts the project regardless of the situation; allowing him to recover part of his investment restores management flexibility. In addition, with D=0, stopping the project would entail only financial losses, which would seriously restrict decisions on precautionary measures.

At period 2, an accident may happen. If the project is carried out until period 2, the agent gets a payoff R2>0, from which the financial cost of the accident, K>0, must be subtracted; the accident occurs with probability θH or θL depending on the state of the world. If the project has been stopped at period 1, this financial cost is lower, K′ with 0<K′<K, and it also occurs with probability θH or θL. According to the “pollutant-payer” Principle, the agent has to pay for his project's consequences for human health and the environment, even though the damage is less costly once he has stopped his project. We consider that K and K′ represent the total cost of the negative external effects of the agent's decisions, that is, both public and private costs.
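The period-2 payoff just described can be written as a small function. This is only a sketch: the argument `e_theta` stands for the expected probability of damage the agent currently holds, and the numbers in the usage lines (p0 = 0.3, θH = 0.8, θL = 0.1, R2 = 100, K = 200, K′ = 50) are hypothetical.

```python
def expected_damage_probability(p_high: float, theta_h: float, theta_l: float) -> float:
    """E(theta) = P(H) * theta_H + (1 - P(H)) * theta_L."""
    return p_high * theta_h + (1.0 - p_high) * theta_l


def period2_payoff(x: int, e_theta: float, r2: float, k: float, k_prime: float) -> float:
    """Expected period-2 payoff: x = 1 if the project was carried on, x = 0 if stopped."""
    if x == 1:
        return r2 - e_theta * k          # payoff R2 minus the expected accident cost K
    if x == 0:
        return -e_theta * k_prime        # no payoff, but the smaller expected cost K' < K
    raise ValueError("x must be 0 (stop) or 1 (continue)")


e_theta = expected_damage_probability(0.3, 0.8, 0.1)        # hypothetical p0, theta_H, theta_L
carry_on = period2_payoff(1, e_theta, 100.0, 200.0, 50.0)   # 100 - 0.31 * 200
stopped = period2_payoff(0, e_theta, 100.0, 200.0, 50.0)    # -0.31 * 50
```

Stopping never removes the liability entirely: the agent still bears the expected cost E(θ)K′, in line with the ‘pollutant-payer’ logic above.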

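In order to formalize hyperbolic discounting preferences, we use the functional form of Phelps and Pollak (1968). Let D(k) represent a discount function such that:

$$D(k) = \begin{cases} 1 & \text{if } k = 0, \\ \beta\,\delta^{k} & \text{if } k \geqslant 1. \end{cases}$$

The hyperbolic discounted utility function is then defined as follows:

$$U_t = \sum_{k \geqslant 0} D(k)\,u_{t+k} = u_t + \beta \sum_{k \geqslant 1} \delta^{k}\,u_{t+k},$$

where u_{t+k} denotes the agent's payoff at period t+k.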

To simplify Phelps and Pollak's (1968) formalization, we assume that δ=1.Footnote 3 Here and throughout the paper, as Frederick et al. (2002) suggest, the discount rate β captures all the psychological motives behind the agent's investment choice, such as anxiety, confidence or impatience.Footnote 4 If β=1, the psychological motives have no influence on the agent's choice, and his preferences are time-consistent. If β<1, on the other hand, the agent's preferences change over time: what the agent decides today might conflict with what he decides tomorrow.

We consider an agent with hyperbolic discounting preferences as being made up of several risk-neutral selves with conflicting goals.Footnote 5 Each self represents the agent at a different point in time: at each period t, there is a single self, called “self-t”. Each self-t discounts all subsequent periods with the discount rate β.

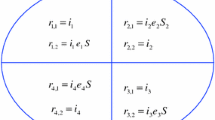
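The conflict between selves can be illustrated with the Phelps–Pollak weights D(0) = 1 and D(k) = βδ^k for k ⩾ 1, with δ = 1 as assumed in the text. The two payment streams and β = 0.8 below are hypothetical: self-0 prefers the larger, later payment, while self-1, for whom the earlier payment has become immediate, reverses that choice. With β = 1 the reversal disappears.

```python
def discount_weight(k: int, beta: float, delta: float = 1.0) -> float:
    """Phelps-Pollak weight D(k): 1 for k = 0 and beta * delta**k for k >= 1."""
    return 1.0 if k == 0 else beta * delta ** k


def present_value(stream: dict, start: int, beta: float) -> float:
    """Value of a {period: payoff} stream from the viewpoint of self-`start`."""
    return sum(discount_weight(t - start, beta) * x
               for t, x in stream.items() if t >= start)


beta = 0.8
early = {1: 10.0}  # hypothetical: 10 paid at period 1
late = {2: 12.0}   # hypothetical: 12 paid at period 2

# Self-0 weights both periods by beta (delta = 1): 9.6 versus 8.0, so "late" wins.
self0_prefers_late = present_value(late, 0, beta) > present_value(early, 0, beta)
# Self-1 receives "early" immediately: 9.6 versus 10.0, so "early" now wins.
self1_prefers_late = present_value(late, 1, beta) > present_value(early, 1, beta)
```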

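Therefore, the expected payoffs of self-2, self-1 and self-0 may be expressed recursively. If signal σ has been perceived, self-2's expected payoff is:

$$V_2(x_\sigma, \sigma, C) = x_\sigma\,[R_2 - E(\theta \mid \sigma, C)\,K] - (1 - x_\sigma)\,E(\theta \mid \sigma, C)\,K'.$$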

Likewise, self-1's expected payoff is

$$V_1(x_\sigma, \sigma, C) = (1 - x_\sigma)\,D + \beta\,V_2(x_\sigma, \sigma, C),$$

where (1−xσ)D is self-1's current payoff and βV2(xσ,σ,C) his discounted expected payoff for period 2. Finally, self-0's expected payoff can be expressed as follows:

When self-1 knows the state S∈{L, H} with certainty, let BS(β) denote the difference between self-1's expected payoff when he carries on the project and his expected payoff when he stops it:

$$B_S(\beta) = \beta\,(R_2 - \theta_S K) - (D - \beta\,\theta_S K').$$

We assume that it is always more profitable for self-1 to stop (continue) his project when he knows with certainty that the true state of the world is H (L). Therefore, we suppose that:

$$B_H(\beta) < 0 < B_L(\beta).$$

The agent has the possibility not to acquire information (C=0) and thus to remain uninformed (No Learning). Under no learning, define self-1's expected payoff when he decides to complete the project as follows:

$$V_1^{NL}(1) = \beta\,[R_2 - E(\theta)\,K],$$

and self-1's expected payoff when he decides to stop the project by

$$V_1^{NL}(0) = D - \beta\,E(\theta)\,K'.$$

We consider that an agent who starts a project without information always completes it. It is then more profitable for him to continue the project than to stop it at period 1. Formally, for β⩽1:

$$V_1^{NL}(1) > V_1^{NL}(0).$$

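For all β⩽1, define θ̂(β) as the probability of damage that makes the agent indifferent between continuing the project and stopping it at period 1. That is:

$$\beta\,[R_2 - \hat\theta(\beta)\,K] = D - \beta\,\hat\theta(\beta)\,K', \qquad \text{i.e.} \qquad \hat\theta(\beta) = \frac{\beta R_2 - D}{\beta\,(K - K')}.$$

Hence, for β⩽1, at period 1, it is more profitable for an uninformed agent to continue the project than to stop it if:

$$E(\theta) < \hat\theta(\beta). \qquad (1)$$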

Under such an assumption, we consider that the uninformed agent adopts a non-precautionary behaviour. Indeed, by ignoring information, he makes no effort either to reduce the uncertainty linked to his project or to protect human health and the environment.

To ensure that an uninformed agent always chooses to complete his project, we restrict our study to an agent with hyperbolic discounting preferences that satisfy condition (1). Since θ̂(β) is increasing with β,Footnote 6 condition (1) is equivalent to β˜<β, with β˜ given by E(θ)=θ̂(β˜).Footnote 7 Therefore, we analyse the hyperbolic agent's behaviour with a discount rate β∈(β˜, 1).
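The threshold θ̂(β) and the bound β˜ can be computed directly. The sketch below takes the no-learning payoffs β[R2 − E(θ)K] for continuing and D − βE(θ)K′ for stopping (self-1's payoff for xσ = 1 and xσ = 0 when no signal is received), solves their equality for θ̂(β), and checks condition (1) together with the assumption B_H(β) < 0 < B_L(β). All parameter values are hypothetical, chosen only so that K′ < K and θL < θH.

```python
# Hypothetical parameter values (not taken from the paper).
R2, K, K_PRIME, D = 100.0, 200.0, 50.0, 40.0
P0, THETA_H, THETA_L = 0.3, 0.8, 0.1
E_THETA = P0 * THETA_H + (1.0 - P0) * THETA_L  # prior expected probability of damage


def continue_payoff(beta: float, e_theta: float) -> float:
    """Self-1's payoff from carrying on: beta * (R2 - E(theta) K)."""
    return beta * (R2 - e_theta * K)


def stop_payoff(beta: float, e_theta: float) -> float:
    """Self-1's payoff from stopping: D now, then beta * (-E(theta) K')."""
    return D - beta * e_theta * K_PRIME


def theta_hat(beta: float) -> float:
    """Damage probability at which continue_payoff equals stop_payoff."""
    return (beta * R2 - D) / (beta * (K - K_PRIME))


def b_state(beta: float, theta_s: float) -> float:
    """B_S(beta): continuing minus stopping when the state S is known."""
    return continue_payoff(beta, theta_s) - stop_payoff(beta, theta_s)


# beta_tilde solves E(theta) = theta_hat(beta_tilde); condition (1) <=> beta > beta_tilde.
BETA_TILDE = D / (R2 - E_THETA * (K - K_PRIME))

for beta in (0.8, 0.9, 1.0):
    assert E_THETA < theta_hat(beta)                                     # condition (1)
    assert continue_payoff(beta, E_THETA) > stop_payoff(beta, E_THETA)   # uninformed agent carries on
    assert b_state(beta, THETA_H) < 0 < b_state(beta, THETA_L)           # B_H < 0 < B_L
```

Because ∂θ̂/∂β = D/(β²(K − K′)) > 0 whenever K′ < K, the threshold rises with β, which is why condition (1) reduces to the single bound β > β˜.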

The optimal decision making

In this section, we present the agent's optimal decision making for three kinds of preferences. First, time-consistent preferences imply that the agent's optimal decision is sustained as circumstances change over time, so that his future selves act according to the preferences of his current self. In other words, a time-consistent agent gives the same weight to the current period and to future ones. Second, under hyperbolic discounting preferences, the agent's future selves choose strategies that are optimal for them, even if these strategies are suboptimal from the current self's point of view. With this kind of preferences, the agent gives a larger weight to the present and the same weight to all future periods. Finally, we analyse hyperbolic discounting preferences with self-control in the future. A hyperbolic agent with self-control gives a larger weight to the first period, but in the following periods he behaves as a time-consistent agent, that is, he gives the same weight to what will then be the current and future periods.

Regardless of the preferences, at each period t the agent is represented by his self-t. At period 0, self-0 chooses how much he is willing to pay to acquire information, knowing that at period 1 self-1 decides whether to stop or to continue the project. We use backward induction to characterize the agent's optimal decisions.

Stopping or continuing the project

We start by studying self-1's behaviour. Regardless of the preferences, for σ∈{h, l} and C⩾0, self-1 continues the project if his expected payoff from continuing is higher than his expected payoff from stopping. That is:

$$V_1(1, \sigma, C) > V_1(0, \sigma, C).$$

Since a time-consistent agent and a hyperbolic type with self-control give the same weight to period 1 and period 2, that is, β=1 between both periods, for σ∈{h, l} and C⩾0 their expected payoff is as follows:

$$V_1(x_\sigma, \sigma, C) = (1 - x_\sigma)\,D + V_2(x_\sigma, \sigma, C).$$

By contrast, a hyperbolic agent prefers the present, that is, β<1 between period 1 and period 2. Therefore, for σ∈{h, l}, C⩾0 and β<1, his expected payoff is:

$$V_1(x_\sigma, \sigma, C) = (1 - x_\sigma)\,D + \beta\,V_2(x_\sigma, \sigma, C).$$

For σ∈{h, l} and C⩾0, denote the equilibrium strategy by xσ* and the revised expected probability of damage by E(θ∣σ,C)=P(H∣σ,C)θH+(1−P(H∣σ,C))θL. The conditions under which self-1 stops or continues his project are given by the following proposition.

Proposition 1


For σ∈{h, l}, C⩾0 and β∈(β˜,1]: If E(θ∣σ,C)<θ̂(β), then the agent continues the project, that is, xσ*=1; if θ̂(β)<E(θ∣σ,C), then the agent stops the project, that is, xσ*=0; finally, if θ̂(β)=E(θ∣σ,C), then the agent is indifferent between stopping and continuing the project, that is, xσ*∈{0, 1}.

Owing to condition (1), and since E(θ∣l,C)⩽E(θ) (Lemma 1 below), self-1 always continues the project after signal l, whatever the preferences. He therefore faces two possible strategies. In the first, he always continues the project regardless of the signal; in the second, he stops the project when he receives signal h (indicating the most dangerous state of the world) and continues it when he receives signal l. From an environmental point of view, we may qualify the latter strategy as a cautious one. Indeed, under this strategy the agent prefers to withdraw the project when there is a possibility that the most dangerous state will be revealed. He is then more willing to prevent potential damage.
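Proposition 1 reduces self-1's choice to a comparison between the revised expected probability of damage and θ̂(β). A minimal sketch, using the hypothetical precision f(C) = 1 − ½e^(−C) and illustrative parameters (none of them from the paper), confirms that only the two strategies discussed above arise, {xh=1, xl=1} and {xh=0, xl=1}. Where Proposition 1 leaves self-1 indifferent, the sketch breaks the tie towards continuing, which is an arbitrary convention.

```python
import math

# Hypothetical parameters (not from the paper).
P = dict(r2=100.0, k=200.0, k_prime=50.0, d=40.0, p0=0.3, theta_h=0.8, theta_l=0.1)


def precision(cost):
    return 1.0 - 0.5 * math.exp(-cost)  # hypothetical f(C)


def expected_damage(signal, cost, p=P):
    """E(theta | signal, C) from the Bayesian posterior on state H."""
    f = precision(cost)
    like_h, like_l = (f, 1.0 - f) if signal == "h" else (1.0 - f, f)
    post = p["p0"] * like_h / (p["p0"] * like_h + (1.0 - p["p0"]) * like_l)
    return post * p["theta_h"] + (1.0 - post) * p["theta_l"]


def theta_hat(beta, p=P):
    return (beta * p["r2"] - p["d"]) / (beta * (p["k"] - p["k_prime"]))


def self1_strategy(cost, beta, p=P):
    """Proposition 1 for both signals: returns (x_h, x_l); 1 = continue, 0 = stop."""
    threshold = theta_hat(beta, p)
    return tuple(1 if expected_damage(s, cost, p) <= threshold else 0 for s in ("h", "l"))


for beta in (0.8, 1.0):
    seen = {self1_strategy(0.05 * i, beta) for i in range(101)}  # C from 0 to 5
    assert seen <= {(1, 1), (0, 1)}
```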

Lemma 1


For all C⩾0, we have E(θ∣l, C)⩽E(θ)⩽E(θ∣h, C), and E(θ∣h, C) is increasing with C while E(θ∣l, C) is decreasing with C.

Hence, regardless of the preferences, self-0's decision on how much to spend on information affects self-1's choices. Indeed, a higher cost increases information precision at period 1. This both improves self-1's perception of the true state of the world and reinforces the decision to stop the project when self-1 receives signal h, that is, the signal of the most dangerous state of the world.
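Lemma 1 can be checked numerically on a grid of costs. The sketch assumes the hypothetical precision f(C) = 1 − ½e^(−C) and illustrative values p0 = 0.3, θH = 0.8, θL = 0.1; it verifies the ordering E(θ∣l,C) ⩽ E(θ) ⩽ E(θ∣h,C) and the opposite monotonicity of the two revised expectations. A numerical check of this kind illustrates the lemma for one parameterization; it does not replace the proof in the Appendix.

```python
import math

P0, THETA_H, THETA_L = 0.3, 0.8, 0.1              # hypothetical values
E_THETA = P0 * THETA_H + (1.0 - P0) * THETA_L     # prior E(theta)


def precision(cost):
    return 1.0 - 0.5 * math.exp(-cost)            # hypothetical f(C)


def expected_damage(signal, cost):
    """E(theta | signal, C) = P(H | signal, C) theta_H + (1 - P(H | signal, C)) theta_L."""
    f = precision(cost)
    like_h, like_l = (f, 1.0 - f) if signal == "h" else (1.0 - f, f)
    post = P0 * like_h / (P0 * like_h + (1.0 - P0) * like_l)
    return post * THETA_H + (1.0 - post) * THETA_L


costs = [0.1 * i for i in range(51)]              # C = 0, 0.1, ..., 5
high = [expected_damage("h", c) for c in costs]
low = [expected_damage("l", c) for c in costs]

tol = 1e-12
# E(theta | l, C) <= E(theta) <= E(theta | h, C) for every C on the grid ...
assert all(l <= E_THETA + tol and h >= E_THETA - tol for l, h in zip(low, high))
# ... and E(theta | h, C) rises while E(theta | l, C) falls as C grows.
assert all(a < b for a, b in zip(high, high[1:]))
assert all(a > b for a, b in zip(low, low[1:]))
```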

Information acquisition

We now turn to self-0's optimal decision to acquire information regarding the form of his preferences.

Agent with time-consistent preferences

Self-0 of a time-consistent agent (β=1) chooses optimally how much he is willing to pay in order to acquire information knowing that self-1 either always decides to continue the project regardless of the signal (case 1), or only chooses to continue it if he receives signal l (case 2).

Under time-consistent preferences, define by $C^*_{x_h x_l}(1)$ the optimal information cost from self-0's perspective under the strategy {xh, xl}. This cost maximizes self-0's expected payoff, that is, it solves the following problem:

$$\max_{C \geqslant 0} V_0(x_h, x_l, C).$$

Let us first study case 1, in which self-1 always continues the project, that is, {xh=1, xl=1}. Self-0's expected payoff under this strategy is:

Since V0(1, 1, C) is decreasing with C, it is clear that, from self-0's perspective, the optimal information cost is C11*(1)=0. In such a case, the signal has no influence on self-1's behaviour, so it is not worthwhile for him to acquire it.

Let us turn to case 2, in which self-1 gives up the project only if he receives signal h, that is, {xh=0, xl=1}. Self-0's expected payoff under this strategy is as follows:

Lemma 2


(i) C01*(1) is characterized by:

(ii) C01*(1) is strictly positive.

Self-0 anticipates that self-1 continues the project only if he receives signal l. The signal is then valuable, and it is optimal to pay a positive amount to obtain it.

Finally, define C*(1) as the optimal information level over both strategies. To determine C*(1), we compare self-0's expected payoffs under the two strategies and select the level of expense that yields, from self-0's perspective, the highest expected payoff.
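This comparison can be sketched numerically. Self-0's payoff expression is not reproduced in this section, so the sketch assumes V0(xh, xl, C) = −I − C + β Σσ P(σ∣C)[(1−xσ)D + V2(xσ,σ,C)], with I and C paid at period 0 and periods 1 and 2 weighted by β (δ = 1); the precision f(C) = 1 − ½e^(−C) and all parameter values are hypothetical as well. A grid search then returns C11*(1) = 0 and an interior C01*(1), and the final line mirrors the comparison behind Proposition 2.

```python
import math

# Hypothetical parameters (not from the paper).
R2, K, K_PRIME, D, I = 100.0, 200.0, 50.0, 40.0, 30.0
P0, THETA_H, THETA_L = 0.3, 0.8, 0.1


def precision(cost):
    return 1.0 - 0.5 * math.exp(-cost)  # hypothetical f(C)


def self0_payoff(x_h, x_l, cost, beta=1.0):
    """ASSUMED V0 = -I - C + beta * sum over signals of P(signal | C) [(1 - x) D + V2]."""
    f = precision(cost)
    total = 0.0
    for signal, x in (("h", x_h), ("l", x_l)):
        like_h, like_l = (f, 1.0 - f) if signal == "h" else (1.0 - f, f)
        p_sig = P0 * like_h + (1.0 - P0) * like_l                   # P(signal | C)
        e_theta = (P0 * like_h * THETA_H + (1.0 - P0) * like_l * THETA_L) / p_sig
        v2 = R2 - e_theta * K if x == 1 else -e_theta * K_PRIME    # self-2's payoff
        total += p_sig * ((1 - x) * D + v2)
    return -I - cost + beta * total


def best_cost(x_h, x_l, beta=1.0, c_max=10.0, steps=10_000):
    """Grid search over [0, c_max] for the cost maximizing V0 under a fixed strategy."""
    grid = [c_max * i / steps for i in range(steps + 1)]
    return max(grid, key=lambda c: self0_payoff(x_h, x_l, c, beta))


c11 = best_cost(1, 1)   # always continue: V0 falls with C, so the optimum is C = 0
c01 = best_cost(0, 1)   # stop on signal h: interior optimum
# Pay c01 only if it beats staying uninformed (the comparison behind Proposition 2).
c_star = c01 if self0_payoff(0, 1, c01) > self0_payoff(1, 1, 0.0) else 0.0
```

A grid search is used for transparency; with a smooth, concave f a one-dimensional optimizer would serve equally well.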

Proposition 2


If

then self-0 pays C*(1)=C01*(1) to acquire information; otherwise, C*(1)=0, that is, self-0 remains uninformed.

Note that if self-0 could obtain the level of information precision f(C01*(1)) without paying, that is, for free, condition (3) would always be satisfied. Indeed, according to Proposition 1, the right-hand side of condition (3) is always positive.Footnote 8 The cost of information may thus deter its acquisition: self-0 may stay ignorant if information is too costly.

Agent with hyperbolic discounting preferences

Self-0 of a hyperbolic agent (β∈(β˜, 1)) selects the optimal level of information knowing that his future selves may deviate from this optimal choice. Our agent is sophisticated: he recognizes that there is a conflict between his early preferences and his later ones, unlike a naive agent, who does not foresee such a conflict and believes that in the future he will behave as a time-consistent agent.

As before, two cases arise. In the first case, self-1 always completes his project whatever the signal; in the second, he stops it if he receives signal h.

Under hyperbolic preferences, define by $C^*_{x_h x_l}(\beta)$ the optimal information cost from self-0's perspective under strategy {xh, xl}. This cost is characterized as follows: self-0 first anticipates that self-1 will implement the strategy {xh, xl}, and thus chooses the cost that maximizes his expected payoff under this anticipated strategy. If this cost is high enough, that is, if the resulting information is precise enough, to ensure that self-1 actually chooses the anticipated strategy, then this cost is optimal. Otherwise, self-0 pays the lowest cost that leads self-1 to implement strategy {xh, xl}.

Therefore, if self-1 always continues the project (case 1), self-0's expected payoff under strategy {xh=1, xl=1} is given by:

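C11*(β) solves the following problem:

$$\max_{C \geqslant 0} V_0(1, 1, C) \quad \text{subject to} \quad E(\theta \mid l, C) \leqslant E(\theta \mid h, C) < \hat\theta(\beta).$$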

According to Proposition 1, the constraint E(θ∣l,C)⩽E(θ∣h,C)<θ̂(β) ensures that the information cost chosen by self-0 leads self-1 to continue the project regardless of the signal. As V0(1, 1, C) is decreasing with C, it is not optimal to acquire information. Moreover, according to condition (1), when self-0 decides not to acquire information, self-1 always chooses to carry on the project. Not acquiring information therefore satisfies the constraint, and C11*(β)=0.

If self-1 gives up the project only when he receives signal h (case 2), self-0's expected payoff under strategy {xh=0, xl=1} is given by:

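In this case, strategy {xh=0, xl=1} must be ex post optimal for self-1. Thus, C01*(β) solves the following problem:

$$\max_{C \geqslant 0} V_0(0, 1, C) \quad \text{subject to} \quad E(\theta \mid l, C) \leqslant \hat\theta(\beta) \leqslant E(\theta \mid h, C).$$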

To determine C01*(β), let us first consider C01(β), the optimal information cost when self-0 anticipates that self-1 will implement the strategy {xh=0, xl=1}, ignoring self-1's incentive constraint. C01(β) solves the following problem:

$$\max_{C \geqslant 0} V_0(0, 1, C).$$

Lemma 3


(i) For β∈[β˜,1), C01(β) is characterized by:

(ii) C01(β) is strictly positive.

As for a time-consistent agent, if self-0 of a hyperbolic type anticipates that self-1 only continues the project if he receives signal l, then the signal is useful for him. Thus, self-0 is willing to pay a positive cost to acquire it.

In order to select C01*(β), self-0 has to check that C01(β) is high enough to prevent self-1 from deviating from strategy {xh=0, xl=1}. In other words, according to Proposition 1, we have to verify that C01(β) satisfies the constraint E(θ∣l,C)<θ̂(β)<E(θ∣h,C). Otherwise, from self-0's perspective, the signal bought at cost C01(β) is not informative enough, and self-0 selects the smallest information level that leads self-1 to implement the strategy {xh=0, xl=1}. Let us denote by Ĉ(β) the smallest information cost that makes strategy {xh=0, xl=1} optimal for self-1, that is, the smallest C⩾0 such that E(θ∣l,C)⩽θ̂(β)⩽E(θ∣h,C).
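Because E(θ∣h,C) increases with C (Lemma 1), Ĉ(β) can be located by bisection. The sketch again assumes the hypothetical precision f(C) = 1 − ½e^(−C) and illustrative parameters. Only the h-side of the constraint needs searching: by Lemma 1 and condition (1), E(θ∣l,C) ⩽ E(θ) < θ̂(β) holds for every C. The search interval [0, 50] is an arbitrary bound of the sketch.

```python
import math

# Hypothetical parameters (not from the paper).
R2, K, K_PRIME, D = 100.0, 200.0, 50.0, 40.0
P0, THETA_H, THETA_L = 0.3, 0.8, 0.1
E_THETA = P0 * THETA_H + (1.0 - P0) * THETA_L
BETA_TILDE = D / (R2 - E_THETA * (K - K_PRIME))   # solves E(theta) = theta_hat(beta)


def precision(cost):
    return 1.0 - 0.5 * math.exp(-cost)            # hypothetical f(C)


def expected_damage_high(cost):
    """E(theta | h, C); by Lemma 1 it increases with C."""
    f = precision(cost)
    post = P0 * f / (P0 * f + (1.0 - P0) * (1.0 - f))
    return post * THETA_H + (1.0 - post) * THETA_L


def theta_hat(beta):
    return (beta * R2 - D) / (beta * (K - K_PRIME))


def c_hat(beta, c_max=50.0, tol=1e-10):
    """Smallest C >= 0 with E(theta | h, C) >= theta_hat(beta), found by bisection.

    The other half of the constraint, E(theta | l, C) <= theta_hat(beta), holds for
    every C because E(theta | l, C) <= E(theta) < theta_hat(beta).
    """
    target = theta_hat(beta)
    if expected_damage_high(0.0) >= target:
        return 0.0
    if expected_damage_high(c_max) < target:
        raise ValueError("no cost up to c_max makes stopping on signal h optimal")
    lo, hi = 0.0, c_max
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if expected_damage_high(mid) >= target:
            hi = mid
        else:
            lo = mid
    return hi
```

At β = β˜ the threshold θ̂(β˜) equals E(θ), so the search stops at (numerically) zero cost, which matches f(Ĉ(β˜)) = f(0) = ½ in Lemma 4(ii).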

Lemma 4


(i) For β∈[β˜,1), Ĉ(β) is characterized by:

(ii) f(Ĉ(β˜))=f(0)=½.

We then characterize the optimal level of information, C01*(β) in the following lemma.

Lemma 5


For β∈(β˜,1), if

$$f(C_{01}(\beta)) \geqslant f(\hat C(\beta)), \qquad (5)$$

then C01*(β)=C01(β). Otherwise, C01*(β)=Ĉ(β).

Hence, information precision needs to reach at least the information precision level f(Ĉ(β)) in order to avoid a deviation of strategy at period 1.

Now, we determine the optimal level of information C*(β) by comparing self-0's expected payoffs under the two strategies and selecting the level of information that yields self-0's highest expected payoff.

Proposition 3


For β∈(β˜,1) if

and (5) hold, then self-0 pays C*(β)=C01(β) to acquire information; otherwise, if either of these two conditions fails, self-0 pays nothing, C*(β)=0, and stays uninformed.

Together, conditions (5) and (6) determine when self-0 of a hyperbolic agent acquires information. Condition (5) emphasizes the role of information precision: self-0 refuses information whose degree of precision is lower than f(Ĉ(β)).

According to Proposition 1, and since θ̂(β) is increasing with β, the right-hand side of condition (6) may be negative.

Even if the right-hand side of condition (6) is positive, condition (6) may fail: the discount factor may be so low that the information cost exceeds the expected benefit, and self-0 prefers to ignore information to obtain a higher payoff.

On the other hand, if the right-hand side of condition (6) is negative, then condition (6) never holds. Expected payoff without information is higher than the one with information. It is then not optimal for self-0 to acquire information.
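Lemma 5 and Proposition 3 can be combined into a single decision rule. The sketch below rests on the same assumptions as the earlier ones: a hypothetical f(C) = 1 − ½e^(−C), illustrative parameters, and an assumed form for self-0's payoff (−I − C plus β times the expected payoffs of periods 1 and 2). Because the exact form of condition (6) is not reproduced here, the sketch uses the direct payoff comparison on which Proposition 3 is built; condition (5) is checked as f(C01(β)) ⩾ f(Ĉ(β)).

```python
import math

# Hypothetical parameters (not from the paper).
R2, K, K_PRIME, D, I = 100.0, 200.0, 50.0, 40.0, 30.0
P0, THETA_H, THETA_L = 0.3, 0.8, 0.1


def precision(cost):
    return 1.0 - 0.5 * math.exp(-cost)                 # hypothetical f(C)


def signal_stats(signal, cost):
    """Return P(signal | C) and E(theta | signal, C)."""
    f = precision(cost)
    like_h, like_l = (f, 1.0 - f) if signal == "h" else (1.0 - f, f)
    p_sig = P0 * like_h + (1.0 - P0) * like_l
    return p_sig, (P0 * like_h * THETA_H + (1.0 - P0) * like_l * THETA_L) / p_sig


def theta_hat(beta):
    return (beta * R2 - D) / (beta * (K - K_PRIME))


def self0_payoff(x_h, x_l, cost, beta):
    """ASSUMED V0 = -I - C + beta * sum over signals of P(signal | C) [(1 - x) D + V2]."""
    total = 0.0
    for signal, x in (("h", x_h), ("l", x_l)):
        p_sig, e_theta = signal_stats(signal, cost)
        v2 = R2 - e_theta * K if x == 1 else -e_theta * K_PRIME
        total += p_sig * ((1 - x) * D + v2)
    return -I - cost + beta * total


def c01(beta, c_max=10.0, steps=10_000):
    """C_01(beta): maximizer of V0(0, 1, C) on a grid, ignoring self-1's incentives."""
    grid = [c_max * i / steps for i in range(steps + 1)]
    return max(grid, key=lambda c: self0_payoff(0, 1, c, beta))


def c_hat(beta, c_max=50.0, tol=1e-10):
    """C-hat(beta): smallest C with E(theta | h, C) >= theta_hat(beta), by bisection."""
    target = theta_hat(beta)
    if signal_stats("h", 0.0)[1] >= target:
        return 0.0
    if signal_stats("h", c_max)[1] < target:
        raise ValueError("no cost up to c_max makes stopping on signal h optimal")
    lo, hi = 0.0, c_max
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if signal_stats("h", mid)[1] >= target:
            hi = mid
        else:
            lo = mid
    return hi


def c01_star(beta):
    """Lemma 5: C_01(beta) if it is precise enough, otherwise the threshold C-hat(beta)."""
    c_unc, c_min = c01(beta), c_hat(beta)
    return c_unc if precision(c_unc) >= precision(c_min) else c_min


def information_cost(beta):
    """C*(beta) in the sketch: C_01(beta) if (5) and the payoff comparison hold, else 0."""
    c_unc, c_min = c01(beta), c_hat(beta)
    precise_enough = precision(c_unc) >= precision(c_min)                          # condition (5)
    worth_it = self0_payoff(0, 1, c_unc, beta) > self0_payoff(1, 1, 0.0, beta)   # payoff comparison
    return c_unc if precise_enough and worth_it else 0.0
```

With these particular numbers information is acquired; strategic ignorance would appear for parameters under which C01(β) falls below Ĉ(β) or the payoff comparison fails.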

Suppose now that information with precision f(C01(β)) is free. If the right-hand side of condition (6) is positive, the information acquisition decision depends only on condition (5), that is, on the degree of information precision: if f(C01(β)) is high enough, namely higher than f(Ĉ(β)), self-0 acquires the free information. By contrast, if the right-hand side of condition (6) is negative, self-0 never acquires information. In fact, the probability that self-1 gives up the project is then so high that self-0 may choose not to acquire information, even if it is free, in order to avoid this risk of withdrawal.

Agent with hyperbolic discounting preferences and self-control

The expected payoff of self-0 of a hyperbolic agent with self-control is similar to that of self-0 of a hyperbolic agent. However, a hyperbolic agent with self-control behaves in the future as a time-consistent agent, so the conditions under which his self-1 continues or stops the project are the same as those of self-1 of a time-consistent agent.

Hence, the expected payoff of self-0 of a hyperbolic agent with self-control under strategy {xh=1, xl=1} is

and under strategy {xh=0, xl=1}:

Under such preferences, let us define C**(β) as the optimal level of information. Solving the problem as for the hyperbolic agent, we obtain the following proposition.

Proposition 4


For β∈(β˜,1), if condition (6) and

$$f(C_{01}(\beta)) \geqslant f(\hat C(1)) \qquad (7)$$

hold, then self-0 pays C**(β)=C01(β) to acquire information; otherwise, if either of these two conditions fails, he pays nothing, C**(β)=0, and stays uninformed.

Together, conditions (6) and (7) determine whether a hyperbolic agent with self-control acquires information.

According to Proposition 1, the right-hand side of condition (6) is always positive. However, condition (6) may not hold if the discount factor is such that the cost of acquiring information is higher than the expected benefit. In this case, a hyperbolic agent with self-control does not acquire information.

Let us turn to condition (7). This condition implies that self-0 never acquires information if its degree of precision does not reach the threshold f(Ĉ(1)). Below this threshold, self-1 does not stop the project when he receives signal h, even though this signal points to the most dangerous state of the world. Since he always continues the project, the information is useless to him.
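Condition (7) is a precision threshold. For illustration only, assume a symmetric binary signal with P(h | H) = P(l | L) = f, and a stopping rule of the form "stop when the posterior probability of H reaches θ̂". Under these assumptions, Bayes' rule gives a closed form for the smallest precision at which signal h triggers a stop. The prior p0 and the threshold θ̂ are inputs here. How they map to f(Ĉ(1)) in the paper's model is an assumption of this sketch, and the function names are hypothetical.

```python
def posterior_h(p0: float, precision: float) -> float:
    """Posterior probability of state H after signal h, for a symmetric binary signal."""
    weight_h = p0 * precision
    return weight_h / (weight_h + (1.0 - p0) * (1.0 - precision))


def min_precision(p0: float, theta_hat: float) -> float:
    """Smallest precision f such that posterior_h(p0, f) equals theta_hat.

    Solves p0*f / (p0*f + (1-p0)*(1-f)) = theta_hat for f.
    """
    a = theta_hat * (1.0 - p0)
    return a / (a + p0 * (1.0 - theta_hat))


if __name__ == "__main__":
    theta_hat = 0.6  # hypothetical stopping threshold
    for p0 in (0.2, 0.3, 0.5):  # hypothetical priors on the dangerous state H
        f_min = min_precision(p0, theta_hat)
        print(f"p0={p0}: signal h triggers a stop only if f >= {f_min:.3f}")
```

A precision of ½ leaves the posterior equal to the prior, so such a signal carries no information. In this sketch, the required precision also falls as the prior on H rises: it is about 0.857, 0.778 and 0.600 for the three priors above.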

If the precision f(C01(β)) can be obtained for free, condition (6) always holds, and only condition (7) restricts the decision to acquire information. In other words, self-0 of a hyperbolic agent with self-control refuses free information whose degree of precision is lower than f(Ĉ(1)).

Differences in behaviour according to preferences

Taking the decisions of a time-consistent agent as a benchmark for information acquisition, we now compare them with those of a hyperbolic agent and of a hyperbolic agent with self-control.

According to Proposition 1, θ̂(β) is increasing with β, so for a given information precision, hyperbolic discounting preferences favour the decision to give up the project. Compared with self-1 of a hyperbolic agent, self-1 of a time-consistent agent and self-1 of a hyperbolic agent with self-control need a higher information precision before they stop the project. Hyperbolic discounting emphasizes a taste for immediate benefits rather than for long-term ones.
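The monotonicity of θ̂(β) can be checked on a toy stopping problem. This problem is again our own assumption rather than the paper's specification. Self-1, holding posterior θ on state H, either stops now for a payoff `stop` > 0 or continues. Continuing yields a period-2 payoff `cont_h` in state H or `cont_l` in state L, with cont_h < cont_l, discounted by β. He stops when stop > β[θ·cont_h + (1 − θ)·cont_l], that is, when θ exceeds θ̂(β) = (β·cont_l − stop) / (β·(cont_l − cont_h)). This threshold equals cont_l/(cont_l − cont_h) − stop/(β(cont_l − cont_h)), which increases with β.

```python
def stopping_threshold(beta: float, stop: float, cont_h: float, cont_l: float) -> float:
    """Posterior on H above which self-1 prefers stopping in the toy model."""
    return (beta * cont_l - stop) / (beta * (cont_l - cont_h))


if __name__ == "__main__":
    for beta in (0.7, 0.85, 1.0):  # hypothetical discount factors
        print(beta, round(stopping_threshold(beta, stop=2.0, cont_h=-4.0, cont_l=6.0), 4))
```

A lower β lowers the threshold, so a present-biased self-1 abandons the project on weaker evidence of danger.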

Moreover, we observe that Eq. (2) is equivalent to Eq. (4) with β=1. This implies that C01(β) with β=1 has the same characterization as C01*(1). Therefore, C01(β) with β=1 may be interpreted as the cost that both the hyperbolic agent and the hyperbolic agent with self-control are willing to pay to get information when they behave as a time-consistent agent, that is, when their future selves act according to the preferences of their current self.Footnote 9

We also note that C01(β) is increasing with β.Footnote 10 Hence, when both types of hyperbolic agents choose to be informed, they acquire less information than a time-consistent agent. This is because a time-consistent agent puts more weight on the futureFootnote 11 and is therefore more interested in future benefits and costs than hyperbolic agents are.
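The claim that the optimal cost rises with β can be illustrated with a hypothetical functional form that does not come from the paper. Precision is f(C) = 1 − e^(−C)/2, which equals ½ (an uninformative signal) at C = 0 and rises towards 1. Self-0 maximizes β·A·f(C) − C, where A is a made-up value of precision received in the future. The first-order condition β·A·e^(−C)/2 = 1 gives C* = ln(β·A/2) when β·A > 2, and C* = 0 otherwise.

```python
import math


def precision(cost: float) -> float:
    """Hypothetical precision function: 1/2 at zero cost, increasing and concave."""
    return 1.0 - 0.5 * math.exp(-cost)


def optimal_cost(beta: float, value_of_precision: float) -> float:
    """Maximise beta * A * precision(C) - C over C >= 0 (closed form from the FOC)."""
    x = beta * value_of_precision / 2.0
    return math.log(x) if x > 1.0 else 0.0


if __name__ == "__main__":
    for beta in (0.7, 1.0):
        c = optimal_cost(beta, value_of_precision=10.0)
        print(f"beta={beta}: C*={c:.3f}, precision={precision(c):.3f}")
```

With A = 10, the sketch gives C* ≈ 1.253 (precision ≈ 0.857) for β = 0.7 and C* ≈ 1.609 (precision 0.900) for β = 1. The less patient self-0 buys less, and less precise, information.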

Let us now examine more closely the conditions related to information acquisition: condition (3) for a time-consistent agent, conditions (5) and (6) for a hyperbolic agent, and conditions (6) and (7) for a hyperbolic agent with self-control.
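The three decision rules can be summarized in a small Python function. Conditions (3) and (6) are passed in as precomputed truth values, because their expressions are not reproduced in this section. Conditions (5) and (7) are encoded as f(C01(β)) > f(Ĉ(β)) and f(C01(β)) > f(Ĉ(1)). This encoding is our reading of the cases (P1) to (P3) and (P̃1) to (P̃3) discussed below, not a quotation of the paper's equations. The function and argument names are hypothetical.

```python
from enum import Enum


class Preferences(Enum):
    TIME_CONSISTENT = "time-consistent"
    HYPERBOLIC = "hyperbolic"
    HYPERBOLIC_SELF_CONTROL = "hyperbolic with self-control"


def acquires_information(kind: Preferences, cond3: bool, cond6: bool,
                         f_c01: float, f_chat_beta: float, f_chat_one: float) -> bool:
    """Return True if self-0 pays C01 to acquire information.

    f_c01: precision f(C01(beta)) bought at the candidate cost
    f_chat_beta: hyperbolic self-1's relevance threshold f(Ĉ(beta))
    f_chat_one: time-consistent self-1's relevance threshold f(Ĉ(1))
    """
    if kind is Preferences.TIME_CONSISTENT:
        return cond3                                   # condition (3)
    if kind is Preferences.HYPERBOLIC:
        return cond6 and f_c01 > f_chat_beta           # conditions (5) and (6)
    return cond6 and f_c01 > f_chat_one                # conditions (6) and (7)


if __name__ == "__main__":
    # Hypothetical values with f(Ĉ(beta)) < f(C01(beta)) < f(Ĉ(1))
    inputs = dict(cond3=True, cond6=True, f_c01=0.70, f_chat_beta=0.60, f_chat_one=0.75)
    for kind in Preferences:
        print(kind.value, acquires_information(kind, **inputs))
```

Because Ĉ and f are increasing, f(Ĉ(β)) ⩽ f(Ĉ(1)) for β ⩽ 1. Any input that passes the self-control rule therefore also passes the hyperbolic one, and the example shows the converse failing.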

Regarding condition (6), its fulfilment can be characterized as follows:

Lemma 6

If condition (3) holds, then there exists a threshold value of β such that condition (6) does not hold for all β below this threshold and holds for all β above it. Otherwise, for all β∈(β˜,1], condition (6) does not hold.

Self-0 of a time-consistent agent may acquire information while self-0 of a hyperbolic agent and self-0 of a hyperbolic agent with self-control do not. Both hyperbolic types' self-0 apply a lower discount factor, so they have a stronger preference for the present than self-0 of a time-consistent agent. The cost of information may then outweigh the discounted future payoffs, leading to a refusal of information. For a hyperbolic agent, condition (6) may also fail for a second reason: the discount factor between periods 1 and 2 creates a stronger taste for immediate gratification at period 1. Anticipating that self-1 may prefer giving up the project, self-0 prefers staying uninformed.
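The present bias at work here is commonly modelled with quasi-hyperbolic (β–δ) discounting. The sketch below adds a long-run factor δ that this section does not use; δ = 1 recovers the β-only case. It lists the weights a self puts on the current and following periods and shows why self-0 and self-1 trade off periods 1 and 2 differently.

```python
def discount_weights(beta: float, delta: float = 1.0, horizon: int = 3) -> list[float]:
    """Weights 1, beta*delta, beta*delta**2, ... over `horizon` periods from today."""
    return [1.0] + [beta * delta ** t for t in range(1, horizon)]


if __name__ == "__main__":
    beta, delta = 0.7, 1.0  # hypothetical values
    self0 = discount_weights(beta, delta, horizon=3)  # periods 0, 1, 2 seen from period 0
    self1 = discount_weights(beta, delta, horizon=2)  # periods 1, 2 seen from period 1
    print("self-0 weight of period 2 relative to period 1:", self0[2] / self0[1])
    print("self-1 weight of period 2 relative to period 1:", self1[1] / self1[0])
```

Self-0 trades periods 1 and 2 at rate δ, whereas self-1 trades them at rate βδ. With β < 1, self-1 is more tempted by the period-1 payoff of abandoning the project than self-0 would like.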

The existing literature also emphasizes this result on the hyperbolic type. As underlined by Carrillo and Mariotti (2000), hyperbolic preferences have a direct impact that may lead the agent to ignore information. An agent with strong preferences for the present is more willing to withdraw his project and earn money now than to wait for future payoffs and potentially suffer a financial cost. Moreover, according to Akerlof (1991), a hyperbolic agent always postpones a costly activity. It is thus not surprising that a hyperbolic agent may choose to postpone his acquisition of information, which here amounts to not acquiring it at all.

Let us now characterize condition (5). It imposes a minimum information precision to ensure that a hyperbolic agent's future self takes into account the signal he receives. However, the specifications under which this condition is satisfied are not straightforward. According to Part (ii) of Lemma 4, f(Ĉ(β˜)) = ½ < f(C01(β˜)). Beyond β˜, however, the general form of our precision function does not allow us to determine whether f(Ĉ(β)) is higher than, equal to, or lower than f(C01(β)). Three possibilities therefore arise:

- (P1): There is no intersection between f(Ĉ(β)) and f(C01(β)); that is, for all β∈(β˜,1), f(Ĉ(β))<f(C01(β));
- (P2): There exists one intersection between f(Ĉ(β)) and f(C01(β)). Define β1∈(β˜,1) such that f(Ĉ(β1)) = f(C01(β1)). Then, for all β∈(β˜, β1), f(Ĉ(β))<f(C01(β)) while for all β∈(β1,1), f(C01(β))⩽f(Ĉ(β));
- (P3): There exist several intersections between f(Ĉ(β)) and f(C01(β)): for some β∈(β˜, 1), f(Ĉ(β))<f(C01(β)), and for the others f(C01(β))⩽f(Ĉ(β)).
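When f and C01 are given explicitly, which of (P1), (P2) or (P3) applies can be checked numerically. The check counts the sign changes of f(C01(β)) − f(Ĉ(β)) on a fine grid over (β˜, 1). The curves below are hypothetical stand-ins chosen only to produce each case, with β˜ = 0.2. A grid can miss tangencies or crossings closer together than its step, so this is a diagnostic rather than a proof.

```python
import math


def classify_possibility(f_chat, f_c01, beta_tilde, n=10_000):
    """Return "P1", "P2" or "P3" from the number of crossings on a grid over (beta_tilde, 1).

    Assumes f_c01 - f_chat > 0 near beta_tilde, as Part (ii) of Lemma 4 states.
    """
    betas = [beta_tilde + (1.0 - beta_tilde) * (i + 1) / (n + 1) for i in range(n)]
    above = [f_c01(b) - f_chat(b) > 0 for b in betas]
    crossings = sum(1 for left, right in zip(above, above[1:]) if left != right)
    if crossings == 0:
        return "P1"
    return "P2" if crossings == 1 else "P3"


# Hypothetical precision curves on (0.2, 1)
def f_c01(b):
    return 0.55 + 0.3 * b


def f_chat_p1(b):
    return 0.5 + 0.2 * (b - 0.2)


def f_chat_p2(b):
    return 0.5 + 0.5 * (b - 0.2)  # crosses f_c01 once, at b = 0.75


def f_chat_p3(b):
    return f_c01(b) - 0.05 * math.cos(3 * math.pi * (b - 0.2) / 0.8)


if __name__ == "__main__":
    for name, f_chat in [("p1", f_chat_p1), ("p2", f_chat_p2), ("p3", f_chat_p3)]:
        print(name, classify_possibility(f_chat, f_c01, beta_tilde=0.2))
```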

Let us now characterize condition (7). As for condition (5), three possibilities arise when considering the optimal level of information:

- (P̃1): There is no intersection between f(Ĉ(1)) and f(C01(β)); that is, for all β∈(β˜, 1), f(Ĉ(1))<f(C01(β));
- (P̃2): There exists one intersection between f(Ĉ(1)) and f(C01(β));
- (P̃3): There is no intersection between f(Ĉ(1)) and f(C01(β)); that is, for all β∈(β˜, 1), f(Ĉ(1))⩾f(C01(β)).

Time-consistent preferences vs. hyperbolic preferences

With regard to these conditions, let us first consider possibility (P1). Condition (5) is then always satisfied: the minimum precision required for the signal to be relevant is lower than the precision provided by C01(β). In such a case, a hyperbolic agent's decision to get information depends only on condition (6).

According to Lemma 6, if a time-consistent agent does not acquire information, a hyperbolic one does not either. In fact, information is too costly for both types of agent. On the other hand, even if a time-consistent agent acquires information, a hyperbolic one may remain ignorant.

As depicted in Figure 1, below the threshold of Lemma 6, a hyperbolic agent has such strong preferences for the present that he prefers ignoring the information in order to avoid a premature stop of his project. Carrillo and Mariotti (2000) view such strategic ignorance as a way to prevent the consumption of a potentially dangerous product. In our case, by contrast, it describes a dangerous behaviour, which favours innovation to the detriment of any precautionary effort.

On the other hand, above this threshold, the agent is willing to pay to be informed and follows the strategy {x_h = 0, x_l = 1}. By acquiring information to reduce the uncertainty about potential risks, the hyperbolic agent adopts a precautionary behaviour. We note that the optimal level of information increases with β. Therefore, a time-consistent agent is willing to pay a higher information cost than a hyperbolic agent. Indeed, as previously underlined, a time-inconsistent agent is more concerned with current rewards and cares less about a potential delayed financial cost.

Tables 1 and 2 briefly consider possibility (P2). This case is more intricate, since conditions (3), (5) and (6) all interact in the agent's decision to get information.

Let us consider the two cases (Table 1) in which a time-consistent agent always acquires information (i.e., condition (3) holds and thus the threshold of Lemma 6 exists).

First, when this threshold is ⩽β1, the hyperbolic agent may prefer staying uninformed even if his discount factor is close to one, while he chooses to get information for lower values of β. This is because the minimum precision imposed by condition (5) is then very high. No information cost allows the agent to reach this minimum precision, even though such a cost would provide precise enough information to fulfil condition (6).

Secondly, when this threshold is >β1, getting enough precision is always too costly for a hyperbolic agent. No cost provides useful information, so that condition (5) holds, while also satisfying condition (6). In this case, for any value of β<1, a hyperbolic agent never acquires information while a time-consistent agent always does.

The results in Table 2 show that, in these cases, acquiring information is not optimal, whatever the form of the preferences. If the cost of the information that would provide enough precision to a time-consistent agent is too high, condition (3) is not satisfied. In such a case, the threshold of Lemma 6 does not exist and condition (6) cannot be fulfilled. In other words, the level of information that would give a hyperbolic agent enough precision on the dangerous state H is too costly relative to the expected payoff he could get with it, just as for a time-consistent agent.

Finally, since possibility (P3) is a mix of possibilities (P1) and (P2), it raises no new results, and we do not detail it.

Time-consistent preferences vs. hyperbolic preferences with self-control

Let us first consider possibility (P̃1). Condition (7) is then always satisfied, and we obtain the same results as for possibility (P1) described above.

Regarding possibility (P̃2), conditions (3), (6) and (7) have to be characterized to determine the optimal cost of information that the hyperbolic agent with self-control is willing to pay. Let us first consider condition (7). The level of information precision from which self-1 of a hyperbolic agent with self-control decides to implement strategy {x_h = 0, x_l = 1} is independent of β, because his self-1 and self-2 behave as time-consistent selves. Define β2∈(β˜, 1) such that f(Ĉ(1))=f(C01(β2)). Since a higher β implies a higher C01(β) and since f is increasing, condition (7) is not satisfied below β2 while it is satisfied above β2.

When conditions (3) and (6) are also taken into account, two cases arise. Suppose first that the cost of information that provides a signal precise enough to implement the strategy {x_h = 0, x_l = 1} satisfies conditions (3) and (6), and that β is high enough for conditions (6) and (7) to hold simultaneously. The agent then always chooses to pay this cost and thus to acquire information. Suppose instead that the cost needed to get a useful signal does not satisfy conditions (3) and (6), or that the agent's preferences for the present are too strong. The hyperbolic agent then prefers staying ignorant. Moreover, according to Lemma 6, if a time-consistent agent does not satisfy condition (3), a hyperbolic agent with self-control does not satisfy condition (6) either. Then neither type of agent acquires information.
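Under (P̃2), β2 solves f(C01(β2)) = f(Ĉ(1)). Because f(C01(β)) is increasing in β, β2 can be located by bisection. The sketch below uses a hypothetical increasing function for f(C01(β)) and a hypothetical value for f(Ĉ(1)). It returns None when the target is not crossed inside (β˜, 1), which corresponds to (P̃1) or (P̃3).

```python
from typing import Callable, Optional


def find_beta2(precision_of_c01: Callable[[float], float], target: float,
               beta_tilde: float, tol: float = 1e-10) -> Optional[float]:
    """Bisection for beta2 with precision_of_c01(beta2) == target on (beta_tilde, 1).

    precision_of_c01 must be increasing, as f(C01(beta)) is in the text.
    """
    lo, hi = beta_tilde, 1.0
    if precision_of_c01(lo) >= target:
        return None  # condition (7) holds on the whole interval: case (P~1)
    if precision_of_c01(hi) <= target:
        return None  # condition (7) never holds: case (P~3)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if precision_of_c01(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    # Hypothetical f(C01(beta)) = 0.55 + 0.3*beta and f(Ĉ(1)) = 0.73
    print(find_beta2(lambda b: 0.55 + 0.3 * b, target=0.73, beta_tilde=0.2))
```

With these made-up inputs, the bisection converges to β2 ≈ 0.6. Below that value condition (7) fails; above it, condition (7) holds.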

Finally, let us turn to possibility (P̃3). In this case, condition (7) is never satisfied. A hyperbolic agent with self-control therefore never gets information, even though he behaves as a time-consistent agent in the future. The signal would never lead his self-1 to stop the project, so paying for it would only lower his expected payoff, and he prefers staying ignorant.

Hyperbolic preferences vs. hyperbolic preferences with self-control

Condition (6) is common to both types of hyperbolic agents, that is, with and without self-control. Therefore, the difference between their decisions to acquire information comes from the fulfilment of conditions (5) and (7).

According to Propositions 3 and 4, since f and Ĉ are increasing, f(Ĉ(β)) ⩽ f(Ĉ(1)) for all β ⩽ 1. Hence, whenever condition (7) holds, condition (5) is also satisfied. The reverse, however, is not always true. When a signal h is produced, a hyperbolic agent without self-control requires less precise information to stop the project than he would if he had a self-control capacity. Such an agent is more concerned with current rewards and more willing to give up the project in order to recover part of his investment. Behaving as a time-consistent agent in the future raises the minimum degree of information precision that makes the information relevant. Thus, while a hyperbolic agent acquires information, a hyperbolic agent with self-control may remain ignorant, because the signal produced would not lead him to withdraw the project when it is dangerous.

To sum up, we find that all types of agents, regardless of their preferences, always acquire information unless its cost exceeds the direct benefits. Nevertheless, a hyperbolic agent may remain strategically ignorant and, when he does acquire information, he acquires less information than a time-consistent agent. Likewise, a hyperbolic agent who behaves as a time-consistent agent with regard to future actions is even more inclined to remain ignorant, because with self-control the agent needs a higher information precision to give up his project. Moreover, the refusal of information depends not only on its cost but also on the degree of precision it is able to provide. Information precision plays a vital role in the information acquisition decision of hyperbolic agents.

Sensitivity analysis

To better understand the agent's behaviour under uncertainty, we study, for each kind of preferences, the effects of changes in the parameters. In particular, we ask how the agent's prior beliefs affect his strategy, whether the probability that damage occurs has an impact on his decision making, and how the project's returns and costs influence his behaviour.Footnote 12

We find that prior beliefs have a clear effect on the time-consistent agent's decisions. When the agent has a strong prior belief in the realization of the worse state of the world (state H), his propensity both to acquire information and to stop the project increases, while the level of information he acquires decreases. Since the agent believes that an accident is more likely to occur, information on the project's consequences is more valuable because it gives him the possibility to give up his investment. This withdrawal option is also chosen more often, because the agent considers it more likely that continuing the project yields a negative payoff. However, a stronger belief in state H also lowers the expected payoff and therefore the amount spent on information.

By contrast, the decision to acquire information also depends on its level of precision, so the impact of the prior belief on the decisions of the two types of hyperbolic agents is unclear. The prior belief has an unambiguous effect on the chosen level of information and on the decision to give up the project. On the one hand, as the agent reduces the level of information acquired, the precision of this information also decreases. On the other hand, a stronger prior increases the agent's willingness to stop the project, so the minimum level of information precision required also decreases. In other words, the agent's prior belief creates a trade-off between information precision and the project's development, and therefore has an ambiguous effect on his choice to be informed.

In addition, we observe that a change in the probability that damage occurs affects the chosen level of information differently depending on the state in which the change occurs. For all kinds of preferences, a higher probability in state L has the same effect on the agent's decisions as a higher prior belief in the realization of state H. A higher probability in state H has the same impact on the decisions to stop the project and to acquire information, but it leads the agent to acquire more information, for all kinds of preferences. By assumption, BH(β)<0<BL(β) for all β∈(β˜, 1]. Hence, a lower probability in state H and/or a higher probability in state L reduces the expected payoff. To compensate for this loss, the agent prefers paying less to be informed.

We now analyse the effects of the project's returns and costs on the agent's decisions. These parameters have a direct impact on the expected payoff. Regardless of the preferences, a higher R2, a lower D, a lower K and/or a higher K′ raise the expected payoff from continuing the project relative to the one from stopping it. Hence, these changes decrease the agent's propensity to withdraw the project, whatever the design of his preferences.

Furthermore, for all kinds of preferences, we note that the effect of R2 and D on the expected payoff also depends on the prior belief. Suppose that p0>½ (p0<½), that is, that the agent thinks the worse state of the world is more (less) likely to occur than the less dangerous one. Then a higher (lower) R2 and/or a lower (higher) D decreases the expected payoff. In this context, the agent chooses to get less information in order to compensate for the loss of money. However, the effect of the costs on the acquired level of information is ambiguous; it depends on both the agent's beliefs and the probability that damage occurs.

Finally, as previously explained, the two types of hyperbolic agents face a constraint on the precision of information. The impact of returns and costs on their choice to be informed is therefore ambiguous, because changes in the minimum required precision trade off against changes in the precision effectively chosen. For a time-consistent agent, on the other hand, returns and costs have a clear impact. If the expected payoff from continuing the project increases, that is, if R2 increases and/or K decreases, then the agent is less willing to acquire information, so as to avoid stopping the project. If the expected payoff from withdrawing the project increases, that is, if D increases and/or K′ decreases, then the agent acquires information more often, since he favours the possibility of stopping the project. Table 3 summarizes all these results.

Liability rules’ influence on the information acquisition

All firms are constrained by a legal framework in which liability rules specify how the financial damages from an accident are allocated. For technological innovations, as for other risky activities, firms must receive the right incentives so that they do not neglect learning about risk and uncertainty. In this regard, this section analyses, at the agent level, whether existing regulatory frameworks of risk, such as the strict liability rule and the negligence rule, affect the agent's willingness to acquire information.

The strict liability rule

Under a strict liability rule, it is said that “if the victims can demonstrate a causality link between the damages and the agent's activity or the product sold, the agent is fully liable and thus he must pay for the damages caused by his activities”.Footnote 13 Shavell (1980) and Miceli (1997) show that, under time-consistency, such a rule gives agents an incentive to consider the effect of both their level of care and their level of activity on accident losses. Hence, this rule both prevents risks and reduces potential damages by leading the agent to exercise an optimal level of prevention.

In the model, we assume that if an accident occurs, the agent is liable for damages and must pay for them. Therefore, by defining the level of care as the amount paid by the agent to acquire information, we implicitly suppose that a strict liability rule is enforced.Footnote 14 Acquiring information reflects the agent's level of concern for the potential losses from his activity. If the agent is informed, he may stop his activity and thus limit the cost of damages to K′. If he does not acquire information, he never stops his project and therefore exposes people and the environment to a more severe risk.

As previous sections emphasize, regardless of the agent's preferences (time-consistent or hyperbolic), if the optimal amount paid under the strategy {x_h = 0, x_l = 1} does not satisfy conditions (3) and (6), the agent never chooses to implement this strategy and always prefers staying uninformed. In other words, suppose that developing research activities or resorting to experts is too costly relative to the expected returns of the project. Then, even if he is fully liable, the agent chooses to remain ignorant about the level of danger of this project in order to be able to carry it out. As for the efficiency of such behaviour, in this case it is Pareto efficient for both agents not to acquire information. When long-run preferences are used to measure welfare, as suggested by O’Donoghue and Rabin (1999), it is also efficient not to acquire information. Indeed, such a criterion amounts to comparing the decisions of a time-consistent agent with those of a hyperbolic one.Footnote 15 The source of the problem thus seems to be not the liability rule enforced, but the cost of information acquisition when facing the development of new technologies. This argues for limiting innovation when uncertainty is too strong, since such uncertainty could encourage all kinds of decision makers to neglect a potential danger.

However, consider an agent with strong preferences for the present. Even if the optimal amount paid under the strategy {x_h = 0, x_l = 1} satisfies conditions (3) and (6), he does not necessarily acquire information, even though he is fully liable. Such behaviour may occur even when his preferences for the present are weaker: if the signal provided is not viewed as reliable, the agent always prefers staying ignorant (conditions (5) and (7)). In such a case, ignorance is Pareto efficient, but it is not efficient when long-run preferences are used as the welfare measure. Thus, depending on the welfare criterion chosen, the strict liability rule allows either efficient but potentially dangerous behaviour, or inefficient behaviour. Both cases inhibit any precautionary effort, and thus might expose people and the environment to severe risks in the future.

Overall, a strict liability rule does not seem to be a useful tool to encourage an agent to acquire information. This raises the question: how can incentives be designed that lead people to take the best decision for their own long-run interest?

The negligence rule

Under a negligence rule, it is said that “the injurer is liable for the victims’ damages only if he fails to take a minimum level of care”.Footnote 16 In other words, after an accident, a court does not hold the agent liable for the damages if he has exercised the minimum level of care specified by the legal framework. In such a case, the victims or the State bear the financial costs.

However, how should the minimum level of care be defined? According to Shavell (1980, 1992) and Miceli (1997), under a negligence rule, the minimum level of care is the optimal level of care that an injurer would choose if he had to pay for the damages. In our model, the minimum level of care is then the optimal amount paid to acquire information. Propositions 2–4 imply that it is not optimal for the agent to acquire information, that is, C*(β)=C**(β)=0, in three situations: when condition (3) does not hold for the agent with time-consistent preferences, when condition (5) or (6) does not hold for the hyperbolic agent, and when condition (6) or (7) does not hold for the hyperbolic agent with self-control. When these conditions are satisfied, acquiring information is optimal (C*(β)>0, C**(β)>0) for the agent, regardless of his preferences. Hence, this definition of the minimum level of care may lead the agent to neglect information without being liable for the damages. It does not encourage the agent to acquire information and then to decide whether to continue or stop the project in order to limit the cost of damages.

How, then, can agents be given an incentive to get information? We propose to define the minimum level of care as the minimum amount, Cmin(β), that an agent has to pay in order not to be liable if an accident occurs, and which leads him to acquire information regardless of his preferences. In this way, the agent has an incentive to be informed.

Self-0's intertemporal expected payoff when the agent is not liable for the financial damages if an accident occurs is thus given by:

In fact, V0NR(x_h, x_l, C) equals self-0's intertemporal expected payoff V0(x_h, x_l, C) with K=K′=0.

Propositions 2–4 show that, regardless of his preferences, the agent may optimally prefer not to acquire information and to be liable for the damages in case of an accident. To avoid this, the legal framework should set Cmin(β) so that, under the strategy {x_h = 0, x_l = 1}, self-0's intertemporal expected payoff when he pays Cmin(β) and is not liable, that is, V0NR(0, 1, Cmin(β)), is at least equal to his intertemporal expected payoff when he does not pay and is therefore liable for the damages. According to condition (1), when an agent decides to be uninformed, he always continues his project, so self-0's intertemporal expected payoff when he does not pay and is liable for the damages is V0(1, 1, 0). The minimum level of care Cmin(β) is then characterized by:

V0NR(0, 1, Cmin(β)) = V0(1, 1, 0),

which is equivalent to

We can easily check that Cmin(β) exists and is strictly positive. Therefore, regardless of his preferences, an agent has an incentive to pay at least Cmin(β). For a time-consistent agent, who can commit in the long run, it is better to invest Cmin(β) and implement the strategy {x_h = 0, x_l = 1} than to get no information. A hyperbolic agent, however, does not have consistent preferences over time. Even if paying Cmin(β) is preferable from self-0's perspective, such a cost cannot ensure that self-1 chooses the strategy {x_h = 0, x_l = 1}. An agent who pays this cost is never liable, whatever the strategy chosen at period 1, so self-1 always deviates from the anticipated strategy {x_h = 0, x_l = 1}. In other words, such a level of care presumes that the agent behaves as a naive agent who does not foresee any self-control problem. Such a design of incentives does not induce him to behave in a precautionary way and does not limit the exposure of people or the environment to a potential danger.

Alternatively, a sophisticated agent is aware of his self-control problem and aims at overcoming it. In this regard, the minimum level of care should ensure that V0NR(0, 1, Cmin(β))=V0(1, 1, 0), but it should also prevent any deviation from the strategy {x_h = 0, x_l = 1}. Thus, Cmin(β) should also be such that, when the agent is not liable, the intertemporal expected payoff under the strategy {x_h = 0, x_l = 1} is at least equal to that under the strategy {x_h = 1, x_l = 1}. Finally, Cmin(β) is defined by:

which is equivalent to Cmin(β) = βE(θ)K.
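The sophisticated-agent rule Cmin(β) = βE(θ)K is simple enough to evaluate directly. The sketch below takes E(θ) and K as given numbers, and the values used are hypothetical. The function name and the check on the range of β are our own additions.

```python
def cmin_sophisticated(beta: float, expected_theta: float, damage_cost: float) -> float:
    """Minimum level of care Cmin(beta) = beta * E(theta) * K, as stated in the text."""
    if not 0.0 < beta <= 1.0:
        raise ValueError("beta must lie in (0, 1]")
    return beta * expected_theta * damage_cost


if __name__ == "__main__":
    for beta in (0.7, 1.0):
        print(beta, cmin_sophisticated(beta, expected_theta=0.4, damage_cost=100.0))
```

The formula is linear in β. For given E(θ) and K, the required level of care is therefore proportional to β: 28 and 40 here, up to floating-point rounding.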

Such a design of incentives might influence the hyperbolic agent to behave in a precautionary way.

To go further …

Another point concerns the level of the penalty if an accident occurs. What would happen if the agent's returns were lower than or equal to the potential cost of damages, that is, R2⩽K and D⩽K′? The results of the model still apply, since the model's assumptions neither allow for cases where the agent would not invest in the project nor consider that the agent may be unable to pay for the damages he causes.

Intuitively, if we introduce this possibility, the agent should take out an insurance contract to cover potential damages linked to his activity. Suppose moreover that a limited liability rule is enforced: a catastrophic accident whose damages exceed the financial capacity of the firm leads to bankruptcy, so the cost of the accident borne by the firm is limited to its returns. The agent will then only be partly liable for these damages. Under uncertainty, such a protective measure may have perverse effects. Neither the cost of potential damages nor their probability is completely known. Yet the agent may not care about reducing this uncertainty, because he will only lose his benefits if an accident occurs, regardless of the size of this accident. Hence, limited liability is not a relevant rule in the context of uncertainty. In addition, under such a liability rule, insurance might also have an important impact on firms’ behaviour. An interesting direction for further work would be to study the effect of the insurance premium on information acquisition. Does insurance increase information acquisition? Insurance could cover part of the damage costs, but taking out insurance is costly. What would be the trade-off between this cost and this benefit? What about the discounting effect?

Conclusion

In this paper, we consider an agent who invests in new industrial activities and therefore faces uncertainty about their consequences. Acquiring information allows him to reduce this uncertainty. To a certain extent, information acquisition can be viewed as a precautionary effort insofar as it allows the agent to limit the potential damages that the project could entail, and to better protect people's health and the environment. Possible applications include innovations in new technologies (e.g., nanotechnologies, mobile phones), pharmaceutical firms (e.g., development and production of new drugs) and chemical firms (e.g., production of new fertilizers).

In our model, we analyse individual behaviour under different types of preferences: time-consistent, hyperbolic discounting, and hyperbolic discounting with self-control in the future. One may ask, however, whether an institution such as a firm still exhibits hyperbolic behaviour. When a single person owns a business, the owner's preferences define those of the firm. When a firm comprises more than one partner, strategic interactions may arise among them. Nevertheless, all partners should work towards the common goal of making profit. In the decision to acquire information, there should be no competition between individuals: they should all decide in the firm's interest. Hence, the board of shareholders in charge of strategic decisions may be represented by a single representative individual with time-consistent or hyperbolic discounting preferences.

We find that the hyperbolic agent does not refuse free information as such; he may, however, refuse free information of a certain degree of precision. By introducing costly information whose cost is linked to its precision, we find that a time-consistent agent as well as a hyperbolic one will acquire information unless its cost exceeds the direct benefit. Nevertheless, a hyperbolic agent may remain ignorant if the information is not precise enough to be relevant for him. Moreover, when a hyperbolic agent does acquire information, he acquires less than a time-consistent type. Furthermore, if we allow the hyperbolic agent to behave as a time-consistent agent with respect to future actions, we show that he is more inclined to remain ignorant. We thus emphasize the relevance of information precision for hyperbolic types' decisions to acquire information.

With a sensitivity analysis, we show that prior beliefs, the probability that damage occurs, and the project's returns and costs influence the agent's decisions differently depending on his preferences.

Finally, we analyse how liability rules influence the agent's decision to acquire information. We find that a strict liability rule does not appear to be a useful tool for inducing an agent, whatever his preferences, to acquire information. However, we propose an alternative, the negligence rule, which might lead the agent to behave in a precautionary way, whatever his preferences.

In addition, if information acquisition is not spontaneous, other forms of responsibility or other rules might be considered. Strotz (1956) emphasizes the need for pre-commitment strategies when preferences are hyperbolic, in order to reduce the impact of hyperbolic discounting on agents' decisions. In an innovation context, pre-commitment could take the form of contracts establishing the innovation's long-run agenda. From this perspective, the negligence rule could be an interesting alternative. Agents familiar with risk and uncertainty, such as insurers, could also design such pre-commitment strategies. One could then imagine insurance contracts with a deductible that allow better control of the precautionary efforts undertaken by agents and reduce the financial risk to which they are exposed. However, such a contract could only be designed if the probability of damages is known, which may not be the case under uncertainty (i.e., when facing scientific uncertainty). Otherwise, insurance companies would have to charge an ambiguity premium in addition to the traditional premium, which could make the contract unaffordable, or they might refuse to insure the firm's project. Overall, the insurability of emerging risks remains under debate; it depends mostly on how firms' financial liability is defined for damages that their activities might cause in the future, even when such damages cannot yet be precisely quantified.

Moreover, regarding the current application of strict liability rules, experience also underlines the deterrent effect that such a rule can have on producers' behaviour. Weill (2005) notes that when the "burden of the proof" is on the potential injurer rather than on the victims, as it is under a negligence rule, producers are more likely to withdraw potentially dangerous products from the market. The recent European legislation on chemicals (REACH regulation)Footnote 17 tackles the challenging issue of applying the precautionary principle so as to foster innovation while protecting people and the environment. It is based on a strict liability rule, under which the "burden of the proof" is on the industry, but it also requires manufacturers and importers to take responsibility "to gather information on the properties and risks of all substances produced or imported".Footnote 18 This legislation offers an interesting way to implement the precautionary principle for chemicals: it combines the positive effects of a strict liability rule with a research obligation for firms that should counter its negative effects. This approach could inform the current debate on the regulation of other kinds of scientific and/or technological innovation.

Notes

We do not consider exogenous information, such as public information. In this paper, our interest is an agent's own initiative in acquiring information and his willingness to pay for it.

Taking δ<1 would only lower the discount factor applied to all future periods; setting δ=1 does not change any of the results.

In fact, Akerlof (1991) defines β as the “salience of current payoffs relative to the future stream of returns”. In the literature, it is also interpreted as a lack of willpower (Bénabou and Tirole, 2002), of foresight (O’Donoghue and Rabin, 1999; Masson, 2002) or as impulsiveness (Ainslie, 1992).

Following Strotz (1956), this conflict captures the agent's time-inconsistent preferences.
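The conflict between successive selves can be illustrated with the quasi-hyperbolic (β-δ) discounting of Phelps and Pollak (1968) and Laibson (1997), here with δ = 1 as in the paper. The minimal sketch below uses hypothetical rewards (10 at date 1 versus 12 at date 2) and β = 0.7 to show a preference reversal: viewed from date 0 the later, larger reward is preferred, but at date 1 the same agent switches to the immediate one. It is a textbook illustration of time inconsistency, not the paper's model, and the function names are hypothetical.

```python
def quasi_hyperbolic_value(rewards: dict[int, float], today: int,
                           beta: float, delta: float = 1.0) -> float:
    """Value at date `today` of dated rewards under beta-delta discounting.

    A reward received today is undiscounted; a reward at a later date t is
    weighted by beta * delta ** (t - today). Past rewards are ignored.
    """
    value = 0.0
    for t, reward in rewards.items():
        if t == today:
            value += reward
        elif t > today:
            value += beta * delta ** (t - today) * reward
    return value


def preferred_option(today: int, beta: float) -> str:
    # Hypothetical choice: 10 at date 1 ("small-soon") or 12 at date 2 ("large-late").
    small_soon = quasi_hyperbolic_value({1: 10.0}, today, beta)
    large_late = quasi_hyperbolic_value({2: 12.0}, today, beta)
    return "small-soon" if small_soon > large_late else "large-late"


print(preferred_option(today=0, beta=0.7))  # large-late: compares 0.7*10 with 0.7*12
print(preferred_option(today=1, beta=0.7))  # small-soon: compares 10 with 0.7*12
print(preferred_option(today=0, beta=1.0), preferred_option(today=1, beta=1.0))  # large-late large-late
```

With β = 1 both selves agree, which is the time-consistent benchmark.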

Proof in the Appendix section.

According to self-1's expected payoffs, an uninformed agent with a discount factor $\beta = 0$ pays no attention to the future and therefore always prefers stopping his project in order to recover at least a part $D$ of his initial investment. Since $\tilde{\beta}$ is the value of $\beta$ at which the agent is indifferent between stopping and carrying on the project, and since at $\beta = 0$ he strictly prefers stopping, $\tilde{\beta}$ is different from zero.

We note that $p_0 f(C_{01}^*(1)) B_H(1) + (1-p_0)(1-f(C_{01}^*(1))) B_L(1)$ may be rewritten as $[p_0 f(C_{01}^*(1)) + (1-p_0)(1-f(C_{01}^*(1)))][K-K'][\hat{\theta}(1) - E(\theta \mid h, C_{01}^*(1))]$.

In the literature, this agent is called a myopic agent.

Proof in the Appendix section.

The result also holds if the agent puts more relative weight on the future.

Proofs are in the Appendix section.

See Shavell (1980) and Miceli (1997).

However, we do not take into account how victims must demonstrate a causal link between the damages and the activity or the product sold.

O’Donoghue and Rabin (1999) define long-run preferences by $U_0(u_t, \ldots, u_T) = \sum_{\tau=t}^{T} \delta^{\tau-t} u_\tau$. In this paper, we assume that $\delta = 1$, and thus $U_0 = V_0$ when $\beta = 1$.
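To make the relation between the two criteria concrete, here is a minimal Python sketch of the long-run sum $\sum_{\tau=t}^{T} \delta^{\tau-t} u_\tau$ and of the quasi-hyperbolic value $u_t + \beta \sum_{\tau=t+1}^{T} \delta^{\tau-t} u_\tau$. The second form is the standard β-δ criterion of Laibson (1997), assumed here to correspond to $V_0$; the payoff stream and function names are hypothetical. With δ = 1, the two criteria coincide when β = 1.

```python
def long_run_utility(payoffs: list[float], delta: float = 1.0) -> float:
    """Long-run criterion: sum of delta**(tau - t) * u_tau over the stream u_t, ..., u_T."""
    return sum(delta ** k * u for k, u in enumerate(payoffs))


def quasi_hyperbolic_utility(payoffs: list[float], beta: float, delta: float = 1.0) -> float:
    """Present-biased criterion: u_t + beta * sum over later periods of delta**(tau - t) * u_tau."""
    if not payoffs:
        return 0.0
    later = sum(delta ** k * u for k, u in enumerate(payoffs[1:], start=1))
    return payoffs[0] + beta * later


stream = [-5.0, 2.0, 4.0]  # hypothetical payoffs: an upfront cost followed by returns
print(long_run_utility(stream))                    # 1.0
print(quasi_hyperbolic_utility(stream, beta=1.0))  # 1.0, identical to the long-run value
print(quasi_hyperbolic_utility(stream, beta=0.5))  # -2.0
```

With β = 0.5 the same stream, which is worth undertaking under the long-run criterion, has a negative present-biased value.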

See Shavell (1980) and Miceli (1997).

REACH stands for Registration, Evaluation, Authorization and Restriction of Chemicals.

For more details on REACH, see European Commission (http://ec.europa.eu/enterprise/reach).

References

Ainslie, G. (1992) Picoeconomics: The Strategic Interaction of Successive Motivational States within the Person, New York: Cambridge University Press.

Akerlof, G.A. (1991) ‘Procrastination and obedience’, American Economic Review 81 (2): 1–19.

Arrow, K.J. and Fisher, A.C. (1974) ‘Environmental preservation, uncertainty, and irreversibility’, Quarterly Journal of Economics 88 (2): 312–319.

Bénabou, R. and Tirole, J. (2002) ‘Self confidence and personal motivation’, Quarterly Journal of Economics 117: 871–913.

Bénabou, R. and Tirole, J. (2004) ‘Willpower and personal rules’, Journal of Political Economy 112 (4): 848–886.

Brocas, I. and Carrillo, J.D. (2000) ‘The value of information when preferences are dynamically inconsistent’, European Economic Review 44: 1104–1115.

Brocas, I. and Carrillo, J.D. (2004) ‘Entrepreneurial boldness and excessive investment’, Journal of Economics and Management Strategy 13 (2): 321–350.

Brocas, I. and Carrillo, J.D. (2005) ‘A theory of haste’, Journal of Economic Behavior and Organization 56: 1–23.

Carrillo, J.D. and Mariotti, T. (2000) ‘Strategic ignorance as a self disciplining device’, Review of Economic Studies 67: 529–544.

Elster, J. (1979) Ulysses and the Sirens: Studies in Rationality and Irrationality, New York: Cambridge University Press.

Frederick, S., Loewenstein, G. and O’Donoghue, T. (2002) ‘Time discounting and time preference: A critical review’, Journal of Economic Literature 40: 350–401.

Henry, C. (1974) ‘Investment decisions under uncertainty: The irreversibility effect’, American Economic Review 64 (6): 1006–1012.

Henry, C. (2003) Seminar on ‘Principe de Précaution et Risque Environnemental’, Chaire de développement durable EDF-Polytechnique, 16 June 2003.

Henry, C. and Henry, M. (2002) ‘L’essence du principe de précaution: la science incertaine mais néanmoins fiable’, Séminaire développement durable et économie de l’environnement, Iddri, EDF-Ecole Polytechnique, n°11.

Laibson, D. (1997) ‘Golden eggs and hyperbolic discounting’, Quarterly Journal of Economics 112 (2): 443–477.

Laibson, D. (1998) ‘Life cycle consumption and hyperbolic discount functions’, European Economic Review 42: 861–871.

Masson, A. (2002) ‘Risque et horizon temporel: quelle typologie des consommateurs - épargnants?’, Risques (49).

Miceli, T. (1997) Economics of the Law, New York: Oxford University Press.

O’Donoghue, T. and Rabin, M. (1999) ‘Doing it now or later’, American Economic Review 89 (1): 103–124.

O’Donoghue, T. and Rabin, M. (2008) ‘Procrastination on long-term projects’, Journal of Economic Behavior and Organization 66 (2): 161–175.

Phelps, E.S. and Pollak, R.A. (1968) ‘On second-best national saving and game-equilibrium growth’, Review of Economic Studies 35 (2): 185–199.

Read, D. (2001) ‘Is time-discounting hyperbolic or subadditive?’ Journal of Risk and Uncertainty 23: 5–32.

Rubinstein, A. (2003) ‘Is it economics and psychology?: The case of hyperbolic discounting’, International Economic Review 44: 1207–1216.

Shavell, S. (1980) ‘Strict liability versus negligence’, Journal of Legal Studies 9 (1): 1–25.

Shavell, S. (1992) ‘Liability and the incentive to obtain information about risk’, Journal of Legal Studies 21 (2): 259–270.

Strotz, R.H. (1956) ‘Myopia and inconsistency in dynamic utility maximization’, Review of Economic Studies 23 (3): 165–180.

Weill (2005) ‘European proposal for chemicals regulation: REACH and beyond / Proposition de règlement européen des produits chimiques: REACH, enjeux et perspective’, Les actes de l’Iddri, n°2.

http://en.wikipedia.org/wiki/Luria-Delbr%C3%BCck_experiment.

Acknowledgements

We thank Jean-Marc Bourgeon, Dominique Henriet, Pierre Picard, François Salanié, Sandrine Spaeter-Loehrer, Eric Strobl and Nicolas Treich as well as the audiences of the 4th International Finance Conference, of the AFSE-JEE 2007, of the EGRIE 2007, of the ADRES 2008, of the SCSE 2008, of the CEA 2008 and of the North American Summer Meeting of the Econometric Society 2008, and two anonymous referees for helpful comments and discussions. The views expressed in this article are the sole responsibility of the authors and do not necessarily reflect those of their institutions. The traditional disclaimer applies.

Appendix

Proof that θ̂(β) is increasing in β

Differentiating θ̂(β) with respect to β, we obtain:

which is positive. Therefore, θ̂(β) is increasing with β. □

Proof of Proposition 1

At period 1, the agent receives a signal $\sigma \in \{h, l\}$. For all $C \ge 0$ and all $\beta \in (\tilde{\beta}, 1]$, he chooses to continue, that is, $x_\sigma = 1$, if:

He chooses to stop, that is, $x_\sigma = 0$, if:

He is indifferent between stopping and continuing the project, that is, $x_\sigma \in \{0, 1\}$, if:

Proof of Lemma 1

We first have:

and

which are non-positive because $\theta_H > \theta_L$ and $f(C) \ge 1/2$ for all $C \ge 0$. Therefore, for all $C \ge 0$, we get $E(\theta \mid l, C) \le E(\theta) \le E(\theta \mid h, C)$.

Differentiating $E(\theta \mid h, C)$ with respect to $C$, we obtain:

which is positive because $f$ is increasing and $\theta_H > \theta_L$. Thus, $E(\theta \mid h, C)$ is increasing in $C$.

Differentiating $E(\theta \mid l, C)$ with respect to $C$, we obtain:

which is negative because $f$ is increasing and $\theta_H > \theta_L$. Thus, $E(\theta \mid l, C)$ is decreasing in $C$. □
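The two properties established in Lemma 1 can be checked numerically. The minimal sketch below assumes a symmetric signal structure consistent with the probability of a high signal that appears in the notes, $p_0 f(C) + (1-p_0)(1-f(C))$: $p_0$ is taken as the prior probability of $\theta_H$, and the signal is correct with probability $f(C)$. The precision function $f(C) = 1 - \tfrac{1}{2}e^{-C}$, the function names and all parameter values are hypothetical; only the Bayesian updating step is standard.

```python
import math


def precision(c: float) -> float:
    """Hypothetical precision f(C) = 1 - exp(-C)/2: increasing, with f(0) = 1/2 and f < 1."""
    return 1.0 - 0.5 * math.exp(-c)


def posterior_mean(signal: str, c: float, p0: float, theta_h: float, theta_l: float) -> float:
    """E(theta | signal, C) by Bayes' rule, assuming Pr(h | theta_H) = Pr(l | theta_L) = f(C)."""
    f = precision(c)
    if signal == "h":
        w_h, w_l = p0 * f, (1.0 - p0) * (1.0 - f)  # joint probabilities of (theta_H, h) and (theta_L, h)
    elif signal == "l":
        w_h, w_l = p0 * (1.0 - f), (1.0 - p0) * f  # joint probabilities of (theta_H, l) and (theta_L, l)
    else:
        raise ValueError("signal must be 'h' or 'l'")
    return (w_h * theta_h + w_l * theta_l) / (w_h + w_l)


P0, THETA_H, THETA_L = 0.4, 0.9, 0.1  # hypothetical prior and parameters, theta_H > theta_L
prior_mean = P0 * THETA_H + (1.0 - P0) * THETA_L
for c in (0.0, 0.5, 1.0, 2.0):
    low = posterior_mean("l", c, P0, THETA_H, THETA_L)
    high = posterior_mean("h", c, P0, THETA_H, THETA_L)
    print(f"C={c:.1f}  E(theta|l,C)={low:.3f}  E(theta)={prior_mean:.3f}  E(theta|h,C)={high:.3f}")
```

At C = 0 the signal is uninformative and both posteriors equal the prior; as C grows, E(θ∣h,C) rises and E(θ∣l,C) falls, as Lemma 1 states.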

Proof of Lemma 2

Part (i) of Lemma 2

For $\beta = 1$, we study the concavity of $V_0(0, 1, C)$. Differentiating $V_0(0, 1, C)$ twice with respect to $C$, we obtain:

which is negative because $f$ is concave, $B_L(1)$ is positive and $B_H(1)$ is negative. Thus, for $\beta = 1$, $V_0(0, 1, C)$ is concave.

$C_{01}^*(1)$ is then characterized by the first-order condition:

Remark: Since $B_L(1)$ is positive and $B_H(1)$ is negative, we get $f'(C_{01}^*(1)) > 0$.

Part (ii) of Lemma 2

Suppose that $C_{01}^*(1) = 0$. Then $f'(C_{01}^*(1)) = +\infty$. However, since $(1-p_0)B_L(1) - p_0 B_H(1) > 0$, the first-order condition cannot hold with $f'(C_{01}^*(1)) = +\infty$, a contradiction. Therefore, $C_{01}^*(1) > 0$. □
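Lemma 2 can be illustrated by solving the first-order condition numerically. The minimal sketch below rests on two assumptions that are not taken from the paper: (i) reading the proof above, the first-order condition is taken to be $f'(C)\,[(1-p_0)B_L(1) - p_0 B_H(1)] = 1$, i.e. the marginal value of precision equals a unit marginal cost of effort; (ii) the precision function is the hypothetical $f(C) = 1 - \tfrac{1}{2}e^{-2\sqrt{C}}$, which is increasing and concave with $f'(0) = +\infty$. Because $f'$ is decreasing, the condition has a single root, found here by bisection; the values of $p_0$, $B_L(1)$ and $B_H(1)$ are hypothetical.

```python
import math


def f_prime(c: float) -> float:
    """Derivative of the hypothetical precision f(C) = 1 - 0.5*exp(-2*sqrt(C)); infinite at C = 0."""
    if c <= 0.0:
        return math.inf
    return 0.5 * math.exp(-2.0 * math.sqrt(c)) / math.sqrt(c)


def optimal_effort(p0: float, b_low: float, b_high: float, tol: float = 1e-10) -> float:
    """Solve f'(C) * [(1 - p0) * B_L - p0 * B_H] = 1 by bisection (assumed first-order condition)."""
    a = (1.0 - p0) * b_low - p0 * b_high
    if a <= 0.0:
        raise ValueError("requires (1 - p0) * B_L - p0 * B_H > 0")
    lo, hi = 0.0, 1.0
    while a * f_prime(hi) > 1.0:  # widen the bracket until marginal value falls below marginal cost
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if a * f_prime(mid) > 1.0:  # marginal value still exceeds marginal cost: root lies to the right
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


c_star = optimal_effort(p0=0.4, b_low=3.0, b_high=-2.0)  # hypothetical B_L(1) > 0 > B_H(1)
print(c_star, c_star > 0)
```

Since $f'(0) = +\infty$, the left end of the bracket always satisfies the inequality strictly, which is the numerical counterpart of the contradiction argument in part (ii).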

Proof of Proposition 2

In this part, $\beta = 1$. The optimal information level $C^*(1)$ is such that:
• if $V_0(1, 1, 0) < V_0(0, 1, C_{01}^*(1))$, then $C^*(1) = C_{01}^*(1)$;
• otherwise, $C^*(1) = 0$.
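The selection rule of Proposition 2 can be written as a short function. In this minimal sketch the three inputs are assumed to be computed elsewhere, and the function and argument names are hypothetical; following the strategy notation used for the negligence rule, $V_0(x_h, x_l, C)$ is read as the value of stopping ($x = 0$) or continuing ($x = 1$) after each signal, given information effort $C$. Ties go to $C^*(1) = 0$, as the "otherwise" branch prescribes.

```python
def optimal_information_level(v_ignorant: float, v_informed: float, c01_star: float) -> float:
    """Proposition 2 selection rule for beta = 1.

    v_ignorant -- V0(1, 1, 0): continue after either signal, no information acquired
    v_informed -- V0(0, 1, C01*(1)): stop after h, continue after l, effort C01*(1)
    c01_star   -- the interior optimum C01*(1) from Lemma 2
    """
    if c01_star <= 0.0:
        raise ValueError("Lemma 2 gives C01*(1) > 0")
    return c01_star if v_ignorant < v_informed else 0.0


# Hypothetical values, for illustration only.
print(optimal_information_level(v_ignorant=10.0, v_informed=10.5, c01_star=0.24))  # 0.24
print(optimal_information_level(v_ignorant=10.0, v_informed=10.0, c01_star=0.24))  # 0.0
```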

We then compare V0(0, 1, C01*(1)) and V0(1, 1, 0). We obtain: