Abstract

Factors predicting the outcome of predator invasions on native prey communities are critical to our understanding of invasion ecology. Here, we tested whether background level of risk affected the survival of prey to novel predators, both native and invasive, predicting that high-risk environments would better prepare prey for invasions. We used naïve woodfrog as our prey and exposed them to a high or low risk regime either as embryos (prenatal exposure) or as larvae (recent exposure). Tadpoles were then tested for their survival in the presence of 4 novel predators: two dytiscid beetles, crayfish and trout. Survival was affected by both risk level and predator type. High risk was beneficial to prey exposed to the dytiscids larvae (ambush predators), but detrimental to prey exposed to crayfish or trout (pursuit predators). No effect of ontogeny of risk was found. We further documented that high-risk tadpoles were overall more active than their low-risk counterparts, explaining the patterns found with survival. Our results provide insights into the relationship between risk and resilience to predator invasions.


Introduction

When species are introduced into a novel environment, voluntarily or accidentally, and become established1,2, they can have a range of impacts. Some authors report little impact on native communities3, others even report a positive impact on the community4,5, but most often authors report major negative impacts on native species6,7. If ecologists are to predict and prevent ‘negative’ invasions, we need to first understand how invaders establish and how and why they are successful. Introduced species can reduce native biodiversity via three general ecological pathways: (1) they can out-compete native species, by using a broader range of resources8 or being more tolerant of extreme environmental changes9; (2) they may encounter weak or absent predation pressure from native predators, leading to a rapid increase in their density10; and/or (3) they may extirpate native prey populations that are unable to recognize or respond to these novel predators7,11. A number of factors have been put forward to explain the characteristics of successful invaders2, but relatively little empirical work has been done to predict the characteristics of natives being invaded12.

In this study, we focus on characteristics of the native community, rather than those of the invaders. We focus on understanding the mechanisms relating to point (3) above: can we understand the factors that may render some prey species more susceptible to decline as a result of predator invasions? Sih et al.12 recently highlighted two mechanisms through which prey may not be able to survive encounters with invasive predators. The first, coined the ‘prey naivety hypothesis’, is a situation in which native prey lack an evolutionary history with the introduced predator and hence display weak or inefficient antipredator responses. The second, coined the ‘cue similarity hypothesis’, posits that if prey do not use the proper type of cues to recognize the invaders as risky, or if the cues usually used are too dissimilar to those of invasive predators, they may succumb to their attack13. Native prey responding inappropriately to novel predators and suffering increased predation pressure could eventually become locally extirpated7,14.

The ability of prey in general to survive predatory encounters might be the strongest selective force affecting the spatial and temporal distribution of animals15. A growing body of research has focused on understanding mechanisms through which prey respond to their predators. Some prey have evolved morphological adaptations, such as armour or defensive spines, to decrease their risk of being attacked16,17,18. Others have attempted to decrease the selective pressure by altering the timing of key life-history switch points, such as hatching19 or metamorphosis20,21,22 so as to minimize overlap with predators. However, both those traits require time to evolve and become prevalent in a population. For instance, Freeman et al.23 showed that invaded mussel populations could evolve inducible morphological defences (thickening of their shells) within 15 years of the introduction of an invasive crab. Behavioural adaptations, on the other hand, are by far the most plastic and rapid type of antipredator adaptations prey can display13,15,24. Prey can alter when and where to eat, decreasing predation risk in an instant. Avoidance, fleeing and freezing are all common antipredator behaviours widespread in the animal kingdom15, but often require the recognition of the predator by the prey.

A few species have been shown to possess an innate recognition of some of their predators25,26. However, those are relatively rare and the selective pressure needed to fix such a trait likely requires a long evolutionary overlap between prey and predator, high predator specificity (low predator diversity) and a large payback in terms of survival27. In contrast, the vast majority of prey species rely on learning to recognize predators28,29. Learning mechanisms are widespread and highly efficient, usually requiring a single encounter for the information to be learned30,31. Despite all this, the question remains: how does a prey individual survive the very first encounter with a novel predator? A recent study by Brown et al.32 has shed some light on this phenomenon. They showed that populations of aquatic prey (fishes and amphibians) could display antipredator responses towards novel cues (neophobia) if they were maintained in high-risk environments for an extended period of time, but not in low-risk environments. If individuals occur in an environment where predation threats are widespread and predation risk is high, then a novel stimulus may, more often than not, be related to a predator and hence should be feared. This framework would provide prey with a mechanism to increase their survival during a first-time encounter with a novel predator, allowing them to learn the threat and subsequently use learning mechanisms to identify and avoid the threat in future encounters32.

Two studies have investigated the effect of specific predator exposures on the subsequent ability of prey to survive other predators. Hettyey et al.33 raised agile frogs, Rana dalmatina, from eggs, in the presence of four predators and found that tadpole survival was not affected by an interaction between the species of predator tadpoles were raised with and the species of predator they were exposed to during the trials: all predator-exposed tadpoles survived roughly equally well and noticeably better than control tadpoles. No difference in survival was found between predator types (sit-and-wait vs. pursuit predators). These results differ from those of Teplitsky et al.34 who found that agile frog tadpoles, raised for 4 weeks with either a sit-and-wait predator (dragonfly larvae) or a pursuit predator (stickleback) developed different morphology, which would explain why stickleback-exposed tadpoles were more likely to survive a stickleback encounter than a dragonfly-exposed tadpole.

To our knowledge, background level of risk has never been proposed as a factor potentially affecting the outcome of a first encounter between naïve prey and novel predators, which could have crucial implications for biological invasions. If background level of risk could influence the ‘readiness’ of species to respond to novel predators, then it might be possible for the background level of risk to be a good predictor of invasion success. Native prey species living in high-risk environments should be less susceptible to predator invasions than those living in relatively lower risk environments. We tested this hypothesis by using woodfrog tadpoles (Lithobates sylvaticus) as our naïve prey species. Amphibians are one of the taxa suffering some of the most severe global population declines, partly due to invasions35, so we reasoned that woodfrogs could be used as an amphibian model. We used a series of novel predators acting as invaders, all of them known to be predators on amphibian larvae: (1) lesser diving beetle larvae (Acilius semisulcatus), (2) water tigers (Dytiscus alaskensis), (3) crayfish (Orconectes virilis) and (4) rainbow trout (Oncorhynchus mykiss). While all 4 predator species would be completely novel (ontogenetically) to the individual tadpoles, the lesser beetle larvae and the water tigers are native to the area where the tadpoles were collected, hence the tadpoles likely had recent evolutionary experience with them, while the trout and crayfish do not naturally occur in the vicinity of these populations. While they may have shared similar habitats during the last ice age36, they have been isolated since then. These last two species, although not having a status of invaders at our field site, are known for their exotic and/or invasive status in a number of aquatic ecosystems across North America.
Again, our prey are totally naïve to all predators, excluding any possibilities that experience with other predators would be causing them to better survive encounters with one predator compared to another. In a series of experiments, we maintained tadpoles in a low- or high-risk environment for at least 5 days (5-9 days) and then staged encounters between tadpoles and predators, as would happen during an invasion. We repeated this testing by using tadpoles which were maintained under low-risk environment, but had experienced either a low- or high-risk environment as embryos (in the egg). This allowed us to investigate any ontogenetic effect of risk exposure on the prey's ability to survive an encounter with novel predators. To provide some mechanistic explanation for our findings, we also quantified differences in activity between prey from both risk treatments.

Methods

Ethical statement

All work herein was in accordance with the Canadian Council on Animal Care guidelines and was approved by the University Council on Animal Care (protocol 20060014) of the University of Saskatchewan.

Water and test species

The experiment took place in central Alberta, Canada, in May/June 2013. All the maintenance and testing took place outdoors. Two weeks prior to the start of the experiment, a 1900-L tub was placed outdoors and filled with well water and seeded with plankton and aquatic plants from a local pond, using a fine-mesh dip net. This ensured that our test water contained natural pond odours, but lacked any cues from local predators. Our previous work has shown that woodfrog tadpoles from our field site do not have an innate recognition of various predators37,38.

Woodfrogs were collected locally as described below. Lesser beetle larvae and water tigers were collected from a nearby pond using dip nets, a few days prior to being used. Crayfish were trapped from Blackstrap Lake, Saskatchewan, 3 weeks prior to being used. Forty juvenile rainbow trout were obtained from the Cold Lake Fish Hatchery, Alberta, 2 weeks before the start of the experiment. At our field site, trout and crayfish were housed separately in 100-gal black troughs filled with well water, while the lesser beetle larvae and water tigers were housed individually in 0.5 L cups, due to their high aggression and cannibalistic tendencies. The choice of predators was limited by our inability to import invasive species. However, trout are one of the most common exotic species found throughout North America, due to their economic value for recreational fishing, and virile crayfish have been introduced in many parts of the United States, Mexico and Europe.

Manipulating background level of risk

Creating background levels of risk required us to increase the perceived predation risk of the animals without them associating it with a specific predator. Hence, we used injured conspecific cues as a general, non-specific indicator of risk. Injured conspecific cues are used by a wide variety of aquatic organisms, from coral to fish and amphibians, as a reliable indicator of risk39 and are known to elicit changes in behaviour, morphology and life-history28. This is a well-established methodology to manipulate background level of risk in aquatic species32,40. While we acknowledge that injured conspecific cues alone do not provide the full range of information available to prey when conspecifics get eaten by predators (i.e., diet cues), a number of predators can release those cues naturally during a successful (chewing, handling) or unsuccessful (failed capture, harm to appendages like tails) predation event, making them ecologically relevant.

Larval risk

Six clutches of woodfrog eggs, collected from a local pond the same day they were laid (May 3rd), were placed in a plastic pool filled with well water. The pool contained aquatic plants and was left floating on the pond to equalize temperature and sun exposure between the pond and the pool. Upon hatching, the tadpoles were fed alfalfa to supplement the algae already present in the pool. Tadpoles were 2 weeks old and Gosner stage 25 at the time the experiment began. Groups of 30 randomly selected tadpoles were placed in 20 tubs (40 × 30 × 30 cm), containing ~12 L of well water. The tadpoles were left to acclimate for 12 h, after which half were exposed twice a day (0900–2000 hr) to a high risk (injured tadpole cue) treatment while the other half were exposed at the same time to a low risk (water) treatment. The high risk treatment consisted of an injection of 20 mL of water containing 3 crushed tadpoles, while the low risk treatment consisted of an injection of 20 mL of well water. This background risk exposure phase ran for 7 days prior to the beginning of the first experiment (when the first tadpoles were removed), but ran continuously until the last experiment was finished (day 12, when the last tadpoles were removed). Note that tadpoles were used only once and were discarded (released) once their trial ended. Most of the experiments temporally overlapped, so the 5-day difference in risk could not affect the outcome of our experiments.

Embryonic risk

Six egg clutches were collected on May 9th, all laid within 48 h of time of collection (4 of them were slightly older, 2 of them were freshly laid). Each clutch was split into 4 roughly equal subclutches and each subclutch was placed in a 3.7-L pail containing 2 L of aged well water. Twice a day until hatching, the eggs received either a low risk (water injection) or high risk (injured tadpole cue injection) treatment. The treatments were performed between 1100–1300 and 1700–1900, with a complete water change 1 h after injection. When the embryos were close to hatching (wriggling and straightening in the shell), the treatment was stopped. As a result, 4 clutches were treated for 3 days, while the last 2 were treated for 4.5 days. The high risk treatment consisted of an injection of 20 mL of water containing 3 crushed tadpoles. The solution was filtered prior to injection and was introduced in the vicinity of the egg mass in the pail. The low risk injection consisted of 20 mL of well water, injected in the same manner as the high risk solution.

Despite the fact that the embryos in the older clutches hatched earlier than those in the younger ones, we did not find any difference in hatching time among risk treatments; high risk and low risk embryos from the same clutch hatched over the same period (a few hours). After hatching, the tadpoles were provided with alfalfa pellets and left to grow until tested (2–3 weeks). Water was partially changed (~30–50%) every 3 days.

The setup of survival experiments differed among predators, due to the difference in the size and ecology of the species. However, we attempted to standardize the predation trials by keeping the ratio sqrt(arena area)/predator size constant, regardless of species. We used the sqrt function to give predators additional space to move in both directions, not just one. We did not use volume, as tadpoles rarely use the water column, spending most of their time on the bottom. The area at the bottom thus represented the foraging arena for the predators. We achieved ratios that were quite comparable, with lengths in cm and areas in cm² (lesser beetles: sqrt(325)/2.2 = 8.2; water tigers: sqrt(1100)/3.9 = 8.5; crayfish: sqrt(2600)/6 = 8.5; trout: sqrt(6000)/9.2 = 8.4). Each cm of predator was thus attributed an area equivalent to a square of ~8.4 × 8.4 cm. While the absolute value of this ratio does not matter biologically, the point is that the increase in area among predator types was proportional to the increase in the size of the predators. The shape of the arena differed among predators, because we could not find containers of the right area that would all share the same shape. However, we do not believe that the shape per se would change the outcome of a predation trial, as long as corners (in our rectangular containers) were not used as shelters. We did not see prey or predators having an advantage in the corners, and this advantage/disadvantage, if it existed, would be present in both experimental groups.
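The arena scaling above can be checked with a few lines of arithmetic. The following is a minimal sketch, not the authors' own calculation: it assumes the quoted figures correspond to sqrt(bottom area) divided by predator length, and it assumes the trout value was obtained with the 9.2 cm mean length reported for the trout trials. The dictionary name `arenas` and the helper `side_per_cm` are illustrative only.

```python
import math

# Predator length (cm) and arena bottom area (cm^2), taken from the text.
# Assumption: the trout entry uses the 9.2 cm mean length from the trout trials.
arenas = {
    "lesser beetle larva": (2.2, 325),
    "water tiger": (3.9, 1100),
    "crayfish": (6.0, 2600),
    "trout": (9.2, 6000),
}

def side_per_cm(length_cm, area_cm2):
    """Side of the square arena (cm) available per cm of predator length."""
    return math.sqrt(area_cm2) / length_cm

ratios = {name: side_per_cm(length, area) for name, (length, area) in arenas.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.1f}")
```

Under these assumptions all four ratios fall between roughly 8.2 and 8.5, which is the sense in which the arenas were scaled proportionally to predator size.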

Experiment 1: Survival trials

The effect of embryonic risk on survival with predatory trout

This experiment took place in 370-l black oval water troughs (bottom: ~60 × 100 cm; hereafter ‘pool’), filled half-way with well water and containing leaf litter that served as tadpole food and shelter. The pools were placed in a forested area to prevent the water from heating up too much during the afternoon. To avoid possible confounds due to light or cover amount, pools were physically paired, each pair sharing similar small-scale environmental conditions.

Five tadpoles (mean size ± SD: 13 ± 1 mm) were released in each of the 24 pools, with half of the pools containing tadpoles from the high risk background group and the other half containing tadpoles from the low risk background group. To avoid a possible spatial confound, each pair of pools received tadpoles from the high and low groups, but the allocation of treatments within the pair was random. After a 2-h acclimation period, we introduced one trout in each pool. No difference in tadpole size (t-test: P = 0.69) nor trout size (mean length ± SD = 9.2 ± 1.2 cm, P = 0.7) was found between the 2 risk groups. Tadpoles and trout were left to interact for 36 h. After a few hours, one trout jumped from its pool and was removed from the experiment, leaving us with a sample size of 11 in the low risk group and 12 in the high risk group. The number of surviving tadpoles in each pool was analyzed using a Generalized Linear Model, using a Poisson probability distribution for count data, a Log link function and a Wald Chi-square to test the different parameters in the model. Risk was introduced as a fixed factor and tadpole clutch as a random factor.

The effect of larval risk on survival with predatory trout

This experiment was run after Experiment 1. While we initially planned to follow the same protocol as described above, some unforeseen circumstances caused us to lose the trout used above. We thus used the 16 remaining trout (length ± SD: 9.3 ± 1.1 cm) in a paired design, where each trout was exposed to both high risk and low risk tadpoles, with the order of treatment randomized over two consecutive trials while ensuring that the same number of trout were exposed to high and low risk tadpoles each day (i.e., 8 high and 8 low). In this design, each trout served as its own control. The 2 sets of trials were separated by a 24 h period. The trials were similar to those described above. The size of the tadpoles did not differ between the 2 risk groups (t-test: P = 0.78). We compared the numbers of surviving tadpoles using a 2-tailed Wilcoxon signed rank test for paired, non-parametric data.
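For readers unfamiliar with the paired comparison used here, the following is a minimal sketch of a 2-tailed Wilcoxon signed rank test using the normal approximation (which yields a Z statistic). It is not the software the authors used: the function name `wilcoxon_signed_rank` is illustrative, no continuity correction is applied, and the survivor counts in `high_risk` and `low_risk` are hypothetical values invented for the example, not the study's data.

```python
import math

def wilcoxon_signed_rank(x, y):
    """Two-tailed Wilcoxon signed rank test (normal approximation).

    Zero differences are dropped; tied absolute differences share
    their average rank, and the variance is corrected for ties.
    Returns (z, p).
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t**3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean_w = n * (n + 1) / 4
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-tailed normal p-value
    return z, p

# Hypothetical survivors (out of 5) for 16 trout, one trial per risk group.
high_risk = [1, 0, 2, 1, 0, 1, 2, 0, 1, 1, 3, 0, 1, 2, 1, 2]
low_risk = [2, 2, 2, 3, 1, 1, 3, 2, 2, 1, 2, 1, 3, 2, 2, 4]

z, p = wilcoxon_signed_rank(high_risk, low_risk)
print(f"Z = {z:.2f}, P = {p:.3f}")
```

A negative Z in this sketch means that survival was lower in the first argument (here the high risk group) than in the second.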

The effect of larval risk on survival with predatory crayfish

The crayfish experiments took place in containers (67 × 40 × 16.5 cm) filled with 16 l of well water and a leaf litter substrate. We ran 12 paired replicates in each of the 2 risk groups (high risk vs low risk). We used 12 crayfish (rostrum to telson: 6.9 ± 0.7 cm), each exposed to tadpoles from the high and low risk groups for 48 hours. Just like the previous trout experiment, 6 crayfish first were allocated to the high risk group and then the low risk group, while the 6 others received the treatment in the opposite order. The numbers of surviving tadpoles in both groups were compared using 2-tailed Wilcoxon signed rank test.

The effect of embryonic risk on survival with predatory crayfish

We ran 9 paired replicates for the embryonic risk survival, using the same protocol as described above. Again, the numbers of surviving tadpoles in both groups were compared using 2-tailed Wilcoxon signed rank test.

The effect of larval risk on survival with predatory water tigers

This predation setup differs from that of other predators, because water tigers were very efficient at capturing tadpoles, so we elected to run shorter-term predator encounters. Groups of 3 tadpoles were added to a container (38 × 29 × 15 cm) containing 8 L of water and slough grass, which served as a natural perch for the predator. The tadpoles were left to acclimate for 20 min. A water tiger was introduced and the time to capture the first tadpole (latency to capture) was recorded with a stopwatch. The predator was then immediately removed from the arena and the surviving tadpoles released. If no capture was made after 10 min, the trial was stopped, the tadpoles released and the predator attributed a score of 600 sec. We used 19 predators and ran a total of 49 replicates in each of the 2 risk groups. The size of the predators (from head to tail) was 35.7 ± 7.6 mm. The average size of tadpoles used was 16.2 ± 0.9 mm. There was no difference in the size of the tadpoles used in the two groups (P = 0.69). Times to capture (sec) were analyzed using a generalized randomized block design ANOVA, in which risk was introduced as a fixed factor and predator as a random factor.

The effect of embryonic risk on survival with predatory lesser beetle larvae

While we originally wanted to use water tigers for both experiments, the number of individuals that molted increased, leaving us with a sample size too small for this experiment (molting individuals do not feed for a few days prior to and following the molt). We therefore used lesser beetle larvae, which are smaller, but share the hunting behaviour of water tigers. Individual tadpoles from each of the 2 risk groups were set up in 3.7-L circular pails (20 cm diameter), containing 1 L of water (depth: 10 cm) and floating slough grass for predator anchorage. After a 30 min acclimation period, a lesser beetle larva was introduced to the container and left to interact with the tadpole. The size of predators did not differ between risk groups (22.5 ± 1.7 mm, P = 0.77), and neither did tadpole size (15.6 ± 0.5 mm, P > 0.9). After 24 h, we recorded whether the tadpole was missing (eaten) or alive (binary response variable). We had a total of 15 replicates in the high risk group and 16 in the low risk group. We initially planned a 2-tailed Chi-square test; because two of the cells had an expected frequency of less than 5, we used a 2-tailed Fisher's Exact Test instead.

Experiment 2: Assessment of activity in high- vs. low risk tadpoles

While some ecologists have measured activity of tadpoles during predation trials, the size and visibility of the tadpoles in our mesocosm prevented us from collecting reliable data. Hence, we used a well-documented bioassay41 to test tadpoles that received the high- and low-risk treatments as larvae. Forty untested, randomly chosen tadpoles from each of the 2 treatments were set up individually in 0.5-L containers and left to acclimate for 1 h. The data collection consisted of 3 blocks of observation (14:00, 15:30 and 17:00 h), each block containing 10 scans. At the beginning of each block, an observer (blind to the treatments) scanned the tadpoles for 2 min and recorded which ones were active or motionless. A new scan started 2 min after the end of the previous one, for a total of 10 scanning events for each of the 3 observation blocks. For each tadpole, the proportion of time spent moving was computed as the number of scans in which the tadpole was found moving divided by 30 (the total number of observations). The difference between high- and low-risk groups was tested using a 2-tailed independent t-test (N = 40/group).
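The activity score and the group comparison described above can be sketched as follows. This is a hedged illustration rather than the authors' analysis: the helper names `proportion_moving` and `welch_t` are invented here, the scan record is hypothetical, and the use of the unequal-variance (Welch) form of the t-test is an assumption suggested by the fractional degrees of freedom reported in the Results. Only the t statistic and the Welch-Satterthwaite degrees of freedom are computed; a P value would additionally require the t distribution.

```python
import math
from statistics import mean, variance

def proportion_moving(scans):
    """Proportion of scans (1 = moving, 0 = motionless) in which a tadpole moved."""
    return sum(scans) / len(scans)

def welch_t(x, y):
    """Welch's unequal-variance t statistic and Welch-Satterthwaite df."""
    vx = variance(x) / len(x)  # squared standard error of group x
    vy = variance(y) / len(y)  # squared standard error of group y
    t = (mean(x) - mean(y)) / math.sqrt(vx + vy)
    df = (vx + vy) ** 2 / (vx**2 / (len(x) - 1) + vy**2 / (len(y) - 1))
    return t, df

# Hypothetical tadpole: moving in 12 of the 30 scans (3 blocks x 10 scans).
example_scans = [1] * 12 + [0] * 18
print(proportion_moving(example_scans))

# Hypothetical per-tadpole proportions for two tiny groups.
t_stat, df = welch_t([0.5, 0.6, 0.7], [0.2, 0.3, 0.4])
print(f"t' = {t_stat:.2f}, df = {df:.1f}")
```

In the actual design each group would contain 40 such proportions, one per tadpole.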

Results

Embryonic risk and survival with trout

Neither clutch (Wald χ²(1) = 1.6, P = 0.2) nor clutch × risk (Wald χ²(1) = 0.03, P = 0.8) had an effect, so “clutch” was dropped from the analysis. Risk was found to significantly affect the number of surviving tadpoles (Wald χ²(1) = 5.5, P = 0.019), with tadpoles from the low risk group surviving better than those in the high risk group. Only 9/60 (15%) of tadpoles survived in the high risk group, while 21/55 (38%) tadpoles survived in the low risk group (Figure 1A).

Figure 1: Comparison of mean survival (± SE where applicable) of tadpoles exposed to high risk vs low risk as embryos (left panels) or as tadpoles (right panels).

Survival trials were set up with rainbow trout (top panels), crayfish (middle panels) or dytiscid beetles (bottom panels). Measurement of survival varied with each specific experimental setup (see text for details) (photos: AC, MF).

Larval risk and survival with trout

The results indicated that tadpoles from the low risk group survived better than those in the high risk group (Z = -2.1, P = 0.034). We found that 18/80 (22%) tadpoles survived in the high risk group, while 33/80 (41%) survived in the low risk group (Figure 1B).

Larval risk and survival with crayfish

We found that tadpoles from the low risk group survived better than those in the high risk group (Z = -2.4, P = 0.015). In the low risk group, 56/60 (93%) tadpoles survived, while 46/60 (77%) survived in the high risk group (Figure 1D).

Embryonic risk and survival with crayfish

The Wilcoxon signed rank test revealed that tadpoles from the low risk group survived better than those from the high risk group (Z = -2.2, P = 0.027). This time, 28/45 (62%) tadpoles survived in the high risk group, while 39/45 (87%) survived in the low risk group (Figure 1C).

Larval risk and survival with beetles

Our ANOVA revealed no effect of predators (F(18, 78) = 1.3, P = 0.21) but a significant effect of risk (F(1, 78) = 5.8, P = 0.018). Predators spent on average 391 sec to capture tadpoles in the low risk group, but 590 sec (51% increase) to capture the ones in the high risk group (Figure 1E).

Embryonic risk and survival with beetles

The analysis revealed that tadpoles in the high risk group survived better than those in the low risk group (p = 0.011). In total, 3/16 (19%) tadpoles survived in the low risk group, while 10/15 (67%) survived in the high risk group (Figure 1F).
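The survival counts reported above are enough to recompute the 2-tailed Fisher's Exact Test. The sketch below is one common way to define the two-tailed P value (summing the probabilities of all tables with the same margins that are no more likely than the observed one); the function name `fisher_exact_two_tailed` is illustrative, and whether the authors' software used exactly this definition is an assumption.

```python
from math import comb

def fisher_exact_two_tailed(a, b, c, d):
    """Two-tailed Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same
    margins whose probability does not exceed that of the observed table.
    """
    row1, row2, col1 = a + b, c + d, a + c
    total = row1 + row2
    denom = comb(total, col1)

    def prob(k):
        # Probability that the top-left cell equals k, given fixed margins.
        return comb(row1, k) * comb(row2, col1 - k) / denom

    p_obs = prob(a)
    k_min = max(0, col1 - row2)
    k_max = min(row1, col1)
    # Small relative tolerance guards against floating-point ties.
    return sum(prob(k) for k in range(k_min, k_max + 1)
               if prob(k) <= p_obs * (1 + 1e-9))

# Rows: low risk, high risk; columns: survived, eaten (counts from the text).
p_value = fisher_exact_two_tailed(3, 13, 10, 5)
print(f"P = {p_value:.3f}")
```

With the counts from the text (3 of 16 low risk and 10 of 15 high risk tadpoles surviving), this gives a value of about 0.011, in line with the reported result.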

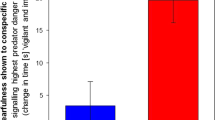

Activity

The analysis revealed that high-risk tadpoles were significantly more active (12% higher) than low-risk tadpoles (t′-test: t′(65.2) = 3.8, P < 0.001; Figure 2).

Discussion

Our results indicate that background level of risk had a significant effect on the ability of tadpoles to survive first-time encounters with novel predators, as would be the case in the context of a predator invasion. However, the direction of the effect was not consistent across predators and seems dependent on the type of predator that was introduced. High-risk environments were beneficial to tadpoles when the introduced predator was a lesser beetle larva or a water tiger. However, the high-risk background seemed detrimental to survival when predators were crayfish or juvenile rainbow trout.

Predator type

Our woodfrogs differed in their evolutionary history with the different predators. The aquatic beetles shared some evolutionary history with tadpoles from our field site, which in turn explains why they may display antipredator behaviours that are more in tune with the mode of attack of those predators. While some species of amphibians may be able to fine-tune morphological adaptations to different types of predators34,42, it is unlikely that morphology would explain our results given the short duration of our experimental exposure (7 days). Almost all studies on morphological defences exposed their test subjects for several weeks in order to observe a measurable, biologically relevant effect. Only one study has shown small changes in morphology as early as 4 days post-treatment, but this change was only observed in tadpoles showing a reversal of investment43 and the ecological relevance of this observed effect size has not yet been demonstrated. In addition, exposure to injured tadpole cues alone did not seem to elicit a specific morphological adaptation, compared to control cues, in other ranid species44, hence emphasizing that our results are mediated principally by behavioural traits, rather than morphological ones.

While crayfish and trout could be considered evolutionarily novel, one difference confounds the ‘evolutionary history’ explanation. Trout are very obvious pursuit predators, actively detecting moving tadpoles and hunting them. Crayfish move at a slower pace, but their movements also track moving tadpoles. The aquatic beetles, on the other hand, are considered ambush predators. While they will launch into short-distance pursuit bursts, they more frequently find a perching site and wait for an ‘unaware’ prey to swim within grabbing distance. Could hunting strategy explain the observed survival patterns? We unfortunately did not find any native pursuit predators occurring with tadpoles at our field site to formally test this possibility. However, our activity and habitat preference data may shed some light onto our results. Activity data indicate that tadpoles from a high-risk environment are overall more active than tadpoles from a low-risk environment. These results are in line with those predicted by the risk allocation hypothesis40,45, where prey exposed to intense bouts of risk may have little choice but to dramatically increase foraging and/or mating efforts during expected bouts of safety, in order to compensate for lost opportunities. Alternatively, a number of studies have shown that high-risk conditions in one life-history stage would often result in amphibians trying to shorten the duration of this stage and move on to the next stage faster (ontogenetic predation escape)46,47,48,49. Increasing local predation risk may force tadpoles to increase their foraging effort to increase growth rate and reach metamorphosis earlier. Either way, a differential swimming pattern due to local risk level likely renders the high-risk tadpoles more conspicuous to pursuit predators, all the while making them too fast for ambush predators.
While observations were nearly impossible for the long-term predation trials, observations of tadpoles exposed to water tigers are consistent with these explanations. Tadpoles swimming faster past the water tigers often escaped unharmed, as the predator did not have time to launch an attack (pers. obs.). If an attack did occur, the first one was almost always unsuccessful and tadpoles then undertook a freezing behaviour, which, in itself, decreased their encounter rate with ambush predators. Could this behavioural pattern represent an adaptation to high-risk situations?

Ontogeny of risk exposure

An interesting outcome of this series of experiments is that the ontogeny of risk exposure does not seem to affect the direction of the results. With the present design, we cannot directly test whether or not the timing of risk exposure would affect survival in a quantitative manner, but we can certainly conclude that it does not affect the outcome in a qualitative manner. This point is very interesting in itself. On one hand, we have tadpoles that have been experiencing a high risk or low risk environment in their recent past. On the other hand, we have tadpoles that experienced the same risk regime (concentration, frequency and type), but only for a few days as embryos. How could those two treatments lead to the same survival outcome? We know that recent history of risk affects the decisions of an individual to hide, forage or mate, as outlined in the Risk Allocation Hypothesis40,45. However, those are adaptations to short-term patterns of risk. It is nevertheless possible that risk experienced as an embryo led to dramatic alterations in life histories. We know of many long-term effects of prenatal stressors and, while the word ‘stressor’ is often viewed as negative, some stressors may help individuals get ready for and become more adapted to future environmental conditions, as outlined in the Adaptive Calibration Model (ACM) for instance50. In this model, it is hypothesized that the stress response system selectively encodes, filters or amplifies information about the environment, to affect the individual's developmental trajectories, leading to physiological or behavioral responses that are the most adapted to the environmental conditions. If early environmental conditions result in high sensitivity to risk, for instance, high-sensitivity animals may display a much stronger response to risk cues than low-sensitivity individuals.
This may explain why tadpoles exposed to cues from predatory salamander as embryos, maintain an antipredator avoidance to salamander cues at least 5-6 weeks later51,52, while tadpoles exposed to the same cues as young tadpoles, will only maintain a response to salamander cues for 2–3 weeks53. In addition, our results follow the predictions of the ACM that, under high stress (i.e., high risk) conditions, animals should exhibit a ‘fast life style’50,54 – this reinforces the above-mentioned suggestion that tadpoles are increasing activity to grow faster and metamorphose earlier.

Species invasions

Our results suggest that background level of risk does alter the survival of native prey exposed to novel predators, which could be invaders, but not in a consistent direction. A priori, background level of risk should have a positive effect on native prey survival. Numerous examples of predator-free populations being rapidly reduced by invasive species attest to that effect55. In addition, relaxed selection pressure has been identified as a factor facilitating species invasion56. Hence, more predation pressure should be better. While much is still unknown, one thing is certain: prey having to cope with evolutionarily novel predators will have to display rapid changes to their phenotypes. A recent meta-analysis of more than 3000 rates of phenotypic change found that rapid environmental change often induced rapid, abrupt phenotypic change, suggesting that most of that change involves phenotypic plasticity rather than immediate genetic evolution57. In other words, prey that rely on the tools they already have may fare better than those requiring selection. If prey do not have adequate defences, induced antipredator defences may appear in populations within a relatively short timeframe, at least in some species10,23. The future of native prey populations seems to depend on the outcome of the race between the invasive predator's rate of consumption, the native population's growth rate and the speed at which the native population can display induced antipredator defences.
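The race described above can be illustrated with a toy calculation. The sketch below is not a model fitted to our data or drawn from the cited literature: it assumes logistic prey growth, a per-capita consumption rate that induced defences reduce by up to a fixed fraction, and an exponential approach to that maximum defence level. The function name, parameter names and all numerical values are hypothetical and chosen only for illustration.

```python
import math


def simulate_prey(growth_rate, consumption_rate, defence_speed,
                  max_defence=0.8, carrying_capacity=1000.0,
                  initial_prey=500.0, steps=50):
    """Toy discrete-time model of a native prey population under invasion.

    All parameters are hypothetical illustrations, not estimates:
      growth_rate       per-step intrinsic growth rate of the prey
      consumption_rate  per-capita loss to the predator with no defence
      defence_speed     how quickly induced defences approach max_defence
      max_defence       largest fraction of predation that defences remove
    Returns the list of population sizes, starting with initial_prey.
    """
    prey = initial_prey
    trajectory = [prey]
    for t in range(steps):
        # Induced defence rises from 0 towards max_defence over time.
        defence = max_defence * (1.0 - math.exp(-defence_speed * t))
        # Logistic recruitment of the native population.
        births = growth_rate * prey * (1.0 - prey / carrying_capacity)
        # Predation losses, reduced in proportion to the current defence.
        deaths = consumption_rate * (1.0 - defence) * prey
        prey = max(prey + births - deaths, 0.0)
        trajectory.append(prey)
    return trajectory


# Same predator and same prey growth; only the speed of induction differs.
fast = simulate_prey(growth_rate=0.3, consumption_rate=0.5, defence_speed=1.0)
slow = simulate_prey(growth_rate=0.3, consumption_rate=0.5, defence_speed=0.01)
print("final prey, fast induction:", round(fast[-1], 1))
print("final prey, slow induction:", round(slow[-1], 1))
```

Under these assumed values, consumption initially exceeds growth, so the population that induces defences quickly recovers while the slowly responding one keeps declining. Changing any of the three rates shifts which side wins the race.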

To conclude, our results are the first to show that manipulations of background level of risk, using generic (non-predator specific) risk cues, can alter the survival of native prey exposed to live, novel predators. More work needs to be done to validate our results in other taxa and to test the ‘predator hunting strategy’ hypothesis. It would be particularly fascinating to test whether alarm calls of birds and mammals function to increase background risk in the same way as chemical alarm cues do in aquatic systems. Increases in background levels of risk allow prey to display antipredator responses or alter their life-history strategy to increase survival in high-risk environments. These findings bring us one step forward in our understanding of predator-prey dynamics and their consequences for invasions.

References

Blackburn, T. M. & Duncan, R. P. Determinants of establishment success in introduced birds. Nature 414, 195–197 (2001).

Kolar, C. S. & Lodge, D. M. Progress in invasion biology: predicting invaders. Trends Ecol. Evol. 16, 199–204 (2001).

Bruno, J., Fridley, J., Bromberg, K. & Bertness, M. Insights into biotic interactions from studies of species invasions. Species invasions: insights into ecology, evolution and biogeography, 13–40 (2005).

Rodriguez, L. F. Can invasive species facilitate native species? Evidence of how, when and why these impacts occur. Biol. Invas. 8, 927–939 (2006).

D'Antonio, C. & Meyerson, L. A. Exotic plant species as problems and solutions in ecological restoration: a synthesis. Restor. Ecol. 10, 703–713 (2002).

Salo, P., Korpimaki, E., Banks, P. B., Nordstrom, M. & Dickman, C. R. Alien predators are more dangerous than native predators to prey populations. Proc. R. Soc. B 274, 1237–1243 (2007).

Clavero, M. & García-Berthou, E. Invasive species are a leading cause of animal extinctions. Trends Ecol. Evol. 20, 110–110 (2005).

Crowder, D. W. & Snyder, W. E. Eating their way to the top? Mechanisms underlying the success of invasive insect generalist predators. Biol. Invas. 12, 2857–2876 (2010).

Larson, E. R., Magoulick, D. D., Turner, C. & Laycock, K. H. Disturbance and species displacement: different tolerances to stream drying and desiccation in a native and an invasive crayfish. Freshw. Biol. 54, 1899–1908 (2009).

Phillips, B. L. & Shine, R. An invasive species induces rapid adaptive change in a native predator: cane toads and black snakes in Australia. Proc. R. Soc. B 273, 1545–1550 (2006).

Didham, R. K., Tylianakis, J. M., Gemmell, N. J., Rand, T. A. & Ewers, R. M. Interactive effects of habitat modification and species invasion on native species decline. Trends Ecol. Evol. 22, 489–496, 10.1016/j.tree.2007.07.001 (2007).

Sih, A. et al. Predator-prey naivete, antipredator behavior and the ecology of predator invasions. Oikos 119, 610–621, 10.1111/j.1600-0706.2009.18039.x (2010).

Sih, A., Ferrari, M. C. O. & Harris, D. J. Evolution and behavioural responses to human-induced rapid environmental change. Evol. App. 4, 367–387, 10.1111/j.1752-4571.2010.00166.x (2011).

Denoël, M., Dzukic, G. & Kalezic, M. L. Effects of widespread fish introductions on paedomorphic newts in Europe. Cons. Biol. 19, 162–170 (2005).

Lima, S. L. & Dill, L. M. Behavioral decisions made under the risk of predation - a review and prospectus. Can. J. Zool. 68, 619–640 (1990).

DeWitt, T. J., Sih, A. & Hucko, J. A. Trait compensation and cospecialization in a freshwater snail: size, shape and antipredator behaviour. Anim. Behav. 58, 397–407 (1999).

Pettersson, L. B., Nilsson, P. A. & Bronmark, C. Predator recognition and defence strategies in crucian carp, Carassius carassius. Oikos 88, 200–212 (2000).

Hammill, E., Rogers, A. & Beckerman, A. P. Costs, benefits and the evolution of inducible defences: a case study with Daphnia pulex. J. Evol. Biol. 21, 705–715, 10.1111/j.1420-9101.2008.01520.x (2008).

Sih, A. & Moore, R. D. Delayed hatching of salamander eggs in response to enhanced larval predation risk. Am. Nat. 142, 947–960 (1993).

Werner, E. E. Amphibian metamorphosis - growth rate, predation risk and the optimal size at transformation. Am. Nat. 128, 319–341 (1986).

Wildy, E. L., Chivers, D. P. & Blaustein, A. R. Shifts in life-history traits as a response to cannibalism in larval long-toed salamanders (Ambystoma macrodactylum). J. Chem. Ecol. 25, 2337–2346 (1999).

McCormick, M. I. Delayed metamorphosis of a tropical reef fish (Acanthurus triostegus): a field experiment. Mar. Ecol.-Prog. Ser. 176, 25–38 (1999).

Freeman, A. S. & Byers, J. E. Divergent induced responses to an invasive predator in marine mussel populations. Science 313, 831–833 (2006).

Lima, S. L. Nonlethal effects in the ecology of predator-prey interactions - What are the ecological effects of anti-predator decision-making? Bioscience 48, 25–34 (1998).

Berejikian, B. A., Tezak, E. P. & LaRae, A. L. Innate and enhanced predator recognition in hatchery-reared chinook salmon. Env. Biol. Fishes 67, 241–251 (2003).

Vilhunen, S. & Hirvonen, H. Innate antipredator responses of Arctic charr (Salvelinus alpinus) depend on predator species and their diet. Behav. Ecol. Sociobiol. 55, 1–10, 10.1007/s00265-003-0670-8 (2003).

Ferrari, M. C. O., Gonzalo, A., Messier, F. & Chivers, D. P. Generalization of learned predator recognition: an experimental test and framework for future studies. Proc. R. Soc. B 274, 1853–1859, 10.1098/rspb.2007.0297 (2007).

Ferrari, M. C. O., Wisenden, B. D. & Chivers, D. P. Chemical ecology of predator-prey interactions in aquatic ecosystems: a review and prospectus. Can. J. Zool. 88, 698–724 (2010).

Griffin, A. S. Social learning about predators: a review and prospectus. Learn. Behav. 32, 131–140 (2004).

Crane, A. L. & Ferrari, M. C. O. in Social learning theory: phylogenetic considerations across animal, plant and microbial taxa (ed K. B. Clark) 53–82 (Nova Science Publishers, 2013).

Brown, G. E. & Chivers, D. P. in Ecology of predator-prey interactions (eds P. Barbosa & I. Castellanos) 34–54 (Oxford University Press, 2005).

Brown, G. E., Ferrari, M. C., Elvidge, C. K., Ramnarine, I. & Chivers, D. P. Phenotypically plastic neophobia: a response to variable predation risk. Proc. R. Soc. B 280 (2013).

Hettyey, A., Vincze, K., Zsarnóczai, S., Hoi, H. & Laurila, A. Costs and benefits of defences induced by predators differing in dangerousness. J. Evol. Biol. 24, 1007–1019 (2011).

Teplitsky, C. et al. Escape behaviour and ultimate causes of specific induced defences in an anuran tadpole. J. Evol. Biol. 18, 180–190 (2005).

Blaustein, A. R. et al. The complexity of amphibian population declines: understanding the role of cofactors in driving amphibian losses. Ann. N-Y Acad. Sci. 1223, 108–119, 10.1111/j.1749-6632.2010.05909.x (2011).

Lee-Yaw, J. A., Irwin, J. T. & Green, D. M. Postglacial range expansion from northern refugia by the wood frog, Rana sylvatica. Mol. Ecol. 17, 867–884 (2008).

Ferrari, M. C. O. & Chivers, D. P. Temporal variability, threat sensitivity and conflicting information about the nature of risk: understanding the dynamics of tadpole antipredator behaviour. Anim. Behav. 78, 11–16, 10.1016/j.anbehav.2009.03.016 (2009).

Ferrari, M. C. O. & Chivers, D. P. The ghost of predation future: threat-sensitive and temporal assessment of risk by embryonic woodfrogs. Behav. Ecol. Sociobiol. 64, 549–555, 10.1007/s00265-009-0870-y (2010).

Ferrari, M. C. O., Wisenden, B. D. & Chivers, D. P. Chemical ecology of predator-prey interactions in aquatic ecosystems: a review and prospectus. Can. J. Zool. 88, 698–724 (2010).

Ferrari, M. C. O., Sih, A. & Chivers, D. P. The paradox of risk allocation: a review and prospectus. Anim. Behav. 78, 579–585, 10.1016/j.anbehav.2009.05.034 (2009).

Chivers, D. P. & Ferrari, M. C. Social learning of predators by tadpoles: does food restriction alter the efficacy of tutors as information sources? Anim. Behav. 89, 93–97 (2014).

Benard, M. F. Survival trade-offs between two predator-induced phenotypes in Pacific treefrogs (Pseudacris regilla). Ecology 87, 340–346 (2006).

Relyea, R. A. Predators come and predators go: the reversibility of predator-induced traits. Ecology 84, 1840–1848 (2003).

Schoeppner, N. M. & Relyea, R. A. Interpreting the smells of predation: how alarm cues and kairomones induce different prey defences. Funct. Ecol. 23, 1114–1121 (2009).

Lima, S. L. & Bednekoff, P. A. Temporal variation in danger drives antipredator behavior: The predation risk allocation hypothesis. Am. Nat. 153, 649–659 (1999).

Chivers, D. P. et al. Predator-induced life history changes in amphibians: egg predation induces hatching. Oikos 92, 135–142 (2001).

Johnson, J. B., Saenz, D., Adams, C. K. & Conner, R. N. The influence of predator threat on the timing of a life-history switch point: predator-induced hatching in the southern leopard frog (Rana sphenocephala). Can. J. Zool. 81, 1608–1613, 10.1139/z03-148 (2003).

Kusch, R. C. & Chivers, D. P. The effects of crayfish predation on phenotypic and life-history variation in fathead minnows. Can. J. Zool. 82, 917–921, 10.1139/z04-066 (2004).

Warkentin, K. M. How do embryos assess risk? Vibrational cues in predator-induced hatching of red-eyed treefrogs. Anim. Behav. 70, 59–71 (2005).

Del Giudice, M., Ellis, B. J. & Shirtcliff, E. A. The adaptive calibration model of stress responsivity. Neurosci. Biobehav. Rev. 35, 1562–1592 (2011).

Mathis, A., Ferrari, M. C. O., Windel, N., Messier, F. & Chivers, D. P. Learning by embryos and the ghost of predation future. Proc. R. Soc. B 275, 2603–2607, 10.1098/rspb.2008.0754 (2008).

Ferrari, M. C. O., Manek, A. K. & Chivers, D. P. Temporal learning of predation risk by embryonic amphibians. Biol. Lett. 6, 308–310, 10.1098/rsbl.2009.0798 (2010).

Ferrari, M. C. O., Brown, G. E., Bortolotti, G. R. & Chivers, D. P. Linking predator risk and uncertainty to adaptive forgetting: a theoretical framework and empirical test using tadpoles. Proc. R. Soc. B 277, 2205–2210, 10.1098/rspb.2009.2117 (2010).

Sih, A. Effects of early stress on behavioral syndromes: an integrated adaptive perspective. Neurosci. Biobehav. Rev. 35, 1452–1465 (2011).

Blumstein, D. T. Moving to suburbia: ontogenetic and evolutionary consequences of life on predator-free islands. J. Biogeog. 29, 685–692 (2002).

Lahti, D. C. et al. Relaxed selection in the wild. Trends Ecol. Evol. 24, 487–496, 10.1016/j.tree.2009.03.010 (2009).

Hendry, A. P., Farrugia, T. J. & Kinnison, M. T. Human influences on rates of phenotypic change in wild animal populations. Mol. Ecol. 17, 20–29 (2008).

Acknowledgements

All work herein followed U of S Animal Care protocol 20060014. We thank Brittney Hoemsen for identifying the species of dytiscids, Jean & Glen for giving us access to the field site and providing housing, Keith for hauling the mesocosms to the field site, Chloé for her invaluable assistance, and Harold and Oliver for their support. A special thank-you goes to the Sniper for letting us solve the world's potato beetle problem by scooping dytiscids from his slough.

Author information

Contributions

M.F., G.B., A.C. and D.C. conceived and designed the project; A.C., D.C. and M.F. collected data; M.F. analyzed data and wrote the first draft of the manuscript; M.F., G.B., A.C. and D.C. contributed to the revisions.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License. The images or other third party material in this article are included in the article's Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder in order to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/4.0/

About this article

Cite this article

Ferrari, M., Crane, A., Brown, G. et al. Getting ready for invasions: can background level of risk predict the ability of naïve prey to survive novel predators? Sci Rep 5, 8309 (2015). https://doi.org/10.1038/srep08309

DOI: https://doi.org/10.1038/srep08309
