Abstract

Concrete-filled steel tube columns (CFSTCs) are important elements in the construction sector and predictive analysis of their behavior is essential. Recent works have revealed the potential of metaheuristic-assisted approximators for this purpose. The main idea of this paper, therefore, is to introduce a novel integrative model for appraising the axial compression capacity (Pu) of CFSTCs. The proposed model represents an artificial neural network (ANN) supervised by satin bowerbird optimizer (SBO). In other words, this metaheuristic algorithm trains the ANN optimally to find the best contribution of input parameters to the Pu. In this sense, column length and the compressive strength of concrete, as well as the characteristics of the steel tube (i.e., diameter, thickness, yield stress, and ultimate stress), are considered input data. The prediction results are compared to five ANNs supervised by backtracking search algorithm (BSA), earthworm optimization algorithm (EWA), social spider algorithm (SOSA), salp swarm algorithm (SSA), and wind-driven optimization. Evaluating various accuracy indicators showed that the proposed model surpassed all of them in both learning and reproducing the Pu pattern. The obtained values of mean absolute percentage error of the SBO-ANN was 2.3082% versus 4.3821%, 17.4724%, 15.7898%, 4.2317%, and 3.6884% for the BSA-ANN, EWA-ANN, SOSA-ANN, SSA-ANN and WDA-ANN, respectively. The higher accuracy of the SBO-ANN against several hybrid models from earlier literature was also deduced. Moreover, the outcomes of principal component analysis on the dataset showed that the yield stress, diameter, and ultimate stress of the steel tube are the three most important factors in Pu prediction. A predictive formula is finally derived from the optimized SBO-ANN by extracting and organizing the weights and biases of the ANN. Owing to the accurate estimation shown by this model, the derived formula can reliably predict the Pu of concrete-filled steel tube columns.

Similar content being viewed by others

Introduction

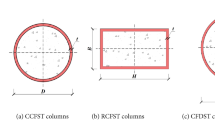

The world of engineering has witnessed continuous development of sophisticated algorithms and apparatus that lead to less complicated calculations and easier implementations of evaluative models1,2,3,4. For instance, many experimental efforts have used such developments to evaluate the quality of construction materials5,6,7,8. Focusing on civil and structural engineering, experts have benefited from novel approaches to analyze structural elements and construction materials such as concrete and steel9,10,11,12. Above many construction materials, concrete has been broadly used for various civil engineering projects13,14,15,16,17. Steel is another popular material that, owing to specific advantages, has received huge attention in generating structural elements18,19. In recent decades, engineers have suggested using composite structural elements to take advantage of both concrete and steel20,21,22. Becoming an effective construction material, numerous studies have been dedicated to assessing the capacity of composite structures. For instance, Shakouri Mahmoudabadi et al.23 investigated the behavior of concrete columns fortified with glass fiber-reinforced polymer bars subjected to eccentric loading using experimental and finite element analysis models. They observed that the loading capacity of the specimens declines as the eccentricity rises. As a particular type, concrete-filled steel tube column (CFSTC) is highly regarded in civil engineering works worldwide24,25,26. In this regard, many experimental and numerical approaches have been developed for exploring their behaviors27,28,29, and more particularly, the axial compression capacity (Pu)30,31.

Due to the highly non-linear relationship between the mechanical parameters of construction material and influential characteristics, recent scientific efforts advice employing machine learning like artificial neural network (ANN)32, gradient tree boosting algorithm33, support vector regression (SVR)34, and adaptive neuro-fuzzy inference system (ANFIS)35 models for such purposes. These models are able to map and reproduce the intrinsic dependency of any output parameter on its corresponding inputs36,37,38. For example, Ghasemi and Naser39 could successfully use two explainable artificial intelligence techniques called XGBoost and random forest to predict the compressive strength of 3D concrete mixtures. These techniques also revealed the pivotal role of specimen age and fine aggregate quantity in the prediction task. As for the Pu-related simulations, many scholars have benefited from these models to establish a firm predictive intelligence. Le40 could predict the bearing capacity of elliptical CFSTC subjected to axial load using ANFIS and present a graphical user interface for this purpose. Ahmadi et al.41 professed the applicability of ANN and also its superiority over experimental tools for the same objective. The suggested model could achieve correlation values of around 0.93 in the training and validation phases, and about 0.90 in the testing phase. A powerful ANN was optimized and used by Tran et al.42,43. This model, along with sensitivity analysis, investigated the effect of inputs and pointed out the steel tube diameter as the most efficient factor. Gene expression programming is another popular intelligent model that was hired by Nour and Güneyisi44 for evaluating the ultimate strength of CFSTC created from recycled aggregate concrete. Naser et al.45 presented another successful use of this algorithm.

More sophisticated efforts that sought optimal solutions resulted in designing capable search strategies for intricate problems46,47,48. These models are called metaheuristic techniques that simulate the problem in their specific environment and finally provide the optimum solution49,50,51. The pivotal objective of many studies has been showing the optimization competency of these algorithms52,53,54. A well-known application of metaheuristic is assisting conventional predictors toward a more reliable performance. Mai et al.55 proposed the combination of radial basis function (RBF) ANN with firefly algorithm (FFA), differential evolution (DE), and genetic algorithm (GA) for estimating the Pu of square CFSTC. A comparison showed that the RBF-FFA model can perform 28, 37, and 52% more accurately than RBF-GA, RBF-DE, and conventional ANN, respectively. Likewise, Ren et al.56 synthesized particle swarm optimization (PSO) and support vector machine for analyzing the ultimate bearing capacity of CFSTC. Due to the higher accuracy, the proposed model was preferred over theoretical and empirical techniques. Hanoon et al.57 trained an ANN with a PSO algorithm and achieved a good accuracy (coefficient of variation between 4.98% and 9.53%) in evaluating the flexural bending capacity of CFST beams. Ngo and Le58 incorporated SVR, which is a popular intelligent predictor, with grey wolf optimization (GWO) for analyzing the bearing capacity of CFSTCs. Due to the considerable accuracy improvements caused by the proposed model (from 10.3 to 87.9%), it was introduced as an effective tool for this purpose. Further similar applications of such algorithms can be found for invasive weed optimization (IWO)59, genetic algorithm (GA)60, and balancing composite motion optimization (BCMO)61.

From the above-discussed studies, it can be found that the combination of regular predictors with metaheuristic algorithms makes promising evaluative models for various concrete-related parameters62,63. On the other hand, the advent of new metaheuristic algorithms calls for extensive investigations into the suitability of the existing models. This study is therefore concerned with designing a novel integrative model based on ANN supervised by satin bowerbird optimizer (SBO)64 for estimating the Pu of CCFSTC. Moreover, to have a comparative approach, the performance of the SBO is compared to five other optimizers, namely backtracking search algorithm (BSA)65, earthworm optimization algorithm (EWA)66, social spider algorithm (SOSA)67, salp swarm algorithm (SSA)68, and wind-driven optimization (WDO)69 in the present study, as well as several methods in the previous literature. It is worth mentioning that the selected algorithms have not been earlier used for this purpose; and owing to the comparisons that will be performed among a large number of techniques, the findings of this research provide valuable insights into the literature of machine learning applications in estimating the Pu of CCFSTCs. The optimum configurations of the used models are discovered to predict the Pu from related geometrical and physical parameters. Two other outcomes of this study are (i) implementing statistical analysis on the Pu dataset to identify the most important parameters and (ii) a mathematical monolithic formula that can eliminate the need for computer-aided computations for calculating the Pu.

In the following, the manuscript is organized as follows: Section “Materials and methods” describes the used material (i.e., data, algorithms, and accuracy criteria), Section “Results and discussion” presents the results along with relevant discussion about the findings, and Section “Conclusions” gives the conclusions.

Materials and methods

Data provision

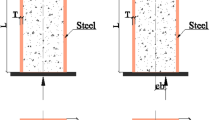

The CCFST data that is used for feeding the models of this research is taken from a previously done study by Tran et al.70. They analyzed the Pu of CCFSTC with ultra-high-strength concrete (UHSC) by finite element methods. A large dataset was produced that presents 768 Pu values versus some parameters that affect it. These parameters are called inputs (versus the Pu which is called target) that include column length (L), the diameter of steel tube (D), the thickness of steel tube (t), the yield stress of steel tube (fy), ultimate stress of steel tube (fu), and compressive strength of UHSC (fc’). Figure 1a–f show how these parameters change over the dataset. Likewise, Fig. 1g depicts the behavior of the Pu. Also, Table 1 reports the statistical indicators of the dataset.

Providing a sufficient number of samples to the machine learning models is of great importance in attaining a dependable analysis. The dataset consists of 768 records, which after permutation, were divided into two quite different parts with respect to the famous 80:20 ratio. The reason for permuting the dataset is to have samples from all parts of the dataset. These sub-datasets contain 614 and 154 samples which are used in the training and testing processes, respectively. In the training phase, the model explores the dependence of the Pu on the whole inputs and generates a pattern accordingly. Then, it applies the pattern to the smaller dataset to see how accurately the model can predict new Pus.

The SBO

Based on the courtship and copulation of a so-called bird “satin bowerbird”, Moosavi and Bardsiri64 proposed a new optimization of the ANFIS called SBO. Up to now, many scholars have chosen this algorithm for their optimization purposes71,72. Moayedi and Mosavi73, for example, created a powerful hybrid of ANN using the SBO applied to electrical load prediction. The algorithm draws on six major steps: (a) random generation of the population, probability calculation (for each individual), elitism, spotting changes in the positions, mutation, and finally synthesizing old and new populations64.

In a more clear description, after creating a random population, the position of each bird is presented by a K-dimensional vector. Next, the algorithm calculates a probability value based on Eq. 1 that stands for the attractiveness of the birds.

in which \({fit}_{i}\) gives the fitness of the ith bird obtained from the below equation:

where \(f({X}_{k})\) stands for the cost function of the bower k. These values are compared in the elitism step to select the best-fitted member. In this regard, the higher the fitness is, the better the solution is.

Equation 3 expresses the adjustment of other bowerbirds’ positions throughout iterative efforts.

in which \({\lambda }_{j}\) is step length indicator, \({X}_{ij}\) stands for the element j in the position vector of the bowerbird i (likewise \({X}_{best,j}\) denotes this element in the position vector of the best bowerbird), noting that j is obtained from the roulette wheel technique. In this algorithm, more experienced bowerbirds may eliminate weaker ones in the courtship competition. It leads to a mutation process which can be expressed by the below relationship74,75.

where the maximum and minimum values of variables are respectively denoted by \({Var}_{max}\) and \({Var}_{min}\) and the difference between them is shown by z. Lastly, the former population is combined with the new ones at the end of each cycle. The whole population is then evaluated and sorted with respect to the fitness values and those with the lowest cost are preserved. This process continues iteratively until a computational goal is satisfied76

The benchmarks

Toward a comparative assessment of the proposed model, five different metaheuristic methods, namely BSA, EWA, SOSA, SSA, and WDO are used in this work. The same duty of the SBO (i.e., training the ANN) is assigned to these algorithms. While each algorithm simulates the problem based on a specific strategy, they are all known as population-based techniques. It means that each algorithm hires a population of search agents (e.g., earthworms in the EWA) to seek the optimum solution in the problem space. After designating proper parameters (e.g., the population size), relevant physical/natural rules are applied to provide optimal training for the ANN. Another similarity among these algorithms is that they need to be implemented for a large number of iterations (e.g., 1000) to minimize the cost function properly (will be explained in Section “Network optimization (training)”). The overall description of these strategies is presented in Table 2 and further methodological details can be found in studies given in the last column.

Accuracy assessment criteria

There are different indicators to assess the accuracy of predictive models. Each one follows a specific formula comparing the predicted and expected values of the simulated parameter. In this work, four famous ones, namely the RMSE, mean absolute error (MAE), mean absolute percentage error (MAPE), and Pearson correlation coefficient (R) are used. The first three indicators deal with the error of prediction, while R indicates the goodness of fit in a regression chart. The formulation of these indicators is defined as follows:

where N represents the number of data. Also,\({P}_{u {i}_{observed}}\) and \({P}_{u {i}_{estimated}}\) stand for the ith observed and estimated values of Pu (with averages of \({\overline{P} }_{u\,observed}\) and \({\overline{P} }_{u\,estimated}\)), respectively.

Results and discussion

Network optimization (training)

The role of metaheuristic algorithms in combination with an ANN was explained in the previous sections. By unsupervised optimization, they achieve the optimal parameters (biases and weights) for the given ANN. Determining the structure of the ANN is a prerequisite of this process. The number of processors (i.e., neurons) in the hidden layers is an important variable. In this work, this variable is determined based on the previous experience of the authors supported by a trial-and-error test for the values. It was revealed that among 15 tested values (i.e., 1, 2, …, 15), five neurons build the most accurate network. So, given the number of inputs (i.e., six) and the single output, the ANN takes the format of 6 × 5 × 1.

The SBO algorithm was combined with the mentioned ANN to create the SBO-ANN hybrid. As illustrated in Fig. 2, this process has the following steps:

-

1.

The selected ANN model is fed by the training dataset,

-

2.

The mathematical representation of the ANN is created (will be explained in Section “An explicit formula”). The variables of this equation are the weights and biases of the ANN which must be tuned,

-

3.

Training RMSE is designated as the objective function,

-

4.

The mathematical ANN is exposed to the SBO algorithm as its optimization problem and the SBO tries to minimize this function so it achieves a lower RMSE (i.e., better training). This process is considered the main optimization step which is carried out by trying to improve the problem variables (i.e., weights and biases) in every iteration of the SBO.

A significant parameter of such optimization techniques is the size of the population (SoP). A well-accepted way to find a suitable SoP is by testing a wide range of them87. Figure 3a shows the convergence of the tested SBO-ANNs. According to this figure, all curves reach a relatively steady situation after one thousand iterations. Meanwhile, the training RMSE of each iteration gives the objective function (the y-axis). This figure also says that the lowest error is obtained for the SoP = 500. Thus, the results of this configuration will be considered for the SBO-ANN performance assessment.

The above efforts were executed for the benchmark algorithms (i.e., BSA, EWA, SOSA, SSA, and WDO) as well. In Fig. 3b, the convergence curves of all models are gathered and compared. Note that, the curves of the BSA-ANN, EWA-ANN, SOSA-ANN, SSA-ANN, and WDO-ANN belong to the SoPs of 400, 200, 200, 400, and 400, respectively. As is seen, there is a distinction between the final RMSE of the EWA-ANN and SOSA-ANN with others. Also, the RMSE of the SBO-ANN is below the benchmarks.

Knowing that optimization algorithms have a stochastic behavior, multiple runs are performed for each of the above conditions to ensure the repeatability of the results. Figure 3b, the RMSEs corresponding the initial solutions of the BSA, EWA, SOSA, SSA, WDO, and SBO were 8813.5833, 10,156.1479, 186,630.4071, 8056.0601, 12,194.4660, and 38,763.7112 which were minimized by these algorithms down to 1554.9111, 6408.0760, 4653.5890, 1233.5169, 1247.4574, 934.1530, respectively. These reductions show a nice optimization competency for all used algorithms concerning the problem at hand.

These results show that the efforts of the SBO algorithm have been more productive relative to other algorithms. This superiority is professed by higher accuracy of training (i.e., lower error). To prove this, the outputs of the training data are compared to the observed Pus. Figure 4 illustrates this comparison in the form of regression charts. At a glance, the prediction of all six models is in very good agreement with expectations. However, the points of the BSA-ANN, SSA-ANN, and WDO-ANN are more aggregated than EWA-ANN and SOSA-ANN. The R values are obtained 0.99485, 0.90565, 0.95233, 0.99663, and 0.99655 for the BSA-ANN, EWA-ANN, SOSA-ANN, SSA-ANN, and WDO-ANN, respectively. As for the SBO-ANN, with the R-value of 0.99817, it outperformed all mentioned models.

The above comparison is indicated by other accuracy indicators, too. The RMSEs of the BSA-ANN, EWA-ANN, SOSA-ANN, SSA-ANN, WDO-ANN, and SBO-ANN were 1554.91, 6408.07, 4653.58, 1233.51, 1247.45, and 934.15, respectively (Fig. 4b). These values reflect the high quality of training carried out by the metaheuristic algorithms. The MAEs and the corresponding MAPEs were 1137.59 and 4.1591%, 5056.13 and 19.9943%, 3652.30 and 16.0975%, 965.20 and 3.7931%, 947.07 and 3.4434%, and 669.75 and 2.5060%. As these values imply, the training process is associated with tolerable and small errors. A low level of error means that the algorithms have nicely understood the neural relationship (between the Pu of CCFSTC and the L, D, t, fy, fu, and fc’) and have tuned the network parameters accordingly.

Testing performance

As explained, the networks were initially derived from the information of 154 CCFSTCs in the training phase. This data was used to assess the efficiency of the models in dealing with unseen column conditions. In this process, when metaheuristic algorithms provide a calculation pattern for the ANNs, it should be demonstrated that this pattern can be applied to new problems.

Figure 5 shows the regression charts of the testing data. Based on the R values of 0.99485, 0.91217, 0.95068, 0.99519, 0.99522, and 0.99802, all testing products show an excellent (> 91%) goodness-of-fit. Similar to Fig. 4, the points of the EWA-ANN and SOSA-ANN are more scattered compared to other models.

For further evaluation, Fig. 6 depicts the difference between the observed Pus and the pattern predicted by each model. The overall trend of the points is nicely estimated by all lines. No significant misleading has occurred and it shows that the neural-metaheuristic models can bear abrupt changes. Thus, the used models are competent enough to predict the Pu by taking the inputs. However, in compliance with previous results, the lines pertaining to the BSA-ANN, SSA-ANN, WDO-ANN, and SBO-ANN show a higher consistency with the observed values. Also, the magnified sections indicate that the smallest underestimating and overestimating cases (i.e., errors) are observed for the SBO-ANN line. Moreover, the RMSEs of 1507.82, 5906.41, 4559.30, 1418.51, 1406.62, and 927.09, as well as the MAEs of 1186.11, 4556.12, 3614.79, 1119.15, 1047.78, and 625.36 indicate that the prediction errors are at a tolerable level. It can be also revealed by the MAPEs of 4.3821, 17.4724, 15.7898, 4.2317, 3.6884, and 2.3082%.

Comparative assessment

The idea of evaluating some benchmark methods is a well-known way of demonstrating the efficiency of a new method. In this work, the performance of the proposed SBO was compared with five capable metaheuristic techniques, namely the BSA, EWA, SOSA, SSA, and WDO. All results manifested that the SBO is superior to the benchmarks in terms of all accuracy indicators. For example, the smallest relative error (i.e., MAPE) in both training and testing phases were obtained by the SBO to be 2.5060 and 2.3082%, respectively.

For a better evaluation, a scoring system is developed among the models to compare their accuracies. According to earlier literature, using scoring systems is a popular approach for comparison of machine learning models88. In this regard, for each accuracy indicator, a score is designated to each model with respect to its rank so that the higher the accuracy, the larger the score. In this research, there are 6 models, and accordingly, the scores may vary from 1 to 6. As an example, the EWA-ANN had the highest RMSE and lowest R; hence, its score is 1 for both accuracy criteria. In contrast, the SBO-ANN had the highest R and lowest RMSE; hence, its score is 6 for both accuracy criteria. For each model, an overall score is calculated (as the summation of all obtained scores) to make the final judgment of ranking in each phase.

The results are shown in Table 3. Apart from the SBO which grasped the largest overall score = 24 in both phases, the SSA and WDO have a close competition for the second position. Their overall scores = 18 in the training phase, while the WDO gave a better testing performance (with overall scores of 20 vs. 16). The BSA emerged as the fourth accurate model, followed by the SOSA and EWA (with respective overall scores of 12, 8, and 4 in both phases).

Moreover, Fig. 7 plots the Taylor Diagrams for graphical comparison. In this figure, the points are positioned with respect to their standard deviation and correlation coefficients simultaneously. The point of the target data is black and its position should be compared to the points of the used models. As is seen, the red plus sign which corresponds to the SBO-ANN model is the nearest to the Target point in both training and testing phases, followed by the points of the BSA-ANN, WDO-ANN, and SSA-ANN. After that, there is a considerable gap between the mentioned points and those of the SOSA-ANN and EWA-ANN; demonstrating poorer predictions for these two models. Altogether, the comparison shown in Fig. 7 is in agreement with Table 3; both declaring the SBO-ANN as the outstanding model of the study.

For further comparison, Fig. 8 depicts the boxplots of the target and predicted Pus. Visual interpretation of this figure confirms the comparison results in Fig. 7 and Table 3, because the results of the SBO-ANN are closest to the target values (in terms of minimum, mean, maximum, and median values).

An explicit formula

This section is concerned with presenting a neural formula that can predict the Pu. All hybrid models used in this work had the same structure of the neural network (i.e., 6 × 5 × 1) as shown in Fig. 9. The difference was their computational weights and biases that were tuned by various metaheuristic algorithms. It was decided to present the formula of the SBO-ANN as it provided a more accurate solution.

In order to extract the formula of a three-layered ANN, two equations should be created (see Fig. 9):

-

1.

One large equation that accounts for the computations in the middle layer as given in Eq. 9:

$$ \left[ Q \right] = \frac{2}{{1 + e^{{ - 2\left( {\left[ {IW} \right] . \left[ {Input} \right]} \right) + \left[ {b1} \right])}} }} - 1,\quad (i = 1,2, \ldots ,5) $$(9) -

2.

Another equation that accounts for the computations in the output layer; releasing the final Pu as given in Eq. 10:

$$ P_{u} = \left[ {LW} \right] \cdot \left[ Q \right] + \left[ {b2} \right], $$(10)in which \([Q]\) is the outcome of the middle layer which is the input of the output layer. Also, [Input] is the vector of inputs, [IW] is the vector of weights between the input and hidden neurons, [b1] is the vector of biases of the hidden neurons, [LW] is the vector of weights between the output and hidden neurons, and [b2] is the bias of the output neuron; as introduced below:

$$\left[Input\right]=\left[\begin{array}{c}D\\ L\\ t\\ {f}_{y}\\ {f}_{u}\\ {f}_{c}{\prime}\end{array}\right],$$(11)$$\left[IW\right]= \left[\begin{array}{cccccc}0.7456& -0.9534& -0.8111& -0.8484& 0.3690& 0.6106\\ 0.8780& -0.4792& 1.0182& -0.1693& -1.0045& 0.5260\\ -0.8427& 0.1059& 1.0328& 0.9392& 0.7887& -0.2435\\ 0.9189& 1.0170& -0.0325& 0.6495& 0.9647& 0.3456\\ 0.2949& 1.0359& 0.6691& 1.0239& 0.3983& -0.7327\end{array}\right],$$(12)$$\left[b1\right]=\left[\begin{array}{c}-1.8307\\ -0.9154\\ 0.0000\\ 0.9154\\ 1.8307\end{array}\right],$$(13)$$LW=\left[\begin{array}{ccccc}0.4121& -0.9363& -0.4462& -0.9077& -0.8057\end{array}\right],$$(14)$$b2= \left[\begin{array}{c}0.6469\end{array}\right],$$(15)

Discussion, limitations, and future work

As is known, preventing computational drawbacks such as overfitting and local minima is of great importance in machine learning implementations. In this work, this issue was taken under control using powerful optimization algorithms that employ specific strategies to keep their solution safe from computational weaknesses. Therefore, it can be said that the used ANNs have not experienced overfitting and local minima problems.

In comparison with solutions that were suggested in earlier studies, it can be said that the proposed SBO-ANN achieved significant improvements. In a study by Zheng et al.89, three optimization algorithms of equilibrium optimization (EO)90, grey wolf optimization (GWO)91, and Harris hawk optimizer (HHO)92 were combined with ANFIS93 for the Pu prediction. Likewise, two ANNs were optimized by Hu et al.94 using social ski-driver (SSD)95 and future search algorithm (FSA)96. Table 4 compares the RMSE, MAPE, MAE, and R values of these models with the SBO-ANN. According to these results, the accuracy of the SBO-ANN model is higher than all five benchmarks, due to lower error values (RMSE, MAPE, MAE) and higher R values in both training and phases.

Referring to Figs. 4 and 5, one may argue that while all models achieve a reliable R (> 0.90), there are notable differences between the obtained values. For instance, REWA-ANN = 0.90565 vs. RSBO-ANN = 0.99817 in the training phase and REWA-ANN = 0.91217 vs. RSBO-ANN = 0.99802 in the testing phase. Since all models have been trained and tested using the same datasets, the reason behind these differences must be sought in the optimization ability of the used algorithms (see Fig. 3). On the other hand, based on Table 3, it should be noted that there is a consistency between the training and testing performance of the models; as the model with the strongest training yielded the best testing quality and vice versa.

In machine learning applications, it is essential to understand the significance of the used input factors. Statistical analysis is commonly used for this purpose to see which input factors have the greatest effect on the prediction of a given target parameter (here Pu). In this work, principal component analysis (PCA)97 is used to establish an importance assessment method. In the PCA method, after analyzing the dataset:

-

1.

The primary outcomes are several components each having an eigenvalue. As a well-accepted threshold, eigenvalue = 1 is used to determine which components are considered principal (if eigenvalue > 1). In this work, among the six created components, two of them reached an eigenvalue > 1. These two components are called PC1 and PC2 which together account for nearly 60.30% of variation in data.

-

2.

PC1 and PC2 are then analyzed to identify the most significant inputs. Each input factor in these PCs is attributed to a loading factor. In case the loading factor is > 0.75 (or < -0.75), the input is considered significant98. Figure 10 shows the results, according to which, fy and fu in PC1 along with D in PC2 satisfy this condition.

Considering the limitations of this study, a number of ideas can be raised for future efforts as follows:

-

1.

Replacing the used metaheuristic algorithms with newer members of this family and comparing the results toward improving the obtained solution.

-

2.

Exposing the models to external datasets in order to extend their generalizability.

-

3.

Taking advantage of the PCA results in order to train the models using the most important input factors and compare them with the models trained by the original dataset.

-

4.

Developing a graphical user interface (GUI) from the suggested models.

Conclusions

This paper offered a novel hybrid algorithm for approximating the axial compression capacity of concrete-filled steel tube columns. To this end, an ANN was properly supervised by the satin bowerbird optimizer to analyze the dependency of the Pu on several input parameters. To achieve the optimum configuration of the model, the best population size of the SBO was determined. The goodness of the training results reflected a high learning accuracy of the suggested model (e.g., MAPE = 2.5060). This model could also predict the Pu for unseen samples with low error (e.g., MAPE = 2.3082). In both phases, the SBO-ANN surpassed five other metaheuristic ensembles, namely BSA-ANN, EWA-ANN, SOSA-ANN, SSA-ANN, and WDA-ANN. In addition, the proposed model presented more accurate results compared to several methods from the literature. Moreover, the results of principal component analysis revealed that fy, fu, and D are the most important parameters on the Pu. Altogether, the findings of this research can be practically used for optimizing the CFSTC design. Finally, an explicit formula was derived from the developed model which can predict the Pu without the need for computer-aided software. Regarding the limitations, some ideas were suggested for future efforts toward optimizing the model and data leading to better solutions.

Data availability

The data analysed during this study are taken from an earlier study Ref.70 and are publicly available in the mentioned paper.

References

Fu, C., Yuan, H., Xu, H., Zhang, H. & Shen, L. TMSO-Net: Texture adaptive multi-scale observation for light field image depth estimation. J. Vis. Commun. Image Represent. 90, 103731 (2023).

Li, T., Shi, H., Bai, X., Zhang, K. & Bin, G. Early performance degradation of ceramic bearings by a twin-driven model. Mech. Syst. Signal Process. 204, 110826 (2023).

Huang, H., Yao, Y. & Zhang, W. A push-out test on partially encased composite column with different positions of shear studs. Eng. Struct. 289, 116343 (2023).

Liu, C. et al. The role of TBM asymmetric tail-grouting on surface settlement in coarse-grained soils of urban area: Field tests and FEA modelling. Tunn. Undergr. Space Technol. 111, 103857 (2021).

Pang, B. et al. Inner superhydrophobic materials based on waste fly ash: Microstructural morphology of microetching effects. Compos. B Eng. 268, 111089 (2024).

He, H., Wang, S., Shen, W. & Zhang, W. The influence of pipe-jacking tunneling on deformation of existing tunnels in soft soils and the effectiveness of protection measures. Transp. Geotech. 42, 101061 (2023).

Li, Z. et al. Ternary cementless composite based on red mud, ultra-fine fly ash, and GGBS: Synergistic utilization and geopolymerization mechanism. Case Stud. Constr. Mater. 19, e02410 (2023).

Liu, W., Liang, J. & Xu, T. Tunnelling-induced ground deformation subjected to the behavior of tail grouting materials. Tunn. Undergr. Space Technol. 140, 105253 (2023).

Zhang, J. & Zhang, C. Using viscoelastic materials to mitigate earthquake-induced pounding between adjacent frames with unequal height considering soil-structure interactions. Soil Dyn. Earthq. Eng. 172, 107988 (2023).

Yao, Y., Zhou, L., Huang, H., Chen, Z. & Ye, Y. Cyclic performance of novel composite beam-to-column connections with reduced beam section fuse elements. Structures 50, 842–858 (2023).

Sun, G., Kong, G., Liu, H. & Amenuvor, A. C. Vibration velocity of X-section cast-in-place concrete (XCC) pile–raft foundation model for a ballastless track. Can. Geotech. J. 54(9), 1340–1345 (2017).

Shu, Z. et al. Reinforced moment-resisting glulam bolted connection with coupled long steel rod with screwheads for modern timber frame structures. Earthq. Eng. Struct. Dyn. 52(4), 845–864 (2023).

Sun, L., Yang, Z., Jin, Q. & Yan, W. Effect of axial compression ratio on seismic behavior of GFRP reinforced concrete columns. Int. J. Struct. Stability Dyn. 20(06), 2040004 (2020).

Abedini, M.; Zhang, C., Performance assessment of concrete and steel material models in LS-DYNA for enhanced numerical simulation, a state of the art review. Arch. Comput. Methods Eng. (2020).

Ju, Y., Shen, T. & Wang, D. Bonding behavior between reactive powder concrete and normal strength concrete. Constr. Build. Mater. 242, 118024 (2020).

Zhang, W., Kang, S., Lin, B. & Huang, Y. Mixed-mode debonding in CFRP-to-steel fiber-reinforced concrete joints. J. Compos. Constr. 28(1), 04023069 (2024).

He, H. et al. Employing novel N-doped graphene quantum dots to improve chloride binding of cement. Constr. Build. Mater. 401, 132944 (2023).

Liang, F., Wang, R., Pang, Q. & Hu, Z. Design and optimization of press slider with steel-aluminum composite bionic sandwich structure for energy saving. J. Clean. Prod. 428, 139341 (2023).

Huang, H., Yao, Y., Liang, C. & Ye, Y. Experimental study on cyclic performance of steel-hollow core partially encased composite spliced frame beam. Soil Dyn. Earthq. Eng. 163, 107499 (2022).

Li, H., Yang, Y., Wang, X. & Tang, H. Effects of the position and chloride-induced corrosion of strand on bonding behavior between the steel strand and concrete. Structures 38, 105500 (2023).

Zhang, X., Liu, X., Zhang, S., Wang, J., Fu, L., Yang, J. & Huang, Y., Analysis on displacement‐based seismic design method of recycled aggregate concrete‐filled square steel tube frame structures. Struct. Concrete (2023).

Zhang, X. et al. Experimental and numerical analysis of seismic behaviour for recycled aggregate concrete filled circular steel tube frames. Comput. Concrete 31(6), 537 (2023).

Shakouri Mahmoudabadi, N. et al. Effects of eccentric loading on performance of concrete columns reinforced with glass fiber-reinforced polymer bars. Sci. Rep. 14(1), 1890 (2024).

Varma, A. H., Ricles, J. M., Sause, R. & Lu, L.-W. Experimental behavior of high strength square concrete-filled steel tube beam-columns. J. Struct. Eng. 128(3), 309–318 (2002).

Morino, S. & Tsuda, K. Design and construction of concrete-filled steel tube column system in Japan. Earthq. Eng. Eng. Seismol. 4(1), 51–73 (2003).

Wang, X., Li, L., Xiang, Y., Wu, Y. & Wei, M. The influence of basalt fiber on the mechanical performance of concrete-filled steel tube short columns under axial compression. Front. Mater. 10, 1332269 (2024).

Schneider, S. P. Axially loaded concrete-filled steel tubes. J. Struct. Eng. 124(10), 1125–1138 (1998).

Shen, Z.-Y., Lei, M., Li, Y.-Q., Lin, Z.-Y. & Luo, J.-H. Experimental study on seismic behavior of concrete-filled L-shaped steel tube columns. Adv. Struct. Eng. 16(7), 1235–1247 (2013).

Li, N., Lu, Y.-Y., Li, S. & Liang, H.-J. Statistical-based evaluation of design codes for circular concrete-filled steel tube columns. Steel Compos. Struct. 18(2), 519–546 (2015).

Baig, M. N., Fan, J. & Nie, J. Strength of concrete filled steel tubular columns. Tsinghua Sci. Technol. 11(6), 657–666 (2006).

Dundu, M. Compressive strength of circular concrete filled steel tube columns. Thin-Walled Struct. 56, 62–70 (2012).

Nguyen, M.-S.T., Thai, D.-K. & Kim, S.-E. Predicting the axial compressive capacity of circular concrete filled steel tube columns using an artificial neural network. Steel Compos. Struct. 35(3), 415–437 (2020).

Vu, Q.-V., Truong, V.-H. & Thai, H.-T. Machine learning-based prediction of CFST columns using gradient tree boosting algorithm. Compos. Struct. 259, 113505 (2021).

Shi, M.-L., Lv, L. & Xu, L. A multi-fidelity surrogate model based on extreme support vector regression: Fusing different fidelity data for engineering design. Eng. Comput. 40(2), 473–493 (2023).

Ly, H.-B., Pham, B. T., Le, L. M., Le, T.-T., Le, V. M. & Asteris, P. G. Estimation of axial load-carrying capacity of concrete-filled steel tubes using surrogate models. Neural Comput. Appl. 1–22 (2020)

Su, Y. et al. End-to-end deep learning model for underground utilities localization using GPR. Autom. Constr. 149, 104776 (2023).

Es-haghi, M. S., Rezania, M. & Bagheri, M. Machine learning-based estimation of soil’s true air-entry value from GSD curves. Gondwana Res. 123, 280–292 (2023).

Sadegh Es-haghi, M., Abbaspour, M., Abbasianjahromi, H. & Mariani, S. Machine learning-based prediction of the seismic bearing capacity of a shallow strip footing over a void in heterogeneous soils. Algorithms 14(10), 288 (2021).

Ghasemi, A. & Naser, M. Tailoring 3D printed concrete through explainable artificial intelligence. Structures 56, 104850 (2023).

Le, T.-T. Practical hybrid machine learning approach for estimation of ultimate load of elliptical concrete-filled steel tubular columns under axial loading. Adv. Civil Eng. 2020 (2020).

Ahmadi, M., Naderpour, H. & Kheyroddin, A. ANN model for predicting the compressive strength of circular steel-confined concrete. Int. J. Civil Eng. 15(2), 213–221 (2017).

Tran, V.-L., Thai, D.-K. & Kim, S.-E. A new empirical formula for prediction of the axial compression capacity of CCFT columns. Steel Compos. Struct. 33(2), 181–194 (2019).

Tran, V.-L., Thai, D.-K. & Kim, S.-E. Application of ANN in predicting ACC of SCFST column. Compos. Struct. 228, 111332 (2019).

Nour, A. I. & Güneyisi, E. M. Prediction model on compressive strength of recycled aggregate concrete filled steel tube columns. Compos. Part B Eng. 173, 106938 (2019).

Naser, M., Thai, S. & Thai, H.-T. Evaluating structural response of concrete-filled steel tubular columns through machine learning. J. Build. Eng. 34, 101888 (2021).

Harandizadeh, H., Toufigh, M. M. & Toufigh, V. Application of improved ANFIS approaches to estimate bearing capacity of piles. Soft Comput. 23(19), 9537–9549 (2019).

Chaudhuri, P. & Maity, D. Cost optimization of rectangular RC footing using GA and UPSO. Soft Comput. 24(2), 709–721 (2020).

Long, X., Mao, M.-H., Su, T.-X., Su, Y.-T. & Tian, M.-K. Machine learning method to predict dynamic compressive response of concrete-like material at high strain rates. Defence Technol. 23, 100–111 (2023).

Es-Haghi, M. S., Shishegaran, A. & Rabczuk, T. Evaluation of a novel Asymmetric Genetic Algorithm to optimize the structural design of 3D regular and irregular steel frames. Front. Struct. Civil Eng. 14, 1110–1130 (2020).

Barkhordari, M. & Es-Haghi, M. Straightforward prediction for responses of the concrete shear wall buildings subject to ground motions using machine learning algorithms. Int. J. Eng. 34(7), 1586–1601 (2021).

Es-haghi, M. S. & Sarcheshmehpour, M. (2021) A novel strategy for tall building optimization via combination of asymmetric genetic algorithm and machine learning methods.

Yu, C., Chen, M., Cheng, K., Zhao, X., Ma, C., Kuang, F., & Chen, H. SGOA: Annealing-behaved grasshopper optimizer for global tasks. Eng. Comput. 1–28.

Shan, W. et al. Double adaptive weights for stabilization of moth flame optimizer: Balance analysis, engineering cases, and medical diagnosis. Knowl.-Based Syst. 214, 106728 (2021).

Es-Haghi, M. S., Salehi, A. & Strauss, A. Enhanced teacher-learning based algorithm in real size structural optimization. J. Civil Eng. Manag. 28(4), 292–304 (2022).

Mai, S. H., Seghier, M. E. A. B., Nguyen, P. L., Jafari-Asl, J. & Thai, D.-K. A hybrid model for predicting the axial compression capacity of square concrete-filled steel tubular columns. Eng. Comput. 38, 1–18 (2020).

Ren, Q., Li, M., Zhang, M., Shen, Y. & Si, W. Prediction of ultimate axial capacity of square concrete-filled steel tubular short columns using a hybrid intelligent algorithm. Appl. Sci. 9(14), 2802 (2019).

Hanoon, A. N., Al Zand, A. W. & Yaseen, Z. M. Designing new hybrid artificial intelligence model for CFST beam flexural performance prediction. Eng. Comput. 38, 1–27 (2021).

Ngo, N.-T. & Le, H. A. Integration of support vector regression and grey wolf optimization for estimating the ultimate bearing capacity in concrete-filled steel tube columns. Neural Comput. Appl. 33, 1–18 (2021).

Sarir, P. et al. Optimum model for bearing capacity of concrete-steel columns with AI technology via incorporating the algorithms of IWO and ABC. Eng. Comput. 37, 1–11 (2019).

Luat, N.-V., Shin, J. & Lee, K. Hybrid BART-based models optimized by nature-inspired metaheuristics to predict ultimate axial capacity of CCFST columns. Eng. Comput. 38, 1–30 (2020).

Duong, H. T., Phan, H. C., Le, T.-T. & Bui, N. D. Optimization design of rectangular concrete-filled steel tube short columns with Balancing Composite Motion Optimization and data-driven model. Structures 28, 757–765 (2020).

Moayedi, H., Kalantar, B., Foong, L. K., Tien Bui, D. & Motevalli, A. Application of three metaheuristic techniques in simulation of concrete slump. Appl. Sci. 9(20), 4340 (2019).

Liu, C. et al. Computational estimation of the earthquake response for fibre reinforced concrete rectangular columns. Steel Compos. Struct. 34(5), 743–767 (2020).

Moosavi, S. H. S. & Bardsiri, V. K. Satin bowerbird optimizer: A new optimization algorithm to optimize ANFIS for software development effort estimation. Eng. Appl. Artif. Intell. 60, 1–15 (2017).

Civicioglu, P. Backtracking search optimization algorithm for numerical optimization problems. Appl. Math. Comput. 219(15), 8121–8144 (2013).

Wang, G.-G., Deb, S. & dos Santos Coelho, L. Earthworm optimisation algorithm: A bio-inspired metaheuristic algorithm for global optimisation problems. IJBIC 12(1), 1–22 (2018).

James, J. & Li, V. O. A social spider algorithm for global optimization. Appl. Soft Comput. 30, 614–627 (2015).

Mirjalili, S. et al. Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 114, 163–191 (2017).

Bayraktar, Z., Komurcu, M. & Werner, D. H. Wind Driven Optimization (WDO): A novel nature-inspired optimization algorithm and its application to electromagnetics. In 2010 IEEE Antennas and Propagation Society International Symposium, 1–4. IEEE (2010).

Tran, V.-L., Thai, D.-K. & Nguyen, D.-D. Practical artificial neural network tool for predicting the axial compression capacity of circular concrete-filled steel tube columns with ultra-high-strength concrete. Thin-Walled Struct. 151, 106720 (2020).

Nair, R. P., & Kanakasabapathy, P., Satin bower bird algorithm for controller parameter optimization in an autonomous AC microgrid. In Advances in Smart Grid Technology, 21–30. Springer (2020).

Chellamani, G. K. & Chandramani, P. V. An optimized methodical energy management system for residential consumers considering price-driven demand response using satin bowerbird optimization. J. Electr. Eng. Technol. 15(2), 955–967 (2020).

Moayedi, H. & Mosavi, A. Electrical power prediction through a combination of multilayer perceptron with water cycle ant lion and satin bowerbird searching optimizers. Sustainability 13(4), 2336 (2021).

Mostafa, M. A., Abdou, A. F., Abd El-Gawad, A. F. & El-Kholy, E. SBO-based selective harmonic elimination for nine levels asymmetrical cascaded H-bridge multilevel inverter. Aust. J. Electr. Electron. Eng. 15(3), 131–143 (2018).

Chintam, J. R. & Daniel, M. Real-power rescheduling of generators for congestion management using a novel satin bowerbird optimization algorithm. Energies 11(1), 183 (2018).

Chen, W., Chen, X., Peng, J., Panahi, M. & Lee, S. Landslide susceptibility modeling based on ANFIS with teaching-learning-based optimization and Satin bowerbird optimizer. Geosci. Front. 12(1), 93–107 (2021).

Wu, D., Foong, L. K. & Lyu, Z. Two neural-metaheuristic techniques based on vortex search and backtracking search algorithms for predicting the heating load of residential buildings. Eng. Comput. 38, 1–14 (2020).

Fadel, W., Kilic, U. & Ayan, K. Optimal reactive power flow of power systems with two-terminal HVDC and multi distributed generations using backtracking search algorithm. Int. J. Electr. Power Energy Syst. 127, 106667 (2021).

Rad, M. H. & Abdolrazzagh-Nezhad, M. A new hybridization of DBSCAN and fuzzy earthworm optimization algorithm for data cube clustering. Soft Comput. 24(20), 15529–15549 (2020).

Ghosh, I.; Roy, P. K. Application of earthworm optimization algorithm for solution of optimal power flow. In 2019 International Conference on Opto-Electronics and Applied Optics (Optronix), 1–6. IEEE (2019).

James, J. & Li, V. O. A social spider algorithm for solving the non-convex economic load dispatch problem. Neurocomputing 171, 955–965 (2016).

El-Bages, M. & Elsayed, W. Social spider algorithm for solving the transmission expansion planning problem. Electric Power Syst. Res. 143, 235–243 (2017).

Guo, Z., Moayedi, H., Foong, L. K. & Bahiraei, M. Optimal modification of heating, ventilation, and air conditioning system performances in residential buildings using the integration of metaheuristic optimization and neural computing. Energy Build. 214, 109866 (2020).

Moayedi, H. et al. Hybridizing four wise neural-metaheuristic paradigms in predicting soil shear strength. Measurement 156, 107576 (2020).

Moayedi, H., Bui, D. T. & Thi Ngo, P. T. Shuffled frog leaping algorithm and wind-driven optimization technique modified with multilayer perceptron. Appl. Sci. 10(2), 689 (2020).

Bayraktar, Z., Komurcu, M., Bossard, J. A. & Werner, D. H. The wind driven optimization technique and its application in electromagnetics. IEEE Trans. Antennas Propag. 61(5), 2745–2757 (2013).

Moayedi, H., Mehrabi, M., Mosallanezhad, M., Rashid, A. S. A. & Pradhan, B. Modification of landslide susceptibility mapping using optimized PSO-ANN technique. Eng. Comput. 38, 1–18 (2018).

Sun, Y., Dai, H.-L., Moayedi, H., Le, B. N. & Adnan, R. M. Predicting steady-state biogas production from waste using advanced machine learning-metaheuristic approaches. Fuel 355, 129493 (2024).

Zheng, Y. et al. Analyzing behavior of circular concrete-filled steel tube column using improved fuzzy models. Steel Compos. Struct. 43(5), 625 (2022).

Faramarzi, A., Heidarinejad, M., Stephens, B. & Mirjalili, S. Equilibrium optimizer: A novel optimization algorithm. Knowl.-Based Syst. 191, 105190 (2020).

Mirjalili, S., Mirjalili, S. M. & Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 69, 46–61 (2014).

Heidari, A. A. et al. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 97, 849–872 (2019).

Jang, J.-S. ANFIS: Adaptive-network-based fuzzy inference system. IEEE Trans. Syst. Man Cybernet. 23(3), 665–685 (1993).

Hu, P., Aghajanirefah, H., Anvari, A. & Nehdi, M. L. Combining artificial neural network and seeker optimization algorithm for predicting compression capacity of concrete-filled steel tube columns. Buildings 13(2), 391 (2023).

Tharwat, A. & Gabel, T. Parameters optimization of support vector machines for imbalanced data using social ski driver algorithm. Neural Comput. Appl. 32, 6925–6938 (2020).

Elsisi, M. Future search algorithm for optimization. Evolut. Intell. 12(1), 21–31 (2019).

Abdi, H. & Williams, L. J. Principal component analysis. Wiley Interdiscipl. Rev. Comput. Stat. 2(4), 433–459 (2010).

Xu, T. et al. An innovative machine learning based on feed-forward artificial neural network and equilibrium optimization for predicting solar irradiance. Sci. Rep. 14(1), 2170 (2024).

Author information

Authors and Affiliations

Contributions

All authors wrote the main manuscript text. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liu, Y., Liang, Y. Satin bowerbird optimizer-neural network for approximating the capacity of CFST columns under compression. Sci Rep 14, 8342 (2024). https://doi.org/10.1038/s41598-024-58756-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-024-58756-7

Keywords

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.