Abstract

Flue-cured tobacco grading plays a crucial role in tobacco leaf purchasing and in the formulation of tobacco leaf groups. However, flue-cured tobacco is traditionally graded manually, which is time-consuming, laborious, and subjective. It is therefore essential to develop more efficient and intelligent flue-cured tobacco grading methods. Most existing methods lose accuracy as the number of grades increases. Meanwhile, because of industry-specific restrictions, flue-cured tobacco datasets are rarely publicly available, and existing methods rely on relatively small, low-resolution tobacco datasets that are difficult to apply in practice. To address the insufficient feature extraction ability of existing models and their poor adaptability to many flue-cured tobacco grades, we collected what is, to our knowledge, the largest and highest-resolution flue-cured tobacco dataset and propose an efficient flue-cured tobacco grading method based on a densely connected convolutional network (DenseNet). Unlike other approaches, our method uses a convolutional connectivity pattern that concatenates preceding tobacco feature maps: every layer is connected directly to all subsequent layers, so tobacco features are passed forward without being overwritten. This design extracts deep tobacco image features more effectively and transmits each layer's output, thereby reducing information loss and encouraging feature reuse. We then designed the complete data pre-processing pipeline and evaluated both traditional machine learning and deep learning algorithms to verify the usability of our dataset. The experimental results showed that DenseNet could be easily adapted by changing the output of its fully connected layer. With an accuracy of 0.997, significantly higher than that of other intelligent tobacco grading methods, DenseNet was the best model for our flue-cured tobacco grading problem.


Introduction

As the primary raw material of the cigarette industry, flue-cured tobacco leaves affect the quality of tobacco formula products. Improving the economic value of flue-cured tobacco is therefore vital to meeting the development needs of the cigarette industry. The grade assigned to flue-cured tobacco reflects the quality of the leaf, which ultimately determines its purchase price. Moreover, different grades of flue-cured tobacco leaves differ in chemical composition, and different compositions release different amounts of harmful substances (such as nicotine or carbon monoxide) when burned. Grading therefore affects both the sensory evaluation quality and the smoke indices that are closely related to smokers' health1, which motivates more precise grading methods. Consequently, flue-cured tobacco grading is significant both for the national economy and for smokers' health1.

Realizing intelligent flue-cured tobacco grading is a complicated task. In China, flue-cured tobacco leaves are divided into 42 grades according to seven factors: maturity, leaf structure, body, oil, color intensity, length, and waste. Traditional grading relies mainly on human senses (eyes and hands) to judge the appearance quality of tobacco leaves, which has two disadvantages. First, training qualified graders takes a long time and consumes considerable time, money, resources, and labor. Second, classification accuracy is low because of human subjectivity and inconsistency.

These problems seriously hinder tobacco leaf purchasing and cigarette production. With the rapid development of artificial intelligence (AI), AI technology has been applied in agriculture2,3,4,5, traffic engineering6, and industry7,8. In agriculture, for instance, feature enhancement and DMS-Robust AlexNet were used to identify maize leaf diseases9, ABCK-BWTR and B-ARNet were combined to identify tomato leaf diseases10, and ref. 11 used CASM-AMFMNet to classify grape leaf diseases.

Intelligent tobacco grading is therefore likely to shape the future development of the tobacco industry. Research on intelligent tobacco grading has grown significantly and falls mainly into three categories:

(1) The first approach uses infrared12 and hyperspectral techniques combined with chemometrics to build classification models for rapid, nondestructive tobacco classification13,14,15. However, near-infrared equipment is expensive, and the scanned spectra are sensitive to environmental conditions (such as temperature and humidity), which makes classification results inaccurate.

(2) The second approach establishes decision rules based on fuzzy mathematics and chemical composition16,17. For example, in ref. 16, the classification accuracy was about 0.94 for the trained tobacco leaves but only about 0.72 for non-trained tobacco leaves. Such methods have limited accuracy because of their complex, repeated reasoning.

(3) The third approach applies computer vision with machine learning algorithms18,19,20,21,22, including traditional machine learning methods (e.g. support vector machines (SVMs) and random forests (RFs))23, deep learning methods19,24, and neural networks25. Because of tobacco's important economic status, the industry has accumulated a large amount of tobacco data during production, and making full use of these data has become a key direction for the intelligent development of the tobacco industry. Deep learning can learn the rich internal patterns of data and interpret the information they contain26, and convolutional neural networks (CNNs) have strong feature extraction ability27. Deep learning trained on large amounts of labeled data is therefore a crucial technology for tobacco grading. Among deep learning methods, deep CNNs have become the most important technology for image recognition28,29,30,31. Building on effective CNN architectures such as Highway Networks32, Residual Networks (ResNets)33, AlexNet28, and VGG31, many researchers have done pioneering tobacco grading work with variant structures34,35. For instance, ref. 19 fine-tuned a VGG16 network for tobacco grading; in ref. 25, a CNN classifier was used to grade tobacco leaves with an accuracy of 0.9625; and ref. 36 proposed FDANet for flue-cured tobacco grading.

Although the methods above have been recognized in previous work, their accuracy is still insufficient for practical application. Moreover, their accuracy decreases as the number of classes increases. We attribute this to the insufficient feature extraction ability of deep convolutional neural networks that use the traditional layer connection mode.

Motivated by the large-scale flue-cured tobacco grade data accumulated by the tobacco industry, we propose an intelligent tobacco grading method based on DenseNet to overcome these problems. Specifically, to extract tobacco grade characteristics in depth, we use DenseNet37 as the backbone. Because DenseNet concatenates preceding tobacco feature maps, its connection mode is better suited to extracting fine-grained visual information about tobacco leaves. The proposed method enables the deep model to thoroughly learn the sample distribution of each grade, thereby unlocking the full potential of deep learning.

Moreover, using this high-resolution, expert-labeled data, we verified that DenseNet outperforms other deep CNN structures on our tobacco grading dataset, improving the accuracy of tobacco grading.

In summary, our contributions are as follows:

(1) We collected and curated a large amount of high-resolution, highly usable flue-cured tobacco grade data that can support follow-up work. We also aim to publish the dataset at the enterprise level to boost industrial development.

(2) We pre-processed the data using affine transformation, normalization, exponential moving average, and other operations, providing smoother data for better training. We then designed experiments on our dataset based on DenseNet.

(3) We evaluated various classical methods on our flue-cured tobacco grade data, and the competitive experimental results demonstrated the high performance of DenseNet.

Our work can significantly reduce manual labor and provide theoretical and technical support for intelligent tobacco leaf grading in the tobacco industry. Applying deep learning to tobacco grading may also benefit other fields such as agriculture and botany.

The remainder of this paper is structured as follows: “Methods” Section introduces the process of our method in detail. In “Experiments and results” Section, the experimental setup is given and the related results are discussed. Finally, “Conclusions” Section provides a summary of this paper as well as the scope of future work.

Methods

In this section, we first introduce data acquisition, including experimental instruments and image acquisition method, and then propose the detailed pre-processing process for our dataset. Finally, we illustrate the idea of intelligent flue-cured tobacco grading based on DenseNet. Figure 1 shows the framework of our method.

As Fig. 1 shows, the DenseNet-based tobacco grading process includes a training phase and a test phase. In the training phase, the data pass through pre-processing, feature extraction, classification, and prediction. In the test phase, we first froze the parameters of the DenseNet model obtained in training; the test images were then pre-processed and passed through the entire DenseNet model, including feature extraction and classification. Finally, the model output the prediction results.

Data acquisition

To collect tobacco images, we used a CMOS BV-C5400 line-scan industrial camera with a BV-L1024 lens. Table 1 shows the detailed configuration of our equipment, and Fig. 2 illustrates the tobacco data acquisition pipeline.

As Fig. 2 shows, data acquisition consists of image acquisition and label acquisition. Specifically, the tobacco samples were first unfolded and laid flat on the conveyor belt, and the images were collected while the belt was running. Industry experts then labeled the actual grade of each tobacco sample according to GB 2635–1992, the Chinese standard for flue-cured tobacco grading.

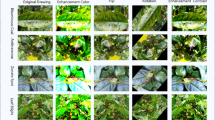

We chose the 20 most widely used of the 42 flue-cured grades: B1F, B1K, B2F, B2K, B2V, B3F, B4F, C1F, C1L, C2F, C2L, C3F, C3L, C3V, C4F, CX1K, CX2K, X1F, X2F, and X3F. Because these grades are the most commonly encountered, they cover most practical flue-cured tobacco grading needs. We selected 21,113 representative tobacco images of these 20 grades to form our experimental dataset. Figure 3 shows some samples from the dataset.

Our dataset is a significant improvement in both quantity and quality over other datasets. It has been applied in a cigarette factory, where it has improved grading efficiency.

Data pre-processing

We divided the dataset into a training set and a test set in the ratio 8:2. Table 2 details the sample division.
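The text does not state how the 8:2 split was drawn. A minimal sketch, assuming the split is stratified by grade so that each grade keeps roughly the same 8:2 ratio; the function name `stratified_split`, the file names, and the fixed seed are all hypothetical.

```python
import random
from collections import defaultdict

def stratified_split(samples, train_ratio=0.8, seed=0):
    """Split (image_path, grade) pairs into training and test sets grade by grade.

    Splitting within each grade keeps the 8:2 ratio approximately per class,
    instead of only over the dataset as a whole.
    """
    by_grade = defaultdict(list)
    for path, grade in samples:
        by_grade[grade].append((path, grade))

    rng = random.Random(seed)  # fixed seed gives a reproducible split
    train, test = [], []
    for grade in sorted(by_grade):
        items = by_grade[grade]
        rng.shuffle(items)
        cut = round(len(items) * train_ratio)
        train.extend(items[:cut])
        test.extend(items[cut:])
    return train, test

# Hypothetical file names; the real dataset is not public.
samples = ([(f"B1F_{i:03d}.png", "B1F") for i in range(10)]
           + [(f"C3F_{i:03d}.png", "C3F") for i in range(5)])
train, test = stratified_split(samples)
print(len(train), len(test))  # 12 3
```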

To make the original data more suitable for neural network processing and fully extract features, the original data must undergo pre-processing operations, including rotation, translation, normalization, and others before model training. Figure 4 shows the image pre-processing process, and Fig. 5 visualizes some tobacco leaf images after preprocessing.

In our experiments, the original images (2456 × 2058 pixels) were scaled to 500 × 250 as the actual network input for better training. We then applied an affine transformation, random horizontal rotation, vertical flipping, and conversion to grayscale. We used Cross-Entropy (CE) as the loss function; it measures the distance between the expected and actual output probability distributions, so the smaller the CE value, the closer the two distributions are. Assuming that \(p(x)\) is the expected output distribution and \(q(x)\) is the actual output distribution, the Cross-Entropy loss is given by Eq. (1):

\[ H(p, q) = -\sum_{x} p(x)\log q(x) \tag{1} \]
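Eq. (1) can be checked numerically in plain Python. A minimal sketch, assuming \(p\) is a one-hot target over the grades and \(q\) a predicted probability vector; the helper name `cross_entropy` and the small `eps` clamp are illustrative choices, not details from the paper.

```python
import math

def cross_entropy(p, q, eps=1e-12):
    """H(p, q) = -sum_x p(x) * log q(x), as in Eq. (1).

    p: expected (target) distribution, e.g. one-hot over the grades.
    q: predicted distribution, e.g. the softmax output of the network.
    eps clamps q away from 0 so log() is always defined.
    """
    return -sum(pi * math.log(max(qi, eps)) for pi, qi in zip(p, q))

# Toy 3-grade example in which the true grade is index 0.
p = [1.0, 0.0, 0.0]
print(cross_entropy(p, [0.7, 0.2, 0.1]))    # -ln 0.7, about 0.3567
print(cross_entropy(p, [0.9, 0.05, 0.05]))  # -ln 0.9, about 0.1054
```

With a one-hot target the loss reduces to \(-\log q\) of the true grade, so it shrinks as the model assigns more probability to the correct grade. In PyTorch, `torch.nn.CrossEntropyLoss` computes the same quantity directly from raw logits and integer class labels.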

Normalization is required to facilitate subsequent data processing and to speed up convergence during training.
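The grayscale, vertical-flip, and normalization steps can be sketched on a tiny image stored as nested lists. This is a hedged illustration: the ITU-R BT.601 luma weights (0.299, 0.587, 0.114) and the mean and standard deviation of 0.5 are assumptions, since the actual normalization values in Table 4 are not reproduced here, and the helper names are hypothetical.

```python
def to_grayscale(rgb):
    """Convert an H x W grid of (R, G, B) tuples to luma (assumed BT.601 weights)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]

def vertical_flip(img):
    """Flip the image upside down by reversing the row order."""
    return img[::-1]

def normalize(img, mean=0.5, std=0.5):
    """Scale 8-bit values to [0, 1], then standardize as (x - mean) / std.

    mean=0.5 and std=0.5 are placeholder values, not the paper's settings.
    """
    return [[(v / 255.0 - mean) / std for v in row] for row in img]

# 2 x 2 toy image: white, black (top row) / red, blue (bottom row)
rgb = [[(255, 255, 255), (0, 0, 0)],
       [(255, 0, 0), (0, 0, 255)]]
out = normalize(vertical_flip(to_grayscale(rgb)))
print(out)
```

With mean and standard deviation both set to 0.5, pixel values in [0, 1] are mapped to [-1, 1], which keeps inputs centred around zero.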

Exponential moving average (EMA), also called exponentially weighted moving average, is an averaging method that gives more weight to recent data. EMA uses moving-average values to improve the model's robustness on test data. The moving average can be regarded as the mean value of a variable over a recent period. Compared with using each value directly, the moving average is smoother and fluctuates less.

For instance, consider the variables \([\theta_1, \theta_2, \theta_3, \ldots, \theta_n]\), where \(\theta_1, \theta_2, \theta_3, \ldots, \theta_n\) represent the pixel values of tobacco images. The ordinary average is computed as follows:

\[ avr = \frac{1}{n}\sum_{i=1}^{n} \theta_i \tag{2} \]

In Eq. (2), \(avr\) represents the average of the \(n\) variables. In EMA, by contrast, \(avr_k\) is computed as follows:

\[ avr_k = \alpha \cdot avr_{k-1} + (1 - \alpha)\,\theta_k \tag{3} \]

In Eq. (3), \(avr_k\) represents the average of the first \(k\) values, and \(\alpha\) is the weight (generally set between 0.9 and 0.999); we set it to 0.999 in this paper. The resulting \(avr_k\) is used as the input to the neural network.
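Eqs. (2) and (3) can be compared side by side. A minimal sketch, assuming the EMA is initialized with the first value (\(avr_1 = \theta_1\)), which the text does not specify; the function names are hypothetical.

```python
def simple_average(values):
    """Eq. (2): ordinary arithmetic mean of n values."""
    return sum(values) / len(values)

def ema(values, alpha=0.999):
    """Eq. (3): avr_k = alpha * avr_{k-1} + (1 - alpha) * theta_k.

    Returns [avr_1, ..., avr_n]. Starting from avr_1 = theta_1 is an
    assumption; the text does not specify the initial value.
    """
    averages = []
    avr = None
    for theta in values:
        avr = theta if avr is None else alpha * avr + (1 - alpha) * theta
        averages.append(avr)
    return averages

values = [1.0, 2.0, 3.0]
print(simple_average(values))  # 2.0
print(ema(values, alpha=0.5))  # [1.0, 1.5, 2.25]
```

With \(\alpha = 0.999\), as used in this paper, each new value contributes only 0.1% to the running average, which is why the EMA changes slowly and smooths out fluctuations.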

Deep convolutional neural network

Convolutional neural network

DenseNet is a convolutional neural network; Fig. 6 illustrates the convolution process. After passing through multiple convolution and pooling layers, the images are transformed into informative feature maps, and a fully connected layer combined with a Softmax layer then produces the grade prediction.
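The convolution, pooling, fully connected, and Softmax stages can be illustrated with a toy single-channel example in plain Python. This sketch is not DenseNet itself: the 5 × 5 input, the 2 × 2 all-ones filter, and the fully connected weights `W` for three grades are hypothetical values chosen only to make the data flow visible.

```python
import math

def conv2d(img, kernel):
    """'Valid' 2-D convolution with stride 1 (cross-correlation, as in CNN layers)."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(img), len(img[0])
    return [[sum(img[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(w - kw + 1)]
            for i in range(h - kh + 1)]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling over size x size windows."""
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]

def softmax(logits):
    """Map raw scores to probabilities; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

img = [[5 * i + j for j in range(5)] for i in range(5)]  # toy 5 x 5 input
kernel = [[1, 1], [1, 1]]                                # toy 2 x 2 filter
pooled = max_pool(conv2d(img, kernel))                   # 4 x 4 map -> 2 x 2
features = [v for row in pooled for v in row]            # flatten for the FC layer
W = [[0.01, 0, 0, 0], [0, 0, 0, 0.01], [0, 0, 0, 0]]     # hypothetical weights, 3 grades
logits = [sum(w * x for w, x in zip(row, features)) for row in W]
probs = softmax(logits)
print(pooled)                   # [[36, 44], [76, 84]]
print(probs.index(max(probs)))  # 1
```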

Flue-cured tobacco feature extraction based on DenseNet

The traditional connection mode of a deep convolutional neural network combines preceding feature maps by summation when passing them into a layer. This mode can cause vanishing gradients and poor reuse of feature data, which in turn limits the ability to extract features that distinguish different grades of tobacco leaves. In contrast, DenseNet combines feature maps by concatenation. Whereas a traditional convolutional network with \(L\) layers has \(L\) connections (one between each layer and its subsequent layer), DenseNet has \(\frac{L(L+1)}{2}\) direct connections; a detailed derivation is given in ref. 37. As a result, DenseNet-based flue-cured tobacco feature extraction alleviates the vanishing-gradient problem, encourages feature reuse, and substantially strengthens feature extraction. We therefore use DenseNet as the backbone to extract tobacco leaf features and automatically classify tobacco leaves.

The DenseNet connection works as follows. Let \(X_0\) be the input tobacco leaf image, let \(X_l\) be the output of layer \(l\), and let the network have \(L\) layers in total. Each layer applies a nonlinear transformation \(F_l(\cdot)\), where \(l\) is the layer index; \(F_l(\cdot)\) can be a composite of operations such as Batch Normalization (BN), rectified linear units (ReLU), pooling, or convolution (Conv).

In a traditional deep convolutional neural network, the output of the \(l\)th layer is the input of the \((l+1)\)th layer. The transition between the \((l-1)\)th and \(l\)th layers is given by Eq. (4), so the \(l\)th layer receives the output of only one preceding layer:

\[ X_l = F_l(X_{l-1}) \tag{4} \]

This information flow may impede data transmission through the network. DenseNet instead uses a different connectivity pattern: it connects each layer directly to all subsequent layers. Figure 7 illustrates this connection pattern and the resulting information flow.

As Fig. 7 shows, the \(l\)th layer receives the feature maps of all preceding layers. This connection mode extracts features better and transmits each layer's features more fully, reducing the loss of feature information. The feature transmission of DenseNet is given by Eq. (5):

\[ X_l = F_l([X_0, X_1, \ldots, X_{l-1}]) \tag{5} \]

In Eq. (5), \([X_0, X_1, \ldots, X_{l-1}]\) denotes the concatenation of the feature maps produced in layers \(0\) to \(l-1\). Because the \(l\)th layer receives \(l\) inputs, a network of \(L\) layers has \(1 + 2 + \cdots + L = \frac{L(L+1)}{2}\) connections. Compared with other deep convolutional neural networks, this feature transmission mode allows DenseNet to better extract deep image features.
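Eqs. (4) and (5) can be contrasted with a toy model in which each feature map is a flat list of channel values and each layer \(F_l\) is a hypothetical function `toy_layer` that emits two new channels (a growth rate of 2). This is a sketch of the connectivity pattern only, not of DenseNet's convolutional layers; it also counts the direct connections to check the \(L(L+1)/2\) formula.

```python
def plain_forward(x0, layers):
    """Traditional chain, Eq. (4): X_l = F_l(X_{l-1})."""
    x = x0
    for f in layers:
        x = f(x)
    return x

def dense_forward(x0, layers):
    """Dense connectivity, Eq. (5): X_l = F_l([X_0, X_1, ..., X_{l-1}]).

    Returns the concatenation of all feature maps and the number of direct
    connections, counted as one per (earlier output, layer) pair.
    """
    features = [x0]
    connections = 0
    for f in layers:
        connections += len(features)  # layer l reads X_0 .. X_{l-1}
        concat = [v for fmap in features for v in fmap]  # channel-wise concatenation
        features.append(f(concat))
    return [v for fmap in features for v in fmap], connections

def toy_layer(inputs):
    """Hypothetical F_l producing 2 new channels (growth rate k = 2)."""
    return [sum(inputs) / len(inputs), max(inputs)]

x0 = [1.0, 2.0, 3.0]  # 3 input channels
L = 4
out, n_conn = dense_forward(x0, [toy_layer] * L)
print(len(out), n_conn, L * (L + 1) // 2)  # 11 10 10
print(plain_forward(x0, [toy_layer] * L))  # [2.875, 3.0]
```

For \(L = 4\), the dense version reuses every earlier output through \(1 + 2 + 3 + 4 = 10\) connections, while the plain chain only ever sees the previous layer's output. Because the channel count grows linearly with depth, the original DenseNet keeps the growth rate small and inserts transition layers between dense blocks.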

Experiments and results

In this section, we first illustrate our experiment environment and experimental hyperparameter settings and then introduce our experiments and the results.

Experiments

Experiment settings

Our experiments were run with Python 3.8 and PyTorch 1.8 on a Tesla V100 GPU. We first carried out flue-cured tobacco grading experiments with traditional machine learning methods (SVM, RF, KNN, LightGBM, and XGBoost). Table 3 shows the grid-search hyperparameter ranges and the optimal parameters of these traditional methods for the 20 grades.
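The grid search over traditional models can be sketched for KNN, one of the methods listed above. A minimal, hypothetical example: a plain-Python KNN tuned over the number of neighbours `k` and the Minkowski order `p` on toy 2-D points; the grid values and data are illustrative and do not come from Table 3.

```python
from collections import Counter
from itertools import product

def knn_predict(train_x, train_y, x, k, p):
    """Majority vote of the k nearest training samples under Minkowski-p distance."""
    dists = sorted(
        (sum(abs(a - b) ** p for a, b in zip(tx, x)) ** (1 / p), ty)
        for tx, ty in zip(train_x, train_y)
    )
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]

def grid_search(train, val, grid):
    """Try every (k, p) combination and keep the best validation accuracy."""
    (train_x, train_y), (val_x, val_y) = train, val
    best_params, best_acc = None, -1.0
    for k, p in product(grid["k"], grid["p"]):
        preds = [knn_predict(train_x, train_y, x, k, p) for x in val_x]
        acc = sum(pr == y for pr, y in zip(preds, val_y)) / len(val_y)
        if acc > best_acc:  # strict '>' keeps the first best combination
            best_params, best_acc = {"k": k, "p": p}, acc
    return best_params, best_acc

# Toy 2-D features (hypothetical): two grade clusters plus one mislabeled point.
train = ([(0, 0), (0, 1), (1, 0), (0.5, 0.5), (5, 5), (5, 6), (6, 5)],
         [0, 0, 0, 1, 1, 1, 1])
val = ([(0.4, 0.6), (5.5, 5.5)], [0, 1])
best, acc = grid_search(train, val, {"k": [1, 3], "p": [1, 2]})
print(best, acc)  # {'k': 3, 'p': 1} 1.0
```

Here `k = 1` is fooled by the mislabeled point, while `k = 3` outvotes it. In practice, a library routine such as scikit-learn's `GridSearchCV` runs the same loop with cross-validation.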

We then used DenseNet and other deep models to verify the validity of the dataset. Table 4 lists the hyperparameter settings for the deep models. In deep learning, hyperparameters are parameters that must be set manually before training; they are not learned from the training data. Their selection and fine-tuning strongly affect both the performance and the training process of the model. Specifically, hyperparameters can control model complexity; for instance, the numbers in Table 5 denote DenseNet structures of different depths. Hyperparameters also affect the training process and convergence rate. For example, the learning rate determines the step size of each parameter update, and a learning rate that is too high or too low may cause unstable training or slow convergence. In addition, hyperparameter settings can help prevent overfitting. For example, the normalization parameters in Table 4 are common hyperparameters that play an important role in data pre-processing: they improve feature comparability and weight balance, accelerate convergence, prevent numerical overflow, and enhance generalization.
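The effect of the learning rate can be seen on a one-dimensional toy loss. A hedged sketch, not the paper's training setup: plain gradient descent on the hypothetical loss \(f(w) = (w - 3)^2\), run for 50 steps with three learning rates.

```python
def gradient_descent(lr, w0=0.0, steps=50):
    """Minimize the toy loss f(w) = (w - 3)**2 with plain gradient descent.

    Each update is w <- w - lr * f'(w) with f'(w) = 2 * (w - 3), so the
    learning rate scales the size of every step.
    """
    w = w0
    for _ in range(steps):
        w -= lr * 2 * (w - 3)
    return w

for lr in (0.001, 0.1, 1.1):
    print(f"lr={lr}: w after 50 steps = {gradient_descent(lr):.4f}")
```

Each step multiplies the distance to the optimum by \(1 - 2\eta\), where \(\eta\) is the learning rate, so \(\eta = 0.001\) barely moves after 50 steps, \(\eta = 0.1\) converges close to 3, and \(\eta = 1.1\) overshoots further on every step and diverges.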

However, selecting and tuning hyperparameters is an iterative and time-consuming process that must take the specific problem, dataset, and model into account. Moreover, the optimal hyperparameter values are not fixed and may depend on the task.

In Table 4, items 1–9 are the image pre-processing settings. Pre-processing aims to eliminate irrelevant information, recover useful information, enhance the detectability of relevant information, and simplify the data as much as possible, so that the data better fit the deep model structures and feature extraction and recognition become more reliable. Items 10–13 are the model training settings. All values were determined through repeated experiments, experience, and observation, and they gave the best results we obtained in our experimental environment.

Evaluation metrics

The evaluation metrics are computed by Eqs. (6)–(9). For each class, the class of interest is treated as positive (label 0) and all other classes as negative (label 1). TP (true positive) means the true label is 0 and the prediction is 0; FN (false negative) means the true label is 0 and the prediction is 1; FP (false positive) means the true label is 1 and the prediction is 0; and TN (true negative) means the true label is 1 and the prediction is 1.

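① Accuracy

Equation (6) gives the overall Accuracy of the classification model over all classes, where \(N\) is the total number of samples and \(TP_c\) is the number of correctly classified samples of class \(c\):

\[ \text{Accuracy} = \frac{1}{N}\sum_{c} TP_c \tag{6} \]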

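② Precision

Precision is the fraction of samples predicted as positive that are truly positive; it reflects the model's ability to avoid labeling negative samples as positive. The higher the Precision, the stronger this ability. Precision is computed by Eq. (7):

\[ \text{Precision} = \frac{TP}{TP + FP} \tag{7} \]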

③ Recall

By contrast, Recall is the fraction of truly positive samples that the model identifies; it reflects the model's ability to recognize positive samples. The higher the Recall, the stronger this ability. Recall is calculated by Eq. (8):

\[ \text{Recall} = \frac{TP}{TP + FN} \tag{8} \]

④ F1-Score

The F1-Score combines Precision and Recall as their harmonic mean. It ranges from 0 to 1, where 1 is the best and 0 the worst; a higher F1-Score indicates a better balance between Precision and Recall. The F1-Score is computed by Eq. (9):

\[ F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{9} \]

Results and analysis

We first used accuracy to compare the different methods. Figure 8 shows the accuracy of the traditional methods for different numbers of tobacco grades. The accuracy of traditional machine learning methods (SVM, RF, KNN, LightGBM, and XGBoost) decreases markedly as the number of tobacco grade categories increases, so these methods are not suitable for tobacco grading when the number of grades is large. In contrast, the accuracy of DenseNet is significantly higher and more stable. Table 5 shows the performance of flue-cured tobacco grading based on different DenseNet structures on the test dataset.

As shown in Table 5, the grading results of the different DenseNet structures differ only slightly, and all exceed 0.995. Figure 9 shows the training loss of DenseNet169.

To verify the grading accuracy of DenseNet, we compared it with other methods, including traditional machine learning and deep learning methods; the results are given in Tables 6 and 7, where “Accuracy without preprocessing” denotes results obtained without preprocessing. Tables 6 and 7 show that our preprocessing has a positive effect on the final result, improving accuracy to some extent. Moreover, the final accuracy of DenseNet-based tobacco classification reached 0.997, significantly higher than that of the other methods. We attribute this to DenseNet's ability to find subtler and more general features across tobacco grades and to extract more individual features of each leaf.

Tables 6 and 7 also show that deep learning methods outperform traditional machine learning methods. In addition, as the number of network layers increases, convergence is faster and grading performance improves.

To demonstrate the advantages of our method from different perspectives, we used additional evaluation metrics: precision, recall, and F1-score. Table 8 shows that DenseNet-based tobacco grading still significantly outperforms the other methods.

These results suggest that DenseNet has learned the image features of the different flue-cured tobacco grades almost completely, so it can accurately identify each grade.

Discussion

Although our method outperforms other tobacco grading approaches, it has limitations. First, it requires a large amount of labeled data, and obtaining enough labeled data is laborious and time-consuming, and sometimes almost impossible because of privacy, safety, or ethical issues. Data scarcity therefore poses a significant challenge to building a good model. Second, deep neural networks demand substantial hardware and computation time, which makes the model harder to deploy. Nonetheless, we are confident that with the right resources and expertise, our method can contribute to tobacco grading.

Conclusions

This paper used DenseNet to fully extract flue-cured tobacco image information and achieved intelligent tobacco grading with high accuracy. Compared with traditional intelligent tobacco leaf classification methods such as SVM, RF, and KNN, as well as other deep learning models, our method significantly improved grading efficiency and stability.

Our research provides a promising solution for large-scale flue-cured tobacco grading, with both theoretical and practical implications. Theoretically, it enriches the existing body of tobacco grading research and offers new insights into its fundamental methods and models. It also deepens the understanding of how the connection mode of deep neural networks relates to model performance, which can guide subsequent theoretical research; for example, when tobacco leaf grading accuracy is unsatisfactory, one can try adding more samples or changing the network's connection mode. Practically, tobacco enterprises can use our method to develop new grading tools that improve the efficiency and effectiveness of their work. It can also, to some extent, promote the standardization and unified development of tobacco leaf purchasing, ultimately saving costs and improving enterprises' economic benefits. In addition, it may provide new ideas for other fields, such as agriculture, the planting industry, and medicine, and promote the practical adoption of artificial intelligence technology.

In future work, we plan to investigate other advanced tobacco grading methods and study more lightweight networks to reduce training time while balancing accuracy and computational cost. We will also promote practical applications of our method in tobacco enterprises, such as an online intelligent grading system, to improve efficiency. Furthermore, we will try to apply our algorithm to other tasks such as medical image recognition and agricultural disease detection.

Data availability

The datasets generated and/or analysed during the current study are not publicly available because the raw data are currently private, but they are available from the corresponding author on reasonable request.

References

Han, F. G. Tobacco Chemistry 92–101 (China Agriculture Press, 2010).

Rehman, T. U., Mahmud, M. S., Chang, Y. K., Jin, J. & Shin, J. Current and future applications of statistical machine learning algorithms for agricultural machine vision systems. Comput. Electron. Agric. 156, 585–605 (2019).

Kok, Z. H., Shariff, A. R. M., Alfatni, M. S. M. & Khairunniza-Bejo, S. Support vector machine in precision agriculture: A review. Comput. Electron. Agric. 191, 106546 (2021).

Li, Z., Guo, R., Li, M., Chen, Y. & Li, G. A review of computer vision technologies for plant phenotyping. Comput. Electron. Agric. 176, 105672 (2020).

Wang, Y. & Qin, L. Research on state prediction method of tobacco curing process based on model fusion. J. Ambient Intell. Humaniz. Comput. https://doi.org/10.1007/s12652-021-03205-w (2021).

Liang, G. et al. Semantics-aware dynamic graph convolutional network for traffic flow forecasting. IEEE Trans. Veh. Technol. https://doi.org/10.1109/TVT.2023.3239054 (2023).

Jay, L., Xiang, L., Yuan-Ming, X., Shaojie, Y. & Ke-Yi, S. Recent advances and prospects in industrial AI and applications. Acta Autom. Sin. 46, 2031–2044 (2020).

Tian-You, C. Development directions of industrial artificial intelligence. Acta Autom. Sin. 46, 2005–2012 (2020).

Lv, M. et al. Maize leaf disease identification based on feature enhancement and DMS-robust AlexNet. IEEE Access 8, 57952–57966 (2020).

Chen, X. et al. Identification of tomato leaf diseases based on combination of ABCK-BWTR and B-ARNet. Comput. Electron. Agric. 178, 105730 (2020).

Suo, J. et al. CASM-AMFMNet: A network based on coordinate attention shuffle mechanism and asymmetric multi-scale fusion module for classification of grape leaf diseases. Front. Plant Sci. 13, 846767 (2022).

Qin, Y., Liu, X., Zhang, F., Shan, Q. & Zhang, M. Improved deep residual shrinkage network on near infrared spectroscopy for tobacco qualitative analysis. Infrared Phys. Technol. 129, 104575 (2023).

Jun, B. et al. Automatic grading of flue-cured tobacco leaves based on NIR technology and extreme learning machine algorithm. Acta Tabacaria Sin. 23, 60–68 (2017).

Sahu, A. & Dante, H. Non-destructive rapid quality control method for tobacco grading using visible near-infrared hyperspectral imaging. in Image Sensing Technologies: Materials, Devices, Systems, and Applications V vol. 10656 1065603 (International Society for Optics and Photonics, 2018).

Li, R. et al. Nondestructive and rapid grading of tobacco leaves by use of a hand-held near-infrared spectrometer, based on a particle swarm optimization-extreme learning machine algorithm. Spectrosc. Lett. 53, 685–691 (2020).

Zhang, F. & Zhang, X. Classification and quality evaluation of tobacco leaves based on image processing and fuzzy comprehensive evaluation. Sensors 11, 2369–2384 (2011).

Tan, X. et al. Intelligent grading of flue-cured tobacco leaves based on rough set theory. Nongye Jixie Xuebao Trans. Chin. Soc. Agric. Mach. 40, 169–174 (2009).

Luo, H. & Zhang, C. Features representation for flue-cured tobacco grading based on transfer learning to hard sample. in 2018 14th IEEE International Conference on Signal Processing (ICSP) 591–595 (IEEE, 2018).

Li, G. et al. Research on tobacco leaf grading algorithm based on transfer learning. in 2021 IEEE International Conference on Artificial Intelligence and Computer Applications (ICAICA) 32–35 (2021). https://doi.org/10.1109/ICAICA52286.2021.9497953.

Su, H. Data research on tobacco leaf image collection based on computer vision sensor. J. Sens. 2021, 1–11 (2021).

Leming, Z., Jinyuan, S., Jianjun, L. & Runjie, L. Application of probabilistic neural network in tobacco automatical grading. J. Agric. Mech. Res. 12, 32–35 (2011).

Harjoko, A. et al. Image processing approach for grading tobacco leaf based on color and quality. Int. J. Smart Sens. Intell. Syst. 12, 1–10 (2019).

Zhang, X. et al. Research on the effect of chemical component on tobacco grading. in International Conference on Applied Human Factors and Ergonomics 318–324 (Springer, 2020).

Setiawan, W. & Purnama, A. Tobacco leaf images clustering using DarkNet19 and K-means. in 2020 6th Information Technology International Seminar (ITIS) 269–273 (IEEE, 2020).

Marzan, C. S. & Ruiz, C. R. Automated tobacco grading using image processing techniques and a convolutional neural network. Int. J. Mach. Learn. Comput. 9, 807–813 (2019).

Ning, X. et al. Hyper-sausage coverage function neuron model and learning algorithm for image classification. Patt. Recogn. 136, 109216 (2023).

Wang, C. et al. Learning discriminative features by covering local geometric space for point cloud analysis. IEEE Trans. Geosci. Rem. Sens. 60, 1–15 (2022).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. ImageNet classification with deep convolutional neural networks. Commun. ACM 60, 84–90 (2017).

LeCun, Y., Bottou, L., Bengio, Y. & Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 86, 2278–2324 (1998).

Srivastava, R. K., Greff, K. & Schmidhuber, J. Training very deep networks. arXiv preprint http://arxiv.org/abs/1507.06228 (2015).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv preprint http://arxiv.org/abs/1409.1556 (2014).

Srivastava, R. K., Greff, K. & Schmidhuber, J. Highway networks. arXiv preprint http://arxiv.org/abs/1505.00387 (2015).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 770–778 (2016).

Lu, M., Jiang, S., Wang, C., Chen, D. & Chen, T. Tobacco leaf grading based on deep convolutional neural networks and machine vision. J. ASABE 65, 11–22 (2022).

Odabas, M. S., Şenyer, N. & Kurt, D. Determination of quality grade of tobacco leaf by image processing on correlated color temperature. Concurr. Comput.: Pract. Exp. 35(2), e7506 (2023).

Chen, D. et al. Feature-reinforced dual-encoder aggregation network for flue-cured tobacco grading. Available at SSRN 4355545.

Huang, G., Liu, Z., Van Der Maaten, L. & Weinberger, K. Q. Densely connected convolutional networks. in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 4700–4708 (2017).

Bhatia, N. et al. Survey of nearest neighbor techniques. arXiv preprint http://arxiv.org/abs/1007.0085 (2010).

Breiman, L. Random forests. Mach. Learn. 45, 5–32 (2001).

Ke, G. et al. LightGBM: A highly efficient gradient boosting decision tree. Adv. Neural Inf. Process. Syst. 30 (2017).

Chen, T. & Guestrin, C. XGBoost: A scalable tree boosting system. in Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 785–794 (2016).

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A. & Chen, L.-C. MobileNetV2: Inverted residuals and linear bottlenecks. in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 4510–4520 (2018).

Author information


Contributions

X.W.X conceived the paper, designed and implemented the experiments, provided strategic support, and wrote the main manuscript; H.L.G acquired the tobacco dataset and performed the statistical analysis; R.T.H provided strategic support and reviewed the manuscript; X.Q.D provided strategic support; S.P.P implemented the experiments and provided strategic support; Y.C. implemented the experiments.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xin, X., Gong, H., Hu, R. et al. Intelligent large-scale flue-cured tobacco grading based on deep densely convolutional network. Sci Rep 13, 11119 (2023). https://doi.org/10.1038/s41598-023-38334-z


DOI: https://doi.org/10.1038/s41598-023-38334-z
