Abstract

Climate change is expected to have impacts on the balance of global food trade networks and food security. Thus, seasonal forecasts of precipitation and temperature are an essential tool for stakeholders to make timely choices regarding the strategies required to maximize their expected cereal yield outcomes. The availability of state-of-the-art seasonal forecasts such as the European Centre for Medium-Range Weather Forecasts (ECMWF) system 5 (SEAS5) may be an asset to help decision making. However, uncertainties and reduced skill may hamper the use of seasonal forecasts in several applications. Hence, in this work, we aim to understand the added value of such dynamical forecasts when compared to persistent anomalies of climate conditions used to predict the production of wheat and barley yields. With that in mind, empirical models relating annual wheat and barley yields in Spain to monthly values of precipitation and temperature are developed by taking advantage of ECMWF ERA5 reanalysis. Then, dynamical and persistence forecasts are issued at different lead times, and the skill of the subsequent forecasted yield is verified through probabilistic metrics. The results presented in this study demonstrate two different outcomes: (1) wheat and barley yield anomaly forecasts (dynamical and persistent) start to gain skill later in the season (typically from April onwards); and (2) the added value of using the SEAS5 forecast as an alternative to persistence ranges from 6 to 16%, with better results in the southern Spanish regions.

Similar content being viewed by others

Introduction

Wheat and barley crops are paramount to food supply chains at global and national levels. Indeed, these cereals are part of the diet and the nutritional basis of humans and animals1. Rainfed winter wheat and barley are vital crops in Spain, representing approximately 23 and 31% of the total crop area in Spain in 2019, i.e., more than half of the total cereal cultivation area of the country (https://www.mapa.gob.es). Furthermore, rainfed winter wheat and barley in Spain represent about 8 and 25%, respectively, of the total EU-27 areas dedicated to these crops, being the main producer of barley and the fifth largest producer of wheat in the EU-27 (EUROSTAT, 2020). Thus, the importance of steady and consistent production of these cereals every year is of critical importance to stakeholders (such as farmers), countries’ economies, and the European supply of wheat and barley. Recent studies show how climate-related impacts on European crop production have tripled over the last five decades2, representing a present risk for food security. Increasing crop shortfalls induced by weather disasters are also expected at the global level3,4. If poor harvest occurs across multiple regions simultaneously, the global food system may be heavily compromised5,6, particularly in a changing climate.

To make farsighted strategic choices, stakeholders need to have timely, accurate, and reliable knowledge of crop yield production at their disposal7. Considering the importance of meteorological conditions to cereal production, seasonal forecasts of meteorologic variables such as precipitation and temperature have a key role8. Several works have studied the use of different seasonal forecasts on crop yields located in different regions of the world9,10,11,12,13,14,15,16 and have adopted different methodologies to estimate crop yields, ranging from crop models forced with seasonal forecasts17 to empirical models based on simple regressions15,18 or more advanced statistical methods such as machine learning19.

Since 2017, the latest generation of the European Centre for Medium-Range Weather Forecasts (ECMWF) seasonal forecasting system version 5 (SEAS5) has become operational, providing an ensemble of the evolution of the coupled earth system (atmosphere, land, ocean) with lead times up to 6 months. Such seasonal forecasts could be an essential tool for stakeholders to make timely, conscious, and proactive choices regarding the actions necessary to maximize the production of wheat and barley yields by choosing ideal management strategies. However, the quality of dynamic forecasts pose a significant hurdle for farmers and other stakeholders to use them20. Indeed, forecasts with longer lead times are generally less accurate than those with shorter ones, and precipitation forecasts are less accurate than temperature forecasts20. Hence, it is crucial to evaluate the added value of such dynamical forecasts when compared with simpler approaches, such as assuming forecasts based on persistent climate conditions, i.e., assuming that anomalies persist in the following months. The aim of this study is to compare cereal yield production (specifically wheat and barley in Spain) using linear regression models fed with dynamical and persistence forecasts, and to compare the skill achieved by both. To accomplish this, the following two steps are proposed:

-

1.

Model Building: To select and calibrate linear regression models to estimate wheat and barley by using ERA5 monthly accumulated precipitation and minimum and maximum temperatures over the cereals’ growing season. This first step is based on prior publications by the authors and is only adapted here to the proposed database of ERA518,21.

-

2.

Forecast: Dynamic (SEAS5) and persistency forecasts of these variables are fed into the linear regression models to obtain forecasted wheat and barley yield estimates. The skill of both forecasts of these yields was compared, taking the yield estimated with ERA5 as the “benchmark”. This aims to understand if using seasonal forecasts of the predictors is a better strategy than relying on the persistent climate (which relies on the assumption that the climate anomaly of the previous month will be the climate anomaly of the next months). This second step, which constitutes the main goal of this study, aims at analysing the added value of using dynamical seasonal forecasts to predict cereal yields and informing end-users (e.g., farmer) and forecast developers on the usefulness of such datasets in the regions of study for the cereals in question.

Data and methods

Region of study and agriculture data

Two different regions located in Spain, encompassing five adjacent provinces each, are chosen as the focus of this study. These provinces were selected because of their large percentages of agricultural land use dominated by rainfed crops, such as wheat and barley (Fig. 1, left panel). The choice of such a collection of provinces is built upon previous works18,21,22, where similar crop systems and regions were analysed. The main rationale for the choice of the provinces builds upon three criteria18: (1) the land use within the province is dominated by agricultural practices, i.e., more than 50% of the pixels at each province correspond to agricultural areas; (2) the agricultural areas are dominated by rainfed crops, i.e., non- irrigated arable land prevails in more than 50% of the agricultural areas; (3) the provinces are adjacent and non-isolated.

Map highlighting selected adjacent provinces in the Iberian Peninsula, called Region 1 and Region 2 (left), and the respective province names and time series of wheat (orange) and barley (blue) standardized yield anomalies for the period 1993–2019 for Region 1 (right, top panel) and for Region 2 (right, bottom panel). The figure was produced in Python 3.8 (https://www.python.org/).

Wheat and barley annual production (ton) and agriculture-specific area (ha) for each of the selected provinces were obtained from the Spanish Agriculture, Food and Environment Ministry (available at https://www.mapa.gob.es). Average production of wheat and barley is of 2.9 and 2.8 ton/ha, respectively, in region 1, and 2.3 ton/ha in region 2, for both cereals. Annual yield anomalies (ton/ha) were then estimated for each individual province (dividing the yearly production of cereals in ton by the area assigned to that crop in ha in the respective province), and the regions’ spatial average was finally obtained. Furthermore, long-term trends associated with nonclimatic factors such as technological progress were removed by linear detrending23,24. Finally, the time series were standardized (Fig. 1, right panel). To simplify notation, let us consider the northernmost region as Region 1 (shown in blue in Fig. 1) and the central/southern Spanish region as Region 2 (orange in Fig. 1).

Annual wheat and barley production datasets are available since 1993 (see subsection 2.3) until the last available report pertaining to 2019.

Precipitation and temperature reanalysis

ERA525 hourly fields of precipitation and 2-m temperature were retrieved from the Copernicus Climate Data Store. These were subsequently converted to (1) monthly accumulated precipitation (hereafter designated by PRERA5, in mm) by accumulating the hourly fields; (2) monthly maximum temperature (hereafter TXERA5, in K) obtained as the monthly mean of daily maximum 2-m temperature; and (3) monthly minimum temperature (hereafter TNERA5, in K) following a similar rationale as in TXERA5 but using daily minimum 2-m temperature.

ERA5 fields are available from 1950 onwards. However, data are truncated to encompass the period starting in January 1993 (see subsection 2.3) and ending in December 2019 (see subsection 2.1). Precipitation and temperature fields were spatially aggregated for each region.

Precipitation and temperature seasonal forecasts

The ECMWF seasonal forecast system SEAS526 is used in this study. Monthly reforecasts (hereafter hindcasts) of total precipitation (m s−1) and maximum and minimum 2-m temperatures (K; hereafter TXS5 and TNS5, respectively) at a spatial resolution of 1° × 1° are available from 1993 until 2016, whereas forecasts are employed from 2017 to 2019. Both hindcasts and forecasts start on the 1st of each month, with a 6-month lead time, and are composed of 25 ensemble members. In this study, a lead-time of 0 indicates the forecast for the issue month (e.g., for the forecasts issued in January, lead-time 0 is respective to the January forecast, and lead-time 5 to the June forecast).

Total precipitation is converted from monthly means to monthly accumulated values by multiplying by the number of days in the month and is converted to mm, hereafter referred to as PRS5. Furthermore, the ensemble mean of the PRS5, TXS5 and TNS5 fields is obtained by applying the arithmetic mean over the 25 members.

Coupled atmosphere–ocean models have systematic biases and drift (lead time-dependent biases)27. To guarantee a similar mean climate between SEAS5 and ERA5, a simple bias correction was applied in the following form:

where \({\text{PR}}_{{{\text{S}}5}}^{^{\prime}}\), \({\text{TX}}_{{{\text{S}}5}}^{^{\prime}}\), and \({\text{TN}}_{{{\text{S}}5}}^{^{\prime}}\) are the corrected seasonal forecasts; \({\upalpha }\) is the correction factor for each of the three variables; and \({\text{m}}\) and \({\text{lt}}\) are the calendar month and lead time, respectively. The correction factors are estimated as:

where \(\overline{{{\text{PR}}}}_{{{\text{ERA}}5}}\), \(\overline{{{\text{TX}}}}_{{{\text{ERA}}5}}\), and \(\overline{{{\text{TN}}}}_{{{\text{ERA}}5}}\) are the multiannual mean of ERA5 precipitation and maximum and minimum temperatures, respectively, for the verification month mv (\({\text{m}}_{{\text{v}}} = {\text{m}} + {\text{lt}}\), circular over 12 months); and \(\overline{{{\text{PR}}}}_{{{\text{S}}5}}\), \(\overline{{{\text{TX}}}}_{{{\text{S}}5}}\), \(\overline{{{\text{TN}}}}_{{{\text{S}}5}}\) are the multiannual and ensemble means of the forecasts.

For persistence forecasts, let us consider that the issue month is March (bearing in mind that the forecast is issued on the 1st day of the month), and the target predictor month is May. In this case, the forecast for May is the known predictor at the date of issue, which in this example is the ERA5 predictor in February (i.e., in March, the known climate anomalies are those from the previous month). The persistence forecast28 is used here as the baseline forecast, i.e., the a priori knowledge that the farmer holds in the field based on empirical observation. An example of the methodology for persistent forecasts may be found on Supplementary Material, Fig. S1. Let’s assume the user wants to know the yield forecast in the issue month M, and the model predictors are in months M-2, M + 2, and M + 3. Predictor in M-2 is in the past and is therefore known at issue month. In this case, the ERA5 value of the predictor is used. However, predictors in months M + 2 and M + 3 are in the future relative to the issue month and are still unknown. For these predictors the persistent anomaly is used, i.e., we assign the anomaly for months M + 2 and M + 3 as being equal to the anomaly that is observed at issue month (thus the term persistence). Since the anomaly of month M is only known at the end of the month (i.e., effectively in the beginning of M + 1), the known predictors when the issue month M starts are those from the previous month M-1. Hence, predictors in months M + 2 and M + 3 are issued with the values of M − 1, i.e., the persistence.

Regression models for cereal yield production

Grid points of ERA5/SEAS5 that overlap Region 1 and Region 2 are selected, accounting for a total of 8 and 10 grid points, respectively (Supplementary Material, Fig. S2). Then, the spatial averages of PRERA5, TXERA5, TNERA5, \({\text{PR}}_{{{\text{S}}5}}^{^{\prime}}\), \({\text{TX}}_{{{\text{S}}5}}^{^{\prime}}\), and \({\text{TN}}_{{{\text{S}}5}}^{^{\prime}}\) are computed for the two regions. With the aim of understanding how well ERA5 and forecasts compare for the three variables used in this study (PR, TX, TN), a month-by-month Pearson’s linear correlation \({\text{R}}\) is computed between time-series of weather variables from: (1) ERA5 and SEAS5 and (2) ERA5 and persistence. Standardized anomalies are computed for all variables by removing the interannual linear trend and dividing by the interannual standard deviation, transforming PRERA5, TXERA5, TNERA5, \({\text{PR}}_{{{\text{S}}5}}^{^{\prime}}\), \({\text{TX}}_{{{\text{S}}5}}^{^{\prime}}\), and \({\text{TN}}_{{{\text{S}}5}}^{^{\prime}}\) into \({\text{PR}}_{{{\text{ERA}}5}}^{{\text{a}}}\), \({\text{TX}}_{{{\text{ERA}}5}}^{{\text{a}}}\), \({\text{TN}}_{{{\text{ERA}}5}}^{{\text{a}}}\), \({\text{PR}}_{{{\text{S}}5}}^{{{a^{\prime}}}}\), \({\text{TX}}_{{{\text{S}}5}}^{{{a^{\prime}}}}\), and \({\text{TN}}_{{{\text{S}}5}}^{{{a^{\prime}}}}\).

The methodology employed here to develop linear models to predict wheat and barley production is similar to that used in Bento et al.22. A forward stepwise regression algorithm was applied to select groups of statistically significant predictors for linear regression models (p-value to choose predictor was 0.05). The pool of predictors is organized from October to June, i.e., the growing season of the cereals, and consists of ERA5 standardized anomalies (\({\text{PR}}_{{{\text{ERA}}5}}^{{\text{a}}}\), \({\text{TX}}_{{{\text{ERA}}5}}^{{\text{a}}}\), \({\text{TN}}_{{{\text{ERA}}5}}^{{\text{a}}}\)), accounting for a total of 27 predictors. Hence, the first year is 1994 (starting in October 1993 and ending in June 1994), and the last is 2019 (staring in October 2018 and ending in June 2019), i.e., 26 growing season periods. Consequently, 4 models are derived, representative of the two cereals and the two regions. With the aim of avoiding overfitting, a leave-one-year-out cross-validation method is used28. The predicted and observed wheat and barley yields, with and without cross-validation, are then compared using Pearson's linear correlation coefficient R, \({\text{R}}^{2}\) adjusted to the number of predictors in the regression model \({\text{R}}_{{{\text{adj}}}}^{2}\), and the mean absolute error (MAE).

Forecasting cereal yield production

The four linear models developed with ERA5 predictors are now used with forecasted predictors, i.e., instead of using ERA5 variables, SEAS5 and persistence forecasts are used (reminding that persistence is actually the ERA5 fields known at the issue date). Let’s assume the user is growing winter wheat in Spain. In April, the user wants to have an idea of how much wheat is expected to harvest in the summer. Thus, he may use dynamical forecasts to estimate predictors from April to summer, or he may assume a persistent climate anomaly (e.g., if the previous months have been dry, then the next months will also be dry). These forecasted climate anomalies are used in the model, which is the one developed with the “observational” datasets (here with ERA5), giving a prediction of winter wheat that could be harvested in summer. This is the reason why we developed the models with ERA5 and then applied the forecasts of predictors to these models. Due to the forecast horizon of 7 months (the first lead time is for the current month, and the last lead time is issued 6 months before the current month), the forecasts may start up to 6 months prior to the later in the season predictor of each model (e.g., if the later predictor is for the month of May, a forecast of yield can be produced starting in November; if the later predictor is in April, the forecasts can start in October). Considering that winter wheat and barley start growing after the winter dormancy, in late winter or early spring (January to March), and that the user would beneficiate of an early warning several months before the harvest (~ July), the forecasts start in January. If, in a given issue month, the predictors’ month is in the past, then the ERA5 values are used (e.g., since the considered starting issue month is January, all predictors in October, November, and December are past months and thus are assigned ERA5 values). This method is used for both SEAS5 and persistence forecasts.

The forecasted wheat and barley anomaly yields (using both SEAS5 and persistence) are then verified by comparison with the observed anomalies. Contingency tables are built to allow for the probabilistic (yes/no) forecast verification of a given event28,29. Here, an event is defined as one year having yield losses if it presents a yield anomaly below zero in that given year. To validate the forecasts, three verification metrics are used: the false alarm rate (FAR), hit rate (H), and Heidke skill score (HSS)28. The HSS measures the fractional improvement of the forecast over the standard forecast, and it is normalized by the total range of possible enhancement over the standard. The range of the HSS is -∞ to 1. Negative values indicate that the chance forecast is better, 0 means no skill, and a perfect forecast obtains a HSS of 1. Furthermore, for each issue month, the distribution of the differences between forecasted and observed yield anomalies is presented in the form of boxplots, and the respective root mean square error (RMSE) is displayed to allow for a quantitative overview of the results. With the objective of quantifying the added value of using dynamical over persistence forecasts, the percent difference (\({\text{P}}_{{{\text{diff}}}}\)) is estimated as:

where \({\text{RMSE}}_{{{\text{S}}5}}\) and \({\text{RMSE}}_{{{\text{per}}}}\) are the RMSE between forecasted (SEAS5 and persistence, respectively) and observed yield anomalies. Negative values of \({\text{P}}_{{{\text{diff}}}}\) indicate the added value (in percentage) of using the dynamical forecasts relative to persistence.

The continuous ranked probability score (CRPS)28 was also estimated to compare yields obtained with the dynamic and persistence forecasts. Here, the full 25-member ensemble of SEAS5 forecasts was compared with the yield observations. It is important to note that although the SEAS5 is a probabilistic forecast, persistence is deterministic. Thus, the CRPS of the persistence is the mean absolute error (MAE).

Ethics declaration

The plant collection and use was in accordance with all the relevant guidelines.

Results

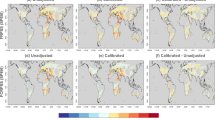

The Pearson correlation between spatially averaged ERA5 and SEAS5 variables (1993–2019) for both regions is shown in Fig. 2 (top). As an example, precipitation in July with lead time 2 is the correlation between the SEAS5 forecast issued in May valid to July and ERA5 July precipitation. Differences between precipitation and temperature correlations are conspicuous. The former shows significant correlations essentially in the first month of forecasting (lead time 0, i.e., the issue month), except in late spring and summer, whereas both maximum and minimum temperatures show more frequent significant correlations, which tend to continue for longer lead times in some months.

Pearson correlation coefficient (R) between monthly time series of PR, TX, and TN (left to right) from ERA5 and SEAS5 (top panels) and ERA5 and persistence (bottom panels). The figure was produced in Python 3.8 (https://www.python.org/).

A similar procedure is adopted for the persistence forecast, as shown in Fig. 2 (bottom). Using the same example as above, precipitation in July with lead time 2 is the correlation between ERA5 July precipitation and ERA5 April precipitation (in this case, the persistence is the value known when the forecast is issued two months in advance, on the 1st of May, i.e., ERA5 precipitation in April).

Then, the linear models for wheat and barley yield prediction are developed through a stepwise regression taking a pool of 27 potential predictors (\({\text{PR}}_{{{\text{ERA}}5}}^{{\text{a}}}\), \({\text{TX}}_{{{\text{ERA}}5}}^{{\text{a}}}\), \({\text{TN}}_{{{\text{ERA}}5}}^{{\text{a}}}\) from October to June). Table 1 shows the resulting four models and the respective metrics with and without cross-validation (cv).

Correlation coefficients between predicted and observed crop yields vary between 0.85 and 0.91 without cross-validation and show small signs of degradation when applying the leave-one out cross-validation scheme, ranging between 0.78 and 0.85. The model applied to barley in Region 2 has more predictors (five) and consequently has a better fit to the observed anomalies. Although the larger the number of predictors is, the \({\text{R}}^{2}\) adjusted to the number of predictors in the regression model \({\text{R}}_{{{\text{adj}}}}^{2}\) continues to be larger in this case, which establishes model robustness. The MAE varies between 0.33 (barley) and 0.42 (wheat) without cross-validation and between 0.44 and 0.52 with cross validation. Wheat and barley in Region 1 retained similar predictors focused into the spring months (March, April, and May), with small variations in the weights attributed. On the other hand, Region 2 (located more towards southern Spain) wheat and barley yield equations present a mix of winter and spring predictors. The only common predictor of the four cereal/region pairs is April precipitation, always with a positive contribution, i.e., favourable to larger cereal production. The maximum temperature in March was present in the three models and was absent in only wheat Region 2. In contrast to April precipitation, March maximum temperature has negative weights, i.e., higher maximum temperatures handicap the amount of wheat and barley produced. This agrees with the expected behaviour of the growing season cycles in this region23, where a good production year is defined by moderate precipitation and temperatures in early spring, substantial precipitation and below average temperatures in April and May, and a warm and dry June, which allows for a gradual maturation beginning the formation of filled grains23,30.

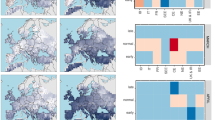

Linear models displayed in Table 1 are now evaluated with predictors from seasonal forecasts, the dynamical SEAS5, and the persistent climate. Figure 3 shows the verification metrics (FAR, H, and HSS) for the event of loss of yield (i.e., “yes” is yield anomaly < 0, and “no” is yield anomaly ≥ 0) starting in the issue month of January until the issue month of June. It is noteworthy that none of the models include predictors in June (see Table 1), which means that the verification metrics in June represent yield anomalies obtained using ERA5 predictors alone, thus being what we may call the “benchmark forecast” (separated by a vertical black line in Fig. 3). This benchmark forecast has some degree of error since correlations are not perfect, i.e., equal to 1 (see Table 1), and the verification metrics of Fig. 3 are not 1 (in the case of H and HSS) or 0 (in the case of FAR). Furthermore, the last predictor for wheat in Region 2 is April, which means that in addition to June, yield anomalies in May are also estimated with full ERA5 predictors (and consequently show identical results to verification metrics in June). Solid lines in Fig. 3 represent forecast verification metrics (H in blue; FAR in orange; HSS in green) for SEAS5, whereas dashed lines represent the same metrics for the persistence forecast. The results show no clear difference between SEAS5 and persistence. Indeed, the behaviour of both forecasts is similar, with larger differences occurring in Region 2. Notably, in this region, both wheat and barley yields forecasted in April show better skill when persistence is used (albeit showing lower accuracy, as discussed ahead). Furthermore, and as expected, these results show a tendency toward higher skill as the growing season progresses (and inclusion of more ERA5 predictors and subsequently fewer forecasted predictors). The results from Region 1 show low skill in the winter months for both SEAS5 and persistence and a significant leap in skill metric from April onwards (together with an increase in hit rate and a decrease in FAR).

Forecast verification metrics: False alarm ratio FAR (orange), hit rate H (blue), and Heidke skill score HSS (green) for wheat yield anomalies (top) and barley yield anomalies (bottom) located in Region 1 (left) and Region 2 (right). Metrics for yields obtained with dynamical forecast SEAS5 are displayed in solid lines, whereas metrics for yields obtained with persistence forecasts are shown in dashed lines. The figure was produced in Python 3.8 (https://www.python.org/).

The distribution of differences between forecasted and observed yield anomalies is shown in Fig. 4. Although similar results are displayed between SEAS5 and persistence (coherent with Fig. 3), some differences may be noted. As an example, for wheat and barley yield anomaly differences in Region 1 issued from February onwards, it is clear that there is a consistently larger interquartile distance when using persistence forecasts, meaning that the dynamical SEAS5 may be relatively more accurate than the persistence in forecasting yield anomalies of these cereals. The RMSE of forecasted versus observed yield anomaly supports this, with yield forecasts based on SEAS5 consistently having lower RMSEs than those related to persistence forecasts. Similar results may be found in Region 2 for barley yield anomalies. Here, differences between interquartile ranges are even larger, especially in the issue months of March and April (with RMSEs diverging by approximately 0.3). In the case of wheat in this region, the results show much more scattering from both forecasts and larger interquartile distances for the dynamical forecast in issue months of March and April. However, differences between persistent and observed wheat yield anomalies show larger extremes (whiskers tend to stretch to larger differences for both positive and negative values), which translates into more accurate forecasts when using the dynamical model (lower RMSE).

Distribution of differences between forecasted and observed yield anomalies of wheat (top) and barley (bottom) located in Region 1 (left) and Region 2 (right), encompassing the growing seasons of 1994–2019 (26 yields per issue month). Box plots filled in dark gray represent those differences estimated with the dynamical SEAS5 forecasts, whereas box plots filled in dark red represent differences when the forecast is assumed to be persistent. The x-axis represents the forecast issue month. Differences in anomalies of the “benchmark forecasts” are separated from the remaining anomalies by a vertical black line (June for wheat in Region 1 and barley in both regions and May and June for wheat in Region 2). On top of each box plot is displayed the RMSE, and inside each panel the percentage difference \({\text{P}}_{{{\text{diff}}}}\). The box ranges from the 25th to the 75th percentile, and the whisker ranges between the 5th and 95th percentiles. The median is illustrated as the horizontal black line inside the box. The figure was produced in Python 3.8 (https://www.python.org/).

Discussion

The rationale behind this research arises from the expected increase in socioeconomic pressure over the agricultural sector as a consequence of a fast-changing climate. Climate-related crop failures across multiple countries, namely, in Europe during 2003, 2010 and 201831,32,33, have increased the need for adaptation to climate change. Therefore, reliable seasonal forecasts of crop yields are required for a timely response and to guideline stakeholders and policymakers. Particularly in the Iberian Peninsula, different spatiotemporal responses of wheat and barley to climate conditions have been suggested18,21, and our results provide further insight into the design of current crop monitoring and forecasting systems over these regions.

The results presented in this study demonstrate two different outcomes: (1) wheat and barley yield anomaly forecasts (dynamical and persistent) start to gain skill later in the season (typically from April onwards); and (2) the added value of using the SEAS5 forecast as an alternative to persistence ranges from 6 to 16%, with better results in the southern Spanish regions.

Relative to the first outcome, similar results were also found in17, indicating a lack of skill in the winter months and an increase in skill when using seasonal forecasts of SEAS5 issued later in the season in Spain for wheat flowering prediction, supporting our findings. Nevertheless, it is relevant to stress that the cited work and others focused on different regions of the world13,16,34 use information from process-based crop models forced with seasonal forecasts. Indeed, empirical models similar to those developed in this study15,18,19,21,35 do not integrate a comprehensive formulation of the physical processes that drive crop and climate interactions, intending only to represent large-scale impacts of climate on yields. However, empirical models are shown to typically be capable of reproducing results obtained from mechanistic models36,37, and due to their simplicity, they may be more friendly to be employed by stakeholders to set management strategies7. The usefulness of skilful forecasts issued from April onwards will depend on factors related to these strategies. Indeed, harvesting typically occurs in late spring/early summer, and this study indicates that stakeholders may start receiving skilful forecasts approximately one to two months ahead (typically in April/May). According to a survey done to U.S.A. winter wheat stakeholders, management decisions must take place 0–2.5 months before operationalization38, which is within the lead times discussed here. However, the extent to which complex dynamical seasonal forecasts introduce an added value when compared with forecasts based on the knowledge of the meteorological conditions that occurred in previous months is a relevant issue to be addressed. This leads to the discussion of the second outcome of this study.

The forecast verification metrics show that there is no major added value in using dynamical forecasts instead of persistence forecasts in terms of event configuration. Indeed, if the interest of the stakeholder is to merely know with a few months in advance if the yield of a given year will be better or worse than the mean yield of the previous decades, then using persistence is sufficient, as it seems to produce similar forecasting skill as using ECMWF SEAS5 as input. However, the same is not true if the stakeholder intends to have a more accurate forecast of the cereal yield. In this case, the results point to better achievable accuracy when employing the dynamical forecast system. Indeed, percent differences (\({\text{P}}_{{{\text{diff}}}} )\) of RMSE obtained for dynamical forecasts versus observations relative to persistence forecasts versus observations (considering all lead times included) are always negative, ranging from about − 6% for both cereals in Region 1 to circa − 16% and − 9% for wheat and barley in Region 2, respectively. Similar results were obtained in another study16 for the Mediterranean region of Australia (with a similar climate to that of Iberia). There, the authors concluded that the dynamical forecasts had a narrower prediction range (more accurate) than the climatology driven ones (the authors used climatology forecasts instead of persistence), with the handicap of inducing more misleading forecasts than the climatology (lower skill scores). With the implementation of the probabilistic forecast score CRPS, results show an increase towards a larger added value in using SEAS5 instead of persistence (Supplementary Fig. S3). However, this metric is intended to evaluate probabilistic forecasts, and persistence is a deterministic forecast, which partially explains the larger differences obtained.

It is also noteworthy to highlight that the equation to estimate wheat in Region 2 presents three out of four predictors dependent on precipitation. This is a variable that presents small predictive capacity even for short lead times (see Fig. 2), a matter also discussed in39 and40. Furthermore, the models developed here (Table 1) are largely dependent on the size and quality of the databases used, and thus, more detailed wheat and barley production datasets would be ideal to increase the robustness of the results. Finally, the methodology employed here for wheat and barley may be applied to other crops with different outcomes. Future work may also focus on understanding how teleconnection patterns relevant for springtime influence the ability of persistent forecasts to achieve good forecasting skill. Indeed, both precipitation and temperature are influenced by patterns such as the North Atlantic Oscillation (NAO), the East Atlantic (EA) pattern, the El Niño–Southern Oscillation (ENSO), or the Mediterranean Oscillation (MO)41,42,43,44.

Concluding remarks

Timely knowledge of yield before harvest is a critical piece of information that could help stakeholders take the necessary actions to maximize production and avoid expenses that may affect small farmers and country economies alike. This is where seasonal forecasts of meteorological variables play a crucial role. Indeed, permanent advances in dynamical forecasting systems, such as the state-of-the-art ECMWF SEAS5, may bring new possibilities in this field45,46. Here, this system is used to forecast wheat and barley yield anomalies taking advantage of multiple linear regression models and is further compared to what may be called the “poor-man” forecasting system of issuing forecasts based on persistent climate. Our results indicate that persistence is as good as the dynamical system in terms of predicting gains or losses of wheat and barley at different lead times, but the dynamical SEAS5 allows for a more accurate choice (6–16% RMSE improvement). These results, focused on crops located on the Iberian Peninsula, should provide guidelines on the design of crop monitoring and forecasting systems and give an idea to the regional stakeholder whether to invest or not in such forecasting techniques, depending on the objectives and requirements of the undertaking. Finally, it is important to acknowledge that the Iberian Peninsula is part of the Mediterranean basin, well known for being a major climate change “hot spot” where temperatures are rising faster than the world average while precipitation is slightly declining47. Thus, some of these key climate variables selected by our crop regression models for the two considered regions are not stationary. Not only have they been changing in recent decades but are bound to change even further in coming decades due to unavoidable warming scenarios (IPCC, 2021). To what extent this could undermine some of our results should be the focus of further research.

Data availability

Cereal production data is available at https://www.mapa.gob.es, and ERA5 and SEAS5 are available at https://cds.climate.copernicus.eu/.

References

Shewry, P. R. Wheat. J. Exp. Bot. 60, 1537–1553 (2009).

Brás, T. A., Seixas, J., Carvalhais, N. & Jagermeyr, J. Severity of drought and heatwave crop losses tripled over the last five decades in Europe. Environ. Res. Lett. 16, 065012 (2021).

Lesk, C., Rowhani, P. & Ramankutty, N. Influence of extreme weather disasters on global crop production. Nature 529, 84–87 (2016).

Kornhuber, K. et al. Amplified Rossby waves enhance risk of concurrent heatwaves in major breadbasket regions. Nat. Clim. Chang. 10, 48–53 (2020).

Anderson, W. B., Seager, R., Baethgen, W., Cane, M. & You, L. Synchronous crop failures and climate-forced production variability. Sci. Adv. 5, 10048 (2019).

Gaupp, F., Hall, J., Hochrainer-Stigler, S. & Dadson, S. Changing risks of simultaneous global breadbasket failure. Nat. Clim. Chang. 10, 54–57 (2020).

Basso, B. & Liu, L. Seasonal crop yield forecast: Methods, applications, and accuracies. in Advances in Agronomy 154, 201–255 (Academic Press, 2019).

Klemm, T. & McPherson, R. A. The development of seasonal climate forecasting for agricultural producers. Agric. For. Meteorol. 232, 384–399 (2017).

Hansen, J. W., Mason, S. J., Sun, L. & Tall, A. Review of seasonal climate forecasting for agriculture in sub-Saharan Africa. Exp. Agric. 47, 205–240 (2011).

Zinyengere, N. et al. Using seasonal climate forecasts to improve maize production decision support in Zimbabwe. Agric. For. Meteorol. 151, 1792–1799 (2011).

Capa-Morocho, M. et al. Crop yield outlooks in the Iberian Peninsula: Connecting seasonal climate forecasts with crop simulation models. Agric. Syst. 149, 75–87 (2016).

Ramírez-Rodrigues, M. A. et al. The value of seasonal forecasts for irrigated, supplementary irrigated, And rainfed wheat cropping systems in northwest Mexico. Agric. Syst. 147, 76–86 (2016).

Canal, N. et al. Anticipation of the winter wheat growth based on seasonal weather forecasts over France. Meteorol. Appl. 24, 432–443 (2017).

Peng, B., Guan, K., Pan, M. & Li, Y. Benefits of seasonal climate prediction and satellite data for forecasting U.S. maize yield. Geophys. Res. Lett. 45, 9662–9671 (2018).

Qader, S. H., Dash, J. & Atkinson, P. M. Forecasting wheat and barley crop production in arid and semi-arid regions using remotely sensed primary productivity and crop phenology: A case study in Iraq. Sci. Total Environ. 613–614, 250–262 (2018).

Brown, J. N., Hochman, Z., Holzworth, D. & Horan, H. Seasonal climate forecasts provide more definitive and accurate crop yield predictions. Agric. For. Meteorol. 260–261, 247–254 (2018).

Ceglar, A. & Toreti, A. Seasonal climate forecast can inform the European agricultural sector well in advance of harvesting. Clim. Atmos. Sci. 4, 1–8 (2021).

Ribeiro, A. F. S., Russo, A., Gouveia, C. M. & Páscoa, P. Modelling drought-related yield losses in Iberia using remote sensing and multiscalar indices. Theor. Appl. Climatol. 136, 203–220 (2019).

Filippi, P. et al. An approach to forecast grain crop yield using multi-layered, multi-farm data sets and machine learning. Precis. Agric. 20, 1015–1029 (2019).

Kusunose, Y. & Mahmood, R. Imperfect forecasts and decision making in agriculture. Agric. Syst. 146, 103–110 (2016).

Bento, V. A. et al. The impact of climate change in wheat and barley yields in the Iberian Peninsula. Sci. Rep. 11, 1–12 (2021).

Ribeiro, A. F. S., Russo, A., Gouveia, C. M. & Páscoa, P. Copula-based agricultural drought risk of rainfed cropping systems. Agric. Water Manag. 223, 105689 (2019).

Gouveia, C. & Trigo, R. M. Influence of Climate Variability on Wheat Production in Portugal. in geoENV VI–Geostatistics for Environmental Applications 335–345 (Springer Netherlands, 2008). doi:https://doi.org/10.1007/978-1-4020-6448-7_28

Páscoa, P., Gouveia, C. M., Russo, A. & Trigo, R. M. The role of drought on wheat yield interannual variability in the Iberian Peninsula from 1929 to 2012. Int. J. Biometeorol. 61, 439–451 (2017).

Hersbach, H. et al. Global reanalysis: Goodbye ERA-Interim, hello ERA5. ECMWF Newsl. 159, 17–24 (2019).

Johnson, S. J. et al. SEAS5: The new ECMWF seasonal forecast system. Geosci. Model Dev. 12, 1087–1117 (2019).

Manzanas, R. Assessment of model drifts in seasonal forecasting: Sensitivity to ensemble size and implications for bias correction. J. Adv. Model. Earth Syst. 12, e2019MS001751 (2020).

Wilks, D. S. Statistical Methods in the Atmospheric Sciences. (Academic Press, 2011).

Jolliffe, I. T. & Stephenson, D. B. Forecast Verification: A Practitioner’s Guide in Atmospheric Science, Second Edition. (Wiley, 2011).

Gouveia, C. & Trigo, R. M. The Impacts of the NAO on the Vegetation Activity in Iberia. in Advances in Global Change Research 46, 113–128 (Springer International Publishing, 2011).

European Agri-cooperatives, E. F. Assessment of the impact of the heat wave and drought of the summer 2003 on agriculture and forestry. Comm. Agric. Organ. Eur. Union Gen. Comm. Agric. Coop. Eur. Union, Brussels 15 (2003).

Loboda, T., Krankina, O., Savin, I., Kurbanov, E. & Hall, J. Land management and the impact of the 2010 extreme drought event on the agricultural and ecological systems of European Russia. in Land-Cover and Land-Use Changes in Eastern Europe after the Collapse of the Soviet Union in 1991 173–192 (Springer International Publishing, 2016). https://doi.org/10.1007/978-3-319-42638-9_8

Beillouin, D., Schauberger, B., Bastos, A., Ciais, P. & Makowski, D. Impact of extreme weather conditions on European crop production in 2018: Random forest-Yield anomalies. Philos. Trans. R. Soc. B Biol. Sci. 375, 1129 (2020).

Morari, F. et al. Coupling proximal sensing, seasonal forecasts and crop modelling to optimize nitrogen variable rate application in durum wheat. Precis. Agric. 22, 75–98 (2021).

Filippi, P., Whelan, B. M., Vervoort, R. W. & Bishop, T. F. A. Mid-season empirical cotton yield forecasts at fine resolutions using large yield mapping datasets and diverse spatial covariates. Agric. Syst. 184, 102894 (2020).

Estes, L. D. et al. Comparing mechanistic and empirical model projections of crop suitability and productivity: Implications for ecological forecasting. Glob. Ecol. Biogeogr. 22, 1007–1018 (2013).

Lobell, D. B. & Asseng, S. Comparing estimates of climate change impacts from process-based and statistical crop models. Environ. Res. Lett. 12, 15001 (2017).

Klemm, T. & Mcpherson, R. A. Assessing decision timing and seasonal climate forecast needs of winter wheat producers in the South-Central United States. J. Appl. Meteorol. Climatol. 57, 2129–2140 (2018).

Dutra, E., Johannsen, F. & Magnusson, L. Late spring and summer subseasonal forecasts in the Northern Hemisphere midlatitudes: Biases and skill in the ECMWF model. Mon. Weather Rev. 149, 2659–2671 (2021).

Tian, D., Wood, E. & Yuan, X. CFSv2-based sub-seasonal precipitation and temperature forecast skill over the contiguous United States. Hydrol. Earth Syst. Sci. 21, 1477–1490 (2017).

Ríos-Cornejo, D., Penas, Á., Álvarez-Esteban, R. & del Río, S. Links between teleconnection patterns and precipitation in Spain. Atmos. Res. 156, 14–28 (2015).

Ríos-Cornejo, D., Penas, Á., Álvarez-Esteban, R. & del Río, S. Links between teleconnection patterns and mean temperature in Spain. Theor. Appl. Climatol. 122, 1–18 (2015).

Martinez-Artigas, J., Lemus-Canovas, M. & Lopez-Bustins, J. A. Precipitation in peninsular Spain: Influence of teleconnection indices and spatial regionalisation. Int. J. Climatol. 41, E1320–E1335 (2021).

Frías, M. D., Herrera, S., Cofiño, A. S. & Gutiérrez, J. M. Assessing the skill of precipitation and temperature seasonal forecasts in Spain: Windows of opportunity related to ENSO events. J. Clim. 23, 209–220 (2010).

Fan, H. et al. Predictable patterns of wintertime surface air temperature in Northern Hemisphere and their predictability sources in the SEAS5. J. Clim. 33, 10743–10754 (2020).

Gubler, S. et al. Assessment of ECMWF SEAS5 seasonal forecast performance over South America. Weather Forecast. 35, 561–584 (2020).

Cramer, W. et al. Climate change and interconnected risks to sustainable development in the Mediterranean. Nat. Clim. Chang. 8, 972–980 (2018).

Acknowledgements

This work was supported by national funds through FCT (Fundação para a Ciência e a Tecnologia, Portugal) under project IMPECAF -PTDC/CTA-CLI/28902/2017 and project UIDB/50019/2020 – IDL. Andreia F. S. Ribeiro acknowledges funding from the Swiss National Science Foundation (project number 186282).

Author information

Authors and Affiliations

Contributions

V.B., A.R., and E.D. conceived the study. V.B. performed the analysis of the results with the contribution of all authors. V.B. wrote the manuscript, and all authors provided comments and feedback.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bento, V.A., Russo, A., Dutra, E. et al. Persistence versus dynamical seasonal forecasts of cereal crop yields. Sci Rep 12, 7422 (2022). https://doi.org/10.1038/s41598-022-11228-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-022-11228-2

This article is cited by

-

Hydrological drought forecasts using precipitation data depend on catchment properties and human activities

Communications Earth & Environment (2024)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.