Abstract

Numerous scientific and engineering applications require numerically solving systems of equations. Classically solving a general set of polynomial equations requires iterative solvers, while linear equations may be solved either by direct matrix inversion or iteratively with judicious preconditioning. However, the convergence of iterative algorithms is highly variable and depends, in part, on the condition number. We present a direct method for solving general systems of polynomial equations based on quantum annealing, and we validate this method using a system of second-order polynomial equations solved on a commercially available quantum annealer. We then demonstrate applications for linear regression, and discuss in more detail the scaling behavior for general systems of linear equations with respect to problem size, condition number, and search precision. Finally, we define an iterative annealing process and demonstrate its efficacy in solving a linear system to a tolerance of 10−8.

Similar content being viewed by others

Introduction

Many problems in science, engineering, and mathematics can be reduced to solving systems of equations with notable examples in modeling and simulation of physical systems, and the verification and validation of engineering designs. Conventional methods for solving linear systems range from exact methods, such as matrix diagonalization, to iterative methods, such as fixed-point solvers, while polynomial systems are typically solved iteratively with homotopy continuation. The advent of quantum computing has opened up the possibility of new methods for solving these challenging problems. For example, a quantum algorithm for solving systems of linear equations was established for gate-based quantum computers1 and demonstrated with small-scale problem instances2. Additionally, an algorithm for solving linear systems within the adiabatic quantum computing model3 was experimentally demonstrated4, followed by a more recent proposal5.

In this work, we present an approach for solving a general system of nth-order polynomial equations based on the principles of quantum annealing, followed by a demonstration of the algorithm for a system of second-order polynomial equations on commercially available quantum annealers. We then narrow the scope to examples of linear equations by first demonstrating an application to linear regression, before elucidating results on ill-conditioned linear systems motivated by the discretized Dirac equation Dϕ = χ from lattice quantum chromodynamics (QCD). The solution to the discretized Dirac equation is currently the only approach for evaluating non-perturbative QCD. However, well-known numerical challenges slow convergence with conventional solvers6,7. We end by using quantum annealing to solve a similar system and characterize the performance from experimental demonstrations with a commercial quantum annealer.

Polynomial Systems of Equations

We consider the system of N polynomial equations

where i ∈ {1, ..., N}, and P(n) is a rank n + 1 tensor of known real-valued coefficients for the polynomial of order n, and the real-valued vector x denotes the solution. Truncating to first order recovers a linear system of equations, i.e., \({P}_{i}^{(0)}+\sum _{j}\,{P}_{ij}^{(1)}{x}_{j}=0\).

Prior to this work, there exists no direct methods for solving a general nth-order polynomial system. For linear systems, existing approaches include direct diagonalization using Gauss-Jordan elimination or iterative methods such as conjugate-gradient. In practice, direct diagonalization is limited in computational efficiency, as those methods scale sharply with the size of the matrix. By contrast, iterative methods may have greater computational efficiency but the performance and stability are often sensitive to the input matrix. Preconditioning improves convergence of linear systems by transforming the input as M−1P(1)x = M−1b, where the preconditioner M must be inexpensive to invert and M−1 should be “close” to \({P}^{{(1)}^{-1}}\), so that M−1P(1) resembles a matrix close to unity. Identifying an effective preconditioner plays an important role in numerical convergence of iterative methods8,9,10,11,13. For lattice QCD applications12, the low-lying spectrum of the Dirac operator slows iterative convergence and preconditioning has been used to project out these low-lying modes. Acquiring the low-lying eigenpairs or singular triplets of D is in general computationally expensive and requires the use of additional iterative methods that also suffer from critical slowing down. Solutions to address this issue include EigCG6,7, inexact deflation8, and adaptive multigrid9,10,11,13.

Results

Quantum Annealing for Polynomial Solvers

Quantum annealing offers an alternative approach to solving a general system of equations. We map each variable xj using R number of qubits such that

where ψrj ∈ {0, 1}, ai ∈ ℝ and bi ∈ ℝ such that xj ∈ {bj + 2r−1aj|r ∈ ℤ≥ < R}. Defining the vectors

where |V| is the cardinality operator yielding the number of elements in a generic vector V. The objective function χ2 which solves Eq. (1) is given by minimizing the residual sum of squares in the qubit-basis

where ⋅ is the dot product, \(\circ \) is the Hadamard product, and \(\otimes \) is the tensor product. In particular, \({(\circ {\mathscr{A}}\otimes {\mathcal R} )}^{n}\equiv \circ {{\mathscr{A}}}^{\otimes n}\otimes { {\mathcal R} }^{\otimes n}\), where V⊗n is a repeated n sequence of tensor products. The ground state of Eq. (3) solves a system of polynomial equations. For current commercial quantum annealers, auxiliary qubits are required to reduce multi-linear terms down to bilinear interactions through quadratization14,15,16,17,18,19,20,21. We provide the details of quadratization through reduction-by-substitution on a system of second-order polynomials in Methods.

Finally, we note that resulting energy at the end of the optimization corresponds to exactly the residual sum of squares if the constant terms in χ2 are correctly accounted for. It follows that the entire energy spectrum is positive, and if the exact solution is recovered, then the ground state energy must be zero.

Quantum Annealing for Linear Solvers

A system of linear equations simplifies Eq. (3) to involve only bilinear terms without quadratization, and reduces to a quadratic unconstrained binary optimization (QUBO) problem where \({H}^{{\rm{QUBO}}}(\psi )=\sum _{ij}\,{\psi }_{i}{Q}_{ij}{\psi }_{j}\) with

where \({P^{\prime} }_{n}={P}_{n}^{(0)}+\sum _{i}\,{b}_{i}{P}_{ni}^{(1)}\). In addition, constant terms that arise from the substitution of Eq. (2) are omitted for simplicity and leaves the solution vector Ψ unchanged, but should be included when interpreting the energy as the residual sum of squares.

Application to Linear Regression

Given a set of N identical and independent observations of the

variable, the mean and covariance of yi follows

where the angle brackets denote the expectation value over N observations. A fitting function F(xi, p) may be defined with respect to the set of P unknown parameters p = {pn: n ∈ {1, ..., P}}, and a corresponding objective function for generalized least squares may be defined as

where the optimal value for the set p is determined by minimizing Eq. (6).

Restriction to linear least squares demands that the fitting function is linear in the unknown parameters, and therefore may be written in the form

where fn(xi) can be any function. The solution for linear regression is obtained by expanding Eq. (6) with Eq. (7) and yields

The extrema of the objective function can be determined by taking the derivative of Eq. (8) with respect to pn yielding a matrix equation of the form \(\sum _{j}\,{A}_{ij}^{(1)}{p}_{j}={A}_{i}^{(0)}\) analogous to Eq. (1) where

The solution to least squares minimization can then be mapped to a QUBO problem following Eq. (5), and amenable to methods of quantum annealing.

Discussion

System of Second Order Polynomial Equations

We demonstrate the validity of the algorithm on a system of two second order polynomial equations. The problem is chosen to be small such that the solution can be confirmed by an explicit search over the entire Hilbert space, and evaluated onto a D-Wave annealer. Consider the following system of equations,

with four real solutions at

For the sake of discussion, we set up Eq. (3) to solve for the solution at (2, 3) by choosing \({\mathscr{A}}=(\begin{array}{ll}1 & 1\end{array})\), \( {\mathcal B} =(\begin{array}{ll}0 & 0\end{array})\), and \( {\mathcal R} =(\begin{array}{l}{2}^{0},{2}^{1}\end{array})\). The tensors P(n) are obtained by inspection,

After transforming to the qubit-basis, direct search of the ground state of the 4-body Hamiltonian yields

where the consecutive pairs of binary variables maps to the binary representation of xi with little-endianness due to specific choices of \({\mathscr{A}}\), \( {\mathcal B} \) and \( {\mathcal R} \). The solution is reproduced when transforming the set of generalized n-dimensional \({\mathscr{Q}}\equiv \{{Q}^{(0)},{Q}^{(1)},\ldots ,{Q}^{(N)}\}\) matrix as defined in Eq. (4), to upper-triangular tensors, and also reproduced when further reducing the dimensionality of elements in Q(n≥2) with repeated indicies, yielding in general the most sparse upper-triangular representation \({{\mathscr{Q}}}^{sparse}\).

We quadratize the \({{\mathscr{Q}}}^{sparse}\) set of rank 0 to N tensors to the QUBO representation with reduction-by-substitution14,15,16,21 by introducing \(\frac{1}{2}N(N-1)\) auxiliary qubits ψa to enforce the following constraint,

such that the constraint is minimized when \({\psi }_{ij}^{a}={\psi }_{i}{\psi }_{j}\). The coefficient C should be chosen large enough such that the constraint is satisfied under optimization. Additional details of the quadratization used is given in Methods.

We repeat the exercise of solving the same system of polynomial equations on the D-Wave annealer with the symmetrized and quadratized representation, and successfully reproduce the solution,

where the last six auxiliary qubits confirm consistency of reducing many body interactions down to bilinear terms. Finally, we mention that with reduction-by-substitution, a system of mth-order polynomials needs to be quadratized m − 1 times, requiring exponentially more auxiliary qubits.

The software implementation of various many-body and 2-body quadratized representations for a system of second-order polynomial equations, the brute force solver, and D-Wave solver are made publicly available22.

Linear Regression

As an example, consider the following artificially generated data

where x ∈ Z: x ∈ [0, 49]. The Toeplitz correlation matrix23 is chosen to simulate a correlated time-series dataset, where the correlations decay exponentially as a function of x. Following the notation in Eq. (7), we assume a linear fit

and we estimate the parameters An given the data D(x). Using Eq. (2), we express each parameter Ai as a 4-bit unsigned integer

and construct the problem Hamiltonian following Eqs (5) and (9). The required 12 logical qubits (3 parameters × 4-bit representation) support a total of 4096 possible solutions. Explicit evaluation finds the true ground-state to have energy E0 = −1.418 and eigenstate

which corresponds to the parameter values

These correct coefficients for the generating function in Eq. (11) verify the design of the algorithm.

We next test the algorithm by solving the objective function using quantum annealing. The target Hamiltonian of Eq. (11) is solve with a D-Wave annealer, and results for 100,000 independent evaluations are acquired using an annealing schedule with T = 200μs. The correct result is reproduced in 0.5% of the solves, while the lowest 0.8% of the eigenvalue spectrum is obtained by 10% of the solves with overall results biased towards the lower-lying eigenspectrum.

Conditioned Systems of Linear Equations

In this section we show results and scaling of a classical method and the quantum annealer. One of the criteria for categorizing the “difficulty” of a linear system is condition number. The condition number of a matrix P(1) is defined as the ratio of maximum and minimum singular values.

In the case of symmetric matrices, this is equivalent to the ratio of largest and smallest eigenvalues.

We vary our test matrices in two ways: (1) vary the problem size while holding the condition number fixed, (2) the problem size is held constant with varying condition number. The accurately of the solution will be judged by the relative residual sum of squares,

where E0 is the ground-state energy. For conjugate gradient, a tolerance for Eq. (16) is utilized as a terminating criterion and the number of iterations when this point is reached is recorded. For quantum annealing the role of the relative residual is more subtle. The annealer is run many times and the lowest energy eigenpair is returned. The eigenvector from this set is substituted for xapprox, allowing a relative residual to be defined for the total anneal.

Classical Solutions

For the examples with a classical linear solver, conjugate gradient is used on N = 12, with varying condition number. Although conjugate gradient is not the optimal choice for classically solving such systems, the scaling comparison in condition number with the quantum algorithm is informative. Figure 1 shows slightly worse than square root scaling of conjugate gradient with condition number.

The number of conjugate gradient iterations grows slightly worse than \(\sqrt{\kappa ({P}^{(1)})}\). The stopping criterion is a tolerance of 10−6 for the norm of the relative residual. All matrices are rank 12, with smaller eigenvalues as κ(P(1)) increases, but identical eigenvectors. The same right-hand side is solved for all cases.

The matrices from this and subsequent results are constructed by creating a random unitary matrix of rank N, denoted as U. A diagonal matrix Λ is then linear populated by evenly spaced real-valued eigenvalues, such that \(\max ({\rm{\Lambda }})/\min ({\rm{\Lambda }})=\kappa \). The matrices are trivially formed as \({P}^{(1)}=U{\rm{\Lambda }}{U}^{\dagger }\). A common right-hand side is taken for all P(1): a vector of length N with linearly spaced decimals between 1 and −1.

Quantum annealing

In the following section, we demonstrate the scaling of the annealing algorithm under varying problem size, condition number, and precision of the search space. We conclude by applying the algorithm iteratively on a fixed problem and study the convergence of the relative residual.

Problem size: In Fig. 2a, we study the scaling behavior for κ = 1.1 and R = 2. Due to prior knowledge of the conjugate-gradient solution, the search space for all N parameters are fixed for the set of problems, and encompass the minimum and maximum results of the solution vector x. Additionally, knowledge of the result allows us to identify the ground-state QUBO solution by minimizing the difference between the conjugate-gradient and QUBO results (the forward error), and studies the theoretical scaling of the algorithm absent of current hardware limitations. Due to the small condition number of this study, minimizing the forward error is equivalent to minimizing the backwards error.

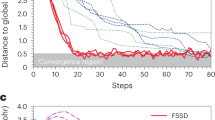

(a) (Top) The black dashed line shows the theoretical minimum relative residual of the algorithm as predicted by minimizing the forward error, given the search precision and search range used for the test. The red crosses are results of the lowest energy state from 100,000 quantum annealing measurements. Physical measurements deviate from the theoretical minimum as problem size grows. (Bottom) The corresponding percentage of measurements in the minimum energy state are shown in blue. The vertical axis is shown in a logarithmic scale. (b) Analogous to (a) but for varying condition number. The forward error prediction (dashed black line) is omitted and not a reliable measure of the relative residual for larger condition numbers. Note that the vertical axis of the bottom plot showing the percentage of measurements observed in the lowest-lying state is on a linear scale. (c) This plot is analogous to (a) but for varying search precision. Note that both vertical axes are on a logarithmic scale. (d) (Top) The relative residual exponentially decreases with each iteration of the algorithm. By the ninth iteration the result reaches single precision. (Bottom) The percentage of quantum annealing solutions in the lowest-lying state. The algorithm successfully resolves the solution at single precision for this example without issue.

With increased problem size, we observe that the percentage of annealed solutions which return the ground state decreases exponentially. This indicates the solution for a dense matrix may require exponentially more evaluations to obtain for current quantum annealers. The observed scaling is consistent with the assumption that the energy gap exponentially vanishes with increasing size for a dense Hamiltonian. In particular, beyond n = 16, only one out of 100,000 evaluations yield the resulting annealed solution, demonstrating that the real ground-state is well beyond the reach of the available statistics.

Condition number: Fig. 2b demonstrates the scaling of the algorithm with respect to changing condition number. The problem size is fixed to N = 12, and R = 2. The condition number affects the solution vector x, and therefore for this study we restrict the search range to span exactly the minimum and maximum values of x. The chosen search range keeps the resulting relative residual approximately constant under varying condition number. For linear systems with larger condition numbers, minimizing the forward error is no longer a reliable estimate of the residual of the backwards error, and is therefore dropped from this study.

With increasing condition number, we observe that the percentage of solutions that converge to the lowest-lying state is a relatively constant value as demonstrated by a less than one order-of-magnitude change between the different examples. This behavior is in stark contrast with the scaling observed in Fig. 2a, and suggests that with increasing condition number, the ground state is exponentially easier to identify. This is in amazing contrast to the classical result from Fig. 1, in which convergence to the solution decreases as condition number is raised.

Precision of search: Fig. 2c explores the behavior of the algorithm as R is increased for N = 4 and κ = 1.1. We observe that the relative residual exponentially decreases, as expected due to sampling an exponential number of solutions. However, increasing R also requires exponentially more evaluations from the annealer in order to resolve the ground state. Similarly to Fig. 2a, we observe that the forward error for problem sizes beyond R = 5 starts to deviate from the backward error, an indication that the limits of hardware control have been reached.

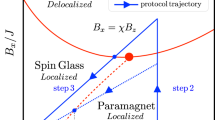

Iterative approach: Finally, we explore the possibility of iteratively applying the algorithm in order to decrease the relative residual of the final solution. We demonstrate this technique on N = 4, with κ = 1.1, and R = 4. For this study, we initially set \({\rm{\min }}({\mathscr{X}})=-\,1\) and \({\rm{\max }}({\mathscr{X}})=1\). With each iteration, we narrow the optimization to two neighboring values of the result allowed by the search space. Figure 3 shows how the search space is refined with each iteration of the algorithm and converges to the conjugate gradient solution. Figure 2d shows that the relative residual exponentially decreases with the application of each iteration, while the number of anneals required to sample the ground state stays relatively constant. The solution from quantum annealing at the final (ninth) iteration agrees with conjugate-gradient at single precision accuracy.

Search region over successive iterations of the algorithm. The shaded regions indicate the search region for each component of the solution vector \({\mathscr{X}}\). The gray horizontal line is the result from a conjugate-gradient solver. (a) Search region for the first five iterations for all parameters. (b) Search region zoomed in on a single parameter reaching single precision accuracy.

Methods

Quadratization

Quadratization (i.e. to make quadratic) maps terms in the Hamiltonian that are multi-linear with respect to the binary variables \({\mathscr{X}}\), to a larger Hilbert space involving only bilinear (i.e. quadratic) contributions. This transformation is required to realize quantum algorithms with non-linear operations on near-term quantum computers. There exists in current literature, a rich selection of methods to perform such a task14,15,16,17,18,19,20,21. For this work, we apply reduction-by-substitution14,15,21, where the constraint equation is given by Eq. (10).

One constraint equation is required to define each auxiliary qubit ψij. After taking into consideration that in the qubit basis, the Hamiltonian is symmetric under permutations of all indices, \(\frac{1}{2}N^{\prime} (N^{\prime} -1)\) auxiliary qubits are needed to account for every unique quadratic combination of the underlying basis of length N′. In Fig. (4), we provide the smallest non-trivial example which maps a system of two second-order polynomial equations (i.e. N = 2), with R = 2 after the n-body Hamiltonian has been reduced to the set \({{\mathscr{Q}}}^{sparse}\). The constraint equations have an overall coefficient C which needs to be large enough such that the constraints are satisfied under optimization. For visual clarity, the constraints in the second quadrant are entered in the lower triangular section, but in practice should be accumulated with the upper triangular section occupied by Q(2).

Example QUBO for a system of second-order polynomial equations. The QUBO can be organized into four quadrants as indicated by the solid black lines, corresponding to bilinear (2nd quadrant), tri-linear (1st and 3rd quadrants) and quadra-linear (4th quadrant) contributions. Within the quadrants, the elements are colored to reflect the effective one (red), two (green), three (blue), and four (yellow) qubit interactions after accounting for repeated indices. The entries in \({{\mathscr{Q}}}^{sparse}\) are distributed to entries in the QUBO corresponding to the order of interaction: Q(1) to red, Q(2) to green, Q(3) to blue, Q(4) to yellow. The coefficients of the constraint equations contributes to entries in red text.

Generalization to a system of more equation (N > 2), or finer searches (R > 2) is straightforward, and implemented in the accompanying software22. Generalizing to higher-order polynomial equations required additional levels of quadratization that was not implemented in this study.

Quantum Annealing

Akin to adiabatic quantum optimization24, quantum annealing prepares a quantum statistical distribution that approximates the solution by applying a slowly changing, time-dependent Hamiltonian25,26,27, where measurements drawn from the distribution represent candidate solutions. Unlike adiabatic quantum computing, quantum annealing permits non-adiabatic dynamics at non-zero temperature, making this approach easier to realize experimentally but also more challenging to distinguish quantum mechanically26,28,29,30,31,32,33,34. While examples of non-trivial advantages have been observed for fixed-size problem instances35,36,37,38,39, more general statements about computational complexity remain unresolved40.

We demonstrate the proposed algorithm using the D-Wave 2000Q commercial quantum annealer. This hardware is based on cryogenically cooled superconducting electronic elements that implement a programmable Ising model. Each quantum register element expresses a single Ising spin variable, but the D-Wave 2000Q supports only a limited connectivity between these elements. In particular, the i-th spin variable may be assigned a bias Qii and can be coupled to a unique set of six neighboring registers through the coupling Qij. A densely connected Hamiltonian can be embedded into the hardware by using secondary constraints to build chains of strongly correlated elements in which \({Q}_{ij}^{{\rm{constraint}}}\gg {Q}_{ij}^{{\rm{problem}}}\). This coupling constraint favors chains of spin elements which behave as a single spin variable41. Previous studies have identified optimal mappings of the infinite dimensional to three-dimensional Ising model42,43. For the D-Wave 2000Q, approximately 64 logical spin variables may be represented within the 2048 physical spin elements. Our examples use the dwave-sapi2 Python library44, which is a software tool kit that facilitates cloud access to the annealer and supports a heuristic embedding method for the available hardware.

Data Availability

Software to reproduce the solutions of the second-order system of polynomial equations discussed in this work is made publicly available at https://github.com/cchang5/quantum_poly_solver.

References

Harrow, A. W., Hassidim, A. & Lloyd, S. Quantum Algorithm for Linear Systems of Equations. Phys. Rev. Lett. 103, 150502, https://doi.org/10.1103/PhysRevLett.103.150502 0811.3171 (2009).

Zheng, Y. et al. Solving systems of linear equations with a superconducting quantum processor. Phys. review letters 118, 210504 (2017).

Subasi, Y., Somma, R. D. & Orsucci, D. Quantum algorithms for linear systems of equations inspired by adiabatic quantum computing. arXiv preprint arXiv:1805.10549 (2018).

Wen, J. et al. Experimental realization of quantum algorithms for linear system inspired by adiabatic quantum computing. arXiv preprint arXiv:1806.03295 (2018).

Borle, A. & Lomonaco, S. J. Analyzing the Quantum Annealing Approach for Solving Linear Least Squares Problems. ArXiv e-prints 0804.4884 (2018).

Stathopoulos, A. & Orginos, K. Computing and deflating eigenvalues while solving multiple right hand side linear systems in quantum chromodynamics. SIAM J. Sci. Comput. 32, 439–462, https://doi.org/10.1137/080725532 0707.0131 (2010).

Abdel-Rehim, A., Orginos, K. & Stathopoulos, A. Extending the eigCG algorithm to non-symmetric linear systems with multiple right-hand sides. PoS LAT2009, 036, https://doi.org/10.22323/1.091.0036 0911.2285 (2009).

Lüscher, M. Local coherence and deflation of the low quark modes in lattice qcd. J. High Energy Phys. 2007, 081 (2007).

Brandt, A. Multi-level adaptive solutions to boundary-value problems. Math. Comput. 31, 333–390 (1977).

Brannick, J., Brower, R. C., Clark, M. A., Osborn, J. C. & Rebbi, C. Adaptive Multigrid Algorithm for Lattice QCD. Phys. Rev. Lett. 100, 041601, https://doi.org/10.1103/PhysRevLett.100.041601 0707.4018 (2008).

Clark, M. Accelerating Lattice QCD Multigrid on GPUs Using Fine-grained Parallelization. In Proceedings of the 34 th annual International Symposium on Lattice Field Theory (LATTICE2016). 24–30 July 2016. University of Southampton, 267 1612.07873 (2016).

Chang, C. C. et al. A per-cent-level determination of the nucleon axial coupling from quantum chromodynamics. Nature 558, 91–94, https://doi.org/10.1038/s41586-018-0161-8 1805.12130 (2018).

Frommer, A., Kahl, K., Krieg, S., Leder, B. & Rottmann, M. Adaptive Aggregation Based Domain Decomposition Multigrid for the Lattice Wilson Dirac Operator. arXiv e-prints arXiv:1303.1377 1303.1377 (2013).

Rosenberg, I. G. Reduction of bivalent maximization to the quadratic case. Cahiers duCentre d’Etudes de Recherche Oper. 17, 71–74 (1975).

Boros, E. & Hammer, P. L. Pseudo-boolean optimization. Discret. Appl. Math. 123, 155–225, https://doi.org/10.1016/S0166-218X(01)00341-9 (2002).

Biamonte, J. D. Nonperturbative k-body to two-body commuting conversion hamiltonians and embedding problem instances into ising spins. Phys. Rev. A 77, 052331, https://doi.org/10.1103/PhysRevA.77.052331 (2008).

Boros, E. & Gruber, A. On quadratization of pseudo-boolean functions. In ISAIM (2012).

Humble, T. S. et al. An integrated programming and development environment for adiabatic quantum optimization. Comput. Sci. Discov. 7, 015006 (2014).

Tanburn, R., Okada, E. & Dattani, N. Reducing multi-qubit interactions in adiabatic quantum computation without adding auxiliary qubits. Part 1: The “deduc-reduc” method and its application to quantum factorization of numbers. arXiv e-prints arXiv:1508.04816 1508.04816 (2015).

Okada, E., Tanburn, R. & Dattani, N. S. Reducing multi-qubit interactions in adiabatic quantum computation without adding auxiliary qubits. Part 2: The “split-reduc” method and its application to quantum determination of Ramsey numbers. arXiv e-prints arXiv:1508.07190 1508.07190 (2015).

Dattani, N. Quadratization in discrete optimization and quantum mechanics. arXiv e-prints arXiv:1901.04405 1901.04405 (2019).

Chang, C. C. & Humble, T. S. quantum_poly_solver. https://github.com/cchang5/quantum_poly_solver (2019).

Hardin, J., Garcia, S. R. & Golan, D. A method for generating realistic correlation matrices. ArXiv e-prints 1106.5834 (2011).

Farhi, E., Goldstone, J., Gutmann, S. & Sipser, M. Quantum Computation by Adiabatic Evolution. eprint arXiv:quantph/0001106 quant-ph/0001106 (2000).

Ray, P., Chakrabarti, B. K. & Chakrabarti, A. Sherrington-kirkpatrick model in a transverse field: Absence of replica symmetry breaking due to quantum fluctuations. Phys. Rev. B 39, 11828–11832, https://doi.org/10.1103/PhysRevB.39.11828 (1989).

Kadowaki, T. & Nishimori, H. Quantum annealing in the transverse Ising model. Phys. Rev. E 58, 5355–5363, https://doi.org/10.1103/PhysRevE.58.5355 cond-mat/9804280 (1998).

Das, A. & Chakrabarti, B. K. Colloquium: Quantum annealing and analog quantum computation. Rev. Mod. Phys. 80, 1061–1081, https://doi.org/10.1103/RevModPhys.80.1061 (2008).

Farhi, E. et al. A Quantum Adiabatic Evolution Algorithm Applied to Random Instances of an NP-Complete Problem. Science 292, 472–476, https://doi.org/10.1126/science.1057726 quant-ph/0104129 (2001).

Dickson, N. et al. Thermally assisted quantum annealing of a 16-qubit problem. Nat. Commun. 4, https://doi.org/10.1038/ncomms2920 (2013).

Amin, M. H. S., Averin, D. V. & Nesteroff, J. A. Decoherence in adiabatic quantum computation. Phys. Rev. A 79, 022107, https://doi.org/10.1103/PhysRevA.79.022107 0708.0384 (2009).

Albash, T. & Lidar, D. A. Decoherence in adiabatic quantum computation. Phys. Rev. A 91, 062320, https://doi.org/10.1103/PhysRevA.91.062320 1503.08767 (2015).

Wang, W., Machta, J. & Katzgraber, H. G. Bond chaos in spin glasses revealed through thermal boundary conditions. Phys. Rev. B 93, 224414, https://doi.org/10.1103/PhysRevB.93.224414 1603.00543 (2016).

Nishimura, K., Nishimori, H., Ochoa, A. J. & Katzgraber, H. G. Retrieving the ground state of spin glasses using thermal noise: Performance of quantum annealing at finite temperatures. Phys. Rev. E 94, 032105, https://doi.org/10.1103/PhysRevE.94.032105 1605.03303 (2016).

Marshall, J., Rieffel, E. G. & Hen, I. Thermalization, Freeze-out, and Noise: Deciphering Experimental Quantum Annealers. Phys. Rev. Appl. 8, 064025, https://doi.org/10.1103/PhysRevApplied.8.064025 1703.03902 (2017).

Rønnow, T. F. et al. Defining and detecting quantum speedup. Science 345, 420–424 (2014).

Katzgraber, H. G., Hamze, F. & Andrist, R. S. Glassy chimeras could be blind to quantum speedup: Designing better benchmarks for quantum annealing machines. Phys. Rev. X 4, 021008 (2014).

Denchev, V. S. et al. What is the computational value of finite-range tunneling? Phys. Rev. X 6, 031015 (2016).

Mandrà, S., Zhu, Z., Wang, W., Perdomo-Ortiz, A. & Katzgraber, H. G. Strengths and weaknesses of weak-strong cluster problems: A detailed overview of state-of-the-art classical heuristics versus quantum approaches. Phys. Rev. A 94, 022337, https://doi.org/10.1103/PhysRevA.94.022337 (2016).

Albash, T. & Lidar, D. A. Demonstration of a scaling advantage for a quantum annealer over simulated annealing. Phys. Rev. X 8, 031016, https://doi.org/10.1103/PhysRevX.8.031016 (2018).

Mandrà, S. & Katzgraber, H. G. A deceptive step towards quantum speedup detection. Quantum Sci. Technol. 3, 04LT01 (2018).

Choi, V. Minor-Embedding in Adiabatic Quantum Computation: I. The Parameter Setting Problem. ArXiv e-prints 0804.4884 (2008).

Choi, V. Minor-embedding in adiabatic quantum computation: I. the parameter setting problem. Quantum Inf. Process. 7, 193–209 (2008).

Klymko, C. F., Sullivan, B. D. & Humble, T. S. Adiabatic quantum programming: minor embedding with hard faults. Quantum Inf. Process. 13, 709–729 (2014).

Inc., D.-W. S. Developer guide for python (2018).

Acknowledgements

We thank Hidetoshi Nishimori (Tokyo Tech), Tetsuo Hatsuda (RIKEN), and Chih-Chieh Chen (ITRI) for discussions. This work was performed under the auspices of the U.S. Department of Energy by LLNL under Contract No. DE-AC52-07NA27344 (AG). Access to the D-Wave 2000Q computing system was provided by Oak Ridge National Laboratory. TSH acknowledges support from the Department of Energy, Office of Science, Early Career Research Project and the ORNL Directed Research and Development funds. This manuscript has been authored by UT-Battelle, LLC, under Contract No. DE-AC0500OR22725 with the U.S. Department of Energy. The Department of Energy will provide public access to these results of federally sponsored research in accordance with the DOE Public Access Plan (http://energy.gov/downloads/doe-public-access-plan).

Author information

Authors and Affiliations

Contributions

Initial idea was proposed by C.C.C. Design of test cases was done by A.G. and C.C.C. Conjugate-gradient solves was done by A.G. Calculation done on the DWave 2000Q was performed by C.C.C. and T.S.H. Cross checks with density matrix renormalization group was performed by S.S. and C.C.C. All authors contributed to writing and editing of the final manuscript.

Corresponding author

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chang, C.C., Gambhir, A., Humble, T.S. et al. Quantum annealing for systems of polynomial equations. Sci Rep 9, 10258 (2019). https://doi.org/10.1038/s41598-019-46729-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-019-46729-0

This article is cited by

-

Parallel in time dynamics with quantum annealers

Scientific Reports (2020)

-

Hybrid classical-quantum linear solver using Noisy Intermediate-Scale Quantum machines

Scientific Reports (2019)

-

Least-squares solutions to polynomial systems of equations with quantum annealing

Quantum Information Processing (2019)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.