Abstract

We introduce a deceptively simple behavioral task that robustly identifies two qualitatively different groups within the general population. When presented with an isochronous train of random syllables, some listeners are compelled to align their own concurrent syllable production with the perceived rate, whereas others remain impervious to the external rhythm. Using both neurophysiological and structural imaging approaches, we show group differences with clear consequences for speech processing and language learning. When listening passively to speech, high synchronizers show increased brain-to-stimulus synchronization over frontal areas, and this localized pattern correlates with precise microstructural differences in the white matter pathways connecting frontal to auditory regions. Finally, the data expose a mechanism that underpins performance on an ecologically relevant word-learning task. We suggest that this task will help to better understand and characterize individual performance in speech processing and language learning.

Code availability

All computer code used for this study is available upon request.

Data availability

All data needed to evaluate the conclusions in the paper are present in the paper and/or the Supplementary Information. Additional data related to this paper may be requested from the authors.

References

Condon, W. S. & Sander, L. W. Neonate movement is synchronized with adult speech: interactional participation and language acquisition. Science 183, 99–101 (1974).

Repp, B. H. Sensorimotor synchronization: a review of the tapping literature. Psychon. Bull. Rev. 12, 969–992 (2005).

Woodruff Carr, K., White-Schwoch, T., Tierney, A. T., Strait, D. L. & Kraus, N. Beat synchronization predicts neural speech encoding and reading readiness in preschoolers. Proc. Natl Acad. Sci. USA 111, 14559–14564 (2014).

Patel, A. D. The evolutionary biology of musical rhythm: was Darwin wrong? PLoS Biol. 12, e1001821 (2014).

Janata, P. & Grafton, S. T. Swinging in the brain: shared neural substrates for behaviors related to sequencing and music. Nat. Neurosci. 6, 682–687 (2003).

Merchant, H., Grahn, J., Trainor, L., Rohrmeier, M. & Fitch, W. T. Finding the beat: a neural perspective across humans and non-human primates. Philos. Trans. R. Soc. Lond. B Biol. Sci. 370, 20140093 (2015).

Assaneo, M. F. & Poeppel, D. The coupling between auditory and motor cortices is rate-restricted: evidence for an intrinsic speech–motor rhythm. Sci. Adv. 4, eaao3842 (2018).

Park, H., Ince, R. A. A., Schyns, P. G., Thut, G. & Gross, J. Frontal top–down signals increase coupling of auditory low-frequency oscillations to continuous speech in human listeners. Curr. Biol. 25, 1649–1653 (2015).

Steele, C. J., Bailey, J. A., Zatorre, R. J. & Penhune, V. B. Early musical training and white-matter plasticity in the corpus callosum: evidence for a sensitive period. J. Neurosci. 33, 1282–1290 (2013).

Varnet, L., Ortiz-Barajas, M. C., Erra, R. G., Gervain, J. & Lorenzi, C. A cross-linguistic study of speech modulation spectra. J. Acoust. Soc. Am. 142, 1976–1989 (2017).

Ding, N. et al. Temporal modulations in speech and music. Neurosci. Biobehav. Rev. 81(Pt. B), 181–187 (2017).

Long, M. A. et al. Functional segregation of cortical regions underlying speech timing and articulation. Neuron 89, 1187–1193 (2016).

Magrassi, L., Aromataris, G., Cabrini, A., Annovazzi-Lodi, V. & Moro, A. Sound representation in higher language areas during language generation. Proc. Natl Acad. Sci. USA 112, 1868–1873 (2015).

Ripollés, P. et al. Strength of temporal white matter pathways predicts semantic learning. J. Neurosci. 37, 11101–11113 (2017).

López-Barroso, D. et al. Word learning is mediated by the left arcuate fasciculus. Proc. Natl Acad. Sci. USA 110, 13168–13173 (2013).

Thiebaut de Schotten, M. et al. Atlasing location, asymmetry and inter-subject variability of white matter tracts in the human brain with MR diffusion tractography. Neuroimage 54, 49–59 (2011).

Lopez-Barroso, D. et al. Language learning under working memory constraints correlates with microstructural differences in the ventral language pathway. Cereb. Cortex 21, 2742–2750 (2011).

Saffran, J. R., Aslin, R. N. & Newport, E. L. Statistical learning by 8-month-old infants. Science 274, 1926–1928 (1996).

Morillon, B. & Baillet, S. Motor origin of temporal predictions in auditory attention. Proc. Natl Acad. Sci. USA 114, E8913–E8921 (2017).

Cummins, F. Rhythm as entrainment: the case of synchronous speech. J. Phonetics 37, 16–28 (2009).

Hickok, G. & Poeppel, D. The cortical organization of speech processing. Nat. Rev. Neurosci. 8, 393–402 (2007).

Rauschecker, J. P. & Scott, S. K. Maps and streams in the auditory cortex: nonhuman primates illuminate human speech processing. Nat. Neurosci. 12, 718–724 (2009).

Pulvermüller, F. & Fadiga, L. Active perception: sensorimotor circuits as a cortical basis for language. Nat. Rev. Neurosci. 11, 351–360 (2010).

Catani, M., Jones, D. K. & Ffytche, D. H. Perisylvian language networks of the human brain. Ann. Neurol. 57, 8–16 (2005).

Zatorre, R. J., Fields, R. D. & Johansen-Berg, H. Plasticity in gray and white: neuroimaging changes in brain structure during learning. Nat. Neurosci. 15, 528–536 (2012).

Krakauer, J. W., Ghazanfar, A. A., Gomez-Marin, A., MacIver, M. A. & Poeppel, D. Neuroscience needs behavior: correcting a reductionist bias. Neuron 93, 480–490 (2017).

Hage, S. R. & Nieder, A. Dual neural network model for the evolution of speech and language. Trends Neurosci. 39, 813–829 (2016).

Guenther, F. H. Speech sound acquisition, coarticulation, and rate effects in a neural network model of speech production. Psychol. Rev. 102, 594–621 (1995).

Turken, A. U. & Dronkers, N. F. The neural architecture of the language comprehension network: converging evidence from lesion and connectivity analyses. Front. Syst. Neurosci. 5, 1 (2011).

Fries, P. Rhythms for cognition: communication through coherence. Neuron 88, 220–235 (2015).

Bengtsson, S. L. et al. Extensive piano practicing has regionally specific effects on white matter development. Nat. Neurosci. 8, 1148–1150 (2005).

Pernet, C. R., Wilcox, R. & Rousselet, G. A. Robust correlation analyses: false positive and power validation using a new open source Matlab toolbox. Front. Psychol. 3, 606 (2013).

Rousseeuw, P. J. & Van Driessen, K. A fast algorithm for the minimum covariance determinant estimator. Technometrics 41, 212–223 (1999).

Verboven, S. & Hubert, M. LIBRA: a MATLAB library for robust analysis. Chemom. Intell. Lab. Syst. 75, 127–136 (2005).

Rousselet, G. A. & Pernet, C. R. Improving standards in brain–behavior correlation analyses. Front. Hum. Neurosci. 6, 119 (2012).

Cureton, E. E. Rank-biserial correlation. Psychometrika 21, 287–290 (1956).

Kerby, D. S. The simple difference formula: an approach to teaching nonparametric correlation. Compr. Psychol. https://doi.org/10.2466/11.IT.3.1 (2014).

Dutoit, T. & Pagel, V. Le projet MBROLA: vers un ensemble de synthétiseurs vocaux disponibles gratuitement pour utilisation non-commerciale. Actes des Journées d'Études sur la Parole, Avignon 441–444 (1996).

Ermentrout, G. B. & Rinzel, J. Beyond a pacemaker’s entrainment limit: phase walk-through. Am. J. Physiol. 246, R102–R106 (1984).

Strogatz, S. H. Nonlinear Dynamics and Chaos with Applications to Physics, Biology, Chemistry, and Engineering (Perseus Books, 1994).

Chen, D. L., Schonger, M. & Wickens, C. oTree—an open-source platform for laboratory, online, and field experiments. J. Behav. Exp. Financ. 9, 88–97 (2016).

Huss, M., Verney, J. P., Fosker, T., Mead, N. & Goswami, U. Music, rhythm, rise time perception and developmental dyslexia: perception of musical meter predicts reading and phonology. Cortex 47, 674–689 (2011).

Crump, M. J. C., McDonnell, J. V. & Gureckis, T. M. Evaluating Amazon’s Mechanical Turk as a tool for experimental behavioral research. PLoS One 8, e57410 (2013).

Chi, T. & Shamma, S. NSL Matlab Toolbox https://isr.umd.edu/Labs/NSL/Software.htm (2003).

Arthur, D. & Vassilvitskii, S. k-means++: the advantages of careful seeding. in Proc. 18th Ann. ACM-SIAM Symp. Discrete Algorithms 1027–1035 (Society for Industrial and Applied Mathematics, 2007).

Oostenveld, R., Fries, P., Maris, E. & Schoffelen, J. M. FieldTrip: open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput. Intell. Neurosci. 2011, 156869 (2011).

Adachi, Y., Shimogawara, M., Higuchi, M., Haruta, Y. & Ochiai, M. Reduction of non-periodic environmental magnetic noise in MEG measurement by Continuously Adjusted Least squares Method. IEEE Trans. Appl. Supercond. 11, 669–672 (2001).

de Cheveigné, A. & Simon, J. Z. Denoising based on time-shift PCA. J. Neurosci. Methods 165, 297–305 (2007).

Nolte, G. The magnetic lead field theorem in the quasi-static approximation and its use for magnetoencephalography forward calculation in realistic volume conductors. Phys. Med. Biol. 48, 3637–3652 (2003).

Fan, L. et al. The human Brainnetome Atlas: a new brain atlas based on connectional architecture. Cereb. Cortex 26, 3508–3526 (2016).

Poeppel, D. The analysis of speech in different temporal integration windows: cerebral lateralization as ‘asymmetric sampling in time’. Speech Commun. 41, 245–255 (2003).

Zatorre, R. J. & Belin, P. Spectral and temporal processing in human auditory cortex. Cereb. Cortex 11, 946–953 (2001).

Boemio, A., Fromm, S., Braun, A. & Poeppel, D. Hierarchical and asymmetric temporal sensitivity in human auditory cortices. Nat. Neurosci. 8, 389–395 (2005).

Giraud, A. L. et al. Endogenous cortical rhythms determine cerebral specialization for speech perception and production. Neuron 56, 1127–1134 (2007).

Telkemeyer, S. et al. Sensitivity of newborn auditory cortex to the temporal structure of sounds. J. Neurosci. 29, 14726–14733 (2009).

Jenkinson, M., Beckmann, C. F., Behrens, T. E. J., Woolrich, M. W. & Smith, S. M. FSL. Neuroimage 62, 782–790 (2012).

Leemans, A. & Jones, D. K. The B-matrix must be rotated when correcting for subject motion in DTI data. Magn. Reson. Med. 61, 1336–1349 (2009).

Smith, S. M. Fast robust automated brain extraction. Hum. Brain Mapp. 17, 143–155 (2002).

Wang, R., Benner, T., Sorensen, A. G. & Wedeen, V. J. Diffusion Toolkit: a software package for diffusion imaging data processing and tractography. Proc. Int. Soc. Magn. Reson. Med. 15, 3720 (2007).

Smith, S. M. et al. Tract-based spatial statistics: voxelwise analysis of multi-subject diffusion data. Neuroimage 31, 1487–1505 (2006).

Andersson, J. L. R., Jenkinson, M. & Smith, S. Non-linear registration, aka spatial normalisation. FMRIB Technical Report TR07JA2. (Oxford Centre for Functional Magnetic Resonance Imaging of the Brain, Department of Clinical Neurology, Oxford University, 2007).

Catani, M. et al. Symmetries in human brain language pathways correlate with verbal recall. Proc. Natl Acad. Sci. USA 104, 17163–17168 (2007).

Rojkova, K. et al. Atlasing the frontal lobe connections and their variability due to age and education: a spherical deconvolution tractography study. Brain Struct. Funct. 221, 1751–1766 (2016).

Smith, S. M. & Nichols, T. E. Threshold-free cluster enhancement: addressing problems of smoothing, threshold dependence and localisation in cluster inference. Neuroimage 44, 83–98 (2009).

Nichols, T. E. & Holmes, A. P. Nonparametric permutation tests for functional neuroimaging: a primer with examples. Hum. Brain Mapp. 15, 1–25 (2002).

Duffau, H. et al. New insights into the anatomo-functional connectivity of the semantic system: a study using cortico-subcortical electrostimulations. Brain 128, 797–810 (2005).

Acknowledgements

We thank J. Rowland and J.-R. King for comments and advice. This work was supported by NIH grant 2R01DC05660 (D.P.) and FP7 Ideas: European Research Council grant ERC-StG-313841 (R.d.D.-B.).

Author information

Contributions

M.F.A., P.R., J.O., W.M.L., R.d.D.-B. and D.P. designed the research and wrote the manuscript; M.F.A., P.R., J.O. and W.M.L. acquired and analyzed the data.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Journal peer review information: Nature Neuroscience thanks Sylvain Baillet, Narly Golestani and other anonymous reviewer(s) for their contribution to the peer review of this work.

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Integrated supplementary information

Supplementary Figure 1 Demographic information.

(a) Age distribution within groups; age did not differ significantly between groups (Mann-Whitney-Wilcoxon test, two-sided p = 0.22). (b) Percentage of female participants within each group; the gender distribution did not differ significantly between groups (Fisher’s exact test, p = 0.82). (c) Percentage of early multilingual participants: participants who learned at least one other language in addition to English before 4 years of age. No significant difference between groups (Fisher’s exact test, p = 0.19). (d) Percentage of late multilingual participants: participants who learned at least one other language in addition to English after 4 years of age. No significant difference between groups (Fisher’s exact test, p = 0.19). (e) Years of music experience: number of years during which the participant played at least one instrument for more than 2 hours per week (Mann-Whitney-Wilcoxon test, two-sided p = 0.0032). Double asterisks indicate a significant difference between groups. In all previous panels: orange/light blue correspond to high/low synchronizers (Nhigh = 43 and Nlow = 41), dots to individual participants, black lines to the mean across participants, and the shadowed region to the SD. (f) Histogram of the years of music experience (N = 75). Participants above one SD were excluded.

Supplementary Figure 2 SSS-test, accelerated version.

(a) Histogram of the PLVs between the envelopes of the perceived and produced speech signals, bandpass filtered at 3.5–5.5 Hz. Two clusters were obtained with a k-means algorithm (the black line represents the threshold; individuals above/below this line are labeled as high/low). (b) Spectrograms of the produced speech envelopes. Upper panel: average across high synchronizers (Nhigh = 33); the red trace represents the time evolution of the perceived syllable rate. Lower panel: average across low synchronizers (Nlow = 22). (c) Reported perception. Percent of blocks reported as: ‘The rate of the presented syllables did not change/increased/decreased’. Orange/blue correspond to high/low synchronizers’ answers.
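As an illustration of the grouping step described in panel (a), the following is a minimal sketch, not the authors' code. It assumes that the instantaneous phases of the two band-limited envelopes have already been extracted (for example with a Hilbert transform, which is not shown), computes the phase-locking value from those phases, and then splits a set of PLVs into two groups with a one-dimensional k-means. The function names and the min/max initialization of the centroids are assumptions made for the example.

```python
import cmath


def phase_locking_value(phases_a, phases_b):
    """PLV = |mean(exp(i * (phase_a - phase_b)))|, a value between 0 and 1."""
    if len(phases_a) != len(phases_b) or not phases_a:
        raise ValueError("phase series must be non-empty and of equal length")
    total = sum(cmath.exp(1j * (a - b)) for a, b in zip(phases_a, phases_b))
    return abs(total) / len(phases_a)


def two_cluster_threshold(values, n_iter=100):
    """1-D k-means with k = 2; returns the midpoint between the two centroids.

    Centroids start at the minimum and maximum value (an assumption made
    here so that the example is deterministic).
    """
    lo, hi = min(values), max(values)
    if lo == hi:
        raise ValueError("need at least two distinct values")
    for _ in range(n_iter):
        # Assign each value to its nearest centroid.
        low_group = [v for v in values if abs(v - lo) <= abs(v - hi)]
        high_group = [v for v in values if abs(v - lo) > abs(v - hi)]
        # Move each centroid to the mean of its group.
        new_lo = sum(low_group) / len(low_group)
        new_hi = sum(high_group) / len(high_group)
        if (new_lo, new_hi) == (lo, hi):
            break
        lo, hi = new_lo, new_hi
    return (lo + hi) / 2.0


# Hypothetical PLVs for six participants (illustrative values, not study data).
plvs = [0.10, 0.20, 0.15, 0.70, 0.80, 0.75]
threshold = two_cluster_threshold(plvs)
labels = ["high" if p > threshold else "low" for p in plvs]
```

In one dimension with two clusters, the k-means decision boundary is the midpoint between the centroids, which is why a single threshold is enough to label participants as high or low.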

Supplementary Figure 3 SSS-test, online version.

(a) Histogram of the PLVs between the envelopes of the perceived and produced speech signals, bandpass filtered at 3.5–5.5 Hz. Two clusters were obtained with a k-means algorithm (the black line represents the threshold; individuals above/below this line are labeled as high/low). (b) Rhythm perception task: participants’ d-prime scores. High synchronizers performed marginally better than low synchronizers (Nhigh = 35 and Nlow = 34; Mann-Whitney-Wilcoxon test, two-sided p = 0.08). Dots: individual subjects. Black lines: mean across participants. Shadowed region: SD. (c) Rhythm production task: average spectra of the utterances’ envelopes. Low synchronizers showed more power than high synchronizers, although only marginally, for frequencies from 3.6 to 3.7 Hz. Straight lines on top: marginal difference between groups (Nhigh = 31 and Nlow = 44; Wilcoxon signed rank test, two-sided uncorrected p < 0.001). No frequency survived FDR correction at two-sided p = 0.05. Shadowed region: SD.
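The d-prime scores in panel (b) are a standard signal-detection measure. The caption does not state how they were computed, so the sketch below uses the textbook definition, d′ = z(hit rate) − z(false-alarm rate), as an assumption. The function name is hypothetical.

```python
from statistics import NormalDist


def d_prime(hit_rate, false_alarm_rate):
    """Sensitivity index d' = z(hit rate) - z(false-alarm rate).

    Both rates must lie strictly between 0 and 1; otherwise
    NormalDist.inv_cdf raises statistics.StatisticsError.
    """
    z = NormalDist().inv_cdf  # inverse CDF of the standard normal
    return z(hit_rate) - z(false_alarm_rate)
```

A participant who responds at chance (equal hit and false-alarm rates) obtains d′ = 0, and larger values indicate better discrimination.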

Supplementary Figure 4 Word-learning task and accelerated SSS-test, online version.

(a) Histogram of the PLVs between the envelopes of the perceived and produced speech signals, bandpass filtered at 3.5–5.5 Hz. Two clusters were obtained with a k-means algorithm (the black line represents the threshold; individuals above/below this line are labeled as high/low). (b) Percent of correct answers for the statistical word-learning task (Nhigh = 25, Nlow = 35; r = 0.37, Rank-Biserial Correlation; Mann-Whitney-Wilcoxon test, two-sided p = 0.015). Orange/light blue correspond to high/low synchronizers. * p < 0.05. Dots: individual participants. Black lines: mean across participants. Shadowed region: SD. Green dashed line: chance level in a two-alternative forced-choice post-learning task.
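The rank-biserial correlation reported as an effect size in panel (b) can be illustrated with the simple difference formula (Kerby, cited in the References): the proportion of between-group pairs that favor one group minus the proportion that favor the other. The sketch below is a minimal illustration with a hypothetical function name, not the authors' analysis code; tied pairs are counted as neither favorable nor unfavorable.

```python
def rank_biserial(group_a, group_b):
    """Simple difference formula: P(a > b) - P(a < b) over all (a, b) pairs."""
    if not group_a or not group_b:
        raise ValueError("both groups must be non-empty")
    favorable = sum(1 for a in group_a for b in group_b if a > b)
    unfavorable = sum(1 for a in group_a for b in group_b if a < b)
    return (favorable - unfavorable) / (len(group_a) * len(group_b))
```

The value ranges from −1 (every member of the second group exceeds every member of the first) to 1 (the reverse), with 0 indicating no systematic difference.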

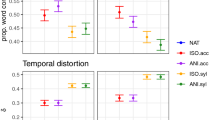

Supplementary Figure 5 Behavioral results from neurophysiological study.

Syllable detection task, percent of correctly identified syllables (N = 37, Wilcoxon Signed-Rank test, two-sided p = 0.011). Orange/blue correspond to high/low synchronizers. Dots: individual participants. Black lines: mean across participants. Shadowed region: SD. Green dashed line: chance level.

Supplementary Figure 6 Brain-to-stimulus synchronization data from neurophysiological study.

(a) PLV between brain activity and the cochlear envelope of the perceived syllables within each region where high and low synchronizers were significantly different. The aim of the scatter plots is to visualize the magnitude of the effect. Accordingly, the Rank-Biserial correlation for each region is: rBA9/46d = 0.55, rIFJ = 0.52, rBA9/46v = 0.51, rBA44d = 0.52, rIFS = 0.51, rBA45c = 0.52, rBA44v = 0.59, and rBA44op = 0.53. Orange/blue correspond to high/low synchronizers (Nhigh = 18 and Nlow = 19). Dots: individual participants. Black lines: mean across participants. Shadowed region: SD. Green dashed line: chance level. (b) Temporal ROI comprising bilateral superior, middle and posterior temporal gyri. (c) Whole brain surface map showing the PLV differences between groups (PLVhighs - PLVlows).

Supplementary Figure 7 Asymmetry of auditory entrainment results in neurophysiological study.

(a) Auditory asymmetry: comparison between groups. Asymmetry was computed as (PLVright − PLVleft)/[0.5 × (PLVright + PLVleft)]. The asymmetry of auditory entrainment was significantly different between groups (r = 0.42, Rank-Biserial Correlation; Mann-Whitney-Wilcoxon test, two-sided p = 0.029). Right inset: ROIs, left and right early auditory regions. (b) Brain-to-stimulus synchrony in each hemisphere averaged within temporal ROIs. The data show that, while the typical rightward lateralization in tracking the speech envelope was present in low synchronizers, it was reduced in high synchronizers (Wilcoxon signed-rank test, two-sided plow = 0.0013 and phigh = 0.089). (c) Scatter plot of the correlation between structural and neurophysiological values: mean FA laterality as a function of the asymmetry of auditory entrainment. There was a significant relationship (N = 36, Spearman r = 0.36, p = 0.026; Skipped Spearman r = 0.38, t = 2.40, CI = 0.04, 0.66) between the neurophysiological auditory asymmetry and the structural laterality of the white matter cluster (see Fig. 3 for the cluster) that differentiates between groups. While the structural leftwards laterality and the reduced rightward asymmetry shown by the high synchronizers might seem counterintuitive, in both cases high synchronizers show a more leftwards pattern of results as compared to low synchronizers (the correlation between structural laterality and auditory asymmetry is positive). Orange/light blue correspond to high/low synchronizers, respectively. ** p < 0.005 (Wilcoxon signed-rank test), * p < 0.05 (Mann-Whitney-Wilcoxon test). Dots: individual participants. Black lines: mean across participants. Shadowed region: SD.
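The asymmetry index defined in panel (a) is the right-minus-left PLV difference normalized by the mean PLV of the two hemispheres. A minimal worked sketch follows; the function name and the input values are hypothetical and chosen only to show the arithmetic.

```python
def asymmetry_index(plv_right, plv_left):
    """(PLV_right - PLV_left) / (0.5 * (PLV_right + PLV_left)).

    Positive values indicate rightward asymmetry, negative values leftward.
    """
    mean_plv = 0.5 * (plv_right + plv_left)
    if mean_plv == 0:
        raise ValueError("mean PLV is zero; the index is undefined")
    return (plv_right - plv_left) / mean_plv


# Illustrative values only: a right PLV of 0.3 and a left PLV of 0.1
# give a difference of 0.2 over a mean of 0.2, an index close to 1.
example = asymmetry_index(0.3, 0.1)
```

Because PLVs are non-negative, the index is bounded between −2 and 2, and it equals 0 when both hemispheres track the stimulus equally.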

Supplementary Figure 8 Tractography results.

(a) Box plots showing the mean (center line) and SD (grey areas) of the volumes (corrected for TIV, see tractography methods) for the total left arcuate (sum of the anterior, posterior and long segment volumes; left panel; N = 36, Wilcoxon signed-rank test, two-sided p = 0.0025; r = 0.60, Rank-Biserial correlation; FDR-corrected for multiple comparisons) and the two control tracts (right panel: left IFOF, two-sided p = 0.76, r = 0.06, Rank-Biserial correlation, and left ILF, two-sided p = 0.57, r = 0.11, Rank-Biserial correlation). Even though we cannot specify which exact segment of the arcuate is responsible for these differences (the volume of each of the three segments of the arcuate on their own did not differentiate between high and low synchronizers: long segment, p = 0.21, r = 0.24; anterior segment, p = 1, r = 0; posterior segment, p = 0.079, r = 0.34, Rank-Biserial correlation), the results do show that the dorsal pathway for language processing, which connects temporo-parietal regions with premotor areas and the inferior frontal cortex, is structurally enhanced in high synchronizers as compared to low synchronizers. (b) Arcuate dissections (long segment in red, anterior in green and posterior in yellow) for representative high (top) and low (bottom) synchronizers. The arcuate dissections for the remaining 30 individuals showed a similar result. The behavioral PLV obtained using the SSS-test is also shown. (c) Scatter plots display the correlation (N = 36) between the total volume of the left arcuate and the FA laterality values of the TBSS cluster (left panel; see Fig. 3 in the main manuscript; negative values imply a leftwards structural lateralization; the overlap, in yellow, between the TBSS cluster, in red, and a probabilistic atlas of the left arcuate fasciculus, in blue (ref. 63), is also shown), and between the total volume of the left arcuate and the synchrony of the left inferior/middle frontal gyri with the speech syllable rate (right panel). In other words, the larger the volume of the virtually dissected left arcuate, the more the TBSS FA cluster was lateralized to the left (see Fig. 3 in the main manuscript; Spearman’s r = −0.46, p = 0.0051; Skipped Spearman r = −0.43, t = −2.80, CI = −0.10, −0.72), and the higher the brain-to-stimulus synchrony in frontal regions was (that is, neurophysiology; Spearman’s r = 0.35, p = 0.036; Skipped Spearman r = 0.35, t = 2.18, CI = 0.02, 0.63). In contrast, the volumes of the left IFOF and left ILF did not differentiate between groups and were not correlated with the FA TBSS cluster (IFOF: Spearman’s r = −0.12, p = 0.45; Skipped Spearman r = −0.09, t = −0.52, CI = −0.42, 0.28; ILF: Spearman’s r = −0.18, p = 0.28; Skipped Spearman r = −0.19, t = −1.17, CI = −0.51, 0.15) or with the frontal neurophysiological results (IFOF: Spearman’s r = −0.006, p = 0.97; Skipped Spearman r = 0.07, t = 0.46, CI = −0.29, 0.42; ILF: Spearman’s r = −0.10, p = 0.52; Skipped Spearman r = −0.22, t = −1.35, CI = −0.55, 0.13). No significant differences were found for FA or RD measures (all ps > 0.11). Orange/blue, high/low synchronizers. Dots: individual participants. Black lines: mean across participants. Shadowed region: SD.

Supplementary Figure 9 SSS-test, joint bimodal distribution.

For each joint distribution we calculated Hartigans’ dip test statistic for testing unimodality (Hartigan, J.A., Hartigan, P.M. The Dip test of Unimodality. Annals of Statistics, 13, 70–84, 1985) using R (R Core Team. R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. URL http://www.R-project.org/. 2013) and the diptest package (https://cran.r-project.org/web/packages/diptest/index.html). Although Hartigans’ dip test does not directly test for bimodality, it allows one to reject the null hypothesis that the tested distribution is unimodal. The figure shows the histogram of the PLVs between the envelopes of the perceived and produced speech signals, bandpass filtered at 3.5–5.5 Hz, for (a) all the SSS-test experiments pooled together (N = 388; stable rate in-lab, stable rate in-lab replication, stable rate online version, accelerated rate in-lab and accelerated online version); (b) all the SSS-test experiments with a stable rate pooled together (N = 273; stable rate in-lab, stable rate in-lab replication and stable rate online version); and (c) all the SSS-test experiments with an accelerated syllable rate pooled together (N = 155; accelerated rate in-lab and accelerated online version). D, dip test statistic.

Supplementary information

Supplementary Figures 1–9, Supplementary Table 1

About this article

Cite this article

Assaneo, M.F., Ripollés, P., Orpella, J. et al. Spontaneous synchronization to speech reveals neural mechanisms facilitating language learning. Nat Neurosci 22, 627–632 (2019). https://doi.org/10.1038/s41593-019-0353-z

DOI: https://doi.org/10.1038/s41593-019-0353-z