Abstract

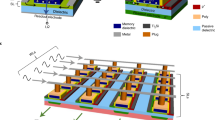

In the nervous system, dendrites, branches of neurons that transmit signals between synapses and soma, play a critical role in processing functions, such as nonlinear integration of postsynaptic signals. The lack of these critical functions in artificial neural networks compromises their performance, for example in terms of flexibility, energy efficiency and the ability to handle complex tasks. Here, by developing artificial dendrites, we experimentally demonstrate a complete neural network fully integrated with synapses, dendrites and soma, implemented using scalable memristor devices. We perform a digit recognition task and simulate a multilayer network using experimentally derived device characteristics. The power consumption is more than three orders of magnitude lower than that of a central processing unit and 70 times lower than that of a typical application-specific integrated circuit chip. This network, equipped with functional dendrites, shows the potential of substantial overall performance improvement, for example by extracting critical information from a noisy background with significantly reduced power consumption and enhanced accuracy.

Data availability

The dataset used in this study is publicly available (ref. 52) at http://ufldl.stanford.edu/housenumbers/. Other data that support the findings of this study are available from the corresponding author upon reasonable request. Source data are provided with this paper.
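As a rough illustration of how the public SVHN data could be prepared for a network with 3,072 inputs (the input size given in Extended Data Fig. 5), the sketch below flattens 32 × 32 × 3 images into vectors. It assumes the cropped-digit `.mat` layout distributed on the dataset page, in which images are stored as a `(32, 32, 3, N)` array and the digit 0 carries the label 10. The function name `svhn_to_vectors` and the scaling to [0, 1] are illustrative choices, not the authors' preprocessing.

```python
import numpy as np


def svhn_to_vectors(X, y):
    """Flatten SVHN cropped digits into row vectors (hypothetical helper).

    X: uint8 array of shape (32, 32, 3, N), as assumed for the cropped-digit files.
    y: label array of shape (N, 1) or (N,), where label 10 is assumed to mean digit 0.
    Returns (vectors, labels) with shapes (N, 3072) and (N,).
    """
    n = X.shape[-1]
    # Move the sample axis first, then flatten each image to 32 * 32 * 3 = 3072 values.
    vectors = np.transpose(X, (3, 0, 1, 2)).reshape(n, -1).astype(np.float32) / 255.0
    # Map label 10 back to digit 0; labels 1..9 are unchanged.
    labels = np.asarray(y).reshape(-1).astype(np.int64) % 10
    return vectors, labels


# Synthetic stand-in data; real arrays could be read with scipy.io.loadmat
# (assumption: keys "X" and "y" in the downloaded file).
rng = np.random.default_rng(0)
X_demo = rng.integers(0, 256, size=(32, 32, 3, 5), dtype=np.uint8)
y_demo = np.array([[1], [10], [3], [9], [10]])
vectors, labels = svhn_to_vectors(X_demo, y_demo)
```

Each row of `vectors` could then be applied as the input of the first layer; how the authors encoded pixel values as voltages is not stated here.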

Code availability

The code that supports the multilayer neural network simulations in this study is available at https://github.com/Tsinghua-LEMON-Lab/Dendritic-computing. Other codes that support the findings of this study are available from the corresponding author upon reasonable request.

References

Simonyan, K. & Zisserman, A. Two-stream convolutional networks for action recognition in videos. In Proceedings of the 27th International Conference on Neural Information Processing Systems 568–576 (MIT Press, 2014).

Deng, L. et al. Recent advances in deep learning for speech research at Microsoft. In Proc. IEEE International Conference on Acoustics, Speech and Signal Processing 8604–8608 (IEEE, 2013).

Esteva, A. et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature 542, 115–118 (2017).

Silver, D. et al. Mastering the game of Go with deep neural networks and tree search. Nature 529, 484–489 (2016).

Chen, C., Seff, A., Kornhauser, A. & Xiao, J. Deepdriving: learning affordance for direct perception in autonomous driving. In Proc. IEEE International Conference on Computer Vision (ICCV) 2722–2730 (IEEE, 2015).

Le, Q. V. Building high-level features using large scale unsupervised learning. In Proc. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) 8595–8598 (IEEE, 2013).

Jouppi, N. P. et al. In-datacenter performance analysis of a tensor processing unit. In Proceedings of the 44th Annual International Symposium on Computer Architecture 1–12 (ACM, 2017).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Tang, J. et al. Bridging biological and artificial neural networks with emerging neuromorphic devices: fundamentals, progress and challenges. Adv. Mater. 31, 1902761 (2019).

Tsien, J. Z. Principles of intelligence: on evolutionary logic of the brain. Front. Syst. Neurosci. 9, 186 (2016).

Moore, J. J. et al. Dynamics of cortical dendritic membrane potential and spikes in freely behaving rats. Science 355, eaaj1497 (2017).

Takahashi, N., Oertner, T. G., Hegemann, P. & Larkum, M. E. Active cortical dendrites modulate perception. Science 354, 1587–1590 (2016).

Takahashi, N. et al. Locally synchronized synaptic inputs. Science 335, 353–356 (2012).

Trenholm, S. et al. Nonlinear dendritic integration of electrical and chemical synaptic inputs drives fine-scale correlations. Nat. Neurosci. 17, 1759–1766 (2014).

Antic, S. D., Zhou, W.-L., Moore, A. R., Short, S. M. & Ikonomu, K. D. The decade of the dendritic NMDA spike. J. Neurosci. Res. 88, 2991–3001 (2010).

Lavzin, M., Rapoport, S., Polsky, A., Garion, L. & Schiller, J. Nonlinear dendritic processing determines angular tuning of barrel cortex neurons in vivo. Nature 490, 397–401 (2012).

Trong, T. M. H., Motley, S. E., Wagner, J., Kerr, R. R. & Kozloski, J. Dendritic spines modify action potential back-propagation in a multicompartment neuronal model. IBM J. Res. Dev. 61, 11:11–11:13 (2017).

Hawkins, J. & Ahmad, S. Why neurons have thousands of synapses, a theory of sequence memory in neocortex. Front. Neural Circuits 10, 23 (2016).

Schemmel, J., Kriener, L., Müller, P. & Meier, K. An accelerated analog neuromorphic hardware system emulating NMDA- and calcium-based non-linear dendrites. In Proc. International Joint Conference on Neural Networks (IJCNN) 2217–2226 (IEEE, 2017).

Bhaduri, A., Banerjee, A., Roy, S., Kar, S. & Basu, A. Spiking neural classifier with lumped dendritic nonlinearity and binary synapses: a current mode VLSI implementation and analysis. Neural Comput. 30, 723–760 (2018).

Wang, Z. et al. Memristors with diffusive dynamics as synaptic emulators for neuromorphic computing. Nat. Mater. 16, 101–108 (2016).

Prezioso, M. et al. Training and operation of an integrated neuromorphic network based on metal-oxide memristors. Nature 521, 61–64 (2015).

Choi, S. et al. SiGe epitaxial memory for neuromorphic computing with reproducible high performance based on engineered dislocations. Nat. Mater. 17, 335–340 (2018).

Tuma, T., Pantazi, A., Le Gallo, M., Sebastian, A. & Eleftheriou, E. Stochastic phase-change neurons. Nat. Nanotechnol. 11, 693–699 (2016).

Pickett, M. D., Medeiros-Ribeiro, G. & Williams, R. S. A scalable neuristor built with Mott memristors. Nat. Mater. 12, 114–117 (2012).

Stoliar, P. et al. A leaky-integrate-and-fire neuron analog realized with a Mott insulator. Adv. Funct. Mater. 27, 1604740 (2017).

Ambrogio, S. et al. Equivalent-accuracy accelerated neural-network training using analogue memory. Nature 558, 60–67 (2018).

Sheridan, P. M. et al. Sparse coding with memristor networks. Nat. Nanotechnol. 12, 784–789 (2017).

Wang, Z. et al. Fully memristive neural networks for pattern classification with unsupervised learning. Nat. Electron. 1, 137–145 (2018).

Yao, P. et al. Fully hardware-implemented memristor convolutional neural network. Nature 577, 641–646 (2020).

Agmon-Snir, H., Carr, C. E. & Rinzel, J. The role of dendrites in auditory coincidence detection. Nature 393, 268–272 (1998).

Magee, J. C. Dendritic integration of excitatory synaptic input. Nat. Rev. Neurosci. 1, 181–190 (2000).

Branco, T., Clark, B. A. & Häusser, M. Dendritic discrimination of temporal input sequences in cortical neurons. Science 329, 1671–1675 (2010).

Vaidya, S. P. & Johnston, D. Temporal synchrony and gamma-to-theta power conversion in the dendrites of CA1 pyramidal neurons. Nat. Neurosci. 16, 1812–1820 (2013).

Stuart, G. J. & Spruston, N. Dendritic integration: 60 years of progress. Nat. Neurosci. 18, 1713–1721 (2015).

Cazemier, J. L., Clascá, F. & Tiesinga, P. H. E. Connectomic analysis of brain networks: novel techniques and future directions. Front. Neuroanat. 10, 110 (2016).

Fu, Z.-X. et al. Dendritic mitoflash as a putative signal for stabilizing long-term synaptic plasticity. Nat. Commun. 8, 31 (2017).

Bono, J. & Clopath, C. Modeling somatic and dendritic spike mediated plasticity at the single neuron and network level. Nat. Commun. 8, 706 (2017).

De Paola, V. et al. Cell type-specific structural plasticity of axonal branches and boutons in the adult neocortex. Neuron 49, 861–875 (2006).

Lai, H. C. & Jan, L. Y. The distribution and targeting of neuronal voltage-gated ion channels. Nat. Rev. Neurosci. 7, 548–562 (2006).

Strukov, D. B. & Williams, R. S. Exponential ionic drift: fast switching and low volatility of thin-film memristors. Appl. Phys. A 94, 515–519 (2008).

Wedig, A. et al. Nanoscale cation motion in TaOx, HfOx and TiOx memristive systems. Nat. Nanotechnol. 11, 67–74 (2015).

Kamiya, K. et al. Physics in designing desirable ReRAM stack structure—atomistic recipes based on oxygen chemical potential control and charge injection/removal. In Proc. International Electron Devices Meeting 20.22.21–20.22.24 (IEEE, 2012).

Goux, L. et al. Ultralow sub-500 nA operating current high-performance TiN/Al2O3/HfO2/Hf/TiN bipolar RRAM achieved through understanding-based stack-engineering. In Proc. Symposium on VLSI Technology (VLSIT) 159–160 (IEEE, 2012).

Palmer, L. M. et al. NMDA spikes enhance action potential generation during sensory input. Nat. Neurosci. 17, 383–390 (2014).

Ujfalussy, B. B., Makara, J. K., Lengyel, M. & Branco, T. Global and multiplexed dendritic computations under in vivo-like conditions. Neuron 100, 579–592 (2018).

Muñoz, W., Tremblay, R., Levenstein, D. & Rudy, B. Layer-specific modulation of neocortical dendritic inhibition during active wakefulness. Science 355, 954–959 (2017).

Chang, T., Jo, S.-H. & Lu, W. Short-term memory to long-term memory transition in a nanoscale memristor. ACS Nano 5, 7669–7676 (2011).

Ohno, T. et al. Short-term plasticity and long-term potentiation mimicked in single inorganic synapses. Nat. Mater. 10, 591–595 (2011).

Gao, B., Wu, H., Kang, J., Yu, H. & Qian, H. Oxide-based analog synapse: physical modeling, experimental characterization and optimization. In Proc. IEEE International Electron Devices Meeting (IEDM) 7.3.1–7.3.4 (IEEE, 2016).

Yao, P. et al. Face classification using electronic synapses. Nat. Commun. 8, 15199 (2017).

Netzer, Y. et al. Reading digits in natural images with unsupervised feature learning. In Proc. NIPS Workshop on Deep Learning and Unsupervised Feature Learning 1–9 (ACM, 2011).

Pei, J. et al. Towards artificial general intelligence with hybrid Tianjic chip architecture. Nature 572, 106–111 (2019).

Gidon, A. et al. Dendritic action potentials and computation in human layer 2/3 cortical neurons. Science 367, 83–87 (2020).

Acknowledgements

This work was supported in part by the China Key Research and Development Program (2019YFB2205403), the National Natural Science Foundation of China (61851404, 61674089 and 91964104), Beijing Municipal Science and Technology Project (Z191100007519008) and the National Young Thousand Talents Program.

Author information

Contributions

X.L., H.W. and S.S. conceived and designed the experiments. X.L. and Q.Z. performed the experiments. X.L., J.T., B.G. and H.W. analysed the data. P.Y., W.Z., L.D. and Y.X. contributed to the benchmark. W.W., N.D., J.J.Y. and H.Q. contributed to the materials and analysis. X.L., J.T., B.G. and H.W. wrote the paper. All authors discussed the results and commented on the manuscript. H.W. and H.Q. supervised the project.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature Nanotechnology thanks Alexantrou Serb and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

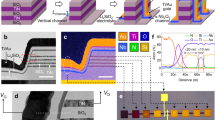

Extended Data Fig. 1 Characterizations of artificial dendrite device.

a,b, Current response from the fabricated artificial dendrite device under positive (a) and negative (b) voltage pulses. c, Cross-sectional transmission electron microscope image of the device and the corresponding element distribution profile from energy-dispersive spectroscopy (scale bar, 20 nm). An oxygen-rich layer (light color) was observed at the TaOx/Pt interface, probably formed by oxygen scavenging by Pt.

Extended Data Fig. 2 Device-to-device variation of the artificial dendrite devices.

a, Measured current response of three typical artificial dendrites in the integrated neural network shown in Fig. 5c under different applied voltages (from bottom to top: 2 V, 2.5 V and then from 3 V to 5 V in steps of 0.1 V). b, Measurement of the nonlinear filtering and integration property on eight artificial dendrite devices in response to a series of voltage pulses. c, Measurement of the nonlinear current response to a linearly ramped voltage pulse from 18 devices on a 4-inch wafer.

Extended Data Fig. 3 Inhibitory input and relaxation effects on the artificial dendrite device.

One inhibitory input cancelled out the integration effect of (a) one, (b) two, and (c) three excitatory inputs, respectively. d, Current response of the artificial dendrite to a series of voltage pulses to show the device in off, on, and relaxation states. Small voltage pulses (2 V, 1 ms) are applied before and after an on-switching voltage pulse (5 V, 10 ms).

Extended Data Fig. 4 Nonlinear current response of the artificial dendrite device.

Measured current responses on the artificial dendrite device in the (a) off-state, and (b) on-state, respectively. c, Current response under three consecutive voltage pulses (5 V in amplitude) lasting 1 ms applied at short intervals of 100 μs.

Extended Data Fig. 5 Simulation of multilayer neural network with artificial dendrites.

a, Illustration of the neural network example: three layers with sizes of (3072, 500, 10), nine dendritic branches for each neuron in the hidden layer, and four dendritic branches for each output neuron. b, Illustration of the neural network inference process on the SVHN dataset, where most noise information was filtered out through hierarchical dendritic filtering. For comparison, the image after the dendritic processing (that is, interlayer image) was reconstructed by combining different branches of the image processed by each dendrite, which was calculated by multiplying the dendrite’s output with the element-wise product of the input pixels and the corresponding synaptic weights.
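The structure described in this caption can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the authors' simulation code (which is linked under Code availability): the inputs of each neuron are assumed to be split into contiguous, nearly equal groups per branch, the dendrite is modelled by a hypothetical threshold function `dendrite`, the soma simply sums its branches, and the weights are random placeholders. Only the layer sizes, the branch counts and the reconstruction rule (dendrite output times the element-wise product of inputs and weights) come from the caption.

```python
import numpy as np


def dendrite(branch_sum, threshold=0.0):
    """Hypothetical dendritic nonlinearity: pass only supra-threshold branch sums."""
    return np.where(branch_sum > threshold, branch_sum, 0.0)


def branch_indices(n_inputs, n_branches):
    """Assumed wiring: contiguous, nearly equal input groups, one per branch."""
    return np.array_split(np.arange(n_inputs), n_branches)


def dendritic_layer(inputs, weights, n_branches, threshold=0.0):
    """inputs: (n_in,), weights: (n_in, n_out).

    Returns the summed soma input (n_out,) and the per-branch
    dendrite outputs (n_branches, n_out).
    """
    groups = branch_indices(inputs.shape[0], n_branches)
    branch_out = np.stack(
        [dendrite(inputs[idx] @ weights[idx], threshold) for idx in groups]
    )
    return branch_out.sum(axis=0), branch_out


def interlayer_image(inputs, weights, branch_out, neuron):
    """Reconstruct the dendrite-processed input as seen by one neuron:
    dendrite output * (input pixels * synaptic weights), branch by branch."""
    image = np.zeros(inputs.shape[0])
    for b, idx in enumerate(branch_indices(inputs.shape[0], branch_out.shape[0])):
        image[idx] = branch_out[b, neuron] * inputs[idx] * weights[idx, neuron]
    return image


rng = np.random.default_rng(0)
w1 = rng.normal(0.0, 0.01, size=(3072, 500))  # placeholder weights
w2 = rng.normal(0.0, 0.01, size=(500, 10))    # placeholder weights
x = rng.random(3072)                          # stand-in for one flattened image

hidden, hidden_branches = dendritic_layer(x, w1, n_branches=9)
output, output_branches = dendritic_layer(hidden, w2, n_branches=4)
image0 = interlayer_image(x, w1, hidden_branches, neuron=0)
```

In this sketch a branch whose weighted sum stays below threshold contributes nothing to the soma and blanks its part of the reconstructed image, which is one simple way to picture the noise filtering described above.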

Extended Data Fig. 6 Performance analysis of the multilayer neural network with artificial dendrites.

a, Power advantage as a function of the I-V nonlinearity (see definition in Supplementary Information S10) of the artificial dendrites with different ON/OFF ratios (while Vapplied is 5 V). b, Power advantage as a function of the I-V nonlinearity of the artificial dendrites with different applied voltages (while ON/OFF ratio is 10). c, Power advantage vs. I-V nonlinearity of the artificial dendrites (while Vapplied is 2 V and ON/OFF ratio is 100). The power advantage is defined as the ratio between the power consumption of the neural networks without and with artificial dendrites. d, Recognition accuracy vs. I-V nonlinearity of the artificial dendrites. The power consumption is calculated as the integral of the product of the applied voltage and the output current of each soma over all time steps.
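The power-advantage definition in this caption can be illustrated with a short calculation. The sketch below is only an assumption-laden reading of the caption: the integral is approximated by a sum of voltage times current times a fixed time step `dt` over all somas, and the function names, array shapes and toy values are hypothetical. It does not reproduce the I-V nonlinearity definition from the Supplementary Information.

```python
import numpy as np


def consumption(voltage, current, dt):
    """Discrete approximation of the integral of V * I over time, summed over somas.

    voltage, current: arrays of shape (n_steps, n_somas); dt: time step in seconds.
    """
    return float(np.sum(np.asarray(voltage) * np.asarray(current)) * dt)


def power_advantage(without_dendrites, with_dendrites):
    """Ratio of consumption without artificial dendrites to consumption with them."""
    return without_dendrites / with_dendrites


# Toy values only: 10 time steps, 2 somas, 5 V applied throughout.
v = np.full((10, 2), 5.0)
i_without = np.full((10, 2), 1e-6)  # assumed soma current without dendritic filtering
i_with = np.full((10, 2), 1e-7)     # assumed soma current with dendritic filtering

advantage = power_advantage(
    consumption(v, i_without, dt=1e-3),
    consumption(v, i_with, dt=1e-3),
)
```

Because both networks are evaluated over the same time window, the ratio is the same whether the summed quantity is read as energy or as average power.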

Extended Data Fig. 7 Electrical characterizations of the optimized dendrite and soma devices.

a, Measured current response of the optimized dendrite device, 5 × 5 μm² in size, with a low operation voltage of 2 V. b, More than 200 cycles of current response on the optimized dendrite device demonstrate a much reduced operation time of 10 μs under a voltage pulse of 3 V. c, Measured I-V curves for the optimized soma device with a reduced size of 2 × 2 μm². d, With an input voltage of 5 V applied to the optimized dendrite-soma unit, the time required for one image inference can be reduced to tens of microseconds.

Extended Data Fig. 8 Comparison of the recognition accuracy and power consumption on different hardware platforms.

MLP, multilayer perceptron for the classification of the complete SVHN dataset.

Supplementary information

Supplementary Information

Supplementary Figs. 1–13, Discussion, Table 1 and refs. 1–7.

Source data

Source Data Fig. 2

Unprocessed measurement source data.

Source Data Fig. 4

Unprocessed measurement source data.

Source Data Fig. 5

Calculated source data.

Source Data Extended Data Fig. 1

Unprocessed measurement source data.

Source Data Extended Data Fig. 2

Unprocessed measurement source data.

Source Data Extended Data Fig. 3

Unprocessed measurement source data.

Source Data Extended Data Fig. 4

Unprocessed measurement source data.

Source Data Extended Data Fig. 5

Unprocessed measurement and simulation source data.

Source Data Extended Data Fig. 6

Simulation source data.

Source Data Extended Data Fig. 7

Unprocessed measurement source data.

Source Data Extended Data Fig. 8

Calculated source data.

About this article

Cite this article

Li, X., Tang, J., Zhang, Q. et al. Power-efficient neural network with artificial dendrites. Nat. Nanotechnol. 15, 776–782 (2020). https://doi.org/10.1038/s41565-020-0722-5

DOI: https://doi.org/10.1038/s41565-020-0722-5

This article is cited by

- Temporal dendritic heterogeneity incorporated with spiking neural networks for learning multi-timescale dynamics. Nature Communications (2024)

- A Fully-Integrated Memristor Chip for Edge Learning. Nano-Micro Letters (2024)

- An ultrasmall organic synapse for neuromorphic computing. Nature Communications (2023)

- A low-power vertical dual-gate neurotransistor with short-term memory for high energy-efficient neuromorphic computing. Nature Communications (2023)

- Introducing the Dendrify framework for incorporating dendrites to spiking neural networks. Nature Communications (2023)