Abstract

The compositional design of metallic glasses (MGs) is a long-standing issue in materials science and engineering. However, traditional experimental approaches based on empirical rules are time consuming with a low efficiency. In this work, we successfully developed a hybrid machine learning (ML) model to address this fundamental issue based on a database containing ~5000 different compositions of metallic glasses (either bulk or ribbon) reported since 1960s. Unlike the prior works relying on empirical parameters for featurization of data, we designed modeling guided data descriptors in line with the recent theoretical models on amorphization in chemically complex alloys for the development of the hybrid classification-regression ML algorithms. Our hybrid ML modeling was validated both numerically and experimentally. Most importantly, it enabled the discovery of MGs (either bulk or ribbon) through the ML-aided deep search of a multitude of quaternary to scenery alloy compositions. The computational framework herein established is expected to accelerate the design of MG compositions and expand their applications by probing the complex and multi-dimensional compositional space that has never been explored before.

Similar content being viewed by others

Introduction

Since their discovery in 1960s1, metallic glasses (MGs) have attracted tremendous research interest because of their promising structural and functional properties, such as superb strength2,3, high wear/abrasion resistance4, remarkable magnetic permeability4, excellent corrosion resistance4, and superior electrochemical catalytic ability5. However, as of today, a widespread use of MGs in various engineering applications is still rare. One of the roadblocks against the wide deployment of MGs is their limited size, resulting from the poor glass forming ability (GFA) of MGs relative to that of other glassy systems, such as oxide glasses6. To date, the design of MGs with a targeted GFA is still challenging.

While quantifying GFA of MGs is the enduring research effort in the MG literature7,8,9, the search of MGs is mostly based on the empirical rules, such as the Inoue’s rules10, which is usually an iterative process of trial and error and hence inefficient on chemically complex alloys11,12. To overcome this issue, combinatorial experimental techniques, such as multi-target physical vapor deposition13, were adopted, which enabled the synthesis of hundreds of alloy compositions in one experimental run and hence greatly improved the efficiency in compositional screening. In addition, one emerging tool that can further accelerate the search of chemically complex MGs is the use of machine learning (ML) to guide high-throughput experiments14,15,16. In principle, ML is a data-driven approach and able to solve multi-variable (or multi-dimensional) complex problems by establishing a direct input–output correlation without specific programming17,18 that requires a thorough understanding of the underlying physics. In practice, the success of a ML-based approach depends on the size of database. The general trend is that the more are the valid data the more reliable are the ML-generated predictions18.

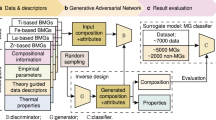

Owing to the versatility of the ML-based approach, it becomes increasingly popular as a tool in the recent development of MGs14,16,19,20,21. However, as discussed in ref. 18, one of the challenges with the application of ML in materials science is the relatively small size of the dataset available for use. By tradition, people tend to report only successful results (or positive data) while discard those unsuccessful (negative data) in the development of materials. Therefore, over the past 60 years, people reported about ~5000 MG compositions, out of which ~1000 came with measured GFAs. As seen in Fig. 1a, these MGs are mostly based on transition metals, such as Fe, Zr, Cu, Ni, Ti, Ag, Pd, and Co, and a few based on alkaline and rare earth metals. Despite the substantial efforts dedicated to the development of MGs, however, the size of the MG dataset is still small relative to others, such as the Inorganic Crystal Structure Database that contains ~240,000 structures (https://icsd.fiz-karlsruhe.de). In principle, people need both positive and negative data, as presentative and diverse as possible, in training ML models to avoid modeling bias. Thus, people built classification type ML models by including both typical MG and non-MG compositions14,16,19,21. As a result, the size of the dataset was extended to thousands of compositions19. Although the classification type ML models are useful for the fast screening of alloy compositions, they are incapable of pinpointing alloy compositions with superior GFAs.

a The comparison of different types of MGs in terms of their proportion in the hitherto reported MGs with measured GFAs. The inset highlights the elements used in the prior development of MGs with counts indicating the total number of times of an individual element being found in the reported MG compositions. b The breakdown of the data descriptors we developed. c The illustration for the training/validation of our classification ML model based on adaptive boosting (AB), support vector machine (SVM), and k-nearest neighbor (KNN). d The illustrated four types of regression ML models. e The illustration of the predicted GFA diagrams for ternary alloys. f The development of MGs through ML predictions. The scale bar indicates a length of 5 mm.

On the other hand, regression type ML models were also developed for predicting GFA of MGs19,22. In fact, the reported GFA values are mostly integers as they correspond to the size of the largest mold in alloy casting, which however are usually taken as the data output of different ML models19,22. Consequently, the round-up error of the reported GFA values results in significant data scattering23 (see Supplementary Fig. 1), hence compromising the predictability of the regression type ML models. In addition, the distribution of D is highly skewed towards the small value range (see Supplementary Fig. 2). Because of these difficulties, it is a challenge to develop a reliable ML model to guide the design of BMGs. According to the prior work19, if one considers all individual and collective attributes of constituent elements, he could come up with 186 data descriptors (a very high dimension). However, according to Zhang et al.18, data descriptors of a low dimension, as derived from physical modeling, are preferred for machine learning18,24. In this work, we propose hybrid machine learning algorithms to tackle the above problems by combining classification-type ML modeling for initial alloy screening and regression-type ML modeling for GFA prediction. As a result, we build our ML models based on the dataset containing ~7000 compositions (see Supplementary Table 1 for the counts of elements covered by these compositions) available in the literature8,22,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48 to date for classification and regression. In addition, we develop the data descriptors based on the physical models recently developed for glass formation in chemically complex systems49,50,51,52. In theory, this can reduce the size of dataset needed for successful ML applications18.

Results

Modeling-guided hybrid machine learning

In principle, even some elemental metals can be vitrified (to form glass) at the cooling rate as high as 1014 K s-1 53. However, such a high cooling rate corresponds to a very limited sample size or a very poor GFA. According to the prior work10, it is known that the GFA of metallic liquids can be improved by mixing different sized elements, enhancing the local chemical affinity between atomic pairs52 and increasing overall compositional complexity in favor of crystallization frustration54. In line with these three principles, we here develop three classes of descriptors (a total of 8) (see Fig. 1b and Supplementary Table 2) for our hybrid ML model, as described below:

-

(1)

Descriptors derived from atomic size. In the MG literature, the traditional models accounting for the atomic size effect on GFA were mostly developed for binary alloys55,56. In principle, these models were rooted in the same notion that one could easily distinguish solvent (or base) atoms from solute atoms in a MG. However, this notion does not apply easily to chemically complex or baseless MGs which apparently have no unique solvent or base elements, such as high entropy MGs (HEMGs)57. In this case, we generalized the geometrical model proposed by Ye et al.50 by further considering coordination number deficiency and chemical fluctuation around a central atom, which was not accounted for in the original model. As a result, in our generalized geometrical model, we derive four additional data descriptors by minimizing the elastic energy storage caused by the atomic size misfit in chemically complex alloys. Unlike the previous works50,58, we consider the presence of coordination deficiency and chemical fluctuation during energy minimization (see Supplementary Note 1).

-

(2)

Descriptors derived from local chemical affinity. According to the most recent atomistic simulations52, the GFA of multi-component alloys is correlated with the standard deviation (\(\varepsilon _ -\)) of the cohesive energies of the constituent elements and the averaged interaction energies \(\bar \varepsilon\) between dislike elements. Inspired by this recent discovery52, we compute \(\varepsilon _ -\) and \(\bar \varepsilon\) for the variety of alloy compositions and include them as the two additional data descriptors (see Supplementary Note 2).

-

(3)

Descriptors derived from entropy. According to the confusion principle59, one could improve the GFA of metallic liquids by promoting different crystallization pathways in their super-cooled state. As a result, the competition between the different crystallization processes leads to a dynamic slow-down and thus enhances the GFA. From a thermodynamic viewpoint, this is equivalent to reducing the configurational entropy of the super-cooled metallic liquid, which is in line with the Adam–Gibbs model60. However, it is extremely difficult to compute the configurational entropy of a chemically complex metallic liquid without knowing its configurational energy landscape. To circumvent this difficulty, we propose to compute the correlated configurational entropy of mixing (Scorr) as an approximation to probe the configurational entropy of multicomponent alloys (See Supplementary Note 3). It should be noted that Scorr was recently developed by He et al.51 in their study of phase selection in high entropy alloys (HEAs). According to He et al.51, HEAs tend to form glass with a low Scorr value while tend to form random solid solution with a high Scorr value. This finding is consistent with the confusion principle59. Besides, Scorr is implicitly related to chemical short-range ordering (CSRO). In principle, a higher degree of CSRO implies a lower value of Scorr. However, the correlation between CRSO and atomic size misfit is not straightforward. According to the recent work of He et al.61, atomic size misfit could act against or in favor of CRSOs, depending on the chemistry/size of atomic pairs. This is an interesting and important topic that warrants future research.

For our classification ML modeling, we consider binary labeling of the data output. We first designate the alloy compositions that were reported to be capable of forming MG ribbons or BMGs with the label ‘1’ as typical MG compositions, while the rest with the label ‘0’ as non-typical MG compositions. According to the previous works8,9, we take lnD rather than D—the GFA value of a MG composition—as the data output for our regression ML model. In doing so, we obtained a distribution of the data output close to a normal distribution, which reduced the data skewness (Supplementary Fig. 2) and thus improved the performance of our regression ML models (Supplementary Fig. 3).

In our database, we have ~5000 MG compositions and ~2000 non-MG compositions. To rule out a possible modeling bias towards MG compositions, we applied the synthetic minority over-sampling technique (SMOTE)62 to oversample our non-MG compositions by 150%. In the literature, SMOTE has been widely used in data over-sampling63, which can generate high-quality synthetic data after ruling out data outliers (noise) and data redundancy. In our work, the synthetic compositions were generated without knowing whether they belong to MGs or non-MGs. As a result, this generated additional ~3000 virtual non-MG compositions to keep the size balance between the typical MG and non-MG compositions. After that, we employed three ML algorithms for the training and validation of the classification ML model, including adaptive boosting (AB), support vector machine (SVM) and k-nearest neighbors (KNN) (see Fig. 1c and Methods). According to our results, the AB model outperforms the other two for its highest testing accuracy of 87.7% with the area-under-curve (AUC) value of 0.95 (Supplementary Fig. 4). It outperforms the model trained with the original data without over-sampling, especially with respect to the prediction about non-MGs (see Supplementary Fig. 5). Next, we developed four classes of regression ML models, including artificial neural network (ANN), support vector regression (SVR), Gaussian process regression (GPR), and random forest (RF), as illustrated in Fig. 1d. Out of the four classes, we built 11 different regression ML models with the use of different algorithms, which led to 6 ANN-based models, 3 GPR-based models, 1 SVR-based model, and 1 RF-based model (see Methods). Subsequently, we tested/validated the ANN-based ML models by hold-out validation while the others via 10-fold cross-validation (see Methods). As a result, we found three best performing regression ML models, i.e., the Levenberg-Marquardt backpropagation ANN (LMANN) model, the rational quadratic kernel GPR (RQGPR) model, and the Exponential kernel GPR (ExpGPR) model. These regression ML models are characterized by low error and high coefficient of determination (~0.8) (see Supplementary Fig. 6).

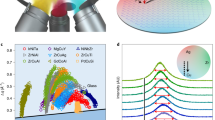

Validation by experiments

To validate our ML modeling, we calculate the GFAs of three ternary (or pseudo-ternary) alloy systems, namely, the Zr–Cu–Al, Zr–Co–Al, and Zr–Cu–(Ag, Al), using the LMANN, RQGPR, and ExpGPR ML model, and compare our predictions with the available experimental data, as reported by Inoue et al.64, Bhatt et al.65, and Kim et al.66. It is worth noting that the data for these systems were not included in our original dataset for training and testing of our ML models. As shown in Fig. 2a–c, it is evident that the RQGPR model captures the trend of the experimental results very well, including the cases that failed to produce BMGs (Fig. 2a, b) and the marginal cases that nearly produced a BMG (Fig. 2b). The predictions of the ExpGPR model are like those of the RQGPR model (Supplementary Fig. 7); however, the predictions of the LMANN model are relatively poor, some of which are far off the experimental results (see Supplementary Fig. 8) even though the LMANN model appears to show a similar coefficient of determination and relative error with the other two models. Based on these findings, we adopted the RQGPR model to guide our development of MGs.

a Comparison of the predicted GFAs of the Zr–Cu–Al alloys with the experimental results taken from the works of Inoue et al.64 and Bhatt et al.65, b comparison of the predicted GFAs of the Zr–Co–Al alloys with the experimental results taken from the works of Inoue et al.64 and Kim et al.66, and c comparison of the predicted GFAs of the Zr–Cu–(Ag, Al) alloys with the experimental results taken from the work of Inoue et al.64.

Discovery of chemically complex and high entropy metallic-glasses

Aside from the experimental validation, we demonstrate the predictability of our hybrid ML modeling through the discovery/development of MG compositions that have not been reported before. For the present work, we consider 8 common metals (Fe, Zr, Cu, Ni, Ti, Al, Co, and Hf) as the possible constituent elements. These metals are non-toxic and easy to access, which have economic values and hence were frequently used in the previous design of MGs, as seen in Fig. 1a. It is worth noting that the atomic fraction of each constituent element was varied over a wide range during the deep search of the compositional space for MGs. For the present work, we discovered 12 MG compositions based on the predictions of the classification ML model. For comparison, we developed 23 alloy compositions (see Supplementary Table 3), including the 12 MG compositions as well as another 11 non-MG compositions which failed to pass our classification ML modeling. Next, the GFAs of these 12 glass-forming alloys were predicted by the RQGPR model in terms of lnD, as shown in Fig. 3a. Based on these computational results, we prepared both bulk and/or ribbon samples for each of these 23 compositions by arc melting and/or melt spinning (see Methods).

As seen in Fig. 3b, the experimental results clearly show that the 12 predicted glass-forming alloys can form glass in either bulk or ribbon (or both) while those 11 predicted as bad glass-formers or non-MGs cannot (see Supplementary Fig. 9). Furthermore, we discovered that the 6 glass-forming alloys with high GFAs could form glass in bulk (see Fig. 3b, c). By comparison, 6 glass-forming alloys with predicted low GFAs could only form glassy ribbons (see Fig. 3b and Supplementary Fig. 10) but were crystallized in bulk (see Supplementary Fig. 11). Here it is worth noting that any small difference in lnD is magnified in an exponential manner when the prediction is transformed back to D. Thus, in the linear scale, the predicted trend is more meaningful than the exact value of the predicted D. Based on this trend prediction, we successfully locate the compositions of a few BMGs. We also measured the chemical composition of the glass-forming alloys with energy dispersive X-ray spectroscopy (EDX). The results show that the exact composition is consistent with the predicted one within a relative error of 9% (see Supplementary Table 4).

Before moving to the next section, it is worth noting that the traditional empirical approach67, which is based on the atomic size difference parameter (δ) and the heat of mixing (ΔHmix), predicts that an alloy tends to form a metallic glass if δ > 0.065 and ΔHmix <−12 kJ mol−1. However, as seen in Fig. 3d, this traditional approach is insufficient and unable to distinguish glass-forming alloys from others because they share a similar value of δ and/or ΔHmix. We also obtained the glass transition temperature (Tg), the crystallization onset temperature (Tx), and the liquidus temperature (Tl) of our MGs by differential scanning calorimetry (DSC) (see Methods and Supplementary Fig. 12) or following the method in refs. 68,69 (see Supplementary Note 4 and Supplementary Table 5). According to the literature26, the ratio of Tg/Tl—also termed as the ‘reduced glass transition temperature, Trg’—scales positively with the GFA of MGs. As shown in Fig. 4, there is a clear trend that Tg ~ 0.63Tl, within a margin of ±0.05 Tl for different classes of MGs, our high entropy MG ribbons are clearly off this trend because of their relatively high Tl or low Tg/Tl ratio. Based on the DSC results, we also calculated Trg and \(\gamma = \frac{{T_{{{\mathrm{x}}}}}}{{T_{{{\mathrm{g}}}} + T_{{{\mathrm{g}}}}}}\) of our MGs. As shown in Supplementary Fig. 13, the results reaffirm that the GFA of the high entropy MGs is low, which can be attributed to the high thermal stability (high Tl) of their corresponding crystals.

Discussion

First, we are interested in the difference between our designed chemical complex and high entropy MGs, which may cause their disparity in GFA. Figure 5a compares the descriptor values of the Zr54Ni16Cu14Ti10Al6 BMG and the Zr25Hf25Al25Co25 glass ribbon in radar charts. Apparently, their key difference is that the glass ribbon possesses a much higher descriptor values in \(\left| {\overline {\delta c} } \right|\) and \(\sigma _{\delta c}\). A similar pattern was observed on other MGs because of their compositional similarity (see Supplementary Fig. 14 and Supplementary Table 6). In theory50, these two descriptors quantify the tolerance of a crystalline structure against atomic size misfit. Therefore, the larger these two descriptors are the more tolerant (or stable) the crystalline structure is against amorphization. For the discovered MGs, since their descriptor values look similar except for \(\left| {\overline {\delta c} } \right|\) and \(\sigma _{\delta c}\), we can therefore attribute the disparity in their GFAs to a structural difference that is closely linked to the excessive atomic size misfit as indicated by the descriptor radar charts.

Radar chart of 8 descriptors in a Zr54Ni16Cu14Ti10Al6; Radar chart of 8 descriptors in b Zr25Hf25Al25Co25.The atom probe tomography (APT) elemental mapping of c the Zr25Hf25Al25Co25 glass ribbon and d the Zr54Ni16Cu14Ti10Al6 BMG. The scale bar indicates a length of 20 nm. e The transmission electron microscopy (TEM) image of the Zr25Hf25Al25Co25 glass ribbon. The inset is the selected area diffraction pattern. The scale bar indicates a length of 50 nm. f The high-resolution transmission electron microscopy (HRTEM) image of the Zr25Hf25Al25Co25 glass ribbon. The scale bar indicates a length of 5 nm. g The bright field scanning transmission electron microscopy (STEM) image of the Zr25Hf25Al25Co25 glass ribbon. The inset shows the HRTEM image at the same size scale. The scale bars indicate a length of 2 nm. h The TEM image of the Zr54Ni16Cu14Ti10Al6 BMG. The inset is the selected area diffraction pattern. The scale bar indicates a length of 20 nm. i The HRTEM image of the Zr54Ni16Cu14Ti10Al6 BMG. The scale bar indicates a length of 5 nm. j The STEM image of the Zr54Ni16Cu14Ti10Al6 BMG. The inset shows the HRTEM image at the same size scale. The scale bars indicate a length of 2 nm.

Inspired by the above results, we examined the amorphous structure of Zr54Ni16Cu14Ti10Al6 and Zr25Hf25Al25Co25 across micro-, nano-, and atomic-scale through 3D atom probe tomography (APT) and aberration corrected transmission electron microscopy (see Methods). As shown by the APT elemental mapping (Fig. 6c, d) image, both Zr25Hf25Al25Co25 and Zr54Ni16Cu14Ti10Al6 show structural homogeneity at the nano scale, and structural homogeneity is observed by the TEM and HRTEM results as well (Fig. 6e, f, h, i). Interestingly, the STEM image (Fig. 6g) of the Zr25Hf25Al25Co25 MG shows a clear sub-nanometer scale chemical fluctuation which cannot be resolved by APT and TEM owing to its limited spatial resolution (1–2 nm). According to the prior works, this contrast can be attributed to a density fluctuation, which can result from excessive plasticity70,71,72 or simply signals an unusual capacity of a MG for plastic flows72,73. In contrast, the STEM image (Fig. 6j) of the Zr54Ni16Cu14Ti10Al6 BMG display structural homogeneity in atomic-scale. Evidently, these findings echo well with what the data descriptor radar charts reveal about the probable structural difference between these two MGs (Fig. 6g, j): namely, the Zr25Hf25Al25Co25 MG can afford a higher degree of chemical fluctuation than the Zr54Ni16Cu14Ti10Al6 BMG. These findings are intriguing and warrant further research on the underlying physics.

In order to gage the relative importance of the data descriptors, we followed the literature16,74 and removed each data descriptor, one at a time, from the training of the classification and regression models. We ran out all eight data descriptors and measured the accuracy loss in terms of RMSE (see Supplementary Fig. 15). According to our results, \(\varepsilon _ -\) is the most influential descriptor seconded by \(\sigma _{\frac{{\delta N}}{N}}\) for both models. To further probe the difference between MGs and non-MGs, we obtained the parallel coordinate plots (PCPs)75 from our dataset of ~7000 compositions. In the literature, PCP is widely used for the visualization of sensitivity of multi-dimensional data75. For descriptors \(\sigma _{\frac{{\delta N}}{N}}\), \(\left\langle {\varepsilon ^2} \right\rangle ^{\frac{1}{2}}\), Scorr, \(\varepsilon _ -\) and \(\bar \varepsilon\), the PCP bands for MGs are narrower and denser than those for non-MGs (see Supplementary Fig. 16). By contrast, the bands of descriptors \(\left| {\overline {\delta c} } \right|\), \(\left| {\overline {\frac{{\delta N}}{N}} } \right|\), and \(\sigma _{\delta c}\) for MGs look similar to those for non-MGs. To reveal the difference, we plotted the distributions of the three descriptors for MGs and non-MGs (see Supplementary Fig. 17). The averages of the three descriptors for MGs are generally larger than those for non-MGs, which indicates the slight difference in local packing deficiency and local chemical fluctuation between MGs and non-MGs. In principle, PCP provides us a comparative view for the distributions of multiple descriptors76, the effectiveness of which in machine learning can be inferred by the difference if there is any, in the band structures. Besides, we studied the difference of BMGs and glass ribbons for different classes of MGs with PCPs (e.g., Zr-based, Fe-based, Cu-based, and La-based). As shown in Fig. 6a–h, it is evident that the descriptor values are further squeezed into a much narrower band for BMGs than for glass ribbons, which suggests a comparatively limited compositional space that allows for the discovery of BMGs.

To sum up, we develop a hybrid ML model in this work for the design of MGs with a targeted GFA, which is based on the largest dataset available in the literature and has only 8 data descriptors derived in conformity with the theoretical models. Our hybrid ML model exhibits a high computational performance in classification and regression, and validated by the experiments that systematically studied the GFAs of several ternary glass-forming systems. We also demonstrate the predictability of the hybrid ML model through the development of chemically complex MGs—from quaternary to senary systems—based on the ML predictions. It is interesting to note that the values of the data descriptors also provide important clues to the hidden structural characteristics of our designed MGs, which are also validated by the experiments. These findings are important, which may pave the way towards the computational discovery of chemically complex MGs with unusual amorphous structures.

Methods

ML algorithms

Our ML algorithms are implemented by Matlab R2020a with Statistics and Machine Learning Toolbox and Deep Learning Toolbox.

Adaptive boosting (AB)

AB model is trained in the Classification Learner App from Statistics and Machine Learning Toolbox. 10-fold cross-validation is applied. Model type is set to Boosted Trees with the maximum number of splits set to 342, the number of learners to 143, and learning rate to 0.961. Other parameters are set automatically to achieve the best training results.

Support vector machine (SVM)

SVM model is trained in the Classification Learner App from Statistics and Machine Learning Toolbox. 10-fold cross-validation is applied. Model type is set to Fine Gaussian SVM with the kernel scale set to 0.71 and the box constraint to 1. Other parameters are set automatically to achieve the best training results.

K-nearest neighbor (KNN)

KNN model is trained in the Classification Learner App from Statistics and Machine Learning Toolbox. 10-fold cross-validation is applied. We use Euclidean distance metric with equal weight and the number of neighbors is set to 10. Other parameters are set automatically to achieve the best training results.

Artificial neural network (ANN)

ANN in our work consists of one hidden layer with an activation function \({{{\mathrm{S}}}}\left( {{{{\mathrm{a}}}}_{{{\mathrm{i}}}}} \right) = \frac{1}{{1 + {{{\mathrm{e}}}}^{ - {{{\mathrm{a}}}}_{{{\mathrm{i}}}}}}}\) and 20 hidden neurons determined through pre-training (See Supplementary Fig. 18). We use the Matlab function net=feedforwardnet(hiddenneurons, ‘trainingfunction’). Different training functions are applied to train the ANN-based ML model, including Levenberg–Marquardt backpropagation (LMANN), Broyden–Fletcher–Goldfarb–Shanno quasi-Newton backpropagation (BFGANN), conjugate gradient backpropagation with Powell-Beale restarts (CGBANN), conjugate gradient backpropagation with Polak–Ribiere updates (CGPANN), gradient descent backpropagation (GDANN), and gradient descent with adaptive learning rate backpropagation (GDAANN). To avoid overfitting, we applied a hold-out validation method to the ANN-based ML models and divided the dataset into three subsets with 70% data for training, 15% for validation, and 15% for testing. The training parameters are set as net.trainParam.Max_fail = 10; net.trainParam.goal = 0.02. The initial biases and weight matrices of input and layer are generated randomly.

Gaussian processed regression (GPR)

GPR models are trained in the Regression Learner App from Statistics and Machine Learning Toolbox. 10-fold cross-validation is applied. We employ three different kernels to train the GPR-based ML model, including the rational quadratic kernel (RQGPR), the squared exponential kernel (SqExpGPR), and the Exponential kernel (ExpGPR). For RQGPR model, the model type is set to Rational Quadratic GPR; the kernel function is set to Rational Quadratic \({{{\mathrm{k}}}}\left( {{{{\mathrm{x}}}},{{{\mathrm{x}}}}^\prime \left| {\uptheta} \right.} \right) = \sigma _{{{\mathrm{f}}}}^2\left( {1 + \frac{{\left| {{{{\mathrm{x}}}} - {{{\mathrm{x}}}}^\prime } \right|^2}}{{2{\upalpha}\sigma _{{{\mathrm{l}}}}^2}}} \right)^{ - {\upalpha}}\); the training parameters are set as σf = 2.19, σl = 0.89, and α = 0.16. For SqExpGPR model, the model type is set to Squared Exponential GPR; the kernel function is set to Squared Exponential \({{{\mathrm{k}}}}\left( {{{{\mathrm{x}}}},{{{\mathrm{x}}}}^\prime \left| {\uptheta} \right.} \right) = {\upsigma}_{{{\mathrm{f}}}}^2{{{\mathrm{exp}}}}\left[ { - \frac{1}{2}\frac{{\left| {{{{\mathrm{x}}}} - {{{\mathrm{x}}}}^\prime } \right|^2}}{{{\upsigma }}_{{{\mathrm{l}}}}^2}} \right]\); the training parameters are set as σf = 1.48, σl = 0.70, β = 0.50, ε = 0.48; For ExpGPR model, the model type is set to Exponential GPR; the kernel function is set to Exponential \({{{\mathrm{k}}}}\left( {{{{\mathrm{x}}}},{{{\mathrm{x}}}}^\prime \left| {\uptheta} \right.} \right) = {\upsigma}_{{{\mathrm{f}}}}^2{{{\mathrm{exp}}}}\left[ { - \frac{{\left| {{{{\mathrm{x}}}} - {{{\mathrm{x}}}}^\prime } \right|}}{{{\upsigma }}_{{{\mathrm{l}}}}}} \right]\); the parameters are set as σf = 1.86, σl = 2.79, β = 0.53, ε = 0.28. Other parameters are set automatically to achieve the best training results.

Support vector regression (SVR)

SVR model is trained in the Regression Learner App from Statistics and Machine Learning Toolbox. 10-fold cross-validation is applied. The model type is set to Fine Gaussian SVM; the kernel function is set to Gaussian \({{{\mathrm{k}}}}\left( {{{{\mathrm{x}}}},{{{\mathrm{x}}}}^\prime } \right) = {{{\mathrm{exp}}}}( - \left\| {{{{\mathrm{x}}}} - {{{\mathrm{x}}}}^\prime } \right\|^{ 2})\); the kernel scale is set to 0.71 while the box constraint is set to 0.93 and ε = 0.093. Other parameters are set automatically.

Random forest (RF)

The RF model is trained in the Regression Learner App from Statistics and Machine Learning Toolbox. 10-fold cross-validation is applied. The model type is set to Boosted Trees; minimum leaf size is set to 8; number of learning is set to 30; the learning rate is set to 0.1. Other parameters or options are set automatically.

Arc-melting

Pure metals, including Ti, Zr, Hf, Al, Co, Ni, Fe, Cu, and Nb, with a purity level higher than 99.95% are used to prepare rod samples. We use a lab-scale arc-melting furnace to melt the pure metals with vacuum level as high as 8 × 10−4 Pa and a melted Ti ingot to avoid possible oxidation. Then the melted samples are casted in copper molds with dimensions of Ф2 mm, Ф3 mm, and Ф5 mm.

Melt spinning

We first prepare ingot samples by casting mentioned above. Then the ingots are melted in a lab-scale induction-melting furnace with a vacuum as high as 8 × 10−4 Pa and the ribbons are prepared by a single copper roller melt spinning with a rotating speed of 75 r/s.

Differential scanning calorimetry (DSC)

DSC experiments are performed using both DSC3/700 and TGA DSC3+HT/1600 (METTLER TOLEDO) with a heating rate of 20 K/min and argon flow with a rate of 50 mL/min.

Scanning transmission electron microscope (STEM)

STEM samples are prepared by PIPS II MODEL 695 (GANTAN) and the experiments are carried out by JEM-ARM300F transmission electron microscope equipped with double spherical aberration correctors.

Atom probe tomography (APT)

Needle-shaped APT specimens are fabricated by lift-outs and annular milled in a FEI Scios focused ion beam/scanning electron microscope (FIB/SEM). The APT characterizations are performed in a local electrode atom probe (CAMEACA LEAP 5000 XR). The specimens are analyzed at 70 K in voltage mode, at a pulse repetition rate of 200 kHz, a pulse fraction of 20%, and an evaporation detection rate of 0.2% atom per pulse. Imago Visualization and Analysis Software (IVAS) version 3.8 is used for creating the 3D reconstructions and data analysis.

Data availability

The exact datasets used in this study are freely available at https://citrination.com/datasets/198590/. The source code used in this study could be downloaded from GitHub (https://github.com/ZHOU-Ziqing/ML_Metallicglass_GFA). Additional data related to this work is available on reasonable request.

References

Klement, W., Willens, R. H. & Duwez, P. O. L. Non-crystalline structure in solidified gold-silicon alloys. Nature 187, 869–870 (1960).

Wang, W. H. The elastic properties, elastic models and elastic perspectives of metallic glasses. Prog. Mater. Sci. 57, 487–656 (2012).

Johnson, W. L. Bulk glass-forming metallic alloys: science and technology. MRS Bull. 24, 42–56 (1999).

Ashby, M. F. & Greer, A. L. Metallic glasses as structural materials. Scr. Mater. 54, 321–326 (2006).

Hu, Y. C. et al. A highly efficient and self-stabilizing metallic-glass catalyst for electrochemical hydrogen generation. Adv. Mater. 28, 10293–10297 (2016).

Inoue, A., Zhang, T. & Masumoto, T. Glass-forming ability of alloys. J. Non Cryst. Solids 156–158, 473–480 (1993).

Na, J. H. et al. Compositional landscape for glass formation in metal alloys. Proc. Natl Acad. Sci. USA. 111, 9031–9036 (2014).

Johnson, W. L., Na, J. H. & Demetriou, M. D. Quantifying the origin of metallic glass formation. Nat. Commun. 7, 1–7 (2016).

Lu, Z. P. & Liu, C. T. A new glass-forming ability criterion for bulk metallic glasses. Acta Mater. 50, 3501–3512 (2002).

Inoue, A. Stabilization of metallic supercooled liquid and bulk amorphous alloys. Acta Mater. 48, 279–306 (2000).

Wada, T., Jiang, J., Yubuta, K., Kato, H. & Takeuchi, A. Septenary Zr–Hf–Ti–Al–Co–Ni–Cu high-entropy bulk metallic glasses with centimeter-scale glass-forming ability. Materialia 7, 100372 (2019).

Zhao, S. F. et al. Pseudo-quinary Ti20Zr20Hf20Be20(Cu20-xNix) high entropy bulk metallic glasses with large glass forming ability. Mater. Des. 87, 625–631 (2015).

Li, M. X. et al. High-temperature bulk metallic glasses developed by combinatorial methods. Nature 569, 99–103 (2019).

Ren, F. et al. Accelerated discovery of metallic glasses through iteration of machine learning and high-throughput experiments. Sci. Adv. 4, eaaq1566 (2018).

Zhou, Z. et al. Machine learning guided appraisal and exploration of phase design for high entropy alloys. npj Comput. Mater. 5, 1–9 (2019).

Liu, X. et al. Machine learning-based glass formation prediction in multicomponent alloys. Acta Mater. 201, 182–190 (2020).

Butler, K. T. et al. Machine learning for molecular and materials science. Nature 559, 547–555 (2018).

Zhang, Y. & Ling, C. A strategy to apply machine learning to small datasets in materials science. npj Comput. Mater. 4, 28–33 (2018).

Ward, L. et al. A machine learning approach for engineering bulk metallic glass alloys. Acta Mater. 159, 102–111 (2018).

Xiong, J., Shi, S.-Q. & Zhang, T.-Y. A machine-learning approach to predicting and understanding the properties of amorphous metallic alloys. Mater. Des. 187, 108378 (2019).

Sun, Y. T., Bai, H. Y., Li, M. Z. & Wang, W. H. Machine learning approach for prediction and understanding of glass-forming ability. J. Phys. Chem. Lett. 8, 3434–3439 (2017).

Xiong, J., Zhang, T. Y. & Shi, S. Q. Machine learning prediction of elastic properties and glass-forming ability of bulk metallic glasses. MRS Commun. 9, 576–585 (2019).

Suryanarayana, C., Seki, I. & Inoue, A. A critical analysis of the glass-forming ability of alloys. J. Non Cryst. Solids 355, 355–360 (2009).

Butler, K. T., Davies, D. W., Cartwright, H., Isayev, O. & Walsh, A. Machine learning for molecular and materials science. Nature 559, 547–555 (2018).

Guo, S. & Liu, C. T. New glass forming ability criterion derived from cooling consideration. Intermetallics 18, 2065–2068 (2010).

Lu, Z. P., Bei, H. & Liu, C. T. Recent progress in quantifying glass-forming ability of bulk metallic glasses. Intermetallics 15, 618–624 (2007).

Liu, W. Y., Zhang, H. F., Wang, A. M., Li, H. & Hu, Z. Q. New criteria of glass forming ability, thermal stability and characteristic temperatures for various bulk metallic glass systems. Mater. Sci. Eng. A 459, 196–203 (2007).

Tan, H., Zhang, Y., Ma, D., Feng, Y. P. & Li, Y. Optimum glass formation at off-eutectic composition and its relation to skewed eutectic coupled zone in the La based La-Al-(Cu,Ni) pseudo ternary system. Acta Mater. 51, 4551–4561 (2003).

Komatsu, T. Application of fragility concept to metallic glass formers. J. Non Cryst. Solids 185, 199–202 (1995).

Long, Z. et al. A new criterion for predicting the glass-forming ability of bulk metallic glasses. J. Alloy. Compd. 475, 207–219 (2009).

Gu, J.-L., Shao, Y. & Yao, K.-F. The novel Ti-based metallic glass with excellent glass forming ability and an elastic constant dependent glass forming criterion. Materialia 8, 100433 (2019).

Long, Z. et al. A new correlation between the characteristics temperature and glass-forming ability for bulk metallic glasses. J. Therm. Anal. Calorim. 132, 1645–1660 (2018).

Inoue, A., Kitamura, A. & Masumoto, T. The effect of aluminium on mechanical properties and thermal stability of (Fe, Ni) -Al-P ternary amorphous alloys. J. Mater. Sci. 18, 753–758 (1983).

Ye, Y. F., Liu, X. D., Wang, S., Liu, C. T. & Yang, Y. The general effect of atomic size misfit on glass formation in conventional and high-entropy alloys. Intermetallics 78, 30–41 (2016).

He, Q. F., Ye, Y. F. & Yang, Y. Formation of random solid solution in multicomponent alloys: from Hume-Rothery rules to entropic stabilization. J. Phase Equilibria Diffus. 38, 416–425 (2017).

Ye, Y. F., Wang, Q., Lu, J., Liu, C. T. & Yang, Y. High-entropy alloy: challenges and prospects. Mater. Today 19, 349–362 (2016).

Miracle, D. B. & Senkov, O. N. A critical review of high entropy alloys and related concepts. Acta Mater. 122, 448–511 (2017).

Yeh, J. et al. Formation of simple crystal structures in Cu-Co-Ni-Cr-Al-Fe-Ti-V alloys with multiprincipal metallic elements. Metall. Mater. Trans. 35, 2533–2536 (2004).

Yeh, J. W. et al. Nanostructured high-entropy alloys with multiple principal elements: novel alloy design concepts and outcomes. Adv. Eng. Mater. 6, 299–303 (2004).

Samaei, A. T., Mirsayar, M. M. & Aliha, M. R. M. The microstructure and mechanical behavior of modern high temperature alloys. Eng. Solid Mech. 3, 1–20 (2015).

Guo, S. & Liu, C. T. Phase stability in high entropy alloys: formation of solid-solution phase or amorphous phase. Prog. Nat. Sci. Mater. Int. 21, 433–446 (2011).

Tsai, M. H. Physical properties of high entropy alloys. Entropy 15, 5338–5345 (2013).

Wang, Z., Guo, S. & Liu, C. T. Phase selection in high-entropy alloys: from nonequilibrium to equilibrium. JOM 66, 1966–1972 (2014).

Suzuki, K., Kataoka, N., Inoue, A., Makino, A. & Masumoto, T. High saturation magnetization and soft magnetic properties of bcc Fe–Zr–B alloys with ultrafine grain structure. Mater. Trans. JIM 31, 743–746 (1990).

Senkov, O. N., Miller, J. D., Miracle, D. B. & Woodward, C. Accelerated exploration of multi-principal element alloys with solid solution phases. Nat. Commun. 6, 1–10 (2015).

Zhang, Y., Yang, X. & Liaw, P. K. Alloy design and properties optimization of high-entropy alloys. JOM 64, 830–838 (2012).

Ding, Z. Y., He, Q. F. & Yang, Y. Exploring the design of eutectic or near-eutectic multicomponent alloys: from binary to high entropy alloys. Sci. China Technol. Sci. 61, 159–167 (2018).

Villars, P. Handbook of ternary alloy phase diagrams/ P. Villars, A. Prince and H. Okamoto. (American society for metals, 1995).

Egami, T. & Waseda, Y. Atomic size effect on the glass forming ability of metallic alloys. J. Non Cryst. Solids 64, 113–134 (1984).

Ye, Y. F., Liu, C. T. & Yang, Y. A geometric model for intrinsic residual strain and phase stability in high entropy alloys. Acta Mater. 94, 152–161 (2015).

He, Q. F., Ding, Z. Y., Ye, Y. F. & Yang, Y. Design of high-entropy alloy: a perspective from nonideal mixing. JOM 69, 2092–2098 (2017).

Hu, Y. C., Schroers, J., Shattuck, M. D. & O’Hern, C. S. Tuning the glass-forming ability of metallic glasses through energetic frustration. Phys. Rev. Mater. 3, 85602 (2019).

Zhong, L., Wang, J., Sheng, H., Zhang, Z. & Mao, S. X. Formation of monatomic metallic glasses through ultrafast liquid quenching. Nature 512, 177–180 (2014).

Shintani, H. & Tanaka, H. Frustration on the way to crystallization in glass. Nat. Phys. 2, 200–206 (2006).

Egami, T. Universal criterion for metallic glass formation. Mater. Sci. Eng. A 226, 261–267 (1997).

Miracle, D. B., Directorate, M., Force, A. & Base, W. A. F. A structural model for metallic glasses. Nat. Mater. 3, 697–702 (2004).

Wang, W. H. High-entropy metallic glasses. JOM 66, 2067–2077 (2014).

Ye, Y. F. et al. Atomic-scale distorted lattice in chemically disordered equimolar complex alloys. Acta Mater. 150, 182–194 (2018).

Lindsay Greer, A. Confusion by design. Nature 366, 303–304 (1993).

Adam, G. & Gibbs, J. H. On the temperature dependence of cooperative relaxation properties in glass-forming liquids. J. Chem. Phys. 43, 139–146 (1965).

He, Q. F. et al. Understanding chemical short-range ordering/demixing coupled with lattice distortion in solid solution high entropy alloys. Acta Mater. 216, 117140 (2021).

Chawla, N. V., Bowyer, K. W., Hall, L. O. & Kegelmeyer, W. P. SMOTE: Synthetic Minority Over-sampling Technique. J. Artif. Intell. Res. 16, 321–357 (2002).

Fernández, A., García, S., Herrera, F. & Chawla, N. V. SMOTE for learning from imbalanced data: progress and challenges, marking the 15-year anniversary. J. Artif. Intell. Res. 61, 863–905 (2018).

Inoue, A. & Takeuchi, A. Recent development and application products of bulk glassy alloys. Acta Mater. 59, 2243–2267 (2011).

Bhatt, J. et al. Optimization of bulk metallic glass forming compositions in Zr-Cu-Al system by thermodynamic modeling. Intermetallics 15, 716–721 (2007).

Kim, W. C. et al. Formation of crystalline phase in the glass matrix of Zr-Co-Al glass-matrix composites and its effect on their mechanical properties. Met. Mater. Int. 23, 1216–1222 (2017).

Guo, S., Hu, Q., Ng, C. & Liu, C. T. More than entropy in high-entropy alloys: forming solid solutions or amorphous phase. Intermetallics 41, 96–103 (2013).

Yang, B., Liu, C. T. & Nieh, T. G. Unified equation for the strength of bulk metallic glasses. Appl. Phys. Lett. 88, 2006–2008 (2006).

Ye, J. C., Lu, J., Yang, Y. & Liaw, P. K. Extraction of bulk metallic-glass yield strengths using tapered micropillars in micro-compression experiments. Intermetallics 18, 385–393 (2010).

Huang, B. et al. Density fluctuations with fractal order in metallic glasses detected by synchrotron X-ray nano-computed tomography. Acta Mater. 155, 69–79 (2018).

Lu, Y. M. et al. Structural signature of plasticity unveiled by nano-scale viscoelastic contact in a metallic glass. Sci. Rep. 6, 1–9 (2016).

Qiao, J. C. et al. Structural heterogeneities and mechanical behavior of amorphous alloys. Prog. Mater. Sci. 104, 250–329 (2019).

Liu, Y. H. et al. Super plastic bulk metallic glasses at room temperature. Science 315, 1385–1388 (2007).

Huang, W., Martin, P. & Zhuang, H. L. Machine-learning phase prediction of high-entropy alloys. Acta Mater. 169, 225–236 (2019).

Inselberg, A. Multidimensional detective. in Proceedings of the IEEE Symposium on Information Visualization 100–107 (1997).

Li, J., Martens, J. B. & Van Wijk, J. J. Judging correlation from scatterplots and parallel coordinate plots. Inf. Vis. 9, 13–30 (2010).

Acknowledgements

The research of YY is supported by the Research Grant Council, the Hong Kong Government, through the General Research Fund (GRF) with the grant numbers CityU11209317, CityU11213118, and CityU11200719. Atom probe tomography research was conducted by Dr. JH LUAN at the Inter-University 3D Atom Probe Tomography Unit of City University of Hong Kong, which is supported by the CityU grant 9360161.

Author information

Authors and Affiliations

Contributions

Y.Y. supervised the project. Y.Y. and Z.Q.Z. conceived the idea. Z.Q.Z. developed the ML modeling. Z.Q.Z. and Q.F.H. carried out experiments on alloy casting, melt spinning, DSC, TEM, EDS. X.D.L. performed XRD. Q.W. performed HRTEM and STEM. J.H.L. and C.T.L. performed APT. Z.Q.Z. and Y.Y. wrote the manuscript.

Corresponding author

Ethics declarations

Competing interests

Y.Y., Z.Q.Z., and Q.F.H. are inventors on a filed patent related to the newly discovered BMG compositions described in this work. The rest of the authors declare no competing interest.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhou, Z.Q., He, Q.F., Liu, X.D. et al. Rational design of chemically complex metallic glasses by hybrid modeling guided machine learning. npj Comput Mater 7, 138 (2021). https://doi.org/10.1038/s41524-021-00607-4

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41524-021-00607-4

This article is cited by

-

Metallic glass-based triboelectric nanogenerators

Nature Communications (2023)

-

A generative deep learning framework for inverse design of compositionally complex bulk metallic glasses

npj Computational Materials (2023)

-

Machine learning atomic dynamics to unfold the origin of plasticity in metallic glasses: From thermo- to acousto-plastic flow

Science China Materials (2022)