Abstract

In order to delineate which minimalistic physical primitives can enable the full power of universal quantum computing, it has been fruitful to consider various measurement based architectures which reduce or eliminate the use of coherent unitary evolution, and also involve operations that are physically natural. In this context previous works had shown that the triplet-singlet measurement of two qubit angular momentum (or equivalently two qubit exchange symmetry) yields the power of quantum computation given access to a few additional different single qubit states or gates. However, Freedman, Hastings and Shokrian-Zini1 recently proposed a remarkable conjecture, called the ‘STP=BQP’ conjecture, which states that the two-qubit singlet/triplet measurement is quantum computationally universal given only an initial ensemble of maximally mixed single qubits. In this work we prove this conjecture. This provides a method for quantum computing that is fully rotationally symmetric (i.e. reference frame independent), using primitives that are physically very-accessible, naturally resilient to certain forms of error, and provably the simplest possible.

Similar content being viewed by others

Introduction

Since the origin of quantum computation, it has been of fundamental interest to understand which types of physical operations enable universality. Beyond the standard textbook gate model of quantum computation, it was soon realised that measurements could be used not only for readout of information but also as dynamical elements. The most widely known example is perhaps the scheme of Raussendorf and Briegel2, wherein given a particular fixed many-particle entangled state (the ‘cluster state’) a quantum computation can be executed by adaptively performing (destructive) single-qubit measurements. Originally it was envisaged that the cluster state would be pre-generated by multi-qubit entangling unitary operations. In light of such, one may wonder whether one can push the role of measurements yet further still, completely eliminating any use of coherent unitary operations.

The first scheme that required no unitary operations at all was proposed by Nielsen3; it required being able to perform multiple distinct (non-destructive) 4-qubit measurements. This was simplified in various ways in subsequent works. Fenner and Zhang4 provided a scheme using multiple different 2- and 3-qubit measurements. Leung5 proposed a scheme with multiple different 2-qubit measurements or a single 4-qubit measurement. Perdrix6 devised a method using three different single-qubit measurements and only additional measurement of the two-qubit observable X ⊗ Z (X, Z refer to the standard Pauli operators). At about the same time, the universality of 2-qubit (destructive) 'fusion' measurements, physically natural for photonic qubits, was proven7. Note that in some of these works additional assumptions were needed on the initial single-qubit sources available.

Partly motivated by a desire to develop quantum computational schemes using more physically natural measurements, in ref. 8 we constructed a quantum computational scheme based upon the measurement of two-qubit total angular momentum. More explicitly, this measurement—which we refer to as the singlet/triplet measurement or s/t measurement (following the notation of ref. 1)—consists of only two outcomes, one corresponding to the projector

onto the singlet state \(\left|{{{\Psi }}}^{-}\right\rangle :\!\!=(\left|01\right\rangle -\left|10\right\rangle )/\sqrt{2}\), and the other corresponding to the ‘triplet’ projector onto its orthogonal complement:

(which projects onto the subspace spanned by the ‘triplet’ of states \(\left|00\right\rangle,\left|11\right\rangle,(\left|01\right\rangle+\left|10\right\rangle )/\sqrt{2}\)). Note that because

for any single-qubit unitary U, this measurement has the important property, relevant to later discussion, that it is rotationally invariant, i.e., invariant under local changes of basis/reference frame. The manifest rotational and permutational invariance of the singlet/triplet projectors underpins their generic physical naturalness - these subspaces will often be energetically separated, even in physical systems with degrees of freedom unrelated to the total angular momentum of spin-1/2 particles.

While the scheme of ref. 8 used only the s/t measurement for all dynamical and readout purposes, an additional necessary assumption was that input qubits could be prepared in at least three single-qubit (possibly mixed) states ρa, ρb, ρc with linearly independent Bloch vectors. Rotational invariance then implies that s/t measurements are universal given only an initial qubit mixed state of the form

where the integral represents a uniform average, over the Haar measure dU, of all possible single-qubit unitaries U performed identically on every qubit in the system. Here 3N, the total number of qubits involved in implementing the computation, grows only polynomially in the underlying algorithm size.

The universality of s/t measurements under other assumptions was reconsidered recently by Freedman, Hastings and Shokrian-Zini1, in which the authors proposed a remarkable conjecture, referred to as the ‘STP=BQP conjecture’, namely that s/t measurements alone are universal given essentially arbitrary input states. In ref. 1, the authors posed the conjecture by formally assuming that the inputs are a supply of singlet states. However, it is clear that the inputs could be chosen in many other ways. For instance, maximally mixed qubits are an equivalent choice as they can be produced from singlet states by discarding one particle, and can produce singlet states when measured with the s/t measurement. Moreover, as s/t measurements can create singlet states from almost every input source, the only exception being inputs of such high symmetry they return only (or mostly) triplet outcomes, the STP=BQP conjecture in fact implies that the s/t measurement is quantum universal given almost any input states.

In this paper, we show that the STP=BQP conjecture is in fact true. We do so by demonstrating that replacing Eq. (4) with even a resource of only maximally mixed single-qubits suffices to make the s/t measurement universal. We choose to assume that the inputs are maximally mixed, as these are the only rotationally invariant single-qubit states, and this choice renders our scheme more obviously minimalistic.

Results

In this section, we give an overview of the main ingredients of the proof, including some required elements from previous works1, 8. Technical details are deferred to the 'Methods'.

Prior work

First let us briefly summarise the parts of refs. 1, 8 that we will need. In refs. 1, 8, various ways were proposed for using s/t measurements to perform quantum computation using additional single-qubit resources, which may be either states8 or unitaries1. In any such scheme, it is clear that as the s/t measurement is the only multiparticle operation, it must be the resource that is used to build multiparty entanglement. Further, as the s/t measurement gives probabilistic outcomes, this has to be done offline, so that we only use the entanglement once we are satisfied that it has been created to a sufficient quality.

In ref. 8, this was achieved by building cluster states2, using the triplet outcome of the s/t measurement to fuse smaller entangled clusters into bigger ones, having initially started from entanglement created by the singlet outcome. To execute the computation, single particle measurements were constructed by using s/t measurements and ancilla qubits prepared in known states along the desired measurement axis. In both building the cluster states and executing the measurements, it was initially assumed in8 that there are supplies of highly pure single-qubit states. However, as shown in detail in ref. 8, this assumption could be relaxed significantly to assume only the input ensemble of Eq. (4). Hence the ensemble (4), and s/t measurements on arbitrary pairs of qubits, are sufficient for universal quantum computation.

In ref. 1, the computational power of s/t measurements was considered in other contexts, with the aim of proposing and providing supporting evidence for the STP=BQP conjecture. In addition to demonstrating quantum universality when the s/t measurement is supplemented by single-qubit X, Z gates1, demonstrated that the s/t measurement alone is at least as powerful as the weak model of permutational quantum computation9, and with the addition of post selection, it is equivalent to post-BQP. A sampling problem was also proposed that by definition could be efficiently solved using s/t measurements, in spite of suggestions that it might not be possible classically efficiently.

A key primitive proposed in ref. 1, which we will also make use of in the present work, is the implementation of (an exponentially good) measurement of the total angular momentum by using only repeated pairwise s/t measurements.

Proof strategy

We show that the STP=BQP conjecture is indeed correct by following the approach of our previous work8, but dropping the assumption that we have been given the resource (4). We show instead how such a state may be approximately created efficiently using only s/t measurements acting upon maximally mixed input qubits.

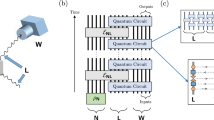

The construction proceeds in two steps, a maximally mixed symmetric state preparation step (‘MMSS preparation’), and a relative localisation step (Fig. 1).

a MMSS preparation: Bringing up a maximally mixed qubit to an MMSS \({\rho }_{K}^{sym}\) of K qubits, and measuring its total angular momentum using a procedure from1 implements a biased random walk that probabilistically generates an MMSS of increasing size. b Relative Localisation: Begin with two MMSS states of M + N qubits. Each can be interpreted as a random mixture of many copies of an unknown pure state. By pairing up and measuring M qubits from each using the s/t measurement (red ovals) we build up information about the relative angle the Bloch vectors of these unknown pure states make against each other. This allows us to estimate the unknown pure states to high accuracy, up to an unimportant global unitary transformation. The remaining qubits can hence be used, together with s/t measurements, to implement cluster state quantum computation, as detailed in ref. 8.

To understand these steps, let us ask ourselves how we might go about creating the state (4)? It is clear that we cannot produce (say) N copies of a particular pure state \({\left|\psi \right\rangle }^{\otimes N}\) just using our available resources, as \({\left|\psi \right\rangle }^{\otimes N}\) is not invariant under an arbitrary U⊗N, whereas the s/t measurements and maximally mixed states are. However, we might consider trying to produce the following state, which we call a maximally mixed symmetric state (‘MMSS’):

This state has an ensemble interpretation of comprising N copies of an unknown pure state, and it is invariant under arbitrary U⊗N. From standard results in the theory of symmetric group representations and/or the theory of quantum angular momentum, it is also equal to the maximally mixed state on the subspace of symmetric states, hence the symbol \({\rho }_{N}^{sym}\). The first step of our argument—the MMSS preparation step—is to show how we can efficiently prepare a state that is a good approximation to \({\rho }_{N}^{sym}\). We do this by starting with two maximally mixed qubits and measuring them with an s/t measurement. With probability 3/4 the qubits are left in the state \({\rho }_{2}^{sym}\). We then add maximally mixed qubits one at a time, and each time we do this we perform a total angular momentum measurement using a procedure described in ref. 1. We will see that if this measurement yields the right outcome then we successfully transform \({\rho }_{n}^{sym}\to {\rho }_{n+1}^{sym}\) to high accuracy, whereas if it fails we transform \({\rho }_{n}^{sym}\to {\rho }_{n-1}^{sym}\) to high accuracy. It turns out that probabilities of each outcome are such that this ‘random walk’ reaches our target \({\rho }_{N}^{sym}\) with only a polynomial cost. We note that this protocol is very similar in spirit to the protocols of section 2 of ref. 1, and it is possible that an appropriate variant of their splitting protocol could be used to create \({\rho }_{N}^{sym}\) more efficiently (the splitting protocol is a primitive in which given an initial multi-qubit state of total angular momentum S, one divides the qubits into two sets A, B and uses s/t measurements to efficiently realise with high accuracy any desired local total angular momenta SA, SB of each subset consistent with the constraint ∣SA − SB∣ ≤ S ≤ SA + SB, and parts of the protocol target the generation of symmetric subspace states on certain subsystems).

In the second step, “relative localisation” we see how to transform three copies of \({\rho }_{{N}^{{\prime} }}^{sym}\) into a good quality copy of (4) for an \({N}^{{\prime} }\) that need only be polynomially larger than N. This is done using measurement-induced relative localisation, in full analogy with a similar phenomenon for optical phase, Bose-Einstein condensate phase, and particle position studied in detail in ref. 10. To understand what we mean by relative localisation, consider two input copies of \({\rho }_{{N}^{{\prime} }}^{sym}\). Each can be interpreted as a supply of (generically different) unknown pure states, say \(\left|a\right\rangle\) and \(\left|b\right\rangle\). If we were to take a qubit in \(\left|a\right\rangle\) and a qubit in \(\left|b\right\rangle\) and measure them with a s/t measurement, then the probability of getting a triplet outcome is given by:

The probability of getting a triplet therefore tells us the angle between the Bloch vectors of the two unknown states \(\left|a\right\rangle\) and \(\left|b\right\rangle\). Hence, we may take two copies of \({\rho }_{{N}^{{\prime} }}^{sym}\) and repeatedly take one qubit from each source, measuring them using s/t measurements. The observed frequency of triplet outcomes will allow us to estimate the angle between the Bloch vectors to high precision. Once we are satisfied with the statistical precision that we have reached, we stop and use the remaining unmeasured qubits for computation. They can be considered to be in a state that is a good approximation of

for some arbitrary \(\left|a\right\rangle\), \(\left|b\right\rangle\), with 0 < ∣〈a∣b〉∣2 < 1 determined by the observed frequency of triplet outcomes. Creating (4) follows exactly the same process, but starting with three copies of \({\rho }_{{N}^{{\prime} }}^{sym}\) rather than two. Up to picking an arbitrary handedness of our coordinate system, it turns out that the frequency of triplet outcomes observed between the three sources allows us to create (4) with high accuracy at polynomial cost. This allows us to conclude that the STP=BQP conjecture is indeed correct.

We note that our constructions are unlikely to be optimal, and it is likely that further work could make them significantly more efficient.

Discussion

If we consider operations needed to build a quantum computer from scratch (i.e., without a prior source of entanglement), it is clear that for quantum resources we at least need (i) a supply of qubits, (ii) at least one two-qubit operation to generate entanglement, and (iii) at least one binary outcome measurement so that the computation readout is humanly accessible. The STP=BQP theorem is noteworthy because it meets (ii) and (iii) with only a single two-outcome, two-qubit measurement, with no other dynamical operation or measurement needed. It is hence even more remarkable that it meets (i) in a manner almost completely agnostic about the initial state of the qubit resource: provided that we are promised that a sufficient number of singlet outcomes will occur, the singlet outcomes can be used to prepare the single-qubit maximally mixed states required.

The model is also minimal with respect to its use of rotationally invariant primitives. This could be of practical import for systems subject to collective decoherence.

From a foundational perspective, it also is interesting that the computation would be described identically, and using real numbers only, in every choice of reference frame.

Elaborating on this last point: consider two non-communicating parties observing a physical system performing a classical computation, and each writing down a mathematical description of such. It can reasonably be arranged that these descriptions are identical, perhaps up to an ambiguity about which physical state of a bit in the machine corresponds to mathematical 0, and which to 1.

The same is not true if the parties instead observe a device implementing a quantum computation via the standard circuit architecture. While some physical systems do have an intrinsic natural (say) Z eigenbasis (e.g., right/left circular polarisation of a photon, ground/excited atomic states), agreeing on the X eigenbasis (corresponding to agreeing on an orientation in space for polarisation, or origin of time for atomic energy levels) for all known physical qubits requires extra exchange of physical information to align the reference frame in question11. This lack of agreement could be annoying. As one example, if one observer does a universal computation using a simple (say, real-valued) gate set the other would typically disagree and say that it was messy and complex-valued. Another is that because the parties disagree on the correct Pauli bases, procedures that would be manifestly fault-tolerant using the stabiliser formalism to one observer would not be so to the other.

By contrast, the scheme presented in this work has the following property: no matter how many such disagreeably misaligned observers there are, we can arrange for them to all describe a single universal quantum computation being performed in such a way that every single experimenter will at all times during the computation assign the exact same mathematical description in terms of states and operators to all elements of the computation. (Again up to an ambiguity as to which of the outcomes Ps/t is assigned 0/1.) It is perhaps also philosophically interesting that this description would at all times remain real-valued.

While other schemes for achieving similar basis independence can be constructed using methods reviewed in ref. 12 on encoding and processing information in decoherence-free subsystems, they are considerably more complex than the methods of this work. For example, they require encoding in large multi-qubit states, more complicated unitary gates, and often make use of intermediate operations that are not, in fact, rotationally invariant.

We do not anticipate that our scheme is optimal in terms of resource scaling, nor is it explicitly fault-tolerant in its present form. In this regard it is worth noting that a considerably simpler approach than generation of increasingly large cluster states and subsequent simulation of single-qubit measurements (as was done in ref. 8) would probably be to implement fusion-based quantum computing13. In that approach, one need only show the ability to create small, constant-sized states and the ability to implement a Bell measurement (or one of its fusion variations). That approach also will automatically yield fault tolerance. A deeper analysis of such may merit further attention.

Methods

In this section, we present details of the proof that STP=BQP. As described in the Results there are two steps to the procedure: the MMSS preparation step, and the relative localisation step. In the first subsection, we describe the MMSS preparation, and in the following two subsections we describe the relative localisation.

MMSS preparation

The construction proceeds recursively. Suppose that we start with K qubits prepared in \({\rho }_{K}^{sym}\). We bring in a new qubit in the maximally mixed state and randomly pick pairs of qubits to undergo a polynomial number of s/t measurements (more efficient choices than random pairings will certainly exist—for example, interpreting the switches of the networks in ref. 14 as s/t measurement locations). If we only ever find triplet outcomes, we perform an exponentially good approximate projection1 into the state \({\rho }_{K+1}^{sym}\). This occurs with probability P(K) = (K + 2)/(2K + 2) > 1/2. If we ever find a singlet outcome we discard those two qubits and the remaining K − 1 qubits are left in \({\rho }_{K-1}^{sym}\).

We can interpret the protocol as a 1-d random walk process where we begin at K = 1, and have a probability P(K) of stepping to the right, and 1 − P(K) of stepping to the left. The boundary at K = 0 is absorbing (fail, restart) and let us consider our target to be creating \({\rho }_{N}^{sym}\) for some fixed N. The solution to this problem can be found in ref. 15. Note that the particle must eventually be absorbed at one or the other of the boundaries. Eq. (2.7) of ref. 15 for our case yields that the probability it is absorbed at the right hand boundary is (N + 1)/2N, i.e., slightly higher than 1/2. Thus we have finite probability of eventual success. To ensure the resources consumed (qubits/time steps) are polynomial, we need to compute the conditional mean for the number of steps before stopping (absorption at a boundary). This can be found by solving the recurrence relations (3.1)–(3.3) in ref. 15. For starting at K = 1, we find the expected number of steps before absorption is (N2 + 3N − 4)/6, which grows polynomially with N.

Relative localisation

The second step is to see how two sufficiently large maximally mixed symmetric states can be converted into an ensemble equivalent to a Haar–twirled product state over pure states with fixed overlap, i.e., a state of the form:

for some arbitrary (but known) \(\left|a\right\rangle\), \(\left|b\right\rangle\) with 0 < ∣〈a∣b〉∣2 < 1.

This can be done by using measurement-induced localisation of the relative angle between initial (mixtures of) spin coherent states, similar to the cases studied in ref. 10. Once we localise two such ensembles, we can use the same procedure to relationally localise further ensembles to the first two—we leave that analysis to the next subsection, and here consider only two ensembles.

The basic intuition is simple: we start with two sources \({\rho }_{N+M}^{sym}\otimes {\rho }_{N+M}^{sym}\), as created in the first step, and interpret each as an ensemble of N + M copies of a randomly selected pure state. We pair up M of the spins from each source and perform the singlet/triplet measurement on each pair, enabling us to get a good estimate of the overlap between the two (random) pure states. We then use the remaining 2N qubits for computation, under the assumption that the overlap is the estimated one (we remark that the relative localisation could possibly be induced more efficiently using approximate total angular measurement protocols of ref. 1 together with some form of global angular momentum inference scheme, see ref. 16). As we now demonstrate, a fixed overall error across the 2N qubits requires M to grow only polynomially in N.

Because of the collective unitary freedom, we are free to decide that the first MMSS is actually a source of \({\left|0\right\rangle }^{\otimes N+M}\), and the second state \({\left|\theta \right\rangle }^{\otimes N+M}\) is specified by the relative angle θ ∈ [0, π) its Bloch vector makes with the first source state, where θ has p.d.f \(\sin (\theta )/2\). An s/t measurement on \(\left|0\right\rangle \otimes \left|\theta \right\rangle\) gives a triplet outcome with probability \(q=(1+{\cos }^{2}(\theta /2))/2=(3+\cos (\theta ))/4.\) The total probability over the M measurements of obtaining n1 triplet outcomes is

This has the convenient interpretation that the probability of seeing a given number of triplets is described by a Bernoulli trial with a uniformly chosen q in the interval [1/2, 1]. Estimating θ corresponds to estimating q given the observed M, n1, so we will also write \(\left|q\right\rangle :=\left|\theta \right\rangle\).

Considering the function

we can use standard identities (en.wikipedia.org/wiki/binomial_coefficient) for partial sums of binomial coefficients to see that T(a, b) is exponentially close (in M = (a + b)) to \({\left(\left(\begin{array}{c}a+b\\ a\end{array}\right)(a+b+1)\right)}^{-1}\) when a > (a + b)/2. Applying this to P(n1), we find that it is exponentially close to 2/(M + 1), which means that with high probability on any given run of the procedure we will observe n1 > M/2 triplet outcomes, and from now on, we consider only situations where this has occurred.

The probability density of q given n1 triplet outcomes is (over the domain \(q\in \left[1/2,\, 1\right]\)):

from which we wish to bound the goodness of our estimated value of q (and hence θ). The mean and variance for this inference problem are given by

where ≈ denotes exponential closeness. A simple upper bound on the variance is then σ2 < 1/M.

Now, we are roughly in the following situation: we will operate as if q = μ, i.e., the state of the second N qubits is \({\left|\mu \right\rangle }^{\otimes N}\) (by collective rotational freedom taken to be a state in the right semicircle of the XZ plane in the Bloch sphere), but with a low probability (≤1/h2 by the Chebyshev inequality (en.wikipedia.org/wiki/chebyshev’s_inequality)) the actual value of q could be further than hσ from this. In later calculations, we will pick h = M1/6. The error we want to understand will ultimately arise from the trace distance between the estimated state and the actual one, and so we wish to bound this.

To make things simpler we first ask, for any pair of q1, q2 with a fixed value of ∣q1 − q2∣, what is the largest possible trace distance between the corresponding quantum states \(\left|{q}_{1}\right\rangle\), \(\left|{q}_{2}\right\rangle\)? Elementary considerations yield:

This can be shown as follows. The state \(\left|q\right\rangle\) has z component of its Bloch vector given by z = 4q − 3, so a given value of ∣q1 − q2∣ constrains the Bloch vectors of \(\left|{q}_{1}\right\rangle\), \(\left|{q}_{2}\right\rangle\) to have a projection on the z axis to a fixed interval 4∣q1 − q2∣. Positioning one end of this interval at either the north or south poles of the Bloch sphere yields the largest possible trace distance consistent with this projected value. We can use Eq. (15) to bound the overall error via:

where the first inequality is the triangle inequality, the second is because for pure states it holds that \(\parallel {\psi }^{\otimes N}-{\phi }^{\otimes N}\parallel \le \sqrt{N-1}\parallel \psi -\phi \parallel\), the third is from the bound (15), the fourth is concavity of the square root, the fifth is from the largest probabilities (and ∣q − μ∣ values) consistent with the Chebyshev inequality, and the last is obtained by using \(\sigma \le 1/\sqrt{M}\) and picking h = M1/6.

We deduce that given the target overall error of ϵ, we can choose \(M \sim {(N/{\epsilon }^{2})}^{3}\), which is a polynomial cost.

Relatively localising a further source

We now describe how the errors arising from the relative localisation of a further source to the first two may be controlled. The method is essentially analogous to the discussion in the previous subsection, albeit with some modifications to control more complicated integrals. Let us begin by assuming that we have already taken two MMSS sources, and have relatively localised them. One source is (by the protocol) exactly \(\left|0\right\rangle\), the other is \(\left|\mu \right\rangle\), which is subject to statistical error. However, we will proceed as if it is exact, noting by our previous argument that the error introduced by assuming this can be made arbitrarily small at polynomial cost. Recall that \(\left|\mu \right\rangle\) can be assumed to have Bloch vector components x > 0 and y = 0 (i.e., is in the positive x direction of the XZ plane). Now we bring in a third MMSS, which we consider to be a source of a random state \(\left|\psi \right\rangle\). This source will give a Bloch vector linearly independent from the other two sources almost surely. We will localise it relative to the other two sources by using triplet measurements. We will call this ‘two source relative localisation’, and refer to the previous relative localisation as ‘single source relative localisation’. As \(\left|0\right\rangle\) and \(\left|\mu \right\rangle\) are in the XZ plane, the two source relative localisation will give us information on the x and z components of the Bloch vector of \(\left|\psi \right\rangle\). As \(\left|\psi \right\rangle\) is pure, the y component will then be fixed up to a sign as \(y=\pm \sqrt{1-{x}^{2}-{z}^{2}}\). We are free to pick one sign as that corresponds to choosing the handedness of our coordinate system, so we will assume that \(y=+ \sqrt{1-{x}^{2}-{z}^{2}}\). Denote the Bloch vectors of \(\left|0\right\rangle\) and \(\left|\mu \right\rangle\) by (0, 0, 1) and \((\sin (\theta ),0,\cos (\theta ))\) (where θ ∈ (0, π)), respectively, and denote the Bloch vector of the random state \(\left|\psi \right\rangle\) by (x, y, z). The probabilities of getting triplet outcomes when measuring \(\left|0\right\rangle \otimes \left|\psi \right\rangle\) and \(\left|\mu \right\rangle \otimes \left|\psi \right\rangle\) are the random variables given by:

respectively. A pair (qa, qb) is hence in one-to-one correspondence with (x, z), and so through observed estimates of (qa, qb), we will be able to estimate \(\left|\psi \right\rangle\) (as we take \(y=+ \sqrt{1-{x}^{2}-{z}^{2}}\)). If there were no connection between \(\left|0\right\rangle\) and \(\left|\mu \right\rangle\) there would be no correlation between qa, qb. However, because of Eq. (22), there will be restrictions on the possible values of qa, qb. Let Q be the set {qa, qb∣qa ∈ [1/2, 1], qb ∈ [1/2, 1]} of all possible qa, qb pairs, when we neglect correlations, and denote by R ⊂ Q the subset of values of qa, qb permitted by Eq. (22). Note R depends on the value of θ. However, we suppress this dependence as it will not play a significant role. Over Q let us denote the p.d.f. of qa, qb by f(qa, qb) - although this will be zero on Q\R, it is convenient to define it on the whole of Q. Again, f(qa, qb) depends on θ but the precise details will not be needed. To simplify our computations we will later neglect the correlations between qa, qb, and perform inference as if they come from a product distribution on the whole of Q. This will allow us to utilise bounds computed for the single-source relative localisation. Even though this adds an additional layer of approximation, it allows relatively straightforward bounds on error to be computed, and the overall error incurred can still be made arbitrarily small at polynomial cost.

We begin by constructing for the two source case, a bound similar to Eq. (15). First consider two pure states \(\left|{\psi }_{1}\right\rangle\), \(\left|{\psi }_{2}\right\rangle\) with Bloch vectors \(({x}_{1},\, {y}_{1}=+ \sqrt{1-{x}_{1}^{2}-{z}_{1}^{2}},\, {z}_{1})\) and \(({x}_{2},\, {y}_{2}=+ \sqrt{1-{x}_{2}^{2}-{z}_{2}^{2}},\, {z}_{2})\) respectively. Consider the projections of the Bloch vectors in the XZ plane (i.e., (x1, z1) and (x2, z2)), these projections have a separation \(l=\sqrt{{({x}_{1}-{x}_{2})}^{2}+{({z}_{1}-{z}_{2})}^{2}}\). What is the largest possible trace distance between the two pure states, consistent with a given value of l? It is not difficult to show that the solution is the same as given in Eq. (15), i.e.,

It is convenient to derive an upper bound to l utilising qa, qb as our coordinates instead of x, z. Given two pure states \(\left|{\psi }_{1}\right\rangle\), \(\left|{\psi }_{2}\right\rangle\) represented by (qa, qb) and (qa + Δqa, qb + Δqb), respectively, let us bound the value of l. We note that we can write Eq. (22) as:

Define a matrix A such that

(A is well-defined under our assumption that θ ∈ (0, π)). Denoting the operator norm of A by constant c (while this depends upon θ, in this stage of the relative localisation we are treating θ as a constant), and using the triangle inequality, we find that

We then may put this together with Eq. (23), and use the triangle inequality once more, to give:

It will be convenient to use this inequality is it separates contributions from errors in estimates of qa and errors in estimates of qb, and this will allow us to straightforwardly apply the single-source analysis. Let na, nb be the number of triplet outcomes observed when localising to M copies of \(\left|0\right\rangle\), and M copies of \(\left|\mu \right\rangle\), respectively. Let (omitting the ‘dqadqb’ in this and all subsequent double integrals to keep the notation uncluttered)

be the probability density of the state being described by qa, qb conditioned upon observing na, nb triplets when measuring against M copies of \(\left|0\right\rangle\) and M copies of \(\left|\mu \right\rangle\). As we will be neglecting correlations between qa and qb when estimating them in our two source relative localisation, we use the single-source estimates. Consequently, let μa, μb be the mean values of qa, qb, and \({\sigma }_{a}^{2},{\sigma }_{b}^{2}\) the corresponding variances, as constructed in Eq. (14) for the single-source case. Let \(\sigma=\max \{{\sigma }_{a},{\sigma }_{b}\}\le 1/\sqrt{M}\). Let \(\left|{\mu }_{a},{\mu }_{b}\right\rangle\) be the pure state corresponding to setting qa = μa and qb = μb exactly, and \(\left|{q}_{a},{q}_{b}\right\rangle\) be the pure state corresponding to setting qa and qb exactly. In analogy to the single-source relative localisation, we have (omitting steps that are essentially identical to the previous case):

We would hence like to control expressions like:

where Pr(qa, qb∣na, nb, M) is given by Eq. (28). Intuitively, it is clear that while the conditional probability Pr(qa, qb∣na, nb, M) is correlated across qa, qb, after doing many observations we should still expect the posterior distribution on qa, qb to become strongly peaked around μa, μb anyway, and so our estimates adapted from the single-source scheme should still be close. Let us show that this is indeed the case with high enough probability. Let Ch ⊂ Q be the ‘close’ set {qa, qb∣qa ∈ [μa − hσ, μa + hσ], qb ∈ [μb − hσ, μb + hσ]} of qa, qb values that are within hσ of the estimates, and Fh = Q\Th be the ‘far’ set of qa, qb values that are more than hσ from the estimates, where σ is the maximum of the two variances. We will pick the value of h later. Let us also define the product measure Prod(qa, qb∣na, nb, M) ≔ Pr(qa∣na, M)Pr(qb∣nb, M) that would arise if there were no correlation (i.e., if we were relatively localising to two sources that are independent of each other, using the single-source scheme). Explicitly:

(although the inclusion of the 1/4 in both the numerator and denominator is superfluous, we include it as it is the analogue of f(qa, qb)). Let us define the following integrals:

From the definitions of these integrals, we have that

To control this error, we need to first need to upper bound F/C. We will do this by first computing an upper bound to F and a lower bound to C. For F we first note that:

where the relative entropy \(H({\overrightarrow{n}}_{a}| {\overrightarrow{q}}_{a})\) and Shannon entropy \(H({\overrightarrow{n}}_{a})\) are constructed from the probability distributions defined by

with similar definitions for b. We may now appeal to Pinsker’s inequality (en.wikipedia.org/wiki/pinsker’s_inequality) to lower bound the relative entropy. Together with the fact that \(H({\overrightarrow{n}}_{a}),\, H({\overrightarrow{n}}_{b})\ge 0\) we obtain:

where the norm represents the 1-norm. To a high degree of approximation μa = na/M and μb = nb/M, and so from our definition of Fh, this becomes:

Now let us turn to lower bounding C. Let us tentatively assume that \(\mathop{\min }\limits_{{C}_{h}}f({q}_{a},\, {q}_{b}) \, > \, k\), for some constant k > 0 (which up to some relatively mild restrictions we will be able to choose). We will later discuss why we may make this assumption with high enough probability. Hence we have:

We now note that the integral in this lower bound is the probability, under the product distribution, of each of qa, qb being within hσ of their means. Exploiting the Chebyshev inequality (applied independently to both parts of the product distribution) we hence get

Putting the upper bound on F together with the lower bound for C gives

If we now pick, for example, h = M1/4, then the previous bound becomes

As with the single-source case, this leads to a polynomial overhead for any desired target error.

What remains is demonstrating that we may pick a suitable constant k > 0. For a given (large enough) value of M and observed values of na, nb we would like the resulting set Ch (which is fixed by na, nb and by our choice of h = M1/4) to be such that \(\mathop{\min }\limits_{{C}_{h}}f({q}_{a},{q}_{b}) \, > \, k\). For a given k consider the upper level set Lk of f(qa, qb), i.e., Lk ≔ {(qa, qb)∣f(qa, qb) ≥ k}, so our requirement can be re-expressed as the requirement Ch ⊂ Lk. Assume that we have picked a k such that Lk is of non-zero size. For a small constant tolerance ϵ > 0 that we shortly choose, consider the subset \({W}_{k}^{\epsilon }\subset {L}_{k}\) of (qa, qb) ∈ Lk such that for Δ ≔ hσ + ϵ, the neighbourhood (qa − Δ, qa + Δ) × (qb − Δ, qb + Δ) is contained in Lk. As the upper bound hσ ≤ 1/M1/4 is not increasing in M, we can assume (say by assuming we do not consider M less than some large enough constant) that this target subset \({W}_{k}^{\epsilon }\) is of constant size. So with constant probability the values of qa, qb that are realised will fall within \({W}_{k}^{\epsilon }\). The values of na/M ≈ μa, nb/M ≈ μb will converge to these qa, qb exponentially quickly in M, and so by choosing the tolerance ϵ to accommodate this, with constant probability the observed na, nb will be such that Ch ⊂ Lk. If the observed na, nb do not satisfy Ch ⊂ Lk, we may simply abandon and repeat until we get a suitable na, nb.

Data availability

No data were generated or used in the work.

Code availability

No code was generated or used in the work.

References

Freedman, M. H., Hastings, M. B. & Shokrian Zini, M. Symmetry protected quantum computation. Quantum 5, 554 (2021).

Raussendorf, R. & Briegel, H. J. A one-way quantum computer. Phys. Rev. Lett. 86, 5188 (2001).

Nielsen, M. A. Quantum computation by measurement and quantum memory. Phys. Lett. A 308, 96 (2003).

Fenner, S. A. & Zhang, Y. Universal quantum computation with two- and three-qubit projective measurements. Preprint at https://arxiv.org/abs/quant-ph/0111077 (2001).

Leung, D. W. Quantum computation by measurements. Int. J. Quantum Inf. 02, 33 (2004).

Perdrix, S. State transfer instead of teleportation in measurement-based quantum computation. Int. J. Quantum Inf. 03, 219 (2005).

Browne, D. E. & Rudolph, T. Resource-efficient linear optical quantum computation. Phys. Rev. Lett. 95, 010501 (2005).

Rudolph, T. & Virmani, S. S. A relational quantum computer using only two-qubit total spin measurement and an initial supply of highly mixed single-qubit states. N. J. Phys. 7, 228 (2005).

Jordan, S. P. Permutational quantum computing. Quantum Inf. Comput. 10, 470 (2010).

Cable, H., Knight, P. L. & Rudolph, T. Measurement-induced localization of relative degrees of freedom. Phys. Rev. A 71, 042107 (2005).

Rudolph, T. Quantum information is physical too. Preprint at https://arxiv.org/abs/quant-ph/9904037 (1999).

Bartlett, S. D., Rudolph, T. & Spekkens, R. W. Reference frames, superselection rules, and quantum information. Rev. Mod. Phys. 79, 555 (2007).

Bartolucci, S. et al. Fusion-based quantum computation. Nat. Commun. 14, 912 (2023).

Czumaj, A. Random permutations using switching networks.Random permutations using switching networks. In Proceedings of The Forty-seventh Annual ACM Symposium on Theory of Computing 705–712 (ACM, 2015).

El-Shehawey, M. A. Absorption probabilities for a random walk between two partially absorbing boundaries: I. J. Phys. A Math. Gen. 33, 9005 (2000).

Fanizza, M., Rosati, M., Skotiniotis, M., Calsamiglia, J. & Giovannetti, V. Beyond the swap test: optimal estimation of quantum state overlap. Phys. Rev. Lett. 124, 060503 (2020).

Acknowledgements

S.S.V. acknowledges thought-provoking discussions with Nihaal Virmani. The authors thank Imperial College London Open Access Fund for funding the open access costs for this article.

Author information

Authors and Affiliations

Contributions

T.R. and S.S.V. contributed equally to this work.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks the anonymous reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Rudolph, T., Virmani, S.S. The two-qubit singlet/triplet measurement is universal for quantum computing given only maximally-mixed initial states. Nat Commun 14, 7800 (2023). https://doi.org/10.1038/s41467-023-43481-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41467-023-43481-y

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.