1 Introduction

Community detection is the task of dividing a network — typically one which is large — into many smaller groups of nodes that have a similar contribution to the overall network structure. With such a division, we can better summarize the large-scale structure of a network by describing how these groups are connected, rather than describing each individual node. This simplified description can be used to digest an otherwise intractable representation of a large system, providing insight into its most important patterns, how these patterns relate to its function, and the underlying mechanisms responsible for its formation.

Because of its important role in network science, community detection has attracted substantial attention from researchers, especially in the last 20 years, culminating in an abundant literature (see Refs. [Reference Fortunato1, Reference Fortunato and Hric2] for a review). This field has developed significantly from its early days, especially over the last 10 years, during which the focus has been shifting towards methods that are based on statistical inference (see e.g. Refs. [Reference Moore3–Reference Peixoto, Doreian, Batagelj and Ferligoj5]).

Despite this shift in the state-of-the-art, there remains a significant gap between the best practices and the adopted practices in the use of community detection for the analysis of network data. Some of the earliest methods proposed remain in widespread use, despite the many serious shortcomings that have been uncovered over the years. Most of these problems have been addressed by more recent methods, which have also contributed to a much deeper theoretical understanding of the problem of community detection [Reference Moore3, Reference Abbe4, Reference Decelle, Krzakala, Moore and Zdeborová6, Reference Krzakala7].

Nevertheless, some misconceptions remain and are still promoted. Here we address some of the more salient ones, in an effort to dispel them. These misconceptions are not uniformly shared, and those who pay close attention to the literature will likely find few surprises here. However, it is possible that many researchers employing community detection are simply unaware of the issues with the methods being used. Perhaps even more commonly, there are those who are in fact aware of them, but not of their actual solutions, or of the fact that some supposed countermeasures are ineffective.

Throughout the following we will avoid providing “black box” recipes to be followed uncritically, and instead try as much as possible to frame the issues within a theoretical framework, such that the criticisms and solutions can be justified in a principled manner.

We will set the stage by making a fundamental distinction between “descriptive” and “inferential” community detection approaches. As others have emphasized before [Reference Schaub, Delvenne, Rosvall and Lambiotte8], community detection can be performed with many goals in mind, and this will dictate which methods are most appropriate. We will provide a simple “litmus test” that can be used to determine which overall approach is more adequate, based on whether our goal is to seek inferential interpretations. We will then move to a more focused critique of the method that is arguably the most widely employed — modularity maximization. This method has an exemplary character, since it contains all possible pitfalls of using descriptive methods for inferential aims. We will then follow with a discussion of myths, pitfalls, and half-truths that obstruct a more effective analysis of community structure in networks.

(We will not give a thorough technical introduction to inferential community detection methods, which can be found instead in Ref. [Reference Peixoto, Doreian, Batagelj and Ferligoj5]. For a practical guide on how to use various inferential methods, readers are referred to the detailed HOWTO [Footnote 1] available as part of the graph-tool Python library [Reference Peixoto9].)

2 Descriptive vs. inferential community detection

At a very fundamental level, community detection methods can be divided into two main categories: “descriptive” and “inferential.”

Descriptive methods attempt to find communities according to some context-dependent notion of a good division of the network into groups. These notions are based on the patterns that can be identified in the network via an exhaustive algorithm, but without taking into consideration the possible rules that were used to create the patterns uncovered. These patterns are used only to describe the network, not to explain it. Usually, these approaches do not articulate precisely what constitutes community structure to begin with, and focus instead only on how to detect such patterns. For this kind of method, concepts of statistical significance, parsimony, and generalizability are usually not evoked.

Inferential methods, on the other hand, start with an explicit definition of what constitutes community structure, via a generative model for the network. This model describes how a latent (i.e. not observed) partition of the nodes would affect the placement of the edges. The inference consists in reversing this procedure to determine which node partitions are more likely to have been responsible for the observed network. The result of this is a “fit” of a model to data, which can be used as a tentative explanation of how the network came to be. The concepts of statistical significance, parsimony, and generalizability arise naturally and can be quantitatively assessed in this context.

Descriptive community detection methods are by far the most numerous, and those that are in most widespread use. However, this contrasts with the current state-of-the-art, which is composed in large part of inferential approaches. Here we point out the major differences between them and discuss how to decide which is more appropriate, and also why one should in general favor the inferential varieties whenever the objective is to derive generative interpretations from data.

2.1 Describing vs. explaining

We begin by observing that descriptive clustering approaches are the methods of choice in certain contexts. For instance, such approaches arise naturally when the objective is to divide a network into two or more parts as a means to solve a variety of optimization problems. Arguably, the most classic example of this is the design of very large scale integrated (VLSI) circuits [Reference Jacob Baker10]. The task is to combine up to billions of transistors into a single physical microprocessor chip. Transistors that connect to each other must be placed together to take less space, consume less power, reduce latency, and reduce the risk of cross-talk with other nearby connections. To achieve this, the initial stage of a VLSI process involves the partitioning of the circuit into many smaller modules with few connections between them, in a manner that enables their efficient spatial placement, i.e. by positioning the transistors in each module close together and those in different modules farther apart.

Another notable example is parallel task scheduling, a problem that appears in computer science and operations research. The objective is to distribute processes (i.e. programs, or tasks in general) between different processors, so they can run at the same time. Since processes depend on the partial results of other processes, this forms a dependency network, which then needs to be divided such that the number of dependencies across processors is minimized. The optimal division is the one where all tasks are able to finish in the shortest time possible.

Both examples above, and others, have motivated a large literature on “graph partitioning” dating back to the 1970s [Reference Kernighan11–Reference Bichot and Siarry13], which covers a family of problems that play an important role in computer science and algorithmic complexity theory.

Although reminiscent of graph partitioning, and sharing with it many algorithmic similarities, community detection is used more broadly with a different goal [Reference Fortunato1, Reference Fortunato and Hric2]. Namely, the objective is to perform data analysis, where one wants to extract scientific understanding from empirical observations. The communities identified are usually directly used for representation and/or interpretation of the data, rather than as a mere device to solve a particular optimization problem. In this context, a merely descriptive approach will fail at giving us a meaningful insight into the data, and can be misleading, as we will discuss in the following.

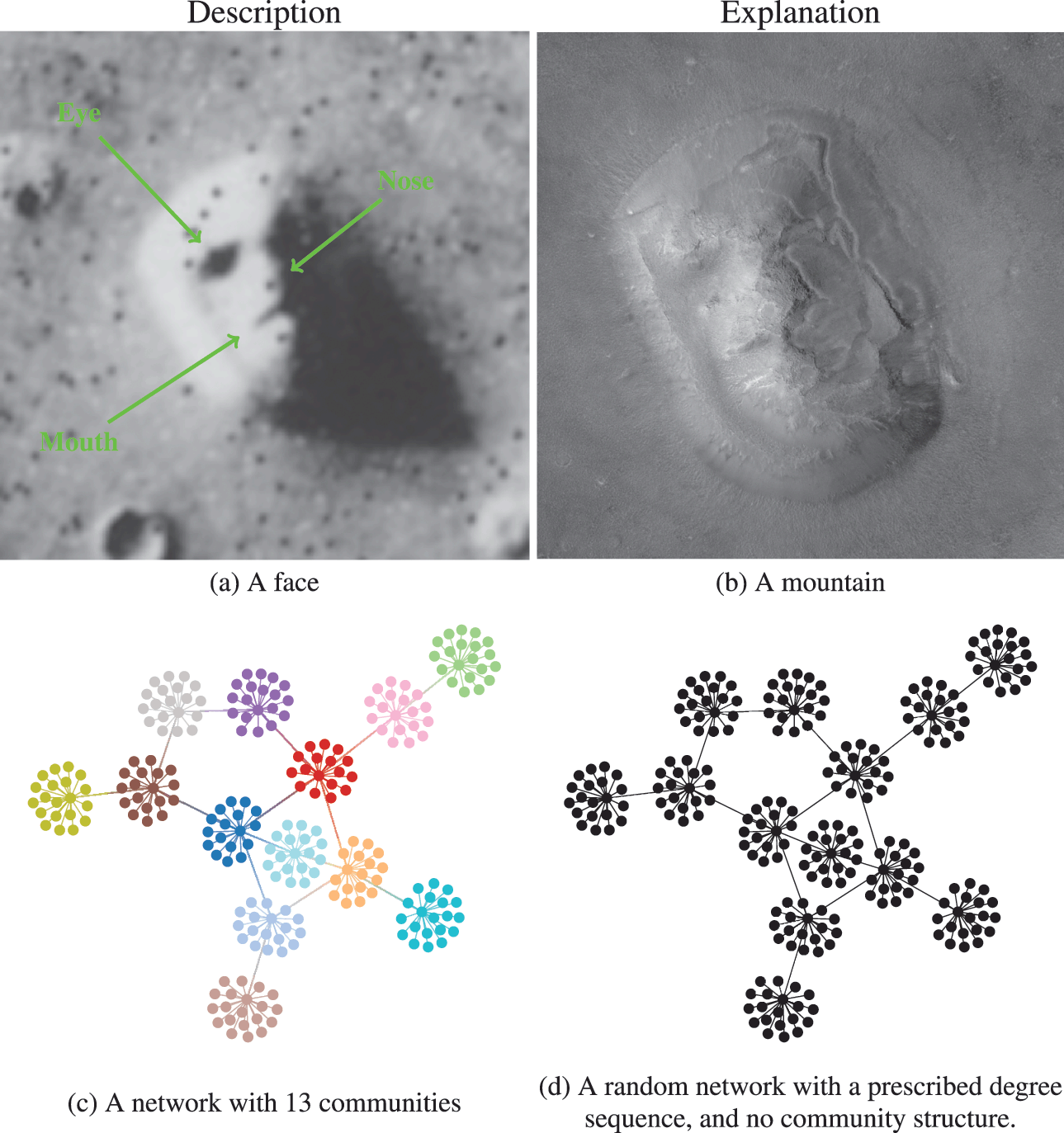

We illustrate the difference between descriptive and inferential approaches in Fig. 1. We first make an analogy with the famous “face” seen on images of the Cydonia Mensae region of the planet Mars. A merely descriptive account of the image can be made by identifying the facial features seen, which most people immediately recognize. However, an inferential description of the same image would seek instead to explain what is being seen. The process of explanation must invariably involve at its core an application of the law of parsimony, or Occam’s razor. This principle states that, when considering two hypotheses compatible with an observation, the simpler one must prevail. Employing this logic results in the conclusion that what we are seeing is in fact a regular mountain, without denying that it looks like a face in that picture and instead acknowledging that it does so accidentally. In other words, the “facial” description is not useful as an explanation, as it emerges out of random features rather than exposing any underlying mechanism.

Figure 1 Difference between descriptive and inferential approaches to data analysis. As an analogy, in panels (a) and (b) we see two representations of the Cydonia Mensae region on Mars. Panel (a) is a descriptive account of what we see in the picture, namely a face. Panel (b) is an inferential representation of what lies behind it, namely a mountain (this is a more recent image of the same region with a higher resolution to represent an inferential interpretation of the figure in panel (a)). More concretely for the problem of community detection, in panels (c) and (d) we see two representations of the same network. Panel (c) shows a descriptive division into 13 assortative communities. In panel (d) we see an inferential representation as a degree-constrained random network, with no communities, since this is a more likely model of how this network was formed (see Fig. 2).

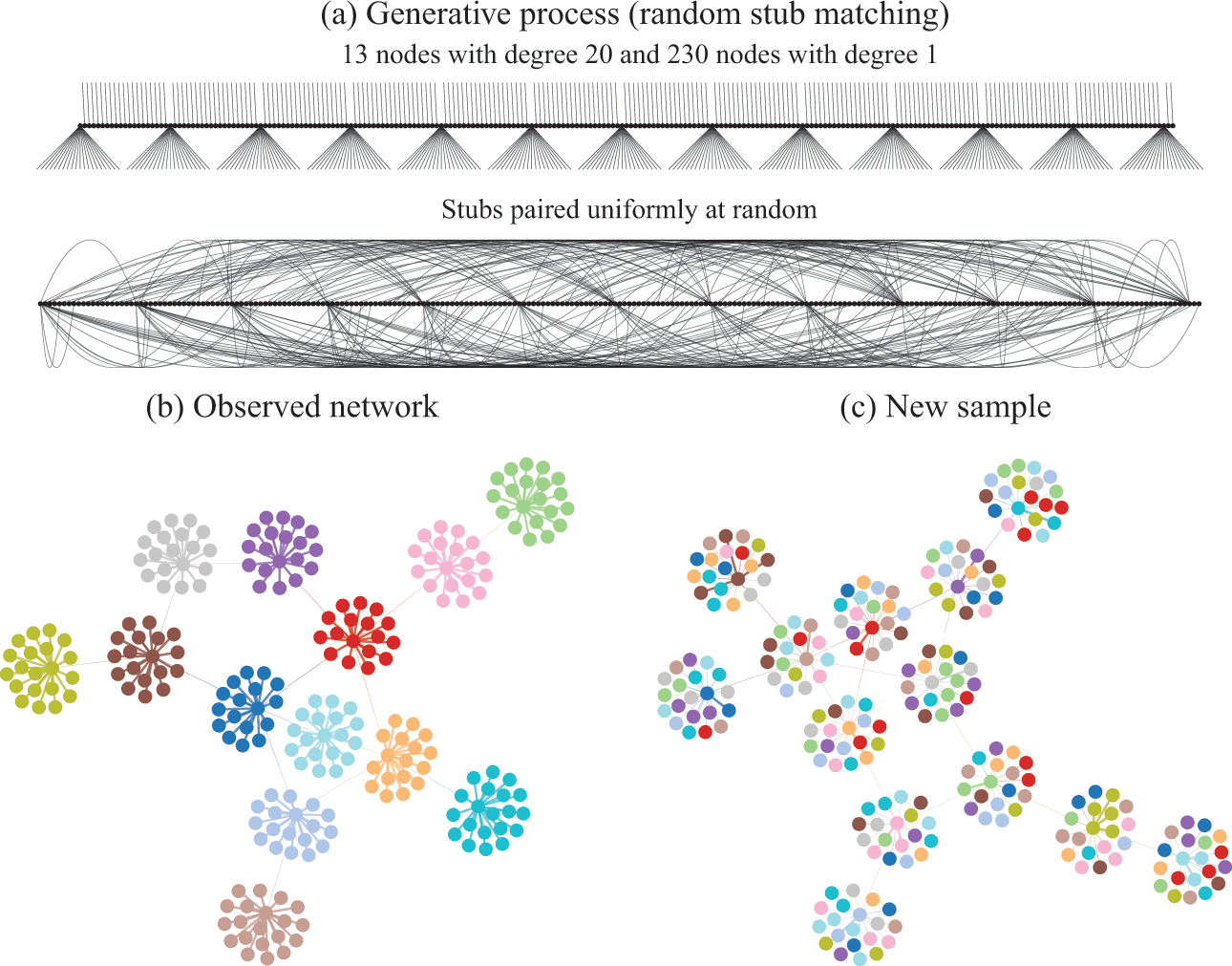

Figure 2 Descriptive community detection finds a partition of the network according to an arbitrary criterion that bears in general no relation to the rules that were used to generate it. In (a) is shown the generative model we consider, where first a degree sequence is given to the nodes (forming “stubs”, or “half-edges”) which then are paired uniformly at random, forming a graph. In (b) is shown a realization of this model. The node colors show the partition found with virtually any descriptive community detection method. In (c) is shown another network sampled from the same model, together with the same partition found in (b), which is completely uncorrelated with the new apparent communities seen, since they are the mere byproduct of the random placement of the edges. An inferential approach would find only a single community in both (b) and (c), since no partition of the nodes is relevant for the underlying generative model.

Leaving the analogy and returning to the problem of community detection, in Fig. 1(c) and (d) we see a descriptive and an inferential account of an example network, respectively. The descriptive one is a division of the nodes into 13 assortative communities, which would be identified with many descriptive community detection methods available in the literature. Indeed, we can verify visually that these groups form assortative communities [Footnote 2], and most people would agree that these communities are really there, according to most definitions in use: these are groups of nodes with many more internal edges than external ones. However, an inferential account of the same network would reveal something else altogether. Specifically, it would explain this network as the outcome of a process where the edges are placed at random, without the existence of any communities. The communities that we see in Fig. 1(c) are just a byproduct of this random process, and therefore carry no explanatory power. In fact, this is exactly how the network in this example was generated, i.e. by choosing a specific degree sequence and connecting the edges uniformly at random.

In Fig. 2(a) we illustrate in more detail how the network in Fig. 1 was generated: The degrees of the nodes are fixed, forming “stubs” or “half-edges,” which are then paired uniformly at random, forming the edges of the network [Footnote 3]. In Fig. 2(b), as in Fig. 1, the node colors show the partition found with descriptive community detection methods. However, this network division carries no explanatory power beyond what is contained in the degree sequence of the network, since it is generated otherwise uniformly at random. This becomes evident in Fig. 2(c), where we show another network sampled from the same generative process, i.e. another random pairing, but partitioned according to the same division as in Fig. 2(b). Since the nodes are paired uniformly at random, constrained only by their degree, this will create new apparent “communities” that are always uncorrelated with one another. Like the “face” on Mars, they can be seen and described, but they cannot (plausibly) explain how the network came to be.
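The stub-pairing process described above can be sketched in a few lines of Python. This is only a minimal illustration, not the code used to produce the figures: the function names `configuration_model` and `degree_sequence` are hypothetical, and the sketch assumes that self-loops and parallel edges are allowed, which is the simplest way to pair stubs uniformly at random.

```python
import random


def configuration_model(degrees, seed=None):
    """Pair the stubs of a degree sequence uniformly at random.

    Assumption of this sketch: the result is a multigraph, so
    self-loops and parallel edges may occur.
    """
    if sum(degrees) % 2:
        raise ValueError("the sum of the degrees must be even")
    rng = random.Random(seed)
    # Node i contributes degrees[i] stubs ("half-edges").
    stubs = [i for i, k in enumerate(degrees) for _ in range(k)]
    # A uniform shuffle followed by pairing consecutive stubs gives a
    # uniformly random matching of the stubs.
    rng.shuffle(stubs)
    return list(zip(stubs[::2], stubs[1::2]))


def degree_sequence(edges, n):
    """Recover the degrees from an edge list (a self-loop counts twice)."""
    deg = [0] * n
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return deg


degrees = [3, 3, 2, 2, 1, 1]
sample_a = configuration_model(degrees, seed=1)
sample_b = configuration_model(degrees, seed=2)  # an independent pairing
```

Both samples share the same degree sequence by construction, and nothing else: any grouping of nodes that looks tightly knit in `sample_a` was produced by the random pairing alone, so there is no reason for it to reappear in `sample_b`.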

We emphasize that the communities found in Fig. 2(b) are indeed really there from a descriptive point of view, and they can in fact be useful for a variety of tasks. For example, the cut given by the partition, i.e. the number of edges that go between different groups, is only 13, which means that we need only to remove this number of edges to break the network into (in this case) 13 smaller components. Depending on context, this kind of information can be used to prevent a widespread epidemic, hinder undesired communication, or, as we have already discussed, distribute tasks among processors and design a microchip. However, what these communities cannot be used for is to explain the data. In particular, a conclusion that would be completely incorrect is that the nodes that belong to the same group would have a larger probability of being connected between themselves. As shown in Fig. 2(a), this is clearly not the case, as the observed “communities” arise by pure chance, without any preference between the nodes.

2.2 To infer or to describe? A litmus test

Given the above differences, and the fact that both inferential and descriptive approaches have their uses depending on context, we are left with the question: Which approach is more appropriate for a given task at hand? To help answer this question, for any given context, it is useful to consider the following “litmus test”:

Litmus test: to infer or to describe?

Q: “Would the usefulness of our conclusions change if we learn, after obtaining the communities, that the network being analyzed is maximally random?”

If the answer is “yes,” then an inferential approach is needed.

If the answer is “no,” then an inferential approach is not required.

If the answer to the above question is “yes,” then an inferential approach is warranted, since the conclusions depend on an interpretation of how the data were generated. Otherwise, a purely descriptive approach may be appropriate since considerations about generative processes are not relevant.

It is important to understand that the relevant question in this context is not whether the network being analyzed is actually maximally random [Footnote 4], since this is rarely the case for empirical networks. Instead, considering this hypothetical scenario serves as a test to evaluate if our task requires us to distinguish actual latent community structures (i.e. those that are responsible for the network formation) from those that arise completely out of random fluctuations, and hence carry no explanatory power. Furthermore, most empirical networks, even if not maximally random, like most interesting data, are better explained by a mixture of structure and randomness, and a method that cannot tell those apart cannot be used for inferential purposes.

Returning to the VLSI and task scheduling examples we considered in the previous section, it is clear that the answer to the litmus test above would be “no,” since it hardly matters how the network was generated and how we should interpret the partition found, as long as the integrated circuit can be manufactured and function efficiently, or the tasks finish in the minimal time. Interpretation and explanations are simply not the primary goals in these cases [Footnote 5].

However, it is safe to say that in network data analyses very often the answer to the question above would be “yes.” Typically, community detection methods are used to try to understand the overall large-scale network structure, determine the prevalent mixing patterns, make simplifications and generalizations, all in a manner that relies on statements about what lies behind the data, e.g. whether nodes were more or less likely to be connected to begin with. A majority of conclusions reached would be severely undermined if one were to discover that the underlying network is in fact completely random. This means that these analyses run a substantial risk of yielding misleading answers when using purely descriptive methods, since they are likely to be overfitting the data, i.e. confusing randomness with underlying generative structure [Footnote 6].

2.3 Inferring, explaining, and compressing

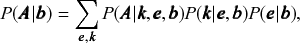

Inferential approaches to community detection (see Ref. [Reference Peixoto, Doreian, Batagelj and Ferligoj5] for a detailed introduction) are designed to provide explanations for network data in a principled manner. They are based on the formulation of generative models that include the notion of community structure in the rules of how the edges are placed. More formally, they are based on the definition of a likelihood

![]() for the network

for the network

![]() conditioned on a partition

conditioned on a partition

![]() , which describes how the network could have been generated, and the inference is obtained via the posterior distribution, according to Bayes’ rule, i.e.

, which describes how the network could have been generated, and the inference is obtained via the posterior distribution, according to Bayes’ rule, i.e.

(1)

(1)

where

![]() is the prior probability for a partition

is the prior probability for a partition

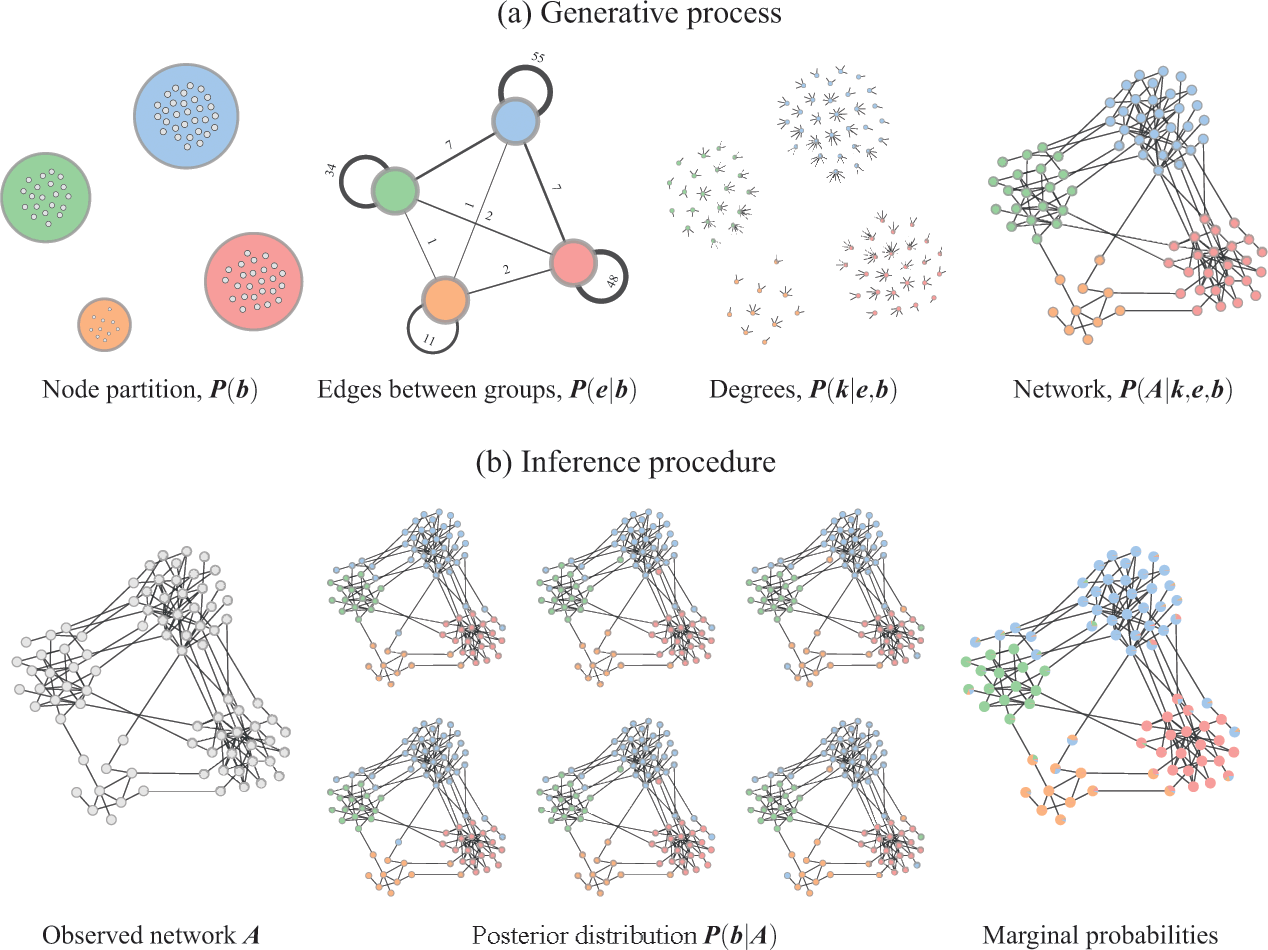

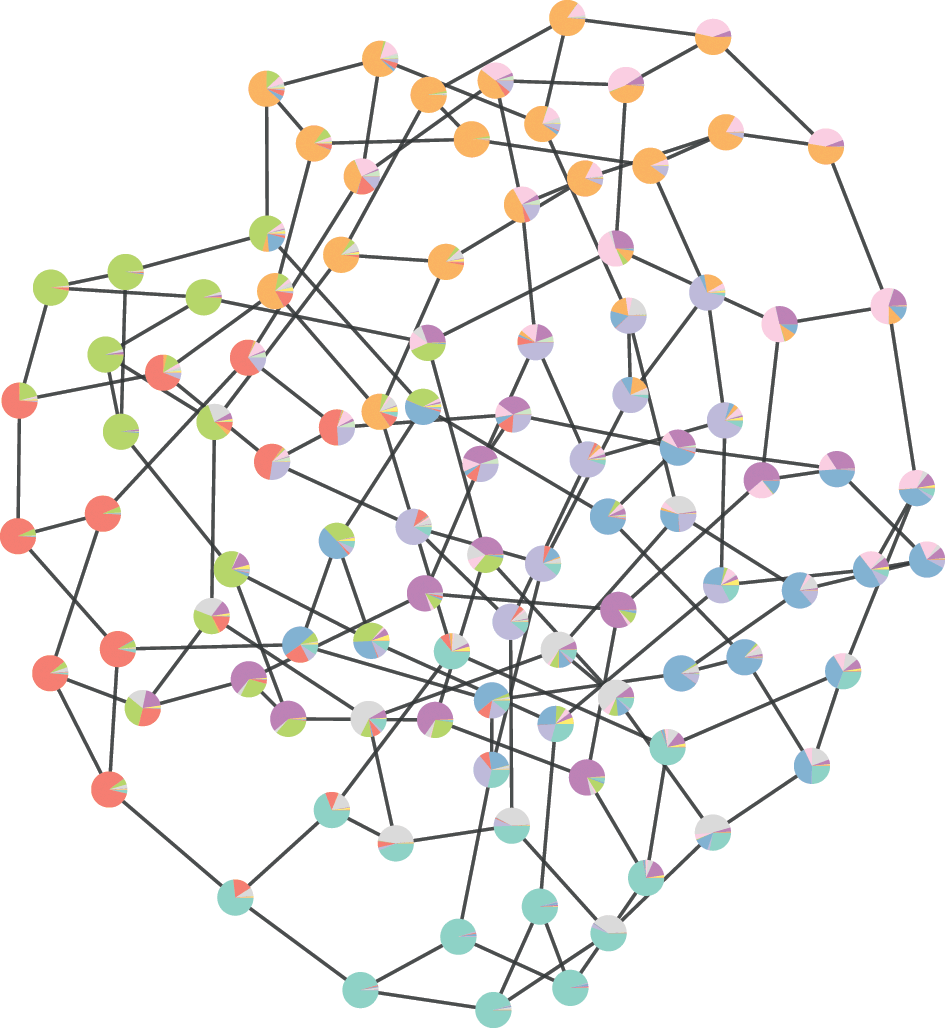

![]() . The inference procedure consists in sampling from or maximizing this distribution, which yields the most likely division(s) of the network into groups, according to the statistical evidence available in the data (see Fig. 3).

. The inference procedure consists in sampling from or maximizing this distribution, which yields the most likely division(s) of the network into groups, according to the statistical evidence available in the data (see Fig. 3).

Figure 3 Inferential community detection considers a generative process (a), where the unobserved model parameters are sampled from prior distributions. In the case of the DC-SBM, these are the priors for the partition

![]() , the number of edges between groups

, the number of edges between groups

![]() , and the node degrees,

, and the node degrees,

![]() . Finally, the network itself is sampled from its model,

. Finally, the network itself is sampled from its model,

![]() . The inference procedure (b) consists on inverting the generative process given an observed network

. The inference procedure (b) consists on inverting the generative process given an observed network

![]() , corresponding to a posterior distribution

, corresponding to a posterior distribution

![]() , which then can be summarized by a marginal probability that a node belongs to a given group (represented as pie charts on the nodes).

, which then can be summarized by a marginal probability that a node belongs to a given group (represented as pie charts on the nodes).
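To make the normalization in Bayes’ rule and the marginal summary of Fig. 3(b) more tangible, the following sketch normalizes a handful of joint log-probabilities into a posterior and then computes per-node group marginals. Everything here is illustrative: the function names and the numerical values in `log_joint` are hypothetical, the candidate set is restricted to three partitions (the true normalization sums over all partitions), and the group labels are assumed to be aligned across partitions.

```python
from math import exp


def posterior(log_joint):
    """Normalize log P(A|b) + log P(b) over a set of candidate partitions.

    Assumption of this sketch: the candidates stand in for the full sum
    over partitions that defines the normalization constant P(A).
    """
    m = max(log_joint.values())  # subtract the maximum for numerical stability
    z = sum(exp(v - m) for v in log_joint.values())
    return {b: exp(v - m) / z for b, v in log_joint.items()}


def node_marginals(post):
    """marg[i][r]: posterior probability that node i belongs to group r."""
    n = len(next(iter(post)))
    marg = [dict() for _ in range(n)]
    for b, p in post.items():
        for i, r in enumerate(b):
            marg[i][r] = marg[i].get(r, 0.0) + p
    return marg


# Hypothetical joint log-probabilities for three partitions of 4 nodes.
log_joint = {
    (0, 0, 1, 1): -10.0,
    (0, 0, 0, 1): -11.0,
    (0, 0, 0, 0): -12.0,
}
post = posterior(log_joint)
marginals = node_marginals(post)
```

Each entry of `marginals` plays the role of one pie chart in Fig. 3(b): a node whose group assignment differs between plausible partitions ends up with its probability spread over several groups.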

Overwhelmingly, the models used to infer communities are variations of the stochastic block model (SBM) [Reference Holland, Laskey and Leinhardt14], which, in addition to the node partition, takes the probability of edges being placed between the different groups as an additional set of parameters. A particularly expressive variation is the degree-corrected SBM (DC-SBM) [Reference Karrer and Newman15], with a marginal likelihood given by [Reference Peixoto16]

$$P(\boldsymbol{A} \mid \boldsymbol{b}) = \sum_{\boldsymbol{e}, \boldsymbol{k}} P(\boldsymbol{A} \mid \boldsymbol{k}, \boldsymbol{e}, \boldsymbol{b})\,P(\boldsymbol{k} \mid \boldsymbol{e}, \boldsymbol{b})\,P(\boldsymbol{e} \mid \boldsymbol{b}), \tag{2}$$

where $\boldsymbol{e} = \{e_{rs}\}$ is a matrix with elements $e_{rs}$ specifying how many edges go between groups $r$ and $s$, and $\boldsymbol{k} = \{k_i\}$ are the degrees of the nodes. Therefore, this model specifies that, conditioned on a partition $\boldsymbol{b}$, first the edge counts $\boldsymbol{e}$ are sampled from a prior distribution $P(\boldsymbol{e} \mid \boldsymbol{b})$, followed by the degrees from the prior $P(\boldsymbol{k} \mid \boldsymbol{e}, \boldsymbol{b})$, and finally the network is wired together according to the probability $P(\boldsymbol{A} \mid \boldsymbol{k}, \boldsymbol{e}, \boldsymbol{b})$, which respects the constraints given by $\boldsymbol{k}$, $\boldsymbol{e}$, and $\boldsymbol{b}$. See Fig. 3(a) for an illustration of this process.
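As a rough illustration of what “generative model” means here, the sketch below samples a network from the simplest SBM variant, in which every pair of nodes is connected independently with a probability that depends only on the groups of the two nodes. This is not the DC-SBM with priors on edge counts and degrees described above, only its most basic relative; the function name `sample_sbm` and the parameter layout are choices made for this example.

```python
import random
from itertools import combinations


def sample_sbm(b, p, seed=None):
    """Sample a simple undirected graph from a Bernoulli SBM.

    b: list with the group label (0, 1, ...) of each node.
    p: symmetric matrix; p[r][s] is the probability of an edge between
       a node in group r and a node in group s.
    """
    rng = random.Random(seed)
    return [
        (i, j)
        for i, j in combinations(range(len(b)), 2)
        if rng.random() < p[b[i]][b[j]]
    ]


# Two groups of three nodes, with edges much more likely inside groups.
b = [0, 0, 0, 1, 1, 1]
p = [[0.9, 0.1],
     [0.1, 0.9]]
edges = sample_sbm(b, p, seed=42)
```

Inference runs this procedure in reverse: given only `edges`, it asks which partitions `b` make the observed network most probable. Setting all entries of `p` to the same value removes any role for the partition, which is the maximally random case for this simpler model.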

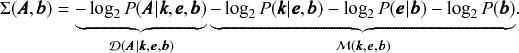

This model formulation includes maximally random networks as special cases; indeed, the model we considered in Fig. 2 corresponds exactly to the DC-SBM with a single group. Together with the Bayesian approach, the use of this model will inherently favor a more parsimonious account of the data, whenever it does not warrant a more complex description, amounting to a formal implementation of Occam’s razor. This is best seen by making a formal connection with information theory, and noticing that we can write the numerator of Eq. 1 as

$$P(\boldsymbol{A} \mid \boldsymbol{b})\,P(\boldsymbol{b}) = 2^{-\Sigma(\boldsymbol{A}, \boldsymbol{b})}, \tag{3}$$

where the quantity $\Sigma(\boldsymbol{A}, \boldsymbol{b})$ is known as the description length [Reference Rissanen17–Reference Rissanen19] of the network. It is computed as [Footnote 7]

$$\Sigma(\boldsymbol{A}, \boldsymbol{b}) = \underbrace{-\log_2 P(\boldsymbol{A} \mid \boldsymbol{k}, \boldsymbol{e}, \boldsymbol{b})}_{\mathcal{D}(\boldsymbol{A} \mid \boldsymbol{k}, \boldsymbol{e}, \boldsymbol{b})}\; \underbrace{-\log_2 P(\boldsymbol{k} \mid \boldsymbol{e}, \boldsymbol{b}) - \log_2 P(\boldsymbol{e} \mid \boldsymbol{b}) - \log_2 P(\boldsymbol{b})}_{\mathcal{M}(\boldsymbol{k}, \boldsymbol{e}, \boldsymbol{b})}.$$

The second set of terms $\mathcal{M}(\boldsymbol{k}, \boldsymbol{e}, \boldsymbol{b})$ in the above equation quantifies the amount of information in bits necessary to encode the parameters of the model [Footnote 8]. The first term $\mathcal{D}(\boldsymbol{A} \mid \boldsymbol{k}, \boldsymbol{e}, \boldsymbol{b})$ determines how many bits are necessary to encode the network itself, once the model parameters are known. This means that if Bob wants to communicate to Alice the structure of a network $\boldsymbol{A}$, he first needs to transmit $\mathcal{M}(\boldsymbol{k}, \boldsymbol{e}, \boldsymbol{b})$ bits of information to describe the parameters $\boldsymbol{b}$, $\boldsymbol{e}$, and $\boldsymbol{k}$, and then finally transmit the remaining $\mathcal{D}(\boldsymbol{A} \mid \boldsymbol{k}, \boldsymbol{e}, \boldsymbol{b})$ bits to describe the network itself. Then, Alice will be able to understand the message by first decoding the parameters $(\boldsymbol{k}, \boldsymbol{e}, \boldsymbol{b})$ from the first part of the message, and using that knowledge to obtain the network $\boldsymbol{A}$ from the second part, without any errors.

What the above connection shows is that there is a formal equivalence between inferring the communities of a network and compressing it. This happens because finding the most likely partition $\boldsymbol{b}$ from the posterior $P(\boldsymbol{b} \mid \boldsymbol{A})$ is equivalent to minimizing the description length $\Sigma(\boldsymbol{A}, \boldsymbol{b})$ used by Bob to transmit a message to Alice containing the whole network.

Data compression amounts to a formal implementation of Occam’s razor because it penalizes models that are too complicated: if we want to describe a network using many communities, then the model part of the description length $\mathcal{M}(\boldsymbol{k}, \boldsymbol{e}, \boldsymbol{b})$ will be large, and Bob will need many bits to transmit the model parameters to Alice. However, increasing the complexity of the model will also reduce the first term $\mathcal{D}(\boldsymbol{A} \mid \boldsymbol{k}, \boldsymbol{e}, \boldsymbol{b})$, since there are fewer networks that are compatible with the bigger set of constraints, and hence the second part of Bob’s message can be shorter while still conveying the network itself once the parameters are known. Compression (and hence inference), therefore, is a balancing act between model complexity and quality of fit, where an increase in the former is only justified when it results in an even larger increase of the latter, such that the total description length is minimized.

The compression approach avoids overfitting the data because of a powerful fact from information theory, known as Shannon’s source coding theorem [Reference Shannon21], which states that it is impossible to compress data sampled from a distribution $P(x)$ using fewer bits per symbol than the entropy of the distribution, $H = -\sum_x P(x) \log_2 P(x)$. Indeed, it is a remarkable fact from Shannon’s theory that a statement about a single sample (how many bits we need to describe it) is intrinsically connected to the distribution from which it came. Therefore, as the dataset becomes large, it also becomes impossible to compress the data more than can be achieved by using a code that is optimal according to its true distribution. In our context, this means that it is impossible, for example, to compress a maximally random network using an SBM with more than one group [Footnote 9]. This means, for example, that when encountering an example like in Fig. 2, inferential methods will detect a single community comprising all nodes in the network, since any further division does not provide any increased compression, or equivalently, no augmented explanatory power. From the inferential point of view, a partition like Fig. 2(b) overfits the data, since it incorporates irrelevant random features (a.k.a. “noise”) into its description.
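The balance between model complexity and quality of fit can be checked numerically on toy networks. The sketch below computes a two-part description length for a deliberately simplified model: a non-degree-corrected SBM for simple graphs, with uniform priors on the edge counts between groups and on the partition. These modelling choices and the function name `description_length` are assumptions of this example, not the DC-SBM formulation discussed in the text, but the logic of the two-part code is the same.

```python
from collections import Counter
from itertools import combinations
from math import comb, factorial, log2


def description_length(edges, b):
    """Return (data_bits, model_bits) for a simple undirected graph.

    edges: list of distinct pairs (i, j) with i != j.
    b: list of integer group labels, one per node.
    Assumed model: edges placed uniformly at random given the edge
    counts between groups, with uniform priors on counts and partition.
    """
    N, E = len(b), len(edges)
    n = Counter(b)  # group sizes
    groups = sorted(n)
    B = len(groups)
    e = Counter(tuple(sorted((b[i], b[j]))) for i, j in edges)  # edge counts

    # Data part: log2 of the number of graphs with these edge counts.
    data_bits = 0.0
    for x, r in enumerate(groups):
        for s in groups[x:]:
            pairs = comb(n[r], 2) if r == s else n[r] * n[s]
            data_bits += log2(comb(pairs, e[(r, s)]))

    # Model part: edge counts, then number of groups, sizes, and labels.
    model_bits = log2(comb(B * (B + 1) // 2 + E - 1, E))
    model_bits += log2(N) + log2(comb(N - 1, B - 1))
    size_product = 1
    for r in groups:
        size_product *= factorial(n[r])
    model_bits += log2(factorial(N) // size_product)
    return data_bits, model_bits


one_group = [0] * 10
two_groups = [0] * 5 + [1] * 5

# Two 5-node cliques joined by a single edge: real group structure.
cliques = (list(combinations(range(5), 2))
           + list(combinations(range(5, 10), 2)) + [(4, 5)])
# A 10-node ring: the same split cuts only two edges, yet explains little.
ring = [(i, (i + 1) % 10) for i in range(10)]

for name, graph in [("cliques", cliques), ("ring", ring)]:
    for label, part in [("B=1", one_group), ("B=2", two_groups)]:
        d, m = description_length(graph, part)
        print(f"{name} {label}: data={d:.1f} model={m:.1f} total={d + m:.1f}")
```

For the two cliques, splitting the nodes into two groups shortens the total from roughly 45 to roughly 27 bits, because the saving in the data part far exceeds the cost of the extra parameters. For the ring, the same split has a small cut but lengthens the total from roughly 35 to roughly 44 bits, so under this model the single-group description is preferred.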

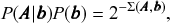

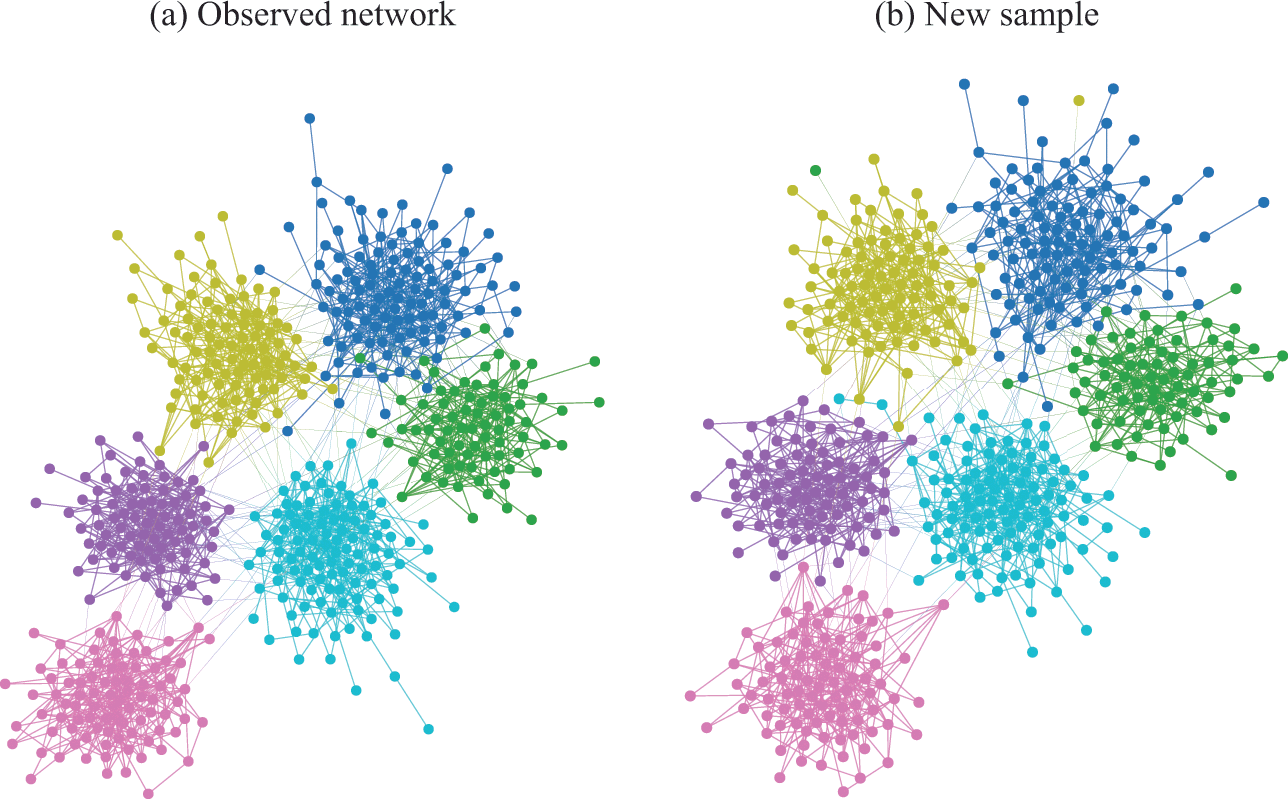

In Fig. 4(a) is shown an example of the results obtained with an inferential community detection algorithm, for a network sampled from the SBM. As shown in Fig. 4(b), the obtained partitions are still valid when carried over to an independent sample of the model, because the algorithm is capable of separating the general underlying pattern from the random fluctuations. As a consequence of this separability, this kind of algorithm does not find communities in maximally random networks, which are composed only of “noise.”

Figure 4 Inferential community detection aims to find a partition of the network according to a fit of a generative model that can explain its structure. In (a) is shown a network sampled from a stochastic block model (SBM) with 6 groups, and where the group assignments were hidden from view. The node colors show the groups found via Bayesian inference of the SBM. In (b) is shown another network sampled from the same SBM, together with the same partition found in (a), showing that it carries a substantial explanatory power, very differently from the example in Fig. 2(c).

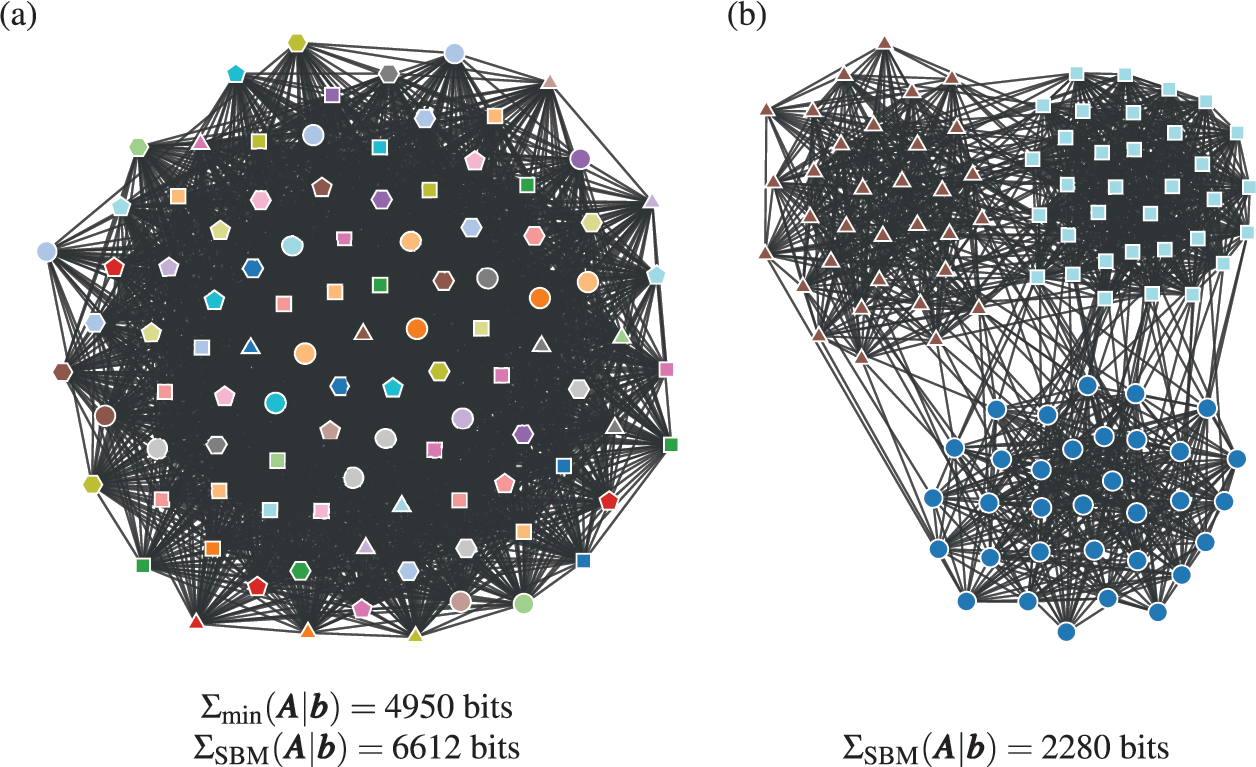

The concept of compression is more generally useful than just avoiding overfitting within a class of models. In fact, the description length gives us a model-agnostic objective criterion to compare different hypotheses for the data generating process according to their plausibility. Namely, since Shannon’s theorem tells us that the best compression can be achieved asymptotically only with the true model, then if we are able to find a description length for a network using a particular model, regardless of how it is parametrized, this also means that we have automatically found an upper bound on the optimal compression achievable. By formulating different generative models and computing their description length, we have not only an objective criterion to compare them against each other, but we also have a way to limit further what can be obtained with any other model. The result is an overall scale on which different models can be compared, as we move closer to the limit of what can be uncovered for a particular network at hand.
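The statement that a code built from the wrong model can only give an upper bound on the optimal compression can be illustrated with a small calculation. In the sketch below, the symbols and probabilities are made up for the example: `p` plays the role of the true distribution and `q` of a hypothesized model, and the expected code length under `q` is compared against the entropy of `p`.

```python
from math import log2


def entropy(p):
    """Entropy of a distribution, in bits per symbol."""
    return -sum(px * log2(px) for px in p.values() if px > 0)


def expected_code_length(p, q):
    """Average bits per symbol when data drawn from p is encoded with
    a code that is optimal for the model q (the cross-entropy)."""
    return -sum(px * log2(q[x]) for x, px in p.items() if px > 0)


# Hypothetical true distribution and a cruder, uniform model of it.
p = {"a": 0.5, "b": 0.25, "c": 0.25}
q = {"a": 1 / 3, "b": 1 / 3, "c": 1 / 3}

optimal = entropy(p)                   # lower bound set by the true model
with_q = expected_code_length(p, q)    # what the wrong model achieves
with_p = expected_code_length(p, p)    # coincides with the entropy
```

Here `with_q` exceeds `optimal`, and the gap is the Kullback-Leibler divergence between `p` and `q`. This is the sense in which the description length obtained with any particular model bounds the best achievable compression from above, and a shorter description length signals a model closer to the true one.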

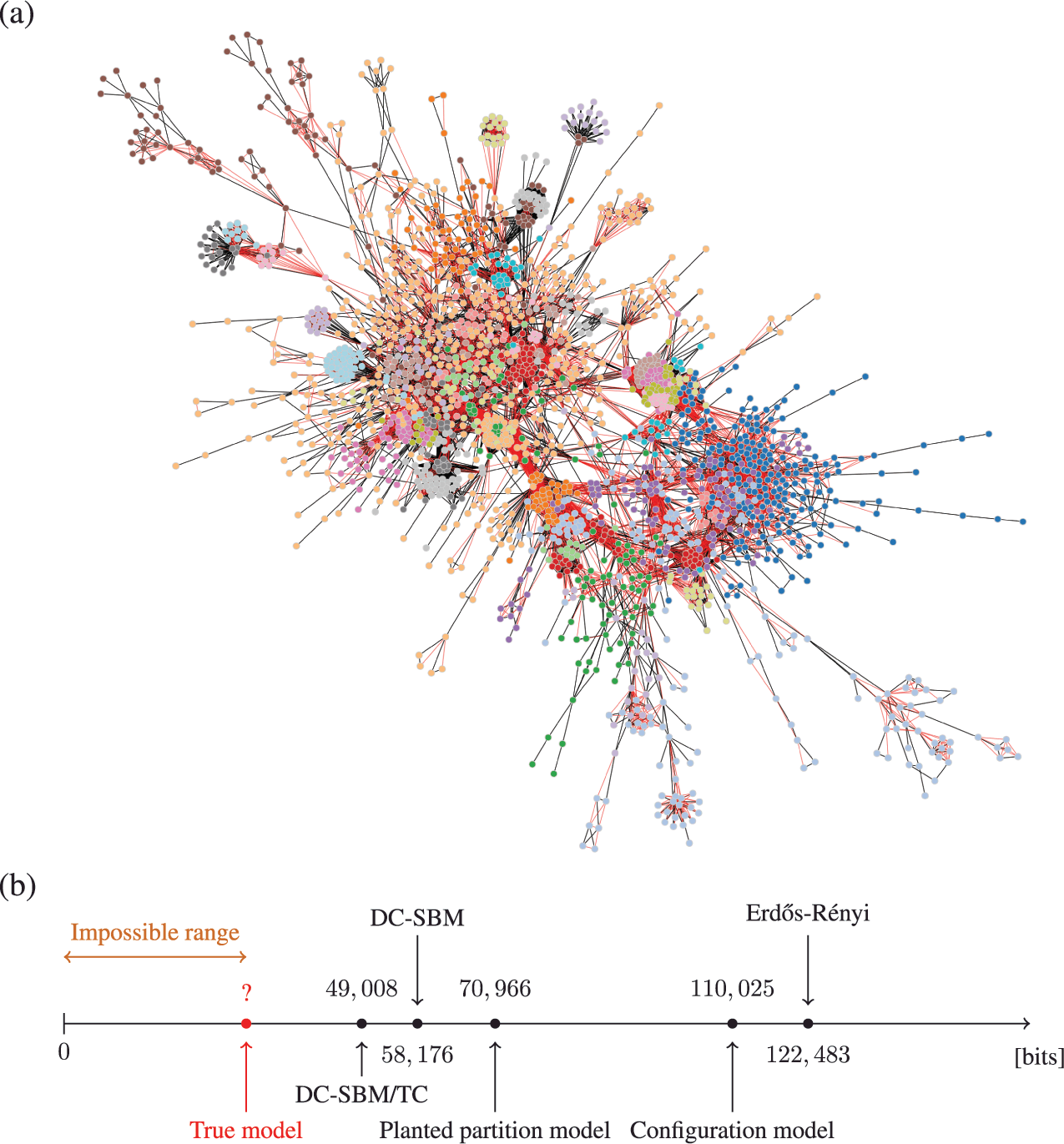

As an example, in Fig. 5 we show the description length values with some models obtained for a protein-protein interaction network for the organism Meleagris gallopavo (wild turkey) [Reference Zitnik, Sosič, Feldman and Leskovec22]. In particular, we can see that with the DC-SBM/TC (a version of the model with the addition of triadic closure edges [Reference Peixoto23]) we can achieve a description length that is far smaller than what would be possible with networks sampled from either the Erdős-Rényi, configuration, or planted partition (an SBM with strictly assortative communities [Reference Zhang and Peixoto24]) models, meaning that the inferred model is much closer to the true process that actually generated this network than the alternatives. Naturally, the actual process that generated this network is different from the DC-SBM/TC, and it likely involves, for example, mechanisms of node duplication which are not incorporated into this rather simple model [Reference Pastor-Satorras, Smith and Solé25]. However, to the extent that the true process leaves statistically significant traces in the network structure [Footnote 10], computing the description length according to it should provide further compression when compared to the alternatives [Footnote 11]. Therefore, we can try to extend or reformulate our models to incorporate features that we hypothesize to be more realistic, and then verify if this is in fact the case, knowing that whenever we find a more compressive model, it is moving closer to the true model, or at least to what remains detectable from it for the finite data.

Figure 5 Compression points towards the true model. (a) Protein-protein interaction network for the organism Meleagris gallopavo [Reference Zitnik, Sosič, Feldman and Leskovec22]. The node colors indicate the best partition found with the DC-SBM/TC [Reference Peixoto23] (there are more groups than colors, so some colors are repeated), and the edge colors indicate whether they are attributed to triadic closure (red) or the DC-SBM (black). (b) Description length values according to different models. The unknown true model must yield a description length value smaller than the DC-SBM/TC, and no other model should be able to provide a superior compression that is statistically significant.

The discussion above glosses over some important technical aspects. For example, it is possible for two (or, in fact, many) models to have the same or very similar description length values. In this case, Occam’s razor fails as a criterion to select between them, and we need to consider them collectively as equally valid hypotheses. This means, for example, that we would need to average over them when making specific inferential statements [Reference Peixoto26] — selecting between them arbitrarily can be interpreted as a form of overfitting. Furthermore, there is obviously no guarantee that the true model can actually be found for any particular data. This is only possible in the asymptotic limit of “sufficient data,” which will vary depending on the actual model. Outside of this limit (which is the typical case in empirical settings, in particular when dealing with sparse networks [Reference Yan, Shalizi, Jensen, Krzakala, Moore, Zdeborová, Zhang and Zhu27]), fundamental limits to inference are unavoidable,Footnote 12 which means in practice that we will always have limited accuracy and some amount of error in our conclusions. However, when employing compression, these potential errors tend towards overly simple explanations, rather than overly complex ones. Whenever perfect accuracy is not possible, it is difficult to argue in favor of a bias in the opposite direction.

We emphasize that it is not possible to “cheat” when doing compression. For any particular model, the description length will have the same form

$$\Sigma(\boldsymbol A, \boldsymbol\theta) = \mathcal{D}(\boldsymbol A|\boldsymbol\theta) + \mathcal{M}(\boldsymbol\theta), \tag{5}$$

where $\boldsymbol\theta$ is some arbitrary set of parameters. If we constrain the model such that it becomes possible to describe the data with a number of bits $\mathcal{D}(\boldsymbol A|\boldsymbol\theta)$ that is very small, this can only be achieved, in general, by increasing the number of parameters $\boldsymbol\theta$, such that the number of bits $\mathcal{M}(\boldsymbol\theta)$ required to describe them will also increase. Therefore, there is no generic way to achieve compression that bypasses actually formulating a meaningful hypothesis that matches statistically significant patterns seen in the data. One may wonder, therefore, if there is an automated way of searching for hypotheses in a manner that guarantees optimal compression. The most fundamental way to formulate this question is to generalize the concept of minimum description length as follows: for any binary string $\boldsymbol x$ (representing any measurable data), we define $L(\boldsymbol x)$ as the length in bits of the shortest computer program that yields $\boldsymbol x$ as an output. The quantity $L(\boldsymbol x)$ is known as Kolmogorov complexity [Reference Cover and Thomas29, Reference Ming and Vitányi30], and if we were able to compute it for a binary string representing an observed network, we would be able to determine the “true model” value in Fig. 5, and hence know how far we are from the optimum.Footnote 13
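Returning to the two-part form of Eq. 5, the trade-off between the bits spent on the data and the bits spent on the parameters can be made concrete with a small sketch. It assumes a toy setting that does not appear in the text: a binary string is divided into equal-sized blocks, the number of ones in each block plays the role of the parameters, and the positions of the ones within each block are then encoded given those counts. The function name, the uniform code for the counts, and the data are illustrative assumptions.

```python
import math

def two_part_description_length(bits, n_blocks):
    """Return (D, M) in bits for a block-wise two-part code.

    M: the number of ones in each block is transmitted with a uniform code,
       costing log2(block_size + 1) bits per block.
    D: given that count, the positions of the ones in the block are
       transmitted with log2(binomial(block_size, count)) bits.
    """
    n = len(bits)
    if n % n_blocks != 0:
        raise ValueError("n_blocks must divide the length of the string")
    size = n // n_blocks
    D = M = 0.0
    for start in range(0, n, size):
        ones = sum(bits[start:start + size])
        D += math.log2(math.comb(size, ones))
        M += math.log2(size + 1)
    return D, M

# Deterministic toy data: a sparse first half followed by a dense second half.
half = 200
bits = ([1 if i % 20 == 0 else 0 for i in range(half)]
        + [0 if i % 20 == 0 else 1 for i in range(half)])

totals = {}
for k in (1, 2, 400):
    D, M = two_part_description_length(bits, k)
    totals[k] = D + M
    print(f"blocks = {k:3d}   D = {D:6.1f}   M = {M:6.1f}   total = {D + M:6.1f}")
```

With one block per symbol the data term vanishes, but the parameters alone then cost one bit per symbol, so nothing is gained. In this example only the two-block hypothesis, which matches the actual regularity in the string, yields a substantially shorter total description.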

Unfortunately, an important result in information theory is that the Kolmogorov complexity $L(\boldsymbol x)$ is not computable [Reference Ming and Vitányi30]. This means that it is strictly impossible to write a computer program that computes $L(\boldsymbol x)$ for any string $\boldsymbol x$.Footnote 14 This does not invalidate using the description length as a criterion to select among alternative models, but it dashes any hope of fully automatizing the discovery of optimal hypotheses.
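Although the shortest program cannot be computed, any concrete compressor provides a computable upper bound on it (up to an additive constant accounting for the decompressor). The sketch below uses Python's standard `zlib` module purely as an illustration; it is not a method used in this work, and the test strings are assumptions.

```python
import random
import zlib

def compressed_bits(data: bytes) -> int:
    """Length in bits of the zlib-compressed data: an upper bound, up to a
    constant, on the length of the shortest program that outputs `data`."""
    return 8 * len(zlib.compress(data, 9))

rng = random.Random(0)
structured = b"01" * 5000                                  # obvious regularity
noisy = bytes(rng.getrandbits(8) for _ in range(10000))    # pseudo-random bytes

print("structured:", compressed_bits(structured), "of", 8 * len(structured), "bits")
print("noisy:     ", compressed_bits(noisy), "of", 8 * len(noisy), "bits")
```

The bound can be very loose. The pseudo-random string is in fact produced by a short program (the generator and its seed), yet a general-purpose compressor finds no way to shorten it. Finding the short description requires formulating the right hypothesis, which is exactly the step that cannot be automated.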

2.4 Role of inferential approaches in community detection

Inferential approaches based on the SBM have a long history, having been introduced for the study of social networks in the early 1980s [Reference Holland, Laskey and Leinhardt14]. Despite this, and despite having appeared repeatedly in the literature over the years [Reference Snijders and Nowicki31–Reference Mørup and Hansen39] (also under different names in other contexts, e.g. [Reference Boguñá and Pastor-Satorras40, Reference Bollobás, Janson and Riordan41]), they entered the mainstream community detection literature rather late, arguably after the influential paper by Karrer and Newman that introduced the DC-SBM [Reference Karrer and Newman15] in 2011, at a point where descriptive approaches were already dominating. However, even while descriptive methods dominated, the use of inferential criteria had long been noticeable. In fact, in a well-known attempt to systematically compare the quality of a variety of descriptive community detection methods, the authors of Ref. [Reference Lancichinetti, Fortunato and Radicchi42] proposed what is now called the Lancichinetti–Fortunato–Radicchi (LFR) benchmark, offered as a more realistic alternative to the simpler Newman-Girvan benchmark [Reference Girvan and Newman43] introduced earlier. Both are in fact generative models, essentially particular cases of the DC-SBM, containing a “ground truth” community label assignment, against which the results of various algorithms are supposed to be compared. Clearly, this is an inferential evaluation criterion, although, historically, virtually all of the methods compared against that benchmark are descriptive in nature [Reference Lancichinetti and Fortunato44] (these studies were conducted mostly before inferential approaches had gained more traction). The use of such a criterion already betrays that the answer to the litmus test considered previously would be “yes,” and therefore descriptive approaches are fundamentally unsuitable for the task. In contrast, methods based on statistical inference are not only more principled, but in fact provably optimal in the inferential scenario: an estimation based on the posterior distribution obtained from the true generative model is called “Bayes optimal,” since there is no procedure that can, on average, produce results with higher accuracy. The use of this inferential formalism has led to the development of asymptotically optimal algorithms and the identification of sharp transitions in the detectability of planted community structure [Reference Decelle, Krzakala, Moore and Zdeborová6, Reference Decelle, Krzakala, Moore and Zdeborová45].
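As an illustration of such a sharp transition, the sketch below evaluates the detectability condition reported in the cited works of Decelle et al. for the symmetric planted partition model. The parametrization is an assumption made here for concreteness: $q$ equal-sized groups, with edge probabilities $c_{\text{in}}/N$ inside groups and $c_{\text{out}}/N$ between them, in the limit of large sparse networks. The function name is hypothetical.

```python
import math

def is_detectable(c_in, c_out, q):
    """Detectability condition |c_in - c_out| > q * sqrt(c) for the symmetric
    planted partition model, where c is the average degree."""
    c = (c_in + (q - 1) * c_out) / q
    return abs(c_in - c_out) > q * math.sqrt(c)

# Two parametrizations with the same average degree c = 3 and q = 2 groups.
print(is_detectable(c_in=5, c_out=1, q=2))   # stronger assortativity
print(is_detectable(c_in=4, c_out=2, q=2))   # weaker assortativity
```

Below the threshold the planted partition is not recoverable by efficient algorithms such as belief propagation, even though the network was generated with communities. This limit is a property of the inference problem itself, not of any particular method.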

The conflation one often finds between descriptive and inferential goals in the literature of community detection likely stems from the fact that while it is easy to define benchmarks in the inferential setting, it is substantially more difficult to do so in a descriptive setting. Given any descriptive method (modularity maximization [Reference Newman46], Infomap [Reference Rosvall and Bergstrom47], Markov stability [Reference Lambiotte, Delvenne and Barahona48], etc.) it is usually problematic to determine for which network these methods are optimal (or even if one exists), and what would be a canonical output that would be unambiguously correct. In fact, the difficulty with establishing these fundamental references already serves as evidence that the task itself is ill-defined. On the other hand, taking an inferential route forces one to start with the right answer, via a well-specified generative model that articulates what the communities actually mean with respect to the network structure. Based on this precise definition, one then derives the optimal detection method by employing Bayes’ rule.
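The last step can be sketched by brute force for a network small enough that all partitions can be enumerated. The example below is a minimal illustration under stated assumptions, not the algorithm used in practice: the generative model is a two-group planted partition with known edge probabilities `p_in` and `p_out`, the prior over labelings is uniform, and the six-node network (two triangles joined by one edge) is invented for the example.

```python
import itertools

def posterior_over_partitions(n, edges, p_in, p_out):
    """Exact posterior P(b | A) over all two-group labelings of n nodes,
    obtained from Bayes' rule with a uniform prior over labelings."""
    edge_set = {frozenset(e) for e in edges}
    weights = {}
    for b in itertools.product((0, 1), repeat=n):
        likelihood = 1.0
        for i, j in itertools.combinations(range(n), 2):
            p = p_in if b[i] == b[j] else p_out
            likelihood *= p if frozenset((i, j)) in edge_set else 1 - p
        weights[b] = likelihood          # uniform prior: posterior ∝ likelihood
    Z = sum(weights.values())            # normalization (model evidence)
    return {b: w / Z for b, w in weights.items()}

# Two triangles {0, 1, 2} and {3, 4, 5} joined by the edge (2, 3).
edges = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]
post = posterior_over_partitions(6, edges, p_in=0.9, p_out=0.1)
b_map = max(post, key=post.get)
print("most likely labeling:", b_map, "with posterior probability", post[b_map])
```

The detection method is not chosen separately from the definition of a community: once the model is written down, the posterior distribution follows, and the enumeration is replaced in practice by approximate algorithms.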

It is also useful to observe that inferential analyses of aspects of the network other than its structure might still be only descriptive of the structure itself. A good example of this is the modelling of dynamics that take place on a network, such as a random walk. This is precisely the case of the Infomap method [Reference Rosvall and Bergstrom47], which models a simulated random walk on a network in an inferential manner, using for that a division of the network into groups. While this approach can be considered inferential with respect to an artificial dynamics, it is still only descriptive when it comes to the actual network structure (and will suffer the same problems, such as finding communities in maximally random networks). Communities found in this way could be useful for particular tasks, such as to identify groups of nodes that would be similarly affected by a diffusion process. This could be used, for example, to prevent or facilitate the diffusion by removing or adding edges between the identified groups. In this setting, the answer to the litmus test above would also be “no,” since what is important is how the network “is” (i.e. how a random walk behaves on it), not how it came to be, or if its features are there by chance alone. Once more, the important issue to remember is that the groups identified in this manner cannot be interpreted as having any explanatory power about the network structure itself, and cannot be used reliably to extract inferential conclusions about it. We are firmly in a descriptive, not inferential setting with respect to the network structure.

Another important difference between inferential and descriptive approaches is worth mentioning. Descriptive approaches are often tied to very particular contexts, and cannot be directly compared to one another. This has caused great consternation in the literature, since there is a vast number of such methods, and little robust methodology on how to compare them. Indeed, why should we expect that the modules found by optimizing task scheduling should be comparable to those that optimize the description of a dynamics? In contrast, inferential approaches all share the same underlying context: they attempt to explain the network structure; they vary only in how this is done. They are, therefore, amenable to principled model selection procedures [Reference MacKay20, Reference Gelman, Carlin, Stern, Dunson, Vehtari and Rubin49, Reference Bishop50], designed to evaluate which is the most appropriate fit for any particular network, even if the models used operate with very different parametrizations, as we discussed already in Sec. 2.3. In this situation, the multiplicity of different models available becomes a boon rather than a hindrance, since they all contribute to a bigger toolbox we have at our disposal when trying to understand empirical observations.
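One concrete form such a model selection procedure can take follows from the equivalence between description length and joint probability: if two hypotheses are considered equally likely a priori, a difference in description length translates directly into a posterior odds ratio. The sketch below assumes description lengths measured in bits; the function name and the numerical values are hypothetical.

```python
def posterior_odds(sigma_1_bits, sigma_2_bits):
    """Posterior odds in favor of hypothesis 1 over hypothesis 2, assuming
    equal prior probabilities, given their description lengths in bits."""
    return 2.0 ** (sigma_2_bits - sigma_1_bits)

# A hypothetical difference of only 10 bits already corresponds to odds
# of about a thousand to one.
print(posterior_odds(100.0, 110.0))
```

The comparison does not depend on how each model is parametrized, which is what makes models of very different kinds commensurable.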

Finally, inferential approaches offer additional advantages that make them more suitable as part of a scientific pipeline. In particular, they can be naturally extended to accommodate measurement uncertainties [Reference Newman51–Reference Peixoto53] — an unavoidable property of empirical data, which descriptive methods almost universally fail to consider. This information can be used not only to propagate the uncertainties to the community assignments [Reference Peixoto26] but also to reconstruct the missing or noisy measurements of the network itself [Reference Clauset, Moore and Newman37, Reference Guimerà and Sales-Pardo54]. Furthermore, inferential approaches can be coupled with even more indirect observations such as time-series on the nodes [Reference Hoffmann, Peel, Lambiotte and Jones55], instead of a direct measurement of the edges of the network, such that the network itself is reconstructed, not only the community structure [Reference Peixoto56]. All these extensions are possible because inferential approaches give us more than just a division of the network into groups; they give us a model estimate of the network, containing insights about its formation mechanism.

2.5 Behind every description there is an implicit generative model

Descriptive methods of community detection — such as graph partitioning for VLSI [Reference Kernighan11] or Infomap [Reference Rosvall and Bergstrom47] — are not designed to produce inferential statements about the network structure. They do not need to explicitly articulate a generative model, and the quality of their results should be judged solely against their manifestly noninferential goals, e.g. whether a chip design can be efficiently manufactured in the case of graph partitioning.

Nevertheless, descriptive methods are often employed with inferential aims in practice. This happens, for example, when modularity maximization is used to discover homophilic patterns in a social network, or when Infomap is used to uncover latent communities generated by the LFR benchmark. In these situations, it is useful to consider to what extent we can expect any of these methods to reveal meaningful inferential results, despite their intended use.

From a purely mathematical perspective, there is actually no formal distinction between descriptive and inferential methods, because every descriptive method can be mapped to an inferential one, according to some implicit model. Therefore, whenever we are attempting to interpret the results of a descriptive community detection method in an inferential way — i.e. make a statement about how the network came to be — we cannot in fact avoid making implicit assumptions about the data generating process that lies behind it. (At first this statement seems to undermine the distinction we have been making between descriptive and inferential methods, but in fact this is not the case, as we will see below.)

It is not difficult to demonstrate that it is possible to formulate any conceivable community detection method as a particular inferential method. Let us consider an arbitrary quality function

$$W(\boldsymbol A, \boldsymbol b) \in \mathbb{R} \tag{6}$$

which is used to perform community detection via the optimization

$$\boldsymbol b^{*} = \underset{\boldsymbol b}{\operatorname{argmax}}\; W(\boldsymbol A, \boldsymbol b). \tag{7}$$

We can then interpret the quality function $W(\boldsymbol A, \boldsymbol b)$ as the “Hamiltonian” of a posterior distribution

$$P(\boldsymbol b|\boldsymbol A) = \frac{e^{\beta W(\boldsymbol A, \boldsymbol b)}}{Z(\boldsymbol A)}, \tag{8}$$

with normalization $Z(\boldsymbol A) = \sum_{\boldsymbol b} e^{\beta W(\boldsymbol A, \boldsymbol b)}$. By making $\beta \to \infty$ we recover the optimization of Eq. 7, or we may simply try to find the most likely partition according to the posterior, in which case $\beta > 0$ remains an arbitrary parameter. Therefore, employing Bayes’ rule in the opposite direction, we obtain the following effective generative model:

$$P(\boldsymbol A|\boldsymbol b) = \frac{P(\boldsymbol b|\boldsymbol A)\,P(\boldsymbol A)}{P(\boldsymbol b)} \tag{9}$$

$$\phantom{P(\boldsymbol A|\boldsymbol b)} = \frac{e^{\beta W(\boldsymbol A, \boldsymbol b)}}{Z(\boldsymbol A)}\,\frac{P(\boldsymbol A)}{P(\boldsymbol b)}, \tag{10}$$

where $P(\boldsymbol A) = \sum_{\boldsymbol b} P(\boldsymbol A|\boldsymbol b)P(\boldsymbol b)$ is the marginal distribution over networks, and $P(\boldsymbol b)$ is the prior distribution for the partition. Due to the normalization of $P(\boldsymbol A|\boldsymbol b)$ we have the following constraint that needs to be fulfilled:

$$\sum_{\boldsymbol A} \frac{e^{\beta W(\boldsymbol A, \boldsymbol b)}}{Z(\boldsymbol A)}\,P(\boldsymbol A) = P(\boldsymbol b). \tag{11}$$

Therefore, not all choices of $P(\boldsymbol A)$ and $P(\boldsymbol b)$ are compatible with the posterior distribution and the exact possibilities will depend on the actual shape of $W(\boldsymbol A, \boldsymbol b)$. However, one choice that is always possible is a maximum-entropy one,

$$P(\boldsymbol A) = \frac{Z(\boldsymbol A)}{\Xi}, \qquad P(\boldsymbol b) = \frac{\Omega(\boldsymbol b)}{\Xi}, \tag{12}$$

with $\Omega(\boldsymbol b) = \sum_{\boldsymbol A} e^{\beta W(\boldsymbol A, \boldsymbol b)}$ and $\Xi = \sum_{\boldsymbol A, \boldsymbol b} e^{\beta W(\boldsymbol A, \boldsymbol b)}$. Taking this choice leads to the effective generative model

$$P(\boldsymbol A|\boldsymbol b) = \frac{e^{\beta W(\boldsymbol A, \boldsymbol b)}}{\Omega(\boldsymbol b)}. \tag{13}$$

Therefore, inferentially interpreting a community detection algorithm with a quality function $W(\boldsymbol A, \boldsymbol b)$ is equivalent to assuming the generative model $P(\boldsymbol A|\boldsymbol b)$ and prior $P(\boldsymbol b)$ of Eqs. 13 and 12 above. Furthermore, this also means that any arbitrary community detection algorithm implies a description length given (in nats) byFootnote 15

$$\Sigma(\boldsymbol A, \boldsymbol b) = -\beta W(\boldsymbol A, \boldsymbol b) + \ln \sum_{\boldsymbol A', \boldsymbol b'} e^{\beta W(\boldsymbol A', \boldsymbol b')}. \tag{14}$$

What the preceding results show is that there is no such thing as a “model-free” community detection method, since they are all equivalent to the inference of some generative model. The only difference to a direct inferential method is that in that case the modelling assumptions are made explicitly, inviting rather than preventing scrutiny. Most often, the effective model and prior that are equivalent to an ad hoc community detection method will be difficult to interpret, justify, or even compute (in general, Eq. 14 cannot be written in closed form).
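For a network of only a few nodes, the sums involved can be carried out by exhaustive enumeration, which gives a direct check of this construction. The sketch below is a minimal illustration under stated assumptions: the quality function `W` is a hypothetical one invented for the example (it rewards edges and penalizes non-edges inside groups), partitions are represented as label vectors (so relabelings are counted separately), and the effective prior, likelihood, and description length are written as ratios of exponential sums over all networks and partitions.

```python
import itertools
import math

N = 3          # number of nodes, small enough for exhaustive enumeration
BETA = 1.0     # inverse temperature, an arbitrary positive parameter
PAIRS = list(itertools.combinations(range(N), 2))
GRAPHS = list(itertools.product((0, 1), repeat=len(PAIRS)))   # edge indicators
PARTITIONS = list(itertools.product(range(N), repeat=N))      # label vectors

def W(A, b, gamma=0.5):
    """Hypothetical quality function: +1 - gamma for every edge and -gamma
    for every non-edge between nodes of the same group."""
    return sum(a - gamma for a, (i, j) in zip(A, PAIRS) if b[i] == b[j])

# Omega(b) sums exp(beta * W) over all networks; Xi sums it over everything.
Omega = {b: sum(math.exp(BETA * W(A, b)) for A in GRAPHS) for b in PARTITIONS}
Xi = sum(Omega.values())

def prior(b):
    """Effective maximum-entropy prior P(b) = Omega(b) / Xi."""
    return Omega[b] / Xi

def likelihood(A, b):
    """Effective generative model P(A | b) = exp(beta * W(A, b)) / Omega(b)."""
    return math.exp(BETA * W(A, b)) / Omega[b]

def description_length(A, b):
    """Effective description length in nats: -beta * W(A, b) + ln Xi."""
    return -BETA * W(A, b) + math.log(Xi)

A = (1, 1, 1)          # the triangle
b = (0, 0, 0)          # all nodes in one group
print("Sigma(A, b) =", description_length(A, b), "nats")
print("-ln P(A|b) - ln P(b) =", -math.log(likelihood(A, b)) - math.log(prior(b)))
```

The enumeration grows exponentially with the number of node pairs, which is one way to see why the effective model behind an ad hoc quality function is, in general, impractical to compute.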

Furthermore, there is no guarantee that the obtained description length of Eq. 14 will yield a competitive or even meaningful compression. In particular, there is no guarantee that this effective inference will not overfit the data. Although we mentioned in Sec. 2.3 that inference and compression are equivalent, the compression achieved when considering a particular generative model is constrained by the assumptions encoded in its likelihood and prior. If these are poorly chosen, no actual compression might be achieved, for example when comparing to the one obtained with a maximally random model. This is precisely what happens with descriptive community detection methods: they overfit because their implicit modelling assumptions do not accommodate the possibility that a network may be maximally random, or contain a balanced mixture of structure and randomness.

Since we can always interpret any community detection method as inferential, is it still meaningful to categorize some methods as descriptive? Arguably yes, because directly inferential approaches make their generative models and priors explicit, while for a descriptive method we need to extract them from reverse engineering. Explicit modelling allows us to make judicious choices about the model and prior that reflect the kinds of structures we want to detect, relevant scales or lack thereof, and many other aspects that improve their performance in practice, and our understanding of the results. With implicit assumptions we are “flying blind,” relying substantially on serendipity and trial-and-error — not always with great success.

It is not uncommon to find criticisms of inferential methods due to a perceived implausibility of the generative models used — such as the conditional independence of the placement of the edges present in the SBM [Reference Schaub, Delvenne, Rosvall and Lambiotte8] — although these assumptions are also present, but only implicitly, in other methods, like modularity maximization (see Sec. 4.1). We discuss this issue further in Sec. 4.8.

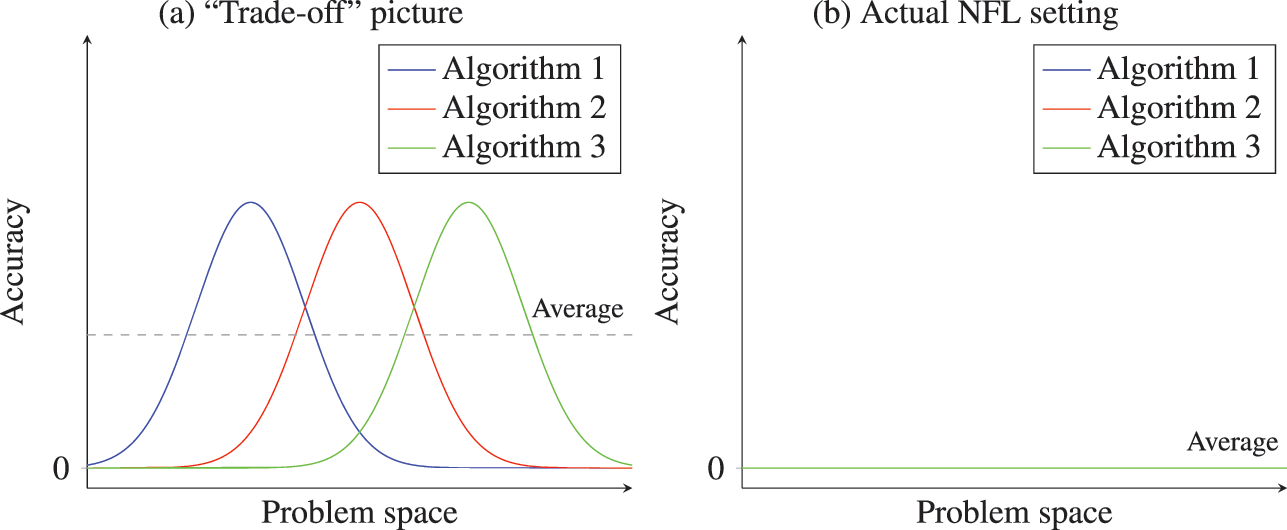

The above inferential interpretation is not specific to community detection, but is in fact valid for any learning problem. The set of explicit or implicit assumptions that must come with any learning algorithm is called an “inductive bias.” An algorithm is expected to function optimally only if its inductive bias agrees with the actual instances of the problems encountered. It is important to emphasize that no algorithm can be free of an inductive bias: we can only choose which assumptions we make about how likely we are to encounter a particular kind of data, not whether we are making an assumption. Therefore, it is particularly problematic when a method does not articulate explicitly what these assumptions are, since even if they are hidden from view, they exist nonetheless, and still need to be scrutinized and justified. This means we should be particularly skeptical of the impossible claim that a learning method is “model-free,” since this denomination is more likely to signal an inability or unwillingness to expose the underlying modelling assumptions, which could potentially be revealed as unappealing and fragile when eventually forced to come under scrutiny.

2.6 Caveats and challenges with inferential methods

Inferential community detection is a challenging task, and is not without its caveats. One aspect inferential methods share with descriptive approaches is algorithmic complexity (see Sec. 4.9), and the fact that they in general try to solve NP-hard problems. This means that there is no known algorithm that is guaranteed to produce exact results in a reasonable amount of time, except for very small networks. That does not mean that every instance of the problem is hard to answer; in fact, it can be shown that in key cases robust answers can be obtained [Reference Decelle, Krzakala, Moore and Zdeborová45]. In general, however, all existing methods are approximate, with the usual trade-off between accuracy and speed. The quest for general approaches that behave well while being efficient is still ongoing and is unlikely to be exhausted soon.

Furthermore, employing statistical inference is not a “silver bullet” that automatically solves every problem. If our models are “misspecified,” i.e. represent very poorly the structure present in the data, then our inferences using them will be very limited and potentially misleading (see Sec. 4.8). The most we can expect from our methodology in this case is to obtain good diagnostics of when this is happening [Reference Peixoto26]. There is also a typical trade-off between realism and simplicity, such that models that more closely match reality are more difficult to express in simple terms with tractable models. Usually, the more complex a model is, the more difficult its inference becomes. The technical task of using algorithms such as Markov chain Monte Carlo (MCMC) to produce reliable inferences for a complex model is nontrivial and requires substantial expertise, and is likely to remain an active field of research for a long time.

In general it can be said that, although statistical inference does not provide automatic answers, it gives us an invaluable platform where the questions can be formulated more clearly, and allows us to navigate the space of answers using more robust methods and theory.

3 Modularity maximization considered harmful

The most widespread method for community detection is modularity maximization [Reference Newman46], which also happens to be one of the most problematic. This method is based on the modularity function,

$$Q(\boldsymbol A, \boldsymbol b) = \frac{1}{2E}\sum_{ij}\left(A_{ij} - \frac{k_i k_j}{2E}\right)\delta_{b_i, b_j}, \tag{15}$$

where $A_{ij}$ is an entry of the adjacency matrix, $k_i$ is the degree of node $i$, $b_i$ is the group membership of node $i$, and $E$ is the total number of edges. The method consists in finding the partition $\hat{\boldsymbol b}$ that maximizes $Q(\boldsymbol A, \boldsymbol b)$,

$$\hat{\boldsymbol b} = \underset{\boldsymbol b}{\operatorname{argmax}}\; Q(\boldsymbol A, \boldsymbol b). \tag{16}$$

The motivation behind the modularity function is that it compares the existence of an edge $(i, j)$ to the probability of it existing according to a null model, $k_i k_j / 2E$, namely that of the configuration model [Reference Fosdick, Larremore, Nishimura and Ugander57] (or more precisely, the Chung-Lu model [Reference Chung and Linyuan58]). The rationale is that we should consider a partition of the network meaningful if the occurrence of edges between nodes of the same group exceeds what we would expect with a random null model without communities.
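The modularity function can be evaluated directly from its definition as a double sum over node pairs in the same group, comparing each adjacency entry with the null-model term $k_i k_j / 2E$. The sketch below is a minimal, unoptimized implementation for simple undirected graphs; the adjacency-dictionary representation and the six-node example network are assumptions made for illustration.

```python
def modularity(adj, b):
    """Modularity Q = (1 / 2E) * sum_ij (A_ij - k_i * k_j / 2E) * delta(b_i, b_j)
    for a simple undirected graph given as a dict: node -> set of neighbours."""
    two_E = sum(len(neighbours) for neighbours in adj.values())
    Q = 0.0
    for i in adj:
        for j in adj:
            if b[i] == b[j]:
                A_ij = 1 if j in adj[i] else 0
                Q += A_ij - len(adj[i]) * len(adj[j]) / two_E
    return Q / two_E

# Two triangles {0, 1, 2} and {3, 4, 5} joined by the edge (2, 3).
adj = {0: {1, 2}, 1: {0, 2}, 2: {0, 1, 3},
       3: {2, 4, 5}, 4: {3, 5}, 5: {3, 4}}
two_groups = {0: 0, 1: 0, 2: 0, 3: 1, 4: 1, 5: 1}
one_group = {v: 0 for v in adj}

print(modularity(adj, two_groups))   # 5/14 for this network
print(modularity(adj, one_group))    # the trivial partition always gives 0
```

Note that the function only scores a given partition. Nothing in it accounts for the fact that, in practice, the partition is chosen by searching for the maximum score on the same network.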

Despite its widespread adoption, this approach suffers from a variety of serious conceptual and practical flaws, which have been documented extensively [Reference Fortunato1, Reference Fortunato and Hric2, Reference Guimerà, Sales-Pardo and Nunes Amaral59–Reference Good, Yves-Alexandre and Clauset61]. The most problematic one is that it purports to use an inferential criterion (a deviation from a null generative model) but is in fact merely descriptive. As was recognized very early, this method categorically fails in its own stated goal, since it always finds high-scoring partitions in networks sampled from its own null model [Reference Guimerà, Sales-Pardo and Nunes Amaral59]. Indeed, the generative model we used in Fig. 2(a) is exactly the null model considered in the modularity function, which if maximized yields the partition seen in Fig. 2(a). As we already discussed, this result bears no relevance to the underlying generative process, and overfits the data.

The reason for this failure is that the method does not take into account the deviation from the null model in a statistically consistent manner. The modularity function is just a re-scaled version of the assortativity coefficient [Reference Newman62], a correlation measure of the community assignments seen at the endpoints of edges in the network. We should expect such a correlation value to be close to zero for a partition that is determined before the edges of the network are placed according to the null model, or equivalently, for a partition chosen at random. However, it is quite a different matter to find a partition that optimizes the value of $Q(\boldsymbol A, \boldsymbol b)$, after the network is observed. The deviation from a null model computed in Eq. 15 completely ignores the optimization step of Eq. 16, although it is a crucial part of the algorithm. As a result, the method of modularity maximization tends to massively overfit, and find spurious communities even in networks sampled from its null model. If we search for patterns of correlations in a random graph, most of the time we will find them. This is a pitfall known as “data dredging” or “$p$-hacking,” where one searches exhaustively for different patterns in the same data and reports only those that are deemed significant, according to a criterion that does not take into account the fact that we are doing this search in the first place.

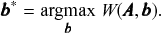

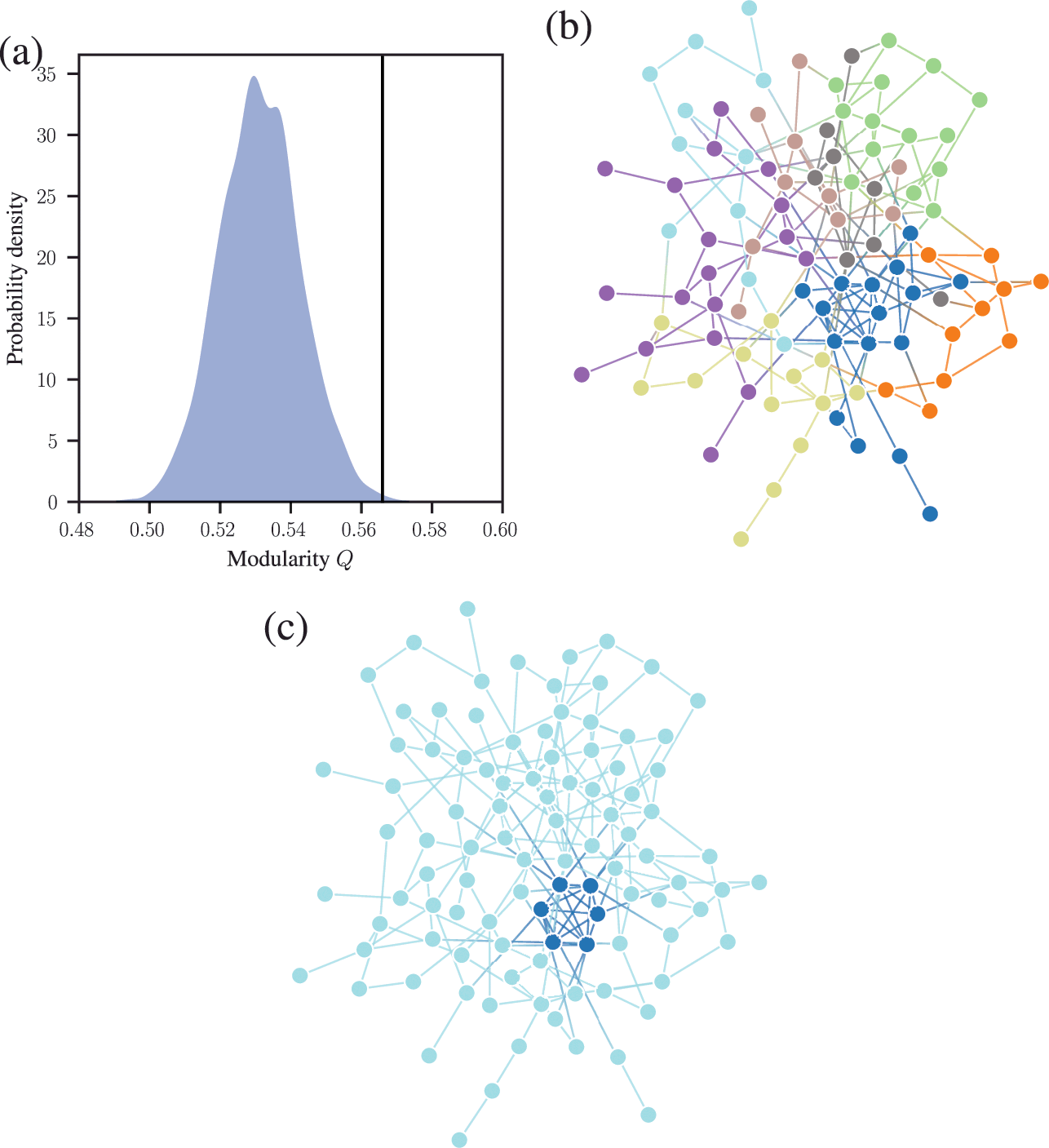

We demonstrate this problem in Fig. 6, where we show the distribution of modularity values obtained with a uniform configuration model in which every node $i$ has the same degree $k_i$, considering both a random partition and the one that maximizes $Q(\boldsymbol A, \boldsymbol b)$. While for a random partition we find what we would expect, i.e. a value of $Q(\boldsymbol A, \boldsymbol b)$ close to zero, for the optimized partition the value is substantially larger. Inspecting the optimized partition in Fig. 6(c), we see that it corresponds indeed to 15 seemingly clear assortative communities, which by construction bear no relevance to how the network was generated. They have been dredged out of randomness by the optimization procedure.
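A small numerical experiment in the same spirit can be sketched as follows. It is not the computation behind Fig. 6 and differs from it in two labeled ways: the network is a uniformly random simple graph with a fixed number of edges rather than a uniform configuration model, and the optimization is a simple greedy node-moving heuristic rather than a state-of-the-art modularity optimizer. All function names, sizes, and the random seed are assumptions.

```python
import random
from collections import defaultdict

def sample_random_graph(n, m, rng):
    """Sample a simple graph with n nodes and m distinct edges, no self-loops."""
    edges = set()
    while len(edges) < m:
        i, j = rng.sample(range(n), 2)
        edges.add((min(i, j), max(i, j)))
    return sorted(edges)

def modularity(edges, b):
    """Group-wise form of modularity: Q = sum_r [e_r / E - (d_r / 2E)^2], with
    e_r the edges inside group r and d_r the sum of degrees in group r."""
    E = len(edges)
    internal, degree_sum = defaultdict(int), defaultdict(int)
    for i, j in edges:
        degree_sum[b[i]] += 1
        degree_sum[b[j]] += 1
        if b[i] == b[j]:
            internal[b[i]] += 1
    return sum(internal[r] / E - (degree_sum[r] / (2 * E)) ** 2
               for r in degree_sum)

def greedy_maximize(n, edges, b, rng, max_sweeps=100):
    """Move single nodes to the neighbouring group that most increases Q,
    until no move improves it (a local, not global, maximum)."""
    E = len(edges)
    nbrs = [[] for _ in range(n)]
    for i, j in edges:
        nbrs[i].append(j)
        nbrs[j].append(i)
    b = list(b)
    degree_sum = defaultdict(int)
    for i in range(n):
        degree_sum[b[i]] += len(nbrs[i])
    for _ in range(max_sweeps):
        moved = False
        order = list(range(n))
        rng.shuffle(order)
        for i in order:
            k_i, r = len(nbrs[i]), b[i]
            links = defaultdict(int)          # edges from i to each group
            for j in nbrs[i]:
                links[b[j]] += 1
            degree_sum[r] -= k_i              # take node i out of its group

            def gain(s):
                # Change in Q (up to a constant) when placing i in group s.
                return links[s] / E - k_i * degree_sum[s] / (2 * E * E)

            best = max(sorted(set(links) | {r}), key=gain)
            if gain(best) > gain(r):
                b[i] = best
                moved = True
            degree_sum[b[i]] += k_i           # put node i back (possibly moved)
        if not moved:
            break
    return b

rng = random.Random(42)
n, m = 200, 500                               # average degree 5
edges = sample_random_graph(n, m, rng)
b_random = [rng.randrange(15) for _ in range(n)]
b_opt = greedy_maximize(n, edges, b_random, rng)
q_random, q_opt = modularity(edges, b_random), modularity(edges, b_opt)
print(f"random partition:    Q = {q_random:.3f}")
print(f"optimized partition: Q = {q_opt:.3f}")
```

Even this crude heuristic finds a partition whose modularity is far from zero, although the edges were placed with no reference to any group structure.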

Figure 6 Modularity maximization systematically overfits, and finds spurious structures even in its own null model. In this example we consider a random network model with $N$ nodes, with every node having the same degree $k$. (a) Distribution of modularity values for a partition into 15 groups chosen at random, and for the optimized value of modularity, for an ensemble of networks sampled from the same model. (b) Adjacency matrix of a sample from the model, with the nodes ordered according to a random partition. (c) Same as (b), but with the nodes ordered according to the partition that maximizes modularity.

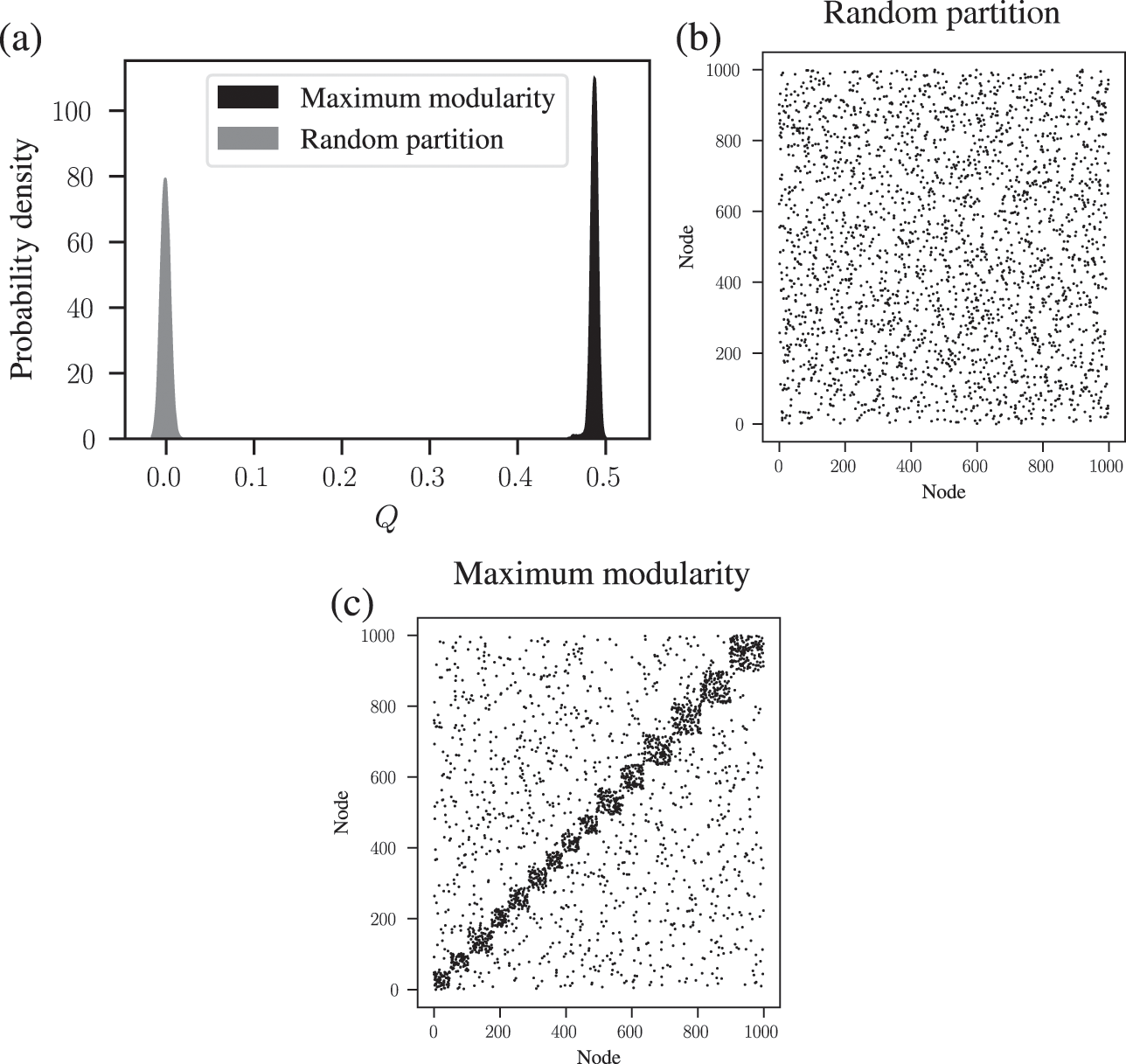

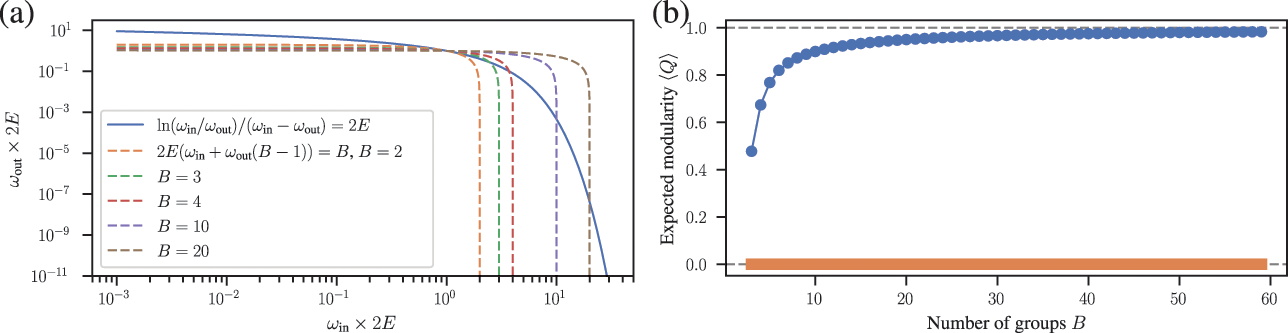

Somewhat paradoxically, another problem with modularity maximization is that in addition to systematically overfitting, it also systematically underfits. This occurs via the so-called resolution limit: in a connected networkFootnote 16 the method cannot find more than

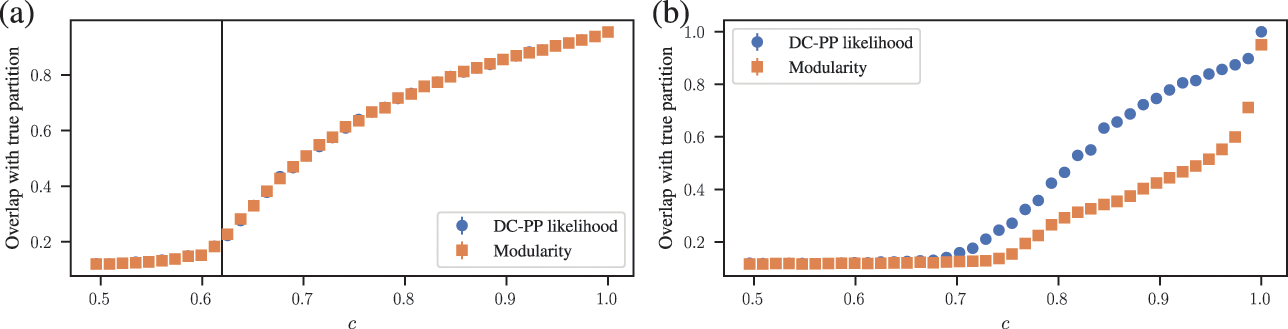

![]() communities [Reference Fortunato and Barthélemy60], even if they seem intuitive or can be found by other methods. An example of this is shown in Fig. 7, where for a network generated with the SBM containing 30 communities, modularity maximization finds only 18, while an inferential approach has no problems finding the true structure. There are attempts to counteract the resolution limit by introducing a “resolution parameter” to the modularity function, but as we discuss in Sec. 4.4 they are in general ineffective.

communities [Reference Fortunato and Barthélemy60], even if they seem intuitive or can be found by other methods. An example of this is shown in Fig. 7, where for a network generated with the SBM containing 30 communities, modularity maximization finds only 18, while an inferential approach has no problems finding the true structure. There are attempts to counteract the resolution limit by introducing a “resolution parameter” to the modularity function, but as we discuss in Sec. 4.4 they are in general ineffective.
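A classic illustration of the resolution limit from the literature on the topic, different from the SBM example of Fig. 7, is a ring of cliques: 30 complete graphs of 5 nodes each, with neighbouring cliques joined by a single edge. The sketch below compares the modularity of the intuitive partition (one group per clique) with that of a partition that merges neighbouring cliques in pairs. The function names and graph construction are assumptions made for illustration.

```python
from collections import defaultdict

def ring_of_cliques(n_cliques, clique_size):
    """Edge list of complete graphs arranged in a ring, with one edge
    connecting each clique to the next one."""
    edges = []
    for c in range(n_cliques):
        nodes = range(c * clique_size, (c + 1) * clique_size)
        edges += [(i, j) for i in nodes for j in nodes if i < j]
        first_of_next = ((c + 1) % n_cliques) * clique_size
        edges.append((nodes[-1], first_of_next))
    return edges

def modularity(edges, b):
    """Group-wise form of modularity: Q = sum_r [e_r / E - (d_r / 2E)^2]."""
    E = len(edges)
    internal, degree_sum = defaultdict(int), defaultdict(int)
    for i, j in edges:
        degree_sum[b[i]] += 1
        degree_sum[b[j]] += 1
        if b[i] == b[j]:
            internal[b[i]] += 1
    return sum(internal[r] / E - (degree_sum[r] / (2 * E)) ** 2
               for r in degree_sum)

edges = ring_of_cliques(30, 5)
b_single = [v // 5 for v in range(150)]    # one group per clique (30 groups)
b_pairs = [v // 10 for v in range(150)]    # neighbouring cliques merged (15 groups)

q_single = modularity(edges, b_single)
q_pairs = modularity(edges, b_pairs)
print(f"one group per clique: Q = {q_single:.4f}")
print(f"cliques merged in pairs: Q = {q_pairs:.4f}")
```

The merged partition scores higher, so a method that maximizes modularity prefers it, even though each clique is as clearly defined a community as one could ask for.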

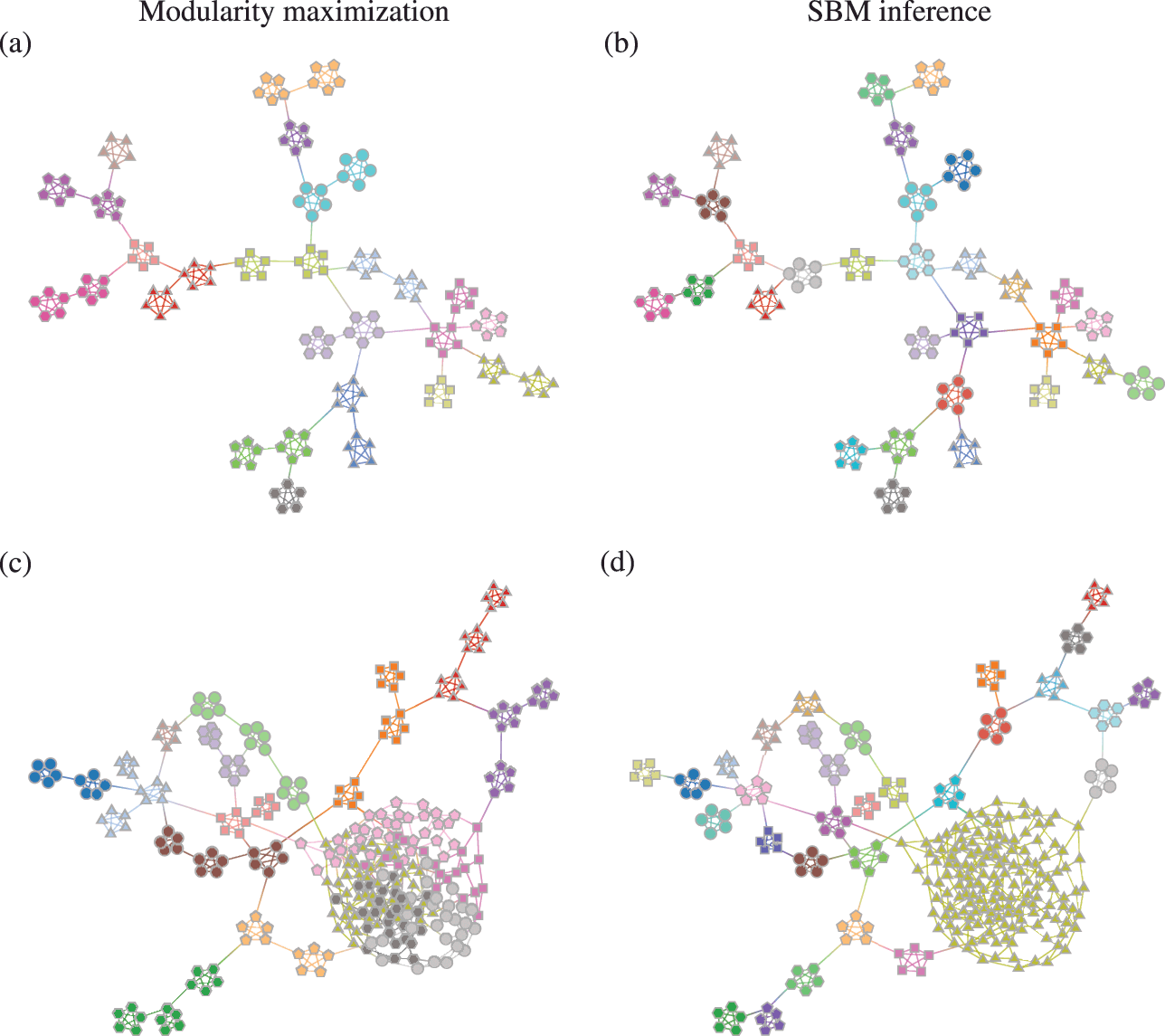

Figure 7 The resolution limit of modularity maximization prevents small communities from being identified, even if there is sufficient statistical evidence to support them. Panel (a) shows a network with 30 communities sampled from an assortative SBM parametrization. The colors indicate the 18 communities found with modularity maximization, where several pairs of true communities are merged together. Panel (b) shows the inference result of an assortative SBM [Reference Zhang and Peixoto24], recovering the true communities with perfect accuracy. Panels (c) and (d) show the results for a similar model where a larger community has been introduced. In (c) we see the results of modularity maximization, which not only merges the smaller communities together, but also splits the larger community into several spurious ones, thus both underfitting and overfitting different parts of the network at the same time. In (d) we see the result obtained by inferring the SBM, which once again finds the correct answer.

These two problems, overfitting and underfitting, can occur in tandem: portions of the network dominated by randomness are spuriously reported as containing communities, whereas other portions with clear modular structure have it obscured. The result is a very unreliable method for capturing the structure of heterogeneous networks. We demonstrate this in Fig. 7(c) and (d).

In addition to these major problems, modularity maximization also often possesses a degenerate landscape of solutions, with very different partitions having similar values of $Q$ [Reference Good, Yves-Alexandre and Clauset61]. In these situations the partition with the maximum value of modularity can be a poor representative of the entire set of high-scoring solutions and depend on idiosyncratic details of the data rather than general patterns, which can be interpreted as a different kind of overfitting (Footnote 17).

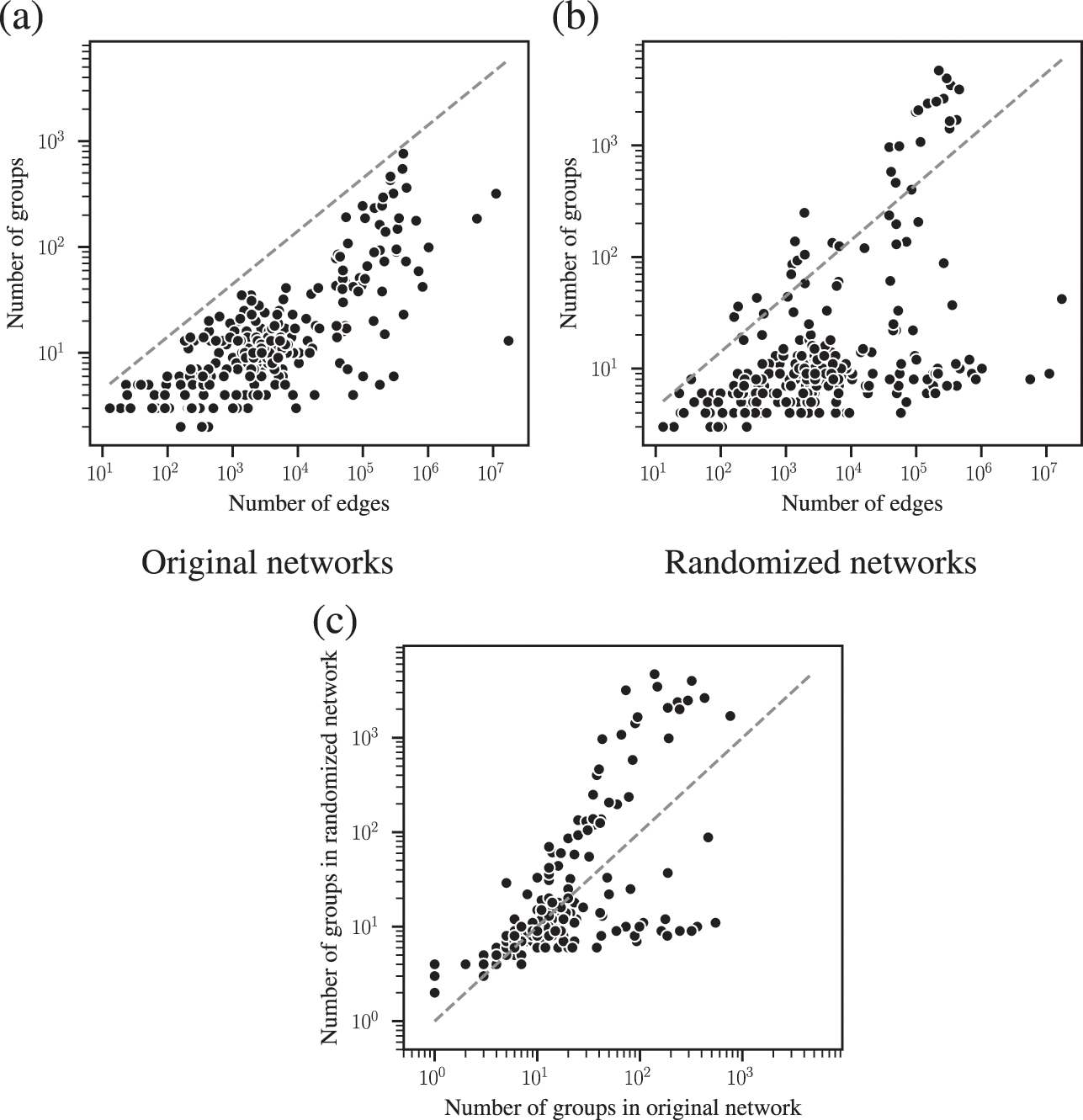

The combined effects of underfitting and overfitting can make the results obtained with the method unreliable and difficult to interpret. As a demonstration of the systematic nature of the problem, in Fig. 8(a) we show the number of communities obtained using modularity maximization for 263 empirical networks of various sizes and belonging to different domains [Reference Zhang and Peixoto64], obtained from the Netzschleuder catalogue [Reference Peixoto65]. Since the networks considered are all connected, the values are always below $\sqrt{2E}$, due to the resolution limit; but otherwise they are well distributed over the allowed range. However, in Fig. 8(b) we show the same analysis for a version of each network that is fully randomized while preserving the degree sequence. In this case, the number of groups remains distributed in the same range (sometimes even exceeding the resolution limit, because the randomized versions can end up disconnected). As Fig. 8(c) shows, the number of groups found for the randomized networks is strongly correlated with the number found for the original ones, despite the fact that the former have no latent community structure. This is a strong indication of the substantial amount of noise that is incorporated into the partitions found with the method.
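A degree-preserving randomization of this kind can be sketched with double-edge swaps, which rewire a simple undirected network while keeping every node's degree fixed. This is one common choice and an assumption on our part, since the procedure used for Fig. 8(b) is not specified here; the function names and the small ring network are hypothetical and serve only as an illustration.

```python
import random
from collections import Counter


def degree_sequence(edges):
    """Map each node to its degree."""
    deg = Counter()
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return deg


def randomize_preserving_degrees(edges, n_swaps, seed=0):
    """Rewire a simple undirected graph with double-edge swaps.

    Each accepted swap replaces edges (a, b) and (c, d) with (a, d) and (c, b),
    so every node keeps its degree. Swaps that would create a self-loop or a
    parallel edge are rejected.
    """
    rng = random.Random(seed)
    edges = [tuple(e) for e in edges]
    present = {frozenset(e) for e in edges}
    done = attempts = 0
    while done < n_swaps and attempts < 100 * n_swaps:
        attempts += 1
        i, j = rng.sample(range(len(edges)), 2)
        (a, b), (c, d) = edges[i], edges[j]
        if rng.random() < 0.5:
            c, d = d, c  # consider both orientations of the second edge
        if a == d or c == b:
            continue  # would create a self-loop
        new1, new2 = frozenset((a, d)), frozenset((c, b))
        if new1 in present or new2 in present or new1 == new2:
            continue  # would create a parallel edge
        present.discard(frozenset((a, b)))
        present.discard(frozenset((c, d)))
        present.update((new1, new2))
        edges[i], edges[j] = (a, d), (c, b)
        done += 1
    return edges


# Hypothetical toy input: a ring of 20 nodes.
ring = [(i, (i + 1) % 20) for i in range(20)]
rewired = randomize_preserving_degrees(ring, n_swaps=100, seed=1)
print(degree_sequence(rewired) == degree_sequence(ring))
```

Running the same community detection method on the original and on the rewired edge list then gives the kind of comparison shown in Fig. 8(c).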

Figure 8 Modularity maximization incorporates a substantial amount of noise into its results. (a) Number of groups found using modularity maximization for 263 empirical networks as a function of the number of edges. The dashed line corresponds to the $\sqrt{2E}$ upper bound due to the resolution limit. (b) The same as in (a) but with randomized versions of each network. (c) Correspondence between the number of groups of the original and randomized network. The dashed line shows the diagonal.

The systematic overfitting of modularity maximization, as well as of other descriptive methods such as Infomap, has also been demonstrated recently in Ref. [Reference Ghasemian, Hosseinmardi and Clauset66], from the point of view of edge prediction, on a separate empirical dataset of 572 networks from various domains.

Many of the problems with modularity maximization have long been known, and for some time there were no principled solutions to them, but this is no longer the case. In the table below we summarize some of the main problems with modularity and how they are solved with inferential approaches.

| Problem | Principled solution via inference |
|---|---|
| Modularity maximization overfits, and finds modules in maximally random networks [Reference Guimerà, Sales-Pardo and Nunes Amaral59]. | Bayesian inference of the SBM is designed from the ground up to avoid this problem in a principled way and systematically succeeds [Reference Peixoto, Doreian, Batagelj and Ferligoj5]. |
| Modularity maximization has a resolution limit, and finds at most $\sqrt{2E}$ communities in a connected network [Reference Fortunato and Barthélemy60]. | Inferential approaches with hierarchical priors [Reference Peixoto16, Reference Peixoto67] or strictly assortative structures [Reference Zhang and Peixoto24] do not have any appreciable resolution limit, and can find a maximum number of groups that scales as $O(N/\log N)$, where $N$ is the number of nodes. |
| Modularity maximization has a characteristic scale, and tends to find communities of similar size; in particular with the same sum of degrees (see Sec. 4.4). | Hierarchical priors can be specifically chosen to be a priori agnostic about characteristic sizes, densities of groups and degree sequences [Reference Peixoto16], such that these are not imposed, but instead obtained from inference, in an unbiased way. |
| Modularity maximization can only find strictly assortative communities. | Inferential approaches can be based on any generative model. The general SBM will find any kind of mixing pattern in an unbiased way, and has no problems identifying modular structure in bipartite networks, core-periphery networks, and any mixture of these or other patterns. There are also specialized versions for bipartite [Reference Larremore, Clauset and Jacobs68], core-periphery [Reference Zhang, Martin and Newman69], and assortative patterns [Reference Zhang and Peixoto24], if these are being searched exclusively. |
| The solution landscape of modularity maximization is often degenerate, with many different solutions with close to the same modularity value [Reference Good, Yves-Alexandre and Clauset61], and with no clear way to select between them. | Inferential methods are characterized by a posterior distribution of partitions. The consensus or dissensus between the different solutions [Reference Peixoto26] can be used to determine how many cohesive hypotheses can be extracted from inference, and to what extent the model being used is a poor or a good fit for the network. |

Because of the above problems, the use of modularity maximization should be discouraged, since it is demonstrably not fit for purpose as an inferential method. As a consequence, the use of modularity maximization in any recent network analysis that relies on inferential conclusions can arguably be considered a “red flag” that strongly indicates methodological inappropriateness. In the absence of secondary evidence supporting the alleged community structures found, or of extreme care to counteract the several limitations of the method (see Secs. 4.2, 4.3 and 4.4 for how typical attempts usually fail), the safest assumption is that the results obtained with that method contain a substantial amount of noise, rendering any inferential conclusion derived from them highly suspicious.

As a final note, we focus on modularity here not only for its widespread adoption but also because of its exemplary character. At a fundamental level, all of its shortcomings are shared with any descriptive method in the literature, to varied but always non-negligible degrees.

4 Myths, pitfalls, and half-truths

In this section we focus on assumed or asserted statements about how to circumvent pitfalls in community detection, which are in fact better characterized as myths or half-truths, since they are either misleading, or obstruct a more careful assessment of the true underlying nature of the problem. The following subsections each deal with one of these pitfalls.

4.1 “Modularity maximization and SBM inference are equivalent methods.”

As we have discussed in Sec. 2.5, it is possible to interpret any community detection algorithm as the inference of some generative model. Because of this, the mere fact that an equivalence with an inferential approach exists cannot be used to justify the inferential use of a descriptive method, or to use it as a criterion to distinguish between approaches that are statistically principled or not. To this aim, we need to ask instead whether the modelling assumptions that are implicit in the descriptive approach can be meaningfully justified, and whether they can be used to consistently infer structures from networks.

Some recent works have detailed some specific equivalences of modularity maximization with statistical inference [Reference Zhang and Moore70, Reference Newman71]. As we will discuss in this section, these equivalences are far more limited than commonly interpreted. They serve mostly to understand in more detail the reasons why modularity maximization fails as a reliable method, but do not prevent it from failing — they expose more clearly its sins, but offer no redemption.

We start with a very interesting connection revealed by Zhang and Moore [Reference Zhang and Moore70] between the effective posterior distribution we obtain when using the modularity function as a Hamiltonian,

(17)

and the posterior distribution of the strictly assortative DC-SBM, which we refer to here as the degree-corrected planted partition model (DC-PP),

(18)

which has a likelihood given by

(19)

where

(20)

This model assumes that there are constant rates

![]() and

and

![]() controlling the number of edges that connect to nodes of the same and different communities, respectively. In addition, each node has its own propensity

controlling the number of edges that connect to nodes of the same and different communities, respectively. In addition, each node has its own propensity

![]() , which determines the relative probability it has of receiving an edge, such that nodes inside the same community are allowed to have very different degrees. This is a far more restrictive version of the full DC-SBM we considered before, since it not only assumes assortativity as the only mixing pattern, but also that all communities share the same rate

, which determines the relative probability it has of receiving an edge, such that nodes inside the same community are allowed to have very different degrees. This is a far more restrictive version of the full DC-SBM we considered before, since it not only assumes assortativity as the only mixing pattern, but also that all communities share the same rate

![]() , which imposes a rather unrealistic similarity between the different groups.

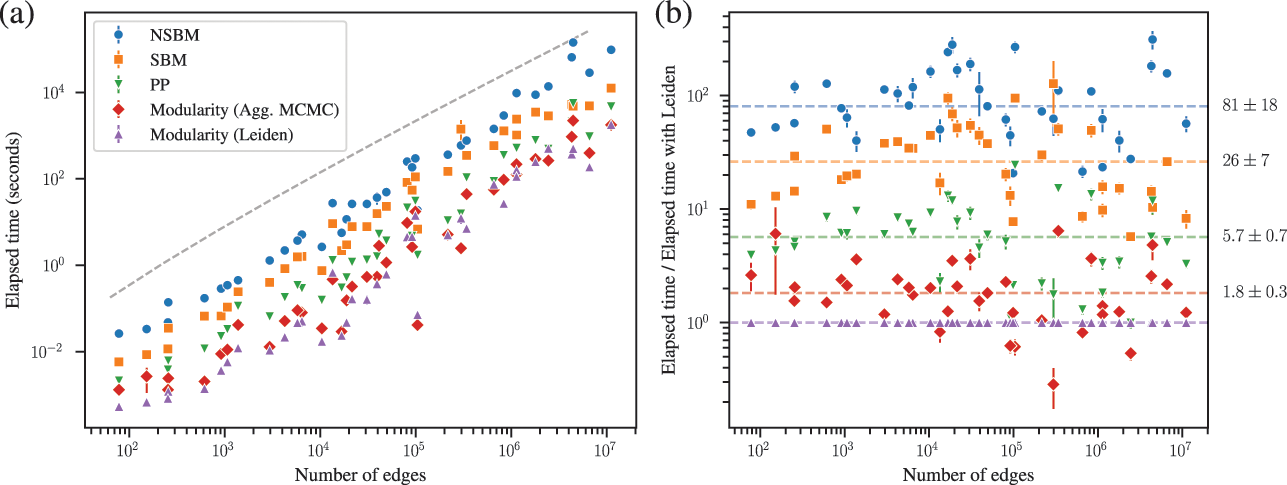

, which imposes a rather unrealistic similarity between the different groups.
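As a sketch of what a model of this kind looks like, one common formulation assumes Poisson-distributed edge counts between every pair of distinct nodes. The notation below is our assumption for illustration and need not match Eqs. (19) and (20) term by term: $A_{ij}$ is the adjacency matrix, $b_i$ is the community of node $i$, $\theta_i$ is the node propensity, and $\omega_{\text{in}}$ and $\omega_{\text{out}}$ are the rates for pairs in the same and in different communities.

```latex
P(A \mid \theta, \omega_{\text{in}}, \omega_{\text{out}}, b)
  = \prod_{i<j} \frac{e^{-\lambda_{ij}} \, \lambda_{ij}^{A_{ij}}}{A_{ij}!},
\qquad
\lambda_{ij} = \theta_i \theta_j
  \left[ \omega_{\text{in}} \, \delta_{b_i, b_j}
       + \omega_{\text{out}} \left( 1 - \delta_{b_i, b_j} \right) \right]
```

Written this way, the expected number of edges between two nodes depends on the partition only through whether the nodes share a community, which makes explicit that every community is forced to have the same internal rate.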