Abstract

Purpose

Because of the COVID-19 pandemic, we restructured our medical student knot-tying simulation into a virtual format. This study evaluated the feasibility and effectiveness of the curriculum.

Methods

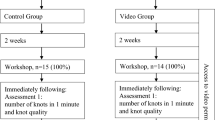

Over 4 weeks, second-year medical students (n = 229) viewed a video tutorial (task demonstration, errors, scoring) and self-practiced to proficiency (no critical errors, < 2 min) using at-home suture kits (simple interrupted suture, instrument tie, penrose drain model). Optional virtual tutoring sessions were offered. Students submitted a video of their performance for proficiency verification. Two surgeons independently rated two sets of 14 videos, with a consensus discussion in between, to establish inter-rater reliability (IRR). Students rated “needs remediation” attended virtual remediation sessions. Non-parametric statistical analyses were performed in RStudio.

Results

All 229 medical students completed the curriculum, and nearly all survey respondents (99.0%) reported self-training to proficiency in under 4 h; 1.3% of students attended an optional tutorial. All videos were assessed: 4.8% “exceeds expectations”, 60.7% “meets expectations”, and 34.5% “needs remediation.” All 79 students needing remediation due to critical errors achieved proficiency during 1–2 h virtual group sessions. Cohen’s κ for IRR was 0.69 initially and 1.0 after consensus discussion. Median [IQR] task completion time was 56 [47–68] s, and completion time differed significantly between every pair of rating groups (p < 0.01). Students rated the virtual curriculum (79.2%) and the video tutorial (92.7%) as effective, answering “agree” or “strongly agree”. No definitive preference emerged regarding virtual versus in-person formats; however, 80.2% wanted other at-home skills curricula. Comments described home practice as lower stress, and remediation students valued direct formative feedback.

Conclusions

A completely virtual 1-month knot-tying simulation is feasible and effective in achieving proficiency using video-based assessment and as-needed remediation strategies for a large student class.

Introduction

Transitions in medical education have been identified as critical points in the educational experience [1]. While many curricula address the transition from medical school to residency, the transition within medical school from pre-clinical to clinical training may also be associated with a steep learning curve [2, 3, 4, 5].

Several years ago, leadership at the University of Texas Southwestern (UTSW) Medical Center initiated a weeklong course entitled “Transitions to Clerkships.” Taken before clerkships begin, this course prepares medical students for their clinical rotations and includes didactic, interactive, and simulation components. One of the simulation activities is a suturing and knot-tying curriculum in which students learn to place a simple interrupted suture on a penrose drain using an instrument-tying technique. This skill is applicable to all students regardless of career path and can allow greater engagement on core clerkships such as general surgery or obstetrics/gynecology [6]. Indeed, many students have identified technical skills such as suturing and knot tying as shared training goals [7, 8]. Simulation-based suturing and knot-tying curricula have been shown to increase trainee comfort and confidence, improve task performance on expert assessment, and provide a more conducive training environment than the operating room [6, 9, 10].

Our previous instructional design consisted of a single 1-h, in-person, group-learning session during which students received an orientation to suturing basics and to the task. This was followed by expert-guided small-group training at an instructor-to-learner ratio of 1:6. The entire second-year class was accommodated in four sessions of up to 60 students each. All students were able to achieve proficiency by the end of the session through real-time rapid cycle deliberate practice and remediation as needed. This format was efficient and reliably met the learning objectives; in addition, the activity was well received by students and rated highly on annual surveys.

However, the COVID-19 pandemic and social distancing restrictions made this in-person instructional design impossible [11]. Given these circumstances, we opted to transform this curriculum into a virtual format using at-home simulation training and video-based assessment. The purpose of this study was to evaluate the feasibility and effectiveness of this modified curriculum with a new instructional design, hypothesizing that all students would achieve task proficiency by the end of the activity, similar to previous years.

Methods

This virtual activity was implemented for all second-year medical students participating in the fall 2020 Transitions to Clerkships course. Data were analyzed under a protocol approved by the UTSW Institutional Review Board.

Curriculum development and implementation

The curriculum team consisted of three course directors (established education faculty) and two course design collaborators (one surgical research resident and the simulation center director of operations).

Task goal and objectives

Using the established in-person suturing and knot-tying curriculum as a template, we adapted the instructional design to a virtual format. The task required students to place a simple interrupted suture and secure it with a surgeon's knot followed by two square knots, using an instrument-tying technique on a penrose drain model with 2–0 silk suture on an SH needle. Accordingly, our activity goal was to introduce students to suturing and knot tying using an instrument tie to close a simple wound. Our objectives were to (1) demonstrate appropriate instrument handling, (2) demonstrate safe handling of suture needles, and (3) achieve proficiency in suturing and knot tying using an instrument-tying technique for a simple interrupted suture.

Suturing kit development

The curriculum team determined the necessary suture kit contents by reviewing the task and the supplies used in our previous curriculum (Fig. 1). Surgical instruments for the kit (forceps, needle driver, and scissors) were obtained from various vendors and tested to determine which most closely resembled those students would use with actual patients during their clerkships. After cost-effectiveness was considered and consensus was reached, all contents were purchased, and the simulation team assembled kits for students to pick up. Additional supplies for further practice, such as a silicone skin pad made by our simulation center, were also included to support ongoing training during the clerkship phase of students' education.

Fig. 1 Suturing kit provided to all medical students. Contents dedicated to this curriculum included a penrose drain model (× 5), Velcro for the penrose drain model (× 5), forceps, needle driver, scissors, 2–0 silk suture on an SH needle (× 10), and a sharps container (needle board). Contents for future curricula included an in-house developed silicone skin pad with various wound shapes and sizes for further practice.

Video content creation

With assistance from the audio–visual department and allocated institutional funding, the curriculum team created a course video replicating the day-of task orientation from the previous in-person curriculum. The video began with a primer on suturing equipment (needle anatomy, needle sizes and types, suture materials and sizes), instrument basics (how to hold and use the forceps and needle driver, how to load a needle), and kit contents. It then explained the task expectations, metrics, and errors, and demonstrated the task both slowly in detail and in real time. Detailed demonstrations were included for all errors and common pitfalls to avoid. To capture the most complete view of performance, the curriculum team determined that students should record themselves from a directly opposing view at a 45° downward angle using a laptop webcam or cellphone, and instructions were provided to help learners record usable videos. We also extracted and distributed a shorter segment of the full video that demonstrated the task in real time, so that students could refresh their memory of the task without rewatching the entire course video. The full video ran 24:50 (min:sec), and the short segment ran 0:53.

Metrics and errors

We retained our in-person scoring metrics to ensure consistent expectations. Critical errors were categorized as safety errors or technique errors. Additional common pitfalls, which were not scored, were also described (Table 1). Students were expected to self-train to proficiency, defined as performing the task with no critical errors in less than 120 s. Three ratings were defined: (1) “exceeds expectations”: performs the task without any critical errors, avoids pitfalls, and demonstrates superior skill; (2) “meets expectations”: performs the task without any critical errors; and (3) “needs more practice”: does not meet expectations (commits any critical error) and requires remediation. Students were required to achieve “meets expectations” or “exceeds expectations” to pass the activity. These ratings had been developed through expert consensus for the previous in-person instructional design. The 120 s cutoff was based on observations from the in-person format and was also chosen to limit the time required for video review.
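The rating rules above can be expressed as a small decision function. The following Python sketch is illustrative only and is not the authors' scoring tool; the `Submission` fields and function names are hypothetical, and treating a completion time at or above 120 s as “needs more practice” is our assumption, since the three rating definitions mention only critical errors.

```python
from dataclasses import dataclass

# Proficiency time limit stated in the text (task must take less than 120 s).
TIME_LIMIT_S = 120.0


@dataclass
class Submission:
    """One rated video submission (field names are hypothetical)."""
    critical_errors: int    # count of safety or technique errors
    time_s: float           # task completion time in seconds
    avoided_pitfalls: bool  # rater judgement: no unscored pitfalls observed
    superior_skill: bool    # rater judgement: clearly superior performance


def rate(sub: Submission) -> str:
    """Map a submission to one of the three ratings described in the text."""
    # Assumption: a time at or over the limit is handled like a critical error,
    # because it fails the proficiency definition.
    if sub.critical_errors > 0 or sub.time_s >= TIME_LIMIT_S:
        return "needs more practice"
    if sub.avoided_pitfalls and sub.superior_skill:
        return "exceeds expectations"
    return "meets expectations"


def passed(rating: str) -> bool:
    """Passing requires 'meets expectations' or 'exceeds expectations'."""
    return rating in {"meets expectations", "exceeds expectations"}


if __name__ == "__main__":
    example = Submission(critical_errors=0, time_s=54, avoided_pitfalls=False, superior_skill=False)
    r = rate(example)
    print(r, passed(r))  # meets expectations True
```

Encoding the rules this way makes the precedence explicit: any critical error overrides the rater's judgement of skill, so “exceeds expectations” is reachable only from an error-free performance within the time limit.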

Curriculum structure

Students were then given access to the online module, housed on the institutional Desire2Learn (D2L) education platform [12]. The module contained the course video and an activity syllabus listing the goals and objectives, metrics and assessment criteria, task instructions, course timeline, and Zoom links to the optional tutorial sessions. These optional 1-h tutorial sessions were held once a week on varying days and at varying times, with a faculty member or senior surgical resident available for directed feedback and questions.

The curriculum began one month before the Transitions to Clerkships course. Students were expected to self-practice to proficiency within 4 weeks, attending the live virtual tutorial sessions as needed. By the week preceding the Transitions to Clerkships course, students were required to submit a video of their performance for video-based assessment. Two surgeons independently rated the initial submissions to assess inter-rater reliability (IRR), after which a single surgeon evaluated all remaining submissions. Students received ratings and summative performance feedback through D2L, and those who required remediation were scheduled for a live virtual remediation session during the Transitions to Clerkships week. Summative feedback was individualized for each student and included both positive and constructive comments on instrument holding and handling, knot-tying technique, and safety, with video time stamps referenced. Because this feedback was posted in D2L at the time of video assessment, students received both their overall rating (needs more practice, meets expectations, or exceeds expectations) and detailed comments. An example: “Rating of fail. Proper hold of instruments and good wrist rotation to manipulate the needle. You need to place the needle driver in between the suture at all times for the wraps. You, however, had it on the outside on the surgeon's knot and, therefore, wrapped incorrectly, counterclockwise. On the surgeon's knot it is supposed to be clockwise. See 0:51 s.”

Remediation was then conducted virtually on the Zoom platform [13] over 2 days of the Transitions course, scheduled around other learner assignments. To maximize relevance and efficiency, we grouped students by error type: instrument holding, knot tying, or multiple errors (both instrument holding and knot-tying errors). During the remediation session, students had their task performance evaluated with directed formative feedback and were then reassessed for proficiency by the end of the session.

At the end of the week, all students were sent an anonymous survey, which requested information regarding total training time to task completion, their perception of the virtual platform curriculum, video content, optional tutorial sessions, and finally their preference for future at-home skill training and virtual versus in-person format (Appendix 1).

Analytic variables and statistical analyses

Our primary outcome was the number of students who ultimately achieved proficiency with this curriculum. Secondary outcomes included time and cost resources, handedness, rating breakdown, the number of students requiring remediation, error types and number, and time to task completion. Descriptive statistics are presented as median [interquartile range (IQR)]. Continuous variables were compared using the non-parametric Wilcoxon rank-sum test, and categorical variables using the chi-squared test. IRR was assessed using Cohen’s kappa. All statistical analyses were performed in RStudio (Version 1.3.959); p < 0.05 was considered significant.
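For readers unfamiliar with the IRR statistic, a minimal Python sketch of unweighted Cohen's kappa follows. The authors used RStudio, and this is not their code; the function name is hypothetical. Kappa compares the observed agreement p_o with the agreement p_e expected by chance from each rater's label frequencies, κ = (p_o - p_e) / (1 - p_e), so 1.0 indicates perfect agreement and 0 indicates chance-level agreement.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Unweighted Cohen's kappa for two raters scoring the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items given the same label by both raters.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: sum over labels of the product of the two raters'
    # marginal proportions (Counter returns 0 for labels a rater never used).
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_chance = sum(counts_a[label] * counts_b[label] for label in counts_a) / n**2
    if p_chance == 1.0:
        # Both raters gave one identical label to every item; kappa is undefined.
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_observed - p_chance) / (1.0 - p_chance)
```

For example, `cohens_kappa(["pass", "pass", "fail", "fail"], ["pass", "fail", "fail", "fail"])` returns 0.5: the raters agree on 75% of items, but 50% agreement would be expected by chance.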

Results

All second-year medical students (229/229) participated in and successfully completed the curriculum. Most performed the task right-handed (97.4%, 223/229); the remaining 2.6% (6/229) performed it left-handed. Only 1.3% (3/229) of students attended the optional tutorial sessions.

The first 14 videos were independently rated by two surgeons, yielding an IRR (Cohen’s κ) of 0.69. After a detailed discussion of scoring discrepancies to reach consensus, the same two surgeons independently rated the next 14 videos, yielding an IRR of 1.0. The remaining videos were rated by a single surgeon.

Outcome analysis

On video review, 65.5% (150/229) of students passed: 4.8% (11/229) were rated “exceeds expectations” and 60.7% (139/229) “meets expectations,” while 34.5% (79/229) were rated “needs more practice.” There was no significant difference in performance between right-handed and left-handed technique (p = 0.4). Among students requiring remediation, the most common error category was instrument holding (59.5%, 47/79), followed by multiple errors (21.5%, 17/79) and knot-tying errors (19.0%, 15/79). Of the instrument holding errors, 97.5% (77/79) involved incorrectly holding the forceps and 2.5% (2/79) incorrectly holding the needle driver. Remediation sessions lasted 1–2 h, with instructor-to-learner ratios ranging from 1:2 to 1:9; smaller groups and longer sessions were used for students with multiple errors. Three instructors held a total of 18 remediation sessions concurrently over a 2-day period. The Zoom platform allowed instructors to demonstrate correct task performance and to observe each learner performing the task until proficiency was verified. At curriculum completion, all students (100%, 229/229) met proficiency metrics.
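The handedness comparison is a test of independence between a categorical grouping and the rating. As an illustration only (the paper does not report the underlying contingency table, and this is not the authors' analysis code), here is a minimal Python sketch of a Pearson chi-squared test without continuity correction; the function name is hypothetical. It assumes every row and column total is positive, and it computes p-values only for 1 or 2 degrees of freedom, which covers a handedness-by-pass/fail (2×2) or handedness-by-rating (2×3) table. R's `chisq.test` applies Yates's continuity correction to 2×2 tables by default, and with only six left-handed students the expected counts would be small, so an exact test may be more appropriate in practice.

```python
import math
from typing import List, Tuple


def chi_squared_test(table: List[List[float]]) -> Tuple[float, int, float]:
    """Pearson chi-squared test of independence (no continuity correction).

    Returns (statistic, degrees of freedom, p-value). Assumes all row and
    column totals are positive.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / grand
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    if df == 1:
        p = math.erfc(math.sqrt(stat / 2))  # chi-squared survival function, df = 1
    elif df == 2:
        p = math.exp(-stat / 2)             # chi-squared survival function, df = 2
    else:
        raise NotImplementedError("closed-form p-value only for df = 1 or 2")
    return stat, df, p
```

The closed forms avoid any dependency on a statistics library: with one degree of freedom the statistic is a squared standard normal, and with two it is exponential with mean 2.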

All students completed the task under the required 120 s (range 26–114 s). Completion time differed significantly between every pair of rating groups (p < 0.01): median [IQR] time was 35 s [31–38] for “exceeds expectations,” 54 s [46–65.5] for “meets expectations,” and 62 s [51–76.5] for “needs more practice” (Fig. 2).
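The pairwise time comparisons rely on the Wilcoxon rank-sum test. A minimal Python sketch of the two-sided test with the normal approximation follows; it includes a tie correction to the variance and a 0.5 continuity correction, which, as far as we know, mirrors what R's `wilcox.test` does when `exact = FALSE`. Function names are hypothetical and this is not the authors' code. Each of the three pairwise comparisons (for example, “exceeds expectations” times versus “meets expectations” times) would be one call.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks, with tied values sharing the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j are tied
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def rank_sum_test(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Wilcoxon rank-sum test via the normal approximation.

    Returns (W, p), where W = (rank sum of x) - n_x * (n_x + 1) / 2.
    """
    n_x, n_y = len(x), len(y)
    n = n_x + n_y
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    w = sum(ranks[:n_x]) - n_x * (n_x + 1) / 2
    # Tie correction: each group of t tied values reduces the variance.
    tie_term = sum(t**3 - t for t in Counter(pooled).values()) / (n * (n - 1))
    sigma = math.sqrt(n_x * n_y / 12 * ((n + 1) - tie_term))
    if sigma == 0:
        return w, 1.0  # every value tied: no evidence of a difference
    diff = w - n_x * n_y / 2
    correction = math.copysign(0.5, diff) if diff != 0 else 0.0  # continuity
    z = (diff - correction) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return w, p
```

For instance, `rank_sum_test([1, 2, 3], [4, 5, 6])` gives W = 0 and p of about 0.08; with groups this small an exact test would normally be preferred, whereas the rating groups here are large enough for the approximation.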

Survey results

The survey response rate was 41.9% (96/229). Nearly all respondents (99.0%, 95/96) reported self-training to proficiency in under 4 h, and most (76.0%, 73/96) in under 2 h. When asked whether the virtual platform with at-home practice adequately taught the skill, 79.2% (76/96) agreed (agreed or strongly agreed) and 10.4% (10/96) were undecided. When asked whether the video content was relevant and helpful, 92.7% (89/96) agreed. When asked whether they would like the opportunity to practice other skills at home, 80.2% (77/96) agreed and 15.6% (15/96) were undecided. When asked whether they would prefer to continue virtual teaching over in-person teaching once COVID-19 restrictions were lifted, there was no consensus: 41.7% (40/96) agreed, 42.7% (41/96) disagreed (disagreed or strongly disagreed), and 15.6% (15/96) were undecided.

Free-text responses were provided by 6.2% (6/96) of respondents and included positive and constructive feedback on both the curriculum structure and virtual learning. Positive feedback on the curriculum structure addressed the quality and convenience of the supplies, the thoroughness of the guidelines, and the rapid development of a detailed virtual curriculum. Constructive feedback on the instructional design included the need for more detail in the videos to prevent practicing incorrect techniques, such as instrument holding errors, and the inclusion of resources for practicing future surgical techniques. Regarding virtual learning, positive feedback included the ability to practice the skill in a “no-stakes” environment rather than feeling rushed or self-conscious in a single observed session, and a desire for a hybrid format combining self-training with in-person directed feedback before evaluation. In addition, verbal feedback during the small-group remediation sessions indicated that some students were grateful for the directed feedback and, as a result, felt better prepared and more confident with the skill.

Resources required

In the first year, transitioning to the virtual curriculum required $10,636 and 104 person-hours. Maintaining the virtual curriculum in future years is estimated to require $9,636 and 54 person-hours. By comparison, maintaining the previous in-person curriculum was estimated to require $10,740 and 14 person-hours (Tables 2 and 3).
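The resource comparison reduces to simple arithmetic on the three reported figures. The short Python sketch below (variable and function names are ours) computes the changes; it uses only the totals stated in the text, not the itemized Tables 2 and 3.

```python
# Figures reported in the text: (cost in US dollars, person-hours).
RESOURCES = {
    "virtual, first year": (10_636, 104),
    "virtual, maintenance (est.)": (9_636, 54),
    "in-person, maintenance (est.)": (10_740, 14),
}


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new (negative means a decrease)."""
    return 100.0 * (new - old) / old


first_cost, first_hours = RESOURCES["virtual, first year"]
maint_cost, maint_hours = RESOURCES["virtual, maintenance (est.)"]
inperson_cost, inperson_hours = RESOURCES["in-person, maintenance (est.)"]

# Change within the virtual curriculum, from first year to maintenance.
print(f"virtual cost change: {pct_change(first_cost, maint_cost):.1f}%")
print(f"virtual person-hour change: {pct_change(first_hours, maint_hours):.1f}%")
# Maintenance comparison, virtual versus in-person.
print(f"maintenance cost, virtual vs in-person: ${inperson_cost - maint_cost:,} lower")
print(f"maintenance person-hours, virtual vs in-person: {maint_hours - inperson_hours} more")
```

Run as a script, it reports a 9.4% cost reduction and a 48.1% person-hour reduction from the first year to maintenance, and a maintenance cost $1,104 lower but 40 person-hours higher than the in-person curriculum.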

Discussion

Because of pandemic constraints, we developed an at-home suturing and knot-tying curriculum for medical students, and in this study we evaluated its feasibility and effectiveness. All 229 medical students enrolled in our university’s required Transitions to Clerkships course were able to self-train and submit videos in time for video-based review, and those requiring more practice were successfully remediated. Although video-based assessment of student-recorded submissions using readily available technology (phone camera, laptop webcam, and the Zoom platform) was novel, neither learners nor instructors had difficulty adapting to this format. In addition, we were able to establish an appropriate IRR to support the accuracy of our assessments. From a cost standpoint, the virtual format was feasible: first-year costs were comparable to the maintenance costs of the in-person format ($10,636 vs. $10,740), although the time investment was higher, and effectiveness in bringing students to proficiency was similar. Indeed, we verified that all students met proficiency benchmarks by the end of the curriculum, meeting our learning objectives.

Regarding performance metrics, the distribution of students who exceeded expectations, met expectations, and needed more practice appeared similar to our experience in previous years. Given the limited attendance, the benefit of directed feedback during the optional tutorial sessions is unclear; however, we suspect that minor errors such as instrument holding would have been caught and corrected earlier had more students attended. Indeed, many students who underwent remediation expressed appreciation for the directed feedback. Students also expressed a desire to have received feedback sooner, noting that this might have allowed them to correct errors earlier and reinforce proper technique. We designed the optional tutorial sessions to provide early formative feedback; however, attendance was poor, which we attribute to a lack of awareness among students and the need for students to prioritize other activities.

One interesting observation was the relationship between rating and task completion time. Although completion time was not used to distinguish among rating levels (beyond the 120 s proficiency cutoff), learners who demonstrated superior skill (“exceeds expectations”) completed the task significantly faster than the other groups (p < 0.01). Superior performance may reflect natural ability, previous experience, or differences in the amount of training (time or repetitions); however, given the limitations of our data, we could not analyze these potential relationships.

Our experience suggests that thorough instructional design and structure, together with high-quality, cost-effective products, can provide students with the tools to acquire basic suturing and knot-tying skills. Although the overall time investment was higher than for our previous in-person curriculum, most resources were related to the initial investment in video and virtual platform creation, which increased this year's resource requirements compared with the year prior. In future years, we estimate that effort will decrease by roughly half (from 104 to 54 person-hours) and cost by about $1,000 (from $10,636 to $9,636). The most labor-intensive component was remediation, which required 24 person-hours with supervision by two faculty members and one resident. Notably, our resource estimates allocated no cost to instructor effort for either video assessment or remediation. On the other hand, virtual remediation required coordinating 3 instructors, compared with 6 instructors for each of the 4 sessions of the previous in-person curriculum. With better communication about the optional tutorial sessions and earlier correction of instrument holding errors, we suspect that the remediation time investment could be reduced substantially. Furthermore, the estimated cost to maintain the virtual curriculum ($9,636) is lower than that of the in-person curriculum ($10,740). An additional benefit is that every student receives a personal suture kit with instruments and training materials for their own use, rather than sharing instruments that remain at the simulation center.

Although we do not have comparison data on remediation requirements from previous years, the most frequent error in the virtual curriculum, incorrectly holding the forceps, was consistent with our experience in in-person training. We consider this a minor technical error because it is easily corrected and does not affect the quality of the final knot. However, 40.5% (32/79) of students requiring remediation committed knot-tying errors (knot-tying errors alone or as part of multiple errors). Again, without direct comparison data, it is difficult to determine whether remediation needs in the virtual format were consistent with those in the in-person format. Importantly, no student committed a critical safety error.

Survey responses indicated that students, even for a simple suturing task, learn at different rates and have a variety of feedback needs. Furthermore, there was no consensus on preference for virtual versus in-person formats. Although the response rate was 42%, we believe these responses are likely reflective of the total population and highlight differing needs for the learning environment. The literature has repeatedly shown that mastery learning is achieved through distributed practice with directed feedback [14, 15]. It is possible that a computer-based module on a virtual platform offers learners tools for distributed self-practice that were not available in the in-person curriculum [16]. In addition, transitioning from massed practice (a single session in large groups with continuous practice without rest) to distributed practice has been shown to improve learning and skill acquisition [15, 17, 18].

Our sample had a smaller proportion of left-handed students (2.6%) than the general population (approximately 10%). We did not specify which hand students should use, and some left-hand-dominant students may have chosen to perform the skill right-handed given the instruments and curriculum structure. Notably, our video tutorial did not address the complexities of performing the task left-handed, whereas in-person sessions allowed some real-time adjustments for the learner. Despite this, there was no significant difference in performance ratings or time to task completion between left- and right-handed students.

The authors recognize some limitations to this study. First, the data collected were limited to a post-training self-submitted video. No baseline performance data were collected, and no learning curve analysis was performed. Nor did we collect discrete data on the number of practice repetitions, so correlations between the received rating and practice repetitions could not be explored. Similarly, total training time alone did not allow analysis of practice patterns to determine any effects of massed versus distributed training. Second, the virtual platform as implemented allowed only written summative feedback after video submission. Limited attendance at the optional tutorial sessions prevented us from providing formative feedback during self-training, which would have been ideal for deliberate practice [15]. In future iterations of this curriculum, we may modify the structure to ensure that formative feedback is delivered.

The survey and feedback further supported the benefit of providing students with a low-stress environment for distributed practice. Future steps to consider include testing baseline skills for comparison with post-training performance, using baseline video submissions to enable earlier formative feedback, creating a hybrid format that allows in-person directed feedback, allowing students to choose their preferred format and assessing the resulting skill acquisition, having students perform video-based self-assessments, and analyzing learning curves and self-practice data with at-home training supplies to understand how skill acquisition relates to repetition count and to massed versus distributed training sessions [19, 20]. We are also pursuing the development of artificial intelligence technology for video-based performance assessment, which could reduce the resources required for human video review.

Conclusion

An at-home suturing and knot-tying curriculum created for 229 medical students during the pandemic proved feasible, with students self-training and submitting videos for review, and effective, with all students meeting proficiency benchmarks by curriculum completion. The lack of consensus on preference for virtual versus in-person training is especially relevant to developing hybrid curricula that cater to learner preferences as we return to normal practice.

Data availability

The data sets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

The American Board of Surgery, The American College of Surgeons, The Association of Program Directors in Surgery, The Association of Surgical Education. Statement on surgical pre-residency preparatory courses. J Am Coll Surg. 2014;219(5):851–2. https://doi.org/10.1007/s00268-014-2749-y.

Cho KK, Marjadi B, Langendyk V, Hu W. Medical student changes in self-regulated learning during the transition to the clinical environment. BMC Med Educ. 2017;17(1):59. https://doi.org/10.1186/s12909-017-0902-7.

Dunham L, Dekhtyar M, Gruener G, CichoskiKelly E, Deitz J, Elliott D, Stuber ML, Skochelak SE. Medical student perceptions of the learning environment in medical school change as students transition to clinical training in undergraduate medical school. Teach Learn Med. 2017;29(4):383–91. https://doi.org/10.1080/10401334.2017.1297712.

Lipman JM, Terhune K, Sarosi G Jr, Minter R, Sachdeva AK, Delman KA. Easing the Transition to Surgical Residency: The ACS/APDS/ASE Resident Prep Curriculum. Chicago, IL: Available at: https://www.facs.org/Education/Division-of-Education/Publications/RISE/articles/transition-to-residency. Accessed 28 May 2021.

Malau-Aduli BS, Roche P, Adu M, Jones K, Alele F, Drovandi A. Perceptions and processes influencing the transition of medical students from pre-clinical to clinical training. BMC Med Educ. 2020. https://doi.org/10.1186/s12909-020-02186-2.

DiMaggio PJ, Waer AL, Desmarais TJ, Sozanski J, Timmerman H, Lopez JA, Poskus DM, Tatum J, Adamas-Rappaport WJ. The use of a lightly preserved cadaver and full thickness pig skin to teach technical skills on the surgery clerkship–a response to the economic pressures facing academic medicine today. Am J Surg. 2010;200(1):162–6. https://doi.org/10.1016/j.amjsurg.2009.07.039.

Glass CC, Acton RD, Blair PG, Campbell AR, Deutsch ES, Jones DB, Liscum KR, Sachdeva AK, Scott DJ, Yang SC. American College of Surgeons/Association for Surgical Education medical student simulation-based surgical skills curriculum needs assessment. Am J Surg. 2014;207(2):165–9. https://doi.org/10.1016/j.amjsurg.2013.07.032.

McKinley SK, Kochis M, Cooper CM, Saillant N, Haynes AB, Petrusa E, Phitayakorn R. Medical students’ perceptions and motivations prior to their surgery clerkship. Am J Surg. 2019;218(2):424–9. https://doi.org/10.1016/j.amjsurg.2019.01.010.

Naylor RA, Hollett LA, Valentine RJ, Mitchell IC, Bowling MW, Ma AM, Dineen SP, Bruns BR, Scott DJ. Can medical students achieve skills proficiency through simulation training? Am J Surg. 2009;198(2):277–82. https://doi.org/10.1016/j.amjsurg.2008.11.036.

Riboh J, Curet M, Krummel T. Innovative introduction to surgery in the preclinical years. Am J Surg. 2007;194(2):227–30. https://doi.org/10.1016/j.amjsurg.2006.12.038.

Dedeilia A, Sotiropoulos MG, Hanrahan JG, Janga D, Dedeilias P, Sideris M. Medical and surgical education challenges and innovations in the COVID-19 era: a systematic review. In Vivo. 2020;34(3 Suppl):1603–11. https://doi.org/10.21873/invivo.11950.

The University of Texas Southwestern Simulation Center. 2020. TTC 1501: Transition To Clerkships [Course Content]. Available at: https://d2l.utsouthwestern.edu/d2l/loginh/. Accessed 9 June 2021.

Zoom Video Communications Inc. 2016. Security guide. Zoom Video Communications Inc. Available at: https://d24cgw3uvb9a9h.cloudfront.net/static/81625/doc/Zoom-Security-White-Paper.pdf. Accessed 9 June 2021.

Benjamin AS, Tullis J. What makes distributed practice effective? Cogn Psychol. 2010;61(3):228–47. https://doi.org/10.1016/j.cogpsych.2010.05.004.

Ericsson KA. Deliberate practice and acquisition of expert performance: a general overview. Acad Emerg Med. 2008;15(11):988–94. https://doi.org/10.1111/j.1553-2712.2008.00227.x.

Xeroulis GJ, Park J, Moulton CA, Reznick RK, Leblanc V, Dubrowski A. Teaching suturing and knot-tying skills to medical students: a randomized controlled study comparing computer-based video instruction and (concurrent and summary) expert feedback. Surgery. 2007;141(4):442–9. https://doi.org/10.1016/j.surg.2006.09.012.

Moulton CA, Dubrowski A, Macrae H, Graham B, Grober E, Reznick R. Teaching surgical skills: what kind of practice makes perfect?: a randomized, controlled trial. Ann Surg. 2006;244(3):400–9. https://doi.org/10.1097/01.sla.0000234808.85789.6a.

Pender C, Kiselov V, Yu Q, Mooney J, Greiffestein P, Paige JT. All for knots: evaluating the effectiveness of a proficiency-driven, simulation-based knot tying and suturing curriculum for medical students during their third-year surgery clerkship. Am J Surg. 2017;213(2):362–70. https://doi.org/10.1016/j.amjsurg.2016.06.028.

Wright AS, McKenzie J, Tsigonis A, Jensen AR, Figueredo EJ, Kim S, Horvath K. A structured self-directed basic skills curriculum results in improved technical performance in the absence of expert faculty teaching. Surgery. 2012;151(6):808–14. https://doi.org/10.1016/j.surg.2012.03.018.

Hu Y, Tiemann D, Brunt ML. Video self-assessment of basic suturing and knot tying skills by novice trainees. J Surg Educ. 2013;70(2):279–83. https://doi.org/10.1016/j.jsurg.2012.10.003.

Acknowledgements

The authors gratefully acknowledge support provided by the UT Southwestern Simulation Center and the Undergraduate Medical Education office. In addition, we would like to thank the medical students who took part in this study.

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection, and analysis were performed by MBN, KKC, RVR, APM, and DJS. The first draft of the manuscript was written by MBN and DJS, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Appendix 1. Survey feedback request from medical students at the completion of the virtual suturing curriculum

Transitions course: virtual suturing and knot-tying simulation activity

1. How much total time did you spend on the suturing and knot-tying simulation activity? (a) < 1 h; (b) 1–2 h; (c) 2–3 h; (d) 3–4 h; (e) 4+ h

For all of the following, rate from 1 (Not at all) to 5 (Very much):

2. Did you feel the virtual platform with at home practice adequately taught you the skill?
3. Was the video content relevant and helpful?
4. If you attended any optional tutoring sessions, were they helpful?
5. If COVID restrictions were lifted, would you recommend continuing the virtual format over the traditional in-person format in the future?
6. Would you like to have the opportunity to practice other skills at home?
7. Comments?

Cite this article

Nagaraj, M.B., Campbell, K.K., Rege, R.V. et al. At-home medical student simulation: achieving knot-tying proficiency using video-based assessment. Global Surg Educ 1, 4 (2022). https://doi.org/10.1007/s44186-022-00007-2

DOI: https://doi.org/10.1007/s44186-022-00007-2