Abstract

There are increasing concerns about university students’ mental health with mindfulness-based interventions (MBIs) showing promising results. The effect of MBIs delivered digitally to a broad range of university students and study attrition rates remain unclear. This review aimed to explore the effectiveness of online MBIs on university students’ mental health, academic performance and attrition rate of online MBIs. Four databases were searched; both randomised and non-randomised controlled trials were included. Outcomes included mental health-related outcomes and academic performance. Twenty-six studies were identified with outcomes related to mental health. When compared with non-active controls, small to medium statistically significant effect sizes in favour of online MBIs were found for depression, stress, anxiety, psychological distress and psychological well-being at post-intervention. However, these benefits were not seen when online MBIs were compared to active controls and other treatments at post-intervention or follow-up. University students in online MBI arms were more likely to drop out compared to non-active controls and active controls, but no differences were found compared to other treatments. Generally, the included studies’ risk of bias was moderate to high. Online MBIs appear beneficial for improving university students’ mental health when compared to non-active controls post-intervention, but not active controls or other treatments. Findings related to active controls and other treatments should be interpreted with caution due to the small number of studies, the small number of participants in included studies and the degree of heterogeneity in effect sizes.

Introduction

There are increasing concerns about university students’ mental health and well-being (Huang et al., 2018; Pedrelli et al., 2015). In an extensive international survey of 21 countries, 20% of college students met diagnostic criteria for at least one mental health condition over a 12-month period (Auerbach et al., 2016). University students are particularly at risk due to stressors such as academic pressure, difficulty coping with failure, financial burden and transitional stressors (Aldiabat et al., 2014). This suggests a need to provide support to prevent and alleviate mental health difficulties in students (Aldiabat et al., 2014; Gazzaz et al., 2018).

Mindfulness is ‘awareness, cultivated by paying attention in a sustained and practical way: on purpose, in the present moment, and non-judgmentally’ (Kabat-Zinn, 2012, p. 1). Mindfulness-based interventions (MBIs) have been implemented in many formats, in-person and online, in both group and individual settings. Recently, universities have started initiatives to develop and implement MBIs within the university context in order to help students adapt to academic life and promote their mental health (Modrego-Alarcón et al., 2018). Furthermore, previous reviews have reported the effectiveness of MBIs for promoting university students’ mental health (Bamber & Morpeth, 2019; Chiodelli et al., 2020; Daya & Hearn, 2018; Halladay et al., 2019; McConville et al., 2017; O'Driscoll et al., 2017; Reangsing et al., 2022; Yogeswaran & El Morr, 2021). Most of these reviews reported beneficial effects such as reductions in levels of depression, anxiety and stress. However, these reviews focused on specific university student populations, for example medical and health professional students (Daya & Hearn, 2018; McConville et al., 2017; Yogeswaran & El Morr, 2021) and health and social care students (O'Driscoll et al., 2017), included only in-person MBIs (Chiodelli et al., 2020), or focused on certain mental health outcomes such as depression, anxiety and stress (Bamber & Morpeth, 2019; Halladay et al., 2019; Reangsing et al., 2022). This limits the generalisability of findings to broader student populations, different MBI formats and other mental health outcomes.

One systematic review and meta-analysis that was not limited to a particular student population and explored the effectiveness of MBIs for improving mental and physical health and academic performance was conducted by Dawson et al. (2020). They included in-person and self-help MBIs comprising online, written and printed bibliotherapies. The results varied depending on outcomes and comparison groups. For example, there were improvements in levels of distress and well-being, but not in worry, sleep or life satisfaction when MBIs were compared to passive controls. They could not report on academic performance due to insufficient data. Although this was a comprehensive review, they excluded studies that included participants with a health diagnosis, for example clinical depression. Additionally, the findings need updating given that the searches were completed in 2017, and other studies may have been published since then.

To the authors’ knowledge, no review has examined the specific effectiveness of MBIs delivered online among broader university student populations or explored attrition rates for these interventions. Investigating the effect of MBIs on university students’ academic performance is also needed, as the previous review was unable to report on this. Although similar reviews have been conducted, they were not able to address this because they (I) focused on specific university student populations (Daya & Hearn, 2018; McConville et al., 2017; Yogeswaran & El Morr, 2021; O'Driscoll et al., 2017), (II) focused on specific mental health outcomes (Bamber & Morpeth, 2019; Halladay et al., 2019; Reangsing et al., 2022), (III) included only in-person MBIs, included both in-person and self-help MBIs delivered via a range of modes including online and bibliotherapy, or did not clearly report delivery modes (Bamber & Morpeth, 2019; Dawson et al., 2020; Halladay et al., 2019), or (IV) only included randomised controlled trials and excluded studies based on some participant characteristics (Dawson et al., 2020). Furthermore, findings need updating as literature database searches were completed a few years ago (e.g. 2017 in the case of Dawson et al., 2020). There has been an increasing use of technology in providing health care and a growing interest in MBIs in recent years (Chiodelli et al., 2020; Plaza et al., 2013), and so more studies may have been published since then.

Consequently, this review aimed to examine the effectiveness of online MBIs on university students’ mental health and academic performance and to explore the attrition rate of online MBIs among this population. This may facilitate students’ access to psychological support and may inform universities and policymakers about the provision of effective and efficient well-being services.

Method

Eligibility Criteria

The inclusion criteria were (1) randomised controlled trials (RCTs) and non-randomised controlled trials (non-randomised CTs), including unpublished studies to reduce publication bias; (2) part-time or full-time university students aged ≥ 18 years old who were working towards any university-based undergraduate or postgraduate degree; (3) mental health outcomes (i.e. any outcomes related to mental or psychological health such as depression or well-being) or a measure of academic performance (i.e. changes in academic grades); (4) any MBIs, with mindfulness defined as paying deliberate attention to and being aware of one’s experiences in the present moment non-judgmentally (Kabat-Zinn, 2012); (5) MBIs delivered online via any digital format; and (6) online MBIs with or without facilitators, as long as there was no in-person communication.

Studies were excluded if (1) they only explored behavioural outcomes (e.g. eating, coping strategies, sleep-related outcomes), cognitive outcomes (e.g. attention, reasoning) or trait outcomes (e.g. mindfulness, perfectionism) when none of the relevant outcomes was identified; (2) MBIs were delivered in-person, via offline bibliotherapy, via a blended format (i.e. in-person and online), or were delivered via an online mindfulness practice during an in-person session; (3) mindfulness did not form the majority of the intervention; (4) the online MBI included components of other psychological treatments (e.g. muscle relaxation training, behavioural activation); (5) online MBIs were delivered in experimental settings (e.g. listening to audio files of MBIs in laboratory-based settings); (6) non-English studies; and (7) they had a small sample size (i.e. ≤ 5 participants per arm) (Lin, 2018).

Information Sources and Search Strategy

Four electronic databases (PubMed, Web of Science, PsycInfo and Embase) were searched separately from date of inception to 25/11/2021. Search terms were developed based on key concepts relating to mindfulness, online interventions and university students (see Appendix A; Online Resource). There were no specific search limitations or restrictions. The reference lists of included studies, relevant reviews and studies were searched manually by one researcher (DA).

Selection Process and Data Collection

After removal of duplicates, titles and abstracts were screened by two researchers (DA and KC) independently and blindly. Full-text articles were retrieved for potentially relevant studies and assessed against inclusion criteria by two researchers (DA and KC) independently and blindly. A standardised data extraction form was created, and data were extracted in relation to characteristics of studies, participants, interventions, outcomes and results (see Appendix A, Online Resource). Outcome data including mean, standard deviation and number of participants in each arm at pre-intervention, post-intervention and follow-up were also extracted. If necessary, authors were contacted to provide clarification about queries or obtain missing data. Data extraction was conducted by two researchers (DA and KC) independently and blindly. Disagreements were resolved via discussion with two other researchers (RG and CM).

Narrative Synthesis

A narrative synthesis was used for outcomes that were not entered into meta-analyses due to insufficient data.

Meta-analyses

RevMan version 5.4 software was used to conduct meta-analyses. Random effects meta-analyses using a DerSimonian and Laird estimator based on inverse variance weights were conducted as studies were anticipated to be heterogeneous in terms of participant and intervention characteristics (Boland et al., 2017; Cochrane Collaboration, 2020).
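
For readers unfamiliar with this estimator, the following is a minimal Python sketch of DerSimonian and Laird random-effects pooling with inverse variance weights. It is illustrative only: the analyses in this review were run in RevMan, and the function name and input values here are hypothetical.

```python
import math


def dersimonian_laird(effects, variances):
    """Pool per-study effects with a DerSimonian-Laird random-effects model.

    effects   : per-study effect estimates (e.g. SMDs)
    variances : per-study sampling variances (squared standard errors)
    Returns (pooled effect, (lower, upper) 95% CI, tau^2).
    """
    k = len(effects)
    # Fixed-effect inverse variance weights and pooled estimate.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    # Cochran's Q: weighted squared deviations from the fixed-effect estimate.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    # Method-of-moments estimate of between-study variance, truncated at zero.
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0
    # Random-effects weights add tau^2 to each study's variance.
    w_star = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    return pooled, (pooled - 1.96 * se, pooled + 1.96 * se), tau2


# Hypothetical SMDs and variances for three studies (not data from this review).
pooled, ci, tau2 = dersimonian_laird([-0.50, -0.30, -0.45], [0.04, 0.05, 0.02])
print(f"pooled SMD = {pooled:.2f}, 95% CI = {ci[0]:.2f}, {ci[1]:.2f}")
```

When the estimated between-study variance is zero, the random-effects weights reduce to the fixed-effect inverse variance weights.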

Standardised mean differences (SMDs) were calculated within RevMan, with a 95% confidence interval for continuous data, as studies measured outcomes using different validated self-reported scales (Boland et al., 2017). SMDs were calculated using means, standard deviations (or standard errors transformed into standard deviations) and number of participants for each outcome, in each arm, at post-intervention and follow-up timepoints. Studies with insufficient outcome data were not included in meta-analyses. Pooled SMDs were interpreted using standard convention: small (0.2), medium (0.5) and large (0.8) (Davies et al., 2014; Hofmann et al., 2010).
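
As a sketch of the calculation described above, the Python code below converts a standard error to a standard deviation and computes an SMD with a small-sample correction (Hedges' adjusted g). We assume this is the form RevMan applies; the exact variance formula used by the software should be checked against its documentation. Function names and input values are hypothetical.

```python
import math


def sd_from_se(se, n):
    """Convert a standard error of the mean into a standard deviation."""
    return se * math.sqrt(n)


def hedges_g(mean_1, sd_1, n_1, mean_2, sd_2, n_2):
    """Standardised mean difference with small-sample correction.

    Arm 1 is the intervention and arm 2 the comparator, so a negative value
    means a lower score in the intervention arm.
    Returns (g, standard error of g).
    """
    n_total = n_1 + n_2
    # Pooled standard deviation across the two arms.
    pooled_sd = math.sqrt(
        ((n_1 - 1) * sd_1 ** 2 + (n_2 - 1) * sd_2 ** 2) / (n_total - 2)
    )
    # Small-sample correction factor.
    correction = 1.0 - 3.0 / (4.0 * n_total - 9.0)
    g = (mean_1 - mean_2) / pooled_sd * correction
    # Approximate standard error (assumed form; see lead-in).
    se = math.sqrt(n_total / (n_1 * n_2) + g ** 2 / (2.0 * (n_total - 3.94)))
    return g, se


# Hypothetical post-intervention depression scores (not data from this review).
g, se = hedges_g(10.0, 4.0, 20, 12.0, 4.0, 20)
print(f"SMD = {g:.2f}, 95% CI = {g - 1.96 * se:.2f}, {g + 1.96 * se:.2f}")
```

The squared standard error returned here is the sampling variance that an inverse variance meta-analysis would use as the study's weight denominator.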

Mantel–Haenszel odds ratios with a 95% confidence interval were calculated for dichotomous data (Davies et al., 2014). That is, outcome data related to attrition rate (defined as the number of dropouts after randomisation at post-intervention) were extracted in each arm and submitted into separate meta-analyses for different comparison groups, as highlighted below.
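
To illustrate the pooling of dropout counts, the Python sketch below computes a Mantel–Haenszel pooled odds ratio from per-study 2 × 2 tables. It returns the point estimate only and omits the confidence interval; the function name and counts are hypothetical and are not data from the included studies.

```python
def mantel_haenszel_or(studies):
    """Mantel-Haenszel pooled odds ratio for dropout.

    studies : iterable of (dropouts_mbi, n_mbi, dropouts_control, n_control)
    An odds ratio above 1 means higher odds of dropout in the MBI arm.
    """
    numerator = 0.0
    denominator = 0.0
    for dropouts_mbi, n_mbi, dropouts_ctrl, n_ctrl in studies:
        completers_mbi = n_mbi - dropouts_mbi
        completers_ctrl = n_ctrl - dropouts_ctrl
        n_total = n_mbi + n_ctrl
        # Each study contributes a*d/n to the numerator and b*c/n to the denominator.
        numerator += dropouts_mbi * completers_ctrl / n_total
        denominator += completers_mbi * dropouts_ctrl / n_total
    if denominator == 0:
        raise ValueError("pooled odds ratio is undefined for these counts")
    return numerator / denominator


# Hypothetical dropout counts for two studies.
example = [(10, 20, 5, 20), (12, 40, 8, 40)]
print(f"Mantel-Haenszel OR = {mantel_haenszel_or(example):.2f}")
```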

Statistical heterogeneity across studies was assessed using the chi-square test and the degree of heterogeneity was measured using the I² statistic. Values of ≤ 30%, 31–49% and ≥ 50% were considered to reflect low, moderate and substantial heterogeneity, respectively (Higgins et al., 2019). Funnel plots (i.e. visual inspection for asymmetry) were used to assess publication bias when there were ≥ 10 studies, as previously recommended (Higgins et al., 2019).
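
The relationship between the chi-square (Cochran's Q) statistic and I², together with the thresholds above, can be sketched in Python as follows. This is an illustration only, with hypothetical function names and inputs; values falling between 30% and 31% are treated as moderate here, which is our assumption.

```python
def i_squared(effects, variances):
    """Return (Cochran's Q, I^2 as a percentage) for a set of study effects."""
    weights = [1.0 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    if q <= 0:
        return q, 0.0
    # I^2 is the share of total variation beyond what chance alone would give.
    return q, max(0.0, (q - df) / q) * 100.0


def classify_heterogeneity(i2_percent):
    """Apply the review's thresholds for low, moderate and substantial."""
    if i2_percent <= 30:
        return "low"
    if i2_percent < 50:
        return "moderate"
    return "substantial"


# Hypothetical SMDs and variances for four studies (not data from this review).
q, i2 = i_squared([-0.60, -0.10, -0.45, 0.05], [0.03, 0.04, 0.02, 0.05])
print(f"Q = {q:.2f}, I2 = {i2:.0f}% ({classify_heterogeneity(i2)})")
```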

Data were entered into separate meta-analyses for different comparison groups: (1) intervention vs. non-active controls (e.g. waiting list group, treatment-as-usual), (2) intervention vs. active controls (defined as any placebo comparator that controls for non-specific therapeutic effects such as social support and attention, e.g. a discussion group or psychoeducation) and (3) intervention vs. other treatments. Separate meta-analyses were conducted for different outcomes (e.g. depression, stress and anxiety). Separate meta-analyses were also conducted for different assessment timepoints: (1) post-intervention, (2) ‘shorter follow-up’ (defined here as ≤ 8 weeks) and (3) ‘longer follow-up’ (defined here as > 8 weeks).

Data were entered into meta-analyses when reported by at least two studies at the same assessment timepoint (Cochrane Consumers & Communication Review Group, 2016; Valentine et al., 2010). As some studies reported multiple types of analyses (e.g. intention-to-treat vs. completer analyses), used multiple measures to assess the same facet of mental health, included multiple follow-up timepoints or assessed multiple types of MBIs, data were selected for inclusion based on rules outlined in Appendix B; Online Resource to avoid data duplication and enhance homogeneity.

Risk of Bias Assessment

The Revised Cochrane Risk-of-Bias Tool for Randomised Trials (ROB-2) was used to evaluate the risk of bias in the RCTs (Sterne et al., 2019). It assesses five domains: randomisation process, deviations from the intended intervention, missing outcome data, outcome measurement and selection of the reported result. Risk of bias was rated as ‘low’, ‘some concerns’ or ‘high-risk’. The Cochrane Risk-of-Bias in Non-randomised Studies of Interventions (ROBINS-I) was used for non-randomised CTs (Sterne et al., 2016). The assessment domains were risk of bias due to confounding, participants’ selection, intervention classification, deviations from the intended intervention, missing outcome data, outcome measurement and selection of the reported result. Risk of bias was rated as ‘low’, ‘moderate’, ‘serious’, ‘critical’ or ‘no information’. Two researchers (DA and KC) evaluated the risk of bias independently and blindly and any disagreements were resolved via discussion.

Results

Study Selection

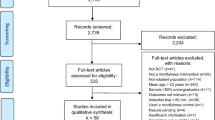

Figure 1 presents the PRISMA flowchart. The search identified 1637 records, with 26 studies being included in the review after screening for inclusion criteria.

PRISMA flowchart for studies selection. From Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 2021;372:n71. https://doi.org/10.1136/bmj.n71

Study Characteristics

Study characteristics are presented in Tables 1–5, Appendix C; Online Resource. Twenty-three studies were RCTs, and three studies were non-randomised CTs. Out of these studies, one was an unpublished PhD thesis (Rhine, 2020). Most studies (76.9%) were published in the past 5 years (≥ 2018). Studies were conducted in eleven different countries in which the majority (84.6%) were from Western countries. All studies recruited participants from non-clinical university settings, except for one study that recruited participants from the waiting list of a university counselling centre (Levin et al., 2020a).

Sample sizes ranged from 23 (Levin et al., 2020a) to 427 (Chung et al., 2021), with a total of 3364 participants included across all studies. Half of the studies conducted an a priori power analysis to determine the sample size, with 4 failing to recruit the targeted sample size. Participants ranged in age from 18 to 57 years old, with a mean age of 22.6 years old across studies reporting these data. Participants were predominantly female (64–100% of the total sample) in the majority of studies (76.9%). Ethnicity was not reported in 12 studies (46.1%). In those studies that did report this, participants self-identified as white (20–94%) in 11 out of 14 studies, while participants self-identified as Chinese or Malay in the remaining three studies.

Students were recruited from different university departments and courses (e.g. psychology, social care, mixed health and non-health courses) in 22 studies (84.6%), and medical and health-related courses in 4 studies (15.3%). Six studies involved students with mental health difficulties including self-reported stress and self-criticism (Andersson et al., 2021), exposure to traumatic events and meeting self-reported criteria of complex/post-traumatic stress disorder (Dumarkaite et al., 2021), exposure to a traumatic event and unwanted memories (Knabb et al., 2021), being on the waiting list of the counselling and psychological services centre at the university (Levin et al., 2020a), and elevated levels of anxiety and depression symptoms (Rodriguez et al., 2021; Sun et al., 2021). Twelve studies (46.1%) recruited undergraduate students, 6 studies (23%) included a mixed sample of undergraduates and postgraduates, and 8 studies (30.7%) did not report students’ educational level.

No academic-related outcomes were identified in any study. All reported outcomes were related to mental health, which varied from psychological distress to psychological well-being. The majority of studies (20/26, 76.9%) also measured mindfulness. Six studies (23%) specified an inclusion criterion in relation to mental health: all of these relied on self-report questionnaires rather than clinical diagnosis. All studies reported findings at post-intervention. However, 18 studies (69.2%) had no follow-up, 7 studies (26.9%) had a short follow-up (≤ 8 weeks) and only one study had a long follow-up (6 months) (Phang et al., 2015).

Intervention Characteristics

Studies varied in terms of the content and intensity of online MBIs, ranging from brief mindfulness breathing techniques to courses based on mindfulness-based stress reduction and mindfulness-based cognitive therapy programmes. Interventions were most commonly delivered via an external platform (e.g. website, Zoom; 38.4%), followed by a software application (30.7%).

Intervention duration ranged from 10 days (Flett et al., 2019) to 11 weeks (Lyzwinski et al., 2019). One study did not report the intervention duration (Rhine, 2020). Twenty-one studies (80.7%) were two-armed trials that compared online MBIs to non-active controls (46.1%), active controls (19.2%) or other treatments (15.3%). The remaining studies were three-armed trials in which three studies (11.5%) had two mindfulness intervention arms vs. non-active controls or active controls (Ahmad et al., 2020; Flett et al., 2019; Nguyen-Feng et al., 2017) and two studies (7.6%) had one intervention arm and two control arms (active controls or other treatments and non-active controls) (Andersson et al., 2021; Messer et al., 2016). Non-active controls consisted solely of wait list controls. Active controls consisted of expressive writing, psychoeducation, social support or cognitive training. Other treatments included cognitive behavioural therapy, acceptance-based therapy, mindfulness in conjunction with other elements or a brief version, meditation, compassion or relaxation. All comparison arms were delivered online.

Several studies (65.3%) incorporated online interaction forums which allowed communication between researchers, psychologists or other trained professionals and participants during the implementation of online MBIs. Methods of interaction with research participants varied. Some studies (26.9%) had live virtual communication (e.g. Zoom classes) and real-time communication such as peer-to-peer support and group-based board discussion. Several studies sent e-mails or messages as reminders when modules were available (30.7%), to encourage participants to practise and complete tasks (11.5%), or when participants missed practice training (3.8%). One study (3.8%) encouraged participants to turn on the application notification to serve as a reminder. A third of studies (30.7%) did not incorporate any form of interaction during the intervention.

Treatment fidelity (i.e. assessing participants’ basic knowledge and attention to the contents of the intervention) was measured in one study, but the findings were not reported (Knabb et al., 2021). Participants’ attendance and completion of the intervention and its practice were reported by 21 studies (80.7%). Measures of engagement varied across studies, from measuring session completion to mindfulness exercises completion (see Table 5; Appendix C; Online Resource).

Risk of Bias

Figures 2 and 3 show the risk of bias in included studies: risk of bias was rated as moderate to high in all studies, with no study receiving a rating of low risk of bias. All studies measured outcomes subjectively through self-reported questionnaires, leading to a rating of ‘some concerns’ for bias in measurement of outcomes. The pre-specified study protocol was not reported in most RCT studies (78.2%), which led to a rating of ‘some concerns’ for the reporting bias. Two studies had a ‘high’ risk of bias due to incomplete reporting (Ritvo et al., 2021; Rodriguez et al., 2021). Most RCTs used appropriate randomisation methods (86.9%). However, blinding was not conducted in any RCT, except for one study which conducted a single-blind trial (Warnecke et al., 2011).

Narrative Synthesis Results

Six mental health outcomes (quality of life, post-traumatic stress or complex post-traumatic stress symptoms, rumination, trauma-related symptoms, worry, and positive and negative affect) were not entered into meta-analyses due to insufficient data. Quality of life was significantly increased among university students who received a full or partial mindfulness virtual community compared to a waiting list at post-intervention (Ahmad et al., 2020). There was a statistically significant reduction in complex post-traumatic stress disturbances in self-organisation symptoms in students who received the intervention compared to waiting list at post-intervention. However, this reduction was not observed for overall post-traumatic stress symptoms (Dumarkaite et al., 2021).

Inconsistent findings were reported for the effects of MBIs on different types of rumination; cognitive-affective rumination was significantly reduced in students who received the intervention compared to the waiting list at post-intervention (Hosseinzadeh Asl & İl, 2021). However, traumatic-based rumination did not differ between students who received the intervention and those on the waiting list at post-intervention (Knabb et al., 2021).

Furthermore, no statistically significant differences were found when MBIs were compared to control groups in (1) other trauma-related symptoms at post-intervention (Knabb et al., 2021); (2) worry at post-intervention or at 2/4-week follow-up (Nguyen-Feng et al., 2017); and (3) positive and negative affect at post-intervention (Noone & Hogan, 2018).

Meta-analyses Results

A total of 16 meta-analyses were conducted. Five outcomes reported in 21 studies were entered into separate meta-analyses: depression, stress, anxiety, psychological distress and psychological well-being. Table 1 shows meta-analytic findings. Figures are presented in Appendix D; Online Resource.

Depression

As shown in Fig. 1, there was a small statistically significant pooled SMD of − 0.41 (95% CI = − 0.59, − 0.23) in favour of online MBIs, in comparison to non-active controls at post-intervention, with moderate but non-significant heterogeneity in effect sizes being found (I² = 33%). This benefit was not seen when online MBIs were compared to active controls at post-intervention or at short follow-up (pooled SMD − 0.02 [95% CI = − 0.32 to 0.29]; pooled SMD − 0.10 [95% CI = − 0.48 to 0.28], respectively) (Figs. 2 and 3). However, there was evidence of statistically significant, substantial heterogeneity in effect sizes at both timepoints (I² = 67% and 73%, respectively). Similarly, there was no statistically significant benefit seen when online MBIs were compared to other treatments at post-intervention (pooled SMD 0.16 [95% CI = − 0.13 to 0.44]) (Fig. 4). There were not enough studies to permit any other meta-analyses.

Stress

As shown in Fig. 5, there was a small statistically significant pooled SMD of − 0.40 (95% CI = − 0.53, − 0.27) in favour of online MBIs, in comparison to non-active controls at post-intervention. However, this benefit was not seen when online MBIs were compared to active controls at post-intervention or at short follow-up (pooled SMD − 0.16 [95% CI = − 0.63, 0.32]; − 0.11 [95% CI = − 0.34, 0.13], respectively), with statistically significant, substantial heterogeneity in effect sizes being found at post-intervention (I² = 83%) (Figs. 6 and 7). Furthermore, there was a small statistically significant pooled SMD of 0.32 (95% CI = 0.13, 0.52) in favour of other treatments, in comparison to online MBIs at post-intervention (Fig. 8). There were not enough studies to permit any other meta-analyses.

Anxiety

As shown in Fig. 9, there was a small statistically significant pooled SMD of − 0.45 (95% CI = − 0.63, − 0.28) in favour of online MBIs, in comparison to non-active controls at post-intervention. However, this benefit was not seen when online MBIs were compared to active controls at post-intervention or short follow-up (pooled SMD 0.09 [95% CI = − 0.16, 0.33]; pooled SMD − 0.09 [95% CI = − 0.42, 0.25], respectively) (Figs. 10 and 11). Moderate heterogeneity (I² = 43%) and substantial heterogeneity (I² = 65%) in effect sizes were found at post-intervention and follow-up, respectively, though neither was statistically significant. There was no statistically significant benefit seen when online MBIs were compared to other treatments at post-intervention (pooled SMD 0.20 [95% CI = − 0.09 to 0.48]) (Fig. 12). There were not enough studies to permit any other meta-analyses.

Psychological Distress

Psychological distress refers to outcome measures that examined overall psychological distress across a range of conditions. These are outlined in Appendix E; Online Resource. As shown in Fig. 13, there was a medium statistically significant pooled SMD of − 0.54 (95% CI = − 0.85, − 0.23) in favour of online MBIs, in comparison to non-active controls at post-intervention. However, this gain was not observed when online MBIs were compared to other treatments at post-intervention (pooled SMD 0.13 [95% CI = − 0.21, 0.46]) (Fig. 14). There were not enough studies to permit any other meta-analyses.

Psychological Well-being

Psychological well-being comprised different components of well-being including personal, social, environmental, emotional, and cognitive aspects, and life satisfaction (Keyes, 2005; Lukat et al., 2016; McDowell, 2006; Tennant et al., 2007). See Appendix E; Online Resource for measures that were grouped to define this outcome. As shown in Fig. 15, there was a small statistically significant pooled SMD of 0.45 (95% CI = 0.24, 0.65) in favour of online MBIs, in comparison to non-active controls at post-intervention. However, this gain was not observed when online MBIs were compared to other treatments at post-intervention (pooled SMD 0.20 [95% CI = − 0.08, 0.48]) (Fig. 16). There were not enough studies to permit any other meta-analyses.

Attrition Rate

Twenty-three studies were included in 3 meta-analyses (Table 2). In total, 33.8% of participants dropped out of online MBI arms regardless of comparison arms (n = 497/1470). Participants were significantly more likely to drop out of online MBI arms (37.7%) compared to non-active controls (20%) (Fig. 17). Likewise, participants were significantly more likely to drop out of online MBI arms (25.3%) compared to active controls (16.7%) (Fig. 18). However, no significant difference was observed in attrition rate between online MBIs (31.5%) and other treatments (35%), with statistically significant, substantial heterogeneity being observed (I² = 59%) (Fig. 19).

Publication Bias

Funnel plots were used to assess publication bias when there were ≥ 10 studies, as recommended. Visual analysis of funnel plots did not suggest any significant evidence of publication bias for online MBIs vs. non-active controls for stress at post-intervention or for attrition rate when comparing online MBIs vs. non-active controls (Figs. 20 and 21).

Discussion

This systematic review explored the effectiveness of online MBIs on university students’ mental health and academic performance. The studies revealed noticeable growth in this field, as most (76.9%) were published in the past 5 years. All identified outcomes were related to mental health, with none being related to academic performance. When compared with non-active controls, online MBIs significantly reduced levels of depression, stress, anxiety and psychological distress symptoms and increased well-being at post-intervention, with small to medium effect sizes being found.

In contrast, no statistically significant differences were found between online MBIs and active controls for any outcome at post-intervention or at short follow-up. However, moderate to substantial heterogeneity in effect sizes was detected across studies for these outcomes, indicating high variability among them, which limits the conclusions that can be drawn. One reason for this could be the variability in content of the active controls, including expressive writing, stress management psychoeducation, social support and cognitive training. Additionally, potentially beneficial effects of MBIs on health outcomes may have been ‘diluted’ by placebo attention controls that were more akin to alternative treatments, given their previously reported effectiveness, rather than active controls (e.g. expressive writing: Pennebaker & Beall, 1986; stress psychoeducation: Amanvermez et al., 2020). Another possible explanation for the statistically non-significant differences might be that analyses were insufficiently powered to detect meaningful group differences due to the small number of included studies (k = 2–4).

Similarly, no differences were found between online MBIs and other treatments for any outcome at post-intervention, with the exception of stress, whereby other treatments outperformed online MBIs for reducing stress at post-intervention. This might be due to the similarity between the other treatments and online MBIs: the other treatments were either the same MBI combined with stress management skills (Nguyen-Feng et al., 2017); a less comprehensive version of the MBI (e.g. without peer support) (Rodriguez et al., 2021); an intervention that used similar potential mechanisms of change such as compassion (Andersson et al., 2021); or another third-wave therapy such as acceptance and commitment therapy (Levin et al., 2020b). These findings must be interpreted with caution given the small number of studies (k = 2–4) and total participants (N = 138–404) included in analyses: analyses might be insufficiently powered to examine differences between other treatments and MBIs.

The current findings are in line with the broader benefits reported in previous reviews that have examined the effect of MBIs delivered via mixed modes on university students’ levels of depression, stress and anxiety symptoms (Bamber & Morpeth, 2019; Dawson et al., 2020; Daya & Hearn, 2018; Halladay et al., 2019; McConville et al., 2017; Reangsing et al., 2022). This review found no significant differences between online MBIs and active controls in relation to depression, stress and anxiety at post-intervention or at short follow-up, with moderate to high heterogeneity being observed across studies. The finding for depression was consistent with other reviews (Dawson et al., 2020; Halladay et al., 2019). However, previous research has reported mixed findings for stress and anxiety. Dawson et al. (2020) reported a reduction in levels of stress and anxiety at post-intervention, whereas Halladay et al. (2019) did not observe any significant reduction in these two outcomes. These mixed findings might be due to heterogeneity in inclusion criteria and definitions of outcomes, MBIs and active controls. Furthermore, Reangsing et al. (2022) reported an overall significant improvement in depressive symptoms in comparison to controls, unlike this review’s findings. The most likely reason for the discrepancy between this review’s findings and those of Reangsing et al. is that the current review distinguished between non-active and active controls whereas Reangsing et al. did not.

Psychological distress comprised measures of overall psychological distress such as the total score on the Depression, Anxiety and Stress Scale. Overall psychological distress was not examined in other reviews and so no comparisons can be made with previous findings. Regarding well-being outcomes, the results were consistent with Dawson et al.’s (2020) findings on enhancing subjective well-being compared to passive controls at post-intervention.

The current review found that attrition rates were higher in online MBIs compared to non-active and active controls. This might be because non-active controls did not require time and/or effort apart from enrolment, while active controls included tasks that were simple in nature and/or less demanding than online MBIs, such as expressive writing and psychoeducation. However, encouragingly, there were no attrition rate differences between online MBIs and other treatments, which may indicate that attrition reflected an issue common to all active conditions rather than a specific consequence of receiving online MBIs (Davies et al., 2014).

Our findings reflect similar patterns of attrition reported for internet-based psychotherapies compared to non-active controls, but not active controls (Davies et al., 2014). The reason for inconsistent results in attrition rate when online interventions were compared to active controls is unclear, but might be due to the type of intervention itself (i.e. MBIs vs. different types of psychotherapy). Further research is needed before conclusions can be drawn about attrition in online MBIs compared to active controls. Future research should also seek to explore ways of enhancing students’ engagement and adherence in online MBIs, for example by incorporating human interaction through peer support into online MBIs (Rodriguez et al., 2021).

Interestingly, almost half of the studies in this review (12/26) have been included in previous meta-analyses: 8 studies by Reangsing et al. (2022), 6 studies by Halladay et al. (2019), 3 studies by Dawson et al. (2020), 2 studies by McConville et al. (2017) and 1 study by Bamber and Morpeth (2019). Differences in included studies across reviews are mostly due to differences in eligibility criteria and when previous searches were conducted, in addition to an explosion of studies in this area in the past few years. These reviews unanimously agreed on the effectiveness of MBIs for students’ mental health despite differences in meta-analytic methods used. Nonetheless, 14/26 studies included in this review have not been explored in previous reviews, which may also have contributed to the discrepancy between the current review’s findings and those of others (e.g. Reangsing et al., 2022).

A final issue that needs to be addressed is that of clinical significance vs. statistical significance. It is important to determine whether functional health outcomes are not just statistically significant, but also clinically meaningful (e.g. Ferguson et al., 2002). Halladay et al. (2019) transformed meta-analytic effect estimates of MBIs back into clinical scores and found that although these estimates were statistically significant for certain outcomes such as depression, anxiety and perceived stress, they might not reflect clinically relevant changes, therefore indicating clinical uncertainty. Given that online MBIs in this review were beneficial for depression, stress, anxiety, psychological distress and psychological well-being only at post-intervention and only when compared to non-active controls, much uncertainty remains as to whether these effects were clinically meaningful. Few studies (19.2%) included in this review reported on clinical significance. Therefore, future studies should examine minimum clinically important differences or reliable change indices when examining the effects of online MBIs on mental health outcomes.
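
As an illustration of the reliable change index mentioned above, the following is a minimal sketch using the commonly cited Jacobson and Truax formulation, in which the pre-post difference is divided by the standard error of the difference. The function names, the example scores, the baseline standard deviation and the reliability coefficient are all hypothetical and are not taken from any study in this review.

```python
import math


def reliable_change_index(pre, post, sd_baseline, reliability):
    """Return the reliable change index (RCI) for one participant.

    Assumes the Jacobson-Truax formulation:
      SEM    = SD * sqrt(1 - reliability)
      S_diff = sqrt(2) * SEM
      RCI    = (post - pre) / S_diff
    """
    if not 0.0 <= reliability < 1.0:
        raise ValueError("reliability must be in [0, 1)")
    sem = sd_baseline * math.sqrt(1.0 - reliability)  # standard error of measurement
    s_diff = math.sqrt(2.0) * sem                     # standard error of the difference
    return (post - pre) / s_diff


def is_reliable_change(rci, threshold=1.96):
    """Flag change unlikely to be measurement error alone (two-sided 5% level)."""
    return abs(rci) > threshold


# Hypothetical example: a symptom score falls from 20 to 14 on a scale
# with baseline SD = 6 and test-retest reliability = 0.84.
example_rci = reliable_change_index(pre=20, post=14, sd_baseline=6, reliability=0.84)
print(round(example_rci, 2), is_reliable_change(example_rci))
```

In this hypothetical case a six-point drop does not exceed the 1.96 threshold, which shows how a numerically visible improvement can still fall within measurement error.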

Strengths and Limitations

This is a comprehensive pre-registered systematic review and meta-analysis that evaluated the effectiveness of online MBIs for improving mental health and academic performance in undergraduate and postgraduate students and explored the attrition rate of online MBIs among this population. However, there are a number of limitations of the review. The risk of bias was rated as moderate to high in all studies, leading to possible methodological flaws that might affect the findings of included studies (Furuya-Kanamori et al., 2021). As a result, the findings of this review may be partially explained by biases due to the poor methodological quality of included studies. It was not possible to conduct sensitivity analyses to examine the effects of removing high-risk studies as all included studies were rated as having a moderate to high risk of bias.

A priori power calculations were not performed by half of the included studies, and among the studies that did conduct them, a few (30.7%) did not reach the targeted sample size. Performing a priori power calculations is important to determine the sample size needed to detect meaningful effects (Kyonka, 2019), and when a trial has an inadequate sample size, it may lack the power to detect an intervention’s effects (van Hoorn et al., 2017). Pooled sample sizes were adequate when comparing online MBIs with non-active controls, indicating robust findings. In contrast, pooled sample sizes were much smaller when online MBIs were compared to active controls and other treatments. This made it difficult to draw robust conclusions regarding online MBIs compared to active controls or other treatments.
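
To make the idea of an a priori power calculation concrete, the sketch below estimates the sample size per arm for a two-arm trial comparing means, using the standard normal approximation n = 2(z₁₋α/₂ + z₁₋β)² / d². It is illustrative only: the function name, the effect sizes and the default alpha and power are assumptions, and an exact calculation based on the t distribution would give slightly larger numbers.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size_d, alpha=0.05, power=0.80):
    """Approximate participants needed per arm for a two-sided, two-group comparison.

    Uses the normal approximation n = 2 * (z_alpha + z_beta)^2 / d^2,
    where d is the standardised mean difference (Cohen's d).
    """
    if effect_size_d <= 0:
        raise ValueError("effect_size_d must be positive")
    z_alpha = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # critical value, two-sided test
    z_beta = NormalDist().inv_cdf(power)               # quantile for the desired power
    return math.ceil(2.0 * (z_alpha + z_beta) ** 2 / effect_size_d ** 2)


# Hypothetical planning values: a medium (d = 0.5) and a small (d = 0.2) effect.
print(n_per_group(0.5))
print(n_per_group(0.2))
```

The sharp rise in required sample size as the expected effect shrinks is one reason small trials comparing two active conditions may be underpowered.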

There was a lack of information on the effect of online MBIs at both short- and long-term follow-up. Most studies did not conduct follow-up assessments and focused only on the period immediately after the intervention, or they did not report descriptive follow-up data. Therefore, it is unclear whether the effects of online MBIs are maintained beyond post-intervention.

This review included all university populations; however, the generalisability of findings is limited by the fact that most participants were female, and most studies were conducted in Western countries. This means that the results of the review may not generalise to broader populations.

There was variability in content of the active controls, with some of the placebo attention controls containing non-specific therapeutic components (e.g. expressive writing and stress psychoeducation). Although it would have been informative to analyse the effectiveness of online MBIs in relation to different types of active controls, it was not possible to conduct this analysis due to the limited number of studies, which constrained the interpretation of findings.

No studies reported academic outcomes; therefore, this review could not evaluate the effect of online MBIs on academic performance. Additionally, no pre-specified subgroup analyses could be conducted for undergraduates versus postgraduates, intervention duration or for different types of MBIs due to insufficient data.

Studies that did not report descriptive results were not included in meta-analyses and non-English publications were excluded; therefore, this review might have missed relevant studies. Finally, this review conducted random-effects meta-analyses when there were two or more studies, following previous guidance (Cochrane Consumers & Communication Review Group, 2016; Valentine et al., 2010). However, others have suggested that a minimum of five studies is required to conduct such analyses (Tufanaru et al., 2015).
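
For readers unfamiliar with random-effects pooling, the following is a minimal sketch of one widely used estimator, the DerSimonian-Laird method. It is offered as an illustration under stated assumptions and is not a description of the exact procedure used in this review; the function name and the two-study input values are hypothetical.

```python
import math


def dersimonian_laird(effects, variances):
    """Pool study effect sizes with a DerSimonian-Laird random-effects model.

    effects   : per-study effect sizes (e.g. standardised mean differences)
    variances : per-study sampling variances
    Returns (pooled_effect, standard_error, tau_squared, i_squared_percent).
    """
    k = len(effects)
    if k < 2 or k != len(variances):
        raise ValueError("need at least two studies with matching variances")

    # Fixed-effect (inverse-variance) weights and pooled estimate.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w

    # Cochran's Q and the method-of-moments between-study variance.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0

    # Random-effects weights add tau^2 to each study's variance.
    w_star = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))

    # I^2: share of total variability attributed to heterogeneity.
    i2 = max(0.0, (q - (k - 1)) / q) * 100.0 if q > 0 else 0.0
    return pooled, se, tau2, i2


# Hypothetical two-study example with discrepant effects.
print(dersimonian_laird([0.0, 1.0], [0.1, 0.1]))
```

With only two studies the between-study variance is estimated from a single degree of freedom, which illustrates why some authors recommend a larger minimum number of studies for such analyses.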

Future Implications

Future research should measure outcomes at follow-up timepoints, to explore the longer-term effects of online MBIs. Also, future research should explore the effect of online MBIs on university students’ academic performance. Recruiting more diverse participants in terms of gender and examining online MBIs in non-Western countries are also needed. Additionally, further adequately powered research is needed to examine the effect of online MBIs in comparison to active controls and other treatments, to draw more robust conclusions about the effect of online MBIs in comparison to counterpart interventions. Future studies should also investigate whether online MBIs can alleviate distress levels and enhance well-being among university students meeting clinical thresholds at baseline. Regarding attrition, future systematic reviews should examine whether attrition rates differ when online interventions are interactive, such as including reminders and virtual discussions, compared to online interventions without interaction. This was not addressed in this review due to insufficient data.

Conclusion

This review aimed to explore the effect of online MBIs on university students’ mental health and academic performance and to explore the attrition rate of online MBIs among this population. Twenty-six studies were identified. Online MBIs appear to be better alternatives than no intervention for improving students’ mental health and well-being at post-intervention, but not when compared to active controls and other treatments. However, these findings should be interpreted with caution due to moderate to high risk of bias among the included studies, small sample sizes and heterogeneity across studies in some analyses and participant characteristics. These findings and limitations restrict the recommendations that can be made with respect to clinical practice at this time, and uncertainty remains about the clinically meaningful effects of online MBIs on university students’ mental health.

Registration and Protocol

This review follows the preferred reporting items for systematic reviews and meta-analyses (PRISMA) (Page et al., 2021). See Appendix F; Online Resource for the PRISMA checklist. The protocol for this review was pre-registered with PROSPERO (CRD42021296717). Several amendments were undertaken (see Appendix G; Online Resource).

Data Availability

The data that support the findings are available in the Online Resource of this study.

References

Ahmad, F., El Morr, C., Ritvo, P., Othman, N., & Moineddin, R. (2020). An eight-week, web-based mindfulness virtual community intervention for students’ mental health: Randomized controlled trial. JMIR Mental Health, 7(2), e15520.

Aldiabat, K. M., Matani, N. A., & Navenec, C. L. (2014). Mental health among undergraduate university students: A background paper for administrators, educators and healthcare providers. Universal Journal of Public Health, 2(8), 209–214.

Amanvermez, Y., Rahmadiana, M., Karyotaki, E., de Wit, L., Ebert, D. D., Kessler, R. C., & Cuijpers, P. (2020). Stress management interventions for college students: A systematic review and meta-analysis. Clinical Psychology: Science and Practice.

Andersson, C., Bergsten, K. L., Lilliengren, P., Norbäck, K., Rask, K., Einhorn, S., & Osika, W. (2021). The effectiveness of smartphone compassion training on stress among Swedish university students: A pilot randomized trial. Journal of Clinical Psychology, 77(4), 927–945.

Auerbach, R. P., Alonso, J., Axinn, W. G., Cuijpers, P., Ebert, D. D., Green, J. G., Hwang, I., Kessler, R. C., Liu, H., Mortier, P., Nock, M. K., Pinder-Amaker, S., Sampson, N. A., Aguilar-Gaxiola, S., Al-Hamzawi, A., Andrade, L. H., Benjet, C., Caldas-de-Almeida, J. M., Demyttenaere, K., … Bruffaerts, R. (2016). Mental disorders among college students in the World Health Organization World Mental Health Surveys. Psychological Medicine, 46(14), 2955–2970.

Bamber, M. D., & Morpeth, E. (2019). Effects of mindfulness meditation on college student anxiety: A meta-analysis. Mindfulness, 10(2), 203–214.

Boland, A., Cherry, G., & Dickson, R. (2017). Doing a systematic review: A student’s guide.

Cavanagh, K., Strauss, C., Cicconi, F., Griffiths, N., Wyper, A., & Jones, F. (2013). A randomised controlled trial of a brief online mindfulness-based intervention. Behaviour Research and Therapy, 51(9), 573–578.

Chiodelli, R., Mello, L. T. N. de, Jesus, S. N. de, Beneton, E. R., Russel, T., & Andretta, I. (2020). Mindfulness-based interventions in undergraduate students: A systematic review. Journal of American College Health, 1–10.

Chung, J., Mundy, M. E., Hunt, I., Coxon, A., Dyer, K. R., & McKenzie, S. (2021). An evaluation of an online brief mindfulness-based intervention in higher education: A pilot conducted at an Australian University and a British University. Frontiers in Psychology, 12.

Cochrane Consumers and Communication Review Group. (2016). Cochrane Consumers and Communication Group: meta-analysis. Retrieved on July 4 2022, from http://cccrg.cochrane.org

Cochrane Collaboration. (2020). Review Manager (RevMan) [Computer program] (5.4). The Cochrane Collaboration.

Davies, E. B., Morriss, R., & Glazebrook, C. (2014). Computer-delivered and web-based interventions to improve depression, anxiety, and psychological well-being of university students: A systematic review and meta-analysis. Journal of Medical Internet Research, 16(5), e3142.

Dawson, A. F., Brown, W. W., Anderson, J., Datta, B., Donald, J. N., Hong, K., Allan, S., Mole, T. B., Jones, P. B., & Galante, J. (2020). Mindfulness-based interventions for university students: A systematic review and meta-analysis of randomised controlled trials. Applied Psychology. Health and Well-Being, 12(2), 384–410.

Daya, Z., & Hearn, J. H. (2018). Mindfulness interventions in medical education: A systematic review of their impact on medical student stress, depression, fatigue and burnout. Medical Teacher, 40(2), 146–153.

Dumarkaite, A., Truskauskaite-Kuneviciene, I., Andersson, G., Mingaudaite, J., & Kazlauskas, E. (2021). Effects of mindfulness-based internet intervention on ICD-11 posttraumatic stress disorder and complex posttraumatic stress disorder symptoms: A pilot randomized controlled trial. Mindfulness, 1–13.

El Morr, C., Ritvo, P., Ahmad, F., & Moineddin, R. (2020). Effectiveness of an 8-week web-based mindfulness virtual community intervention for university students on symptoms of stress, anxiety, and depression: Randomized controlled trial. JMIR Mental Health, 7(7), e18595.

Ferguson, R. J., Robinson, A. B., & Splaine, M. (2002). Use of the reliable change index to evaluate clinical significance in SF-36 outcomes. Quality of Life Research, 11(6), 509–516.

Flett, J. A. M., Hayne, H., Riordan, B. C., Thompson, L. M., & Conner, T. S. (2019). Mobile mindfulness meditation: A randomised controlled trial of the effect of two popular apps on mental health. Mindfulness, 10(5), 863–876.

Furuya-Kanamori, L., Xu, C., Hasan, S. S., & Doi, S. A. (2021). Quality versus risk-of-bias assessment in clinical research. Journal of Clinical Epidemiology, 129, 172–175.

Gazzaz, Z. J., Baig, M., al Alhendi, B. S. M., al Suliman, M. M. O., al Alhendi, A. S., Al-Grad, M. S. H., & Qurayshah, M. A. A. (2018). Perceived stress, reasons for and sources of stress among medical students at Rabigh Medical College, King Abdulaziz University, Jeddah, Saudi Arabia. BMC Medical Education, 18(1), 29.

Greer, C. (2015). An online mindfulness intervention to reduce stress and anxiety among college students. University of Minnesota.

Halladay, J. E., Dawdy, J. L., McNamara, I. F., Chen, A. J., Vitoroulis, I., McInnes, N., & Munn, C. (2019). Mindfulness for the mental health and well-being of post-secondary students: A systematic review and meta-analysis. Mindfulness, 10(3), 397–414.

Higgins, J. P. T., Thomas, J., Chandler, J., Cumpston, M., Li, T., Page, M. J., & Welch, V. A. (2019). Cochrane handbook for systematic reviews of interventions. John Wiley & Sons.

Hofmann, S. G., Sawyer, A. T., Witt, A. A., & Oh, D. (2010). The effect of mindfulness-based therapy on anxiety and depression: A meta-analytic review. Journal of Consulting and Clinical Psychology, 78(2), 169–183.

Hosseinzadeh Asl, N. R., & İl, S. (2021). The effectiveness of a brief mindfulness-based program for social work students in two separate modules: Traditional and online. Journal of Evidence-Based Social Work, 1–22.

Huang, J., Nigatu, Y. T., Smail-Crevier, R., Zhang, X., & Wang, J. (2018). Interventions for common mental health problems among university and college students: A systematic review and meta-analysis of randomized controlled trials. Journal of Psychiatric Research, 107, 1–10.

Kabat-Zinn, J. (2012). Mindfulness for beginners: Reclaiming the present moment—and your life. Sounds True.

Keyes, C. L. M. (2005). Mental illness and/or mental health? Investigating axioms of the complete state model of health. Journal of Consulting and Clinical Psychology, 73(3), 539.

Knabb, J. J., Vazquez, V. E., Pate, R. A., Garzon, F. L., Wang, K. T., Edison-Riley, D., Slick, A. R., Smith, R. R., & Weber, S. E. (2021). Christian meditation for trauma-based rumination: A two-part study examining the effects of an internet-based 4-week program. Spirituality in Clinical Practice.

Kvillemo, P., Brandberg, Y., & Bränström, R. (2016). Feasibility and outcomes of an internet-based mindfulness training program: A pilot randomized controlled trial. JMIR Mental Health, 3(3), e33.

Kyonka, E. G. E. (2019). Tutorial: Small-N power analysis. Perspectives on Behavior Science, 42(1), 133–152.

Levin, M. E., An, W., Davis, C. H., & Twohig, M. P. (2020b). Evaluating acceptance and commitment therapy and mindfulness-based stress reduction self-help books for college student mental health. Mindfulness, 11(5), 1275–1285.

Levin, M. E., Hicks, E. T., & Krafft, J. (2020a). Pilot evaluation of the stop, breathe & think mindfulness app for student clients on a college counseling center waitlist. Journal of American College Health, 70(1), 165–173.

Lin, L. (2018). Bias caused by sampling error in meta-analysis with small sample sizes. PLoS ONE, 13(9), e0204056.

Lukat, J., Margraf, J., Lutz, R., van der Veld, W. M., & Becker, E. S. (2016). Psychometric properties of the positive mental health scale (PMH-scale). BMC Psychology, 4(1), 1–14.

Lyzwinski, L. N., Caffery, L., Bambling, M., & Edirippulige, S. (2019). The mindfulness app trial for weight, weight-related behaviors, and stress in university students: Randomized controlled trial. JMIR MHealth and UHealth, 7(4), e12210.

McConville, J., McAleer, R., & Hahne, A. (2017). Mindfulness training for health profession students—the effect of mindfulness training on psychological well-being, learning and clinical performance of health professional students: A systematic review of randomized and non-randomized controlled trials. Explore, 13(1), 26–45.

McDowell, I. (2006). Measuring health: A guide to rating scales and questionnaires. Oxford University Press.

Messer, D., Horan, J. J., Turner, W., & Weber, W. (2016). The effects of internet-delivered mindfulness training on stress, coping, and mindfulness in university students. AERA Open, 2(1), 2332858415625188.

Modrego-Alarcón, M., García Campayo, J., Benito, E., Delgado Suárez, I., Navarro Gil, M., López del Hoyo, Y., & Herrera Mercadal, P. (2018). Mindfulness at universities: An increasingly present reality. Educational Research Applications, ERCA-149.

Nguyen-Feng, V. N., Greer, C. S., & Frazier, P. (2017). Using online interventions to deliver college student mental health resources: Evidence from randomized clinical trials. Psychological Services, 14(4), 481–489.

Noone, C., & Hogan, M. J. (2018). A randomised active-controlled trial to examine the effects of an online mindfulness intervention on executive control, critical thinking and key thinking dispositions in a university student sample. BMC Psychology, 6(1), 13.

O’Driscoll, M., Byrne, S., Byrne, H., Lambert, S., & Sahm, L. J. (2019). An online mindfulness-based intervention for undergraduate pharmacy students: Results of a mixed-methods feasibility study. Currents in Pharmacy Teaching & Learning, 11(9), 858–875.

O’Driscoll, M., Byrne, S., Mc Gillicuddy, A., Lambert, S., & Sahm, L. J. (2017). The effects of mindfulness-based interventions for health and social care undergraduate students–a systematic review of the literature. Psychology, Health & Medicine, 22(7), 851–865.

Page, M. J., Moher, D., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., Shamseer, L., Tetzlaff, J. M., Akl, E. A., & Brennan, S. E. (2021). PRISMA 2020 explanation and elaboration: Updated guidance and exemplars for reporting systematic reviews. BMJ, 372.

Pedrelli, P., Nyer, M., Yeung, A., Zulauf, C., & Wilens, T. (2015). College students: Mental health problems and treatment considerations. Academic Psychiatry, 39(5), 503–511.

Pennebaker, J. W., & Beall, S. K. (1986). Confronting a traumatic event: Toward an understanding of inhibition and disease. Journal of Abnormal Psychology, 95(3), 274.

Phang, K. C., Firdaus, M., Normala, I., Keng, L. S., & Sherina, M. S. (2015). Effects of a DVD-delivered mindfulness-based intervention for stress reduction in medical students: A randomized controlled study. Education in Medicine Journal, 7(3).

Plaza, I., Demarzo, M. M. P., Herrera-Mercadal, P., & García-Campayo, J. (2013). Mindfulness-based mobile applications: Literature review and analysis of current features. JMIR MHealth and UHealth, 1(2), e2733.

Reangsing, C., Abdullahi, S. G., & Schneider, J. K. (2022). Effects of online mindfulness-based interventions on depressive symptoms in college and university students: A systematic review and meta-analysis. Journal of Integrative and Complementary Medicine.

Rhine, T. (2020). Effects of computerized cognitive behavioral therapy and mindfulness based mobile app on job stress and anxiety in active-duty military students. Hampton University.

Ritvo, P., Ahmad, F., El Morr, C., Pirbaglou, M., & Moineddin, R. (2021). A mindfulness-based intervention for student depression, anxiety, and stress: Randomized controlled trial. JMIR Mental Health, 8(1), e23491.

Rodriguez, M., Eisenlohr-Moul, T. A., Weisman, J., & Rosenthal, M. Z. (2021). The use of task shifting to improve treatment engagement in an internet-based mindfulness intervention among Chinese university students: Randomized controlled trial. JMIR Formative Research, 5(10), e25772.

Simonsson, O., Bazin, O., Fisher, S. D., & Goldberg, S. B. (2021). Effects of an eight-week, online mindfulness program on anxiety and depression in university students during COVID-19: A randomized controlled trial. Psychiatry Research, 305, 114222.

Sterne, J. A. C., Hernán, M. A., Reeves, B. C., Savović, J., Berkman, N. D., Viswanathan, M., Henry, D., Altman, D. G., Ansari, M. T., Boutron, I., Carpenter, J. R., Chan, A.-W., Churchill, R., Deeks, J. J., Hróbjartsson, A., Kirkham, J., Jüni, P., Loke, Y. K., Pigott, T. D., … Higgins, J. P. T. (2016). ROBINS-I: A tool for assessing risk of bias in non-randomised studies of interventions. BMJ, 355, i4919.

Sterne, J., Savović, J., Page, M., Elbers, R., Blencowe, N., Boutron, I., Cates, C., Cheng, H.-Y., Corbett, M., Eldridge, S., Hernán, M., Hopewell, S., Hróbjartsson, A., Junqueira, D., Jüni, P., Kirkham, J., Lasserson, T., Li, T., McAleenan, A., … Higgins, J. (2019). RoB 2: A revised tool for assessing risk of bias in randomised trials. BMJ, 366, l4898.

Sun, S., Lin, D., Goldberg, S., Shen, Z., Chen, P., Qiao, S., Brewer, J., Loucks, E., & Operario, D. (2021). A mindfulness-based mobile health (mHealth) intervention among psychologically distressed university students in quarantine during the COVID-19 pandemic: A randomized controlled trial. Journal of Counseling Psychology.

Tennant, R., Hiller, L., Fishwick, R., Platt, S., Joseph, S., Weich, S., Parkinson, J., Secker, J., & Stewart-Brown, S. (2007). The Warwick-Edinburgh Mental Well-being Scale (WEMWBS): Development and UK validation. Health and Quality of Life Outcomes, 5(1), 63.

Tufanaru, C., Munn, Z., Stephenson, M., & Aromataris, E. (2015). Fixed or random effects meta-analysis? Common methodological issues in systematic reviews of effectiveness. International Journal of Evidence-Based Healthcare, 13(3), 196–207.

Valentine, J. C., Pigott, T. D., & Rothstein, H. R. (2010). How many studies do you need? A primer on statistical power for meta-analysis. Journal of Educational and Behavioral Statistics, 35(2), 215–247.

van Hoorn, R., Tummers, M., Booth, A., Gerhardus, A., Rehfuess, E., Hind, D., Bossuyt, P. M., Welch, V., Debray, T. P. A., Underwood, M., Cuijpers, P., Kraemer, H., van der Wilt, G. J., & Kievit, W. (2017). The development of CHAMP: A checklist for the appraisal of moderators and predictors. BMC Medical Research Methodology, 17(1), 173.

Walsh, K. M., Saab, B. J., & Farb, N. A. S. (2019). Effects of a mindfulness meditation app on subjective well-being: Active randomized controlled trial and experience sampling study. JMIR Mental Health, 6(1), e10844.

Warnecke, E., Quinn, S., Ogden, K., Towle, N., & Nelson, M. R. (2011). A randomised controlled trial of the effects of mindfulness practice on medical student stress levels. Medical Education, 45(4), 381–388.

Yang, E., Schamber, E., Meyer, R. M. L., & Gold, J. I. (2018). Happier healers: Randomized controlled trial of mobile mindfulness for stress management. The Journal of Alternative and Complementary Medicine, 24(5), 505–513.

Yogeswaran, V., & El Morr, C. (2021). Effectiveness of online mindfulness interventions on medical students’ mental health: A systematic review. BMC Public Health, 21(1), 1–12.

Author information

Contributions

All authors contributed to this systematic review and meta-analysis. The authors’ contributions were outlined in the “Method” section. Database searches and search strategies were devised by D.A, R.G and C.M and were conducted by D.A. Title and abstract screening, full-text screening, data extraction and risk of bias assessment were conducted by D.A and K.C. Disagreements were resolved via discussion with R.G and C.M. The draft of the manuscript was written by D.A, and R.G and C.M commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics Approval

The ethical statement does not apply to this systematic review and meta-analysis.

Conflict of Interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Alrashdi, D.H., Chen, K.K., Meyer, C. et al. A Systematic Review and Meta-analysis of Online Mindfulness-Based Interventions for University Students: An Examination of Psychological Distress and Well-being, and Attrition Rates. J. technol. behav. sci. (2023). https://doi.org/10.1007/s41347-023-00321-6

DOI: https://doi.org/10.1007/s41347-023-00321-6