Abstract

There is a gap between mechanical measurement methods used under laboratory conditions and those used under the real conditions of structural monitoring. This paper proposes a method that applies well-established computer vision developments to photomechanics in difficult conditions. The resulting technique is flexible and versatile while maintaining satisfactory accuracy. It consists of tracking ArUco fiducial markers, which serve as measurement points, and it can locate the markers even when the camera orientation and position are not optimal. A homography was used to analyse pictures taken at an oblique view angle. The accuracy of the method was estimated, especially in the case of out-of-plane motions, and the influence of the view angle and of the camera-to-marker distance on the location error was studied. The method was applied in creep tests to measure crack parameters as well as the transverse expansion of wooden beams. In the application example presented, it allowed distances between markers to be computed with a relative error of only 0.28%, and hence the crack parameters and the long-term shrinkage-swelling of the wooden beams to be measured. However, the effects of brightness variations and camera parameters have not been estimated. The method is very promising when experimental conditions are variable and when multiple measurements are necessary.

Abbreviations

- U: Zero-order tensor U, a scalar.
- \(\underline{U}\): First order tensor U, a vector.
- \(\underline{\underline{U}}\): Second order tensor U, a matrix.
- \(\underline{\underline{A}}\): Homography resolution matrix.
- CL: Crack length.
- CO: Crack opening.
- D: Distance between camera and calibration target.
- dy12: y distance between markers 1 and 2, 40 mm apart.
- dy34: y distance between markers 3 and 4, 175 mm apart.
- dx12: x distance between markers 1 and 2, should be 0 mm.
- dx34: x distance between markers 3 and 4, should be 0 mm.
- EMC: Equilibrium moisture content.
- \(\underline{\underline{H}}\): Homography matrix.
- \(H_{p1}, H_{p2}\): Distance between markers in calibration target’s plane, in beam’s plane.
- \(h_{ij}\): Homography matrix components.
- \(\tilde{h}_{ij}\): Homography matrix components divided by \(h_{33}\).
- ht, wt: Index of use of respectively horseshoe target marker and window target.
- \(L_{0}\): Initial length of a calibration target.
- \(\underline{X}\): Real world coordinates vector.
- X, Y, Z: Real world coordinates components.
- \(\underline{\hat{X}}\): Real world coordinates vector divided by Z.
- \(\hat{x}, \hat{y}\): Real world coordinates components divided by Z.
- \(\underline{x}\): Homogeneous 2D image coordinates vector.
- x, y: Homogeneous 2D image coordinates components.
- \(\alpha _{al}\): Thermal expansion coefficient of aluminium.
- \(\Delta L\): Length variation of a calibration target.
- \(\Delta T\): Temperature variation.
- \(\omega \): Scale factor.

References

Brémand F, Cottron M, Doumalin P, Dupré J-C, Germaneau A, Valle V (2011) Mesures en mécanique par méthodes optiques. Techniques de l’ingénieur, 28

Bornert M, Brémand F, Doumalin P, Dupré J-C, Fazzini M, Grédiac M, Hild F, Mistou S, Molimard J, Orteu J-J, Robert L, Surrel Y, Vacher P, Wattrisse B (2009) Assessment of Digital Image Correlation Measurement Errors: Methodology and Results. Experimental Mechanics 49(3):353–370. https://doi.org/10.1007/s11340-008-9204-7. Accessed 02 March 2022

Muñoz-Salinas R, Marín-Jimenez MJ, Yeguas-Bolivar E, Medina-Carnicer R (2017) Mapping and Localization from Planar Markers. arXiv:1606.00151 [cs]. Accessed 05 Jan 2022

Germanese D, Leone GR, Moroni D, Pascali MA, Tampucci M (2018) Long-term monitoring of crack patterns in historic structures using UAVs and planar markers: a preliminary study. J Imag 4(8):99. https://doi.org/10.3390/jimaging4080099. Accessed 05 Jan 2022

Valença J, Dias-da-Costa D, Júlio E, Araújo H, Costa H (2013) Automatic crack monitoring using photogrammetry and image processing. Measurement 46(1):433–441. https://doi.org/10.1016/j.measurement.2012.07.019. Accessed 23 March 2022

Garrido-Jurado S, Muñoz-Salinas R, Madrid-Cuevas FJ, Marín-Jiménez MJ (2014) Automatic generation and detection of highly reliable fiducial markers under occlusion. Pattern Recog 47(6):2280–2292. https://doi.org/10.1016/j.patcog.2014.01.005. Accessed 30 Aug 2021

Forstner G (1987) A fast operator for detection and precise location of distinct points, corners and centres of circular features. In: Conference on fast processing of photogrammetric data, 25

AFNOR (2012) NF EN 408+A1 - Structures en bois. Bois de structure et bois lamellé-collé, AFNOR Editions

Malbezin P, Piekarski W, Thomas BH (2002) Measuring ARToolKit accuracy in long distance tracking experiments. In: The First IEEE international workshop augmented reality toolkit, pp 2, https://doi.org/10.1109/ART.2002.1107000

Abawi DF, Bienwald J, Dorner R (2004) Accuracy in optical tracking with fiducial markers: an accuracy function for ARToolKit. In: Third IEEE and ACM international symposium on mixed and augmented reality, pp 260–261, https://doi.org/10.1109/ISMAR.2004.8

Guitard D (1987) Mécanique du Matériau Bois et Composites, Cépaduès edn. NABLA, vol 1. Librairie Eyrolles

Pedersen CR (1990) Combined heat and moisture transfer in building constructions. PhD thesis, Technical University of Denmark

Merakeb S, Dubois F, Petit C (2009) Modélisation des hystérésis de sorption dans les matériaux hygroscopiques. Comptes Rendus Mécanique 337(1):34–39. https://doi.org/10.1016/j.crme.2009.01.001. Accessed 08 July 2020

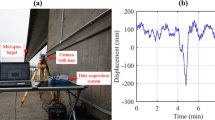

Pambou Nziengui CF, Moutou Pitti R, Fournely E, Gril J, Godi G, Ikogou S (2019) Notched-beam creep of Douglas fir and white fir in outdoor conditions: Experimental study. Construction and Building Materials 196:659–671. https://doi.org/10.1016/j.conbuildmat.2018.11.139. Accessed 08 July 2020

Hartley R, Zisserman A (2004) Multiple View Geometry in Computer Vision, 2nd edn. Cambridge University Press, Cambridge. https://doi.org/10.1017/CBO9780511811685. https://www.cambridge.org/core/books/multiple-view-geometry-in-computer-vision/0B6F289C78B2B23F596CAA76D3D43F7A Accessed 13 Jan 2022

Acknowledgements

The authors would like to thank the Auvergne Rhône-Alpes region for the Ph.D. scholarship as well as the funding of the Hub-Innovergne program of the Clermont Auvergne University. Moreover, the method could only be developed thanks to the significant contributions of the OpenCV community. Finally, the authors thank Benoît Blaysat for lending equipment and for fruitful discussions.

Appendix: Homography

The aim of the homography is to project coordinates from one plane onto another, as illustrated in Fig. 13, taken from [15]. In the left picture, the photo is taken at an oblique view angle; in the right picture, it is projected onto the plane parallel to the wall.

In this study, the goal is to go from the virtual image plane to the physical real-world plane, where the real distance between two markers is defined by their coordinates. The detectMarkers function of OpenCV returns the positions of the markers in 2D image coordinates (i.e., in pixels).
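As an illustration of this detection step, the sketch below extracts marker centers in pixels. It is a minimal example under stated assumptions, not the authors' code: the helper names `marker_centres` and `detect_centres` are hypothetical, the dictionary `DICT_4X4_50` is an arbitrary choice (the dictionary actually used is not given here), the `ArucoDetector` class requires OpenCV 4.7 or later, and taking the center as the intersection of the marker diagonals is an assumption.

```python
def marker_centres(corners, ids):
    """Return {marker id: (x, y)} in pixels from detectMarkers-style output.

    corners: sequence of arrays shaped (1, 4, 2), one per marker.
    ids: sequence of one-element sequences holding the marker ids.
    The centre is taken as the intersection of the two diagonals (assumption).
    """
    centres = {}
    for quad, marker_id in zip(corners, ids):
        p0, p1, p2, p3 = [(float(p[0]), float(p[1])) for p in quad[0]]
        d1 = (p2[0] - p0[0], p2[1] - p0[1])  # diagonal p0 -> p2
        d2 = (p3[0] - p1[0], p3[1] - p1[1])  # diagonal p1 -> p3
        denom = d1[0] * d2[1] - d1[1] * d2[0]
        if denom == 0.0:
            raise ValueError("degenerate marker quadrilateral")
        t = ((p1[0] - p0[0]) * d2[1] - (p1[1] - p0[1]) * d2[0]) / denom
        centres[int(marker_id[0])] = (p0[0] + t * d1[0], p0[1] + t * d1[1])
    return centres


def detect_centres(image_path):
    """Detect ArUco markers in an image file and return their centres."""
    import cv2  # imported lazily: needs an OpenCV build with the aruco module

    gray = cv2.imread(image_path, cv2.IMREAD_GRAYSCALE)
    if gray is None:
        raise FileNotFoundError(image_path)
    # Hypothetical dictionary choice; use the one the markers were printed from.
    dictionary = cv2.aruco.getPredefinedDictionary(cv2.aruco.DICT_4X4_50)
    detector = cv2.aruco.ArucoDetector(dictionary, cv2.aruco.DetectorParameters())
    corners, ids, _rejected = detector.detectMarkers(gray)
    if ids is None:  # no marker found
        return {}
    return marker_centres(corners, ids)
```

With older OpenCV releases, the equivalent call is the module-level `cv2.aruco.detectMarkers(gray, dictionary)`, which returns the same three values.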

In order to get real world coordinates (i.e., in meters), a homography must be computed:
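Assuming the usual projective convention, and using only the symbols defined just below, this relation can be written in the following form (a reconstruction rather than a verbatim equation):

$$\begin{aligned} \underline{X} = \omega \, \underline{\underline{H}} \, \underline{x} \end{aligned}$$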

Where:

- \(\underline{X}=(X,Y,Z)\) are the real-world coordinates;
- \(\underline{x}=(x,y,1)\) are the homogeneous 2D image coordinates, which belong to the projective space. Passing from Euclidean to homogeneous coordinates adds one dimension. This additional coordinate is set to 1, which keeps the formulation mathematically consistent;
- \(\underline{\underline{H}}\) is the \(3 \times 3\) homography matrix:

$$\begin{aligned} \underline{\underline{H}} = \left[ \begin{array}{lll} h_{11} & h_{12} & h_{13} \\ h_{21} & h_{22} & h_{23} \\ h_{31} & h_{32} & h_{33} \end{array}\right] \end{aligned} \tag{A1}$$

- \(\omega \) is a scale factor. In homogeneous coordinates, (x, y, 1) and \((\omega x, \omega y, \omega )\) represent the same point for any non-zero scalar \(\omega \). It may be fixed at \(\omega = \frac{1}{h_{33}}\) and absorbed into \(\underline{\underline{H}}\). In that case, let:

$$\begin{aligned} \tilde{h}_{ij} = \frac{h_{ij}}{h_{33}}, \quad \quad (i, j) \in \{1,2,3\}^2 \end{aligned}$$

The transformation needed to get the homogeneous 2D real-world coordinates is:

It becomes:

For a given point the equations are:

Thus:

By transforming \(\underline{\underline{H}}\) into a vector, the following equation can be written:

Basically:
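The steps announced above can be sketched as follows. This is a reconstruction based on the standard direct linear transformation and on the notation of this appendix, with \(\tilde{h}_{33}=1\). The symbol \(\tilde{\underline{\underline{H}}}\) for the matrix of components \(\tilde{h}_{ij}\), the ordering of the components in \(\tilde{\underline{H}}\) and the row layout of \(\underline{\underline{A}}\) are assumptions.

$$\begin{aligned} \underline{X} &= \tilde{\underline{\underline{H}}} \, \underline{x}, \qquad \underline{\hat{X}} = \frac{1}{Z} \, \underline{X} = (\hat{x}, \hat{y}, 1), \qquad Z = \tilde{h}_{31} x + \tilde{h}_{32} y + 1 \\ \hat{x} &= \frac{\tilde{h}_{11} x + \tilde{h}_{12} y + \tilde{h}_{13}}{\tilde{h}_{31} x + \tilde{h}_{32} y + 1}, \qquad \hat{y} = \frac{\tilde{h}_{21} x + \tilde{h}_{22} y + \tilde{h}_{23}}{\tilde{h}_{31} x + \tilde{h}_{32} y + 1} \\ 0 &= \tilde{h}_{11} x + \tilde{h}_{12} y + \tilde{h}_{13} - \hat{x} \left( \tilde{h}_{31} x + \tilde{h}_{32} y + 1 \right) \\ 0 &= \tilde{h}_{21} x + \tilde{h}_{22} y + \tilde{h}_{23} - \hat{y} \left( \tilde{h}_{31} x + \tilde{h}_{32} y + 1 \right) \end{aligned}$$

Collecting the components into \(\tilde{\underline{H}} = (\tilde{h}_{11}, \tilde{h}_{12}, \tilde{h}_{13}, \tilde{h}_{21}, \tilde{h}_{22}, \tilde{h}_{23}, \tilde{h}_{31}, \tilde{h}_{32}, \tilde{h}_{33})^{T}\), each point contributes two rows to \(\underline{\underline{A}}\):

$$\begin{aligned} \left[ \begin{array}{ccccccccc} x & y & 1 & 0 & 0 & 0 & -\hat{x} x & -\hat{x} y & -\hat{x} \\ 0 & 0 & 0 & x & y & 1 & -\hat{y} x & -\hat{y} y & -\hat{y} \end{array}\right] \tilde{\underline{H}} = \underline{0}, \qquad \text{i.e.} \qquad \underline{\underline{A}} \, \tilde{\underline{H}} = \underline{0} \end{aligned}$$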

Solving this problem, while excluding the trivial solution \(\tilde{\underline{H}} = \underline{0}\), is the homography computation. It is a minimization problem, since the solution is never exact for images obtained experimentally. Each point in a picture with a known real-world position gives 2 equations, and the homography matrix has 8 unknowns. Consequently, at least 4 points with known physical positions are necessary to compute the homography matrix. These 4 points may be the 4 corners of a single marker or, as in this study, the centers of 4 markers. There are at least two reasons for using the centers of 4 markers instead of the 4 corners of a single marker:

- The centers are located more precisely than the corners;
- The projection of the pixels onto the physical real-world plane is globally better with 4 centers than with 4 corners. Indeed, the projection with 4 corners is good mainly around that reference marker, but not for remote pixels.

Three collinear points give a redundant equation, so no more than two of the points should be aligned. If more than 4 points are available, the problem is over-determined and the minimization should yield a better homography estimate.
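The counting argument above (2 equations per point, 8 unknowns once \(\tilde{h}_{33}=1\)) can be illustrated with a small pure-Python sketch. It is not the authors' implementation, which relies on OpenCV. The function names are hypothetical, and solving the normal equations is a simplification chosen here: it is exact for 4 points and gives a least-squares fit for more.

```python
def solve_linear(M, b):
    """Solve M u = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [list(row) + [rhs] for row, rhs in zip(M, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system (e.g. aligned reference points)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    u = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(aug[i][j] * u[j] for j in range(i + 1, n))
        u[i] = (aug[i][n] - s) / aug[i][i]
    return u


def estimate_homography(image_pts, world_pts):
    """Return the 3x3 matrix (h33 = 1) mapping image points to world points.

    image_pts: [(x, y), ...] in pixels; world_pts: [(X, Y), ...] in the
    reference plane. At least 4 pairs are required.
    """
    if len(image_pts) < 4 or len(image_pts) != len(world_pts):
        raise ValueError("need at least 4 matching point pairs")
    rows, rhs = [], []
    for (x, y), (xw, yw) in zip(image_pts, world_pts):
        # Two linear equations per point, with h33 fixed to 1.
        rows.append([x, y, 1.0, 0.0, 0.0, 0.0, -xw * x, -xw * y])
        rhs.append(xw)
        rows.append([0.0, 0.0, 0.0, x, y, 1.0, -yw * x, -yw * y])
        rhs.append(yw)
    # Normal equations (A^T A) h = A^T b.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(8)] for i in range(8)]
    atb = [sum(r[i] * v for r, v in zip(rows, rhs)) for i in range(8)]
    h = solve_linear(ata, atb) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]
```

With raw pixel coordinates the normal equations can be poorly conditioned, so normalising the coordinates first or using an SVD-based routine such as OpenCV's `findHomography` is likely the more robust choice in practice.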

The same homography is used to obtain the real-world coordinates of all markers in a given picture, so they are all projected onto the same plane. Consequently, the homography must be computed once for each photo, so that the distance between two markers is computed correctly even when the view angle introduces perspective.
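Once the homography of a photo is known, measuring a distance reduces to projecting two marker centers and taking the Euclidean norm. The sketch below shows this step. The function names and the matrix `H_example` are hypothetical and only serve the illustration.

```python
import math


def project(H, x, y):
    """Map an image point (pixels) onto the reference plane using H."""
    X = H[0][0] * x + H[0][1] * y + H[0][2]
    Y = H[1][0] * x + H[1][1] * y + H[1][2]
    Z = H[2][0] * x + H[2][1] * y + H[2][2]
    if Z == 0.0:
        raise ValueError("point is mapped to infinity by this homography")
    return X / Z, Y / Z  # division by Z gives the in-plane coordinates


def marker_distance(H, centre_a, centre_b):
    """Distance between two marker centres after projection with the same H."""
    xa, ya = project(H, *centre_a)
    xb, yb = project(H, *centre_b)
    return math.hypot(xb - xa, yb - ya)


# Hypothetical homography: about 0.1 mm per pixel with a mild perspective term.
H_example = [[0.1, 0.0, 0.0], [0.0, 0.1, 0.0], [0.0, 1e-5, 1.0]]
d = marker_distance(H_example, (100.0, 200.0), (100.0, 600.0))
print(f"distance between markers: {d:.3f} mm")
```

Both centers go through the same matrix, which is what places them in a common plane before the distance is taken.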

About this article

Cite this article

Bontemps, A., Godi, G., Fournely, E. et al. Implementation of an Optical Measurement Method for Monitoring Mechanical Behaviour. Exp Tech 48, 115–128 (2024). https://doi.org/10.1007/s40799-023-00636-2

DOI: https://doi.org/10.1007/s40799-023-00636-2