Abstract

Social group optimization (SGO), a population-based optimization technique, is proposed in this paper. It is inspired by the social behavior of humans in solving complex problems. The concept and the mathematical formulation of the SGO algorithm are explained with a flowchart. To judge the effectiveness of SGO, extensive experiments have been conducted on a number of unconstrained benchmark functions as well as standard numerical benchmark functions taken from the IEEE Congress on Evolutionary Computation 2005 competition. Performance comparisons are made with state-of-the-art optimization techniques such as GA, PSO, DE, ABC and its variants, and the recently developed TLBO. The experimental outcomes show that the proposed social group optimization outperforms all the investigated optimization techniques in computational cost and also provides optimal solutions for most of the functions considered in our work. The proposed technique is also found to be simple and straightforward to implement. It is believed that SGO will supplement the group of effective and efficient population-based optimization techniques and give researchers wide scope to choose it for their respective applications.

Introduction

Population-based optimization algorithms inspired by nature commonly locate near-optimal solutions to optimization problems. Every population-based algorithm shares the goal of finding the global solution of the problem. A population begins with initial solutions and gradually moves toward a better region of the search space based on fitness information. Over the last few decades, a number of successful population-based algorithms have emerged for solving complex optimization problems. Some of the well-known techniques are cited below, and readers can find details in the respective papers. Genetic algorithms (GAs) [1], the most popular ones, are based on genetic science and natural selection operators. Differential evolution (DE) [2] is based on a concept similar to GA but, unlike GA, offers all solutions an equal chance of being selected as parents irrespective of their fitness, and it has recently become very well known among optimization researchers. Other techniques include bacteria foraging (BF) [3], based on the social foraging behavior of Escherichia coli; shuffled frog leaping (SFL) [4], inspired by natural memetics and combining local search with global information exchange; simulated annealing (SA) [5], based on the steel annealing process; and ant colony optimization (ACO) [6], motivated by the behavior of real ant colonies. Particle swarm optimization (PSO) [7], a technique based on swarm behavior in nature such as fish schooling and bird flocking, has been widely researched and applied to various engineering and allied fields. 
The artificial bee colony (ABC) [8] algorithm, based on the intelligent foraging behavior of honeybee swarms; the gravitational search algorithm (GSA) [9], based on the law of gravity and the notion of mass interactions; and cuckoo search [10], inspired by the obligate brood parasitism of some cuckoo species, which lay their eggs in the nests of host birds of other species, are gaining popularity among users as well. Biogeography-based optimization (BBO) [11], based on the migration strategy of animals or other species; the intelligent water drops (IWDs) [12] algorithm, inspired by natural water drops that flow in rivers and find almost optimal paths to their destination; the firefly algorithm (FA) [13], inspired by the flashing behavior of fireflies in nature; the honey bee mating optimization (HBMO) [14, 15] algorithm, inspired by the process of marriage in real honey bees; and the bat algorithm (BA) [16], inspired by the echolocation behavior of bats, are a few more population-based techniques in this category. The harmony search (HS) [17] optimization algorithm, inspired by the improvisation process of composing a piece of music; big bang–big crunch (BB-BC) optimization [18], based on one of the theories of the evolution of the universe; and black hole (BH) [19] optimization, inspired by the black hole phenomenon, have also been applied successfully to various engineering problems. Recently, teaching–learning-based optimization (TLBO) [20], based on the influence of a teacher on the output of learners in a class, has been extensively studied by researchers to solve a variety of optimization problems in engineering applications. 
Even though all these algorithms are good enough for solving optimization problems, issues such as finding optimal solutions, providing fast convergence without overfitting (computational effort), choosing and controlling algorithm parameters, algorithm stability and robustness, consistency in providing solutions, and adaptability to a wide variety of applications have been the subject of extensive research in the optimization community. To address these issues, researchers have developed many variants of the above-mentioned optimization algorithms, and hybridization of several algorithms has also been attempted.

In an attempt to address a few challenges such as computational effort, optimal solutions and consistency in providing optimal solutions, this paper proposes a new optimization technique named social group optimization (SGO), based on the human behavior of learning and solving complex problems.

In this work, we have carried out an extensive study to investigate the performance of our proposed SGO algorithm on many simple benchmark functions as well as benchmark functions from the CEC 2005 competition. Many advanced versions of state-of-the-art algorithms such as PSO, DE and ABC, and their variants, are simulated to compare their performance with SGO. The performance of SGO is also compared with the recently developed TLBO algorithm. Convergence characteristics of SGO are presented in plots. Results are reported in tables with the mean and standard deviation values for each algorithm on each function over several simulation runs. To assess the significance of the results, we have performed Wilcoxon’s rank-sum statistical tests.

The remainder of the paper is organized as follows: “Social Group Optimization” gives a comprehensive description of the SGO algorithm. The next section, “Implementation of SGO for optimization”, discusses the implementation of SGO for optimization, followed by a discussion of the “Experimental results”. The paper concludes with directions for further research in “Conclusion”.

Social group optimization (SGO)

Many behavioral traits, such as honesty, dishonesty, caring, compassion, courage, fear, justness, fairness, tolerance and respectfulness, lie dormant in human beings and need to be harnessed and channeled in the appropriate direction to enable a person to solve complex tasks in life. Few individuals have the required level of all these behavioral traits to solve complex problems effectively and efficiently on their own. Very often, however, complex problems can be solved through the influence of traits from one person on another, or from one group on other groups in society. It has been observed that human beings are great imitators or followers in solving any task. Group problem-solving capability has proven more effective than individual capability because it exploits and explores the different traits of each individual in the group to solve a given problem. Based upon this concept, a new optimization technique named social group optimization (SGO) is proposed.

In SGO, each person (a candidate solution) is endowed with some knowledge and hence has a certain level of capacity for solving a problem. SGO is a population-based algorithm similar to the other algorithms discussed in the previous section. For SGO, the population is considered as a group of persons (candidate solutions). Each person acquires knowledge and thereby possesses some level of capacity for solving a problem. This capacity corresponds to the ‘fitness’. The best person is the best solution. The best person tries to propagate knowledge among all persons, which in turn improves the knowledge level of all members of the group.

The procedure of SGO is divided into two parts. The first part is the ‘improving phase’; the second part is the ‘acquiring phase’. In the ‘improving phase’, the knowledge level of each person in the group is enhanced under the influence of the best person in the group. The best person in the group is the one having the highest level of knowledge and capacity to solve the problem. In the ‘acquiring phase’, each person enhances his/her knowledge through mutual interaction with another person in the group and with the best person in the group at that point in time. The basic mathematical interpretation of this concept is presented below.

Let \(X_j\), \(j=1,2,3,\ldots ,N\), be the persons of the social group, i.e., the social group contains N persons, and each person \(X_j\) is defined by \(X_j =(x_{j1} ,x_{j2} ,x_{j3} ,\ldots ,x_{jD})\), where D is the number of traits assigned to a person, which determines the dimension of a person, and \(f_j\), \(j=1,2,\ldots ,N\), are the corresponding fitness values.

Improving phase

The best person (gbest) in each social group tries to propagate knowledge among all persons, which will, in turn, help others to improve their knowledge in the group.

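In the improving phase, each person gets knowledge (here, knowledge refers to a change of traits under the influence of the best person’s traits) from the group’s best (gbest) person. The update of each person can be computed as follows:

$$\begin{aligned} Xnew_{ij} =c*Xold_{ij} +r*(gbest(j)-Xold_{ij} ),\quad i=1,2,\ldots ,N,\quad j=1,2,\ldots ,D \end{aligned}$$

where c is known as the self-introspection parameter, whose value can be set in the range \(0< c < 1\), and r is a random number, \(r\sim U(0,1)\).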
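As a minimal sketch of the improving-phase rule, the following Python function builds the candidate traits for a single person as Xnew = c * Xold + r * (gbest - Xold). The function name `improve_candidate`, the list-based representation and the choice to draw a fresh r for every trait are assumptions made for illustration; the text only specifies the update rule and \(r\sim U(0,1)\).

```python
import random


def improve_candidate(x_old, gbest, c=0.2, rng=random):
    """Return Xnew for one person in the improving phase.

    x_old and gbest are lists of D trait values; c is the
    self-introspection parameter (0 < c < 1).
    """
    x_new = []
    for x_j, g_j in zip(x_old, gbest):
        r = rng.random()  # r ~ U(0, 1); assumption: redrawn for each trait j
        x_new.append(c * x_j + r * (g_j - x_j))
    return x_new


# Usage: one person pulled toward the group's best person.
rng = random.Random(1)
person = [4.0, -2.0, 1.0]
best = [0.5, 0.0, -0.5]
candidate = improve_candidate(person, best, c=0.2, rng=rng)
```

In the full algorithm the candidate replaces the person only if it gives a better function value. For the best person itself the second term vanishes, so the rule reduces to Xnew = c * gbest.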

Acquiring phase

In the acquiring phase, a person of social group interacts with the best person (gbest) of that group and also interacts randomly with other persons of the group for acquiring knowledge. A person acquires new knowledge if the other person has more knowledge than him or her. The best knowledgeable person (here known as person having ‘gbest’) has the greatest influence on others to learn from him/her. A person will also acquire something new from other persons if they have more knowledge than him or her in the group.

where \(r_1\) and \(r_2\) are two independent random sequences, \(r_1\sim U(0,1)\) and \(r_2 \sim U(0,1)\) . These sequences are used to affect the stochastic nature of the algorithm as shown above in Eq. (4).
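The interaction described in this phase can be sketched in Python as follows. The update equation itself is not reproduced in the text above, so the form used here is an assumption: the person moves along the direction from the less knowledgeable to the more knowledgeable of the two interacting persons (scaled by \(r_1\)) and toward gbest (scaled by \(r_2\)). The function name `acquire_candidate` and its argument layout are likewise hypothetical, and minimization is assumed.

```python
import random


def acquire_candidate(x_i, f_i, x_r, f_r, gbest, rng=random):
    """Return Xnew for person i after interacting with partner r and gbest.

    f_i and f_r are objective values (lower is better, minimization).
    """
    # Assumed rule: follow the direction that points from the less
    # knowledgeable person toward the more knowledgeable one.
    sign = 1.0 if f_i < f_r else -1.0
    x_new = []
    for xi_j, xr_j, g_j in zip(x_i, x_r, gbest):
        r1 = rng.random()  # r1 ~ U(0, 1)
        r2 = rng.random()  # r2 ~ U(0, 1)
        x_new.append(xi_j + sign * r1 * (xi_j - xr_j) + r2 * (g_j - xi_j))
    return x_new


# Usage: person i is worse than its partner, so it moves toward the partner.
rng = random.Random(2)
toward = acquire_candidate([3.0], 9.0, [1.0], 1.0, [3.0], rng=rng)
# Usage: person i is better than its partner, so it moves away from it.
away = acquire_candidate([1.0], 1.0, [3.0], 9.0, [1.0], rng=rng)
```

As in the improving phase, a candidate produced this way would be kept only if it improves the person's fitness.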

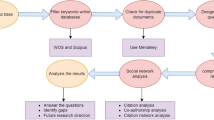

For further clarity and ease of implementation, the entire process is presented as a flowchart (Fig. 1).

Implementation of SGO for optimization

The step-wise procedure for the implementation of SGO is given in this section.

Step 1: Enumeration of the problem and initialization of parameters. Initialize the population size (N), number of generations (g), number of design variables (D), and limits of design variables \(( U_L ,L_L )\). Define the optimization problem as: minimize \(f(X)\) subject to \(X=(x_1 ,x_2 ,x_3 ,\ldots ,x_D)\), so that \(X_j =(x_{j1} ,x_{j2},x_{j3} ,\ldots ,x_{jD} )\), where \(f(X)\) is the objective function and X is a vector of design variables such that \(L_{L,i} \le x_i \le U_{L,i}\).

Step 2: Initialize the population. A random population is generated based on the features (number of parameters) and the population size chosen by the user. For SGO, the population size indicates the number of persons and the features indicate the number of traits of a person. This population is expressed as:

$$\begin{aligned} \hbox {Population}=\left[ \begin{array}{ccccc} x_{1,1} & x_{1,2} & x_{1,3} & \cdots & x_{1,D} \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ x_{N,1} & x_{N,2} & x_{N,3} & \cdots & x_{N,D} \end{array} \right] \end{aligned}$$

Calculate the fitness of the population, \(f(X)\).

Step 3: Improving phase. Determine \(\hbox {gbest}_g\) using Eq. (1), which is the best solution for that iteration. In the improving phase, each person gets knowledge from the group’s best, i.e., gbest:

$$\begin{aligned}&\hbox {For } i = 1 : N\\&\quad \hbox {For } j =1:D\\&\quad \quad Xnew_{ij} =c*Xold_{ij} +r*(gbest(j)-Xold_{ij} )\\&\quad \hbox {End for}\\&\hbox {End for} \end{aligned}$$

Here c is the self-introspection factor, whose value can be chosen empirically for a given problem; we have set it to 0.2 in this work after a thorough study of our investigated problems. r is a random number, \(r\sim U(0,1)\). Accept Xnew if it gives a better function value.

Step 4: Acquiring phase. As explained above, in the acquiring phase, a person of the social group interacts with the best person of the group, i.e., gbest, and also interacts randomly with other persons of the group to acquire knowledge. The mathematical expression is defined in “Acquiring phase”.

Step 5: Termination criterion. Stop the simulation if the maximum generation number is reached; otherwise, repeat Steps 3–4.
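The five steps can be put together in a short Python sketch. This is an illustration under stated assumptions rather than the MATLAB code used for the experiments: the function name `sgo_minimize`, uniform random initialization, clamping of traits to the variable limits, and the form of the acquiring-phase update (taken from the verbal description, since the equation is not reproduced in the text) are all assumptions.

```python
import random


def sgo_minimize(objective, lower, upper, n_persons=20, generations=50,
                 c=0.2, seed=0):
    """Minimal SGO loop following Steps 1-5 (minimization)."""
    rng = random.Random(seed)
    dims = len(lower)

    def clip(x):
        # Assumption: out-of-range traits are clamped to the variable limits.
        return [min(max(v, lo), hi) for v, lo, hi in zip(x, lower, upper)]

    def try_replace(i, candidate):
        # Greedy acceptance: keep Xnew only if it gives a better function value.
        candidate = clip(candidate)
        value = objective(candidate)
        if value < fitness[i]:
            population[i], fitness[i] = candidate, value

    def best_person():
        return list(population[min(range(n_persons), key=fitness.__getitem__)])

    # Steps 1-2: random initial population inside the limits, then fitness.
    population = [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
                  for _ in range(n_persons)]
    fitness = [objective(p) for p in population]

    for _ in range(generations):  # Step 5: stop after the last generation
        # Step 3: improving phase, driven by the current gbest.
        gbest = best_person()
        for i in range(n_persons):
            x = population[i]
            try_replace(i, [c * x[j] + rng.random() * (gbest[j] - x[j])
                            for j in range(dims)])

        # Step 4: acquiring phase with a random partner and the updated gbest.
        gbest = best_person()
        for i in range(n_persons):
            k = rng.choice([p for p in range(n_persons) if p != i])
            x, partner = population[i], population[k]
            sign = 1.0 if fitness[i] < fitness[k] else -1.0
            try_replace(i, [x[j] + sign * rng.random() * (x[j] - partner[j])
                            + rng.random() * (gbest[j] - x[j])
                            for j in range(dims)])

    best = min(range(n_persons), key=fitness.__getitem__)
    return population[best], fitness[best]


def sphere(x):
    return sum(v * v for v in x)


best_x, best_f = sgo_minimize(sphere, [-10.0] * 5, [10.0] * 5)
```

Each generation in this sketch costs 2N function evaluations (N in each phase), in addition to the N evaluations of the initial population.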

Experimental results

In this paper, the performance of SGO is compared with many classical population-based optimization techniques as well as their advanced variants, using some basic benchmark functions and the 25 test functions proposed in the CEC2005 special session on real-parameter optimization. A description of some basic benchmark functions is given in the Appendix, others are described in their respective papers, and a detailed description of the 25 CEC2005 test functions can be found in [21]. Sections “Experiment 1:SGO vs. GA, PSO, DE, ABC, and TLBO” to “Experiment 8. SGO vs FIPS-PSO, CPSO-H, DMSPSO-LS, CLPSO, APSO, SSG-PSO, SSG-PSO-DFP, SSG-PSO-BFGS, SSG-PSO-NM, SSG-PSO-PS” describe the experiments on basic benchmark functions; “Experiment 9: SGO vs. jDE, SaDE, EPSDE, CoDE, MPEDE , CLPSO, CMA-ES,GL-25 and TLBO” covers the CEC2005 test functions; and “Experiment 10: SGO vs. PSO, CPSO, CLPSO, CMA-ES, G3-PCX, DE, and TLBO using Composite functions” discusses the experiments on composite test functions. To reach statistically sound conclusions, Wilcoxon’s rank-sum test at a 0.05 significance level was conducted on the experimental results, and the last three rows of each respective table summarize the outcome.

To compare the speed of the algorithms, the first thing we require is a fair time measurement. The number of iterations or generations cannot be accepted as a time measure, since the algorithms perform different amounts of work in their inner loops and have different population sizes. Hence, we choose the number of fitness function evaluations (FEs) as the measure of computation time instead of generations or iterations. Since the algorithms are stochastic in nature, the results of two successive runs usually do not match. Hence, we have taken several independent runs (with different seeds of the random number generator) of each algorithm.
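One way to enforce such a budget is to wrap the objective function in a counter, so that any optimizer, whatever its population size or inner loop, is charged per evaluation. The sketch below is illustrative only: the class name `CountingObjective`, the placeholder `random_search` optimizer and the budget of 1000 FEs are assumptions chosen for the example, not the code used in the experiments.

```python
import random


class CountingObjective:
    """Wrap an objective function and count fitness evaluations (FEs)."""

    def __init__(self, func, max_evaluations):
        self.func = func
        self.max_evaluations = max_evaluations
        self.evaluations = 0

    @property
    def exhausted(self):
        return self.evaluations >= self.max_evaluations

    def __call__(self, x):
        if self.exhausted:
            raise RuntimeError("function evaluation budget exhausted")
        self.evaluations += 1
        return self.func(x)


def random_search(objective, lower, upper, seed):
    """Placeholder optimizer: sample uniformly until the FE budget is spent."""
    rng = random.Random(seed)
    best = float("inf")
    while not objective.exhausted:
        x = [rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
        best = min(best, objective(x))
    return best


def sphere(x):
    return sum(v * v for v in x)


# Independent runs with different seeds, all limited to the same 1000 FEs.
results = []
for seed in range(5):
    counted = CountingObjective(sphere, max_evaluations=1000)
    results.append(random_search(counted, [-5.0] * 3, [5.0] * 3, seed))
```

Because the counter sits around the objective rather than inside the optimizer, the same wrapper can be reused unchanged for every algorithm being compared.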

Finally, we would like to point out that all the experiment codes are implemented in MATLAB 7. The experiments are conducted on a Pentium 4, 1 GB memory desktop in Windows XP 2002 environment.

Experiment 1:SGO vs. GA, PSO, DE, ABC, and TLBO

In this section, for a fair comparison of the algorithms, the results for GA, PSO, DE and ABC are taken directly from [22], whereas the simulations for TLBO and our proposed SGO algorithm have been carried out by us. The common parameter, population size, is set to 20 for both TLBO and SGO. The maximum number of function evaluations is set to 2000 for TLBO and 1000 for SGO. The other algorithm-specific parameters are given below:

TLBO settings For TLBO, there is no such constant to set.

SGO settings For SGO, there is only one constant to set, the self-introspection factor c, which governs a person’s self-effort. The value of c is empirically set to 0.2 for better results.

The 25 benchmark functions considered for simulation include many different kinds of problems: unimodal, multimodal, regular, irregular, separable, non-separable and multidimensional. All problems are divided into four categories, US (unimodal separable), MS (multimodal separable), UN (unimodal non-separable) and MN (multimodal non-separable), and the range, formulation, characteristics and dimensions of these problems are described in the Appendix.

Each of the experiments for TLBO and SGO is repeated 30 times with different random seeds (the same number of runs as in [22], to make the comparison fair), and the mean values produced by the algorithms have been recorded. The comparison criteria are the mean solution and the standard deviation over the independent runs. The mean solution describes the average ability of the algorithm to find the global solution, and the standard deviation describes the variation of the solutions around the mean. To make the comparison clear, values below \(10^{-12}\) are assumed to be 0. Also, to reach statistically sound conclusions, Wilcoxon’s rank-sum test at a 0.05 significance level has been conducted on the experimental results, and the last three rows of Table 1 summarize the results. Table 1 presents the comparison of the fitness values of GA, PSO, DE and ABC, taken from [22], with those of TLBO and SGO, computed by us. In Table 1, “NA” means that the experiment was not conducted for that particular function. The best values are shown in boldface.
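The reporting procedure described above (mean and standard deviation over runs, values below \(10^{-12}\) reported as 0, and a rank-sum test at the 0.05 level) can be sketched with Python's standard library alone. Several details here are assumptions: the function names are hypothetical, the sample standard deviation is used, the threshold is applied to absolute values, and the rank-sum test uses the normal approximation without a tie correction, since the text does not say which variant was used. The run values are made-up illustrative numbers, not results from the paper.

```python
import math
import statistics


def summarize_runs(values, zero_threshold=1e-12):
    """Mean and standard deviation over runs; tiny values are reported as 0."""
    def clean(v):
        return 0.0 if abs(v) < zero_threshold else v
    return clean(statistics.mean(values)), clean(statistics.stdev(values))


def rank_sum_test(sample_a, sample_b):
    """Two-sided Wilcoxon rank-sum test (normal approximation).

    Returns (z, p); tied values receive the average of their ranks.
    """
    pooled = sorted([(v, 0) for v in sample_a] + [(v, 1) for v in sample_b])
    rank_sum_a = 0.0
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j][0] == pooled[i][0]:
            j += 1
        average_rank = (i + 1 + j) / 2.0  # mean of ranks i+1 .. j
        rank_sum_a += average_rank * sum(1 for k in range(i, j)
                                         if pooled[k][1] == 0)
        i = j
    n1, n2 = len(sample_a), len(sample_b)
    expected = n1 * (n1 + n2 + 1) / 2.0
    spread = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (rank_sum_a - expected) / spread
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided p-value
    return z, p


# Illustrative numbers only (not taken from any table in this paper).
sgo_runs = [1e-15, 3e-15, 2e-15, 0.0, 4e-15]
other_runs = [0.12, 0.30, 0.08, 0.25, 0.19]
sgo_mean, sgo_std = summarize_runs(sgo_runs)
z, p = rank_sum_test(sgo_runs, other_runs)
significant = p < 0.05
```

A negative z here means the first sample tends to have smaller (better, for minimization) values than the second.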

From Table 1, it is clear that SGO provides better optimal results on as many as 12 functions compared to GA, 5 functions compared to PSO, 4 functions compared to DE, 3 functions compared to ABC and 2 functions compared to TLBO. We can conclude that SGO is very competitive with ABC and especially with TLBO. Moreover, SGO is faster than the other algorithms. It uses 1/500\(\mathrm{th}\) of the function evaluations of GA, PSO, DE and ABC for all functions except Rosenbrock, for which it uses 1/50\(\mathrm{th}\) of the total function evaluations. Compared to TLBO, it uses almost half of the total function evaluations, except for Rosenbrock, for which SGO uses 1/5\(\mathrm{th}\) of the function evaluations of TLBO. From these findings, we may conclude that our proposed algorithm not only performs better than many state-of-the-art algorithms like GA, PSO and DE but is also very competitive with ABC and TLBO in providing optimal solutions. Importantly, SGO requires less computational effort than all other algorithms investigated in this section.

Experiment 2: SGO vs. HS, IBA, ABC, and TLBO

In this experiment, five different benchmark problems from Karaboga and Akay [23] are considered, and SGO is compared with the harmony search algorithm (HS), the improved bee algorithm (IBA), artificial bee colony optimization (ABC) and teaching–learning-based optimization (TLBO) [24]. To compare the results, the mean solution and the standard deviation over the independent runs are taken. In our simulation, we run TLBO for a maximum of 2000 FEs with a population size of 10 for all functions except Rosenbrock, whereas HS, IBA and ABC run for 50,000 FEs with a population size of 50. For the Rosenbrock function, TLBO takes 50,000 FEs with a population size of 50. For our optimization algorithm, SGO, the maximum number of FEs is set to 1000 with a population size of 10 for all functions except Rosenbrock, for which it is set to 50,000 FEs with a population size of 50. The results are gathered over different independent runs in each case, and the mean and standard deviation are calculated from them. A description of the functions is given in the Appendix.

In this simulation, different dimensions (D) of the benchmark functions are chosen for study, ranging from as small as 5 up to 1000. The results for dimensions 5, 10, 30, 50 and 100 are taken directly from [24] for all investigated algorithms and reported in Table 2. For the SGO algorithm, we have computed results for all dimensions and additionally generated results for two large-scale dimensions, 500 and 1000, to investigate the performance of SGO on large-dimension problems. The maximum number of FEs for SGO is set to 1/50\(\mathrm{th}\) of the maximum FEs taken for HS, IBA and ABC for all functions except Rosenbrock. For Rosenbrock, it is 1/5\(\mathrm{th}\) of that of HS, IBA and ABC. However, it is exactly half that of TLBO for all functions [24, 25]. It may be emphasized that the reduced maximum number of FEs for SGO is deliberately chosen to investigate its effectiveness and efficiency relative to the other algorithms. Table 2 shows the results for this experiment. In Table 2, “NA” means that the experiment was not conducted for that particular function. The best values are shown in boldface. To reach statistically sound conclusions, Wilcoxon’s rank-sum test at a 0.05 significance level has been conducted on the experimental results, and the last three rows of Table 2 summarize the results. It can be seen from Table 2 that SGO outperforms all the algorithms on all the functions in almost all dimensions. This experiment shows that SGO remains effective in finding the optimum solution as the dimension increases. However, the performance of the other algorithms in higher dimensions has not been ascertained in this work. Our preliminary literature study suggests that they do not perform well in higher dimensions.

Experiment 3: SGO vs OEA, HPSO-TVAC, CLPSO, APSO, OLPSO-L and OLPSO-G

In this section, SGO is compared with OEA, HPSO-TVAC (self-organizing hierarchical particle swarm optimizer with time-varying acceleration coefficients) [26], APSO (adaptive particle swarm optimization) [27], CLPSO (comprehensive learning particle swarm optimization) [28], OLPSO-L (orthogonal learning particle swarm optimization) [29] and OLPSO-G [29] on the nine benchmarks listed in the Appendix. OEA uses \(3.0 \times 10^{5}\) FEs; HPSO-TVAC, CLPSO, APSO, OLPSO-L and OLPSO-G use \(2.0 \times 10^{5}\) FEs; whereas SGO runs for \(3 \times 10^{3}\) FEs on the sphere, Schwefel 1.2 and Schwefel 2.22 functions, \(1.0 \times 10^{2}\) FEs on the step function, \(4.0 \times 10^{2}\) FEs on the Rastrigin, noncontinuous Rastrigin and Griewank functions, and \(1.0 \times 10^{3}\) FEs on the Ackley and quartic functions. The results of OEA, HPSO-TVAC, CLPSO and APSO are taken directly from [28] and [27], those of OLPSO-L and OLPSO-G are taken directly from [29], and all are reported in Table 3. In Table 3, “NA” means that the experiment was not conducted for that particular function. The best values are shown in boldface. To reach statistically sound conclusions, Wilcoxon’s rank-sum test at a 0.05 significance level has been conducted on the experimental results, and the last three rows of Table 3 summarize the results. According to Wilcoxon’s rank-sum test, SGO is superior to OEA on seven test functions and comparable on one out of eight test functions, and better than HPSO-TVAC on eight test functions and equivalent on one out of nine test functions. It is also found to be better than APSO on eight test functions and equivalent on one out of nine test functions. In our work, we observe that compared to OLPSO-L, our proposed SGO is better in two OLPSO-G in four test functions and equivalent to one test function out of five test functions. 
Hence, it can be claimed that even though the maximum number of fitness evaluations for SGO is smaller than for the other algorithms, SGO is either better than or equivalent to the other algorithms on each benchmark function according to Wilcoxon’s rank-sum test.

Experiment 4: SGO vs JADE, jDE, SaDE, CoDE and EPSDE

The experiments in this section compare the SGO algorithm with SaDE [30], jDE [31], JADE [32], CoDE [33] and EPSDE [34] on nine benchmark functions, which are listed in the Appendix. The results of JADE, jDE and SaDE are taken directly from [32] and reported in Table 4. For CoDE and EPSDE, we have generated results using the codes available from Q. Zhang’s homepage: http://dces.essex.ac.uk/staff/qzhang/. For CoDE, EPSDE and SGO, we have used a population size of 20. The maximum number of fitness evaluations differs from function to function, and the FEs are noted in brackets in each cell of Table 4. Fitness values are shown in Table 4 as means and standard deviations. In Table 4, “NA” means that the experiment was not conducted for that particular function. The best values are shown in boldface. To reach statistically sound conclusions, Wilcoxon’s rank-sum test at a 0.05 significance level has been conducted on the experimental results, and the last three rows of Table 4 summarize the results. According to Wilcoxon’s rank-sum test, the performance of SGO in reporting the optimal value is always better than that of all other algorithms except EPSDE; compared to EPSDE, SGO performs better on five test functions and equivalently on three out of eight test functions. So, it is interesting to note that even though the maximum number of fitness evaluations for SGO is smaller than for the other algorithms, SGO is better than or equivalent to all variants of the DE algorithm in this experiment according to Wilcoxon’s rank-sum test.

Experiment 5: SGO vs. CABC, GABC, RABC and IABC

In this section, we compare SGO with CABC [35], GABC [36], RABC [37] and IABC [38] on eight benchmark functions. The parameters of the algorithms are identical to those in [36]. The maximum number of fitness evaluations differs from function to function, and the FEs are noted in brackets in each cell of Table 5. The results are summarized in Table 5, where the fitness value is reported in terms of mean and standard deviation. The best values are shown in boldface. To reach statistically sound conclusions, Wilcoxon’s rank-sum test at a 0.05 significance level has been conducted on the experimental results, and the last three rows of Table 5 summarize the results. It can be observed from Table 5 that SGO performs better than all the other algorithms. So, we can say that even though the maximum number of fitness evaluations for SGO is smaller than for the other algorithms, SGO is better than all variants of the ABC algorithm in this experiment.

Experiment 6: SGO vs. TLBO

In this experiment, we compare only the TLBO and SGO algorithms. As TLBO is relatively new compared to the other algorithms investigated in our work, we have devoted a special section to comparing our approach with TLBO. Our main objective in this experiment is to see how SGO performs against TLBO in terms of optimal solution and computational cost. The common parameter, population size, is set to 20, and the maximum number of fitness function evaluations is set to 1,000 for both TLBO and SGO.

We used 25 benchmark problems to test the performance of TLBO and our proposed SGO algorithm. The initial range, formulation, characteristics and dimensions of these problems are listed in the Appendix. Each simulation is run 30 times with different random seeds and is terminated on reaching the maximum number of evaluations or on obtaining the global minimum value. The means and standard deviations of the fitness values produced by the algorithms are recorded in Table 6, and the means and standard deviations of the number of fitness evaluations are recorded in Table 7. The best values are shown in boldface. To reach statistically sound conclusions, Wilcoxon’s rank-sum test at a 0.05 significance level has been conducted on the experimental results, and the last three rows of Table 6 summarize the results. It is observed that SGO performs better on 23 test functions and equivalently on 2 test functions.

From Tables 6 and 7, it is clear that SGO gives better results than TLBO in all cases except the step and six-hump camel-back functions. For the Easom, Bohachevsky1, Bohachevsky2, Bohachevsky3, Rastrigin, noncontinuous Rastrigin, multimod and Weierstrass functions, SGO needs fewer fitness evaluations than TLBO, and all these functions reach the optimal solution. For the step and six-hump camel-back functions, TLBO and SGO perform equivalently and both give the optimal result; however, in both cases SGO reaches the optimum value with fewer fitness evaluations than TLBO. So, we can say that SGO is better than the TLBO algorithm in all cases in this experiment. The convergence characteristics of both algorithms are shown in the graphs below (Fig. 2).

Experiment 7: SGO vs. SAABC, GABC, IABC, ABC/Best1, CPSO, DBMPSO, TCPSO and VABC

In this section, we compare SGO with both ABC and PSO variants, namely GABC (Gbest-guided artificial bee colony algorithm) [36], IABC [38], ABC/Best1 [39], SAABC (simulated annealing-based artificial bee colony) [40], VABC (velocity-based artificial bee colony algorithm) [41], CPSO (chaotic particle swarm optimization) [42], DBMPSO (particle swarm optimization with double-bottom chaotic maps) [43] and TCPSO (two-swarm cooperative particle swarm optimization) [44], on 23 benchmark functions, of which 15 are multidimensional and 8 are fixed-dimensional. All functions are described in [41]. The maximum number of fitness evaluations is 40,000, the population size is 40, and the parameters of the algorithms are identical to those in [41]. The results of SAABC, GABC, IABC, ABC/Best1, VABC, CPSO, DBMPSO and TCPSO are taken from [41] for comparison with SGO. The comparison results are shown in Tables 8, 9 and 10 in terms of the means and standard deviations (Std) of the solutions over 30 independent runs. Tables 8 and 9 show the results for 60 and 100 dimensions, respectively, on the multidimensional functions, and Table 10 reports the results on the fixed-dimensional functions. The best values are shown in boldface.

As seen from the results in Tables 8 and 9, SGO found the global optimal values for all the functions except \( F_6 \), \( F_7 \), \( F_9 \), \( F_{12} \), \( F_{14} \) and \( F_{15} \) for both 60 and 100 dimensions. For test functions \( F_9 \), \( F_{12} \), \( F_{14} \) and \( F_{15} \), however, the objective values obtained by SGO are extremely close to the global optima. Similarly, as seen from the results in Table 10, SGO found the global optimal values for the fixed-dimensional functions \( F_{16} \), \( F_{17} \) and \( F_{21} \), and for test functions \( F_{19} \) and \( F_{20} \) the objective values obtained by SGO are extremely close to the global optima.

To reach statistically sound conclusions, Wilcoxon’s rank-sum test at a 0.05 significance level has been conducted on the experimental results, and the last three rows of each table summarize the results. In the 60-dimensional case, according to Wilcoxon’s rank-sum test, SGO is superior to SAABC on 14 test functions and comparable on one out of 15 test functions, and better than GABC on all 15 test functions. It is also found to be better than IABC, ABC/Best1, CPSO, DBMPSO and TCPSO on all 15 test functions. Compared to VABC, our proposed SGO is better on 11 test functions and equivalent on 2 out of 15 test functions.

In the 100-dimensional case, according to Wilcoxon’s rank-sum test, SGO is superior to all algorithms except VABC on all 15 multidimensional test functions. Compared to VABC, our proposed SGO is better on 12 test functions and equivalent on 1 out of 15 test functions.

In the fixed-dimensional case, according to Wilcoxon’s rank-sum test, SGO is superior to SAABC on four test functions and equivalent on three of the eight test functions, and it is better than GABC on five test functions and equivalent on two of the eight. It is also better than IABC, ABC/Best1, VABC, CPSO, DBMPSO and TCPSO on seven, five, three, two, three and seven test functions, respectively, and equivalent to them on one, two, four, five, four and zero test functions, respectively, out of the eight test functions.

Thus, according to Wilcoxon’s rank-sum test, the overall performance of SGO in this experiment is better than that of the other algorithms.
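The better/equivalent tallies reported above rest on a two-sample rank-sum comparison carried out per test function. The following is a minimal sketch of how such a verdict could be produced, assuming a minimization problem, the usual normal approximation for the rank-sum statistic, and no tie correction in the variance. The function names `rank_sum_test` and `compare` and the sample values are illustrative assumptions and are not taken from the paper.

```python
import math

def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum test using the normal approximation.

    Returns (z, p). A negative z means sample `a` tends to hold smaller values.
    """
    n1, n2 = len(a), len(b)
    # Pool both samples, remembering which group each value came from.
    pooled = sorted((v, g) for g, s in ((0, a), (1, b)) for v in s)
    rank_sum_a = 0.0
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0  # mean of ranks i+1 .. j for a tie group
        rank_sum_a += avg_rank * sum(1 for k in range(i, j) if pooled[k][1] == 0)
        i = j
    mean = n1 * (n1 + n2 + 1) / 2.0
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (rank_sum_a - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided p-value
    return z, p

def compare(first, second, alpha=0.05):
    """Classify `first` against `second` when lower objective values are better."""
    z, p = rank_sum_test(first, second)
    if p >= alpha:
        return "equivalent"
    return "better" if z < 0 else "worse"

# Hypothetical final objective values from 30 runs of two algorithms.
runs_a = [1.0 + 0.01 * i for i in range(30)]
runs_b = [2.0 + 0.01 * i for i in range(30)]
print(compare(runs_a, runs_b))
```

Applying `compare` once per test function and counting the three verdicts gives summary rows of the kind described for the tables. Statistical libraries offer equivalent routines, for example `scipy.stats.ranksums`.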

Experiment 8: SGO vs. GPSO, LPSO, FIPS, SPSO, CLPSO, OLPSO and SLPSOA

In this section, SGO is compared with GPSO (global PSO) [45], LPSO (local PSO) [46], FIPS (fully informed particle swarm) [47], SPSO (standard for particle swarm optimization) [48], CLPSO [28], OLPSO [29] and SLPSOA (scatter learning particle swarm optimization algorithm) [49] on the 14 benchmark functions described in [49]. The maximum number of fitness evaluations is 200,000, the population size is 40, and the parameters of the algorithms are identical to those in [49]. The comparison results are shown in Table 11 in terms of the means and standard deviations (Std) of the solutions over 25 independent runs. The results of GPSO, LPSO, FIPS, SPSO, CLPSO, OLPSO and SLPSOA are taken from [49]. As seen from Table 11, SGO found the global optimum for the functions Sphere, Schwefel 2.22, Rastrigin, Griewank, rotated Rastrigin and rotated Griewank. For the test functions noise, Ackley, generalized penalized, generalized penalised1 and rotated Ackley, the objective values obtained by SGO are extremely close to the global optima. The best values are shown in boldface.
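For readers unfamiliar with the named benchmarks, the sketch below gives the textbook forms of four of them (Sphere, Rastrigin, Griewank and Ackley), each with a global minimum of 0 at the origin. These are the commonly used definitions and are an assumption here; the exact variants, search ranges and any shifts used in [49] may differ.

```python
import math

def sphere(x):
    # Unimodal: sum of squares.
    return sum(v * v for v in x)

def rastrigin(x):
    # Multimodal: a quadratic bowl with a cosine ripple.
    return sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) + 10.0 for v in x)

def griewank(x):
    # Multimodal: quadratic term minus a product of cosines.
    s = sum(v * v for v in x) / 4000.0
    p = math.prod(math.cos(v / math.sqrt(i)) for i, v in enumerate(x, start=1))
    return s - p + 1.0

def ackley(x):
    # Multimodal: nearly flat outer region with a narrow central basin.
    n = len(x)
    a = -20.0 * math.exp(-0.2 * math.sqrt(sum(v * v for v in x) / n))
    b = -math.exp(sum(math.cos(2.0 * math.pi * v) for v in x) / n)
    return a + b + 20.0 + math.e

origin = [0.0] * 30
for f in (sphere, rastrigin, griewank, ackley):
    print(f.__name__, f(origin))
```

In double-precision arithmetic the Ackley expression can leave a tiny rounding residue at the origin instead of an exact zero, so a value within rounding error of 0 is normally treated as the optimum for that function.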

To draw statistically sound conclusions, Wilcoxon’s rank-sum test at a 0.05 significance level was conducted on the experimental results, and the last three rows of Table 11 summarize the outcomes. According to Wilcoxon’s rank-sum test, SGO is superior to GPSO, LPSO, FIPS, SPSO, CLPSO, OLPSO and SLPSOA on 13, 14, 13, 12, 12, 6 and 7 test functions, respectively, out of the 14 test functions. SGO is equivalent to OLPSO on four test functions and to SLPSOA on two test functions. Thus, according to Wilcoxon’s rank-sum test, SGO performs better than the other algorithms in this experiment.

Experiment 8: SGO vs. FIPS-PSO, CPSO-H, DMSPSO-LS, CLPSO, APSO, SSG-PSO, SSG-PSO-DFP, SSG-PSO-BFGS, SSG-PSO-NM and SSG-PSO-PS

To compare SGO comprehensively with SSG-PSO (superior solutions guided PSO with the individual level based mutation operator) and several of its variants that use different local search techniques, 21 benchmark functions of different types were used, including unimodal, multimodal, mis-scaled and rotated functions. Detailed information on the test functions is given in [50]. The popular PSO variants considered are FIPS-PSO (fully informed PSO), CPSO-H (cooperative based PSO), DMSPSO-LS (dynamic multi-swarm particle swarm optimizer with local search), CLPSO (comprehensive learning PSO) and APSO (adaptive particle swarm optimization). The SSG-PSO variants with different local search techniques are SSG-PSO-DFP (SSG-PSO with the Davidon–Fletcher–Powell method), SSG-PSO-BFGS (SSG-PSO with the Broyden–Fletcher–Goldfarb–Shanno method), SSG-PSO-NM (SSG-PSO with Nelder–Mead simplex search) and SSG-PSO-PS (SSG-PSO with pattern search).

The maximum number of fitness evaluations is 300,000, the population size is 40, and the parameters of the algorithms are identical to those in [50]. The comparison results are shown in Table 12 in terms of the means and standard deviations (Std) of the solutions over 30 independent runs. The results of all PSO variants and of the SSG-PSO variants with different local search techniques are taken from [50].

As seen from Table 12, SGO found the global optimum for all test functions except Rosenbrock, Ackley, scaled Rosenbrock 100, rotated Rosenbrock and rotated Ackley. For Ackley and rotated Ackley, the objective values obtained by SGO are extremely close to the global optima. The best values are shown in boldface. To draw statistically sound conclusions, Wilcoxon’s rank-sum test at a 0.05 significance level was conducted on the experimental results, and the last three rows of Table 12 summarize the outcomes. According to Wilcoxon’s rank-sum test, SGO is superior to FIPS-PSO, CPSO-H, DMSPSO-LS, CLPSO, APSO, SSG-PSO, SSG-PSO-DFP, SSG-PSO-BFGS, SSG-PSO-NM and SSG-PSO-PS on 20, 21, 17, 14, 20, 14, 13, 13, 13 and 12 test functions, respectively, and equivalent to them on 1, 0, 2, 6, 1, 6, 6, 6, 6 and 6 test functions, respectively, out of the 21 test functions. Thus, according to Wilcoxon’s rank-sum test, SGO performs better than the other algorithms in this experiment.

Experiment 9: SGO vs. jDE, SaDE, EPSDE, CoDE, MPEDE, CLPSO, CMA-ES, GL-25 and TLBO

To study the performance of the proposed SGO, the 25 test functions proposed in the 2005 special session on real-parameter optimization were used. A detailed description of these test functions can be found in [21]. The number of decision variables (the dimension of the function) was set to 30 for all test functions. For each algorithm and each test function, 25 independent runs were conducted, with 300,000 function evaluations (FEs) as the termination criterion.
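The protocol described here (a fixed number of independent runs, each stopped after a fixed budget of function evaluations, then summarized by mean and standard deviation) can be sketched as follows. The optimizer below is plain uniform random search, used only as a stand-in and explicitly not SGO. The Sphere objective, the search range and the much smaller budget are illustrative assumptions chosen so that the sketch runs quickly.

```python
import random
import statistics

def sphere(x):
    return sum(v * v for v in x)

def random_search(objective, dim, max_fes, rng, low=-100.0, high=100.0):
    """Stand-in optimizer (NOT SGO): best of max_fes uniform random samples."""
    best = float("inf")
    for _ in range(max_fes):  # one objective call per iteration = one FE
        candidate = [rng.uniform(low, high) for _ in range(dim)]
        best = min(best, objective(candidate))
    return best

def run_protocol(objective, dim, runs, max_fes, seed=0):
    """Repeat the optimizer `runs` times and summarize the final values."""
    results = []
    for r in range(runs):
        rng = random.Random(seed + r)  # separate, reproducible stream per run
        results.append(random_search(objective, dim, max_fes, rng))
    return statistics.mean(results), statistics.stdev(results), results

# 25 runs as in the text; dimension and FE budget are reduced for illustration.
mean, std, results = run_protocol(sphere, dim=5, runs=25, max_fes=200)
print(f"mean={mean:.4e} std={std:.4e}")
```

Counting one FE per objective call, instead of per iteration or per generation, keeps the budget comparable across algorithms with different population sizes.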

SGO was compared with six DE variants, i.e., JADE [32], jDE [31], SaDE [30], EPSDE [34], CoDE [33] and MPEDE [51], and with four other approaches, i.e., CLPSO [28], CMA-ES [52], GL-25 [53] and TLBO [24]. In our experiments, the parameter settings of these methods were the same as in their original papers. The number of FEs for all these methods was 300,000, and each method was run 25 times on each test function. For the proposed SGO algorithm, the population size was set to 100, and the number of FEs was 300,000, the same as for the other methods. The best values are shown in boldface. To draw statistically sound conclusions, Wilcoxon’s rank-sum test at a 0.05 significance level was conducted on the experimental results, and the last three rows of Tables 13 and 14 summarize the outcomes.

According to Wilcoxon’s rank-sum test, SGO performs better than JADE on 15 test functions and is equivalent on 2 of the 25 test functions. It is better than jDE on 16 test functions and equivalent on 2, and better than SaDE on 16 test functions and equivalent on 2. SGO is better than EPSDE on 14 test functions and equivalent on 1. From the tables, it can be verified that SGO is better than CoDE on 12 test functions and equivalent on 2, better than MPEDE on 13 test functions and equivalent on 2, and better than CLPSO on 16 test functions and equivalent on 3. Compared with CMA-ES, SGO is better on 16 test functions and equivalent on 2. SGO is better than GL-25 on 22 test functions and equivalent on 2, and better than TLBO on 17 test functions and equivalent on 4. All counts are out of the 25 test functions. Thus, according to Wilcoxon’s rank-sum test, the performance of SGO is better than that of the other algorithms.
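As a quick arithmetic check on these counts, the remaining functions for each competitor can be obtained by subtraction. The sketch below assumes that every one of the 25 functions falls into exactly one of the three outcomes (better, equivalent, worse). The "worse" figures it prints are derived under that assumption and are not quoted from the tables.

```python
TOTAL = 25  # CEC 2005 test functions used in this experiment

# (better, equivalent) counts for SGO against each competitor, as stated in the text.
reported = {
    "JADE": (15, 2), "jDE": (16, 2), "SaDE": (16, 2), "EPSDE": (14, 1),
    "CoDE": (12, 2), "MPEDE": (13, 2), "CLPSO": (16, 3), "CMA-ES": (16, 2),
    "GL-25": (22, 2), "TLBO": (17, 4),
}

# Derived: functions on which SGO is neither better nor equivalent.
tally = {name: (b, e, TOTAL - b - e) for name, (b, e) in reported.items()}

for name, (b, e, w) in tally.items():
    print(f"{name:7s} better={b:2d} equivalent={e:2d} worse={w:2d}")
```

Under this assumption, CoDE is the competitor against which SGO has the smallest margin (12 better versus 11 worse), while GL-25 is the one with the largest.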

Experiment 10: SGO vs. PSO, CPSO, CLPSO, CMA-ES, G3-PCX, DE, and TLBO using composite functions

In this experiment, we consider six composite test functions and eight algorithms: particle swarm optimizer (PSO) [7], cooperative PSO (CPSO) [54], comprehensive learning PSO (CLPSO) [28], evolution strategy with covariance matrix adaptation (CMA-ES) [55], G3 model with PCX crossover (G3-PCX) [56], differential evolution (DE) [2], teaching–learning-based optimization (TLBO) [24] and the proposed SGO. Detailed descriptions of the functions are given in [57] and [25], and the algorithms are described in their respective papers. The parameter settings for the composite functions are as in [25].

Table 15 shows the results obtained by the eight algorithms on the six composite functions. For each test function, each algorithm is run 20 times, and the maximum number of fitness evaluations is 50,000 for all algorithms. For the proposed algorithm, the population size is set to 100. The mean values of the results are recorded in Table 15. The best values are shown in boldface. To draw statistically sound conclusions, Wilcoxon’s rank-sum test at a 0.05 significance level was conducted on the experimental results, and the last three rows of Table 15 summarize the outcomes.

According to Wilcoxon’s rank-sum test, SGO performs better than PSO and CPSO on all six test functions, and better than CLPSO on three of the six test functions. SGO is better than CMA-ES on all six test functions and better than G3-PCX on five of the six. It is better than DE on three test functions and equivalent on one, and better than TLBO on three of the six test functions. Thus, from Table 15, SGO shows better results than all of the seven competing algorithms except CLPSO and TLBO, against which it shows equivalent results. The last column of Table 15 also shows that SGO sometimes reaches the optimal solution.

Conclusion

This paper proposes a new, efficient optimization algorithm inspired by the social behavior of humans in solving a complex problem. When a single person attempts a problem or task, it may prove too difficult to solve or may remain unsolved. When a group of people attempts the same problem, the difficulty is reduced and a previously unsolvable problem may become solvable. In a social group, people are influenced by the characteristics (i.e., traits) of a successful person; eventually, they modify their own traits accordingly and become capable of addressing complex problems and situations. This concept motivated us to propose a new optimization algorithm known as social group optimization (SGO). The concept and the mathematical formulation of the SGO algorithm are explained in this paper with a flowchart. To judge the effectiveness of SGO, extensive experiments have been conducted on a number of different unconstrained benchmark functions as well as 25 standard numerical benchmark functions taken from the IEEE Congress on Evolutionary Computation 2005 competition. Performance comparisons are made with state-of-the-art optimization techniques such as GA, PSO, DE, ABC and its variants, and the recently developed TLBO. Different variants of the popular evolutionary optimization techniques are also compared with SGO. The experimental results show that the proposed social group optimization outperforms all investigated optimization techniques in computational cost and also provides optimal solutions for most of the considered functions. A further advantage of the algorithm is that it is easier to understand and to implement than the other algorithms and their variants. How SGO performs on multi-objective optimization problems remains to be studied in future work.

References

Tang KS et al (1996) Genetic algorithms and their applications. IEEE Signal Process Mag 13:22–37

Storn R, Price K (2010) Differential evolution—a simple and efficient heuristic for global optimization over continuous spaces. J Global Optim 23:689–694

Passino K (2002) Biomimicry of bacterial foraging for distributed optimization and control. IEEE Control Syst Mag 22(3):52–67

Eusuff M et al (2006) Shuffled frog-leaping algorithm: a memetic meta-heuristic for discrete optimization. Eng Optim 38(2):129–154

Johnson DS et al (1989) Optimization by simulated annealing. An experimental evaluation Part I. Graph partitioning. Oper Res 37:865–892

Dorigo M, Gambardella LM (1997) Ant colony system: a cooperative learning approach to the traveling salesman problem. IEEE Trans Evol Comput 12:53–66

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: Proc. IEEE Congr. Evol. Comput. Australia, pp 1942–1948

Karaboga D (2005) An idea based on honeybee swarm for numerical optimization. Technical Report TR06. Erciyes University, Engineering Faculty, Computer Engineering Department

Rashedi E et al (2009) GSA: a gravitational search algorithm. Inf Sci 179:2232–2248

Yang XS, Deb S (2010) Engineering optimisation by cuckoo search. Int J Math Modell Numer Optim 1(4):330–343

Simon D (2008) Biogeography-based optimization. IEEE Trans Evol Comput 12:702–713

Hosseini HS (2007) Problem solving by intelligent water drops. In: IEEE Congress on Evolutionary Computation, CEC vol 2007, pp 3226–3231

Bramer M et al (2010) Firefly algorithm, Lévy flights and global optimization. In: Research and Development in Intelligent Systems, vol XXVI. Springer, London, pp 209–218

Fathian M et al (2007) Application of honey-bee mating optimization algorithm on clustering. Appl Math Comput 190:1502–1513

Marinakis Y et al (2011) Honey bees mating optimization algorithm for the Euclidean traveling salesman problem. Inf Sci 181:4684–4698

Gonzalez J, Pelta D, Cruz C, Terrazas G, Krasnogor N, Yang X-S (2011) A new metaheuristic bat-inspired algorithm. In: Nature Inspired Cooperative Strategies for Optimization (NICSO 2010), Springer, Berlin/Heidelberg, pp 65–74

Geem Z, Yang X-S (2009) Harmony search as a metaheuristic algorithm. In: Music-Inspired Harmony Search Algorithm. Springer, Berlin/Heidelberg, pp 1–14

Erol OK, Eksin I (2006) A new optimization method: big bang-big crunch. Adv Eng Softw 37:106–111

Hatamlou A (2013) Black hole: a new heuristic optimization approach for data clustering. Inf Sci 222:175–184

Rao RV et al (2011) Teaching-learning-based optimization: a novel method for constrained mechanical design optimization problems. Elsevier Comput Aided Des 43:303–315

Suganthan PN et al (2005) Problem definitions and evaluation criteria for the CEC 2005 special session on real-parameter optimization, Nanyang Technol. Univ., Singapore, IIT Kanpur, Kanpur, India, Tech. Rep. KanGAL #2005005, May 2005

Karaboga D, Akay B (2009) A comparative study of Artificial Bee Colony algorithm. Appl Math Comput 214:108–132

Karaboga D, Akay B (2009) Artificial bee colony (ABC), harmony search and bees algorithms on numerical optimization. In: Proceeding of IPROMS-2009 on Innovative Production Machines and Systems, Cardiff, UK

Rao RV, Savsani VJ, Vakharia DP (2012) Teaching learning based optimization: an optimization method for continuous non-linear large scale problems. Inf Sci 183:1–15

Rao RV, Waghmare GG (2013) Solving composite test functions using teaching-learning-based optimization algorithm. In: Proc. of Int. Conf. on Frontiers of Intelligent Computing. Springer AISC 199, pp 395–403

Ratnaweera A, Halgamuge S, Watson H (2004) Self-organizing hierarchical particle swarm optimizer with time-varying acceleration coefficients. IEEE Trans Evol Comput 8:240–255

Zhan ZH, Zhang J, Li Y, Chung SH (2009) Adaptive particle swarm optimization. IEEE Trans Syst Man Cybern B Cybern 39:1362–1381

Liang JJ, Qin AK, Suganthan PN, Baskar S (2006) Comprehensive learning particle swarm optimizer for global optimization of multimodal functions. IEEE Trans Evol Comput 10(3):281–295

Zhan Z-H, Zhang J, Li Y, Shi Y-H (2011) Orthogonal learning particle swarm optimization. IEEE Trans Evol Comput 15(6):832–847

Qin AK, Huang VL, Suganthan PN (2009) Differential evolution algorithm with strategy adaptation for global numerical optimization. IEEE Trans Evol Comput 13(2):398–417

Brest J et al (2006) Self-adapting control parameters in differential evolution: a comparative study on numerical benchmark problems. IEEE Trans Evol Comput 10(6):646–657

Zhang J, Sanderson AC (2009) JADE: adaptive differential evolution with optional external archive. IEEE Trans Evol Comput 13(5):945–958

Wang Y, Cai Z, Zhang Q (2011) Differential evolution with composite trial vector generation strategies and control parameters. IEEE Trans Evol Comput 15(1):55–66

Mallipeddi R et al (2011) Differential evolution algorithm with ensemble of parameters and mutation strategies. Appl Soft Comput 11(2):1679–1696

Alatas B (2010) Chaotic bee colony algorithms for global numerical optimization. Expert Syst Appl 37:5682–5687

Zhu GP, Kwong S (2010) Gbest-guided artificial bee colony algorithm for numerical function optimization. Appl Math Comput 217:3166–3173

Kang F, Li JJ, Ma ZY (2011) Rosenbrock artificial bee colony algorithm for accurate global optimization of numerical functions. Inf Sci 12:3508–3531

Gao W, Liu S (2011) Improved artificial bee colony algorithm for global optimization. Inf Process Lett 111:871–882

Gao W, Liu S, Huang L (2012) A global best artificial bee colony algorithm for global optimization. J Comput Appl Math 236:2741–2753

Chen S-M, Sarosh A, Dong Y-F (2012) Simulated annealing based artificial bee colony algorithm for global numerical optimization. Appl Math Comput 219:3575–3589

Imanian N, Shiri ME, Moradi P (2014) Velocity based artificial bee colony algorithm for high dimensional continuous optimization problems. Eng Appl Artif Intell 36:148–163

Chuang L-Y, Hsiao C-J, Yang C-H (2011) Chaotic particle swarm optimization for data clustering. Expert Syst Appl 38:14555–14563

Yang C-H, Tsai S-W, Chuang L-Y, Yang C-H (2012) An improved particle swarm optimization with double-bottom chaotic maps for numerical optimization. Appl Math Comput 219:260–279

Sun S, Li J (2014) A two-swarm cooperative particle swarms optimization. Swarm Evol Comput 15:1–18

Shi YH, Eberhart RC (1998) A modified particle swarm optimizer. In: Proc. IEEE World Congr. Comput. Intell. May 1998, pp 69–73

Kennedy J (1999) Small worlds and mega-minds: effects of neighborhood topology on particle swarm performance. In: Proc. IEEE Congr. Evol. Comput., July 1999, pp 1931–1938

Mendes R, Kennedy J, Neves J (2004) The fully informed particle swarm: simpler, maybe better. IEEE Trans Evol Comput 8(3):204–210

Bratton D, Kennedy J (2007) Defining a standard for particle swarm optimization. In: Proc. IEEE Swarm Intel. Symp. April 2007, pp 120–127

Ren Z, Zhang A, Wen C, Feng Z (2014) A scatter learning particle swarm optimization algorithm for multimodal problems. IEEE Trans Cybern 44(7):1127–1140

Wu G, Qiu D, Yu Y, Pedrycz W, Ma M, Li H (2014) Superior solution guided particle swarm optimization combined with local search techniques. Expert Syst Appl 41:7536–7548

Wu G, Mallipeddi R, Suganthan PN, Wang R, Chen H (2015) Differential evolution with multi-population based ensemble of mutation strategies. Inf Sci. doi:10.1016/j.ins.2015.09.009

Hansen N, Ostermeier A (2001) Completely derandomized self adaptation in evolution strategies. Evol Comput 9(2):159–195

Garcia-Martinez C, Lozano M, Herrera F, Molina D, Sanchez AM (2008) Global and local real-coded genetic algorithms based on parent-centric crossover operators. Eur J Oper Res 185(3):1088–1113

van den Bergh F, Engelbrecht AP (2004) A cooperative approach to particle swarm optimization. IEEE Trans Evol Comput 8(3):225–239

Hansen N, Muller SD, Koumoutsakos P (2003) Reducing the time complexity of the derandomized evolution strategy with covariance matrix adaptation (CMA-ES). Evol Comput 11(1):1–18

Deb K, Anand A, Joshi D (2002) A computationally efficient evolutionary algorithm for real-parameter optimization. KanGAL Report No. 2002003, April 2002

Liang JJ, Suganthan PN, Deb K (2005) Novel composition test functions for numerical global optimization. IEEE Trans Evol Comput 5(1):1141–1153

Appendix: Benchmark functions

All problems are divided into four categories, US (unimodal separable), MS (multimodal separable), UN (unimodal non-separable) and MN (multimodal non-separable). The range, formulation, characteristics and dimensions of these problems are listed in Table 16.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Satapathy, S., Naik, A. Social group optimization (SGO): a new population evolutionary optimization technique. Complex Intell. Syst. 2, 173–203 (2016). https://doi.org/10.1007/s40747-016-0022-8

DOI: https://doi.org/10.1007/s40747-016-0022-8