Abstract

Although virtual patients (VPs) have been applied in various medical and dental education programmes, it remains uncertain whether their design was based on specific instructional design (ID) principles and, therefore, how they could be improved. In this study, we evaluated the extent to which ID principles have played a role in the development of VPs for clinical skills training in dental education. First, as a frame of reference, we mapped key ID principles identified in VPs and medical simulations onto the widely used four-component instructional design (4C/ID) model. Next, we conducted a literature search using LibSearch, a single search tool accessing databases such as MEDLINE, PubMed, Embase, CINAHL, ERIC, PsycInfo and Scopus. Following the PRISMA framework for systematic reviews, we selected 21 studies that used screen-based VP simulations for undergraduate dental students. In the data analysis, we reviewed each study for the key instructional design features connected to the components of the 4C/ID model. Overall, the results indicate that a structured approach to the design and implementation of VPs is likely to have a positive effect on their use. Some ID principles are shared across studies, such as the importance of clinical task variation for improving the transfer of learning, whereas others, such as the choice of learning mode or the use of cognitive feedback, are ambiguous. Given its impact on students’ ability to establish effective cognitive schemas and the opportunity it offers to compare and study designs, we recommend a more rigorous approach, such as 4C/ID, to the design of VPs.


Dental education increasingly emphasises the need to acquire complex theoretical knowledge, to integrate this knowledge with skills and to apply both to new clinical scenarios and situations. Although it is essential to offer students diverse clinical practice opportunities, this is not always possible due to a lack of suitable patients, the existing gap between theoretical knowledge and practical skills, an increased focus on patient safety, a lack of explicit representation of thinking processes in clinical reasoning, time-intensive tutor guidance and difficult planning (Kononowicz et al., 2016; Postma & White, 2015; Ray et al., 2017; Wang et al., 2020). Therefore, alternative teaching tools and methods such as virtual patients (VPs) have become increasingly important in dental education, and they have by now been accepted as a viable supplementary method (Kleinert et al., 2007; Weiner et al., 2016).

Using VPs in dental education can afford students the opportunity to practise clinical skills in a safe learning situation at their own pace, whilst also receiving individual feedback (Kleinert et al., 2007). The aim of VPs is to provide novices with clinical training on how to manage clinical cases, but without the risks to patients that clinical rotations may involve (Clark et al., 2012). They allow students to practise realistic simulated interactions with patients and help them to learn complex interviewing skills, make risk assessments and develop treatment planning strategies before their first real encounter with patients in the clinic (Boynton et al., 2007; Weiner et al., 2016). As such, VPs can bridge the gap between the preclinical and clinical setting, and between theoretical knowledge and real patient practice (Boynton et al., 2007). Their major benefit is that they allow learners to practise diverse clinical skills repeatedly and deliberately at any time, anywhere and at any point in the curriculum (Weiner et al., 2016).

Despite these benefits, Kononowicz et al. (2016) expressed several concerns about the use of VPs in their systematic review. For instance, rather than being used as a supplementary tool, VPs might sometimes replace real patients altogether; learners may be less empathic because VPs are text-intensive; students have little control over the interaction with a VP; and VP contents may not be adequately integrated with the overall intended learning outcomes. The authors went on to argue that VP practices should therefore be aligned with these outcomes and be incorporated within educational programmes and the curriculum; otherwise, new opportunities might be ignored or rejected, or might cause learning overload (Kononowicz et al., 2016). In a similar vein, previous literature reviews (Chiniara et al., 2013; Cook et al., 2013; Fries et al., 2021) have concluded that there are substantial gaps in the empirical evidence regarding the value, methodologies and outcomes of simulation in healthcare. Hence, we need more evidence on how to design simulations and integrate them into curricula or training programmes (Chiniara et al., 2013).

In the literature (Cook et al., 2013; Ellaway et al., 2014; Issenberg et al., 2005), we have identified different approaches to describing research on VP design and implementation in medical and dental education. Ellaway (2014), for instance, focused on embedding VPs and concluded that medical education must find the proper ways to use them, because their effectiveness depends on how they are designed and on the educational activities around them. Few studies, however, have examined the extent to which existing instructional design (ID) principles have been considered in the design and effective use of VPs. Issenberg et al. (2005) identified 10 ID features of high-fidelity medical simulations that lead to the most effective learning: ‘feedback, repetitive practice, curriculum integration, range of difficulty level, multiple learning strategies, capture clinical variation, controlled environment, individualised learning, defined outcomes and simulator validity’. In another comprehensive review, Cook et al. (2013) evaluated and compared the effectiveness of ID features and concluded that the empirical evidence supports the following features: ‘range of difficulty, repetitive practice, distributed practice, cognitive interactivity, multiple learning strategies, individualised learning, mastery learning, feedback, longer time, and clinical variation’.

Although these reviews connected specific ID principles to effective simulation design, they are not linked to any existing, integrated ID model and are therefore of limited use in building future VPs. This is important because the quality and effectiveness of a learning experience are the product of sound instructional design. Essentially, ID theories offer a framework for selecting appropriate strategies and predicting their effectiveness. In effective learning environments, ID models guide the choice of instructional strategies that elicit appropriate cognitive processes. It is with good reason, therefore, that Cook et al. (2013) called for future research that directly compares alternative IDs in order to shed more light on the mechanisms of effective simulation-based instruction.

Chiniara et al. (2013) established a conceptual framework for simulation in healthcare education. Although it spans four levels, the proposed framework is not very specific about how a simulation should be designed, nor does it investigate whether and how simulation helps learners. When education is effective, students are able to transfer their knowledge to real clinical practice. Early education, however, often focuses on theoretical aspects and science-oriented presentations of knowledge without making ample connections to clinical practice (Kononowicz et al., 2016). New educational approaches have emphasised instructional methods that ensure the integration and application of students' knowledge and skills in effective clinical practice (Allaire, 2015; van Merriënboer & Kester, 2014). One of these approaches is the four-component instructional design (4C/ID) model, which targets the development of complex skills and the subsequent transfer of learning from the classroom to new and real situations. The model is accompanied by a ten-step process (van Merriënboer & Kirschner, 2018) detailing how to design instruction for complex learning. The assumption underlying this model is that the design of complex learning environments should encompass four basic components, specifically: (a) learning tasks that are based on authentic, whole tasks, (b) supportive information, (c) procedural information and (d) part-task practice (van Merriënboer & Kirschner, 2018).

Few literature reviews have gone beyond a set of ID principles by selecting an ID model and scrutinising its application in the selected studies. The present study aimed to determine whether, and to what extent, the principles of a given, proven and closely connected ID model have been applied in VP design in dental education, and what we can learn from this in terms of strengths and weaknesses. The 4C/ID model aims to help instructional designers develop educational programmes aimed at teaching complex skills or professional competencies. We selected this model not only because it targets the development of complex skills and the transfer of learning from theory to practice, but also because it has been widely researched and used for various types of medical simulations (e.g. de Melo et al., 2017; Maggio et al., 2015; Vandewaetere et al., 2015). Moreover, the model synthesises many of the points that were considered in other reviews. In the following paragraphs, we will first introduce the 4C/ID model and connect it with the features identified in the aforementioned reviews (Cook et al., 2013; Issenberg et al., 2005). Based on this knowledge, we will subsequently review a set of studies on VPs in dental education with the aim of understanding the extent to which they considered these ID principles to make effective use of VPs in education.

Theoretical framework: the 4C/ID model and key ID features

As stated above, the underlying framework of this study is the 4C/ID model. The assumption underlying this model is that the design of complex learning environments should encompass four basic components. To focus our study, we will introduce each of these components and connect them with the features identified in previous reviews:

(a) Learning tasks. These tasks should be authentic and based on real-life situations so as to facilitate the integration of knowledge, skills and attitudes as well as the transfer of learning. Moreover, sets of learning tasks should exhibit a high degree of variability and have decreasing levels of learner support and guidance within each task class (van Merriënboer & Kirschner, 2018). In practice, this means ensuring clinical/task variation, i.e. diversity in patients' clinical scenarios (Cook et al., 2013; Issenberg et al., 2005), and using different instructional strategies to scaffold learning, with tasks ranging from worked examples to patient cases (Cook et al., 2013). The design of these learning tasks should also take account of the learning mode: will the task be presented to an individual learner or to a group of learners? This decision should be based on the learners' needs or the complexity of the task (Issenberg et al., 2005; Kirschner et al., 2009), which brings us to another ID feature: task difficulty. According to this principle, tasks should vary not only in content but also in complexity, which depends on the number of elements included in the task and the degree to which they interact (van Merriënboer & Kirschner, 2018). Previous studies have indeed flagged the range of task complexity as a significant variable in simulation-based medical education and concluded that practising medical skills across a range of difficulty levels can improve learning (Issenberg et al., 2005). Finally, instructional designers should ensure that the learning tasks are integrated with the remaining three components (supportive information, procedural information and part-task practice). Such integration is essential to help learners understand key concepts by connecting theory and practice through learning tasks (Mora & Coto, 2014). As Issenberg et al. (2005) argued, rather than being offered as an exceptional or stand-alone activity, simulation-based education should be part of the standard curriculum and be spread over time in the form of distributed practice.

(b) Supportive information. Defined per task class, this information offers learners a bridge between knowledge and practice, allowing them to carry out the non-routine aspects of learning tasks successfully. It explains how a domain is organised, describes systematic approaches to problem solving (e.g. diagnosis – treatment – follow-up) and introduces the general or exploratory principles that help learners complete each phase successfully (van Merriënboer & Kirschner, 2018). In addition, it includes cognitive interactivity/feedback, defined as ‘the receipt, processing, and formulation of the learner's internal thoughts and other cognitive processes in response to events in the instructional environment’ (Song et al., 2014, p. 2). This interactivity should also encompass reflection strategies such as comparison, elaboration and entrapment to encourage learners' cognitive engagement. Through prompts, cues and questions, cognitive feedback encourages students to reflect on the quality of their problem-solving processes and solutions in order to build more effective cognitive schemas and improve future performance (van Merriënboer & Kirschner, 2018).

(c) Procedural information. Necessary to perform the routine aspects of a learning task, this information is best presented just in time, while learners are working on the task, to facilitate schema automation. It quickly fades as students gain expertise. At this level, learners may also receive corrective feedback on the outcomes of their performance. Based on this corrective feedback as well as the cognitive feedback, learners are able to self-assess and monitor their progress in the learning process. Unlike cognitive feedback, however, procedural information helps learners to identify and correct errors relevant to the task at hand (van Merriënboer & Kirschner, 2018).

(d) Part-task practice. This final component calls for extensive repetitive practice of routine aspects of a task in order to reach a high level of automaticity. It is typically required when learners must attain a set standard for routine aspects of a task to qualify for a task level, and when the learning tasks themselves do not provide the required amount of practice. The aim of part-task practice is to promote skills acquisition and the transfer of skilled behaviour from simulator settings to patient care settings (Cook et al., 2013).

Questions

Using the 4C/ID model, we attempted to structure key ID features that apply to the design of virtual patients in dental education. Such an overview might aid the systematic analysis of VP design. The results of such an analysis will not only reveal the strengths and weaknesses of a specific design under scrutiny, but will also indicate whether the model is covered sufficiently to recommend its use for future VP design. Adopting a shared ID model goes beyond isolated ID principles and will facilitate future research that compares alternative IDs.

In this study, we therefore addressed the following research questions:

- To what extent have the principles of a given, proven and task-focused ID model been applied in VP design in dental education?

- What can we learn from this application in terms of strengths and weaknesses?

To this end, we reviewed studies on VPs in dental education to find out how much they considered the ID aspects from the perspective of the 4C/ID model.

Methods

Protocol

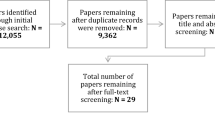

We conducted this narrative review in accordance with the PRISMA guideline (Preferred Reporting Items for Systematic Reviews and Meta-Analyses; Page et al., 2021), as far as applicable. Figure 1 gives an overview of the main flow of the study.

Databases and search strategies

Covering the period from 2000 to 23 February 2022, we systematically searched Embase, ERIC, PubMed, MEDLINE, CINAHL, Scopus and PsycInfo for studies that used VPs in dental education. For this search, we used a single tool: the LibSearch engine. We restricted our search to peer-reviewed articles in English. The search strategy included the following terms addressing VP simulation:

(kw:(“virtual patient” OR “Patient Simulation” OR “Computer Simulation” OR “Computeri?ed Model”) OR ab:(“virtual patient” OR “Patient Simulation” OR “Computer Simulation” OR “Computeri?ed Model”))

To zoom in on dental education, we narrowed down the search by adding the following terms:

AND (kw:(dentistry) OR ab:(dentistry) OR kw:(“dental”) OR ab:(“dental”)) AND (kw:(“educ*” OR “teach*” OR “train*” OR “learn*” OR “instruct*” OR “stud*”) OR ab:(“educ*” OR “teach*” OR “train*” OR “learn*” OR “instruct*” OR “stud*”))

Consequently, the final search string read:

(kw:(“virtual patient” OR “Patient Simulation” OR “Computer Simulation” OR “Computeri?ed Model”) OR ab:(“virtual patient” OR “Patient Simulation” OR “Computer Simulation” OR “Computeri?ed Model”)) AND (kw:(dentistry) OR ab:(dentistry) OR kw:(“dental”) OR ab:(“dental”)) AND (kw:(“educ*” OR “teach*” OR “train*” OR “learn*” OR “instruct*” OR “stud*”) OR ab:(“educ*” OR “teach*” OR “train*” OR “learn*” OR “instruct*” OR “stud*”))
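To make the Boolean logic of this search string concrete, the following Python sketch applies its three concept blocks (VP terms, dental terms and education terms, combined with AND, each matched in either the keywords or the abstract) to a single record. It is an illustration under stated assumptions, not a reproduction of LibSearch: we assume that `*` matches any trailing word characters, that `?` matches exactly one character, that matching is case-insensitive, and that phrases are matched exactly, without stemming. The record format and the two example records are hypothetical.

```python
import re

# Term lists copied from the final search string.
VP_TERMS = ["virtual patient", "Patient Simulation", "Computer Simulation", "Computeri?ed Model"]
DENTAL_TERMS = ["dentistry", "dental"]
EDU_TERMS = ["educ*", "teach*", "train*", "learn*", "instruct*", "stud*"]


def term_to_regex(term: str) -> re.Pattern:
    """Translate a term with * and ? wildcards into a case-insensitive regex.

    Assumed semantics (not LibSearch's documented behaviour):
    '*' = zero or more word characters, '?' = exactly one character.
    """
    parts = []
    for ch in term:
        if ch == "*":
            parts.append(r"\w*")
        elif ch == "?":
            parts.append(".")
        else:
            parts.append(re.escape(ch))
    return re.compile(r"\b" + "".join(parts) + r"\b", re.IGNORECASE)


def any_term(terms, record) -> bool:
    """kw:(...) OR ab:(...): true if any term matches a keyword or the abstract."""
    # Each keyword is searched separately so a phrase cannot span two keywords.
    fields = list(record["keywords"]) + [record["abstract"]]
    return any(term_to_regex(t).search(f) for t in terms for f in fields)


def matches_query(record) -> bool:
    """Combine the three concept blocks with AND, as in the final search string."""
    return (any_term(VP_TERMS, record)
            and any_term(DENTAL_TERMS, record)
            and any_term(EDU_TERMS, record))


# Hypothetical records for illustration only.
records = [
    {"keywords": ["Computerized Model", "dental"],
     "abstract": "We trained undergraduate students in treatment planning."},
    {"keywords": ["virtual patient"],
     "abstract": "Medical students practised history taking."},
]
print([matches_query(r) for r in records])  # [True, False]
```

The second record is rejected because neither of its fields contains a dental term, which is exactly the narrowing step the added AND block performs.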

Study inclusion and eligibility criteria

We included peer-reviewed studies published in English that investigated the use of screen-based (computer-based) VPs representing patient scenarios with the aim of teaching dental students at any stage of training or practice. We excluded studies in which VPs were combined with other kinds of simulation, such as immersive virtual, augmented or mixed-reality environments, mannequins and standardised patients, as well as studies in which VPs represented individual organs or focused on psychomotor skills training without presenting the case in a clinically relevant scenario. Finally, we also excluded studies that were not original research, such as other reviews and meta-analyses, and studies that focused on postgraduate participants or other types of professional training.

Study selection process

The study selection process comprised the following steps. First, we removed duplicates using the EndNote X8 reference manager software. Second, the first author (FJ) screened the abstracts for eligibility, reading an article in full if its abstract lacked the necessary information. She subsequently discussed the results with the research team, and articles were accepted for further selection by mutual agreement. FJ then followed the same procedure to review and discuss the full texts of the articles selected so far. As a final step, we identified additional papers using the snowballing technique.

Data collection and analysis

Before analysing the extent to which ID principles were considered, we reviewed the selected studies in terms of their design approach and research methods, including their study goals, participants, scenario path type, field of dentistry and main findings (see Appendix for more information). Subsequent review of the studies was guided by the 4C/ID model elements. Figure 2 visualises the analysis framework described in the Introduction, with the upper part presenting the 4C/ID model components and the lower part listing the associated ID features identified in previous studies. To find out which ID features were applied in each study, the first author scrutinised each study thoroughly. If one or more of the features seemed ambiguous, the second author analysed the respective study as well. All authors subsequently discussed the results and finalised the analysis by mutual agreement. Using descriptive statistics, we determined how frequently each ID feature was applied across studies. For each study listed in the Appendix, we generated codes for each ID feature; these codes are described in the Results section.
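The coding step and the descriptive statistics can be sketched as follows. This minimal Python example assumes a hypothetical data layout in which each study is coded as the set of ID features it applies. The feature labels are our shorthand for the features discussed under each 4C/ID component in the Introduction and do not reproduce Figure 2; the two coded studies in the usage example are invented for illustration and are not taken from the reviewed studies.

```python
from collections import Counter

# Shorthand mapping of 4C/ID components to associated ID features
# (our reading of the framework; labels are illustrative).
FRAMEWORK = {
    "learning tasks": ["clinical/task variation", "task types", "learning mode",
                       "task difficulty", "curriculum integration", "distributed practice"],
    "supportive information": ["background information", "cognitive interactivity/feedback"],
    "procedural information": ["corrective feedback", "just-in-time information"],
    "part-task practice": ["repetitive practice"],
}


def feature_frequencies(coded_studies: dict) -> Counter:
    """Count how many studies were coded as applying each ID feature."""
    known = {f for feats in FRAMEWORK.values() for f in feats}
    counts = Counter({f: 0 for f in known})  # keep unused features visible as 0
    for study, features in coded_studies.items():
        unknown = set(features) - known
        if unknown:
            raise ValueError(f"{study}: codes outside the framework: {sorted(unknown)}")
        counts.update(set(features))  # each study counts at most once per feature
    return counts


def component_coverage(counts: Counter) -> dict:
    """Number of features per component applied in at least one study."""
    return {comp: sum(1 for f in feats if counts[f] > 0)
            for comp, feats in FRAMEWORK.items()}


# Hypothetical coding of two studies, for illustration only.
coded = {
    "study_A": {"clinical/task variation", "corrective feedback"},
    "study_B": {"clinical/task variation", "learning mode", "cognitive interactivity/feedback"},
}
counts = feature_frequencies(coded)
print(counts["clinical/task variation"])  # 2
print(component_coverage(counts))
```

Rejecting codes that fall outside the framework is a deliberate choice in this sketch: it forces any new code to be discussed and added to the mapping before it enters the frequency counts.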

Results

Study selection

Our initial search returned 6,744 studies, of which 5,805 remained after duplicates were removed (see Fig. 3). An initial screening of titles and abstracts led to the exclusion of another 5,653 studies, either because they were not original research articles or because of the type of simulation used: some did not use screen-based VPs, while others used dissimilar types of simulation or combined VPs with augmented or virtual reality (see Fig. 3). This preliminary screening led to the selection of 152 studies for full-text review. After reviewing these texts in full, we excluded studies that focused on postgraduate learners or mixed training levels, because undergraduates were our focus. Once we had also excluded studies with unclear study designs and studies focusing on psychomotor skills training, 21 studies were ultimately found eligible for inclusion in the present review.
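The counts in the selection flow can be checked with simple arithmetic. The Python sketch below uses only the figures reported above and derives the number of records removed at each step. The last difference (152 minus 21) is a net figure, since the number of studies added through snowballing is not reported here.

```python
# Record counts reported at each stage of the study selection process.
stages = [
    ("records identified", 6744),
    ("after duplicate removal", 5805),
    ("full texts assessed", 152),
    ("studies included", 21),
]


def removed_per_step(stages):
    """Return (from_stage, to_stage, records_removed) for consecutive stages."""
    steps = []
    for (name_a, n_a), (name_b, n_b) in zip(stages, stages[1:]):
        if n_b > n_a:
            raise ValueError(f"count increases from {name_a} to {name_b}")
        steps.append((name_a, name_b, n_a - n_b))
    return steps


for a, b, removed in removed_per_step(stages):
    print(f"{a} -> {b}: {removed} removed")
# records identified -> after duplicate removal: 939 removed
# after duplicate removal -> full texts assessed: 5653 removed
# full texts assessed -> studies included: 131 removed
```

The second difference reproduces the 5,653 studies excluded at title and abstract screening, which confirms that the reported counts are internally consistent.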

General study characteristics

The studies applied different approaches, using comparative (cross-sectional, single-group, case–control, cohort and trial) or non-comparative (focus-group, case-study, survey, narrative) designs. More specifically, 15 studies used a comparative approach, one a non-comparative approach and five a mixed-methods approach. The study goals were mainly to introduce VPs, or to compare VPs with each other or with the regular curriculum (Table 1). The number of participants varied from 9 to 171 undergraduate students. In terms of scenario path type, 12 studies used a linear (passive/active) approach involving a single path from introduction through history taking, examination and diagnosis to treatment planning, in which the learner had to finish one section before moving to the next. Six studies, by contrast, had a branching design in which the pathway depended on the learner’s decision at every strategic node in the VP, creating multiple potential paths that could lead to a common end point or to multiple end points. In 17 studies, the focus was on teaching undergraduate students clinical management or clinical reasoning skills such as history taking, clinical examination and treatment planning (Clark et al., 2012; Weiner et al., 2016; Zary et al., 2009, 2012). The four remaining studies (Boynton et al., 2007; Kleinert et al., 2007; Papadopoulos et al., 2013; Sanders et al., 2008), on the other hand, focused on training students to apply communication and nonclinical skills, for instance when dealing with developmentally disabled paediatric patients. Appendix 1 gives a complete overview of the articles we reviewed and their study features.
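The difference between the two scenario path types can be illustrated by modelling a VP case as a directed graph, in which each node is a step in the case and each edge a possible transition. In this hypothetical Python sketch (the step names are invented, and the graph is assumed to be acyclic), the linear case yields a single path, whereas the branching case contains decision nodes whose options produce several paths, some converging on a common end point and others ending at different end points.

```python
# A VP case as a directed graph: step -> list of possible next steps.
# Step names are hypothetical and only illustrate the two path types.
LINEAR = {
    "introduction": ["history taking"],
    "history taking": ["examination"],
    "examination": ["diagnosis"],
    "diagnosis": ["treatment planning"],
    "treatment planning": [],
}

BRANCHING = {
    "introduction": ["history taking"],
    "history taking": ["order radiograph", "clinical examination"],  # decision node
    "order radiograph": ["diagnosis A"],
    "clinical examination": ["diagnosis A", "diagnosis B"],  # decision node
    "diagnosis A": ["treatment plan A"],
    "diagnosis B": ["treatment plan B"],
    "treatment plan A": [],
    "treatment plan B": [],
}


def all_paths(case, node="introduction"):
    """Enumerate every path from node to an end point (a step with no successors).

    Assumes the graph is acyclic, as in both example cases.
    """
    if not case[node]:
        return [[node]]
    return [[node] + rest for nxt in case[node] for rest in all_paths(case, nxt)]


print(len(all_paths(LINEAR)))     # 1
print(len(all_paths(BRANCHING)))  # 3
print(sorted({p[-1] for p in all_paths(BRANCHING)}))  # ['treatment plan A', 'treatment plan B']
```

In the branching case, two of the three paths converge on the same treatment plan, which corresponds to the "common end point" variant described above, while the third ends elsewhere.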

Learning task principles

With respect to the first component of the 4C/ID model (Table 2), we found that 14 studies used a variety of tasks, ranging from two VP cases (Allaire, 2015; Zary et al., 2012) to train critical thinking and reasoning skills, to eight VPs (Clark et al., 2012; Marei et al., 2017, 2018a, 2018b; Weiner et al., 2016) based on complex situations to prepare students for stressful moments in the clinic and to examine different study settings. One study (Zary et al., 2009) even used 24 VP cases for diagnostics and therapy planning in general dentistry over two educational semesters. Another two studies focused specifically on creating different levels of task complexity: Woelber et al. (2012) developed two VPs on localised aggressive periodontitis that differed in complexity from easy to complex, and Littlefield et al. (2003) designed three VPs for undergraduate dental students and general dentists that ranged from low to moderate difficulty. Some studies also used different task types or instructional strategies, such as patient cases or worked examples (Kleinert et al., 2007; Sanders et al., 2008), to facilitate learning and provide a bridge between knowledge and practice, thereby facilitating the transfer of learning.

During the VP programme, students can take responsibility for their learning progress either individually or in a group, depending on the learning mode. In the majority of studies (n = 16), VPs were employed for individual use. In these studies, the VPs gave instructions and assessed students’ learning outcomes or their general use of the programme based on the following information: the number of questions asked, tests ordered and examinations performed, students’ final scores and the total time spent in the programme (Clark et al., 2012; Schittek Janda et al., 2004; Zary et al., 2012); students’ knowledge and perceived difficulty level (Boynton et al., 2007; Kleinert et al., 2007; Papadopoulos et al., 2013; Sanders et al., 2008; Woelber et al., 2012; Yoshida et al., 2012); their self-assessment of the learning process and general acceptance (Zary et al., 2009); outcomes of the group discussion at the end of the individual sessions (Allaire, 2015; Lund et al., 2011; Marei et al., 2018a, 2018b); information from alternative tools for teaching and assessing students’ diagnostic skills (Littlefield et al., 2003); and the transfer of knowledge (Marei et al., 2017). In seven studies, by contrast, VPs served as group training activities that involved two or more learners and were followed by reflection sessions (Allaire, 2015; Lund et al., 2011; Marei et al., 2019, 2018a, 2018b; Seifert et al., 2019; Weiner et al., 2016). Of these, two studies employed both individual and collaborative group training, randomly assigning students to each condition to compare their effectiveness (Marei et al., 2019, 2018a, 2018b). Marei et al. (2018a, 2018b) found that a collaborative activity could enhance students’ knowledge acquisition and retention, especially when the activity concerned difficult topics that involved multiple, interacting elements.

According to 4C/ID, curriculum or course integration is a prerequisite for the transfer of learning and for bridging the gap between theory and practice. In most studies (n = 15), the simulation programme was a formal, integral part of the curriculum that served to help students bridge the gap between what they had learnt in the classroom and the provision of patient care. More specifically, VPs were embedded in the standard curriculum before the actual encounters with patients to prepare students for clinical training. In most cases (n = 13), simulation training was distributed over a period of time ranging from one day (Antoniou et al., 2014; Clark et al., 2012; Lund et al., 2011; Weiner et al., 2016), through one week (Papadopoulos et al., 2013; Schittek Janda et al., 2004), to one or more educational semesters with planned or unlimited access to VPs (Allaire, 2015; Zary et al., 2009).

Supportive information principles

As for the second component of the 4C/ID model (Table 2), only a few studies (n = 2) provided students with access to case-specific background information to support schema formation. Allaire (2015), for instance, did so by offering additional sources such as journal articles and videos. Each section of the case included guided questions that could be answered individually or in pairs and then discussed with a faculty facilitator in a small group. In the study by Clark et al. (2012), moreover, each participant received a guide sheet outlining the common features of a medical interview and physical examination of the head and neck region. As mentioned before, cognitive interactivity/feedback is another important part of the supportive information in the 4C/ID model. It facilitates the transfer of learning from the classroom to a clinical setting and assists learners in creating cognitive schemas and problem-solving strategies. The studies we reviewed employed a variety of reflection and interaction strategies to promote learners' cognitive engagement. To help learners reflect on the quality of their problem-solving processes, some studies used debriefing, summarisation and group discussion after each VP or at the end of the session (Allaire, 2015; Littlefield et al., 2003; Lund et al., 2011; Marei et al., 2019, 2018a, 2018b; Weiner et al., 2016). Other studies provided cognitive feedback from the instructor or facilitator (Allaire, 2015; Antoniou et al., 2014; Lund et al., 2011; Seifert et al., 2019) or during tutor-aided group discussion (Marei et al., 2019, 2018a, 2018b; Weiner et al., 2016) to encourage reflection. In a similar fashion, Zary et al. (2009) promoted reflection through constructive feedback in the form of an automatically generated checklist that compared students' recommendations with expert recommendations. The strategy used to reinforce learning in the study by Sanders et al. (2008) was to demonstrate the key teaching point: after students had entered their responses, they were shown a video clip giving them immediate feedback on their decisions. Schittek Janda et al. (2004), by contrast, described an approach in which learners, rather than being presented with pre-selected options, were forced to mobilise their own cognitive processes in order to make a decision about a particular case. Finally, Woelber et al. (2012) fostered cognitive interactivity by providing for collaborative discussion between students at decision points and giving feedback on their actions.

Procedural information principles

According to the third component of the 4C/ID model (Table 2), learners must receive feedback at the procedural level to help them perform routine aspects of the learning tasks. The majority of the studies under scrutiny did provide different types of feedback during or after the simulation activity. We already mentioned the cognitive feedback that served to promote reflection on tasks in some studies. At this level, however, we considered corrective feedback that helped students to monitor their performance and to identify and correct their errors. We found that some studies (n = 9) provided immediate feedback after each information and decision point during the programme and presented the correct or incorrect answer depending on students’ responses (Abbey et al., 2003; Boynton et al., 2007; Kleinert et al., 2007; Lund et al., 2011; Mardani et al., 2020; Marei et al., 2019, 2018a, 2018b; Seifert et al., 2019; Zary et al., 2009). Kleinert et al. (2007), for instance, provided immediate and corrective feedback on students’ response after each decision point. In some other studies (Boynton et al., 2007; Marei et al., 2019, 2018a, 2018b; Papadopoulos et al., 2013; Seifert et al., 2019; Woelber et al., 2012), students immediately received pertinent information that included a text-based description of the consequences of their choices. The purpose of this approach was to ensure that students could not proceed to the next step until the correct option was chosen.

Some studies (n = 5) provided just-in-time information during practice in the form of detailed instructions via the chat window before students entered their choices. Seifert et al. (2019), for instance, presented additional information via text boxes (glossary) to compensate for the lack of on-site interaction with a supervisor. In the study by Clark et al. (2012), moreover, students received a guide sheet outlining the common elements of a medical interview and physical examination. Finally, in the studies by Kleinert et al. (2007) and Sanders et al. (2008), students received a concise summary of information pertinent to the case at so-called ‘information points’.

Part-task practice principles

Our scrutiny of the selected studies did not yield any information on part-task practice, the fourth component of the 4C/ID model.

Discussion

In reviewing the body of empirical studies, we encountered a range of perspectives on VP design and implementation. Previous studies have emphasised the role of specific ID features and associated these with effective VP design (Cook et al., 2013; Issenberg et al., 2005). In doing so, however, they did not link these features to an existing, integrated ID model, and their recommendations are therefore difficult to apply in future VP design. VPs will be effective only when both the simulation and the educational activities around it have been properly designed (Ellaway, 2014). The aim of the present study was therefore to determine whether, and to what extent, the principles of 4C/ID, a given, proven and closely connected ID model, have been applied in VP design in dental education, and what we can learn from this in terms of strengths and weaknesses. Our overall conclusion is that a structured approach to the design and implementation of VPs is lacking. Although all but one of the key ID principles identified were covered by at least some of the studies, none of the studies used an integrated design.

Learning tasks

According to the 4C/ID model, learning tasks should be varied so as to reflect the variety encountered in real life and to promote transfer. In addition, they should be organised into task classes, with a constant level of complexity within each task class and increasing complexity from one task class to the next (van Merriënboer & Kirschner, 2018). In general, most VP designers appeared to be well aware that clinical task variation is crucial to promote the transfer of learning.

With regard to the learning mode, the studies by Marei et al. (2018a, 2018b, 2019) confirm that this decision should depend on the complexity of the task (Issenberg et al., 2005; Kirschner et al., 2009). Marei et al. (2018a, 2018b) suggested that when VP cases are complex and involve more interaction between multiple elements, the decision to use VPs for collaborative learning is more obvious. In the study by Marei et al. (2019), the collaborative group performed significantly better and experienced less intrinsic and extraneous cognitive load than the groups that used VPs individually. Other studies, by contrast, placed greater emphasis on the benefits of individual VP use. Yoshida et al. (2012), for instance, concluded that VPs can help students to become self-directed lifelong learners because they can act independently, at their own pace, to solve problems. Most studies, however, did not justify their choice of learning mode. We therefore welcome additional research on how best to strike a balance between individual and group-based learning with VPs.

As another important feature of ID, curriculum integration has been addressed by previous studies, which emphasised that VPs should be aligned with the learning goals and properly incorporated into educational programmes and curricula (Kononowicz et al., 2016). More specifically, Marei et al. (2019) suggested that VPs should be designed in accordance with their intended use, which should therefore be considered from the early stages of VP development. More than half of the studies we reviewed had embedded simulations as an integral part of their curriculum or training programme, and a few had even investigated how such integration could be achieved effectively (Boynton et al., 2007; Lund et al., 2011; Schittek Janda et al., 2004). Finally, Seifert et al. (2019) concluded that it is indeed feasible to integrate VP cases into a curriculum and that doing so leads to substantial growth in clinical competence.

Supportive information

The second component of the 4C/ID model specifies that learners should receive supportive information that explains how to approach a problem, describes how the domain is organised or offers foundational knowledge. When complex topics are taught, the preferred method is to sequence VPs after lectures, that is, in a deductive approach, as this may facilitate knowledge transfer. Importantly, inductive and deductive approaches to sequencing VPs should differ in the amount of guidance provided, depending on students’ prior knowledge and VP complexity (Marei et al., 2017).

In a minority of the studies, cognitive interactivity/feedback was used to promote reflection on learning processes. The approach described by Antoniou et al. (2014) was to offer students constructive feedback at each step of task completion. In other studies, such feedback was delivered by the VP (Boynton et al., 2007) or by the instructor (Allaire, 2015; Antoniou et al., 2014; Lund et al., 2011; Seifert et al., 2019), either during the programme or afterwards in a tutor-aided group discussion (Marei et al., 2019, 2018a, 2018b; Weiner et al., 2016). According to Lund et al. (2011), the successful application of VPs depended on the amount and type of feedback, as well as on the feedback given in the teacher-aided discussion. Lack of attention to cognitive feedback could undermine students’ ability to establish effective cognitive schemas that improve their future understanding and performance (van Merriënboer & Kirschner, 2018).

Procedural information

At the procedural level, a narrow majority of the studies provided learners with corrective feedback, although this feedback was not always clearly distinguishable from cognitive feedback. In these studies, learners received immediate feedback after each decision point and, depending on their responses, were shown the correct or incorrect answer (Abbey et al., 2003; Boynton et al., 2007; Kleinert et al., 2007; Lund et al., 2011; Marei et al., 2019, 2018a, 2018b; Seifert et al., 2019; Zary et al., 2009). Sometimes, this just-in-time information took the form of detailed instructions with a brief explanation of the consequences of the learners’ choices (Marei et al., 2019, 2018a, 2018b; Papadopoulos et al., 2013; Seifert et al., 2019; Woelber et al., 2012).

Part-task practice

As the final component of the 4C/ID model, part-task practice is only needed when a routine aspect of a complex skill requires a high degree of automation. Repetitive practice did not receive any attention in the studies under review, which may be attributable to the nature of the subjects and the type of practice we included. Since we only considered full patient cases addressing clinical reasoning and management skills, to the exclusion of psychomotor skills training, our inclusion criteria may have obviated the need for automation.

Conclusion

VP training affords students the opportunity to gain early clinical exposure by practising real patient scenarios in a safe environment. This study attempted to structure the ID principles applied in VPs in dental education according to the 4C/ID model, with the aim of informing future VP design. At a general level, we found that the key ID principles were well represented in the studies we reviewed. This suggests that it is feasible to proceed to the next step in designing VPs, namely, using a structured ID approach. An integrated design approach such as 4C/ID will improve the effectiveness of VPs (Ellaway, 2014) and will enable us to compare alternative IDs in order to shed more light on the mechanisms of effective simulation-based instruction (Cook et al., 2013).

Below, we summarise our key findings and propose future design applications based on the strengths and weaknesses of the features applied. During VP training, students received immediate or delayed feedback from the system and sometimes also participated in an instructor-guided reflection session after the programme. Lack of attention to cognitive feedback might undermine students’ ability to establish effective cognitive schemas that improve their future understanding and performance. The 4C/ID model emphasises the provision of cognitive feedback precisely to help learners build such schemas and reflect on the learning process. Another factor that is essential to facilitate learning and connect theory to practice is curriculum integration. When properly incorporated into curricula, VP training has the potential to promote self-directed learning, helping students to formulate learning objectives and, in the process, to improve the quality of their knowledge structures. The choice of learning mode, moreover, should depend on the aims and complexity of the respective VP case; nevertheless, additional research is needed on how best to strike a balance between individual and group-based learning with VPs.

Finally, our review findings suggest that most VP designers generally appreciate the importance of clinical task variation for improving the transfer of learning.

Lessons learnt from strengths and weaknesses

In conclusion, we summarise below the key lessons learnt from this review with respect to the strengths and weaknesses of applying the 4C/ID model in VP design in dental education:

- Most VP designers generally appreciate the importance of clinical task variation to improving the transfer of learning.
- Collaborative VPs are clearly beneficial in complex cases where multiple elements interact. However, we need additional research on how best to strike a balance between individual and group-based learning with VPs.
- VPs should be aligned with the learning goals and be properly incorporated into curricula.
- Lack of attention to cognitive feedback might undermine students’ ability to establish effective cognitive schemas that improve their future understanding and performance.

Finally, to further improve the design of VPs and to enable the comparison and study of their effectiveness, we recommend a structured ID approach such as 4C/ID.

Prospects and limitations

This review addressed substantial gaps in the design and curriculum integration of VPs based on ID models and principles. It also underscored the need for more research that directly compares alternative ID principles and the interaction effects among them, to better understand the role of specific ID features in designing effective simulation-based instruction.

In this study, we focused only on screen-based, whole-case VP scenarios designed for training clinical reasoning and management skills in dentistry. Our inclusion criteria, which excluded psychomotor skills, may therefore have affected the attention that the studies under review paid to certain ID features.

Finally, we only had access to the study reports and not to the VPs themselves or the information on how they were actually incorporated into the curriculum.

Data availability statement

Data are available on request from the authors.

References

Abbey, L. M., Arnold, P., Halunko, L., Huneke, M. B., & Lee, S. (2003). CASE STUDIES for dentistry: Development of a tool to author interactive, multimedia, computer-based patient simulations. Journal of Dental Education, 67(12), 1345–1354.

Allaire, J. L. (2015). Assessing critical thinking outcomes of dental hygiene students utilizing virtual patient simulation: A mixed methods study. Journal of Dental Education, 79(9), 1082–1092.

Antoniou, P. E., Athanasopoulou, C. A., Dafli, E., & Bamidis, P. D. (2014). Exploring design requirements for repurposing dental virtual patients from the web to second life: A focus group study. Journal of Medical Internet Research, 16(6), e151. https://doi.org/10.2196/jmir.3343

Boynton, J. R., Green, T. G., Johnson, L. A., Nainar, S. M., & Straffon, L. H. (2007). The virtual child: Evaluation of an internet-based pediatric behavior management simulation. Journal of Dental Education, 71(9), 1187–1193.

Chiniara, G., Cole, G., Brisbin, K., Huffman, D., Cragg, B., Lamacchia, M., & Norman, D. (2013). Simulation in healthcare: A taxonomy and a conceptual framework for instructional design and media selection. Medical Teacher, 35(8), e1380–e1395. https://doi.org/10.3109/0142159x.2012.733451

Clark, G. T., Suri, A., & Enciso, R. (2012). Autonomous virtual patients in dentistry: System accuracy and expert versus novice comparison. Journal of Dental Education, 76(10), 1365–1370.

Cook, D. A., Hamstra, S. J., Brydges, R., Zendejas, B., Szostek, J. H., Wang, A. T., Erwin, P. J., & Hatala, R. (2013). Comparative effectiveness of instructional design features in simulation-based education: Systematic review and meta-analysis. Medical Teacher, 35(1), e867–e898. https://doi.org/10.3109/0142159x.2012.714886

de Melo, B. C. P., Falbo, A. R., Muijtjens, A. M. M., van der Vleuten, C. P. M., & van Merriënboer, J. J. G. (2017). The use of instructional design guidelines to increase effectiveness of postpartum hemorrhage simulation training. International Journal of Gynecology & Obstetrics, 137(1), 99–105. https://doi.org/10.1002/ijgo.12084

Ellaway, R. H. (2014). Virtual patients as activities: Exploring the research implications of an activity theoretical stance. Perspectives on Medical Education, 3(4), 266–277. https://doi.org/10.1007/s40037-014-0134-z

Fries, L., Son, J. Y., Givvin, K. B., & Stigler, J. W. (2021). Practicing connections: A framework to guide instructional design for developing understanding in complex domains. Educational Psychology Review, 33(2), 739–762. https://doi.org/10.1007/s10648-020-09561-x

Issenberg, S. B., McGaghie, W. C., Petrusa, E. R., Lee Gordon, D., & Scalese, R. J. (2005). Features and uses of high-fidelity medical simulations that lead to effective learning: A BEME systematic review. Medical Teacher, 27(1), 10–28. https://doi.org/10.1080/01421590500046924

Kirschner, F., Paas, F., & Kirschner, P. A. (2009). A cognitive load approach to collaborative learning: United brains for complex tasks. Educational Psychology Review, 21(1), 31–42. https://doi.org/10.1007/s10648-008-9095-2

Kleinert, H. L., Sanders, C., Mink, J., Nash, D., Johnson, J., Boyd, S., & Challman, S. (2007). Improving student dentist competencies and perception of difficulty in delivering care to children with developmental disabilities using a virtual patient module. Journal of Dental Education, 71(2), 279–286.

Kononowicz, A. A., Woodham, L., Georg, C., Edelbring, S., Stathakarou, N., Davies, D., Masiello, I., Saxena, N., Tudor Car, L., Car, J., et al. (2016). Virtual patient simulations for health professional education. Cochrane Database of Systematic Reviews. https://doi.org/10.1002/14651858.CD012194

Littlefield, J. H., Demps, E. L., Keiser, K., Chatterjee, L., Yuan, C. H., & Hargreaves, K. M. (2003). A multimedia patient simulation for teaching and assessing endodontic diagnosis. Journal of Dental Education, 67(6), 669–677.

Lund, B., Fors, U., Sejersen, R., Sallnäs, E. L., & Rosén, A. (2011). Student perception of two different simulation techniques in oral and maxillofacial surgery undergraduate training. BMC Medical Education, 11, 82. https://doi.org/10.1186/1472-6920-11-82

Maggio, L. A., ten Cate, O., Irby, D. M., & O’Brien, B. C. (2015). Designing evidence-based medicine training to optimize the transfer of skills from the classroom to clinical practice: Applying the four component instructional design model. Academic Medicine, 90(11), 1457–1461. https://doi.org/10.1097/acm.0000000000000769

Mardani, M., Cheraghian, S., Naeeni, S. K., & Zarifsanaiey, N. (2020). Effectiveness of virtual patients in teaching clinical decision-making skills to dental students. Journal of Dental Education, 84(5), 615–623. https://doi.org/10.1002/jdd.12045

Marei, H. F., Donkers, J., Al-Eraky, M. M., & van Merrienboer, J. J. G. (2017). The effectiveness of sequencing virtual patients with lectures in a deductive or inductive learning approach. Medical Teacher, 39(12), 1268–1274. https://doi.org/10.1080/0142159X.2017.1372563

Marei, H. F., Al-Eraky, M. M., Almasoud, N. N., Donkers, J., & Van Merrienboer, J. J. G. (2018a). The use of virtual patient scenarios as a vehicle for teaching professionalism. European Journal of Dental Education, 22(2), e253–e260. https://doi.org/10.1111/eje.12283

Marei, H. F., Donkers, J., & Van Merrienboer, J. J. G. (2018b). The effectiveness of integration of virtual patients in a collaborative learning activity. Medical Teacher, 40(sup1), S96–S103. https://doi.org/10.1080/0142159X.2018.1465534

Marei, H. F., Donkers, J., Al-Eraky, M. M., & Van Merrienboer, J. J. G. (2019). Collaborative use of virtual patients after a lecture enhances learning with minimal investment of cognitive load. Medical Teacher, 41(3), 332–339. https://doi.org/10.1080/0142159X.2018.1472372

Mora, S., & Coto, M. (2014). Curriculum integration by projects: Opportunities and constraints a case study in systems engineering. CLEI Electronic Journal, 17, 11–11.

Page, M. J., Moher, D., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., Shamseer, L., Tetzlaff, J. M., Akl, E. A., Brennan, S. E., Chou, R., Glanville, J., Grimshaw, J. M., Hróbjartsson, A., Lalu, M. M., Li, T., Loder, E. W., Mayo-Wilson, E., McDonald, S., & McKenzie, J. E. (2021). PRISMA 2020 explanation and elaboration: Updated guidance and exemplars for reporting systematic reviews. BMJ, 372, n160. https://doi.org/10.1136/bmj.n160

Papadopoulos, L., Pentzou, A.-E., Louloudiadis, K., & Tsiatsos, T.-K. (2013). Design and evaluation of a simulation for pediatric dentistry in virtual worlds. Journal of Medical Internet Research, 15(10), e240. https://doi.org/10.2196/jmir.2651

Postma, T. C., & White, J. G. (2015). Developing clinical reasoning in the classroom – analysis of the 4C/ID-model. European Journal of Dental Education, 19(2), 74–80. https://doi.org/10.1111/eje.12105

Ray, M., Milston, A., Doherty, P., & Crean, S. (2017). How prepared are foundation dentists in England and Wales for independent general dental practice? British Dental Journal, 223(5), 359–368. https://doi.org/10.1038/sj.bdj.2017.766

Sanders, C., Kleinert, H. L., Boyd, S. E., Herren, C., Theiss, L., & Mink, J. (2008). Virtual patient instruction for dental students: Can it improve dental care access for persons with special needs? Special Care in Dentistry, 28(5), 205–213. https://doi.org/10.1111/j.1754-4505.2008.00038.x

Schittek Janda, M., Mattheos, N., Nattestad, A., Wagner, A., Nebel, D., Färbom, C., Lê, D. H., & Attström, R. (2004). Simulation of patient encounters using a virtual patient in periodontology instruction of dental students: Design, usability, and learning effect in history-taking skills. European Journal of Dental Education, 8(3), 111–119. https://doi.org/10.1111/j.1600-0579.2004.00339.x

Seifert, L. B., Socolan, O., Sader, R., Russeler, M., & Sterz, J. (2019). Virtual patients versus small-group teaching in the training of oral and maxillofacial surgery: A randomized controlled trial. BMC Medical Education, 19(1), 454. https://doi.org/10.1186/s12909-019-1887-1

Song, H. S., Pusic, M., Nick, M. W., Sarpel, U., Plass, J. L., & Kalet, A. L. (2014). The cognitive impact of interactive design features for learning complex materials in medical education. Computers & Education, 71, 198–205. https://doi.org/10.1016/j.compedu.2013.09.017

van Merriënboer, J. J. G., & Kester, L. (2014). The four-component instructional design Model: Multimedia principles in environments for complex learning. In R. E. Mayer (Ed.), The Cambridge handbook of multimedia learning (2nd ed., pp. 104–148). Cambridge University Press. https://doi.org/10.1017/CBO9781139547369.007

van Merriënboer, J. J. G., & Kirschner, P. A. (2018). Ten steps to complex learning: A systematic approach to four-component instructional design (3rd ed.). Routledge. https://doi.org/10.4324/9781315113210

Vandewaetere, M., Manhaeve, D., Aertgeerts, B., Clarebout, G., Van Merriënboer, J. J. G., & Roex, A. (2015). 4C/ID in medical education: How to design an educational program based on whole-task learning: AMEE Guide No. 93. Medical Teacher, 37(1), 4–20. https://doi.org/10.3109/0142159X.2014.928407

Wang, S. Y., Chen, C. H., & Tsai, T. C. (2020). Learning clinical reasoning with virtual patients. Medical Education, 54(5), 481. https://doi.org/10.1111/medu.14082

Weiner, C. K., Skålén, M., Harju-Jeanty, D., Heymann, R., Rosén, A., Fors, U., & Lund, B. (2016). Implementation of a web-based patient simulation program to teach dental students in oral surgery. Journal of Dental Education, 80(2), 133–140.

Woelber, J. P., Hilbert, T. S., & Ratka-Krüger, P. (2012). Can easy-to-use software deliver effective e-learning in dental education? A randomised controlled study. European Journal of Dental Education, 16(3), 187–192. https://doi.org/10.1111/j.1600-0579.2012.00741.x

Yoshida, N., Aso, T., Asaga, T., Okawa, Y., Sakamaki, H., Masumoto, T., Matsui, K., & Kinoshita, A. (2012). Introduction and evaluation of computer-assisted education in an undergraduate dental hygiene course. International Journal of Dental Hygiene, 10(1), 61–66. https://doi.org/10.1111/j.1601-5037.2011.00528.x

Zary, N., Johnson, G., & Fors, U. (2009). Web-based virtual patients in dentistry: Factors influencing the use of cases in the Web-SP system. European Journal of Dental Education, 13(1), 2–9. https://doi.org/10.1111/j.1600-0579.2007.00470.x

Zary, N., Johnson, G., & Fors, U. G. H. (2012). Impact of the virtual patient introduction on the clinical reasoning process in dental education. Bio-Algorithms and Med-Systems, 8(2), 173–184. https://doi.org/10.2478/bams-2012-0011

Author information


Contributions

All authors contributed to the study conception and design. The literature search was performed by FJ, and data analysis was performed by FJ and PVR. The first draft of the manuscript was written by FJ, and PVR critically revised and commented on the manuscript. All authors read and approved the final manuscript.


Ethics declarations

Ethical Statement

This study is a literature review and did not involve human or animal subjects. Therefore, ethical approval was not required.

Competing interest

The authors have no conflict of interest to declare.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: Overview of the articles we reviewed and their specific study features (i.e. the research design used, number of participants, learning mode applied, scenario path type chosen, field of dentistry and summary of findings).


Study | Method (research design) | Qualitative /quantitative | Study goals | Knowledge/skills acquisition or motivational outcomes | Participants | Scenario path type: linear/branching | Field of dentistry | Findings/results | |

|---|---|---|---|---|---|---|---|---|---|

1 | Marei et al. (2019) | 3 experimental groups | Quantitative | To investigate different instructional methods (collaborative/individual) | Collaborative use of VPs after a lecture is the most efficient instructional method | 171 dental students Saudi Arabia | Branching | Oral and maxillofacial surgery | The collaborative deductive group performed significantly better and experienced less intrinsic and extraneous cognitive load |

2 | Seifert et al. (2019) | Randomised controlled trial (RCT) | Mixed methods | To investigate VPs as an alternative to lecturer-led small-group teaching in a curriculum | VP group felt better prepared for future encounters with real patients | 57 fourth-year dental students (mean age = 25) Germany | Linear (with return option) | Oral and maxillofacial Surgery | There was no significant difference between both groups (p = 0.55). With regard to self-assessed competence, the VP group felt better prepared to diagnose and treat real patients and regarded VPs as a rewarding learning experience |

3 | 4 experimental groups | Quantitative (2 × 2 factorial design) | To compare the effect of using VPs as collaborative or individual learning activity | The use of collaborative VPs could enhance students’ knowledge acquisition and retention | 82 (topic 1) 76 (topic 2) fourth-year dental students. Saudi Arabia | Branching | Oral and maxillofacial surgery | Collaborative use of VPs is more effective than independent groups in enhancing students’ knowledge acquisition and retention. However, collaboration is not enough for consistent results, and other interactive factors should be considered | |

4 | 2 groups (male /female) | Mixed methods | To investigate the perceived value of VP use and to explore the main features of VPs that promote professionalism | To teach professionalism, we need high-fidelity VPs with specific dramatic structure and multiple ending-points followed by a reflection session | 65 fifth-year dental students Saudi Arabia | Branching | Oral and maxillofacial surgery | To develop ethical reasoning skills, high-fidelity VPs were perceived as significantly better than low-fidelity VPs | |

5 | Marei et al. (2017) | Four experimental groups | Quantitative (2 × 2 factorial design) | To investigate the effect of sequencing VPs with lectures in a deductive or inductive approach on students’ knowledge acquisition, retention and transfer | Inductive or deductive approaches to sequencing VPs should differ in the amount of guidance depending on students’ prior knowledge and VP complexity | 84 dental students Saudi Arabia | Branching | Oral and maxillofacial surgery | Inductive and deductive learning approaches to sequencing VPs lead to no significant differences in student performance when students receive full guidance in the inductive approach |

6 | Weiner et al. (2016) | One comparison group | Quantitative (pre/post-test design) | To evaluate the effect of VP use on students’ learning, knowledge and attitudes | VPs are a valuable tool for teaching clinical reasoning and patient evaluation | 67 third-year dental students Sweden | Linear | Oral surgery education | Significant increase in knowledge (p < 0.001) Positive attitude towards VP use |

7 | Allaire (2015) | One comparison group | Quantitative (pre/post-test design) | To determine the effects of VPs on critical thinking skills and nonclinical components of patient care | Insight into educational strategies that promote critical thinking | 34 fourth-year dental hygiene students Houston, Texas | – | Dental hygiene | No significant increase in critical thinking scores (p = − 0.075), but students perceived VPs as an effective teaching method to promote critical thinking, problem-solving and their confidence |

8 | Antoniou et al. (2014) | Focus group | Qualitative | To assess the suitability and explore the requirements and specifications needed to meaningfully repurpose VPs | Identification of guidelines and best practices regarding the pedagogically correct repurposing of VP content from the Web to a 3D virtual environment | 9 undergraduate dental studentsGreece | Branching | Periodontology | A comparison between the Web-based and the Second Life VP revealed the inherent advantages of the more experiential and immersive Second Life virtual environment |

9 | Papadopoulos et al. (2013) | Comparison groups (experimental-control) | Quantitative | To design a VP that serves as a supplemental tool to teach clinical management skills and to evaluate its use | 103 fourth-year dental students Greece | Linear | Paediatric dentistry | There was a significant difference between the two groups (p < 0.001), showing that the VP had a positive learning effect. The majority of the participants evaluated the aspects of the simulation very positively, whilst 69% of the simulation group expressed their preference for using this module as an additional teaching tool | |

10 | Clark et al. (2012) | Comparison groups (experts-novices) | Quantitative | To examine the use of an autonomous VP by novices and experts to identify differences between them | 10 experts ( aged 40 ± 11) and 26 novices or fourth-year dental students (aged 27 ± 3) United States | Linear (active) | Orofacial pain and oral medicine | Novices perceived VPs as a valuable educational experience. Experts scored higher and ordered fewer diagnostic tests and medications than did the novices | |

11 | Zary et al. (2012) | Double-blind RCT | Quantitative | To evaluate whether and how the absence of vignettes affects students’ therapeutic decisions and reasoning in taking a virtual patient’s history | The use of detailed vignettes or case introductions might be more useful at earlier stages of student learning such as early in the semester but not as preparation for the final exam | 57 fourth-year dental students Sweden | Linear | History taking and therapy decision in general dentistry | There were no statistically significant differences in therapeutic decision scores (p = 0.16) between the two groups |

12 | Woelber et al. (2012) | RCT with 2 experimental groups (high-low-interactivity) | Quantitative | To evaluate the overall efficiency and students’ perceptions of VPs that were produced with either easy-to-use or complex software | Learners should have enough time to read written information before moving on and the integration of a few simple and activating tasks within the programme might prevent students from becoming less motivated | 85 dental students (from 6-8th semester) Germany | – | Periodontology | Learners in the easy-software group showed better results on the post-test (p < 0.044). Even easy-to-use software tools have the potential to be beneficial in dental education. Students were showing a high acceptance and ability in using both e-learning environments |

13 | Yoshida et al. (2012) | One study group | Qualitative | To design a VP that promotes students’ basic dental hygiene practice skills based on students’ perceptions and to evaluate its effectiveness | Using VPs to practise basic decision-making processes in dental hygiene is effective, even if there were some infrastructure problems | 43 s-year dental hygiene studentsJapan | – | Dental hygiene | 83% of the students felt that VP practice enabled them to improve their independent study of clinical practice. 93%, moreover, felt that the exercises should be continued in the future, and 88% felt that this virtual practice deepened their interest in other classes and training sessions. All students found the VP beneficial for their learning |

14 | Lund et al. (2011) | One study group | Quantitative | To investigate undergraduate students’ perceptions of two different simulation methods for practising clinical reasoning and technical skills | Most students agreed that the future curriculum would benefit from permanent inclusion of simulated exercises. To fulfil the aims of the seminar, i.e. to improve clinical reasoning and technical skills, the students must be exposed to more VPs | 47 fifth-year dental students (aged 23 to 45) Sweden | Linear (active) | Oral and maxillofacial surgery | Although students rated the possible improvement of their clinical reasoning skills as moderate, they were positive about the VP cases. Their perceptions of improved technical skills after training in the mandibular third molar surgery simulator were rated high. The majority of the students agreed that both simulation techniques should be included in the curriculum and strongly agreed that it was a good idea to use two simulators in concert. The importance of feedback from senior experts during simulator training was emphasised |

15 | Zary et al. (2009) | Two study groups | Quantitative | To evaluate the acceptance and utilisation of VPs as well as important factors for the utilisation of VPs for self-assessment over two consecutive years | Students found creative ways to use VPs for their own learning needs and purposes. Most of the students used feedback when available, and showed feedback-seeking behaviour even when it was not available | 68 (group A) and 53 (group B) undergraduate dental students (from 7 and 8th semester) Sweden | Linear (active) | Diagnostics and therapy planning in general dentistry | Both year groups studied found the Web-SP system easy to use and their overall opinion of Web-SP was positive (Median: 5, p25-p75: 4–5). They found the VPs engaging, realistic, fun to use, instructive and relevant to their course. The students clearly favoured VPs with feedback compared to those without (p < 0.001) |

16 | Kleinert et al. (2007) | One comparison group (pre/post-test design) | Quantitative | To investigate students’ satisfaction with the use of a VP child with Down syndrome regarding its potential to increase their competences and decrease perceived level of difficulty | Communication, modelling and other strategies for adapting treatment procedures for this population may well generalise across paediatric patients with other developmental disabilities | 51 third-year dental students (aged 25 to 31) United States | Linear | Paediatric dentistry (developmental disabilities); problem-solving and communication skills | Significant changes were found in both knowledge and perceived difficulty levels (p < 0.001) for students Participants reported overall satisfaction with the modules |

17 | Sanders et al. (2008) | One comparison group (pre/post-test design) | Quantitative | To produce a VP child with special needs with the aim of increasing dental students’ clinical exposure to patients with developmental disabilities | VPs afford students the opportunity to practise and reflect upon their potential responses and decision-making strategies before they are called to treat actual patients with developmental disabilities in clinical settings | 44 third-year dental students (aged 25 to 31) United States | Linear | Paediatric dentistry (developmental disabilities: deafness and blindness) | Significant results were obtained in students’ perceived comfort and knowledge base (p ≤ 0.001). Participants reported overall satisfaction using the modules |

18 | Boynton et al. (2007) | Comparison groups (simulation-control) | Quantitative | To evaluate the effectiveness of a VP child in developing students’ clinical management knowledge | Adding clinical management simulation to the traditional lecture curriculum improved dental students' knowledge of behaviour management | 109 (control group) and 98 (simulation group) dental students United States | Linear | Paediatric dentistry (clinical management) | Students in the simulation group performed significantly better on both parts of the examination (objective section: p = 0.028; open-ended section: p = 0.012); students perceived the simulation as an effective addition to the curriculum |

19 | Schittek Janda et al. (2004) | RCT | Quantitative | To develop and evaluate a VP for the training and assessment of dental students in clinical decision-making and treatment planning | The findings indicate that the use of VPs and the process of writing questions in working with VPs stimulate students to organise their knowledge and result in more confident behaviour towards the patient | 39 second-year dental students (PBL) Sweden | – | Periodontology | The VP group asked more relevant questions, spent more time on patient issues, performed a more complete history interview and displayed more empathy than the control group |

20 | Mardani et al. (2020) | Comparison groups (simulation-control) | Quantitative | To evaluate the effect of VP training on clinical decision-making | Group discussions should be held alongside VPs in order to ensure maximum retention of the topics learnt | 76 dental students Iran | Branching | Oral and maxillofacial surgery | Students’ problem-solving ability was significantly higher (p < 0.001) in the VP group than in the control group. VPs can improve learning and the clinical decision-making ability of dental students |

21 | Littlefield et al. (2003) | – | Quantitative | To develop a VP for teaching or assessing endodontic diagnosis and to evaluate its effectiveness | Novices chose large amounts of diagnostic data, an approach to diagnosis that, although common among novice clinicians, does not parallel expert diagnosis and requires a great deal of time in real life | 74 dental students for the pilot test; 182 dental students for the field test United States | – | Endodontic diagnosis | The authors concluded that multimedia patient simulations offer a promising alternative for teaching and assessing students’ diagnostic skills |

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Janesarvatan, F., Van Rosmalen, P. Instructional design of virtual patients in dental education through a 4C/ID lens: a narrative review. J. Comput. Educ. (2023). https://doi.org/10.1007/s40692-023-00268-w
